Abstract

Virtual reference feedback tuning is a data-driven control method, as only input–output data are used to design a two degrees of freedom controller directly, i.e. a feed-forward controller and a feedback controller. Given two desired closed-loop transfer functions, a virtual input and a virtual disturbance are constructed to obtain one cost function, whose decision variables are the two unknown controller parameter vectors. After the cost function is formulated in linear regression form, the classical least squares algorithm is applied to estimate the unknown parameters. To quantify the quality, or approximation error, of the parameter estimators, statistical accuracy analysis is studied for virtual reference feedback tuning control. The detailed computation of the asymptotic variance matrix is given by using results from probability theory and matrix theory. Two applications, optimal input design and model structure validation, illustrate how to use the obtained asymptotic variance matrix. Finally, one simulation example demonstrates the effectiveness of the theories proposed in this paper.

1. Introduction

For many industrial production processes, safety and production restrictions are often strong reasons for not allowing controller design on an open-loop system. In such situations, controllers must be designed under closed-loop conditions. When designing controllers in the closed-loop system, only experimental data about the whole closed-loop system can be collected. The main difficulty of designing a controller in a closed-loop system is that the researcher must consider the correlation between the reference input and the external disturbance induced by the feedback loop. Moreover, classical approaches to designing controllers in a closed-loop system require a mathematical model of the considered plant to be known a priori. However, the mathematical description or model of the considered plant cannot be easily determined in industrial processes, such as paper production and other chemical plants.

Virtual reference feedback tuning control is a data-driven control method in which the model identification step is skipped to simplify the whole design process. In engineering, the classical PID controller is widely used to achieve the desired goal. The PID controller is a parametrized controller, so this special parametrized structure is considered in this paper. If the expected controller is parametrized by one unknown parameter vector, the main merit of virtual reference feedback tuning control is that it tunes the unknown parameter vector in the controller by using only measured input–output data, without any knowledge of the plant model. So in virtual reference feedback tuning control, the process of designing the controller is transformed into tuning the unknown parameter vector.

The cost of developing the mathematical model is very high. To avoid the identification process for the plant, one direct data-driven method, virtual reference feedback tuning control, was proposed around 2000 to design the controller (Guardabassi & Savaresi, 2012). The essence of virtual reference feedback tuning control is that the controller can be designed directly from measured data without any prior knowledge about the plant model. In Bravo et al. (2006), the problem of designing the controller was transformed into identifying one unknown parameter vector of the controller. Then the idea of virtual reference feedback tuning control was applied to a benchmark problem (Bravo et al., 2016), where a control cost of the 2-norm type was minimized by using a batch of data collected from the plant. Virtual reference feedback tuning control for controller tuning in a nonlinear setup was introduced in Bravo et al. (2017). In Tanaskovic et al. (2017), the extension of virtual reference feedback tuning control to the design of a two degrees of freedom controller was presented to shape the input–output transfer function of the closed-loop system. As the direct data-driven control approach consists of iterative adjustment of the controller's parameters towards the optimal parameter values, the H2 performance criterion is analysed in order to characterize and enlarge the set of initial parameter values (Campi et al., 2002). The contribution of Campi et al. (2005) introduces an invalidation test step based on the available data to check whether the flexibility of the controller parameter is suitable for the design objectives.
In recent years, many papers on virtual reference feedback tuning control have been published. For example, in Jianhong (2012), the model-matching problems for the closed-loop transfer function and the sensitivity function were considered simultaneously, and the iterative sequence generated by the iterative least squares identification algorithm was analysed for identifying the unknown parameter vector. Virtual reference feedback tuning control for a constrained closed-loop system was studied in Jianhong (2014b), where the ellipsoid optimization iterative algorithm was adopted to generate a sequence of ellipsoids with decreasing volume. To bridge the gap between linear and nonlinear controllers, the problem of designing a linear time-varying controller was solved by using virtual reference feedback tuning control (Jianhong, 2014a). Then, through minimizing one approximation error, the adjustable weights in the linear affine function were given in Jianhong (2013). In Jianhong (2017), virtual reference feedback tuning control was combined with the internal model control strategy so that identifying the plant is avoided in internal model control. In this paper, we do not consider how to design the controller by using virtual reference feedback tuning control; instead we study the finite sample properties of virtual reference feedback tuning with two degrees of freedom control, based on a quadratic criterion applied to a simple closed-loop system structure. It is well known that finite sample properties quantitatively assess the discrepancy between minimizing a theoretical identification cost and its empirical counterpart when only a finite number of data points is available (Bazanella et al., 2008). The model quality with many data points is assessed by applying asymptotic theory under linear model identification conditions (Sala & Esparza, 2005).
But these asymptotic results hold only when the number of data points tends to infinity (Campi & Savaresi, 2006). Because no identification process is needed, virtual reference feedback tuning control is now widely applied in engineering and theoretical research.

In this paper, based on the above-mentioned references and our previously published contributions (Jianhong, 2012, 2013, 2014a, 2014b, 2017, 2019), we continue our research on this direct data-driven control strategy, virtual reference feedback tuning control. In the commonly used closed-loop system, the feed-forward controller and the feedback controller exist simultaneously, and these two controllers correspond to the closed-loop system with two degrees of freedom controllers. There are many references on designing these two unknown controllers; for the sake of completeness, classical model reference control and virtual reference feedback tuning control are reviewed here. Comparing the principles of these two strategies shows that no prior knowledge about the considered plant is needed in virtual reference feedback tuning control. Furthermore, after constructing the virtual input and virtual disturbance, the problem of designing the unknown controllers can be changed into one identification problem, whose decision variables are two unknown parameter vectors. For the identification of these two unknown parameter vectors, many identification algorithms can be applied directly from the literature, for example the least squares method, the separable gradient method, and the parallel distributed method. After these two unknown parameter vectors are identified, we need to test whether the obtained parameter estimators are biased or asymptotically efficient. The whole process to achieve this goal is named statistical accuracy analysis for virtual reference feedback tuning control, i.e. probability theory and matrix theory are applied to construct the asymptotic variance matrix for those two unknown parameter vectors. The two diagonal sub-matrices of the asymptotic variance matrix correspond to the asymptotic variance expressions for the unknown parameter vectors.
Here we give a more detailed derivation of this complex asymptotic variance matrix through our own mathematical derivations. Based on the obtained asymptotic variance matrix, optimal input design and model structure validation for the closed-loop system can be studied, since both problems depend on this matrix. Generally, to quantify the quality of the parameter estimators, statistical accuracy analysis is studied for virtual reference feedback tuning with two degrees of freedom controllers, and the computation of the asymptotic variance matrix is given in detail. This asymptotic variance matrix describes the approximation error between the true value and its estimator. From the point of view of further research on system identification and data-driven control, the obtained asymptotic variance matrix is also beneficial for other subjects, such as optimal input design and model structure validation. To pave the way for these subjects, some tedious mathematical derivations are given here to obtain that asymptotic variance matrix. The main contributions of this paper are to derive the asymptotic variance matrix corresponding to the two unknown controller parameter vectors, and to apply it to optimal input design and model structure validation. To the best of our knowledge, this is the first work on statistical accuracy analysis for virtual reference feedback tuning control in control research, owing to the strong mathematical basis it requires.

Contributions: The main contributions of this paper are as follows.

Derive the asymptotic variance matrix, corresponding to those two unknown controller parameters.

Apply this asymptotic variance matrix to optimal input design and model structure validation.

Virtual input and virtual disturbance are constructed, respectively to obtain one cost function, which corresponds to two model-matching problems.

The classical least squares algorithm is applied to identify the unknown controller parameters on the condition of transforming that cost function as its simplified linear regression form.

The paper is organized as follows. In Section 2, the problem formulation is addressed, and the structure of the considered closed-loop system with two degrees of freedom controllers is presented. To compare virtual reference feedback tuning control with classical model reference control, Section 3 gives a short introduction to classical model reference control for designing the two unknown parametrized controllers, and also points out its deficiency. In Section 4, virtual reference feedback tuning control is proposed to design these two parametrized controllers, and the virtual input and virtual disturbance are constructed to obtain an optimization problem. In Section 5, the simple least squares method and its recursive form are proposed to identify the two unknown parameter vectors, and the detailed process of reformulating that optimization problem in its simplified form is given. The main contribution, statistical accuracy analysis for virtual reference feedback tuning control, is studied in Section 6, where results from probability theory and matrix theory are combined to obtain the asymptotic variance matrix. Based on this asymptotic variance matrix, its applications in optimal input design and model structure validation are described in Section 7. In Section 8, one simulation example illustrates the effectiveness of the proposed theories. Section 9 ends the paper with a final conclusion and points out our future work.

2. Problem description

Assume the plant is a linear time-invariant, discrete-time, single-input single-output process. The plant is described by a rational transfer function, which is unknown. Throughout the closed-loop experiment, only a sequence of input–output data measured on the plant is collected. The input–output relation is described as follows.

(1)

In Equation (1), z is the time-shift operator, and the remaining quantities are the transfer function of the plant, the measured input, the measured output of the plant, and the external noise. When a term in Equation (1) is unknown but has known bounds, the uncertainty associated with it is regarded as additive noise because of the way it enters the input–output relation in Equation (1).

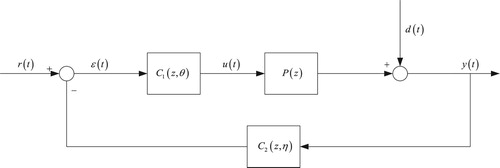

Consider the simple closed-loop system with two degrees of freedom controllers shown in Figure 1. The input–output relations in the whole closed-loop system are written as follows.

(2)

Equation (2) involves the excitation signal, one expected transfer function, and the two degrees of freedom controllers, which are parametrized by the unknown parameter vectors θ and η, respectively. These two controllers are independently parametrized in the following linear affine forms.

(3)

In Equation (3), the two controllers are expressed through two known basis function vectors, and θ and η are two unknown parameter vectors of dimension n. One main contribution of this paper is to construct the variance matrix for these two unknown parameter vectors θ and η by using probability theory and matrix theory in the case of probabilistic noise.

Observing Figure 1, in a classical control system the block in the feedback path is always a sensor, which sends the output signal back to the input. But in some practical control systems, such as flight control and vehicle control, this block may be a feedback controller.

Rearranging Equation (2), the new input–output relation in terms of the excitation input and the external noise is given as follows.

(4)
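The closed-loop relations above can be illustrated with a minimal simulation sketch. Everything specific in it is an assumption made only for illustration and is not taken from the paper: the plant is a hypothetical first-order system with parameters `a` and `b`, both controllers are reduced to static gains `theta` and `eta`, the noise enters additively at the output, and the loop is closed as u(t) = theta r(t) − eta y(t).

```python
def simulate_closed_loop(r, e, a=0.8, b=0.5, theta=1.0, eta=0.6):
    """Simulate an assumed two degrees of freedom loop.

    Hypothetical plant: x(t) = a*x(t-1) + b*u(t-1), y(t) = x(t) + e(t).
    Hypothetical controllers: static gains, u(t) = theta*r(t) - eta*y(t).
    """
    x = 0.0        # noise-free plant output
    u_prev = 0.0   # input applied at the previous time step
    u, y = [], []
    for r_t, e_t in zip(r, e):
        x = a * x + b * u_prev          # plant update uses the previous input
        y_t = x + e_t                   # additive output noise
        u_t = theta * r_t - eta * y_t   # feed-forward minus feedback action
        u.append(u_t)
        y.append(y_t)
        u_prev = u_t
    return u, y


def closed_loop_dc_gain(a=0.8, b=0.5, theta=1.0, eta=0.6):
    """Steady-state gain from r to y for the assumed loop: P*theta / (1 + P*eta)."""
    plant_gain = b / (1.0 - a)
    return plant_gain * theta / (1.0 + plant_gain * eta)
```

With a unit step on the excitation signal and no noise, the simulated output settles at the value returned by `closed_loop_dc_gain`, which is the steady-state gain of the closed-loop transfer function under these assumptions. With zero excitation and constant unit noise, the output settles at the steady-state gain of the sensitivity function.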

3. Classical model reference control

In the closed-loop system with two degrees of freedom controllers, the first transfer function, from the excitation signal to the measured output, is the closed-loop transfer function (Lecchini et al., 2002), and the second transfer function, from the external noise to the measured output, is the sensitivity function (Milanese & Novara, 2004). From Equation (4), the control task of classical model reference control is to tune the unknown parameter vectors θ and η of the two degrees of freedom controllers in order to achieve the expected closed-loop transfer function and the expected sensitivity function. Given the expected closed-loop transfer function and the expected sensitivity function, we want to guarantee that the closed-loop transfer function approximates its expected function and that the sensitivity function approximates its expected function too (Alamo et al., 2005). The problem of tuning these two unknown parameter vectors θ and η is formulated as the following classical model reference control optimization problem.

(5)

In Equation (5), the norm is the common Euclidean norm. Before solving this optimization problem with respect to the unknown parameter vectors θ and η, prior knowledge about the plant is needed. As the plant is unknown in this model reference control design method, an identification strategy is used to identify it. To avoid this identification process, virtual reference feedback tuning control is proposed to identify the unknown parameter vectors θ and η in the two controllers directly from the measured input–output data set, where N is the number of data points.

4. Virtual reference feedback tuning control

Given the two controllers, the closed-loop transfer function from the excitation signal to the measured output is determined. Hence, when one arbitrary signal is applied to excite the closed-loop system (2), the output of the closed-loop system is described as follows.

Consider one special excitation input. The necessary condition for the closed-loop transfer function to equal its expected function is that the two closed-loop systems have the same output under a given input (Jianhong, 2019). In classical model reference control, this necessary condition holds when a suitable controller and excitation signal are chosen. But this reasoning cannot be applied here, because the plant is unknown. The idea of virtual reference feedback tuning control is that a virtual input and a virtual disturbance must be constructed first, so we give the detailed process of constructing them.

4.1. Virtual input

Collect the input–output sequence of the plant. Then, for every measured output, define one virtual input such that the following relation holds.

(6)

This virtual input does not exist in reality, and it cannot be used to generate the actual measured output. But the virtual input can be computed from the measured output; that is, the measured output is regarded as the output of the closed-loop system when the virtual input is applied as the excitation signal with no disturbance.

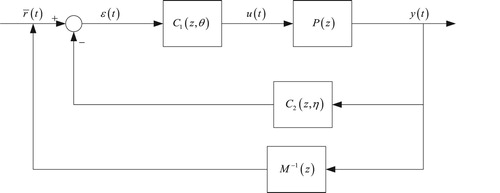

Because the plant is unknown, and its output is the measured output when it is excited by the measured input, we choose two suitable controllers to obtain one expected signal when the closed-loop system is excited by the virtual input and the measured output simultaneously. The construction of the virtual input is shown in Figure 2, where the tracking error is defined as follows.

(7)

From Figure 2 we see that, when the closed-loop system is excited by the virtual input, the following expression is obtained.

(8)
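The construction of the virtual input can be sketched numerically. The sketch below assumes, purely for illustration, a hypothetical first-order expected closed-loop transfer function M(z) = (1 − α) z⁻¹ / (1 − α z⁻¹); the name `alpha` and both function names are not from the paper. Under this assumption the virtual input is obtained by inverting the reference model on the measured output, which requires one future output sample.

```python
def virtual_reference(y, alpha):
    """Virtual input r_bar with y = M(z) r_bar for the assumed first-order M(z).

    Inverting y(t+1) = alpha*y(t) + (1 - alpha)*r_bar(t) gives r_bar(t);
    the last output sample has no successor, so one sample is lost.
    """
    return [(y[t + 1] - alpha * y[t]) / (1.0 - alpha) for t in range(len(y) - 1)]


def reference_model_output(r, alpha, y0):
    """Filter r through the assumed reference model, starting from output y0."""
    y = [y0]
    for r_t in r:
        y.append(alpha * y[-1] + (1.0 - alpha) * r_t)
    return y
```

Feeding the computed virtual input back through the same reference model reproduces the measured output sequence, which is the defining property of the virtual input. No plant model is used at any point.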

4.2. Virtual disturbance

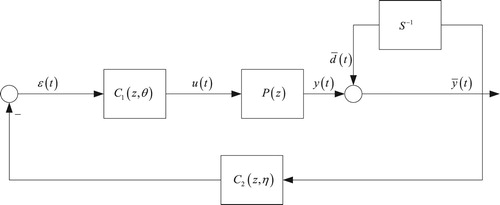

Given the measured output, define one virtual disturbance such that, when the closed-loop system is excited by this virtual disturbance, the obtained output is defined as follows.

(9)

The construction of the virtual disturbance is shown in Figure 3, where this virtual disturbance satisfies the following relation.

(10)

Equation (10) is used to generate the virtual disturbance. It means that, when the closed-loop system is excited by the corresponding signals, the following signal is obtained.

(11)

The output signal appearing in Equation (11) is not the true output, so it must be replaced by the true measured output.

Combining Equations (9) and (11), we get the following relation.

(12)

Furthermore, we have

(13)

Using Equations (8) and (13), the two unknown parameter vectors θ and η in the two controllers can be identified by solving the following optimization problem.

(14)

Observing optimization problem (14), all variables are known except for the two parametrized controllers. More specifically, the input–output data can be collected by sensors, and the two expected transfer functions are known a priori. In particular, the plant does not appear in optimization problem (14), which is the main merit of virtual reference feedback tuning control. Furthermore, optimization problem (14) shows that the problem of designing two controllers can be transformed into identifying two unknown parameter vectors. This transformation simplifies the subsequent computation needed to design the controllers.

5. Identification algorithm

From the above description of virtual reference feedback tuning, the main step is to identify the two unknown parameter vectors θ and η. Many identification algorithms proposed in the literature assume a probability distribution on the external disturbance (Weyer, 2000). Generally, to validate the applied model in engineering, some uncertainties can be added to the mathematical model. Frequently, the disturbance affecting the real system has to be taken into account in the mathematical model in order to ensure similar behaviour of the real system and the mathematical model. Here the main contribution is the statistical accuracy analysis of virtual reference feedback tuning control, not the identification algorithm, so for the sake of completeness, in this section we only use the simple least squares algorithm to identify those two unknown parameter vectors. In order to apply the classical least squares algorithm, we first transform the problem of identifying the unknown parameter vectors in virtual reference feedback tuning control into two linear regression models, which are suitable for the least squares algorithm.

Observing the optimization problem (14) in virtual reference feedback tuning control, our goal is to identify two optimal parameter vectors such that the above forms hold. Let

(15)

Then

(16)

It means that

(17)

Recall the linear affine form in Equation (3), i.e.

(18)

Substituting the linear affine form (3) of the parametrized controller into Equation (17), we have

(19)

where one special basis function vector is used.

Rewriting Equation (19) as one linear regression model gives

(20)

After introducing the regression vector, Equation (19) becomes a linear regression model.

(21)

After the unknown parameter vector η is identified, its estimator is substituted into the parametrized controller to obtain the corresponding controller. Applying this obtained controller in Equation (17), we have

(22)

Similarly, based on that special basis function vector, Equation (22) can also be written as another linear regression model, with its own regressor vector.

(23)

From these two linear regression models (21) and (23), we see that the unknown parameter vector η is identified first on the basis of linear regression model (21). Then, after substituting its estimator into the regressor vector, the other unknown parameter vector θ is obtained from linear regression model (23). So here we only give a detailed least squares identification algorithm for the unknown parameter vector η in linear regression model (21); the identification of θ is similar.

Observing linear regression model (21) with respect to the unknown parameter vector η, the least squares algorithm is easily applied, i.e.

(24)

Its general recursive least squares algorithm is given as follows.

(25)

In Equation (25), the recursion updates the parameter estimator at iteration step t by means of one gain matrix. Furthermore, the recursive form (25) can be written in the following simplified form.

(26)

This alternative expression will be useful in the subsequent analysis.
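The batch and recursive least squares steps can be sketched for a generic linear regression y(t) = φ(t)ᵀη + noise. This is a minimal illustration in the textbook form, not the paper's exact Equations (24) to (26): it is restricted to two parameters so that only the standard library is needed, and the names `make_data`, `delta` and the data model are hypothetical.

```python
import random


def make_data(n_samples, eta_true, noise_std, seed=0):
    """Generate hypothetical regression data y = phi^T eta_true + noise."""
    rng = random.Random(seed)
    phis = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(n_samples)]
    ys = [p[0] * eta_true[0] + p[1] * eta_true[1] + noise_std * rng.gauss(0.0, 1.0)
          for p in phis]
    return phis, ys


def batch_least_squares(phis, ys):
    """Solve the 2x2 normal equations (sum phi phi^T) eta = sum phi y."""
    s11 = sum(p[0] * p[0] for p in phis)
    s12 = sum(p[0] * p[1] for p in phis)
    s22 = sum(p[1] * p[1] for p in phis)
    b1 = sum(p[0] * y for p, y in zip(phis, ys))
    b2 = sum(p[1] * y for p, y in zip(phis, ys))
    det = s11 * s22 - s12 * s12  # nonzero when the regressors are exciting
    return [(s22 * b1 - s12 * b2) / det, (s11 * b2 - s12 * b1) / det]


def recursive_least_squares(phis, ys, delta=1e6):
    """Textbook recursive least squares with initial gain matrix delta * I."""
    eta = [0.0, 0.0]
    P = [[delta, 0.0], [0.0, delta]]
    for (p1, p2), y in zip(phis, ys):
        Pphi = [P[0][0] * p1 + P[0][1] * p2, P[1][0] * p1 + P[1][1] * p2]
        denom = 1.0 + p1 * Pphi[0] + p2 * Pphi[1]
        K = [Pphi[0] / denom, Pphi[1] / denom]        # gain vector
        err = y - (p1 * eta[0] + p2 * eta[1])         # prediction error
        eta = [eta[0] + K[0] * err, eta[1] + K[1] * err]
        # P <- P - K phi^T P, using phi^T P = (P phi)^T because P is symmetric
        P = [[P[i][j] - K[i] * Pphi[j] for j in range(2)] for i in range(2)]
    return eta
```

A large `delta` makes the recursive estimate agree closely with the batch solution, because the influence of the initial guess vanishes as `delta` grows.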

For the above recursive form to hold and for the least squares algorithm to converge, one condition is needed to guarantee that the inverse matrix exists. Roughly speaking, this condition corresponds to persistent excitation, which is described in detail as follows.

Remark

Persistence of excitation

The regression vector is persistently exciting with a given level of excitation if there exists a positive constant such that the corresponding inequality holds, where I is the identity matrix.

The above inequality means that the matrix is non-singular for all t. The concept of persistent excitation requires that the regression vector varies with time in such a way that the sum of the matrices is uniformly positive definite over any time interval.
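The persistent excitation condition can be checked numerically on recorded regressors. The sketch below assumes the common sliding-window form of the condition, in which the smallest eigenvalue of the windowed sum of φ(t)φ(t)ᵀ must stay above a positive level; the window length and both function names are hypothetical, and the code is restricted to two-dimensional regressors so that the eigenvalue has a closed form.

```python
def min_eigenvalue_2x2(a, b, c):
    """Smallest eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    return 0.5 * ((a + c) - ((a - c) ** 2 + 4.0 * b * b) ** 0.5)


def excitation_level(phis, window):
    """Smallest eigenvalue of sum(phi phi^T) taken over every sliding window."""
    levels = []
    for start in range(len(phis) - window + 1):
        chunk = phis[start:start + window]
        a = sum(p[0] * p[0] for p in chunk)
        b = sum(p[0] * p[1] for p in chunk)
        c = sum(p[1] * p[1] for p in chunk)
        levels.append(min_eigenvalue_2x2(a, b, c))
    return min(levels)
```

A constant regressor gives a level of zero, since the windowed sum then has rank one and cannot be inverted, whereas a regressor that alternates between the two coordinate directions gives a strictly positive level.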

6. Statistical accuracy analysis

Accuracy analysis is an important topic in system identification and parameter estimation theory, as it not only shows the accuracy of the parameter estimator, but also provides a useful tool for other research fields, such as optimal input design and model structure validation. Two statistical quantities are always used in accuracy analysis, i.e. expectation and variance, where the variance measures the approximation error between the true value and its estimator. If the approximation error is not tolerable, it means the parameter estimator is biased, and the whole process of system identification must be repeated until an unbiased estimator is obtained. The main contribution of this section is to derive the variance matrix for those two unknown parameter vectors through our own mathematical derivation.

Consider optimization problem (14) and apply the collected data set, where N is the total number of data points. The two unknown parameter vectors θ and η are identified by solving the following optimization problem.

(27)

Then the asymptotic estimators are defined by the following limit form.

(28)

where E is the expectation operator, and the cost function is rewritten as

(29)

where the two quantities it contains are defined separately.

From probability theory (Campi & Weyer, 2002), the following asymptotic relations hold as the number of data points N tends to infinity.

(30)

Using the first-order necessary condition for optimality, if the two quantities under consideration are minimizers of their own cost functions, respectively, the following relations hold.

(31)

(32)

Expanding the above equations with respect to θ, we get

(33)

Similarly, expanding the above equations with respect to η, we get

(34)

Let ξ denote the vector that stacks the two unknown parameter vectors θ and η. Then the above two equations are rewritten as follows.

(35)

(36)

Using the Taylor series expansion, the first-order partial derivative can be expanded around the asymptotic limit point, i.e.

(37)

Expanding the above equation gives

(38)

Observing Equation (37) again, the following relation holds.

(39)

From Equation (39), the asymptotic result corresponding to the unknown parameter vector ξ is derived as follows.

(40)

In Equation (40), the limit is one normal distribution with zero mean and variance matrix A. Equation (40) tells us that the parameter estimator converges to its asymptotic limit value, and that the approximation error approaches one Gaussian random variable. Its asymptotic variance matrix A is defined as follows.

(41)

Equation (41) tells us that these two parameter estimators are two random variables. Detailed expressions are needed for the main diagonal blocks of matrix A, as these blocks correspond to the asymptotic variance sub-matrices of the two unknown parameter vectors θ and η.
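The asymptotic normality statement can be illustrated with a small Monte Carlo sketch on a much simpler problem than the one treated here. It assumes a hypothetical scalar regression y = φθ + e with standard normal regressor and independent Gaussian noise of standard deviation `sigma`; for this textbook case the asymptotic variance of √N(θ̂ − θ) under least squares is σ²/E[φ²]. The setup and all names are assumptions for illustration only.

```python
import math
import random


def scaled_error(n_samples, theta_true, sigma, rng):
    """Return sqrt(N) * (theta_hat - theta_true) for one least squares fit."""
    s_pp = 0.0
    s_py = 0.0
    for _ in range(n_samples):
        phi = rng.gauss(0.0, 1.0)
        y = phi * theta_true + rng.gauss(0.0, sigma)
        s_pp += phi * phi
        s_py += phi * y
    theta_hat = s_py / s_pp  # scalar least squares estimate
    return math.sqrt(n_samples) * (theta_hat - theta_true)


def empirical_variance(n_trials=2000, n_samples=200, theta_true=0.7,
                       sigma=0.5, seed=1):
    """Sample variance of the scaled estimation error over repeated experiments."""
    rng = random.Random(seed)
    errs = [scaled_error(n_samples, theta_true, sigma, rng) for _ in range(n_trials)]
    mean = sum(errs) / n_trials
    return sum((err - mean) ** 2 for err in errs) / (n_trials - 1)


# Theoretical asymptotic variance for the assumed setup: sigma^2 / E[phi^2]
ASYMPTOTIC_VARIANCE = 0.5 ** 2 / 1.0
```

Calling `empirical_variance()` gives a value close to `ASYMPTOTIC_VARIANCE`. The same kind of comparison could be used as a sanity check on the diagonal blocks of A once their explicit expressions are available.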

Computing the basic partial derivatives, the following results hold.

(42)

Using the property that the two controllers are parametrized separately, it holds that

(43)

Through some basic partial derivative calculations, we get

(44)

Taking the expectation on both sides of Equation (42), we have

(45)

where, in deriving Equation (45), the input–output relation (1) is used together with the variance of the external disturbance.

Applying some results from probability theory (Campi & Weyer, 2002), we obtain

(46)

Using Parseval's relation, we get

(47)

where the expression involves the input power spectrum and one auxiliary quantity defined separately.

Similarly, the other asymptotic sub-matrix is rewritten as

(48)

Equations (47) and (48) give the auto-covariances of the two gradient estimates.

Then the cross-covariance between the two gradient estimates is

(49)

Substituting Equations (47), (48) and (49) into the variance matrix of the first-order gradient estimate, we obtain the following result.

(50)

In the asymptotic case, the following equalities hold.

(51)

(52)

(53)

Applying Equations (51), (52) and (53) in the matrix computation, we have the following result.

(54)

Because the inverse of matrix (54) is needed when computing the asymptotic variance matrix A, we use the property of block matrices to get

(55)

where, in the above equation, to simplify notation, we use the relation between the input power spectrum and its corresponding correlation function. Furthermore, we drop the argument w in the input power spectrum.

Substituting Equations (50) and (55) into the computation of the asymptotic variance matrix A, the asymptotic variance matrix expression corresponding to the two unknown parameter vectors θ and η is derived as follows.

(56)

The product of three matrices in Equation (56) is formulated as follows.

(57)

Now observing this obtained asymptotic variance matrix A, one diagonal block is the asymptotic variance matrix with respect to the unknown parameter vector θ. Similarly, the other diagonal block is the asymptotic variance matrix with respect to the unknown parameter vector η. As these two sub-matrices both lie on the main diagonal, only the results for these two sub-matrices are given. After a simple but tedious calculation, the asymptotic variance matrix A is given in the following form.

(58)

(59)

From Equation (58), the asymptotic variance matrix of the two controller parameter vectors θ and η in the closed-loop system is derived. The main contribution of this paper is to use probability theory and matrix theory to derive this asymptotic variance matrix. The two asymptotic variance matrices with respect to θ and η are then simplified as follows.

(60)

Moreover, the roles of these two asymptotic variance matrices can be seen in Section 7.

7. Application

Consider the two asymptotic variance matrices obtained in Section 6 through our own mathematical derivations. The ideal case is that each asymptotic variance matrix is zero or approaches zero as the number of data points grows. So those two asymptotic variance matrices can measure the identification quality or test whether our parameter estimators are efficient or asymptotically efficient (Care et al., 2018). In order to achieve the efficient or asymptotically efficient property, the asymptotic variance matrix obtained here is an important tool. In Section 7, we give only two applications of the asymptotic variance matrices, i.e. optimal input design and model structure validation.

7.1. Optimal input design

The problem of optimal input design is to design an optimal input power spectrum such that the chosen performance index is minimized with respect to its decision variable

(Weyer et al., 2017). For convenience, the trace of the asymptotic variance matrix A is chosen as the performance index. The goal is to minimize this performance index by designing one optimal input power spectrum

. From Equation (60), we then have the following optimization problem.

(61)

After introducing coefficients

and

to replace some variables in Equation (61), the optimization problem (61) can be simplified as follows.

(62)

Using the necessary condition for optimality, it suffices to show that the following result holds at the minimum, i.e.

(63)

Differentiating with respect to the input power spectrum

and setting the derivative equal to zero gives

(64)

Using the root formula, the root of Equation (64) is easily obtained, i.e.

(65)

Equation (65) gives one optimal input power spectrum, which can be used in system identification to design the identification experiment.
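The step from setting a derivative to zero to a closed-form root can be illustrated with a minimal sketch. Equations (61) to (65) are not reproduced here, so the cost below is a hypothetical stand-in, not the paper's performance index: we assume a scalar index J(phi) = alpha/phi + beta*phi with positive coefficients, where `alpha`, `beta`, `cost`, `stationary_spectrum` and `golden_section_min` are all names introduced for illustration only. The sketch compares the analytic stationary point with a numerical minimizer.

```python
import math


def cost(phi: float, alpha: float, beta: float) -> float:
    """Hypothetical scalar performance index J(phi) = alpha/phi + beta*phi."""
    return alpha / phi + beta * phi


def stationary_spectrum(alpha: float, beta: float) -> float:
    """Positive root of dJ/dphi = -alpha/phi**2 + beta = 0."""
    if alpha <= 0.0 or beta <= 0.0:
        raise ValueError("alpha and beta must be positive")
    return math.sqrt(alpha / beta)


def golden_section_min(f, lo: float, hi: float, tol: float = 1e-8) -> float:
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - ratio * (b - a)
    d = a + ratio * (b - a)
    while abs(b - a) > tol:
        if f(c) < f(d):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
    return (a + b) / 2.0


if __name__ == "__main__":
    alpha, beta = 4.0, 1.0  # assumed illustrative coefficients
    phi_closed = stationary_spectrum(alpha, beta)
    phi_numeric = golden_section_min(lambda p: cost(p, alpha, beta), 0.1, 10.0)
    print("closed form:", phi_closed, "numerical:", phi_numeric)
```

For this assumed convex cost the two values agree up to the search tolerance, which is a quick way to check a closed-form root before using it in an experiment design.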

7.2. Model structure validation

Model structure validation also comes from system identification theory, where the standard cross-correlation test is used to test the confidence interval of the unknown parameter estimator. Here, the obtained asymptotic variance matrix is used to construct an uncertainty bound for the parameter estimator. This uncertainty bound is called the confidence interval, and it constitutes a guaranteed confidence region test for the parameter estimator under the closed-loop condition.

Based on the obtained asymptotic variance matrix, the parameter estimator

converges to its limit value

, and furthermore

converges asymptotically to a normally distributed random variable with mean value

and variance matrix

, so it holds that

(66)

The asymptotic relation (66) can be rewritten as a quadratic form, which yields a χ² distribution.

(67)

where n is the number of degrees of freedom of the χ² distribution, equal to the number of unknown parameters.

Equation (67) implies that the random variable

satisfies the uncertainty bound

(68)

with

corresponding to a probability level α of the χ² distribution.

To quantify the uncertainty on , rather than on

, for every realization

, it holds that

(69)

It follows that

(70)

Equations (69) and (70) give the confidence intervals for the unknown parameter vector θ; the probability that the event

holds is at least α. The same analysis holds for the other unknown parameter vector η and is omitted here to avoid repetition.
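The confidence region test described above can be sketched in a few lines. The sketch assumes the standard asymptotic normality result, i.e. that sqrt(N) times the estimation error is asymptotically normal with zero mean and covariance P, so that N times the quadratic form of the error with the inverse of P is asymptotically χ² distributed with n degrees of freedom. The function names, the example matrix P, and the data length are hypothetical, and the χ² quantile is passed in as a number (7.815 is the 95% quantile for three degrees of freedom).

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-14:
            raise ValueError("matrix is singular to working precision")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def quadratic_form_statistic(theta_hat, theta, P, num_samples):
    """Return N * (theta_hat - theta)^T P^{-1} (theta_hat - theta)."""
    diff = [h - t for h, t in zip(theta_hat, theta)]
    weighted = solve(P, diff)  # P^{-1} * diff without forming the inverse
    return num_samples * sum(d * w for d, w in zip(diff, weighted))


def in_confidence_region(theta_hat, theta, P, num_samples, chi2_quantile):
    """True if theta lies inside the ellipsoid defined by the chi-square bound."""
    return quadratic_form_statistic(theta_hat, theta, P, num_samples) <= chi2_quantile


if __name__ == "__main__":
    P = [[1.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 9.0]]  # assumed covariance
    theta_hat = [0.51, -0.28, 0.83]  # hypothetical estimate
    theta = [0.50, -0.30, 0.80]      # hypothetical candidate value
    print(in_confidence_region(theta_hat, theta, P, 1000, 7.815))
```

Solving the linear system instead of inverting P keeps the sketch short and avoids an explicit matrix inverse; in practice P would be replaced by an estimate of the asymptotic variance matrix.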

8. Simulation example

In this section, one example is used to demonstrate the effectiveness of the proposed theory on virtual reference feedback tuning control.

Consider a discrete-time linear time-invariant system whose true transfer function is given as follows.

Furthermore, two classical PID controllers are used in the simulation.

The two true PID controllers are given as:

The expected closed-loop transfer function and the expected sensitivity function are chosen as follows.

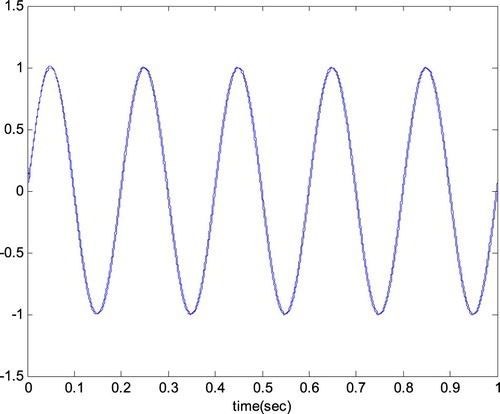

Throughout the simulation, the measured input–output data are collected in the closed-loop environment, and the number of collected data points is 2000. To apply virtual reference feedback tuning control to design the controller parameters, the plant model

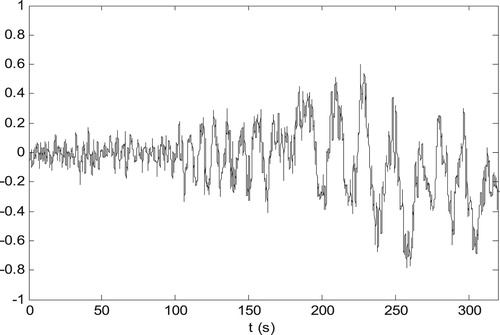

is excited by a sinusoidal input signal, whose curve is plotted in Figure with 1000 data points. In Figure , there are two curves: one is the continuous curve and the other is its discrete counterpart. The measured output response curve is plotted in Figure . The classical least squares algorithm is used to solve the optimization problem (14). Before starting this recursive algorithm, the initial values of the unknown parameter vector are selected as

Based on the input and output data

, which are sampled from their respective curves, two aspects need to be verified here: one is the identification algorithm, and the other is the statistical accuracy analysis (variance analysis) of the unknown controller parameters.

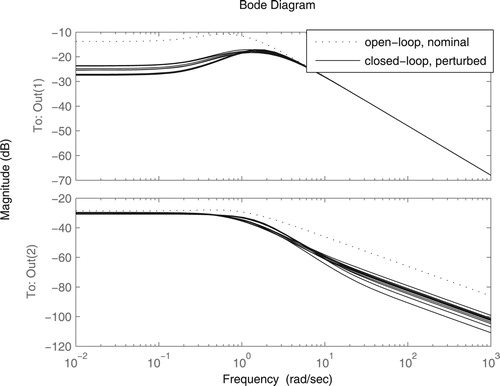

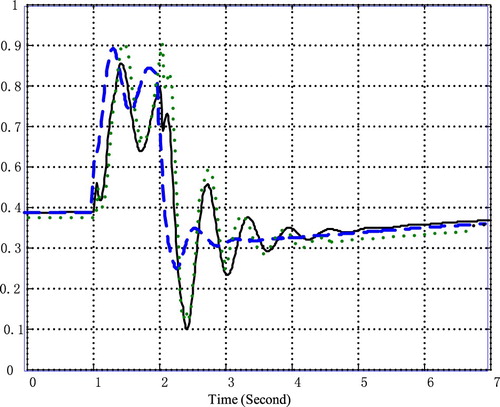

First, one experiment is carried out with the input signal in Figure , and the output signal in Figure ; the classical recursive least squares algorithm is then applied to identify the unknown parameter vectors . After the parameter estimators

are obtained, how accurate are these parameter estimators

? To answer this, we substitute the parameter vector

into the closed-loop transfer function from the excitation signal

to the measured output

and compare this actual closed-loop transfer function with the expected (designed) closed-loop transfer function

. Figure compares the Bode responses of the actual closed-loop transfer function and the expected closed-loop transfer function

. From Figure , we see that the two closed-loop transfer functions are very close, so their approximation error can be neglected. Furthermore, in Figure , the open-loop transfer function from

to

is also given, from which the merit of the closed-loop system is easily seen.

Second, to illustrate the statistical accuracy analysis (variance analysis) for the parameter estimators

, for clarity of presentation, we give only the variance curves for the parameter estimator

in Figure , as only three elements exist in the parameter estimator

. Observing these three variance curves for the three unknown parameters

, we see that from initialization to 1 s the three variance errors are almost the same; from 1 to 3 s they oscillate, and the resulting deviations persist until 3 s. After 3 s, the three variance errors are stable and approach the same constant value. Moreover, from the variance analysis of the three elements of the parameter vector θ, the classical least squares algorithm is not well suited to identifying the unknown controller parameters, as the parameter estimators identified by this algorithm are all biased. This identification result is not the desired one, so other identification algorithms need to be considered, for example, the identification algorithms proposed in our previously published papers (Jianhong, 2012, 2013, 2014a, 2014b, 2017, 2019). In general, statistical accuracy analysis can be used to test whether the parameter estimators are efficient or asymptotically efficient.
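The recursive least squares identification and the variance curves discussed above can be sketched on a toy linear regression. This is a minimal sketch under stated assumptions, not the paper's simulation: the true parameter vector, the three sinusoidal regressors, the noise level, and the initial covariance 1000·I are all hypothetical choices. The diagonal of the matrix `P` is recorded at each step; under white noise it is proportional to the variance of each parameter estimate, so its history plays the role of the variance curves.

```python
import math
import random


def rls_update(theta, P, phi, y):
    """One recursive least squares step for the model y = phi^T theta + noise."""
    n = len(theta)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]
    error = y - sum(phi[i] * theta[i] for i in range(n))  # prediction error
    theta = [theta[i] + gain[i] * error for i in range(n)]
    # P is symmetric, so phi^T P equals the transpose of P phi.
    P = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return theta, P


def run_demo(num_samples=500, noise_std=0.01, seed=0):
    """Identify three hypothetical parameters and record the diagonal of P."""
    rng = random.Random(seed)
    true_theta = [0.5, -0.3, 0.8]  # assumed values, for illustration only
    theta = [0.0, 0.0, 0.0]        # initial parameter estimate
    P = [[1000.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    diag_history = []
    for t in range(num_samples):
        # Three sinusoids at different frequencies keep the regressor exciting.
        phi = [math.sin(0.1 * t), math.sin(0.37 * t + 1.0), math.cos(0.9 * t)]
        y = sum(p * th for p, th in zip(phi, true_theta)) + rng.gauss(0.0, noise_std)
        theta, P = rls_update(theta, P, phi, y)
        diag_history.append([P[i][i] for i in range(3)])
    return true_theta, theta, diag_history


if __name__ == "__main__":
    true_theta, estimate, history = run_demo()
    print("true:", true_theta)
    print("estimate:", estimate)
    print("final diag(P):", history[-1])
```

A single sinusoid would excite only two parameters, which is why the sketch uses several frequencies; plotting `diag_history` against the sample index gives curves comparable in spirit to the variance curves described in the text.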

9. Conclusion

In this paper, statistical accuracy analysis for virtual reference feedback tuning is studied, from a statistical point of view, for a closed-loop system with a two degrees of freedom controller. When two desired closed-loop transfer functions are given, a virtual input and a virtual disturbance are constructed to obtain one cost function, which corresponds to two model-matching problems. After this cost function is transformed into a simplified linear regression form, the classical least squares algorithm is applied to identify the unknown controller parameters. Probability theory and matrix theory are then used to derive the asymptotic variance matrix of the parameter vector, so that optimal input design and model structure validation can be analysed based on the obtained asymptotic variance matrix.

This paper extends our previous research and builds on our earlier published papers on virtual reference feedback tuning control; its goal is to give a complete analysis and summary of that previous work. Our future work will turn to nonlinear and adaptive control.

Acknowledgments

This work was supported by the Natural Science Foundation of China (Grant No. 60874037) and the Grants from the Foundation of Jiangxi University of Science and Technology (No. jxxjb18020). The first author is grateful to Professor Eduardo F Camacho for his warm invitation in his control lab at the University of Seville, Seville, Spain. Thanks for his assistance and advice on zonotopes in guaranteed state estimation and model predictive control.

Disclosure statement

No potential conflict of interest was reported by the authors.

Data availability statement

The data used to support the findings of this study are available from the corresponding author upon request.

References

- Alamo, T., Bravo, J. M., & Camacho, E. F. (2005). Guaranteed state estimation by zonotopes. Automatica, 41(6), 1035–1043. https://doi.org/10.1016/j.automatica.2004.12.008

- Bazanella, A. S., Gevers, M., Mišković, L., & Anderson, B. D. O. (2008). Iterative minimization of H2 control performance criterion. Automatica, 44(10), 2549–2559. https://doi.org/10.1016/j.automatica.2008.03.014

- Bravo, J. M., Alamo, T., & Camacho, E. F. (2006). Bounded error identification of systems with time varying parameters. IEEE Transactions on Automatic Control, 51(7), 1144–1150. https://doi.org/10.1109/TAC.2006.878750

- Bravo, J. M., Alamo, T., Vasallo, M., & Gegundez, M. E. (2017). A general framework for predictors based on bounding techniques and local approximation. IEEE Transactions on Automatic Control, 62(7), 3430–3435. https://doi.org/10.1109/TAC.2016.2612538

- Bravo, J. M., Suarez, A., Vasallo, M., & Alamo, T. (2016). Slide window bounded error time varying systems identification. IEEE Transactions on Automatic Control, 61(8), 2282–2287. https://doi.org/10.1109/TAC.2015.2491539

- Campi, M. C., Lecchini, A., & Savaresi, S. M. (2002). Virtual reference feedback tuning: A direct method for the design of feedback controllers. Automatica, 38(8), 1337–1346. https://doi.org/10.1016/S0005-1098(02)00032-8

- Campi, M. C., Lecchini, A., & Savaresi, S. M. (2005). An application of the virtual reference feedback tuning method to a benchmark problem. European Journal of Control, 9(1), 66–76. https://doi.org/10.3166/ejc.9.66-76

- Campi, M. C., & Savaresi, S. M. (2006). Direct nonlinear control design: The virtual reference feedback tuning (VRFT) approach. IEEE Transactions on Automatic Control, 51(1), 14–27. https://doi.org/10.1109/TAC.2005.861689

- Campi, M. C., & Weyer, E. (2002). Finite sample properties of system identification methods. IEEE Transactions on Automatic Control, 47(8), 1329–1334. https://doi.org/10.1109/TAC.2002.800750

- Care, A., Csáji, B. C., Campi, M. C., & Weyer, E. (2018). Finite-sample system identification: An overview and a new correlation method. IEEE Control Systems Letters, 2(1), 61–66. https://doi.org/10.1109/LCSYS.2017.2720969

- Guardabassi, G. O., & Savaresi, S. M. (2012). Virtual reference direct design method: An off-line approach to data-based control system design. IEEE Transactions on Automatic Control, 45(5), 954–959. https://doi.org/10.1109/9.855559

- Jianhong, W. (2012). Virtual reference feedback tuning control for constrained closed loop system. Journal of Shanghai Jiaotong University, 46, 1398–1405. https://doi.org/1006-2467

- Jianhong, W. (2013). Virtual reference feedback tuning control design for nonlinear closed loop system. Electronic Optics and Control, 20, 23–27. https://doi.org/10.3969/j.issn.1671X.2013.01.006

- Jianhong, W. (2014a). Regularization design of virtual reference feedback tuning time varying control. Electronic Optics and Control, 21, 77–82. https://doi.org/10.3969/j.issn.1671-637X.2014.08.017

- Jianhong, W. (2014b). Virtual reference feedback tuning control and iterative minimization identification. Systems Engineering Theory and Practice, 34, 1256–1266. https://doi.org/1000-6788

- Jianhong, W. (2017). Virtual reference feedback tuning design in internal model control. Journal of System Science and Mathematical Science, 37, 355–369. https://doi.org/123.57.41.99/jweb_xtkxysx/CN/93C40

- Jianhong, W. (2019). Zonotope parameter identification for virtual reference feedback tuning control. International Journal of Systems Science, 50(2), 351–364. https://doi.org/10.1080/00207721.2018.1552767

- Lecchini, A., Campi, M. C., & Savaresi, S. M. (2002). Virtual reference feedback tuning for two degrees of freedom controllers. International Journal of Adaptive Control and Signal Processing, 16(5), 355–371. https://doi.org/10.1002/(ISSN)1099-1115

- Milanese, M., & Novara, C. (2004). Set membership identification of nonlinear systems. Automatica, 40(6), 957–975. https://doi.org/10.1016/j.automatica.2004.02.002

- Sala, A., & Esparza, A. (2005). Extension to virtual reference feedback tuning: A direct method for the design of feedback controllers. Automatica, 41(8), 1473–1476. https://doi.org/10.1016/j.automatica.2005.02.008

- Tanaskovic, M., Fagiano, L., Novara, C., & Morari, M. (2017). Data-driven control of nonlinear systems: An on-line direct approach. Automatica, 75, 1–10. https://doi.org/10.1016/j.automatica.2016.09.032

- Weyer, E. (2000). Finite sample properties of system identification of ARX models under mixing conditions. Automatica, 36(9), 1291–1299. https://doi.org/10.1016/S0005-1098(00)00039-X

- Weyer, E., Campi, M. C., & Csáji, B. C. (2017). Asymptotic properties of SPS confidence regions. Automatica, 82, 287–294. https://doi.org/10.1016/j.automatica.2017.04.041