ABSTRACT

Background

Health services interventions are typically more effective in randomised controlled trials than in routine healthcare. One explanation for this ‘voltage drop’, i.e. reduction in effectiveness, is a reduction in intervention fidelity, i.e. the extent to which a programme is implemented as intended. This article discusses how to optimise intervention fidelity in nationally implemented behaviour change programmes, using as an exemplar the National Health Service Diabetes Prevention Programme (NHS-DPP), a behaviour change intervention for adults in England at increased risk of developing Type 2 diabetes, delivered by four independent provider organisations. We summarise key findings from a thorough fidelity evaluation of the NHS-DPP that assessed design (whether programme plans were in accordance with the evidence base), training (of staff to deliver key intervention components), delivery (of key intervention components) and receipt (participant understanding of intervention content), and we highlight lessons learned for the implementation of other large-scale programmes.

Results

NHS-DPP providers delivered the majority of behaviour change content specified in their programme designs. However, drift in fidelity was apparent at multiple points: from the evidence base, through programme commissioning, to providers’ programme designs. A clear theoretical rationale for the intervention contents was lacking in design, training, and delivery. Our evaluation suggests that many fidelity issues might have been less prevalent had there been a clear underpinning theory from the outset.

Conclusion

We provide recommendations to enhance the fidelity of nationally implemented behaviour change programmes. Involving a behaviour change specialist in clarifying the theory of change would minimise drift of key intervention content. Further, as loss of fidelity was notable at the design stage, this stage should be given particular attention. Based on these recommendations, we describe examples of how we have worked with commissioners of the NHS-DPP to enhance fidelity in the next roll-out of the programme.

Background

Intervention fidelity is defined as the extent to which an intervention is implemented as intended (Bellg et al., 2004). Without a fidelity assessment, it cannot be ascertained whether intervention (in)effectiveness is due to intrinsic intervention features or to factors added or omitted during implementation (Bellg et al., 2004). Large-scale programmes sometimes commission several different providers (private, state or third sector) to deliver the programme on their behalf, following central guidance with some room for interpretation. Assessing the fidelity of large-scale programmes is therefore particularly important: different people are involved at each stage of the programme, increasing the risk of drift in fidelity at each stage of implementation. The need to adapt programmes to different populations, and to adapt providers’ pre-existing programmes to fit the specification, presents further challenges to fidelity.

The present article considers drift in intervention fidelity in the roll-out of the National Health Service (NHS) England Diabetes Prevention Programme. NHS England commissioned the NHS Diabetes Prevention Programme (NHS-DPP), a nationally implemented behaviour change programme for adults in England at high risk of developing Type 2 diabetes (T2DM), following a review of the international evidence for diabetes prevention programmes (Ashra et al., 2015) and a pilot of the programme (Penn et al., 2018). The development of the NHS-DPP drew upon widespread international experience of such programmes: diabetes prevention trials from multiple countries (e.g. Knowler et al., 2002; Tuomilehto et al., 2001) suggest that lifestyle programmes are effective in promoting behaviour change and reducing the incidence of T2DM. These results have translated into routine practice (Aziz, Absetz, Oldroyd, Pronk, & Oldenburg, 2015; Dunkley et al., 2014), albeit with smaller effect sizes. Although these smaller effects may indicate a drift in fidelity, intervention fidelity has not been systematically considered in any of these countries.

The NHS-DPP was delivered across England by four independent provider organisations between 2016 and 2019 (Valabhji et al., 2020). This commissioning approach allows the programme to be delivered at scale and efficiently, and gives local health services a choice of provider approaches so that delivery can be adapted to local context. NHS England stipulated the intervention content of the programme in a published Service Specification (NHS England, 2016), based on current evidence (Ashra et al., 2015; National Institute for Health and Care Excellence (NICE), 2012), to which the four providers had to adhere. This specified a minimum of 13 face-to-face group sessions across nine months, with groups of no more than 15–20 adults with non-diabetic hyperglycaemia. The NHS-DPP service and referral process are described in detail elsewhere (Hawkes, Cameron, Cotterill, Bower, & French, 2020; Howells, Bower, Burch, Cotterill, & Sanders, 2021). Behaviour change techniques (BCTs), such as setting behavioural goals, are regarded as the ‘active ingredients’ of interventions designed to change behaviour (Michie et al., 2013). The Service Specification emphasised BCTs that support self-regulation of behaviour (e.g. action planning, self-monitoring) as core components of the intervention relevant to T2DM prevention (NHS England, 2016).

Multiple aspects of fidelity can be assessed, including design (i.e. whether programme plans were in accordance with the evidence base), training (of staff to deliver intervention components), delivery (of intervention components), and receipt (i.e. participant understanding of intervention content) (Bellg et al., 2004). Most fidelity research concerns interventions developed by those conducting the evaluation, and previous fidelity assessments of diabetes prevention programmes have been superficial, for example, defining fidelity only as whether the intervention was based on a standard curriculum (Aziz et al., 2015). There are very few independent fidelity assessments of behaviour change interventions that apply robust fidelity frameworks, even fewer that assess large-scale programmes (Lorencatto, West, Christopherson, & Michie, 2013), and none that involve multiple providers.

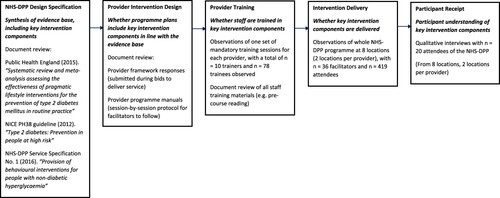

This article aims to highlight lessons for the implementation of large-scale programmes, using our fidelity evaluation of the NHS-DPP as an exemplar. Figure 1 illustrates a schematic of each fidelity domain assessed in the NHS-DPP. Considering multiple domains allows the drift between intended and actual delivery to be investigated, highlighting the dynamic nature of fidelity over time. Previous fidelity assessments have mainly focused on fidelity of delivery (McGee, Lorencatto, Matvienko-Sikar, & Toomey, 2018). However, if other domains of fidelity are not accounted for, accurate conclusions cannot be drawn about what happened in an intervention and why (Toomey et al., 2020). Our evaluation therefore provides a more comprehensive and systematic assessment of fidelity. We begin by briefly summarising the findings from our fidelity evaluation of the NHS-DPP. Based on these findings, we suggest recommendations for promoting fidelity in large-scale programme implementation, which we expect to be of particular interest to commissioners and provider organisations.

Key findings

Programme design

Assessing the fidelity of design of large-scale programmes differs from the more usual assessment of fidelity within a single research team, because different stakeholders are involved throughout intervention design. Specifically, these stakeholders include those synthesising the evidence base, commissioners producing a specification, independent providers designing or adapting their programmes based on this specification, and local NHS organisations who choose the provider. The risk of drift in fidelity from the evidence base to providers’ programme plans is therefore higher in large-scale programme implementation.

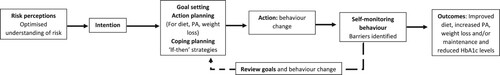

If the theory underpinning a behaviour change programme is poorly chosen, not articulated, or not translated into practice, it will not provide a clear rationale for what should be included in the programme. A logic model is one way to represent a theory of how and why an intervention is expected to work, illustrating anticipated causal pathways between techniques and desired outcomes (Skivington et al., 2021). Neither the commissioners nor the providers developed an explicit logic model for the NHS-DPP. We therefore developed a logic model detailing the specific BCT content of the NHS-DPP, based on the specification documents (which summarised the up-to-date evidence base) underpinning the programme (Ashra et al., 2015; NHS England, 2016; National Institute for Health and Care Excellence (NICE), 2012). Our logic model proposed that providing information on the risk of T2DM to people at risk would promote the formation of behaviour change intentions, followed by a self-regulatory cycle including goal setting and monitoring of behaviour, to produce the desired behavioural changes and reduction in T2DM risk (Hawkes, Miles, & French, 2021) (see Figure 2). Compared with the logic model developed by Penn et al. (2018) during evaluation of the pilot NHS-DPP in the early stages of implementation, this logic model included a more detailed description of the BCT content and of the expected mechanisms of action for achieving the desired outcomes.

Figure 2. Simplified logic model illustrating how the NHS-DPP is expected to work in achieving health outcomes.
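A logic model of this kind can be read as a directed graph of expected causal links, and one practical use of such a graph is to check whether a planned technique has a stated rationale, i.e. whether it lies on some pathway to the intended outcome. The Python sketch below is illustrative only: the node labels, the `LOGIC_MODEL` dictionary and the `causal_path` helper are hypothetical simplifications of the description above, not the published NHS-DPP logic model or any tool used in the evaluation. The loop between goal setting, self-monitoring and reviewing goals stands in for the self-regulatory cycle.

```python
from collections import deque

# Hypothetical, simplified encoding of the logic model: each node lists the
# node(s) it is expected to lead to. The loop goal setting -> self-monitoring
# -> review of goals -> goal setting represents the self-regulatory cycle.
LOGIC_MODEL = {
    "information on T2DM risk": ["intention to change behaviour"],
    "intention to change behaviour": ["goal setting"],
    "goal setting": ["self-monitoring of behaviour"],
    "self-monitoring of behaviour": ["review of goals", "behaviour change"],
    "review of goals": ["goal setting"],
    "behaviour change": ["reduced T2DM risk"],
}


def causal_path(model, start, outcome="reduced T2DM risk"):
    """Return the shortest path from start to outcome, or None if none exists.

    A technique with no path to the outcome has no rationale in this model.
    Breadth-first search with a 'seen' set, so the cycle cannot loop forever.
    """
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == outcome:
            return path
        for nxt in model.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


print(" -> ".join(causal_path(LOGIC_MODEL, "goal setting")))
print(causal_path(LOGIC_MODEL, "pros and cons"))  # not in this toy model
```

A planned BCT for which no path is found, such as ‘pros and cons’ in this toy model, would be flagged as lacking an articulated rationale; in a fuller model it might connect to a stage omitted from this simplification.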

We extracted information on the theory underpinning providers’ programmes using Michie and Prestwich’s Theory Coding Scheme (Michie & Prestwich, 2010). We extracted information on programme format and BCTs using the Template for Intervention Description and Replication framework (Hoffmann et al., 2014) and the BCT Taxonomy v1 (Michie et al., 2013), to determine whether the planned content and BCTs in each of the four providers’ programmes had been designed with fidelity to the NHS-DPP programme specification, which was based on the evidence base (Hawkes, Cameron, Bower, & French, 2020). The NHS-DPP programme specification documents (NHS England, 2016; National Institute for Health and Care Excellence (NICE), 2012) were compared with each provider’s programme plans regarding programme format and BCTs.

All four providers had good fidelity of design to the programme format, including planned programme duration, frequency of sessions, and planned group sizes. However, BCT fidelity varied between providers’ programme plans and showed drift from the specification: providers planned to deliver 74% of specified BCTs (Hawkes, Cameron, Bower, et al., 2020). Key BCTs missing from some providers’ programme designs included ‘review outcome goals’, ‘pros and cons’, ‘credible source’, and ‘graded tasks’. Overall, justification for providers’ planned BCTs was unclear, varied between providers, and was often unrelated to the logic model based on the design specification underpinning the programme (Hawkes, Miles, et al., 2021). Given that some further drift was to be expected during programme delivery, this lack of fidelity to the NHS-DPP programme specification already at the intervention design stage is significant. It highlights the complexity of translating detailed research-based behavioural interventions into consistent plans across multiple providers, and suggests that commissioners need to review the granular detail of programme plans to ensure they are in line with the evidence base.
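Fidelity of design, as summarised above, reduces to a simple proportion: the number of specified BCTs that appear in a provider’s plan, divided by the number of specified BCTs. The Python sketch below shows that calculation under stated assumptions: the BCT lists are hypothetical and do not reproduce the NHS-DPP specification or any provider’s plan, and the `percent_present` helper is illustrative rather than the coding procedure used in the evaluation. The same calculation would apply to training or delivery by substituting the BCTs coded in training sessions or observed in programme sessions.

```python
def percent_present(specified, observed):
    """Percentage of specified BCTs that also appear in `observed`.

    Only presence is checked: BCTs in `observed` that are not in the
    specification do not count, and quality of delivery is ignored.
    """
    specified = set(specified)
    if not specified:
        raise ValueError("the specification lists no BCTs")
    return 100 * len(specified & set(observed)) / len(specified)


# Hypothetical, illustrative BCT lists (BCT Taxonomy v1-style labels), not the
# actual NHS-DPP specification or any provider's programme plan.
specification = {
    "goal setting (behaviour)",
    "action planning",
    "self-monitoring of behaviour",
    "problem solving",
    "review outcome goal(s)",
    "pros and cons",
    "credible source",
    "graded tasks",
}
provider_plan = {
    "goal setting (behaviour)",
    "action planning",
    "self-monitoring of behaviour",
    "problem solving",
    "information about health consequences",  # planned, but not in this toy specification
}

print(f"{percent_present(specification, provider_plan):.1f}% of specified BCTs planned")
print("Missing:", sorted(specification - provider_plan))
```

Counting presence only mirrors the pragmatic coding decision discussed under limitations: a BCT counts once it appears, regardless of how well or how often it is delivered.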

Staff training

A fidelity assessment of staff training in the intervention content of large-scale programmes is important because, if poor fidelity of programme delivery is detected, it needs to be clear whether this is due to ineffective training or to other contextual factors in programme implementation. Two members of the research team, trained in BCT coding and the BCT Taxonomy (BCTTv1 Online Training, n.d.), observed one set of mandatory training courses for each provider. We coded the BCTs in which staff were trained during those sessions, together with the associated training materials, to evaluate whether the four NHS-DPP providers trained their staff to deliver BCT content with fidelity to their programme plans. We also assessed how thoroughly staff had been trained in BCTs (e.g. whether staff were informed about, directed, instructed, demonstrated, practiced, or modelled BCT delivery) (Hawkes, Cameron, Miles, & French, 2021).

Overall, the training courses for staff covered between 46% and 85% of the BCTs in providers’ programme plans; thus, staff were not trained in a number of planned BCTs. One explanation could be the lack of an explicit underpinning theory or logic model from the outset, i.e. the lack of a clear rationale for the intervention contents. The most commonly used method of training was instructing staff how to deliver a BCT, rather than practicing or modelling BCT delivery (Hawkes, Cameron, et al., 2021). We concluded that providers may need to incorporate more comprehensive BCT training, ensuring that staff have the opportunity to practice BCT delivery during their training courses. Better training in how to deliver the more complex self-regulatory BCTs in a group setting (see Figure 2) could also increase staff understanding of how those BCTs work in changing behaviour.

Programme delivery

An assessment of delivery fidelity is important because, if providers are not delivering the BCTs highlighted by the evidence base, the programme is likely to be less effective, and it would be difficult to establish the reasons for programme (in)effectiveness (Bellg et al., 2004). We assessed fidelity of delivery of the NHS-DPP to (a) each of the four providers’ programme plans and (b) the programme specification (which was based on the evidence base), from observations of the whole NHS-DPP programme in eight locations across England (i.e. two locations per provider) (French, Hawkes, Bower, & Cameron, 2021). Two members of the research team conducted the observations, each having been trained in the BCT Taxonomy (BCTTv1 Online Training, n.d.) prior to data collection.

We found that between 47% and 68% of the BCTs included in the programme specification were delivered, whereas between 70% and 89% of the BCTs included in providers’ programme plans were delivered. There is no clear consensus on what constitutes ‘good’ fidelity; however, there is a general view that >80% indicates ‘high’ fidelity and <50% indicates ‘low’ fidelity (Borrelli, 2011; Lorencatto et al., 2013). Thus, fidelity to the programme specification was low to moderate, but fidelity to programme plans was generally high. BCTs that did not require recipients to enact them, notably providing information about health consequences, were delivered extensively. BCTs involving self-regulation of behaviour (e.g. problem solving, reviewing goals), which the specification documents underpinned by the evidence base emphasised as important (see Figure 2), were under-delivered (French et al., 2021).
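The cut-offs cited above can be expressed as a small classification rule. The Python sketch below assumes that values of exactly 50% or 80% count as ‘moderate’, since the cited convention states only strict inequalities; the function name `classify_fidelity` is hypothetical. Applied to the ends of the ranges reported here, it labels delivery fidelity to the specification as low to moderate and fidelity to providers’ plans as moderate to high.

```python
def classify_fidelity(percent: float) -> str:
    """Label a fidelity percentage using the conventional cut-offs.

    >80% is 'high' and <50% is 'low'; treating everything in between
    (including exactly 50% and 80%) as 'moderate' is an assumption.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must lie between 0 and 100")
    if percent > 80:
        return "high"
    if percent < 50:
        return "low"
    return "moderate"


# Ends of the ranges reported for NHS-DPP delivery (percent of BCTs delivered)
reported_ranges = {
    "programme specification": (47, 68),
    "providers' programme plans": (70, 89),
}

for target, (lowest, highest) in reported_ranges.items():
    print(f"{target}: {classify_fidelity(lowest)} to {classify_fidelity(highest)}")
```

Because the cut-offs are conventions rather than empirically derived thresholds, a single percentage near a boundary (e.g. 79% versus 81%) should not be over-interpreted.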

Further, an in-depth assessment of the quality of goal-setting delivery in the NHS-DPP found that this technique was not delivered in line with what the goal setting literature suggests is the most effective for changing health behaviours (Hawkes, Warren, Cameron, & French, 2021). For example, although providers generally encouraged setting specific goals, service users were not encouraged to make a public commitment to their behaviour change and the reviewing of goals was rarely specified (Hawkes, Warren, et al., 2021). This may highlight the need for programme developers and delivery staff to be more thoroughly trained in effective delivery of self-regulatory BCTs, for example by demonstrating effective BCT delivery and allowing staff to practice delivery of BCTs during training sessions (Hawkes, Cameron, et al., 2021).

Our results suggested that there was a gap between programme delivery and what the evidence base indicated is most effective, largely because of failures to translate the evidence base into the providers’ programme plans (French et al., 2021). This illustrates the higher likelihood of drift in fidelity of BCT delivery when experts, commissioners, and providers are involved at different stages of programme implementation. More consistent use of a logic model would give greater clarity about the reasons for intervention content, which may prevent this drift in fidelity.

Participant receipt

Despite the importance of self-regulatory BCTs in behaviour change programmes, little is known about how participants understand these BCTs, and qualitative evaluation of receipt is rare (Hankonen, 2021). Even the most rigorously designed interventions delivered with perfect fidelity will be ineffective at achieving desired behaviour changes if the intervention content is not understood by recipients (Hankonen, 2021).

We conducted 20 telephone interviews with participants who had attended one of the eight NHS-DPP programmes that the research team observed (Miles, Hawkes, & French, 2021). Participants were asked about their understanding of self-regulatory BCTs, because these were regarded in the specification as essential for behaviour change (see Figure 2). When participants were asked about their understanding of a BCT, user-friendly examples were used to explain what was meant by that BCT and/or prompts were used that referred to relevant delivery material, to help account for differing levels of health literacy across participants. The topic guide was used flexibly to allow discussion of enactment of BCTs when participants spontaneously shared relevant experiences.

Our research found that participants generally understood the BCTs ‘self-monitoring of behaviours’ and ‘feedback on outcomes’, but the extent to which participants understood ‘goal setting’ and ‘problem solving’ varied. Recall and understanding of ‘action planning’ were limited across most participants (Miles et al., 2021). Some of these findings were consistent with our observations of programme delivery across providers. For example, the BCTs ‘self-monitoring of behaviours’ and ‘feedback on outcomes’ were delivered frequently, and participants described an understanding of these BCTs. The frequency of delivery of ‘goal setting’ and ‘problem solving’ varied widely across providers, which may explain the variation in participant understanding of these BCTs, and ‘action planning’ tended to be delivered infrequently, which may account for the limited recall of this BCT across participants (Miles et al., 2021).

Our findings on the lack of understanding of some self-regulatory BCTs could be explained by the under-delivery of these BCTs (French et al., 2021; Hawkes, Warren, et al., 2021), by the lack of comprehensive staff training in some of these techniques (Hawkes, Cameron, et al., 2021), or by both. The large variation between participants in their understanding of BCTs (especially goal setting and problem solving) could be attributed to variation in delivery and training across providers, although some BCTs, such as self-monitoring of behaviours, may be intrinsically easier to understand and less dependent on frequency of delivery. A lack of understanding of these self-regulatory BCTs is likely to limit the extent to which programmes produce long-term behaviour change.

Discussion

The present programme of research examining the fidelity of the NHS-DPP is the most comprehensive evaluation of a nationally implemented programme to date; it can therefore provide lessons for other large-scale programmes internationally. Early outcomes suggest that the NHS-DPP is effective (Valabhji et al., 2020), but improving future programme implementation and adherence to the evidence base could increase the likelihood of achieving sustained outcomes (Dunkley et al., 2014). We suggest recommendations for commissioners and providers to increase the fidelity of behaviour change content in other large-scale programmes (see Table 1). We also highlight some policy changes that have been made in the NHS-DPP in response to these recommendations.

Table 1. Recommendations to enhance fidelity of nationally implemented programmes.

Recommendations for the promotion of fidelity for large-scale programme implementation

There should be an explicit theoretical underpinning of the programme to ensure a clear rationale for the inclusion of intervention techniques. A logic model, for example, is one way in which multiple theories and evidence sources can be represented diagrammatically. Our analysis of the NHS-DPP suggested that many fidelity issues might have been less prevalent had there been a clear underpinning theory from the outset. Including a logic model during programme design would identify the important BCTs to be translated into programme plans, which may prevent drift in fidelity at the training and delivery stages of implementation. This recommendation has now been incorporated into the NHS England specification documents for the third wave of commissioning of the NHS-DPP: as a result of the current research, NHS England now require providers to produce a logic model justifying the key intervention components of their programmes, and to describe explicitly how and why they expect their planned BCTs to work.

During the bidding process, commissioners should be clear about the criteria they use to evaluate providers (e.g. clarity of the underpinning theory used to select BCTs in intervention design). Robust quality assurance during commissioning would ensure that the evidence base is clearly translated into the contents of programme plans, reducing the likelihood that BCT fidelity is diluted from the evidence review, through commissioning, to programme design. Involving a behaviour change specialist during commissioning would ensure that gaps in intervention design are detected early. The research team have since worked with the commissioners of the NHS-DPP on the third-wave roll-out of the programme to ensure that the BCTs in the evidence base are clearly translated into the NHS-DPP programme specification and that the required behaviour change content of the programme is clearly articulated. For example, NHS England now require providers to describe explicitly in their bids how they will support service users with self-regulatory techniques, including support with setting, monitoring and reviewing goals. Providers who are commissioned to deliver the programme could be further supported to refine their programmes in line with the evidence base before programme delivery; this may be particularly important for providers who have adapted pre-existing programmes to the current specification.

The involvement of a behaviour change specialist at all stages of programme implementation is important. Specialists could deliver the staff training to provide an in-depth understanding of the psychological mechanisms of BCTs, and allowing staff to practice BCT delivery could increase competence (Hawkes, Cameron, et al., 2021). This would be particularly advantageous for the more complex self-regulatory techniques underpinned by the evidence base (e.g. goal setting, problem solving). The training should also be appropriate to the session format (e.g. group delivery) and the target population (e.g. tailoring techniques to culture, age, and ethnicity). Commissioners should therefore be explicit about the minimum level of qualifications and training required for staff to deliver complex behaviour change content, and ensure that providers allocate enough time for training in such techniques. In response to these recommendations, during commissioning of the third wave of the programme, NHS England required providers to state explicitly in their programme bids how staff would be trained in intervention content, specifying that providers should train staff thoroughly in behaviour change content and group delivery skills and allow enough time for front-line staff to practice intervention content before delivering in the field.

Future work of this nature should also consider changes in fidelity over time, once the programme is implemented in the field. Providers should ensure that delivery staff receive continued monitoring and feedback from experts in behaviour change so that BCTs are delivered effectively, which also has the potential to increase participant engagement with, and adherence to, the programme. This highlights the conflict between rapid roll-out of large-scale interventions and prioritising implementation fidelity: without thorough training in these techniques and ongoing audit and feedback, rapid roll-out could result in sub-optimal delivery and limited participant understanding of intervention content, with consequences for programme outcomes.

Strengths and limitations

This programme of research is the first independent evaluation of the fidelity of a nationally implemented diabetes prevention programme in the world. We assessed each domain of fidelity, building on the National Institutes of Health Behavior Change Consortium guidance (Bellg et al., 2004), to provide a comprehensive understanding of the behaviour change content included in the NHS-DPP. We have also shown how recommendations arising from this research have been discussed and implemented with stakeholders to improve the fidelity of behaviour change content in future roll-outs of the NHS-DPP. However, although we hope that these methods will be useful models for research teams evaluating other large-scale programmes with multiple providers, there are limitations to acknowledge.

First, we obtained all relevant documentation from all providers to fully assess the behaviour change content in the design, training, and delivery of the NHS-DPP. However, a challenge of examining fidelity when the research team is independent of the teams that developed the intervention is that the research team could not ascertain exactly what providers intended to be the ‘active ingredients’ of their interventions, as these were not clearly spelt out. Instead, standardised coding frameworks (e.g. Michie et al., 2013; Michie & Prestwich, 2010) were used to interpret this. Second, providers were considered to demonstrate fidelity when a BCT stated in the full programme specification was present in providers’ intervention design and delivery. With the sole exception of goal setting, we did not assess how well each BCT was delivered. This pragmatic decision was taken even though there is no compelling evidence that a single use of a technique is sufficient, nor is it clear that BCTs were delivered to the standard required to be effective.

A necessary limitation was that we had to decide how much of the NHS-DPP to attempt to capture. With four providers delivering the programme, being entirely sure that our fidelity assessment was reliable would require extensive observation of delivery or training. For example, the present programme of work observed one complete set of mandatory training courses per provider (Hawkes, Cameron, et al., 2021) and complete delivery of the nine-month NHS-DPP at eight sites, involving 35 facilitators observed across 111 sessions (French et al., 2021). Such observation cannot be truly representative of an entire programme; however, we aimed to ensure that the research captured what was delivered in complete courses, observed in diverse geographical locations across England, within the constraints of time and resources. Finally, most service users interviewed about their understanding of the behaviour change content (Miles et al., 2021) had completed all or most sessions of the NHS-DPP and were interviewed after the nine-month programme had finished; participants who had completed less of the programme may have reported a different understanding of BCT content, and participants may have had some difficulty recalling the detail of the programme at the time of the interview.

Conclusions

This article identifies lessons learned from a unique fidelity assessment of a nationally implemented behaviour change programme. We suggest recommendations for future implementation of large-scale programmes, in which the risk of drift in fidelity is higher, to ensure that interventions are in line with the evidence base regarding effectiveness, and we describe examples of policy changes that have been made in the NHS-DPP as a result of this programme of research. These recommendations would incur minor costs, but they are expected to yield reasonably sized benefits in effectiveness if implemented, by reducing intervention drift.

Ethics statement

The wider programme of research of which this study is a part was reviewed and approved by the North West Greater Manchester East NHS Research Ethics Committee (Reference: 17/NW/0426, 1 August 2017). Full written consent was obtained from all participants included in this study.

Acknowledgements

The authors would like to thank the NHS-DPP Programme team and all provider organisations for providing all relevant documentation required for this manuscript. We would like to thank the NHS-DPP providers for assisting in the organisation of observations at each site for both the staff training observations and programme delivery observations. We are grateful to all the staff trainers, trainees, facilitators, and attendees who consented to researchers observing and audio-recording sessions in which they were present. We thank the participants who took part in the qualitative telephone interviews for their time and effort. We are also grateful to the Fuse research team at Newcastle University who completed the evaluation of the pilot NHS-DPP and shared early documentation and findings with our wider research team during the early stages of our analysis. We would also like to thank the wider DIPLOMA team, who have contributed to and provided useful feedback on all outputs for this programme of research. We would like to thank Dr Elaine Cameron for her input into some of the studies this overview paper integrates.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Ashra, N. B., Spong, R., Carter, P., Davies, M. J., Dunkley, A., & Gillies, C. (2015). A systematic review and meta-analysis assessing the effectiveness of pragmatic lifestyle interventions for the prevention of Type 2 diabetes mellitus in routine practice. London: Public Health England. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/456147/PHE_Evidence_Review_of_diabetes_prevention_programmes-_FINAL.pdf

- Aziz, Z., Absetz, P., Oldroyd, J., Pronk, N. P., & Oldenburg, B. (2015). A systematic review of real-world diabetes prevention programs: Learnings from the last 15 years. Implementation Science, 10(1), 1–7. doi:10.1186/s13012-015-0354-6

- BCTTv1 Online Training. (n.d.). https://www.bct-taxonomy.com/

- Bellg, A. J., Borrelli, B., Resnick, B., Hecht, J., Minicucci, D. S., Ory, M., … Czajkowski, S. (2004). Enhancing treatment fidelity in health behavior change studies: Best practices and recommendations from the NIH behavior change consortium. Health Psychology, 23(5), 443–451. doi:10.1037/0278-6133.23.5.443

- Borrelli, B. (2011). The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. Journal of Public Health Dentistry, 71, S52–S63. doi:10.1111/j.1752-7325.2011.00233.x

- Dunkley, A. J., Bodicoat, D. H., Greaves, C. J., Russell, C., Yates, T., Davies, M. J., & Khunti, K. (2014). Diabetes prevention in the real world: Effectiveness of pragmatic lifestyle interventions for the prevention of Type 2 diabetes and of the impact of adherence to guideline recommendations: A systematic review and meta-analysis. Diabetes Care, 37(4), 922–933. doi:10.2337/dc13-2195

- French, D. P., Hawkes, R. E., Bower, P., & Cameron, E. (2021). Is the NHS diabetes prevention programme intervention delivered as planned? An observational study of intervention delivery. Annals of Behavioral Medicine, 55(11), 1104–1115. doi:10.1093/abm/kaaa108

- Hankonen, N. (2021). Participants’ enactment of behavior change techniques: A call for increased focus on what people do to manage their motivation and behavior. Health Psychology Review, 15(2), 185–194. doi:10.1080/17437199.2020.1814836

- Hawkes, R. E., Cameron, E., Bower, P. B., & French, D. P. (2020). Does the design of the NHS diabetes prevention programme intervention have fidelity to the programme specification? A document analysis. Diabetic Medicine, 37(8), 1357–1366. doi:10.1111/dme.14201

- Hawkes, R. E., Cameron, E., Cotterill, S., Bower, P., & French, D. P. (2020). The NHS diabetes prevention programme: An observational study of service delivery and patient experience. BMC Health Services Research, 20(1), 1–12. doi:10.1186/s12913-020-05951-7

- Hawkes, R. E., Cameron, E., Miles, L. M., & French, D. P. (2021). The fidelity of training in behaviour change techniques of intervention design in a national diabetes prevention programme. International Journal of Behavioral Medicine, 28, 671–682. doi:10.1007/s12529-021-09961-5

- Hawkes, R. E., Miles, L., & French, D. P. (2021). The theoretical basis of a nationally implemented Type 2 diabetes prevention programme: How is the programme expected to produce changes in behaviour? International Journal of Behavioral Nutrition and Physical Activity, 18(1), 1–12. doi:10.1186/s12966-021-01134-7

- Hawkes, R. E., Warren, L., Cameron, E., & French, D. P. (2021). An evaluation of goal setting in the NHS England diabetes prevention programme. Psychology & Health, 1–20. doi:10.1080/08870446.2021.1872790

- Hoffmann, T. C., Glasziou, P. P., Boutron, I., Milne, R., Perera, R., Moher, D., … Lamb, S. E. (2014). Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. BMJ, 348, g1687. doi:10.1136/bmj.g1687

- Howells, K., Bower, P., Burch, P., Cotterill, S., & Sanders, C. (2021). On the borderline of diabetes: Understanding how individuals resist and reframe diabetes risk. Health, Risk & Society, 20, 1–8. doi:10.1080/13698575.2021.1897532

- Knowler, W. C., Barrett-Connor, E., Fowler, S. E., Hamman, R. F., Lachin, J. M., Walker, E. A., & Nathan, D. M. (2002). Reduction in the incidence of Type 2 diabetes with lifestyle intervention or metformin. New England Journal of Medicine, 346(6), 393–403. doi:10.1056/NEJMoa012512

- Lorencatto, F., West, R., Christopherson, C., & Michie, S. (2013). Assessing fidelity of delivery of smoking cessation behavioural support in practice. Implementation Science, 8(1), 1–10. doi:10.1186/1748-5908-8-40

- McGee, D., Lorencatto, F., Matvienko-Sikar, K., & Toomey, E. (2018). Surveying knowledge, practice and attitudes towards intervention fidelity within trials of complex healthcare interventions. Trials, 19(1), 1–14. doi:10.1186/s13063-018-2838-6

- Michie, S., & Prestwich, A. (2010). Are interventions theory-based? Development of a theory coding scheme. Health Psychology, 29(1), 1–8. doi:10.1037/a0016939

- Michie, S., Richardson, M., Johnston, M., Abraham, C., Francis, J., Hardeman, W., … Wood, C. E. (2013). The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: Building an international consensus for the reporting of behavior change interventions. Annals of Behavioral Medicine, 46(1), 81–95. doi:10.1007/s12160-013-9486-6

- Miles, L. M., Hawkes, R. E., & French, D. P. (2021). How is the behaviour change technique content of the NHS diabetes prevention programme understood by participants? A qualitative study of fidelity, with a focus on receipt. Annals of Behavioral Medicine. doi:10.1093/abm/kaab093

- National Institute for Health and Care Excellence (NICE). (2012). PH38 Type 2 diabetes: Prevention in people at high risk. London: National Institute for Health and Care Excellence (Updated September 2017). https://www.nice.org.uk/guidance/ph38/resources/type-2-diabetes-prevention-in-people-at-high-risk-pdf-1996304192197

- NHS England. (2016, March). Service Specification No. 1: Provision of behavioural interventions for people with non-diabetic hyperglycaemia. [Version 01]. https://www.england.nhs.uk/wp-content/uploads/2016/08/dpp-service-spec-aug16.pdf

- Penn, L., Rodrigues, A., Haste, A., Marques, M. M., Budig, K., Sainsbury, K., … Goyder, E. (2018). NHS diabetes prevention programme in England: Formative evaluation of the programme in early phase implementation. BMJ Open, 8(2), e019467. doi:10.1136/bmjopen-2017-019467

- Skivington, K., Matthews, L., Simpson, S. A., Craig, P., Baird, J., Blazeby, J. M., … Moore, L. (2021). A new framework for developing and evaluating complex interventions: Update of medical research council guidance. BMJ, 374, n2061. doi:10.1136/bmj.n2061

- Toomey, E., Hardeman, W., Hankonen, N., Byrne, M., McSharry, J., Matvienko-Sikar, K., & Lorencatto, F. (2020). Focusing on fidelity: Narrative review and recommendations for improving intervention fidelity within trials of health behaviour change interventions. Health Psychology and Behavioral Medicine, 8(1), 132–151. doi:10.1080/21642850.2020.1738935

- Tuomilehto, J., Lindström, J., Eriksson, J. G., Valle, T. T., Hämäläinen, H., Ilanne-Parikka, P., … Salminen, V. (2001). Prevention of Type 2 diabetes mellitus by changes in lifestyle among subjects with impaired glucose tolerance. New England Journal of Medicine, 344(18), 1343–1350. doi:10.1056/NEJM200105033441801

- Valabhji, J., Barron, E., Bradley, D., Bakhai, C., Fagg, J., O’Neill, S., … Smith, J. (2020). Early outcomes from the English national health service diabetes prevention programme. Diabetes Care, 43(1), 152–160. doi:10.2337/dc19-1425