ABSTRACT

Augmented reality (AR) is a widely researched route for navigation support in laparoscopic surgery. Accurate registration is a crucial component for such AR systems. We introduce two methods for interactive registration that aim to be minimally invasive to the workflow and to mimic natural manipulation of 3D objects. The methods utilise spatially tracked laparoscopic tools to manipulate the virtual 3D content. We comparatively evaluated the methods against a reference, landmark-based registration method in a user study with 12 participants. We tested the methods for registration accuracy, time, and subjective usability perception. Our methods did not outperform the reference method on these parameters but showed promising results. The results indicate that our methods present no finalised solutions but that one of them is a promising approach for which we identified concrete improvement measures to be implemented in future research.

1. Introduction

With the ever-growing presence of minimally invasive surgery, augmented reality (AR) navigation support for laparoscopic procedures has attracted much attention from researchers over the past decade (Bernhardt et al. 2017). Laparoscopic AR aims to support surgeons by providing intraoperative navigation information. One surgical procedure that has been investigated by the laparoscopic AR community is partial nephrectomy (Detmer et al. 2017).

1.1. Motivation

Effective AR navigation requires an accurate AR registration process. Registration is the process of correctly aligning the virtual and physical worlds’ coordinate systems. In this work, we investigated initial registration for virtual kidney models, e.g. during partial nephrectomy. Initial registration means that no prior rough alignment is required. A range of methods has been proposed in the literature. Within these, one can distinguish between static and interactive methods. Landmark-based methods, a popular static approach, calculate the registration transformation (consisting of translation, rotation, and scale) on the basis of anatomical or artificial landmarks. Interactive methods allow the user to set these parameters manually, to conduct the registration iteratively, and to correct minor registration errors (Nicolau et al. 2011).

Interactive AR registration is an application of manual manipulation of virtual three-dimensional (3D) objects. In virtual reality, manipulation of virtual objects in peri-personal space has been well researched. The most promising approach seems to lie in mimicking natural interaction with real objects (Song et al. 2012; de Araújo et al. 2013; Mendes et al. 2019). These approaches have been shown to be highly effective in 3D object manipulation tasks (Bowman et al. 2012). In laparoscopic surgery, the natural interaction with physical objects occurs via the laparoscopic instruments and video stream. This article introduces two interaction methods that aim to apply the simulated natural interaction approach to the laparoscopic domain. These interaction methods were comparatively evaluated against a reference registration method.

1.2. Related work

One common static registration approach determines the registration transformation based on the positions of a set of paired landmark points. Landmark points can be anatomical or artificial landmarks, as well as external or internal landmarks (Bernhardt et al. 2017). Conrad et al. (2016) use four internal anatomical landmarks. These are manually selected in the preoperative virtual model and then recorded with a tracked pointing tool. Another approach lies in making use of landmarks that are relevant to the surgical procedure anyway (Hayashi et al. 2016). A different static approach is the use of laparoscopic (stereoscopic) still images with camera tracking to determine the registration transformation (Wang et al. 2015). Triangulation is used to calculate the kidney’s position and orientation.

Interactive registration methods allow the user to set some or all of the up to seven degrees of freedom by manual input (Bernhardt et al. 2017). This can increase the cognitive workload for the operator and the resulting accuracy depends on the operator’s skills (Detmer et al. 2017). On the other hand, interactive approaches allow for an iterative approach and real-time adjustments of the registration (Nicolau et al. 2011). One example is the manual rotation and translation of a 3D model until it correctly matches a recent screenshot of the laparoscopic image screen (Chen et al. 2014) using a classic desktop interface. Another approach (Pratt et al. 2012) determines the translational part of the registration by identifying and recording one landmark and sets the rotation manually. For the closely related field of intraoperatively correcting registration in video see-through AR, Léger et al. (2018) provide a manual input interface on the AR display device (a tablet computer). Multiple approaches exist that aim to introduce intuitive three-dimensional manipulation to the interactive registration process: For 2D/3D registration of X-ray images, Gong et al. (2013) propose using hand gestures to spatially manipulate the virtual model relative to the screen’s coordinate system. In their second approach, a virtual tool is placed next to the virtual model. A corresponding tracked tool is then placed next to the physical structure. By moving the tracked, physical tool, the virtual model can be manipulated to match the 2D image. In a similar approach, Thompson et al. (2015) track a tool relative to the screen’s coordinate system and manipulate the overlaid 3D model accordingly.

2. Technical methods

We developed two interactive registration methods: The Instrument Control (hereafter InstControl) and the Laparoscope Control (LapControl) method. As a reference, we implemented a landmark-based method (Paired point). We fixed the model scaling; thus, only position and orientation had to be set in the registration task.

2.1. InstControl registration method

In this method, the user sets the virtual model’s translational and rotational degrees of freedom manually. We followed the idea of natural interaction in VR and transferred it to a laparoscopic working space. The concept aims to simulate natural manipulation of the virtual content as if it were located in the laparoscopic surgical space. Moreover, we aimed to reduce the invasiveness of the additional registration task to the surgical workflow. This is achieved by utilising only devices that are already present in the workflow. Thus, the InstControl method enables the user to ‘grab’ and manipulate the virtual object with a tracked laparoscopic instrument or pointing tool. This registration method employs two interaction devices: One optically tracked laparoscopic pointing tool and an input device with at least four buttons. We propose using the laparoscopic camera head’s buttons. In summary, this approach aims to mimic natural interaction with the virtual object as realistically as possible. This is attempted by using the same instrument that would be used to manipulate a real object.

The registration workflow is initiated by pressing a Start button, which places the virtual model 100 mm in front of the pointing tool. The Start button is then repurposed for confirmation. The user now has three modes at their disposal, each of which is activated by holding down one of the three remaining buttons: In translation mode, the user can ‘grab’ the virtual model with the pointing tool and move it by moving the pointing tool. The model’s centre of gravity imitates the tool tip’s trajectory. In the axis rotation mode, the user can rotate the virtual model around the pointing tool’s axis by rotating the pointing tool itself. In the free rotation mode, the user can rotate the object around any axis that is perpendicular to the tool’s axis by moving the tool tip. The free rotation between each frame $t$ is defined by the rotation between the vector $\vec{v}_{t-1}$ and the vector $\vec{v}_t$, where $\vec{v}_t$ is the vector from the model’s centre of gravity to the pointing tool tip. Finally, the user can confirm the registration by holding down the confirm button for two seconds.
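The free rotation step can be illustrated with a short sketch. It assumes that the per-frame rotation is the shortest-arc rotation between the two centre-to-tip vectors of consecutive frames, expressed in axis-angle form and applied with Rodrigues' formula. The function names and the handling of the degenerate (parallel) case are illustrative assumptions and are not taken from the prototype.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalise(v):
    n = math.sqrt(_dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def frame_rotation(centre, tip_prev, tip_curr):
    """Axis-angle rotation that takes (tip_prev - centre) onto (tip_curr - centre)."""
    v_prev = _normalise(tuple(p - c for p, c in zip(tip_prev, centre)))
    v_curr = _normalise(tuple(p - c for p, c in zip(tip_curr, centre)))
    axis = _cross(v_prev, v_curr)
    sin_a = math.sqrt(_dot(axis, axis))
    cos_a = max(-1.0, min(1.0, _dot(v_prev, v_curr)))
    if sin_a < 1e-12:
        # Parallel or anti-parallel vectors: the axis is undefined.
        # Assumption: skip the rotation for this frame.
        return (1.0, 0.0, 0.0), 0.0
    return _normalise(axis), math.atan2(sin_a, cos_a)


def rotate_about_centre(point, centre, axis, angle):
    """Rotate a model point about the centre using Rodrigues' formula."""
    p = tuple(a - c for a, c in zip(point, centre))
    k_cross_p = _cross(axis, p)
    k_dot_p = _dot(axis, p)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    rotated = tuple(
        p[i] * cos_a + k_cross_p[i] * sin_a + axis[i] * k_dot_p * (1.0 - cos_a)
        for i in range(3)
    )
    return tuple(r + c for r, c in zip(rotated, centre))
```

Because the rotation axis is the cross product of the two vectors, a tool tip that moves on a circle around the model's centre of gravity drags the model with it, which is the 'grab and turn' behaviour described above.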

2.2. LapControl registration method

The LapControl method also allows the user to perform the registration manually. This method further reduces the number of devices with which the user interacts: The laparoscope’s camera head itself is used to manipulate the virtual model. Thus, while moving slightly away from highly realistic 3D manipulation, we believe that this method’s key benefit lies in minimising the number of required devices to the laparoscope itself.

This registration method requires the user to interact with the laparoscope and an input device with four buttons. Again, the camera head’s own four buttons can be repurposed for this.

The registration workflow is equivalent to the InstControl workflow. Upon initialisation, the virtual model is displayed 150 mm in front of the laparoscope. A larger distance is chosen for this method to provide the required overview. The user can then translate and rotate the virtual model as above. However, the laparoscopic camera’s position is used for translation and free rotation and the objective’s axis instead of the pointing tool’s axis is used in axis rotation.

2.3. Paired point registration method

We implemented a landmark-based solution as a reference method to compare to our proposed interactive methods. This method requires the user to interact with a mouse or touchscreen interface for registration planning. The user then uses the tracked laparoscopic pointing tool and an input device with at least one button. The method we implemented utilises anatomical, internal landmarks. During an initial planning phase, the user identifies and marks four characteristic points on the virtual model. They then record these points in the patient space by touching them with the tracked laparoscopic pointing tool. The virtual model’s position and orientation are then determined based on the two corresponding point clouds (Arun et al. 1987).
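The least-squares fit of two corresponding point sets can be sketched with a singular value decomposition, in the spirit of Arun et al. The following is a minimal NumPy sketch and not the prototype's code: the function names are hypothetical, and the determinant check that guards against a reflection is a common refinement that we add here as an assumption.

```python
import numpy as np


def rigid_fit(model_pts, patient_pts):
    """Return rotation R and translation t such that patient ≈ R @ model + t."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(patient_pts, dtype=float)
    if P.shape != Q.shape or P.ndim != 2 or P.shape[1] != 3 or P.shape[0] < 3:
        raise ValueError("need two (N, 3) arrays with N >= 3 paired points")
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)
    # Guard against an improper rotation (reflection)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t


def fiducial_registration_error(model_pts, patient_pts, R, t):
    """Root-mean-square distance between the mapped model points and the patient points."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(patient_pts, dtype=float)
    residuals = P @ R.T + t - Q
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))
```

With four recorded landmarks, as in the method described above, the fit is overdetermined, so the residual error gives a rough indication of how consistently the points were acquired.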

2.4. Prototype implementation

2.4.1. Phantom generation

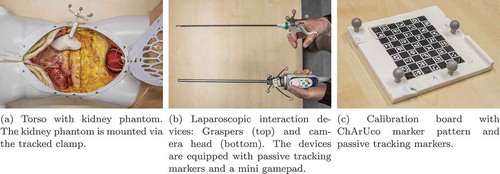

We created three kidney models to simulate laparoscopic renal surgery. These were based on a public Computed Tomography database (Heller et al. 2019). We selected three cases with one tumour-free kidney and manually segmented the parenchymal surface of the healthy kidney using 3D Slicer (Fedorov et al. 2012). The segmentations were reviewed by a senior urologist and converted to surface mesh models. The models were equipped with an adapter for fixing them in a spatially determined position and orientation. We then printed the phantoms using the fused deposition modelling method. In addition, we created a clamp to mount the phantoms into a torso model and optically track them from the outside.

2.4.2. Surgical simulation

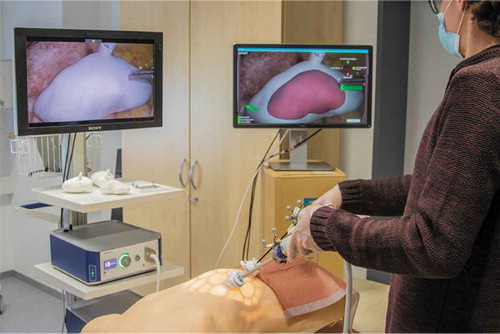

We set up a simulated laparoscopic operation environment using a laparoscopy training torso model (EasyLap training system, HumanX GmbH, Germany). One kidney phantom at a time was mounted into the torso. It should be noted that the phantom was positioned further superior than would be anatomically correct. This was due to the fixed structures inside the torso model. The torso model was then closed up and the simulated surgical site was made accessible with two 12 mm trocars. The trocars were placed slightly contralaterally but close to the medial plane. The surgical site could then be accessed with an EinsteinVision© 3.0 laparoscope (B. Braun Melsungen AG, Germany) with a 30° optic in monoscopic mode and laparoscopic graspers. The graspers were used as a generic pointing device. We did not specify which trocar should be used for which tool. Finally, we mounted a mini gamepad to the laparoscope’s camera head to simulate repurposing the camera head’s four buttons for our registration methods. The resulting overall setup is shown in the accompanying figure.

2.4.3. AR prototype

We implemented an AR prototype using Unity 2018 (Unity Technologies, USA) to test the different registration methods. For this purpose, the laparoscopic camera head, the graspers, and the kidney phantom clamp were passively tracked with an NDI Polaris Spectra infrared tracking camera (Northern Digital Inc., Canada).

The graspers were pivot calibrated as a pointing device using the NDI Toolbox software (Northern Digital Inc., Canada). The laparoscopic internal camera parameters were calibrated with optical ChArUco markers (Garrido-Jurado et al. 2014) with a method implemented in the OpenCV library (Bradski 2000; see Note 1). The spatial transformation between the camera head’s marker body and the camera’s position and orientation was determined as follows: We tracked the transformation of the calibration pattern in the tracking camera’s space, $T_{Track \to Calib}$, with a bespoke calibration board. The external camera calibration parameters yielded the transformation between the calibration board and the laparoscopic camera itself ($T_{Calib \to Cam}$). Finally, the marker body’s transformation in tracking camera space ($T_{Track \to Marker}$) was directly tracked. The transformation between the camera’s marker body and the camera, $T_{Marker \to Cam}$, could then be determined as:

$$T_{Marker \to Cam} = T_{Track \to Marker}^{-1} \, T_{Track \to Calib} \, T_{Calib \to Cam}$$
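The calibration chain described in this section can be sketched with 4×4 homogeneous matrices. The sketch assumes that a transformation named `T_a_b` describes the pose of frame b in frame a, so that chaining `T_a_b @ T_b_c` yields `T_a_c`. The variable and function names are hypothetical, and the helper `make_transform` exists only to build example inputs.

```python
import numpy as np


def make_transform(rotation_z_deg, translation):
    """Illustrative helper: 4x4 transform from a rotation about z and a translation."""
    a = np.radians(rotation_z_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def marker_to_camera(T_track_calib, T_calib_cam, T_track_marker):
    """Pose of the laparoscopic camera relative to the camera head's marker body.

    T_track_calib:  tracked pose of the calibration board in tracking space
    T_calib_cam:    camera pose relative to the board (external calibration)
    T_track_marker: tracked pose of the camera head's marker body
    """
    return np.linalg.inv(T_track_marker) @ T_track_calib @ T_calib_cam
```

Once this fixed offset is known, the camera pose in tracking space at any later time follows from the currently tracked marker pose multiplied by the offset.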

For the prototype’s AR display, we duplicated the laparoscopic video stream on a 24” screen that was positioned to the right of the main laparoscopic screen. The virtual kidney model was overlaid on this display as a semi-transparent solid surface. In addition, the screen showed a small mannequin that was rotated alongside the model to provide some rough anatomical orientation information. Finally, the graphical user interface for the various registration methods was displayed on this screen.

3. Evaluation methods

The three registration methods were comparatively evaluated in a user study with a simulated intraoperative registration task. We compared the registration methods with regard to three evaluation criteria: registration accuracy, registration speed, and participants’ subjective usability perception.

3.1. Study design

The study was conducted in a within-subject repeated measures design. The applied registration method was the independent variable with three levels. Each participant performed all three registration tasks in a counterbalanced order.

The evaluation criteria were operationalised into four dependent variables: The translation error was measured as the translational offset between the registered virtual model’s and physical model’s centres of gravity. Rotation error was measured as the rotational offset between the virtual and physical models in degrees. Registration speed was measured as the task completion time (TCT) that was required for completing the registration process. Participants’ usability perception was measured using the System Usability Scale (SUS) by Brooke (1996). The SUS questionnaire was translated to German by a native speaker. In addition, we collected participants’ qualitative opinion on the registration methods in a semi-structured interview format.
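The two accuracy measures can be sketched as follows. The translation error is the Euclidean distance between the two centres of gravity. For the rotation error, the sketch assumes that the rotational offset is the angle of the single relative rotation between the two model orientations, which is one common way to express such an offset as one number in degrees. The function names and the choice of 3×3 rotation matrices as input are illustrative assumptions.

```python
import math


def translation_error(centre_virtual, centre_physical):
    """Euclidean distance between the two centres of gravity (same unit as the input)."""
    return math.dist(centre_virtual, centre_physical)


def rotation_error_deg(R_virtual, R_physical):
    """Angle in degrees of the relative rotation between two 3x3 rotation matrices."""
    # trace(R_virtual @ R_physical^T) equals the sum of element-wise products
    trace = sum(R_virtual[i][j] * R_physical[i][j]
                for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))
```

Under this definition the rotation error ranges from 0° to 180°, so a model that is registered with swapped kidney poles yields a value far above that of an ordinary misalignment.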

3.2. Sample design

We recruited 12 participants for the study. The inclusion criteria were a medical background (medical students and physicians) and initial experience with laparoscopy. Training experience with a simulator was sufficient for participation because it provides experience with the particular hand-eye coordination that is required when working laparoscopically. Participants were paid EUR 40.00 for participation.

3.3. Study procedure

The experiment started with an introduction during which we obtained the participants’ written informed consent and demographic information. This was followed by one trial block for each registration method (i.e., three blocks overall). The order in which the registration methods were performed was counterbalanced across participants. Each registration method was performed with a separate kidney model. The order of the kidney models was fixed, thus counterbalancing the assignment between registration methods and kidney models.
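The assignment logic can be illustrated with a small scheduling sketch. It assumes full counterbalancing over all six possible orders of the three methods, cycled across participants; the text above does not state which counterbalancing scheme was used, and the kidney model labels are hypothetical.

```python
from collections import Counter
from itertools import permutations

METHODS = ("InstControl", "LapControl", "Paired point")
MODELS = ("Kidney A", "Kidney B", "Kidney C")  # hypothetical labels, fixed order


def schedule(n_participants):
    """Return, per participant, the list of (method, kidney model) trial blocks."""
    orders = list(permutations(METHODS))  # six possible method orders
    plan = []
    for i in range(n_participants):
        order = orders[i % len(orders)]
        # The model order is fixed, so the k-th block always uses the k-th model
        plan.append(list(zip(order, MODELS)))
    return plan


def pairing_counts(plan):
    """Count how often each (method, kidney model) pairing occurs."""
    return Counter(pair for blocks in plan for pair in blocks)
```

With 12 participants, this scheme uses every method order twice and pairs every method with every kidney model equally often, which is the property that the fixed model order is meant to provide.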

At the beginning of each trial block, the experimenter briefly explained the general principle of the respective registration method to the participant. The participant then performed a first practice trial (i.e., the registration process) with step-by-step instructions from the experimenter. Following this, the participant performed a second practice trial independently but with the opportunity to ask questions. After all questions were answered, the experimenter exchanged the kidney phantom and virtual model for new ones. The participant then conducted a final test trial on a new model. The data were recorded during this test trial. Finally, the participant completed the SUS questionnaire for the tested registration method.
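For readers unfamiliar with the SUS, the standard scoring rule can be sketched as follows. The questionnaire has ten items rated from 1 to 5. Odd-numbered items contribute their rating minus one, even-numbered items contribute five minus their rating, and the sum is scaled to a range of 0 to 100. The function name is illustrative.

```python
def sus_score(responses):
    """Compute the SUS score (0 to 100) from ten ratings on a 1 to 5 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten integer ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # positively worded items
        else:
            total += 5 - rating  # negatively worded items
    return total * 2.5
```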

After the completion of all three blocks, the experimenter conducted a brief semi-structured interview with the participant. This interview aimed to elicit participants’ qualitative feedback on the three registration methods.

3.4. Data analysis

We conducted repeated-measures analyses of variance (ANOVAs) for the four dependent variables. Data sphericity was tested with Mauchly’s test. Greenhouse-Geisser (GG) correction was applied in case of sphericity violation. In case of significant findings in an ANOVA, we conducted post-hoc pairwise t-tests with Bonferroni correction. Participants’ comments during experiment performance or from the interview were labelled, clustered and qualitatively analysed by one investigator.
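The core of this analysis can be sketched in a few lines. The sketch computes the F statistic of a one-way repeated-measures ANOVA from a participants-by-conditions table and applies a Bonferroni correction to a list of p-values. It deliberately omits the p-value of the F test, Mauchly's test, and the Greenhouse-Geisser correction, which would normally come from a statistics package. The function names are hypothetical and the example data in the usage note are invented.

```python
def rm_anova_f(data):
    """One-way repeated-measures ANOVA.

    data: one row per participant, one value per condition.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a rectangular table with >= 2 rows and >= 2 columns")
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Between-participant variance is removed from the error term
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    if ss_error <= 0.0:
        raise ValueError("error sum of squares is zero; F is undefined")
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For an invented table of three participants and three conditions, `rm_anova_f([[1, 3, 5], [2, 3, 7], [3, 6, 6]])` returns an F value of 12 with 2 and 4 degrees of freedom. With three methods there are three pairwise comparisons, so each post-hoc p-value is multiplied by three.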

4. Results

4.1. Data exclusion

We excluded two participants from the data analysis: One participant performed with a rotation error of 142.8° in the paired point condition, which corresponds to a z-score of 4.15. This indicates that they exchanged the upper and lower kidney pole. The second participant displayed a translation error of 30.5 mm. This indicates that at least one highly inaccurate point was selected. Both are likely to be visualisation issues rather than interaction issues.

4.2. Participant demographics

Ten participants (five male, five female) were included in the data analysis. They were aged 23 to 36 years (median = 24.5 years). Eight of them were medical students in their fourth and fifth year and two were physicians with two and nine years of experience, respectively. One physician reported approximately 200 hours of experience with operating laparoscopically; the remaining participants reported between one and 20 hours of laparoscopic trainer experience (median = 2 h).

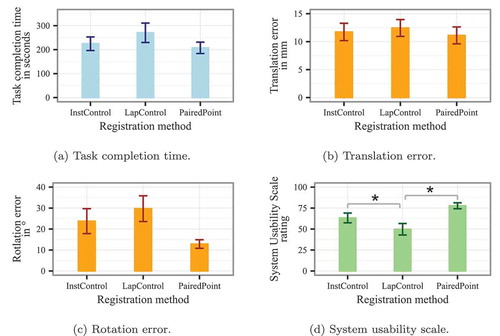

4.3. Statistical results

The descriptive results of our experiment are listed in Table 1. The ANOVA results for all dependent variables are shown in Table 2. No significant results were found for the TCT, translation error, and rotation error (see also Figure 4). A significant effect was found for the participants’ SUS rating. In the post-hoc pairwise t-tests, we found significant differences between the LapControl method and both other methods, i.e. the InstControl method and the paired point method. We could not find a significant difference between the InstControl method and the paired point condition.

Figure 4. Statistical results for all dependent variables. Error bars show standard errors. The asterisks in (d) indicate pairwise significance in the post-hoc t-tests

Table 1. Descriptive results for all dependent variables with

Table 2. ANOVA results for the registration method’s effects on all dependent variables

4.4. Qualitative participant feedback

Nine general categories of participant comments emerged during qualitative data analysis: These included comments about the methods’ general impression, their clinical applicability, the interaction devices, interaction handedness, characteristic landmark use, camera use, translation manipulation, rotation manipulation, and interaction metaphors. The comments for each interaction method are summarised below. These data are qualitative and no conclusions should be drawn from the reported numbers. These are not necessarily representative due to the open interview question format.

4.4.1. Instrument control

Participants found this method to be particularly well suited for adjustment and correction of registration results. One participant was concerned about potential ‘false positives’, i.e. false confidence in an incorrect registration. Three participants criticised that the method required bimanual interaction with the laparoscope and its buttons in one hand and the pointing instrument in the other hand. However, three other participants gave positive feedback on this aspect as it provides a ‘division of labour’ between both hands. Two participants commented that translation setting with this method is simple, but they and three others reported that rotation was not intuitive: Firstly, because the instrument’s own axis was not aligned with the image plane. Secondly, because the model’s behaviour was difficult to predict in free rotation – particularly when the instrument tip was outside the field of view (FoV). Two participants commented that the grasp-and-manipulate metaphor was generally realistic and intuitive.

4.4.2. Laparoscope control

Three participants commented that the system is too ‘compressed’ because the entire workflow is completed with one hand. They stated that they would usually hold the laparoscope with their non-dominant hand. The two main points of criticism expressed by participants concerned the FoV and the rotation mode: Four participants criticised that the FoV’s continuous motion during the process affected their sense of direction and repeatedly removed the area of interest from the FoV. One participant said the motion might make them feel dizzy over time. The free rotation was perceived to be rather unpredictable, but one participant said that the axial rotation was easier to imagine than in the InstControl method. Finally, one participant stated that the interaction metaphor was rather unrealistic because the camera would usually not interact with objects in the surgical site directly.

4.4.3. Paired point registration

Overall, four participants stated that they found this method to be the easiest and ‘most trustworthy’ one. However, it was mentioned that a second person might be needed in a clinical setting to perform the planning. One participant also criticised that the method itself would not allow for corrections. Two participants reported that they were very used to using mouse-and-screen interfaces and that this was, therefore, easy to them. However, two participants mentioned that point acquisition required them to monitor the laparoscopic screen and the recording progress bar on the second screen at the same time.

5. Discussion

5.1. Discussion of results

Our evaluation results indicate that the LapControl method may not be well suited for the task at hand. We did not find statistically significant inferiority for the TCT and accuracy parameters. However, descriptive results suggest that this method did not perform well in these measures. Moreover, participants provided rather negative feedback on this method – both quantitatively and qualitatively. The main issue seems to be the FoV’s instability when moving the camera as an input device. This effect is worsened by the fact that targeted laparoscope motion is often used deliberately to gain a better spatial understanding (Bogdanova et al. 2016). Using the LapControl method was likely made more difficult by using a laparoscope with a tilted camera because this offsets the tool axis from the optical axis. However, this is a realistic (and likely worst-case) application scenario and therefore a suitable testing scenario. The method grants qualitative benefits by limiting the interaction to one device that is naturally present in the workflow. Nevertheless, these benefits seem to be outweighed by the usability drawbacks that our study exposed.

The InstControl method was rated significantly more usable than the LapControl method by the participants. We also observed trends that it may perform better with regard to TCT and accuracy. However, descriptive results suggest that, in its current form, it may not perform as well as the paired point method. This potential deficit is particularly evident for the rotation accuracy and the subjective feedback. The qualitative feedback suggests that this may have been caused by unintuitive model behaviour during free rotation. We believe that this drawback can be largely mitigated with minor adjustments to the interaction technique that are discussed in the following section.

5.2. General discussion

Our results indicate that the LapControl method is inferior to current methods for initial registration. In contrast, the InstControl method may be a promising approach after some design changes have been implemented: Firstly, the free rotation might become more intuitive by implementing auxiliary visualisations. One such visualisation could show the spatial relationship between the manipulation tool and the manipulated point on the model surface. Another visualisation could show the rotation pivot point to further explain the rotation that is being applied. A different approach to making the free rotation more intuitive could be to restrict when it can be applied: either to situations in which the pointing tool is within a maximum distance from the model, or to situations in which the tool is in the camera’s FoV. The latter restriction would have the additional benefit of preventing tissue damage from tool motion outside the FoV. However, it remains to be investigated how likely the issue of using the manipulation tool outside the FoV is to occur in the real application: Anecdotal observation during the study indicated that experienced users primarily used the tool when it was in the FoV. Finally, free rotation for both methods and the axis rotation for the LapControl method might become more intuitive if a function was added that allowed the user to define a rotation axis in a separate interaction step.

One potential applicability issue with our implementation of the InstControl method is that the interaction buttons are currently located on the laparoscope’s camera head. In surgical scenarios, the laparoscope is often guided by a separate person. Therefore, an alternative means of interaction might be useful. This might either include moving the buttons to the pointing tool or implementing a touchless interaction technique, such as gesture or speech control.

Our evaluation setup aimed to simulate a realistic surgical scenario. However, several design aspects are not fully representative of real surgery and may have influenced the results: The kidney phantoms were placed openly in the operative site. Thus, the entire kidney surface was visible to the participants. In contrast, surgeons only dissect the kidney surface parts that need to be accessed. Our trocar setup was another deviation from a realistic surgical environment. We used two 12 mm-trocars to enable participants to choose which one to use for the laparoscope and which one to use for the pointing tool. Some participants used this setup to migrate the laparoscope back and forth between the trocars during a trial. This is not necessarily realistic during surgery. Finally, our participants’ experience with laparoscopic instruments was very limited and their performance was recorded in only one trial for each method. This may have influenced the difficulty of interacting with the laparoscopic surgical space.

It remains to be investigated how the depth perception limitations in our study may have affected the outcome. Our interactive methods relied solely on optical, monoscopic depth cues, and these were reduced further when the manually operated pointing tool was outside of the FoV and, therefore, unusable as a reference. The paired point method, however, provided additional haptic feedback when recording the points on the physical kidney phantom. This may have added difficulty to the interactive methods. Stereoscopic display and the constraint to have the tool in the FoV may help to mitigate this influence.

We have evaluated our two interaction methods as means for initial alignment of the virtual AR content. Another potential application may lie in intraoperative registration correction (e.g., after organ movement or deformation). Future research is required to investigate whether this is a feasible application. This slightly altered application may even mitigate some of the drawbacks of the LapControl method because the required alignment changes would be rather gradual and not as extensive as in our experiment.

Finally, one important scope limitation should be discussed: We compared our interactive methods with a landmark-based method. We chose this reference method because landmark-based methods seem to be a well-established standard approach for initial registration. However, two evident follow-up questions arise from this: Firstly, how do our methods compare to previous interactive methods? Secondly, how do existing interactive methods compare to landmark-based methods? Future comparative research will need to show which overall approach is more suitable.

6. Conclusion

This work introduced two natural interaction methods for initial AR registration in laparoscopic surgery. The interaction methods were compared to each other and to a reference landmark-based registration method in a simulated laparoscopy environment. The user study’s results show that one of the introduced interaction concepts is inferior to the reference method and provide a detailed explanation for the apparent inferiority. These findings can inform the development of future interaction methods for laparoscopic AR systems. The second method did not outperform the reference method, but our study yielded a number of findings that will help to improve natural interaction with virtual objects in laparoscopic AR settings. We see three immediate follow-up research activities that arise from our work. Firstly, the improvement measures that we derived from our findings need to be integrated into the InstControl method and tested to determine if and how they improve the effectiveness, efficiency, and usability of this method. Secondly, it should be investigated how interactive methods generally compare to landmark-based methods for initial registration. Finally, our improved method should be compared to other interactive methods. Overall, we believe that, while not presenting a finished solution, our work generated valuable findings that can help to improve the overall registration process for laparoscopic AR and thereby to extend its applicability.

Acknowledgments

We thank Daniel Schindele from the Clinic for Urology at the University Hospital Magdeburg (Germany) for his valuable help with the kidney CT image segmentation that was crucial in creating realistic kidney models.

Disclosure statement

The authors have no conflict of interest to declare.

Additional information

Funding

Notes

1. We used the commercially available OpenCV for Unity package (Enox Software, Japan).

References

- Arun KS, Huang TS, Blostein SD. 1987. Least-squares fitting of two 3-d point sets. IEEE Trans Pattern Anal Mach Intell. 9(5):698–700. doi:10.1109/TPAMI.1987.4767965.

- Bernhardt S, Nicolau S, Soler L, Doignon C. 2017. The status of augmented reality in laparoscopic surgery as of 2016. Med Image Anal. 37:66–90. doi:10.1016/j.media.2017.01.007.

- Bogdanova R, Boulanger P, Zheng B. 2016. Depth perception of surgeons in minimally invasive surgery. Surg Innov. 23(5):515–524. doi:10.1177/1553350616639141.

- Bowman DA, McMahan RP, Ragan ED. 2012. Questioning naturalism in 3D user interfaces. Commun ACM. 55(9):78. doi:10.1145/2330667.2330687.

- Bradski G. 2000. The OpenCV library. Dr Dobb’s J Soft Tools. 25:120–125.

- Brooke J. 1996. SUS: a quick and dirty usability scale. Usab Eval Indus. 189(194):4–7.

- Chen Y, Li H, Wu D, Bi K, Liu C. 2014. Surgical planning and manual image fusion based on 3D model facilitate laparoscopic partial nephrectomy for intrarenal tumors. World J Urol. 32(6):1493–1499. doi:10.1007/s00345-013-1222-0.

- Conrad C, Fusaglia M, Peterhans M, Lu H, Weber S, Gayet B. 2016. Augmented reality navigation surgery facilitates laparoscopic rescue of failed portal vein embolization. J Am Coll Surg. 223(4):e31–4. doi:10.1016/j.jamcollsurg.2016.06.392.

- Léger É, Reyes J, Drouin S, Collins DL, Popa T, Kersten-Oertel M. 2018. Gesture-based registration correction using a mobile augmented reality image-guided neurosurgery system. Healthcare Technol Lett. 5(5):137–142. doi:10.1049/htl.2018.5063.

- de Araújo BR, Casiez G, Jorge JA, Hachet M. 2013. Mockup builder: 3D modeling on and above the surface. Comput Graph. 37(3):165–178. doi:10.1016/j.cag.2012.12.005.

- Detmer FJ, Hettig J, Schindele D, Schostak M, Hansen C. 2017. Virtual and augmented reality systems for renal interventions: A systematic review. IEEE Rev Biomed Eng. 10:78–94. doi:10.1109/RBME.2017.2749527.

- Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin JC, Pujol S, Bauer C, Jennings D, Fennessy F, Sonka M, et al. 2012. 3D Slicer as an image computing platform for the quantitative imaging network. Magn Reson Imaging. 30(9):1323–1341. doi:10.1016/j.mri.2012.05.001.

- Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ. 2014. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 47(6):2280–2292. doi:10.1016/j.patcog.2014.01.005.

- Gong RH, Güler Ö, Kürklüoglu M, Lovejoy J, Yaniv Z. 2013. Interactive initialization of 2D/3D rigid registration. Med Phys. 40(12):121911. doi:10.1118/1.4830428.

- Hayashi Y, Misawa K, Hawkes DJ, Mori K. 2016. Progressive internal landmark registration for surgical navigation in laparoscopic gastrectomy for gastric cancer. Int J Comput Assist Radiol Surg. 11(5):837–845. doi:10.1007/s11548-015-1346-3.

- Heller N, Sathianathen N, Kalapara A, Walczak E, Moore K, Kaluzniak H, Rosenberg J, Blake P, Rengel Z, Oestreich M, et al. 2019. The KiTS19 challenge data: 300 kidney tumor cases with clinical context, CT semantic segmentations, and surgical outcomes. [accessed 2019 Sep 15] http://arxiv.org/pdf/1904.00445v1

- Mendes D, Caputo FM, Giachetti A, Ferreira A, Jorge J. 2019. A survey on 3D virtual object manipulation: from the desktop to immersive virtual environments. Comput Graph Forum. 38(1):21–45. doi:10.1111/cgf.13390.

- Nicolau S, Soler L, Mutter D, Marescaux J. 2011. Augmented reality in laparoscopic surgical oncology. Surg Oncol. 20(3):189–201. doi:10.1016/j.suronc.2011.07.002.

- Pratt P, Mayer E, Vale J, Cohen D, Edwards E, Darzi A, Yang GZ. 2012. An effective visualisation and registration system for image-guided robotic partial nephrectomy. J Robot Surg. 6(1):23–31. doi:10.1007/s11701-011-0334-z.

- Song P, Goh WB, Hutama W, Fu CW, Liu X. 2012. A handle bar metaphor for virtual object manipulation with mid-air interaction. In: Konstan JA, editor. Proceedings of the SIGCHI conference on human factors in computing systems. New York (NY): ACM; p. 1297. ACM Digital Library.

- Thompson S, Totz J, Song Y, Johnsen S, Stoyanov D, Ourselin S, Gurusamy K, Schneider C, Davidson B, Hawkes D, et al. 2015. Accuracy validation of an image guided laparoscopy system for liver resection. In: Webster RJ, Yaniv ZR, editors. Medical imaging 2015: image-guided procedures, robotic interventions, and modeling. SPIE Digital Library; 941509. SPIE Proceedings.

- Wang D, Zhang B, Yuan X, Zhang X, Liu C. 2015. Preoperative planning and real-time assisted navigation by three-dimensional individual digital model in partial nephrectomy with three-dimensional laparoscopic system. Int J Comput Assist Radiol Surg. 10(9):1461–1468. doi:10.1007/s11548-015-1148-7.