ABSTRACT

Hyperspectral imaging is one of the most promising techniques for intraoperative tissue characterisation. Snapshot mosaic cameras, which can capture hyperspectral data in a single exposure, have the potential to make a real-time hyperspectral imaging system for surgical decision-making possible. However, optimal exploitation of the captured data requires solving an ill-posed demosaicking problem and applying additional spectral corrections. In this work, we propose a supervised learning-based image demosaicking algorithm for snapshot hyperspectral images. Due to the lack of publicly available medical images acquired with snapshot mosaic cameras, a synthetic image generation approach is proposed to simulate snapshot images from existing medical image datasets captured by high-resolution, but slow, hyperspectral imaging devices. Image reconstruction is achieved using convolutional neural networks for hyperspectral image super-resolution, followed by spectral correction using a sensor-specific calibration matrix. The results are evaluated both quantitatively and qualitatively, showing clear improvements in image quality compared to a baseline demosaicking method using linear interpolation. Moreover, the fast processing time of 45 ms of our algorithm to obtain super-resolved RGB or oxygenation saturation maps per image for a state-of-the-art snapshot mosaic camera demonstrates the potential for its seamless integration into real-time surgical hyperspectral imaging applications.

1. Introduction

Reliable discrimination between tumour and surrounding tissues remains a challenging task in surgery, and in particular in neuro-oncology surgery. Despite intensive research and progress in advanced computer-assisted visualisation techniques, most intraoperative surgical evaluations still rely heavily on subjective visual assessment by clinicians. Modern intraoperative tissue discrimination techniques therefore often involve the use of interventional techniques, such as fluorescence and ultrasound imaging. However, visual assessment of fluorescence intensities during surgery is usually qualitative, which hinders the accurate, reliable and repeatable measurements needed for consensus, standardisation, and adoption of fluorescence-guided surgery in the field (Valdes et al. 2019). Ultrasound imaging may suffer from poor resolution and a restricted field of view, and its interpretation depends heavily on the experience of the expert (Kaale et al. 2021).

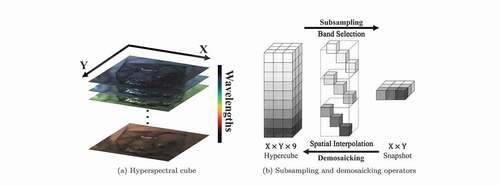

Intraoperative hyperspectral imaging (HSI) provides a non-contact, non-ionising and non-invasive solution suitable for many medical applications (Lu and Fei 2014; Shapey et al. 2019; Clancy et al. 2020). HSI can provide rich high-dimensional spatio-spectral information within the visible and near-infrared electromagnetic spectrum across a wide field of view. Compared to conventional colour imaging, which provides red, green, and blue (RGB) colour information, HSI can capture information across multiple spectral bands beyond what the human eye can see, thereby facilitating tissue differentiation and characterisation. Unlike fluorescence and ultrasound imaging, HSI exploits the inherent optical characteristics of different tissue types. It captures measurements of light that provide quantitative diagnostic information on tissue perfusion and oxygen saturation, enabling improved tissue characterisation relative to fluorescence and ultrasound imaging (Lu and Fei 2014). Depending on the number of acquired spectral bands, hyperspectral imaging may also be referred to as multispectral imaging, but for simplicity the hyperspectral terminology will be used. A single hyperspectral image typically spans three dimensions: two spatial dimensions and one spectral (wavelength) dimension, as illustrated in Figure 1(a). 3D HSI data are thus often referred to as hyperspectral cubes, or hypercubes for short. In addition, time comes as a fourth dimension in the context of dynamic scenes, such as those acquired during surgery.

Hyperspectral cameras can broadly be divided into three categories based on their acquisition methods, namely spatial scanning, spectral scanning and snapshot cameras (Shapey et al. 2019; Clancy et al. 2020). Spatial scanning acquires the entire wavelength spectrum simultaneously on either a single pixel or a line of pixels using a linear or 2D array detector, respectively. The camera then scans spatially through the pixels over time to complete the hyperspectral cube. Spectral scanning, on the other hand, captures the entire spatial scene at a given wavelength with a 2D array detector, and then switches to different wavelengths over time to complete the scan. These two types of hyperspectral cameras are able to acquire hyperspectral data with high spatial and spectral resolution, but long acquisition times prevent them from providing live image displays suitable for real-time intraoperative use.

Figure 1. Examples to illustrate a hyperspectral cube as well as subsampling and demosaicking operations: (a) shows the spatial dimensions (X and Y) and spectral dimension of a hypercube; (b) shows how hyperspectral cube and snapshot mosaic images can be transformed into each other with band selection/spatial interpolation. Due to the space constraint of the image, snapshot mosaicking is taken as an example.

To achieve intraoperative tissue characterisation with HSI in real-time, snapshot cameras are more suitable as they can capture hyperspectral cube data in real-time (Ebner et al. 2021). A common type of snapshot camera uses a snapshot mosaic system to acquire the entire hyperspectral cube instantly without the need for a scanning mechanism. The refined pixel filter array, arranged similarly to the colour filter array on an RGB sensor, allows the snapshot camera to acquire multiple spectral bands in a single exposure (Geelen et al. 2014). Other snapshot hyperspectral imaging approaches, such as coded aperture snapshot spectral imaging (CASSI) (Wagadarikar et al. 2008) and micro-lens-based acquisition, have been proposed. In general, the downside of snapshot acquisition is that it sacrifices spatial and spectral resolution to achieve fast data acquisition speeds. Figure 1(b) illustrates the relationship between a high-resolution hyperspectral cube and a snapshot mosaic image as a simplified example. A snapshot image is composed of a large number of individual blocks following mosaic patterns. The snapshot on the right of Figure 1(b) is an example of a single block captured by the sensor array.

As the image captured by a snapshot mosaic sensor is in 2D, a demosaicking operation is necessary to restore the spatial and spectral resolution of the image, followed by spectral correction to deal with the parasitic effects of the sensors, such as harmonics, cross-talk and leakage (Pichette et al. 2017). Spectral correction can usually be handled by applying a calibration matrix, such as the one provided by the camera manufacturer, but demosaicking of the snapshot data is challenging. As illustrated in Figure 1(b), the demosaicking operator usually involves splitting the image into different spectral bands, followed by spatial interpolation to fill in the missing data. Demosaicking with common interpolation methods usually results in poor image quality of the reconstructed hyperspectral data, so several approaches have been presented to address this demosaicking problem. For example, Hy-demosaicing used data-adaptive subsampled signal subspaces for the reconstruction of hyperspectral urban images by exploiting the low-rank and self-similarity properties of hyperspectral images (Zhuang and Bioucas-Dias 2018). Deep learning methods for hyperspectral demosaicking have also been investigated, such as the similarity maximisation framework proposed for performing end-to-end demosaicking and cross-talk correction for agricultural machine vision (Dijkstra et al. 2019).

Despite the development of demosaicking algorithms, research on medical hyperspectral image demosaicking remains limited. The goal of this study is to develop a reliable real-time image demosaicking, spectral correction and associated RGB reconstruction algorithm to recover higher quality medical hyperspectral images suitable for intraoperative applications. Due to the lack of open datasets of snapshot mosaic hyperspectral imaging from intraoperative settings, and more importantly, due to the impossibility of capturing hyperspectral imagery paired for both snapshot and high-resolution sensors, the proposed learning-based demosaicking algorithm makes use of publicly available medical hyperspectral image datasets captured in high spatial and spectral resolution by line-scan cameras for training purposes. Based on high-resolution data, we exploit the knowledge of the physical image acquisition process to simulate images expected from a snapshot mosaic camera as well as their corresponding ideal demosaicked images. This allows us to form image pairs suitable for supervised training. The results have been evaluated with popular full-reference image quality metrics including structural similarity (SSIM) and peak signal-to-noise ratio (PSNR). A first qualitative survey has also been conducted on the reconstructed RGB image quality, and the proposed algorithm has been applied to real snapshot mosaic test images to demonstrate its effectiveness. The processing speed and reconstructed image quality achieved by our proposed algorithm will facilitate seamless integration into intraoperative hyperspectral imaging systems using snapshot mosaic cameras for responsive surgical guidance (Ebner et al. 2021).

2. Material and methods

One of the major challenges for developing learning-based hyperspectral image demosaicking algorithms is the lack of hyperspectral datasets offering paired snapshot and high-resolution data. Such datasets would be even more complex to acquire in intraoperative contexts. We took an alternative approach where synthetic low-resolution snapshot images are generated from high-resolution hyperspectral images captured by line-scan sensors endowed with long acquisition times. Our developed demosaicking algorithm can thus take advantage of the resulting synthetic paired high-resolution/snapshot data. This section first introduces the publicly available hyperspectral line-scan datasets used in the experiments. Next, an overall framework for simulating the snapshot image acquisition process using the line-scan data will be presented, with details on how synthetic snapshot images and ideal demosaicked images are generated. After that, this section introduces the integration of supervised image super-resolution methods into the demosaicking, spectral correction and RGB generation framework.

2.1. Source datasets

Line-scan sensors are able to capture data across hundreds of spectral bands within the visible and near-infrared range. While they require long acquisition times, they provide high spatial and spectral resolution. Line-scan data contains sufficient information to generate snapshot mosaic images with much lower spatial and spectral resolutions. Two publicly available line-scan hyperspectral image datasets have been used in this work and are presented hereafter.

Fabelo et al. (2019) provide a hyperspectral dataset acquired during neurosurgical procedures as part of the HypErspectraL Imaging Cancer Detection (HELICoiD) project. This dataset contains 36 hyperspectral cubes collected from 22 different patients. Their hyperspectral acquisition system acquired intraoperative data containing 826 successive spectral bands within the wavelengths of 400 nm to 1000 nm, with a spectral resolution of 2–3 nm. Preprocessing of the hypercube data was performed as outlined in the paper (Fabelo et al. 2019).

Hyttinen et al. (2020) provide the second hyperspectral dataset used in this work. The Oral and Dental Spectral Image Database (ODSI-DB) is a larger dataset containing 316 different oral and dental hyperspectral images. The hyperspectral images in this dataset were acquired with two different cameras. One hundred and seventy-one out of the 316 images were acquired using a Specim IQ (Specim, Spectral Imaging Ltd., Oulu, Finland) line-scan camera, which has a spectral range of 400–1000 nm with 204 spectral bands captured in total. The remaining images were obtained with the Nuance EX (CRI, PerkinElmer, Inc., Waltham, MA, USA) spectral scan camera, with a higher spatial resolution but fewer spectral bands. It features 51 bands ranging from 450 to 950 nm. Due to their higher spectral resolution, only the line-scan (Specim IQ) hyperspectral images were selected for synthetic snapshot image generation in this work. Denser spectral information is indeed beneficial for sampling of sensor responses during our image generation process. The hyperspectral data in this dataset come preprocessed with flat-field correction from a blank reference sample; therefore, white-balancing is not necessary for the ODSI-DB.

2.2. Image generation and training pipeline

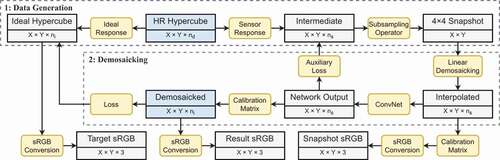

Figure 2 illustrates the pipeline of the demosaicking algorithm for hyperspectral snapshot images. The entire framework consists of two parts. The first part, detailed in Section 2.2.1, focuses on the generation of synthetic snapshot images and ideal high-resolution image datasets from high-resolution images (HELICoiD or ODSI-DB). The second part, detailed in Section 2.2.2, involves the supervised learning method used to obtain high-quality hypercube reconstruction results.

Figure 2. Diagram of the hyperspectral snapshot image demosaicking algorithm simulated using high-resolution line-scan data. The rectangular boxes contain the types of data as well as their corresponding shape, whereas the rounded boxes show the operations in each step. The blue boxes indicate the input and output of the algorithm.

2.2.1. Synthetic image generation process

Synthetic image generation starts from a white-balanced high-spectral-resolution hyperspectral data cube (HELICoiD or ODSI-DB), referred to as HR Hypercube in the diagram. We denote the size of a high-spectral-resolution hyperspectral cube as $W \times H \times N_\lambda$, where $W$ and $H$ capture the spatial dimensions and $N_\lambda$ the spectral dimension.

Simulating the Spectral Response of the Snapshot Sensor. Snapshot mosaic hyperspectral sensors only capture a discrete number $N_s$ of spectral bands, with $N_s$ typically much smaller than the number $N_\lambda$ of bands in the high-spectral-resolution data. For example, $N_s = 16$ for a $4 \times 4$ mosaic arrangement. Each of the $N_s$ bands can have a non-trivial spectral response (Pichette et al. 2017) (e.g. bimodal and/or heavy-tailed response) due to parasitic effects such as harmonics, cross-talk and spectral leakage. These responses are nonetheless typically calibrated in factory and can be retrieved from the calibration files of the camera sensor.

An intermediate high-spatial-resolution hyperspectral cube of size $W \times H \times N_s$, with $N_s$ the number of sensor bands, can be generated by simulating the effect of the camera sensor response on the high-spectral-resolution data. More specifically, the intermediate hyperspectral cubes can be obtained by computing, at each spatial location, the inner products of the individual sensor responses with the high-resolution spectrum from the input data.
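The inner-product step described above can be sketched with NumPy as follows. This is a minimal illustration rather than the implementation used in this work: the function name `simulate_sensor_bands`, the (height, width, bands) array layout, and the assumption that the response curves have already been resampled to the wavelengths of the input cube are all hypothetical.

```python
import numpy as np

def simulate_sensor_bands(hr_cube, responses):
    """Project each pixel spectrum onto the sensor response curves.

    hr_cube:   (H, W, N_hr) high-spectral-resolution cube.
    responses: (N_s, N_hr) response curves, assumed to be sampled at the
               same wavelengths as hr_cube (hypothetical preprocessing).
    Returns an (H, W, N_s) intermediate cube.
    """
    hr_cube = np.asarray(hr_cube, dtype=np.float64)
    responses = np.asarray(responses, dtype=np.float64)
    if hr_cube.shape[-1] != responses.shape[-1]:
        raise ValueError("cube and responses use different spectral sampling")
    # Inner product over the spectral axis at every spatial location.
    return np.einsum("hwl,sl->hws", hr_cube, responses)
```

Expressing the operation as a single `einsum` keeps the spatial axes untouched and makes the spectral contraction explicit.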

Simulating the Spatial Response of the Snapshot Sensor. Having simulated the spectral response and obtained an intermediate hypercube, the final simulated 2D mosaic image can be derived by applying spatial subsampling as illustrated in Figure 1(b). More specifically, the hypercube is divided into smaller blocks with the same spatial size as the mosaic sensor array, and for each pixel in each individual block, only one value from the $N_s$ sensor bands is preserved. Therefore, the synthetic snapshot mosaic image is a scalar-valued image of size $W \times H$.
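The spatial subsampling step can be illustrated with the short sketch below. The function name `mosaic_subsample` and the `pattern` argument, which encodes which band index is kept at each offset inside a block, are hypothetical; the actual band layout depends on the sensor.

```python
import numpy as np

def mosaic_subsample(cube, pattern):
    """Collapse an (H, W, N_s) cube into an (H, W) mosaic image.

    pattern: (m, m) integer array; pattern[i, j] is the band index kept
             at offset (i, j) inside every m x m block (hypothetical
             layout, to be taken from the sensor documentation).
    """
    cube = np.asarray(cube)
    pattern = np.asarray(pattern)
    h, w, _ = cube.shape
    m = pattern.shape[0]
    # Band index retained at each pixel, obtained by tiling the block pattern.
    band_map = np.tile(pattern, (h // m + 1, w // m + 1))[:h, :w]
    rows, cols = np.indices((h, w))
    # Keep exactly one spectral value per pixel.
    return cube[rows, cols, band_map]
```

With a $4 \times 4$ pattern holding the indices 0 to 15, every block of the output contains one sample of each of the 16 bands.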

Simulating the Target Ideal Hyperspectral Data. Given the non-trivial spectral response of the captured spectral bands (harmonics, spectral leakage, cross-talk, etc.), the spectral correction matrix for snapshot systems provided by the camera manufacturer may reconstruct only a subset of $N_c$ spectral bands to ensure high-fidelity measurements of the reconstructed bands. The resulting bands are designed to approximate ideal sensor measurements by taking into account the response of ideal Fabry-Pérot resonators (Pichette et al. 2017). The corresponding optical band-pass response can be characterised as a Lorentzian function of optical frequency. We express it in terms of the wavelength $\lambda$, centred around the central wavelength $\lambda_c$ of each snapshot sensor band, with full-width at half-maximum FWHM and with a quantum efficiency QE.
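For illustration only, a band-pass response with these three parameters can be sketched using the common wavelength-domain Lorentzian. This form is an assumption: the expression used in this work is defined from a Lorentzian in optical frequency and may differ in detail, and the function name `lorentzian_response` is hypothetical.

```python
import numpy as np

def lorentzian_response(wavelengths_nm, centre_nm, fwhm_nm, qe=1.0):
    """Wavelength-domain Lorentzian band-pass (illustrative assumption).

    Peaks at `qe` for wavelength == centre_nm and falls to qe / 2 at
    centre_nm +/- fwhm_nm / 2.
    """
    wavelengths_nm = np.asarray(wavelengths_nm, dtype=np.float64)
    half_width = fwhm_nm / 2.0
    return qe / (1.0 + ((wavelengths_nm - centre_nm) / half_width) ** 2)
```

Sampling such a curve on the wavelength grid of the high-resolution data gives one row of an ideal response matrix, which can then be applied to each pixel spectrum in the same way as the measured sensor responses.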

The number and characteristics ($\lambda_c$, QE and FWHM) of the ideal spectral bands are selected to capture all the reliable information contained in the $N_s$ spectral bands of the sensor. These are typically provided by the camera manufacturer and are used to fit the measured response curves. A calibration matrix $C$ of size $N_c \times N_s$, which maps the $N_s$ spectral measurements to the $N_c$ ideal spectral bands, is also computed in factory and provided by the manufacturer (Pichette et al. 2017).

While one could try to recover high-spectral-resolution data from low-spectral-resolution snapshot mosaic data, in many applications it is sufficient to recover the spatial information lost by the spatial sampling process of the mosaic arrangement while estimating a reliable set of spectral bands. As such, in this work, we aim to recover high-spatial-resolution information for each of the ideal spectral bands. We refer to this target as the ideal hypercube in Figure 2. It can be estimated from the HR hypercube input data by applying ideal Lorentzian responses to it. Thus, the size of the target ideal hypercube is $W \times H \times N_c$, with $N_c$ the number of ideal spectral bands.

2.2.2. Learning for demosaicking, spectral correction and RGB generation

Supervised Training Approach for Super-resolved Demosaicking. The synthetic data generation in Section 2.2.1 provides paired high-spatial-resolution ideal hypercubes and 2D snapshot mosaic images. Having access to such datasets, we exploit supervised learning approaches to develop a demosaicking approach, thereby achieving super-resolution of the captured mosaic data.

As outlined in the blue box in Figure 2, the algorithm starts with a simple bilinear-interpolation-based demosaicking of the snapshot mosaic images. This operation involves grouping the pixels inside the snapshot images according to the position of the sampled spectral bands, and then using bilinear interpolation along the X- and Y-axes to upsample each spectral band back to the original sensor size. The resulting interpolated data are of size $W \times H \times N_s$, with $N_s$ the number of sensor bands, i.e. the same size as the intermediate high-spatial-resolution hypercube. While linear interpolation recovers the snapshot data to its original shape before subsampling, the resulting images can still look blurry. It is now well established that deep learning can effectively refine image details with a fast inference speed (Lugmayr et al. 2020), at least when applied to RGB data. In our algorithm, a U-Net (Ronneberger et al. 2015) enhanced to accommodate residual units (Kerfoot et al. 2019) has been adopted for the super-resolution and demosaicking task. The network contains a contracting path with four downsampling layers and two residual blocks at each resolution, as well as a symmetric expanding path with skip connections.
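The bilinear baseline described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the real-time implementation: the `pattern` argument (band index at each offset within a block) is hypothetical, and values outside the sampled range are held constant at the nearest sample, which is one of several possible border treatments.

```python
import numpy as np

def bilinear_demosaic(mosaic, pattern):
    """Upsample every band of an (H, W) mosaic to an (H, W, m*m) cube.

    pattern: (m, m) integer array; pattern[i, j] is the band index sampled
             at offset (i, j) inside each block (hypothetical layout).
    """
    mosaic = np.asarray(mosaic, dtype=np.float64)
    pattern = np.asarray(pattern)
    h, w = mosaic.shape
    m = pattern.shape[0]
    out = np.empty((h, w, pattern.size))
    full_rows, full_cols = np.arange(h), np.arange(w)
    for i in range(m):
        for j in range(m):
            rows, cols = np.arange(i, h, m), np.arange(j, w, m)
            samples = mosaic[i::m, j::m]  # pixels that observed this band
            # Separable linear interpolation: along columns, then rows.
            # np.interp holds the edge value outside the sampled range.
            tmp = np.stack([np.interp(full_cols, cols, r) for r in samples])
            band = np.stack(
                [np.interp(full_rows, rows, c) for c in tmp.T], axis=1
            )
            out[:, :, pattern[i, j]] = band
    return out
```

The measured samples are preserved exactly at their original pixel positions; only the missing positions of each band are filled in, which explains the blur that the learned refinement is meant to remove.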

Rather than directly predicting the target ideal hypercube, we simplify the training procedure and take advantage of the known correction matrix $C$. For this purpose, the network aims at inferring an intermediate hypercube of size $W \times H \times N_s$, with $N_s$ the number of sensor bands. As such, the network output has the same size and spectral characteristics as the bilinearly interpolated input hypercube but achieves sharper details.

Embedding Spectral Correction. From the initial output hypercube of the network, we compensate for the parasitic spectral effects of the sensor by applying the correction matrix to each spatial location. The size and spectral characteristics of the resulting hypercube match those of the target ideal hypercube. To train the network and the associated spectral correction, we use a loss that captures the error between the inferred corrected hypercube and the ideal hypercube.
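Applying the correction matrix at each spatial location amounts to a matrix product along the spectral axis, as in the sketch below. The function name and the matrix orientation (rows index corrected bands, columns index sensor bands) are assumptions made for illustration.

```python
import numpy as np

def apply_spectral_correction(cube, calibration):
    """Map sensor bands to corrected bands at every pixel.

    cube:        (H, W, N_s) network output or interpolated cube.
    calibration: (N_c, N_s) correction matrix (orientation assumed).
    Returns an (H, W, N_c) corrected cube.
    """
    cube = np.asarray(cube, dtype=np.float64)
    calibration = np.asarray(calibration, dtype=np.float64)
    # Same linear map applied independently at each spatial location.
    return np.einsum("cs,hws->hwc", calibration, cube)
```

Because the operation is linear and fixed, gradients of a loss computed on the corrected hypercube can flow through it to the network output during training.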

In order to provide additional guidance with intermediate supervision, an auxiliary loss between the intermediate hypercube inferred by the residual U-Net and the intermediate synthetic high-spatial-resolution hypercube is also added. The idea behind this auxiliary loss is that instead of directly learning to refine the spatial resolution and compensate for the parasitic spectral effect, the network can be guided to focus solely on the image super-resolution task.

In terms of the choice of loss functions, we investigated two sets of configurations. For the L1 loss configuration, both the training loss and the auxiliary loss are set to the L1 loss. For the perceptual loss configuration, the L1 auxiliary loss is replaced with the feature reconstruction loss component of the perceptual loss (Johnson et al. 2016), as this has been shown to enable improved super-resolution performance.

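Denoting the (non-spectrally-corrected) output hypercube from the residual U-Net as $\hat{X}$, the intermediate high-resolution hypercube as $X$, the pre-trained loss network for the perceptual loss as $\phi$, and the ideal hypercube as $Y$, then the total loss $\mathcal{L}$ for the perceptual loss configuration can be expressed as follows, where $C$ is the spectral correction matrix applied at each spatial location and the weight factor $w$ is set to 0.001 empirically:

$$\mathcal{L} = \lVert C\hat{X} - Y \rVert_1 + w \, \lVert \phi(\hat{X}) - \phi(X) \rVert_2^2$$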
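As a rough sketch of how the two terms combine in the L1 configuration, the snippet below computes a main L1 term on the spectrally corrected output and an auxiliary L1 term on the uncorrected output. Placing the weight on the auxiliary term, averaging over all elements, and the function and argument names are assumptions made for illustration; a training implementation would use a differentiable framework rather than NumPy.

```python
import numpy as np

def total_l1_loss(output, intermediate, ideal, calibration, weight=0.001):
    """Main L1 loss plus weighted auxiliary L1 loss (illustrative).

    output:       (H, W, N_s) uncorrected network output.
    intermediate: (H, W, N_s) synthetic intermediate hypercube.
    ideal:        (H, W, N_c) target ideal hypercube.
    calibration:  (N_c, N_s) correction matrix (orientation assumed).
    """
    corrected = np.einsum("cs,hws->hwc", calibration, output)
    main = np.mean(np.abs(corrected - ideal))      # corrected vs ideal
    aux = np.mean(np.abs(output - intermediate))   # intermediate supervision
    return float(main + weight * aux)
```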

sRGB reconstruction. For intuitive visualisation of the result, the linearly interpolated snapshot hypercube data, the demosaicked hypercube results and the ideal hypercube data that serve as the ground truth of the network are all converted into sRGB images. This is achieved by first converting the spectral data (corrected with the calibration matrix where relevant) into the CIE XYZ colour space using colour matching functions and assuming a D65 illuminant. We then convert the XYZ colour images into the linear RGB colour space and apply gamma correction to obtain sRGB images.
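The last two steps of this conversion (XYZ to linear RGB, then gamma correction) can be sketched as below, using the standard sRGB matrix for a D65 white point and the standard piecewise transfer function. The preceding spectral-to-XYZ integration requires tabulated colour matching functions and is omitted; the function name `xyz_to_srgb` and the clipping to $[0, 1]$ are choices made for this illustration.

```python
import numpy as np

# Standard XYZ (D65) to linear sRGB matrix, rounded to four decimals.
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])

def xyz_to_srgb(xyz):
    """Convert (..., 3) XYZ values, with Y scaled to [0, 1], to sRGB."""
    xyz = np.asarray(xyz, dtype=np.float64)
    # Linear RGB, clipped to the displayable range.
    linear = np.clip(xyz @ XYZ_TO_LINEAR_SRGB.T, 0.0, 1.0)
    # Piecewise sRGB transfer function (gamma correction).
    low = 12.92 * linear
    high = 1.055 * np.power(linear, 1.0 / 2.4) - 0.055
    return np.where(linear <= 0.0031308, low, high)
```

Because the function operates on the last axis only, it can be applied directly to an image of shape (H, W, 3).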

3. Results

Implementation Details. In our experiment, sensor information from the Ximea xiSpec (MQ022HG-IM-SM4X4-VIS2) snapshot camera was used to simulate the visible range (470–620 nm) mosaic snapshot data. Synthetic image generation and demosaicking were performed on the HELICoiD and ODSI-DB datasets separately. The HELICoiD dataset contains 36 in vivo brain surface hyperspectral cubes in total, which we divided into three groups: 24 images for training, 6 images for validation and the other 6 images for testing. As for the ODSI-DB dataset, there are 122 hypercubes acquired from the line-scan sensor in total. Seventy-eight hypercubes were used for training, 20 for validation and 24 for testing. Since both datasets contain cases where multiple hypercubes were obtained from the same subject, the datasets were split manually to avoid data from the same subject appearing in different groups.

Both loss configurations described in Section 2.2.2 were tested in the experiment. For the perceptual loss configuration, a pre-trained VGG-16 network (Simonyan and Zisserman 2015) was used for feature extraction during the perceptual loss calculation, and the parameters of VGG-16 were fixed during training. In order to increase the number of training samples and limit GPU memory consumption, the hyperspectral data were randomly cropped into smaller spatial patches. Random flipping and rotation by random multiples of $90^\circ$ were also performed for data augmentation. The batch size was set to 3 for all training processes, and the evaluation losses are the same as the training losses. Adam optimisation (Kingma and Ba 2014) was used with an initial learning rate of 0.0001, and the best training models (lowest evaluation loss) after 10,000 epochs were selected for the proposed algorithm.
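The augmentation described above can be sketched as follows. The function name `augment_pair` and the patch size passed by the caller are hypothetical. The same crop, flips and rotation are applied to every cube in the list so that the network input and its targets stay spatially aligned.

```python
import numpy as np

def augment_pair(cubes, patch_size, rng):
    """Apply one random crop, flip and 90-degree rotation to all cubes.

    cubes:      sequence of (H, W, C_k) arrays sharing the same spatial
                size, e.g. the network input and its targets.
    patch_size: side length of the square patch (hypothetical value).
    rng:        a numpy.random.Generator.
    """
    h, w = cubes[0].shape[:2]
    top = int(rng.integers(0, h - patch_size + 1))
    left = int(rng.integers(0, w - patch_size + 1))
    flip_h = rng.random() < 0.5
    flip_v = rng.random() < 0.5
    quarter_turns = int(rng.integers(0, 4))  # 0, 90, 180 or 270 degrees
    out = []
    for cube in cubes:
        patch = cube[top:top + patch_size, left:left + patch_size]
        if flip_h:
            patch = patch[:, ::-1]
        if flip_v:
            patch = patch[::-1]
        out.append(np.rot90(patch, k=quarter_turns, axes=(0, 1)))
    return out
```

Restricting rotations to multiples of $90^\circ$ avoids any resampling of the spectral data, so the augmented patches contain only original pixel values.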

Quantitative Evaluation. Three metrics have been used to evaluate the demosaicking results: the average L1 error, the structural similarity index (SSIM) and the peak signal-to-noise ratio (PSNR). The quantitative results of the demosaicked hyperspectral cubes from the HELICoiD and ODSI-DB datasets are listed in Table 1. In this table, results from both configurations with different auxiliary losses are shown, where the residual U-Net model with perceptual auxiliary loss performs slightly better than the model with L1 auxiliary loss, but the difference is subtle. However, when it comes to cross-dataset evaluation (ODSI-DB → HELICoiD), where the model trained on the ODSI-DB dataset was tested directly on the HELICoiD dataset without any fine-tuning, the perceptual loss model outperforms the L1 loss model markedly. It can also be observed that the cross-dataset results are slightly worse than the results of the model trained directly on the HELICoiD dataset, but they are still acceptable considering the domain gap between the HELICoiD and ODSI-DB datasets.
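For reference, two of the three metrics have simple closed forms, sketched below. The function names and the `data_range` default of 1.0 (i.e. data normalised to $[0, 1]$) are assumptions; SSIM involves local windowed statistics and is left to a dedicated library implementation.

```python
import numpy as np

def mean_l1_error(pred, target):
    """Average absolute difference over all pixels and bands."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(pred - target)))

def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(range^2 / MSE)."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical inputs
    return float(10.0 * np.log10(data_range ** 2 / mse))
```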

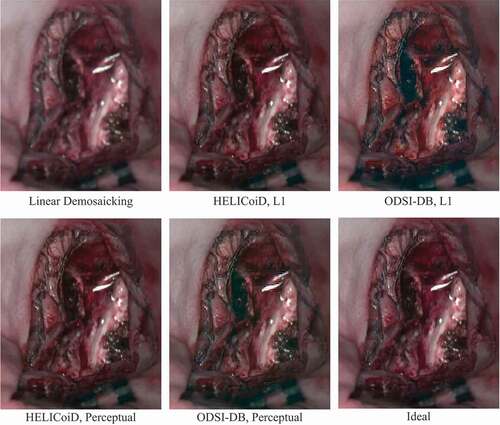

The results were also evaluated based on the perceptual similarity of the sRGB images converted from the hyperspectral data. A perceptual similarity metric, namely LPIPS, was used to approximate image comparison by human perception (Zhang et al. 2018). A lower perceptual score indicates that the two images appear more similar to each other, with a score of 0 representing the best possible case, where the two images are the same. Table 2 lists the perceptual scores of the sRGB images to evaluate the quality of the demosaicked hypercube data. Here, all the test images are from the HELICoiD dataset, and the demosaicking model trained with ODSI-DB is not fine-tuned with any HELICoiD data. The demosaicking algorithm is also compared to the baseline linear demosaicking results, which are derived from the linearly demosaicked and spectrally corrected snapshot images that serve as the input of the residual U-Net, as shown in Figure 2. Similar trends can be observed from this table, where the perceptual loss model outperforms the L1 model. Also, for the HELICoiD dataset in particular, the supervised-learning-based demosaicking algorithm achieves substantially better scores compared to linear demosaicking.

One hyperspectral cube from the HELICoiD test set has been selected to illustrate the result qualitatively, as shown in Figure 3. The sRGB images show that the models trained on the HELICoiD dataset achieve good reconstruction results, with the perceptual loss model producing a slightly sharper image, as can be observed around the vessels for example. On the other hand, the model trained on the ODSI-DB dataset can also recover the spatial resolution of the image to some extent compared to linear demosaicking, but it still suffers from artefacts, as can be observed around the reflections in the image.

Figure 3. Comparison between the sRGB images converted from the ideal hyperspectral cube and demosaicked results from linear interpolation and supervised learning models.

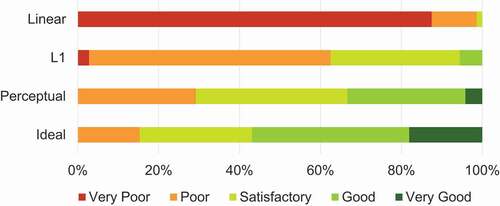

User Study. Besides quantitative analysis of the data, a qualitative user study was conducted to evaluate the quality of the demosaicked images. In this survey, the demosaicked HELICoiD test images were divided into six groups. Each group contains images of the same scene generated with four different demosaicking methods, i.e. linear demosaicking, the proposed algorithm with L1 and perceptual losses, as well as the ideal demosaicked image. The images in each group were randomly shuffled, and the labels were hidden. Twelve clinical experts were involved in the survey, each of whom gave a subjective Likert scale rating (integer score from 1 to 5, with 5 being the best quality) for each image. The quality scores of all experts were gathered and grouped by demosaicking method, and the percentage distributions are shown in the bar graph in Figure 4. The linearly demosaicked images received the lowest average score, and two experts claimed that some images seemed out of focus. The results of the proposed algorithm with the L1 and perceptual loss models received higher average scores, indicating a higher perceptual image quality than linear demosaicking. The ideal demosaicked images achieved the highest average score. We have also performed paired $t$-tests between the scores of linear demosaicking and the L1 loss model, the L1 loss model and the perceptual loss model, as well as the perceptual loss model and the ideal demosaicked images, and the $p$-values are all smaller than the significance level of 0.05. This result indicates that the differences in subjective image quality scores between the different demosaicking methods are all statistically significant.
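The paired $t$-test compares two sets of scores given to the same images by the same raters. A minimal sketch of the test statistic is given below; the function name is hypothetical and no real survey scores are used. Obtaining the $p$-value additionally requires the Student $t$ distribution, for which a statistics library such as SciPy (`scipy.stats.ttest_rel`) can be used.

```python
import math
import statistics

def paired_t_statistic(scores_a, scores_b):
    """Return the paired t statistic and its degrees of freedom.

    scores_a, scores_b: equal-length sequences of ratings for the same
    items under two methods (paired observations).
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1
```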

Figure 4. Percentage distribution of image quality scores in Likert scale given by the clinical experts.

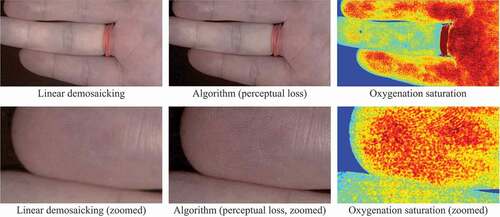

Preliminary Evaluation with Real Data. One of the concerns regarding the supervised-learning-based demosaicking algorithm is that the entire framework relies heavily on synthetic data. Therefore, a real snapshot mosaic image of a hand captured by a Ximea xiSpec (MQ022HG-IM-SM4X4-VIS2) camera was used to validate the effect of the algorithm, as illustrated by the converted sRGB images in Figure 5. The difference between linear demosaicking and the proposed algorithm with the perceptual loss model can be easily observed when zooming in on the details, where fingerprints can be recovered using the proposed algorithm. This result shows the generalisability of the algorithm, especially considering that the two models were never trained on real snapshot images. We also generated a blood perfusion map using the super-resolved hyperspectral data, as shown in Figure 5, based on Tetschke et al. (2016), to demonstrate the potential use of the algorithm in real medical applications. However, the cross-dataset results reported above underline the domain gap between different datasets, which may cause image artefacts.

Figure 5. Preliminary test results on real snapshot data converted into sRGB images. The linear demosaicking and the proposed algorithm are compared. The two images on the right also illustrate oxygenation saturation maps derived from hyperspectral information.

Table 1. Quantitative analysis of the demosaicked hyperspectral cubes from the HELICoiD and ODSI-DB hyperspectral datasets. The second row (L1 and Perceptual) refers to the choice of the auxiliary loss in the algorithm. The ODSI-DB → HELICoiD column indicates that the result is tested on the HELICoiD dataset using a network trained on the ODSI-DB dataset.

Table 2. Perceptual metric scores on the sRGB images generated from the demosaicked hyperspectral cubes. All the results were tested on the HELICoiD dataset, and different demosaicking methods were compared (linear demosaicking, L1 model and perceptual model).

Real-time Performance. We tested our prototype implementation on our computational workstation for clinical research studies (NVIDIA TITAN RTX 24 GB, Intel Core i9-9900K), using Python, C++, OpenGL, CUDA and PyTorch. The proposed algorithm achieved an overall processing time of approximately 45 ms per input image frame, including frame-grabbing, white balancing, bilinear demosaicking, learning-based super-resolved demosaicking, spectral correction and finally either sRGB reconstruction or oxygen saturation map estimation. The PyTorch-based U-Net super-resolution inference runs in 34 ms.

4. Conclusion

In this work, we propose a hyperspectral snapshot image demosaicking algorithm for computer-assisted surgery using synthetic image generation and supervised learning. The simulated snapshot images and their corresponding ideal demosaicked images can be generated from publicly available hyperspectral image datasets acquired by line-scan sensors. A demosaicking framework has been developed that adopts a residual U-Net for hyperspectral image super-resolution and can be trained with the synthetic image pairs. The quantitative and qualitative results show that the supervised learning approach produces better reconstructions than simple linear demosaicking while still achieving a fast processing speed, which is beneficial for integrating the demosaicking algorithm into real-time surgical imaging applications. Future work will include further investigation of the generalisability of the algorithm as more real snapshot data are captured. Since the proposed demosaicking approach separates the learning-based spatial super-resolution from spectral calibration, generalisation of our approach to real snapshot images can be expected, and this expectation is supported by the results of our preliminary real data evaluation. In addition, there is still room for improvement in the speed and image quality of the demosaicking algorithm. Nevertheless, the proposed demosaicking algorithm provides a solid step forward for medical hyperspectral imaging.
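As an illustration of the synthetic pair generation summarised above, the sketch below samples a full-resolution hyperspectral cube into a simulated snapshot mosaic. It is a simplified assumption rather than the exact procedure used in this work: the cube is taken to already have one band per mosaic filter (16 bands for a 4x4 pattern), with no modelling of filter responses or noise, and the function name `simulate_snapshot` is hypothetical.

```python
import numpy as np


def simulate_snapshot(cube, m=4):
    """Sample an (H, W, m*m) cube into an (H, W) snapshot mosaic.

    Pixel (y, x) keeps only band (y % m) * m + (x % m), mimicking a
    per-pixel filter mosaic. The input cube is the ideal demosaicked target.
    """
    h, w, bands = cube.shape
    if bands != m * m or h % m or w % m:
        raise ValueError("cube must have m*m bands and sides divisible by m")
    ys, xs = np.indices((h, w))
    band_index = (ys % m) * m + (xs % m)
    return np.take_along_axis(cube, band_index[..., None], axis=2)[..., 0]


# Hypothetical 8x8 cube with 16 bands; (mosaic, cube) forms one training pair.
cube = np.arange(8 * 8 * 16, dtype=float).reshape(8, 8, 16)
mosaic = simulate_snapshot(cube)
print(mosaic.shape)
```

Each simulated mosaic retains one sixteenth of the samples in the cube, which is why recovering the full cube is an ill-posed problem.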

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Peichao Li

Peichao Li is a PhD student at King's College London supervised by Prof. Tom Vercauteren and Mr. Jonathan Shapey. Peichao received his Bachelor's degree in Engineering from the Australian National University and then completed his MRes degree in Medical Robotics and Image Guided Intervention at Imperial College London.

Michael Ebner

Dr. Michael Ebner is a Royal Academy of Engineering Enterprise Fellow and co-founder & CEO of Hypervision Surgical Ltd. He received his PhD degree in medical image computing from University College London, UK, for his work on volumetric MRI reconstruction from 2D slices in the presence of motion. The NiftyMIC framework he developed is used as a clinical research tool at numerous hospitals and leading academic institutions in various countries including the UK, US, Belgium, Austria, Italy, Spain, and China.

Philip Noonan

Dr. Philip Noonan is a postdoctoral research assistant at King's College London. His research focuses on medical physics and medical image analysis.

Conor Horgan

Dr. Conor Horgan is an Innovate UK Innovation Scholar with a PhD in biomedical engineering. He currently works as a research scientist at Hypervision Surgical Ltd. and as a postdoctoral research associate at King's College London. His work focuses on the development of optical systems for intraoperative surgical diagnostics and guidance.

Anisha Bahl

Anisha Bahl is a PhD student at King's College London supervised by Prof. Tom Vercauteren. She graduated from the University of Oxford with an MChem in Chemistry in 2020. Her current project is on developing a hyperspectral imaging system for use in neuro-oncological surgery to visualise tumour boundaries in real-time.

Sébastien Ourselin

Prof Sébastien Ourselin is Head of School, Biomedical Engineering & Imaging Sciences and Chair of Healthcare Engineering at King's College London. Prior to his recent appointment he was Vice-Dean (Health) at the Faculty of Engineering Sciences, Director of the Institute of Healthcare Engineering and of the EPSRC Centre for Doctoral Training in Medical Imaging, Head of the Translational Imaging Group (72 staff) within the Centre for Medical Image Computing (CMIC) and Head of Image Analysis at the Dementia Research Centre (DRC) at UCL.

Jonathan Shapey

Jonathan Shapey is a Senior Clinical Lecturer at King's College London and a Consultant neurosurgeon in skull base surgery at King's College Hospital. He has first-hand experience in the clinical translation of intraoperative hyperspectral imaging devices. He is also the clinical lead and co-founder of Hypervision Surgical Ltd.

Tom Vercauteren

Prof Tom Vercauteren has been Professor of Interventional Image Computing at King's College London since 2018, where he holds the Medtronic / Royal Academy of Engineering Research Chair in Machine Learning for Computer-assisted Neurosurgery. From 2014 to 2018, he was Associate Professor at UCL, where he acted as Deputy Director for the Wellcome / EPSRC Centre for Interventional and Surgical Sciences (2017-18). From 2004 to 2014, he worked for Mauna Kea Technologies, Paris, where he led the research and development team designing image computing solutions for the company’s CE-marked and FDA-cleared optical biopsy device.

References

- Clancy NT, Jones G, Maier-Hein L, Elson DS, Stoyanov D. 2020. Surgical spectral imaging. Med Image Anal. 63:101699. doi:https://doi.org/10.1016/j.media.2020.101699.

- Dijkstra K, van de Loosdrecht J, Schomaker L, Wiering MA. 2019. Hyperspectral demosaicking and crosstalk correction using deep learning. Mach Vis Appl. 30(1):1–21. doi:https://doi.org/10.1007/s00138-018-0965-4.

- Ebner M, Nabavi E, Shapey J, Xie Y, Liebmann F, Spirig JM, Hoch A, Farshad M, Saeed SR, Bradford R, et al. 2021. Intraoperative hyperspectral label-free imaging: from system design to first-in-patient translation. J Phys D: Appl Phys. 54(29):294003. doi:https://doi.org/10.1088/1361-6463/abfbf6.

- Fabelo H, Ortega S, Szolna A, Bulters D, Piñeiro JF, Kabwama S, J-O’Shanahan A, Bulstrode H, Bisshopp S, Kiran BR, et al. 2019. In-vivo hyperspectral human brain image database for brain cancer detection. IEEE Access. 7:39098–39116. doi:https://doi.org/10.1109/ACCESS.2019.2904788.

- Geelen B, Tack N, Lambrechts A. 2014. A compact snapshot multispectral imager with a monolithically integrated per-pixel filter mosaic. In: Advanced fabrication technologies for micro/nano optics and photonics VII; vol. 8974. International Society for Optics and Photonics, San Francisco, California, United States. p. 89740L.

- Hyttinen J, Fält P, Jäsberg H, Kullaa A, Hauta-Kasari M. 2020. Oral and dental spectral image database—odsi-db. Appl Sci. 10(20):7246. doi:https://doi.org/10.3390/app10207246.

- Johnson J, Alahi A, Fei-Fei L. 2016. Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision, Amsterdam, The Netherlands. Springer. p. 694–711.

- Kaale AJ, Rutabasibwa N, Mchome LL, Lillehei KO, Honce JM, Kahamba J, Ormond DR. 2021. The use of intraoperative neurosurgical ultrasound for surgical navigation in low- and middle-income countries: the initial experience in Tanzania. J Neurosurg JNS. 134(2):630–637. https://thejns.org/view/journals/j-neurosurg/134/2/article-p630.xml.

- Kerfoot E, Clough J, Oksuz I, Lee J, King AP, Schnabel JA. 2019. Left-ventricle quantification using residual u-net. In: Statistical Atlases and Computational Models of the Heart. Atrial Segmentation and LV Quantification Challenges. Cham: Springer International Publishing. p. 371–380.

- Kingma D, Ba J. 2014. Adam: a method for stochastic optimization. In: International Conference on Learning Representations, San Diego, California, United States.

- Lu G, Fei B. 2014. Medical hyperspectral imaging: a review. J Biomed Opt. 19(1):010901. doi:https://doi.org/10.1117/1.JBO.19.1.010901.

- Lugmayr A, Danelljan M, Timofte R. 2020. NTIRE 2020 challenge on real-world image super-resolution: methods and results. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, Washington, United States. p. 494–495.

- Pichette J, Goossens T, Vunckx K, Lambrechts A. 2017. Hyperspectral calibration method for CMOS-based hyperspectral sensors. In: Soskind YG, Olson C, editors. Photonic instrumentation engineering IV; vol. 10110. International Society for Optics and Photonics, San Francisco, California, United States; SPIE; p. 132–144.

- Ronneberger O, Fischer P, Brox T. 2015. U-net: convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany. Springer. p. 234–241.

- Shapey J, Xie Y, Nabavi E, Bradford R, Saeed SR, Ourselin S, Vercauteren T. 2019. Intraoperative multispectral and hyperspectral label-free imaging: a systematic review of in vivo clinical studies. J Biophoton. 12(9):e201800455. doi:https://doi.org/10.1002/jbio.201800455.

- Simonyan K, Zisserman A. 2015. Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations, San Diego, California, United States. p. 1–14.

- Tetschke F, Markgraf W, Gransow M, Koch S, Thiele C, Kulcke A, Malberg H. 2016. Hyperspectral imaging for monitoring oxygen saturation levels during normothermic kidney perfusion. J Sens Sens Syst. 5(2):313–318. doi:https://doi.org/10.5194/jsss-5-313-2016.

- Valdes PA, Juvekar P, Agar NYR, Gioux S, Golby AJ. 2019. Quantitative wide-field imaging techniques for fluorescence guided neurosurgery. Front Surg. 6:31. https://www.frontiersin.org/article/10.3389/fsurg.2019.00031.

- Wagadarikar A, John R, Willett R, Brady D. 2008. Single disperser design for coded aperture snapshot spectral imaging. Appl Opt. 47(10):B44–B51. doi:https://doi.org/10.1364/AO.47.000B44.

- Zhang R, Isola P, Efros AA, Shechtman E, Wang O. 2018. The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, Utah, United States. p. 586–595.

- Zhuang L, Bioucas-Dias JM. 2018. Hy-demosaicing: hyperspectral blind reconstruction from spectral subsampling. In: IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain. p. 4015–4018.