?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

3D reconstruction is a useful tool for surgical planning and guidance. Supervised methods for disparity/depth estimation are the state of the art, with demonstrated performances far superior to all alternatives, such as self supervised and traditional geometric methods. However, supervised training requires large datasets, and in this field, data is lacking. In this paper, we investigate the learning of structured light projections to enhance the development of disparity estimation networks. Improving supervised learning on small datasets without needing to collect extra data. We first show that it is possible to learn the projection of structured light on a scene. Secondly, we show that the joint training of structured light and disparity, using a multi-task learning (MTL) framework, improves the learning of disparity. Our MTL setup outperformed the single task learning (STL) network in every validation test. Notably, in the generalisation test, the STL error was 1.4 times worse than that of the best MTL performance. A dataset containing stereoscopic images, disparity maps and structured light projections on medical phantoms and ex vivo tissue was created for evaluation together with virtual scenes. This dataset will be made publicly available in the future.

1. Introduction

Recently, it has been shown that when large datasets are available, deep learning approaches define the state-of-the-art in 3D scene reconstruction (Zhao et al. Citation2020; Laga et al. Citation2020). This is fundamentally due to a neural network’s ability to learn more complex representations of image data than can be handcrafted. However, the coupling of data volume and performance is an issue, particularly for domains that have limited data availability such as surgery (Hashimoto et al. Citation2018; Willemink et al. Citation2020). Capturing large amounts of depth information for surgery, especially Minimally Invasive Surgery (MIS), is laborious, due to issues with hardware constraints and the difficulty of dealing with the tissue; primary issues with the deformation of the tissue which can disturb information capture. This problem is reflected in the SCARED dataset (Allan et al. Citation2021), which is the largest annotated depth dataset for surgical scenes, but only contains 45 unique and complete depth images. Training on small datasets complicates the development of networks (Qi and Luo Citation2020; Brigato and Iocchi Citation2021), due to the risk of overfitting (Ying Citation2019). To obviate ground truth, stereo self-supervision (Godard et al. Citation2017) approaches have been developed; learning disparity by training to warp the left image to become the right and vice versa. However, the results are still considerably worse than what is achieved by supervised networks (Uhrig et al. Citation2017). Accurate 3D reconstruction can provide surgeons a tool for surgical planning and guidance (Hersh et al. Citation2021). Therefore, strategies for overcoming data limitation are desperately needed. Otherwise, the development of accurate neural networks for surgery is unachievable.

Structured light is currently the most dense and accurate approach for creating ground truth information for depth datasets. Example datasets that were created using structured light include NYU (Silberman and Fergus Citation2011), Middlebury Stereo (Scharstein and Szeliski Citation2003; Scharstein et al. Citation2014) and SCARED. Structured light is the projection of patterns into a scene, which when captured by an imaging camera, allows for depth recovery through analysis of the pattern distortions (Salvi et al. Citation2004). Commonly, these pattern projection images are used exclusively for ground truth depth generation. Once depth has been captured, the pattern projection images are discarded afterwards. However, error will occur in the conversion process due to difficulties relating to the environment, surface properties and the hardware (Gupta et al. Citation2011; Jensen et al. Citation2017; Rachakonda et al. Citation2019). Primarily, errors will occur at the pixel classification stage which for example can be caused by reflections or hardware malfunction. This means that the information within the structured light images and the generated depth maps are not the same.

In this paper, we are proposing a unique approach to depth estimation. Firstly, we show that it is possible in itself to teach a neural network to be able to artificially project structured light patterns into a scene. Which is indirectly learning to perform disparity estimation. To the best of our knowledge, this is the first use of a neural network for the learning of the projection of structured light patterns into a scene. Secondly, we show that the dual training of direct disparity regression and structured light projection, via multi-task learning, enhances network training and improves disparity learning without increasing the number of parameters nor requiring the collection of additional ground truth data.

2. Related Work

2.1. Active Sensors

Structured light can be used to produce unique pixel codes for each stereo image, which enables the use of simple matching techniques for the stereo disparity calculation. This approach can provide dense and accurate depth maps for a given stereo image. However, structured light requires a controlled environment, multiple hardware and sometimes temporal variation. Certain structured light patterns require sequential projection of different patterns over time, such as binary/grey code which is what this paper works with. The control of the environment, when performing structured light projection, is important for good pixel classification. Robust classifiers are normally required to prevent incorrect classification (Salvi et al. Citation2004). These limitations have large ramification for setups designed for real-time performance in dynamic environments. Our proposed method for structured light reconstruction removes the need for the additional hardware and the temporal requirement.

2.2. Depth estimation

The state-of-the-art for 3D reconstruction is defined by deep learning approaches that directly regress depth values. The general consensus within the deep learning community is that a neural network will discover a better way to solve the stereo matching problem when given free roam in an end-to-end setup where there is limited human supervision. Examples of these networks could be, RAFTStereo (Lipson et al. Citation2021) or PSMnet (Chang and Chen Citation2018) for stereo and DORN (Fu et al. Citation2018) for monocular. The prevalent issue with these networks is the requirement of large training datasets. In the papers mentioned, the networks are pre-trained on scene-flow which contains approximately 60k images, then fine-tuned on popular sets like KITTI. Although generalisability has been shown to be largely acheivable, the further the data similarity is to what has been trained on, the worse the performance. This poses a large hurdle when the 3D reconstruction task is for specific and niche tasks. In this paper, we show that recycling structured light images, which is data commonly acquired to produce depth ground truth, can improve the performance of disparity estimation techniques especially when data are limited.

2.3. Multi Task Learning

Multi-task learning (MTL) has already been proposed for depth/disparity estimation purposes. In previous works, the training of depth/disparity, has, for example, been combined with the training of semantic segmentation or instance segmentation (Sener and Koltun Citation2018; Kendall et al. Citation2018). These works have shown that it is possible to achieve an improved depth/disparity performance in comparison to when trained as a single task. However, as has been identified in previous literature (Standley et al. Citation2020), MTL does not guarantee improved performance and balancing the combination of tasks and the combination of weights of the loss terms is difficult. In this work we show that it is possible to use structured light reconstruction in a MTL framework to improve disparity learning. Where the ground truth requires no annotation.

3. Method

In this section, we introduce our stereo disparity estimation techniques. Firstly, we propose the first deep learning model to learn the projection of structured light patterns onto a scene. The goal of this model is to show that it is possible to accurately learn structured light patterns that respect the stereoscopic perspectives and verify that structured light information can be used for 3D reconstruction purposes and should not be discarded after ground truth information has been generated; which is what is most commonly done. Secondly, we propose a novel MTL method for disparity estimation, showing that MTL can enhance disparity learning by dual training on structured light.

3.1. Dataset generation

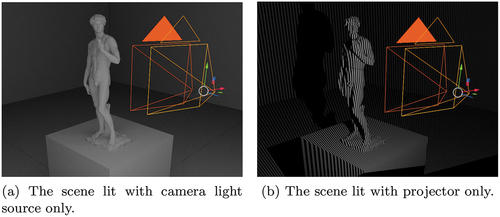

Virtual dataset: Due to the lack of any publicly available datasets containing structured light patterns, we had to create our own dataset. A simulated environment was developed using Blender (Community Citation2018) to enable automatic generation of virtual scenes containing cultural objects, as shown in . The VisionBlender (Cartucho et al. Citation2020) was used to generate the ground truth information.

Figure 1. Demonstration of the virtual environment. The virtual camera is represented in orange and the projector object is represented in yellow.

The virtual environment is simple, consisting of a cubic room of volume 1 and a central podium of size 10

, which is used to hold different objects. All the surfaces in the whole virtual scene are grey and there is no texture, including the surfaces of the objects. The projector (Schell Citation2021) was rigidly attached to the camera object with a rotation of

and a translation of

. 800 cultural artefact objects, were used as the diversity factor in this dataset. The objects contain complex 3D surfaces and a smooth texture, making them challenging to reconstruct using existing 3D reconstruction approaches. All the objects were downloaded from the ‘Scan The World’ project which is hosted by MyMiniFactory (Beck Citation2019). The dimensions were resized using a uniform distribution

. For each object, 10 stereo image-pairs were captured from different view points. The camera is always looking towards the geometrical centre of the object. The distance between the camera and that geometrical centre is sampled from

. Respecting these two constraints, the pose of the 10 viewpoints are chosen at random.

For each viewpoint, the stereo images and the associated ground truth data were generated. The ground truth includes the depth/disparity maps and stereo images without and with eight projected binary patterns. For each projection, the number of vertical lines equals , where n is the projection number. The resolution of the captured images are

. This dataset contains approximately 8000 images.

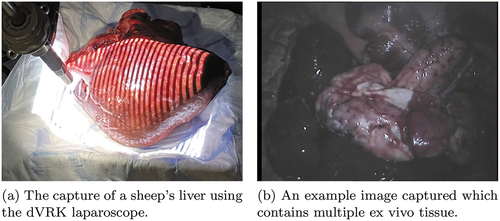

Figure 2. Shown in (a) and (b) is the setup for the medical dataset collection. Image (a) shows an example of tissue captured using the dVRK laparoscopy. (b) does not contain projection patterns and would be fed as an input to the neural network.

Medical dataset: A medical dataset was created using the da Vinci Research Kit (dVRK) (Kazanzides et al. Citation2014) to extend the evaluation of the model onto real images. The dVRK was chosen as it is the research model of the surgical system that is used in clinical practice and commonly used for research in this field. A structured light projector was attached to a da Vinci camera arm and the stereo laparoscope of the machine was used to capture scenes with projected patterns on medical phantoms and ex-vivo organs from sheep, cows and chicken. Gray code was used for this dataset with 9 patterns. The decoding was performed using the three-phase algorithm (Xu et al. Citation2022). A turning table was used to hold the objects and were rotated for multiple perspectives. shows the setup and an example image which would be fed as the input to the neural network (an image without projection patterns).

3.2. Structured light reconstruction

Given a stereo image pair, a neural network has been designed and trained to predict how structured light patterns should project on the scene surfaces of each image, separately. Specifically, the network projects binary and grey code structured light patterns, which consist of vertical bars of white and black colour. To predict these patterns, the UNet (Ronneberger et al. Citation2015) network is trained as a per pixel binary classifier; 1 (white) or 0 (black). These predicted patterns can then be used to generate disparity or depth maps by performing 2D cross correlation over the epipolar lines. This reveals how the network has understood the 3D scene.

The input to the UNet is a pair of rectified stereo images (which do not contain any projection patterns), concatenated along the channel axis, I=[Il;Ir], Il,Ir

. The network is tasked to predict structured light projections in each input stereo image, where every pixel requires a unique code along a horizontal epipolar line.

denotes the ground truth patterns and

the neural network output which is defined as

=[

;

],

,

. The parameter

denotes the number of projected patterns and

for the virtual dataset and

for the medical dataset.

Losses: Two losses were chosen for the structured light learning. Since the network is trained to produce a binary mask, binary cross entropy (bce) loss defined in Eq.(1) is used as the primary loss. To emphasise the pattern edges, an L2 loss is used on the horizontal derivatives as in Eq.(2). These derivatives are calculated by convolving over the images using a Prewitt operator mask, generating and

which are the derivatives of the ground truth and predicted output, respectively, with elements

;

. These two individual losses are summed together in Eq.(3) with a weighting gain of

.

Extracting disparity from structured light: To generate the disparity map using the proposed structure light projection network, cross correlation is performed along the epipolar lines of a pair of rectified stereo images, fully connected along the pattern dimension. A patch, represented by , where k is the reference index, of size

is taken in

, and cross correlation is performed over a range of pixels in

along the epipolar lines. More specifically, for every pixel on the left image, the cross correlation is estimated for pixels on the right image which are along the epipolar line at a distance within the maximum considered disparity for each dataset, individually. In our work, the maximum disparity has been set to

of the width of the image. The cross correlation on the right image will start from the same position as the examined patch on the left image, striding with a step

. The patch size was chosen after tuning.

3.3. Direct disparity estimation with multi task leaning

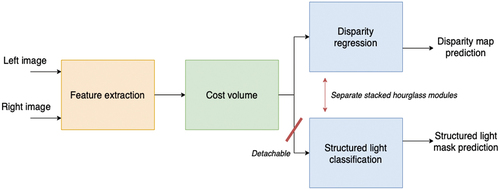

For this task, we are exploring the benefits of training disparity and structured light jointly. Specifically, we investigate the relative effect of introducing the MTL framework, whether or not the MTL framework improves the disparity estimation performance produced using STL. The PSMNet (Chang and Chen) architecture and training procedure were chosen as it is one of the more recognised architectures for disparity estimation and has recorded competitive performances in benchmark challenges. However, to note, any other architecture could have been used. In this work, the PSMNet architecture was modified in such a way that no added complexity or increased number of parameters is introduced for the disparity estimation path. Compartmentalising the design allows the structured light section to be detached when training is complete.

The PSMNet contains a stacked hourglass module to regress disparity. The modification which we introduced to the architecture to create the multi-task framework was done at this point. More specifically, the stacked hourglass module was duplicated; so that there is bifurcation after the cost volume. This results in two parallel paths, one for either task as shown in . A disparity range of 96 was chosen. The training is performed on RGB images with dimensions . In the evaluation stage, the outputs are resized using bilinear interpolation to match the original dimensions which are

for the virtual images,

for the medical images and

for the SCARED images.

Figure 3. Simple block diagram representation of the multi task learning framework that has been applied on top of the PSMNet architecture.

The loss function is composed of the structured light cross entropy loss Eq.(1) and an L2 disparity loss. In our work, three different weighting strategies have been used to combine these loss terms. This is because multi-task learning is complex and different strategies benefit different tasks in a way which is generally unknown before experimentation is performed. Firstly, a simple constant scaling of the structured light loss was done with Eq.(6). Secondly, the training strategy from (Liu et al. Citation2019) was implemented Eq.(7)-(8), which modifies the weights every epoch. Parameters and

are the task weightings; a product of the ratio of the previous epoch’s total losses. Here,

and

. The parameter

is used to balance the task weighting distribution, and

is used to gain the softmax. The values were chosen after extensive experimentation. Thirdly, the uncertainty weighting strategy from (Kendall et al. Citation2018) was implemented as in Eq.(9). The weight

has been introduced to prioritise the disparity learning. The

parameters denote the observed noise, in practice, these are learnable parameters used to weight each loss.

4. Results

4.1. Evaluation of structured light reconstruction

In this section, the reconstruction capability of the proposed artificially projected structured light model is assessed, using the disparity maps generated with cross correlation, as described in Sec. 3.2. The aim of this validation is not to compare the performance of the proposed structured light reconstruction model to state-of-the-art disparity estimation models. Rather, we want to explore the benefits of using this alternative approach for disparity estimation and evaluate whether or not the results from the proposed method meet the expected levels of accuracy produced by the conventional approach of directly regressing disparity. More specifically, the hypothesis that this validation aims to verify is that using the structured light in the training process, through Multi-Task Learning (MTL), offers improved performance compared to Single-Task Learning (STL). Without requiring extra parameters for the disparity estimation path and not requiring extra data collection; alleviating issues of data limitation.

A comparison network was trained to directly estimate disparity; using the same UNet architecture. The network was trained to predict disparity using a scaled Sigmoid. The output is mapped to the width of the image. An L2 on the disparities was used as the loss function. This network was used to control the experiments and remove the influence of the network architecture on the comparison of the results. There are no major architectural differences between the network proposed for structured light reconstruction and this comparison network. With the exception of the final layer which has been modified to accommodate the output dimension requirements. Comparison to state-of-the-art disparity estimation models is out of the scope of this validation as our focus is to prove the viability of reconstructing structure light.

The 3D reconstruction results are presented in . The first and second column contain the mean absolute error (MAE) for each model. Both training and evaluation is performed on greyscale images of dimension . The network was trained on a single NVIDIA GeForce RTX 3080 10GB graphics card. Adam (Kingma and Ba Citation2015) was chosen as the optimiser, with a fixed learning rate of 0.0001. The deep learning model was coded using the PyTorch framework (Paszke et al. Citation2019)

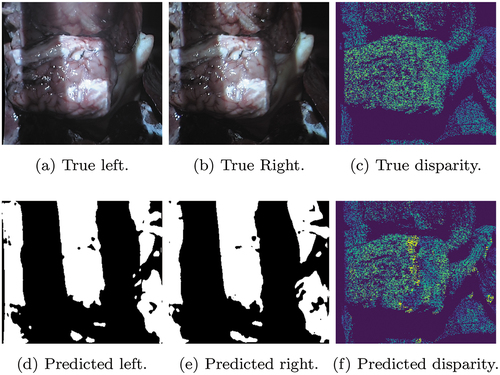

Figure 4. This figure contains an example output from the structured light network applied on the medical dataset. The top row contains the ground truth images and the bottom row shows the predictions.

Table 1. Mean absolute error (MAE) produced by the proposed structured light network (with the disparity produced using the cross correlation) and the direct disparity network.

Virtual dataset validation: The results on the virtual dataset, at the first two rows of , show that it is possible to achieve good reconstruction using the proposed approach. Virtual Seg denotes the metrics for the virtual object and the podium, segmented using masks provided by VisionBlender. Comparison between both approaches shows that comparable performance can be expected. For the virtual data, the training provided to the disparity network is higher quality than the structured light, as the disparity is extracted directly from the simulation environment. We hypothesise that this is the primary cause of the performance difference between the two implementations. The training/test split was 80/20, at the object level.

Medical dataset validation: Taking the networks trained on the virtual scenes, fine-tuning on the medical dataset, shown in , is performed to reveal the benefit of using structured light when the size of the datasets are limited. The training data for the structured light was 9 times larger than for the direct disparity network because nine patterns were collected for every depth map. The results are shown in the bottom row of . The accuracy recorded from the proposed method was twice as high as the direct regression approach. This verifies that having the larger volume of data for structured light training, when the datasets are small, improves the accuracy of the estimated disparity because of the increased complexity of the task and the increased number of training samples. The training/test split was 80/20, at the keyframe level.

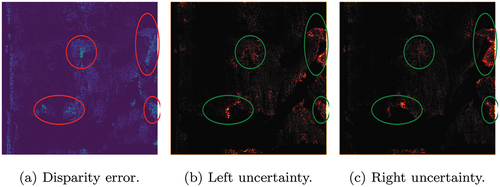

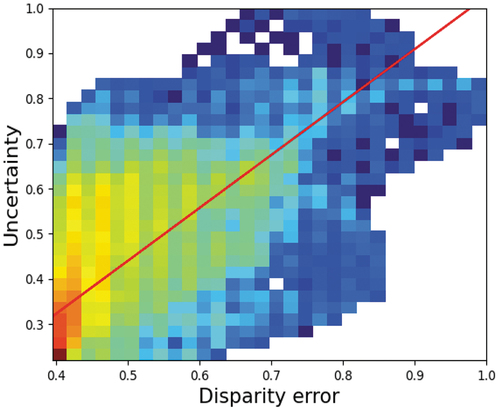

Uncertainty: As the network performs classification, it is easy to acquire the confidence in the predictions (Cao et al. Citation2018); the closer the Sigmoid output is to 0.5 the less confident the network is. An empirical assessment of the correlation between the disparity error and network confidence was made. It was concluded that there was a correlation between confidence and accuracy. An example of this is shown in . The ellipses highlight the same areas in each image. These results show a correlation between areas of high uncertainty and areas of low accuracy. displays a histogram plot of the uncertainty and error in the above example. Each histogram bin represents the number of occurrences for each combination of uncertainty and disparity error. A positive correlation is seen between the disparity error and the uncertainty. This is numerical evidence of the correlation observed in . Analysing confidence is critical for healthcare application and the adoption of deep learning methods in clinical practice. This is an advantage for using structured light reconstruction, over disparity estimation, as this is naturally a classification task (where the classification is the final output).

4.2. Evaluation of the benefit of using multi task learning for the enhancement of disparity learning

In this section, we explore the benefit of using joint training for the purpose of improving the performance of the disparity estimation compared to when performing single task learning. 5 algorithms are explored here. The standard PSMNet for single task learning (disparity) is used as the benchmark; denoted by . Then, the modified, multi-task learning PSMNet is trained 4 times using four different strategies. Firstly,

in Eq.(6) is implemented twice, where

; empirically chosen. For simplicity, we denote the constant gain algorithms using:

and

. Secondly,

in Eq.(8) is implemented; denoted by

. Finally,

in Eq.(9) is implemented; denoted by

.

Virtual dataset validation: Firstly, all algorithms are assessed using the virtual dataset. The results are shown in . Again, Full is the MAE for the entire scene and Seg is the MAE for the podium and object only. This experiment is performed twice, firstly on the entire training set and secondly, on 1/16th of the training set; to see the impact of learning on a smaller dataset. 120 epochs were used for training; we determined this number after multiple experiments, balancing computation time and convergence. For the entire dataset experiment, all networks perform quite similarly, but with two of the multi-task learning algorithms achieving slightly better results. However, on the 1/16th experiment the relative performance of drops, which highlights the regularising benefits of using the proposed MTL framework. The extra complexity and the extra data have prevented overfitting to the fewer training samples. We observe that

also drops in ranking; highlighting the difficulty of balancing the weighting distribution.

Table 2. Comparison of multi task learning vs single task learning on the virtual dataset. Two types of experiments were run, the first was using the full training set, the second was using 1/16th of the training set. Assessing the general performance of each algorithm when training on a large and a small dataset.

Generalisability evaluation: We explore the generalisability properties of the networks trained on the entire virtual dataset by evaluating them on the SCARED dataset and the dense Middlebury 2014 Stereo training dataset. The virtual dataset that was used for training is limited, and therefore, benchmark results were not expected to be achieved. This test was constructed only to compare the performance of using STL and MTL; investigating the impact of the MTL framework with structured light learning. The SCARED dataset contained two test sets (TS8 and TS9), which collectively contain 45 unique images, which were warped to create a much larger volume of test data. So, to avoid the influence of error created during the artificial warping, only the 45 unmodified and complete images are used for evaluation. shows the results of this experiment. The main point of interest here is the position of the algorithm. In all datasets, the

algorithm performs worst. What can be understood from these results is that even though the performance of

was comparable to the multi-task learning performances on the virtual dataset, it has overfitted to the virtual dataset distribution. Whereas, the MTL framework has provided a regularising effect during training, which has resulted in greater generalisability.

Table 3. Generalisability evaluation using SCARED (Allan et al. Citation2021) and the Middlebury 2014 (Scharstein et al. Citation2014). The choice of displaying the depth error for SCARED and disparity error for Middlebury reflects what is commonly done. The results show that the model has achieved poorer generalisability than the MTL approaches. TS denotes the test sets in SCARED. IQR denotes the interquartile range.

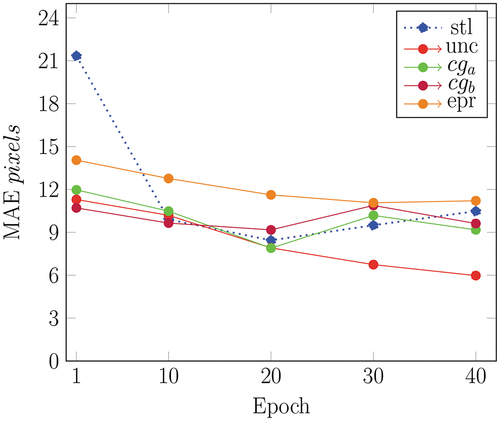

Medical dataset validation: Commonly in medical imaging, training data with ground truth is limited. To tackle this limitation, the standard strategy is to pre-train a neural network on general and large datasets and then fine-tune on data specific to the task. To replicate this scenario, we use the models developed on the entire virtual dataset and fine-tune on the medical dataset that we created. Each network was fine-tuned for 40 epochs. The results shown in highlight the training stability of the compared models. The blue dotted line represents the performance. Overfitting can be inferred from the descent behaviour across the 40 epochs, due to the divergence of the validation accuracy. This is also reflected in the performance for the MTL models:

,

,

. However, for

the training is stable and it also achieves the best disparity MAE performance. Which again demonstrates the benefit of the multi task learning framework, when the correct training strategy is implemented.

5. Conclusion

In this paper, a novel approach to solving disparity estimation has been proposed that uniquely uses structured light information. We have proposed the first neural network to artificially project structured light patterns onto stereo images. This has allowed 3D reconstruction to be achieved using simple post-processing similarity metrics. The proposed 3D reconstruction approach requires no explicit depth information during training. The performance evaluation results show that the proposed model accurately respects the surface geometry and achieves similar performance when compared to a direct regression network. As this proposed approach uses classification, it is also possible to estimate confidence in the disparity predictions, which is critical for tasks with high risk. This research was then extended, by designing a novel MTL framework to jointly predict structured light and disparity. Our validation verifies that introducing this MTL framework improves the generalisability and capability of learning from small datasets, for disparity estimation. All without increasing the number of parameters for the disparity estimation and using data which is already available and does not require extra annotation. Specifically, the MTL model produced results that were consistently better than

, which demonstrates that when the correct multi-task learning strategy is implemented, this is a better approach for developing a direct disparity estimation network. Our future work will focus on expanding our database to allow further validation of our work.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Alistair Weld

Alistair Weld is a PhD student at The Hamlyn Centre, Imperial College London, UK. His research interests include: computer vision, deep learning and robotic surgery.

Stamatia Giannarou

Stamatia Giannarou is a Royal Society University Research Fellow at The Hamlyn Centre, Imperial College London, UK. Her main research interests include: visual recognition and surgical vision.

References

- Allan M, McLeod AJ, Wang CC, Rosenthal JC, Hu Z, Gard N, Eisert P, Fu K, Zeffiro T, Xia W, et al. 2021. Stereo correspondence and reconstruction of endoscopic data challenge. ArXiv Abs/2101,01133.

- Beck J 2019. Scan the world; [https://www.myminifactory.com/scantheworld].

- Brigato L, Iocchi L 2021. A close look at deep learning with small data. 2020 25th International Conference on Pattern Recognition Virtual-Milan, Italy (ICPR):2490–2497.

- Cao Y, Wu Z, Shen C. 2018. Estimating depth from monocular images as classification using deep fully convolutional residual networks. IEEE Trans Circuits Syst Video Technol. 28:3174–3182. doi:10.1109/TCSVT.2017.2740321.

- Cartucho J, Tukra S, Li Y, Elson DS, Giannarou S. 2020. Visionblender: a tool to efficiently generate computer vision datasets for robotic surgery. Comput Methods Biomech Biomed Eng Imaging Vis. 9:331–338. doi:10.1080/21681163.2020.1835546.

- Chang JR, Chen YS 2018. Pyramid stereo matching network. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Salt Lake City, UT, USA: p. 5410–5418.

- Community BO 2018. Blender - a 3d modelling and rendering package. Stichting Blender Foundation, Amsterdam: Blender Foundation. Available from: http://www.blender.org

- Fu H, Gong M, Wang C, Batmanghelich K, Tao D 2018. Deep ordinal regression network for monocular depth estimation. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA: p. 2002–2011.

- Godard C, Aodha OM, Brostow GJ 2017. Unsupervised monocular depth estimation with left-right consistency. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA (CVPR):6602–6611.

- Gupta M, Agrawal A, Veeraraghavan A, Narasimhan SG. 2011. Structured light 3d scanning in the presence of global illumination. Cvpr 2011. 713–720.

- Hashimoto DA, Rosman G, Rus D, Meireles OR. 2018. Artificial intelligence in surgery: promises and perils. Ann Surg. 268:70–76. doi:10.1097/SLA.0000000000002693.

- Hersh AM, Mahapatra S, Weber-Levine C, Awosika T, Theodore JN, Zakaria HM, Liu A, Witham TF, Theodore N. 2021. Augmented reality in spine surgery: a narrative review. HSS J®: The Musculoskelet J Hospital for Spec Surgery. 17:351–358. doi:10.1177/15563316211028595.

- Jensen S, Wilm J, Aanæs H 2017. An error analysis of structured light scanning of biological tissue. In: Scandinavian Conference on Image Analysis, Tromsø, Norway: Springer. p. 135–145.

- Kazanzides P, Chen Z, Deguet A, Fischer GS, Taylor RH, DiMaio SP 2014. An open-source research kit for the da vinci® surgical system. In: 2014 IEEE international conference on robotics and automation (ICRA), Hong Kong, China: IEEE. p. 6434–6439.

- Kendall A, Gal Y, Cipolla R 2018. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA: p. 7482–7491.

- Kingma DP, Ba J. 2015. Adam: a method for stochastic optimization. CoRr Abs/1412 abs/1412. p. 6980.

- Laga H, Jospin LV, Boussaïd F, Bennamoun M. 2020. A survey on deep learning techniques for stereo-based depth estimation. IEEE transactions on pattern analysis and machine intelligence. 44 p. 1738–1764.

- Lipson L, Teed Z, Deng J 2021. Raft-stereo: multilevel recurrent field transforms for stereo matching. 2021 International Conference on 3D Vision (3DV), Virtual-Surrey, UK: p. 218–227.

- Liu S, Johns E, Davison AJ 2019. End-to-end multi-task learning with attention. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Long Beach, CA, USA. p. 1871–1880.

- Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, et al. 2019. Pytorch: an imperative style, high-performance deep learning library. In: Advances in neural information processing systems 32. Curran Associates, Inc.; p. 8024–8035. Available from: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

- Qi GJ, Luo J 2020. Small data challenges in big data era: a survey of recent progress on unsupervised and semi-supervised methods. IEEE transactions on pattern analysis and machine intelligence. PP.

- Rachakonda PK, Muralikrishnan B, Sawyer D. 2019. Sources of errors in structured light 3d scanners. In: Dimensional Optical Metrology and Inspection for Practical Applications VIII, Baltimore, Maryland, USA. 10991 (SPIE). p. 25–37.

- Ronneberger O, Fischer P, Brox T. 2015. U-net: convolutional networks for biomedical image segmentation. In: International Conference on Medical image computing and computer-assisted intervention. Munich, Germany: Springer. p. 234–241.

- Salvi J, Pagès J, Batlle J. 2004. Pattern codification strategies in structured light systems. Pattern Recognit. 37:827–849. doi:10.1016/j.patcog.2003.10.002.

- Scharstein D, Hirschmüller H, Kitajima Y, Krathwohl G, Nesic N, Wang X, Westling P. 2014. High-resolution stereo datasets with subpixel-accurate ground truth. German conference on pattern recognition, Münster, Germany: Springer. p. 31–42.

- Scharstein D, Szeliski R 2003. High-accuracy stereo depth maps using structured light. 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003 Proceedings, Madison, WI, USA. 1 p.I–I.

- Schell J 2021. Projectors; [https://github.com/Ocupe/Projectors].

- Sener O, Koltun V. 2018. Multi-task learning as multi-objective optimization. Adv Neural Inf Process Syst. 31.

- Silberman N, Fergus R 2011. Indoor scene segmentation using a structured light sensor. 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain: p. 601–608.

- Standley TS, Zamir AR, Chen D, Guibas LJ, Malik J, Savarese S. 2020. Which tasks should be learned together in multi-task learning? International Conference on Machine Learning, Virtual, PMLR. p. 9120–9132.

- Uhrig J, Schneider N, Schneider L, Franke U, Brox T, Geiger A 2017. Sparsity invariant cnns. In: International Conference on 3D Vision (3DV), Qingdao, China. p. 11–20.

- Willemink MJ, Koszek WA, Hardell C, Wu J, Fleischmann D, Harvey H, Folio LR, Summers RM, Rubin D, Lungren MP. 2020. Preparing medical imaging data for machine learning. Radiology:192224. 295:4–15. doi:10.1148/radiol.2020192224.

- Xu C, Huang B, Elson DS. 2022. Self-supervised monocular depth estimation with 3-d displacement module for laparoscopic images. EEE Trans Med Robot Bionics. 4(2):331–334. doi:10.1109/TMRB.2022.3170206.

- Ying X. 2019. An overview of overfitting and its solutions. J Phys Conf Ser. 1168:022022. doi:10.1088/1742-6596/1168/2/022022.

- Zhao C, Sun Q, Zhang C, Tang Y, Qian F. 2020. Monocular depth estimation based on deep learning: an overview. Sci China Technol Sci. 63:1–16. doi:10.1007/s11431-020-1582-8.