ABSTRACT

Breast cancer is one of the most common types of cancer in women. Early and accurate diagnosis can increase the chances of successful treatment and decrease the mortality rate. The development of accurate and reliable Computer-Aided Diagnosis (CAD) systems using breast cancer images is therefore an immediate priority for early diagnosis. Deep learning methods play a widespread role in CAD systems. Despite their advantages, most deep learning-based CAD systems cannot quantify the uncertainty of their predictions, and unquantified uncertainty in the predicted results of a CAD system might endanger people’s lives at these crucial steps of breast cancer classification. Therefore, in this study, the Monte Carlo Dropout method is utilised as an uncertainty quantification method. Furthermore, unlike most existing studies, we present an uncertainty-aware deep learning model that can accurately classify different types of images, namely mammograms and ultrasound images. The results show that the proposed model achieved an accuracy of 99.95% on the mammogram dataset (DDSM), and 98.40% and 91.34% on the ultrasound datasets BUSM and BUSI, respectively. Finally, since we found that the proposed model shows high uncertainty in misclassifications, we suggest using the uncertainty values to select appropriate subsets of cases for further inspection in order to increase performance.

1. Introduction

Breast cancer is a type of cancer that develops from breast tissue. Worldwide, breast cancer is the most common cancer in women, other than nonmelanoma skin cancer (Waks and Winer Citation2019). Early and accurate diagnosis of breast cancer can increase the treatment chances and decrease the mortality rate (Yassin et al. Citation2018; Balkenende et al. Citation2022).

Medical imaging is the most effective method for the diagnosis of breast cancer (Yassin et al. Citation2018; J Gaona et al. Citation2020; Murtaza et al. Citation2020; Mi et al. Citation2021; Balkenende et al. Citation2022). Mammography is the gold standard for breast cancer screening. However, to overcome the limitations of mammography, especially in dense breasts, clinicians suggest supplemental screening with ultrasound imaging (Geisel et al. Citation2018).

Accurate diagnosis nevertheless requires experienced medical specialists. A Computer-Aided Diagnosis (CAD) system is therefore needed to reduce operator dependency. A CAD system can also help to reduce the errors, effort, time and cost of the diagnosis process (Zaalouk et al. Citation2022).

Over the years, machine learning (ML) and deep learning (DL) methods have been applied to areas such as electronic health services (Gupta et al. Citation2019), Internet of Things-based healthcare (Ben Othman et al. Citation2022), data extraction (Bhuyan et al. Citation2022; Li et al. Citation2023) and medical image processing (Ferdous Aurna et al. Citation2022; Ding et al. Citation2023; Wang et al. Citation2024). In recent years, advances in deep learning (LeCun et al. Citation2015) have played a widespread role in CAD systems for breast cancer classification (Wu et al. Citation2023; Chougrad et al. Citation2018; Al-Antari et al. Citation2018; Muduli et al. Citation2021). Despite these advantages, deep learning models tend to be overconfident about their predictions, and classical deep learning methods do not capture uncertainty (Abdar et al. Citation2021; Abdullah et al. Citation2022; Gawlikowski et al. Citation2023).

Many situations can lead to uncertainty in deep learning models. Data uncertainty arises from noisy data, such as measurement noise; this type of uncertainty is called aleatoric or irreducible uncertainty. There is also uncertainty about which values of the model parameters will be good at predicting new data. Finally, there is structure uncertainty about the general structure of the model. The latter two can be grouped under epistemic or reducible uncertainty. Epistemic uncertainty can be reduced with more data, whereas aleatoric uncertainty cannot. Aleatoric and epistemic uncertainty can then be used together to derive the predictive uncertainty (Ghahramani Citation2015; Abdar et al. Citation2021).

Because classical deep learning models use point estimates of parameters and predictions, they are too confident about their outputs and decisions and do not take into account the uncertainty associated with the data and the model’s predictions, which raises reliability challenges (Ghahramani Citation2015; Abdullah et al. Citation2022). In other words, prediction uncertainty in CAD system results might endanger people’s lives at these crucial steps of clinical diagnostics. Therefore, leveraging uncertainty information is imperative to ensure the safe use of these novel tools (Filos et al. Citation2019; Leibig et al. Citation2017).

In a CAD system, the reliability of automated decisions matters, and quantifying such uncertainties is important for expressing our confidence in the predictions of the models (Filos et al. Citation2019; Leibig et al. Citation2017). To mitigate this risk, probabilistic approaches are used to estimate the uncertainty in the model predictions (Jospin et al. Citation2022). Bayesian deep learning (BDL) combines Bayesian probability theory with classical deep learning to provide a prediction and extract the uncertainty values. Through this process, known as inference in BDL, the uncertainty is estimated as a probability density over outcomes. However, exact inference is analytically intractable, so approximate inference is applied instead (Wang and Yeung Citation2021). Gal and Ghahramani (Citation2015) proposed a framework that uses dropout in deep neural networks as approximate Bayesian inference, which mitigates the problem of quantifying uncertainty in deep learning without added computational complexity or reduced test accuracy.

Our literature review identified research gaps related to uncertainty quantification in breast cancer classification tasks. In addition, to the best of our knowledge, very few studies have used both types of images (ultrasound and mammography) simultaneously.

Therefore, to achieve both high accuracy and reliability in breast cancer classification on ultrasound and mammography images, in this paper we employed an uncertainty-aware deep convolutional neural network model as a CAD system. Unlike most existing studies, which used only one type of image for breast cancer classification, we present a simple but efficient deep learning model that can accurately classify different classes of images, namely mammograms and ultrasound images. The main contributions of this study are as follows:

We quantified the uncertainty in our proposed deep CNN model using the MC Dropout technique as a form of Bayesian deep learning.

We proposed a simple but efficient deep learning model for the breast cancer classification task using ultrasound and mammography images.

We used pretrained architectures such as Xception, VGG16, ResNet50V2 and DenseNet121, combined with a CNN model, as feature extractors for breast cancer classification.

To demonstrate the importance of having high accuracy in the diagnosis of each class, especially the class of malignancy, we computed the performance of the proposed model for each class separately.

We showed that the proposed deep CNN model exhibits high uncertainty in misclassifications. We suggested leveraging this uncertainty information to increase the performance of the CAD system by selecting appropriate subsets of suspicious cases for further inspection.

2. Related works

Researchers have presented several deep learning‐based CAD systems using mammogram as well as ultrasound images which provide good performance in breast cancer classification (Murtaza et al. Citation2020; Hassan et al. Citation2022). Noushiba Mahbub et al. (Citation2022) proposed a modified CNN and a fuzzy analytical hierarchy process for the diagnosis of breast cancer. Ahmed Roni et al. (Citation2022) used the Xception model and a generative adversarial network to improve the algorithm’s precision for binary breast cancer classification. Al-masni et al. (Citation2018) proposed a CAD model based on YOLO (You Only Look Once) techniques that can distinguish between benign and malignant lesions. Ragab et al. (Citation2019) presented a model in which an AlexNet is fine-tuned to classify two classes. Duggento et al. (Citation2019) described a CAD system based on an ad hoc CNN architecture specialised in breast lesion classification. Chougrad et al. (Citation2018) proposed a CAD system based on deep Convolutional Neural Networks (CNNs) and a fine-tuning strategy.

Similarly, different CAD systems based on machine learning and deep learning have been proposed for breast cancer classification on ultrasound images. Moon et al. (Citation2020) proposed a model for tumour diagnosis using an image fusion method combined with different image content representations and an ensemble of different CNN architectures, including VGGNet, ResNet and DenseNet, on private and public ultrasound images. Byra (Citation2021) proposed a model that employs transfer learning with convolutional neural networks and uses deep features through scaling layers for breast mass classification in ultrasound images. Sadad et al. (Citation2020) proposed a CAD system that uses the Hilbert transform to reconstruct B-mode images from the raw data, followed by the marker-controlled watershed transformation to segment the lesion, and a hybrid feature set built from shape-based and texture features extracted from the breast lesion. In this model, the decision tree, k-nearest neighbour and ensemble decision tree model via random under-sampling with Boost (RUSBoost) are used for breast cancer classification. Mishra et al. (Citation2021) proposed a machine learning-radiomics based classification pipeline in which shape, texture and histogram-oriented gradient features are used and various machine learning models, such as random forest, gradient boosting and AdaBoost classifiers, are considered. Cao et al. (Citation2020) proposed an approach called noise filter network (NF-Net) to address the problem of noisy labels by incorporating two SoftMax layers for classification and a teacher-student module for distilling the knowledge of clean labels. Zhuang et al. (Citation2020) employed image decomposition to obtain fuzzy enhanced and bilateral filtered images, which enrich the input information of breast lesions and are presented as inputs to a pre-trained VGG16 model. Al-Dhabyani et al. (Citation2019) used a Generative Adversarial Network (GAN) for data augmentation and proposed a CNN with a transfer learning approach for breast ultrasound image classification. Acevedo and Vazquez (Citation2019) used k-means and GLCM algorithms to segment ultrasound images and a support vector machine algorithm for breast cancer classification.

Additionally, some researchers have presented CAD models which use multi-modal breast cancer datasets such as mammograms and ultrasound images. Muduli et al. (Citation2021) proposed a deep convolutional neural network model for breast cancer classification from different classes of images, namely mammograms and sonograms. Assari et al. (Citation2022b) proposed a bimodal deep residual learning model for solid breast mass classification. In another work (Assari et al. Citation2022a), these authors used a bimodal GoogLeNet-based CAD system and a two-step transfer learning strategy for solid breast mass classification by combining mammograms and the corresponding sonogram data.

Most CAD models aim for good performance results in different applications, but this is not enough. CAD models require both high accuracy and reliability in breast cancer classification, so uncertainty quantification is needed to achieve a reliable CAD model. Few researchers have worked on uncertainty quantification in medical image classification. Song et al. (Citation2021) proposed a Bayesian deep network capable of estimating uncertainty to assess oral cancer image classification. Filos et al. (Citation2019) employed Bayesian deep learning based on the VGG model for diabetic retinopathy classification. Abdar et al. (Citation2023) proposed an uncertainty-aware deep learning feature fusion model for COVID-19 detection. Thiagarajan et al. (Citation2022) proposed a technique to utilise the uncertainties provided by a Bayesian CNN for the classification of breast histopathological images.

Motivated by these shortcomings in uncertainty estimation for the breast cancer classification task, we develop a reliable uncertainty-aware deep learning-based model for breast cancer classification from different classes of images, namely mammograms and ultrasound images.

3. Materials and methods

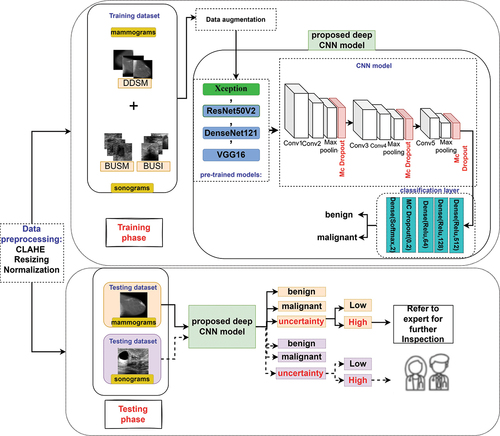

In this paper, an uncertainty-aware deep learning model is utilised for binary breast cancer classification using mammograms as well as sonograms. For clarity, a summary of this study is shown in Figure 1.

Figure 1. A general overview of the proposed uncertainty-aware deep learning-based CAD system in breast cancer classification.

3.1. Datasets

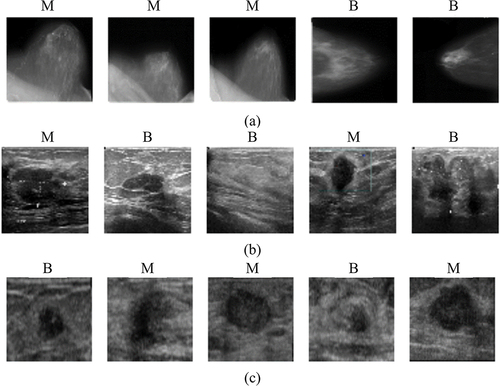

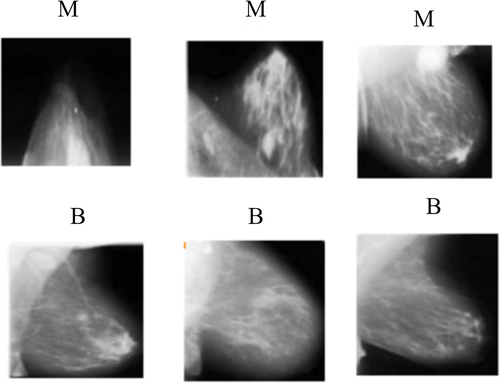

In this study, experiments were carried out to classify images as benign or malignant in both the mammogram dataset and the ultrasound datasets. For mammograms, we considered a public dataset containing mammography images with benign and malignant masses, namely DDSM (Heath et al. Citation2000; Lina and Huang Citation2020; Huang and Lina Citation2020). For sonograms, we utilised two public datasets, namely BUSM (Rodrigues Citation2017) and BUSI (Al-Dhabyani et al. Citation2020). Figure 2 shows some random image samples from the DDSM, BUSM and BUSI datasets, and the details of each dataset used in our study are tabulated in Table 1.

Figure 2. Random image samples from the mammogram and sonogram datasets considered in our study: benign and malignant images from (a) the DDSM dataset, (b) the BUSI dataset and (c) the BUSM dataset (M is malignant and B is benign).

Table 1. A description of the mammogram and sonogram datasets and the number of samples of each dataset in training and testing for the two categories, benign (B) and malignant (M).

3.2. Preprocessing

3.2.1. Histogram Equalization

First, an image preprocessing method, namely, Contrast Limited Adaptive Histogram Equalization (CLAHE) was used on the original images.

3.2.2. Resizing and Normalization

In this study, each image was resized to 224 × 224 pixels and its intensity values were linearly normalised to the interval [0, 1].
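The resizing and normalisation step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the source does not say which interpolation was used or whether scaling was min-max or a division by 255, so the nearest-neighbour resize and min-max scaling below, as well as the function names `resize_nearest` and `normalise_unit_interval`, are hypothetical choices.

```python
import numpy as np

def resize_nearest(image, size=(224, 224)):
    """Nearest-neighbour resize of a 2-D grayscale image (assumed interpolation)."""
    rows = np.arange(size[0]) * image.shape[0] // size[0]
    cols = np.arange(size[1]) * image.shape[1] // size[1]
    return image[rows[:, None], cols[None, :]]

def normalise_unit_interval(image):
    """Linearly map intensities to [0, 1] with min-max scaling (assumed variant)."""
    image = image.astype(np.float64)
    lo, hi = image.min(), image.max()
    if hi == lo:
        # A constant image carries no contrast; map it to zeros.
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

# Example: a synthetic 300 x 400 image becomes 224 x 224 with values in [0, 1].
example = normalise_unit_interval(resize_nearest(np.arange(300 * 400).reshape(300, 400)))
```

In a real pipeline an image library with proper interpolation would normally replace `resize_nearest`; the sketch only shows the shape and value range that the network input is expected to have.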

3.2.3. Data augmentation

Data augmentation can address over-fitting caused by small training datasets by applying transformations to the images (Al-Dhabyani et al. Citation2019; Shorten and Khoshgoftaar Citation2019; Oza et al. Citation2022). To overcome the over-fitting issue, we applied data augmentation to the training images, using image rotation and horizontal and vertical flipping through the Keras Augmentor API.
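As a rough illustration of rotation and flipping, the following NumPy sketch applies a random combination of these transformations to one image. It is not the authors' augmentation pipeline: the rotation angles are not given in the source, so the restriction to multiples of 90 degrees, the 0.5 flip probabilities and the function name `augment` are assumptions.

```python
import numpy as np

def augment(image, rng):
    """Randomly rotate (by a multiple of 90 degrees) and flip a square image."""
    k = int(rng.integers(0, 4))          # number of 90-degree rotations (assumed)
    out = np.rot90(image, k)
    if rng.random() < 0.5:               # horizontal flip with assumed probability 0.5
        out = np.fliplr(out)
    if rng.random() < 0.5:               # vertical flip with assumed probability 0.5
        out = np.flipud(out)
    return out

rng = np.random.default_rng(0)
augmented = augment(np.arange(16).reshape(4, 4), rng)
```

These transformations only rearrange pixels, so the label of the image (benign or malignant) is unchanged.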

3.3. Model architectures

The considered CNN model consists of 18 layers, including an input layer of size 224 × 224 pixels, convolutional layers, batch normalisation layers, pooling layers, Monte Carlo Dropout layers and fully connected layers. More details of the considered CNN model can be found in Table 6.

3.4. Learning method

The ADAM optimiser with an initial learning rate of 0.00005 and a batch size of 32 was used for training all models. We used the binary cross-entropy loss function to select the optimal weights. The hyper-parameters of the proposed CNN model are listed in Table 2.

Table 2. The value of proposed deep CNN model hyperparameters.
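For reference, the binary cross-entropy loss mentioned above can be written out directly. The sketch below is a generic NumPy version, not the framework implementation used in the study; the function name and the clipping constant `eps` are assumptions added for numerical safety.

```python
import numpy as np

def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    y = np.asarray(y_true, dtype=np.float64)
    # Clip probabilities so that log(0) never occurs (eps is an assumed constant).
    p = np.clip(np.asarray(p_pred, dtype=np.float64), eps, 1.0 - eps)
    return float(np.mean(-(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))))

# A maximally undecided prediction (0.5) costs log(2) per sample.
loss = binary_cross_entropy([1, 0], [0.5, 0.5])
```

Confident correct predictions drive the loss towards zero, while confident wrong predictions are penalised heavily, which is why this loss is a common choice for benign versus malignant classification.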

3.5. Transfer learning and fine-tuning

Because of the limited number of training samples, we used transfer learning to train the proposed model. Transfer learning is a method that reuses the features learned by a pre-trained CNN model as the starting point for a model on a new task (Chang Shin et al. Citation2016; Zhuang et al. Citation2021). By applying this method, the proposed model can achieve higher performance and lower uncertainty (Hendrycks et al. Citation2019).

To train the proposed CNN model, we applied transfer learning based on popular CNN models pre-trained on ImageNet, and we fine-tuned the pre-trained model on the studied datasets to represent the two classes, benign and malignant. In this case, we combined the architecture of the pre-trained model with the proposed CNN model and retrained the entire network on the considered datasets.

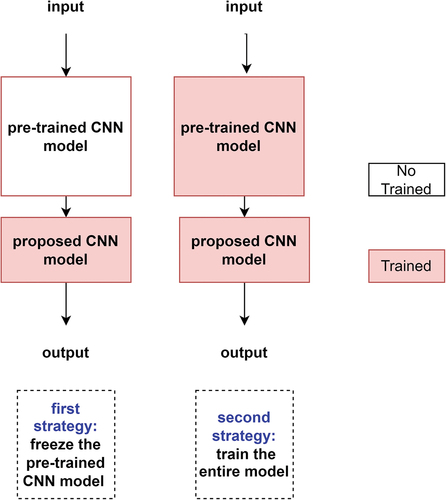

We also utilised two transfer learning and fine-tuning strategies. In the first strategy, we froze the pre-trained model in its original form and used its outputs to feed the proposed CNN model. In the second strategy, we trained the entire model: we combined the architecture of the pre-trained model with the proposed CNN model and trained it on the considered datasets. Figure 3 shows the two transfer learning and fine-tuning strategies.

3.6. Uncertainty quantification method

In this study, the Monte Carlo Dropout approach was used for uncertainty estimation. Dropout is an effective regularisation technique in deep learning models that counteracts over-fitting. During training, dropout discards some neurons of the neural network with a certain probability, called the dropout rate, at each iteration (Srivastava et al. Citation2014). This process is called a stochastic forward pass.

Gal and Ghahramani (Citation2015) developed a framework that casts dropout in deep neural networks as approximate Bayesian inference in deep Gaussian processes. In this method, dropout is active not only during training but also at test time. In other words, the method performs T stochastic forward passes at test time and collects their outputs. This is equivalent to a form of variational inference used to estimate the posterior distribution in BDL (Gal and Ghahramani Citation2016).

The main idea behind BDL is to consider a posterior distribution over the space of parameters $\omega$, given training inputs $X = \{x_1, \dots, x_N\}$ and their corresponding outputs $Y = \{y_1, \dots, y_N\}$, instead of a single value. The posterior distribution is then obtained using Bayes' theorem, as written in eq. (1).

$$p(\omega \mid X, Y) = \frac{p(Y \mid X, \omega)\, p(\omega)}{p(Y \mid X)} \tag{1}$$

Using the BDL model, the predictive probability distribution of an output $y^*$ for a given test sample $x^*$ can be calculated by integrating over the parameters, as shown in eq. (2).

$$p(y^* \mid x^*, X, Y) = \int p(y^* \mid x^*, \omega)\, p(\omega \mid X, Y)\, d\omega \tag{2}$$

However, $p(\omega \mid X, Y)$ cannot be computed analytically and should be approximated by methods such as variational inference (Graves Citation2011; Sun et al. Citation2019). So, according to the variational inference method, in order to find the predictive distribution, we can define an approximating variational distribution $q_\theta(\omega)$ instead of the true posterior distribution, as shown in eq. (3).

$$p(y^* \mid x^*, X, Y) \approx \int p(y^* \mid x^*, \omega)\, q_\theta(\omega)\, d\omega \tag{3}$$

In practice, when the dropout method is used, the predictive distribution can be estimated using Monte Carlo integration. This is equivalent to performing $T$ stochastic forward passes through the network and averaging the results, as shown in eq. (4).

$$p(y^* = c \mid x^*, X, Y) \approx \frac{1}{T} \sum_{t=1}^{T} p(y^* = c \mid x^*, \hat{\omega}_t) \tag{4}$$

where $\hat{\omega}_t \sim q_\theta(\omega)$, $q_\theta(\omega)$ is a Bernoulli variational distribution (the dropout variational distribution), $c$ ranges over all possible classes that $y^*$ can take, and $p(y^* = c \mid x^*, \hat{\omega}_t)$ is a SoftMax likelihood for the classification task, written in eq. (5).

$$p(y^* = c \mid x^*, \omega) = \frac{\exp\big(f_c^{\omega}(x^*)\big)}{\sum_{c'} \exp\big(f_{c'}^{\omega}(x^*)\big)} \tag{5}$$

where $f^{\omega}$ denotes the network function parametrized by the variables $\omega$. This means that a network already trained with dropout is a BDL model (Gal and Ghahramani Citation2015). It is an effective method to add uncertainty handling to deep learning models.
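The Monte Carlo estimate referred to as eq. (4) can be illustrated with a toy network. The sketch below is not the proposed CNN: the two-layer network, its random weights `W1` and `W2`, the dropout rate of 0.5 and all function names are hypothetical, chosen only to show T stochastic forward passes with dropout kept active at test time, followed by averaging.

```python
import numpy as np

def softmax(z):
    """Numerically stable SoftMax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def stochastic_forward(x, W1, W2, rate, rng):
    """One forward pass with a fresh Bernoulli dropout mask on the hidden layer."""
    h = np.maximum(x @ W1, 0.0)              # ReLU hidden layer (toy architecture)
    mask = rng.random(h.shape) >= rate       # keep each unit with probability 1 - rate
    h = h * mask / (1.0 - rate)              # inverted-dropout rescaling
    return softmax(h @ W2)

def mc_dropout_predict(x, W1, W2, rate=0.5, T=100, seed=0):
    """Return all T stochastic class-probability outputs and their average."""
    rng = np.random.default_rng(seed)
    passes = np.stack([stochastic_forward(x, W1, W2, rate, rng) for _ in range(T)])
    return passes, passes.mean(axis=0)       # shapes (T, N, C) and (N, C)

rng = np.random.default_rng(1)
x = rng.normal(size=(3, 8))                  # three hypothetical feature vectors
W1, W2 = rng.normal(size=(8, 16)), rng.normal(size=(16, 2))
passes, mean_prob = mc_dropout_predict(x, W1, W2, T=50)
```

The array `passes` holds one predictive distribution per stochastic pass; its spread across the first axis is what the uncertainty metrics in the next subsection summarise.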

Finally, we can use three approaches to evaluate the uncertainty within breast cancer classification task:

Predictive entropy

Mutual information

Predictive variance and standard deviation of the model

The details of each approach are given in the following subsection on uncertainty quantification metrics.

3.7. Uncertainty quantification metrics

We can measure three metrics to evaluate uncertainty within the classification task: (i) predictive entropy, (ii) mutual information and (iii) the predictive variance of the model.

3.7.1. Predictive entropy

We quantified the uncertainty of our binary classification predictions by predictive entropy (Shannon Citation1984; Gal Citation2016). Predictive entropy is the entropy of the predictive distribution $p(y^* \mid x^*, X, Y)$ at a test point $x^*$, which captures the average amount of information in the predictive distribution. The predictive entropy is defined in eq. (6):

$$\mathbb{H}[y^* \mid x^*, X, Y] = -\sum_{c} p(y^* = c \mid x^*, X, Y)\, \log p(y^* = c \mid x^*, X, Y) \tag{6}$$

where $p(y^* = c \mid x^*, X, Y)$ indicates the MC dropout approximated predictive distribution obtained by $T$ stochastic forward passes through the model, defined in eq. (4). Because the predictive entropy captures both epistemic and aleatoric uncertainty, this quantity is high when either the aleatoric or the epistemic uncertainty is high.
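A minimal sketch of the predictive entropy computation follows, assuming the T stochastic outputs for one test image are stored as an array of shape (T, C). The function name and the small constant `eps` that guards against log(0) are assumptions.

```python
import numpy as np

def predictive_entropy(passes, eps=1e-12):
    """Entropy of the MC-averaged predictive distribution.

    passes: array of shape (T, C) or (T, N, C) with class probabilities
    from T stochastic forward passes.
    """
    mean = np.asarray(passes, dtype=np.float64).mean(axis=0)   # average over passes
    return -np.sum(mean * np.log(mean + eps), axis=-1)

# Undecided passes give the maximum entropy for two classes, log(2).
undecided = predictive_entropy(np.full((10, 2), 0.5))
```

For a binary problem the value lies between 0 (all probability on one class) and log(2), about 0.693 (probability split evenly).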

3.7.2. Mutual information

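The mutual information (Shannon Citation1984; Gal Citation2016) between the posterior over the model parameters $\omega$ and the model output $y^*$ on input $x^*$ offers a different measure of uncertainty, as shown in eq. (7).

$$\mathbb{I}[y^*, \omega \mid x^*, X, Y] = \mathbb{H}[y^* \mid x^*, X, Y] - \mathbb{E}_{p(\omega \mid X, Y)}\big[\mathbb{H}[y^* \mid x^*, \omega]\big] \tag{7}$$

where $\mathbb{H}[y^* \mid x^*, X, Y]$ is the corresponding predictive entropy. The mutual information can be approximated in our setting in a similar way to the predictive entropy approximation, as shown in eq. (8):

$$\mathbb{I}[y^*, \omega \mid x^*, X, Y] \approx -\sum_{c} p(y^* = c \mid x^*, X, Y)\, \log p(y^* = c \mid x^*, X, Y) + \mathbb{E}_{q_\theta(\omega)}\Big[\sum_{c} p(y^* = c \mid x^*, \omega)\, \log p(y^* = c \mid x^*, \omega)\Big] \tag{8}$$

The MC dropout approximated predictive distribution is defined in eq. (4). So, using the MC approximation we have:

$$\mathbb{I}[y^*, \omega \mid x^*, X, Y] \approx -\sum_{c} \Big(\frac{1}{T}\sum_{t=1}^{T} p(y^* = c \mid x^*, \hat{\omega}_t)\Big) \log \Big(\frac{1}{T}\sum_{t=1}^{T} p(y^* = c \mid x^*, \hat{\omega}_t)\Big) + \frac{1}{T}\sum_{c}\sum_{t=1}^{T} p(y^* = c \mid x^*, \hat{\omega}_t)\, \log p(y^* = c \mid x^*, \hat{\omega}_t) \tag{9}$$

The mutual information captures only epistemic uncertainty, so this quantity is high when the epistemic uncertainty is high.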
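The MC approximation of the mutual information can be sketched as the predictive entropy minus the average entropy of the individual stochastic passes. As before, the (T, C) array layout, the function name and `eps` are assumptions made for illustration.

```python
import numpy as np

def mutual_information(passes, eps=1e-12):
    """MC estimate of the mutual information between prediction and parameters.

    passes: array of shape (T, C) or (T, N, C) with class probabilities
    from T stochastic forward passes.
    """
    p = np.asarray(passes, dtype=np.float64)
    mean = p.mean(axis=0)
    entropy_of_mean = -np.sum(mean * np.log(mean + eps), axis=-1)
    # Average, over the T passes, of the entropy of each pass.
    mean_of_entropy = -np.mean(np.sum(p * np.log(p + eps), axis=-1), axis=0)
    return entropy_of_mean - mean_of_entropy

# Passes that disagree completely: each is confident, but their average is not.
disagreeing = mutual_information(np.array([[1.0, 0.0], [0.0, 1.0]]))
```

The two toy cases mark the extremes: identical passes give a value near zero even if each pass is undecided (purely aleatoric), whereas confident but contradictory passes give a value near log(2) (purely epistemic).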

3.7.3. Predictive variance and standard deviation

We can obtain the approximated predictive distribution using the Monte Carlo dropout approach, and therefore take the variance of this distribution as the associated uncertainty. The variance of the predictive distribution can be calculated by eq. (10).

$$\mathrm{Var}[y^* = c \mid x^*] \approx \frac{1}{T}\sum_{t=1}^{T} \big(p(y^* = c \mid x^*, \hat{\omega}_t) - \bar{p}_c\big)^2, \qquad \bar{p}_c = \frac{1}{T}\sum_{t=1}^{T} p(y^* = c \mid x^*, \hat{\omega}_t) \tag{10}$$

The standard deviation is the square root of the variance in eq. (10).
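The predictive variance and standard deviation can be sketched in the same way. This assumes the population form of the variance (division by T) over the stochastic passes; the source does not state whether T or T - 1 was used, and the function name is hypothetical.

```python
import numpy as np

def predictive_variance(passes):
    """Per-class variance and standard deviation across T stochastic passes.

    passes: array of shape (T, C) or (T, N, C) with class probabilities.
    """
    p = np.asarray(passes, dtype=np.float64)
    mean = p.mean(axis=0)
    variance = np.mean((p - mean) ** 2, axis=0)   # population variance (assumed)
    return variance, np.sqrt(variance)

# Two passes giving malignant probabilities 0.2 and 0.4 for one image.
variance, std = predictive_variance(np.array([[0.8, 0.2], [0.6, 0.4]]))
```

In the binary case both classes have the same variance, because the two class probabilities in each pass sum to one.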

4. Results

4.1. Evaluation metrics

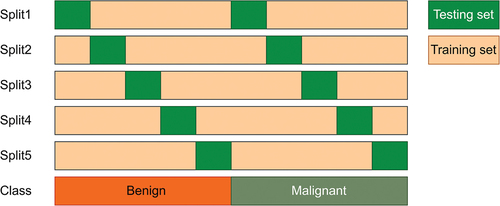

In this work, a variation of five-fold cross-validation (CV), called the stratified five-fold method, was used to evaluate all of the deep CNN models. It divides each original dataset into five folds so that each fold contains approximately the same percentage of samples of each target class. The detailed stratified five-fold cross-validation process is shown in Figure 4, and the dataset divisions are tabulated in Table 1.
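The idea of stratification can be sketched as follows: the indices of each class are shuffled and dealt out evenly over the folds, so that every fold preserves the class proportions. This is an illustrative NumPy version with a hypothetical function name and seed, not the tooling used in the study.

```python
import numpy as np

def stratified_folds(labels, k=5, seed=0):
    """Split sample indices into k test folds with matching class proportions."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        # Shuffle the indices of this class, then share them out over the folds.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        for i, part in enumerate(np.array_split(idx, k)):
            folds[i].extend(part.tolist())
    return [np.array(sorted(f)) for f in folds]

# Toy labels: 50 benign (0) and 25 malignant (1) samples.
labels = np.array([0] * 50 + [1] * 25)
folds = stratified_folds(labels, k=5)
```

In each of the five rounds, one fold serves as the test set and the remaining four form the training set.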

4.2. Performance metrics

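For a binary classification problem, each prediction falls into one of four categories: True Positive (TP), False Negative (FN), False Positive (FP) and True Negative (TN).

To evaluate the performance of the proposed model in predicting the type of breast cancer, recall (sensitivity), precision, accuracy and F1 score were used. The formulas are given in eqs. (11–14).

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{11}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{12}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{13}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{14}$$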
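These four metrics follow directly from the confusion-matrix counts. The sketch below uses the standard definitions; the function name and the example counts are hypothetical, and it assumes that none of the denominators is zero.

```python
def classification_metrics(tp, fn, fp, tn):
    """Recall, precision, accuracy and F1 score from confusion-matrix counts."""
    recall = tp / (tp + fn)                       # sensitivity
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision,
            "accuracy": accuracy, "f1": f1}

# Hypothetical counts: 8 TP, 2 FN, 1 FP, 9 TN.
metrics = classification_metrics(tp=8, fn=2, fp=1, tn=9)
```

Computing the metrics once with malignant as the positive class and once with benign as the positive class gives the per-class view used later in the results.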

4.3. The proposed deep CNN model results

In our first experiment, to determine the best model for constructing the proposed deep CNN model for mammograms and ultrasound images, we evaluated four different pre-trained models combined with the proposed CNN model: Xception (Chollet Citation2016), VGG16 (Simonyan and Zisserman Citation2015), ResNet50V2 (He et al. Citation2016) and DenseNet121 (Huang et al. Citation2018). The models were compared in terms of accuracy and the number of trainable parameters.

The results of five-fold cross-validation are presented in Tables 3–5. They show that, for both mammograms and ultrasound images, the pre-trained Xception combined with the CNN model performs better than the other CNN models, with average classification accuracies of 99.95%, 92.11% and 95.60% on the DDSM, BUSI and BUSM datasets, respectively. It should be noted that the pre-trained Xception combined with the CNN model had a performance comparable to the single Xception model, while having fewer trainable parameters. We therefore selected the pre-trained Xception combined with the CNN model for constructing the proposed deep CNN model. Since we also considered the impact of the uncertainty quantification method on the performance of the proposed model, our experiments were conducted both with and without uncertainty quantification.

Table 3. Classification performance (%) of the CNN and various pre-trained CNN models on mammograms (DDSM dataset). Results obtained without considering uncertainty.

Table 4. Classification performance (%) of the CNN and various pre-trained CNN models on ultrasound images (BUSI dataset). Results obtained without considering uncertainty.

Table 5. Classification performance (%) of the CNN and various pre-trained CNN models on ultrasound images (BUSM dataset). Results obtained without considering uncertainty.

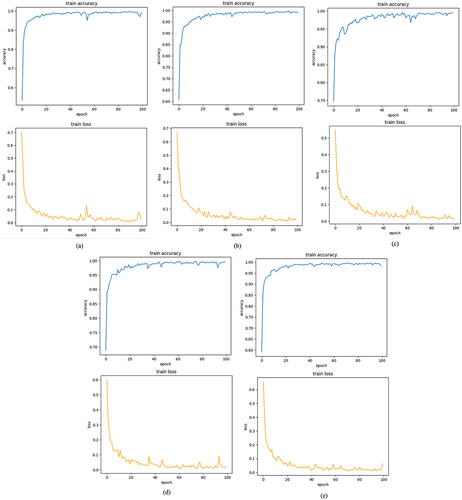

The summary outline of the considered model is listed in Table 6. Figure 5 shows the accuracy and loss curves over epochs of the models during the training phase.

Figure 5. Training accuracy and loss of the proposed model versus number of epochs for (a) fold 1, (b) fold 2, (c) fold 3, (d) fold 4 and (e) fold 5.

Table 6. The detailed configuration of the proposed CNN architecture.

4.3.1. Breast cancer classification without considering uncertainty

First, we investigated the other performance metrics of the proposed deep CNN model without considering uncertainty. The results for the sonogram and mammogram datasets are presented in Table 7.

Table 7. Average classification results of the proposed deep CNN model in breast cancer classification in (%) for BUSM, BUSI and DDSM datasets on 5 folds: results obtained without considering uncertainty quantification.

4.3.2. Breast cancer classification considering uncertainty

The results obtained from the proposed deep CNN model without considering uncertainty show good classification performance. This is not sufficient, however: such deep learning models should be accompanied by uncertainty estimates. To estimate the uncertainty of the predictions of our deep CNN model, we used the MC dropout uncertainty quantification method.

The results obtained by the proposed deep CNN model when considering uncertainty are presented in Table 8 for the mammogram and sonogram datasets. To demonstrate the importance of having high accuracy in the diagnosis of each class, especially the malignant class, we computed the performance of the proposed model for each class separately.

Table 8. Classification performance in (%) of the proposed deep CNN model in breast cancer classification using BUSM, BUSI and DDSM datasets: results obtained with considering MC dropout uncertainty quantification (the performance of each class is shown, separately).

As shown in Table 9, the proposed deep CNN model considering uncertainty provides an accuracy of 99.95%, 91.34% and 98.40% on the DDSM, BUSI and BUSM datasets, respectively.

Table 9. Average classification results of the proposed deep CNN model in breast cancer classification in (%) for BUSM, BUSI and DDSM datasets on 5 folds: results obtained with considering MC dropout uncertainty quantification.

The results obtained using the proposed deep CNN model with and without uncertainty quantification show that the model with uncertainty quantification performed slightly better than the model without it on the DDSM and BUSM datasets. In general, the results show good classification performance for the Bayesian inference and indicate that the proposed deep CNN model did not sacrifice accuracy while also estimating the uncertainty values.

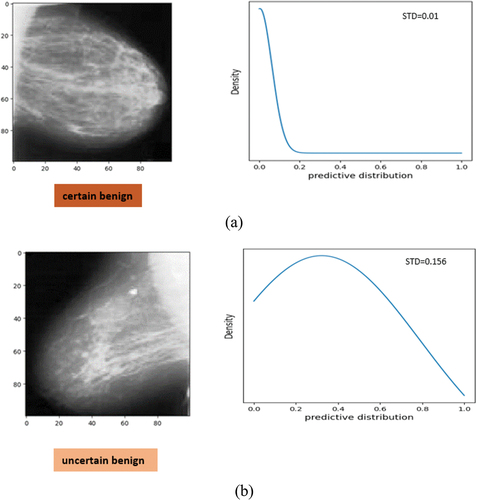

Depending on the image, the proposed deep CNN model can be certain (Figure 6(a)) or uncertain (Figure 6(b)) about its predictions. Figure 6 presents examples of breast cancer images and the corresponding approximated predictive probability distributions produced by the Bayesian inference on mammograms. Some images have high and others low uncertainty in prediction. In Figure 6, the uncertainty is indicated by the standard deviation of the predictive distribution, calculated as the square root of the variance in eq. (10). The mammogram in Figure 6(a) is confidently classified as benign by the proposed deep CNN model, since the uncertainty value (standard deviation) is 0.01. In contrast, Figure 6(b) shows a case in which the model is uncertain: the predicted label is benign, but the predictive distribution is wider and the uncertainty value (standard deviation) is 0.156.

Figure 6. Examples of breast cancer images and the corresponding approximate predictive probability distributions produced by the Bayesian inference. (a) Image from DDSM with low uncertainty in prediction, classified as certain benign, and (b) image from DDSM with high uncertainty in prediction, classified as benign. This case is predicted benign but slightly uncertain. The corresponding standard deviation (STD) is shown as the uncertainty.

4.3.3. Relationship between misclassification cases and their uncertainty values

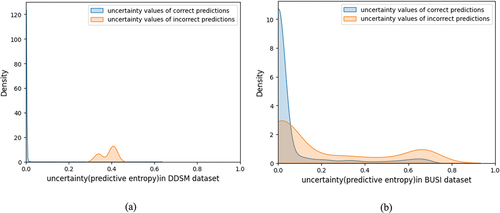

In this part, we investigate the relationship between uncertainty and incorrect predictions, with the aim of increasing the performance of the CAD system by selecting appropriate subsets for further inspection. This mimics the clinical workflow, in which clinicians usually obtain more information when their assessments are uncertain (Leibig et al. Citation2017).

Some studies have shown explicitly that there is a relationship between uncertainty and the misclassification rate of a classifier, and that the misclassification rate increases with growing uncertainty in some cases (Leibig et al. Citation2017; Zhou et al. Citation2020; Song et al. Citation2021). We found that misclassified cases have higher uncertainty values than correct predictions in mammograms as well as ultrasound images. The uncertainty values for DDSM and BUSI test images were plotted and grouped by correct and incorrect predictions (see Figure 7). Figure 7 shows that incorrect cases have higher uncertainty. Because high uncertainty is indicative of incorrect predictions, this information could be used to identify cases for further inspection and thereby increase the performance of the breast cancer classification CAD system.

Figure 7. Distribution of uncertainty value for all test data grouped by correct and incorrect model predictions from (a) mammograms (DDSM) and (b) ultrasound images (BUSI).

For example, Figure 8 presents some mammogram images with high uncertainty (≥0.1) in prediction, which can be selected as appropriate subsets for further inspection.

Figure 8. Examples of mammogram images with high prediction uncertainty (≥0.1), which can be selected as an appropriate subset for further inspection. Predictive entropy is used as the model uncertainty. (M and B denote the predicted labels malignant and benign, respectively.)
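A selection step of the kind used for Figure 8 can be sketched as below: predictive entropy is computed from the class probabilities averaged over the Monte Carlo passes, and cases at or above the 0.1 threshold are flagged. The 0.1 threshold comes from the text; the natural logarithm, the function names, the case identifiers, and the probabilities are assumptions for illustration.

```python
import math


def predictive_entropy(mean_probs, eps=1e-12):
    """Entropy of the MC-averaged class probabilities (natural log assumed)."""
    return -sum(p * math.log(p + eps) for p in mean_probs)


def select_for_inspection(cases, threshold=0.1):
    """Return (case_id, entropy) for cases whose entropy is >= threshold.

    cases: iterable of (case_id, mean_probs), where mean_probs is the list of
    class probabilities averaged over the stochastic forward passes.
    """
    flagged = []
    for case_id, mean_probs in cases:
        entropy = predictive_entropy(mean_probs)
        if entropy >= threshold:
            flagged.append((case_id, entropy))
    return flagged


# Hypothetical cases as [P(benign), P(malignant)], not data from the paper.
cases = [
    ("case_a", [0.99, 0.01]),
    ("case_b", [0.90, 0.10]),
    ("case_c", [0.50, 0.50]),
]

for case_id, entropy in select_for_inspection(cases):
    print(f"{case_id}: entropy={entropy:.3f} -> flag for further inspection")
```

With a natural logarithm the entropy of a two-class prediction ranges from 0 to about 0.693, so a 0.1 threshold flags any case whose averaged prediction is noticeably away from 0 or 1.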

5. Discussion

Breast cancer is the most common malignant tumour in women. Medical imaging is one of the most effective tools for diagnosing breast cancer. Thus, developing computer-aided diagnosis systems that can classify breast cancer images accurately and reliably is an immediate priority. Deep learning algorithms perform well in analysing medical images. Despite these advantages, most deep learning-based approaches cannot quantify the uncertainty of their predictions. Uncertainty in the predicted results might endanger people's lives at these crucial steps of clinical diagnostics. Therefore, leveraging uncertainty information is imperative to ensure the safe and reliable use of these novel tools. Accordingly, to obtain a CAD system that is both accurate and reliable, we provide an uncertainty-aware deep learning-based CAD system.

In this study, we proposed an uncertainty-aware deep learning-based CAD system for breast cancer classification using ultrasound and mammography images.

Unlike most existing studies, which use only one type of image for breast cancer classification, we present a simple but efficient deep learning model that can accurately classify different types of images, namely mammograms and ultrasound.

In this work, four pre-trained deep learning models, each combined with the CNN model, were proposed for breast cancer classification. The models were compared according to accuracy and number of trainable parameters. The obtained results show that Xception combined with the CNN model gave the best classification performance on average across all datasets, so this model was selected as the proposed deep CNN model.

We also estimated uncertainty values for each case using the Monte Carlo Dropout method as Bayesian inference in the proposed deep CNN model. The results showed that the proposed deep CNN model could distinguish benign and malignant cases with high accuracy and reliability. They also indicate that the proposed deep CNN model, used as a BNN, performs slightly better than the same model without uncertainty quantification, so it does not sacrifice accuracy while estimating uncertainty values. Table 10 suggests that the proposed model not only quantifies uncertainty but also performs comparably to some competent CAD models applied to the same datasets, for both sonograms and mammograms.

Table 10. Comparison of the uncertainty-aware deep learning model with other existing methods tested on the used datasets for breast cancer classification.

Finally, since we found that the proposed deep CNN model shows high uncertainty in misclassifications, we used the uncertainty values to find appropriate subsets for further inspection. This mimics the clinical workflow, in which clinicians usually gather more information when their diagnosis is uncertain.
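The effect of such a referral step on performance can be sketched as follows: cases whose uncertainty reaches a threshold are set aside for further inspection, and accuracy is computed on the remaining cases only. This is a minimal sketch in the spirit of the referral analysis of Leibig et al. (2017), not the paper's evaluation code; the function name and the toy data are hypothetical.

```python
def accuracy_after_referral(y_true, y_pred, uncertainty, threshold):
    """Accuracy on retained cases and the fraction of cases referred.

    Cases with uncertainty >= threshold are referred for further inspection
    and excluded from the accuracy computation.
    """
    if not y_true:
        raise ValueError("need at least one case")
    kept = [
        (truth, pred)
        for truth, pred, unc in zip(y_true, y_pred, uncertainty)
        if unc < threshold
    ]
    referred_fraction = 1 - len(kept) / len(y_true)
    if not kept:
        return None, referred_fraction
    accuracy = sum(truth == pred for truth, pred in kept) / len(kept)
    return accuracy, referred_fraction


# Hypothetical outputs (0 = benign, 1 = malignant), not data from the paper.
y_true = [0, 1, 1, 0, 1, 0, 1, 0]
y_pred = [0, 1, 0, 0, 1, 1, 1, 0]
uncertainty = [0.01, 0.02, 0.30, 0.03, 0.05, 0.25, 0.04, 0.12]

baseline, _ = accuracy_after_referral(y_true, y_pred, uncertainty, float("inf"))
retained, referred = accuracy_after_referral(y_true, y_pred, uncertainty, 0.1)
print(f"accuracy without referral: {baseline:.3f}")
print(f"accuracy after referral:   {retained:.3f} ({referred:.1%} referred)")
```

In this toy example the misclassified cases happen to carry the highest uncertainty, so referring them raises the accuracy on the retained cases; on real data the gain depends on how well uncertainty separates correct from incorrect predictions.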

5.1. Major advantages and disadvantages

In summary, the proposed method has both advantages and disadvantages. Its main salient features are:

- Superior performance for breast cancer classification using ultrasound and mammography images.

- Prediction of the uncertainty.

- Analysis of the results for each class of the datasets to ensure transparency in the diagnosis of each class.

The limitations and disadvantages of the proposed method are:

- Uncertain samples could not be returned to medical experts for investigation because no medical team was available.

- The model performs better on large medical datasets than on smaller ones.

- The performance of the model is lower on unbalanced datasets.

6. Conclusion

Uncertainty in the predicted results from a Computer-Aided Diagnosis (CAD) system might endanger people's lives at these crucial steps of breast cancer classification. Therefore, quantifying the uncertainty will enable the development of safe and reliable models for breast cancer classification.

In this study, we proposed an uncertainty-aware deep learning-based CAD system for breast cancer classification. Unlike most existing studies, which use only one type of image for breast cancer classification, we present a simple but efficient deep learning model that can accurately classify different types of images, namely mammograms and ultrasound.

The proposed deep CNN model achieved 99.95%, 91.34%, and 98.40% accuracy on the DDSM, BUSI and BUSM datasets, respectively. The results therefore indicate that the proposed deep CNN model does not sacrifice accuracy while estimating uncertainty values.

The limitations of the proposed uncertainty-aware deep learning-based CAD system will be addressed in our future studies. In the future, we intend to: (i) improve the generalisation power of the model using various datasets, (ii) develop the proposed model further using multi-modal data, and (iii) have the uncertain samples investigated by medical experts.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Mohaddeseh Chegini

Mohaddeseh Chegini is a PhD student at Tarbiat Modares University, supervised by Dr. Ali Mahlooji Far. She received her Bachelor's degree from the Islamic Azad University and completed her MS degree in biomedical engineering at Iran University of Science and Technology.

Ali Mahlooji Far

Dr. Ali Mahlooji Far is an Associate Professor of biomedical engineering at Tarbiat Modares University. His research focuses on medical image processing.

References

- Abdar M, Pourpanah F, Hussain S, Rezazadegan D, Liu L, Ghavamzadeh M, Fieguth P, Cao X, Khosravi A, Acharya UR, et al. 2021. A review of uncertainty quantification in deep learning: Techniques, applications and challenges. Inform Fusion. 76:243–17. doi: 10.1016/j.inffus.2021.05.008.

- Abdar M, Salari S, Qahremani S, Lam HK, Karray F, Hussain S, Khosravi A, Acharya UR, Makarenkov V, Nahavandi S. 2023. UncertaintyFuseNet: robust uncertainty-aware hierarchical feature fusion model with ensemble monte carlo dropout for COVID-19 detection. Inform Fusion. 90:364–381. doi: 10.1016/j.inffus.2022.09.023.

- Abdullah AA, Hassan MM, Mustafa YT. 2022. A review on bayesian deep learning in healthcare: applications and challenges. IEEE Access. 10:36538–36562. doi: 10.1109/ACCESS.2022.3163384.

- Acevedo P, Vazquez M. 2019. Classification of tumors in breast echography using a SVM algorithm, 2019 International Conference on Computational Science and Computational Intelligence (CSCI). 686–689. doi: 10.1109/CSCI49370.2019.00128

- Ahmed Roni N, Hossain S, Hossain MB, Alam Efat I, Abu Yousuf M. 2022. Deep convolutional comparison architecture for breast cancer binary classification, International Conference on Machine Intelligence and Emerging Technologies, 490:187–200. doi: 10.1007/978-3-031-34619-4_16.

- Al-Antari MA, Al-Masni MA, Choib MT, Han SM, Kim TS. 2018. A fully integrated computer-aided diagnosis system for digital X-ray mammograms via deep learning detection, segmentation, and classification. Int J Med Informat. 117:44–54. doi: 10.1016/j.ijmedinf.2018.06.003.

- Al-Dhabyani W, Fahmy A, Gomaa M, Khaled H. 2019. Deep learning approaches for data augmentation and classification of breast masses using ultrasound images. IJACSA. 10(5):618–627. doi: 10.14569/IJACSA.2019.0100579.

- Al-Dhabyani W, Gomaa M, Khaled H, Fahmy A. 2020. Dataset of breast ultrasound images. Data Brief. 28:104863. doi: 10.1016/j.dib.2019.104863.

- Al-Masni MA, Al-Antaria MA, Park JM, Gi G, Kim TY, Rivera P, Valarezo E, Choi MT, Han SM, Kim TS. 2018. Simultaneous detection and classification of breast masses in digital mammograms via a deep learning YOLO-based CAD system. Comput Meth Programs Biomed. 157:85–94. doi: 10.1016/j.cmpb.2018.01.017.

- Assari Z, Mahloojifar A, Ahmadinejad N. 2022a. A bimodal BI-RADS-guided GoogLeNet-based CAD system for solid breast masses discrimination using transfer learning. Comput Biol Med. 142:105160. doi: 10.1016/j.compbiomed.2021.105160.

- Assari Z, Mahloojifar A, Ahmadinejad N. 2022b. Discrimination of benign and malignant solid breast masses using deep residual learning-based bimodal computer-aided diagnosis system. Biomed Signal Process Contr. 73:103453. doi: 10.1016/j.bspc.2021.103453.

- Ayana G, Choe S. 2022. BUViTNet: breast ultrasound detection via vision transformers. Diagnostics. 12(11):2654. doi: 10.3390/diagnostics12112654.

- Balkenende L, Teuwen J, Mann RM. 2022. Application of deep learning in breast cancer imaging. Semin Nucl Med. 52(5):584–596. doi: 10.1053/j.semnuclmed.2022.02.003.

- Ben Othman S, Almalki FA, Chakraborty C, Sakli H. 2022. Privacy-preserving aware data aggregation for IoT-based healthcare with green computing technologies. Comput Electr Eng. 101:108025. doi: 10.1016/j.compeleceng.2022.108025.

- Bhuyan HK, Chakraborty C. 2022. Explainable machine learning for data extraction across computational social system. IEEE Transactions on Computational Social Systems. 1–15. doi: 10.1109/TCSS.2022.3164993.

- Byra M. 2021. Breast mass classification with transfer learning based on scaling of deep representations. Biomed Signal Process Contr. 69:102828. doi: 10.1016/j.bspc.2021.102828.

- Cao Z, Yang G, Chen Q, Chen X, Lv F. 2020. Breast tumor classification through learning from noisy labeled ultrasound images. Med Phys. 47(3):1048–1057. doi: 10.1002/mp.13966.

- Chang Shin H, Roth HR, Gao M, Lu L, Xu Z, Nogues I, Yao J, Mollura D, Summers RM. 2016. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging. 35(5):1285–1298. doi: 10.1109/TMI.2016.2528162.

- Chollet F. 2016. Xception: deep learning with depthwise separable convolutions. arxiv preprint arXiv:1610.02357. doi: 10.48550/arXiv.1610.02357.

- Chougrad H, Zouaki H, Alheyane O. 2018. Deep convolutional neural networks for breast cancer screening. Comput Meth Programs Biomed. 157:19–30. doi: 10.1016/j.cmpb.2018.01.011.

- Ding S, Wang H, Lu H, Nappi M, Wan S. 2023. Two path gland segmentation algorithm of colon pathological image based on local semantic guidance. IEEE J Biomed Health Informat. 27(4):1701–1708. doi: 10.1109/JBHI.2022.3207874.

- Duggento A, Aiello M, Cavaliere C, Cascella GL, Cascella D, Conte G, Guerrisi M, Toschi N. 2019. An ad hoc random initialization deep neural network architecture for discriminating malignant breast cancer lesions in mammographic images. Contrast Media Mol Imaging. 2019:1–9. doi: 10.1155/2019/5982834.

- Ferdous Aurna N, Abu Yousuf M, Abu Taher K, Azad AKM, Moni M. 2022. A classification of MRI brain tumor based on two stage feature level ensemble of deep CNN models. Comput Biol Med. 146:105539. doi: 10.1016/j.compbiomed.2022.105539.

- Filos A, Farquhar S, Gomez AN, Rudner TGJ, Kenton Z, Smith L, Alizadeh M, de Kroon A, Gal Y. 2019. A systematic comparison of bayesian deep learning robustness in diabetic retinopathy tasks. arXiv preprint arXiv:1912.10481. doi: 10.48550/arXiv.1912.10481.

- Gal Y. 2016. Uncertainty in deep learning [PhD thesis]. University of Cambridge. http://mlg.eng.cam.ac.uk/yarin/thesis/thesis.pdf

- Gal Y, Ghahramani Z. 2015. Dropout as a bayesian approximation: representing model uncertainty in deep learning. arXiv preprint arXiv:1506.02142. doi: 10.48550/arXiv.1506.02142.

- Gal Y, Ghahramani Z. 2016. Bayesian convolutional neural networks with bernoulli approximate variational inference. arXiv preprint arXiv:1506.02158. doi: 10.48550/arXiv.1506.02158.

- Gawlikowski J, Tassi CRN, Ali M, Lee J, Humt M, Feng J, Kruspe A, Triebel R, Jung P, Roscher R, et al. 2023. A survey of uncertainty in deep neural networks. Artif Intell Rev. 56(S1):1513–1589. doi: 10.48550/arXiv.2107.03342.

- Geisel J, Raghu M, Hooley R. 2018. The role of ultrasound in breast cancer screening: the case for and against ultrasound. Semin Ultrasound CT MR. 39(1):25–34. doi: 10.1053/j.sult.2017.09.006.

- Ghahramani Z. 2015. Probabilistic machine learning and artificial intelligence. Nat. 521(7553):452–459. doi: 10.1038/nature14541.

- Graves A. 2011. Practical variational inference for neural networks. Proceedings of the 24th International Conference on Neural Information Processing Systems. p. 2348–2356. https://proceedings.neurips.cc/paper/2011/file/7eb3c8be3d411e8ebfab08eba5f49632-Paper.pdf

- Gupta AK, Chakraborty C, Gupta B. 2019. Smart medical data sensing and IoT systems design in healthcare. IGI. 201–2023. doi: 10.4018/978-1-7998-0261-7.ch009.

- Hassan NM, Hamad S, Mahar K. 2022. Mammogram breast cancer CAD systems for mass detection and classification: a review. Multimed Tools Appl. 81(14):20043–20075. doi: 10.1007/s11042-022-12332-1.

- Heath M, Bowyer K, Kopans D, Moore R, Kegelmeyer WP. 2000. The digital database for screening mammography. Proceedings of the 5th International Workshop on Digital Mammography, Medical Physics Publishing; Madison, WI, USA. Vol. 91, p. 212–218.

- Hendrycks D, Lee K, Mazeika M. 2019. Using pre-training can improve model robustness and uncertainty. arXiv preprint arXiv:1901.09960. doi: 10.48550/arXiv.1901.09960.

- He K, Zhang X, Ren S, Sun J. 2016. Identity mappings in deep residual networks. arXiv preprint arXiv:1603.05027v3. doi: 10.48550/arXiv.1603.05027.

- Huang ML, Lina TY. 2020. Dataset of breast mammography images with masses. Data Brief. 31:105928. doi: 10.1016/j.dib.2020.105928.

- Huang G, Liu Z, van der Maaten L, Weinberger KQ. 2018. Densely Connected Convolutional Networks. arXiv preprint arXiv:1608.06993. doi: 10.48550/arXiv.1608.06993.

- J Gaona Y, Álvarez MJR, Lakshminarayanan V. 2020. Deep-learning-based computer-aided systems for breast cancer imaging: a critical review, Appl. Sci. 10(22):8298. doi: 10.3390/app10228298.

- Jospin LV, Buntine W, Boussaid F, Laga H, Bennamoun M. 2022. Hands-on bayesian neural networks - a tutorial for deep learning users. IEEE Computation Intelligence Magazine. 17(2):29–48. doi: 10.1109/MCI.2022.3155327.

- Kumar Pattanaik R, Mishra S, Siddique M, Gopikrishna T, Satapathy S. 2022. Breast cancer classification from mammogram images using extreme learning machine-based DenseNet121 model. J Of Sensors. 2731364. doi: 10.1155/2022/2731364.

- LeCun Y, Bengio Y, Hinton G. 2015. Deep learning. Nat. 521(7553):436–444. doi: 10.1038/nature14539.

- Leibig C, Allken V, Ayhan MS, Berens P, Wahl S. 2017. Leveraging uncertainty information from deep neural networks for disease detection. Scientific Reports. 7(1):17816. doi: 10.1038/s41598-017-17876-z.

- Li W, Gu C, Chen J, Ma C, Zhang X, Chen B, Wan S. 2023. DLS-GAN: generative adversarial nets for defect location sensitive data augmentation. IEEE Transactions on Automation Science and Engineering. 1–17. doi: 10.1109/TASE.2023.3309629.

- Lina TY, Huang ML. 2020. Dataset of breast mammography images with masses. Mendeley Data. 2. doi: 10.17632/ywsbh3ndr8.2.

- Maqsood S, Damaševicius R, Maskeliunas R. 2022. TTCNN: a breast cancer detection and classification towards computer-aided diagnosis using digital mammography in early stages, Appl. Sci. 12(7):3273. doi: 10.3390/app12073273.

- Mi W, Li J, Guo Y, Ren X, Liang Z, Zhang T, Zou H. 2021. Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag Res. 13:4605–4617. doi: 10.2147/CMAR.S312608.

- Mishra AK, Roy P, Bandyopadhyay S, Das SK. 2021. Breast ultrasound tumour classification: a machine learning—radiomics based approach. Expert Syst. 38:e12713. doi: 10.1111/exsy.12713.

- Moon WK, Lee YW, Ke HH, Lee SH, Huang CS, Chang RF. 2020. Computer-aided diagnosis of breast ultrasound images using ensemble learning from convolutional neural networks. Comput Meth Programs Biomed. 190:105361. doi: 10.1016/j.cmpb.2020.105361.

- Muduli D, Dash R, Majhi B. 2021. Automated diagnosis of breast cancer using multi-modal datasets: A deep convolution neural network based approach. Biomed Signal Process Contr. 71:102825. Part B. doi: 10.1016/j.bspc.2021.102825.

- Murtaza G, Shuib L, Abdul Wahab AW, Mujtaba G, Nweke HF, Al-Garadi M, Zulfiqar F, Raza G, Aniza Azmi N. 2020. Deep learning-based breast cancer classification through medical imaging modalities: state of the art and research challenges. Artif Intell Rev. 53(3):1655–1720. doi: 10.1007/s10462-019-09716-5.

- Murtaza G, Shuib L, Abdul Wahab AW, Mujtaba G, Raza G. 2020. Ensembled deep convolution neural network-based breast cancer classification with misclassification reduction algorithms. Multimed Tools Appl. 79(25–26):18447–18479. doi: 10.1007/s11042-020-08692-1.

- Noushiba Mahbub T, Abu Yousuf M, Nasir Uddin M. 2022. A modified CNN and fuzzy AHP based breast cancer stage detection system. International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE). 1–6. doi: 10.1109/ICAEEE54957.2022.9836541.

- Oza P, Sharma P, Patel S, Adedoyin F, Bruno A. 2022. Image Augmentation Techniques for Mammogram Analysis. J Imaging. 8(5):141. doi: 10.3390/jimaging8050141.

- Ragab DA, Sharkas M, Marshall S, Ren J. 2019. Breast cancer detection using deep convolutional neural networks and support vector machines. PeerJ. 7:e6201. doi: 10.7717/peerj.6201.

- Rodrigues PS. 2017. Breast ultrasound image. Mendeley Data. 1. doi: 10.17632/wmy84gzngw.1.

- Sadad T, Hussain A, Munir A, Habib M, Khan SA, Hussain S, Yang S, Alawairdhi M. 2020. Identification of breast malignancy by marker-controlled watershed transformation and hybrid feature set for healthcare, Appl. Sci. 10(6):1900. doi: 10.3390/app10061900.

- Shannon CE. 1948. A mathematical theory of communication. Bell Syst Tech J. 27(3):379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- Shorten C, Khoshgoftaar TM. 2019. A survey on image data augmentation for deep learning. J Big Data. 6(1):60. doi: 10.1186/s40537-019-0197-0.

- Simonyan K, Zisserman A. 2015. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. doi: 10.48550/arXiv.1409.1556.

- Song B, Sunny S, Li S, Gurushanth K, Mendonca P, Mukhia N, Patrick S, Gurudath S, Raghavan S, Tsusennaro I, et al. 2021. Bayesian deep learning for reliable oral cancer image classification. Biomed Optic Express. 12(10):6422–6430. doi: 10.1364/BOE.432365.

- Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. 2014. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 15:1929–1958. http://jmlr.org/papers/v15/srivastava14a.html

- Sun S, Zhang G, Shi J, Grosse R. 2019. Functional variational bayesian neural networks. arXiv preprint arXiv:1903.05779. doi: 10.48550/arXiv.1903.05779.

- Thiagarajan P, Khairnar P, Ghosh S. 2022. Explanation and use of uncertainty quantified by bayesian neural network classifiers for breast histopathology images. IEEE Trans Med Imaging. 41(4):815–825. doi: 10.1109/TMI.2021.3123300.

- Waks AG, Winer EP. 2019. Breast cancer treatment: a review. JAMA. 321(3):288–300. doi: 10.1001/jama.2018.19323.

- Wang H, Yeung DY. 2021. A survey on bayesian deep learning. ACM Comput Surv. 53(5):108. doi: 10.1145/3409383.

- Wang H, Zhang D, Feng J, Cascone L, Nappi M, Wan S. 2024. A multi-objective segmentation method for chest X-rays based on collaborative learning from multiple partially annotated datasets. Inform Fusion. 102:102016. doi: 10.1016/j.inffus.2023.102016.

- Wu Y, Kong Q, Zhang L, Castiglione A, Nappi M, Wan S. 2023. CDT-CAD: context-aware deformable transformers for end-to-end chest abnormality detection on X-Ray images. IEEE/ACM Trans Comput Biol Bioinform. 1–12. doi: 10.1109/TCBB.2023.3258455.

- Yassin NIR, Sh Omran EMFEH, Allam H. 2018. Machine learning techniques for breast cancer computer aided diagnosis using different image modalities: a systematic review. Comput Meth Programs Biomed. 156:25–45. doi: 10.1016/j.cmpb.2017.12.012.

- Zaalouk AM, Ebrahim GA, Mohamed HK, Hassan HM, Zaalouk MMA. 2022. A deep learning computer-aided diagnosis approach for breast cancer. Bioengineering. 9(8):391. doi: 10.3390/bioengineering9080391.

- Zhou X, Wang X, Hu C, Wang R. 2020. An analysis on the relationship between uncertainty and misclassification rate of classifiers. Inform Sci. 535:16–27. doi: 10.1016/j.ins.2020.05.059.

- Zhuang Z, Kang Y, Raj ANJ, Yuan Y, Ding W, Qiu S. 2020. Breast ultrasound lesion classification based on image decomposition and transfer learning. Med Phys. 47(12):6257–6269. doi: 10.1002/mp.14510.

- Zhuang F, Qi Z, Duan K, Xi D, Zhu Y, Zhu H, Xiong H, He Q. 2021. A comprehensive survey on transfer learning. P IEEE. 109(1):43–76. doi: 10.1109/JPROC.2020.3004555.