ABSTRACT

The rise of generative AI programmes like ChatGPT, Gemini, and Midjourney has generated both fascination and apprehension in society. While the possibilities of generative AI seem boundless, concerns about ethical violations, disinformation, and job displacement have ignited anxieties. The Singapore government, through IMDA, established the AI Verify Foundation in June 2023 to address these issues in collaboration with major tech companies like Aicadium, Google, IBM, Microsoft, Red Hat, and Salesforce, alongside numerous general members. This public-private partnership aims to promote the development and adoption of an open-source testing tool that fosters responsible AI usage by engaging the global community. The foundation also seeks to promote AI testing through education and outreach, and to serve as a neutral platform for collaboration. This initiative reflects a potential governance model for AI that balances public interests with commercial agendas. This article analyses the foundation’s efforts and the AI Verify testing framework and toolkit to identify their strengths, potential and limitations, and to distil key takeaways for establishing practicable solutions for AI governance.

Introduction

Although AI has been in existence for decades, it is fair to say that it really entered the public consciousness with the launch of OpenAI’s ChatGPT generative AI chatbot on 30 November 2022. With its easy-to-use interface and conversational interaction style, ChatGPT virtually democratised and even pedestrianised AI with its widespread use. Indeed, the platform reached an astounding 1 million users in its first five days of public release and counts over 100 million users now, with 1.5 billion monthly visitors to its website, according to the latest records (Hu, 2023).

However, ChatGPT is by no means the only generative AI platform in existence. Big tech companies like Google and Meta have unveiled their own Large Language Models (LLMs), which power generative AI, and relatively new entrants like Grok by Elon Musk’s xAI and Ernie from China’s Baidu have also entered the fray. Besides text-based chatbots, AI image generators, including OpenAI’s DALL-E, Midjourney and Stable Diffusion, have also captured public and commercial interest. In light of fierce industry competition that has attracted sizeable investments, tech companies have stepped up their capacities to launch new generative AI services and functions to outrival each other.

Generative AI has made rapid and dramatic advancements in a short time, with significant new features added to chatbots like ChatGPT, Google Gemini and Microsoft Copilot – these three can process multimodal input and output in the form of text, image, audio and video. With these enhanced capabilities, organisations and companies across diverse sectors have sought to leverage these chatbots for business process efficiencies. Applications include personalised and dynamic customer service for many industries, including banking and finance, customised instruction catering to students’ learning styles and even individually tailored mental well-being support.

Nevertheless, although the realm of possibilities for the application of generative AI seems boundless, concerns about its impact on the information landscape and the future of work have stoked anxieties. Grave concerns have been raised, ranging from the lack of accountability and transparency in developing LLMs, the abuse of generative AI for disinformation and misinformation at scale, and the environmental costs of training and using these LLMs, to fears of mass job disruption and even job displacement. In the face of mounting concerns, therefore, AI governance is an increasingly pressing challenge that states worldwide must address with new regulatory frameworks and levers.

Singapore’s technocratic leadership has long been keen to exploit the latest technological trends while managing negative fallout, and the latest AI innovations are no exception. The Singapore government – via its Infocomm Media Development Authority (IMDA) – established the AI Verify Foundation in June 2023 to boost AI’s safe and responsible application. This foundation, which involves the participation of big technology companies such as Aicadium, Google, IBM and Microsoft, aims to build a network of AI advocates to promote the widespread adoption of AI testing through education and outreach. It has also sought to leverage the collective power of the global open-source community to create the AI Verify testing tool to help ensure responsible AI usage. Our article aims to investigate the provenance, goals and structure of the AI Verify Foundation to assess its potential outcomes. We also examine how the AI Verify Toolkit can be applied and refined so that AI ethics is a priority and not an afterthought, and we draw lessons from the Singapore experience.

Singapore’s approach to AI governance

Across the historical trajectory of Singapore’s technology adoption, the government has consistently demonstrated a proactive approach in seeking out innovations with commercial and societal benefits, while remaining attentive to addressing potential negative consequences. For example, the Protection from Harassment Act (POHA) was passed in November 2014 to protect individuals from being harassed and/or stalked, whether online or in real life (Singapore Legal Advice, 2022). Similarly, the Protection from Online Falsehoods and Manipulation Act (POFMA) was enacted in 2019 to counter the troubling proliferation of misinformation and disinformation in online media.

With AI being a game-changing technology that undergirds entire digital infrastructures, systems and processes across all industry sectors, governance clearly cannot hinge on legislation alone. Therefore, Singapore has taken a more multi-faceted and balanced approach to AI governance that aims to facilitate innovation, build public trust, safeguard consumer interests, and even serve as a common global reference point. To strongly signal to the world its commitment to realising this vision, the Singapore government launched the Model AI Governance Framework at the World Economic Forum Annual Meeting in Davos, Switzerland, in January 2019 and a second edition a year later at the same event. The framework was developed to guide businesses and organisations that intend to deploy AI technologies (Yeong, 2020).

The framework is based on 11 AI ethics principles consistent with internationally recognised AI frameworks such as those from the EU, OECD, and Singapore (Personal Data Protection Commission, 2023). The two guiding principles of Singapore’s framework are that decisions made by AI should be ‘explainable, transparent and fair’ and that AI systems should be human-centric, wherein the design and deployment of AI should incorporate human safety and wellbeing (Choudhury, 2021). The motivations driving the Singapore government’s interest in addressing ethical and governance issues are to foster public trust and promote widespread adoption of AI technologies. This aligns with its observable pro-innovation stance and pragmatic recognition that AI has the potential to bring about significant benefits to society but that risks inhere in its deployment (Yeong, 2020). Therefore, the government has publicly committed to ensuring that AI is developed and used responsibly and ethically, launching high-profile initiatives such as AI Verify in June 2023.

Most recently, Singapore has announced further plans in AI as captured in its National AI Strategy 2.0, where it will pour SGD70 million into a new initiative to develop Singapore’s research and engineering capabilities in multi-modal Large Language Models. Notably, there are plans to have Singapore build ‘a trusted environment in using AI … [for] … deeper understanding of how LLM work and further research AI governance’ (IMDA, 2023c). The National Multimodal LLM Programme (NMLP) will develop a base model with a regional context better attuned to Singapore’s unique linguistic characteristics and multi-lingual environment and our neighbouring Southeast Asian region.

Global picture of AI governance: approaches of selected countries

As AI sweeps through the global economy, governance is high on the agenda of many governments, and some AI governance models have been more clearly articulated and encompassing than others. The European Union has been regarded as trailblazing in this regard. It has assumed an active leading role in AI regulation, with the draft Artificial Intelligence Act (AI Act) being a significant step towards regulating AI in the EU market and aiming to establish a harmonised regulatory framework across member states (European Parliament, 2023). The Act outlines criteria for high-risk AI applications, requiring transparency, human oversight, and adherence to ethical norms. In the same vein, the UK government has advanced a pro-innovation approach to AI regulation that seeks to balance innovation with regulation (Department for Science, Innovation & Technology Office for AI, 2023). The government has championed an ethical AI framework emphasising transparency, fairness, and accountability in AI systems. Regulatory bodies such as the Information Commissioner’s Office (ICO) are crucial in ensuring compliance with data protection laws while promoting innovation.

The two leading AI superpowers, China and the US, have crafted their own respective paths towards AI governance. Given its chequered regulatory landscape, the US has adopted a sectoral approach to AI governance. Agencies like the Federal Trade Commission (FTC) focus on consumer protection, while the National Institute of Standards and Technology (NIST) develops AI standards. The American government also prioritises research and development funding for AI technologies and encourages private-sector collaboration. Most notably, in October 2023, US President Joseph Biden issued a landmark Executive Order that set new standards for ‘AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition [and] advances American leadership around the world’ (The White House, 2023).

In contrast to the US’ sectoral approach, China’s AI governance revolves around a top-down strategy and strategic planning, prioritising AI as a catalyst for economic growth. Emphasising a national AI development plan set in 2017, China aims to be a global AI leader by 2030 (Sheehan, 2023). The government allocates substantial funding to AI research to foster a robust and globally competitive innovation ecosystem. Focusing on data localisation, China enforces stringent cybersecurity regulations and promotes the development of national AI champions in key sectors. Although China’s espoused ethical guidelines underscore responsible AI use, it has been criticised for using AI in surveillance, notably in public safety initiatives and the controversial social credit system, raising alarm bells about privacy and human rights (Cho, 2020). This multifaceted approach reflects China’s ambition for global AI leadership while contending with ethical considerations and international perceptions.

Having achieved early technological innovation in the 70s, 80s, and 90s but now seeking to catch up in AI, Japan has been working to shore up its position in the global race. The country remains a leader in robotics and automation and is heavily invested in utilising AI for economic growth and societal challenges. In 2019, it launched the Society 5.0 initiative, a vision for a future driven by a new industrial revolution that brings together technologies such as artificial intelligence, the Internet of Things and robotics, in a more integrated fashion for greater societal benefit (World Economic Forum, 2019). To realise this vision, the Japanese government has sought to further collaboration between public and private sectors, driving innovation through funding, infrastructure, and talent development. Ethical considerations are addressed through the AI Ethics Guidelines, emphasising transparency, fairness, and accountability and focusing on human-centric approaches, although their efficacy has been questioned (Wright, 2023).

As the preceding discussion evinces, all governments must grapple with the delicate balance of fostering AI innovation while mitigating risks and upholding ethical standards. International collaboration plays a crucial role, offering pathways to harmonise standards, share best practices, and collectively address the global challenges posed by the rapid advancement of AI technologies. As the AI landscape evolves, these governments must continue to adapt their strategies to ensure a responsible and sustainable integration of AI into society. As the generative AI race throws up a litany of powerful AI applications, it also reveals the deficits of current AI models that warrant robust ethical responses. Whether in Europe or North America, Asia or Africa, the ultimate goal remains a global framework that ensures the benefits of AI are widely shared and the risks mitigated to safeguard a future where AI serves as a force for collective good.

AI Verify Foundation and AI Verify testing framework

The rapid advancement of AI technologies has created a pressing need for robust governance mechanisms to ensure their safe and ethical deployment. This impetus for AI strategy and governance guidance is not new, with the OECD AI Policy Observatory tracking over 1,000 AI policy initiatives from 69 countries. However, the widespread adoption of these mechanisms has been hindered by challenges of practical application, subjective interpretation, and the absence of a consensus on metrics for AI governance principles.

With regard to helping companies put ethical guidelines and frameworks into practice as they develop innovations, the AI Verify Foundation is a potentially illuminating ongoing experiment. One notable characteristic of the foundation is the participation of big technology companies, with Aicadium, Google, IBM, IMDA, Microsoft, Red Hat, and Salesforce being premier members, along with another 60 general members. The espoused goal of such public-private collaboration is to build a network of AI advocates to promote adoption of AI testing through education and outreach. Additionally, it seeks to serve as a neutral platform for open collaboration and idea-sharing on AI testing and governance. Given the interest of tech companies in driving AI adoption, and the government’s impetus to regulate for AI ethicality, such public-private partnerships may help advance the governance of AI such that public interest is served while commercial agendas are met.

A key initiative of the AI Verify Foundation is AI Verify, an AI governance testing framework and software toolkit that helps organisations validate the performance of their AI systems against a set of AI ethics principles through standardised tests (Yeong, Lee, & Tan, 2023). Developed by the IMDA, it is organised around 11 AI ethics principles and enables users to conduct technical tests on their AI models and record process checks. User companies can be more transparent about their AI by sharing these testing reports with their stakeholders. AI Verify can perform technical tests on standard supervised-learning classification and regression models for most tabular and image datasets.

In the burgeoning and increasingly crowded AI space, it is useful to ask how the AI Verify framework offers an improvement on the status quo. Open-source development libraries such as IBM AI Fairness 360 and Microsoft Fairlearn have already been created and made broadly available to AI developers and data scientists. Although these libraries are useful for the data science community, it has taken much work for risk and governance practitioners to adopt them as part of their risk management functions. Without an independent second- or third-line risk function, the onus lies squarely on model developers to ensure that their models adhere to established principles. While there are also commercial offerings in their early stages, these need extensive customisation to support the unique models or datasets that enterprises use, limiting their current potential for widespread adoption. Compounding the challenge is that although metrics for principles such as fairness or explainability have been established, there is still no universally accepted baseline for these metrics in specific use cases and industries.

In 2022, the AI Verify toolkit was first introduced to address these very challenges. It provided a more streamlined approach to AI governance, combining technical interfacing for developers, on-premise deployment for confidentiality concerns, and reports suitable for consumption by management-level professionals. AI Verify follows a similar approach to existing open-source development libraries such as IBM AI Fairness 360 and the Aequitas Bias and Fairness Audit Toolkit. However, it goes a step further by packaging the functionality into a more user-friendly application that can be easily installed. It also incorporates an extensible plugin framework, allowing the community to contribute to expanding its use cases in the future. This focus on accessibility and extensibility distinguishes AI Verify from other solutions in the market. By adopting an open-source model, the Foundation also plays a critical role in promoting collaboration and community engagement. It encourages contributions from various stakeholders, aiming to develop best practices and metric baselines for AI governance. This can help foster the adoption of trustworthy AI by building a community that includes AI owners, solution providers, users, policymakers, ethicists, and legal experts. This comprehensive representation enables a better understanding of the challenges and opportunities in AI governance.

The AI Verify toolkit also offers several advantages that distinguish it from existing solutions. While open-source tools mainly target AI developers and data scientists, AI Verify seeks to make the solution accessible to professionals responsible for technology risk management. This inclusive approach ensures that AI governance is not limited to technical experts but includes perspectives from those responsible for managing the risks associated with AI development and deployment. More importantly, this is a crucial step that allows a proper governance structure to be formed with consistent tools and reporting. Additionally, the toolkit’s design addresses a practical concern around sending sensitive training data or the developed model to an external auditor. The toolkit has been built using container technology, enabling enterprises to deploy the solution within their own environment. This allows an assessment to be performed without sending the models or data to an untrusted recipient. The resulting reports can then be shared with interested third parties or auditors.

The toolkit is envisioned to eventually assist in assessing widely accepted governance principles such as transparency, explainability, interpretability, fairness, robustness, and safety. By addressing these governance aspects, AI Verify enables organisations to build AI models that are technically robust and aligned with ethical and responsible practices. At the time of writing, the v0.10 toolkit is in its Minimum Viable Product (MVP) stage, covering seven algorithmic testing capabilities: Explainability (Accumulated Local Effect, Partial Dependence Plot, SHAP Toolbox), Fairness (Fairness for Classification, Fairness for Regression) and Robustness (Image Corruption Toolbox, Robustness Toolbox) (AI Verify, 2023). Although it does not yet include assurance capabilities for Generative AI or Large Language Models (LLMs), it has significant potential for expansion and improvement. In October 2023, the Foundation published guidance on the Generative AI Evaluation Sandbox with a draft catalogue for understanding and evaluating LLMs. There are expectations for practical capabilities to arise from this initiative, bringing together model developers, app developers, and third-party testers (IMDA, 2023b).
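To make concrete what a fairness test for a classification model measures, the following is a minimal sketch in plain Python. It is not the AI Verify plugin's implementation or interface; the function names and the toy data are hypothetical and chosen only for illustration. It computes two commonly used group-fairness quantities: the gap in selection rates between groups (often called demographic parity difference) and the gap in true positive rates (often called equal opportunity difference).

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Share of positive predictions (1) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """Share of actual positives that were predicted positive, per group."""
    totals, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            totals[group] += 1
            hits[group] += int(pred == 1)
    return {g: hits[g] / totals[g] for g in totals}


def max_gap(rates):
    """Largest difference between any two groups' rates."""
    values = list(rates.values())
    return max(values) - min(values)


# Hypothetical toy data: 1 = favourable outcome, two groups "A" and "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

parity_gap = max_gap(selection_rates(y_pred, groups))
opportunity_gap = max_gap(true_positive_rates(y_true, y_pred, groups))
print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap: {opportunity_gap:.2f}")
```

A gap of zero would mean the groups are treated identically on that measure. What size of gap is acceptable is a policy question for each use case, which is why agreed baselines matter as much as the metrics themselves.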

Limitations of AI Verify framework and toolkit

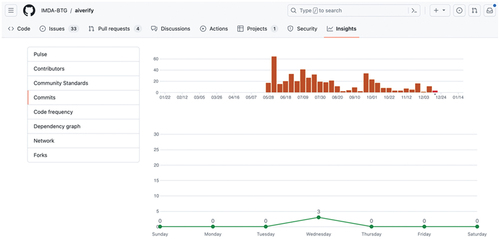

Though the drive to standardise AI governance testing is still in the early stages, AI Verify seeks to address the need for a publicly accessible toolkit to quantitatively measure the ethical and governance challenges around the deployment and usage of AI models. With the hope of broader adoption, it can also encourage public discourse around acceptable norms for principles such as fairness, robustness, and explainability in a measurable way. Adoption will be essential for the future success of the project. Comparing the current visibility of the project hosted on GitHub with other libraries implementing AI governance, we can see that more needs to be done to raise the profile of the project to boost adoption.

While these statistics do not indicate the true potential of the AI Verify solution in its early development stage, some of the possible factors hindering wider adoption are summarised below. Addressing these could result in gaining the momentum necessary to achieve a self-reinforcing adoption and contribution cycle. Various other limitations also inhere in AI Verify and these should also be addressed to enhance adoption.

Limited AI frameworks and algorithms supported

Based on the compatibility documentation, the current version supports fundamental frameworks such as scikit-learn, TensorFlow, XGBoost, and LightGBM for classification and regression tasks. These frameworks are well established for most simple to complex machine learning tasks on structured data. Still, the toolkit needs to cover a more comprehensive range of potential use cases, such as Natural Language Processing (NLP) tasks, Computer Vision, Time Series Analysis, or models generated using Automated Machine Learning (AutoML) tools. While the project has recently released new capabilities to allow an Application Programming Interface (API) layer to query a model and retrieve its responses, the documentation only covers guidance on setting up the API configuration from the solution. This limitation is reflected in one of the three user case studies on the AI Verify website, where one of the companies adopting the toolkit in its beta testing found limitations in the supported model types, making it challenging to test an HR chatbot implementation leveraging NLP (AI Verify, n.d.).
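The value of an API layer is that a model can be tested as a black box, regardless of the framework it was built with. The sketch below illustrates this idea under stated assumptions: `query_model` is a hypothetical stand-in for a call to a model endpoint, and the test is a simple Gaussian-noise perturbation check chosen for brevity, not the method used by AI Verify's Robustness Toolbox or its API connector.

```python
import random


def accuracy(predict, inputs, labels):
    """Fraction of inputs for which the black-box predict() matches the label."""
    correct = sum(int(predict(x) == y) for x, y in zip(inputs, labels))
    return correct / len(labels)


def perturb(x, noise_scale, rng):
    """Return a copy of feature vector x with Gaussian noise added to each value."""
    return [value + rng.gauss(0.0, noise_scale) for value in x]


def robustness_report(predict, inputs, labels, noise_scale, seed=0):
    """Compare accuracy on clean inputs with accuracy on noise-perturbed inputs."""
    rng = random.Random(seed)  # fixed seed so repeated runs are comparable
    noisy_inputs = [perturb(x, noise_scale, rng) for x in inputs]
    clean = accuracy(predict, inputs, labels)
    noisy = accuracy(predict, noisy_inputs, labels)
    return {"clean_accuracy": clean, "noisy_accuracy": noisy, "drop": clean - noisy}


# Hypothetical stand-in for a remote model: in practice this function would
# send x to the model's endpoint and return the predicted label.
def query_model(x):
    return int(sum(x) > 1.0)


inputs = [[0.2, 0.1], [0.9, 0.8], [0.4, 0.3], [1.2, 0.5]]
labels = [0, 1, 0, 1]
report = robustness_report(query_model, inputs, labels, noise_scale=0.5)
print(report)
```

Because the test only depends on a function that maps an input to a prediction, the same harness could in principle be pointed at an NLP or computer vision model once suitable perturbations for that data type are defined.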

Unclear messaging for a diverse target audience

One of the strengths of AI Verify is its broad applicability amongst the developer community, AI Governance specialists, and Risk Professionals. However, the current documentation and public messaging are too diffuse and should be refined to address the needs of, and appeal to, each of these groups specifically. It would be helpful to spell out the benefits of the project for each target audience, with more relevant details on the steps that each audience can take to use the solution. This can be achieved by having dedicated sections branching from the main page, providing more specific guidance for the different audiences on adoption strategies, usage, and examples of how the benefits can be demonstrated within enterprises.

Lack of practical examples and actual use cases

Practical use cases and examples can help the audiences identified above appreciate what they can achieve before they invest time in adopting this solution in their corporate environment. For instance, if the target audience were Risk Professionals, it would be helpful to illustrate how this project can help them develop their own test methodologies to query models using the API, and the types of metrics that they can use to draw their conclusions. Similarly, if the target audience were the Data Science team embedding this solution as part of their Machine Learning Operations (MLOps) process, it would be helpful to provide practical use cases of how existing pipelines can be interfaced with the tool to automate testing and evaluation as the model is being developed. Currently, the Foundation provides three high-level descriptions of user adoption by IBM, Singapore Airlines, and X0PA. The details could be more extensive, with more specifics on how the solution was architected and used within the organisations and how the outcomes were used to drive better governance around AI development.
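As one illustration of the kind of practical example that could serve both audiences, the sketch below shows a governance gate that a data science team might place in an MLOps pipeline, using limits set by the risk function. Everything here is hypothetical: the metric names, the thresholds, and the function names are assumptions made for illustration and are not part of the AI Verify toolkit.

```python
def evaluate_gate(metrics, thresholds):
    """Return human-readable findings for every metric that breaches its limit."""
    findings = []
    for name, limit in thresholds.items():
        if name not in metrics:
            findings.append(f"{name}: metric missing from test report")
        elif metrics[name] > limit:
            findings.append(f"{name}: {metrics[name]:.3f} exceeds limit {limit:.3f}")
    return findings


def pipeline_step(metrics, thresholds):
    """Raise so the surrounding pipeline stops when any finding is present."""
    findings = evaluate_gate(metrics, thresholds)
    if findings:
        raise RuntimeError("governance gate failed: " + "; ".join(findings))
    return "governance gate passed"


# Hypothetical limits agreed by the risk function (lower is better for each metric).
thresholds = {"demographic_parity_gap": 0.10, "accuracy_drop_under_noise": 0.05}
# Hypothetical metrics produced by an earlier testing step.
metrics = {"demographic_parity_gap": 0.25, "accuracy_drop_under_noise": 0.02}

for finding in evaluate_gate(metrics, thresholds):
    print(finding)
```

Separating `evaluate_gate` from `pipeline_step` keeps the two roles distinct: risk professionals own the thresholds and read the findings, while the pipeline only needs to know whether to proceed.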

Lack of scale and reinforced messaging

The Foundation needs to build the scale of its active contributor base to get enhancement ideas and resources to grow its capability. This should be tightly coupled with continued messaging, roadshows, and enablement sessions to better showcase the utility of AI Verify. The project on GitHub currently needs more traction and activity, with only 14 active contributors (after removing two application accounts) and a low frequency of commit activity, as shown below.

The Foundation should focus on building the community through a dedicated role and in partnerships with professional bodies to bring in contributors across the spectrum of targeted audiences.

Conclusion

AI Verify’s role in setting new benchmarks in AI governance cannot be overstated. As regulations and standards in AI continue to develop, there is a clear need for leadership and clarity in the field. AI Verify steps into this space, potentially driving the industry towards more ethical and responsible AI development and deployment. Such leadership is critical in a landscape where AI’s implications are vast and multifaceted, touching society’s ethical, social, and economic aspects. The AI Verify Foundation and its AI Verify framework represent a significant step forward in AI governance. Its comprehensive approach, emphasis on collaboration, and potential to standardise and elevate AI governance practices make it a notable development in the field. With AI innovation coursing through the globe, the lessons from the AI Verify journey will be illuminating for governments and technology companies worldwide. The foundation’s work could serve as a blueprint for future governance frameworks, influencing how we approach AI ethics and regulation globally. Nevertheless, as this article has highlighted, gaining momentum in adoption and contribution will be critical to the future success of the solution. This has to be achieved through a well-executed strategy for building a community that adopts the project, provides feedback on it, and contributes further to it. Specifically, the AI Verify toolkit needs to be augmented to cater to a broader range of AI tasks and APIs, and its value to the developer community, AI Governance specialists, and Risk Professionals should be better conveyed. At the same time, the toolkit can be bolstered by sharing more practical examples and actual use cases, while the scale of its active contributor base can be stepped up to enhance traction and forge a community of adopters and advocates.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- AI Verify. (2023). AI Verify Github. Retrieved from https://github.com/IMDA-BTG/aiverify

- AI Verify. (n.d.). From beta testing to success: How SIA takes responsible AI implementation to the next level with AI Verify. Retrieved from https://aiverifyfoundation.sg/ai-verify-users/how-sia-takes-responsible-ai-implementation-to-the-next-level-with-ai-verify/

- Cho, E. (2020). The social credit system: Not just another Chinese idiosyncrasy. Journal of Public & International Affairs, 1–51. https://jpia.princeton.edu/news/social-credit-system-not-just-another-chinese-idiosyncrasy#:-:text=eunsun

- Choudhury, A. R. (2021). A closer look at Singapore’s AI governance framework. Global Government Forum. Retrieved from https://www.globalgovernmentforum.com/singapores-ai-governance-framework-insights-governments/

- Department for Science, Innovation & Technology Office for AI. (2023). A pro-innovation approach to AI regulation. Retrieved from https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach/white-paper#ministerial-foreword

- European Parliament. (2023). EU AI Act: First regulation on artificial intelligence. News European Parliament. Retrieved from https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

- Hu, K. (2023, February 2). ChatGPT sets record for fastest-growing user base – analyst note. Reuters. Retrieved from https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/

- IMDA. (2023a). AI Verify User Guide. Retrieved from https://imda-btg.github.io/aiverify/introduction/how-it-works/

- IMDA. (2023b, October 31). First of its kind generative AI evaluation sandbox for trusted AI by AI Verify Foundation and IMDA. Retrieved from https://www.imda.gov.sg/resources/press-releases-factsheets-and-speeches/press-releases/2023/generative-ai-evaluation-sandbox

- IMDA. (2023c). Singapore pioneers S$70m flagship AI initiative to develop Southeast Asia’s first large language model ecosystem catering to the region’s diverse culture and languages. Retrieved from https://www.imda.gov.sg/en/resources/press-releases-factsheets-and-speeches/press-releases/2023/sg-to-develop-southeast-asias-first-llm-ecosystem

- Personal Data Protection Commission. (2023). Singapore’s approach to AI governance. Retrieved from https://www.pdpc.gov.sg/Help-and-Resources/2020/01/Model-AI-Governance-Framework

- Sheehan, M. (2023). China’s AI regulations and how they get made. Carnegie Endowment for International Peace. https://carnegieendowment.org/2023/07/10/china-s-ai-regulations-and-how-they-get-made-pub-90117#:~:text=The%202017%20New%20Generation%20AI,China’s%20economy%20and%20national%20power

- Singapore Legal Advice. (2022). Guide to Singapore’s protection from harassment act (POHA). Retrieved from https://singaporelegaladvice.com/law-articles/singapore-protection-harassment-act/

- The White House. (2023). FACT SHEET: President Biden issues executive order on safe, secure, and trustworthy artificial intelligence. Retrieved from https://www.whitehouse.gov/briefing-room/statements-releases/2023/10/30/fact-sheet-president-biden-issues-executive-order-on-safe-secure-and-trustworthy-artificial-intelligence/

- World Economic Forum. (2019). PM Shinzo Abe heralds new era for Japan as policies bear fruit. World Economic Forum. Retrieved from https://www.weforum.org/press/2019/01/pm-shinzo-abe-heralds-new-era-for-japan-as-policies-bear-fruit/

- Wright, J. (2023). The development of AI ethics in Japan: Ethics-washing society 5.0? East Asian Science, Technology & Society: An International Journal, 1–18. doi:10.1080/18752160.2023.2275987

- Yeong, Z. K. (2020). Singapore’s model framework balances innovation and trust in AI. OECD AI Policy Observatory. Retrieved from https://oecd.ai/wonk/singapores-model-framework-to-balance-innovation-and-trust-in-ai

- Yeong, Z. K., Lee, W. S., & Tan, W. R. (2023). How Singapore is developing trustworthy AI. World Economic Forum. Retrieved from https://www.weforum.org/agenda/2023/01/how-singapore-is-demonstrating-trustworthy-ai-davos2023/