ABSTRACT

An international research collaboration with researchers from northern Sweden, Finland, Ireland, Northern Ireland and Scotland developed the ChatPal chatbot to explore the possibility of a multilingual chatbot to promote mental wellbeing in people of all ages. In Sweden the end users were young people. The aim of the current study was to explore and discuss Swedish young people’s experiences of using a chatbot designed to promote their mental wellbeing. Young people aged 15–19 filled out an open-ended survey giving feedback on the ChatPal chatbot and their suggestions for improvements. A total of 122 survey responses were analysed. The qualitative content analysis of the survey responses resulted in three themes, each containing two to three sub-themes. Theme 1, feeling as if someone is there when needed, highlighted positive aspects regarding availability and accessibility. Theme 2, human-robot interaction has its limitations, included aspects such as unnatural and impersonal conversations and limited content availability. Theme 3, usability can be improved, covered technical errors due to lack of internet connection and difficulty navigating the chatbot, both of which were brought up as issues. The findings are discussed, and potential implications are offered for those designing and developing digital mental health technologies for young people.

Background/Introduction

International research from the past decade shows that there has been an increase in young people’s mental ill health [Citation1]. Young people as a group are most at risk of experiencing mental ill health, since it often presents in early life and young people are the least likely group to receive appropriate care [Citation2–4]. The importance of mental health support to young people is clear [Citation5]. The COVID-19 pandemic is also thought to have contributed to long term challenges within the field of mental health, such as increased demand for mental health related services and changes in how these services are being delivered [Citation4,Citation6–8]. Moreover, it is difficult to attract, recruit and retain enough health care staff in the Arctic north [Citation9]. Mental health related services are, according to Piper et al. [Citation4], the range of traditional services and support provided to individuals experiencing mental health issues. Digital mental health or e-mental health services, on the other hand, include online services, apps, telepsychiatry and other digital technological solutions that, according to Flore [Citation10], transform the traditional doctor and patient relationship.

In this study, mental health is viewed as one aspect of the holistic view described by the World Health Organization [Citation11] as “fostering physical, mental, social and existential health” (p. 8). Mental health is a state of mental well-being that enables people to cope with the stresses of life, realise their abilities, learn well and work well, and contribute to their community ([Citation12], p. 1). A previous study in northern Finland found that teachers play an important role in the wellbeing of students, since they viewed student wellbeing as an integral aspect of their role as a teacher [Citation1]. However, teachers felt that they were unable to provide adequate help to students due to lack of resources, knowledge, and appropriate tools [Citation1]. Additionally, an increase in mental health related issues among university students was identified when available services could not meet the needs of the students [Citation7]. Teachers also suggested that increasing mental health literacy among both staff and students could increase student wellbeing, by giving students the tools they need to take care of their own mental health as well as knowledge on when and how to seek professional help [Citation1].

Since young people are experts on their own everyday experiences and preferences, it is vital to give them a voice and involve them in conversations regarding mental health. Inviting young people to participate in the development of tools to promote mental health is in line with the Convention on the Rights of the Child [Citation13], which states that children are entitled to have a say in decisions that affect them. This is also echoed in a body of research called “student voice” [Citation14,Citation15].

Simple artificial intelligence (AI)-based solutions have been used within the field of medicine since the 1970s [Citation16]. However, recent research has been focused on using AI within the field of mental health [Citation17–19]. One of the main challenges is developing a service that the users want and professionals endorse and that fits within the capabilities of AI technology [Citation20]. Digital mental health or e-mental health services can be used for various purposes, such as prevention, self-management, treatment information and some therapies [Citation21]. Previous research has also shown that involvement from potential future users in the development of this type of service can be vital to its success [Citation7,Citation19,Citation22,Citation23]. Including end users in the development process can be helpful when it comes to identifying issues with a chatbot [Citation17]. Studies that have reported on trials with chatbots such as Woebot, Tess and Wysa have shown promising results [Citation17,Citation24,Citation25]. Chatbots can lead to higher engagement with the material, compared to static information such as textbooks [Citation17]. Previous work has reported mild to moderate positive effects on mental wellbeing when using a mental health chatbot, meaning that there is potential for chatbots to provide mental health support [Citation25–27].

Another potential benefit of using technology in the field of medicine and mental health care is the potential of bridging the gap between language, culture and the professionals’ understanding of these factors [Citation28]. Persons who speak a minority language, in this case the indigenous Sámi people in Norway, benefited from having their health records available to them online [Citation28]. Indigenous populations in the circumpolar north often do not trust non-indigenous medical specialists and authorities due to their lack of cultural competence [Citation29]. The indigenous people often find doctor’s appointments impractical and therefore put their trust into home treatment or learning about treatment methods via other channels of communication such as television [Citation29]. Similarly, Sámi people seeking care were worried that non-indigenous health professionals would have problems understanding Sámi communication, and they also expressed concern that the health professionals knew nothing about the Sámi culture or history [Citation30]. There seems to be a need for culturally adapted digital services, due to statistics showing that certain minorities are less likely to seek professional help [Citation23,Citation31].

While easily accessible digital solutions (such as apps and chatbots) may be attractive to potential users, the costs of these apps and the time they consume may deter people from using them, especially low-income individuals and students [Citation7,Citation31]. According to Waumans et al. [Citation32], however, financial barriers are of less importance in western countries. Instead, mental health illiteracy, social stigma, waiting lists and logistical problems are the main issues preventing help seeking [Citation32]. These types of digital interventions may be particularly attractive to some individuals due to their availability and ability to track the user’s mood over time [Citation7,Citation8,Citation10,Citation21], which could be particularly beneficial for patients who, for different reasons, struggle to find opportunities to go to physical appointments. However, there seems to be low adherence and engagement among young people using mental health apps [Citation33]. There is a discrepancy between how apps are designed and what young people want, as young people prefer highly interactive, multimodal, and customisable content, possibly including games and social media-like features, instead of static text and image-based content [Citation3]. Spoken language may be more engaging despite coming from a robot [Citation17]. It is also important to note that not all apps are created equal, as most mental health apps lack evidence-based information or have not been proven to be effective [Citation3].

According to Abbass [Citation34], aspects such as authority to make decisions and responsibility need to be taken into consideration when handing over a task to a machine. At present, it is commonly discouraged to allow machines to make decisions independently, without human involvement [Citation22,Citation34]. The question of who bears the responsibility when the machine malfunctions or causes harm is an ethical dilemma [Citation34]. For example, the antipsychotic drug Abilify MyCite uses a sensor embedded in the tablet connected to an app to track the patient’s dosage, activity levels and adherence to treatment, which brings forward conversations about informed consent and data security [Citation10]. It is important to note that an AI-based application is only as skilled as its creators, meaning that flawed datasets can reproduce biases [Citation22]. Another crucial aspect to consider when developing technology-based solutions is usability, defined by Shackel [Citation35] as: “the capability in human functional terms to be used easily and effectively by the specified range of users, given specified training and user support, to fulfil the specified range of tasks, within the specified range of environmental scenarios”. Usability and acceptability among professionals should be considered crucial aspects when developing digital health tools [Citation4]. Previous research has also suggested that there is potential for AI-based tools to enhance learning capabilities and decrease cognitive load if the users rate the tool positively from a usability perspective [Citation36]. Usability is an important aspect to keep in mind when designing, discussing, and evaluating systems from the user’s point of view [Citation35,Citation36].

Summing up, previous research identifies numerous problems with digital solutions (such as apps and chatbots). However, there also seem to be opportunities in using chatbots designed to promote mental wellbeing. Earlier research also pointed to the importance of including the specific target groups, in this case the young people, in the process of developing and testing digital solutions. In other words, it is vital to give young people a voice and involve them in conversations regarding mental health and chatbot development.

Aim

The aim of this study is to explore and discuss Swedish young people’s experiences of using a chatbot designed to promote their mental wellbeing.

Methods

This study is part of a larger project called “ChatPal”, a project funded by the Northern Periphery and Arctic Programme (2019–2022) to co-create a chatbot app to support the mental wellbeing of people living in sparsely populated areas [Citation18]. The ChatPal consortium was made up of partners from Northern Ireland, Ireland, Scotland, Sweden, and Finland. The main project objective was the development and testing of a chatbot to support and promote mental wellbeing in rural areas across Europe. The multilingual chatbot (ChatPal) was developed to include content that mental health professionals endorse [Citation20] and what end users wanted and needed from a chatbot [Citation18]. In Northern Sweden, the focus has been on testing the chatbot with young people aged 15 and above.

It is beneficial to include patients, professionals, and the public in the development of an AI-based service for it to be appreciated and accepted by all parties [Citation18,Citation19]. This study focused on young people as the end users, as prior research highlights the importance of involving youth in the advancement of mental health promotion services [Citation37]. Open-ended surveys were used to allow participants to voice their experiences, make suggestions and give their opinions after they were invited to use the ChatPal chatbot. This cooperative and participatory approach with young people is echoed in the body of work on student voice [Citation14,Citation38]. The COREQ checklist developed by Tong et al. [Citation39], a set of reporting standards intended to enhance the transparency of qualitative research, is added as an appendix (Appendix 1).

Intervention

This study was integrated into psychology classes at the request of the professionals, and all the participating young people were students enrolled in these classes. The first and second author met with the students in groups, primarily over video conference due to COVID-19 recommendations. Participation in the study was voluntary and the participating students were told that they could use the chatbot for as long as they wished. Using the chatbot was part of an assignment integrated into psychology classes; however, an alternative assignment related to the module was offered to ensure that the students were able to opt out of participating. The participants used the chatbot for anywhere between one day and three months; the majority used it for between 15 and 30 days. They were told to use it as much as they liked during their free time. However, since participation was integrated into psychology classes, the teachers allocated time for the students to also use the app during school hours if they wished.

The chatbot used by participants was a multilingual mental health and wellbeing chatbot, based on positive psychology [Citation40] targeted at the general population or those with mild-moderate mental ill health. The chatbot is freely available to download to mobile or tablet devices and users can converse with ChatPal in English, Swedish, Finnish and Scottish Gaelic. The chatbot follows a “decision tree” like structure, and for the majority of conversations, the user can select from predefined responses (Appendix 2). The chatbot also contains some AI capabilities, which means the user can input free text and the chatbot will try to direct the user to the appropriate section. As the ChatPal chatbot is focused on mental health and wellbeing, if a user should input something the chatbot does not understand, a fallback message would appear explaining the chatbot only has limited intelligence and direct the user to crisis helplines in their area. In the chatbot, users can complete activities and exercises (e.g. breathing and relaxation), log their mood, share thoughts or gratitude statements, among other activities (Appendix 2).
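The conversation flow described above can be illustrated with a minimal sketch. It is not the ChatPal implementation: the node names, prompts, keyword table and fallback wording below are hypothetical, and simple keyword matching stands in for whatever free-text handling the actual chatbot uses. The sketch only shows the general idea of predefined responses, free-text routing and a fallback message pointing to crisis helplines.

```python
from dataclasses import dataclass, field

# Hypothetical fallback text; the real ChatPal wording is not given in this paper.
FALLBACK = (
    "Sorry, I only have limited intelligence and did not understand that. "
    "If you need urgent help, please contact a crisis helpline in your area."
)


@dataclass
class Node:
    """One step in the decision tree: a prompt plus predefined responses."""
    prompt: str
    options: dict = field(default_factory=dict)  # button label -> next node id


# Illustrative tree only; node ids and prompts are assumptions.
TREE = {
    "start": Node(
        "What would you like to do?",
        {"Breathing exercise": "breathing", "Log my mood": "mood"},
    ),
    "breathing": Node("Breathe in slowly for four seconds, then breathe out."),
    "mood": Node("How are you feeling today?"),
}

# Assumed keyword routing standing in for the chatbot's free-text capability.
KEYWORDS = {"breath": "breathing", "relax": "breathing", "mood": "mood", "feel": "mood"}


def respond(node_id: str, user_input: str) -> tuple[str, str]:
    """Return (next node id, chatbot message) for the user's input."""
    node = TREE[node_id]
    text = user_input.strip()

    # 1. Predefined response selected by the user.
    if text in node.options:
        next_id = node.options[text]
        return next_id, TREE[next_id].prompt

    # 2. Free text: try to direct the user to an appropriate section.
    lowered = text.lower()
    for keyword, target in KEYWORDS.items():
        if keyword in lowered:
            return target, TREE[target].prompt

    # 3. Nothing understood: stay in place and show the fallback message.
    return node_id, FALLBACK
```

For example, `respond("start", "Log my mood")` follows a predefined option, `respond("start", "I want to relax")` is routed by free text, and unrecognised input leaves the user at the same node with the fallback message.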

Context and participants

This study was based on data collected in high schools in three municipalities in Sweden’s northernmost county, Norrbotten, which is in the Arctic region. Initially, high school staff in five municipalities in Norrbotten with whom the first and second authors had worked before were asked to take part in the study. However, staff from two municipalities declined. A combination of convenience sampling and recruitment through gatekeepers was used through established contacts with principals, teachers, and staff from the student health services teams, who extended an invitation to their students to participate in the study. In other words, the authors had a prior relationship with the school staff who acted as gatekeepers, but not with the students who participated. The gatekeepers were responsible for relaying the invitations to the students and were also considered valuable actors for dissemination of the results as described by Nygren et al. [Citation41]. Principals, teachers, and staff from the student health services teams ensured that the young people who participated had access to support from professionals, should questions or concerns arise. A total of 129 students were invited to participate and 127 students agreed to participate in the study. The students were able to decline the invitation without giving a reason. The participants were aged between 15 and 19, residing in three different municipalities. Participants consisted of 96 girls (75%), 25 boys (20%) and 6 who defined themselves as “other” or did not specify their gender (5%). No exclusion criteria other than declining to participate were applied; all students who wanted to participate were included.

Ethical considerations

Oral and written information about the study was provided to the students in accordance with the Helsinki Declaration [Citation42]. Information about voluntary participation and confidentiality was also provided. All participants participated willingly, and the study was approved by the national ethics committee (Dnr 2020–00808).

Data collection

Data were collected in the three municipalities from 7 February to 1 June 2022. The participants filled out a web-based open-ended survey, which was publicly available online and intended for those who had tested the chatbot. The survey consisted of background data (gender, age, school, municipality) and how long they had used the ChatPal app. The open-ended questions in the survey were: “I appreciated this with the ChatPal chatbot … ”, “This was negative with the ChatPal chatbot … ”, “I suggest the following improvements of the ChatPal chatbot … ”, “I also would like to say this … ” and a space to add anything additional they wanted to convey. By submitting the survey, the participant consented to participating in the study. A total of 127 participants filled out surveys, and of these five were considered invalid: two contained irrelevant answers, one contained duplicate answers and two were blank. The remaining 122 surveys were included in the analysis.

Analysis

The analytic method was inspired by Graneheim and Lundman’s [Citation43] description of qualitative content analysis, allowing both the manifest and latent content to be described; in other words, what the text says and what the text talks about. In the initial phase the first and second author independently coded the text, which had been broken into smaller meaning units ranging in size from a few words to several sentences. The codes described the content of each meaning unit and were compiled in a document together with the participants’ quotes. During the second phase the first and second author discussed the codes and grouped them into themes and sub-themes to clarify differences and similarities. The final codes were compiled in a document together with the participants’ quotes. In the third phase, the third and fourth authors were given the document with codes and participants’ quotes and the first draft of the themes and sub-themes. The final themes and sub-themes were decided on after a joint discussion with all authors. The authors’ pre-existing knowledge and understanding were thoughtfully considered throughout the analysis process. One male and three female researchers from human work sciences, computer sciences and health sciences contributed a broad range of professional experiences, adding diversity to the analysis process. See Table 1 for examples of meaning units, condensed meaning units with interpretations of underlying meaning, sub-themes and themes from the content analysis, presented in the way Graneheim and Lundman [Citation43] suggest to make the analysis process visible.

Table 1. Examples of meaning units, condensed meaning units with interpretation of underlying meaning, sub-themes and themes from content analysis.

Findings

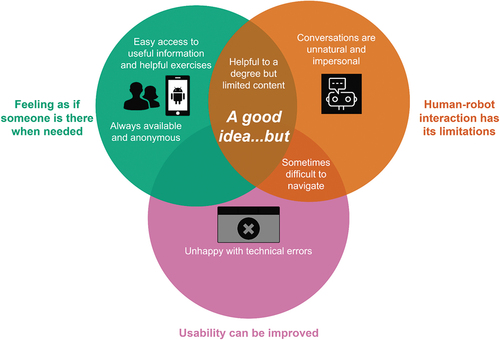

The analysis resulted in three main themes with two to three sub-themes each, and an overarching theme revealing a large variety of young people’s experiences of using a chatbot designed to promote their mental wellbeing.

A good idea … but

The overarching theme gives voice to the participating young people expressing that the chatbot is a good idea. However, they pointed out risks and challenges with the chatbot and offered ways to improve it, signalling that it is a work in progress. This is further elaborated on in the themes Feeling as if someone is there when needed, Human-robot interaction has its limitations and Usability can be improved, and in their sub-themes.

Feeling as if someone is there when needed

The participating young people described positive experiences of using the chatbot designed to promote their mental wellbeing. This is further described in the sub-themes Easy access to useful information and helpful exercises, and Always available and anonymous.

Easy access to useful information and helpful exercises

According to the young people the chatbot was a good tool to learn about how they could promote their mental wellbeing. “(The chatbot is a) good idea which will most likely help many start talking about mental health, understand it better and learn how to feel better (both) mentally and physically”. They thought that the information was useful, broadening their knowledge about mental health and ways to promote their mental wellbeing. “You receive a lot of information about health problems and exercises you can test to increase wellbeing”. They found the access to exercises particularly helpful. “Some of the exercises were calm and helped you achieve what you needed”. Some of the information was particularly helpful, for example the information about stress reduction and how to promote better sleeping habits, the breathing exercises, and the suggestions on how to do something for someone else or bonding with a friend. “Good idea with a diary so the user can document their wellbeing. Information about improving sleeping habits and breathing exercises also help the user feel better”. The participants pointed out that they found it easy to get access to important information. “A good thing about the chatbot was that it was easy to use, it was not complicated which is an advantage so that everyone can use it”. Further they compared it with time spent searching information via the internet. “It was good to get knowledge directly instead of using Google and reading a lot of unnecessary text”. The exercises offered within the chatbot were not easily found if searched for on the internet. “Good idea with exercises as much of this you may not find on Google”. They also felt empowered. “The chatbot was at its core very supportive and positive and gave me the feeling that I was in control of my life and had the power to make changes if I just learned how”. Further, they liked the feeling of chatting with a good friend, decreasing loneliness. “It can probably be good if you are very lonely and may feel uncomfortable to make contact with other people (and) I like that it was built like a friend”.

Always available and anonymous

The availability was an advantage, according to the young people. “It is available 24/7”. They considered it beneficial to be able to access the chatbot whenever they wanted to. “Convenient that there is always help available, regardless of time and circumstances”. The chatbot’s availability was compared to that of professionals with limited time. “Nice of you to keep in mind that not everyone has someone to call or talk to in the middle of the night so that now there is something for everyone”. They described the freedom of accessing the information from anywhere. “It’s good that anyone can use the chatbot regardless of where they are”. Some participants described that the availability made it possible to use the chatbot to pass time. “Would not say that it doesn’t provide much help if one is in need. It is rather sort of a pastime if you want something to do.” The possibility to hold the conversation totally anonymously added, according to the young people, an extra helpful dimension. “ChatPal is anonymous so it can feel good to write … if you have trust problems you know for sure that what you say anonymously to a robot is not being talked about later”, and “It was anonymous which felt safe”.

Human-robot interaction has its limitations

The participating young people described that human-robot interaction has its limitations. This is further described in the subthemes Conversations are unnatural and impersonal and Helpful to a degree but limited content.

Conversations are unnatural and impersonal

The young people found the conversations with the chatbot impersonal, due to pre-programmed answers and limited opportunities for input from the user. “It was difficult to feel that it was personal because it is a robot … ”. While it may not be easy for the young people to pinpoint what makes a conversation feel personal, they suggested for example “the AI … can give different answers not the same (every time)”, and “you want to be able to ask questions such as how are you, what are you doing … to get a different feeling. Right now, it just feels like writing with a robot”. They found the interaction with the chatbot limiting. One participant requested “clearer information about who should use the chatbot, for example that it is not to be used by persons with severe mental illness”. According to the participants the feeling of loneliness could increase as there was not a human contact but only a robot available, “I almost felt more lonely because you were reminded that it was a robot I was talking to”. One of the reasons it felt robotic was because “you cannot properly express how you feel and it only responds with theories and science”. In relation to this, it was also brought up by the participants that their feelings were not validated as the chatbot jumps straight to problem solving; “this kind of forced optimistic method does not always work long term”.

The participants noted that the chatbot “…assumes that the person knows what they need to do to feel better”. Additionally, instead of giving the users a “list” of solutions they suggested asking the user what problems they have and then give them appropriate suggestions. The participants felt that the chatbot “…focused on showing what the chatbot can do rather than focused on helping me” suggesting that the focus should be on the user’s needs.

Helpful to a degree but limited content

According to the young people, the contents of the chatbot were generally received positively. However, they pointed out that information and exercises were too limited and therefore repetitive. “I think the chatbot was quite limited. It felt as if you were reading the same thing over and over”. They suggested that the scripts should be made more diverse in terms of using different words, making the information more interesting. The participants requested more diverse content; while the majority did not dislike the content, they felt that it contained too much text. “The suggestions you receive are way too fact-based. When you feel bad, you don’t want to read an entire Bible. You want to get a question that you can answer yes or no to … then a question ‘Have you tried this?’ … and then (when) you answer … yes, there will be more short suggestions. Not a lot of text that you have to read through. No one is able to do that when you’re not feeling well”. They suggested including more videos, animated exercises or possibly even games. Although the participants found the chatbot helpful to a degree they described some limitations and risks with human-robot interaction. “(The chatbot) encourages isolation and phone-addiction”.

Usability can be improved

The participating young people shared disappointment with the chatbot and gave several suggestions on how the chatbot can be improved for increased usability. This is further described in the subthemes Unhappy with technical errors, and Sometimes difficult to navigate.

Unhappy with technical errors

A recurring source of dissatisfaction with the chatbot among the participants was technical errors. The most prevalent problems were issues with language selection, slow loading speeds and the chatbot simply not responding at all. “It lags quite a lot, and it repeats itself quite often, or sometimes it just doesn’t answer for fifteen minutes”. The participants voiced that the technical issues, specifically the chatbot’s speed, can be such a great obstacle that they choose not to use it. “If the chatbot would’ve been faster, perhaps more people would’ve felt like trying the chatbot, and do more exercises”. Occasionally, users experienced issues with the chatbot directing them to the wrong script when the chatbot misinterpreted a free text input.

Sometimes difficult to navigate

Some participants described the UI [User Interface] as “fun and simple”. Other participants were not as happy with the UI, “It was difficult to chat your way to the menu, too complicated to get where you wanted to go”. According to the participants, navigating the chatbot was confusing. “Difficult to understand how it is structured” and “Hard to understand (the chatbot), I didn’t find it helpful but if (navigation) had been easier the chatbot would have been better”. They described the chatbot as unnecessarily slow-paced, in terms of how much content you must go through before you find the content you are looking for. Suggestions on how to improve the chatbot included “[…] better instructions on how to navigate the chatbot or include a menu which provides access to the different scripts” and “Maybe a search function to find the information you want faster”. In case the user accidentally makes a selection they regret, the participants suggested that “a ‘back-button’ would be useful”. The navigation was at times so difficult that it would have been easier to look elsewhere for the exercises they wanted information about. “(It) took a long time to get to the exercises, so most people would probably have preferred to google for help”. They noted that they needed helpful instructions. “Difficult to understand what to do, you do not know where to press and then you just press anywhere to get past” and “You don’t have any information on how to get back to the main menu if you do something wrong”. A short explanation on how to interact with the app initially and how to access all the content was suggested by the participants.

Discussion

The results of this study revealed that according to the participating young people, a chatbot like ChatPal is a good idea. However, they pointed out risks and challenges and offered several ways to improve the chatbot, signalling that it is a work in progress. The participating young people expressed the feeling that someone is there when needed, which highlighted positive aspects regarding availability and accessibility. They also pointed out that human-robot interaction has its limitations, which included aspects such as unnatural and impersonal conversations and limited content availability. Additionally, usability can be improved, given that technical errors due to lack of internet connection and difficulty navigating the chatbot were brought up as issues. The following paragraphs discuss the pros and cons of the ChatPal chatbot as experienced by the participating young people, together with their suggestions for future development.

The findings of this study showed that the reachability of the chatbot was positively received by the young people. A chatbot can offer information and support at any time and from anywhere. This is especially fitting in the circumpolar area, which is sparsely populated, with long distances to healthcare services creating a challenge for how to reach all children and adolescents with mental health services [Citation1]. According to the participating young people in this study the chatbot can offer information and support at all hours of the day, from anywhere, provided there is an internet connection. Thus, there is a need to secure the availability of internet connections throughout the circumpolar area. According to the “Taskforce on improved connectivity in the Arctic”, such connectivity is essential for improved health care and education services [Citation44].

The findings of this study revealed that the young people expressed trust issues around not receiving the right kind of help within the chatbot. In order for youth to express their experiences it is critical to build rapport and trust between adults and youth [Citation45]. Further, the young people in this study appreciated the accessibility of the chatbot and the possibility of being anonymous when using the chatbot, as it made them feel safe. They described the importance of the user being able to access the information from the chatbot without revealing their identity, thereby avoiding being stigmatised. Unfortunately, young people often feel uncomfortable seeking help for mental health issues because they are afraid of being stigmatised [Citation46]. According to young people in previous research, increased knowledge is needed to minimise the stigma surrounding mental health [Citation5]. One positive aspect of a mental health chatbot is that it does just that, supplying information to increase knowledge about mental health issues.

The findings of this study shed light on the young people’s need to receive accurate and reliable information. The young people mentioned the benefits of the information and chatbot content being readily available, instead of having to spend time searching the internet for mental health resources. While many mental health apps and chatbots are available, many do not contain evidence-based information or haven’t been proven to be effective [Citation3]. In addition, some conversational agents have previously given out dangerous medical advice with the potential to cause serious harm if followed [Citation47]. The ChatPal chatbot, which directs users along pre-defined pathways and contains evidence-based information, was co-developed by researchers and end users, based on the needs of people living in sparsely populated areas and targeted at areas that mental health professionals endorse [Citation20]. In the findings of this study the young people speak to the potential for increasing mental health literacy through a chatbot or similar digital tools. They also noted the potential use for educational purposes. Previous research has shown that teachers feel that they do not have enough resources to help students who experience mental health related problems and student health services struggle to meet student needs [Citation1,Citation7].

In the findings of this study the young people expressed the positive feeling as if someone is there when needed. In previous research young people have expressed a need to talk about mental health and meet adults who are available and ready to help when required [Citation48,Citation49]. Although adults are not present in real life in a chatbot, the chatbot extends support to the user by offering information and tools for promoting mental health. This may, according to the findings of this study, be a step in the right direction as an alternative option when young people require additional support. This is especially fitting when resources in the circumpolar area are scarce and medical health care staff are few [Citation1,Citation9].

The findings of this study showed that there are limitations associated with human-robot interactions. The participating young people highlighted the dual nature of chatbot usage concerning loneliness. On one hand, the chatbot can serve as a conversation partner for those who lack human interaction, for example when waking up in the middle of the night and needing someone to talk to. On the other hand, some participants felt an increased sense of loneliness when interacting with a chatbot instead of a human. Further, the young people explained that while the chatbot can offer information and exercises, it lacks emotional support, an important component in human interaction. According to Moshe et al. [Citation33], the use of blended services, that is, digital interventions with human guidance, yielded superior results compared to those lacking external guidance. A human presence can potentially enhance the effectiveness of the interventions and address the issue of loneliness [Citation33]. Skaug et al. [Citation50] emphasise that loneliness is a subjective feeling of being socially isolated, for example having fewer friends than one desires or feeling less emotionally supported by others than one would like, in contrast to objective social isolation or lack of social support. With this notion one can consider that young people’s need for social support is not only experienced in sparsely populated areas but in cities as well. Feelings of loneliness due to social distance are not always connected to where you live but to possibilities to connect, access information and find social support when needed.

The findings of this study highlight another concern raised by the young people, which involved the assumption, made by the chatbot, that users can understand their own emotional needs. For individuals struggling to articulate or comprehend their problems, this could become a barrier, one that can possibly be overcome in a blended service model [Citation33]. During appointments, health professionals can suggest specific exercises or scripts as “homework” for the user as well as helping them understand their emotional needs. The preference for blended services is not only driven by user comfort but also by its positive impact on compliance and user retention [Citation33]. The young people in this study can see the usefulness of a chatbot, but at the same time they are aware that chatbots are not useful for everyone. Therefore, using a chatbot together with a health professional could enhance the user experience and ensure higher adherence to the intervention (cf. [Citation33]).

The findings of this study revealed that the chatbot had too much text and felt too impersonal according to the participating young people. The ChatPal chatbot is designed to “mimic” a text conversation between two people [Citation51], which some participants appreciated, but others requested more varied content. User satisfaction could increase with customisation, social interaction, multimodal interaction (for example, video, audio and voice recording), gamification elements and more diverse answers from the chatbot [Citation3]. The young people in this study asked for more free text responses. However, this is not currently advised for safety reasons, as the chatbot may not be able to respond appropriately to the situation without human intervention [Citation22,Citation34,Citation45].

In the findings of this study the young people requested increased usability; ideally, the user would be able to interact with the chatbot without having to read a manual or instructions. A short explanation on how to interact with the chatbot, or alternatively a menu to access specific content, was suggested by the participating young people in this study. There is potential for AI-based tools to enhance learning capabilities and decrease cognitive load if the users rate the tool positively from a usability perspective [Citation36]. While the chatbot isn’t specifically a learning tool, it does contain information and exercises and, for this reason, usability is an important aspect to keep in mind when designing, discussing and evaluating systems from the user’s point of view [Citation35,Citation36]. Usability and acceptability among professionals [Citation4] and young people [Citation46], as well as making room for cultural differences [Citation29], should also be considered crucial aspects when developing digital health tools.

Conclusions

The following is the takeaway message from listening to the young people who used the chatbot. It may be helpful when developing and using chatbots for promoting mental health, especially in the Arctic regions of the world, yet not limited to this area. The following is what the young people in Sweden experienced and, based on the findings of this study, some potential implications are offered for those outside Sweden as well:

It’s easy to access - According to the young people, a chatbot can offer information and support at any time and from anywhere. A potential implication is increasing access to information and support at any time via a chatbot. This is especially fitting in the circumpolar area, which is sparsely populated, with long distances to healthcare services creating a challenge for how to reach all young people with mental health services. However, one can feel lonely and be “far away” from support in the midst of a city, as feelings of social distance are not always connected to where you live but to possibilities to connect, access information and find social support when needed. A chatbot, such as ChatPal, can make this possible.

Being anonymous reduces the threshold - According to the young people, a chatbot user can be anonymous, lowering the threshold for the user to interact with the chatbot. Having the opportunity to access the information without revealing one’s identity seems to decrease the risk of stigma attached to mental ill health. One implication to take to heart is that a chatbot, such as ChatPal, can increase the feeling of safety if the user can be nameless.

Trusting the content - According to the young people, being able to trust the content of a mental health chatbot is important. The ChatPal chatbot only contains evidence-based information and advice that has support from professionals. This increased the young people’s trust in the chatbot, as accurate and reliable information is important to them. A potential implication is that the user of a mental health chatbot should be informed that all content has been screened by professionals and researchers.

Blended services are preferred - The young people experienced that human-robot interactions have their limitations and expressed a need for a human component alongside the use of the chatbot. Their experiences of using the ChatPal chatbot revealed a two-sided aspect, as feelings of loneliness decreased for some while others felt more lonely. A potential implication is the notion that a chatbot may be viewed as a complement to human support to reduce the risk of young people feeling alone.

Usability and user satisfaction are crucial - According to the young people, increasing customisation, social interaction opportunities, multimodal interactions like video, audio and voice recording, gamification elements and more varied answers from the chatbot could increase user satisfaction. A potential implication is that usability testing is crucial to detect and address issues that may occur; in this study, certain technical issues were only discovered once a large group used the chatbot simultaneously. This further highlights the importance of involving end users in the testing process, so these issues can be resolved prior to final service deployment. The young people in this study suggested, for example, offering clearer instructions on how to use the app soon after logging in to the chatbot, or adding a more accessible menu.

Limitations

The ChatPal chatbot was co-designed and developed for people aged 18 and above; however, in Sweden this study was conducted with 15–19-year-olds. Thus, future development should also involve young people in all aspects of design, development, and evaluation. The chatbot was developed in English and translated, and lacked some cultural adjustments, which could be the reason for some of the young people’s critique. Some sessions were held online rather than in person, due to the pandemic.

The criteria for establishing trustworthiness in qualitative research are, according to Lincoln and Guba [Citation52], credibility, dependability, confirmability and transferability. The participant group in this study was formed exclusively of students, consistent with the aim of the study. The context in which the data collection was made also ensured that all participants had tried using the app to some degree. This lends credibility to the data collected [Citation52]. Secondly, data collection and analysis can change over time; this should, however, have been minimised due to the relatively short collection period and the pre-made questionnaire that remained the same throughout the study. According to Lincoln and Guba [Citation52], presenting the context and participants contributes to trustworthiness through transferability. This means that a certain degree of generalisability for similar groups can be assumed, and potential implications are offered for those outside Sweden to apply the findings to their own work.

Authors’ contributions

The first and second authors met with the young people, introduced the ChatPal chatbot app, distributed the web-based open-ended survey, coded and analysed data and drafted the initial version of the manuscript. The third and fourth authors added information throughout the manuscript. The final themes, discussion and conclusions were decided on after a joint discussion with all authors, and all authors reviewed subsequent versions of the manuscript.

Acknowledgments

Thank you to all the participating young people. We appreciate our colleague Karolina Parding’s helpful feedback in the earlier stages of the manuscript and her commitment to the ChatPal project.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/22423982.2024.2369349.

Funding

References

- Onnela A, Hurtig T, Ebeling H. School professionals committed to student well-being. Int J Circumpolar Health. 2021;80(1):1873589. doi: 10.1080/22423982.2021.1873589

- Kessler RC, Amminger GP, Aguilar-Gaxiola S, et al. Age of onset of mental disorders: a review of recent literature. Curr Opin Psychiatry. 2007;20(4):359–364. doi: 10.1097/YCO.0b013e32816ebc8c

- Michel T, Tachtler F, Slovak P, et al. A review of youth mental health promotion apps towards their fit with youth media preferences. EAI Endorsed Trans Pervasive Health Technol. 2019;5(17):161419. doi: 10.4108/eai.13-7-2018.161419

- Piper S, Davenport TA, LaMonica H, et al. Implementing a digital health model of care in Australian youth mental health services: protocol for impact evaluation. BMC Health Serv Res. 2021;21(1):1–9. doi: 10.1186/s12913-021-06394-4

- Kostenius C, Gabrielsson S, Lindgren E. Promoting mental health in school - young people from Scotland and Sweden sharing their perspectives. Int J Ment Health Addict. 2019. doi: 10.1007/s11469-019-00202-1

- Fiorillo A, Gorwood P. The consequences of the COVID-19 pandemic on mental health and implications for clinical practice. Eur Psychiatr. 2020;63(1): e32, 1–2. doi: 10.1192/j.eurpsy.2019.3

- Nowrouzi-Kia B, Stier J, Ayyoub L, et al. The characteristics of Canadian University students’ mental health, engagement in activities and use of smartphones: a descriptive pilot study. Health Psychol Open. 2021;8(2):205510292110620. doi: 10.1177/20551029211062029

- Satre DD, Meacham MC, Asarnow LD, et al. Opportunities to integrate mobile app–based interventions into mental health and substance use disorder treatment services in the wake of COVID-19. Am J Health Promotion. 2021;35(8):1178–1183. doi: 10.1177/08901171211055314

- Abelsen B, Strasser R, Heaney D, et al. Plan, recruit, retain: a framework for local healthcare organizations to achieve a stable remote rural workforce. Hum Resour Health. 2020;18(1):63. doi: 10.1186/s12960-020-00502-x

- Flore J. Ingestible sensors, data, and pharmaceuticals: Subjectivity in the era of digital mental health. New Media Soc. 2022. doi: 10.1177/1461444820931024

- WHO. Health promotion evaluation: recommendations to policy-makers. World Health Organization. 1998 [cited 2022 Sept 14]. Available from: http://apps.who.int/iris/bitstream/handle/10665/108116/E60706.pdf?sequence=1

- WHO. Mental health: strengthening mental health promotion. World Health Organization. 2007 [cited 2022 Sept 16]. Available from: https://www.who.int/news-room/fact-sheets/detail/mental-health-strengthening-our-response

- CRC. United Nations conventions on the rights of the child. 1989 [cited 2022 Feb 16]. Available from: www.ohchr.org/en/professionalinterest/pages/crc.aspx

- Kidd W, Czerniawski G, editors. The student voice handbook. London: Emerald Publishing; 2011.

- Bergmark U, Kostenius C. Appreciative student voice model – reflecting on an appreciative inquiry research method for facilitating student voice processes. Reflective Pract. 2018;19(5):623–637. doi: 10.1080/14623943.2018.1538954

- Quest D, Upjohn D, Pool E, et al. Demystifying AI in healthcare: historical perspectives and current considerations. Physician Leadership J. 2021;8(1):59–66.

- Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health. 2017;4(2):e19. doi: 10.2196/mental.7785

- Potts C, Ennis E, Bond R, et al. Chatbots to support mental wellbeing of people living in rural areas: can user groups contribute to co-design? J Technol Behav Sci. 2021;6(4):666–666. doi: 10.1007/s41347-021-00222-6

- Zidaru T, Morrow EM, Stockley R. Ensuring patient and public involvement in the transition to AI‐assisted mental health care: a systematic scoping review and agenda for design justice. Health Expectations. 2021;24(4):1072–1124. doi: 10.1111/hex.13299

- Sweeney C, Potts C, Ennis E, et al. Can chatbots help support a person’s mental health? Perceptions and views from mental healthcare professionals and experts. ACM Trans Comput Healthcare. 2021;2(3): Article 25. 1–15. doi: 10.1145/3453175

- Timakum T, Xie Q, Song M. Analysis of E-mental health research: mapping the relationship between information technology and mental healthcare. BMC Psychiatry. 2022;22(1):1–17. doi: 10.1186/s12888-022-03713-9

- Carr S. “AI gone mental”: engagement and ethics in data-driven technology for mental health. J Ment Health. 2020;29(2):125–130. doi: 10.1080/09638237.2020.1714011

- Porche MV, Folk JB, Tolou-Shams M, et al. Researchers’ perspectives on digital mental health intervention co-design with marginalized community stakeholder youth and families. Front Psychiatry. 2022;13:867460. doi: 10.3389/fpsyt.2022.867460

- Fulmer R, Joerin A, Gentile B, et al. Using psychological artificial intelligence (tess) to relieve symptoms of depression and anxiety: randomized controlled trial. JMIR Ment Health. 2018;5(4):e64. doi: 10.2196/mental.9782

- Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR mHealth uHealth. 2018;6(11):e12106. doi: 10.2196/12106

- Greer S, Ramo D, Chang Y, et al. Use of the chatbot “vivibot” to deliver positive psychology skills and promote well-being among young people after cancer treatment: randomized controlled feasibility trial. JMIR mHealth uHealth. 2019;7(10):e15018. doi: 10.2196/15018

- Ly KH, Ly AM, Andersson G. A fully automated conversational agent for promoting mental well-being: a pilot RCT using mixed methods. Internet Interv. 2017;10(10):39–46. doi: 10.1016/j.invent.2017.10.002

- Fagerlund AJ, Kristiansen E, Simonsen RA. Experiences from using patient accessible electronic health records - a qualitative study within Sámi mental health patients in Norway. Int J Circumpolar Health. 2022;81(1):2025682. doi: 10.1080/22423982.2022.2025682

- Lavoie JG, Stoor JP, Rink E, et al. Cultural competence and safety in circumpolar countries: an analysis of discourses in healthcare. Int J Circumpolar Health. 2022;81(1):1. doi: 10.1080/22423982.2022.2055728

- Ness TM, Munkejord MC. “All I expect is that they accept that I am a sami” an analysis of experiences of healthcare encounters and expectations for future care services among older South Sami in Norway. Int J Circumpolar Health. 2022;81(1):1. doi: 10.1080/22423982.2022.2078472

- Alvarez JC, Waitz-Kudla S, Brydon C, et al. Culturally responsive scalable mental health interventions: a call to action. Transl Issues In Psychological Sci. 2022;8(3):406–415. doi: 10.1037/tps0000319

- Waumans RC, Muntingh ADT, Draisma S, et al. Barriers and facilitators for treatment-seeking in adults with a depressive or anxiety disorder in a Western-European health care setting: a qualitative study. BMC Psychiatry. 2022;22(1). doi: 10.1186/s12888-022-03806-5

- Moshe I, Terhorst Y, Philippi P, et al. Digital interventions for the treatment of depression: a meta-analytic review. Psychol Bull. 2021;147(8):749. doi: 10.1037/bul0000334

- Abbass HA. Social integration of artificial intelligence: functions, automation allocation logic and human-autonomy trust. Cog Comput. 2019;11(2):159–171. doi: 10.1007/s12559-018-9619-0

- Shackel B. Usability - context, framework, definition, design and evaluation. Interact Comput. 2009;21(5–6):339–346. doi: 10.1016/j.intcom.2009.04.007

- Koć-Januchta MM, Schönborn JK, Roehrig C, et al. “Connecting concepts helps put main ideas together”: cognitive load and usability in learning biology with an AI-enriched textbook. Int J Educ Technol High Educ. 2022;19(1):1–22. doi: 10.1186/s41239-021-00317-3

- Häggström Westberg K. Exploring mental health and potential health assets in young people. Doctoral thesis, Halmstad University Dissertations no. 81. Halmstad University, Sweden; 2021.

- Kostenius C, Nyström L. “When I feel well all over, I study and learn better” - experiences of good conditions for health and learning in schools in the Arctic region of Sweden. Int J Circumpolar Health. 2020;79(1):1788339. doi: 10.1080/22423982.2020.1788339

- Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–357. Available from: https://cdn.elsevier.com/promis_misc/ISSM_COREQ_Checklist.pdf

- Seligman M. Flourish: a new understanding of happiness and wellbeing and how to achieve them. London: Free Press; 2011.

- Nygren K, Janlert U, Nygren L. What happens with local survey findings? A study of how adolescent school surveys are disseminated and utilized in Swedish schools. Scand J Educ Res. 2013;57(5):526–543. doi: 10.1080/00313831.2012.696209

- World Medical Association. Declaration of Helsinki – ethical principles for medical research involving human subjects. 2008. https://www.wma.net/wp-content/uploads/2016/11/DoH-Oct2008.pdf

- Graneheim U, Lundman B. Qualitative content analysis in nursing research: concepts, procedures and measures to achieve trustworthiness. Nurse Educ Today. 2004;24(2):105–112. doi: 10.1016/j.nedt.2003.10.001

- Arctic Council. The arctic council task force on improved connectivity in the arctic. Improving connectivity in the arctic. 2019. Available from: https://oaarchive.arctic-council.org/items/12ef9093-26a2-4f7c-bb5d-f393859538ce

- Grové C. Co-developing a mental health and wellbeing chatbot with and for young people. Front Psychiatry. 2021;11:606041. doi: 10.3389/fpsyt.2020.606041

- Warne M, Snyder K, Gillander Gådin K. Participation and support–associations with Swedish pupils’ positive health. Int J Circumpolar Health. 2017;76(1):1373579. doi: 10.1080/22423982.2017.1373579

- Bickmore TW, Trinh H, Olafsson S, et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google Assistant. J Med Internet Res. 2018;20(9):e11510. doi: 10.2196/11510

- Warne M, Snyder K, Gillander Gådin K. Promoting an equal and healthy environment: Swedish students’ views of daily life at school. Qual Health Res. 2013;23(10):1354–1368. doi: 10.1177/1049732313505914

- Nordic Welfare Center. Adolescent health in the Nordic region. Stockholm, Sweden: Health promotion in school settings, Nordic welfare centre; 2019.

- Skaug E, Czajkowski N, Waaktaar T, et al. The longitudinal relationship between life events and loneliness in adolescence: a twin study. Dev Psychol. 2024;60(5):966–977. doi: 10.1037/dev0001692

- Potts C, Lindström F, Bond R, et al. A multilingual digital mental health and well-being chatbot (ChatPal): pre-post multicenter intervention study. J Med Internet Res. 2023;25:e43051. doi: 10.2196/43051

- Lincoln YS, Guba EG. Naturalistic inquiry. Beverly Hills, CA: Sage Publications, Inc.; 1985.

Appendix 1.

COREQ (COnsolidated criteria for REporting Qualitative research) Checklist

Developed from: Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care. 2007. Volume 19, Number 6: pp. 349 – 357.

Appendix 2.

Screenshots of ChatPal chatbot greeting, main menu and exercises