?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

This study aimed to compare and assess different Geographic Object-Based Image Analysis (GEOBIA) and machine learning algorithms using unmanned aerial vehicles (UAVs) multispectral imagery. Two study sites were provided, a bergamot and an onion crop located in Calabria (Italy). The Large-Scale Mean-Shift (LSMS), integrated into the Orfeo ToolBox (OTB) suite, the Shepherd algorithm implemented in the Python Remote Sensing and Geographical Information Systems software Library (RSGISLib), and the Multi-Resolution Segmentation (MRS) algorithm implemented in eCognition, were tested. Four classification algorithms were assessed: K-Nearest Neighbour (KNN), Support Vector Machines (SVM), Random Forests (RF), and Normal Bayes (NB). The obtained segmentations were compared using geometric and non-geometric indices, while the classification results were compared in terms of overall, user’s and producer’s accuracy, and multi-class F-scoreM. The statistical significance of the classification accuracy outputs was assessed using McNemar’s test. The SVM and RF resulted as the most stable classifiers and less influenced by the software used and the scene’s characteristics, with OA values never lower than 81.0% and 91.20%. The NB algorithm obtained the highest OA in the Orchard-study site, using OTB and eCognition. NB performed in Scikit-learn results in the lower (73.80%). RF and SVM obtained an OA>90% in the Crop-study site.

Introduction

Over the last decade, the increasing use of Unmanned Aerial Vehicle (UAVs) platforms has offered a new solution for crop management and monitoring in the framework of Precision Agriculture (PA), as it enables very-high-resolution (VHR) images (Gaston et al., Citation2018; Hunt & Daughtry, Citation2018; Maes & Steppe, Citation2019; Olanrewaju et al., Citation2019; Pádua et al., Citation2017; Radoglou-Grammatikis et al., Citation2020; Tsouros et al., Citation2019). The UAVs allow obtaining data with high temporal frequency, primarily when monitoring small productive areas (Primicerio et al., Citation2012; Zhang & Kovacs, Citation2012). Besides, UAVs have low maintenance costs (Shakhatreh et al., Citation2018), are easy to manipulate (Sheng et al., Citation2010), and can use several sensors at the same time (Maes & Steppe, Citation2019).

To fully benefit from the advantages of using the UAV for data collection, it is crucial to know how to apply effective and automatic image analysis methods with a large computing capacity to obtain maps useful for crops monitoring (Brocks & Bareth, Citation2018; Jiménez-Brenes et al., Citation2017; Schirrmann et al., Citation2016). The availability of UAV’s images created new possibilities for vegetation classification and monitoring with very high spatial detail levels (De Luca et al., Citation2019; Modica et al., Citation2020; Teodoro & Araujo, Citation2016). However, this also posed a challenge for RS because of the more significant intraclass spectral variability (Aplin, Citation2006; Torres-Sánchez et al., Citation2015a) since a single pixel is generally smaller than the object on the earth’s surface that must be detected and cannot acquire all its characteristics (Einzmann et al., Citation2017; Teodoro & Araujo, Citation2016; Torres-Sánchez et al., Citation2015a). The Geographic Object-Based Image Analysis (GEOBIA) (Blaschke, Citation2010; Blaschke et al., Citation2014) approach addresses these issues. GEOBIA is a classification method that divides RS images into significant image objects and evaluates their characteristics on a spatial, spectral, and temporal scale (Hay & Castilla, Citation2006; Solano et al., Citation2019). GEOBIA generates image objects using different segmentation methods rather than analyze and classify individual pixels (Hofmann et al., Citation2011).

In the last decade, machine learning algorithms have attracted considerable attention in RS research (Crabbe et al., Citation2020; Liakos et al., Citation2018; Noi & Kappas, Citation2018) by offering new opportunities for agricultural mapping (M. Li et al., Citation2016; Liakos et al., Citation2018; Ma et al., Citation2017b; Perez-Ortiz et al., Citation2017; Rehman et al., Citation2019). Machine learning algorithms demonstrated effectiveness in classifying weeds (De Castro et al., Citation2018; Pérez-Ortiz et al., Citation2016), disease detection (Abdulridha et al., Citation2019a, Citation2019b; Sandino et al., Citation2018), and land cover (LC) mapping and assessment (M. Li et al., Citation2016; De Luca et al., Citation2019; Ma et al., Citation2017b; Noi & Kappas, Citation2018; Praticò et al., Citation2021; Qian et al., Citation2015). Among the object-based classification algorithms, Random Forests (RF) and Support Vector Machines (SVM) were considered, as reported in M. Li et al. (Citation2016) and Ma et al., Citation2017a), the most suitable supervised classifiers for GEOBIA, being their classification performances very satisfying (Mountrakis et al., Citation2011). However, in recent years, the K-Nearest Neighbour (KNN) algorithm has been widely used for object-based land classification (Crabbe et al., Citation2020; Griffith & Hay, Citation2018; K. Huang et al., Citation2016; Maxwell et al., Citation2018; Noi & Kappas, Citation2018; W. Sun et al., Citation2019). The Normal Bayes (NB) algorithm differs from the three already mentioned because it does not need any parameter setting, which, besides being time-consuming, could be subjective (Qian et al., Citation2015). Several software, both free/open-source and commercials, are available to users to implement GEOBIA algorithms (Teodoro & Araujo, Citation2016).

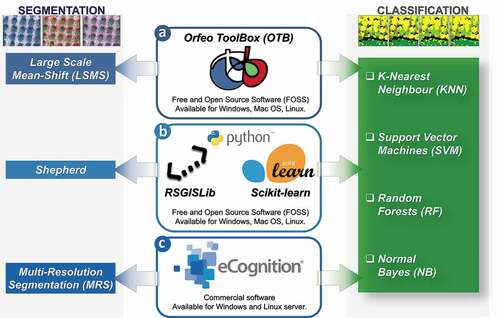

The main objective of the proposed paper is to compare the performance of three different GEOBIA approaches based on four machine learning algorithms (KNN, SVM, RF, and NB) in terms of model performance, accuracy, and requested processing time and to assess their applicability in the PA’s framework. A complete supervised classification of multispectral UAV imagery was implemented in three different software suites for each of the four aforementioned algorithms (). The first one, Orfeo ToolBox (OTB www.orfeo-toolbox.org) (Grizonnet et al., Citation2018), is a comprehensive free and open source geospatial suite. Two python libraries, Remote Sensing and Geographical Information Systems software Library (RGISLib) (Bunting et al., Citation2014, – https://www.rsgislib.org) and Scikit-learn (Pedregosa et al., Citation2011; Varoquaux et al., Citation2015, – https://scikit-learn.org), composing the second software solution we tested and that are freely implementable in different software environments. The third tested software is eCognition, a well-known commercial solution (Trimble Inc, Citation2020).

Figure 1. General scheme detailing the three different Geographic Object-Based Image Analysis (GEOBIA) segmentation and classification approaches as implemented in the three different software suites: a) Orfeo ToolBox (OTB); b) Remote Sensing and Geographical Information Systems software Library (RGISLib) and Scikit-learn Python libraries; and c) eCognition

Moreover, differently from previous research works dealing with the comparison of GEOBIA approaches (De Luca et al., Citation2019; M. Li et al., Citation2016b; Qian et al., Citation2015; Teodoro & Araujo, Citation2016; Vilar et al., Citation2020), we implemented the segmentation step using three different algorithms. The Large Scale Mean-Shift (LSMS) (Michel et al., Citation2015) in OTB, the Shepherd algorithm in the Image Segmentation Module of the RSGISLib (Shepherd et al., Citation2019), and the Multi-Resolution Segmentation (MRS) algorithm (Baatz & Schape, Citation2000) in eCognition.

A workflow was carried out on two different study sites characterized by two different crops, both socioeconomically relevant for the investigated area. The first study site (Orchard-study site) is a citrus orchard of bergamot (Citrus bergamia, Risso), labeled with the protected designation of origin (PDO) label Bergamotto di Reggio Calabria – olio essenziale (“Bergamot of Reggio Calabria – essential oil”). The second study site (Crop-study site) is an onion crop field (Allium cepa L.), labeled with the protected geographical indication label “Cipolla Rossa di Tropea IGP” (“Tropea’s Red Onion PGI”).

To our best knowledge, this research is the first that compares four machine learning algorithms implemented in three different software environments and therefore using three different segmentation algorithms. The aim was to implement a rapid and reliable agricultural mapping, easily replicable in different operational scenarios with minor changes.

This paper is structured as follows. In Section 2, in the first subsection (2.1), a description of the two investigated study sites was provided. The following subsection (2.2) shows the details about data acquisition, the sensor used, and flight data processing. The used software environments were briefly described in addition to the pre-processing, segmentation, and classification steps. Section 3 provides the results of segmentation and classification processes taking into consideration the obtained accuracy. Finally, sections 4 and 5 deal with discussions and conclusions, respectively.

Materials and methods

Study sites

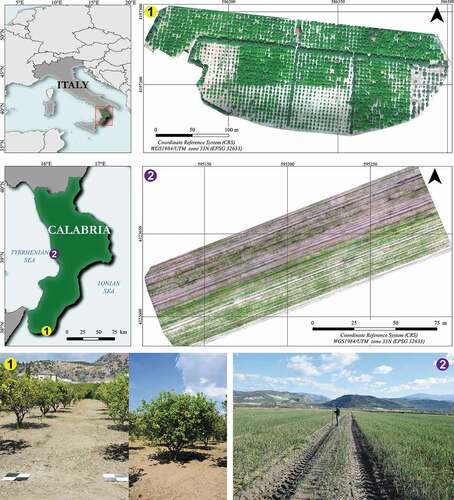

The Orchard-study site () is a citrus orchard (bergamot, Citrus bergamia) located in Palizzi (Province of Reggio Calabria, Calabria, Italy) (37°55ʹ06‖N, 15°58ʹ54‖E, 4 m a.s.l). Inside the orchard, there are long windbreak barriers made up of 20-year-old olive trees. The area where the orchard lies is 5.1 ha. The citrus orchard includes trees aged 5 to 25 years, which height ranges between 1.5 to 4 m, while canopies occupy a mean area of 6 m2. The windbreak barriers include trees with a height similar to neighboring citrus trees (age 25 years).

Figure 2. Geographical location of the two study sites, Orchard-study site, and Crop-study site. On the bottom, we provided two representative photos of them

Crop-study site () is an onion crop located in Campora S. Giovanni, in the municipality of Amantea (Cosenza, Italy, 37°55ʹ06” N, 15°58ʹ 54” E, 4 m a.s.l.). The farm is part of a consortium that includes other producers whose total cultivated area under the onion crop is more than 500 ha. The onions produced are a relevant typical product for this area’s economic and rural development (Messina et al., Citation2020a, Citation2020b). The study area examined is a field of 1 ha. The field is crossed by four paths of 2.5 m each, used for agricultural vehicles’ passage. Inside the field, the presence of weeds whose manual removal is carried out periodically is visible. Typically, the onions transplant took place in early September, while the harvest occurred from mid to the end of January.

Sensors and data acquisition

Imagery for the two study sites was acquired using the Tetracam µ-MCA06 snap (Tetracam Inc. – Chatsworth, NJ, USA) mounted on a multirotor UAV (Multirotor G4 Surveying Robot – Service Drone GmbH). The µ-MCA06 snap is a six sensors narrow-band multispectral camera equipped with its own global navigation satellite system (GNSS). Each sensor shoots simultaneously, and all images are then synchronized via the master channel ().

Table 1. Tetracam µ-MCA06 snap (Global shutter) sensor characteristics bands specification (wavelength and bandwidth)

Due to the different characteristics of the analyzed crops in the two study sites (an orchard and an annual crop), we carried out the surveys with different flight altitudes, 80 and 30 m a.g.l. for Orchard and Crop study sites, respectively. Consequently, the GSD was 4.1 cm for the Orchard-study site and 1.5 cm for the Crop-study site (). All flights were operated under constant scene illumination and cloud-free conditions.

Table 2. Flights and UAV datasets characteristics

All the acquired images were first extracted and then aligned, stacked, and radiometrically calibrated using Pix4Dmapper Pro (version 4.3 – Pix4D SA, Switzerland). The radiometric calibration was provided using three targets (50 cm x 50 cm polypropylene panes in black, white, and grey, respectively) surveyed using the field spectroradiometer Apogee M100. After this process, a reflectance orthomosaic was produced for each of the six bands of Tetracam µ-MCA06 snap and then stacked into a single multiband orthomosaic (B, G, R, RE, NIR1, and NIR2). Moreover, a Digital Surface Model (DSM) was created after the photogrammetric process. The resolution of the DSM of the Orchard-study site is 4.1 cm/pixel, while that of the Crop-study site is 1.5 cm/pixel. For more details about the survey and the photogrammetric workflow, including the radiometric calibration process, please refer to Modica et al. (Citation2020).

Software and libraries for the geographic object-based image analysis (GEOBIA)

OTB is an open-source toolkit developed by the French centre national d’études spatiales (CNES). It provides several applications for image segmentation and supervised or unsupervised classifiers (https://www.orfeo-toolbox.org/CookBook, last access 15 April 2021). In this research, we used the OTB version 7.0.0.

Remote Sensing and Geographical Information Systems software Library (RSGISLib) is an open-source library implemented by Bunting and Clewley in 2008 (Bunting et al., Citation2014). RSGISLib exploits Python language integrating a wide variety of image processing, segmentation, and object-based classification algorithms. RSGISLib uses several libraries, including GDAL, to read and write raster and vector formats (Clewley et al., Citation2014). In this research, RSGISLib library v.4.0.6 was used in the image segmentation step. Scikit-learn (Pedregosa et al., Citation2011) is another open-source package that exploits Python language integrating a wide variety of supervised and unsupervised machine learning algorithms. The Scikit-learn package covers four main topics related to machine learning: data transformation, supervised and unsupervised learning, and model evaluation (Hao & Ho, Citation2019; Pedregosa et al., Citation2011). We implemented the classification algorithms in Scikit-learn v.0.22.1 modules.

In addition, the commercial eCognition version 9.5.1 software (Trimble Inc, Citation2020) was used. This software allows a smooth implementation of many decision-making rules (made available by the software or customizable and implementable by the user) based on distinctive features derived from objects (Drǎguţ et al., Citation2014).

All packages were tested in a workstation with the following characteristics: CPU Intel Xeon E5-2697 v2, 64 GB RAM DDR3 1866 MHz, GPU NVIDIA K5000. The OTB and eCognition algorithms and the Scikit-learn modules ran under Windows 10 Pro operating system (OS) while RGISLib under Ubuntu 19.10 (Linux) OS. This was necessary since RGISLib was created and optimized for the Linux OS environment (Clewley et al., Citation2014; Shepherd et al., Citation2019).

Pre-processing and datasets

Several studies proved that the vegetation indices (VIs) enhanced spectral differences between vegetation/non-vegetation objects in UAV images (Gašparović et al., Citation2020; López-Granados et al., Citation2016; Solano et al., Citation2019; Torres-Sánchez et al., Citation2014; Villoslada et al., Citation2020). Even in our previous work (De Luca et al., Citation2019), the use of a VI, such as the normalized difference vegetation index (NDVI) (EquationEq. 1(Eq.1)

(Eq.1) ) (Rouse et al., Citation1973), improved the classification results. Therefore, before starting the GEOBIA workflow, a green normalized difference vegetation index (GNDVI) (EquationEq. 2

(Eq. 2)

(Eq. 2) ) (Gitelson et al., Citation1996) was calculated for both datasets to increase the spectral information.

We used this index, considering its higher sensitivity to the chlorophyll concentration than NDVI (Candiago et al., Citation2015; Gitelson & Merzlyak, Citation1998) and its high correlation also with other VIs such as the normalized difference red edge index (NDRE), the soil adjusted vegetation index (SAVI), and the green-red normalized difference vegetation index (GRNDVI), as in the case of our two analyzed datasets (Supplementary material, Figure S1).

For all the six bands of the orthomosaic and both GNDVI and DSM layers, a linear band stretching (rescale) in a range of 8 bits [0, 255] was performed. This operation aimed to equalize the range of values of each input variable, reducing the influence of differences in their magnitudes and the effect of potential outliers (Angelov & Gu, Citation2019; Immitzer et al., Citation2016). Subsequently, a layer stacking process was performed by merging the original six bands with GNDVI and DSM. Finally, each of the two datasets resulted in an eight-band orthomosaic: blue (B), green (G), red (R), red edge (RE), near-infrared 1 (NIR1), near-infrared 2 (NIR2), GNDVI and DSM. All these bands were used for all segmentation and classification processes.

Image segmentation algorithms

In the GEOBIA approach, image segmentation is the first process that fragments a digital image into a set of spatially adjacent segments composed of a group of pixels presenting homogeneous features (radiometric, geometric, etc.). Each object on the segmented image should represent a real object on the earth’s surface (Blaschke et al., Citation2014). In this study, three different segmentation approaches, each corresponding to the three different software environments, were implemented for each of the two datasets. shows the values concerning each algorithm’s main input parameters set for each and based on previous experiences (De Luca et al., Citation2019; Modica et al., Citation2020), a thorough exploration of the literature, and a trial-and-error approach.

Table 3. Main segmentation parameters implemented in each segmentation algorithm (Large-scale mean shift, LSMS; Shepherdalgorithm.; multi-resolution segmentation, MRS) and for both study sites

Large-scale mean-shift (LSMS)

LSMS is a segmentation workflow introduced by Fukunaga and Hostetler (Citation1975) and subsequently developed by Michel et al. (Citation2015). It consists of a series of dedicated and optimized algorithms that perform a tile-wise segmentation of extensive VHR imagery (www.orfeo-toolbox.org/CookBook; Michel et al., Citation2015). The LSMS workflow is composed of four successive steps that produce a vector file with artifact-free polygons. Each polygon corresponds to segmented objects containing the radiometric mean and variance of each band. The provided workflow can be synthesized as follow. The first step (1) performs an image smoothing using the LSMS-Smoothing application. In the second step (2), the LSMS-Segmentation algorithm segments the image grouping of all neighboring pixels based on a range distance (range radius) and a spatial distance (spatial radius). The range radius is defined as the threshold of the spectral signature Euclidean distance among features (expressed in radiometry units), while the spatial radius defines the maximum distance to build the neighborhood from averaging the analyzed pixels. Several trial-and-error tests of different range radius values were performed until the best segmentation (visually assessed) was obtained. In the third step (3), LSMS-Merging, the small objects are merged with the nearest radiometrically more similar ones. The last step (4), LSMS-Vectorization, concerns the vectorization of the merged image objects. In the final output, the mean and standard deviation of spectral features are provided for each polygon. The set parameters are shown in .

Shepherd algorithm

Image segmentation on Python was executed using the Shepherd algorithm (Shepherd et al., Citation2019) implemented in the RSGISLib v4.0.6. This algorithm is based on an iterative elimination method, consisting of four steps: (1) A K-means clustering algorithm (MacQueen, Citation1967) is used for a first seeding step that identifies the unique spectral signatures within the image, assigning pixels to the associated cluster center (Z. Wang et al., Citation2010); (2) a clumping process creates unique regions; (3) iterative removal of that region below the minimum mapping unit threshold and merging it to the neighboring clump spectrally closest (assessed through the Euclidean distance). Finally, (4) the obtained clumps are relabeled to be sequentially numbered (Shepherd et al., Citation2019). Also, in this case, the following required parameters were set with a trial-and-error approach: the number of clusters requested by the K-Means algorithm, which defines the spectral separation of the segments (Shepherd et al., Citation2019); the minimum segment size, which represents the minimum mapping unit threshold for the elimination; and the number of maximum iterations. The segmented image was finally vectorized using the RSGISLib Vector Utils module. Feature extraction was performed with the RSGISLib Image Calculations module to obtain the mean and the standard deviation spectral features for each segment. The set parameters are shown in .

Multi-resolution segmentation (MRS)

The MRS algorithm (Baatz & Schape, Citation2000) implemented in eCognition is a bottom-up region-merging strategy starting with one-pixel objects (Aguilar et al., Citation2016). As the first step, MRS identifies single objects of a pixel’s size and then merges them with neighbor objects following a criterion of relative homogeneity. Colour homogeneity is assessed by the standard deviation of the spectral values. The deviation from a compact (or smooth) shape allows measuring the shape homogeneity. The shape parameter deals with the geometric form’s influence on the segmentation compared to the color, and its value ranges between 0 and 0.9. In contrast, the compactness parameter takes into account the combined effect of shape and smoothness. Concerning the scale parameter, several scholars showed its importance in determining the size and dimension of objects generated by the segmentation (De Luca et al., Citation2019; Ma et al., Citation2017b, Citation2015; Modica et al., Citation2020; Witharana & Civco, Citation2014; Yang et al., Citation2019). The higher the scale parameter values, the larger the obtained image objects, while, conversely, its low values resulting in smaller image objects. The set parameters are shown in .

Image classification algorithms

For each of the two study sites, the following four classification algorithms were implemented and assessed, KNN, SVM, RF, and NB. As previously described (section 2.1), we choose these two study sites with significantly diverse cultivations (i.e., an annual crop and a tree orchard) to compare the performance and the obtained results of the three different software environments and four different supervised classification algorithms. Due to these different scenarios, the classification step was implemented based on five LC classes in the Orchard-study site (i.e., Bergamot, Olive, Grass, Bare Soil, Shadows) and three LC classes in the Crop-study site (i.e., Onion, Weeds, Bare soil).

After the segmentation process, the choice of trainers is one of the most critical steps affecting the final quality of the classification results (Ma et al., Citation2015). Since the segmentation step was carried out autonomously in each of the three software environments, i.e., obtaining a different number and shape of segments, we selected the training objects from a shared set of points to be as objective as possible. The existing literature on training sample size and its effect on classification accuracy offers a varying range of answers to this problem, with variable results depending on several factors, such as sensor, algorithm, analyzed landscape, etc. (Ma et al., Citation2015; Maxwell et al., Citation2018; Millard & Richardson, Citation2015; Noi & Kappas, Citation2018; Qian et al., Citation2015; Ramezan et al., Citation2021). In practice, the number of object-based training samples is usually determined based on the user experience (Qian et al., Citation2015). However, these studies commonly observed that different algorithms become insensitive to the increase of sample size: e.g., in Qian et al. (Citation2015), this happened (for all the KNN, NB, SVM, and DT algorithms) when the size of the samples is more than 125 per class. Therefore, to train each of the four supervised algorithms, two samples of 800 and 500 points for Orchard and Crop study sites, respectively, were randomly sampled on the QGIS environment. The total number of random points was chosen to potentially have about 160 training samples per class for both study sites. Considering the high number of sample points, the random sample approach allows that the number of trainers per class to be almost proportional to their abundance and quantitative distribution on the scene, thus avoiding that individual classes become over-represented (Maxwell et al., Citation2018; Millard & Richardson, Citation2015; Ma et al., Citation2015). Additionally, to accept the resulting distribution, we fixed as a threshold that each class must be represented by at least 5% of the total number of trainers (40 for the Orchard-study site and 25 for the Crop-study site). We used this threshold based on the work of Noi and Kappas (Citation2018) that used this percentage as a minimum training size in their tests achieving good accuracy results with SVM, RF and KNN, while the tests by Qian et al. (Citation2015) showed that good accuracy levels can still be reached with a number of 25 trainers per class using SVM, KNN, NB and Decision Tree algorithms.

Subsequently, these points were superimposed on each of the three obtained segmentation output files to select and extract by position the polygons that include them. These selected polygons were then labeled with the corresponding LC class by an on-screen interpretation done by the same operator. The visual interpretation was supported by a good knowledge of the two study sites by the operator. All the pixels that composed a reference polygon belonged to a specific LC for all three segmentation results. Each LC class’s spectral signatures were characterized based on the respective training polygons for each of the three software environments (Supplementary material, Figure S2). Moreover, for each training polygon, we extracted the features’ objects (mean and standard deviation). The KNN is a non-parametric supervised classifier algorithm (Aguilar et al., Citation2016) widely used in GEOBIA applications (Crabbe et al., Citation2020; Georganos et al., Citation2018; Griffith & Hay, Citation2018; K. Huang et al., Citation2016). This algorithm assigns a class to an object based on the class to which the neighboring objects belong in an N-dimensional feature space. The value of the parameter k defines the number of neighbors to be analyzed in the feature space, the only one to be set in the KNN that determines the classifier’s performance (M. Li et al., Citation2016; Qian et al., Citation2015). Higher values of k reduce the effect of noise in classification, although they lead to less distinction between the different classes’ boundaries, consequently a higher generalization (Maxwell et al., Citation2018; Trimble Inc, Citation2020).

The SVM is a supervised non-parametric classifier algorithm based on kernel functions belonging to the statistical learning theory algorithms (Cortes & Vapnik, Citation1995; Vapnik, Citation1998), which allows performing a multiclass classification. The SVM learns the boundary between training samples belonging to different classes to train the algorithm, projecting them into a multidimensional space and finding a hyperplane, or a set of hyperplanes that maximize the separation of the training dataset between the predefined number of classes (C. Huang et al., Citation2002; Mountrakis et al., Citation2011). In this study, we implemented a linear kernel-type function with a C model-type. The C parameter deals with misclassification size allowed for non-separable training data and regulates the training data’s rigidity (Cortes & Vapnik, Citation1995; Vapnik, Citation1998).

The RF is a machine learning algorithm proposed by Breiman (Citation2001) and improved by Cutler et al. (Citation2007). RF is a decisional tree’s method that randomly creates a forest comprising many decision trees, each independent from the other (M. Li et al., Citation2016). All the trees are trained with the same features but on different training sets derived from the original one, utilizing a bootstrap aggregation, namely bagging. The algorithm generates an internal and impartial estimation of the generalization error using “out-of-bag” (OOB) samples, which include observations that are in the original data and that do not recur in the bootstrap sample (Cutler et al., Citation2007). The main parameters that have to be set to determine the process performance are the number of trees, the maximum tree depth, and the minimum number of samples per node (Belgiu & Drăguţ, Citation2016; Breiman, Citation2001).

The NB is a parametric supervised classifier based on Bayes’ probability theorem (Liakos et al., Citation2018; Qian et al., Citation2015; Rehman et al., Citation2019) and uses a training set to calculate the probability that an object belongs to a given category or not. The algorithm estimates mean vectors and covariance matrices for every class using them for prediction. This classifier assumes that the feature data distribution function is a Gaussian mixture, one component per class (Bradski & Kaehler, Citation2008), and does not require parameter setting (Qian et al., Citation2015).

To ensure an objective comparison of the obtained classifications and the software performance, we set the same parameter values for the three classifiers (KNN, SVM, and RF) that require parameter setting, in the three software environments (OTB, Scikit-learn, and eCognition) and for both the study-sites. We tested several parameter values concerning the RF and SVM parameters based on those recommended in another previous work carried out by the same research group (De Luca et al., Citation2019). Moreover, in selecting the more effective values of the algorithms’ parameters, we also have taken into account several research studies that dealt with this issue (M. Li et al., Citation2016; Noi & Kappas, Citation2018; Qian et al., Citation2015; Rodriguez-galiano et al., Citation2012; H. Sun et al., Citation2018). In , the parameter values set for each of the three classification algorithms were reported.

Table 4. Main parameter values set in all the three software environments for each of the three implemented classification algorithms that require parameter setting, K-Nearest Neighbour (KNN), Support Vector Machines (SVM) and Random Forest (RF)

Accuracy assessment

Segmentation accuracy assessment

In order to statistically analyze the obtained segmentations, we calculated several descriptive statistics: number, area, perimeter, etc., of the segments. Additionally, we calculated the Mean Shape Index (MSI) (Equationeq. 3)(eq. 3)

(eq. 3) by the ratio between the sum of the object perimeter (m) and the square root of the object area (m2), divided by the total number of objects, as follows:

Where N is the total number of objects, pij is the perimeter, and aij the jth object area, while π is inserted as a constant value to adjust to a circular form. MSI assumes values of 1 for circular objects and increases without limits with the increasing complexity and irregularity of the analyzed objects.

Several object-based validation methods have been developed to evaluate the segmentation results (Clinton et al., Citation2010; Costa et al., Citation2018; Möller et al., Citation2013; Su & Zhang, Citation2017; Su Ye et al., Citation2018; Zhang et al., Citation2015). These are based on different objective metrics, criteria, and indices, describing and quantitatively evaluating various aspects of the obtained results. They are mainly divided into supervised and unsupervised approaches, in which they differ because the former requires a set of reference data on which to base the comparative calculations (Belgiu & Drǎguţ, Citation2014; Costa et al., Citation2018; Su & Zhang, Citation2017; Ye et al., Citation2018). Many studies evaluate the quality of segments by considering a criterion based on either geometric or non-geometric characteristics (Costa et al., Citation2018). Among the latter, the spectral separability through the Bhattacharyya Distance (BD) (Bhattacharyya, Citation1943) is one of the most used (Costa et al., Citation2018; D. Li et al., Citation2015; Radoux & Defourny, Citation2008; L. Wang et al., Citation2004; Xun & Wang, Citation2015). It is assumed that a good segmentation corresponds to a relatively high spectral separability between two different classes and a minimum within the polygons of the same LC class, as a function of the LC classes they represent (Costa et al., Citation2018).

Our study used both geometric and non-geometric metrics to have a complete characterization of the errors obtained.

In the geometric approach, each dataset’s segmentation results were evaluated by determining the geometrical similarity level with the correspondent reference object manually digitalized, using supervised geometric metrics. The comparison was carried out by overlapping and associating each reference polygon (divided by LC classes) with the respective segmented polygons using the matching criteria proposed in Clinton et al. (Citation2010), assuming:

X = {xi: i = 1 … n} the set of n reference objects;

Y = {yj: j = 1 … m} the set of m objects deriving from the segmentation process;

area (xi ∩ yj) the area of geo-intersection of reference object xi and segmented object yi;

Ŷi a subset of Y, representing all yj that intersect a reference object xi such that Ŷi = {yj: area(xi ∩ yj) ≠ 0};

a subset of Ŷi such that:

- Yai = {yj: centroid(xi) in yj}

- Ybi = {yj: centroid(yj) in xi}

- Yci = {yj: area(xi ∩ yj)/area(yi) > 0.5}

- Ydi = {yj: area(xi ∩ yj)/area(xi) > 0.5}

so, .

The validation metrics were calculated on the set (

).

The association criteria and subsequent geometric and non-geometric metrics were applied to the three representative square sample areas (20 m x 20 m in the Orchard-study site; 2 m x 2 m in the Crop-study site) randomly distributed over each field (). These sample areas were sized, taking into account the different GSD (1.5 cm in the Orchard-study site and 4.1 cm in the Crop-study site) and the dimensional difference of the class objects of the two study sites. The geometric metrics used to validate the segmentation were the OverSegmentation (OSeg; Equationeq. 4)(eq. 4)

(eq. 4) and the UnderSegmentation (USeg; Equationeq. 5)

(eq. 5)

(eq. 5) . Moreover, these two metrics are combined using a third index, the D index (Equationeq. 6

(eq. 6)

(eq. 6) ), based on their root-mean-square (Clinton et al., Citation2010):

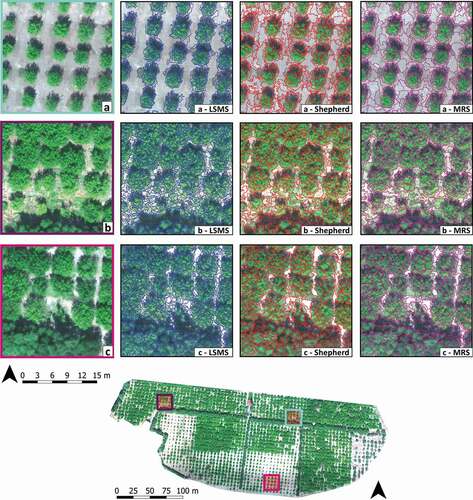

Figure 3. The figure shows a comparison of the three obtained segmentations in the Orchard-study site showed in three representative sample areas. According to the three sample areas (a, b, and c), the figure is organized in three rows and in four columns. In the first column, the RGB images are provided while, in the second column, the Large-Scale Mean-Shift (LSMS) segmentation (in blue), in the third column the Shepherd algorithm segmentation (in red), and in the fourth column, the Multi-Resolution Segmentation (MRS) (in purple)

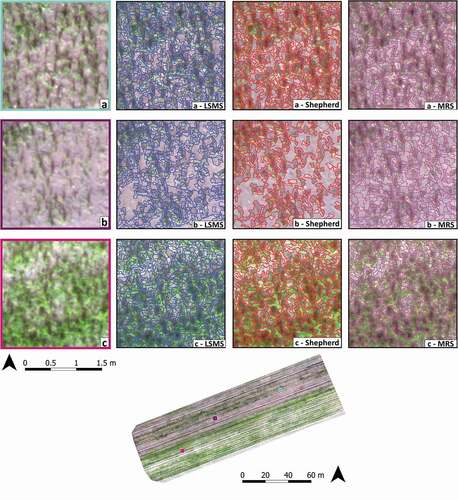

Figure 4. The figure shows a comparison of the three obtained segmentations in the Crop-study site showed in three representative sample areas. According to the three sample areas (a, b, and c), the figure is organized in three rows and in four columns. In the first column, the RGB images are provided while, in the second column, the Large-Scale Mean-Shift (LSMS) segmentation (in blue), in the third column the Shepherd algorithm segmentation (in red), and in the fourth column, the Multi-Resolution Segmentation (MRS) (in purple)

Each object receives an individual metric value, referred to as local assessment. The assessment has been translated in global terms by averaging the values resulting from every single calculation of

to obtain a single value that expresses the complete segmentation’s validation (Clinton et al., Citation2010; Costa et al., Citation2018). The three metrics’ values range from 0 to 1, where 0 defines the optimal overlapping (perfect segmentation) and 1 the worst. The D index can be considered a combined descriptor of the entire classification quality (Clinton et al., Citation2010). In an ideal case in which the segmented polygon yj is identical to the reference one xi, all the metrics achieve the lowest value of 0.

This study adopted the BD index (Equationeq. 6)(eq. 6)

(eq. 6) method as a non-geometric approach to validate the segmentation. It is computed as below (D. Li et al., Citation2015; Xun & Wang, Citation2015) (Equationeq. 7

(eq. 7)

(eq. 7) –Equation9

(eq. 9)

(eq. 9) ):

where x, y can represent the two different LC classes (i.e., interclass analysis) or two different objects of the same class (i.e., intraclass analysis). M(x) and M(y) are the matrices composed of mean reflectance values of all polygons analyzed for each spectral band. S(x) and S(y) are the covariance matrices of M(x) and M(y), respectively. T[], I[] and det[] refer to transpose, inverse, and determinant of a matrix, respectively. BD values range between 0 and 2, with higher values representing higher (i.e., better) separability.

The mean BD value was calculated for each segmentation resulting from the different algorithms through the average of the BDs calculated between the defined LC classes (interclass analysis). Then, the BD was calculated iteratively among the various polygons of the same class. The obtained values were averaged to have BD’s mean value for each class (intraclass analysis).

Classification accuracy assessment

For all the tested GEOBIA classifications, a sample of 500 random points was created for each of the two datasets scenes to carry out a proper classification accuracy validation. We implemented the simple random sample selection of these points considering that we compared different classification algorithms, whose classifications could lead to different class compositions. Other sample schemes, such as stratified random or equalized stratified random sampling, would require higher user influence and a priori knowledge of area and position occupied by each class, decreasing the objectivity of the comparison and increasing processing times. In an operational workflow of PA, stratified random schemes would not be suitable in terms of cost and benefits. Furthermore, given the very high number of sample points (500) randomly distributed over the whole scene, they represent a good sample, also in terms of class composition.

Every point was labeled according to the defined LC classes by visual interpretation (reference truth). Subsequently, for each of the four classifiers and in each of the three different segmented vector layers, all polygons containing the sampling points were selected. For each of them, the reference truths were compared with the classified LC class. The producer’s accuracy (the ratio between the correctly classified objects in a given class and the number of validation objects for that class), the user’s accuracy (the ratio between the correctly classified objects in a given class and all the classified objects in that class), and the overall accuracy (OA) (i.e., the total percentage of correct classification) were calculated (Congalton & Green, Citation2019). Finally, from these measures, we calculated the F-score (Goutte & Gaussier, Citation2005; Ok et al., Citation2013; Shufelt, Citation1999; Sokolova et al., Citation2006) for every single class (Fi) (Equationeq.10)(eq. 10)

(eq. 10) and the multi-class F-score (FM) (Equationeq. 11

(eq. 11)

(eq. 11) ) (Sokolova & Lapalme, Citation2009) that represents the mean of all LC classes.

The F-score represents the harmonic mean of its components, recall (r), and precision (p). Considering that r and p have the same meaning of producer’s and user’s accuracy, respectively, they were replaced in Equationequations 10(eq. 10)

(eq. 10) and Equation11

(eq. 11)

(eq. 11) . The Fi and the FM share the same formula (Equationeq. 10

(eq. 10)

(eq. 10) and Equation11

(eq. 11)

(eq. 11) ).

Where i is a single LC class and n the total number of LC classes while the producer’sM accuracy is as follows (Equationeq. 12)(eq. 12)

(eq. 12) :

And user’sM accuracy is (Equationeq. 13)(eq. 13)

(eq. 13) :

Statistical comparison between classification results: McNemar’s test

An additional objective comparison was carried out in order to evaluate the relative significance of differences observed between classifications, the McNemar’s test (Dietterich, Citation1998; McNemar, Citation1947; Foody, Citation2004). It is a non-parametric test based on a contingency table of 2 × 2 dimension constructed using the binary distinction between correct and incorrect class allocations, widely used to determine if statistically significant differences are present between pairs of classifications (Y. Gao et al., Citation2011); Millard & Richardson, Citation2015; Quan et al., Citation2020; Belgiu & Csillik, Citation2018; Kavzoglu, Citation2017; Dietterich, Citation1998; Sokolova et al., Citation2006). This test is suitable to assess the difference of multiple classification accuracy performances when the same set of validation and training samples are used (Foody, Citation2004; Y. Gao et al., Citation2011). Supposing the comparison of two classification outputs (a and b), the McNemar’s test can be expressed as (Equationeq. 14)(eq. 14)

(eq. 14) :

Where fab is the number of validation samples incorrectly classified by classification a but correctly classified by the classification b, and fba the number of validation samples incorrectly classified by classification b but correctly classified by classification a. McNemar’s test uses a Chi-squared distribution with one degree of freedom (Dietterich, Citation1998; Foody, Citation2004), and the associated p-value is calculated. A p-value smaller than 0.05 indicates that the differences between the two observations a and b are statistically significant, while a p-value higher than 0.05 indicates that the differences between the two observations are statistically not significant.

The contingency table was constructed using the validation polygons already employed for accuracy assessment. Considering the comparison of each pair combination of classifications, the four values that constitute the 2 × 2 contingency table are: i) the number of polygons correctly classified by both classifications (Yes/Yes); ii) the number of polygons incorrectly classified by both classifications (No/No); iii) the number of polygons correctly classified by the first classification but incorrectly classified by the second classification (Yes/No); iv) the number of polygons incorrectly classified by the first classification but correctly classified by the second classification (No/Yes). The McNemar’s test was computed using the contingency_tables.mcnemar function of the Python module statsmodule.

Results

Analysis and comparison of the obtained segmentations

To quantitatively assess the segmentation performance, several geometric (OSeg, USeg and D index) and non-geometric (BD) metrics were computed for each algorithm and dataset how explained in Section 2.7.1. Before that, each process was timed, a series of descriptive metrics were calculated and compared, and a prior visual assessment was carried out. To show the different segmentations obtained using LSMS, Shepherd and MRS algorithms, in , we reported the three representative subsets in which the segmentation accuracy was assessed. In each of them, the segmentation layer was superimposed on the RGB image using a different color for each algorithm, blue (LSMS), red (Shepherd algorithm), and purple (MRS).

For each of the three implemented segmentation algorithms and the two study sites, reports the processing time, the number of segments, and the following main characteristics of obtained segments: a) area, b) perimeter, c) perimeter/area ratio, and d) mean shape index (MSI). For each characteristic, the mean (μ), the standard error (SE), and the standard deviation (σ) were provided. The processing time was calculated considering the time needed to calculate the spectral features and excluding the time required for the project preparation and parameters setting. As explained in section 2.3, the tests were performed using the same workstation.

Table 5. The table shows the most descriptive metrics of the obtained segmentations and the requested processing time for each segmentation algorithm (Large-scale mean-shift, LSMS; Shepherdalgorithm.; multi-resolution segmentation, MRS) in both study sites

Referring to both study sites, the processing time lasted, respectively, 28 and 37 minutes using LSMS, 74 and 916 in Shepherd algorithm, 2 and 4 minutes using MRS. In the Orchard-study site, the segmentation processes provided a number of segments ranging from 25,396 (Shepherd algorithm) to 63,298 (LSMS). The MRS algorithm produced 35,342 segments. In the Crop-study site, the number of segments ranging from 1,077,647 of the Shepherd algorithm to 1,725,820 of the MRS algorithm, while the LSMS produced 1,580,899 segments. In this study site, both MRS and LSMS segmentation algorithms produced a similar and large number of segments compared to those obtained using the Shepherd algorithm. These aspects can be noticed looking at the values of the mean perimeter length of the segments and, in the same case, the shape of the single segments seems to be very similar, according to the close values of MSI. The total number of segments and their mean area (in m2) with standard deviation, reported in , gives a useful general measure of the relationship between size and number of image objects, which has a direct impact on the subsequent classification steps (Ma et al., Citation2015; Torres-Sánchez et al., Citation2015a). The mean segment size, as more detailed in , varies between 0.81 ± 0.04 m2 (σ = 9.01) (LSMS) and 2.02 ± 0.01 m2 (σ = 1.10) (Shepherd algorithm), and between 0.006 ± 0.00 m2 (σ =0.008) (LSMS) and 0.009 ± 0.00 m2 (σ = 0.06) (Shepherd algorithm), for Orchard and Crop study sites, respectively. The mean perimeter length follows the trend of the mean segment size, varying from 7.89 ± 0.06 m (σ = 14.80) (LSMS) and 15.88 ± 0.05 m (σ = 7.12) (Shepherd algorithm) for Orchard-study site, and between 0.45 ± 0.00 m (σ = 0.18) m (MRS) and 0.60 ± 0.00 m (σ = 1.34) (Shepherd algorithm), for Crop-study site. MSI result is very similar for segmentations obtained using LSMS and MRS in the Orchard-study site (2.60 and 2.64, respectively). Similarly, it is higher for the Shepherd algorithm (3.17), therefore denoting segments more complex and more irregular than the other two algorithms. In the Crop-study site, the obtained segments are more compact and homogeneous. This is true especially for MRS and LSMS algorithms (MSI of 1.71 and 1.81, respectively), while the Shepherd algorithm led to objects with more irregular geometries (MSI = 1.913).

shows the supervised segmentation metrics (i.e., geometric and non-geometric) calculated for each study site and algorithm. These included two geometric metrics, that is, OSeg, USeg, representing over- and under-segmentation errors calculated separately and combined later to provide a third complementary metric, the D index. The non-geometric BD expresses the spectral separability between polygons and is defined at inter- and intra-class levels.

Table 6. Segmentation accuracy obtained applying the three geometric segmentation indices (OSeg, OverSegmentation; USeg, UnderSegmentation; D index) and the non-geometric segmentation index (BD, Bhattacharyya index) at inter- and intra-class level. Data are referred to the three sample areas in each study site and for each of the three segmentation algorithms (Large-scale mean-shift, LSMS; Shepherd algorithm.; multi-resolution segmentation, MRS)

The segmentation evaluation metrics indicated a large discrepancy between over- and under-segmentation errors but with very similar behavior for all three algorithms in both study sites. It is evident how OSeg remains high in both study sites, never going below 0.625 (Shepherd algorithm., in Crop-study site), with a maximum value reached by LSMS in Orchard-study site (0.724). The USeg values are low for all the three algorithms and both study sites, ranging from 0.142 (MRS in Crop-study site) to 0.222 (Shepherd algorithm in Orchard-study site). For both study sites, the highest values of the USeg index concerned the Shepherd algorithm (0.222 and 0.205, respectively). The D index’s highest value was reached in the Orchard-study site by LSMS (0.724); the same study site’s lower value was obtained with the MRS algorithm (0.571). In the Crop-study site, the D index reached the lowest value (0.526) using the MRS algorithm.

The BD, which expresses the mean spectral separability, as detailed in section 2.7, was calculated both between the different LC classes (BD inter-class) and between the same LC class’s polygons (BD intra-class). The results show how the segmented polygons have a high average separability between different classes, finding very similar values between the various algorithms and study sites (values ranging from 1.634 to 1.767). Within the same LC class, the spectral separability remains low with a minimum value of 0.584 for MRS and the highest value of 0.803 for the Shepherd algorithm, both in the Crop-study site.

Classification accuracy overview

A synoptic accuracy overview of the obtained results for all the four implemented classifiers was provided in , expressed as overall, producer’s and user’s accuracy values, and Fi (single-class) FM (multi-class) values.

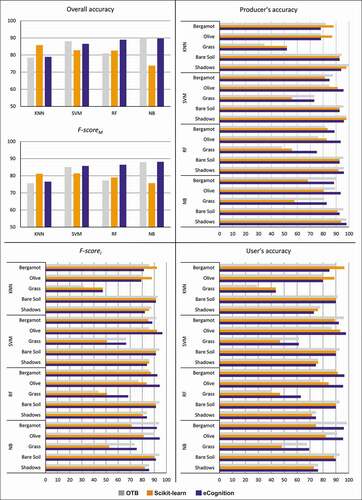

Figure 5. Orchard-study site. User’s, Producer’s and Overall accuracies, and F-scorei (single-class) and F-scoreM (multi-class) values obtained for each algorithm (K-Nearest Neighbour, KNN; Support Vector Machines, SVM; Random Forests, RF; Normal Bayes, NB) in every software environment (Orfeo ToolBox, OTB; Scikit-learn; eCognition)

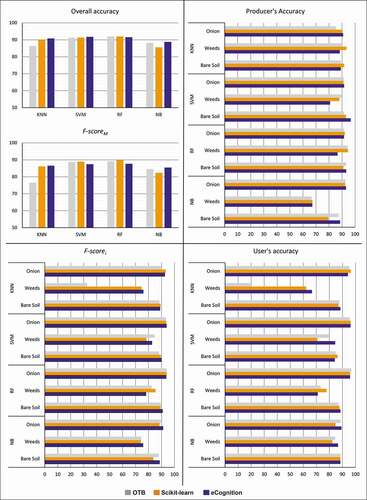

Figure 6. Crop-study site, user’s, producer’s and overall accuracies, and F-scorei (single-class) and F-scoreM (multi-class) values obtained for each algorithm (K-Nearest Neighbour, KNN; Support Vector Machines, SVM; Random Forests, RF; Normal Bayes, NB) in every software environment (Orfeo ToolBox, OTB; Scikit-learn; eCognition)

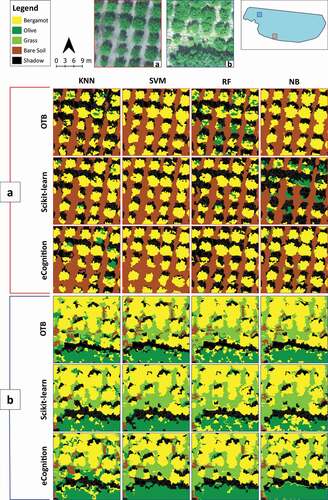

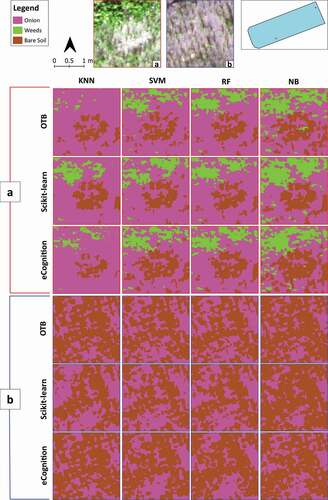

Moreover, 4 columns x 3 rows of visual comparison matrices were implemented to comprehensively picture the obtained image classification results in the two study sites (). In these matrices, images are organized according to the four classification algorithms in matrix columns (KNN, SVM, RF and NB) and the three software environments (OTB, Scikit-learn and eCognition) in matrix rows. To this end, we choose two sample areas of the whole scenes for each of the two study sites to show and visually compare the obtained classifications ().

Figure 7. Orchard-study site. The figure reports two visual comparison matrices showing the obtained classification results in two significant portions of the whole scene (a and b). Their visualization in visible (RGB), and their localization on the study site are provided on the top side. In each of the two visual matrices (a and b), the images are organized according to the four classification algorithms, K-Nearest Neighbour (KNN), Support Vector Machines (SVM), Random Forests (RF), and Normal Bayes (NB) (matrix columns), and the three software environments, Orfeo ToolBox (OTB), Scikit-learn and eCognition (matrix rows)

Figure 8. Crop-study site. The figure reports two visual comparison matrices showing the obtained classification results in two significant portions of the whole scene (a and b). Their visualization in visible (RGB), and their localization on the study site are provided on the top side. In each of the two visual matrices (a and b), the images are organized according to the four classification algorithms, K-Nearest Neighbour (KNN), Support Vector Machines (SVM), Random Forests (RF), Normal Bayes (NB) (matrix columns), and the three software environments, Orfeo ToolBox (OTB), Scikit-learn and eCognition (matrix rows)

OA and FM’s highest values were obtained in the Orchard-study site using the NB algorithm, 89.68% and 89.76% (OA) on OTB, and 87.88% and 88.15% (FM), on eCognition. The same algorithm performed the lowest values on Scikit-learn (73.80% and 75.73%, for OA and FM, respectively). The SVM and RF algorithms follow at performance level with slightly lower OA values on eCognition (86.57% and 88.96%, respectively) and gradually lower on OTB (88.0% and 81.0%) and Scikit-learn (82.80% and 82.60%, respectively). In Scikit-learn, the highest OA was reached by the KNN algorithm, while the FM’ highest value with SVM.

OA of RF and SVM was above 90% in all three software suites in the Crop-study site. For the other two algorithms, KNN and NB, the lowest OA performance was achieved on OTB and Scikit-learn, respectively. Observing the single class accuracies, in the Bergamot class, the highest Fi value was found using the NB algorithm on OTB (93.95%) while the respective user’s accuracy was 98.46%, and the producer’s accuracy corresponded to 89.82%. As for the Olive class, the highest values of accuracies were reached by SVM performed on eCognition (97.78%, 95.65%, and 96.70% for user’s, producer’s, and Fi accuracies, respectively). We found the lowest user’s accuracy values in the Grass class, ranging from 29.03% (KNN on OTB) to a maximum value reached in eCognition by the NB classifier (69.35%). The Fi follows the trend with the lowest value equal to 31.58% (KNN on OTB). Focusing on the Shadows LC class, the producer’s accuracy never falling below 91.84%, reaching 100% using the KNN algorithm implemented on OTB. The users’ accuracy has lower values but showed the same trend, as well as Fi, whose highest value is 87.50% (KNN on OTB).

In the Crop-study site, as for the Onion class user’s accuracy values, there are not many differences in the obtained results of the implemented algorithms in the different software environments, maintaining averaged values around 95.0%. Only with the NB algorithm, slightly lower values were obtained. All the algorithms performed values of the producer’s accuracy higher than 90.0%, except for KNN implemented on OTB (85.27%). The Fi has values not lower than 90.0% except for NB on Scikit-learn (88.45%). As far as the Weeds class is concerned, the highest values of the user’s accuracy were obtained using NB (86.67%) and SVM (84.44%), both performed in eCognition. We obtained the lowest value implementing KNN in OTB, which provided a value of 20.0%.

The difference in accuracy between every pair of classifications was statistically analyzed using McNemar’s test. In total, 66 combinations of classification were statistically compared for each study site. In are reported the resulted p-values for each classification pair, indicating when the difference was statistically non-significant (p-value > 0.05) or significant (p-value < 0.05). For the Orchard-study site, the accuracy results of NB, performed in Scikit-learn, are significantly different from those of all other classifications. Similar results are showed for KNN of OTB and eCognition. On the other hand, NB, used through the other two software, showed similitude with SVM. Regardless of the software used, the two classifiers RF and SVM, show multiple boxes with p-value > 0.05 compared to each other. The KNN of Scikit-learn did not express significant differences when compared to most other classifications. For the Crop-study site, the NB still maintains statistically significant differences with most of the other classifications. However, there are more statistical similarities between the different classification results. The RF and SVM achieved no significant differences in all software, with SVM of eCognition showing no difference (p-value = 1) than RF of all three software.

Table 7. McNemar’s Test p-values obtained comparing the classification accuracy results of each couple of classifications (combination of software + classifier) in the Orchard-study site. The values indicate when the difference between two classifications accuracy results are statistically significant (p-value < 0.05) or not-significant (p-value > 0.05)

Table 8. McNemar’s Test p-values obtained comparing the classification accuracy results of each couple of classifications (combination of software + classifier) in the Crop-study site. The values indicate when the difference between two classifications accuracy results are statistically significant (p-value < 0.05) or not-significant (p-value > 0.05)

Discussion

Performance of the different segmentation algorithms

In line with the results of Clewley et al. (Citation2014), the MRS algorithm was the fastest for both study sites, with a processing time ranging from 2 minutes (Orchard-study site) to 4 minutes (Crop-study site). However, the open-source OTB, which allows setting the available RAM for a running process during each of the four segmentation steps, performed good processing times (28 and 37 minutes for Orchard and Crop study sites, respectively). LSMS and Shepherd algorithms perform a tile-based segmentation, and both OTB and RSGISLib are designed to optimize RAM and CPU usage. However, although RSGISLib completed the segmentation process in few minutes for both study sites (about 7 minutes for both cases), the extraction of the spectral features of each segment (mean and standard deviation) required almost all the process-time on the Crop-study site (lasted more than 15 hours). Indeed, the considerable number of segments led to a critical slowdown of the system in RSGISLib. Moreover, Python language does not use all multi-processors by default (mono-thread). This leads to a slowdown of the high-magnitude computational processes. Several approaches can be implemented to solve this problem to make the program using the entire CPU and/or GPU computing capabilities (e.g., multiprocess, multithread, CUDA) (Gorelick & Ozsvald, Citation2020). However, their use requires experienced programming operators and, for now, is beyond our research aims.

The segmentation results differ in the number and size of the obtained segments, but this was expected since all three software exploited different algorithms. In the Orchard-study site, the LSMS provided the highest number of segments (mean area of 0.81 m2), about double that of the other two algorithms. Consequently, compared to the Shepherd algorithm and MRS algorithms, the segment size differences were particularly evident. This is corroborated by the higher standard deviations of mean segment size and perimeter (±9.01 and ±14.80, respectively). This is also reflected in the over-segmentation errors, which for LSMS are higher than the other two (). However, observing , it would seem that LSMS has been able to segment better in some cases and thus represent the various and heterogeneous shapes of the objects. For example, a single shadow or a bare soil object among the vegetation corresponds to a single segment, closely corresponding to its actual shape (-LSMS). Where the ground surface was continuous, larger segments were generated (with two segments covering more than 500 and 2000 m2, respectively).

Meanwhile, a tree canopy and other vegetation covers have been split into many more segments than the other two algorithms. This can be explained by the different behavior of the scene’s features, as De Castro et al. (Citation2018) have shown. Indeed, the soil and the shadows had more similar spectral characteristics than the vegetation, spectrally and structurally heterogeneous. The irregular structure of the vegetational cover affected these surfaces’ spectral variability. This condition is particularly evident in VHR images, where it is expected that many segments are composed of heterogeneous regions (Hossain & Chen, Citation2019; Torres-Sánchez et al., Citation2015a).

On the other hand, the bare soil’s homogeneous reflectance behavior could be due to its smoothed surface (low roughness). The same behavior, with less evidence, can also be noticed in the Shepherd algorithm segmentation.

The variation in segment size was not particularly evident in the Crop-study site, confirmed by a standard deviation that never exceeds 0.06 m2 (). Indeed, the MSI index reaches lower values than the Orchard-study site, which means less irregularly-shaped segments. This was due to vegetation’s size and structure (herbaceous), which were more homogeneous than the Orchard-study site (trees and grasses). Moreover, in the Orchard-study site, the bergamot trees have different ages (from 5 to 25 years old) along the field, leading to a greater diversification of the crowns’ size. shows that the number of segments is compared to the mean object size, which can be considered a representation of the segmentation scale (Torres-Sánchez et al., Citation2015a). The LSMS algorithm created a smaller segmentation scale than the others on the Orchard-study site, with a higher number of segments (more of them double than segmentation created by Shepherd algorithm), and characterized by a mean segment area of 0.81 m2. However, as mentioned before, the variability of the segments’ size was higher, with a standard deviation of 9.01. The Shepherd algorithm produced a lower number of larger segments (i.e., larger scale), with an average area of approximately 2 m2. Regarding the Crop-study site, both the LSMS and MRS algorithms showed a minor scale with a similar mean area of segments, 0.01 m2. In this case, the Shepherd algorithm produced a slightly larger scale (mean area of segments equal to 0.01 m2) and more irregular segments in both study sites. The MSI was 1.92 (± 0.53) for the Orchard-study site and 3.17 (±0.88) for the Crop-study site. In general, a smaller scale of segmentation (i.e., smaller segments) is preferred in PA, which concerns the early detection of possible plant’ stress status (Meena, Citation2019), and a smaller segmentation scale permits the distinction of small plants, portions of the canopy of single trees, or inter and intra-rows weeds. Typically, an inversely proportional correlation exists between the segmentation scale and the image resolution. The higher are the spatial resolution, the smaller the segmentation scales, and vice versa (M. Li et al., Citation2016; Ma et al., Citation2017b). Furthermore, the scene’s intrinsic characteristics and the study’s aim influence the variability of segmentation (M. Li et al., Citation2016; Ma et al., Citation2017b). The analysis of the geometric (OSeg, USeg and D) and non-geometric (BD) segmentation metrics corroborate the considerations mentioned above. The former is based on the calculation of the proportion of the area that each object has in common with the reference polygon, while the latter is based on the spectral separability of the classes (Clinton et al., Citation2010; Costa et al., Citation2018). The obtained results () confirm that most of the objects were over-segmented for all the segmentations, and a few of them were under-segmented. Although optimal segmentation would occur with lower levels of under-segmentation and over-segmentation, several authors (Costa et al., Citation2018; Y. Gao et al., Citation2011); D. Li et al., Citation2015; Liu & Xia, Citation2010; De Luca et al., Citation2019) stated that over-segmentation errors are preferable to the under-segmentation ones to obtain high levels of classification accuracies. When over-segmentation occurs, it is still potentially possible to associate a polygon to its real class and for the polygon to belong entirely to the latter (Liu & Xia, Citation2010). However, Belgiu and Drǎguţ (Citation2014) affirm that a high accuracy level can still be achieved when the under-segmentation is not very high. Under-segmentation errors produce polygons corresponding to more than one real object on the ground, generating critical misclassification errors and, therefore, more associated with thematic errors (Costa et al., Citation2018; Y. Gao et al., Citation2011); Liu & Xia, Citation2010). Moreover, Costa et al. (Citation2018) consider that although polygons’ geometric properties are essential, thematic properties (e.g., intraclass spectral affinity) are much more so when a land cover classification is a primary purpose. Summarizing, in the case of land cover classification within the framework of PA, segmentation can be considered satisfactory if the under-segmentation error remains low, the intraclass spectral properties are homogeneous, and the spectral separability between classes is high. Concerning the last point, the BD results show how the three segmentation algorithms obtained a high spectral separability between the different classes. At the class level, their values are low (i.e., spectral homogeneity). This is expected behavior demonstrating how segmentation, despite the over-segmented results, maintains an objective spectral/thematic coherence among the polygons (Costa et al., Citation2018). Polygons belonging to the same class (objects similar to each other) should have a low spectral separability. In contrast, different classes (objects different from each other) should show this diversity at a spectral level.

Although indicating a certain degree of thematic coherence of the obtained segmentation, non-geometric methods are not able to explicitly inform about which type of error predominates (under or over-segmentation). Only combining geometric and non-geometric metrics allows obtaining a complete and complementary analysis for a more in-depth characterization of the obtained results. Furthermore, as stated by Drǎguţ et al. (Citation2014) and Prošek and Šímová (Citation2019), and confirmed by the review of Costa et al. (Citation2018), considering the pros and cons, the visual evaluation of the result is still a method used for the purpose. For this reason, this last approach also supported the validation of the segmentation. The size of segments, depending on the segmentation scale and tuned through the value of each algorithm’s main parameter, i.e., the range radius for LSMS, k for Shepherd algorithm, and scale for MRS, led to results that can be considered very satisfactory. For our purpose, the segmentation scale leads to satisfactory results, representing a good compromise between the results’ quality and the algorithms’ computational requirements. However, LSMS seems to have performed the most coherent segmentation referring to the objects’ heterogeneous spectral and structural characteristics on the Orchard-study site. On the other hand, it resulted in many segments, therefore, much more complicated to manage, requiring higher hardware and software performances for its easy visualization and analysis. Since the same good performance of MRS, coupling with a halved number of segments allowed better management of the output files, and we believe it can be the best compromise. Besides, this algorithm requires a short execution time, allowing running several tests and quickly optimizing its segmentation parameters. Regarding the Crop-study site, the Shepherd algorithm required a computational time to extract spectral features too high if compared to the other two. However, LSMS obtained good results with reasonable processing time. Even in this case, the execution time is, however, in favor of the eCognition environment, which, it should be noted, is a commercial and expensive software suite, while the other two are free and open-source solutions.

Assessment of the machine learning algorithms’ classifications

As highlighted by several scholars (M. Li et al., Citation2016; Ma et al., Citation2017b; Mountrakis et al., Citation2011; Noi & Kappas, Citation2018; Yang et al., Citation2019), SVM, RF, and KNN are among the most used supervised classifiers in the literature, with generally good results of accuracy on agriculture and land cover classification. All these three machine learning algorithms seem to show, indeed, a similar classification performance in the Orchard-study site (), except for the RF on OTB, which maintains some differences. Looking at the classification results of the Crop-study site, it can be noticed that KNN underestimated weeds. Also, in the case of NB, the obtained results are satisfactory.

Looking at the OA (), it appears how SVM and RF are the most stable classifiers. These two algorithms are less influenced by the software used and the scene’s characteristics, as confirmed by OA values never lower than 81.0% and 91.20% for Orchard and Crop study sites, respectively. However, in the Orchard-study site, and referring to the FM, the SVM resulted as the most stable classifier, confirming the findings of Noi and Kappas (Citation2018) and Qian et al. (Citation2015) that highlight how SVM is the algorithm with constant and high accuracy.

In the Orchard-study site, the NB algorithm ran in OTB and eCognition performed the highest OA classification (89.68%). On the other hand, NB ran in Scikit-learn performed worse. It is evident that it does not differ much from the result given by SVM. Our findings are in line with those shown in Qian et al. (Citation2015), where the accuracy of NB was similar to SVM accuracy and significantly high for a number of training samples greater than 100 per class. In our case, the training samples were more than 100 for all LC classes except for Weeds and Grass (Table S1, supplementary material). The NB classifier is generally one of the most sensitive to sample size because, being a parametric algorithm, it uses training samples to estimate parameter values for the data distribution (Qian et al., Citation2015; Rehman et al., Citation2019). Thus, a higher number of samples can improve the estimated parameters (Qian et al., Citation2015). However, SVM resulted in being the least sensitive to the number of samples, and an increase of this number may not significantly improve the classification accuracy (Qian et al., Citation2015) because, instead of all training samples, it uses the support vectors to define the separating hyperplane (C. Huang et al., Citation2002; Mountrakis et al., Citation2011; Qian et al., Citation2015). However, the NB algorithm does not need to set parameters, and therefore it has proved to be the quickest way to image classification. In contrast, the setting of the parameter values directly influences the results of the other algorithms. Qian et al. (Citation2015) found that SVM is the most sensitive and proved, as also shown in Noi et al. (Noi & Kappas, Citation2018), that KNN gives its best performance with the lower value of k (<10). Also, for KNN, it is proven that the number of training samples influences the accuracy of the results in a directly proportional way (Noi & Kappas, Citation2018; Qian et al., Citation2015). Following our findings, and taking into account the consideration of the algorithms’ performances and the training sample size, we can recommend fixing the number of the random sample size (ni) at least equal to 150 multiplied the number of considered classes (LCn) (Equationeq. 15)(eq. 15)

(eq. 15) .

Observing the results of only concerning the investigated crop species of each study site (bergamot and olive for Orchard-study site, and onion for Crop-study site) (), the four classification algorithms recorded high values of producer’s and user’s accuracy and Fi. The Bergamot, Olive, and Onion classes were detected in all cases with values no lower than 85.0%. There was an overestimation of the grass falling between olive and bergamot trees in the Orchard-study site, with a misidentification of grass with bergamot or olive, and vice versa. The cause was probably the spectral similarity between these three species, which, as in Peña et al. (Citation2013), occurs mainly in the early part of the vegetative growth. Indeed, many plant species that have adapted to the thermo-pluviometric regimes typical of the Mediterranean environment (hot and dry summer), including olive trees, have adopted a typical phenological cycle in which, in addition to the main vernal budding phase, there is a second vegetative restart following a summer stasis that allows plants to limit evapotranspiration (Connor & Fereres, Citation2010; Fiorino, Citation2018; Iniesta et al., Citation2009; Palese et al., Citation2010). On the other hand, Citrus trees have more than one phase of vegetative growth in the Mediterranean environment, including in the summer and autumn seasons (Primo-Millo & Agusti, Citation2020). In these cases, as explained in Pande-Chhetri et al. (Citation2017), the training phase’s spectral features could not be enough to differentiate these classes. However, according to several scholars (Gašparović et al., Citation2020; López-Granados et al., Citation2016; Solano et al., Citation2019; Torres-Sánchez et al., Citation2014; Villoslada et al., Citation2020), the use of GNDVI enhanced spectral differences between vegetation and no-vegetation classes in VHR images, and it allowed to obtain good results of accuracy, despite the same spectral response of the plant coverings. This research was implemented in two heterogeneous study sites in terms of field structure and plant phenological activity. Although seasonal phenological variations could affect the spectral vegetation signature, this would not compromise the method’s validity, and only minor adjustments of the segmentation parameters would be required, depending on the different spectral values between the relative pixels. Moreover, in Orchard-study site, a significant improvement is linked to the addition of the DSM, as will be explained later.

Detecting herbaceous vegetation and weeds within crop seems to be a common challenge in the context of vegetation mapping (Gašparović et al., Citation2020; López-Granados, Citation2010; López-Granados et al., Citation2016; Peña et al., Citation2013, Citation2015; Perez-Ortiz et al., Citation2017; Torres-Sánchez et al., Citation2013, Citation2015a; Zisi et al., Citation2018). Indeed, other works, in order to cope with this problem, combined machine learning approaches with different advanced semiautomatic techniques in which the characteristics relate to the position and structure of the weeds in-field (De Castro et al., Citation2018; J. Gao et al., Citation2018; Peña et al., Citation2013; Perez-Ortiz et al., Citation2017; Pérez-Ortiz et al., Citation2015, Citation2016). Other authors performed more advanced deep learning techniques (Csillik et al., Citation2018; Dos Santos Ferreira et al., Citation2017; Espejo-Garcia et al., Citation2020; H. Huang et al., Citation2020) or used hyperspectral sensors (Ishida et al., Citation2018; Pantazi et al., Citation2016; Ravikanth et al., Citation2015; Zhang & Xie, Citation2013). However, this study’s accuracy values do not differ much from those mentioned research, most of them concerning monitoring orderly vegetation. This study was conducted on two scenarios characterized by different, complex, and heterogeneous conditions from an agronomic and structural perspective.

Since the VHR DSM, derived from the photogrammetric process, is efficient for plant height detection (De Castro et al., Citation2018; Zisi et al., Citation2018), the use of it as an additional input layer increased the accuracy in Orchard-study site, characterized by higher variability in the vegetation height (trees and herbaceous), as already demonstrated in other works (De Luca et al., Citation2019; Vilar et al., Citation2020; Zisi et al., Citation2018). This was fundamental since the three vegetation LC classes’ spectral signature was practically identical in the Orchard-study site. However, some misclassification still occurred in the discrimination of grass in narrow rows between trees. The modeled surface of the DSM, in these cases, is strongly influenced by the height of the surrounding trees. In the Crop-study site, the DSM was probably not decisive in the discrimination of onions from weeds since the height of both was very similar. In the Crop-study site, we obtained non-satisfactory results only with the KNN performed in OTB, with a user’s accuracy of 20.0% for the weeds class.

In addition to the classification algorithm’s choice, other parameters influence the accuracy, such as segmentation scale, characteristics of the trainers, sample scheme, object-features used, etc. (M. Li et al., Citation2016a; Ma et al., Citation2017a). A positive correlation exists between classification accuracy and the number of training samples (M. Li et al., Citation2016; Ma et al., Citation2015, Citation2017b; Noi & Kappas, Citation2018), and the method with which the training samples were chosen could significantly influence the obtained accuracy (Ma et al., Citation2015). Indeed, our randomized approach allowed comparing the different algorithms, especially within different software environments, in an unbiased way (Congalton & Green, Citation2019). Ma et al. (Citation2015) recommend the stratified random sample method because it allows managing the sample distribution between the different classes in the function of their quantitative distribution on the scene. However, it is not as easy to implement as the simple randomized approach due to the necessity to know the quantitative-qualitative distribution of the classes before the classification, and it may not always be possible.