ABSTRACT

Large disparities between heterogeneous images often degrade the quality of the difference images generated during change detection. This paper proposes a self-supervised change detection method for heterogeneous images based on a difference algorithm. First, a combination of phase consistency and a simplified pulse-coupled neural network (PC-SPCNN) is used to fuse the heterogeneous images, and the result is used to compute the difference image (DI). The new DI generation method can generate both standard and exponential difference images. Second, the hierarchical FCM clustering algorithm is improved to extract stable and correct self-supervised samples from the difference images so that the clustering process is not overly dependent on thresholds. Then, a support vector machine classifier is trained on the heterogeneous images, the fused images, and the self-supervised sample sets, with the information from the fused images increasing the feature dimension for better detection of changes. Finally, the support vector machine classifier automatically determines whether the intermediate pixels are changed and produces the change detection results. The experimental results confirm the improvements made by the proposed method in difference image extraction, training sample selection, and the clustering algorithm, and the stability of the method exceeds that of state-of-the-art change detection methods.

Introduction

Heterogeneous remote sensing image change detection (CD) can relieve the limitations of traditional CD, which is hampered by adverse lighting conditions, imaging period, and modality. It can meet the technical requirements of land-based applications such as emergency response. However, heterogeneous images with different spectral, textural, and geometric characteristics pose challenges to high-performance CD (Lv et al., 2022; Shi et al., 2023; Yin et al., 2023).

Recent methods find changed regions by clustering the DI to obtain sample data and then applying trained classification models. However, poor-quality difference images often result in significant overlap between changed and unchanged information, making it difficult to classify and analyze difference images accurately (Dong et al., 2020).

For example, Xuan et al. (2022) constructed a new DI generation method, ratio-mean ratio (RMR), by fusing the multiplication-based ratio (R) DI and the mean ratio (MR) DI, which preserves image details and reduces noise. However, the method must be extended to multiple scales and relies on suitable fusion weights. In the recent literature, it is common to fuse multiple DIs linearly, often without considering the weights associated with each DI (Shi et al., 2021; Xu et al., 2023). Jiang et al. proposed a local energy weighting (LEW) method to fuse individual DIs instead of the commonly used equal weights, but the method is sensitive to the quality of the individual DIs (Jiang et al., 2022). The quality of the DI significantly impacts both clustering performance and the accuracy of self-supervised sample extraction: low-quality difference images may contain noise, which reduces the accuracy of sample set construction.

Gao et al. (2016) utilized the hierarchical FCM clustering algorithm, taking the first cluster of the second-level clustering results as changed pixels and comparing the remaining clusters against a threshold number to classify intermediate and unchanged pixels. However, the method relies on the threshold number and is prone to an insufficient number of changed samples and low-quality unchanged samples. Geng et al. (2019) utilized the hierarchical FCM clustering algorithm, taking the first and last clusters of the second-level clustering as the changed and unchanged sample data, respectively. This decreases the number of changed and unchanged samples and produces an excessive number of intermediate pixels, which increases the difficulty of subsequent classification; the method also relies on the threshold number (Gao et al., 2016). Difference images affect the clustering accuracy of the changed and unchanged pixels, so obtaining high-quality difference images and using more accurate clustering algorithms and classification models is necessary to improve CD performance (Jiang et al., 2022). Sun et al. performed CD through a Markov co-segmentation model. The model can fuse the pre- and post-difference images during the segmentation process and enhance the robustness of the images through an iterative framework, which improves the final detection performance. However, the computational efficiency of the method is low (Sun et al., 2021).

Compared with the above methods, deep learning-based methods are characterized by high computational complexity, high hardware requirements, and more complex model design (Li et al., 2022). Deep learning-based classification methods perform better. However, the detector's performance needs to be improved by enlarging the training dataset and tuning the training hyperparameters (Davari et al., 2021). The supervised deep learning-based synthetic aperture radar image CD method uses the Deep Belief Network (DBN) as the deep architecture. This network's training process includes unsupervised feature learning and supervised network fine-tuning. By training the DBN using input images and images generated by morphological operators, a dataset with an appropriate amount and diversity of data can be provided, and a method to significantly reduce the amount of computation without compromising the training performance is introduced. The method exhibits better performance and higher accuracy while achieving shorter processing time. However, its drawback is that a large amount of data is required, and a lack of data affects the accuracy of the neural network (Samadi et al., 2019). Li et al. (2021) proposed a change detection network for optical and SAR remote sensing images based on deep translation, which utilizes image translation to reduce the differences between heterogeneous remote sensing images and an improved deep network to detect the changes. Depthwise separable convolution exhibits satisfactory performance and reduces the number of model parameters and the running time. However, too few parameters lead to instability of the network, and the computational cost is very high. Du et al. (2023) proposed a multitask change detection framework for optical and SAR images, which avoids complex operations and large numbers of parameters and reduces the computational cost. However, it is still a simple framework with room for improvement and needs to be generalized to different types of sensors.

The performance of deep learning-based methods may depend on the quality of large datasets. Once the dataset changes, the parameters and network structure must be manually adjusted, which consumes a lot of labor and time (Davari et al., 2021; H. Li et al., 2022). DI-based CD methods are relatively easy to implement and do not require training data, which reduces the accumulation of human error and allows sample data to be extracted in a self-supervised manner (Ye et al., 2016). Therefore, we combine self-supervised learning, DI, and clustering algorithms to achieve CD while reducing the labor and material costs of sample extraction. However, the existing methods have the following problems that need to be solved:

Significant differences and image noise exist between heterogeneous images, which leads to lower CD accuracy.

The quality of difference images is easily affected by the differences between heterogeneous images and noise. Improving the quality of difference images is a research direction to improve the model's performance (Jiang et al., 2020; Liu et al., 2016).

Clustering methods involve the setting of a threshold, the size of which directly affects the number and quality of self-supervised samples extracted (Niu et al., 2018; Ou et al., 2022; Pan et al., 2022).

Therefore, existing heterogeneous CD methods must be improved with respect to high-quality DI generation, CD accuracy, and the clustering of changed and unchanged pixels. To this end, this paper proposes a self-supervised CD method for heterogeneous images based on a difference algorithm. First, the PC-SPCNN (Fu et al., 2023) fusion method is used to fuse the heterogeneous images and generate a transitional image that is comparable to the original image and can provide complementary information. It is similar to the original image in radiance spectrum and channel number and is highly sensitive to changed pixels. Second, standard DIs and exponential DIs are generated from the heterogeneous and fused images to mitigate the unfavorable effects of heterogeneous image differences and noise, improve the quality of the DIs, and preserve detailed information. Then, a bi-hierarchical FCM clustering method extracts high-quality changed and unchanged samples from the standard DIs and exponential DIs. Finally, the heterogeneous and fused images are used for training, and a support vector machine classifier is used for prediction to obtain more detailed and accurate CD results. Experimental results on heterogeneous image datasets of three scenes confirm that the proposed method does not require auxiliary information from other hyperspectral or high spatial resolution image data. At the same time, it can obtain satisfactory CD results without the need for large datasets. Unlike previous combined DI methods, the proposed method uses standard and exponential difference images to extract changed and unchanged samples separately instead of combining difference images to generate the final CD results. This makes the detection accuracy independent of the weights of individual difference images and avoids excessive reliance on the threshold setting in the clustering process (Ferraris et al., 2020).

The remaining sections of this paper are organized as follows: Section 2 describes the proposed method, NR-CFI-BI-FCM-SVM, in detail; Section 3 introduces the experimental datasets and the evaluation metrics; Section 4 demonstrates the performance of our method through comparative experiments and verifies the effectiveness of the improved DI and classifier modules through ablation experiments; and Section 5 summarizes the paper and provides an outlook for future research.

Method

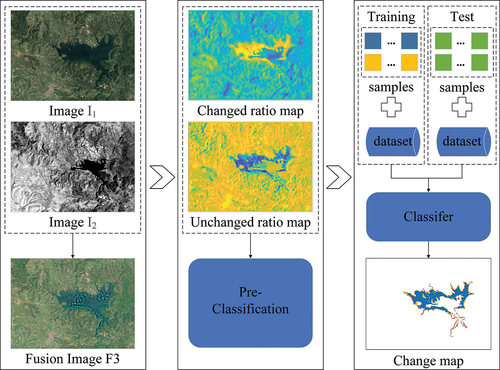

The self-supervised CD method for heterogeneous images proposed in this paper adds the fused image to the neighborhood ratio computation to generate high-quality difference images and reduce the influence of feature differences between the heterogeneous images and of noise on the extraction of correct samples. Rather than directly comparing the heterogeneous images to extract samples, the proposed method uses a support vector machine (SVM) to classify the heterogeneous and fused images, whose information is richer, to obtain highly accurate CD results. As shown in the figure above, the proposed method comprises three stages: high-quality DI generation, automatic sample set extraction, and CD.

The PC-SPCNN method fuses heterogeneous images to generate spatiotemporally fused images with detailed spectral, textural, and spatial information. High-quality standard and exponential DIs are generated based on heterogeneous images and their fused images.

Based on the standard and exponential DIs, stable and correct self-supervised samples are automatically extracted using the bi-hierarchical FCM clustering method.

Train the SVM classifier based on the heterogeneous images, fused images, and automatically extracted sample sets. Use the trained SVM classifier to automatically determine whether the intermediate pixels are changed and produce the final CD results.

Difference image extraction based on neighborhood ratio combined with fused images (NR-CFI)

DI-based methods are used mostly in homologous image CD, where operators such as difference, ratio (R), improved ratio (IR), logarithmic ratio (LR), mean value ratio (MR), and their combinations are used to obtain the DI. However, these methods do not apply directly to heterogeneous images: the images are so different that comparing pixel values directly is not meaningful and tends to generate much noise. IR differs from R in that it does not require a dual-thresholding algorithm, reducing the difficulty of CD. Suppose $I_t(m,n)$ denotes the pixel intensity value in the image $I_t$ ($t = 1, 2$) at the position $(m,n)$, with $1 \le m \le M$ and $1 \le n \le N$, where M and N indicate the height and width of the image, respectively. Then IR is expressed as equation (1) (Zhuang et al., 2018).

Here, $D_{IR}$ denotes the DI obtained via the IR method, while $D_{IR}(m,n)$ represents the intensity value of the DI pixel at position $(m,n)$ in $D_{IR}$. A high value indicates that the pixel remains unchanged, and vice versa. MR differs from IR in that it uses local area averaging to reduce the effect of noise on CD (Seo et al., 2018). The MR method is expressed as equation (2) (Zhuang et al., 2018).

Here, $\mu_1(m,n)$ and $\mu_2(m,n)$ represent the mean intensity values of the neighborhood centered on the location $(m,n)$ for images $I_1$ and $I_2$, respectively, with a size of R×R. A high value of $D_{MR}(m,n)$ indicates that the corresponding pixel remains unchanged, and vice versa. The MR method can detect various changes and suppress noise to a certain extent, improving CD accuracy (Zhuang et al., 2018). The NR method effectively combines the advantages of the IR and MR methods. It uses the heterogeneity measure $\theta$ as the weight, where a low $\theta$ value indicates local homogeneity and a high $\theta$ value indicates local heterogeneity, which can reduce the difficulty of detecting heterogeneous image changes. It is expressed as equation (3) (Zhuang et al., 2018).

Here, $NR(x)$ denotes the neighborhood ratio at the image location $x$ and describes the local similarity between images $I_1$ and $I_2$ (Fan et al., 2023). A higher similarity corresponds to a higher probability of the pixel remaining unchanged. $\Omega_x$ denotes the neighborhood centered at the location $x$, $I_t(i)$ represents the intensity value of pixel $i$ in the block within the neighborhood centered at the location $x$, $\sigma(x)$ denotes the grayscale variance of the neighborhood, and $\mu(x)$ denotes the grayscale mean value of the neighborhood (Gao et al., 2016).
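For reference, the three ratio operators described above are commonly written as follows in the neighborhood-ratio literature. This is a sketch under assumed notation: the symbols $I_1$, $I_2$, $\mu_1$, $\mu_2$, $\Omega_x$, and $\theta$, and the exact arrangement of terms, are chosen here to match the verbal definitions in this section, and the published equations (1) to (3) may differ in detail.

```latex
\begin{align}
D_{IR}(m,n) &= \frac{\min\{I_1(m,n),\, I_2(m,n)\}}{\max\{I_1(m,n),\, I_2(m,n)\}} \\[4pt]
D_{MR}(m,n) &= \frac{\min\{\mu_1(m,n),\, \mu_2(m,n)\}}{\max\{\mu_1(m,n),\, \mu_2(m,n)\}} \\[4pt]
NR(x) &= \theta(x)\,\frac{\min\{I_1(x),\, I_2(x)\}}{\max\{I_1(x),\, I_2(x)\}}
        + \bigl(1-\theta(x)\bigr)\,
          \frac{\sum_{i \in \Omega_x,\, i \neq x} \min\{I_1(i),\, I_2(i)\}}
               {\sum_{i \in \Omega_x,\, i \neq x} \max\{I_1(i),\, I_2(i)\}},
\qquad \theta(x) = \frac{\sigma(x)}{\mu(x)}
\end{align}
```

In this form all three operators lie in [0, 1] when the intensities are non-negative, and a value close to 1 corresponds to an unchanged pixel, which agrees with the interpretation given in the text. When $\theta$ is small (a homogeneous neighborhood), the neighborhood term dominates and noise is averaged out; when $\theta$ is large, the center-pixel term dominates and detail is preserved.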

However, when used for heterogeneous image CD, the performance is unsatisfactory and has four drawbacks (Seo et al., Citation2018). First, only the heterogeneity measure values were calculated at the exact locations of the multi-temporal images (Zhuang et al., Citation2018). Second, the heterogeneity measure value is a weight that maintains a balance between suppressing noise and preserving detailed information (Zhuang et al., Citation2018). Thus, its desired dynamic range is [0,1]. However, when the standard deviation of the neighborhood area is higher than its mean, the heterogeneity measure is greater than 1. Third, the intensity of the changed pixels in the obtained DIs should be small, and the intensity of the unchanged pixels should be significant (Seo et al., Citation2018). However, the difference images computed by Seo et al. show greater intensity in the changing pixels. Finally,

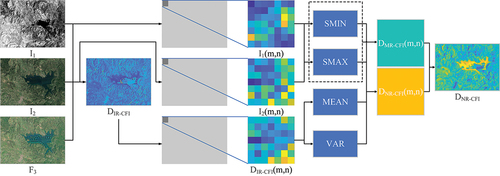

is more suitable for SAR image CD. Based on this, this paper proposes a DI generation method based on neighborhood ratio combined with fused images, which improves the accuracy of self-supervised sample extraction and effectively suppresses the noise compared with the traditional DI algorithm. The flow of the

generation is shown in the as follows:

The improved method can balance noise suppression and detail preservation through the heterogeneity measure. Moreover, it can use fused images to retain detailed spectral, textural, and spatial information, which mitigates the adverse influence of heterogeneous differences and the accompanying noise and retains the detailed changed and unchanged information. Further, the proposed method generates two types of DI, the standard and exponential DIs. The improved NR can be expressed as equation (5), in which the fused image enters alongside the two heterogeneous images. When the standard form is used, a high neighborhood ratio value indicates that the pixel has changed, and this form is used to generate the standard DI. Conversely, when the exponential form is employed, a high neighborhood ratio value indicates that the pixel is unchanged, and this form is used to generate the exponential DI. The two heterogeneous images and the fused image are each summed along the third dimension to extract data features, ensuring that the computation is performed in the same dimension. By adding the fused image, the standard DI can reduce the noise caused by the differences between the heterogeneous images and improve the extraction accuracy of the changed pixels. Exponential difference images reduce noise by exponentially amplifying the feature differences between heterogeneous images while ensuring that the acquired unchanged pixels are correct. By extracting pixel-level features, not only the spectral values of each pixel but also the rich texture and spatial context information are taken into account (Seo et al., 2018).
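The two operations mentioned above, collapsing multi-band images along the third dimension and computing a neighborhood ratio, can be sketched in NumPy as follows. This is only an illustration of the classic neighborhood ratio, not the improved equation (5): the function names, the window size `r=3`, the choice to compute the heterogeneity weight on the average of the two inputs, and the clipping of that weight to [0, 1] are all assumptions made for this sketch.

```python
import numpy as np


def collapse_bands(img):
    """Sum a (H, W, C) image along the third dimension; pass 2-D images through."""
    img = np.asarray(img, dtype=np.float64)
    return img.sum(axis=2) if img.ndim == 3 else img


def _window_sum(arr, r):
    """Sum over an r x r window centred on every pixel (edge-replicated padding)."""
    pad = r // 2
    padded = np.pad(arr, pad, mode="edge")
    out = np.zeros_like(arr)
    for dy in range(r):
        for dx in range(r):
            out += padded[dy:dy + arr.shape[0], dx:dx + arr.shape[1]]
    return out


def neighborhood_ratio(a, b, r=3, eps=1e-12):
    """Classic neighbourhood ratio between two non-negative single-band images.

    Values near 1 mean locally similar (likely unchanged); values near 0
    mean locally dissimilar (likely changed).
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    lo, hi = np.minimum(a, b), np.maximum(a, b)

    # Heterogeneity weight theta = std / mean of the neighbourhood.
    # Assumption: it is computed on the average of the two inputs and
    # clipped to [0, 1] so that it behaves as a proper weight.
    n = r * r
    both = (a + b) / 2.0
    mean = _window_sum(both, r) / n
    var = np.maximum(_window_sum(both ** 2, r) / n - mean ** 2, 0.0)
    theta = np.clip(np.sqrt(var) / (mean + eps), 0.0, 1.0)

    center = lo / (hi + eps)                               # centre-pixel ratio
    neigh = (_window_sum(lo, r) - lo) / (_window_sum(hi, r) - hi + eps)
    return theta * center + (1.0 - theta) * neigh
```

With this convention, `1 - neighborhood_ratio(...)` gives a map in which high values indicate change, which is the orientation the text describes for the standard DI. How the fused image is combined with the two inputs in the improved method is not reproduced here.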

The imaging mechanisms of heterogeneous images differ, and neither the physical meanings of the pixel values nor the numbers of channels are the same. As a result, the spectral and texture information are not directly comparable, so it is necessary to construct transitional images that are comparable to the original image. Therefore, this paper generates a transitional image by fusing the images from the earlier and later time phases. The transitional image is spectrally similar to the original image and hence spectrally comparable with it. At the same time, the transitional image contains rich texture information, and the spectral values of the changed pixels change significantly due to the injection of texture information. The transitional image constructed by fusing the two temporal phases can therefore provide complementary information: it is comparable to the original image in terms of radiance spectrum, channel number, and so on, and it is more sensitive to changed pixels, which effectively assists in generating self-supervised samples and supports more detailed and accurate CD.

The fusion method used in this paper is PC-SPCNN (Fu et al., 2023), which addresses the severe spectral distortion and the incomplete and inconspicuous feature injection in RS image CD methods. It exhibits exceptional resilience against nonlinear radiometric disparities and noise while preserving spectral and spatial information (Wu et al., 2021). The processing results of the fusion are shown in the accompanying figure. The generated spatiotemporal fused images have both detailed spectral and textural information. Thus, the proposed method, which generates DIs based on heterogeneous images and fused images, can reduce the influence of image differences and noise and improve the separability of changed and unchanged regions.

Bi-hierarchical FCM (BI-FCM) clustering algorithm

CD based on difference images has high detection accuracy and fast computational speed, but the thresholding problem affects the CD accuracy. When changed and unchanged samples are extracted using the hierarchical FCM clustering algorithm, the number of changed samples is small, and the extraction of unchanged samples is overly dependent on the threshold value (Chen et al., 2020; Gao et al., 2016; Geng et al., 2019; Hou et al., 2021; Zhan et al., 2018; Zheng et al., 2021). Therefore, in order to select credible samples to train the support vector machine binary classification model, this paper uses the bi-hierarchical FCM clustering algorithm to automatically extract changed and unchanged samples. The steps of the algorithm are as follows:

1) The FCM algorithm is executed on the DI to divide the pixels into three clusters, $\Omega_c$, $\Omega_i$, and $\Omega_u$, which represent changed pixels, intermediate pixels, and unchanged pixels, respectively. The number of pixels in $\Omega_c$ is denoted by $T$. The upper bound on the number of actual changed pixels is defined as $TT = \sigma \cdot T$, where $\sigma$ is experimentally determined to be 1.2 (Gao et al., 2016).

2) The FCM algorithm is executed on the DI to divide the pixels into five clusters, $\Omega_1$, $\Omega_2$, $\Omega_3$, $\Omega_4$, and $\Omega_5$, which are arranged in descending order of mean value: the mean value of the pixels in $\Omega_1$ is the largest, and that of $\Omega_5$ is the smallest. A cluster with a higher mean value indicates a higher change probability. The numbers of pixels in the five clusters are $T_1$, $T_2$, $T_3$, $T_4$, and $T_5$. Set t = 1 and c = $T_1$, and assign the pixels in $\Omega_1$ to the class $\Omega_c$ (Gao et al., 2016).

3) Set t = t + 1 and c = c + $T_t$ (Gao et al., 2016).

4) If c < TT, assign the pixels in $\Omega_t$ to $\Omega_i$. Otherwise, assign the pixels in $\Omega_t$ to $\Omega_u$. Repeat from step 3 until t = 5 (Gao et al., 2016).

5) Output the pre-classification result, which is composed of the labels {$\Omega_c$, $\Omega_i$, $\Omega_u$} (Gao et al., 2016).

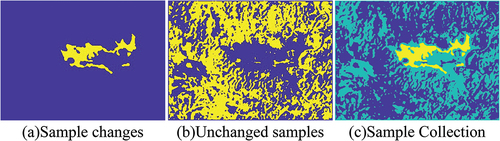

6) The non-zero elements of the classification result are set to 1 to obtain a binarized matrix, where 1 denotes the clustered region and 0 denotes the other regions.

7) The binarized matrix is labeled to obtain the label matrix and the number of labels.

8) Small areas are removed from the clustering results by traversing each label.

9) An image showing the changed pixels is obtained by labeling the regions with a clustering result value of 1. The above steps are repeated to obtain an image showing the unchanged pixels.

10) Combine the changed pixel and unchanged pixel regions, where unchanged pixels are labeled 0, changed pixels are labeled 1, and intermediate pixels are labeled 0.5.
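The hierarchical pre-classification in the first five steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: the tiny one-dimensional FCM, its quantile-based initialisation, the use of cluster centres as a stand-in for cluster mean values, and the names `fcm_1d` and `hierarchical_preclassify` are all assumptions made for this sketch. Only the value 1.2 for the threshold factor comes from the text.

```python
import numpy as np

CHANGED, INTERMEDIATE, UNCHANGED = 1.0, 0.5, 0.0


def fcm_1d(values, n_clusters, m=2.0, n_iter=100, tol=1e-6):
    """Minimal fuzzy c-means on scalar values; returns hard labels and centres."""
    x = np.asarray(values, dtype=np.float64).ravel()
    # Deterministic initial centres spread over the data quantiles (an assumption).
    centers = np.quantile(x, (np.arange(n_clusters) + 0.5) / n_clusters)
    for _ in range(n_iter):
        dist = np.abs(x[:, None] - centers[None, :]) + 1e-12
        # Standard FCM membership: proportional to dist^(-2 / (m - 1)).
        inv = dist ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=1, keepdims=True)
        um = u ** m
        new_centers = (um * x[:, None]).sum(axis=0) / um.sum(axis=0)
        converged = np.max(np.abs(new_centers - centers)) < tol
        centers = new_centers
        if converged:
            break
    # Hard label = cluster with the highest membership = nearest centre.
    labels = np.abs(x[:, None] - centers[None, :]).argmin(axis=1)
    return labels, centers


def hierarchical_preclassify(di, sigma=1.2):
    """Steps 1-5: label each DI pixel as changed (1), intermediate (0.5) or unchanged (0)."""
    x = np.asarray(di, dtype=np.float64)

    # Step 1: three clusters; the one with the largest centre is "changed".
    labels3, centers3 = fcm_1d(x, 3)
    t_changed = np.sum(labels3 == np.argmax(centers3))
    tt = sigma * t_changed                  # upper bound TT on changed pixels

    # Step 2: five clusters, visited in descending order of centre value.
    labels5, centers5 = fcm_1d(x, 5)
    order = np.argsort(centers5)[::-1]
    out = np.full(x.size, UNCHANGED)
    first = labels5 == order[0]
    out[first] = CHANGED
    c = np.sum(first)

    # Steps 3-4: accumulate cluster sizes and compare the running count with TT.
    for k in order[1:]:
        members = labels5 == k
        c += np.sum(members)
        out[members] = INTERMEDIATE if c < tt else UNCHANGED

    # Step 5: pre-classification map with the three labels.
    return out.reshape(x.shape)
```

The sketch assumes a DI in which larger values indicate change, as in the standard DI. For the exponential DI, where larger values indicate unchanged pixels, the same routine would be run with the roles of the two extreme classes exchanged.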

In the standard DI, the intensity value of the changed pixels is large, and the intensity value of the unchanged pixels is small. In the exponential DI, the intensity value of the unchanged pixels is large, and the intensity value of the changed pixels is small. Accurate changed pixels and unchanged pixels are obtained from the standard DI and the exponential DI, respectively. This avoids the problem of a small number of changed samples and poor accuracy of unchanged samples. The extraction of changed samples and the extraction of unchanged samples are independent of each other and place no requirement on the magnitude of the pixel values, and neither involves determining a double threshold.
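Steps 6 to 10, applied to a changed-pixel mask taken from the standard DI and an unchanged-pixel mask taken from the exponential DI, can be sketched as follows. The names `remove_small_regions` and `build_sample_map`, the `min_size` threshold, the default 4-connectivity, and the rule that a pixel flagged in both masks is treated as changed are assumptions made for illustration; the text does not specify them.

```python
import numpy as np
from scipy import ndimage


def remove_small_regions(mask, min_size):
    """Steps 6-9: binarise, label connected regions, drop regions below min_size."""
    binary = np.asarray(mask) != 0                   # step 6: non-zero -> 1
    labeled, n_labels = ndimage.label(binary)        # step 7: label matrix and count
    sizes = np.bincount(labeled.ravel(), minlength=n_labels + 1)
    keep = sizes >= min_size                         # step 8: filter by region size
    keep[0] = False                                  # label 0 is the background
    return keep[labeled]                             # step 9: cleaned boolean mask


def build_sample_map(changed_mask, unchanged_mask, min_size=4):
    """Step 10: merge both masks into a map with 1 / 0 / 0.5 labels."""
    changed = remove_small_regions(changed_mask, min_size)
    unchanged = remove_small_regions(unchanged_mask, min_size)
    sample_map = np.full(changed.shape, 0.5)         # everything starts as intermediate
    sample_map[unchanged] = 0.0
    sample_map[changed] = 1.0                        # assumption: changed wins on overlap
    return sample_map
```

Pixels that remain at 0.5 after this step are the intermediate pixels whose state is left to the classifier.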

Unlike the hierarchical FCM clustering algorithm, step (6) increases the number of changed and unchanged samples, and step (8) improves the accuracy of the extraction of changed and unchanged samples. The changed and unchanged pixels extracted using the bi-hierarchical FCM clustering algorithm are completely correct, and the results are shown in panels (a) and (b). The obtained sample set is shown in panel (c), with yellow pixels denoting changed pixels and blue pixels denoting unchanged pixels. These changed and unchanged pixels are selected as training samples. The cyan pixels represent the intermediate pixels, whose changed or unchanged state is determined by the SVM classifier. The acquired samples were divided into training and test sets, comprising 65% and 35% of the total samples, respectively.

Change detection

CD can be defined as a binary classification problem in which pixels are categorized as changed or unchanged. Compared to state-of-the-art neural network methods, an SVM classifier has low requirements for training samples, hardware, and software. In this process, appropriate feature selection plays a pivotal role (Asokan & Anitha, 2019; Lantzanakis et al., 2020; Shirani et al., 2023). This paper uses information from the fused images in the SVM model to increase the feature dimensionality and detect correct change information that cannot be detected from the original heterogeneous images. Cross-validation is employed to select the optimal hyperparameters and maximize the performance of the SVM model.
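A minimal sketch of this stage with scikit-learn is given below. It assumes that the per-pixel features are simply the stacked bands of the two heterogeneous images and the fused image, that the sample map uses the 0 / 0.5 / 1 labels described earlier, and that an RBF kernel with a small hyperparameter grid is searched by 3-fold cross-validation. The function name `classify_intermediate`, the feature construction, the kernel, and the grid values are illustrative assumptions, not settings reported in the paper.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def classify_intermediate(t1, t2, fused, sample_map):
    """Train on labelled pixels (0 or 1) and predict the intermediate ones (0.5)."""
    sample_map = np.asarray(sample_map, dtype=np.float64)
    h, w = sample_map.shape

    # Per-pixel feature vector: all bands of both images plus the fused image.
    feats = np.concatenate(
        [np.asarray(a, dtype=np.float64).reshape(h, w, -1) for a in (t1, t2, fused)],
        axis=2,
    ).reshape(h * w, -1)
    labels = sample_map.ravel()
    known = labels != 0.5

    # Cross-validated search over a small, hypothetical hyperparameter grid.
    grid = GridSearchCV(
        make_pipeline(StandardScaler(), SVC(kernel="rbf")),
        param_grid={"svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.1, 1.0]},
        cv=3,
    )
    grid.fit(feats[known], labels[known].astype(int))

    # Keep the self-supervised labels and fill in the intermediate pixels.
    result = labels.copy()
    if (~known).any():
        result[~known] = grid.predict(feats[~known])
    return result.reshape(h, w).astype(int), grid.best_params_
```

The returned map is a binary change map in which the self-supervised labels are kept as they are and only the intermediate pixels are decided by the classifier.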

Experimental datasets and evaluation metrics

Experimental datasets

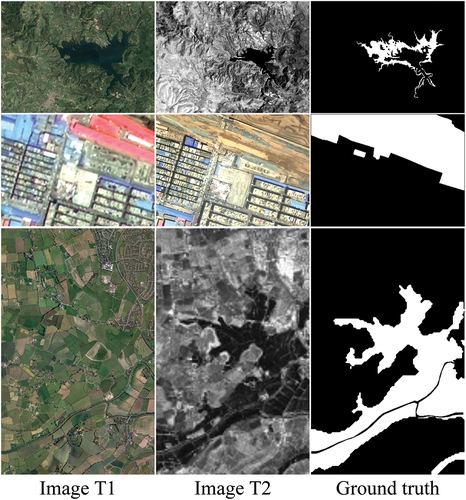

This paper uses three sets of heterogeneous images to validate the impact of fused images on DI generation and sample extraction accuracy, as shown in the figure above. Detailed information on the datasets is given in Table 1 (Longbotham et al., 2012; Mignotte, 2020). Dataset 1 is from Google Earth and Landsat-5 sensors (Sun et al., 2021). Dataset 2 is from GF1 and GF2 sensors. Dataset 3 is from TerraSAR-X and QuickBird2 sensors (Geng et al., 2019). All datasets are resampled, registered, and cropped to cover the same geographical area (Geng et al., 2019).

Table 1. Data set information.

Evaluation metrics

This paper adopts the F1-measure (Fm), the Kappa coefficient (Kc), and the PCC index to quantitatively evaluate the performance of the method (Agapiou, 2020). The corresponding measurement equations are expressed as equations (6), (7), and (8) (Gao et al., 2016; Xuan et al., 2022).

Fm is a comprehensive evaluation metric that integrates precision and recall. The Kappa coefficient is used to assess consistency (Pan et al., 2022). PCC denotes the classification correctness rate, while Nu and Nc denote the numbers of actual unchanged and changed pixels. The supporting quantities are given by equations (9), (10), (11), and (12), where TP, FP, TN, and FN stand for True Positives, False Positives, True Negatives, and False Negatives, respectively (Sun, Lei, Guan, Wu, et al., 2022; Xuan et al., 2022).
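The three metrics can be computed from the confusion counts as in the following sketch. It uses the standard definitions from the change detection literature, with the expected agreement term of Kappa written in terms of Nc and Nu; the function name `change_detection_metrics` and the returned key names are hypothetical, and the exact form of the paper's equations (6) to (12) is assumed rather than quoted.

```python
def change_detection_metrics(tp, fp, tn, fn):
    """Return PCC, Kappa and F1-measure from confusion counts."""
    n = tp + fp + tn + fn
    nc = tp + fn                      # number of actually changed pixels
    nu = tn + fp                      # number of actually unchanged pixels

    pcc = (tp + tn) / n               # overall rate of correct classification

    # Expected agreement by chance, then Cohen's Kappa.
    pre = ((tp + fp) * nc + (fn + tn) * nu) / (n * n)
    kappa = (pcc - pre) / (1.0 - pre) if pre != 1.0 else 0.0

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom else 0.0

    return {"PCC": pcc, "Kappa": kappa, "Fm": f1,
            "precision": precision, "recall": recall}
```

For example, with TP = 40, FP = 10, TN = 45, and FN = 5, the sketch gives PCC = 0.85, Kappa = 0.70, and Fm of about 0.842.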

Experimental results and evaluation

Change detection based on different difference images

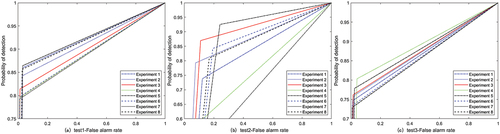

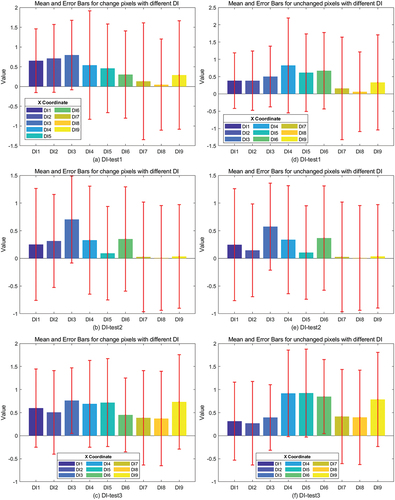

The DIs of datasets I, II, and III are depicted in the corresponding figures, where the value of changed pixels is higher than that of unchanged pixels in the standard difference images (DI1-T1/T2, DI2-F3/T2, DI3-(T1+F3)/T2). In contrast, the value of unchanged pixels is higher than that of changed pixels in the exponential DIs (DI4-T1/T2^1.5, DI5-F3/T2^1.5, DI6-(T1+F3)/T2^1.5, DI7-T1/T2^2, DI8-F3/T2^2, DI9-(T1+F3)/T2^2). The CD results vary with different DIs. The CD accuracies for datasets I, II, and III are presented in Tables 2, 3, and 4, respectively, and the ROC curves are shown in panels (a), (b), and (c) of the ROC figure, respectively.

Table 2. Data 1 Change detection accuracy of different difference images.

Table 3. Data 2 Change detection accuracy of different difference images.

Table 4. Data 3 Change detection accuracy of different difference images.

Compared to experiments 1 and 2 of dataset 1, the Kappa coefficient of experiment 3 is 5.63% and 7.12% higher, respectively. The remote sensing images of dataset 1 have high spectral variability, which leads to many errors in changed sample extraction based on DI1 and DI2. In comparison, the fusion of the heterogeneous images significantly reduces the spectral disparities. The accuracy of actual changed pixel detection is highest based on DI3-(T1+F3)/T2, and the corresponding Kappa coefficient reaches 0.8020. Compared with experiments 1 and 2 of dataset 2, the Kappa coefficient of experiment 3 is improved by 4.04% and 11.97%, respectively. The remote sensing images in dataset 2 have significant differences in texture information, resulting in elevated errors in changed sample extraction based on DI1 and DI2. By adding spectral and textural information, the fused images exhibit high homogeneity, so the adverse effect of texture differences on CD is weakened when DI3-(T1+F3)/T2 is used. Thus, the actual changed pixels are detected with the highest accuracy. Compared to experiments 1 and 2 of dataset 3, the Kappa coefficient of experiment 3 is 1.5% and 1.88% higher, respectively. The remote sensing images of dataset 3 have high spectral variability, which leads to many errors in changed sample extraction based on DI1 and DI2. In comparison, the fusion of the heterogeneous images significantly reduces the spectral disparities.

Compared with experiments 4, 5, 6, 7, 8, and 9 on dataset 1, the Kappa coefficient of experiment 3 is improved by 0.14–9.32%. Dataset 1 achieves good CD on DI4, DI6, DI7, and DI8, which can accurately identify the actual unchanged pixels. In particular, the Kappa coefficient on DI6 is as high as 0.8020, and this DI retains the complete changed and unchanged detail information. Compared with experiments 4, 5, 6, 7, 8, and 9 on dataset 2, the Kappa coefficient of experiment 3 is improved by 12–31.61%. At the same time, the range of pixel values of DI4, DI5, DI7, DI8, and DI9 is small, which can easily cause confusion between the actual changed and unchanged pixels. In contrast, the value range of DI6-(T1+F3)/T2^1.5 is wide, leading to good CD accuracy. Experiments on dataset 3 achieve good CD accuracy on the six exponential DIs, and compared to the other experiments, the Kappa coefficient of experiment 3 is improved by 0.74–2.26%. In particular, the range of values in DI6-(T1+F3)/T2^1.5 is the widest, which facilitates the distinction between actual changed and unchanged pixels.

Therefore, the standard DI3-(T1+F3)/T2 yields a high-quality DI and improves the accuracy of changed sample extraction, while the exponential DI6-(T1+F3)/T2^1.5 ensures the accuracy of unchanged sample extraction.

To quantitatively analyze the quality of the DIs, we use the Davies-Bouldin index, which is based on intra-cluster and inter-cluster distances and measures the tightness and separation of clusters (Wu & Chow, 2004). A smaller intra-cluster distance indicates better tightness, and a larger inter-cluster distance between changed and unchanged pixels indicates better separation. Thus, a small Davies-Bouldin index indicates a preferred clustering result with good tightness and separation. According to the Davies-Bouldin validity index, the optimal clustering minimizes (Roy et al., 2023; Wu & Chow, 2004)

$$DB = \frac{1}{C}\sum_{k=1}^{C}\max_{j \neq k}\left\{\frac{S_k + S_j}{d_{kj}}\right\}$$

where C is the number of clusters, $S_k$ is the average of the distances between all samples in cluster k and its cluster center, which measures the tightness of the cluster, and $d_{kj}$ is the distance between the centers of clusters k and j, which measures the degree of separation between the clusters (Baik et al., 2001).
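The index can be computed directly from its verbal definition, as in the following NumPy sketch. It assumes Euclidean distances and the usual form of the index, in which each cluster contributes its worst ratio of summed scatter to centre distance. The function name `davies_bouldin` and its argument layout are illustrative choices.

```python
import numpy as np


def davies_bouldin(values, labels):
    """Davies-Bouldin index of a labelled set of samples (lower is better)."""
    labels = np.asarray(labels)
    x = np.asarray(values, dtype=np.float64).reshape(len(labels), -1)
    ids = np.unique(labels)

    # Cluster centres and mean distance of each cluster's samples to its centre.
    centers = np.array([x[labels == k].mean(axis=0) for k in ids])
    scatter = np.array([
        np.linalg.norm(x[labels == k] - c, axis=1).mean()
        for k, c in zip(ids, centers)
    ])

    total = 0.0
    for k in range(len(ids)):
        # Worst-case similarity between cluster k and any other cluster.
        ratios = [
            (scatter[k] + scatter[j]) / np.linalg.norm(centers[k] - centers[j])
            for j in range(len(ids)) if j != k
        ]
        total += max(ratios)
    return total / len(ids)
```

For two clusters of DI values {0, 2} and {10, 12}, the scatter of each cluster is 1 and the centres are 10 apart, so the index is 0.2. Tightening both clusters or moving them further apart lowers the value.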

The error-bar figure presents the mean and the Davies-Bouldin index of the changed and unchanged pixels for the different DIs, with the results for datasets I, II, and III displayed in the first, second, and third columns, respectively. The central point of each error bar denotes the mean pixel value, while the length of the bar represents the Davies-Bouldin index. Shorter error bars indicate better tightness and separation of changed and unchanged pixels, and vice versa.

Comparing the different DIs in Experiment 1, in (a), the mean value of DI3 is larger than that of DI1 and DI2, which is favorable for extracting changed pixels. Its error bars are also shorter, indicating that the changed pixels are more tightly clustered and better separated from the unchanged pixels, which is favorable for dividing the changed and unchanged pixels. The mean values of DI7 and DI8 are smaller, which is favorable for extracting changed pixels. However, their error bars are longer, which is unfavorable for distinguishing between changed and unchanged pixels. In (d), the mean values of DI1, DI2, and DI3 are all larger, which is unfavorable for the extraction of unchanged pixels. Among DI4, DI5, DI6, DI7, DI8, and DI9, the mean values of DI4 and DI6 are larger, which is favorable for the extraction of unchanged pixels. The error bars of DI6 are shorter than those of DI4, indicating that the unchanged pixels in DI6 are more tightly clustered and better separated from the changed pixels, which is favorable for distinguishing unchanged pixels from other pixels.

Comparing the different DIs in Experiment 2: in (b), the mean value of DI3 is larger than that of DI1 and DI2, which is favorable for extracting changed pixels, and its error bars are shorter, indicating that the changed pixels are more tightly clustered and better separated from the unchanged pixels. The mean values of DI7, DI8, and DI9 are smaller, which is favorable for extracting changed pixels. However, their error bars are longer, which is unfavorable for distinguishing changed from unchanged pixels. In (e), the mean value of DI2 is smaller than that of DI1 and DI3, which is favorable for extracting unchanged pixels. However, its error bars are longer, which is unfavorable for distinguishing unchanged from changed pixels. Among DI4, DI5, DI6, DI7, DI8, and DI9, the mean value of DI6 is the largest and its error bar is the shortest, which is favorable for distinguishing changed from unchanged pixels.

Comparing the different DIs in Experiment 3, the DI values in (c) behave similarly to those in (a), and the DI values in (f) behave similarly to those in (d). In summary, the quantitative analysis of the DI plots further verifies that the standard DI3-(T1+F3)/T2 is favorable for extracting changed samples and that the exponential DI6-(T1+F3)/T2^1.5 is favorable for extracting unchanged samples. Therefore, this paper uses DI3-(T1+F3)/T2 for changed sample extraction and DI6-(T1+F3)/T2^1.5 for unchanged sample extraction.
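To make the two selected expressions concrete, the sketch below evaluates them pixel by pixel. It reads the labels (T1+F3)/T2 and (T1+F3)/T2^1.5 literally as element-wise ratios, which is an assumption: the neighborhood-based operator actually used to build the DIs is not reproduced here. The function name `difference_images`, the `eps` guard against division by zero, and the toy images are all hypothetical.

```python
def difference_images(t1, t2, f3, power=1.5, eps=1e-6):
    """Return (standard, exponential) DIs for equally sized 2-D images.

    Images are nested lists of non-negative floats. `eps` is an assumed
    guard against division by zero and is not part of the paper's notation.
    """
    standard, exponential = [], []
    for row1, row2, rowf in zip(t1, t2, f3):
        std_row, exp_row = [], []
        for a, b, f in zip(row1, row2, rowf):
            numerator = a + f
            # Literal reading of (T1 + F3) / T2.
            std_row.append(numerator / (b + eps))
            # Literal reading of (T1 + F3) / T2^1.5.
            exp_row.append(numerator / ((b + eps) ** power))
        standard.append(std_row)
        exponential.append(exp_row)
    return standard, exponential


# Hypothetical 1 x 2 images: time-1 image, time-2 image, fused image.
t1 = [[1.0, 2.0]]
t2 = [[4.0, 1.0]]
f3 = [[3.0, 2.0]]

standard_di, exponential_di = difference_images(t1, t2, f3)
print(standard_di)
print(exponential_di)
```

Under this reading, the larger exponent pushes down the DI values of pixels where T2 exceeds 1 more strongly than the standard ratio does, so the two DIs spread pixel values differently.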

CD under different fusion methods

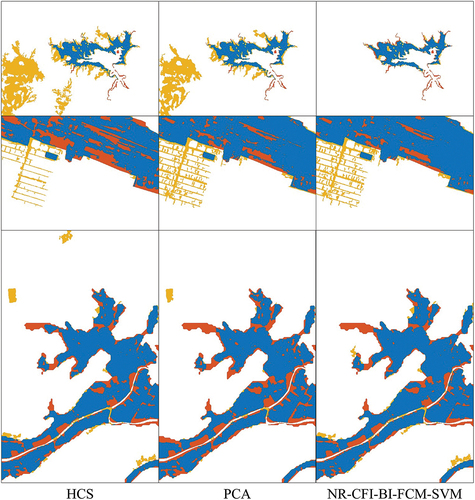

This subsection compares three methods based on different image fusion algorithms, namely HCS (Hao et al., 2019), PCA (Wang et al., 2005), and PC-SPCNN (Fu et al., 2023), to analyze the effect of the fusion method on the CD results. The CD results for the three datasets are shown in Figure 11, where red represents missed detection (MD) pixels, orange represents false alarm (FA) pixels, and blue represents correctly detected pixels. The accuracy results are shown in Tables 5, 6, and 7, respectively. The PC-SPCNN-based method maintains a more stable CD accuracy. Therefore, the PC-SPCNN algorithm is used for image fusion in this paper.

Table 5. Change detection accuracy of different fusion methods for Data 1.

Table 6. Change detection accuracy of different fusion methods for Data 2.

Table 7. Change detection accuracy of different fusion methods for Data 3.

Comparison of different CD methods

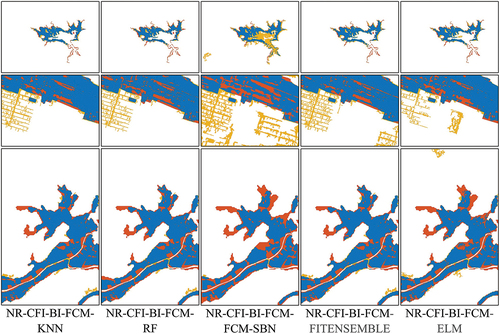

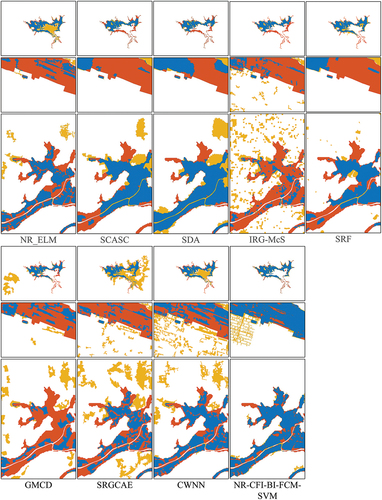

To validate the efficacy of the SVM in the self-supervised CD method based on a difference algorithm, five competing classifiers are employed for comparison: k-nearest neighbor (KNN) (Liu & Chen, 2022), random forest (RF) (Mastro et al., 2022), fuzzy C means-sensitive Bayesian network (FCM-SBN) (Li et al., 2023), FITENSEMBLE (Matikainen et al., 2016), and extreme learning machine (ELM) (Gao et al., 2016). The method was validated on three heterogeneous image datasets, with the same training samples fed into every classifier. The results are shown in Figure 12. In addition, the proposed method is compared with other existing CD methods: neighborhood-based ratio and extreme learning machine (NR-ELM) (Gao et al., 2016), sparse-constrained adaptive structure consistency (SCASC) (Sun et al., 2021), spectral domain analysis (SDA) (Sun et al., 2022), iterative robust graph and Markovian co-segmentation (IRG-McS) (Sun et al., 2021), structural regression fusion (SRF) (Sun et al., 2023), and the deep learning-based methods graph convolutional network and metric learning (GMCD) (Tang et al., 2021), structural relationship graph convolutional autoencoder (SRGCAE) (H. Chen et al., 2022), and convolutional-wavelet neural networks (CWNN) (Gao et al., 2019). Figure 13 shows the CD results of these methods.

Figure 12. Change detection results of different classifier methods for three heterogeneous image datasets.

Figure 13. Change detection results of different methods for three heterogeneous image datasets.

The KNN method updates the changed and unchanged pixel labels based on contextual information (Liu & Chen, 2022). The RF method is based on the classification and regression tree (CART) algorithm and makes no assumptions about the frequency distribution of the data (Mastro et al., 2022). FCM-SBN uses the FCM algorithm to decompose each pixel into several signal classes with different fuzzy memberships and uses the SBN to estimate the pixel-wise posterior probability vector for CD (Li et al., 2023). FITENSEMBLE classifies changed and unchanged pixels by constructing an ensemble of classification trees (Matikainen et al., 2016). As a CD classifier, ELM can adapt to different conditions and capture the nonlinear relationships of multi-temporal images (Gao et al., 2016). NR-ELM uses NR to obtain the pixels that are changed or unchanged with high probability and then classifies the original image with the ELM model (Gao et al., 2016). The SCASC method performs CD based on structural consistency by comparing the structures of the two images rather than their pixel values (Sun et al., 2021). SDA decomposes the source signal into a spectrally constrained regression signal and a change signal to perform the CD task (Sun et al., 2022). GMCD is an unsupervised CD method that leverages graph convolutional networks (GCN) and metric learning (Tang et al., 2021). The structural relationship graph convolutional autoencoder (SRGCAE) learns robust and representative features from graphs for multimodal remote sensing image CD (H. Chen et al., 2022). In CWNN, the dual-tree complex wavelet transform is incorporated into a convolutional neural network to classify changed and unchanged pixels (Gao et al., 2019).

Examples of change detection

Figures 12 and 13 show the detection results for Data I. NR-CFI-BI-FCM-FCM-SBN and NR-ELM significantly overestimate the change region, since the FCM-SBN and ELM classifiers make significant detection errors for changed and unchanged pixels. The SCASC method utilizes graphical extraction to obtain the structural information of the image. However, this method underestimates the change region, and the DI obtained from its regression process needs to be improved. The SDA method decomposes the source signal into a regression signal and a change signal and restricts the spectral characteristics of the regression signal; it also underestimates the change region, and its DI needs to be improved. Similarly, IRG-McS underestimates the changed regions, whereas SRF overestimates them. GMCD, which integrates DIs through multiscale decision fusion, overestimates the change region. SRGCAE, which uses a DI fusion strategy based on change intensity variance, also overestimates the change region, as does CWNN. By training on high-quality DIs, NR-CFI-BI-FCM-KNN, NR-CFI-BI-FCM-RF, NR-CFI-BI-FCM-FITENSEMBLE, NR-CFI-BI-FCM-ELM, and NR-CFI-BI-FCM-SVM obtain more accurate CD results with relatively few overestimated change regions. Among them, NR-CFI-BI-FCM-SVM has the smallest overestimated change regions and clear boundaries, because the SVM classification model incorporates detailed information from the fused images.

Because of the many shadows, the alignment accuracy of Data II is poor, resulting in many CD errors. Figures 12 and 13 show the detection results for Data II. NR-CFI-BI-FCM-FCM-SBN, NR-CFI-BI-FCM-FITENSEMBLE, and NR-CFI-BI-FCM-ELM severely overestimate the change regions, indicating that the FCM-SBN, FITENSEMBLE, and ELM classifiers label a large number of changed and unchanged pixels incorrectly. NR-ELM, SCASC, SDA, IRG-McS, SRF, GMCD, and SRGCAE severely underestimate the change region, indicating that the DIs obtained by these methods need to be further improved. CWNN produces more false detections and missed detections. NR-CFI-BI-FCM-KNN, NR-CFI-BI-FCM-RF, and NR-CFI-BI-FCM-SVM obtain more accurate CD results by training on high-quality DIs, but slightly underestimate the change region. Among them, NR-CFI-BI-FCM-SVM shows the least underestimation of the change region.

Figures 12 and 13 show the detection results for Data III. Compared with NR-CFI-BI-FCM-ELM, the detection error of NR-ELM is evident, which verifies the reliability of the high-quality DI in heterogeneous image CD. In addition, the classification results of NR-CFI-BI-FCM-KNN, NR-CFI-BI-FCM-RF, NR-CFI-BI-FCM-FCM-SBN, NR-CFI-BI-FCM-FITENSEMBLE, and NR-CFI-BI-FCM-SVM are accurate. In contrast, the SCASC, SDA, IRG-McS, SRF, GMCD, SRGCAE, and CWNN methods make significant errors in detecting changed and unchanged pixels.

Quantitative analysis of change

The CD accuracy for Data I is presented in Tables 8 and 9. Compared with the NR-ELM method, the FA rate of the NR-CFI-BI-FCM-ELM method is reduced by 0.0232, and the Kappa coefficient is improved by 0.1391, which verifies the superior performance of the improved DI in heterogeneous image CD. Similarly, the improved DI achieves promising results with the KNN, RF, FITENSEMBLE, and SVM classifiers. In particular, the SVM-based method obtained the highest Kappa coefficient of 0.8020 with a decreased FA rate compared to the other classifiers. The MD rate of NR-CFI-BI-FCM-FCM-SBN is lower than that of NR-CFI-BI-FCM-SVM, while the FA rate is improved by 0.0440 by establishing a many-to-many relationship between the signal class and the ground cover types. Compared to NR-CFI-BI-FCM-SVM, SCASC, SDA, and IRG-McS have lower FA rates. However, these methods confuse many changed pixels with unchanged pixels, which leads to higher MD rates. Further, the SRF, GMCD, and SRGCAE methods have higher FA rates and lower MD rates. In contrast, the method proposed in this paper has a low FA rate and an MD rate of 0.0135 and obtains better Kappa, F1, and PCC values than all other competing methods.

Table 8. Change detection accuracy of different classifier methods for Data 1.

Table 9. Change detection accuracy of different methods for Data 1.

The CD accuracy for Data II is presented in Tables 10 and 11. Compared with the NR-ELM method, the FA rate of the NR-CFI-BI-FCM-ELM method is increased by 0.0467, the MD rate is reduced by 0.2325, and the Kappa coefficient is improved by 0.5210, which verifies the reliability of the improved DI in heterogeneous image CD. Similarly, the high-quality DIs yield accurate CD results with the KNN, RF, FITENSEMBLE, and SVM classifiers. With the SVM classifier, the Kappa coefficient ranks first and the MD rate is the lowest, at 0.0458. Compared with the NR-CFI-BI-FCM-SVM method, the FA and MD rates of NR-CFI-BI-FCM-FCM-SBN are increased by 0.1180 and 0.0573, respectively. Compared with the NR-CFI-BI-FCM-SVM method, the SCASC, SDA, IRG-McS, SRF, GMCD, and SRGCAE methods decreased the FA rate by 0.0525, 0.0490, 0.0167, 0.0512, 0.0465, and 0.0118, respectively, but increased the MD rate by 0.2688, 0.1642, 0.2468, 0.2486, 0.2013, and 0.2473, respectively. In contrast, the CWNN method increased the FA and MD rates by 0.0893 and 0.1529, respectively. Tables 10 and 11 show that the FA rates for Data II are relatively high because the shadows result in poor alignment accuracy. Nevertheless, the final Kappa, F1, and PCC values of the proposed method are the highest.

Table 10. Change detection accuracy of different classifier methods for Data 2.

Table 11. Change detection accuracy of different methods for Data 2.

The CD accuracy for Data III is presented in Tables 12 and 13. Compared with the NR-ELM method, the MD rate of the NR-CFI-BI-FCM-ELM method is increased by 0.0589, and the FA rate is reduced by 0.2714, which demonstrates the reliability of the improved DI in heterogeneous image CD. Similarly, the KNN, RF, FCM-SBN, FITENSEMBLE, and SVM classifiers also achieved satisfactory results. Among them, the SVM-based CD method obtained a Kappa coefficient of 0.8063 and a low MD rate of 0.0606. Compared with the NR-CFI-BI-FCM-SVM method, the SCASC, IRG-McS, SRF, GMCD, SRGCAE, and CWNN methods increased the FA rate by 0.0497, 0.0896, 0.0064, 0.0575, 0.1230, and 0.0576, and the MD rate by 0.0286, 0.1461, 0.0529, 0.1484, 0.0741, and 0.0317, respectively. The SDA method decreased the MD rate by 0.0111 but increased the FA rate by 0.0774. Overall, the proposed method demonstrated superiority over the other competitors in terms of the Kappa, F1, and PCC values.

Table 12. Change detection accuracy of different classifier methods for Data 3.

Table 13. Change detection accuracy of different methods for Data 3.

In conclusion, the improved DI performs better in heterogeneous image CD. Among the compared classifiers, the SVM-based CD model used in the proposed method exhibits the highest level of stability. Furthermore, compared with competitive approaches, our method demonstrates the lowest instances of false and missed detections and achieves the highest Kappa coefficient across all three datasets.
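For reference, the accuracy measures quoted in this subsection can all be derived from a binary confusion matrix. The following Python sketch shows one common way to do so. It assumes that the FA rate is the fraction of truly unchanged pixels labelled as changed and that the MD rate is the fraction of truly changed pixels labelled as unchanged; other conventions exist, and the exact definitions used for the tables are not restated here. The function name `cd_metrics` and the pixel counts are hypothetical.

```python
def cd_metrics(tp, fp, fn, tn):
    """Accuracy measures for a binary change map.

    tp: changed pixels detected as changed
    fp: unchanged pixels detected as changed (false alarms)
    fn: changed pixels detected as unchanged (missed detections)
    tn: unchanged pixels detected as unchanged
    """
    total = tp + fp + fn + tn
    # Assumed definitions of the two error rates (see the lead-in).
    fa_rate = fp / (fp + tn)
    md_rate = fn / (fn + tp)
    # Percentage of correct classification.
    pcc = (tp + tn) / total
    # Agreement expected by chance, needed for the Kappa coefficient.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (pcc - expected) / (1 - expected)
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * tp / (2 * tp + fp + fn)
    return {"FA": fa_rate, "MD": md_rate, "PCC": pcc, "Kappa": kappa, "F1": f1}


# Hypothetical pixel counts, for illustration only.
metrics = cd_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because Kappa discounts chance agreement, it is a stricter summary than PCC when unchanged pixels dominate the scene, which is why the comparison above ranks methods primarily by Kappa.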

Conclusion

This paper proposes a self-supervised change detection method based on a difference algorithm to overcome the poor DI quality caused by the differences between heterogeneous images and by noise. Although existing change detection methods also adopt the fusion idea, this paper differs in that the fused data are used to suppress the differences and noise of the heterogeneous images, which improves the neighborhood ratio difference algorithm. To reduce the labor and material cost of sample extraction, we improved the hierarchical FCM clustering algorithm. Unlike existing sample extraction methods, the changed and unchanged samples are extracted independently in this paper: the standard difference image and the exponential difference image are used, respectively, to ensure the quality of the extracted samples and to keep the change detection accuracy from depending on a threshold value. Change detection is accomplished using the improved neighborhood ratio difference image method, which improves change detection accuracy. This process realizes self-supervised sample extraction and improves the efficiency of change detection. Experiments on heterogeneous image datasets from three distinct scenes confirm the effectiveness of the proposed method.

Our approach exhibits high accuracy and stability compared to up-to-date CD methods. The ablation experiments of competitive classifiers also justify our method’s adoption of the SVM classifier. In addition, experiments have confirmed that adding fused images without auxiliary hyperspectral and high spatial resolution image data can generate high-quality DIs, which can be used to extract high-quality samples. Furthermore, the clustering method used does not overly depend on the thresholds.

However, the proposed method has two limitations that must be addressed in future work. First, the chosen parameters for calculating the exponential DI may not be optimal, and an iterative framework will be used to improve the CD accuracy. Second, when working with multi-source remote sensing images with complex spectral and textural features, the fusion method employed in this study might not yield a consistently stable outcome. Therefore, the fusion method has to be improved to enhance the adaptability of the proposed method.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Agapiou, A. (2020). Evaluation of Landsat 8 OLI/TIRS level-2 and sentinel 2 level-1C fusion techniques intended for image segmentation of archaeological landscapes and proxies. Remote Sensing, 12(3), 579. https://doi.org/10.3390/rs12030579

- Asokan, A., & Anitha, J. (2019). Change detection techniques for remote sensing applications: A survey. Earth Science Informatics, 12, 143–21.

- Baik, S.-H., Park, S.-K., Kim, C.-J., & Kim, S.-Y. (2001). Two-channel spatial phase shifting electronic speckle pattern interferometer. Optics Communications, 192(3–6), 205–211. https://doi.org/10.1016/S0030-4018(01)01223-8

- Chen, H., Yokoya, N., Wu, C., & Du, B. (2022). Unsupervised multimodal change detection based on structural relationship graph representation learning. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–18.

- Chen, Y., Ming, Z., & Menenti, M. (2020). Change detection algorithm for multi-temporal remote sensing images based on adaptive parameter estimation. IEEE Access, 8, 106083–106096. https://doi.org/10.1109/ACCESS.2020.2993910

- Davari, N., Akbarizadeh, G., & Mashhour, E. (2021). Corona detection and power equipment classification based on GoogleNet-AlexNet: An accurate and intelligent defect detection model based on deep learning for power distribution lines. IEEE Transactions on Power Delivery, 37(4), 2766–2774. https://doi.org/10.1109/TPWRD.2021.3116489

- Dong, H., Ma, W., Wu, Y., Zhang, J., & Jiao, L. (2020). Self-supervised representation learning for remote sensing image change detection based on temporal prediction. Remote Sensing, 12(11), 1868. https://doi.org/10.3390/rs12111868

- Du, Z., Li, X., Miao, J., Huang, Y., Shen, H., & Zhang, L. (2023). Concatenated deep learning framework for multi-task change detection of optical and SAR images. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 17, 719–731.

- Fan, J., Zhang, M., Chen, J., Zuo, J., Shi, Z., & Ji, M. (2023). Building change detection with deep learning by fusing spectral and texture features of multisource remote sensing images: A GF-1 and sentinel 2B data case. Remote Sensing, 15(9), 2351.

- Ferraris, V., Dobigeon, N., & Chabert, M. (2020). Robust fusion algorithms for unsupervised change detection between multi-band optical images: A comprehensive case study. Information Fusion, 64, 293–317.

- Fu, Y., Yang, S., Li, Y., Yan, H., & Zheng, Y. (2023). A novel SAR and optical image fusion algorithm based on an improved SPCNN and phase congruency information. International Journal of Remote Sensing, 44(4), 1328–1347. https://doi.org/10.1080/01431161.2023.2179899

- Gao, F., Dong, J., Li, B., Xu, Q., & Xie, C. (2016). Change detection from synthetic aperture radar images based on neighborhood-based ratio and extreme learning machine. Journal of Applied Remote Sensing, 10(4), 046019–046019. https://doi.org/10.1117/1.JRS.10.046019

- Gao, F., Wang, X., Gao, Y., Dong, J., & Wang, S. (2019). Sea ice change detection in SAR images based on convolutional-wavelet neural networks. IEEE Geoscience and Remote Sensing Letters, 16(8), 1240–1244. https://doi.org/10.1109/LGRS.2019.2895656

- Geng, J., Ma, X., Zhou, X., & Wang, H. (2019). Saliency-guided deep neural networks for SAR image change detection. IEEE Transactions on Geoscience and Remote Sensing, 57(10), 7365–7377. https://doi.org/10.1109/TGRS.2019.2913095

- Hao, F., Feng, Y., & Guan, Y. J. C. (2019). A novel botulinum toxin TAT-EGFP-HCS fusion protein capable of specific delivery through the blood-brain barrier to the central nervous system. CNS & Neurological Disorders - Drug Targets, 18(1), 37–43. https://doi.org/10.2174/1871527317666181011113215

- Hou, Z., Li, W., Tao, R., & Du, Q. (2021). Three-order tucker decomposition and reconstruction detector for unsupervised hyperspectral change detection. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 14, 6194–6205. https://doi.org/10.1109/JSTARS.2021.3088438

- Jiang, A., Dai, J., Yu, S., Zhang, B., Xie, Q., & Zhang, H. (2022). Unsupervised change detection around subways based on SAR combined difference images. Remote Sensing, 14(17), 4419.

- Jiang, X., Li, G., Liu, Y., Zhang, X.-P., & He, Y. (2020). Change detection in heterogeneous optical and SAR remote sensing images via deep homogeneous feature fusion. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 13, 1551–1566.

- Lantzanakis, G., Mitraka, Z., & Chrysoulakis, N. (2020). X-SVM: An extension of C-SVM algorithm for classification of high-resolution satellite imagery. IEEE Transactions on Geoscience and Remote Sensing, 59, 3805–3815.

- Li, H., Zhu, F., Zheng, X., Liu, M., & Chen, G. (2022). MSCDUNet: A deep learning framework for built-up area change detection integrating multispectral, SAR, and VHR data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 15, 5163–5176. https://doi.org/10.1109/JSTARS.2022.3181155

- Li, X., Du, Z., Huang, Y., & Tan, Z. (2021). A deep translation (GAN) based change detection network for optical and SAR remote sensing images. ISPRS Journal of Photogrammetry and Remote Sensing, 179, 14–34. https://doi.org/10.1016/j.isprsjprs.2021.07.007

- Li, Y., Li, X., Song, J., Wang, Z., He, Y., & Yang, S. (2023). Remote-sensing-based change detection using change vector analysis in posterior probability space: A context-sensitive Bayesian network approach. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 16, 3198–3217. https://doi.org/10.1109/JSTARS.2023.3260112

- Liu, J., Gong, M., Qin, K., & Zhang, P. (2016). A deep convolutional coupling network for change detection based on heterogeneous optical and radar images. IEEE Transactions on Neural Networks and Learning Systems, 29, 545–559.

- Liu, Y.-W., & Chen, H. (2022). A fast and efficient change-point detection framework based on approximate k-nearest neighbor graphs. IEEE Transactions on Signal Processing, 70, 1976–1986. https://doi.org/10.1109/TSP.2022.3162120

- Longbotham, N., Pacifici, F., Glenn, T., Zare, A., Volpi, M., Tuia, D., Christophe, E., Michel, J., Inglada, J., & Chanussot, J. (2012). Multi-modal change detection, application to the detection of flooded areas: Outcome of the 2009–2010 data fusion contest. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 5, 331–342.

- Lv, Z., Huang, H., Li, X., Zhao, M., Benediktsson, J. A., Sun, W., & Falco, N. (2022). Land cover change detection with heterogeneous remote sensing images: Review, progress, and perspective. Proceedings of the IEEE, 110(12), 1976–1991. https://doi.org/10.1109/JPROC.2022.3219376

- Mastro, P., Masiello, G., Serio, C., & Pepe, A. (2022). Change detection techniques with synthetic aperture radar images: Experiments with random forests and sentinel-1 observations. Remote Sensing, 14(14), 3323. https://doi.org/10.3390/rs14143323

- Matikainen, L., Hyyppä, J., & Litkey, P. (2016). Multispectral airborne laser scanning for automated map updating. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 41, 323–330. https://doi.org/10.5194/isprs-archives-XLI-B3-323-2016

- Mignotte, M. (2020). A fractal projection and Markovian segmentation-based approach for multimodal change detection. IEEE Transactions on Geoscience and Remote Sensing, 58(11), 8046–8058.

- Niu, X., Gong, M., Zhan, T., & Yang, Y. (2018). A conditional adversarial network for change detection in heterogeneous images. IEEE Geoscience and Remote Sensing Letters, 16(1), 45–49.

- Ou, X., Liu, L., Tu, B., Zhang, G., & Xu, Z. (2022). A CNN framework with slow-fast band selection and feature fusion grouping for hyperspectral image change detection. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–16.

- Pan, J., Cui, W., An, X., Huang, X., Zhang, H., Zhang, S., Zhang, R., Li, X., Cheng, W., & Hu, Y. (2022). MapsNet: Multi-level feature constraint and fusion network for change detection. International Journal of Applied Earth Observation and Geoinformation, 108, 102676.

- Pan, J., Li, X., Cai, Z., Sun, B., & Cui, W. (2022). A self-attentive hybrid coding network for 3D change detection in high-resolution optical stereo images. Remote Sensing, 14(9), 2046. https://doi.org/10.3390/rs14092046

- Roy, S. K., Deria, A., Hong, D., Rasti, B., Plaza, A., & Chanussot, J. (2023). Multimodal fusion transformer for remote sensing image classification. IEEE Transactions on Geoscience and Remote Sensing, 61, 1–20. https://doi.org/10.1109/TGRS.2023.3286826

- Samadi, F., Akbarizadeh, G., & Kaabi, H. (2019). Change detection in SAR images using deep belief network: A new training approach based on morphological images. IET Image Processing, 13(12), 2255–2264. https://doi.org/10.1049/iet-ipr.2018.6248

- Seo, D. K., Kim, Y. H., Eo, Y. D., Lee, M. H., & Park, W. Y. (2018). Fusion of SAR and multispectral images using random forest regression for change detection. ISPRS International Journal of Geo-Information, 7(10), 401. https://doi.org/10.3390/ijgi7100401

- Shi, J., Liu, X., Yang, S., Lei, Y., & Tian, D. (2021). An initialization friendly Gaussian mixture model based multi-objective clustering method for SAR images change detection. Journal of Ambient Intelligence and Humanized Computing, 1–13.

- Shi, J., Wu, T., Yu, H., Qin, A. K., Jeon, G., & Lei, Y. (2023). Multi-layer composite autoencoders for semi-supervised change detection in heterogeneous remote sensing images. Science China Information Sciences, 66, 140308.

- Shirani, K., Solhi, S., & Pasandi, M. (2023). Automatic landform recognition, extraction, and classification using kernel pattern modeling. Journal of Geovisualization and Spatial Analysis, 7(1), 2.

- Sun, Y., Lei, L., Guan, D., & Kuang, G. (2021). Iterative robust graph for unsupervised change detection of heterogeneous remote sensing images. IEEE Transactions on Image Processing, 30, 6277–6291. https://doi.org/10.1109/TIP.2021.3093766

- Sun, Y., Lei, L., Guan, D., Kuang, G., & Liu, L. (2022). Graph signal processing for heterogeneous change detection. IEEE Transactions on Image Processing, 60, 1–23.

- Sun, Y., Lei, L., Guan, D., Li, M., & Kuang, G. (2021). Sparse-constrained adaptive structure consistency-based unsupervised image regression for heterogeneous remote-sensing change detection. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–14.

- Sun, Y., Lei, L., Guan, D., Wu, J., & Kuang, G. (2022). Iterative robust graph for unsupervised change detection of heterogeneous remote sensing images. Pattern Recognition, 131, 108845.

- Sun, Y., Lei, L., Li, X., Tan, X., & Kuang, G. (2021). Structure consistency-based graph for unsupervised change detection with homogeneous and heterogeneous remote sensing images. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–21.

- Sun, Y., Lei, L., Liu, L., Kuang, G., Ren, K., & Peng, J. (2023). Coupled temporal variation information estimation and resolution enhancement for remote sensing spatial–temporal–spectral fusion. IEEE Transactions on Geoscience and Remote Sensing, 61, 1–18. https://doi.org/10.1109/TGRS.2023.3335418

- Tang, X., Zhang, H., Mou, L., Liu, F., Zhang, X., Zhu, X. X., & Jiao, L. (2021). An unsupervised remote sensing change detection method based on multiscale graph convolutional network and metric learning. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–15.

- Wang, Z., Ziou, D., Armenakis, C., Li, D., & Li, Q. (2005). A comparative analysis of image fusion methods. IEEE Transactions on Geoscience and Remote Sensing, 43(6), 1391–1402. https://doi.org/10.1109/TGRS.2005.846874

- Wu, J., Li, B., Qin, Y., Ni, W., Zhang, H., Fu, R., & Sun, Y. (2021). A multiscale graph convolutional network for change detection in homogeneous and heterogeneous remote sensing images. International Journal of Applied Earth Observation and Geoinformation, 105, 102615.

- Wu, S., & Chow, T. W. (2004). Clustering of the self-organizing map using a clustering validity index based on inter-cluster and intra-cluster density. Pattern Recognition, 37(2), 175–188. https://doi.org/10.1016/S0031-3203(03)00237-1

- Xu, D., Li, M., Wu, Y., Zhang, P., Xin, X., & Yang, Z. (2023). Difference-guided multiscale graph convolution network for unsupervised change detection in PolSAR images. Neurocomputing, 555, 126611.

- Xuan, J., Xin, Z., Liao, G., Huang, P., Wang, Z., & Sun, Y. (2022). Change detection based on fusion difference image and multi-scale morphological reconstruction for SAR images. Remote Sensing, 14(15), 3604.

- Ye, S., Chen, D., & Yu, J. (2016). A targeted change-detection procedure by combining change vector analysis and post-classification approach. ISPRS Journal of Photogrammetry and Remote Sensing, 114, 115–124. https://doi.org/10.1016/j.isprsjprs.2016.01.018

- Yin, H., Ma, C., Weng, L., Xia, M., & Lin, H. (2023). Bitemporal remote sensing image change detection network based on siamese-attention feedback architecture. Remote Sensing, 15(17), 4186. https://doi.org/10.3390/rs15174186

- Zhan, T., Gong, M., Jiang, X., & Li, S. (2018). Log-based transformation feature learning for change detection in heterogeneous images. IEEE Geoscience and Remote Sensing Letters, 15(9), 1352–1356. https://doi.org/10.1109/LGRS.2018.2843385

- Zheng, X., Chen, X., Lu, X., & Sun, B. (2021). Unsupervised change detection by cross-resolution difference learning. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–16. https://doi.org/10.1109/TGRS.2021.3079907

- Zhuang, H., Fan, H., Deng, K., & Yu, Y. (2018). An improved neighborhood-based ratio approach for change detection in SAR images. European Journal of Remote Sensing, 51(1), 723–738. https://doi.org/10.1080/22797254.2018.1482523