?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

The distributional pattern of words in language forms the basis of linguistic distributional knowledge and contributes to conceptual processing, yet many questions remain regarding its role in cognition. We propose that corpus-based linguistic distributional models can represent a cognitively plausible approach to understanding linguistic distributional knowledge when assumed to represent an essential component of semantics, when trained on corpora representative of human language experience, and when they capture the diverse distributional relations that are useful to cognition. Using an extensive set of cognitive tasks that vary in the complexity of conceptual processing required, we systematically evaluate a wide range of model families, corpora, and parameters, and demonstrate that there is no one-size-fits-all approach for how linguistic distributional knowledge is used across cognition. Rather, linguistic distributional knowledge is a rich source of information about the world that can be accessed flexibly according to the conceptual complexity of the task at hand.

Introduction

Linguistic distributional knowledge emerges from our experience with language. Humans are continually exposed to a rich environment of natural language and, through this exposure, learn patterns of linguistic distributional information; that is, statistical regularities in the occurrences of different words in different contexts (e.g. Hall et al., Citation2018; Lazaridou et al., Citation2017; Wonnacott et al., Citation2008). Famously summarised by Firth (Citation1957, p. 179) as “You shall know a word by the company it keeps”, these regularities form the basis of the distributional hypothesis: words with similar meanings tend to appear in similar contexts. For instance, the word cat tends to appear in contexts concerning pet, fur, collar, purring, claws, and so on. The word kitten tends to appear in many of the same contexts, and the similarity of cat and kitten can thus be estimated by the similarity of their contexts. Linguistic distributional knowledge therefore represents conceptual knowledge as statistical patterns of how words are distributed in relation to one another (Barsalou et al., Citation2008; Connell, Citation2019; Connell & Lynott, Citation2014; Louwerse, Citation2011; Louwerse & Jeuniaux, Citation2010; Vigliocco et al., Citation2009), and empirical research shows that it is powerful enough to support a variety of conceptual processes (e.g. Connell & Lynott, Citation2013; Lenci et al., Citation2018; Louwerse & Jeuniaux, Citation2008).

Research on linguistic distributional models (LDMs)Footnote1 has developed computational means of capturing and approximating word meaning from statistical analyses of associations between words and their contexts in large corpora of text. Where the corpora are reasonably representative of a natural linguistic environment, the associations learned by an LDM can be considered to approximate those which could be learned by a person exposed to that environment. While specific LDMs differ in their learning mechanisms, their common goal of constructing distributional representations of meaning has become increasingly important to the cognitive sciences since the mid 1990s. At a theoretical level, the potential ability to extract complex meaning from a limited set of words has led some researchers to suggest that LDMs could go some way to solving Plato’s problem (i.e. poverty of the stimulus: Landauer & Dumais, Citation1997). Indeed, early LDMs such as Latent Semantic Analysis (LSA: Landauer & Dumais, Citation1997) and the Hyperspace Analog to Language (HAL: Lund & Burgess, Citation1996) were able to approximate human performance in an impressive set of tasks, such as TOEFL synonym matching (Landauer & Dumais, Citation1997), semantic priming (Lund et al., Citation1995), and category typicality rating (Connell & Ramscar, Citation2001). However, the limitations of LDMs soon emerged (e.g. Glenberg & Robertson, Citation2000; Perfetti, Citation1998). For instance, LDMs have difficulty inducing novel actions for objects (Glenberg & Robertson, Citation2000), at least in part because the distributional patterns in language are limited to the kinds of human experience about which people have talked or written (Connell, Citation2019). Nonetheless, the ability of LDMs to capture many aspects of meaning should not be underestimated, and researchers from across the cognitive sciences have continued to debate the extent to which distributional information plays a role in human cognitive processing (e.g. Andrews et al., Citation2014; Connell & Lynott, Citation2014; Dove, Citation2014; Günther et al., Citation2019; Kumar, Citation2021; Lenci, Citation2018; Louwerse, Citation2011; Lupyan & Lewis, Citation2019; McNamara, Citation2011).

In the present paper, we review the role of linguistic distributional knowledge in cognition and examine how LDMs can contribute to our understanding of this important area. We first discuss the cognitive plausibility of LDMs as a general approach to modelling human cognition, from the perspective of the symbol grounding problem, the representativeness of training corpora in terms of human language experience, and the nature of conceptual relations captured by LDMs. We then turn to specific approaches of how LDMs model linguistic distributional knowledge using different model families and corpora that vary in size and quality, and discuss how the largely parallel literatures in distributional semantics and linguistic–simulation research have led to different assumptions regarding how linguistic distributional knowledge is used in cognition. In the remainder of the paper, we report the most comprehensive investigation to date of linguistic distributional knowledge in cognition. We construct a large set of LDMs (540 in total) that vary systematically across model families, training corpora, and parameters, and evaluate their ability to capture human performance across a broad set of cognitive tasks, from conceptually simple tasks that rely on a single paradigmatic relation to conceptually complex tasks that require sophisticated processing of a wide variety of semantic relations, particularly of the abstracted bag-of-words type. Overall, we find that LDMs successfully model human behaviour in all tasks but that the optimal LDM varies as the conceptual complexity increases, indicating that there is no one-size-fits-all approach for how linguistic distributional knowledge is used across cognition. Rather, the data support a task-dependent, flexible approach to the use of linguistic distributional knowledge in cognition. We discuss the cross-disciplinary theoretical and methodological implications of viewing linguistic distributional knowledge as a rich source of information about the world that can be accessed flexibly according to cognitive need.

Cognitive plausibility of linguistic distributional models

The cognitive plausibility of LDMs has been a concern since their inception and continues to be a matter of debate (Barsalou, Citation2017; Boleda & Herbelot, Citation2016; Glenberg & Robertson, Citation2000; Günther et al., Citation2019; Perfetti, Citation1998). Some critics have targeted low-level implementational details of specific models, such as the use of supervised learning in Mikolov, Chen, et al.’s (Citation2013) Word2vec models (e.g. Huebner & Willits, Citation2018; cf. Hollis, Citation2017). For our present purposes, however, we focus in this section on issues that are general to LDMs as an approach to modelling human cognition, namely symbol grounding, choice of training corpus, and nature of captured distributional relations.

Symbol grounding

First is the symbol grounding problem. The ungrounded nature of representations within LDMs makes them theoretically problematic as a sole account of meaning. When words are connected only to other words, their grasp on semantics quickly runs into the artificial circularity of Searle’s (Citation1980) Chinese room (see also Harnad, Citation1990), and this problem remains a perennial point of discussion in theoretical reviews of the linguistic distributional approach (e.g. Emerson, Citation2020; Glenberg & Robertson, Citation2000; Kumar, Citation2021). However, according to linguistic–simulation theories of concepts and cognition, linguistic distributional knowledge is explicitly grounded in simulations of perceptual and action experience. These theories propose that human conceptual knowledge is represented partly as associative patterns of how words are distributed in relation to one another and partly as an embodied simulation (i.e. partial replay) of sensorimotor experience, and include accounts such as language as situated simulation (LASS: Barsalou et al., Citation2008), the symbol interdependency hypothesis (Louwerse, Citation2011; Louwerse & Jeuniaux, Citation2008), and the linguistic shortcut hypothesis (Connell, Citation2019; Connell & Lynott, Citation2014), amongst others (e.g. Lynott & Connell, Citation2010; Vigliocco et al., Citation2009). Critically, when words are connected to sensorimotor (sometimes called embodied) representations as well as to other words, they are not subject to the symbol grounding problem. For example, linguistic distributional knowledge of dog may include words such as collar, tail, cat, walkies, etc., and each of these words is grounded in sensorimotor information (e.g. visual, auditory, hand action) in its own right. Indeed, some linguistic–simulation accounts argue that sensorimotor experience of a referent concept is not necessary for grounding, and that distributional connections between words can help to infer grounded representations where they are lacking (Louwerse, Citation2011; see also Johns & Jones, Citation2012). Other work in computational cognitive modelling has aimed to create grounded models of conceptual representation by incorporating both forms of information (e.g. Banks et al., Citation2021; Bruni et al., Citation2014; Lazaridou et al., Citation2017; Riordan & Jones, Citation2011).

The implication of this theoretical perspective is that linguistic distributional knowledge cannot be expected to account for all conceptual knowledge, and therefore LDMs – as computational instantiations of linguistic distributional knowledge – cannot be expected to model all of semantics. Nonetheless, linguistic distributional knowledge can assume some of the burden of conceptual processing because, while every word is ultimately grounded in sensorimotor information, it does not have to be grounded every time it is processed (Connell, Citation2019; Louwerse, Citation2011). In that sense, LDMs are cognitively plausible if they are assumed to model an essential component of semantics that is grounded in a complementary sensorimotor component.

Training corpus

The second issue is that of the size and content of training corpora in relation to human language experience. Corpus size is important to the cognitive plausibility of LDMs, because if a model can only approximate human behaviour using a corpus that is orders of magnitude larger than that accumulated in a human lifetime of language experience, then it is not a plausible model of how linguistic distributional knowledge works in humans (cf. Hollis, Citation2017). The corpora underlying successful LDMs vary enormously in size, from 11 million words in the TASA corpus used by Latent Semantic Analysis (LSA: Landauer & Dumais, Citation1997) to one trillion words in the Google corpus used in Web 1T n-grams (Brants & Franz, Citation2006). But how many words has a typical adult accumulated in a lifetime of language experience? People in modern, literate societies tend to experience language through spoken interactions with other people, broadcast media such as television, and reading written texts. Brysbaert et al. (Citation2016) estimate spoken language experience from social interactions at a total of 11.69 million tokens per year (based on recoded data from Mehl et al., Citation2007). Watching television is another important form of spoken language experience, which Brysbaert and colleagues estimate at an upper bound of 27.26 million words per year, but this upper bound is based on a rather implausible 20 h a day of non-stop viewing (subtitle corpus data from van Heuven et al., Citation2014). Reading text clocks up written language experience even more quickly than spoken language experience, with an estimate of 105 million words per year at the upper bound, though this again is based on a rather implausible 16 h a day of rapid reading (Brysbaert et al.’s estimates of reading rates from e.g. Carver, Citation1989).

Using Brysbaert et al.’s collated figures, let us imagine a person whose average day contains a typical amount of social interactions (11.69 million words/year), plus 2 h of watching television (2.73 million words/year), and 1 h of reading any form of text (6.57 million words/year). This person’s language experience, based on a reasonable approximation of human activity, comes to approximately 21 million words per year. A 20-year old (assuming this pattern from age 5) would have language experience of 315 million words. By age 60, it would have increased to 1.15 billion words. These estimates are of course highly variable. Someone who never reads and watches television for one extra hour each day will accumulate language experience (15.8 million words/year) at approximately half the rate of someone who never watches television and instead reads for an extra two hours each day (31.4 million words/year). This relatively minor variation in behaviour would lead to language experience of 237 million words for a 20-year-old television fan, but 1.73 billion words for a keen 60-year-old reader.

In short, these estimates suggest that the cumulative total language experience of an English-speaking adult appears to range legitimately from a couple of hundred million words up to a couple of billion words. Any LDMs that use corpora in this size range are cognitively plausible in their assumed extent of language experience, but small corpora of tens of millions of words, and large corpora of tens of billions to trillions of words, are implausible.

However, the content of language experience is another matter. Very large corpora comprising billions of words tend to be based on uncorrected text scraped from the web (e.g. UKWAC has 2 billion words: Baroni et al., Citation2009; Google News corpus has up to 100 billion words: Mikolov, Chen, et al., Citation2013; Common Crawl corpus expands monthly but has been used up to 840 billion words: Pennington et al., Citation2014). As well as containing relatively high levels of noise (i.e. typos and other non-word tokens: Baroni et al., Citation2009), the very nature of web-scraped corpora will bear little resemblance to the language experience of a human who accumulates up to 2 billion words over decades of social interactions, consuming media, and reading text.

By contrast, high-quality, professionally curated corpora, that aim to bring together a representative collection of spoken and written English in a given dialect, tend to be a lot smaller. For instance, the British National Corpus (BNC: BNC Consortium, Citation2007) contains approximately 10% spoken content (i.e. mostly spontaneous conversation from a demographically balanced sample of speakers, with some formal spoken contexts such as lectures, news commentaries, radio show transcripts, and business/committee meetings) and 90% written content (i.e. texts from a wide range of ages and contexts, such as children’s essays, leaflets, brochures, magazines, newspapers, fiction and nonfiction books, and television scripts). Its content is high-quality corrected text that is representative of British English, and is cognitively plausible in its resemblance to the content of human language experience, but, at 100 million words, its size is under the lower bound of adult language experience.

A third group of corpora has become popular in recent years, based on the subtitling of television and film, that tends to lie in between the web-scraped and professional corpora in terms of both size and content. Typically, these corpora contain transcripts of both unscripted and scripted speech, from television shows and movies across a range of genres directed at both children and adults. Subtitle corpora generated from automated or amateur transcriptions are large but prone to error (e.g. the English portion of OpenSubtitles-2016 has 2.5 billion words: Lison & Tiedemann, Citation2016, but includes machine translations with grammatical and translation errors, Lison & Dogruöz, Citation2018), while those based on professional transcriptions for DVDs or public broadcasters are smaller but higher quality (e.g. the SUBTLEX-UK corpus is based on 200 million words of corrected subtitles for the British Broadcasting Corporation: van Heuven et al., Citation2014).

In summary, there exists a certain tension between cognitively plausible content and cognitively plausible size of available corpora for LDMs. Professional, representative corpora that balance spoken (both social and media) and written sources are relatively small but comprise the most plausible content, followed by medium-sized subtitle corpora that contain a representative range of spoken media sources, while very large web-scraped corpora that comprise unrepresentative written sources are the least plausible. Nonetheless, there is some evidence that differences in corpus content become less important once corpus size is large enough, although it may depend on the particular task used to evaluate performance (e.g. Bullinaria & Levy, Citation2012). It therefore remains an open question which form of training corpus (from relatively small but high quality to large but noisy) can best approximate human language experience in an LDM, and whether the efficacy of this approximation generalises across tasks and models.

Nature of distributional relations

Third, and final, is the nature of distributional relations captured by LDMs. From a theoretical perspective, LDMs are generally assumed to approximate human experience of linguistic distributional knowledge rather than to model its learning mechanisms literally; that is, they address Marr’s (Citation1982; see also Bechtel & Shagrir, Citation2015) computational and to some extent algorithmic level of cognitive modelling, but not the implementational level. As an approximation, the forms of linguistic distributional knowledge captured by LDMs include syntagmatic and paradigmatic relations (de Saussure, Citation1916; Hjelmslev, Citation1961), both of which are plausibly useful to human cognition (e.g. Murphy, Citation2003; Nelson, Citation1977; Sloutsky et al., Citation2017), as well as more generalised non-syntagmatic, non-paradigmatic relations that we discuss below.Footnote2

Syntagmatic relations are built from words appearing in complementary syntactic positions within the same sentential structure. For example, in the sentence she has blue eyes, the words blue and eyes are syntagmatically related due to the syntactic positions they occupy in relation to one another (i.e. adjective modifies noun). Such relations can be learned from a single occurrence, but more generally, if blue usually co-occurs in this syntactic role with eyes across language experience, then one could expect the word blue to evoke the word eyes on a syntagmatic basis. Syntagmatic relationships of this sort reflect a range of semantic information, including concept properties via adjectives (e.g. blue–eyes, happy–childhood), constituent parts via possessives (e.g. dog–tail, tractor–wheels), and thematic relationships such as agent actions (e.g. cat–miaow, customer–pay), object functions (throw–ball, sit–chair), and thematic agent-patient roles (e.g. dog–ball, boat–river) via verb structure.

Paradigmatic relations, on the other hand, are built from words appearing in the same syntactic positions across similar sentential contexts, even if they never appear together. For instance, in the additional sentence he has brown eyes, the words blue and brown are paradigmatically related because each word independently occurs in the same syntactic position within the shared context of eyes. Such relations require multiple exposures to learn, but in general, if blue and brown both co-occur in this syntactic role in relation to eyes across language experience, then one could expect the word blue to evoke the word brown on a paradigmatic basis. Paradigmatic relations therefore capture similarity of meaning and syntactic substitutability in a way that syntagmatic relations do not, and reflect semantic information that includes synonyms (e.g. blue–azure, run–sprint), antonyms (e.g. hot–cold, rise–fall), shared categories (e.g. dog–cat, happy–angry), and taxonomic classes (e.g. dog–animal, chair–furniture).

With a few exceptions (e.g. Jones & Mewhort, Citation2007; Padó & Lapata, Citation2007), LDMs tend to ignore syntactic structure entirely and concentrate instead on the unordered presence of words within a particular section of text (i.e. the “bag of words” approach: see Lapesa & Evert, Citation2017, for discussion). In this way, LDMs can capture other forms of linguistic distributional relations that do not rely on syntactic role and hence cannot be neatly fit into syntagmatic or paradigmatic relations. We term these relations, which are learned regardless of syntax, bag-of-words relations. For example, words that co-occur across sentence boundaries do not occupy syntactic positions in relation to one other, but the presence of these words in sequential sentences nonetheless makes it likely that they are broadly related. The sentences He stubbed his toe. “Ow!”, he yelped. will not connect stubbed–ow or toe–ow in either a syntagmatic or paradigmatic sense, but an LDM that ignores sentence boundaries will pick up the relationship on the basis of their co-occurrence. Another case comes from words that frequently appear in the same context but across a wide variety of syntactic positions: strictly, each syntactic role should create a separate syntagmatic and/or paradigmatic relation, which in turn makes it very difficult to generalise a strong relationship across instances. For instance, the words Paris and France are clearly related but appear in a wide variety of syntactic roles in relation to one other: The capital of France is Paris; She lives in Paris, France; Paris is the largest city in France; Rural France and Paris are very different; They played at Stade de France in Paris. An LDM that ignores syntax will count all these co-occurrences in the same way and generalise to form a strong Paris–France relationship. It remains unclear to what extent these bag-of-words relations provide systematically important semantic information, but since they appear to capture situational and thematic context, and are often spontaneously produced by participants in production tasks (where they tend to be coded as temporal or general associative relations: Wu & Barsalou, Citation2009; or remain as unclassified thematic relations: Jouravlev & McRae, Citation2016), it is plausible that they are useful in conceptual processing (e.g. Paris evokes France; stubbed evokes ow).

Notably, the three distributional relations vary in their complexity and how easy they are to process. Semantic relations that can be learned paradigmatically (e.g. categorical relations, synonyms) tend to be regarded as relatively simple and low-level compared to relations that are learned syntagmatically (e.g. object properties, thematic roles: Chaffin & Hermann, Citation1987; Mudrik et al., Citation2014). For instance, paradigmatic relations drive the majority of responses in free association tasks (Burke & Peters, Citation1986; Cramer, Citation1968), particularly the first associates that come to mind (De Deyne & Storms, Citation2008). Syntagmatic relations are still important in free association, but are dispreferred, particularly for nouns (Burke & Peters, Citation1986; De Deyne & Storms, Citation2008), which represent the most frequent word class in English (e.g. van Heuven et al., Citation2014). Such findings suggest that paradigmatically learned relations (e.g. synonyms error–mistake; shared categories cat–dog; taxonomic classes cat–animal) are typically simpler and easier to process than syntagmatically learned relations (e.g. object properties honey–sweet; function bed–sleeping; agent action cat–miaow). Bag-of-words relations appear to be more complex again, in that they represent a form of semantic relation that cannot be learned either paradigmatically or syntagmatically but rather serve to link together concepts in an abstracted manner outside syntactic roles. For example, the concept pairs apple–gravity, ship–ahoy, and stubbed–ow are each related in some way, but the relation does not emerge from the syntactic structures that produce syntagmatic and paradigmatic relations; rather, it emerges from high-level thematic, situational, or other nebulous relations. As well as such differences in complexity at the level of the individual relation, the way in which different semantic relations are combined together also affects complexity at the collective level of the discourse or stimulus set. Processing a particular semantic relation facilitates processing other stimuli that use the same relation (i.e. relation priming: Estes & Jones, Citation2006; Hristova, Citation2009), which means that a sequence of diverse relations (e.g. superordinate category cat–mammal, synonym error–mistake, function bed–sleeping) will be overall more conceptually complex than a sequence of repeated relations (e.g. synonyms blue–azure, error–mistake, run–sprint). Thus, differential reliance on paradigmatic, syntagmatic, and/or bag-of-words relations allows one to estimate how conceptually complex a cognitive task might be; we return to this point later with reference to the current study.

LDMs are therefore cognitively plausible in how they approximate human linguistic distributional knowledge, at least in terms of capturing syntagmatic, paradigmatic, and bag-of-words relations that vary in conceptual complexity. Nonetheless, different types of LDM capture these relations to differing extents, as we discuss in the next section, which means that not all LDMs are necessarily equal in their approximation of linguistic distributional knowledge.

Approaches to modelling linguistic distributional knowledge

In recent years, research on LDMs has tended to fall into two broad camps that have pursued parallel but largely distinct areas of investigation: distributional semantics of text processing and linguistic–simulation accounts of concepts and cognition.

Distributional semantics research has developed directly from the distributional hypothesis in linguistics (Firth, Citation1957; Harris, Citation1954) and is currently concentrated in the fields of computational/corpus linguistics and machine learning, with applications in areas such as information retrieval, natural language processing, and data mining. A key feature of this work has been the continuous development of ever more sophisticated methods of modelling distributional information with a view to enhancing the state-of-the-art LDM performance in a given domain (i.e. which model does best across systematic comparisons: Baroni et al., Citation2014; Bullinaria & Levy, Citation2007, Citation2012; Kiela & Clark, Citation2014). Systematic comparisons of LDMs in this area have tended to evaluate models based on their performance in benchmarking tasks that focus on paradigmatic relations, such as synonym and analogy detection, similarity and relatedness judgements, and semantic and syntactic categorisation (e.g. Bullinaria & Levy, Citation2007; Lapesa & Evert, Citation2014). Performance is typically evaluated by comparing model data with objectively correct answers (e.g. multiple choice scoring in a vocabulary test) or with explicit human responses (e.g. ratings on a Likert scale). For instance, when tasked with selecting which out of bottle and cask is a better synonym for barrel, an LDM might select the candidate whose linguistic contexts most closely resemble those of barrel, indicating contextual substitutability (i.e. one word can substitute for another in many contexts when their meanings are similar). The best LDM would be the one which could most reliably select the correct response for any such task. Similarly, an LDM tasked with scoring the relatedness of word pairs such as boat:river and boat:cat can quantify the extent to which the words in each pair share similar linguistic contexts. The best LDM for this task would be one that can most successfully distinguish related from unrelated word pairs in a way that mirrors human ratings of semantic relatedness.

Recent work in distributional semantics strongly favours predict models and very large corpora. Predict models, also known as word embedding models,Footnote3 are neural networks that are trained to predict a given word from its context (or the context from a given word, as the case may be), and have gained acceptance as the state of the art in distributional semantics by significantly outperforming alternatives in systematic comparisons (e.g. Baroni et al., Citation2014; Mikolov, Chen, et al., Citation2013; Pereira et al., Citation2016; Zhang & LeCun, Citation2015; but see also Levy & Goldberg, Citation2014a; Pennington et al., Citation2014; Sahlgren & Lenci, Citation2016). The freely available Word2vec tool (Mikolov, Citation2017; Mikolov, Chen, et al., Citation2013) is perhaps the most popular implementation of predict models, and has become the standard against which other LDMs are compared (e.g. FastText: Bojanowski et al., Citation2016; GloVe: Pennington et al., Citation2014). By using vector geometry to calculate the similarity between two words, predict models are capable of detecting similarity between words without direct co-occurrence (i.e. reflecting higher-order relations): for instance, even if cask and barrel never appear together in the same context, predict models will score them as highly similar because their respective contexts contain many overlapping words at similar frequencies (e.g. wine, beer, storage, cellar). Such models are typically trained on very large but noisy corpora that comprise billions of words scraped from the Web, such as UKWAC (2 billion words: Baroni et al., Citation2009) or Google News corpus (up to 100 billion words; e.g. 6 billion words: Mikolov, Chen, et al., Citation2013). Very large corpora have become the norm in distributional semantics research because, although corpus size is inversely related to corpus quality, LDM performance has been shown to increase with corpus size (e.g. Bullinaria & Levy, Citation2012; De Deyne et al., Citation2015; Recchia & Jones, Citation2009). Training predict models on very large corpora has therefore become the de-facto standard approach in distributional semantics research for representing word meaning (Chersoni et al., Citation2020; Moreo et al., Citation2019; Naik et al., Citation2019).

Linguistic–simulation accounts of the conceptual system, on the other hand, have arisen from theoretical and experimental cognitive psychology and thus follow a different tradition to distributional semantics research. These accounts propose that the human conceptual system comprises two essential interlinked components: linguistic distributional knowledge of how words and phrases appear in statistical patterns one each other, and grounded simulations of sensorimotor–affective experience (Barsalou et al., Citation2008; Connell, Citation2019; Connell & Lynott, Citation2014; Louwerse, Citation2011; Louwerse & Jeuniaux, Citation2008; Vigliocco et al., Citation2009). A critical feature of these accounts is that linguistic distributional information is assumed to have a flexible rather than a constant role in conceptual processing, and that reliance on such information depends on a number of factors including the nature of the task, surrounding context, and general processing goals (see Connell, Citation2019; Connell & Lynott, Citation2014). Empirical work in linguistic–simulation research built on the successes of early LDMs such as LSA and HAL in modelling human performance, and has tended to focus on testing whether humans use linguistic distributional information in particular conceptual tasks (i.e. whether or not LDM data can predict human performance independent of other related predictors). For example, when investigating conceptual combination – that is, the ability to generate a new composite concept from two existing concepts (e.g. octopus apartment) – Connell and Lynott (Citation2013) found that the frequency with which two nouns co-occur in the same context can predict how easily they can be understood as a novel conceptual combination. In a very different paradigm on spatial cuing of attention, Goodhew et al. (Citation2014) presented a central cue word followed by an unrelated target letter at the top or bottom of the screen, and found that the spatial cuing effect was predicted by how often the cue word co-occurred with the spatial word for the target location (e.g. dream co-occurs with up more often than down and cues attention upward). When viewed collectively, most studies in linguistic–simulation research that use LDMs tend to model human data across a diverse range of tasks that rely on a broad variety of conceptual relationships rather than simply paradigmatic relations. Moreover, performance is typically evaluated by comparing model data with implicit measures of human processing effort in a given task (e.g. response times: RT) rather than with explicit human responses (e.g. ratings or proportion of correct responses). In the above example from Goodhew et al. (Citation2014), the words dream and up are not paradigmatically related (i.e. they do not occur in the same syntactic role across similar contexts), but their meanings are syntagmatically related in that they sometimes appear in the same syntactic frame (e.g. to dream up an idea). Others of their stimuli do not lend themselves to obvious syntagmatic relations and may instead rely on more abstracted bag-of-words relations to connect the words (e.g. god–up, castle–up). The best LDM for capturing human performance in such tasks would be the one that can most reliably identify such relationships and predict response times for an upward target.

There is also great diversity in the LDMs currently employed in linguistic–simulation research, with recent work successfully utilising a variety of model families (i.e. predict, count vector, n-gram), with a variety of corpus sizes, to model conceptual processing. To date, predict models have only seen limited use in linguistic–simulation research, but have proven useful in predicting human concreteness and imageability ratings when trained on a relatively small but high quality corpus (Rotaru et al., Citation2016), and also have been employed in more general psycholinguistic research (e.g. Mandera et al., Citation2017; Troyer & Kutas, Citation2020). In linguistic–simulation research, count vector and n-gram models are more common.

Count vector models learn by counting the co-occurrences of words and context within a corpus, applying transformations to the word–context count matrix, and using vector geometry to calculate the similarity between two words (see Bullinaria & Levy, Citation2007; Riordan & Jones, Citation2011; Turney & Pantel, Citation2010, for an overview of differences within this model family). Like predict models, count vector models can detect higher-order relationships between words without direct co-occurrence (e.g. even if barrel and cask never appear in the same context, they will score as highly related if their contexts overlap). Their architectures are fundamentally different in their approach to distributional learning, however: while predict models represent error-driven predictive learning, count vector models represent error-free Hebbian learning (Kumar, Citation2021). Several off-the-shelf LDMs, such as Latent Semantic Analysis (LSA: Landauer & Dumais, Citation1997) and the hyperspace analogue to language (HAL: Lund & Burgess, Citation1996), are count vector models. There is no consistent approach to corpus size and quality in this model family, with training corpora varying from a few million words of high-quality text to billions of words of low-quality text. Nonetheless, data from count vector models have been found to be a good predictor of human performance in a number of conceptual tasks, from ratings of concept abstractness (Lenci et al., Citation2018) and typicality (Connell & Ramscar, Citation2001), to concrete/abstract semantic decision (Hargreaves & Pexman, Citation2014), geographic mapping (Louwerse & Zwaan, Citation2009), and word–colour associations in synaesthetes (Goodhew & Kidd, Citation2017).

N-gram models operate more simply: they count the co-occurrences of words up to a window of size n around the target word and compare two words by examining their (transformed) co-occurrence frequency. As such, n-gram models represent an error-free Hebbian approach to distributional learning (like count vector models) and reflect direct co-occurrences, also known as first-order relations (e.g. dream and up must appear together often to score as highly related). Despite its apparent simplicity, first-order co-occurrence is theoretically important both as the basis for statistical learning of semantic knowledge (e.g. Unger et al., Citation2020), and as a means to determine whether human meaning induction in statistical learning can rely on the surface structure of language (as opposed to requiring a more complex algorithm to extrapolate higher-order relations: Louwerse, Citation2011). The n-gram model most often used in linguistic–simulation research is the Google Web 1T 5-gram frequencies (Brants & Franz, Citation2006), an off-the-shelf model that is based on an extremely large but low-quality corpus of one trillion tokens of web-scraped text. Data from n-gram models have proven a good predictor of human data in conceptual tasks that include integrative semantic priming (Jones et al., Citation2017), rating affective valence and arousal (Recchia & Louwerse, Citation2014), conceptual combination (Connell & Lynott, Citation2013), geographic mapping (Louwerse & Zwaan, Citation2009), and spatial cuing of attention (Goodhew et al., Citation2014). Indeed, despite the apparently limited scope of n-gram models in capturing only direct co-occurrences, they can replicate many of the key effects captured by the more complex count vector model LSA (Louwerse, Citation2011).

One likely reason why linguistic–simulation research has successfully used such a diverse range of models to predict human performance is that all three model families can capture both syntagmatic and paradigmatic relations to differing extents. N-gram models, by indexing first-order co-occurrences, capture syntagmatic relations such as blue-eyes. However, there is some evidence that first-order co-occurrences simultaneously capture paradigmatic relationships (Melamud et al., Citation2014; Rapp, Citation2002; Sahlgren, Citation2006) because at least some paradigmatically related words frequently co-occur in their own right (e.g. a sentence like blue and brown eyes are common in Europe will allow an n-gram model to capture the blue–brown relation that would normally be characterised as paradigmatic). Similarly, antonyms often co-occur (e.g. hot and cold water), as do items from the same or superordinate category (e.g. adopt a cat or dog from an animal sanctuary). N-gram models also capture bag-of-words relations by indexing across sentence boundaries (e.g. stubbed and ow can be linked regardless of syntax). Count vector models – and predict models – index second-order co-occurrences in their use of vector geometry to compare contexts, and hence capture paradigmatic relations such as blue–brown on the basis of their shared context with eyes and other terms. There is some evidence that count vector models can also simultaneously capture syntagmatic relations, if not quite as effectively as paradigmatic relations (Lapesa et al., Citation2014) because at least some syntagmatically related words often share similar contexts (e.g. blue and eyes will each appear in contexts concerning man, woman, child, face, etc.). That is, the fact that co-occurring words often separately appear in similar contexts across language means that count vector models can pick up at least some relations that are usually characterised as syntagmatic. However, the evidence is more limited for the ability of predict models to detect syntagmatic relations. The neural network architectures and training schemes of predict models in their conventional form are optimised for paradigmatic relations and generally perform poorly at capturing syntagmatic relations (Asr & Jones, Citation2017; O. Levy et al., Citation2015), but some studies have shown a limited ability to detect relations usually characterised as syntagmatic, such as concept properties like eyes–blue (Rubinstein et al., Citation2015) and thematic relationships like boat–river (Kacmajor & Kelleher, Citation2019). Both count vector and predict model families can capture bag-of-words relations by generalising across similar contexts (e.g. stubbed and ow can be linked regardless of syntax by a shared context involving pain). In short, although performance of the three model families varies by their exact instantiations and parameter settings, it is possible to characterise their form of linguistic distributional knowledge in broad terms. N-gram models specialise in capturing syntagmatic relations but also capture paradigmatic relations; count vector models capture both paradigmatic and syntagmatic relations, though the latter ability is weaker; and predict models specialise in paradigmatic relations but have a limited ability to capture syntagmatic relations. In addition, all models can capture bag-of-words relations, although it remains unclear whether each model family does so with equivalent effectiveness. All three model families can therefore approximate linguistic distributional knowledge that is useful to conceptual processing.

To summarise, the specialisation of distributional semantics and linguistic–simulation research into two parallel fields has resulted in a number of complementary strengths and weaknesses. Distributional semantics research has systematically tested a wide range of LDMs in order to optimise performance, but has tended to focus on a restricted range of relatively simple conceptual tasks that rely on a limited variety of semantic relations and/or predominantly paradigmatic relations, and evaluate performance based on explicit dependent measures such as ratings or synonym choice. By contrast, linguistic–simulation cognitive research has tended to use off-the-shelf LDMs without systematic comparisons, but has examined a wide range of conceptual tasks that vary in conceptual complexity by their reliance on diverse semantic relations (including syntagmatic and bag-of-words), and evaluate performance based on both explicit and implicit dependent measures (e.g. both ratings and response times). There has been some, if limited, crossover between distributional semantics and linguistic–simulation research, particularly in computational cognitive modelling that attempts to integrate LDMs with some form of grounding in perceptual and/or action information (e.g. Andrews et al., Citation2009; Banks et al., Citation2021; Johns & Jones, Citation2012; Lazaridou et al., Citation2017). For example, Riordan and Jones (Citation2011) examined a number of distributional semantics models, including the early models of LSA and HAL alongside more advanced models like Bound Encoding of the Aggregate Language Environment (BEAGLE: Jones & Mewhort, Citation2007), in combination with sensorimotor feature models in their ability to predict categorical clustering. In this sense, Riordan and Jones used a common distributional semantics methodology (i.e. the systematic comparison of multiple LDMs) from the theoretical perspective of linguistic–simulation research (i.e. conceptual knowledge comprises both linguistic distributional and sensorimotor information). In general, however, such multidisciplinary crossovers remain uncommon in the context of the wider literature.

As a result, distributional semantics and linguistic–simulation research have developed some different theoretical assumptions on how linguistic distributional knowledge should be modelled. The predominant view in distributional semantics research is based on a tacit “one-size-fits-all” assumption for how distributional information should best fit human data: predict models trained on very large (and noisy) corpora are the de facto standard for forming distributional word representations, regardless of the semantic task being modelled (e.g. Baroni et al., Citation2014; Mikolov, Chen, et al., Citation2013; Naik et al., Citation2019). The implication of this assumption is that there exists an optimal LDM that is appropriate for modelling all forms of linguistic distributional knowledge in cognition. Such a one-size-fits-all assumption contrasts with the fact that there is no dominant view in linguistic–simulation research for how distributional information should be modelled. Empirical work in this area has successfully fit human data using a range of model families (count vector, n-gram, and predict models) and corpus sizes from small to very large. Moreover, because linguistic–simulation theories contain the explicit assumption that the use of linguistic distributional knowledge in conceptual processing is flexible and responsive to a range of factors including task demands, available processing resources, and processing goals (Connell, Citation2019; Connell & Lynott, Citation2014; see also Barsalou et al., Citation2008; Louwerse, Citation2011), it is not clear how a one-size-fits-all approach to linguistic distributional knowledge is consistent with the linguistic–simulation theoretical perspective.

The current study

In this paper, we address the following unanswered questions regarding the role of linguistic distributional knowledge in cognition. First, is the assumption of distributional semantics research regarding a one-size-fits-all approach for linguistic distributional knowledge correct? That is, does the common consensus – that predict models trained on very large corpora are the best approach – generalise to all conceptual processing in human cognition? Or alternatively, could the success of this approach be inherently restricted to the sorts of conceptual tasks that rely on similarity of meaning and other paradigmatic relations? If predict models trained on very large corpora are indeed the best approach for modelling human data in all tasks, then it implies that paradigmatic relations learned from vast quantities of language experience underpin the linguistic distributional knowledge that people use in conceptual processing, and that syntagmatic relations, and quality of language experience, are of limited (if any) independent utility.

Second, and in contrast to the first question, is the tenet of flexibility in linguistic–simulation theories correct in how the use of linguistic distributional knowledge varies enormously by task and other factors? That is, do the empirical findings of linguistic–simulation research – that n-gram, count, and predict models, trained on corpora of varying size, all successfully predict some forms of human conceptual processing – mean that different conceptual tasks require different models and/or training corpora? Specifically, is there a systematic relationship between the appropriateness of a given model family and corpus, and the particular characteristics of the task in question? Such characteristics could include a task’s reliance on conceptually complex relations across the stimulus set, or its uses of implicit measures of processing effort (e.g. response times) over explicit judgements (e.g. ratings). If the best approach for modelling human data varies according to the characteristics of the task, then it implies that all forms of relation – syntagmatic, paradigmatic, and other – underpin the linguistic distributional knowledge that people use in conceptual processing, and that each is flexibly employed to suit task demands. Moreover, if smaller, high-quality corpora are best for modelling human data in certain tasks, it also implies that the quality of language experience is more important than quantity when it comes to developing the relevant linguistic distributional knowledge.

To address these questions, we undertook what we believe to be the largest and most comprehensive examination of linguistic distributional knowledge in cognition to date. We systematically investigated three families of LDM that are commonly used in cognitive psychology and psycholinguistic research (n-gram, count vector and predict vector models), using three corpora that vary in size (from 100 million to 2 billion words) and quality (from professionally collated to web-scraped), across a range of context window sizes (radii of 1–10 around a target word), for a variety of model-specific parameter values (distance metric and embedding size). Critically, we evaluated each model by testing its ability to predict performance in a wide range of cognitive tasks that varied in conceptual complexity.

The conceptual complexity of a task is determined by how many different forms of conceptual/semantic relation are featured in a set of stimuli, and whether each individual semantic relation can be learned syntagmatically, paradigmatically, or via bag-of-words distributions. In this sense, it is important to distinguish it from cognitive complexity: whereas conceptual complexity is concerned with the complexity of the semantic relations in the specific set of stimuli used across a task, cognitive complexity is concerned with the intrinsic processing demands of executing a task from start to finish.Footnote4 We therefore operationalised a task’s conceptual complexity according to how the following three criteria applied to its specific stimulus set: (a) diversity of semantic relations; (b) use of syntagmatic relations rather than paradigmatic; and (c) use of bag-of words distributional relations rather than paradigmatic or syntagmatic. Thus, an increase in conceptual complexity can be conferred by greater diversity of semantic relations featured across the task’s stimulus set, increased use of syntagmatic relations, and particularly increased use of high-level bag-of-words relations.

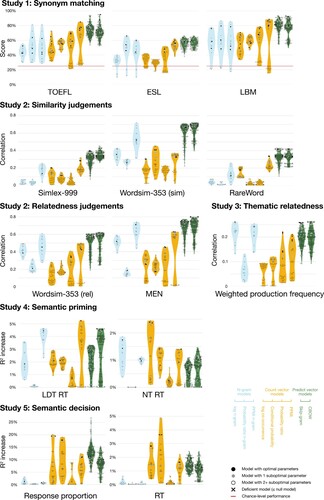

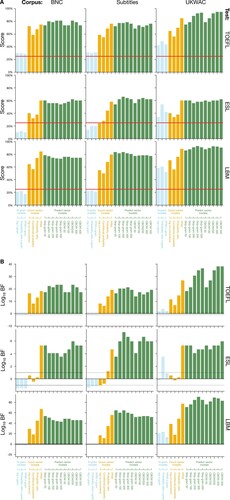

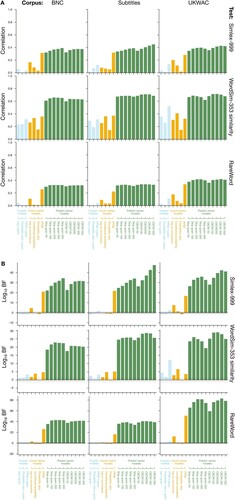

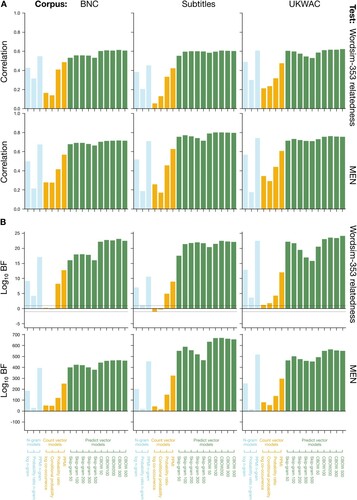

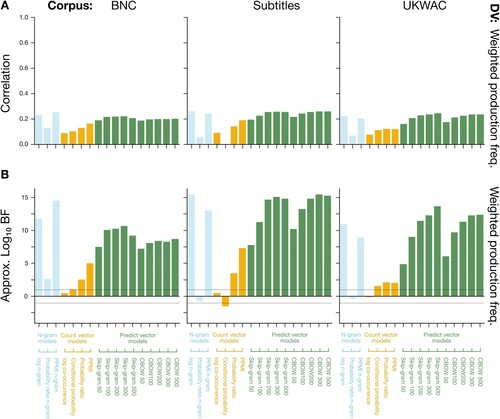

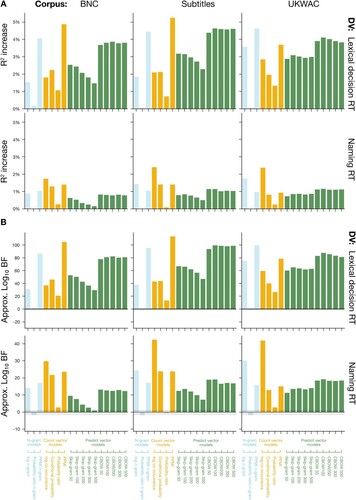

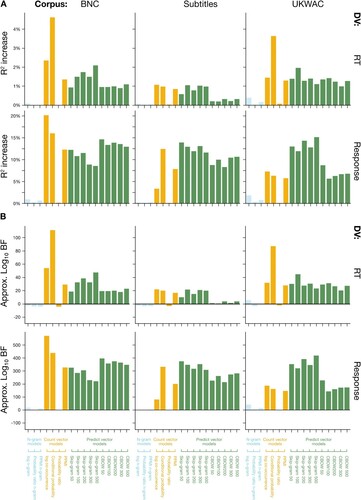

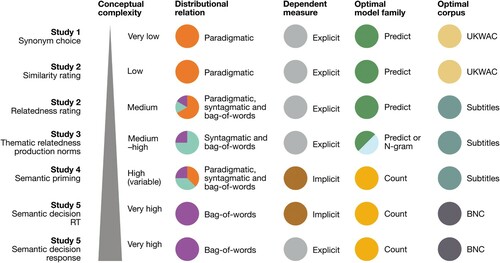

These criteria allowed us to select a range of tasks that varied systematically in conceptual complexity. The conceptually simplest task was synonym selection (Study 1), which features the same paradigmatic relation (i.e. synonyms) across all stimuli. Slightly more complex was Study 2’s similarity judgement, which still relied on paradigmatic distributional relations, but this time featured some diversity of semantic relations across its stimulus set (e.g. synonyms, antonyms, shared categories). Study 2’s relatedness judgement was more complex again because, although its stimuli were predominantly paradigmatic, it included a moderately diverse range of semantic relations that included some syntagmatic and bag-of-words relations (e.g. shared categories, compositional, thematic). Thematic relatedness production (Study 3) specifically sought to move away from relatively simple paradigmatic relations, and represented moderately high conceptual complexity by its reliance on a diverse range of syntagmatic relations (e.g. temporal, functional) with some bag-of-words relations included. In Study 4, we examined semantic priming in both lexical decision and word naming with a highly conceptually complex stimulus set that featured a very diverse range of semantic relations across all three distributional relations: paradigmatic (e.g. synonyms, antonyms, shared categories), syntagmatic (compositional, functional, object property), and a smaller number of bag-of-words relations (e.g. broad thematic or situational). Finally, the most conceptually complex task was abstract–concrete semantic decision (Study 5), where the semantic relation in question could not be learned paradigmatically or syntagmatically, and instead – if linguistic distributional knowledge were to be at all useful to the task – relied entirely on high-level bag-of-words relations. Within these tasks, we also systematically varied the format of the dependent measure, where datasets reflected explicit semantic responses (Studies 1–3), implicit measures of processing effort (Study 4), or a combination of both (Study 5). In total, we examined 540 different models on each of 13 test datasets, using Bayesian model comparisons to make recommendations as to the optimal model, corpus type and parameter settings.

This series of modelling studies allowed us to investigate whether there exists a one-size-fits-all recommendation for which LDM is the most appropriate at modelling human cognitive processing, or whether model and corpus appropriateness varied systematically according to the conceptual complexity of the task and/or the implicit versus explicit nature of the dependent measure.

General method

All datasets, analysis code, and results are available online at https://osf.io/uj92m/.

Linguistic distributional models

We examine three families of LDM: count vector models, n-gram models, and predict models. While there exists an enormous number of LDMs in distributional semantic research, our goal for this paper is specifically not to perform a state-of-the-art comparison of all such models, both for reasons of relevance and cognitive plausibility. Rather, our explicit goal in this paper is to examine the off-the-shelf distributional models that are widely and currently used in cognitive and psycholinguistic research, which can be classified by their abilities to capture our distributional relations of interest (i.e. paradigmatic, syntagmatic, bag-of-words). We outline below a number of different instantiations of each model family, and a number of associated parameters per model; a summary of all LDMs examined in the present paper can be found in .

Table 1. Summary of all models, corpora, and parameters tested, where total number of tested LDMs is 540.

Count vector models

Context-counting vector LDMs gained popularity in cognitive psychology with the introduction of LSA (Landauer & Dumais, Citation1997), which defined the context of a word as the document or paragraph in which it was found. While document-level contexts continue to be used for topic modelling in document retrieval (e.g. Griffiths et al., Citation2007; Řehůřek and Sojka, Citation2010), the models we consider instead follow the HAL approach (Lund & Burgess, Citation1996), which defines a word’s context as the collection of other words found within a fixed distance of where that word is found (e.g. a window of five words on either side of the word of interest). We chose this approach both because of its superior performance in systematic comparisons with human data (Riordan & Jones, Citation2011), and because it allowed us to examine the impact of context window size systematically across all model families.

When it comes to defining co-occurrence windows, there are a number of variations in the literature. Some models (e.g. Lund & Burgess, Citation1996; Rohde et al., Citation2006) use a centre-weighted counting method, where the contribution of context words closer to the target within the window is weighted with a flat, triangular or Gaussian kernel. Other variations include looking at only context words found to the left, only to the right, both left and right separately, or both left and right together (see Bullinaria & Levy, Citation2007; Patel et al., Citation1998, for systematic overviews of the effects of these parameters). While there may be psychological reasons to distinguish between left and right context (Dye et al., Citation2017; Jones & Mewhort, Citation2007), Bullinaria and Levy (Citation2007) showed that the difference in performance between uniformly and linearly weighted windows, and between left, right, and combined contexts, is relatively small. Thus, in our analyses, we define co-occurrence using a uniformly weighted, symmetric window around the target word (left and right sides together) in accordance with the bag-of-words approach (i.e. we make no assumptions of structure in the text), in order to constrain our already-large number of models and tests and to avoid potentially arbitrary choices in the weighting kernel.

Several sources use forms of dimensionality reduction on the target-context co-occurrence matrix, such as singular-value decomposition (Landauer & Dumais, Citation1997; O. Levy et al., Citation2015), principal components analysis (Louwerse & Connell, Citation2011), or simply removing (Burgess et al., Citation1998; Bullinaria & Levy, Citation2007; Levy & Bullinaria, Citation2001) or reweighting (Bullinaria & Levy, Citation2012) columns corresponding to low-frequency or low-variance contexts. When surveying options of dimensionality reduction, Bullinaria and Levy (Citation2007, Citation2012) did not find substantial improvement given the theoretical overhead involved (see also Louwerse, Citation2011). As such, because our motivation is to compare a broad range of models on a broad range of tasks rather than optimising performance on any single task, we avoid using such dimensionality-reduction strategies in the present paper.

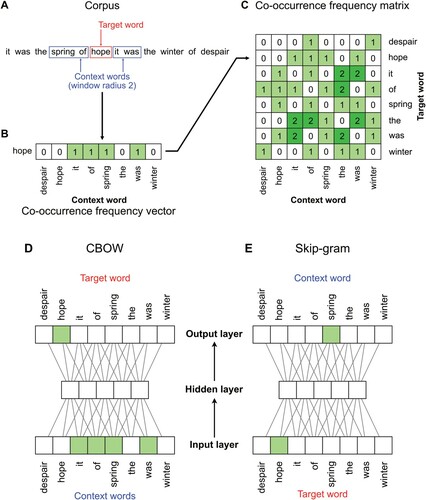

For the present research, given a large corpus of text, the context of a particular target word t is the collection of words within some fixed distance r of t. The co-occurrence frequency vector for t is a list, for each word c in the corpus vocabulary, of the number of times c is found in the context of t. Thus, for a context window of radius r, the co-occurrence frequency vector for t has entries indexed by the unique words in the corpus. Note that in this definition, the words order in the context does not affect the resultant values. Vectors are compiled over target words into a co-occurrence frequency matrix whose rows are indexed by the unique words in the corpus as targets and whose columns are indexed by unique words as context. For context windows which are symmetric around the target word, this matrix is symmetric when target and context words are arranged in the same order. See for an illustrative example of how a co-occurrence frequency matrix is computed.

Figure 1. Schematic architectures of vector-based count and predict model families, trained on a small corpus with a context window of radius 2. In count vector models A–C, each word in the corpus is selected in turn as a target and words falling within a fixed radius of the target are selected as context words (A). The frequency of co-occurrences within the corpus of the target word and each context words are recorded in a vector (B), and vectors for each target word are compiled into a co-occurrence frequency matrix (C). In predict models D–E, either an aggregate of all context words is used to predict the target word (D: CBOW), or the target word is used to predict each context word (E: Skip-gram). Networks are feed-forward and fully connected; these schematic representations are a simplification of the implementation details of CBOW and skip-gram in Word2vec (see Mikolov, Chen, et al., Citation2013).

We considered four count vector models that differ in their transformation of the co-occurrence frequency matrix. For each of these models, we let r take values 1, 3, 5 and 10. This choice spans the range of popular and high-performing window sizes (J. Levy et al., Citation1999; O. Levy et al., Citation2015; Mandera et al., Citation2017).

Log co-occurrence frequency: Log frequency is often used in place of raw co-occurrence-counts as a better-performing alternative that compensates for the skewed distribution of word frequencies in language (e.g. Louwerse & Connell, Citation2011). The log co-occurrence frequency model has word vectors defined as the log-transformed frequency count of finding a context word c and target word t together within a context window of radius r:

The

is a smoothing term which lets the model be defined even where

(i.e. where the co-occurrence frequency is zero).

Conditional probability: The vector components of the conditional probability model are the probability of finding a particular context word c, given the target word t, within a context window of radius r:

Here,

is the probability of finding a particular context–target pair, where k is the size of the corpus, and

is the probability of finding a given target word.

Probability ratio: The ratio of probabilities model compares the probability of finding a context c and target t together to the probabilities of finding c and t separately (Bullinaria & Levy, Citation2007):

Here, the probability of the context

is defined in the same way as the target word probability:

.

Positive pointwise mutual information (PPMI): Pointwise mutual information (PMI; Church & Hanks, Citation1990) is an information-theoretic measure defined as the log ratio of probabilities:

PMI is sometimes used directly (e.g. Recchia & Jones, Citation2009). However, many sources (e.g. Baroni et al., Citation2014; Bullinaria & Levy, Citation2007, Citation2012; Mandera et al., Citation2017) have found that superior results can be achieved by restricting PMI to positive values (positive PMI; PPMI), thereby only considering word co-occurrences which are more frequent than expected:

PPMI is often selected as the de facto best count model for general tasks in distributional semantics research (e.g. Baroni et al., Citation2014; Bullinaria & Levy, Citation2012; Kiela & Clark, Citation2014; Mandera et al., Citation2017).

N-gram models

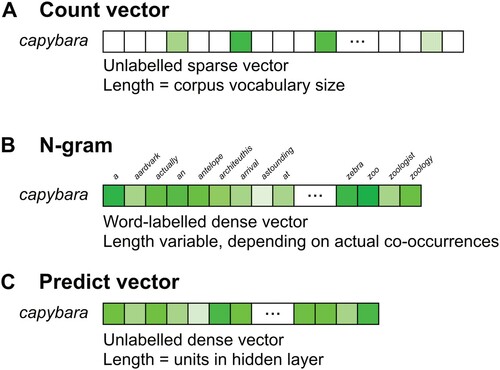

N-gram models have long been employed in corpus analysis, computational linguistics, and cognitive psychology, with Google's Web 1T 5-gram corpus (Brants & Franz, Citation2006) being a popular recent example.Footnote5 N-gram models are conceptually simpler versions of count vector models in that they are based on the same underlying method of counting word-to-word co-occurrences. However, there are critical differences in word representation and comparison. Whereas a count vector model represents each word in the corpus as a fixed-length vector of ordered, unlabelled co-occurrences (one dimension for each unique word token in the corpus), an n-gram model represents a word as a labelled list of frequencies for each other word with which it co-occurs in the corpus (see for an illustration). It is important to note, therefore, that two words can only be meaningfully compared using an n-gram model if they have actually occurred at least once within the same context window; otherwise, the co-occurrence frequency is automatically zero (i.e. target word and context word never co-occurred).

Figure 2. Word representation in each model family. A: In a count vector model, a word's representation is an unlabelled, sparse vector of length equal to the number of unique words in the corpus. B: In an n-gram model, a word's representation is a labelled, dense list of nonzero co-occurrences whose length varies with the diversity of co-occurring words. C: In a predict vector model, a word's representation is an unlabelled, dense vector of length equal to the size of the neural network's hidden layer (i.e. its embedding size).

We consider three n-gram models that differ in their transformation of co-occurrence frequencies. In general, n-gram models use the same method for constructing the co-occurrence frequency matrix as count vector models; as with the count vector models, we let window radius r take values 1, 3, 5 and 10. Since the n in n-gram comprises a sequence of the target word plus its surrounding context words, these window radii correspond to 2-, 4-, 6-, and 11-grams.

Log n-gram frequency: Based on the same calculations as the log co-occurrence frequency count vector model, this model defines the relationship between two words t and c as their log-transformed co-occurrence frequency within a context window of radius r.

Probability ratio n-gram: Based on the same calculations as the probability ratio count vector model, this model defines the relationship between two words t and c as the probability of finding them together within a context window of radius r compared to the probabilities of finding them separately within the corpus.

PPMI n-gram: Based on the same calculations as the PPMI count vector model, this model defines the relationship between two words t and c as their log-transformed probability ratio, with negative values treated as zero.

Predict models

Many modern predict models are based on artificial neural network architectures, including those implemented in the popular software tool Word2vec (Mikolov, Chen, et al., Citation2013). These models are realised as neural networks that map between context and target words with a single hidden layer, illustrated schematically in , where words are represented in input and output layers by Huffman codes. Predict models are also vector models, where the vector representation of a target word comprises the row of weights between the input layer and the hidden layer.

We consider two predict models that differ in their direction of prediction.Footnote6 As with the count vector models, we let r take values 1, 3, 5 and 10 during training; though as is usual for implementations of such models, the actual width of the window before and after the target word at each training step is randomly selected from .

Continuous bag of words (CBOW): This model is trained to predict the target word from the unordered collection of the context words. The mean of the context words’ codes is used as input (Mikolov, Chen, et al., Citation2013).

Skip-gram: This model is trained to predict each of the context words separately from the target word, and effectively reverses the learning direction of CBOW.

There are a great many potential parameters available for predict models. The most obvious is the specific architecture of the neural network, namely the number of units in its hidden layer. This is the embedding size, denoted e. Unlike count vector models, neural network-based predict models must implicitly perform dimensionality reduction whenever the hidden layer is smaller than the input layer, which will always be the case for the models we employ in this paper. For each of the models below, we trained with , matching values used by Mandera et al. (Citation2017). The Word2vec implementations of CBOW and skip-gram models have further optimisation and regularisation steps which have been found to improve performance (Mikolov, Sutskever, et al., Citation2013). Negative sampling involves updating only a randomly selected subset of network weights at each training step in the negative cases (i.e. words not found in the window), and sub-sampling involves randomly ignoring high-frequency words with a fixed probability. Following the advice of Baroni et al. (Citation2014) and Mandera et al. (Citation2017), we used a fixed value of 10 for negative sampling, and sub-sampled with probability of

. We constructed and trained our predict models in Python 3.7 using version 2.2 of the Gensim package (Řehůřek and Sojka, Citation2010), which implements CBOW and skip-gram in a manner compatible with the original Word2vec.

Distributional measures between words

Whereas n-gram models represent words as variable-length, labelled “look-up tables”, count and predict vector models both represent words as unlabelledFootnote7, fixed-dimensionality vectors (see ). As such, calculating a distributional score or distance between two words requires a different process for vector and n-gram LDMs.

In an n-gram LDM, two words are compared simply by looking up one word's distributional score in the context of the other. For instance, the PPMI n-gram model represents a word such as cat by the collection of words that co-occur with cat within radius , alongside their respective PPMI scores. The words cat and claws can then be compared directly using the value

.

By contrast, in a vector LDM, two words are compared by selecting their respective fixed-dimensionality vector representations in the model and calculating the distance between them using vector geometry. For example, the PPMI count vector model represents a word such as cat by the vector of PPMI values between cat and every other word in the corpus; and the word claws is similarly represented by the vector of all PPMI values between claws and every other word in the corpus. Comparing the words cat and claws involves comparing their respective vectors using some measure of distance in high-dimensional vector space. For count and predict vector models, we therefore use three popular distance measures to compare words’ vector representationsFootnote8:

Euclidean distance can be regarded as the “natural” straight-line distance measure in a vector space. It is defined as:

While Euclidean distance is widely used (e.g. Lund & Burgess, Citation1996; Patel et al., Citation1998), it is affected by the overall magnitude of each word vector and is typically inferior to alternatives which are not affected by vector magnitude (Bullinaria & Levy, Citation2007, Citation2012).

Cosine distance is a widely used distance metric (e.g. Landauer & Dumais, Citation1997; Mandera et al., Citation2017; Recchia & Louwerse, Citation2014) that is normalised by overall vector magnitude (and thus not affected by it). It is defined as:

where

is the angle between the vectors u and v. For count vector models, cosine distance has often been found to be the best-performing distance metric across a range of tasks (e.g. Baroni et al., Citation2014; Bullinaria & Levy, Citation2007, Citation2012; Lapesa & Evert, Citation2014). It should be noted that cosine distance, thus defined, is not a true distance metric in the mathematical sense, since it violates the identity-of-indiscernibles and triangle-inequality axioms (see Griffiths et al., Citation2007; Nematzadeh et al., Citation2017, for discussion), and is perhaps better thought of as a measure of vector dissimilarity. Despite the technical inaccuracy,

is conventionally referred to as a distance, and we follow that convention in the present paper. We note, in addition, that human similarity judgements also may not conform to the axioms for a distance metric (Tversky, Citation1977; Yearsley et al., Citation2017).

Correlation distance is a version of cosine distance with mean centering:

where

and

are the means of u and v respectively. In practice, we found that correlation distance gives almost identical results to the more widely used cosine distance in many scenarios. However, some authors including Kiela and Clark (Citation2014) recommend correlation over cosine as a distance measure for LDMs. Like cosine distance, correlation distance is not a distance metric in the mathematical sense, but nonetheless is commonly used and provides a convenient way to express the dissimilarity of vectors.

Corpora

The distributional properties of words and their contexts are estimated from large corpora of text which are representative of the language to varying extents. The size, quality and source (i.e. spoken or written language) of training corpora have been found to affect the performance of LDMs on various tasks (Bullinaria & Levy, Citation2012; De Deyne et al., Citation2015; Mandera et al., Citation2017; Recchia & Jones, Citation2009).

We trained LDMs on three corpora that varied in size, quality, and source.Footnote9 Coming from different sources, each corpus required both individual and shared cleaning and pre-processing steps (detailed below). All corpus processing was done using Python 3.7 and version 3.2 of the Natural Language Toolkit software library (NLTK; Bird et al., Citation2009).

BNC: The British National Corpus (BNC; BNC Consortium, Citation2007) is a very high-quality corpus of 100 million words of spoken and written language. It represents a collection of 4,049 documents of British English language from the early 1990s, collected from a variety of sources, and includes approximately 90 million words of written language and 10 million words of spoken language (both prepared and spontaneous speech). It is a professional corpus of a high quality that was designed to be representative of modern British English. It has been widely used to train LDMs (e.g. Landauer & Dumais, Citation1997; Bullinaria & Levy, Citation2007; Levy & Bullinaria, Citation2001; McDonald, Citation2000; Patel et al., Citation1998). The BNC is provided as a collection of XML files, including document metadata and part-of-speech tagging. A schema for automatic removal of all non-textual tagging is also available from the Oxford Text Archive (Citation2009), yielding a corpus of plain-text documents.

Subtitles: The Subtitles corpus is a reasonably high-quality corpus of 200 million words of spoken language, representing a collection of subtitles from 45,099 television programmes and films broadcast by the British Broadcasting Corporation (BBC) channels in the period 2010–2012. A corpus of BBC subtitles was first used to compile the SUBTLEX-UK database of word frequencies, which outperformed BNC word frequencies in predicting word recognition performance (van Heuven et al., Citation2014). The programmes contain a mixture of scripted and unscripted (i.e. spontaneous) speech for audiences ranging from newborn to adult, across a wide range of topics and genres. It is a high-quality corpus, having been professionally transcribed, although it was not explicitly designed to be representative of British English. Raw subtitle files contain many elements apart from the words spoken during the broadcast, such as non-linguistic descriptions of events and sounds taking place, and numeric mark-up describing the order and timing of utterances. As well as removing all timestamps and associated formatting elements, we removed segments which were likely descriptions of sounds, events or metadata (e.g. LAUGHTER AND APPLAUSE or Subtitles by Red Bee Media Ltd). Two documents were excluded for containing invalid formatting.

UKWAC: The United Kingdom Web as Corpus (UKWAC; Baroni et al., Citation2009) is low-quality corpus of approximately 2 billion words of written language. It comprises text scraped from webpages with .uk domains between 2005 and 2007, where medium-frequency words from the BNC were used as seed words to select pages. It is provided as a collection of plain text files (without HTML markup) and associated source metadata. UKWAC is much larger than the other two corpora, but has been subjected to minimal quality control and therefore contains a much higher level of noise, including typos (e.g. htink instead of think), misspellings (e.g. dissapear instead of disappear), and run-together words (e.g. wantto instead of want to). It has previously been used to train LDMs, particularly predict models (e.g. Baroni et al., Citation2009; Bullinaria & Levy, Citation2012; Mandera et al., Citation2017; J.P. Levy et al., Citation2017; O. Levy et al., Citation2015; Pereira et al., Citation2016). We removed all source metadata prior to further processing.

We processed all three corpora as consistently as possible. After the individual pre-processing steps described above, all corpora were tokenised using the Penn Treebank word tokenizer in NLTK, modified to account for additional non-alphanumeric symbols found with high frequency in the corpora (e.g. £). Resulting tokens were converted to lower case, and most grammatical punctuation was removed. Further details of the tokenization procedure are available in supplementary materials.

Other commonly used corpus pre-processing steps include the removal of low-frequency tokens (Bullinaria & Levy, Citation2007; Levy & Bullinaria, Citation2001; Lund & Burgess, Citation1996; Mikolov, Chen, et al., Citation2013; O. Levy et al., Citation2015), and the removal of high-frequency tokens or those appearing in a “stop list” (Bullinaria & Levy, Citation2012; Lowe & McDonald, Citation2000; Rapp, Citation2003; Riordan & Jones, Citation2011). This is often done to reduce computational cost, but after a thorough investigation, Bullinaria and Levy (Citation2012) found that doing so led to little performance gain over several evaluation criteria. Since we were able to complete all computations without pruning linguistic tokens from the corpus, we did not use such approaches in order to retain maximum vocabulary coverage for the evaluation procedures, and to avoid making psychologically unmotivated alterations to the LDM algorithms.

Evaluation tasks

Since our goal was to examine the efficacy of LDMs in fitting human data across a range of cognitive tasks, we required a suite of tasks which (a) used words as stimuli, (b) involved access to semantics (i.e. conceptual processing), and (c) varied in the complexity of their conceptual processing and use of implicit vs. explicit dependent measures. In order of increasing conceptual complexity (i.e. operationalised as greater diversity of semantic relations in the stimulus set, greater reliance on syntagmatic over paradigmatic relations, and greater reliance on bag-of-words relations over syntagmatic and paradigmatic), we selected the following five tasks: synonym selection (Study 1), similarity and relatedness judgement (Study 2), thematic relatedness production (Study 3), semantic priming (Study 4), and semantic decision (Study 5). Studies 1–3 involve explicit task responses as dependent measures (e.g. ratings, word choice), Study 4 involves an implicit measure of processing effort (i.e. response times), and Study 5 involves both. summarises the task characteristics, and each one is described in more detail in its relevant study below.

Table 2. Overview of evaluation tasks.

Study 1: Synonym selection