ABSTRACT

We investigated the brain responses associated with integrating a speaker's facial emotion into situations in which the speaker verbally describes an emotional event. In two EEG experiments, young adult participants were primed with a happy or sad speaker face. The target consisted of an emotionally positive or negative IAPS photo accompanied by a spoken emotional sentence describing that photo. The speaker's face either matched or mismatched the event-sentence valence. ERPs elicited by the adverb conveying sentence valence showed significantly larger negative mean amplitudes in the EPN time window, and descriptively larger ones in the N400 time window, for positive speaker faces followed by negative event-sentences (vs. negatively matching prime-target trials). Our results suggest that young adults might allocate more processing resources to attend to and process negative (vs. positive) emotional situations when primed with a positive (vs. negative) speaker face, but not vice versa. A post-hoc analysis indicated that this interaction was driven by female participants. We extend previous eye-tracking findings with insights into the timing of the functional brain correlates implicated in integrating the valence of a speaker's face into a multi-modal emotional situation.

1. Introduction

Emotions play a central role in human social interactions and language processing. On a daily basis we face the challenge of deciphering and interpreting emotions in faces, events and linguistic input. Moreover, outside of the lab, we seldom process emotions in isolation. More often than not, we need to decipher and interpret emotions from different modalities and domains based on the (emotional) context in which they are embedded. For example, in a theme park, a speaker with a smile on their face might utter a happy sentence describing how kids are enjoying a roller coaster ride. Yet, from personal experience we may know that the emotions portrayed by facial expressions and by language do not always match: our interaction partner could just as well look sad, for example due to some inner conflict we are not aware of, while uttering a happy sentence that describes how kids are enjoying a roller coaster ride in a theme park. Taking into account that emotions in faces, events or linguistic input are rarely encountered in isolation, the present studies investigated how a speaker's emotional facial expressions may create expectations and modulate the processing of emotionally (mis)matching event-sentence pairs. Our results can further inform accounts of situated language processing (e.g. Knoeferle & Crocker, 2006; Münster & Knoeferle, 2018).

1.1. Emotional face, picture and language processing in isolation

The processing mechanisms underlying emotional content have been the focus of numerous investigations in a) faces (Calvo & Beltran, 2013; Eimer & Holmes, 2007; Rellecke et al., 2012; see Schindler & Bublatzky, 2020 for a review), b) events / pictures (Carretie et al., 2001; Cuthbert et al., 2000; Schupp et al., 2000; see also Olofsson et al., 2008 for a review) and c) isolated written words (e.g. Bayer et al., 2012; Citron et al., 2013; Herbert et al., 2018; Kanske & Kotz, 2007; Kissler et al., 2009; Liu et al., 2013). When studied in isolation, emotional (vs. neutral) facial expressions modulate emotion-sensitive ERP components at the earliest processing stages (see also Schindler & Bublatzky, 2020 for a review). The N170 / VPP (vertex positive potential) discriminates faces from other objects and usually peaks around 140–180 ms post-stimulus onset. It tends to be larger for emotional (vs. neutral) faces (Hajcak et al., 2012). The EPN (early posterior negativity), a negative-going deflection that is mostly largest at posterior electrodes and usually peaks around 250 ms post-stimulus, has also often been observed to be larger for emotional vs. neutral faces (e.g. Bayer & Schacht, 2014; Eimer & Holmes, 2007; Rellecke et al., 2012; Schupp et al., 2004). Another EEG signature that can be modulated by emotional (vs. neutral) content is the LPC / LPP (late positive complex / late positive potential; e.g. Rellecke et al., 2012; Schupp et al., 2004; Wieser & Brosch, 2012). The LPC is a positive-going, long-lasting deflection, often starting around 300 ms post-stimulus. It can last for the full stimulus duration and is typically largest at midline centro-parietal electrodes (Hajcak et al., 2012).

Emotion-sensitive ERP components do not just discriminate between emotional and neutral facial expressions but can also be modulated by differences between positive and negative emotional facial expressions. Emotionally negative (i.e. angry / fearful) faces tend to elicit larger mean amplitudes in the EPN and LPC than emotionally positive (i.e. happy) faces (e.g. Bayer & Schacht, 2014; Rellecke et al., 2012). Yet, as Schindler and Bublatzky (2020) mention, most studies only contrast happy with angry or fearful faces but not with sad faces. Since sad facial expressions, in contrast to angry or fearful expressions, do not, from an evolutionary point of view, indicate imminent danger, the attentional resources allocated to sad faces might differ from those allocated to angry and fearful faces. Hence, it is unclear whether the observed biases also hold for the comparison between happy and sad facial expressions. There is, however, some evidence for a positive-face advantage, i.e. for happy over negative (sad / angry) faces (Calvo & Beltran, 2013; Liu et al., 2010; see also Schacht & Sommer, 2009).

Our brains' responses to emotional (vs. neutral) pictures are similar to those to emotional (vs. neutral) faces: the EPN and the LPC are both enhanced for emotional compared to neutral scenes (see Hajcak et al., 2012; Olofsson et al., 2008 for reviews). Similar to the negativity bias in faces, negative pictures tend to elicit larger EPN and LPC responses than emotionally positive pictures (Bayer & Schacht, 2014; but see Weinberg & Hajcak, 2010 for contrasting evidence in the EPN time window).

In contrast to emotional pictures and faces, emotional language does not rely on concrete visual features conveying emotions, such as a smile in a face or an event in which children are joyfully riding a roller coaster. Despite this arbitrary mapping between physical form and meaning, research has shown that, like emotional facial expressions and pictures, emotional (vs. neutral) language also elicits EPN and LPC effects (e.g. Bayer & Schacht, 2014). Moreover, research on emotional language suggests a positivity bias, manifesting itself in larger EPN and LPC effects for positive compared to negative words (e.g. Bayer & Schacht, 2014; Kissler et al., 2009; Schacht & Sommer, 2009; see Kauschke et al., 2019 for a review).

Hence, while research on valence biases in emotional faces and pictures tends to show a bias for negative over positive emotions (at least when comparing happy with fearful / angry faces), research on emotional language processing suggests a bias for positive over negative emotions. Using a within-subject design to compare these effects across the three domains, Bayer and Schacht (2014) further underlined the negativity bias for emotional pictures and faces and the positivity bias for emotional words, as indicated by larger EPN and LPC effects for negative (vs. positive) pictures and faces and for positive (vs. negative) words.

Even when emotions from different domains are used within one study, a more naturalistic, contextual and multi-modal embedding of emotions featuring emotional speaker faces, emotional scenes and emotional language is hardly ever the focus of (electrophysiological) research. Yet, Wieser and Brosch (2012) suggest that emotional information in, for example, visual events and faces is automatically combined to form a contextual unit, which can lead to the modulation of (emotion-sensitive) ERP components. The specifics of this modulation, however, depend on the emotions portrayed in each of the entities and on their unique contextual combination (Wieser & Brosch, 2012).

1.2. Emotion processing in context

Some studies (Hietanen & Astikainen, 2013; Krombholz et al., 2007; Paulmann & Pell, 2010) have investigated the processing of emotional faces in social context. Measuring N400 mean amplitudes, Paulmann and Pell (2010), for example, assessed how and when emotional prosodic fragments (primes) influenced the processing of emotionally matching or mismatching facial expressions (targets). The N400 is a negative-going component that typically peaks around 400 ms after stimulus onset and is largest over centro-parietal electrodes. It is usually larger for semantically incongruent / mismatching than for congruent / matching stimuli within a context (Kutas & Hillyard, 1984; see also Kutas & Federmeier, 2011), but it has also been observed in affective priming studies, where it is assumed to indicate violations of emotional expectations (e.g. Kotz & Paulmann, 2007; Krombholz et al., 2007; Schirmer et al., 2002). Paulmann and Pell's (2010) results indicated a typical centro-parietal N400 effect for mismatching (vs. matching) prime-target pairs when the prime stayed on the screen for 400 ms. However, no differences were found depending on emotional valence.

Further, Hietanen and Astikainen (2013) showed that participants quickly evaluate and process happy and sad facial expressions depending on their embedding in emotionally positive and negative IAPS pictures, and that the valence of the context can modulate emotional face processing. In their affective priming EEG study, Hietanen and Astikainen (2013) presented participants with positively and negatively valenced pictures from the International Affective Picture System (IAPS) database (Lang et al., 2008) as primes. These picture primes were followed by happy and sad facial expressions as targets. Hence, prime and target could either match (both positive or both negative) or mismatch in emotional valence. Their EEG data revealed that the EPN was more negative following negative (vs. positive) prime pictures and for negative (vs. positive) target faces. Moreover, they found a significant prime-target interaction in the EPN time window: while there was no effect of picture prime valence on sad target faces, for happy target faces the amplitude was more negative following negative (vs. positive) picture primes. Interestingly, Hietanen and Astikainen (2013) also found a reversed priming effect in the N400 time window, with larger positive amplitudes for mismatching (vs. matching) primed target faces. In the LPP time window, the main effect of target face was significant: the amplitude was more positive after sad (vs. happy) faces. Hietanen and Astikainen's (2013) analysis also indicated a significant prime-target interaction: for sad faces, the amplitude was more positive after mismatching than after matching picture primes; for happy face targets, however, there was no difference between matching and mismatching picture primes.

As these studies indicate, emotional context (e.g. emotional prosody, words or pictures) can modulate how emotional faces are processed. However, not many studies to date have investigated the influence of an emotional speaker face on subsequent emotion processing, let alone on the processing of multi-modal emotionally valenced event-sentence pairs.

1.2.1. The influence of faces on emotion and language processing

Interestingly, even without a visually perceived speaker, Schindler and Kissler (2018) showed that the mere belief of being situated in a social context with another human being can modulate emotion processing. In their EEG study, Schindler and Kissler (2018) first videotaped interviews with participants. They then led the same participants to believe that their video would be evaluated either by an unknown human or by a computer (i.e. the senders). While the participants' EEG was recorded, they read positive and negative adjectives that could describe a person (e.g. sympathetic, impolite) and were told that the sender would simultaneously decide, based on the participant's videotaped interview, whether the adjective described them well. This socio-emotional setting revealed a larger EPN and LPP for human (vs. computer) senders. Additionally, the LPP was larger for positive (vs. negative) feedback.

Even when emotions do not play a role in a social setting, the mere presence of a speaker's face can affect language comprehension: Hernandez-Gutierrez et al. (2021) presented participants in their EEG experiment with semantically sensible or insensible spoken sentences. Each sentence was accompanied by either a picture of the speaker's (neutral) face or a scrambled face. Participants' task was to decide via button press whether the sentence made sense. Their results revealed a broadly distributed N400 effect for sentences that did not make sense (vs. sentences that did). This N400 effect was, in addition, significantly larger for conditions with faces (vs. scrambled faces). The authors attributed the broad scalp distribution of the N400 effect to their use of spoken instead of written language (cf. Li et al., 2008; Wang et al., 2011). Hence, the mere combination of a face with spoken language creates a (minimal) social situation which can modulate our brain responses to the linguistic input.

However, few studies to date have investigated whether and how an emotional facial expression modulates the processing of subsequent emotional content. In mimicry research, Foroni and Semin (2011) investigated the influence of subliminally presented emotional facial expressions as primes on evaluative judgments of cartoons. Their results showed that even subliminally presented face primes influenced the judgments of the subsequent cartoons. Hence, the prime faces set up expectations for the following content and, crucially, also affected the processing of this content.

Moreover, one eye-tracking study has shown that emotional faces as primes can also constrain the processing of subsequent emotional context. Carminati and Knoeferle (2013) set up a “speaker-talking-about-events context” using emotional faces, IAPS pictures as emotional events, and spoken language. They presented (younger and older) adults with positive or negative natural facial expressions as primes, which were introduced as the speaker of the following sentence. Following this speaker prime face, two events of opposite emotional valence were depicted side by side on the screen. While participants inspected the images, they heard a positively or negatively valenced German sentence describing one of the events. For instance, the two events would show people involved in a car accident (negative) and children enjoying a roller coaster ride (positive). A translated example sentence would be: I think that the children ride happily on the roller coaster. Hence, the emotional speaker prime face could either match or mismatch the emotional event-sentence target, positively or negatively. Participants' eye movements were recorded while they listened to the sentence and inspected the events, and the gaze data were analysed in different sentence regions. After NP1 onset until the end of the sentence, (younger) participants' fixations to the events were mediated by the speaker's prime face: they fixated the negative event more than the positive one when the prime face was negative (vs. positive), and the positive event more than the negative one when the prime face was positive (vs. negative). The eye-movement results additionally indicated that participants fixated the negatively valenced event more during the processing of a negatively valenced sentence following a negative (vs. positive) facial expression. Carminati and Knoeferle (2013) suggested that these results indicate facilitated processing of the negative sentence when participants were primed with a negative speaker face. However, processing of the positive sentence was not facilitated by the positive prime face.

Building on the above findings, and specifically on Carminati and Knoeferle (2013), we conducted two EEG studies to test the effects of a relatively naturalistic situation, including emotional speaker faces as primes, on the processing of emotional event-sentence pairs as targets. In our experiments, young adult participants first encountered an emotionally positive or negative speaker face, i.e. the prime face. This prime face was followed by a visually displayed positive or negative IAPS event picture (henceforth: event), accompanied by a matching positive or negative spoken sentence. Hence, we not only use emotional faces, events and sentences in a within-study design but, crucially, combine these different emotional modalities to create emotionally matching and mismatching trials. This multi-modal, situated-language-processing setup permitted us to investigate whether and how comprehenders' brain responses during spoken emotional event-sentence processing are modulated by a speaker's emotional facial expression.

If Carminati and Knoeferle's (2013) finding that the facial expression of a speaker can modulate the subsequent processing of emotional event-sentence targets extends to the underlying neural processing mechanisms, we expected ERP differences in event-sentence processing depending on (mis)matching emotional speaker prime faces. These differences should manifest themselves in larger EPN, N400 and / or LPC effects in trials in which the prime face mismatches (vs. matches) the event-sentence (see Section 2.2 for more in-depth information).

2. Experiment 1

2.1. Methods

2.1.1. Participants

We tested 25 right-handed, monolingual German participants between 18 and 30 years of age (12 females). Participants reported no neurological impairments and normal hearing and vision, and none had learned a second language before age 6. Participants were paid €25 for their participation. We obtained approval from the ethics board of the German Linguistic Society (DGfS; laboratory ethics approval valid from 17 September 2016 to 16 September 2022).

2.1.2. Materials and pretest

We used two faces (one female, one male) with their corresponding happy and sad emotional facial expressions as primes. Out of 15 faces, these two were chosen based on a previous rating study as having the most pronounced happy and sad emotional facial expressions compared to their neutral facial expression (see DeCot, 2011, unpublished Master's thesis; see also Carminati & Knoeferle, 2013).

We constructed 80 items. Each item consisted of a positive and a negative IAPS event and two corresponding sentences (one positive, one negative) describing the content of the respective events. In total, there were hence 80 positive and 80 negative IAPS events accompanied by 80 positive and 80 negative sentences.

We controlled the IAPS events for valence and arousal: positive and negative events differed significantly in their valence ratings but not in their arousal ratings (mean arousal: positive events = 4.97, negative events = 4.91). For ethical reasons, and because participants might have found more extreme IAPS events too disturbing, we did not select events with mean arousal ratings above 6.6. The positive and negative events of each pair were also controlled for the number of people depicted.
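The stimulus constraints above (an arousal ceiling of 6.6, with valence differing between positive and negative events while arousal does not) can be illustrated with a minimal Python sketch. The ratings and picture IDs are invented for illustration, and Welch's t statistic is an assumption: the text does not state which test was used for the IAPS norms. p-values are omitted to keep the sketch dependency-free.

```python
from math import sqrt
from statistics import mean, variance

AROUSAL_CEILING = 6.6  # events with a higher mean arousal rating were not used


def eligible(events):
    """Keep only events whose mean arousal rating does not exceed the ceiling."""
    return [e for e in events if e["arousal"] <= AROUSAL_CEILING]


def welch_t(a, b):
    """Welch's t statistic for two independent samples (unequal variances)."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se


# Hypothetical 9-point ratings; not the study's actual IAPS pictures.
positive = [{"id": "p1", "valence": 7.5, "arousal": 5.1},
            {"id": "p2", "valence": 7.9, "arousal": 6.9},  # above ceiling -> excluded
            {"id": "p3", "valence": 7.2, "arousal": 4.8},
            {"id": "p4", "valence": 7.0, "arousal": 5.0}]
negative = [{"id": "n1", "valence": 2.4, "arousal": 5.2},
            {"id": "n2", "valence": 2.1, "arousal": 4.7},
            {"id": "n3", "valence": 2.8, "arousal": 7.1},  # above ceiling -> excluded
            {"id": "n4", "valence": 2.6, "arousal": 4.9}]

pos, neg = eligible(positive), eligible(negative)
t_valence = welch_t([e["valence"] for e in pos], [e["valence"] for e in neg])
t_arousal = welch_t([e["arousal"] for e in pos], [e["arousal"] for e in neg])
print(f"valence t = {t_valence:.2f}, arousal t = {t_arousal:.2f}")
```

With these made-up values, the valence difference yields a large t statistic while the arousal difference stays close to zero, which is the pattern the matching procedure aims for.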

The German positive and negative sentences were constructed with the following structure (see Table 1): the sentence began with the first-person pronoun followed by an opinion verb (e.g. I think) plus the German translation of the relative pronoun that.¹ This beginning was emotionally neutral and ambiguous regarding event content, and it was identical for the positive and negative sentences of an item. Following the relative pronoun, the subject of the sentence, i.e. the first noun phrase (NP1), was still emotionally neutral but disambiguated whether the sentence matched the event: if NP1 referred to the event, event and sentence also matched in emotional valence; if NP1 did not refer to the event, sentence valence and event valence mismatched. Following NP1, the object of the sentence, i.e. the second noun phrase (NP2), carried some emotional valence. The strongest emotional valence was carried by the adverb, which was followed by the sentence-final verb. The nouns (NP1 and NP2) had been rated on a 9-point Likert scale from very positive to very negative by 20 participants who did not take part in the EEG experiments. Since adjectives and adverbs are typically formally identical in German, adverbs were taken from a previously rated list of German adjectives (Kissler et al., 2009). T-tests confirmed that positive and negative NP2 nouns (M positive = 6.46, M negative = 3.07) and adverbs (M positive = 6.8, M negative = 2.76) differed significantly in their valence ratings. NP1 nouns were neutral in emotional valence and did not differ significantly in their valence ratings (M in negative sentences = 4.04, M in positive sentences = 5.93). Within each word class, nouns, adverbs and verbs did not differ in word frequency; word frequencies were obtained from the COSMAS II web corpus (COSMAS I/II, 1991–2021). Additionally, NPs, adverbs and verbs in a positive / negative sentence pair were matched within an item for syllable length, word class and position in the sentence.

Table 1. Sentence construction.

Each item thus had two sentences and two events associated with it. Additionally, each sentence was recorded by a male and a female speaker, and word onsets were aligned for each positive / negative sentence pair of an item. Speaker gender and prime face gender always matched. Each experimental session comprised 320 trials plus 4 practice trials. Because of the experiment's duration, we did not include any fillers; trial presentation was fully randomised.

2.1.3. Design

Given our materials and procedure (see Sections 2.1.2 and 2.1.4), the experiment had a 2 (speaker prime face valence: positive vs. negative) × 2 (event valence: positive vs. negative) × 2 (sentence valence: positive vs. negative) design in which event and sentence valence could mismatch. For our current research question, however, only trials in which event and sentence matched in either positive or negative emotional valence were relevant. Hence, we only analysed the 2 (speaker prime face valence: positive vs. negative) × 2 (event-sentence target valence: positive vs. negative) design (see Table 2). Our conditions of interest were those in which the speaker's emotional prime face either matched (conditions a) and d)) or mismatched (conditions b) and c)) the emotional valence of the event and the sentence (see Section 2.2).² We do not go into detail regarding the other conditions since they were not part of our current research question and hypotheses; see the Supplementary Material for a table including all conditions.
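The collapse of the full design onto the four analysed prime-target cells can be sketched as follows. The mapping of labels to cells is inferred from the text (a) and d) match; b) is a positive face with a negative target, so c) is the other mismatch), and Table 2 remains the authoritative definition. The function and key names are hypothetical.

```python
from itertools import product

VALENCES = ("positive", "negative")


def build_design():
    """Cross speaker prime face valence with event-sentence target valence (2 x 2).

    Event and sentence share one valence in the analysed trials, so the full
    2 x 2 x 2 design reduces to these four prime-target cells.
    """
    cells = {}
    for label, (face, target) in zip("abcd", product(VALENCES, VALENCES)):
        cells[label] = {
            "face": face,
            "event_sentence": target,
            "congruency": "match" if face == target else "mismatch",
        }
    return cells


design = build_design()
for label, cell in design.items():
    print(f"{label}) face={cell['face']:<8} event-sentence={cell['event_sentence']:<8} {cell['congruency']}")
```

Generating the cells from the factor levels, rather than typing them out, keeps the congruency coding tied to the two factors.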

Table 2. Experimental conditions of interest.

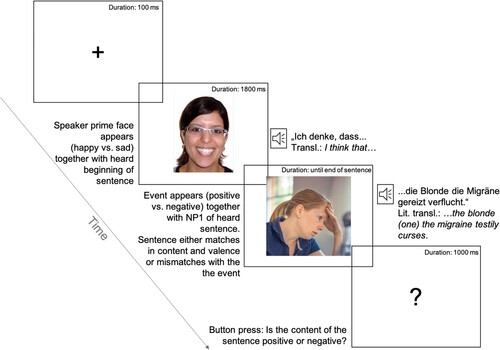

2.1.4. Procedure

During the experiment, participants first saw a black fixation cross. They were asked to fixate the cross and keep their eyes on this position during a trial (an example trial in condition b) is shown in the procedure figure). After the cross (displayed for 100 ms), the speaker's prime face (happy or sad) appeared on the screen for 1800 ms. Simultaneously, participants heard the beginning of the sentence “Ich denke, dass…” (I think that…). This beginning was neutral in emotional valence and did not set up specific expectations about how the sentence would continue (see Table 1). Subsequently, the positive or negative target event appeared at the onset of the spoken NP1, and participants listened to the sentence continuation while looking at the event. The sentence either described the event or was unrelated to it. If the sentence referred to the event, the emotional valence of event and sentence always matched. Recall that for our current research question, only matching event-sentences were taken into consideration. After the sentence ended, the event disappeared and a question mark appeared on the screen for 1000 ms, prompting participants to indicate by keyboard button press whether the sentence content was positive or negative. The assignment of buttons to positive / negative was counterbalanced across participants. The experiment was programmed using Presentation (Neurobehavioral Systems, Inc., Berkeley, CA, n.d.), and triggers were set for the face onset, the NP1 onset (which is emotionally neutral but disambiguates whether the sentence is about the picture or not) and the adverb onset (which carries strong emotional valence information). The experiment took about 60 minutes. Participants were given a short break every 10–15 minutes, i.e. 5 breaks in total.
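To make the trial structure concrete, the following sketch computes the onset of each trial event from the durations stated above. The NP1-to-adverb and NP1-to-sentence-end latencies are hypothetical (they differ between recordings), and the sketch assumes no gaps between screens and a question mark that appears immediately at sentence end.

```python
FIXATION_MS = 100   # fixation cross (stated in the text)
FACE_MS = 1800      # speaker prime face, heard with "Ich denke, dass..." (stated)
QUESTION_MS = 1000  # question mark after the sentence (stated)


def trial_timeline(np1_to_adverb_ms, np1_to_sentence_end_ms):
    """Onsets in ms from trial start for one trial.

    Both arguments are hypothetical, item-specific latencies measured from NP1
    onset. Assumes no gaps between the fixation cross, the face and the event,
    and that the question mark appears as soon as the sentence ends.
    """
    if not 0 < np1_to_adverb_ms < np1_to_sentence_end_ms:
        raise ValueError("the adverb must follow NP1 and precede the sentence end")
    face = FIXATION_MS                      # trigger: face onset
    np1_event = face + FACE_MS              # trigger: NP1 onset, event picture appears
    adverb = np1_event + np1_to_adverb_ms   # trigger: adverb onset
    question = np1_event + np1_to_sentence_end_ms
    return {"fixation": 0, "face": face, "np1_event": np1_event,
            "adverb": adverb, "question": question, "end": question + QUESTION_MS}


timeline = trial_timeline(np1_to_adverb_ms=1100, np1_to_sentence_end_ms=2200)
for name, onset in timeline.items():
    print(f"{onset:>5} ms  {name}")
```

Only the three trigger onsets (face, NP1, adverb) matter for the ERP segmentation; the other entries document the screen sequence.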

2.1.5. EEG recording and preprocessing

We recorded the EEG with a 32-channel BrainVision actiCap slim system (Brain Products GmbH, Gilching, Germany, n.d.a) at a sampling rate of 1000 Hz. The ground electrode was placed at AFz. We used the right mastoid as online reference and placed an additional electrode at the left mastoid for offline re-referencing. Six electrodes above and below the eyes and at the outer canthi were used to capture vertical and horizontal eye-movement artifacts. Pre-processing was done using BrainVision Analyzer 2 (Brain Products GmbH, Gilching, Germany, n.d.b). Offline, the data were downsampled to 250 Hz. Bad trials (except those with eye-movement artifacts) were marked, and we ran an ocular-correction ICA. Following the ICA, the data were filtered with a low cutoff of 0.1 Hz and a high cutoff of 30 Hz using zero-phase-shift Butterworth filters. The data were then re-referenced to the average of both mastoids. To filter out any remaining eye-movement artifacts, we created bipolar EOG channels. The data were then segmented across conditions from −100 to 900 ms relative to the word-region onsets (triggers were set for the face,³ NP1 and adverb onsets). Baseline correction used the 100 ms preceding trigger onset (−100 to 0 ms). Following baseline correction, we performed a semi-automatic artifact rejection to remove any remaining artifacts. Participants with more than 20% artifacts in one of the analysed word regions were removed from the data set and replaced with new participants.⁴ The final data set was then segmented further by condition. Finally, mean amplitudes per condition, participant and analysis region were exported for the statistical analysis.
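The re-referencing and baseline arithmetic described above can be sketched in NumPy. This is not the BrainVision Analyzer pipeline the study used: filtering, ICA and artifact rejection are omitted, and the three-channel layout and onset samples are hypothetical.

```python
import numpy as np

SFREQ = 250                    # Hz after downsampling
TMIN_MS, TMAX_MS = -100, 900   # epoch limits relative to the word-onset trigger


def rereference_linked_mastoids(data, left_mastoid_idx):
    """Re-reference from the online right-mastoid reference to the mastoid average.

    Every recorded channel equals V - RM, including the left mastoid (LM - RM).
    Since V - (LM + RM) / 2 = (V - RM) - (LM - RM) / 2, subtracting half of the
    recorded left-mastoid signal from every channel yields linked-mastoid data.
    data: array of shape (n_channels, n_times).
    """
    return data - data[left_mastoid_idx] / 2.0


def epoch(data, onset_samples):
    """Cut (n_epochs, n_channels, n_times) segments from TMIN_MS to TMAX_MS."""
    start = TMIN_MS * SFREQ // 1000  # -25 samples
    stop = TMAX_MS * SFREQ // 1000   # 225 samples (end exclusive)
    return np.stack([data[:, s + start:s + stop] for s in onset_samples])


def baseline_correct(epochs):
    """Subtract each channel's mean over the pre-trigger interval (-100 to 0 ms)."""
    n_pre = -TMIN_MS * SFREQ // 1000  # 25 samples
    return epochs - epochs[:, :, :n_pre].mean(axis=2, keepdims=True)


rng = np.random.default_rng(0)
raw = rng.normal(size=(3, 2000)) + 5.0  # 3 hypothetical channels; row 2 = left mastoid
reref = rereference_linked_mastoids(raw, left_mastoid_idx=2)
epochs = baseline_correct(epoch(reref, onset_samples=[300, 900, 1500]))
print(epochs.shape)  # (3, 3, 250)
```

Because the right mastoid is the online reference, only the left-mastoid channel is needed to move to a linked-mastoid reference; no separate right-mastoid signal exists in the recording.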

2.2. Hypotheses

Recall that Carminati and Knoeferle's (2013) eye-tracking results suggest that participants' processing of negative sentences was facilitated by a negative speaker prime face (as indicated by more fixations towards the negative than the positive event picture during sentence processing), but that the processing of positive sentences was not influenced by the positive or negative emotional prime faces. If we can conceptually replicate these effects, our EEG results would corroborate the time course of these effects (see Section 1.2.1) and, moreover, extend them by informing us about the underlying functional brain correlates. Hence, if we replicate previous eye-tracking results on emotional facial priming and event-sentence mismatches, we should find larger mean amplitude differences between conditions b) and d), i.e. the positive prime face mismatching the negative event-sentence vs. the negatively matching prime face and event-sentence, than between conditions c) and a), i.e. the negative prime face mismatching the positive event-sentence vs. the positively matching prime face and event-sentence (see Table 2). This would support the eye-tracking results: it might suggest that participants attend more to negative event information and might hence experience greater processing costs when the prime face mismatches negative rather than positive event-sentence targets. This difference should emerge in the NP1 region, which reveals the emotional valence of the event (since NP1 and event onset coincide, see Section 2.1.4) and links the event to the first noun phrase of the sentence.

However, a later effect in the adverb region is also possible: in contrast to Carminati and Knoeferle (2013), participants in our experiment had no event preview and thus had to process the emotional event visually and the first noun phrase auditorily at the same time, and link the noun phrase to the event. This likely yields higher processing costs than in Carminati and Knoeferle (2013), where only the noun phrase presented new information at NP1 onset. Note, however, that while Carminati and Knoeferle (2013) focussed on processing facilitation, as indicated by more fixations to the emotionally matching event picture, we would interpret larger mean amplitudes in response to mismatching event-sentence targets as indicating the allocation of more attentional resources and higher processing costs.
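The predicted asymmetry can be expressed as two mismatch-minus-match differences computed from condition means. This is a minimal sketch, assuming the condition labels of Section 2.1.3 with b) = positive face / negative target and c) = negative face / positive target; the amplitude values are invented. For a negative-going component such as the EPN or N400, a larger mismatch effect shows up as a more negative difference.

```python
def mismatch_effects(means):
    """Mismatch-minus-match differences (uV) for negative and positive targets.

    means: condition mean amplitudes keyed "a"-"d", assuming
    a = pos face/pos target, b = pos face/neg target,
    c = neg face/pos target, d = neg face/neg target.
    """
    neg_target_effect = means["b"] - means["d"]  # mismatch vs. match, negative targets
    pos_target_effect = means["c"] - means["a"]  # mismatch vs. match, positive targets
    return neg_target_effect, pos_target_effect


# Hypothetical condition means (uV) for one region and time window; not the study's data.
example = {"a": -1.0, "b": -2.5, "c": -1.2, "d": -1.0}
neg_effect, pos_effect = mismatch_effects(example)
asymmetry = abs(neg_effect) > abs(pos_effect)  # the pattern predicted above
print(f"negative targets: {neg_effect:+.2f} uV, positive targets: {pos_effect:+.2f} uV, asymmetry: {asymmetry}")
```

The same function can be applied separately to the NP1 and adverb regions and to each time window, which mirrors the two candidate loci of the effect discussed above.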

2.3. Analysis

We analysed the accuracy and speed with which participants responded to the comprehension questions. However, participants were not instructed to respond as quickly and accurately as possible, and we had no specific hypotheses for the behavioural data. Accuracy data were analysed using a generalised linear mixed model (Bates, Mächler, et al., 2015) with a binomial family, given the binary nature of the data. Reaction times were measured from the onset of the speaker prime face until the button press and were log-transformed. Incorrectly answered trials and trials more than 2.5 SD above the condition mean were treated as outliers and removed. The cleaned reaction-time data were analysed using a linear mixed model (Bates, Mächler, et al., 2015). Fixed factors in both the accuracy and the reaction-time analyses were speaker prime face (positive vs. negative) and event-sentence (positive vs. negative). All main effects and interactions were specified in the fixed-effect structure of the models. Random intercepts were specified for subjects but not for items, since the programming script did not include any item-specific information and trial presentation was fully randomised within the presentation software. We included random slopes for all main effects and interactions of the fixed effects in the by-subject random-effect structure. Random-effect structure reduction started with the maximal model supported by the data and followed Bates, Kliegl, et al. (2015). The most parsimonious model for the accuracy data was: Accuracy ~ face * event_sentence + (1 + face + event_sentence | subject), data = data, family = "binomial". The most parsimonious model for the reaction-time data was: ln_RT ~ face * event_sentence + (1 | subject), data = data. p-values were obtained using the Satterthwaite approximation (Kuznetsova et al., 2017; see also Luke, 2017).
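The reaction-time cleaning steps can be sketched in Python as below. Two details are assumptions, since the text does not specify them: the 2.5 SD cutoff is computed on the log RTs of correctly answered trials, and per condition only (not per participant). The mixed models themselves are not reproduced here.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def clean_rts(trials, sd_cutoff=2.5):
    """Drop incorrect trials, log-transform RTs, then drop slow outliers per condition.

    trials: iterable of dicts with keys 'condition', 'rt_ms' and 'correct'.
    A trial is an outlier if its log RT lies more than sd_cutoff SDs above the
    mean log RT of its condition (assumption: computed on correct trials only).
    """
    correct = [dict(t, ln_rt=math.log(t["rt_ms"])) for t in trials if t["correct"]]
    by_condition = defaultdict(list)
    for t in correct:
        by_condition[t["condition"]].append(t["ln_rt"])
    upper = {c: mean(v) + sd_cutoff * stdev(v) for c, v in by_condition.items()}
    return [t for t in correct if t["ln_rt"] <= upper[t["condition"]]]


# Hypothetical trials: condition "a" has one very slow response and one error.
trials = ([{"condition": "a", "rt_ms": 1000, "correct": True}] * 11
          + [{"condition": "a", "rt_ms": 5000, "correct": True},   # slow outlier
             {"condition": "a", "rt_ms": 900, "correct": False},   # incorrect
             {"condition": "b", "rt_ms": 1100, "correct": True},
             {"condition": "b", "rt_ms": 1200, "correct": True},
             {"condition": "b", "rt_ms": 1300, "correct": True}])
kept = clean_rts(trials)
print(len(kept))  # 14
```

Note that a single outlier inflates the SD it is judged against, so with very few trials per condition no value can exceed 2.5 SD; the trimming only bites with reasonably many trials per cell.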

For the EEG data, we analysed mean amplitudes in the NP1 and adverb regions by means of type III ANOVAs (Fox & Weisberg, Citation2019) and divided each analysed word region into two time windows capturing early effects, i.e. 250–400 ms (the EPN time window), and later effects, i.e. 300–600 ms (the N400 time window). We did not analyse even later time windows (e.g. the LPP) since visual inspection did not suggest any differences. To test our hypotheses, we performed 2 (speaker prime face: positive vs. negative) x 2 (event-sentence: positive vs. negative) ANOVAs including electrode as an additional factor. For the EPN time window, the following electrode cluster was used: P3, P4, P7, P8, PO9, O1, Oz, PO10. For the N400 time window, the following cluster was used: C3, C4, Cz, CP1, CP2, P3, P4, P7, P8, Pz, O1, O2, Oz. Additional ANOVAs across all head electrodes were performed if the grand average graphs indicated a broader effect distribution. All significant interactions were followed up with Bonferroni-corrected post-hoc pairwise comparisons.
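To make the dependent measure concrete, the sketch below computes one mean amplitude per electrode for a given time window (the kind of value that enters an ANOVA with electrode as a factor) and applies a Bonferroni correction to a set of post-hoc p-values. The electrode clusters and time windows are taken from the text. The epoch layout (a dict of per-electrode sample lists), the 500 Hz sampling rate, the epoch starting at word onset and the half-open window [start, end) are hypothetical assumptions, not details reported by the authors.

```python
# Electrode clusters and time windows as listed in the text.
EPN_CLUSTER = ["P3", "P4", "P7", "P8", "PO9", "O1", "Oz", "PO10"]
N400_CLUSTER = ["C3", "C4", "Cz", "CP1", "CP2", "P3", "P4", "P7", "P8",
                "Pz", "O1", "O2", "Oz"]
WINDOWS_MS = {"EPN": (250, 400), "N400": (300, 600)}


def mean_amplitudes(epoch, electrodes, window_ms, srate_hz=500.0, t0_ms=0.0):
    """Return {electrode: mean voltage} over samples with start <= t < end (ms).

    `epoch` maps electrode names to lists of voltages (µV); the first sample is
    at t0_ms. Sampling rate and epoch layout are hypothetical assumptions.
    """
    start, end = window_ms
    step_ms = 1000.0 / srate_hz
    result = {}
    for el in electrodes:
        samples = [v for i, v in enumerate(epoch[el])
                   if start <= t0_ms + i * step_ms < end]
        if not samples:
            raise ValueError(f"no samples for {el} in window {window_ms}")
        result[el] = sum(samples) / len(samples)
    return result


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For example, with a 500 Hz epoch starting at word onset, the EPN window (250–400 ms) covers 75 samples per electrode. Note that the two windows overlap (300–400 ms), so the EPN and N400 measures are not independent.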

2.4. Results

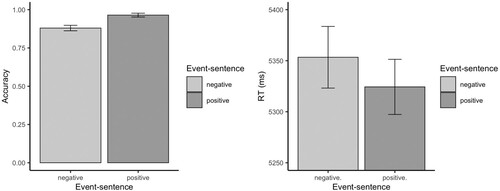

2.4.1. Accuracy and reaction times

Participants responded to 91% of all comprehension questions correctly. The reaction-time and accuracy analyses furthermore revealed a main effect of event-sentence: Participants responded to the comprehension questions (i.e. Was the content of the sentence positive or negative?) significantly more often correctly and also faster when the event-sentence was positive (vs. negative). The accuracy analysis moreover revealed a significant speaker prime face x event-sentence interaction. However, post-hoc pairwise comparisons revealed that speaker prime face valence significantly affected neither the positive nor the negative event-sentence targets.

2.4.2. EEG

2.4.2.1. NP1 region

In the NP1 region, the EEG analysis did not reveal any significant differences between our conditions of interest.

2.4.2.2. Adverb region

The analysis in the adverb region did, however, reveal a significant main effect of speaker prime face in the EPN ,

, p < .001,

) and N400 (

,

, p < .001,

) time windows: Mean amplitude negativities were significantly larger following positive (vs. negative) speaker prime faces. Additionally, the data revealed a significant main effect of event-sentence in the EPN (

,

,

,

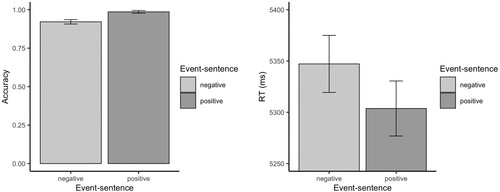

) but not in the N400 time window in that mean amplitude negativities were larger for negative (vs. positive) event-sentence targets. Crucially, however, the analysis furthermore revealed a significant speaker prime face x event-sentence interaction showing significantly larger mean amplitude negativities in the EPN (

,

, p < .001,

) and N400 (

,

, p < .001,

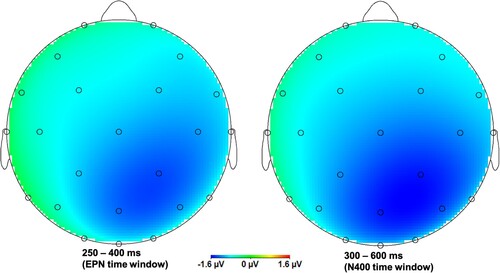

) time windows for negative event-sentence targets when primed with a positive (vs. negative) speaker face (see ). further provides an overview of the EEG results for each experiment by word region and analysed time window. Interestingly, as post-hoc comparisons revealed, mean amplitudes for the positive event-sentence targets were not modulated by positive or negative speaker prime face valenceFootnote5. shows that the negativity for negative event-sentence targets primed with a positive speaker face starts in the EPN time range and is strongest in the N400 time range. Moreover, as the topography shows, the negativity is broadly distributed in both time windows.

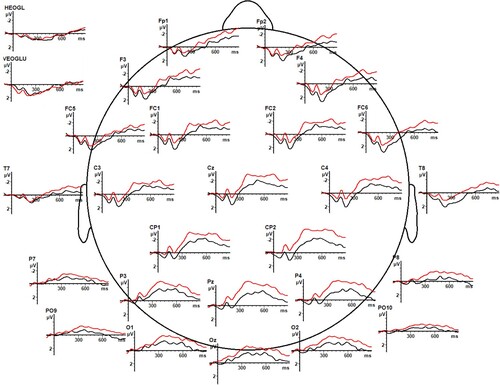

Figure 5. Adverb region: Mean amplitude differences between conditions in which the speaker prime face was positive (i.e. red line) vs. negative (i.e. black line) and event-sentence target was negative (Experiment 1).

Figure 6. Adverb region: Topography for the positive prime face - negative event-sentence target minus negatively matching trials, i.e. matching negative trials subtracted from mismatching positive prime face - negative event-sentence trials (Experiment 1).
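The topography in Figure 6 is based on a difference wave: matching negative trials are subtracted from mismatching positive prime face - negative event-sentence trials. A minimal sketch of that subtraction, and of averaging the difference over a window to obtain one value per electrode for a scalp map, is shown below. The data layout (a dict mapping electrode names to equal-length lists of condition-averaged voltages in µV) and the sample-index window are hypothetical; negative values indicate that the mismatching condition is more negative-going.

```python
def difference_wave(mismatch, match):
    """Per electrode, subtract the match average from the mismatch average.

    Both arguments map electrode names to equal-length lists of voltages (µV),
    e.g. condition averages for positive prime - negative event-sentence
    (mismatch) and negative prime - negative event-sentence (match).
    """
    diff = {}
    for el, mis in mismatch.items():
        mat = match[el]
        if len(mis) != len(mat):
            raise ValueError(f"unequal lengths at electrode {el}")
        diff[el] = [a - b for a, b in zip(mis, mat)]
    return diff


def topography(diff, start_idx, end_idx):
    """Mean difference per electrode over sample indices [start_idx, end_idx)."""
    n = end_idx - start_idx
    return {el: sum(wave[start_idx:end_idx]) / n for el, wave in diff.items()}
```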

Table 3. Summary result comparison across Experiments 1, 2 and the combined analysis for all word regions and time windows.

2.5. Discussion

The behavioural data revealed a main effect of event-sentence valence, indicating faster and more accurate responses to positive (vs. negative) event-sentence targets. Our EEG results also revealed a significant main effect of event-sentence in the EPN time window of the adverb region: Negative event-sentence targets showed more negative-going mean amplitudes than positive event-sentence targets. We will discuss the direction of these effects in Section 5. Our results in the adverb region further indicate that mean amplitude negativities were larger in the EPN and N400 time windows following positive (vs. negative) speaker prime faces,Footnote6 regardless of event-sentence valence. Crucially, however, the EEG data revealed a speaker prime face by event-sentence interaction in the adverb region: A positive (vs. negative) speaker prime face elicited more negative-going mean amplitudes in the EPN and N400 time windows for the mismatching negative (but not the matching positive) event-sentence targets. When the positive speaker prime face needs to be integrated into a negative event-sentence, a conflict might arise, and expectations based on the speaker's facial expression are violated. This might indicate that the positive (vs. negative) prime face created a stronger expectation about the following event-sentence target, resulting in larger ERPs when this expectation was violated by a mismatch in emotional valence.

However, Figure 5 shows that the waveforms start to diverge already at the onset of the adverb region. In fact, an additional ANOVA confirmed a significant speaker prime face x event-sentence interaction in the pre-stimulus baseline of the adverb region (p = .005). This difference in the baseline suggests that the effects we see in the adverb region might already start earlier, i.e. in the NP2 region. However, even though the NP2 region carries some emotional valence information (i.e. the migraine, the melon), the adverb conveys the strongest emotional valence. Hence, we did not expect any effects in the NP2 region and did not set trigger events for it, which prevented us from analysing it post-hoc. To address this issue, Experiment 2 aimed to replicate the findings from Experiment 1 and to investigate potential earlier effects, starting in the NP2 region.
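Why a pre-stimulus difference matters can be illustrated with baseline correction, which subtracts the mean of a pre-stimulus interval from each epoch. The sketch below is a generic illustration, not the authors' pipeline: the baseline length (`n_baseline` samples) is a hypothetical parameter, and this section does not specify which baseline interval was used. If two conditions already differ before adverb onset, for instance because an effect starts in the NP2 region, correcting to a baseline at adverb onset subtracts that difference and can reduce or distort the post-onset effect.

```python
def baseline_correct(samples, n_baseline):
    """Subtract the mean of the first n_baseline samples (pre-stimulus interval)."""
    if not 0 < n_baseline <= len(samples):
        raise ValueError("n_baseline must lie within the epoch")
    baseline = sum(samples[:n_baseline]) / n_baseline
    return [v - baseline for v in samples]


# Hypothetical values (µV): condition A is 1 µV more negative than condition B
# both before onset (first two samples) and after it.
cond_a = [-1.0, -1.0, -2.0, -2.0]
cond_b = [0.0, 0.0, -1.0, -1.0]
print(baseline_correct(cond_a, 2))  # [0.0, 0.0, -1.0, -1.0]
print(baseline_correct(cond_b, 2))  # [0.0, 0.0, -1.0, -1.0]
```

In this toy case, a 1 µV difference that merely continues from the pre-stimulus interval disappears after correction.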

3. Experiment 2

3.1. Methods

From the participants' perspective, Experiment 2 was identical to Experiment 1. In order to capture potential effects in the NP2 region, we set an additional trigger at the onset of the NP2 region. Data analysis was also identical to Experiment 1, except for the additional analysis of the NP2 region.

3.1.1. Participants

We tested another 25 right-handed, monolingual German participants between 18 and 30 years of age (15 females).Footnote7 Participants reported no neurological impairments and normal hearing and vision. None had learned a second language before the age of 6. Participants were paid 25€ for their participation. We obtained approval from the ethics board of the German association for linguistics (DGfS, Laboratory ethics approval, valid from 17 September 2016 to 16 September 2022).

3.2. Results

3.2.1. Accuracy and reaction times

Participants responded to 95% of all comprehension questions correctly, regardless of condition. The reaction-time and accuracy analyses replicated the main effect of event-sentence valence found in Experiment 1: Participants responded to the comprehension question significantly more often correctly and also faster when the event-sentence was positive (vs. negative).

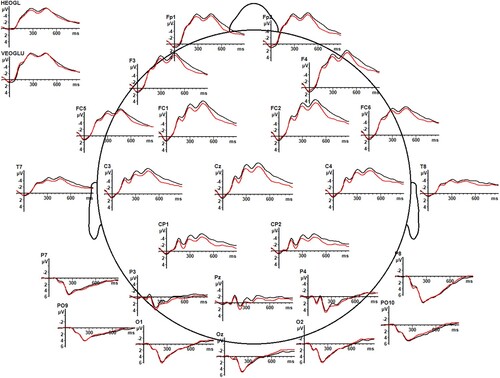

3.2.2. EEG

3.2.2.1. NP1 region

Unlike in Experiment 1, in which we did not find any effects in the NP1 region, in Experiment 2 (see Table 3 for a result comparison across the experiments) a significant main effect of speaker prime face (p = .036) emerged in the N400 (but not in the EPN) time window of the NP1 region, showing more negative-going mean amplitudes for positive (vs. negative) speaker prime faces, regardless of (mis)matching event-sentence. Additionally, a significant main effect of event-sentence valence emerged in the EPN time window (only in the analysis across all electrodes: p = .001) and in the N400 time window (p = .004). The main effect of event-sentence valence in the N400 time window was also significant in the analysis across all electrodes (p < .001). Negative event-sentence targets elicited broadly distributed, larger negativities than positive event-sentence targets.

3.2.2.2. NP2 region

This broadly distributed main effect of event-sentence valence was also significant in the EPN and N400 time windows of the NP2 region (which was not analysed in Experiment 1), both in the electrode cluster (EPN, marginal: p = .053; N400: p < .001) and in the analysis across all electrodes (EPN: p < .001; N400: p < .001). It shows the same pattern as in the NP1 region, i.e. larger negativities for negative (vs. positive) event-sentence targets. No other significant effects emerged in the NP2 region.

3.2.2.3. Adverb region

The analysis in the adverb region replicated the broadly distributed significant main effect of event-sentence valence in the EPN time window across all electrodes. Unlike in Experiment 1, the main effect of event-sentence valence was also significant in the N400 time window (N400 electrode cluster: p = .010; across all electrodes: p < .001). Interestingly, however, the data pattern in the adverb region is reversed compared to Experiment 1: Mean amplitude negativities were larger for positive (mean amplitude for the N400 electrode cluster: −1.5 μV) than for negative (−1.2 μV) event-sentence targets in both analysed time windows. We did not replicate the speaker prime face x event-sentence valence interaction that was present in the EPN and N400 time windows of the adverb region in Experiment 1 (see Section 3.3 for a discussion of individual differences).

3.3. Discussion

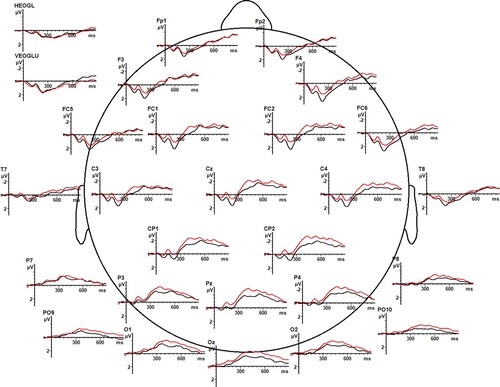

Experiment 2 replicated the behavioural main effect of event-sentence valence in both reaction times and accuracy, indicating faster and more accurate responses for positive (vs. negative) event-sentence targets. Interestingly, we only partially replicated the EEG results from Experiment 1: Both studies showed significantly larger mean amplitude negativities following positive (vs. negative) speaker prime faces and larger negativities for negative (vs. positive) event-sentence targets, although partly in different word regions. While the main effect of speaker prime face, i.e. larger mean amplitude negativities for positive (vs. negative) speaker prime faces, was present in the adverb region (EPN and N400 time windows) in Experiment 1, in Experiment 2 it manifested itself in the NP1 region (N400 time window). Additionally, while in Experiment 1 the main effect of event-sentence valence, i.e. larger mean amplitude negativities for negative (vs. positive) event-sentence targets, was only significant in the EPN time window of the adverb region, it was much more pervasive in Experiment 2: The main effect of event-sentence valence was significant in all analysed word regions (i.e. NP1, NP2 and adverb) and in both the EPN and N400 time windows. However, while the significant event-sentence valence effect in the adverb region of Experiment 2 indicated larger mean amplitude negativities for positive (vs. negative) event-sentence targets, all other word regions (in Experiments 1 and 2) showed the opposite pattern: Mean amplitude negativities were significantly larger for negative (vs. positive) event-sentence targets.

In addition, although Experiment 2 could not replicate the significant speaker prime face x event-sentence interaction from the adverb region in Experiment 1, the mean amplitudes in the adverb region in Experiment 2 showed the same pattern as in Experiment 1: They were larger for trials in which the speaker's prime face was positive and the event-sentence valence negative compared to negatively matching speaker prime face - event-sentence targets (e.g. mean amplitude for N400 electrode cluster when speaker prime face was positive and event-sentence was negative: −1.27 μV vs. negative prime face - negative event-sentence: −1.13 μV).

A possible reason for the non-significant interaction in the adverb region in Experiment 2 compared to Experiment 1 could be high inter-subject variability in processing these mismatches. On an individual subject level, in Experiment 1, 17 out of the 25 participants descriptively showed the observed pattern, while in Experiment 2 this was only the case for 12 out of 25 participants.
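The descriptive per-participant count reported above can be expressed as a simple tally. In this sketch, both the criterion (a participant "shows the pattern" if the mean amplitude for positive prime face - negative event-sentence trials is more negative than for negative prime face - negative event-sentence trials) and the input format are our assumptions; the text does not state which electrode cluster or time window the count was based on.

```python
def count_showing_pattern(per_subject):
    """Count participants whose mismatch amplitude is more negative than match.

    `per_subject` maps a participant ID to a (mismatch_uV, match_uV) pair, e.g.
    mean amplitudes for positive prime - negative event-sentence trials and for
    negative prime - negative event-sentence trials (hypothetical input format).
    Returns (number showing the pattern, number of participants).
    """
    n_pattern = sum(1 for mismatch, match in per_subject.values() if mismatch < match)
    return n_pattern, len(per_subject)
```

With 25 participants per experiment, the reported counts correspond to (17, 25) in Experiment 1 and (12, 25) in Experiment 2, i.e. 68% vs. 48% of participants.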

With regard to the condition differences starting already at adverb onset, the added NP2 region analysis in Experiment 2 supported our prediction that the conditions start to differ before the adverb region. Given that the participants saw the event picture the entire time while listening to the sentence, temporally extended effects might not be unusual, since the time to form expectations as to how the speaker will continue their sentence after hearing "I think that" was relatively long. Even though the prime face x event-sentence interaction was not significant in the NP2 region, the mean amplitudes indicated the same data pattern as observed in the following adverb region. Hence, even though the speaker prime face x event-sentence interaction seemed to be prone to high inter-subject variability, it seemed to be triggered at the earliest point of speaker prime face - event-sentence mismatch. Individual differences could also explain why the data pattern for the significant main effect of event-sentence was reversed in the adverb region of Experiment 2 (i.e. larger mean amplitude negativities for positive (vs. negative) event-sentence targets) compared to all other word regions in both experiments.

4. Combined EEG analysis of Experiments 1 and 2

Since the results from Experiment 1 could only partially be replicated and inter-subject variability seemed to be high, especially in Experiment 2, we combined the NP1 and adverb region data from Experiment 1 with the data from the same word regions in Experiment 2 to see which effects would hold up given the increased power with 50 participants. This post-hoc analysis was possible as both experiments were identical in their set-up and materials. Recall that the only differences between Experiments 1 and 2 were the participants tested and the added NP2 region trigger in Experiment 2. The data analysis was identical to the analyses in Experiments 1 and 2. Additionally, the behavioural data from both experiments was combined into one analysis. Further, we included participant gender as a factor in the behavioural and EEG data analyses to explore one type of individual difference (post-hoc; we thank an anonymous reviewer for this suggestion). This was possible since both Experiments 1 and 2 were approximately balanced for participant gender.

4.1. Results

4.1.1. Accuracy and reaction times

The behavioural analysis of the combined data confirmed the main effect of event-sentence in both the accuracy (SE = 0.16, z = −5.75, p < .001) and the reaction-time data (SE = 0, t = 4.06, p < .001). The reaction-time and accuracy analyses including participant gender revealed interesting gender x event-sentence interactions (accuracy: SE = 0.12, z = −2.4, p < .05; RT, marginal: SE = 0, t = −1.81, p = .070). Post-hoc comparisons indicated faster and less accurate responses for women (vs. men) only after negative (but not positive) event-sentence targets, i.e. a speed-accuracy trade-off. See Appendix A.2 for graphs of these post-hoc findings.

4.1.2. NP1 region

The combined analysis confirmed the significant main effect of event-sentence valence in the EPN time window of the NP1 region from Experiment 2 (see Table 3 for a comparison of results across the experiments). As in Experiment 2, this effect was only significant in the analysis across all electrodes (p < .001), indicating broadly distributed larger mean amplitude negativities for negative (vs. positive) event-sentence targets. No other effects regarding our conditions of interest were significant in the NP1 region.

4.1.3. Adverb region

The adverb region analysis confirmed the significant main effect of speaker prime face found in Experiment 1 in the EPN and N400 time windows, both in the analyses on the EPN and N400 (p = .001) electrode clusters, respectively, and in the analysis across all electrodes (EPN time window: p = .002; N400 time window: p = .043): Mean amplitude negativities were larger following positive (vs. negative) speaker prime faces.Footnote8 Additionally, the analysis on the EPN electrode cluster confirmed the significant main effect of event-sentence in the EPN time window (p = .003) from Experiment 1, indicating larger mean amplitude negativities for negative (vs. positive) event-sentence targets. Crucially, the analysis also confirmed the significant speaker prime face x event-sentence interaction in the EPN time window found in Experiment 1 (in the analysis on the EPN electrode cluster only: p = .011). As Figure 9 shows, mean amplitude negativities are larger for positive speaker prime face - negative event-sentence targets than for negatively matching speaker prime face - event-sentence targets. Even though the interaction was, unlike in Experiment 1, only significant in the EPN time window, the effect is (descriptively) not limited to the EPN electrode cluster and the EPN time window but rather broadly distributed and long-lasting. Post-hoc pairwise comparisons confirmed the significant difference between the mismatching positive speaker prime face - negative event-sentence targets and the matching negative speaker prime face - event-sentence targets. As in Experiment 1, the comparison between negative speaker prime face - positive event-sentence targets and matching positive speaker prime face - event-sentence targets was not significant. Although the prime face x event-sentence interaction was, unlike in Experiment 1, not significant in the N400 time window, the mean amplitude negativities were still larger for positive speaker prime face - negative event-sentence targets (−1.75 μV) than for the negatively matching speaker prime face - event-sentence targets (−1.17 μV).

Figure 9. Adverb region: Mean amplitude differences between positive (i.e. red line) vs. negative (i.e. black line) speaker prime faces and negative event-sentence valence across Experiments 1 and 2.

Including participant gender (post-hoc) as a factor in the analysis of the combined data for the adverb region revealed that gender modulates the speaker prime face by event-sentence interaction in the EPN time window (in the EPN electrode cluster only; p = .019), but no other main effect or interaction in either time window was significantly modulated by gender. Crucially, while women showed the prime face by event-sentence interaction, men did not (see Appendix A.3 for an interaction graph).

4.2. Discussion

The analysis across both experiments disambiguates and strengthens the results from Experiments 1 and 2 (see Table 3 for a comparison of the results across the experiments): In the EPN time window of the NP1 region, participants were only susceptible to the overall valence difference between the positive and the negative event-sentence targets, showing larger mean amplitude negativities in response to negative (vs. positive) event-sentence trials. Since NP1 was emotionally neutral but referred to the event, this larger negativity in response to negative (vs. positive) event-sentence trials can likely be attributed to the valence of the event alone (i.e. the IAPS picture and not the NP1). Additionally, this main effect of event-sentence carried over to the EPN time window of the adverb region, which carried strong emotional valence. Further, upon encountering the emotional adverb, the main effect of speaker prime face in the EPN and N400 time windows indicates that participants re-activated the emotional facial expression of the speaker: Mean amplitude negativities were larger following positive (vs. negative) prime faces.Footnote9 In contrast to the event-sentence effect in the NP1 region, the speaker prime face effect in the adverb region can likely be attributed to the linguistic input carrying strong emotional valence, since at that point participants already knew from the preceding linguistic context (the NP1 and NP2, e.g. literal translation: …the blonde the migraine…) whether the sentence referred to the event or not. Additionally, the main effect of prime face was present regardless of event-sentence target valence. Crucially, the speaker prime face and event-sentence also interacted in the EPN time window of the adverb region: Mean amplitude negativities were larger when a positive speaker face primed a negative event-sentence trial than when the speaker prime face matched the negative event-sentence trial.
Interestingly, as the post-hoc analysis indicated, only female participants showed this interaction. The interaction effect was descriptively also present in the N400 time window and might indicate early emotional processing difficulties arising from violated expectations. Gender did not modulate any effect in the N400 time window.

5. General discussion

In these EEG experiments, participants first encountered an emotionally happy or sad speaker prime face. This speaker prime face was followed by a visually displayed positive or negative event (an IAPS picture), which was accompanied by an emotionally positive or negative spoken sentence. We investigated whether and how comprehenders' brain responses during emotional event-sentence processing are modulated by the speaker's emotional facial expression. Crucially, using spoken language, our study is among the first to investigate EPN effects in emotional spoken language processing (Grass et al., Citation2016; but see also Mittermeier et al., Citation2011; Rohr & Rahman, Citation2015) and likely the first to study multi-modal sentence (as opposed to single-word) processing. Based on the eye-tracking results of Carminati and Knoeferle (Citation2013), we hypothesised that mean amplitude differences in the NP1 or adverb region should be larger for positive speaker prime face - negative event-sentence targets vs. negatively matching trials than for negative speaker prime face - positive event-sentence targets vs. positively matching trials. The results across the two experiments revealed significantly faster and more accurate responses for positive (vs. negative) event-sentence trials. The EEG data furthermore revealed a significant speaker prime face by event-sentence interaction in the adverb region, showing, as predicted, more negative-going mean amplitudes in the EPN time window when the speaker's prime face was happy and event-sentence valence was negative (vs. the negatively matching trials). Mean amplitudes did not, however, differ when the speaker's prime face was sad and event-sentence valence was positive (vs. the positively matching trials). Interestingly, significantly larger mean amplitude negativities for positive (vs. negative) speaker prime faces emerged in the EPN and N400 time windows of the adverb region.
In contrast, larger mean amplitude negativities for negative (vs. positive) event-sentence trials emerged in the EPN time windows of the NP1 and adverb regions. This main effect of event-sentence also emerged in the behavioural data. In the following, we discuss each effect separately, starting with the main effects and followed by the interaction.

5.1. The effect of the speaker prime face during event-sentence processing

The analysis across both experiments revealed that mean amplitude negativities were significantly larger for positive (vs. negative) speaker prime faces, regardless of event-sentence valence. This effect emerged in the adverb but not in the NP1 region and was broadly distributed over the scalp, spanning both analysed time windows, i.e. the EPN and the N400. Interestingly, this main effect of speaker prime face did not emerge at the earliest possible point, i.e. at the onset of the event and of the first noun phrase, but only when participants encountered the strongly emotionally valenced adverb of the sentence. There are two possible, not mutually exclusive explanations for the late emergence of this effect: 1. Right after participants have heard the emotionally neutral beginning of the sentence (I think that…) and the speaker's emotional prime face disappears, the emotional event is displayed on the screen and they hear the first noun phrase of the continuing sentence describing the event (e.g. …the blonde…). Hence, new multi-modal visual and linguistic information has to be recognised, processed and interpreted incrementally. This high cognitive demand may have overshadowed any effect the speaker's prime face might have had at this point. 2. The emotional valence of the speaker's prime face is of little relevance to the more immediate goal of establishing reference in the NP1 region between the depicted event and the NP1, which is necessary in order to correctly process and interpret the event-sentence scenario.

By the time the emotional adverb is encountered, the referential match between the event and the sentence has been successfully established. With the onset of the strongly emotionally valenced adverb, the memory of the speaker's emotional facial expression might have been triggered and the prime face valence re-activated. Larger mean amplitude negativities for happy (vs. sad) emotional prime faces might support a positivity bias in emotional face processing (Kauschke et al., Citation2019; Liu et al., Citation2013). However, there is an ongoing debate about whether there is in fact a positivity or a negativity bias in emotional face processing (Kauschke et al., Citation2019). While behavioural studies often show recognition advantages for positive (vs. negative) emotional faces (e.g. Leppänen & Hietanen, Citation2004) and interpret these effects as a positivity bias (Liu et al., Citation2013), eye-tracking and ERP studies often show more fixations and larger amplitudes for negative (vs. positive) faces and interpret this as a negativity bias (e.g. Bayer & Schacht, Citation2014). Yet, as Schindler and Bublatzky (Citation2020) point out in their review, most studies compare happy with angry and fearful facial expressions, leaving out sad faces. While angry and fearful faces are threat-related and thus, evolutionarily, point to imminent danger, which demands heightened attentional selection (Schindler & Bublatzky, Citation2020), this is not the case for sad faces. It is thus possible that when happy faces are compared with angry and/or fearful faces, a negativity bias emerges, as reflected for instance in larger EPNs for negative (vs. positive) faces (e.g. Bayer & Schacht, Citation2014), since angry and/or fearful faces are emotionally more salient within this comparison (Schindler & Bublatzky, Citation2020).
However, when happy facial expressions are compared with sad ones, this reasoning might no longer hold, and the positive facial expressions might instead be the more salient ones (cf. Kauschke et al., Citation2019). This is also supported by Liu et al. (Citation2013), who, like our study, only used happy and sad (plus neutral) schematic facial expressions in their EEG study and found support for the positivity bias. An additional post-hoc analysis of the ERPs time-locked to prime face onset underlined this reasoning and the findings by Liu et al. (Citation2013), showing larger relative mean amplitude negativities for happy (vs. sad) speaker prime faces in the 200–300 ms time window. However, in contrast to these studies, our study did not focus on ERP effects during face processing alone but investigated how the facial expression of the speaker influences event-sentence processing. Hence, the effects we observe for the prime face are the effects of an emotional face during the processing of a comparatively more complex emotional situation. Related ERP priming effects of positive (vs. negative) emotional IAPS pictures or video sequences on emotional word processing have also been reported by Kissler and Koessler (Citation2011) and Kissler and Bromberek-Dyzman (Citation2021).

5.2. The effect of event-sentence valence

In addition to the main effect of speaker prime face in the adverb region, a significant main effect of event-sentence emerged in the EPN time windows of the NP1 and adverb regions: Mean amplitude negativities were larger for negative (vs. positive) event-sentence targets. This main effect of event-sentence also emerged in the behavioural data: When participants answered whether the content of the heard sentence was positive or negative, reaction times were longer and accuracy was lower for negative (vs. positive) event-sentence targets. Interestingly, the post-hoc analysis including participant gender revealed faster reaction times and lower accuracy for women compared to men, but only for negative event-sentence trials.

If we assume that the EPN amplitude is related to the amount of attention that salient emotional stimuli receive (Aldunate et al., Citation2018; Schupp et al., Citation2004), participants likely allocated more attentional resources to the more salient negative (vs. positive) event-sentence targets. Likewise, participants responded more slowly and less accurately to negative (vs. positive) event-sentence targets, suggesting that these targets received more attention and required more attentional resources to be processed. This main effect of event-sentence already starts in the NP1 region, when linguistic valence information is not yet present, and continues throughout the NP2 (see Section 3.2) and adverb regions. Recall that the adverb region carries strong emotional valence information. These recurring word-evoked (NP1, NP2, adverb) EPN effects might suggest that multi-modal perceptions, rather than independent event and sentence perceptions, are stored: Our target words are embedded in sentences, which, combined with the picture events, form a multi-modal emotional situation. In line with the language-as-context view (Barrett et al., Citation2007), this multi-modal emotional situation might be stored as an emotional perception in which language and visual context are not processed independently of each other. This is also supported by the Conceptual Act Theory, which states that language plays a large role in creating and modulating emotion categories, which are formed based on individual experiences (Barrett, Citation2006). Given these considerations, it is likely that more attentional resources are devoted to the negative (vs. positive) event-sentence targets, as indicated by larger negative mean amplitudes in the EPN time window. This might be the case because negative situations/perceptions are more salient and hence give rise to a negativity bias (cf. Bayer & Schacht, Citation2014).

We can only speculate about reasons for the interesting post-hoc finding that the behavioural event-sentence effect interacted with participant gender. Since women have been shown to outperform men in emotion recognition, especially in negative emotional contexts (for a review see, e.g. Kret & De Gelder, Citation2012), but might also use emotion regulation strategies in negative situations less efficiently than men (McRae et al., Citation2008), they might assign valence faster to negative situations than men. However, women might also be less accurate in doing so because they might, at the same time, engage in emotion regulation strategies that are more cognitively demanding, resulting in more errors in valence assignment.

5.3. The influence of the speaker prime face on event-sentence processing

Central to our research question, we also found a significant speaker prime face by event-sentence interaction in the adverb region. This interaction resulted from more negative-going amplitudes in the EPN time window when the speaker's prime face was happy and event-sentence valence was negative (vs. the negatively matching trials). There was no such difference when the speaker's prime face was sad and event-sentence valence was positive (vs. the positively matching trials). This interaction suggests a clear modulation of event-sentence processing by the speaker's prime face: while processing the emotionally valenced adverb together with the event, participants (consciously or unconsciously) re-activated the speaker's prime face. When this prime face was happy (vs. sad), it modulated the processing of the negative event-sentence. This suggests that participants allocated more attentional resources during the adverb region to this speaker prime face-event-sentence mismatch than to the mismatch in which the speaker's face was sad (vs. happy) and the event-sentence was positive. Although the interaction was no longer significant in the N400 time window in the combined analysis, the effect descriptively persists until the end of the segment. Hence, participants are very likely not just processing but, at least to some degree, also integrating the emotional prime face into the event-sentence target. The N400 effect can indicate violations of emotional context expectations (e.g. Kotz & Paulmann, 2007; Krombholz et al., 2007; Schirmer et al., 2002) and the need to refine initial conceptual representations (Kutas & Federmeier, 2011). We therefore suggest that, when the event-sentence targets were negative, participants needed to refine the initial conceptual representations they had built from the happy speaker prime face upon encountering the negative adverb.
This was not the case when the speaker's prime face was sad and had to be integrated into the positive event-sentence. One additional, tentative explanation for why this effect emerged only for positive speaker prime faces combined with negative event-sentences is that positive emotions can be used to attenuate negative feelings in negatively emotional (e.g. stressful) situations (Tugade & Fredrickson, 2004). Perhaps, when encountering negative event-sentence targets primed by a positive speaker face, participants in our study re-activated the positive speaker face to regulate the negative feeling elicited by the negative event-sentence target. Such regulation was not necessary for positive event-sentence trials.

Regarding previous eye-tracking research, we take our results to extend the findings of Carminati and Knoeferle (2013). They found processing facilitation for negative sentences (indicated by more fixations to the negative event) when younger adults were primed with a negative (vs. positive) facial expression (negative face - negative sentence vs. positive face - negative sentence). There was, however, no facilitation in processing positive sentences when participants were primed with negative or positive facial expressions. Additionally, they found a main effect of picture valence, with more fixations to the negative (vs. positive) event picture. This result complements our main effect of event-sentence, which showed more negative-going mean amplitudes for negative (vs. positive) event-sentence targets.

Finally, the speaker prime face x event-sentence interaction emerged only in the adverb region, i.e. when the emotional valence of the depicted event was highlighted by language, and not before. Hence, even though participants' expectations about what follows a positive speaker face were already violated upon encountering the negative event, they seemed to actively process the mismatch only once the adverb confirmed that violation. This not only emphasises the role of language in emotion processing (Lindquist, 2017) but also supports the suggestion that language is involved in creating emotional perceptions (Barrett, 2006; Barrett et al., 2007). According to Conceptual Act Theory, these emotional perceptions can vary from person to person, even though we, as a language community, might ascribe a single emotion (e.g. anger) to these differing perceptions (Barrett, 2006, 2009). Additionally, our finding that emotion and language processing are tightly linked during on-line sentence comprehension can further contribute to accounts of situated language processing (e.g. Knoeferle & Crocker, 2006; Münster & Knoeferle, 2018).

However, consistent with large individual differences in emotional perceptions (Barrett, 2006, 2009), our results in Experiments 1 and 2 seem to reflect strong inter-individual variability. One reason for this variability might be the relatively long interval between prime face onset and event-sentence target onset (a prime duration of 1800 ms), whereas affective priming has been shown most robustly for short inter-stimulus intervals (e.g. Spruyt et al., 2007).

Another reason for this high variability might be the multi-modal emotional situation that we created. In contrast to most other (EEG) studies investigating emotions, our design included positive and negative emotions in faces, event pictures and sentences, which interacted to form a multi-modal emotional situation. How people process such multi-modal situations might depend on a number of individual factors, such as working memory capacity (Just & Carpenter, 1992) or language processing skills (Nieuwland & Van Berkum, 2006), but also on, e.g., trait anxiety, emotional disposition and intelligence, or proneness to depression (e.g. Maier et al., 2003). None of these factors were assessed in the present studies. Post hoc, however, we did find that participant gender, as one instance of individual differences, modulated the speaker prime face x event-sentence interaction: the analysis suggested that the interaction was carried mainly by the female participants, whereas male participants did not show it. Female (in contrast to male) participants showed significantly larger negative mean amplitudes when a positive (vs. negative) speaker prime face was followed by a negative (vs. positive) event-sentence target. We can only speculate on potential reasons for this effect. Many studies on emotion processing suggest that women are better at expressing and recognising emotions, yet the picture is far from clear-cut (see e.g. Kret & De Gelder, 2012, for a review; and Stevens & Hamann, 2012; Thompson & Voyer, 2014, for meta-analyses). Nevertheless, in their meta-analysis of fMRI and PET studies, Stevens and Hamann (2012) found that women show greater activation than men in response to negative emotions. This finding is supported by Thompson and Voyer (2014) and indicates that women might be more negatively affected by negative emotions than men.
Additionally, McRae et al. (2008), investigating gender differences in emotion regulation, suggested that women might use positive emotions more than men do to down-regulate negative emotion, while men might generally be more efficient at regulating negative emotions. Taken together, a very speculative explanation for our post-hoc finding is as follows. Women but not men might show the prime face x event-sentence interaction because women use the positive speaker prime face to down-regulate the negative event-sentence, so that the prime face affects their processing of that event-sentence. Men, on the other hand, might not down-regulate the negative event-sentence (perhaps because they do not perceive it as very negative). Alternatively, they might use a different (more efficient; see McRae et al., 2008) strategy that does not involve the positive speaker prime face. To shed light on language and emotion processing in more naturalistic situations, more research is clearly needed on which individual factors affect emotion processing in context, and on why and how they do so.

6. Conclusion

In conclusion, our results indicate that our interlocutors' emotional facial expressions are rapidly taken into account when processing multi-modal, emotionally valenced event-sentence pairs. This might especially be the case when the speaker's face sets up an emotionally positive expectation for the upcoming event-sentence pair. Such a face leads the comprehender to expect that what follows will also be emotionally positive. When the emotional valence of the situation violates this expectation, our brains need to allocate more processing resources to attend to and process the mismatching negative emotional situation. Interestingly, post-hoc analyses suggest that this is the case only for women, not for men. Investigating emotional language processing in more naturalistic, multi-modal contexts is a challenging endeavour, especially in EEG research. Our study therefore provides only a starting point for future research that takes on this challenge.

Author contributions

JK, PK and KM designed the experiments. KM conducted the studies and analysed the data. KM wrote the manuscript. JK and PK edited, provided feedback and contributed to the article. All authors approved the submitted version.

Supplemental Material

Acknowledgments

We thank Carsten Schliewe for support in programming the experiments, Ana-Maria Plesça for assistance in data collection and Dimitra Tsiapou for assistance in preparing the behavioural data for analysis. We acknowledge support by the Open Access Publication Fund of Humboldt-Universität zu Berlin.

Data availability statement

The raw data supporting the conclusions of this article can be made available upon request to the first author.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes

1 See Supplementary Material for a list of all German original sentences and their literal and paraphrased English translations.

2 Note that a design was used to test hypotheses about event-sentence (mis)matches relating to thematic role-relations between the person shown on the IAPS picture and the subject of the sentence. These hypotheses were not relevant for our current purpose of investigation.

3 We were not interested a priori in differences in valence processing for the prime face. However, a reviewer asked to see a comparison of ERPs for the valence levels of the speaker prime face. To address this request, we analysed prime face processing post hoc. See Appendix for more information on this analysis.

4 Due to excessive eye-movements to the IAPS pictures and extreme heat during data collection resulting in sweat artifacts, 15 participants were replaced to arrive at a dataset of 25 participants.

5 As shows, condition differences were not limited to the EPN and N400 electrode clusters but were distributed across all electrodes. An analysis across all head electrodes confirmed that all reported significant main effects and the interaction remained significant.

6 Recall, however, that we did not analyse any effects of the face in isolation but that the effect of the prime face is always measured during the processing of the event-sentence target. See Appendix for a post-hoc analysis of the face in isolation across both experiments combined.

7 Due to excessive eye-movements to the IAPS pictures and extreme heat during data collection resulting in sweat artifacts, 9 participants were replaced to arrive at a dataset of 25 participants.

8 For the combined analysis, we additionally analysed (post hoc) the effect of the prime face in isolation, both in a time window chosen by visual inspection of the grand averages (200–300 ms post face onset) and in the pre-defined EPN and N400 time windows. Only the analysis in the 200–300 ms post-face-onset time window showed significantly larger relative negativities for the positive (vs. negative) speaker prime face. No other significant effects in processing the different levels of the speaker's facial expression emerged. See Appendix A.1 for a grand average graph and additional information on this analysis.

9 This assumption is further strengthened by the main effect of the prime face in isolation in the 200–300 ms time window already indicating larger relative negativities for positive (vs. negative) speaker prime faces.

References

- Aldunate, N., López, V., & Bosman, C. A. (2018). Early influence of affective context on emotion perception: EPN or early-N400? Frontiers in Neuroscience, 12, 708. https://doi.org/10.3389/fnins.2018.00708

- Barrett, L. F. (2006). Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review, 10(1), 20–46. https://doi.org/10.1207/s15327957pspr1001_2

- Barrett, L. F. (2009). Variety is the spice of life: A psychological construction approach to understanding variability in emotion. Cognition and Emotion, 23(7), 1284–1306. https://doi.org/10.1080/02699930902985894

- Barrett, L. F., Lindquist, K. A., & Gendron, M. (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11(8), 327–332. https://doi.org/10.1016/j.tics.2007.06.003

- Bates, D., Kliegl, R., Vasishth, S., & Baayen, H. (2015). Parsimonious mixed models. arXiv preprint arXiv:1506.04967.