ABSTRACT

Generativity, the ability to create and evaluate novel constructions, is a fundamental property of human language and cognition. The productivity of generative processes is determined by the scope of the representations they engage. Here we examine the neural representation of reduplication, a productive phonological process that can create novel forms through patterned syllable copying (e.g. ba-mih → ba-ba-mih, ba-mih-mih, or ba-mih-ba). Using MRI-constrained source estimates of combined MEG/EEG data collected during an auditory artificial grammar task, we identified localised cortical activity associated with syllable reduplication pattern contrasts in novel trisyllabic nonwords. Neural decoding analyses identified a set of predominantly right-hemisphere temporal lobe regions whose activity reliably discriminated reduplication patterns evoked by novel, untrained stimuli. Effective connectivity analyses suggested that sensitivity to abstracted reduplication patterns was propagated between these temporal regions. These results suggest that localised temporal lobe activity patterns function as abstract representations that support linguistic generativity.

Introduction

Rule- and constraint-based models of language processing (cf. Jackendoff, 2002; Prince & Smolensky, 2004) rely on abstract linguistic variables to explain the generativity that is the hallmark of human thought. Like the variables used in algebra or computer programming, linguistic variables enable generalisation by allowing a single computation or structural constraint to apply to a potentially open set of specific instances (Jackendoff & Audring, 2020). Variables were originally proposed in linguistic theory to explain how language users can generate and evaluate an infinite number of new forms (Chomsky, 1965). For example, the rule that the regular English past tense is formed by adding -ed to a verb requires the representation of a variable, VERB, that applies to all verb forms regardless of their familiarity or phonological similarity to one another. Variables describing nouns, verbs and their modifiers account for how speakers generate and evaluate the grammaticality of semantically anomalous sentences like Colorless green ideas sleep furiously (Chomsky, 1957). Similarly, variables that capture consonants as a class appear to be needed to explain the ability of Hebrew speakers to generalise native constraints on the patterning of consonants in root morphemes to novel forms that contain phonemes not found in Hebrew (Berent et al., 2012). Variable-dependent models describing the patterning of classes of speech sounds in words or syllables, or of classes of words defined by grammatical and semantic roles, are used to capture structural constraints on the acquisition, perception, and production of language, as well as its breakdown after pathology (Berent, 2013; Garraffa & Fyndanis, 2020; Pinker, 1984). Given the variable's foundational role in theory, it is striking that the neural basis of variable representation has been largely unexplored.

In both rule- and constraint-based approaches and non-rule-based approaches, including Hierarchical Bayesian Inference (Tenenbaum et al., 2011) and deep learning (LeCun et al., 2015), generativity depends on the representation of abstract features that capture classes of items that do not share common input features. For example, convolutional neural network models learn a hierarchy of increasingly abstract representations across model layers that allows them to categorise or label novel images lacking reliable pixel-by-pixel correspondences with other images in their classes (LeCun et al., 2015). Similarly, Hierarchical Bayesian Inference (HBI) models can discover hierarchical levels of category abstraction (e.g. chihuahua < dog < animal) and use those abstract representations to make probabilistic inferences about the world (e.g. a chihuahua is also a dog, but cannot be both a dog and a cat) (Kemp & Tenenbaum, 2008). In all of these approaches, the scope of a representation determines the scope of generalisation. Abstraction can either widen generalisation by capturing a broad class of exemplars, or narrow it by delimiting a smaller class of exemplars.

The productive linguistic process of reduplication provides a critical test case for the role of variables in linguistic generativity (Berent, 2002; Berent et al., 2002; Marcus, 2001; Marcus et al., 1999). Reduplication is a morphophonological process in which words or parts of words are duplicated to change their meaning or grammatical properties. Importantly, reduplication can be applied to a universally quantified class of phonological inputs regardless of their phonemic constituency or phonological similarity to familiar reduplicated forms (Marcus, 2001). In formal terms, reduplication requires a variable such as WORD or SYLLABLE to capture an open set of potentially repeatable forms. Here, abstraction over an open class of elements produces broad generativity that is not limited by phonemic similarity between a potentially reduplicable syllable (or other unit) and the units already known to undergo reduplication. Muysken (2013) observes that reduplication is a universal strategy in improvised language behaviour, used when speakers with limited overlapping proficiency in a common language communicate, as in communication involving early language learners or the creation of pidgins in early language contact. It is also used productively in many of the world's languages to mark diverse properties including tense, case, number, intensity, size, and collectivity. Rubino (2013) examined a diverse sample of 368 languages and found that 35 had productive processes limited to whole-word reduplication, while 278 had productive reduplication of both whole words and portions of words. Only 55 (15%) lacked any form of productive reduplication. Examples include whole-word reduplication marking plurality in Japanese (e.g. yama – mountain; yamayama – mountains) and partial reduplication marking tense in Tagalog (e.g. sulat – writing; su-sulat – will write).
While reduplication frequently involves adjacent copying of an element, a number of languages also employ discontinuous reduplication, in which morphologically distinct elements are placed between a base element and the copied form (e.g. in Khasi: tuh – steal; tuh sat tuh – crafty) (Mattiola & Masini, 2022). Although Rubino does not classify English as a reduplicating language, English has several forms of productive reduplication, including contrastive reduplication, a complex process involving interacting phonological, morphological, syntactic and lexical factors that narrows the interpretation of an element, as in the sentence “It's a tuna salad, not a SALAD-salad” (Ghomeshi et al., 2004). A number of languages, including the Semitic languages, place constraints on the patterning of reduplicated phonemes and syllables that influence online speech perception (Berent et al., 2002). Research into the learnability of artificial grammars shows that human infants readily acquire repetition-based grammars and generalise them to novel exemplars (Marcus et al., 1999). Indeed, grammars based on repetition generalise more robustly than grammars based on other properties (Gomez et al., 2000; Tunney & Altmann, 2001).
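To make the role of a variable concrete, the following Python sketch (our illustration, not part of the study's materials) labels a trisyllabic form using only identity relations between syllable positions. Because it never inspects the phonemes themselves, it applies equally to familiar and never-heard syllables, which is the property that separates a variable-based rule from similarity-based generalisation. The function name `reduplication_pattern` is hypothetical.

```python
from typing import Optional, Sequence


def reduplication_pattern(syllables: Sequence[str]) -> Optional[str]:
    """Label a trisyllabic form as AAB, ABA or ABB from syllable identity alone.

    Only equality between positions is tested, so any syllables work,
    including ones never encountered before.
    """
    if len(syllables) != 3:
        raise ValueError("expected exactly three syllables")
    s1, s2, s3 = syllables
    if s1 == s2 != s3:
        return "AAB"  # adjacent copy of the first syllable
    if s1 != s2 == s3:
        return "ABB"  # adjacent copy of the second syllable
    if s1 == s3 != s2:
        return "ABA"  # discontinuous copy: the first syllable recurs after B
    return None  # no reduplication (ABC) or total identity (AAA)


for form in (["ba", "ba", "mih"], ["ba", "mih", "mih"], ["ba", "mih", "ba"]):
    print("-".join(form), reduplication_pattern(form))
```

The same function labels chih-chih-sha and ba-ba-mih identically even though they share no phonemes, which is the kind of generalisation the task probes.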

The purpose of this study was to identify localised patterns of brain activity associated with reduplication patterns and to determine whether they function as variables, or as abstract representations with variable-like scope. Current neural research does not provide strong evidence for the neural representation of phonological variables. Related research using between-trial repetition of visual or auditory stimuli has identified repetition-driven neural suppression and, occasionally, enhancement effects, primarily in bilateral primary sensory cortices (Segaert et al., 2013). These effects are sensitive to task and design and are related to changes in top-down influences on those cortices (Ewbank et al., 2011; Hsu et al., 2014; Summerfield et al., 2008). They do not involve the generation or evaluation of novel forms based on abstract properties, and so do not require variable representation.

We operationalise neural representation as a pattern of regional activity in a functionally relevant brain area that reliably indexes a thing or category and has identifiable downstream influences on activity in other regions that plausibly relate to an observable cognitive ability (Dennett, 1987; Kriegeskorte & Diedrichsen, 2019). To function specifically as the representation of a variable, these patterns of neural activity should be evoked by novel inputs irrespective of their similarity to known items and should support generative operations, including the evaluation of novel forms.

We collected simultaneous magnetoencephalography (MEG) and electroencephalography (EEG) data from adult subjects while they completed an artificial grammar learning task previously used to explore abstract rule learning in infants (Marcus et al., 1999). In each block, subjects first heard a series of thirty trisyllabic CVCVCV nonsense words (exposure stimuli) with a common syllable reduplication pattern (e.g. AAB as in chih-chih-sha), and were then asked to indicate by button press whether subsequent nonsense words composed of different syllables (test stimuli, e.g. ba-ba-mih) “came from the same imaginary language”. We compared neural responses to test stimuli in the three syllable reduplication conditions (AAB, ABB, and the discontinuous reduplication pattern ABA) using three complementary approaches: activation contrast, neural decoding, and effective connectivity analyses. Specifically, to determine whether reduplication is represented abstractly, we examined whether previously unheard nonsense words evoke localised patterns of activation that support machine learning categorisation (i.e. neural decoding) of syllable reduplication, and we explored potential causal downstream consequences of those localised activations using effective connectivity measures. We reasoned that for subjects to determine whether the reduplication pattern of a novel stimulus is consistent with the target pattern irrespective of its phonemic content, they must represent the reduplication pattern of that stimulus.

Materials and methods

Participants

Twelve right-handed adults (8 females, age range 23–40 years, mean 31.7) participated in this study. None of the participants reported a history of hearing loss, speech/language or motor impairments, and all were native speakers of Standard American English who self-identified as monolingual. Informed consent was obtained in compliance with the Partners HealthCare Human Subjects Review Board, and all study procedures complied with the principles for ethical research established by the Declaration of Helsinki. Participants were paid for their participation.

Materials

The auditory stimuli were unique trisyllabic nonwords. Each was formed by concatenating syllables from a pair drawn from one of two phonetically distinct syllable sets. Syllables were recorded independently to avoid introducing coarticulatory cues to other syllables. Each pairing was used to create 2 items each with AAB, ABA, and ABB patterning, such that each syllable occurred an equal number of times in all syllable positions (see Table S1). Six syllables (/ðɪ/, /θu/, /ʒu/, /ʃa/, /tʃɪ/, and /dʒɪ/) were recorded by a female American English talker [AS] and combined to form 90 exposure items. Sixteen different syllables (/ba/, /pɪ/, /ga/, /mɪ/, /fu/, /va/, /ta/, /dɪ/, /nɪ/, /zɪ/, /sɪ/, /la/, /rɪ/, /ji/, /hɪ/, /ka/, and /wa/) were recorded by a male American English talker [DG] and combined to form 720 test items. We designed exposure and test stimuli to minimise featural overlap. Exposure items employed affricates and interdental and palatal fricatives as consonants. Test items employed stops, sonorant consonants (nasals, glides and liquids) and labiodental and alveolar fricatives. Vowels overlapped between the exposure and test sets, but a substantial proportion of words (29.2%) had the same vowel in all syllables, and so vowel identity alone did not reliably distinguish reduplication patterns. Auditory stimuli were recorded at a sampling rate of 44.1 kHz with 16-bit resolution and manipulated using PRAAT (Boersma & Weenink, 2015) to equate syllables for duration (250 ms) and mean intensity.
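The reported item counts are consistent with a simple construction rule, sketched below under an assumption of ours that the text does not state outright: every unordered pair of syllables in a set is used, with each syllable of the pair serving once as A and once as B in each pattern. Under that reading, six exposure syllables give 15 pairs × 6 items = 90 exposure items, sixteen test syllables give 120 pairs × 6 = 720 test items, and each syllable appears equally often in every position. The helper `build_items` is hypothetical, and the test syllables are replaced by placeholder labels.

```python
from collections import Counter
from itertools import combinations

PATTERNS = ("AAB", "ABA", "ABB")


def build_items(syllables):
    """Build AAB, ABA and ABB items from every unordered pair of syllables.

    Each syllable of a pair serves once as A and once as B, giving
    2 items per pattern and 6 items per pair.
    """
    items = []
    for x, y in combinations(syllables, 2):
        for a, b in ((x, y), (y, x)):
            for pattern in PATTERNS:
                items.append((pattern, tuple(a if slot == "A" else b for slot in pattern)))
    return items


exposure = build_items(["ðɪ", "θu", "ʒu", "ʃa", "tʃɪ", "dʒɪ"])
test_items = build_items([f"s{i}" for i in range(16)])  # placeholder labels for 16 syllables
print(len(exposure), len(test_items))  # 90 720
# Occurrences of one syllable in positions 1, 2 and 3 of the exposure set
print([Counter(form[pos] for _, form in exposure)["ʃa"] for pos in range(3)])  # [15, 15, 15]
```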

Procedure

Within a single session, subjects performed a delayed two-alternative forced-choice categorisation task during simultaneous MEG and EEG recording. The purpose of the task was to ensure that subjects attended to syllable repetition patterns. Before the experiment began, subjects were presented with an example of the task with correct and incorrect answers revealed, to ensure that they understood its instructions and goals. The pretraining stimuli consisted of four-word sequences (e.g. red-red-blue-blue, dog-cat-cat-dog) reflecting patterns not used in the experiment (e.g. AABB, ABBA). Participants were given 4 examples of a pattern and then heard further examples, each followed by the feedback “this is part of the language” if it fit the target pattern or “this is not part of the language” if it did not.

The actual task consisted of 720 test trials, presented in 6 blocks of 120 trials each. Subjects were given a chance to rest between blocks to prevent fatigue. At the beginning of each block, subjects were instructed to listen to a stream of nonsense words that all belonged to the same “invented language” (the exposure items). All auditory stimuli in each stream followed the same trisyllabic reduplication pattern: ABA, AAB, or ABB (two blocks of each). Subjects were also told that after hearing the stream of nonsense words, they would hear different individual potential “words” (the test items) and would have to respond with a green button press if the individual word belonged to the same invented language as the preceding stream and a red button press if it did not. In each block, 60 trials featured auditory stimuli following the same reduplication rule as the preceding exposure stream (i.e. the correct response was green). The remaining 60 trials were evenly split between the other two reduplication rules (30 per rule). Trial order was randomised within each block, with only one exemplar derived from each syllable pairing presented within a block. Block order was pseudorandomised between subjects, with the restriction that the same target pattern never occurred in two consecutive blocks.
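A minimal sketch of the block structure described above. It uses rejection sampling (our choice; the text does not say how the pseudorandom order was generated) to ensure that the same target pattern never occurs in consecutive blocks, and it assembles the 120 test-trial patterns for one block: 60 matching the target and 30 of each other pattern. Assignment of specific items, including the one-exemplar-per-syllable-pairing constraint, is not modelled. Function names are hypothetical.

```python
import random

PATTERNS = ("AAB", "ABA", "ABB")


def block_order(rng, repeats=2):
    """Order target patterns so each is used `repeats` times and never in consecutive blocks."""
    blocks = [p for p in PATTERNS for _ in range(repeats)]
    while True:  # rejection sampling: reshuffle until no two adjacent blocks match
        rng.shuffle(blocks)
        if all(a != b for a, b in zip(blocks, blocks[1:])):
            return blocks


def block_trials(target, rng, n_match=60, n_per_foil=30):
    """Shuffled test-trial patterns for one block: matches plus each non-target pattern."""
    trials = [target] * n_match
    for p in PATTERNS:
        if p != target:
            trials += [p] * n_per_foil
    rng.shuffle(trials)
    return trials


rng = random.Random(1)  # seeded so the example is reproducible
order = block_order(rng)
trials = block_trials(order[0], rng)
print(order)
print(len(trials), {p: trials.count(p) for p in PATTERNS})
```

Rejection sampling is simple and unbiased over valid orders here because a large fraction of the 90 distinct arrangements of six blocks already satisfy the constraint.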

Experimental trials began with a 500-ms presentation of a visual fixation stimulus (+). This fixation interval was immediately followed by the presentation of a 750-ms spoken trisyllabic nonsense word. The fixation stimulus disappeared at the offset of the nonsense word. After 500 ms, a question mark appeared on the screen, prompting subjects to indicate whether the item belonged to the invented language from the preceding exposure stimuli by pressing one of two keys on a keypad using their left hand. No feedback was given after correct responses, but incorrect responses were followed by the 2-second presentation of a red “X”. There was a 500-ms intertrial interval.

Data acquisition and estimation of cortical source currents

MEG and EEG data were collected simultaneously using a whole-head Neuromag Vectorview system (MEGIN, Helsinki, Finland) in a magnetically shielded room. The 306-channel MEG system has 102 magnetometer and 204 planar gradiometer sensors. Recordings also used a 70-channel EEG cap with a nose reference and two electro-oculogram (EOG) channels to identify blink and eye-movement artifacts. MEG and EEG data were filtered between 0.1 and 300 Hz and sampled at 1000 Hz. The locations of all EEG electrodes, four head-position indicator coils, and about 100 additional surface points on the scalp were determined relative to two auricular landmarks and the nasion before data collection, using a FASTRAK 3D digitizer (Polhemus, Colchester, VT). The position of the head within the MEG sensor array was measured at the beginning of each block during testing.

Following MEG/EEG testing, we collected anatomical MRI data from each participant using either a Siemens 1.5T Avanto or a 3T Trio 32-channel “TIM” system. High-resolution 3D T1-weighted head-only anatomical images were obtained using an MPRAGE sequence (TR = 2730 ms, TI = 1000 ms, TE = 3.31 ms, FOV = 256 mm, slice thickness = 1.33 mm). FreeSurfer (Fischl, 2012) was used to reconstruct the cerebral cortex of each subject as a tessellated surface of approximately 300,000 vertices. Individual subject MRI data were aligned and averaged using a spherical morphing technique.

MRI-constrained cortical minimum-norm estimates (MNE) of the sources of the combined MEG and EEG signals were created using the MNE processing stream (22). Sharon et al. (2007) demonstrated that combining simultaneously acquired MEG and EEG data provides more accurate source localisation than either modality alone, owing to the complementary properties of the MEG and EEG signals. For the forward model, a three-compartment boundary element model (brain, skull, and scalp) was constructed for each subject using the skull and scalp surfaces segmented from the MRI. The source space was defined by assigning current dipoles to about 10,000 vertices of each reconstructed cortical hemisphere; dipole orientation was not constrained. Source estimates were reconstructed from 200 ms before to 1000 ms after the onset of each novel auditory test stimulus, with the 200-ms prestimulus interval used as the baseline. Source estimates were calculated at the individual participant level and then transformed onto the averaged group cortical surface for the identification of regions of interest (ROIs).

Regions of interest

All analyses were performed on a common set of ROIs. Because Granger causation analysis imposes the most stringent constraints on ROI identification, we determined the ROIs using an algorithm designed to identify a set that meets the assumptions of Granger analysis (Gow & Caplan, 2012). This algorithm is automated within our Granger Processing Stream (https://www.nmr.mgh.harvard.edu/software/gps). Ideally, Granger analyses should be carried out over the broadest possible set of non-redundant signals. To achieve this, we averaged MNE source estimates associated with test stimuli across all subjects and conditions over the interval of 100–500 ms and identified the dipoles in the 95th percentile of mean activation. We chose this interval to span the period from the earliest cortical sensitivity to speech signals to the end of the window typically used to measure the lexically sensitive N400 event-related potential (Marinković, 2004). Although this period does not cover the full duration of the test stimuli, it is long enough to capture critical speech processing dynamics related to reduplication of the first syllable in AAB tokens, but short enough to mostly exclude activation associated with task-specific response preparation, including the potential initiation of responses that accept an AAB token as a member of an AAB target “language” on the basis of its first two syllables. Once we selected the strongest dipoles, we eliminated all weaker dipoles within 5 mm of them. For the remaining dipoles, we normalised the individual activation functions and performed pairwise similarity comparisons using a Euclidean distance measure. Based on these measures, we eliminated the weaker dipole of any pair whose activation functions differed by less than 0.6 standard deviations. This process identified a set of 68 centroid dipoles that we used to seed individual ROIs.
We estimated the spatial extent of ROIs from these averaged data by growing patches around the centroids that included all contiguous dipoles with similar activation functions, again based on comparing the normalised activation functions of the centroid and contiguous dipoles, this time including all dipoles whose difference from the centroid was within 0.5 standard deviations. Two other parameters within the algorithm, continuity and spatial weight, were set at their default values of 1 and 0.6, respectively. Note that all parameters used in ROI identification were set heuristically to provide a comprehensive set of potentially causal sources, as required by Granger analysis. These parameters yielded ROIs that were large enough to be subdivided into multiple signals to support machine classification. Post hoc analyses showed that ROI selections were relatively stable across a range of potential parameter settings. Table S2 shows the MNI coordinates of the centroid of each ROI along with an anatomical label for that point provided by FreeSurfer's automatic parcellation tool using the Desikan atlas (Desikan et al., 2006). Figure S1 shows the spatial extent of each ROI visualised over an averaged cortical surface. Although the ROIs were defined using averaged subject data, all subsequent analyses were conducted on individual subject data, with the ROIs projected from the averaged cortical surface onto individual cortical surfaces.
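The centroid-selection steps can be sketched in NumPy as below. This is a simplified illustration, not the Granger Processing Stream implementation. We assume that "activation" is the mean absolute source amplitude, that "normalised" means z-scored over time, and that two time courses count as similar when the root-mean-square difference of their z-scores falls below 0.6 standard deviations. Patch growing, continuity and spatial weighting are omitted, and `select_centroids` is a hypothetical name.

```python
import numpy as np


def select_centroids(act, pos_mm, pct=95.0, min_dist_mm=5.0, sim_sd=0.6):
    """Greedy selection of ROI centroid dipoles (simplified sketch).

    act    : (n_dipoles, n_times) group-averaged activation, e.g. 100-500 ms
    pos_mm : (n_dipoles, 3) dipole positions in millimetres
    Returns indices of retained dipoles, strongest first.
    """
    strength = np.abs(act).mean(axis=1)
    cand = np.flatnonzero(strength >= np.percentile(strength, pct))
    cand = cand[np.argsort(strength[cand])[::-1]]  # strongest first

    # Pass 1: drop dipoles lying within min_dist_mm of a stronger retained dipole
    spaced = []
    for i in cand:
        if all(np.linalg.norm(pos_mm[i] - pos_mm[k]) >= min_dist_mm for k in spaced):
            spaced.append(int(i))

    # Pass 2: z-score each time course so only its shape is compared, then drop
    # dipoles whose RMS z-score difference from a stronger one is below sim_sd
    z = act - act.mean(axis=1, keepdims=True)
    z = z / (z.std(axis=1, keepdims=True) + 1e-12)
    kept = []
    for i in spaced:
        if all(np.sqrt(np.mean((z[i] - z[k]) ** 2)) >= sim_sd for k in kept):
            kept.append(i)
    return kept


t = np.linspace(0.0, 1.0, 50)
act = np.stack([3.0 * np.sin(2 * np.pi * t),        # strongest
                2.0 * np.sin(2 * np.pi * t + 1.0),  # only 2 mm from dipole 0
                1.5 * np.sin(2 * np.pi * t),        # far away, same shape as dipole 0
                1.0 * np.cos(6 * np.pi * t)])       # far away, different shape
pos = np.array([[0.0, 0, 0], [2.0, 0, 0], [50.0, 0, 0], [100.0, 0, 0]])
print(select_centroids(act, pos, pct=0.0))  # [0, 3]
```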

Comparison of activation time courses

Activation differences were evaluated by pairwise comparisons of the mean activation time courses in the three experimental conditions (AAB-ABA, ABB-ABA and AAB-ABB). These comparisons yielded five potential reduplication contrasts: two second-syllable contrasts of reduplicated minus non-reduplicated syllables (AAB-ABA and AAB-ABB), two third-syllable contrasts of non-reduplicated minus reduplicated syllables (AAB-ABA and AAB-ABB), and one third-syllable contrast of continuous reduplication (ABB) minus discontinuous reduplication (ABA). Note that all neural analyses (except control analyses specifically examining target match/mismatch effects) examined test stimulus reduplication patterns collapsed across target pattern conditions. The averages were based on all dipoles within the ROI patches projected onto individual subjects’ cortical surfaces.
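The five contrasts follow mechanically from the identity relation of each syllable to the syllables before it. The sketch below (hypothetical helper names) derives them and their onsets, assuming contiguous 250-ms syllables so that syllable k begins (k − 1) × 250 ms after word onset.

```python
SYLLABLE_MS = 250  # syllables were equated to 250 ms; assumed to be back-to-back


def repeat_status(pattern):
    """Describe each position as new, an adjacent repeat, or a distant repeat."""
    status = []
    for i, slot in enumerate(pattern):
        if slot not in pattern[:i]:
            status.append("new")
        elif pattern[i - 1] == slot:
            status.append("adjacent repeat")
        else:
            status.append("distant repeat")
    return status


def contrasts(p, q):
    """List (syllable number, onset in ms, status in p, status in q) wherever p and q differ."""
    return [
        (i + 1, i * SYLLABLE_MS, a, b)
        for i, (a, b) in enumerate(zip(repeat_status(p), repeat_status(q)))
        if a != b
    ]


for p, q in (("AAB", "ABA"), ("ABB", "ABA"), ("AAB", "ABB")):
    for c in contrasts(p, q):
        print(f"{p}-{q}", c)
```

AAB-ABA and AAB-ABB each differ at the second (250 ms) and third (500 ms) syllables, while ABB-ABA differs only at the third, giving the five contrasts listed above.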

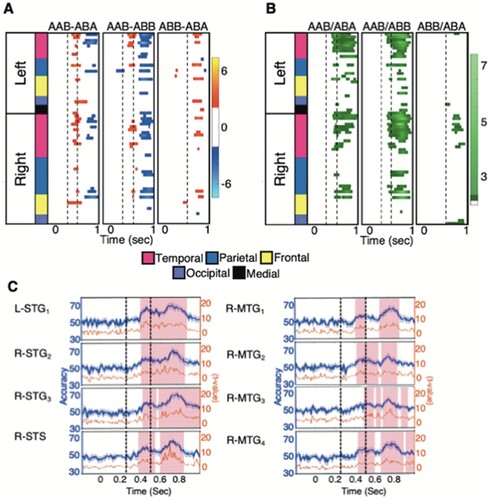

Figures 2(A) and S2 show the t-values associated with activation comparisons. We used non-parametric permutation-based cluster statistics (Cichy et al., 2014; Maris & Oostenveld, 2007) to identify clusters of contiguous timepoints with between-condition activation differences (1000 permutations; cluster-defining threshold p < 0.05, two-tailed t-test; cluster significance level p < 0.05). Activation differences were generally restricted to 200–400 ms after the onset of a contrast between a reduplicated and a non-reduplicated syllable. Accordingly, ROIs were identified as showing reliable sensitivity to reduplication if they showed significant clusters during this time window for all five reduplication contrasts found in the three condition comparisons.

Neural decoding analysis

Decoding analyses relied on a linear support vector machine (www.csie.ntu.edu.tw/~cjlin/libsvm) to perform two-way classification of within-region MNE activation timeseries. Each of the 68 ROIs was divided into 8 subdivisions. For each individual trial, we averaged MNE source estimates at each timepoint across every vertex within a subdivision, correcting for dipole orientation. This yielded 8 timeseries per ROI per trial covering the interval from 200 ms before stimulus onset to 1000 ms after onset. In addition to providing a substantial window of pre-contrast activation that can serve as a control interval for detecting spurious decoding, this window covers the full stimulus presentation plus a 250-ms post-stimulus interval in which to observe potential cumulative effects or decoding with longer rise times. Activation timeseries were vector normalised to minimise the influence of gross activation differences. Trials were downsampled to 100 Hz, bundled into groups of 10 within each condition, and averaged to improve SNR. One hundred random realisations of this bundling were performed to eliminate potential sampling bias. Separate support vector machine classifiers were trained for each ROI and each pair of experimental conditions (ABA versus AAB, ABA versus ABB, and AAB versus ABB) using timeseries from randomly selected pairs of bundled and averaged trials from the contrasted conditions. Accuracy was assessed using a leave-one-trial-out technique for each timepoint and pairwise condition contrast at the single-subject level, iterating through all trials. Overall accuracy at each timepoint on untrained trials was calculated by averaging the performance of each classifier across subjects.
As in the case of activation comparisons, we used non-parametric permutation-based cluster analyses (Cichy et al., 2014; Maris & Oostenveld, 2007) to identify clusters of above-chance classification in combined subject data, using a cluster-defining threshold of p < 0.05 and a cluster significance level of p < 0.05.
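The preprocessing that precedes classification can be sketched as follows. Two readings are assumptions on our part: "vector normalised" is taken to mean scaling the eight-subdivision pattern at each timepoint to unit length, and downsampling from 1000 Hz to 100 Hz is taken to average non-overlapping 10-sample bins. The linear SVM and the leave-one-out loop are not reproduced; a library such as LIBSVM would be applied to the resulting pseudo-trials. Function names are hypothetical.

```python
import numpy as np


def vector_normalise(x):
    """Scale the subdivision pattern at each timepoint to unit L2 norm.
    x: (n_trials, n_subdivisions, n_times)."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-12)


def downsample(x, factor=10):
    """Average non-overlapping bins of `factor` samples along the last axis
    (1000 Hz -> 100 Hz for factor=10); trailing samples that do not fill a bin are dropped."""
    n = x.shape[-1] // factor
    return x[..., : n * factor].reshape(*x.shape[:-1], n, factor).mean(axis=-1)


def bundle(trials, size=10, rng=None):
    """Randomly partition trials into groups of `size` and average each group.
    Leftover trials that do not fill a group are discarded."""
    rng = np.random.default_rng() if rng is None else rng
    order = rng.permutation(trials.shape[0])
    n_groups = trials.shape[0] // size
    groups = order[: n_groups * size].reshape(n_groups, size)
    return trials[groups].mean(axis=1)


rng = np.random.default_rng(0)
raw = rng.normal(size=(95, 8, 1200))  # 95 trials, 8 subdivisions, -200..999 ms at 1 kHz
pseudo = bundle(downsample(vector_normalise(raw)), size=10, rng=rng)
print(pseudo.shape)  # (9, 8, 120): one bundling realisation
```

Repeating `bundle` with fresh random permutations gives the multiple bundling realisations described in the text.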

Secondary analyses examined the influence of task effects, specifically the detection of target mismatch (e.g. detecting a token with an ABA pattern in a block with an ABB target/exposure pattern). To control for stimulus pattern, these analyses focused on tokens with the same reduplication pattern in blocks where they either matched the target/exposure pattern (e.g. ABB stimuli in ABB blocks) or mismatched it (ABB stimuli in ABA blocks). They did not include AAB stimuli, because these introduce match/mismatch at a different point in the stimulus (the second rather than the third syllable). These analyses used the same methods as the reduplication pattern contrasts. We used the ABB versus ABA reduplication contrast as a reference for comparing the timing of match/mismatch effects with that of reduplication effects, because both contrasts were based on the same stimulus items and shared a contrast onset at the beginning of the third syllable.

Effective connectivity analyses

Effective connectivity analyses were conducted using a Kalman filter-based implementation of partial Granger-Geweke causation analysis (Gow & Caplan, 2012; Milde et al., 2010). Granger causation analysis is premised on the idea that causes both precede and uniquely predict their effects. It is implemented by measuring all potentially causal sources and generating two kinds of predictive models. The first is a full model that uses all signals to predict a single signal in the system. The second is a restricted model that leaves one signal out of the predictive model. The omitted signal is said to Granger-cause changes in the predicted signal to the extent that removing it increases prediction error relative to the full model. Our source space estimates of activation are particularly well suited for Granger analysis because they capture simultaneous activity over all cortical surfaces, have the temporal resolution needed to support predictive timeseries analyses of task-related activation, and are not subject to the hemodynamic confounds that affect Granger analysis of BOLD-fMRI data. Using Kalman filters to create instantaneous predictions of activation has two distinct advantages. First, the Kalman gain function addresses the potential noisiness of MEG and EEG measures by modulating the impact of outlier data on the model. Second, by providing instantaneous predictions at each timepoint, it obviates concerns about signal stationarity, which are often a stumbling block in the analysis of evoked brain responses.

Our analyses focused on the interval of 350–500 ms in the AAB, ABB and ABA conditions, i.e. 100–250 ms after the onset of the second syllable. We chose this interval to reflect the period of successful support vector machine classification of these conditions in the 8 consistent decoding regions. We focused on the two contrasts, AAB-ABB and AAB-ABA, that showed successful decoding in this window. Note that although ABB and ABA differ in their overall reduplication patterns, decoding results suggest that activity in this period precedes the onset of reduplication-related activation effects for these tokens. We did not compare processes related to the third syllable, which could be affected by potential refractory dynamics from an earlier reduplication within the stimulus. We included the 50 ms immediately preceding the classification interval to capture the dynamics that lead up to it, and excluded the last 100 ms of the decoding interval to avoid possible influence from the third syllable, which showed a different reduplication pattern in the two contrasts. The two separate analyses (AAB-ABB and AAB-ABA) were treated as replications to ensure the robustness of our results.

All analyses involved predictive models based on all 68 ROIs identified by the algorithm. As described in Gow and Caplan (2012), predictive models were based on averaged MNE activation timeseries, with each timeseries reflecting the average activation across all trials in a given condition for a subject at the vertex within the ROI that was most strongly activated across trials for that subject. Independently selecting the vertex that represents each ROI in each participant addressed potential individual variation in brain morphology and functional localisation while supporting group-level interpretation of results within identifiable and generalisable cortical units. Cross-trial averaging also increases the robustness of source estimates. The use of a Kalman filter, combined with the spatial distributional properties of the MEG signal and an ROI identification algorithm that enforced homogeneity of activation within each ROI, ensured that these dipoles were representative of activation in their ROI.

We adapted our Granger analyses in two ways to directly examine the propagation of reduplication information between regions of interest. For ROIs that reliably showed above-chance decoding of reduplication contrasts, the associated timeseries were replaced in analyses of their potential roles as information flow sources or sinks. Because our support vector machine analyses were trained on eight activation timeseries per ROI, we used cross-trial averages of each of the same eight timeseries to collectively represent activity in that region in analyses examining its influence on another region. This meant that full predictive models used 75 activation timeseries to represent the 68 ROIs. Restricted models were generated by removing the eight timeseries as a set, leaving 67 activation timeseries. In analyses examining a decoding region's role as an information flow sink, the subject's averaged MNE activation timeseries for that region was replaced with the normalised SVM classification accuracy timeseries. These modifications allowed us to directly examine how the flow of the same information that supports decoding influences downstream decoding in these ROIs.

We attempted to identify the optimal autoregressive model order for predictions using Akaike's and Bayesian Information Criteria (AIC, BIC), but neither produced a clear minimum, so the model order was set heuristically at 5 time steps, corresponding to 5 ms. Predictions for full and restricted models were generated for every potential directed pairwise interaction of ROIs at each timepoint.
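For reference, the sketch below shows one way to compute AIC and BIC across autoregressive model orders. It fits a univariate AR(p) by least squares with NumPy and no intercept, which suits zero-mean signals. It illustrates the criteria only. The study's models were multivariate and Kalman filter-based, and `ar_information_criteria` is a hypothetical helper.

```python
import numpy as np

def ar_information_criteria(x, max_order):
    """AIC and BIC for least-squares AR(p) fits of a 1-D signal, p = 1..max_order.

    Every fit predicts the same samples (those after the first max_order),
    so the criteria are comparable across orders.
    """
    x = np.asarray(x, dtype=float)
    n = len(x) - max_order                    # number of predicted samples
    y = x[max_order:]
    results = {}
    for p in range(1, max_order + 1):
        # Column k holds the signal lagged by k + 1 samples.
        lags = np.column_stack([x[max_order - k - 1:len(x) - k - 1] for k in range(p)])
        coef, *_ = np.linalg.lstsq(lags, y, rcond=None)
        sigma2 = np.mean((y - lags @ coef) ** 2)   # residual variance
        aic = n * np.log(sigma2) + 2 * p
        bic = n * np.log(sigma2) + p * np.log(n)
        results[p] = (aic, bic)
    return results
```

The preferred order is the one that minimises a criterion. If either criterion stays nearly flat across p, as reported above, it gives no principled minimum to select.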

The strength of effective connectivity was quantified using the Granger causation index, or GCi (Milde et al., 2010):

$$GCi(n) = \ln\left(\frac{PE(n,1)}{PE(n)}\right)$$

where PE(n) and PE(n,1) are the prediction errors of the full and restricted autoregressive models, respectively, for the prediction of a future value of signal j. We assigned a p-value to each GCi value using a bootstrapping method (Milde et al., 2010). This involved generating a null distribution of GCi values for each timepoint and directed connection from 2000 trials of resampled data.
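The index can be illustrated with a static, least-squares version of the computation. This is a simplification in two respects. First, ordinary least-squares autoregressive fits stand in for the Kalman filter used here, so the sketch yields one GCi per signal pair rather than one per timepoint. Second, the null distribution is built from circularly shifted source signals. This is an assumed surrogate scheme and may differ from the resampling of Milde et al. (2010). The sketch uses NumPy, and `lagged`, `gci` and `bootstrap_p` are hypothetical names.

```python
import numpy as np

def lagged(x, order):
    """Design matrix whose column k is x lagged by k + 1 samples."""
    n = len(x)
    return np.column_stack([x[order - k - 1:n - k - 1] for k in range(order)])

def gci(source, target, order=5):
    """Static GCi = ln(PE_restricted / PE_full) for source -> target."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    y = target[order:]
    restricted = lagged(target, order)                      # target's own past only
    full = np.hstack([restricted, lagged(source, order)])   # plus the source's past

    def prediction_error(design):
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        return np.mean((y - design @ coef) ** 2)

    return float(np.log(prediction_error(restricted) / prediction_error(full)))

def bootstrap_p(source, target, order=5, n_boot=2000, seed=0):
    """Observed GCi and a p-value against circular-shift surrogates (assumed scheme)."""
    source = np.asarray(source, dtype=float)
    rng = np.random.default_rng(seed)
    observed = gci(source, target, order)
    null = np.empty(n_boot)
    for b in range(n_boot):
        # Shifts avoid 0 and the wrap-around end, so the source lags never realign.
        shift = rng.integers(order + 1, len(source) - order)
        null[b] = gci(np.roll(source, shift), target, order)
    return observed, (1 + np.sum(null >= observed)) / (1 + n_boot)
```

GCi is the log ratio of restricted to full prediction error. It is therefore near zero when the source's past adds nothing to the prediction of the target, and it grows as the source's past reduces the target's prediction error.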

We compared the strength of Granger causation between experimental conditions using a binomial test based on the difference in the number of timepoints within a time window of interest that reached threshold (p < 0.05) (Tavazoie et al., 1999). All reported results were significant and showed the same sign in both AAB-ABA and AAB-ABB contrasts after false discovery rate (FDR) correction (Benjamini & Hochberg, 1995).
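Here is one plausible reading of the condition comparison, sketched in standard-library Python. The counts of suprathreshold timepoints in the two conditions are compared with an exact two-sided binomial test against an even split. The resulting p-values are then passed through the Benjamini-Hochberg procedure. The even-split null and the function names are assumptions, since the text does not specify how the counts enter the test.

```python
from math import comb

def binomial_two_sided(k, n, p=0.5):
    """Exact two-sided binomial test: total probability of outcomes no likelier than k."""
    probs = [comb(n, i) * p**i * (1 - p)**(n - i) for i in range(n + 1)]
    observed = probs[k]
    # Small relative tolerance so outcomes with equal probability are counted.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))

def compare_conditions(sig_a, sig_b):
    """sig_a, sig_b: numbers of timepoints with GCi p < 0.05 in each condition."""
    n = sig_a + sig_b
    return 1.0 if n == 0 else binomial_two_sided(sig_a, n)

def benjamini_hochberg(pvals, q=0.05):
    """Return booleans marking hypotheses rejected at false discovery rate q."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Largest rank r with p_(r) <= r * q / m; every hypothesis up to that rank is rejected.
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank * q / m:
            cutoff = rank
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        reject[i] = rank <= cutoff
    return reject
```

For example, 12 suprathreshold timepoints in one condition against 2 in the other gives p ≈ 0.013 under the even-split null.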

Results

Behavioural

Participants performed the artificial grammar task with high accuracy (mean 95.9%, SE 1.94%), confirming that they were paying attention to the nonword stimuli.

Region of interest (ROI)

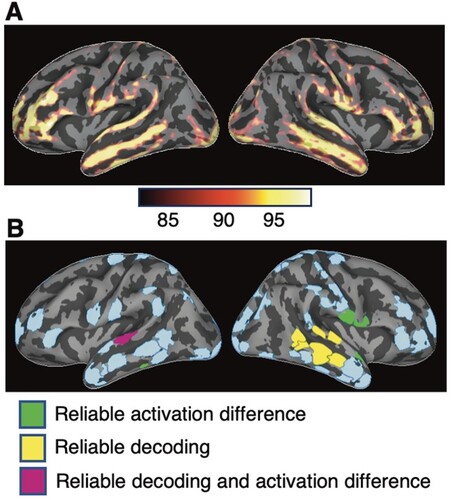

Averaged estimates of the overall event-related cortical source currents revealed a broad bilateral activation pattern extending beyond the traditional perisylvian language centres (Figure 1(A)). Based on the estimated spatiotemporal patterns of activation in all conditions, an automated algorithm designed to determine a set of ROIs that meets the assumptions of Granger causation analysis (Gow & Caplan, 2012) identified a comprehensive set of 68 ROIs (Figure 1(B) and Table S1). These ROIs were used for all activation contrast, neural decoding, and effective connectivity analyses.

Figure 1. Event-related cortical activation and regions of interest (ROIs). (A) Spatial distribution of estimated activity, averaged across all conditions and subjects for the interval of 100–500 ms after the stimulus onset. The activity is visualised on the lateral view of averaged inflated cortical surfaces. The colour scale indicates the percentile of the activation magnitude among all source locations. (B) ROIs identified based on the estimated spatiotemporal patterns of activity. Colour coding indicates regions that in subsequent analyses showed reliable activation differences (green), successful decoding (yellow), or both (magenta, region L-STG1) across all reduplication pattern contrasts. The other regions (pale blue) showed neither activation differences nor decoding. Descriptive names and locations of the ROIs are given in Figure S1 and Table S1.

Activation contrasts for repeated vs. non-repeated syllables

To evaluate potential contributions of low-level stimulus repetition enhancement or suppression effects, we first examined pairwise activation contrasts between the conditions with different syllable reduplication patterns (Figures 2(A) and S2). Two contrasts between reduplicated/repeated and non-reduplicated/non-repeated syllables were available at the second syllable position (AAB-ABA and AAB-ABB) and two at the third syllable (AAB-ABA and AAB-ABB). The third-syllable contrast between ABB and ABA provided a contrast in the type of repetition, since both third syllables are reduplicated (continuously in ABB and discontinuously in ABA). Permutation-based cluster analysis (Maris & Oostenveld, 2007) identified significant differences between conditions in five ROIs that aligned with all five contrasts between reduplicated and non-reduplicated syllables (green and magenta regions in Figure 1(B)): left superior temporal gyrus (L-STG1), left inferior temporal gyrus (L-ITG2), right superior temporal gyrus (R-STG4), right postcentral gyrus (R-postCG6) and right precentral gyrus (R-preCG3). In all cases, activation was stronger for the repeated syllable than for the non-repeated syllable, starting approximately 150 ms after the onset of the repetition contrast. This localisation pattern is consistent with that found in speech perception studies using between-trial word repetition (Bergerbest et al., 2004; Graves et al., 2008). Here, however, repetition produced enhanced activation, whereas those previous studies all found repetition suppression. Repetition enhancement has been found in the visual domain, where it has been attributed to low-level temporal summation of new stimulation with either anticipated or recently activated patterns (James & Gauthier, 2006; Noguchi et al., 2004).
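The sketch below is a compact, one-sided, paired version of the cluster-based permutation test over time (Maris & Oostenveld, 2007), as it might be applied to one ROI's per-subject condition differences. Several settings are illustrative assumptions, not the parameters used in this study: the t threshold of 2.0, random sign flipping of subjects, the summed-t cluster statistic, and the restriction to positive clusters. It uses NumPy.

```python
import numpy as np

def clusters(mask):
    """Return (start, stop) index pairs for contiguous True runs in a 1-D mask."""
    runs, start = [], None
    for i, m in enumerate(mask):
        if m and start is None:
            start = i
        elif not m and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(mask)))
    return runs

def cluster_permutation_test(diff, t_thresh=2.0, n_perm=1000, seed=0):
    """Paired cluster-based permutation test over time (positive clusters only).

    diff: array (n_subjects, n_times) of condition differences for one ROI.
    Returns a list of (start, stop, p) for each observed suprathreshold cluster.
    """
    rng = np.random.default_rng(seed)
    n = diff.shape[0]

    def tvals(d):
        # One-sample t statistic at each timepoint.
        return d.mean(axis=0) / (d.std(axis=0, ddof=1) / np.sqrt(n))

    def masses(t):
        # Cluster statistic: sum of t-values within each suprathreshold run.
        return [t[a:b].sum() for a, b in clusters(t > t_thresh)]

    t_obs = tvals(diff)
    observed = clusters(t_obs > t_thresh)
    obs_mass = masses(t_obs)
    # Null distribution of the largest cluster mass under random sign flips.
    max_null = np.empty(n_perm)
    for k in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(n, 1))
        m = masses(tvals(diff * signs))
        max_null[k] = max(m) if m else 0.0
    return [(a, b, (1 + np.sum(max_null >= mass)) / (1 + n_perm))
            for (a, b), mass in zip(observed, obs_mass)]
```

Each subject's difference (for example, AAB minus ABA at one ROI) forms one row of `diff`. A returned cluster with p < 0.05 then marks an interval of reliable activation difference. Comparing every cluster with the maximum null mass controls for testing many timepoints at once.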

Neural decoding of reduplication contrasts

The potential role of spatially distributed population coding of reduplication within ROIs was assessed by neural decoding analysis. Support vector machine classifiers were applied to individual subject data using multiple vector-normalised activation time courses from each ROI. Figures 2(B) and S3 show the accuracy of pairwise classification of the different reduplication conditions. Cluster analyses (Cichy et al., 2014; Maris & Oostenveld, 2007) showed intervals of successful reduplication pattern classification for a number of regions, generally starting about 150 ms after the onset of a reduplicated syllable and lasting 100 ms or longer (Figure 2(C) and Figure S4). Eight ROIs showed reliable decoding in this interval in the five pairwise classifiers that examined syllabic contrasts in reduplication pattern (four contrasts between reduplicated and non-reduplicated syllables, and one contrast between continuous and discontinuous reduplication). All of these regions were in the temporal lobe (yellow and magenta regions in Figure 1(B)): left superior temporal gyrus (L-STG1), two portions of right superior temporal gyrus (R-STG2, R-STG3), right posterior superior temporal sulcus (R-STS1), and four portions of the middle to posterior right middle temporal gyrus (R-MTG1, R-MTG2, R-MTG3 and R-MTG4). Among these 8 regions, only L-STG1 showed significant differences in the activation contrast, which suggests that reliable decoding by these regions is not broadly reducible to repetition enhancement effects.
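Time-resolved decoding of the kind described above can be sketched as follows. To avoid dependencies beyond NumPy, the sketch swaps in a leave-one-out nearest-centroid classifier for the support vector machine; the study itself used LIBSVM. It also reads "vector-normalised" as scaling each trial's multi-timeseries pattern to unit length at every timepoint. Both choices are assumptions, and the function names are hypothetical.

```python
import numpy as np

def vector_normalise(x):
    """Scale each trial's pattern across timeseries to unit length at each timepoint."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.where(norms == 0, 1.0, norms)

def decode_over_time(x, labels):
    """Leave-one-out decoding accuracy at each timepoint.

    x: (n_trials, n_features, n_times), e.g. eight activation timeseries per ROI.
    labels: (n_trials,) array of 0/1 condition labels.
    A nearest-centroid classifier stands in for the SVM used in the study.
    """
    x = vector_normalise(np.asarray(x, dtype=float))
    labels = np.asarray(labels)
    n_trials, _, n_times = x.shape
    acc = np.zeros(n_times)
    for t in range(n_times):
        data = x[:, :, t]
        correct = 0
        for i in range(n_trials):
            train = np.arange(n_trials) != i          # hold out trial i
            c0 = data[train & (labels == 0)].mean(axis=0)
            c1 = data[train & (labels == 1)].mean(axis=0)
            pred = int(np.linalg.norm(data[i] - c1) < np.linalg.norm(data[i] - c0))
            correct += pred == labels[i]
        acc[t] = correct / n_trials
    return acc
```

The output is an accuracy timecourse like those plotted per ROI. Intervals of above-chance accuracy would then be assessed across subjects with a cluster-based permutation test.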

Figure 2. Time courses of pairwise activation contrasts and decoding accuracy between conditions with different reduplication patterns. (A) Activation differences (t-values) between the experimental conditions for all ROIs as a function of time. Dotted vertical lines indicate the onset time of a repeated syllable. The cortical lobes where the ROI resided are indicated by colours in the column at left; names of the ROIs can be found in Figure S2. (B) Pairwise decoding accuracy (t-values) between conditions. (C) Decoding accuracy (blue: %-correct; yellow: t-values) for the 8 ROIs that reliably supported decoding of reduplication contrasts, shown for the AAB versus ABB comparison. Shaded regions mark the results of cluster-based permutation test for statistically significant decoding accuracy across the subjects (p < 0.05).

An additional 8 left hemisphere ROIs (L-STG2, L-MTG1, L-MTG2, L-MTG3, L-MTG4, L-MTG5, L-ITG2, L-postCG2) and 4 right hemisphere ROIs (R-postCG4, R-postCG5, R-postCG6, R-preCG2) showed reliable decoding of all 4 contrasts between reduplicated and non-reduplicated syllables, but not the contrast between the third syllables of the locally reduplicated B in the ABB pattern and the discontinuously reduplicated A in the ABA pattern. This suggests that these ROIs were sensitive to the presence versus absence of reduplication, but not the overall reduplication pattern of test stimuli.

Neural decoding of rule matching

Successful decoding does not necessarily imply that variable representations are encoded in brain activity. The grammaticality judgement task depends on the detection of reduplication patterns, and so it is possible that the activation patterns driving successful classification reflect an error signal evoked when tokens do not match a target pattern. Therefore, we performed additional control decoding analyses comparing trials in which the same stimulus reduplication patterns matched the target patterns (e.g. ABA stimuli in blocks with ABA target) with trials in which they did not (ABA stimuli in blocks with ABB target). Four of the eight regions found to reliably decode reduplication also decoded stimulus-target mismatch (Figure S5). In three of these regions (R-STG3, R-STS1, and R-MTG3) stimulus-target mismatch was only decodable after the offset of the period during which reduplication was decodable. L-STG1 showed some temporal overlap between intervals of decoding reduplication and stimulus-target mismatch, with reduplication becoming decodable before stimulus-target mismatch. This shows that activation related to the representation of stimulus reduplication patterns was temporally, and in the case of R-STG2, R-MTG1, R-MTG2 and R-MTG4, also spatially separable from activation related to task-induced identification of mismatch between the stimulus and target patterns.

Effective connectivity analyses of the downstream effects of reduplication decoding

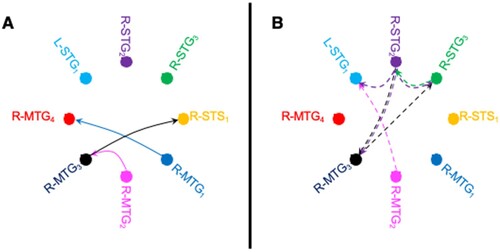

To determine whether local patterns of neural activity evoked by reduplication also influence downstream neural processing, we used a Kalman filter-based implementation of Granger causation analysis that captures directed flow of information between brain regions (Gow & Caplan, 2012; Milde et al., 2010). We examined differences in effective connectivity separately for AAB-ABA and AAB-ABB reduplication contrasts in the interval of 100–250 ms after the onset of the second syllable. Analyses reflected timeseries from all 68 brain regions, but focused on interactions between the 8 regions that reliably decoded reduplication contrasts. When a syllable was reduplicated, particularly in a design like the present one in which some form of repetition was found in each token, we hypothesised that information content changed in two ways. First, new information was introduced: that repetition had taken place. Second, uncertainty about the interpretation of predictable phonological content was reduced, since the repeated vowel was always predictable from the repeated consonant that preceded it. Given this assumption, results showing more information flow in the reduplicated second syllable condition (Figure 3(A)) reflect the transfer of repetition information. Results showing more information flow in the non-repeated condition (Figure 3(B)) reflect the transfer of new phonetic or phonological information. The presence of both types of information flow may support both the high accuracy of behavioural repetition judgements and the listeners’ ability to recognise phonological content.

Figure 3. Cortical information flow associated with reduplication. Effective connectivity between the 8 ROIs that supported reliable decoding of reduplication contrasts in the interval of 100–250 ms after the onset of the second syllable in which reduplication of the second syllable (A) created stronger information flow (solid lines) or (B) weaker information flow (dashed lines). Line colour reflects the source of information flow. In both cases, only those connections are depicted that showed significant differences between conditions in both AAB-ABA and AAB-ABB contrasts, as measured by Granger causality analysis (p < 0.05, FDR-corrected).

Granger analyses revealed clear evidence of the propagation of information about reduplication patterns in processing interactions between the 8 ROIs that reliably support the decoding of reduplication contrasts. We found 10 directed connections that held for both AAB-ABB and AAB-ABA after correction for false discovery rate in each. In each of these, activation patterns in one ROI that support decoding Granger-caused changes in decoding accuracy in another ROI as a function of reduplication contrasts. These included 3 connections in which reduplicated syllables evoked stronger information flow than non-reduplicated syllables (Figure 3(A)), and 7 in which non-reduplicated syllables evoked stronger flow (Figure 3(B)). The three connections that were stronger for reduplicated syllables are hypothesised to carry information about reduplication. They ran from R-MTG1 to R-MTG4, from R-MTG2 to R-MTG3, and from R-MTG3 to R-STS1. This pattern reflects a general posterior-to-anterior flow of information in the right middle temporal gyrus, consistent with the hypothesised flow from early (posterior) to late (anterior) middle temporal regions in the ventral speech pathway. The stronger flow of information found when syllables were not reduplicated was broader. It included interactions among the three bilateral superior temporal gyrus regions (L-STG1, R-STG2 and R-STG3) and between two right middle temporal gyrus regions (R-MTG2 and R-MTG3). Within this pattern, we found reciprocal connections between R-STG2 and R-STG3, and between R-MTG3 and R-STG2.

Discussion

The purpose of this work was to identify patterns of localised neural activity that serve as representations of linguistic variables. Consistent with the results of prior studies aimed at demonstrating variable-mediated processing in infants (Marcus et al., 1999), adult participants showed high accuracy in a task requiring the evaluation of syllable reduplication patterns in a set of phonemically diverse untrained nonwords. The within-region activity of a set of 8 temporal lobe regions (7 right hemisphere, 1 left hemisphere) known to be associated with the representation of phonetic and phonological structure supported the decoding of syllable reduplication across 5 different syllable reduplication contrasts. In these regions, significant decoding was observed in a period beginning roughly 150 ms after the onset of a syllable reduplication pattern contrast. One region, left posterior STG, showed both repetition enhancement effects and successful decoding of reduplication contrasts with similar time courses. Even so, control analyses showed that successful decoding was not dependent on low-level repetition effects on overall ROI activation. Additional control decoding analyses showed that the decoding of reduplication patterns in individual tokens was not attributable to the detection of mismatch between individual tokens and target reduplication patterns. While 8 ROIs supported decoding of such mismatch, only 4 of those regions also supported reduplication pattern classification, and in all cases mismatch decoding was only achieved after the decoding of the reduplication pattern. Effective connectivity analyses demonstrated that within-ROI activation patterns that supported reduplication pattern classification in some ROIs Granger-caused changes in decoding accuracy in others. These patterns therefore had downstream effects on neural processing, a key criterion for their functioning as representations.
Collectively, the present results suggest that the localised activation patterns function as neural representations of a property, i.e. syllable reduplication, that appears to require variable representation.

It is noteworthy that reliable decoding regions were restricted to three areas, all strongly implicated in processing and representing the sound structure of language: bilateral portions of the superior temporal gyrus (L-STG1, R-STG2 and R-STG3), and right hemisphere portions of the superior temporal sulcus (R-STS1) and middle temporal gyrus (MTG). Electrocorticography provides clear evidence that bilateral STG is sensitive to a variety of non-variable spectrotemporal properties of the speech signal. These include phonetic feature cues used to distinguish phonemes and envelope amplitude cues that signal syllabic structure (Li et al., 2019; Mesgarani et al., 2014; Oganian & Chang, 2019). This does not rule out the possibility that these regions also encode variable information about phonemic or syllabic structure. STG activation is shaped by input from a broadly distributed network of regions associated with higher levels of representation during speech perception (Gow & Olson, 2016; Gow et al., 2008), as well as by the integration of current and past inputs (Gwilliams et al., 2018; Yildiz et al., 2016). Drawing on these phenomena, Li et al. (2019) hypothesise that STG activation reflects the integration of inputs over time mediated by recurrent connections between auditory columns. In the current results, decoding accuracy within bilateral STG is influenced by both STG-to-STG and MTG-to-STG information flow. Within this broad framework, that finding points to a potential mechanism for discovering reduplication through the recurrent integration and comparison of recent and current syllabic inputs.
Sensitivity to specific instances of reduplication would not constitute variable representation. However, detecting specific instances of reduplication would seem to be a necessary first step toward discovering features that capture reduplicated syllables as a variable class. Such discovery could proceed through hierarchical abstraction processes like those employed by convolutional neural networks or hierarchical Bayesian inference models (LeCun et al., 2015; Tenenbaum et al., 2011).

The MTG ROIs that decoded reduplication stand out in several respects. These areas are bilaterally implicated in the processing of whole word properties and some forms of morphological marking (Gow, 2012; Hickok & Poeppel, 2007; Marslen-Wilson & Tyler, 2007). Four right hemisphere MTG regions (R-MTG1, R-MTG2, R-MTG3, R-MTG4) decoded all 5 reduplication contrasts. An additional 5 left hemisphere MTG regions (L-MTG1, L-MTG2, L-MTG3, L-MTG4, and L-MTG5) decoded the 4 contrasts between reduplicated and non-reduplicated syllables. They did not decode the contrast between the locally reduplicated third syllable in ABB tokens and its discontinuously reduplicated counterpart in ABA tokens. It is not clear why we find a right hemisphere bias. One possibility is that it reflects hemispheric differences in the temporal integration of auditory inputs. Giraud and Poeppel (2012) note that speech processing elicits stronger 25–35 Hz low gamma oscillations over left hemisphere speech areas, and stronger 4–8 Hz theta oscillations over their right hemisphere homologues. On this basis, they suggest that left hemisphere regions primarily integrate auditory input over short temporal windows of 20–40 ms, consistent with phonemic processing, while their right hemisphere homologues primarily integrate over windows of 250 ms, consistent with syllabic processing. Even a 250 ms window is too short to allow integration over multiple reduplicated syllables. It may, however, contribute to the representation of individual reduplicated syllables, with the discovery of reduplication supported by lower frequency (e.g. delta) oscillations that integrate over multisyllabic intervals.
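The link between oscillation frequency and integration window in this argument is simple arithmetic. It rests on an assumption made here for illustration, not a claim from Giraud and Poeppel: that one integration window spans one oscillatory cycle, so the window length is T = 1/f. Delta is taken as roughly 1–4 Hz, a conventional band definition not given in the text.

$$
\begin{aligned}
T &= 1/f \\
\text{low gamma } (25\text{–}35\ \text{Hz}) &\Rightarrow T \approx 29\text{–}40\ \text{ms} \\
\text{theta } (4\text{–}8\ \text{Hz}) &\Rightarrow T = 125\text{–}250\ \text{ms} \\
\text{delta } (\approx 1\text{–}4\ \text{Hz}) &\Rightarrow T \approx 250\text{–}1000\ \text{ms}
\end{aligned}
$$

On this simplification, only delta-range cycles approach the multisyllabic spans over which reduplication would have to be detected.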

Reduplication appears to be a universal strategy among speakers with limited proficiency in a common language. This suggests that the ability to represent reduplication is a universal representational primitive (Muysken, 2013), which is exploited by languages that employ productive reduplication processes in morphology. The fact that reduplication patterns are encoded in brain regions independently implicated in morphological processing suggests that these representations could be harnessed for morphology. It is unlikely, though, that listeners treated reduplication in the artificial grammar used in the present study as a morphological process. The nonword stimuli have no semantic content or any other form of recognisable pseudoaffixation to invite morphological interpretation.

While these results demonstrate that abstract reduplication patterns may be represented neurally, they do not address the sources of such representations or establish what kinds of processes they support. The frequency of reduplication processes across world languages and evidence of early infant generalisation of reduplication rules are consistent with their innateness (Ghomeshi et al., 2004; Rubino, 2013). However, other work points to the possibility that such representations could reflect discoverable features. This includes the impressive generalisation powers of deep learning models (cf. LeCun et al., 2015). It also includes work suggesting that simple recurrent neural network models can simulate infant performance on reduplication pattern-based generalisation, given assumptions about pretraining or network architecture (Altmann, 2002; Endress et al., 2007). In the case of Altmann's model, performance depended on pretraining in individual syllable identification to produce stable internal representations of potentially reduplicatable units. This suggests that models must learn first-order representations of syllabic units before they can learn second-order representations of the relationships between those units.

The current findings provide some new perspective on the long-standing debate between adherents of symbolic and associationist accounts of linguistic generativity (cf. Fodor & Pylyshyn, 1988; Marcus, 2001; Pinker & Ullman, 2002; Rumelhart & McClelland, 1986; Seidenberg & Plaut, 2014). Symbolic frameworks are favoured in generative linguistic theory and some computational models of general cognition (cf. Fodor & Pylyshyn, 1988; Jackendoff, 2002). Within such frameworks, these results may be interpreted as evidence for the neural plausibility of cognitive variables. In these frameworks, generativity is the result of operations performed over variables (Marcus, 2001) rather than over tokens that may be marked for variable features such as reduplication. It is perhaps notable that none of the brain regions shown to reliably decode reduplication contrasts in this study are independently associated with the application of phonological rules.

In contrast, associationist accounts ascribe generativity to patterns of associations between tokens based on similarity. The early connectionist Trace model (McClelland & Elman, 1986) demonstrates how rule-like phonotactic constraints and biases might emerge from item-based association. Like human listeners (Massaro & Cohen, 1983), the Trace model “repairs” unacceptable consonant clusters in novel nonword stimuli. For example, it interprets an input ambiguous between /l/ and /r/ as /l/ to yield the legal onset /sl/ in s_i, but as /r/ to yield the legal onset /ʃr/ in ʃ_i. This bias towards producing attested (/sl/, /ʃr/) versus unattested (*/sr/, */ʃl/) onsets results from top-down influences on phoneme interpretation from partially activated words that share those clusters (e.g. slide, slam, slow). This interpretation is consistent with neural evidence for lexical mediation of phonotactic repair and phonotactic frequency effects (Gow & Nied, 2014; Gow & Olson, 2015; Gow et al., 2021). The current results suggest, though, that the neural representation of a specific wordform may specify its reduplication pattern as well as its phonemic and syllabic structure. This raises the possibility that the generalisation of lawful constraints on reduplication to novel forms (cf. Berent, 2002; Berent et al., 2002) could be the result of top-down support from partially activated words that share reduplication patterns with input words.

The current results do not resolve the debate between symbolic and associationist accounts. They do, however, allay weakly grounded conjecture about both the neural implausibility of variable-based symbolic accounts and the generative potential of associationist models. Future work may bring the debate closer to resolution by determining how the neural representation of reduplication pattern relates to wordform representation. Associationist accounts require it to be intrinsic to wordform representation. Symbolic accounts require it to be separable from wordform representation for purposes of rule-based operations conducted over variables. If the representation of reduplication proves to be separable from the representation of word tokens, additional work will be needed to determine how variable representations are extracted and reassociated with token-based representations after variable-based operations are completed.

We suggest that abstract representation of reduplication is just one point on a spectrum of abstract variable representations in language and cognitive processes. From the earliest stages of perceptual processing, neural systems form equivalence classes. From feature detectors in primary sensory cortices to categorical perception and the formation of conceptual categories, neural systems extract and rely on equivalence classes to generalise and generate new forms. These results do not resolve questions about the nature of the processes that rely on variable-based representations of stimulus properties to produce generative thought. Future progress will nonetheless benefit from independent, empirically derived characterisations of the representations upon which any such mechanism must depend.

Supplemental Material

Acknowledgements

We thank our participants for making this work possible. We also thank Olivia Newman, who contributed to data collection, analysis and visualisation, and Skyla Lynch, who also contributed to data analysis. Dimitrios Pantazis provided the code for support vector machine analyses of MEG data, Tom Sgouros provided computing support, and Nao Matsuda assisted in MEG data acquisition. Ev Fedorenko, Ray Jackendoff, David Caplan, and Gerry Altmann commented on earlier drafts of this manuscript. Lorraine Tyler and two anonymous reviewers gave feedback on the submitted manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The authors confirm that the data supporting the findings of this study are available within the article and/or its supplementary materials, and by request from DWG. Code and documentation for our Granger Processing Stream (GPS) are available at https://www.nmr.mgh.harvard.edu/software/gps. GPS relies on Freesurfer software for MRI analysis and automatic cortical parcellation (https://surfer.nmr.mgh.harvard.edu/). It also relies on MNE software for reconstruction of minimum norm estimates of source activity based on combined MRI, MEG and EEG data (https://pypi.org/project/mne/). Support vector machine code can be found at www.csie.ntu.edu.tw/~cjlin/libsvm.

Additional information

Funding

References

- Altmann, G. T. M. (2002). Learning and development in neural networks – The importance of prior experience. Cognition, 85(2), B43–B50. https://doi.org/10.1016/S0010-0277(02)00106-3

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. https://doi.org/10.1111/j.2517-6161.1995.tb02031.x

- Berent, I. (2002). Identity avoidance in the Hebrew lexicon: Implications for symbolic accounts of word formation. Brain and Language, 81(1-3), 326–341. https://doi.org/10.1006/brln.2001.2528

- Berent, I. (2013). The phonological mind. Trends in Cognitive Sciences, 17(7), 319–327. https://doi.org/10.1016/j.tics.2013.05.004

- Berent, I., Marcus, G. F., Shimron, J., & Gafos, A. I. (2002). The scope of linguistic generalizations: Evidence from Hebrew word formation. Cognition, 83(2), 113–139. https://doi.org/10.1016/s0010-0277(01)00167-6

- Berent, I., Wilson, C., Marcus, G. F., & Bemis, D. K. (2012). On the role of variables in phonology: Remarks on Hayes and Wilson 2008. Linguistic Inquiry, 43(1), 97–119. https://doi.org/10.1162/LING_a_00075

- Bergerbest, D., Ghahremani, D. G., & Gabrieli, J. D. (2004). Neural correlates of auditory repetition priming: Reduced fMRI activation in the auditory cortex. Journal of Cognitive Neuroscience, 16(6), 966–977. https://doi.org/10.1162/0898929041502760

- Boersma, P., & Weenink, D. (2015). Praat: Doing phonetics by computer [Computer program]. Version 6.0.55, retrieved June 13, 2019, from http://www.praat.org/

- Chomsky, N. (1957). Syntactic structures. Mouton.

- Chomsky, N. (1965). Aspects of the theory of syntax. The MIT Press.

- Cichy, R. M., Pantazis, D., & Oliva, A. (2014). Resolving human object recognition in space and time. Nature Neuroscience, 17(3), 455–462. https://doi.org/10.1038/nn.3635

- Dennett, D. C. (1987). The intentional stance. The MIT Press.

- Desikan, R., Ségonne, F., Fischl, B., Quinn, B., Dickerson, B., Blacker, D., Buckner, R. L., Dale, A. M., Maguire, R. P., Hyman, B. T., Albert, M. S., & Killiany, R. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage, 31(3), 968–980. https://doi.org/10.1016/j.neuroimage.2006.01.021

- Endress, A. D., Dehaene-Lambertz, G., & Mehler, J. (2007). Perceptual constraints and the learnability of simple grammars. Cognition, 105(3), 577–614. https://doi.org/10.1016/j.cognition.2006.12.014

- Ewbank, M. P., Lawson, R. P., Henson, R. N., Rowe, J. B., Passamonti, L., & Calder, A. J. (2011). Changes in “top-down” connectivity underlie repetition suppression in the ventral visual pathway. Journal of Neuroscience, 31(15), 5635–5642. https://doi.org/10.1523/jneurosci.5013-10.2011

- Fischl, B. (2012). Freesurfer. NeuroImage, 62(2), 774–781. https://doi.org/10.1016/j.neuroimage.2012.01.021

- Fodor, J. A., & Pylyshyn, Z. W. (1988). Connectionism and cognitive architecture: A critical analysis. Cognition, 28(1-2), 3–71. https://doi.org/10.1016/0010-0277(88)90031-5

- Garraffa, M., & Fyndanis, V. (2020). Linguistic theory and aphasia: An overview. Aphasiology, 34(8), 905–926. https://doi.org/10.1080/02687038.2020.1770196

- Ghomeshi, J., Jackendoff, R., Rosen, N., & Russell, K. (2004). Contrastive focus reduplication in English (the salad-salad paper). Natural Language & Linguistic Theory, 22(2), 307–357. https://doi.org/10.1023/B:NALA.0000015789.98638.f9

- Giraud, A. L., & Poeppel, D. (2012). Cortical oscillations and speech processing: Emerging computational principles and operations. Nature Neuroscience, 15(4), 511–517. https://doi.org/10.1038/nn.3063

- Gomez, R. L., Gerken, L., & Schvaneveldt, R. W. (2000). The basis of transfer in artificial grammar learning. Memory & Cognition, 28(2), 253–263. https://doi.org/10.3758/bf03213804

- Gow, D. W. (2012). The cortical organization of lexical knowledge: A dual lexicon model of spoken language processing. Brain and Language, 121(3), 273–288. https://doi.org/10.1016/j.bandl.2012.03.005

- Gow, Jr., D. W., & Caplan, D. N. (2012). New levels of language processing complexity and organization revealed by Granger causation. Frontiers in Psychology, 3, 506. https://doi.org/10.3389/fpsyg.2012.00506

- Gow, D. W., & Nied, A. (2014). Rules from words: A dynamic neural basis for a lawful linguistic process. PLoS ONE, 9(1), 1–12. https://doi.org/10.1371/journal.pone.0086212

- Gow, D. W., & Olson, B. B. (2015). Lexical mediation of phonotactic frequency effects on spoken word recognition: A Granger causality analysis of MRI-constrained MEG/EEG data. Journal of Memory and Language, 82, 41–55. https://doi.org/10.1016/j.jml.2015.03.004

- Gow, D. W., & Olson, B. B. (2016). Sentential influences on acoustic-phonetic processing: A Granger causality analysis of multimodal imaging data. Language, Cognition and Neuroscience, 31(7), 841–855. https://doi.org/10.1080/23273798.2015.1029498

- Gow, D. W., Schoenhaut, A., Avcu, E., & Ahlfors, S. (2021). Behavioral and neurodynamic effects of word learning on phonotactic repair. Frontiers in Psychology, 12, 590155. https://doi.org/10.3389/fpsyg.2021.590155

- Gow, D. W., Segawa, J. A., Ahlfors, S. P., & Lin, F.-H. (2008). Lexical influences on speech perception: A Granger causality analysis of MEG and EEG source estimates. NeuroImage, 43(3), 614–623. https://doi.org/10.1016/j.neuroimage.2008.07.027

- Graves, W. W., Grabowski, T. J., Mehta, S., & Gupta, P. (2008). The left posterior superior temporal gyrus participates specifically in accessing lexical phonology. Journal of Cognitive Neuroscience, 20(9), 1698–1710. https://doi.org/10.1162/jocn.2008.20113

- Gwilliams, L., Linzen, T., Poeppel, D., & Marantz, A. (2018). In spoken word recognition, the future predicts the past. The Journal of Neuroscience, 38(35), 7585–7599. https://doi.org/10.1523/JNEUROSCI.0065-18.2018

- Hickok, G., & Poeppel, D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402. https://doi.org/10.1038/nrn2113

- Hsu, Y. F., Hämäläinen, J. A., & Waszak, F. (2014). Repetition suppression comprises both attention-independent and attention-dependent processes. NeuroImage, 98, 168–175. https://doi.org/10.1016/j.neuroimage.2014.04.084

- Jackendoff, R. (2002). Foundations of language: Brain, meaning, grammar, evolution. Oxford University Press.

- Jackendoff, R., & Audring, J. (2020). Morphology and memory: Toward an integrated theory. Topics in Cognitive Science, 12(1), 170–196. https://doi.org/10.1111/tops.12334

- James, T. W., & Gauthier, I. (2006). Repetition-induced changes in BOLD response reflect accumulation of neural activity. Human Brain Mapping, 27(1), 37–46. https://doi.org/10.1002/hbm.20165

- Kemp, C., & Tenenbaum, J. B. (2008). The discovery of structural form. Proceedings of the National Academy of Sciences, 105(31), 10687–10692. https://doi.org/10.1073/pnas.0802631105

- Kriegeskorte, N., & Diedrichsen, J. (2019). Peeling the onion of brain representations. Annual Review of Neuroscience, 42(1), 407–432. https://doi.org/10.1146/annurev-neuro-080317-061906

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444. https://doi.org/10.1038/nature14539

- Yi, H. G., Leonard, M. K., & Chang, E. F. (2019). The encoding of speech sounds in the superior temporal gyrus. Neuron, 102(6), 1096–1110. https://doi.org/10.1016/j.neuron.2019.04.023

- Marcus, G. F. (2001). The algebraic mind: Integrating connectionism and cognitive science. MIT Press.

- Marcus, G. F., Vijayan, S., Bandi Rao, S., & Vishton, P. M. (1999). Rule learning by seven-month-old infants. Science, 283(5398), 77–80. https://doi.org/10.1126/science.283.5398.77

- Marinković, K. (2004). Spatiotemporal dynamics of word processing in the human cortex. The Neuroscientist, 10(2), 142–152. https://doi.org/10.1177/1073858403261018

- Maris, E., & Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods, 164(1), 177–190. https://doi.org/10.1016/j.jneumeth.2007.03.024

- Marslen-Wilson, W. D., & Tyler, L. K. (2007). Morphology, language and the brain: The decompositional substrate for language comprehension. Philosophical Transactions of the Royal Society B: Biological Sciences, 362(1481), 823–836. https://doi.org/10.1098/rstb.2007.2091

- Massaro, D. W., & Cohen, M. M. (1983). Phonological context in speech perception. Perception & Psychophysics, 34(4), 338–348. https://doi.org/10.3758/BF03203046

- Mattiola, S., & Masini, F. (2022). Discontinuous reduplication: A typological sketch. STUF - Language Typology and Universals, 75(2), 271–316. https://doi.org/10.1515/stuf-2022-1055

- McClelland, J. L., & Elman, J. L. (1986). The TRACE model of speech perception. Cognitive Psychology, 18(1), 1–86. https://doi.org/10.1016/0010-0285(86)90015-0

- Mesgarani, N., Cheung, C., Johnson, K., & Chang, E. F. (2014). Phonetic feature encoding in human superior temporal gyrus. Science, 343(6174), 1006–1010. https://doi.org/10.1126/science.1245994

- Milde, T., Leistritz, L., Astolfi, L., Miltner, W. H., Weiss, T., Babiloni, F., & Witte, H. (2010). A new Kalman filter approach for the estimation of high-dimensional time-variant multivariate AR models and its application in analysis of laser-evoked brain potentials. NeuroImage, 50(3), 960–969. https://doi.org/10.1016/j.neuroimage.2009.12.110

- Muysken, P. (2013). Language contact outcomes as the result of bilingual optimization strategies. Bilingualism: Language and Cognition, 16(4), 709–730. https://doi.org/10.1017/S1366728912000727

- Noguchi, Y., Inui, K., & Kakigi, R. (2004). Temporal dynamics of neural adaptation effect in the human visual ventral stream. The Journal of Neuroscience, 24(28), 6283–6290. https://doi.org/10.1523/JNEUROSCI.0655-04.2004

- Oganian, Y., & Chang, E. F. (2019). A speech envelope landmark for syllable encoding in human superior temporal gyrus. Science Advances, 5(11), eaay6279. https://doi.org/10.1126/sciadv.aay6279

- Pinker, S. (1984). Language learnability and language development. Harvard University Press.

- Pinker, S., & Ullman, M. T. (2002). The past and future of the past tense. Trends in Cognitive Sciences, 6(11), 456–463. https://doi.org/10.1016/S1364-6613(02)01990-3

- Prince, A., & Smolensky, P. (2004). Optimality theory: Constraint interaction in generative grammar. Blackwell.

- Rubino, C. (2013). Reduplication. Max Planck Institute for Evolutionary Anthropology. Retrieved December 23, 2020, from http://wals.info/chapter/27

- Rumelhart, D. E., & McClelland, J. L. (1986). PDP models and general issues in cognitive science. In D. E. Rumelhart & J. L. McClelland (Eds.), Parallel distributed processing: Explorations in the microstructure of cognition (pp. 110–146). Bradford Books/MIT Press.

- Segaert, K., Weber, K., de Lange, F. P., Petersson, K. M., & Hagoort, P. (2013). The suppression of repetition enhancement: A review of fMRI studies. Neuropsychologia, 51(1), 59–66. https://doi.org/10.1016/j.neuropsychologia.2012.11.006

- Seidenberg, M. S., & Plaut, D. C. (2014). Quasiregularity and its discontents: The legacy of the past tense debate. Cognitive Science, 38(6), 1190–1228. https://doi.org/10.1111/cogs.12147

- Sharon, D., Hämäläinen, M. S., Tootell, R. B., Halgren, E., & Belliveau, J. W. (2007). The advantage of combining MEG and EEG: Comparison to fMRI in focally stimulated visual cortex. NeuroImage, 36(4), 1225–1235. https://doi.org/10.1016/j.neuroimage.2007.03.066

- Summerfield, C., Trittschuh, E. H., Monti, J. M., Mesulam, M. M., & Egner, T. (2008). Neural repetition suppression reflects fulfilled perceptual expectations. Nature Neuroscience, 11(9), 1004–1006. https://doi.org/10.1038/nn.2163

- Tavazoie, S., Hughes, J. D., Campbell, M. J., Cho, R. J., & Church, G. M. (1999). Systematic determination of genetic network architecture. Nature Genetics, 22(3), 281–285. https://doi.org/10.1038/10343

- Tenenbaum, J. B., Kemp, C., Griffiths, T. L., & Goodman, N. D. (2011). How to grow a mind: Statistics, structure, and abstraction. Science, 331(6022), 1279–1285. https://doi.org/10.1126/science.1192788

- Tunney, R. J., & Altmann, G. T. (2001). Two modes of transfer in artificial grammar learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27(3), 614–639. https://doi.org/10.1037/0278-7393.27.3.614

- Yildiz, I. B., Mesgarani, N., & Deneve, S. (2016). Predictive ensemble decoding of acoustical features explains context-dependent receptive fields. The Journal of Neuroscience, 36(49), 12338–12350. https://doi.org/10.1523/JNEUROSCI.4648-15.2016