ABSTRACT

Although listening to speech and reading text rely on different lower-order cognitive and neural processes, much of the literature on higher-order comprehension assumes engagement of a common conceptual-semantic system which is unaffected by input modality. However, few studies have tested this assumption directly. Moreover, many neuroimaging studies of reading present sentences in an artificial, cognitively demanding word-by-word rapid serial visual presentation (RSVP) format. We report behavioural and fMRI experiments investigating whether presentation format (Spoken, Written, or RSVP) modulates commonly reported behavioural and neural costs associated with reinterpretation of sentences that contain lexical ambiguities. Reinterpretation-related processing costs were exaggerated in the RSVP format, both for response times on a behavioural task and neural activation in left inferior frontal gyrus. Presentation format can interact with higher-order language processes in complex ways, and we urge language researchers to carefully consider the role of presentation format in study design and interpretation of research findings.

Introduction

A tacit assumption underlying much of the neuroimaging and behavioural literature on language comprehension is that higher-level language processes, such as the resolution of lexical-semantic ambiguity, operate in a similar manner irrespective of the format in which the linguistic material is presented. While listening to speech and reading written material engage different low-level, unimodal sensory regions, language processing pathways are assumed to converge onto a common amodal conceptual-semantic system that is engaged during sentence processing in any given input modality (Binder et al., 2009; Hickok & Poeppel, 2004; Mesulam, 1998). Functional neuroimaging studies comparing the spoken and written input modalities have supported this assumption by demonstrating convergent activation for auditory and visual language in multimodal cortical regions in the temporal and left inferior frontal lobes (Marinkovic et al., 2003; Spitsyna et al., 2006). Based on the assumption that extracting meaning from spoken and written material engages the same cognitive and neural processes, most neuroimaging studies investigating semantic aspects of sentence processing have presented stimuli in a single modality only (Crinion et al., 2003; Fedorenko et al., 2011; Rodd et al., 2005; Rodd et al., 2012; Rodd, Johnsrude, et al., 2010; Rodd, Longe, et al., 2010; Vitello et al., 2014; Zhu et al., 2012). Similarly, much behavioural work on higher-level language processing has been conducted on either spoken or written sentences alone, under the assumption that conclusions from either presentation modality apply to language processing more generally.

The evidence base for many psycholinguistic phenomena has relied on aggregation of data from single-modality studies of spoken and written language processing. For example, the rich literature on lexical-semantic ambiguity resolution suggests that, in comparison to matched unambiguous sentences, ambiguous sentences tend to be more difficult to understand, take longer to process, and are associated with additional neural activity in typical language regions, most notably left inferior frontal gyrus (IFG) (Duffy et al., 1988; Kambe et al., 2001; Rodd, 2020; Rodd et al., 2012; Simpson, 1981; Swinney, 1979; Twilley & Dixon, 2000; for a recent review see Rodd, 2020). Crucially, the evidence for these behavioural and neural “processing costs” associated with lexical-semantic ambiguity resolution comes from studies that presented sentences in either spoken or written format (Mason & Just, 2007; Rodd et al., 2005; Rodd et al., 2012; Vitello & Rodd, 2015; Zempleni et al., 2007). However, no study to date has tested the assumption that these ambiguity-related processing costs are unaffected by input modality by directly comparing their magnitude across different language presentation formats.

A fundamental difference between the spoken and written formats is that written material remains visibly accessible to the reader. In contrast to listeners, readers can control the rate and order of language input themselves, including whether they return to earlier parts of a sentence during reading to facilitate comprehension. This serves to mitigate demands on memory processes, but also hampers use of the whole-sentence written format for studies which rely on time-locking cognitive processes or neural responses to specific words or phrases within a sentence. Neuroimaging researchers in particular, therefore, have often relied on alternative visual presentation techniques which standardise temporal exposure to written sentence content across participants, such as phrase-by-phrase or word-by-word presentation (e.g. Fedorenko et al., 2011; Hoenig & Scheef, 2009; Zhu et al., 2012).

One popular presentation technique is rapid serial visual presentation (RSVP), in which the words that make up a sentence are presented individually in quick succession in the centre of the visual field, minimising readers’ eye movements and enabling measurement of responses time-locked to specific sentence elements. RSVP has been used in neuroimaging studies of high-level language processing under the assumption that, although low-level processing may differ from natural whole-sentence reading, high-level comprehension processes remain the same. However, RSVP is a highly artificial sentence format that is unfamiliar to most research participants, and its use has been associated with poorer text comprehension in comparison to natural whole-sentence reading (Masson, 1983; Schotter et al., 2014; Waters & Caplan, 1996; Wlotko & Federmeier, 2015). As RSVP is widely used in neuroimaging to enable modelling of event-related haemodynamic responses, an understanding of potential interactions between this presentation format and complex comprehension processes (such as those involved in ambiguity resolution) is essential. This is particularly the case because evidence of left IFG involvement in top-down control of language processing and working memory (e.g. Bookheimer, 2002; Curtis & D’Esposito, 2003; Kaan & Swaab, 2003; Nozari & Thompson-Schill, 2016; Postle, 2006; Thompson-Schill et al., 1997) raises the possibility that previous findings of left IFG activation in response to RSVP stimuli could have conflated higher-level language-related activation with activation related specifically to the processing demands of the presentation format.

It follows that a key question for the field is whether, when investigating higher-order language processes, it matters not only which stimuli are presented but also how they are presented to participants. To our knowledge, only a single previous neuroimaging study has directly compared the effects of presentation format on behavioural and haemodynamic measures during higher-level language processing. Lee and Newman (2009) used an explicit comprehension task to investigate the effects of syntactic complexity during reading of sentences presented in RSVP and whole-sentence format, and found that presentation format influenced both behavioural and neural measures of sentence processing. The adverse effects of syntactic complexity on comprehension accuracy were enhanced when sentences were presented in RSVP format. Presentation format also modulated haemodynamic responses to syntactic complexity, but in the opposite direction: in the context of the explicit comprehension task, syntactic complexity effects in left IFG were greater during whole-sentence reading than during RSVP reading, although the authors attributed this finding to the additional neural processing required for baseline non-complex sentences in RSVP format.

The above findings demonstrate that how linguistic information is presented does indeed influence behavioural and neural measures of one type of higher-order comprehension process, the successful resolution of syntactic complexity. An important outstanding question is whether such effects occur in higher-level sentence comprehension processes beyond syntactic processing. Additionally, it is unclear whether the previously demonstrated processing differences between RSVP and whole-sentence text relate to the transience and temporal constraints of the RSVP format, or to its artificiality as a mode of language presentation.

In the present study, we investigated the impact of language presentation format on behavioural and functional neuroimaging measures of a different higher-order comprehension process, the resolution of lexical-semantic ambiguity in sentence context. The two experiments presented in this study used sentences that contained ambiguous words with a more frequent (“dominant”) meaning and a less frequent (“subordinate”) meaning, e.g. “bark”. In the absence of informative prior context, such ambiguities are initially resolved towards the dominant meaning (Rodd, 2018; Twilley & Dixon, 2000). Each sentence contained a single ambiguous word embedded in a neutral sentential context; only the final word constrained the context in favour of the subordinate meaning of the ambiguous word (e.g. “The woman thought that the bark must have come from her neighbour's willow.”). Comprehenders were therefore initially led down a “garden path” (selecting the dominant meaning), and needed to revise their interpretation upon encountering the final word. This stimulus design had the advantage of partially separating, in time, the lower-level processing during sentence presentation from the higher-order processes of revising and reinterpreting sentence meaning that were triggered by the sentence-final word.

Ambiguous sentences were presented in three formats (spoken, whole-sentence written, and RSVP). This design allowed the RSVP format, a mode of sentence presentation rarely encountered in daily life and therefore likely to be associated with greater attentional and working memory demands, to be contrasted with naturalistic and familiar spoken and whole-sentence written formats. The use of spoken sentences provided a transient, externally-paced but naturalistic language presentation format for comparison with RSVP and whole-sentence reading. We did not attempt to equalise the processing demands of each format, but instead used each format as it is typically presented in psycholinguistic and neurolinguistic experiments. Our aim was to use separate behavioural (Experiment 1) and fMRI (Experiment 2) paradigms to investigate whether the idiosyncratic processing demands of these typical presentation formats modulate the well-established behavioural and neural costs of reinterpreting sentence meaning to resolve semantic ambiguity, either in the context of an explicit comprehension task or during implicit language comprehension.

Experiment 1: behavioural processing costs of ambiguity resolution

Experiment 1 compared the behavioural processing costs associated with ambiguity resolution in the Spoken, Written, and RSVP presentation formats. Participants performed an explicit Meaning Coherence Judgement task (“Sense” vs “Nonsense”) on ambiguous and matched unambiguous sentences, as well as semantically anomalous filler sentences, presented in Spoken, whole-sentence Written and RSVP formats. A correct decision for an Ambiguous sentence (“Sense”) required successful reinterpretation of lexical-semantic ambiguity. Accuracy rates and response times for correct sentence judgements were compared across the three presentation formats. Methods and hypotheses for Experiment 1 were preregistered prior to data analysis (https://osf.io/9ryxu/).

In line with the previous literature on the behavioural processing costs associated with lexical-semantic ambiguity resolution (e.g. Christianson et al., 2001; Duffy et al., 1988; Kambe et al., 2001), we expected that ambiguous sentences, which required sentence reinterpretation, would be associated with lower accuracy and longer response times on correct trials compared to the unambiguous sentences. We predicted that these ambiguity effects on accuracy and response times in the Meaning Coherence Judgement task would be exaggerated in the RSVP format compared to the Spoken and Written conditions (see e.g. Lee & Newman, 2009).

Method

Participants

A total sample size of 108 participants was targeted (see pre-registration document), based on a sample size calculation conducted with G*Power prior to data collection. This calculation was conservatively based on a 2×3 repeated measures, within-factors ANOVA design, to ensure sufficient statistical power to detect a small effect (Cohen's f = .1) at a significance threshold of α = .05. The analyses included data from 108 speakers of British English (71 female, aged 18–35, MAge = 26.0 +/– 4.9 years) who were residing in the UK at the time of the study, had normal or corrected-to-normal vision, and had no history of significant neurological or developmental language disorder. All participants either self-identified as native speakers of British English (n = 104) or had acquired the language before age 11 (n = 4). Sixty-seven participants had completed a university degree, while 41 had not completed university-level education. An additional 16 participants were excluded from analyses due to procedural or technical difficulties with data collection.
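A power calculation of this kind can be approximated outside G*Power. The sketch below assumes G*Power's usual formulation for a repeated-measures, within-factors F test, in which the noncentrality parameter is λ = f²·N·m·ε/(1 − ρ) with df1 = (m − 1)ε and df2 = (N − 1)(m − 1)ε. The number of measurements (m = 6, one per cell of the 2×3 design), the correlation among repeated measures (ρ = .5) and the sphericity correction (ε = 1) are illustrative assumptions not reported in the text, and the function name `rm_anova_power` is hypothetical; its output should not be read as a reproduction of the preregistered calculation.

```python
from scipy import stats


def rm_anova_power(n_subjects: int, f: float = 0.1, m: int = 6,
                   rho: float = 0.5, epsilon: float = 1.0,
                   alpha: float = 0.05) -> float:
    """Power of a within-subjects F test under an assumed G*Power-style model.

    n_subjects: number of participants (N)
    f:          Cohen's f effect size
    m:          number of repeated measurements (assumed: 6 cells of a 2x3 design)
    rho:        assumed correlation among repeated measures
    epsilon:    assumed nonsphericity correction (1 = sphericity holds)
    """
    df1 = (m - 1) * epsilon
    df2 = (n_subjects - 1) * (m - 1) * epsilon
    # Noncentrality parameter: lambda = f^2 * N * m * epsilon / (1 - rho)
    nc = f ** 2 * n_subjects * m * epsilon / (1 - rho)
    # Critical F value under the null hypothesis at level alpha
    f_crit = stats.f.ppf(1 - alpha, df1, df2)
    # Power: probability that the noncentral F statistic exceeds the critical value
    return float(stats.ncf.sf(f_crit, df1, df2, nc))


if __name__ == "__main__":
    for n in (54, 108, 162):
        print(n, round(rm_anova_power(n), 3))
```

Varying `m`, `rho` and `epsilon` shows how strongly the required N depends on these unreported settings.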

Participants were recruited via the web-based recruitment service Prolific (www.prolific.ac; Damer & Bradley, 2014) and completed the study remotely in their own time. Ethical approval for this study was obtained from the UCL Department of Language and Cognition Ethics Chair as part of a larger research programme. Written informed consent was obtained from all participants, and they were remunerated for participation at standard UCL rates.

Materials

Stimuli for Experiments 1 and 2 were based on a set of 90 sentences, each containing a different ambiguous key noun (e.g. “bark”; see Ambiguous example in Table 1). The sentential context before the ambiguous key noun was neutral, and therefore compatible with both the dominant and subordinate meanings of the ambiguous word. The final word disambiguated the key noun towards its less frequent, subordinate meaning (e.g. “willow”). Key noun and final word were separated by 4–8 intervening words, to allow initial meaning selection for the key noun to take place before the final word was encountered. Since key nouns were biased in their relative meaning frequency, it was assumed that comprehenders would initially select the dominant meaning, and would then need to reinterpret the sentence after processing the disambiguating final word (e.g. Twilley & Dixon, 2000). Therefore, the critical window for sentence reinterpretation began at the final word in the sentence.
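The 4–8 word separation constraint can be checked mechanically. The following sketch uses hypothetical helper names and assumes whitespace tokenisation with the key noun appearing as a bare token; applied to the example sentence from the Introduction, it counts six words between “bark” and “willow”.

```python
def intervening_words(sentence: str, key_noun: str) -> int:
    """Number of words strictly between the key noun and the sentence-final word.

    Assumes whitespace tokenisation and that the key noun occurs as a bare token.
    """
    # Drop sentence-final punctuation so the last token is the final word itself
    words = sentence.rstrip(".!?").split()
    key_index = words.index(key_noun)  # first occurrence; raises ValueError if absent
    final_index = len(words) - 1
    return final_index - key_index - 1


def meets_gap_constraint(sentence: str, key_noun: str, lo: int = 4, hi: int = 8) -> bool:
    """True if the key noun and final word are separated by lo..hi words."""
    return lo <= intervening_words(sentence, key_noun) <= hi


example = "The woman thought that the bark must have come from her neighbour's willow."
print(intervening_words(example, "bark"))     # 6
print(meets_gap_constraint(example, "bark"))  # True
```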

Table 2. Stimulus characteristics. Sentences in the Ambiguous condition contained an ambiguous key noun (e.g. “bark”) that was disambiguated towards its subordinate meaning by the final word (e.g. “willow”). Control sentences in the Unambiguous condition contained a frequency-matched and length-matched unambiguous key noun and final word. Means (SD) are reported for psycholinguistic characteristics of the stimuli.

Extensive stimulus piloting was conducted to confirm the dominance bias of the key nouns used in the sentences (main noun single-word dominance score; see Table 2). In addition, piloting confirmed that when the whole sentence context including the disambiguating final word was presented, key noun meaning preference favoured the subordinate meaning (sentence-level subordinate meaning suitability rating; see Table 2). Piloting therefore confirmed that sentences in the Ambiguous condition generally led comprehenders down a metaphorical “garden path”, with initial dominant meaning selection followed by context favouring the subordinate meaning, which necessitated sentence reinterpretation to achieve successful comprehension. For details about the piloting procedures, please see the Supplementary Materials associated with Blott et al. (2021, https://osf.io/hn3bu/). A list of all stimuli used in the present study can be found at https://osf.io/m87vg/.

For each Ambiguous sentence, an Unambiguous control version was created by replacing the key noun with an unambiguous noun and substituting a new final word that provided context consistent with the unambiguous word meaning (see Unambiguous example in Table 1). Key nouns and final words in Ambiguous and Unambiguous sentence versions were matched on syllable length and lemma frequency (stimulus characteristics are summarised in Table 2). Because the focus of these experiments was post-sentence reinterpretation rather than within-sentence meaning selection, matched Ambiguous and Unambiguous sentence pairs were constructed from the same sentence frame but with different key nouns and final words. This choice balanced close stimulus matching against the risk of confounds: it limited surface familiarity between the members of each pair, to avoid practice effects, and kept ambiguous key nouns out of Unambiguous sentences, to avoid inadvertent disambiguation.

In Experiment 1, a subset of 63 matched pairs of Ambiguous and Unambiguous sentences was selected at random from the full stimulus set and used as coherent experimental sentences. The remaining 27 sentence pairs were modified for use as semantically anomalous filler sentences in which meaning coherence was violated; Anomalous filler sentences thus contained either an ambiguous (e.g. “scoop”) or an unambiguous (e.g. “quest”) key noun (see Anomalous conditions in Table 1). In the Anomalous filler sentences, the final word was altered so that the context it provided was always incompatible with the key noun meaning; for Anomalous sentences with ambiguous key nouns, the final word was incompatible with both the dominant and the subordinate meaning. Key nouns were not duplicated across conditions.

Table 1. Example stimuli in the two coherent conditions (Ambiguous, Unambiguous) and the anomalous filler conditions.

All stimuli were prepared in three presentation formats: Spoken, Written, and RSVP. Auditory sentences were recorded by a female speaker of British English. Visual items were presented in black font on a white background.

Design

In this experiment, the Ambiguous, Unambiguous, and Anomalous conditions were presented in each of the three formats: Spoken, Written and RSVP. Each participant completed a total of 180 experimental trials, divided equally between the three presentation formats. Of the 60 trials allocated to each format, 42 trials (70%) were coherent sentences (21 Ambiguous and 21 Unambiguous) and 18 trials (30%) were semantically anomalous fillers (9 with ambiguous and 9 with unambiguous key nouns). Stimuli were presented to each participant in three experimental blocks. Each block included two 10-trial miniblocks for each of the three presentation formats. Within each format-specific miniblock, 70% of trials were coherent (a combination of Ambiguous and Unambiguous trials) and 30% were anomalous. All participants encountered the format-specific miniblocks in the same pseudorandomised order but saw or heard different stimuli in these miniblocks. For individual participants, the two members of each matched Ambiguous/Unambiguous sentence pair were presented in the same format but in different experimental blocks. The assignment of sentence pairs to presentation formats was counterbalanced across participants. This design ensured that, across participants, each ambiguous key noun appeared in all three presentation formats, but was presented only once, in a single format, to each individual participant. Only the coherent Ambiguous and Unambiguous conditions were included in the analyses.
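One way to realise this counterbalancing is a Latin-square rotation over three participant lists. The sketch below is an illustration under stated assumptions, not the procedure actually used: the split of the 63 coherent pairs into three sets of 21, the rule for assigning pairs to blocks, and the name `build_list` are all hypothetical. It covers coherent trials only; each list yields 14 coherent trials per format per block, i.e. 7 in each of the two 10-trial miniblocks, matching the 70% coherent rate described above.

```python
from collections import Counter

FORMATS = ("Spoken", "Written", "RSVP")
N_PAIRS = 63   # coherent Ambiguous/Unambiguous pairs in Experiment 1
N_BLOCKS = 3


def build_list(list_id: int) -> list:
    """Coherent trials for one hypothetical counterbalancing list (0, 1 or 2)."""
    trials = []
    for pair in range(N_PAIRS):
        item_set = pair % 3                        # three sets of 21 pairs
        fmt = FORMATS[(item_set + list_id) % 3]    # rotate sets over formats across lists
        amb_block = (pair // 3) % N_BLOCKS         # 7 pairs of each set per block
        unamb_block = (amb_block + 1) % N_BLOCKS   # partner version goes in another block
        trials.append({"pair": pair, "version": "Ambiguous", "format": fmt, "block": amb_block})
        trials.append({"pair": pair, "version": "Unambiguous", "format": fmt, "block": unamb_block})
    return trials


if __name__ == "__main__":
    trials = build_list(0)
    print(len(trials))  # 126
    print(Counter((t["format"], t["block"]) for t in trials))
```

Across the three lists, every pair appears once in each format, while within a list both versions of a pair share a format but fall in different blocks.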

Procedure

The experiment was run using the experiment platform Gorilla (www.gorilla.sc/about; Cauldron Inc.; Anwyl-Irvine et al., 2019). Participants completed the study online on their own computers using headphones. Before the main task, participants completed a demographic questionnaire on their age, sex, education and language background.

In the Meaning Coherence Judgement task, participants were instructed to listen to or read each sentence carefully for comprehension, and to decide as quickly and as accurately as possible whether each sentence made sense or not. A visual symbol (an ear or eye image) was shown on the screen at the start of each miniblock to cue participants to expect either spoken or written stimuli. No additional cues were given as to the format of visual presentation (i.e. Written vs RSVP). After presentation of a sentence, two response buttons appeared (“Sense” and “Nonsense”) for participants to select with their mouse. The position of these response buttons on the screen was counterbalanced across participants.

In the Spoken condition, sentence durations ranged from 2.8 to 4.0 s (MDuration = 3.5 s). Because the presentation software could not play sound files automatically, participants clicked a button on the screen with the mouse to start each file manually. Response buttons appeared immediately after the end of the audio file. In the Written condition, whole sentences were presented on the screen for the mean duration of all audio files (3500 ms) plus an extra 1000 ms; response buttons appeared at the end of this 4500 ms interval. This presentation duration was designed to allow sufficient time to read and resolve Ambiguous stimuli while the sentence was still visible on the screen. In the RSVP condition, each word in the sentence was presented consecutively in the centre of the screen for 250 ms, and the end of the sentence was indicated by a full stop at the end of the final word. Response buttons appeared 250 ms after presentation of the final word. Trials were separated by a fixation cross presented in the centre of the screen for 2500 ms.
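The timing parameters above determine when the response buttons became available in each format. The minimal sketch below encodes them; the function name is hypothetical, and it assumes that “250 ms after presentation of the final word” means 250 ms after the final word's onset (that is, at its offset), so that an RSVP sentence of n words occupies n × 250 ms. For the 13-word example sentence used throughout, this gives 3250 ms, close to the 3.5 s mean duration of the spoken sentences.

```python
WORD_MS = 250              # RSVP duration per word (from the text)
WRITTEN_MS = 3500 + 1000   # mean audio duration plus 1000 ms (from the text)

EXAMPLE = "The woman thought that the bark must have come from her neighbour's willow."


def response_onset_ms(fmt: str, sentence: str = "", audio_ms: float = 0.0) -> float:
    """Delay (ms) from stimulus onset until the response buttons appear.

    Assumption: in RSVP, buttons appear 250 ms after the final word's onset,
    i.e. at its offset, so an n-word sentence takes n * WORD_MS.
    For Spoken trials, timing starts when the participant starts the audio file.
    """
    if fmt == "Spoken":
        return audio_ms            # buttons appear at the end of the audio file
    if fmt == "Written":
        return WRITTEN_MS          # fixed whole-sentence exposure
    if fmt == "RSVP":
        return len(sentence.split()) * WORD_MS
    raise ValueError(f"unknown presentation format: {fmt}")


print(response_onset_ms("RSVP", EXAMPLE))  # 3250
print(response_onset_ms("Written"))        # 4500
```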

Before the experimental trials, participants practised the Meaning Coherence Judgement task on four coherent Unambiguous and two Anomalous Unambiguous sentences (two sentences per format), which were not repeated in the main experiment. Immediate feedback on response accuracy was given for the practice trials but not during the main experiment. Participants took an enforced 30 s break between experimental blocks, and the Meaning Coherence Judgement task took approximately 25–30 min overall.

Data analysis

Analyses were conducted in RStudio (v. 3.4.2; RStudio Team, 2015) using mixed effects modelling (Baayen et al., 2008; Barr et al., 2013). Analyses were conducted on the coherent Ambiguous and Unambiguous conditions only. Response times were log-transformed for all analyses. Trials with response times below 250 ms were removed from the analyses on the assumption that these were accidental button presses. Separate analyses were conducted for response accuracy and for response time on correct trials, using, respectively, the glmer() function of the lme4 package (v. 1.1.17; Bates et al., 2015) with the bobyqa optimiser and the lmer() function from the same package. Hypothesis tests were based on model comparisons by likelihood ratio tests, and the χ2 statistics and p-values (with α set at .05, unless stated otherwise) from these tests are reported. All models included fixed effects for Ambiguity (deviation-coded: Ambiguous −1/2, Unambiguous 1/2), Format (Format.code1 with Spoken deviation-coded as 1/3, Written as 1/3, and RSVP as −2/3; Format.code2 with Spoken deviation-coded as 1/2, Written as −1/2, and RSVP as 0), and their interactions. Models with maximal random effects structures (a random intercept by items, and a random intercept and random slopes for Ambiguity, Format, and their interaction by subjects) were fitted where possible (Barr et al., 2013). In case of convergence issues, we went through the following steps iteratively until the model converged: (1) remove correlations between the random effects by subjects, (2) remove the random intercept by subjects, (3) remove the random slope that explains the least variance in the maximal model. We treated the two Format codes, and the two Ambiguity x Format interaction codes, as pairs: unless otherwise specified, the reported main effects of Format and Ambiguity x Format interactions are based on removing the relevant pair of codes.
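The deviation codes listed above can be written out as a small design table. This sketch is not lme4 code, and the dictionary and function names are our own; it builds the fixed-effect predictors for each Ambiguity × Format cell and shows two convenient properties of the coding: each code sums to zero across its levels, and the two Format codes are orthogonal.

```python
from fractions import Fraction

# Deviation codes as listed in the Data analysis section
AMBIGUITY = {"Ambiguous": Fraction(-1, 2), "Unambiguous": Fraction(1, 2)}
FORMAT_CODE1 = {"Spoken": Fraction(1, 3), "Written": Fraction(1, 3), "RSVP": Fraction(-2, 3)}
FORMAT_CODE2 = {"Spoken": Fraction(1, 2), "Written": Fraction(-1, 2), "RSVP": Fraction(0)}


def design_row(ambiguity: str, fmt: str) -> dict:
    """Fixed-effect predictor values for one Ambiguity x Format cell."""
    a, c1, c2 = AMBIGUITY[ambiguity], FORMAT_CODE1[fmt], FORMAT_CODE2[fmt]
    return {
        "Ambiguity": a,
        "Format.code1": c1,
        "Format.code2": c2,
        # Interaction predictors are products of the main-effect codes
        "Ambiguity:Format.code1": a * c1,
        "Ambiguity:Format.code2": a * c2,
    }


if __name__ == "__main__":
    for amb in AMBIGUITY:
        for fmt in FORMAT_CODE1:
            row = design_row(amb, fmt)
            print(amb, fmt, {k: str(v) for k, v in row.items()})
    # The two Format codes are orthogonal: their products sum to zero
    print(sum(FORMAT_CODE1[f] * FORMAT_CODE2[f] for f in FORMAT_CODE1))  # 0
```

Because the Ambiguous and Unambiguous codes differ by exactly 1, the Ambiguity coefficient estimates the Unambiguous minus Ambiguous difference (in log-odds for accuracy, in log response time for RTs). Likewise, Format.code1 contrasts the mean of Spoken and Written with RSVP, and Format.code2 contrasts Spoken with Written.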

Results

Accuracy

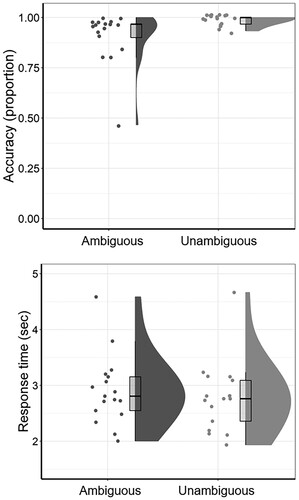

The maximal model that converged contained fixed effects for Ambiguity, Format, and their interaction, as well as a random intercept by items and a random intercept and random slopes for Ambiguity and the Ambiguity x Format interaction by subjects, without correlations between the random effects. Detailed results from model comparisons can be found in the supplemental materials. There was a significant main effect of Ambiguity, with Ambiguous sentences associated with lower accuracy (MTotal = 0.77, SDTotal = 0.42) than their Unambiguous counterparts (MTotal = 0.94, SDTotal = 0.23; see Figure 1 and Table 3; β = 1.75, SE = 0.07, z = 24.58; Model comparison: χ2(1) = 216.45, p < .001). There was also a significant main effect of Format, with the RSVP format associated with the lowest accuracy rates (see Figure 1 and Table 3; Format.code1 (Spoken and Written > RSVP): β = −0.35, SE = 0.07, z = −5.44; Format.code2 (Spoken > Written): β = −0.94, SE = 0.08, z = −0.53; Model comparison: χ2(2) = 28.24, p = .002). The Ambiguity x Format interaction, however, was not statistically significant (p = .833). Since accurate Meaning Coherence judgements for Ambiguous sentences reflect successful retrieval and integration of the subordinate meaning of the key noun, the lack of a significant Ambiguity x Format interaction suggests that presentation format did not significantly influence participants’ ability to resolve ambiguity successfully.
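Each model comparison reported here is a likelihood ratio test: twice the difference in log-likelihood between the full and the reduced model is referred to a χ2 distribution whose degrees of freedom equal the number of removed parameters (one for Ambiguity, two for a pair of Format or interaction codes). In R such comparisons are typically run with anova() on two fitted models; the standard-library Python sketch below, with hypothetical function names and made-up log-likelihoods, only illustrates the arithmetic, computing χ2 tail probabilities in closed form for integer degrees of freedom.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: 2*Q(sqrt(x)) plus a finite series, Q = standard normal upper tail
    chi = math.sqrt(x)
    total = math.erfc(chi / math.sqrt(2.0))
    term = 2.0 * math.exp(-half) / math.sqrt(2.0 * math.pi) * chi
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return total


def likelihood_ratio_test(loglik_reduced: float, loglik_full: float, df_diff: int):
    """Return the LRT statistic and its chi-square p-value."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)


# Hypothetical log-likelihoods, for illustration only
stat, p = likelihood_ratio_test(loglik_reduced=-1052.3, loglik_full=-1049.1, df_diff=2)
print(round(stat, 2), round(p, 4))
```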

Figure 1. Accuracy rates and response times in the Meaning Coherence Judgement task. Boxplots show median and interquartile range of accuracy scores (top panel) and response times (bottom panel) for the Ambiguous and Unambiguous condition in each presentation format.

Table 3. Summary of descriptive statistics for accuracy and response times in the Meaning Coherence Judgement task for each of the presentation formats (means with standard deviations in parentheses).

Response time

The maximal model that converged contained fixed effects for Ambiguity, Format, and their interaction, as well as a random intercept by items and a random intercept and random slopes for Format by subjects, including correlations between random effects. Detailed results from model comparisons can be found in the supplemental materials. Response times on correct trials in the Meaning Coherence Judgement task were significantly longer when participants needed to resolve an ambiguity to arrive at the correct interpretation (MTotal = 1213.77, SDTotal = 928.91) than when the sentence was unambiguous (MTotal = 993.3, SDTotal = 737.66; see Figure 1 and Table 3; β = −0.08, SE = 0.004, t = −19.89; Model comparison: χ2(1) = 388.5, p < .001). There was also a significant main effect of Format on response times (see Figure 1 and Table 3; Format.code1 (Spoken and Written > RSVP): β = −0.01, SE = 0.01, t = −1.03; Format.code2 (Spoken > Written): β = 0.13, SE = 0.01, t = 16.5; Model comparison: χ2(2) = 135.57, p < .001). Importantly, these main effects were qualified by a significant Ambiguity x Format interaction, suggesting that the magnitude of ambiguity-related costs to response times depended on the format in which sentences had been presented (see Figure 1 and Table 3; Ambiguity x Format.code1 (Spoken and Written > RSVP): β = −0.04, SE = 0.01, t = −4.35; Ambiguity x Format.code2 (Spoken > Written): β = −0.06, SE = 0.01, t = −6.76; Model comparison: χ2(2) = 64.45, p < .001).

We decomposed this interaction by fitting separate mixed models for each format, with Ambiguity as a fixed factor, a random intercept by items, and a random intercept and random Ambiguity slope by subjects. Detailed results from model comparisons can be found in the supplemental materials. There were statistically significant ambiguity-related response time costs in the Spoken format (β = −0.1, SE = 0.01, t = −13.91; Model comparison: χ2(1) = 111.25, p < .001), in the Written format (β = −0.03, SE = 0.01, t = −4.8; Model comparison: χ2(1) = 22.99, p < .001; the maximal model that converged included random intercepts only), and in the RSVP format (β = −0.1, SE = 0.01, t = −13.76; Model comparison: χ2(1) = 110.2, p < .001). However, these ambiguity costs to response times were exaggerated in the Spoken and RSVP formats relative to the Written format.

Discussion

Experiment 1 provided a clear demonstration of the effects of ambiguity and presentation format on higher-order language comprehension processes. Consistent with previous studies, our results confirmed that lexical-semantic ambiguity impedes successful sentence comprehension: the presence of ambiguity significantly reduced the likelihood of accurate comprehension, even in the context of an explicit comprehension task, and significantly increased response times relative to an unambiguous baseline. Additionally, the RSVP format was associated with significantly reduced comprehension accuracy compared to the Spoken and Written formats, irrespective of the presence or absence of ambiguity. This finding suggests that the relatively unnatural word-by-word presentation format reduced participants’ general ability to build up coherent sentence-level meaning representations (see also e.g. Masson, 1983; Schotter et al., 2014). We were particularly interested in the modulation of ambiguity processing costs by presentation format. Although format did not affect the cost of ambiguity to comprehension accuracy, it did significantly influence the temporal costs of disambiguation, producing exaggerated “garden-path” effects on response times for both the RSVP and Spoken formats (mean effect sizes of about 260 and 290 ms) compared to the whole-sentence Written format (mean effect size of about 90 ms). The present data provide no evidence of different speed-accuracy trade-off patterns across formats. Although participants tended to respond faster in the Written format than in the other formats, the Written format was not also associated with a greater likelihood of errors. Similarly, although the ambiguity effect on response times was largest for the Spoken and RSVP formats, the ambiguity effect on accuracy in these formats was at least as large as in the Written format.
Taken together, these findings suggest that, in contrast to the whole-sentence Written format, successful disambiguation in the RSVP and Spoken formats was achieved at the expense of increased processing time. We will return to potential explanations of this finding in the General Discussion.

Experiment 2: neural processing costs of ambiguity resolution

Complementing the behavioural results from the explicit comprehension task in Experiment 1, Experiment 2 used functional MRI during implicit language processing to investigate whether presentation format modulated neural activity within cortical language regions that have previously been shown to be involved in the processing of ambiguity and reinterpretation. We expected to replicate previous findings of ambiguity-related increases in haemodynamic responses in typical language regions including left IFG, compared to the control sentences (e.g. Mason & Just, 2007; Rodd et al., 2005; Zempleni et al., 2007). In Experiment 2, the key interest lay in determining whether the magnitude of ambiguity-related effects in this region was influenced by how sentences were presented. We predicted that, in line with the differential effects of presentation format on the neural activation costs of syntactic complexity that have been previously reported (Lee & Newman, 2009), we would observe significant differences between the presentation formats in terms of ambiguity-related neural activity.

Method

Participants

Data from 17 monolingual native speakers of English (12 female, aged 20–44, mean age 24.8 ± 5.4 years) were included in the analyses. An additional 5 participants were excluded from analyses due to withdrawal of consent or technical difficulties with data collection. Participants were recruited from the UCL SONA participant pool and had on average spent 6 years (SD = 1.4, range: 2–8 years) in formal education after age 16. All participants were self-reported right-handers with no hearing or uncorrected visual impairments and no history of neurological illness or head injury. UCL ethical approval for this study was obtained via the Birkbeck-UCL Centre for Neuroimaging (BUCNI) departmental ethics chair. Written informed consent was obtained from all participants, and they were financially compensated for participation at standard UCL rates.

Materials

Sentence stimuli for Experiment 2 consisted of the full set of 90 matched pairs of Ambiguous and Unambiguous sentences (see ). No semantically anomalous sentences were used in Experiment 2. Stimuli for the three presentation formats, Spoken, Written and RSVP, were created for the Ambiguous and Unambiguous versions of each sentence. For the Spoken format, each sentence was recorded digitally by a female speaker of British English. For the Written format, to enable consistent stimulus presentation with the software used, digital images were created of each sentence written as 2–3 lines of text in black font on a white background. Similarly, for the RSVP format, separate digital images of each word in a sentence, written in black font on a white background, were created.

In order to identify brain regions that responded preferentially to intelligible language, Experiment 2 also included low-level unintelligible baseline conditions for each presentation format, created by modifying the original sentence stimuli. For the Spoken condition, Unintelligible stimuli were created by spectral rotation (around 2 kHz) of the original sentences, following the signal processing procedure described by Scott and colleagues (2000; see also Blesser, 1972). This technique preserves the acoustic complexity of speech stimuli but renders them unintelligible. For the Written and RSVP conditions, Unintelligible stimuli were created by substituting each letter in the original sentences with a corresponding false-font symbol. The false font was adapted from an obsolete Near Eastern alphabet whose characters had a letter-like structure but minimal direct resemblance to Roman letters (Melchert, 2004), so that false-font characters had limited links to phonological representations for our participants (Warren, 2013). As with the intelligible conditions, Unintelligible stimuli for the Written format were digital images of whole-sentence stimuli written as 2–3 lines of false-font “text”, while stimuli for the RSVP format were separate digital images of each “word” in a sentence; all stimuli were black font on a white background.
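To make the rotation step concrete, the sketch below inverts the spectrum of a signal about 2 kHz by reindexing FFT bins, so that a component at frequency f moves to 4000 − f Hz. It is a simplified illustration under stated assumptions (the signal is treated as band-limited to 4 kHz, and energy above 4 kHz is simply discarded), not a reproduction of the filtering and modulation procedure of Scott and colleagues (2000); the function name and parameters are hypothetical.

```python
import numpy as np

def spectrally_rotate(signal, fs, pivot_hz=2000.0):
    """Mirror the 0..2*pivot_hz band of `signal` about pivot_hz (f -> 2*pivot_hz - f).

    Energy above 2*pivot_hz is discarded; this zeroing stands in for the
    low-pass filtering used in modulation-based implementations.
    """
    band_edge = 2.0 * pivot_hz
    if band_edge > fs / 2.0:
        raise ValueError("2 * pivot_hz must not exceed the Nyquist frequency")
    n = len(signal)
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    k_max = int(np.searchsorted(freqs, band_edge, side="right")) - 1
    rotated = np.zeros_like(spectrum)
    # Output bin j receives the conjugated content of input bin k_max - j,
    # which is what modulating by a band_edge cosine does to the lower sideband.
    rotated[: k_max + 1] = np.conj(spectrum[k_max::-1])
    return np.fft.irfft(rotated, n=n)

# Usage: a 500 Hz tone should come out at about 4000 - 500 = 3500 Hz.
fs = 16000
t = np.arange(fs) / fs
tone = np.sin(2 * np.pi * 500 * t)
rotated_tone = spectrally_rotate(tone, fs)
peak_hz = np.argmax(np.abs(np.fft.rfft(rotated_tone))) * fs / len(rotated_tone)
```

Because low frequencies become high and vice versa, the rotated signal keeps speech-like spectro-temporal complexity while the phonetic cues are no longer interpretable.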

fMRI data acquisition

Whole-brain functional images were collected on a Siemens Avanto 1.5-T MR scanner with a 32-channel head coil, using continuous acquisition. Echo-planar image volumes (232–251 volumes per participant) were acquired over three runs of approximately 12 min each, using a gradient-echo EPI sequence (TR = 3000 ms, TE = 50 ms, isotropic 3 mm voxels). In addition, anatomical images were acquired using a T1-weighted MPRAGE sequence (TR = 2730 ms, TE = 3.57 ms, isotropic 1 mm voxels, duration 5.5 min).

Design

In this experiment, Ambiguous, Unambiguous, and Unintelligible conditions were presented in each of the three formats: Spoken, Written and RSVP. There were no Anomalous filler trials in Experiment 2. Each participant completed a total of 234 experimental trials, divided equally between the three presentation formats. Of the 78 trials allocated to each format, 30 trials were Ambiguous sentences, 30 were Unambiguous sentences, and 18 were Unintelligible stimuli. Stimuli were presented to each participant in three experimental runs. Each run included two 13-trial miniblocks for each of the three presentation formats. Each format-specific miniblock included 5 trials each of the Ambiguous and Unambiguous conditions, and 3 Unintelligible trials. Three pseudorandomised miniblock orders were assigned randomly across runs for each participant. Within each miniblock, Ambiguous, Unambiguous and Unintelligible trials were presented in a pseudorandomised order. For individual participants, the Ambiguous, Unambiguous and Unintelligible versions of a given sentence were presented in the same format, but in different experimental runs. The assignment of corresponding Ambiguous, Unambiguous and Unintelligible sentence versions to the different presentation formats was counterbalanced across participants. As in Experiment 1, this design ensured that, across participants, each ambiguous key noun appeared in all three presentation formats, but was presented only once, in a single format, to each individual participant.
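The trial counts above can be checked with a short sketch that builds one participant's session from the stated miniblock structure. The shuffling stands in for the three fixed pseudorandom miniblock orders, and `assign_formats` shows one hypothetical Latin-square way of rotating sentence items over formats across participants; the paper does not specify how counterbalancing was implemented, and item-to-run placement is not modelled here.

```python
import random
from collections import Counter

FORMATS = ("Spoken", "Written", "RSVP")
N_RUNS = 3
MINIBLOCKS_PER_FORMAT_PER_RUN = 2
MINIBLOCK_CONTENT = {"Ambiguous": 5, "Unambiguous": 5, "Unintelligible": 3}

def build_run(rng):
    """One run: 2 miniblocks per format, each with 5 + 5 + 3 shuffled trials."""
    block_formats = [f for f in FORMATS for _ in range(MINIBLOCKS_PER_FORMAT_PER_RUN)]
    rng.shuffle(block_formats)  # stand-in for the three fixed pseudorandom orders
    run = []
    for fmt in block_formats:
        trials = [cond for cond, k in MINIBLOCK_CONTENT.items() for _ in range(k)]
        rng.shuffle(trials)
        run.extend((fmt, cond) for cond in trials)
    return run

def build_session(seed=0):
    """All runs for one participant, as lists of (format, condition) trials."""
    rng = random.Random(seed)
    return [build_run(rng) for _ in range(N_RUNS)]

def assign_formats(participant, n_items=90):
    """Hypothetical Latin-square rotation of sentence items over formats."""
    group_size = n_items // len(FORMATS)
    return {item: FORMATS[(item // group_size + participant) % len(FORMATS)]
            for item in range(n_items)}

session = build_session(seed=1)
counts = Counter(trial for run in session for trial in run)
```

Each run then contains 6 miniblocks × 13 trials = 78 trials, giving 234 trials in total, with 30 Ambiguous, 30 Unambiguous and 18 Unintelligible trials per format.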

Procedure

Stimuli were presented using MATLAB (Mathworks Inc., Natick, MA, USA) and the Cogent 2000 toolbox (www.vislab.ucl.ac.uk/cogent/index.html). Auditory stimuli were presented binaurally through MRI-compatible insert earphones (Sensimetrics, Malden, MA, USA, Model S-14), which provided a manufacturer-evaluated scanner noise attenuation level of 20–40 dB. Visual stimuli were projected onto a screen placed in front of the bore of the MRI scanner and viewed via an angled mirror above the participant’s head. Sound volume levels and stimulus readability were checked prior to the first functional run using spoken and written stimuli that did not appear in the main experiment. Participants also heard and saw examples of the unintelligible stimuli before commencing the experiment.

Participants were instructed to listen to or read each sentence carefully for comprehension during scanning. No explicit task was used in Experiment 2, to avoid confounding neural activation related to ambiguity resolution with activation related solely to the non-specific demands of an overt task (Wright et al., 2011). Participants were informed prior to scanning that some trials would consist of nonsense sounds or letters, and were instructed to attend to these trials even though the stimuli would be incomprehensible. For the Unintelligible Written condition, participants were additionally instructed to move their eyes across the lines of false-font text from left to right, as if reading English.

As in Experiment 1, a visual symbol (an ear or eye image) shown on the screen at the start of each miniblock cued participants to expect either spoken or written stimuli, with no additional cues to distinguish between the two visual presentation formats. In the Spoken conditions, sentence durations ranged from 2.4 to 4.0 s (M = 3.1 s). In the Written conditions, whole-sentence stimuli were displayed on the screen for the duration of the corresponding auditory sentence stimulus plus an additional 1500 ms; this presentation duration was designed to allow sufficient time to read and resolve Ambiguous stimuli while the sentence was still visible on the screen. In the RSVP conditions, each word in the sentence was presented consecutively in the centre of the screen for 250 ms; the end of the sentence was indicated by a full stop after the final word. The presentation parameters for all three sentence formats were thus kept as close as possible to those of Experiment 1.
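The timing rules for the three formats can be summarised in a few lines. This is an illustrative sketch: the function and constant names are hypothetical, and the RSVP calculation assumes that words followed one another with no blank interval, which the text does not state explicitly.

```python
WRITTEN_EXTRA_MS = 1500  # extra viewing time for whole-sentence Written trials
RSVP_WORD_MS = 250       # per-word display time in RSVP

def presentation_ms(fmt, audio_ms, n_words):
    """Duration of one sentence stimulus (audio or on-screen) in the given format."""
    if fmt == "Spoken":
        return audio_ms
    if fmt == "Written":
        return audio_ms + WRITTEN_EXTRA_MS
    if fmt == "RSVP":
        # Assumes back-to-back words with no blank inter-word interval.
        return n_words * RSVP_WORD_MS
    raise ValueError(f"unknown format: {fmt!r}")

# Usage with a hypothetical 10-word sentence whose recording lasts 3100 ms:
durations = {fmt: presentation_ms(fmt, 3100, 10) for fmt in ("Spoken", "Written", "RSVP")}
# {'Spoken': 3100, 'Written': 4600, 'RSVP': 2500}
```

Tying the Written duration to the recording keeps the whole-sentence display roughly matched in length to the spoken sentence, with the extra 1500 ms reserved for reinterpretation.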

Trials were separated by a fixation cross presented in the centre of the screen. Fixation cross display time was 2500 ms plus a randomly jittered interval of 1000–3000 ms, so that the overall inter-trial interval varied from 3500 to 5500 ms. Participants took short (30–60 s) breaks between experimental runs. Anatomical images were obtained after completion of the three functional runs.
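A sketch of the fixation-interval jitter follows. The text gives only the 1000–3000 ms range, so the uniform distribution used here (and the function name) is an assumption.

```python
import random

FIXATION_BASE_MS = 2500
JITTER_MS = (1000, 3000)

def sample_iti_ms(rng):
    """Fixed 2500 ms fixation plus a jitter drawn uniformly (assumed) from 1000-3000 ms."""
    return FIXATION_BASE_MS + rng.uniform(*JITTER_MS)

rng = random.Random(42)
itis = [sample_iti_ms(rng) for _ in range(10_000)]
mean_iti = sum(itis) / len(itis)  # close to 4500 ms under the uniform assumption
```

Jittering the interval varies the delay between successive events, which helps to separate overlapping haemodynamic responses in the subsequent GLM.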

As fMRI scanning did not involve an explicit comprehension task, two computerised behavioural tests were conducted immediately after scanning to obtain explicit measures of stimulus engagement during scanning (Recognition Memory test) and disambiguation ability (Explicit Disambiguation test). Both tests were conducted approximately 5–10 min after completion of the scanning session, outside the scanner in a quiet testing environment, on a laptop using the Cogent toolbox running in MATLAB; each test lasted approximately 15 min. Participants were not warned prior to scanning that these tests would be conducted. The Recognition Memory test assessed recognition of the experimental sentence stimuli, and served as a check that participants had attended to the stimuli presented during scanning despite the absence of an overt task. A subset of 72 Ambiguous fMRI stimuli (sampled evenly across the experiment; 3 from each miniblock within each run) plus 72 foil ambiguous sentences with the same subordinate-biased late-disambiguating structure (not presented during scanning) were presented in randomised order to participants, who were instructed to read each sentence and to decide whether they had seen or heard it during scanning. Unambiguous sentence stimuli were not included in the Recognition Memory test, to limit its length. All fMRI and foil sentences were presented in written whole-sentence format, irrespective of the presentation format of the Ambiguous stimuli during scanning.

Following the Recognition Memory test, the Explicit Disambiguation test was used to confirm that individual participants could successfully disambiguate the specific sentences used in the fMRI experiment. This test was also designed to generate approximate measures of whole-sentence reading time for the Written condition, for use in fMRI analyses (see Image Analysis). Stimuli were taken from the fMRI experiment and included all 90 Ambiguous sentences, as well as 30 randomly selected Unambiguous sentences, which provided a control condition without unduly lengthening the task. All stimuli were presented in randomised order in written whole-sentence format, irrespective of their presentation format during scanning. Participants were instructed to press a button as soon as they had read through the whole sentence once; button-press latency provided a measure of whole-sentence reading time. After reading each sentence, participants were asked to select the correct meaning of the key noun in the sentence context. Three response options were presented for key-noun meaning in each Ambiguous sentence: the subordinate (correct) meaning, the dominant (incorrect) meaning, and a third “other” option. For Unambiguous sentences, key-noun meaning response options were the correct meaning, an incorrect unrelated meaning, and “other”. Participants were instructed to select the meaning they had attributed to the word after understanding the whole sentence in the scanner or, if unable to recall the sentence, to choose the meaning most appropriate to the sentence context. The position of the correct meaning was randomised across trials.

Data analysis

Behavioural tests

Analyses of behavioural data were conducted in RStudio (v. 3.4.2; RStudio Team, 2015), using mixed effects models with random effects for subjects and items (Baayen et al., 2008; Barr et al., 2013). Following the procedures for confirmatory hypothesis tests outlined by Barr and colleagues (2013), model complexity was reduced only when there were convergence issues, using the same iterative steps as detailed in the Method section for Experiment 1.

Analysis models for the Recognition Memory test were constructed to investigate the effects of sentence presentation format and scanning run on recognition memory accuracy; logit mixed effects models were fitted using the glmer() function with the bobyqa optimiser from the lme4 package (v. 1.1.17; Bates et al., 2015).
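The Results report two contrasts for each three-level factor (for format, “Spoken and Written > RSVP” and “Spoken > Written”; for run, the analogous comparisons). The sketch below shows one orthogonal, Helmert-style coding that yields those comparisons; the specific weights and helper names are assumptions, as the paper does not list them. With these weights and one mean per level, each coefficient equals the labelled difference of means; in the fitted logit models the coefficients are on the log-odds scale instead.

```python
import numpy as np

FORMAT_LEVELS = ["Spoken", "Written", "RSVP"]
# Rows = levels, columns = contrasts (assumed weights).
FORMAT_CONTRASTS = np.array([
    [1 / 3, 1 / 2],    # Spoken
    [1 / 3, -1 / 2],   # Written
    [-2 / 3, 0.0],     # RSVP
])  # col 0: "Spoken and Written > RSVP"; col 1: "Spoken > Written"

def code_factor(values, levels=FORMAT_LEVELS, contrasts=FORMAT_CONTRASTS):
    """Map each observation's level label to its row of contrast weights."""
    return np.array([contrasts[levels.index(v)] for v in values])

def contrast_estimates(level_means):
    """Solve mean = b0 + C @ b with one mean per level; returns (b0, b1, b2)."""
    design = np.column_stack([np.ones(len(FORMAT_LEVELS)), FORMAT_CONTRASTS])
    return np.linalg.solve(design, np.asarray(level_means, dtype=float))

# Hypothetical level means for Spoken, Written, RSVP:
b0, b_sw_vs_r, b_s_vs_w = contrast_estimates([0.6, 0.7, 0.5])
# b_sw_vs_r = (0.6 + 0.7) / 2 - 0.5 = 0.15; b_s_vs_w = 0.6 - 0.7 = -0.1
```

Because the columns sum to zero and are mutually orthogonal, the intercept corresponds to the grand mean of the level means, and the two format comparisons do not share variance in a balanced design.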

Accuracy scores, and reading times for correct trials, in the Explicit Disambiguation post-test were analysed to test for behavioural effects of Ambiguity. Reading times were calculated as the latency from stimulus appearance to button press and log-transformed prior to entry into linear mixed effects models, which were fitted with the lmer() function from the lme4 package (v. 1.1.17; Bates et al., 2015). We also explored whether the sentence presentation format during scanning affected disambiguation accuracy in the post-test, by fitting logit mixed effects models to data from the Ambiguous sentences only with the glmer() function from the lme4 package (v. 1.1.17; Bates et al., 2015).

FMRI data

The functional images from the fMRI experiment were preprocessed and analysed using SPM12 (Wellcome Trust Centre for Neuroimaging, London, UK). Preprocessing steps included within-subject realignment, within-subject co-registration of the T1-weighted structural image to the mean EPI image, and spatial normalisation of the EPI images to standard MNI space by the unified segmentation method (as described in Ashburner & Friston, 2005). The data were spatially smoothed using an 8 mm FWHM Gaussian kernel. For one participant, a single run was excluded from the fMRI analyses due to excessive head motion.
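The 8 mm FWHM can be converted into the standard deviation of the Gaussian kernel, which is the quantity smoothing code typically works with. The sketch below shows the conversion and a normalised 1-D kernel; the function names are hypothetical, the 2 mm voxel size in the usage line is an assumption (the resliced voxel size is not stated here), and a 3-D smooth would apply such a kernel separably along each axis.

```python
import math

def fwhm_to_sigma(fwhm_mm):
    """Gaussian SD from FWHM: FWHM = 2 * sqrt(2 ln 2) * sigma (about 2.355 * sigma)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def gaussian_kernel_1d(fwhm_mm, voxel_mm, truncate_sd=4.0):
    """Normalised 1-D Gaussian weights sampled at voxel centres."""
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    radius = int(math.ceil(truncate_sd * sigma_vox))
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

sigma_mm = fwhm_to_sigma(8.0)              # about 3.40 mm
kernel_2mm = gaussian_kernel_1d(8.0, 2.0)  # 2 mm voxels assumed
```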

Statistical analyses were performed using the general linear model and Gaussian random field theory as implemented in SPM12 (Friston et al., 1994). Each of the three functional runs was modelled separately. The design matrices included onsets and durations for the miniblock presentation format cues (the ear or eye symbols), movement parameters, and temporal and dispersion derivatives. Rest served as an implicit baseline. A high-pass filter with a 128 s cutoff was applied to remove low-frequency noise. Two separate models were used to analyse the data in each presentation format: Intelligibility (intelligible > unintelligible) and Ambiguity (Ambiguous > Unambiguous) contrast models.
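For readers less familiar with SPM, the sketch below illustrates two ingredients of such first-level models: an event regressor formed by convolving a boxcar with a double-gamma HRF, and a discrete cosine basis for 128 s high-pass filtering. It is not SPM code. The HRF shape (gamma shapes 6 and 16, undershoot ratio 1/6) and the basis-size rule reflect our understanding of SPM's defaults and should be treated as assumptions; the temporal and dispersion derivatives are omitted, and all names are hypothetical.

```python
import math
import numpy as np

def canonical_hrf(dt=0.1, length_s=32.0):
    """Double-gamma HRF (assumed SPM-like shape), normalised to unit sum."""
    t = np.arange(0.0, length_s, dt)
    response = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = response - undershoot / 6.0
    return h / h.sum()

def event_regressor(onsets_s, durations_s, n_scans, tr=3.0, dt=0.1):
    """Boxcar of events at fine time resolution, convolved with the HRF, sampled per scan."""
    n_fine = int(round(n_scans * tr / dt))
    box = np.zeros(n_fine)
    for onset, dur in zip(onsets_s, durations_s):
        start = int(round(onset / dt))
        stop = max(start + 1, int(round((onset + dur) / dt)))  # zero duration -> stick
        box[start:stop] = 1.0
    conv = np.convolve(box, canonical_hrf(dt))[:n_fine]
    scan_idx = (np.arange(n_scans) * tr / dt).round().astype(int)
    return conv[scan_idx]

def dct_highpass_basis(n_scans, tr=3.0, cutoff_s=128.0):
    """Cosine drift regressors with periods longer than about cutoff_s (constant excluded)."""
    n_basis = int(2 * (n_scans * tr) / cutoff_s + 1)
    i = np.arange(n_scans)
    cols = [np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * i + 1) * k / (2 * n_scans))
            for k in range(1, n_basis)]
    return np.column_stack(cols)

def highpass(y, basis):
    """Remove the least-squares fit of the drift basis from a time series."""
    beta, *_ = np.linalg.lstsq(basis, y, rcond=None)
    return y - basis @ beta

n_scans = 240  # hypothetical: one ~12-min run at TR = 3 s
reg = event_regressor([30.0], [3.0], n_scans)
basis = dct_highpass_basis(n_scans)
drift = np.linspace(-1.0, 1.0, n_scans)
residual = highpass(drift, basis)
```

A slow linear drift is almost entirely absorbed by the cosine basis, whereas the event regressor, which peaks a few seconds after the event, varies too quickly to be removed.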

Intelligibility contrast models

An initial model was used to investigate activation related to within-sentence processing of intelligible language in each presentation format at the whole-brain level. For each participant, sentence onset and duration for all intelligible stimuli (Ambiguous plus Unambiguous) and for the unintelligible stimuli were modelled using separate regressors for each presentation format. Ambiguous and Unambiguous stimuli were combined into a single Intelligible condition for each format because the stimuli were designed so that sentence processing did not differ between them until the sentence-terminal word. Individual-subject contrast images from the comparison of Intelligible > Unintelligible conditions were entered into three separate second-level one-sample t-test models to determine format-specific within-sentence intelligibility effects at the group level. This analysis was used to identify regions preferentially responsive to intelligible language, so that activation within language-responsive cortex could be identified in the subsequent whole-brain analyses of ambiguity effects.
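At the first level, the Intelligible > Unintelligible comparison amounts to a contrast vector over the format-specific regressors, and the second-level model is a one-sample t-test across participants. The sketch assumes a hypothetical regressor order and leaves out the cue, motion and derivative columns, which would typically receive zero weight.

```python
import numpy as np

# Hypothetical order of the sentence regressors in one run's design matrix.
REGRESSORS = ["Spoken_Intel", "Spoken_Unintel", "Written_Intel",
              "Written_Unintel", "RSVP_Intel", "RSVP_Unintel"]

def intelligibility_contrast(fmt):
    """+1 for the format's Intelligible regressor, -1 for its Unintelligible one."""
    c = np.zeros(len(REGRESSORS))
    c[REGRESSORS.index(f"{fmt}_Intel")] = 1.0
    c[REGRESSORS.index(f"{fmt}_Unintel")] = -1.0
    return c

def one_sample_t(con_values):
    """Group-level t across participants (axis 0), computed voxelwise."""
    x = np.asarray(con_values, dtype=float)
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

c_rsvp = intelligibility_contrast("RSVP")  # [0, 0, 0, 0, 1, -1]
```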

Ambiguity contrast models

The initial model was extended to investigate post-sentence activation related to ambiguity resolution in each presentation format at the whole-brain level. For each participant, in addition to the format-specific sentence onsets and durations for the combined Intelligible (i.e. Ambiguous and Unambiguous) and the Unintelligible conditions, sentence offsets for the Ambiguous and the Unambiguous sentences were added to the model as separate sets of events for each presentation format. Sentence offset was modelled explicitly because this was the point at which processing of Ambiguous and Unambiguous sentences was designed to diverge; in Ambiguous sentences, the offset of the disambiguating sentence-terminal word was assumed to trigger semantic mismatch detection and reinterpretation processes (Rodd et al., 2012; Twilley & Dixon, 2000). The addition of sentence-offset events inevitably resulted in a degree of temporal overlap between within-sentence and post-sentence neural activity. However, this model did enable the effects of ambiguity resolution on post-sentence activity to be at least partly separated from general within-sentence processing of intelligible language. In the Spoken and RSVP conditions, sentence offset corresponded to the end of stimulus presentation. In the Written condition, the reading times obtained for each stimulus in the Disambiguation post-test were used to estimate individual sentence offset times for each participant (for a small number of outlier reading times that differed by more than 1500 ms from the duration of the corresponding sentence’s audio file in the Spoken condition, the audio file duration was substituted as a proxy offset time). Individual-subject contrast images from first-level analyses were entered into second-level models to determine ambiguity effects, and interactions between ambiguity and format, at the group level.
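The offset rule can be written out explicitly. The function name and argument layout below are hypothetical; times are in milliseconds relative to run start.

```python
OUTLIER_TOLERANCE_MS = 1500

def sentence_offset_ms(fmt, onset_ms, presentation_ms, reading_time_ms=None, audio_ms=None):
    """Estimated sentence offset (onset + duration) used for the offset events."""
    if fmt in ("Spoken", "RSVP"):
        # Offset is simply the end of stimulus presentation.
        return onset_ms + presentation_ms
    if fmt == "Written":
        # Post-test reading time, unless it departs by more than 1500 ms from
        # the matching audio duration, in which case the audio duration is used.
        if abs(reading_time_ms - audio_ms) > OUTLIER_TOLERANCE_MS:
            return onset_ms + audio_ms
        return onset_ms + reading_time_ms
    raise ValueError(f"unknown format: {fmt!r}")

# A Written trial read in 3200 ms, recording 3000 ms long, shown at 10 s:
offset = sentence_offset_ms("Written", 10_000, 4600, reading_time_ms=3200, audio_ms=3000)
# offset == 13_200
```

Note that the Written offset is estimated from each participant's own post-test reading times, so it approximates, rather than measures, when in-scanner reading finished.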

Region-of-interest analyses

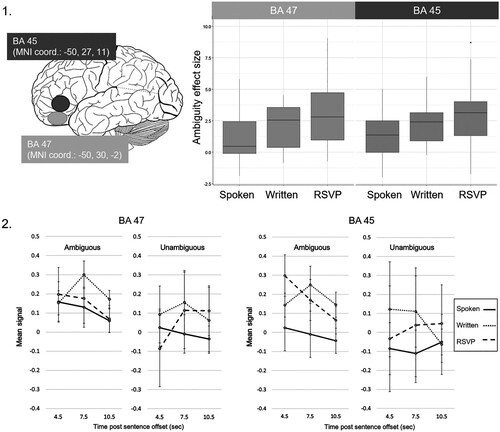

In addition to whole-brain analyses, the interaction between ambiguity and presentation format effects was explored using region-of-interest (ROI) analyses. ROI locations were determined independently of the current dataset, using activation peaks from previous studies that contrasted sentence-offset activity for subordinate-constrained ambiguous sentences and unambiguous control sentences presented in auditory format (Rodd et al., 2013) and presented phrase by phrase in visual format (Zempleni et al., 2007). Peaks from these two studies were used to create ROIs in the present study only if they were also present in our intelligible language contrasts. This procedure ensured that ROIs in the current study were located in regions that were likely to be responsive to meaningful language stimuli in our sample (based on their presence in the group-level intelligible > unintelligible contrast), and that had also previously been found to be responsive to manipulations of semantic ambiguity (although see Poldrack, 2006, on the difficulties of reverse inference from anatomical location to cognitive function in the absence of participant-specific functional localisers). Only two peaks fulfilled these selection criteria, both located within left IFG: a more anterior and ventral peak within BA 47 (MNI coordinates [−50, 30, −2]), and a more posterior and dorsal peak within BA 45 ([−50, 27, 11]). The MarsBar toolbox for SPM12 (Brett, Anton, Valabregue, & Poline, 2002) was then used to create spherical 8 mm-radius ROIs centred on each of these peaks, and individual-participant mean differences in sentence-offset responses to Ambiguous versus Unambiguous stimuli were extracted from each ROI for each presentation format.
The resulting difference scores were entered into a one-way ANOVA (JASP v.0.9.2; JASP Team, 2018) to identify effects of presentation format on the difference scores; significant effects were examined further by means of paired-samples t-tests.
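The ROI construction can be illustrated with plain NumPy: a boolean mask of voxels whose centres lie within 8 mm of a peak, followed by a mean over that mask. This is a sketch of the idea, not MarsBar's implementation; the function names are hypothetical, and the 2 mm MNI grid (affine and dimensions) is an assumed, commonly used template geometry.

```python
import numpy as np

# Assumed 2 mm MNI152-style grid (91 x 109 x 91 voxels).
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
MNI_2MM_SHAPE = (91, 109, 91)

def sphere_mask(shape, affine, center_mm, radius_mm=8.0):
    """True for voxels whose centres lie within radius_mm of center_mm (world mm)."""
    ijk = np.indices(shape).reshape(3, -1)
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (affine @ homogeneous)[:3]
    dist = np.linalg.norm(xyz - np.asarray(center_mm, dtype=float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)

def roi_mean(contrast_volume, mask):
    """Mean of a contrast image within the ROI, ignoring NaNs outside the brain."""
    return float(np.nanmean(contrast_volume[mask]))

ba47_mask = sphere_mask(MNI_2MM_SHAPE, MNI_2MM_AFFINE, (-50, 30, -2))
```

On this grid the BA 47 peak falls exactly on a voxel centre, and the 8 mm sphere contains 257 voxels; on a coarser grid the same radius would include fewer voxels.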

Results

Behavioural results

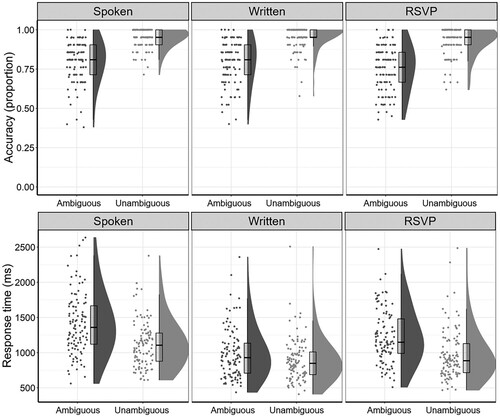

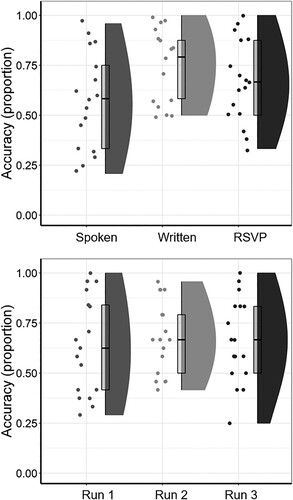

The mean proportion of correct responses for Ambiguous sentences in the Recognition Memory test indicated adequate levels of attention during scanning (overall M = 0.66, SD = 0.2, range 0.36–0.94). Detailed results from model comparisons can be found in supplemental materials. Comparisons between scanning runs demonstrated no significant effect of the interval between scanning and testing on Recognition Memory (Run 2 and Run 3 > Run 1: β = −0.15, SE = 0.17, z = −0.92; Run 2 > Run 3: β = 0.05, SE = 0.17, z = 0.31; model comparison: χ²(2) = 0.87, p = .644; the maximal model did not account for correlations between random intercepts and slopes by subjects). One-sample t-tests demonstrated that participants’ Recognition Memory accuracy for sentences in the Spoken (M = 0.57, SD = 0.24), Written (M = 0.74, SD = 0.18) and RSVP (M = 0.67, SD = 0.21) conditions was significantly above the chance level of 0.5 (Spoken: t(407) = 3, p = .003; Written: t(407) = 10.9, p < .001; RSVP: t(407) = 7.13, p < .001; all evaluated against a Bonferroni-corrected α-level of .017), as can also be seen in . There was, however, a significant main effect of in-scanner presentation format on participants’ ability to recognise Ambiguous scanning stimuli during the Recognition Memory test (see ), suggesting that memory for the Spoken condition was comparatively poor (Spoken and Written > RSVP: β = 0.04, SE = 0.14, z = 0.28; Spoken > Written: β = −0.9, SE = 0.17, z = −5.39; model comparison: χ²(2) = 29.94, p < .001; the maximal model was an intercept-only model).

Figure 2. Accuracy rates in the post-scan Recognition Memory test. Boxplots show median and interquartile range of accuracy scores by scanning run (top panel) and presentation format (bottom panel).

The mean proportion of correct responses to Ambiguous stimuli in the post-scan Explicit Disambiguation test demonstrated that participants were generally able to disambiguate the Ambiguous sentences used in the experiment (overall M = 0.91, SD = 0.11, range 0.56–1.00), although the Disambiguation task could not distinguish between successful immediate disambiguation at the time of first stimulus presentation during scanning and delayed disambiguation at the time of second stimulus presentation during the post-scan task. As shown in , significant effects of Ambiguity were observed on disambiguation accuracy (β = 1.91, SE = 0.77, z = 2.47; model comparison: χ²(1) = 7.72, p = .006) and on sentence reading times of correct trials (β = −0.02, SE = 0.01, t = −2.27; model comparison: χ²(1) = 4.83, p = .028). Detailed results from model comparisons can be found in supplemental materials. Ambiguous sentences were associated with significantly lower accuracy (M = 0.91, SD = 0.28) and longer reading times (M = 2.92 s, SD = 1.07 s) than Unambiguous sentences (accuracy: M = 0.99, SD = 0.11; reading time: M = 2.77 s, SD = 1.21 s). No significant effects of scanning presentation format or run on disambiguation accuracy (Ambiguous trials only) were observed (Spoken and Written > RSVP: β = 0.12, SE = 0.26, z = 0.46; Spoken > Written: β = −0.22, SE = 0.3, z = −0.72; model comparison: χ²(2) = 0.15, p = .927; Run 2 and Run 3 > Run 1: β = −0.25, SE = 0.19, z = −1.29; Run 2 > Run 3: β = −0.002, SE = 0.24, z = 0.01; model comparison: χ²(2) = 1.51, p = .471; maximal models were intercept-only models; see supplemental materials for detailed results from these model comparisons).
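The model comparisons reported above appear to be likelihood-ratio tests of nested models, in which twice the log-likelihood difference is referred to a χ² distribution with degrees of freedom equal to the number of dropped parameters. A dependency-free sketch of that computation follows; the function names are hypothetical. Applied to the reported statistics, it recovers the reported p-values up to rounding of the χ² values (e.g. χ²(1) = 4.83 gives p ≈ .028).

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability of a chi-square distribution with integer df."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    # Start from the closed forms for df = 2 (even) or df = 1 (odd) ...
    if df % 2 == 0:
        q, k = math.exp(-half), 2
    else:
        q, k = math.erfc(math.sqrt(half)), 1
    # ... then step up with Q(k + 2) = Q(k) + (x/2)^(k/2) e^(-x/2) / Gamma(k/2 + 1).
    while k < df:
        q += half ** (k / 2.0) * math.exp(-half) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return q

def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Return (chi-square statistic, p-value) for two nested models."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)

p_rt = chi2_sf(4.83, 1)       # reading-time Ambiguity effect
p_run = chi2_sf(1.51, 2)      # run effect on disambiguation accuracy
```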

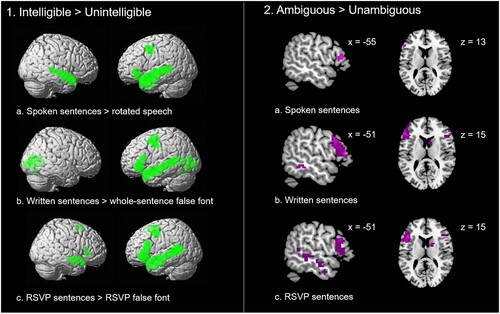

Whole-brain analyses

Panel 1 in shows the results of the basic contrast of intelligible > unintelligible stimuli. In the Spoken condition, haemodynamic responses to intelligible speech were contrasted with responses to spectrally rotated speech, revealing activations in a fronto-temporal network of regions usually associated with speech comprehension (see Price, 2012, and Tyler & Marslen-Wilson, 2008, for reviews). In both written conditions, responses to meaningful written sentences were compared with responses to false-font stimuli. Processing of meaningful visual language stimuli was likewise associated with activations in a fronto-temporal network, consistent with previous studies (e.g. Price, 2012; Tyler & Marslen-Wilson, 2008). Peaks from this contrast can be found in supplemental materials.

Figure 4. Results of the whole-brain analyses. Brain activation in the contrast of intelligible > unintelligible sentences (panel 1), and ambiguous > unambiguous sentences (panel 2) in each of the presentation formats.

Panel 2 in illustrates the contrast of Ambiguous > Unambiguous sentences in each presentation format. Ambiguity effects were found in each format (peaks can be found in supplemental materials). The locations of ambiguity-related activations were similar to those observed in previous studies of semantic ambiguity resolution in the auditory format (e.g. Rodd et al., 2005; Rodd et al., 2012) and in the visual format (e.g. Zempleni et al., 2007). Ambiguity effects were present in left frontal areas in all three presentation formats.

Region-of-interest results

The location of the two left IFG ROIs within BA 47 and BA 45 is illustrated on the left in panel 1 of . A 3 (Format) × 2 (Region) ANOVA comparing the ambiguity-related activation [Ambiguous > Unambiguous] in the three presentation formats across the two ROIs revealed a significant main effect of Format on the ambiguity effects, F(2,32) = 4.23, p = .023, η² = 0.21. Neither the main effect of Region, F(1,16) = 0.02, p = .901, η² = 0.001, nor the Format × Region interaction, F(2,32) = 2.32, p = .114, η² = 0.13, was statistically significant.
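The η² values reported for these ANOVAs coincide, to the reported precision, with partial η² recovered from each F ratio and its degrees of freedom, η²p = F·df_effect / (F·df_effect + df_error). The short check below (hypothetical names) makes the arithmetic explicit.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)

eta_format = partial_eta_squared(4.23, 2, 32)       # reported 0.21
eta_region = partial_eta_squared(0.02, 1, 16)       # reported 0.001
eta_interaction = partial_eta_squared(2.32, 2, 32)  # reported 0.13
```

The identity holds because F·df_effect equals SS_effect divided by the error mean square, so the ratio reduces to SS_effect / (SS_effect + SS_error).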

Figure 5. Results of the ROI analyses. Panel 1. Illustration of the approximate location of the BA 45 and BA 47 ROIs in the left IFG (left). Boxplots showing median and interquartile range of ambiguity effects (Ambiguous > Unambiguous contrast) in each of the presentation formats, and ROIs (right). Panel 2. Peri-stimulus time histograms of mean BOLD signal extracted from BA 47 (left) and BA 45 (right).

Although the results of this ANOVA did not provide evidence of distinct ambiguity response profiles in the two IFG subregion ROIs, in view of the distinct language processing roles previously attributed to these regions (see e.g. Binder et al., 2009; Thompson-Schill et al., 1997), it was of theoretical interest to examine the effects of Format on ambiguity responses in each ROI separately. Therefore, a 1 × 3 ANOVA comparing the difference in activation level in response to Ambiguous versus Unambiguous sentences across formats was conducted for each ROI, and followed up with individual t-tests. In the more anterior BA 47, there was a significant main effect of Format on the observed ambiguity effects (Ambiguous > Unambiguous contrast), F(2,32) = 4.59, p = .018, η² = 0.22. One-sample t-tests demonstrated large ambiguity effects significantly greater than zero in the Written (t(16) = 3.64, p = .002, Cohen’s d = 0.88) and RSVP conditions (t(16) = 4.66, p < .001, Cohen’s d = 1.13), but a non-significant effect in the Spoken condition (t(16) = 2.09, p = .053, Cohen’s d = 0.51). Post-hoc paired-samples t-tests comparing the ambiguity effects between the presentation formats revealed that ambiguity effects in the RSVP format were significantly larger than those in the Spoken condition (t(16) = 2.73, p = .015, Cohen’s d = 0.66) and those in the Written condition (t(16) = 2.18, p = .044, Cohen’s d = 0.53; see ). The magnitude of ambiguity effects did not differ significantly between the Spoken and Written conditions (t(16) = −0.79, p = .441, Cohen’s d = 0.19). Inspection of peri-stimulus time histograms extracted from the BA 47 region for each Ambiguous and Unambiguous condition suggested that, for the Written and RSVP conditions, the ambiguity effect reflected a change from a low level of activity in this region in the Unambiguous conditions to a higher level of activity in the Ambiguous conditions (see panel 2 in ).

In the more posterior BA 45, the main effect of presentation format on the observed ambiguity effects was not statistically significant, F(2,32) = 3.11, p = .058, η² = 0.16. One-sample t-tests demonstrated ambiguity effects significantly greater than zero in all three presentation formats (Spoken: t(16) = 2.84, p = .012, Cohen’s d = 0.69; Written: t(16) = 5.78, p < .001, Cohen’s d = 1.4; RSVP: t(16) = 4.34, p < .001, Cohen’s d = 1.05). As in BA 47, post-hoc pairwise comparisons between presentation formats using paired-samples t-tests demonstrated significantly larger ambiguity effects in the RSVP condition than in the Spoken condition, t(16) = 3.06, p = .008, Cohen’s d = 0.74. However, the magnitude of ambiguity effects in the Written condition, though numerically intermediate between the effects observed in the other two conditions, did not differ significantly from either of them (Written vs. RSVP: t(16) = −0.64, p = .532, Cohen’s d = 0.16; Written vs. Spoken: t(16) = 1.78, p = .094, Cohen’s d = 0.43). Inspection of peri-stimulus time histograms extracted from the BA 45 region for each Ambiguous and Unambiguous condition suggested that, for the Written and RSVP conditions, the ambiguity effect reflected a change from a low level of activity in this region in the Unambiguous conditions to a higher level of activity in the Ambiguous conditions. In the Spoken condition, the ambiguity effect appeared to reflect an increase from relative underactivity in the Unambiguous condition.
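The per-ROI statistics can be sketched as follows: a one-way repeated-measures ANOVA across the three formats, and Cohen's d for one-sample and paired comparisons. The Cohen's d values reported in this section all equal t/√17 to two decimals, i.e. they correspond to d_z (mean difference divided by the SD of the differences). The ANOVA below applies no sphericity correction and runs on synthetic data; it is an illustrative sketch with hypothetical names, not the JASP implementation.

```python
import numpy as np

def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA on an (n_subjects, k_conditions) array.

    Returns F, df_effect, df_error and partial eta squared (no sphericity correction).
    """
    x = np.asarray(scores, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)
    ss_error = np.sum((x - grand) ** 2) - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error, ss_cond / (ss_cond + ss_error)

def cohens_dz(values):
    """Standardised mean of one-sample values or paired differences (d_z)."""
    v = np.asarray(values, dtype=float)
    return v.mean() / v.std(ddof=1)

def dz_from_t(t, n):
    """d_z from a one-sample or paired t statistic: d_z = t / sqrt(n)."""
    return t / np.sqrt(n)

rng = np.random.default_rng(0)
scores = rng.normal(size=(17, 3))  # synthetic: 17 participants x 3 formats
f, df1, df2, eta_p = rm_anova_oneway(scores)
```

With 17 participants and 3 formats this gives the F(2,32) degrees of freedom reported above; for example, dz_from_t(3.64, 17) ≈ 0.88, matching the Written effect in BA 47.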

Discussion

The aim of Experiment 2 was to investigate the effects of presentation format-specific processing demands on higher-order neural language comprehension processes. Results from the post-scan Disambiguation test demonstrated that participants were generally able to disambiguate the sentences and had access to the relevant vocabulary to do so. The Disambiguation task confirmed the finding from Experiment 1 that sentences requiring ambiguity resolution are more difficult to process than sentences that do not contain ambiguities: even with prior exposure during scanning, participants were less accurate in selecting the appropriate meaning for the ambiguous key noun than for the equivalent unambiguous noun in the control sentences. These results are in line with the striking findings that have led to the formulation of the “good enough” framework of language processing (see e.g. Christianson et al., 2001; Ferreira & Patson, 2007).

The fMRI results revealed ambiguity effects on haemodynamic responses in the Spoken, whole-sentence Written, and RSVP formats that were consistent with previous studies (e.g. Braze et al., 2011; Hoenig & Scheef, 2009; Rodd et al., 2012; Rodd et al., 2005; Vitello, 2014; Zhu et al., 2012). Importantly, these analyses showed that the magnitude of ambiguity-related processing costs in a key language region, left IFG, was modulated by the format in which sentences were presented. We observed that ambiguity effects in an anterior (BA 47) and a more posterior (BA 45) subregion of left IFG were significantly increased when the sentences were presented in the less naturalistic and more cognitively demanding word-by-word RSVP format.

General discussion

The present study investigated whether the processing demands of presentation formats typically used in psycholinguistic and neurolinguistic research affect higher-level semantic processes during language comprehension. In separate behavioural and neuroimaging experiments, processing of sentences requiring resolution of lexical-semantic ambiguity was compared to comprehension of unambiguous sentences in three commonly used presentation formats (Spoken, whole-sentence Written, and RSVP). In both experiments, sentence stimuli for the ambiguous conditions were structured around late disambiguation to the subordinate meaning of an ambiguous key noun, so that successful comprehension depended primarily on reinterpretation of the sentence after encountering the sentence-terminal disambiguating word. We investigated interactions between presentation format and the expected reinterpretation-related costs, both in behavioural measures during a meaning coherence judgement task and in haemodynamic responses in ambiguity-responsive left IFG regions during attentive comprehension.

In line with previous studies of ambiguity resolution, reinterpretation-related processing costs were consistently found in all three presentation formats (Spoken, Written and RSVP), at both the behavioural and the neural level (Vitello & Rodd, 2015). Explicit ambiguity resolution during a behavioural task (Experiment 1) was associated with significantly lower accuracy and longer response times compared to matched unambiguous sentences in all three formats. Implicit comprehension of sentences containing semantic ambiguities (Experiment 2) was associated with significantly greater haemodynamic responses in an anterior left IFG region (BA47) and a more posterior left IFG region (BA45) in all presentation formats, with the exception of the Spoken format in BA47, where the effect did not reach significance (p = .053, Cohen’s d = 0.51); post-scan behavioural testing confirmed the same pattern of ambiguity-related effects on sentence comprehension accuracy and speed. It is worth noting that although all of our sentence stimuli were designed to disambiguate an ambiguous word towards an unexpected meaning (thereby triggering reinterpretation processes), we did not tightly control the degree to which our ambiguous key words were biased towards a single, dominant meaning. Extensive piloting suggested that our stimulus set included a mix of more and less strongly biased ambiguous words. It is therefore possible that, for sentences with less strongly biased ambiguous words, participants resolved the ambiguity during a first pass through the sentence (eliminating the need for reinterpretation) simply because the targeted “subordinate” meaning was more generally accessible (for such effects of word-meaning bias in eye-tracking studies see e.g. Duffy et al., 1988; Sereno & Rayner, 1993).
Comparisons between our ambiguous and unambiguous conditions therefore likely yielded a relatively conservative estimate of the true costs of reinterpretation. Future studies of reinterpretation-related processing costs may increase statistical power by selecting only strongly biased ambiguous words, for example using the large-scale word-meaning dominance norms that have recently become available (e.g. Gilbert & Rodd, 2022, for British English).
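One way to implement the stimulus-selection step suggested above is to filter candidate ambiguous words by a dominance threshold before constructing sentences. The Python sketch below is illustrative only: the `AmbiguousWord` record, its `dominance` field (assumed to be the proportion of norming responses reflecting the most frequent meaning), the 0.8 cut-off and the placeholder items are all hypothetical, and they do not reflect the actual format or content of Gilbert and Rodd's (2022) norms.

```python
from dataclasses import dataclass


@dataclass
class AmbiguousWord:
    word: str
    # Hypothetical measure: proportion of norming responses (0 to 1) that
    # reflect the word's most frequent (dominant) meaning.
    dominance: float


def select_strongly_biased(words, threshold=0.8):
    """Keep only words whose dominant meaning reaches the (hypothetical) threshold."""
    return [w for w in words if w.dominance >= threshold]


# Placeholder items with invented dominance values, for illustration only.
candidates = [
    AmbiguousWord("item_a", 0.85),
    AmbiguousWord("item_b", 0.55),
    AmbiguousWord("item_c", 0.92),
]

selected = select_strongly_biased(candidates)
print([w.word for w in selected])  # ['item_a', 'item_c']
```

A stricter threshold would give a cleaner test of reinterpretation at the cost of a smaller pool of usable items; the appropriate cut-off is a design decision not specified here.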

Our key research question, beyond replicating previously observed reinterpretation-related processing costs, was whether the format in which linguistic information is encountered can modulate such higher-level semantic processes. In both experiments, the magnitude of reinterpretation-related processing costs varied significantly between presentation formats. In Experiment 1, the ambiguity-related cost to processing speed (though not accuracy) during explicit sentence comprehension was greater for both the RSVP and the Spoken formats than for the whole-sentence Written format. In Experiment 2, the ambiguity-related increase in neural activity during implicit sentence comprehension was greater for the RSVP format than for either of the other two formats within BA47, and greater for RSVP than for Spoken within BA45.
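The format comparisons described above are interaction effects: the question is whether the ambiguity effect (Ambiguous minus Unambiguous) differs between two formats. The Python sketch below computes this difference of differences per participant and tests it with a one-sample t statistic on the difference scores. It is an illustration under stated assumptions, not a reproduction of the analyses reported here: the response times are invented, and the statistical models used in the present study may differ.

```python
from math import sqrt
from statistics import mean, stdev


def ambiguity_effect(ambiguous, unambiguous):
    """Per-participant ambiguity effect: Ambiguous minus Unambiguous."""
    return [a - u for a, u in zip(ambiguous, unambiguous)]


def interaction_scores(effect_format_1, effect_format_2):
    """Per-participant difference between two formats' ambiguity effects."""
    return [e1 - e2 for e1, e2 in zip(effect_format_1, effect_format_2)]


def one_sample_t(scores):
    """t statistic testing whether the mean difference score differs from zero."""
    return mean(scores) / (stdev(scores) / sqrt(len(scores)))


# Invented mean response times (ms) for four hypothetical participants.
rsvp_amb = [1450, 1520, 1390, 1610]
rsvp_unamb = [1200, 1260, 1180, 1300]
written_amb = [1250, 1300, 1210, 1380]
written_unamb = [1150, 1190, 1120, 1250]

rsvp_effect = ambiguity_effect(rsvp_amb, rsvp_unamb)           # [250, 260, 210, 310]
written_effect = ambiguity_effect(written_amb, written_unamb)  # [100, 110, 90, 130]
interaction = interaction_scores(rsvp_effect, written_effect)  # [150, 150, 120, 180]

print(mean(interaction), round(one_sample_t(interaction), 2))
```

A positive mean interaction score indicates a larger ambiguity cost in the first format (here RSVP) than in the second. With real data, the same contrast would usually be estimated within a repeated-measures ANOVA or mixed-effects model rather than with this bare t statistic.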

The functional organisation of left IFG remains controversial, with opinion divided between domain-specific models (e.g. distinctions between phonology, syntax and semantics; see Friederici, 2012; Grodzinsky, 2000) and process-specific models (e.g. top-down control processes related to conflict resolution or more general executive functions; see Bookheimer, 2002; Curtis & D’Esposito, 2003; Kaan & Swaab, 2003; Nozari & Thompson-Schill, 2016; Postle, 2006; Thompson-Schill et al., 1997) of left IFG function. Although the two left IFG regions showed somewhat different patterns of interaction between presentation format and ambiguity, the absence of a significant interaction with region meant that their functional profiles could not be clearly differentiated.

Although relative differences between formats were not identical in the behavioural and neuroimaging experiments, the results from both experiments indicate that the less naturalistic RSVP format had the greatest impact on sentence comprehension in general, and on semantic ambiguity resolution in particular. In Experiment 1, the RSVP format was associated with lower accuracy for all sentence types (ambiguous and unambiguous) compared to the other presentation formats. This is consistent with previous work demonstrating that word-by-word presentation renders comprehension more difficult or effortful during explicit and implicit sentence comprehension (e.g. Masson, 1983; Schotter et al., 2014; Wlotko & Federmeier, 2015). The RSVP format also produced significantly larger ambiguity-related processing costs than conventional whole-sentence Written presentation, both in behavioural response times in Experiment 1 and in neural activity within BA47 in Experiment 2.

Unlike whole-sentence Written presentation, the RSVP format precludes the use of visual input as an external working-memory buffer (Lee & Newman, 2009) and prevents overt re-reading as a means to aid reinterpretation of ambiguous sentences. Eye-tracking research has shown that readers tend to make eye movements towards earlier portions of a sentence when faced with processing difficulties (e.g. Frazier & Rayner, 1982; Paape & Vasishth, 2022). Increased backward-directed eye movements have been found in particular for stimuli in which readers encounter unexpected information that disambiguates an earlier ambiguous sentence element, suggesting that physical re-reading may play an important role in successful recovery from misinterpretations during reading (e.g. Blott et al., 2021; Jacob & Felser, 2016; Pickering & Traxler, 1998; Slattery et al., 2013). Resolving semantic ambiguity in the RSVP format may therefore place particular strain on working memory, as memory externalisation (i.e. relying on physical re-reading) is not possible. In addition, typical adult readers have little, if any, prior practice with or exposure to the RSVP format, whereas whole-sentence reading is an everyday skill they have practised since childhood. It would therefore be surprising if the unfamiliar RSVP format did not place additional cognitive demands on the reader. We argue that, compared to whole-sentence reading, the RSVP format places greater demands on working memory (likely involving both storage and processing components), not only during sentence reading itself, but also during reinterpretation to resolve semantic ambiguity.
The increased ambiguity-related behavioural and neural processing costs observed in the present study when visual sentences were presented word-by-word rather than as whole sentences may therefore reflect the enhanced impact of format-related working memory and executive control demands on sentences that require reinterpretation to achieve successful comprehension.

In addition to working memory demands, other format-related differences in cognitive aspects of sentence comprehension may have contributed to the increased ambiguity costs observed with RSVP presentation. The ambiguous sentences used in the present study incorporated considerable structural and temporal distance between the ambiguous noun and the disambiguating sentence-terminal word, and may thus have encouraged “digging-in” effects (see e.g. Hagoort, 2003; Metzner et al., 2017). Such effects occur when comprehenders become increasingly committed to their initial analysis and find it harder to re-analyse the sentence (Tabor & Hutchins, 2004). When words are presented sequentially, digging-in effects may be exaggerated relative to whole-sentence reading (Magliano et al., 1993). Moreover, the absence in RSVP of mechanisms available during natural reading, such as parafoveal preview and regressive eye movements to earlier parts of the sentence, could exaggerate sentence wrap-up effects at the offset of Ambiguous sentences. In the present study, reinterpretation could only occur after the disambiguating sentence-terminal word; to capture this reinterpretation process, behavioural response times (in Experiment 1) and neural activation (in Experiment 2) were recorded from sentence offset, meaning that measures of disambiguation coincided with post-sentence wrap-up. The significant increase in ambiguity effects in the RSVP format compared to naturalistic whole-sentence reading might therefore reflect an interaction between format-related post-sentence wrap-up effects and the specific demands for reinterpretation posed by late-disambiguating sentences.
In the case of the increased ambiguity-related neural activity observed in BA47 with RSVP, this explanation fits particularly well with Hagoort's (2005) account of left IFG function, which views anterior left IFG as fundamental to binding together word meanings and forming unified representations of sentence meaning.

Although the comparisons between RSVP and whole-sentence Written formats support the idea that format-induced cognitive differences can influence the processing costs of ambiguity resolution, comparisons between the RSVP and Spoken formats suggest that task-related factors can also interact with format effects. RSVP mimics the transient nature of speech. If the cognitive demands of dynamic stimuli were the primary determinant of processing costs, RSVP sentences might therefore be expected to show a response pattern similar to that of Spoken sentences. This was indeed what we observed for the behavioural costs of sentence reinterpretation during the explicit comprehension task in Experiment 1, where ambiguity-related effects did not differ significantly between these two formats. However, during implicit sentence comprehension in Experiment 2, the two formats affected ambiguity-related neural activation in both left IFG ROIs differently: compared to RSVP, the Spoken format was associated with significantly smaller ambiguity-related increases in haemodynamic responses in BA45 and BA47. This finding may reflect our use of continuous rather than sparse MRI sequences, chosen to enable modelling of haemodynamic responses time-locked specifically to the encounter of disambiguating information; the presence of scanner noise may have systematically affected the comprehensibility of the Spoken condition, minimising the difference in haemodynamic responses to ambiguous and unambiguous sentences. However, each participant’s ability to hear and understand the auditory sentence stimuli clearly over scanner noise was checked prior to data acquisition, and Recognition Memory Test accuracy for Spoken sentences was significantly above chance.
The discrepancy between RSVP and Spoken format effects across the two comprehension paradigms (explicit vs implicit) may also have a more theoretically interesting, cognitive explanation. When comprehension difficulties are encountered in the absence of a task context or objective that encourages engagement with comprehension, comprehenders may tend to adopt “good-enough” sentence processing strategies (e.g. Christianson et al., 2001; Ferreira, 2003; Ferreira & Patson, 2007), whereby sentence processing remains shallow or superficial, without representations of sentence meaning being fully specified or ambiguities fully resolved.

Greater reliance on good-enough processing during listening could explain the relatively reduced ambiguity effects found in the Spoken condition. It has previously been argued that such underspecification of sentence-level meaning representations may be more likely during listening than during reading because of the time pressure imposed by transient speech stimuli (Sanford & Sturt, 2002). Although there was no explicit task during scanning to impose time pressure on comprehension, the pacing of stimulus delivery effectively constrained the time participants could devote to comprehending one sentence before the next was presented. Superficial, underspecified processing of ambiguous sentences would be expected to reduce engagement in sentence reinterpretation, limiting the additional neural activation observed in response to ambiguous sentences. This was the response pattern we observed for the Spoken format in the BA47 ROI, where the difference in activation between Ambiguous and Unambiguous Spoken sentences was only of borderline significance. The same conditions of stimulus transience and time-limited processing should also apply to RSVP sentences, so underspecified processing of sentence meaning, and its consequences for neural activation, might also be expected for RSVP Ambiguous sentences. However, the ambiguity-related increase in activation observed in Experiment 2 was significantly larger for the RSVP format than for the Spoken format in both left IFG ROIs, suggesting much greater engagement with processing Ambiguous RSVP sentences.
It seems plausible that factors such as the relative unfamiliarity or artificiality of RSVP sentence presentation, the increased working memory demands of serial word-by-word presentation, or the relative salience of seeing the unexpected disambiguating word in isolation at the end of the RSVP word sequence may have been sufficient to drive more active engagement in comprehension of ambiguous sentences beyond “good-enough” processing, even in the absence of an explicit task, in a way that the more familiar and less attentionally engaging Spoken format did not. Taken together, the results from both experiments suggest that ambiguity-related processing costs may vary not only with format-related differences in cognitive demands during sentence processing (e.g. working memory load, executive control demands), but also with the extent to which task characteristics (such as explicit vs implicit comprehension) interact with presentation format to encourage or hamper full specification of sentence meaning.