ABSTRACT

In unrelated sign languages event structure is reflected in the dynamic form of verbs, and hearing non-signers are known to be able to recognise these visual event structures. This study assessed the time course of neural processing mechanisms in non-signers to examine the pathways for incorporation of physical-perceptual motion features into the linguistic system. In an EEG study, hearing non-signers classified telic/atelic verb signs (two-choice lexical decision task). The ERP effects reflect differences in perceptual processing of verb types (early anterior ERP effects) and integration of perceptual and linguistic processing required by the task (later posterior ERP effects). Non-signers appear to segment signed input into discrete events as they try to map the sign to a linguistic concept. This might indicate the potential pathway for co-optation of perceptual features into the linguistic structure of sign languages.

Introduction

In the course of human evolution, the ability to identify and interpret discrete events in the fluidly changing environment was one of the most critical functions of cognition. As humans developed the ability to communicate using language, information about actions (their structure, temporal parameters, and participants) took a central role in linguistic communication in the form of verbs and their linguistic features. Verbs and their arguments are central to any communicative message, and consistencies in their relationships form the core of linguistic patterns across languages (Evans & Levinson, 2009; Greenberg, 1963). Every sentence in linguistic communication is centred on transmitting information about an action or an event, that is, predication. The verb and its arguments that form the core of every sentence can describe an event in two ways: as having an inherent boundary, or an endpoint (e.g. verbs such as drop or arrive), or as not inherently bounded or limited (e.g. verbs such as sleep or walk). Those that describe events with an inherent endpoint are called telic; those that describe events that are not bounded are termed atelic (Pustejovsky, 1991; Tenny, 1994; Vendler, 1967; Verkuyl, 1972). Development of appropriate use of verb markings for tense and finiteness (telicity) is one of the most challenging stages of language acquisition, especially for children with Developmental Language Disorder (also known as specific language impairment; Eyer et al., 2002; Rice, 2003; Schwartz & Leonard, 1984; Sheng & McGregor, 2010). Prior research suggests that from the standpoint of neural computations, language and action processing overlap substantially (Blumenthal-Dramé & Malaia, 2019); thus, understanding how action processing feeds into language processing can be groundbreaking in terms of modelling language disorders, identifying them early, and developing therapies.
The hypothesis that language builds on general, non-linguistic abilities – such as the ability to identify, parse, and interpret actions – has not been conclusively tested in spoken languages, as they differ from action in modality (auditory vs. visual). Sign languages, on the other hand, allow investigating the processes of action comprehension and language understanding within a single (visual) modality and further evaluating the relationship between action comprehension and language understanding at various processing stages, from sensory perception to higher cognition.

In sign language verbs, event structure is often perceptually reflected in the form of the signs, that is, the hand articulator motion dynamics. Previous work has indicated that hearing non-signers infer aspectual meaning from visually presented signs and can identify the boundedness/telicity parameter of never-before-encountered sign language verbs (Kuhn et al., 2021; Strickland et al., 2015). However, the mechanisms of skill transfer between action and language processing are not well understood. What neural mechanisms do non-signers rely on when they correctly classify the telicity of signed verbs? To examine this question, we presented hearing non-signers with the task of perceiving and sorting verb signs in unknown sign languages while recording their ERPs to these stimuli.

Sign languages and event visibility

Sign languages are produced in the three-dimensional signing space by manual (hand and arm) and non-manual means (e.g. position of eyebrows, head, upper body). Sign languages are natural languages, with complex hierarchical constructs at all levels of linguistic analysis, from kinematically-based phonology to syntax. Since about the 1980s, psycholinguistic and neuroimaging studies have shown that sign languages recruit a left-hemispheric fronto-temporal network in the brain, similar to that responsible for spoken language processing (for an overview see e.g. Cardin et al., 2020; Corina & Lawyer, 2019; Emmorey, 2021; Gutiérrez-Sigut & Baus, 2021; Hickok & Bellugi, 2010). However, the visual modality does impact the structure of sign languages in specific ways. For example, while spoken languages also employ some “sound symbolic” words (the phenomenon termed onomatopoeia, where for example the spoken German word “Miau” is used to refer to a cat), sign languages in general demonstrate a higher degree of linguistically-encoded iconicity (i.e. a strong identifiable relationship between visual form and meaning) across a variety of unrelated language families (cf. Wilbur, 2008).

Klima and Bellugi (1979) described the rich repertoire of grammatical aspectual marking in American Sign Language (ASL), with subsequent work decomposing the movement components for different event types (Wilbur, Klima & Bellugi, 1983; Wilbur, 2005, 2009). Similar verbal modulations for grammatical aspect marking have been described for a number of other sign languages.Footnote1 Wilbur (2003) further observed that ASL lexical verbs can be analyzed as telic and atelic based on their phonological form, with telic verbs having a more rapid deceleration to the place of articulation at the end of the sign reflecting semantic end-state of affected arguments.Footnote2 The observation that semantic verb classes are characterised by certain movement profiles was formulated as the Event Visibility Hypothesis (EVH; Wilbur, 2008). Empirical evidence for the EVH came from motion capture research, which indicated systematic kinematic distinctions between telic and atelic predicates, whereby the endpoint of the event in telic signs is marked by a higher peak velocity and significantly faster deceleration at the end in contrast to atelic signs (Krebs et al., 2021; Malaia et al., 2008; Malaia & Wilbur, 2012; Wilbur et al., 2012).

Homologies between event representations in language and action

Humans rely on dynamic features of visual motion for perceptual segmentation of the visual and linguistic signal, as well as for gesturally expressing event boundaries. Multiple studies have shown that reality is segmented into events at multiple scales simultaneously (Zacks, Braver, et al., 2001; Zacks, Tversky, et al., 2001). Such event segmentation studies typically ask participants to watch a video with a dynamic scene and indicate time-points at which the participants think an action is completed; participants can do so at fine-grained and coarse-grained boundaries. Across cohorts, participants show remarkable agreement in identifying the timing boundaries of both coarse and fine-grained events, either in realistic scenarios (familiar scenes, e.g. how one folds laundry), or in abstract moving-dot experiments (Kurby & Zacks, 2008; Speer et al., 2007; Zacks, Braver, et al., 2001). Further, humans appear to be sensitive to the internal temporal profile of events, which helps them differentiate between unbounded and bounded events (Ji & Papafragou, 2020). For instance, Ji and Papafragou (2020) used a non-linguistic category identification task, in which participants were presented with pairs of bounded and unbounded events, and had to extract a generalisation about one member of these pairs. Participants built assumptions about event boundaries even when the boundary itself was obscured, indicating that viewers are not only sensitive to event boundedness, but also distinguish it from event completion. The viewers could identify bounded and unbounded events even when linguistic encoding was suppressed by a secondary task of counting numbers (Ji & Papafragou, 2020).

The ability to identify, hierarchically structure, and remember segmented portions of the signal appears to be transferable between action and linguistic domains. Strickland et al. (2015) provided an example of action-to-language processing transfer, showing that non-signers are capable of identifying telic/atelic semantics of unknown sign language verbs in the absence of any prior exposure to a sign language. Non-signers, who were shown videos of sign language verbs differing in event structure and resulting motion signatures, were asked to select the likely meaning of the observed sign from two English verbs. Participants accurately inferred lexical aspectual meaning from visual stimuli, distinguishing between atelic and telic signs with unknown meaning. The fact that non-signers were able to make sense of the visual signal suggests that the presence/absence of a dynamic visual boundary was sufficient for action segmentation. Then, due to the linguistic nature of the task, inference about event structure of the verb would have been carried out on the basis of action segmentation (telic vs. atelic). Strickland et al. (2015, p. 5968) concluded that both linguistic notions of telicity and mapping biases between telicity and visual form were universally accessible, shared between signers and non-signers.

A reverse phenomenon, language-to-action transfer of skill in segmentation of visual signal, has been demonstrated in a series of experiments in which signers and non-signers were asked to reproduce dynamic point-light drawings (Klima et al., 1999). The signers, but not the speakers, made a crucial distinction between strokes and transitions in the point-light display: the signers did not draw the lines which represented transitional motion between “strokes” of the drawings. The stimuli were not linguistically informative for any of the participants; however, the signing participants were able to extrapolate their linguistic experience in visual segmentation of a signal to a non-linguistic task that focused on action segmentation and structuring. Thus, while non-signers and signers appear capable of relying on similar motion cues for segmentation of the visual signal and assignment of meaning, signers appear capable of more nuanced structuring of the visual signal.

Another kind of language-to-action transfer can be observed regarding the gesturing of (non-signing) spoken language users. Schlenker (2020), investigating the use of pro-speech gestures,Footnote3 observed visual boundary marking for gestures that expressed telic events, but not for gestures expressing atelic events. King and Abner (2018) also compared the production of ASL atelic/telic signs and the production of gestures by hearing non-signers asked to gesturally express the meaning of predicates without using speech. To assess boundedness and repetition, the gestures and signs were coded on a 7-point scale with respect to their form components: (a) boundary (i.e. a sudden stop or deceleration, a change in handshape, or contact at the end of a sign), (b) repetition (i.e. repeated movement across space, opening, closing, or changing of handshape), and (c) compositeness (i.e. a sequential combination of different gesture parts). In line with earlier findings, ASL telic predicates had a significantly higher boundedness score, while atelic predicates had a higher repetition score. Notably, the same effects for boundedness and repetition were observed for gestures produced by non-signers (although the effects were stronger in ASL than in gesture). Thus, multiple studies contribute to the evidence that there appears to be a common phenomenon of visual mapping between event structure and its manual representation, which may be strengthened/grammaticalised as language conventionalises (e.g. in sign languages). This suggests that patterns of event structure representation in the visual modality might be based on cognitive biases that shape information expression in the visual modality. Additional support for the claim that manual gestures can be grammaticalised into visual language systems has been provided by research on artificial sign language learning. For instance, Motamedi et al. (2022) examined how linguistic structure emerges in artificial manual sign systems by combining an iterated learning paradigm (in which participants are asked to learn from gestures produced by previous participants) with a silent gesture paradigm (i.e. hearing non-signers have to communicate only by gesture). The authors observed the development of categorical markers distinguishing nouns and verbs, which became systematic and regular through the repeated use and transmission of the gestures. That said, it is important to remember that the participants in such studies are already users of a language, that is, they already have conceptual and linguistic distinctions between nouns and verbs prior to engaging in the tasks.

From multiple perceptual features experimentally tested as potentially relevant for visual action comprehension (e.g. distance between pairs of moving objects, relative location, speed, acceleration, etc.), changes in speed of individual objects emerged as the feature most highly correlated with event boundary identification. Specifically, action start and end times, as identified by participants, are highly correlated with increases and decreases of speed (or acceleration and deceleration) (Zacks et al., 2006). As described previously, rate of deceleration is also one of the motion features used for differentiating telic from atelic verbs in sign language production. At the neural level, these changes in speed of individual objects were associated with increased activity in the area of the brain termed MT+, and a nearby region in the superior temporal sulcus, both associated with processing of biological motion (Zacks et al., 2006). Very similar neural activations were reported in sign-naïve participants observing signed sentences in ASL involving telic and atelic verbs (Malaia, Ranaweera, et al., 2012); yet, signers observing the same stimuli show focused activation in the left inferior frontal gyrus, an area related specifically to language processing. This indicates that while both signers and non-signers operate on the same perceptual information (i.e. both visually process the perceptual-kinematic difference between telic and atelic ASL signs), only familiarity with the language allows low-level perception of motion differences in the signal to be processed as information at the linguistic levels.

This combination of observations, namely that: (1) hearing non-signers process the perceptual-kinematic difference between atelic and telic verbs in ASL (Malaia, Ranaweera, et al., 2012), (2) hearing non-signers associate unfamiliar (pseudo-)signs involving a dynamic visual boundary with telic events and (pseudo-)signs without a visual boundary with atelic events (Kuhn et al., 2021; Strickland et al., 2015), and (3) Deaf signers and hearing non-signers process telic and atelic signs differently at the neuroanatomical level (Malaia, Ranaweera, et al., 2012), shows that a perceptual-kinematic velocity feature used for non-linguistic event segmentation (available to non-signers) is incorporated into the language system to be processed as an abstract linguistic feature by Deaf signers (see Malaia, Ranaweera, et al., 2012).Footnote4 Although the cross-linguistic nature of motion-based interpretation of lexical aspect in sign languages is widely attested (Wilbur, 2008), along with the consistency of both signers and non-signers in interpreting signs with such features (Kuhn et al., 2021; Strickland et al., 2015), the neural bases of this universal mapping from motion features to linguistic features are not, as yet, well-described.

The present study

To investigate the neural timeline of mapping between visual motion and linguistic event structure, we recorded ERP data during (online) processing of telic and atelic signs of different sign languages in hearing non-signers. Participants were asked to label the viewed signs in a two-alternative forced-choice (2AFC) task in their native language using answer choices presented in written German, and, additionally, to indicate how certain they were of their decision (see below for a more detailed description of the task). The sign language stimuli, which represented unknown input for the participants, consisted of signed telic and atelic verbs from Turkish Sign Language (TİD), Italian Sign Language (LIS) and Sign Language of the Netherlands (NGT) (from Strickland et al., 2015), as well as telic and atelic signed verbs from Croatian Sign Language (HZJ). Based on previous research (Kuhn et al., 2021; Strickland et al., 2015), we hypothesised that non-signers would be able to accurately classify the telic/atelic verbs. In line with Ji and Papafragou (2020), we expected to see higher classification accuracy for bounded events (telic signs).

Previous neurolinguistic studies also showed that hearing non-signers relied on sensory/occipital cortices (including MT+ region) when processing telic vs. atelic signs (Malaia, Ranaweera, et al., 2012). We thus expected that the sensory perceptual difference between verb types would be reflected on the neurophysiological level in ERPs within early time windows (before 300 msec post-sign onset). The 300 msec temporal threshold for perceptual vs. conceptual processing of visual stimuli is based on Greene and Hansen (2020), who demonstrated prevalence of perceptual feature processing prior to 300 msec post-stimulus onset, and conceptual feature processing thereafter. Our research question focused on the processing mechanisms involved in the form-to-meaning mapping/integration process, including both early (perceptual) and late (linguistic/conceptual) ones. Thus, we assessed possible effects in later time windows (past 300 msec post-sign onset) in addition to early perceptual effects. Due to the linguistic nature of the task, linguistic processing indicators could be expected in both conditions (telic and atelic). However, based on prior behavioural research, it could be expected that the timeline for the process of linguistic mapping/integration might differ between telics and atelics.

Hypothesis 1: if the mapping between visual forms of telic and atelic signs unfolds in a qualitatively different manner with respect to linguistic mapping, for instance due to higher attentional load during the observation of telic signs (Malaia, 2014; Malaia, Wilbur, and Milković, 2013; Malaia, Wilbur, and Weber-Fox, 2013), then ERP effects indicating differences between telic and atelic signs would be expected in later time windows (after 300 msec post-sign onset). Linguistic mapping/integration processing might differ between telic and atelic stimuli because they differ in event structure: telic signs, visually, involve an endpoint, while atelic signs do not.

Hypothesis 2: if the mapping between visual forms of telic and atelic signs proceeds in a similar manner (apart from the difference in perceptual processing due to observed velocity differences), no differences in the morphology of ERPs to telic vs. atelic signs would be expected in later time windows (i.e. after the 300 msec post-sign onset); only early perceptual effects (before the 300 msec post-sign onset) would be observed.

Materials and methods

Participants

Twenty-seven participants (21 female) were included in the final analysis, with a mean age of 22.96 years (SD = 3.98; range = 16 to 31 years).Footnote5 An a priori power analysis was conducted with G*Power 3.1 (Faul et al., 2009) to determine the required sample size for the planned analysis using repeated measures ANOVA. Given an alpha value of .05 and an effect size of .15, 27 participants were required to achieve a power of .97. The participants were native German speakers who all had knowledge of at least one other spoken language (languages varied widely, and included English, Spanish, Italian, Croatian, Portuguese, French, Turkish, Danish, and Swedish). All of the participants were hearing students without competence in any sign language. All of them were right-handed (tested by an adapted German version of the Edinburgh Handedness Inventory; Oldfield, 1971). None showed any neurological or psychological disorders at the time of the study. All had normal or corrected vision and were not influenced by medication or other substances impacting cognitive ability. The participants either received 20€ per session for participation, or were awarded credit hours for their study program.

Materials and design

A 1 × 2 design with the two-level factor Telicity (Telic signs; Atelic signs) was used. Thirty-six verbs were presented in each condition (72 critical verb signs), with 72 fillers, resulting in a total of 144 items (stimuli were presented in 6 blocks, each block consisting of 24 signs). The stimuli consisted of signs that were used in the study of Strickland et al. (2015), that is, signed atelic and telic verbs from Turkish Sign Language (TİD), Italian Sign Language (LIS) and the Sign Language of the Netherlands (NGT), supplemented by additional signs from Croatian Sign Language (HZJ) to achieve the appropriate number of stimuli.Footnote6 For each of the sign languages, 9 atelic and 9 telic verb signs were presented.Footnote7 The fillers consisted of atelic and telic signs of Austrian Sign Language (ÖGS).Footnote8 All of the presented signs were unknown to the hearing non-signing participants. The signs were recorded individually, that is, without any sentence context or carrier phrase. A full list of German words used as answer items is presented in the Appendix.

Four stimuli lists were used for data collection. In order to get a balanced stimuli design (with a balanced occurrence of answer items) two lists were created for randomisation. This was necessary because the stimuli involved an odd number of 9 telic and 9 atelic verbs per language (taken from Strickland et al.’s study design), resulting in a non-balanced distribution of matching/non-matching answer choices on the left/right presentation side (i.e. the side at which the answer was presented on the screen). Both of these lists were pseudo-randomised twice with the program CONAN, resulting in 4 lists. The two lists used for randomisation were created in the following way: Each answer item was presented as an answer choice four times in the experiment. Each answer item appears twice on the left side and twice on the right side. Each answer item appears one time as matching answer on the left side (i.e. matching with the previously presented critical stimulus with respect to telicity) and one time as matching answer on the right side. Each answer choice appears one time as non-matching answer on the left side and one time as non-matching answer on the right side. Specific combinations of answer choices were only presented once (e.g. the combination of the answer choices enter and discuss was only presented once). The number of matching answer items was counterbalanced regarding the side of answer presentation (left vs. right): 36 matching answer items occur on the left side and 36 matching answer items occur on the right side. The number of atelic/telic answers was counterbalanced regarding the side of answer presentation (left vs. right): 36 atelic and 36 telic verbs occur on the left side and 36 atelic and 36 telic verbs occur on the right side. The two lists counterbalance the distribution of the matching answers regarding the side of presentation (left vs. right) in that across two participants the answers for both telic and atelic stimuli involve 9 matching answers on the left side and 9 matching answers on the right side with respect to each of the languages (HZJ, LIS, TİD and NGT).

The two lists were pseudo-randomised with the following constraints: At least 10 items had to appear between the same items/verbs (e.g. between the sign for RUN in LIS and RUN in NGT). No more than three videos of the same sign language (signed by the same signer) appeared in a row. No more than three atelic or telic verbs appeared in a row. The (non-)matching answer was allowed to appear maximally three times in a row on the same presentation side (left vs. right). The same answer items appeared with a gap of a minimum of 10 intervening verbs. In each of the six blocks half of the items (N = 12) was telic, the other half was atelic. Within each block stimuli from different languages (NGT, HZJ, LIS and TİD) were each presented at least 2 times and maximally 4 times. In each block 12 filler items occurred (six ÖGS telic and six ÖGS atelic verbs).
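The run-length constraints described above can be illustrated with a minimal Python sketch. This is not the CONAN program used in the study; it is a simple rejection-sampling stand-in that enforces only two of the listed constraints (no more than three items of the same telicity, and no more than three items of the same sign language, in a row) within one 24-item block. The function names, the dictionary layout of an item, and the composition of the example block are assumptions made for illustration.

```python
import random

def max_run(values):
    """Return the length of the longest run of identical consecutive values."""
    longest = current = 0
    previous = object()  # sentinel that never equals a real value
    for v in values:
        current = current + 1 if v == previous else 1
        previous = v
        longest = max(longest, current)
    return longest

def satisfies_constraints(block, max_in_a_row=3):
    """Check the run-length limits on telicity and on sign language."""
    return (max_run([item["telicity"] for item in block]) <= max_in_a_row
            and max_run([item["language"] for item in block]) <= max_in_a_row)

def pseudo_randomise(block, rng, max_attempts=10_000):
    """Reshuffle the block until the constraints hold (rejection sampling)."""
    for _ in range(max_attempts):
        candidate = block[:]
        rng.shuffle(candidate)
        if satisfies_constraints(candidate):
            return candidate
    raise RuntimeError("no valid order found within max_attempts")

def example_block():
    """Hypothetical block: 12 critical items (3 per language) plus 12 OGS fillers."""
    block = []
    for i, language in enumerate(["LIS", "TID", "HZJ", "NGT"]):
        for j in range(3):
            telicity = "telic" if (i + j) % 2 == 0 else "atelic"
            block.append({"language": language, "telicity": telicity})
    for telicity in ["telic", "atelic"]:
        block.extend({"language": "OGS", "telicity": telicity} for _ in range(6))
    return block

order = pseudo_randomise(example_block(), random.Random(1))
print([f'{item["language"]}-{item["telicity"]}' for item in order])
```

The remaining constraints (minimum distance of 10 items between identical verbs or answer items, and the limit on answer side repetitions) operate across blocks and on answer choices, and would need additional checks of the same kind.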

Checking the stimuli for dynamic effects

To check whether there were any systematic differences in timing in the stimuli, paired t-tests comparing specific time points within the structures and the durations between those time points were calculated. For each video we defined 4 time points: (1) when the movement of the hand(s) and/or arm(s) start(s), (2) when the target handshape of the sign is established, (3) when the target handshape reaches the target location where the movement of the verb sign starts (this time point was used for measuring ERPs), and (4) when the sign ends (sign offset was defined as the time point when the hand(s) move(s) away from the final location where the verb movement stopped, such as when the hands go down or backwards towards the signer, and/or when the hand orientation changes and/or when the handshape loses its tension). Paired t-tests involved the factor Telicity (with the levels atelic and telic). In the following only significant effects (p ≤ .05) are reported.

Comparison of time points revealed a significant difference for sign offset. For sign offset a significant effect of Telicity [t(35) = 5.26, p < .001] was observed [mean: atelic: 1.98 (.30), telic: 1.61 (.34)].Footnote9 Hence, signs ended significantly later in the atelic condition compared to the telic condition (sign offset occurred on average 362 msec later in the atelic condition).
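For readers who wish to retrace this kind of comparison, the following is a minimal sketch of a paired t-test in Python. The offset values in the example are invented for illustration and are not the study's measurements; the function name is likewise an assumption. With 36 atelic/telic pairs, as in the present stimuli, the degrees of freedom would be 35.

```python
import math
import statistics

def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two equal-length samples."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD of the differences
    t = mean_diff / (sd_diff / math.sqrt(n))   # mean difference over its standard error
    return t, n - 1

# Invented sign-offset times in seconds (not the study's data).
atelic_offsets = [2.0, 1.9, 2.2, 1.8]
telic_offsets = [1.6, 1.7, 1.5, 1.6]
t_value, df = paired_t(atelic_offsets, telic_offsets)
print(f"t({df}) = {t_value:.2f}")
```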

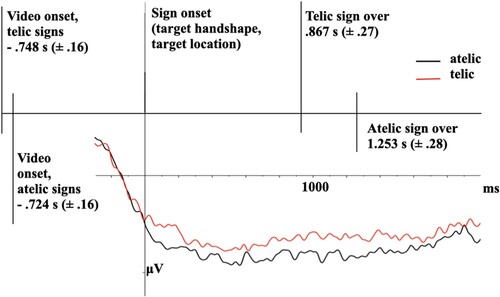

Comparison of durations between time points revealed significant differences for the interval from the time point when the target handshape reaches the target location to the time point when the sign ended. For this interval a significant effect of Telicity [t(35) = 6.36, p < .001] was observed [mean: atelic: 1.25 (.28), telic: .87 (.27)]. Thus, the duration from the time point when the target handshape reaches the target location to sign offset lasts significantly longer in the atelic condition compared to the telic condition (the interval lasted on average 386 msec longer in the atelic condition). Figure 1 illustrates the experimental timeline of stimulus dynamics in telic and atelic verb signs.

Procedure

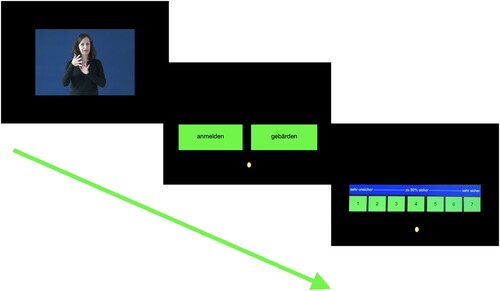

The task was programmed and presented using Presentation (Neurobehavioural Systems, Albany, CA). Participants were seated approximately 60 cm from the monitor. The material was presented in 6 blocks (24 verbs were presented in each block). Every trial started with the presentation of a stimulus video that was presented in the middle of the screen with a size of 820 × 540 (25 fps). The video was followed by a two-choice decision task on the lexical aspect of the observed video, similar to the labelling task used by Strickland et al. (2015; Experiment 3). Participants were asked to guess the meaning of the presented sign by forced-choice selection from two answer choices in written German. To ensure that the participants could not determine the meaning of the signs by iconically relating the meaning to the sign (Strickland et al., 2015), both answer choices constituted incorrect translations, that is, neither answer showed the meaning of the presented sign, but one answer choice matched the stimulus with respect to telicity. Thus, one telic and one atelic lexical item were presented. After the labelling task the participants rated how certain they were of their decision on a 7-point Likert scale. The points on the scale were defined as follows: one stands for “very unsure”, four means “about 50% sure” and seven indicates “very sure”. Participants provided answers by mouse clicks. Prior to the actual experiment, a training block was presented to familiarise participants with task requirements and permit them to ask questions in case anything was unclear. The training block consisted of 4 videos (presenting 2 atelic and 2 telic verbs in two blocks; 2 videos were presented in one block) which were not presented in the actual experiment. The duration of breaks after each block was determined by the participants themselves. See Figure 2 for a schematic illustration of stimuli presentation.
Participants were instructed to avoid eye movements and other motions during the presentation of the video material and to view the videos with attention. After the experiment we asked the participants what strategy they used for making their decisions when classifying the unknown material. The instruction was given in written form. The participants filled out a written questionnaire containing demographic questions and questions relevant for EEG data recording (e.g. questions about handedness). Informed consent was obtained in written form.

Figure 2. Schematic illustration of the stimuli presentation: Every trial started with the presentation of a stimulus video that was presented in the middle of the screen with a size of 820 × 540 (25 fps). The video was followed by a two-choice decision task on the lexical aspect of the observed video. Answer choices were presented in written German. After the labelling task the participants rated how certain they were of their decision on a 7 point Likert scale. The points on the scale were defined as follows: one stands for “very unsure”, four means “about 50% sure” and seven indicates “very sure”.

EEG recording

The EEG was recorded from twenty-six electrodes (Fz, Cz, Pz, Oz, F3/4, F7/8, FC1/2, FC5/6, C3/4, CP1/2, CP5/6, P3/7, P4/8, O1/2, PO9/10) fixed on the participant’s scalp by means of an elastic cap (Easy Cap, Herrsching-Breitbrunn, Germany). Horizontal eye movements (HEOG) were registered by electrodes at the lateral ocular muscles (left and right, one at the outer canthus of each eye) and vertical eye movements (VEOG) were recorded by electrodes fixed above and below the left eye. All electrodes were referenced against the electrode on the left mastoid bone and offline re-referenced against the averaged electrodes at the left and right mastoid. The AFz electrode functioned as the ground electrode. The EEG signal was recorded with a sampling rate of 500 Hz. For amplifying the EEG signal we used a Brain Products amplifier (high pass: .01 Hz). In addition, a notch filter of 50 Hz was used. The electrode impedances were kept below 5 kΩ. Offline, the signal was filtered with a bandpass filter (Butterworth Zero Phase Filters; high pass: .1 Hz, 48 dB/Oct; low pass: 20 Hz, 48 dB/Oct).
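The offline re-referencing step can be sketched arithmetically. When every channel is recorded against the left mastoid, a signal referenced to the average of both mastoids is obtained by subtracting half of the right-mastoid channel from each channel. The Python sketch below illustrates only this arithmetic; the function name, the data layout (lists of samples per channel), and the example values are assumptions, and the analysis software actually used for this step is not stated here.

```python
def rereference_to_averaged_mastoids(channels, right_mastoid):
    """Re-reference left-mastoid-referenced data to the mean of both mastoids.

    channels:      dict mapping channel name -> list of samples, each recorded
                   against the left mastoid.
    right_mastoid: list of samples of the right-mastoid channel, also recorded
                   against the left mastoid.

    (V_ch - V_left) - 0.5 * (V_right - V_left) = V_ch - (V_left + V_right) / 2
    """
    return {
        name: [v - 0.5 * m for v, m in zip(samples, right_mastoid)]
        for name, samples in channels.items()
    }

# Invented sample values in microvolts (not recorded data).
recorded = {"Cz": [4.0, 2.0], "Pz": [1.0, 0.0]}
right = [2.0, -2.0]
print(rereference_to_averaged_mastoids(recorded, right))
```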

Data analysis

Behavioural data

The effects of Telicity and Language were examined separately for (a) the participants’ accuracy regarding the two-choice decision task, (b) the participants’ inferences of telic meanings (i.e. analyzing how many of the atelic and telic signs were classified as telic), (c) the participants’ reaction times regarding the two-choice decision task (starting from the time when the two lexical choices appeared on the screen), (d) the participants’ certainty ratings (on the 7 point Likert scale), and (e) the participants’ reaction times regarding certainty ratings. The statistical analyses were conducted using mixed-effects models. We defined a model that included an interaction between the two-level factor TELICITY (telic vs. atelic) and the four-level factor LANGUAGE (LIS, TİD, HZJ and NGT) as fixed effects. The random effects structure consisted of by-participant and by-item random intercepts. Sum coding was used for main effects testing. This model was used for analysing the dependent variables a-e (listed above).
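As a minimal sketch of what sum coding means for the two factors in this model, the following snippet builds sum-to-zero contrast codes in the same layout as R's `contr.sum`. The level order is an assumption (it is not reported in the text), and the helper name `sum_contrasts` is hypothetical.

```python
def sum_contrasts(levels):
    """Return sum-to-zero (deviation) codes for an ordered list of levels.

    The last level is coded -1 in every column, mirroring the layout of
    R's contr.sum. The level order passed in is an assumption.
    """
    k = len(levels)
    codes = {}
    for i, level in enumerate(levels):
        if i < k - 1:
            codes[level] = [1 if j == i else 0 for j in range(k - 1)]
        else:
            codes[level] = [-1] * (k - 1)
    return codes


telicity = sum_contrasts(["telic", "atelic"])
language = sum_contrasts(["LIS", "TİD", "HZJ", "NGT"])
print(telicity)         # {'telic': [1], 'atelic': [-1]}
print(language["NGT"])  # [-1, -1, -1]
```

Because every column sums to zero across levels, each coefficient expresses a level's deviation from the grand mean rather than from a reference level, which is why this coding suits the testing of main effects.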

To analyze participants’ accuracy regarding the two-choice decision task (a) as well as participants’ inferences of telic meanings (b), we used logistic mixed-effects regression (i.e. generalised linear mixed model) using the lme4 package (Bates et al., Citation2015) in R (R Core Team, Citation2018).Footnote10 For Likert-scale certainty ratings, ordinal mixed-effects logistic regression was performed using the ordinal package (Christensen, Citation2019) in R.Footnote11 To analyze reaction times, linear mixed-effects model (LME) analyses were performed using the lme4 package.Footnote12 Reaction time data were log-transformed. An absolute t-value of 2 or above was interpreted as indicating a significant effect (Baayen et al., Citation2008); p-values were obtained with the lmerTest package, using maximum likelihood estimation. Effects and interactions with p below .1 are reported.

In order to control for the length of the telic vs. atelic signs (i.e. atelic signs are longer in duration compared to telic signs), the same models were computed including the variable Length (centred) as a continuous covariate.Footnote13
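The two transformations applied to continuous variables, log-transforming reaction times (as log(RT + 1), following Footnote 12) and centring the Length covariate, can be sketched as follows. The example values and function names are hypothetical, and the units of the reaction times are not assumed.

```python
import math


def centre(values):
    """Subtract the mean so that the covariate has mean zero."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def log_rt(rt):
    """Log-transform a reaction time as log(rt + 1)."""
    return math.log(rt + 1)


# Hypothetical sign durations in seconds, for illustration only.
sign_lengths = [0.8, 1.0, 1.2, 1.4]
centred = centre(sign_lengths)
print([round(v, 2) for v in centred])  # [-0.3, -0.1, 0.1, 0.3]
```

Centring leaves the slope of the covariate unchanged but makes the model intercept interpretable as the prediction for a sign of average length.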

In addition, the reports that participants gave after the experiment about whether they used any strategy for decision making and if yes, what kind of strategy they used, were evaluated.

ERP data

The signal was corrected for ocular artifacts by the Gratton and Coles method (a method for off-line removal of ocular artifacts; Gratton et al., Citation1983) and screened for artifacts (minimal/maximal amplitude at −75/+75 μV). Data were baseline-corrected to the −300 to 0 msec interval relative to sign onset. For each condition, not more than 5% of the trials were excluded after artifact rejection: 95% of trials remained in the telic condition (48 trials excluded) and 96% in the atelic condition (39 trials excluded). For every individual participant, over 66% of the critical trials remained after artifact correction. All items were considered for ERP analysis. In order to determine the onset and offset of the observed effects, we computed a 50 msec time window analysis. Statistical evaluation of the ERP data was carried out by comparison of the mean amplitude of the ERPs within each time window, per condition and per subject, in two regions of interest (ROIs). The factor ROI involved the levels anterior (F3, F4, F7, F8, FC1, FC2, FC5, FC6, Fz, Cz) and posterior (P3, P4, P7, P8, PO9, PO10, O1, O2, Pz, Oz). An analysis of variance (ANOVA) was computed including the factors TELICITY (atelic vs. telic) and ROI. Statistical analysis was carried out in a hierarchical manner, that is, only significant interactions (p ≤ .05) were included in a step-down analysis. Only significant ERP effects (p ≤ .05) are reported.
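The per-epoch steps described in this section (baseline correction, amplitude screening, and mean amplitudes in 50 msec windows) can be sketched for a single channel as below. This is an illustration under stated assumptions, not the study's pipeline: the windows are taken to be consecutive and non-overlapping, screening is applied to the baseline-corrected epoch, and all function names and data are hypothetical. Averaging over the electrodes of an ROI and the ANOVA itself are omitted.

```python
def baseline_correct(epoch, times, start=-300, end=0):
    """Subtract the mean of the baseline interval [start, end) from an epoch."""
    baseline = [v for v, t in zip(epoch, times) if start <= t < end]
    offset = sum(baseline) / len(baseline)
    return [v - offset for v in epoch]


def exceeds_threshold(epoch, limit=75.0):
    """Flag an epoch whose amplitude leaves the -limit/+limit range (microvolts)."""
    return min(epoch) < -limit or max(epoch) > limit


def window_means(epoch, times, width=50, first=0, last=1500):
    """Mean amplitude in consecutive windows [w, w + width) from first to last."""
    means = {}
    for w in range(first, last, width):
        values = [v for v, t in zip(epoch, times) if w <= t < w + width]
        if values:
            means[(w, w + width)] = sum(values) / len(values)
    return means


# Synthetic single-channel epoch sampled every 2 msec (500 Hz), in microvolts.
times = list(range(-300, 200, 2))
epoch = [5.0 if t < 0 else 15.0 for t in times]

corrected = baseline_correct(epoch, times)
rejected = exceeds_threshold(corrected)
means = window_means(corrected, times, last=200)
print(rejected)          # False
print(means[(0, 50)])    # 10.0
```

Each value returned by `window_means` corresponds to one cell (window × condition × subject × ROI) entering the statistical comparison once it has been averaged over trials and ROI electrodes.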

Trigger marking

ERPs were time-locked to the point at which the target handshape reached target location from which the movement of the verb sign began. This point in time – prior to any sign-internal movement – was defined as sign onset. For signs with handshape-internal movement (e.g. involving a handshape change), target handshape was reached when the initial handshape (i.e. the handshape visible before the onset of internal movement) was formed. For two-handed signs, target handshape was reached when both hands showed the handshape.Footnote14

Results

Behavioural data

Accuracy regarding the two-choice decision task

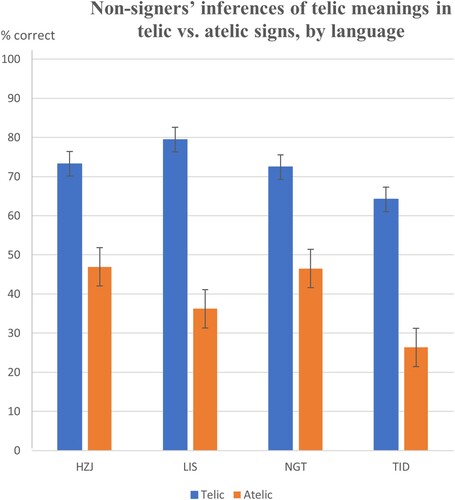

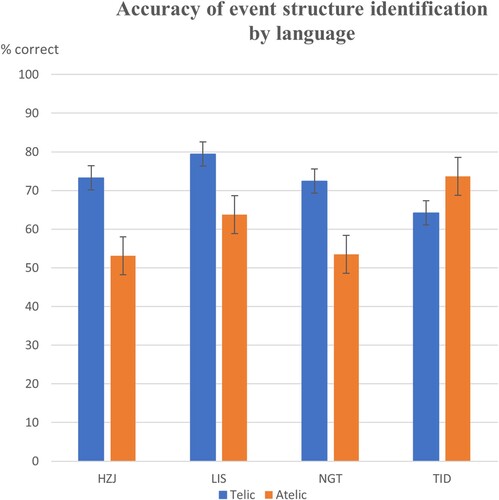

Figure 3 provides an overview of participants’ accuracy in percent per language and telicity (see also Table 1 in Appendix B). Participants gave correct responses above chance level (i.e. with more than 50% accuracy in a 2AFC task) for both telic and atelic signs; this was observed for all languages tested in the study. Descriptive data analysis suggests that participants were more accurate in the telic condition than in the atelic condition; this was observed for all languages except TİD, which shows the opposite effect. Across all four sign languages, measures of discriminability across participants (Macmillan & Creelman, Citation2004; Macmillan & Kaplan, Citation1985) averaged d' = 1.2 for telic and d' = 0.5 for atelic stimuli. This difference was significant in a two-tailed paired t-test on each participant’s d' per condition: t(26) = 6.146, p < .001. For three sign languages, when taken individually, telic signs were significantly easier to detect than atelic ones; for TİD, the opposite was true (Table 1).
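For readers unfamiliar with the discriminability measure, the sketch below shows the standard signal-detection definition of d' and the paired t statistic used to compare per-participant values. How exactly d' was derived separately for telic and atelic stimuli is not spelled out in the text, so this is the textbook formula rather than the study's computation; the data and function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Rates must lie strictly between 0 and 1; rates of exactly 0 or 1
    need a correction before the z-transform.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two equal-length samples."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


print(round(d_prime(0.8, 0.2), 2))  # 1.68

# Hypothetical per-participant d' values, for illustration only.
telic_d = [1.4, 1.0, 1.3, 1.1]
atelic_d = [0.6, 0.4, 0.7, 0.3]
t_value, df = paired_t(telic_d, atelic_d)
print(df)  # 3
```

With 27 participants, the same computation gives 26 degrees of freedom, matching the reported t(26).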

Figure 3. Participants’ accuracy of event structure identification. The error bars show standard deviation.

Table 1. Measures of discriminability across participants.

The mixed-effects model for participants’ accuracy regarding the two-choice decision task revealed a main effect of Telicity approaching significance (Estimate: −.257; Standard error: .154; p = .0951) (for more detailed results see Table 1 in Appendix C). The same model including Length of signs as covariate does not provide a better fit.

Participants’ inferences of telic meanings

In line with Strickland et al. (Citation2015) we analysed the behavioural data according to participants’ inferences of telic meanings, that is whether they classified the signs as telic or atelic. The responses were classified as telic (comprising telic signs that were classified correctly as telic plus atelic signs that were classified incorrectly as telic) and atelic (comprising atelic signs that were classified correctly as atelic plus telic signs that were classified incorrectly as atelic). This analysis showed that participants tended to classify telic signs as telic to a greater extent than they classified atelic signs as telic; this was observed regarding all languages tested in the study.
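The response-based recoding described here amounts to tallying, for each sign type, how often the response was "telic" regardless of correctness. A minimal sketch with hypothetical trial data and a hypothetical helper name:

```python
from collections import Counter


def telic_response_rates(trials):
    """Proportion of 'telic' responses for each sign type.

    trials: iterable of (sign_type, response) pairs, each 'telic' or 'atelic'.
    """
    totals = Counter()
    telic_responses = Counter()
    for sign_type, response in trials:
        totals[sign_type] += 1
        if response == "telic":
            telic_responses[sign_type] += 1
    return {sign_type: telic_responses[sign_type] / totals[sign_type]
            for sign_type in totals}


# Hypothetical trials, for illustration only.
trials = [
    ("telic", "telic"), ("telic", "telic"), ("telic", "atelic"), ("telic", "telic"),
    ("atelic", "telic"), ("atelic", "atelic"), ("atelic", "atelic"), ("atelic", "atelic"),
]
rates = telic_response_rates(trials)
print(rates)  # {'telic': 0.75, 'atelic': 0.25}
```

A higher rate for telic than for atelic signs is the pattern the paragraph reports; the rate for atelic signs counts their misclassifications as telic.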

The mixed-effects model for participants’ inferences of telic meanings regarding the two-choice decision task revealed a significant main effect of Telicity: atelic signs were less likely to be classified as telic (Estimate: −.9217; Standard error: .1547; p < .001) (for more detailed results see Table 2 in Appendix C). The same model including Length of signs as covariate does not provide a better fit. Figure 4 provides an overview of participants’ inferences of telic meanings in percent per language and telicity (see also Table 2 in Appendix B).

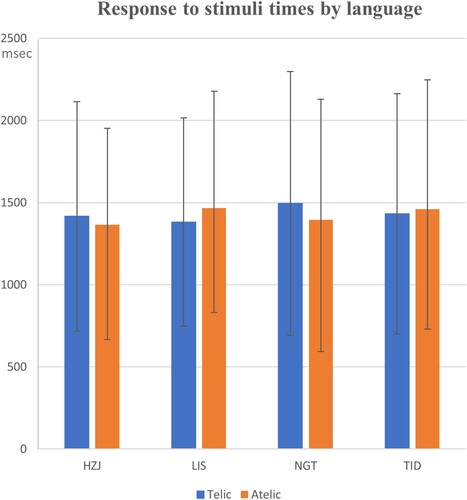

Reaction times regarding the two-choice decision task

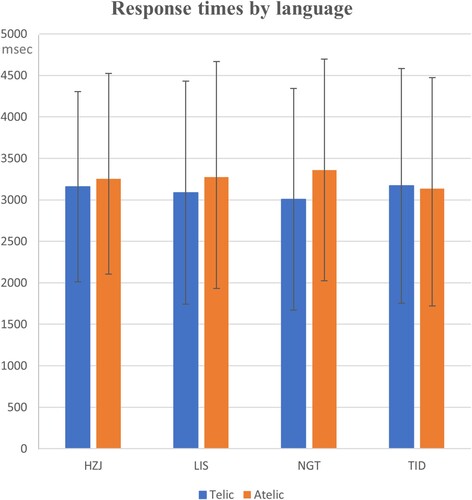

Figure 5 provides an overview of participants’ reaction times for the two-choice decision task per language and telicity (see also Table 3 in Appendix B).

Figure 5. Participants’ reaction times regarding the two-choice decision task. The error bars show standard deviation.

Participants made their decisions faster in the telic condition compared to the atelic condition; in descriptive analysis this was observed for all languages, except TİD. However, linear mixed models did not indicate an effect of Language, while the effect of Telicity on response times approached significance (Estimate: .0265298; Standard error: .0150037; t = 1.768; p = .0813) (for more detailed results see Table 3 in Appendix C). The same model including Length of signs as covariate does not provide a better fit.

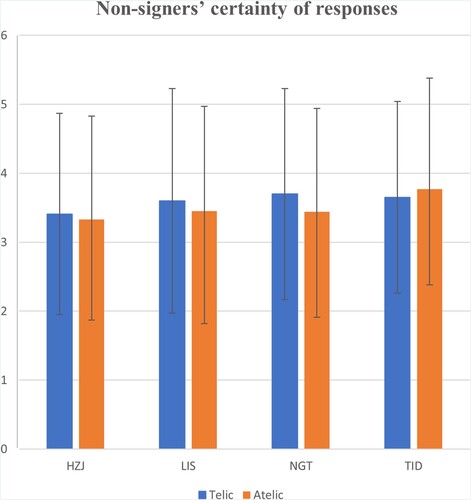

Certainty ratings on Likert scale

Figure 6 provides an overview of participants’ certainty ratings per language and telicity (see also Table 4 in Appendix B).

Descriptive data analysis shows that regarding both the atelic and telic condition, participants were slightly below 50% sure about their decisions (on the Likert scale 4 was defined as “about 50% sure”). The mixed-effects model for participants’ certainty ratings revealed a main effect of Language [HZJ] approaching significance (Estimate: −.265328; Standard error: .158417; p = .094). HZJ signs were rated lower than average (i.e. lower than the grand mean over all conditions) (for more detailed results see Table 4 in Appendix C). The same model including Length of signs as covariate does not provide a better fit.

Reaction times regarding certainty ratings on Likert scale

Figure 7 provides an overview of the reaction times for participants’ certainty ratings per language and telicity (see also Table 5 in Appendix B). The mixed-effects model for participants’ reaction times regarding certainty ratings revealed an interaction Telicity[atelic] × Language[NGT] approaching significance (Estimate: −.029803; Standard error: .017597; t = −1.694; p = .0947) (for more detailed results see Table 5 in Appendix C). A post hoc Tukey test did not reveal any significant effects. The same model including Length of signs as covariate does not provide a better fit.

Strategies used by the participants in the two-choice decision task

The participants reported the following strategies: They used their knowledge about gestures. In particular, they compared the signs with gestures that hearing persons use. They thought about which gestures they would use, how they would gesture the word, or which movement they would use as a gesture to support the conversation. One participant reported that she imagined expressing the movements by herself and thought about what this might mean.

Participants also looked at the facial expression of the signer, that is whether the signer showed a positive or negative facial expression (smiling vs. serious face).

The participants tried to build up an association between the (movement of the) sign and the answer choices, or to build up an association between the sign and “a real movement”, or an activity/action. They tried to assign the movements to the activities. One participant thought about which action (which word) the sign reminds her of more. Another participant reported that she instinctively looked for associations between movement and finger position and accordingly tried to determine a natural commonality. Participants tried to find similarities between the sign and the actual action, that is, they tried to assess which movements could be similar to the activities. They thought about which one of the two activities the sign is more similar to. One participant tried to infer gestures of what the activity looks like. Another participant reported that she compared the two answer terms with each other, that is contrasting physical activities and non/less-physical activities, suggesting that the potential of representing these concepts iconically in sign languages was considered.

Participants not only looked at the movement of the signs for decision making, but also at specific features of motion (i.e. direction, speed, duration): They looked at whether the movement was fast or slow, up or down, whether the sign was directed towards the body or away from the body. One participant reported that she made her decision depending on whether something happens slowly or fast; she looked at the duration of the movement.

Many of the participants noted that lip reading was possible with one specific signer who used German mouthing (and who signed the ÖGS filler material for the present study).

Some of the participants also reported that they sometimes proceeded by a process of elimination. Guessing was also used to complete the task.

ERP data

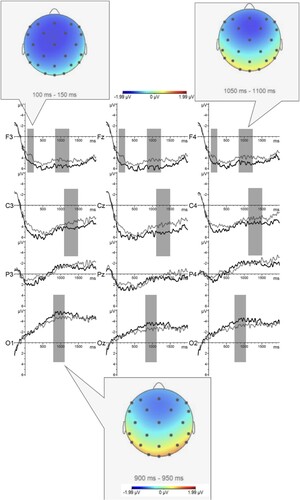

With respect to the time point when the target handshape of the sign reached target location, data analysis revealed a more anteriorly distributed negative effect for telic compared to atelic signs in the 0 to 200 msec time window, a broadly distributed negative effect in the 200 to 250 msec and the 300 to 400 msec time windows, and an anterior negative effect in the 500 to 550 msec time window. Additionally, anterior negative effects for telic compared to atelic signs were observed in the 650 to 800 msec, the 850 to 1300 msec and the 1400 to 1500 msec time windows. Furthermore, posteriorly distributed positive effects for telic compared to atelic signs were revealed in the 600 to 700 msec, the 750 to 1050 msec and the 1250 to 1300 msec time windows (see Figure 8).

Figure 8. ERP and topographic differences between neural responses to atelic (black line) and telic (grey line) stimuli, starting from time of target handshape in target location for sign onset. Time ranges with statistically significant differences between conditions are marked by grey rectangles and illustrated by topographic out-takes.

The 50 msec time window analysis is presented in Table 2 (see Appendix D for a more detailed table including statistical ANOVA results as well as mean amplitudes and standard deviations for conditions within non-significant time windows). Only significant effects (p ≤ .05) are reported.

Table 2. ERP – 50 msec time window analysis.

Summary of results

Behavioural data analysis revealed that non-signers classified telic/atelic signs above chance level. Telic signs were correctly identified as telic more often. Statistical analysis of decision times offered some evidence that decisions for telic stimuli were made faster than for atelic stimuli (p = .0813).Footnote15 The certainty ratings showed that participants were slightly below 50% sure about their decisions regarding both the atelic and telic condition. Including the length of the signs as a covariate did not improve the fit of any of the behavioural models.

Processing differences for telic compared to atelic verbs were also revealed at the neurophysiological level. Beginning from sign onset (i.e. target handshape positioned in target location), statistically significant neural differences in processing appeared across several time ranges anteriorly (0–200 msec, 500–550 msec, 650–800 msec, 850–1300 msec, and 1400–1500 msec), posteriorly (600–700 msec, 750–1050 msec, and 1250–1300 msec), and in a broadly distributed manner (200–250 msec and 300–400 msec) (see Figure 8; Table 2).

Discussion

The present study examined the neurophysiological bases of action-to-language mapping in hearing non-signers who carried out a 2AFC task of classifying unfamiliar verb signs as bounded or unbounded. Of particular interest was whether neural processing mechanisms differ for telic vs. atelic verb signs with respect to the integration of sensory perceptual features with linguistic concepts.

Behavioural data analysis indicates that non-signers classify signs as telic or atelic with high accuracy, replicating previous results (Kuhn et al., Citation2021; Strickland et al., Citation2015). In a majority of the sign languages, telics were classified more accurately than atelics. Statistical analysis offered some evidence that participants responded faster in the telic condition (trending, p = .0813); for certainty ratings, the only trend concerned Language (HZJ signs were rated lower than average; trending, p = .094) rather than Telicity. The finding that participants classified telic signs more accurately than atelic signs is in line with Ji and Papafragou (Citation2020) who report that the category of bounded events was identified with greater ease compared to that of unbounded events. Ji and Papafragou (Citation2020) suggest that the involvement of an internal structure that culminates in defined endpoints makes bounded events easier to individuate, track, compare to each other and generalise over compared to unbounded events. The present data extend this observation with respect to sign language stimuli and the use of a linguistic task.

ERP data analysis revealed differences in processing time course between telic vs. atelic signs in early (prior to 300 msec past stimulus onset), as well as later time windows. The effects in early time windows (starting at sign onset) likely reflect the difference in perceptual processing of sign velocity and acceleration for decision-making.Footnote16 Anterior and posterior ERP effects for telics compared to atelics appearing in later time windows likely reflect differences in cognitive/linguistic processing in support of Hypothesis 1, proposing qualitatively different mapping/integration processes for telic verbs. Significant effects observed for time windows after 867 msec (850–1300 msec and 1400–1500 msec time windows) could also involve offset processing of the telic verbs (which ended on average at 867 msec, before the atelics, which ended on average at 1253 msec). That the observed ERP effects in early vs. later time windows reflect different mechanisms, that is, perceptual processing as well as the integration of perceptual features with linguistic concepts, is evidenced by the spatiotemporal distribution of the effects. In the initial time windows (0 to 200 msec), only anterior electrodes demonstrated statistically significant differences between conditions. The later effects, however, were observed either as a broadly distributed activation, or effects differing in valence between anterior and/or posterior electrodes.

Previous work showed that the telicity in verbs may facilitate online language processing, for example, in resolution of garden path sentences. Malaia et al. (Citation2009; Citation2012) investigated the effects of verbal telicity on syntactic reanalysis of reduced relative clauses, in which the verb in the relative clause was either telic or atelic. Sentences with atelic verbs imposed higher processing costs at the disambiguation point, as compared to sentences with telic verbs. In interpreting a garden-path sentence, two distinct processing steps are required: identification of the event (i.e. retrieval of the event template with participant thematic roles from memory), and assignment of thematic roles, which is biased toward assignment of Agent/Subject thematic role to the first noun in the sentence. Comprehension of reduced relative clauses, with a reversed order of arguments (e.g. “The actress awakened by the writer left in a hurry”.) prompts online re-assignment of thematic roles. This step appears to proceed more rapidly in sentences with telic verbs, potentially because, by virtue of having the boundary, they trigger the extraction of the event template with the thematic roles, thereby facilitating thematic role re-assignment. Atelic verbs, which do not provide the conceptual boundary for event segmentation, do not appear to trigger the same processing mechanism (e.g. “The actress worshipped by the writer left in a hurry”).

In the present study, participants viewed unfamiliar signs, followed by an offline classification task. ERP effects thus might reflect the segmentation operation the participants had to carry out for visually bounded, that is, telic signs. Although the segmentation operation might require more effort (e.g. attentional allocation and memory reference) at the point of being carried out, it is likely to facilitate the participants’ performance in the classification task later (which was carried out offline, and did not contain an inherent time restriction). Therefore, the observed online ERP effects for telic compared to atelic signs (observed in later time windows) might potentially stem from two different sources: recruitment of additional processing resources for telics in the segment toward the end of each sign, or release of cognitive resources past sign offset. The crucial point, however, is that the difference is observable at the neurophysiological level, suggesting that action-to-language mapping processes differ between visually bounded and unbounded events (in this case, telic vs. atelic verb signs). Whether the same ERP effects would be observed if a non-linguistic task were used is not entirely clear. Earlier research (e.g. Huettig et al., Citation2010; Trueswell & Papafragou, Citation2010) suggests that it may be that language-specific factors do not shape core biases in event perception and memory, but rather that language can be recruited optionally for encoding events (“thinking for speaking”). However, while non-signers might rely on conceptual mapping to language when the task facilitates such mapping, signers have been shown to process visual boundedness as linguistic distinction (Malaia, Wilbur, et al., Citation2012). The present findings might be indicative of the potential evolutionary pathway for co-optation of physical-perceptual motion features into the linguistic structure of sign languages.

Cross-linguistic similarities in event visibility (i.e. visual representation of event structure) have been described for a number of unrelated sign languages (Malaia & Milkovic, Citation2021). Sign languages, however, further differ in linguistic representation of event structure, such that, for example, realisation of end-state marking might take on various forms. For example, the end state of the verb “arrive” in ASL is expressed by the phonological feature [direction] involving a path movement and a rapid movement to a stop with contact of the two hands, while ÖGS uses the feature [supination] involving a change of orientation (Schalber, Citation2006). Comparative analysis of motion capture data indicates a variety of strategies for the mapping between physical parameters for articulator motion, and linguistic features that incorporate boundedness. For example, in ASL, the endpoint of the event in telic signs is marked phonologically by a significantly faster deceleration at the end of the verb, as compared to atelic signs (Malaia et al., Citation2008; Malaia & Wilbur, Citation2012). HZJ recruits the same physical parameter – speed of dominant hand motion – to express a regular morphological process that is used to produce an alternation between two forms of a verb from one stem, such that the same root would appear with shorter, sharper movement to convey bounded (both perfective and telic) meaning (Milković, Citation2011). In ÖGS the distinction between telic and atelic verb signs is encoded phonologically (like in ASL), such that telic signs are produced with faster deceleration/acceleration and jerk, and are shorter in duration compared to atelic signs (Krebs et al., Citation2021). Thus, although all three languages mark event structure in an iconic way (as proposed by the EVH), they show language-specific characteristics with respect to how event structure is represented and expressed.

However, despite these differences, non-signers can classify these iconically motivated forms accurately, because articulator motion profiles overall are similar to motion profiles of observed events, and use salient end point marking to represent event structure. We would like to note that telic signs in TİD might utilise somewhat different motion patterns as compared to telic signs of other sign languages (LIS, NGT, and HZJ), which might be yet another illustration of cross-linguistic differences among sign languages. The nature of dynamic cue(s) for telicity that have resulted in the observed differences in non-signer responses to TİD vs. other sign language stimuli in the behavioural task remains an open question for further research.

An interesting question raised in debriefing concerned non-manuals – in sign language research, this term encompasses a variety of markers, from syntactic to semantic and pragmatic, performed using facial action – such as brow raise, brow furrow, mouth position changes, etc. Several of the participants (N = 5, 18.52%) in the present study reported that they looked not only at the hands and arms, but also considered facial expression when trying to make sense of what they saw. None of these participants responded that facial expression was the only thing they looked at. Among the stimuli presented, the only type with consistent mouthing was the ÖGS filler material, which has mouth movements equivalent to spoken German lip movements (Schalber, Citation2015). A follow-up investigation of mouthing in perception of ÖGS is ongoing.

The videos of LIS, NGT, and TİD used in the present study were previously used in the behavioural investigation by Strickland et al. (Citation2015), which assessed whether the gestural boundary constituted a marker for telicity (Strickland et al., Citation2015, experiments 7–10). The findings suggested that gestural boundaries and trilled movement were the two main phonological features across sign languages on which non-signing participants based their classification. Specifically, participants were asked to rate the degree to which they perceived a “gestural boundary” or repetitive movement present in the signs. Strickland et al. (Citation2015) reported that signs classified as telic were rated as having more boundaries than repeated movement, whereas signs classified as atelic were rated as having more repeated movement than boundaries. Based on the debriefing results, the participants in the present study also used the movement parameter to classify the verbs.

Conclusion

The present study is the first, to our knowledge, to investigate the time course of neural mechanisms for how visual features of events, such as movement dynamics, are mapped to linguistic event structure. Our results demonstrate that non-signers not only identify the perceptual differences in motion features when viewing signs denoting bounded and unbounded events, but also recruit different processing mechanisms when integrating the perceptual information with linguistic concepts in their native language. The present work provides further neurophysiological evidence for the event segmentation theory in perception (Zacks & Swallow, Citation2007; Zacks & Tversky, Citation2001), as well as the EVH (Wilbur, Citation2003, Citation2008, Citation2010). The observed mechanisms might be indicative of the potential evolutionary pathway for co-optation of perceptual features into the linguistic structure of sign languages (cf. Bradley et al., Citation2022). Sign language phenomena that have a connection between visual form and underlying meaning, and are similar in unrelated sign languages, provide a unique perspective on the linking mechanisms between language universals and perceptual features, and how these impact perception, cognition, and action in linguistic and non-linguistic contexts.

Supplemental Material

Acknowledgements

We want to thank Brent Strickland and his colleagues for providing their video material, Marijke Scheffener and Roland Pfau for re-signing and filming the NGT videos for our study, Brigita Sedmak and Marina Milković for filming and providing the HZJ video material, and Waltraud Unterasinger for signing the video material in Austrian Sign Language. Many thanks to all participants taking part in this study.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Notes

1 For several sign languages free morphemes (auxiliaries) as well as bound morphemes (expressed by modulation of the movement parameter) indicating various kinds of grammatical aspect marking have been described (e.g. Pfau et al., Citation2012).

2 For the purposes of the present analysis, we will focus on inherently telic verbs, that is verbs which are lexically telic (as opposed to telicity emerging at the verb phrase level, cf. Tenny, Citation1994; Vendler, Citation1967; Verkuyl, Citation1972).

3 Gestures that contribute to the semantics of an expression and which are used instead of spoken words during spoken language communication.

4 Per convention Deaf with upper-case D refers to deaf or hard of hearing humans who define themselves as members of the sign language community. In contrast, deaf refers to audiological status.

5 The higher number of female participants was due to the difficulty of obtaining a gender-balanced participant ratio during intermittent lockdowns resulting from COVID-19.

6 We aimed to replicate the findings of Strickland et al. (Citation2015) in order to have a sound basis for interpreting the findings on neural processing.

7 The mean length of the critical stimulus videos as well as standard deviation and range of video length per condition (values given in seconds): Atelics: Mean: 2.06; sd: .47; Range: 1–3; Telics: Mean: 1.75; sd: .55; Range: 1–3.

8 ÖGS telic/atelic signs functioned as fillers in this experiment, because these stimuli include an additional information channel, mouthing, which non-signers can use for classifying unknown signs. Mouthing constitutes a (part of a) spoken language word (of the surrounding spoken language; German in the case of ÖGS) which is silently produced by the lips simultaneously to the other sign parameters (Sandler & Lillo-Martin, Citation2006).Thus, it is assumed that different mechanisms are involved in the processing of the ÖGS material compared to the non-ÖGS stimuli (Krebs et al., Citation2023).

9 Mean durations are given in seconds; standard deviations are presented in parentheses.

10 Coded in R as glmer(Accuracy ~ Telicity*Language + (1|Participant) + (1|Item), family = binomial) for accuracy, and as glmer(Telic_response ~ Telicity*Language + (1|Participant) + (1|Item), family = binomial) for inferences of telic meanings.

11 Coded in R as clmm(Rating ~ Telicity*Language + (1|Participant) + (1|Item)).

12 Coded in R as lmer(log(Reaction_time + 1) ~ Telicity*Language + (1|Participant) + (1|Item)).

13 The duration of the signs was calculated from the time point when the target handshape reaches the target location where the movement of the verb sign starts (defined as sign onset) to the offset of the sign (when the hand(s) move(s) away from the final location where the verb movement stopped, and/or when the hand orientation changes and/or when the handshape loses its tension). Mean durations and standard deviations (SD) for atelics and telics (in seconds): Atelics: Mean = 1.253; SD = .273; Telics: Mean = .867; SD = .264.

14 The exception to this were two-handed signs with alternating (i.e. non-symmetric) movement: for some of these signs it was observed that the movement of the sign begins before the target handshape was entirely established on the non-dominant hand; in these cases target handshape was determined to be reached when it was established on the dominant hand (even if the handshape was not entirely established on the non-dominant hand).

15 p-values are graded measures of the strength of evidence against the null hypothesis (Amrhein et al., Citation2017). A p-value is not the probability that the null hypothesis is true: in this case, p = .08 means that, if response times to atelic and telic stimuli did not truly differ, an effect at least as large as the one observed would be expected in about 8% of samples. Thus, the marginally significant p = .08 offers some evidence against the null hypothesis, although it does not reach the conventional threshold of 5%.

16 Visual perceptual processing and decision-making result in frontal ERP effects due to insular and executive cortex engagement as early as 100 msec post-stimulus onset (cf. Perri et al., Citation2019; Mussini et al., Citation2020).

References

- Amrhein, V., Korner-Nievergelt, F., & Roth, T. (2017). The earth is flat (p<0.05): significance thresholds and the crisis of unreplicable research. PeerJ, 5, e3544. https://doi.org/10.7717/peerj.3544

- Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59(4), 390–412. https://doi.org/10.1016/j.jml.2007.12.005

- Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

- Blumenthal-Dramé, A., & Malaia, E. (2019). Shared neural and cognitive mechanisms in action and language: The multiscale information transfer framework. WIREs Cognitive Science, 10(2), e1484. https://doi.org/10.1002/wcs.1484

- Bradley, C., Malaia, E. A., Siskind, J. M., & Wilbur, R. B. (2022). Visual form of ASL verb signs predicts non-signer judgment of transitivity. PLoS One, 17(2), e0262098. https://doi.org/10.1371/journal.pone.0262098

- Cardin, V., Campbell, R., MacSweeney, M., Holmer, E., Rönnberg, J., & Rudner, M. (2020). Trends in language acquisition research. Understanding Deafness, Language and Cognitive Development. Essays in Honour of Bencie Woll, 25, 159–181. https://doi.org/10.1075/tilar.25.09car

- Christensen, R. H. B. (2019). ordinal: Regression models for ordinal data. R package version 2019.12-10. https://CRAN.R-project.org/package=ordinal

- Corina, D. P., & Lawyer, L. A. (2019). The neural organization of signed language. In G. I. de Zubicaray, & N. O. Schiller (Eds.), The Oxford handbook of neurolinguistics (pp. 402–424). Oxford University Press.

- Emmorey, K. (2021). New perspectives on the neurobiology of sign languages. Frontiers in Communication, 6(6), 1–20. https://doi.org/10.3389/fcomm.2021.748430

- Evans, N., & Levinson, S. C. (2009). The myth of language universals: Language diversity and its importance for cognitive science. Behavioral and Brain Sciences, 32(5), 429–448. https://doi.org/10.1017/S0140525X0999094X

- Eyer, J. A., Leonard, L. B., Bedore, L. M., McGregor, K. K., Anderson, B., & Viescas, R. (2002). Fast mapping of verbs by children with specific language impairment. Clinical Linguistics & Phonetics, 16(1), 59–77. https://doi.org/10.1080/02699200110102269

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

- Gratton, G., Coles, M. G., & Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology, 55(4), 468–484. https://doi.org/10.1016/0013-4694(83)90135-9

- Greenberg, J. H. (1963). Some universals of grammar with particular reference to the order of meaningful elements. In J. H. Greenberg (Ed.), Universals of human language (pp. 73–113). MIT Press.

- Greene, M. R., & Hansen, B. C. (2020). Disentangling the independent contributions of visual and conceptual features to the spatiotemporal dynamics of scene categorization. The Journal of Neuroscience, 40(27), 5283–5299. https://doi.org/10.1523/JNEUROSCI.2088-19.2020

- Greenhouse, S. W., & Geisser, S. (1959). On methods in the analysis of profile data. Psychometrika, 24(2), 95–112. https://doi.org/10.1007/BF02289823

- Grose, D. (2012). Lexical semantics: Semantic fields and lexical aspect. In R. Pfau, M. Steinbach, & B. Woll (Eds.), Sign language. An international handbook (pp. 432–462). Mouton de Gruyter.

- Gutiérrez-Sigut, E., & Baus, C. (2019). Lexical processing in sign language comprehension and production. In J. Quer, R. Pfau, & A. Herrmann (Eds.), The Routledge handbook of theoretical and experimental sign language research. Routledge. https://doi.org/10.31219/osf.io/qr769

- Hickok, G., & Bellugi, U. (2010). Neural organization of language: Clues from sign language aphasia. In J. Guendouzi, F. Loncke, & M. J. Williams (Eds.), The handbook of psycholinguistic & cognitive processes: Perspectives in communication disorders (pp. 685–706). Taylor & Francis.

- Huettig, F., Chen, J., Bowerman, M., & Majid, A. (2010). Do language-specific categories shape conceptual processing? Mandarin classifier distinctions influence eye gaze behavior, but only during linguistic processing. Journal of Cognition and Culture, 10(1-2), 39–58. https://doi.org/10.1163/156853710X497167

- Ji, Y., & Papafragou, A. (2020). Is there an end in sight? Viewers’ sensitivity to abstract event structure. Cognition, 197, 104197. https://doi.org/10.1016/j.cognition.2020.104197

- King, R., & Abner, N. (2018). Representation of event structure in the manual modality: Evidence for a universal mapping bias. In Workshop on the Emergence of Universals. The Ohio State University.

- Klima, E. S., & Bellugi, U. (1979). The signs of language. Harvard University Press.

- Klima, E. S., Tzeng, O. J., Fok, Y. Y. A., Bellugi, U., Corina, D., & Bettger, J. G. (1999). From sign to script: Effects of linguistic experience on perceptual categorization. Journal of Chinese Linguistics Monograph Series, 13, 96–129. https://www.jstor.org/stable/23826111

- Krebs, J., Malaia, E. A., Wilbur, R. B., & Roehm, D. (2023). Visual boundaries in sign motion: Processing with and without lip-reading cues. Experiments in Linguistic Meaning, 2, 164–175. https://doi.org/10.3765/elm.2.5336

- Krebs, J., Strutzenberger, G., Schwameder, H., Wilbur, R. B., Malaia, E., & Roehm, D. (2021). Event visibility in sign language motion: Evidence from Austrian Sign Language (ÖGS). Proceedings of the Annual Meeting of the Cognitive Science Society, 43, 362–368. https://escholarship.org/uc/item/67r14298

- Krifka, M. (1992). Thematic relations as links between nominal reference and temporal constitution. In I. A. Sag, & A. Szabolcsi (Eds.), Lexical matters (pp. 29–53). CSLI Publications.

- Kuhn, J., Geraci, C., Schlenker, P., & Strickland, B. (2021). Boundaries in space and time: Iconic biases across modalities. Cognition, 210, 104596. https://doi.org/10.1016/j.cognition.2021.104596

- Kurby, C. A., & Zacks, J. M. (2008). Segmentation in the perception and memory of events. Trends in Cognitive Sciences, 12(2), 72–79. https://doi.org/10.1016/j.tics.2007.11.004

- Macmillan, N. A., & Creelman, C. D. (2004). Detection theory: A user’s guide. Psychology Press.

- Macmillan, N. A., & Kaplan, H. L. (1985). Detection theory analysis of group data: Estimating sensitivity from average hit and false-alarm rates. Psychological Bulletin, 98(1), 185. https://doi.org/10.1037/0033-2909.98.1.185

- Malaia, E. (2014). It still isn’t over: Event boundaries in language and perception. Language and Linguistics Compass, 8(3), 89–98. https://doi.org/10.1111/lnc3.12071

- Malaia, E., Borneman, J., & Wilbur, R. B. (2008). Analysis of ASL motion capture data towards identification of verb type. In J. Bos, & R. Delmonte (Eds.), Semantics in text processing. STEP 2008 conference proceedings (pp. 155–164). College Publications.

- Malaia, E., & Milković, M. (2021). Aspect: Theoretical and experimental perspectives. In J. Quer, R. Pfau, & A. Herrmann (Eds.), The Routledge handbook of theoretical and experimental sign language research (pp. 194–212). Routledge.

- Malaia, E., Ranaweera, R., Wilbur, R. B., & Talavage, T. M. (2012). Event segmentation in a visual language: Neural bases of processing American Sign Language predicates. Neuroimage, 59(4), 4094–4101. https://doi.org/10.1016/j.neuroimage.2011.10.034

- Malaia, E., & Wilbur, R. B. (2012). Kinematic signatures of telic and atelic events in ASL predicates. Language and Speech, 55(3), 407–421. https://doi.org/10.1177/0023830911422201

- Malaia, E., Wilbur, R. B., & Milković, M. (2013). Kinematic parameters of signed verbs. Journal of Speech, Language, and Hearing Research, 56(5), 1677–1688. https://doi.org/10.1044/1092-4388(2013/12-0257)

- Malaia, E., Wilbur, R. B., & Weber-Fox, C. (2009). ERP evidence for telicity effects on syntactic processing in garden-path sentences. Brain and Language, 108(3), 145–158. https://doi.org/10.1016/j.bandl.2008.09.003

- Malaia, E., Wilbur, R. B., & Weber-Fox, C. (2012). Effects of verbal event structure on online thematic role assignment. Journal of Psycholinguistic Research, 41(5), 323–345. https://doi.org/10.1007/s10936-011-9195-x

- Malaia, E., Wilbur, R. B., & Weber-Fox, C. (2013). Event end-point primes the undergoer argument: Neurobiological bases of event structure processing. In B. Arsenijević, B. Gehrke, & R. Marín (Eds.), Studies in the composition and decomposition of event predicates. Studies in linguistics and philosophy, vol 93 (pp. 231–248). Springer.

- Milković, M. (2011). Verb classes in Croatian Sign Language (HZJ): Syntactic and semantic properties [Doctoral dissertation]. University of Zagreb.

- Motamedi, Y., Montemurro, K., Abner, N., Flaherty, M., Kirby, S., & Goldin-Meadow, S. (2022). The seeds of the noun–verb distinction in the manual modality: Improvisation and interaction in the emergence of grammatical categories. Languages, 7(2), 95. https://doi.org/10.3390/languages7020095

- Mussini, E., Berchicci, M., Bianco, V., Perri, R. L., Quinzi, F., & Di Russo, F. (2020). The role of task complexity on frontal event-related potentials and evidence in favour of the epiphenomenal interpretation of the Go/No-Go N2 effect. Neuroscience, 449, 1–8. https://doi.org/10.1016/j.neuroscience.2020.09.042

- Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97–113. https://doi.org/10.1016/0028-3932(71)90067-4

- Perri, R. L., Berchicci, M., Bianco, V., Quinzi, F., Spinelli, D., & Di Russo, F. (2019). Perceptual load in decision making: The role of anterior insula and visual areas. An ERP study. Neuropsychologia, 129, 65–71. https://doi.org/10.1016/j.neuropsychologia.2019.03.009

- Pfau, R., Steinbach, M., & Woll, B. (2012). Tense, aspect, and modality. In R. Pfau, M. Steinbach, & B. Woll (Eds.), Sign language. An international handbook (pp. 186–204). Mouton de Gruyter.

- Pustejovsky, J. (1991). The syntax of event structure. Cognition, 41(1–3), 47–81. https://doi.org/10.1016/0010-0277(91)90032-Y

- R Core Team. (2018). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

- Rice, M. (2003). A unified model of specific and general language delay: Grammatical tense as a clinical marker of unexpected variation. In Y. Levy, & J. Schaeffer (Eds.), Language competence across populations: Toward a definition of specific language impairment (pp. 63–95). Erlbaum.

- Sandler, W., & Lillo-Martin, D. (2006). Sign languages and linguistic universals. Cambridge University Press.

- Schalber, K. (2006). What is the chin doing? An analysis of interrogatives in Austrian sign language. Sign Language & Linguistics, 9(1-2), 133–150. https://doi.org/10.1075/sll.9.1-2.08sch

- Schalber, K. (2015). Austrian sign language. In J. Bakken Jepsen, G. De Clerck, S. Lutalo-Kiingi, & W. B. McGregor (Eds.), Sign languages of the world: A comparative handbook (pp. 105–128). De Gruyter.

- Schlenker, P. (2020). Gestural grammar. Natural Language & Linguistic Theory, 38(3), 887–936. https://doi.org/10.1007/s11049-019-09460-z

- Schwartz, R. G., & Leonard, L. B. (1984). Words, objects, and actions in early lexical acquisition. Journal of Speech, Language, and Hearing Research, 27(1), 119–127. https://doi.org/10.1044/jshr.2701.119

- Sheng, L., & McGregor, K. K. (2010). Object and action naming in children with specific language impairment. Journal of Speech, Language, and Hearing Research, 53(6), 1704–1719. https://doi.org/10.1044/1092-4388(2010/09-0180)

- Speer, N. K., Zacks, J. M., & Reynolds, J. R. (2007). Human brain activity time-locked to narrative event boundaries. Psychological Science, 18(5), 449–455. https://doi.org/10.1111/j.1467-9280.2007.01920.x

- Strickland, B., Geraci, C., Chemla, E., Schlenker, P., Kelepir, M., & Pfau, R. (2015). Event representations constrain the structure of language: Sign language as a window into universally accessible linguistic biases. Proceedings of the National Academy of Sciences, 112(19), 5968–5973. https://doi.org/10.1073/pnas.1423080112

- Tenny, C. (1994). Aspectual roles and the syntax-semantics interface. Kluwer.

- Trueswell, J. C., & Papafragou, A. (2010). Perceiving and remembering events cross-linguistically: Evidence from dual-task paradigms. Journal of Memory and Language, 63(1), 64–82. https://doi.org/10.1016/j.jml.2010.02.006

- Vendler, Z. (1967). Linguistics in philosophy. Cornell University Press.

- Verkuyl, H. (1972). On the compositional nature of aspects. Reidel.

- Wilbur, R. B. (2003). Representations of telicity in ASL. Chicago Linguistic Society, 39(1), 354–368.

- Wilbur, R. B. (2005). A reanalysis of reduplication in American Sign Language. In B. Hurch (Ed.), Studies in reduplication (pp. 593–620). de Gruyter.

- Wilbur, R. B. (2008). Complex predicates involving events, time, and aspect: Is this why sign languages look so similar? In J. Quer (Ed.), Signs of the time: Selected papers from TISLR 2004 (pp. 217–250). Signum Press.

- Wilbur, R. B. (2009). Productive reduplication in ASL, a fundamentally monosyllabic language. In M. Kenstowicz (Ed.), Data and Theory: Papers in Phonology in Celebration of Charles W. Kisseberth, a special issue of Language Sciences (Vol. 31, pp. 325–342).

- Wilbur, R. B. (2010). The semantics-phonology interface. In D. Brentari (Ed.), Cambridge language surveys: Sign languages (pp. 357–382). Cambridge University Press.

- Wilbur, R. B., Klima, E. S., & Bellugi, U. (1983). Roots: On the search for the origins of signs in ASL. Chicago Linguistic Society, 19, 314–336.

- Wilbur, R. B., Malaia, E., & Shay, R. A. (2012). Degree modification and intensification in American Sign Language adjectives. In M. Aloni, V. Kimmelman, F. Roelofsen, G. W. Sassoon, K. Schulz, & M. Westera (Eds.), Logic, language and meaning (pp. 92–101). Springer.

- Zacks, J. M., Braver, T. S., Sheridan, M. A., Donaldson, D. I., Snyder, A. Z., Ollinger, J. M., Buckner, R. L., & Raichle, M. E. (2001). Human brain activity time-locked to perceptual event boundaries. Nature Neuroscience, 4(6), 651–655. https://doi.org/10.1038/88486

- Zacks, J. M., & Swallow, K. M. (2007). Event segmentation. Current Directions in Psychological Science, 16(2), 80–84. https://doi.org/10.1111/j.1467-8721.2007.00480.x

- Zacks, J. M., Swallow, K. M., Vettel, J. M., & McAvoy, M. P. (2006). Visual motion and the neural correlates of event perception. Brain Research, 1076(1), 150–162. https://doi.org/10.1016/j.brainres.2005.12.122

- Zacks, J. M., & Tversky, B. (2001). Event structure in perception and conception. Psychological Bulletin, 127(1), 3–21. https://doi.org/10.1037/0033-2909.127.1.3

- Zacks, J. M., Tversky, B., & Iyer, G. (2001). Perceiving, remembering, and communicating structure in events. Journal of Experimental Psychology: General, 130(1), 29–58. https://doi.org/10.1037/0096-3445.130.1.29