?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Non-literal interpretations of implausible sentences such as The mother gave the candle the daughter have been taken as evidence for a rational error-correction mechanism that reconstructs the intended utterance from the ill-formed input (…gave the daughter the candle). However, the good-enough processing framework offers an alternative explanation: readers sometimes miss problematic aspects of sentences because they are only processing them superficially, which leads to acceptability illusions. As a synthesis of these accounts, I propose that conscious rational inferences about errors on the one hand and good-enough processing on the other are competing latent processes that simultaneously occur within the same comprehender. In support of this view, I present data from a two-dimensional grammaticality/interpretability judgment task with different types of subtly ill-formed sentences. Both conscious rational inference and good-enough processing predict positive interpretability judgments for such sentences, but only good-enough processing also predicts positive grammaticality judgments. By fitting a lognormal race model jointly to judgments and response latencies, I show that conscious rational inference and good-enough processing, as well as purely grammar-driven processing, actively trade off with each other during reading. Furthermore, individual differences measures reveal that participant traits such as linguistic pedantry, interpretational charity, and analytic/intuitive cognitive styles contribute to variability in the processing patterns.

Introduction

Consider the sentence in (1):

| (1) | The mother gave the candle the daughter. | ||||

Depending on one's point of view, this sentence is either implausible or ungrammatical. If the sentence is interpreted literally, it means that the daughter was given to the candle. If the sentence is not interpreted literally, and we assume that the utterer meant to say that the candle was given to the daughter, they must have either swapped the order of the arguments by mistake, or forgotten the word to before the daughter. Furthermore, in a spoken conversation, it is also possible that the comprehender may have missed or misheard a word. It has been suggested that language users make these kinds of inferences about possible speech errors and other communicative slips all the time, in order to cope with the noisiness inherent in everyday communication (Gibson et al., Citation2013; Levy, Citation2008b).Footnote1 This behaviour is rational in the sense that it is purposive (Chater & Oaksford, Citation1999): the language user's goal is to reconstruct the intended message by making use of prior knowledge about sensible things that people might say. By combining this knowledge with the (subjective) probability of different kinds of errors, they can recover the most likely interpretation. In the case of (1), the inference would be that it was probably the candle that was given to the daughter, given the plausibility of the scenario and the plausibility of an argument swap as a possible speech error (Poppels & Levy, Citation2016).

The pure “rationalist” perspective of language processing assumes an idealised comprehender with perfect knowledge of language statistics (Gibson et al., Citation2013) who is not bounded by cognitive resource constraints. Levy (Citation2008b) assumes that the error-correction process in cases like (1), where mentally editing the input yields an a-priori more plausible analysis, causes additional mental effort compared to cases in which error correction is not needed or not possible (see also Chen et al., Citation2023; Gibson et al., Citation2013; Levy et al., Citation2009; Ryskin et al., Citation2021).Footnote2 It is thus assumed that comprehenders may go to great lengths to find out what a given sentence is most likely supposed to mean: they may invest more cognitive resources into mental error correction (Levy, Citation2008b), and/or reread parts of the sentence (Levy et al., Citation2009). This assumption may not always be realistic.

A longstanding and influential line of work in decision-making research has highlighted the fact that human rationality is bounded by constraints such as time pressure, incomplete information, and fluctuating motivation (e.g. Gigerenzer & Selten, Citation2002; Selten, Citation1990; Simon, Citation1972). Drawing from this literature, the “good enough” processing framework (e.g. Ferreira & Patson, Citation2007) in psycholinguistics has highlighted the goal of saving cognitive resources by occasionally omitting effortful processing steps. In the case of (1), this could mean that the comprehender only computes a “bag of words” style parse of the sentence and otherwise mostly relies on prior event plausibility (Kuperberg, Citation2016; Paape et al., Citation2020), fails to register the absence of the word to, and/or fails to actively monitor their comprehension (Glenberg et al., Citation1982).

Due to the shared prediction that readers sometimes adopt non-literal interpretations and partly rely on event plausibility, it has historically proven difficult to disentangle the predictions of the good-enough processing framework from those of the rational inference framework (Brehm et al., Citation2021). For this reason, some authors have treated rational inference as a subtype of good-enough processing (e.g. Dempsey et al., Citation2023; Goldberg & Ferreira, Citation2022). However, a closer look at the assumptions and predictions of the two frameworks reveals important differences. In contrast with the view that examples like (1) activate a potentially costly error correction mechanism, good-enough processing predicts that such sentences can often be processed with relatively little effort, assuming that the actual compositional tructure is simply ignored or at least heavily downweighted.

Good-enough processing incorporates CitationSimon's (Citation1955; Citation1956) concept of satisficing: it is assumed that readers do not fully optimise their interpretation processes in the sense that they take all available information into account, but may instead settle for an imperfect representation of a given sentence once their current aspiration level is reached (Christianson, Citation2016; Ferreira et al., Citation2009). When proper incentives to process utterances deeply and attentively are lacking, readers may save cognitive resources by partly ignoring the syntactic structure of a sentence and adopting a superficially plausible reading (Christianson et al., Citation2010; Ferreira, Citation2003), failing to revise initial misinterpretations in the face of disambiguating material (e.g. Christianson et al., Citation2001), or underspecifying their syntactic analysis of a sentence so that its semantic representation remains vague (Dwivedi, Citation2013; Swets et al., Citation2008; von der Malsburg & Vasishth, Citation2013).

It is clear that rational inference and good-enough processing serve different, potentially conflicting goals: to reconstruct the intended meaning of a sentence by invoking additional processes beyond the “normal” parsing of the literal string, or to save cognitive resources by omitting some parts of “normal” parsing. Given that both goals are plausible drivers of human reading (and listening) behaviour, how can they be reconciled? One possibility is to move away from the focus on average behaviour that dominates most of psycholinguistics (Yadav et al., Citation2022), and which may obscure a more complex reality: reconstructive and effort-saving mechanisms may be active to different degrees across different individuals, or within the same individual at different times, or even concurrently (Brehm et al., Citation2021). Some speakers may set relatively low aspiration levels and often go with their “gut feeling” when interpreting utterances, while others may expend mental effort to try and reconstruct what the other person probably wanted to say.

Importantly, there likely exists a third type of individual or processing mode that is rarely discussed in the literature: people who are pedantic – which I use neutrally here – in the sense that they are completely faithful to the linguistic stimulus, and who take sentences like (1) literally, responding with “That's nonsense!”, “I don't know what you're trying to say!”, or “What an unusual thing to happen!”. Literal interpretations of implausible sentences are robustly attested (e.g. Ferreira, Citation2003; Gibson et al., Citation2013) and have been linked to high verbal working memory (Bader & Meng, Citation2018; Meng & Bader, Citation2021; Stella & Engelhardt, Citation2022). A completely input-faithful speaker would arguably be an embodiment of pure grammatical competence in the Chomskyan sense (Wray, Citation1998), which makes literal responses theoretically highly interesting. Any realistic model of sentence comprehension should thus take into account that different people or even one and the same person may, depending on the situation, be “inferencers”, “slackers”, or “pedants”.

How can each of these processing “modes” be identified? One promising avenue is to use error awareness as an indicator. In the “slacker” mode of processing, ill-formed sentences should be very likely to pass unnoticed. Such cases are known as linguistic illusions, where an ungrammatical sentence passes as grammatical and/or an implausible sentence passes as plausible (e.g. Muller, Citation2022; Phillips et al., Citation2011; Sanford & Sturt, Citation2002). The rational inference account is underspecified with regard to error awareness: Levy (Citation2008b, p. 237) states that a copy editor needs to “notice and (crucially) correct mistakes on the printed page” but also that “in many cases, these types of correction happen at a level that may be below consciousness – thus we sometimes miss a typo but interpret the sentences as it was intended” (see also Huang & Staub, Citation2021b).

That rational inference can be conscious is implied by studies that have used highly explicit tasks such as retyping of sentences (Ryskin et al., Citation2018) or judging how likely one sentence is to be changed into a different one due to a speech error (Zhang, Ryskin, et al., Citation2023). There is also evidence that error correction via rational inference leads to increased P600 amplitudes (Li & Ettinger, Citation2023; Ryskin et al., Citation2021), which have been linked to conscious detection of anomalies during reading (Coulson et al., Citation1998; Rohaut & Naccache, Citation2017; Sanford et al., Citation2011). Crucially, while the additional processing steps involved in rational inference may not always be conscious, they should be comparatively more likely to rise to consciousness than good-enough processing, which implies the absence of one or more processing steps. Finally, the “pedantic” processing mode naturally predicts error awareness, in the sense that the utterance is identified as being ungrammatical or nonsensical.

In addition to differences between people, there are likely to be differences between error types that create variability in how a speaker responds to a sentence (Frazier & Clifton, Jr., Citation2015). Some sentences, such as the “depth charge” sentence No head injury is too trivial to be ignored (Wason & Reich, Citation1979) cause an illusion of acceptability almost invariably across individuals (Paape et al., Citation2020), while other sentence types may show large amounts of variability both across and within speakers (Christianson et al., Citation2022; Frank et al., Citation2021; Goldshtein, Citation2021; Hannon & Daneman, Citation2004; Leivada, Citation2020). The aim of the present study is to quantify this variability between speakers and sentences across six different constructions that are known to cause linguistic illusions, and to identify traits that correlate with speakers' dispositions towards rational inference or good-enough processing. lists the six constructions under investigation.

Table 1. Constructions used in the experimental study.

An additional contribution of the present work is the use of a computational modelling approach to investigate how responses to sentences are generated in real time. In the model presented below, conscious rational inference, good-enough processing and outright rejection of a sentence are treated as latent processes that compete and trade off with each other to produce a response. In combination with the empirical breadth of the experimental design, this allows for the comparison of the three processes across different linguistic constructions. Furthermore, the model allows for a systematic investigation of participant-level traits that affect each of the latent processes.

I will now introduce the logic of the experiment, followed by the description of the procedure, and finally the implementation of the computational model.

Experimental study

The sentence judgment study presented below had three main aims:

| (1) | To quantify the relative contributions of rational inference and good-enough processing to different linguistic illusions. | ||||

| (2) | To investigate individual-level trade-offs between rational inference and good-enough processing across different illusions. | ||||

| (3) | To investigate the effect of individual-level traits such as linguistic pedantry on rational inference, good-enough processing, and outright rejection of illusion sentences. | ||||

In order to achieve the first aim, the study used a novel two-dimensional sentence judgment task in which participants simultaneously judge whether they feel that they understand the sentences (“get it”/“don't get it”), in addition to whether they think that the sentences are formally correct (“correct”/“incorrect”). Under good-enough processing, readers should occasionally miss formal grammatical errors, resulting in the impression that illusion sentences are both well-formed and interpretable (“get it, correct”). Under rational inference, by contrast, it is plausible to assume that readers notice the errors – especially when instructed to look out for them – but can nevertheless reconstruct the presumably intended sentence (“get it, but incorrect”). I assume that the explicit task demands will cause all rational inferences to be conscious rather than non-conscious. This linking assumption appears justified given the aforementioned reliance on explicit tasks in the relevant literature on rational inferences, but I will later explore the possibility that some inferences may also be non-conscious. The remaining two judgment options (“don't get it, incorrect”/“don't get it, but correct”) are not covered in any depth by either theory, but may nevertheless show differences between illusions: readers may outright reject some ungrammatical constructions more readily than others.

To achieve the second aim, I analyse the correlations between the subject-level random effects in a hierarchical computational model. The model assumes that a given manipulation has an average effect across all participants, and that the individual effects for each participant are normally distributed around this average. Analysing the correlations between individual effects allows for statements of the form “Participants who show more evidence of good-enough processing for illusion X will show more evidence of good-enough processing for illusion Y”. Furthermore, and perhaps more interestingly, correlations can be analysed not only within but across response types: “Participants who show more evidence of good-enough processing for illusion X will show less evidence of drawing rational inferences for illusion Y”. Finding such negative correlations would strengthen the case for shared cognitive mechanisms across different illusions, and crucially also yield insights into whether and how these mechanisms compete within individuals.Footnote3 The presence of trade-offs would lend support to the notion that good-enough processing and rational inference serve conflicting goals, and suggest that both mechanisms draw on a shared pool of resources, as has been suggested in other models of conflict tasks (e.g. Lee & Sewell, Citation2024).

The third aim is to uncover the underlying factors that contribute to individual differences in the processing of illusion sentences by collecting additional measures outside of the sentence judgment task. The first measure I use is a questionnaire that covers linguistic pedantry, interpretational charity, motivation, and attention. The second measure comes from a syllogistic reasoning task with believable and unbelievable syllogisms, which is intended to uncover individual differences in cognitive style (Stupple et al., Citation2011; Trippas et al., Citation2018, Citation2015): a more analytic cognitive style “denotes a propensity to set aside highly salient intuitions when engaging in problem solving” (Pennycook et al., Citation2012, p. 335). Having an analytic cognitive style should increase an individual's tendency to favour analytical grammar rules and logic over “quick and dirty”, good-enough processing of illusion sentences.

It is important to note that there is broad agreement in the literature that the illusion constructions tested in the current study are ill-formed at both the prescriptive and the descriptive level: there is no variety of English, vernacular or otherwise, that “allows” sentences with missing lexical verbs or sentences in which direct and indirect objects appear in reverse order without an added preposition (e.g. The mother gave the candle the daughter). The errors tested here are thus not “native errors” in the sense of Bradac et al. (Citation1980), that is, constructions that speakers might use but that they are taught to avoid in formal education, or on which the majority of naive and expert judgments fundamentally disagree (with the possible exception of depth charge sentences; see general discussion). Consequently, while the judgments of formal correctness elicited in the current study may activate participants' prescriptive attitudes to some degree – which is part of the rationale behind the task – they also plausibly reflect their natural intuitions with regard to what constitutes a well-formed sentence of English. The instructions (reproduced below) were deliberately designed to strike a balance between allowing for superficial, good-enough processing on the one hand and triggering error awareness on the other: no comprehension questions were asked and participants were explicitly instructed not to “overanalyze” the sentences, but they were also told to be on the lookout for errors.

It should be stressed that the goal of the present design is not to establish that the illusions in question exist, that is, that the illusion variants of the different constructions are more acceptable/interpretable than their ungrammatical counterparts; against the background of the existing literature, this is taken as a given. By contrast, the present design is focussed mainly on the comparison between illusion sentences and their grammatical counterparts: good-enough processing predicts “get it, correct” judgments for both illusion sentences and their grammatical controls, whereas rational inference predicts “get it, incorrect” judgments for illusion sentences but not for controls.

Participants

Participants were tested in three groups that were recruited over Prolific (https://www.prolific.co; Palan & Schitter, Citation2018). Group 1 completed only the sentence judgment task, Group 2 additionally completed the questionnaire, and Group 3 additionally completed the questionnaire and the syllogistic reasoning task. Group 1 consisted of 100 self-identified native speakers of English currently living in the US. The first 50 participants were initially paid £2.83 eachFootnote4, which was adjusted to £3.55 after review, as the completion time estimate had been too low. The remaining 50 participants were paid £3.55 each. The data of one participant were subsequently removed because response times were consistently too short to be realistic, leaving data from 99 participants. Groups 2 and 3 consisted of 157 participants and 100 participants, respectively, recruited from the same subject pool. Participants in Group 2 were paid £3.55 each while participants in Group 3 were paid £5.65 each due to the longer experiment duration.

Materials

12 inversion sentences were adapted from Cai et al. (Citation2022). Inversion sentences appeared in two conditions, the normal condition and the inverted condition, as shown in (2). In 6 items, both the inverted sentence and the control sentence used the direct object construction, while in the other 6 items both sentences used the prepositional object construction with to.

| (2) | The mother gave | ||||

12 agreement attraction sentences were adapted from Parker and An (Citation2018). Agreement attraction sentences appeared in three conditions, as shown in (3). All participants saw the waitress/girls (attraction) condition. In Group 1, 50 participants saw only the waitress/girl (ungrammatical) condition as a control, while the other 50 participants saw only the waitresses/girls (grammatical) condition as a control. Group 2 saw only the grammatical control condition, while Group 3 saw no agreement attraction sentences at all.Footnote5

| (3) | The | ||||

12 depth charge sentences were adapted from O'Connor (Citation2015). Depth charge sentences appeared in three conditions, as shown in (4). All participants saw the no/too (illusion) condition. In Group 1, 50 participants saw only the no/so condition (sensible) as a control, while the other 50 participants saw only the some/too condition (not sensible) as a control. The remaining participants saw only the so condition as a control.

(4) In Maria's class, …

(a) … no test is so difficult that she would fail it.

(b) … no test is too difficult to fail.

(c) … some tests are too difficult to fail.

The depth charge illusion is often difficult to spot even for trained linguists (Wason & Reich, Citation1979). The crucial insight is that the degree phrase too difficult to fail is pragmatically incongruous (compare too easy to fail). The illusory property of this construction is that having a negation at the beginning of the sentence (no test) can completely mask the incongruity. However, in logical terms, asserting that no test has the property of being too difficult to fail should not repair the incongruity (Paape et al., Citation2020).

12 comparative illusion sentences were adapted from O'Connor (Citation2015). Comparative illusion sentences appeared in two conditions, the plural condition (grammatical), and the singular condition (ungrammatical), as shown in (5). All comparative illusion sentences contained a sentence-final subordinate clause (because …; due to …) to make them sound more natural.

| (5) | Last fall, more engineers relocated to San Francisco than | ||||

12 missing VP sentences were taken from Langsford et al. (Citation2019). Missing VP sentences appeared in two conditions, the VP2 condition (grammatical) and the no-VP2 condition (ungrammatical), as shown in (6).

| (6) | The ancient manuscript that the grad student who the new card catalog had confused a great deal | ||||

12 NPI sentences were adapted from Parker and Phillips (Citation2016). NPI sentences appeared in three conditions, as shown in (7). All participants saw the the authors/no critics (embedded negation) condition. 50 participants saw only the no authors/the critics condition (grammatical) as a control, while the other 50 participants saw only the the authors/the critics condition (ungrammatical) as a control. The remaining participants saw only the grammatical control condition.

| (7) |

| ||||

In addition to the 72 illusion and control sentences, there were also 24 fillers. Of these, 12 were garden-path sentences (e.g. The farm hand believed that while the fox stalked the geese continued to peck …), 6 contained “malaphors” or “idiom blends” (e.g. Bill wasn't the sharpest bulb in the box), and 6 were relatively long but well-formed sentences of different types.

Group 2 completed the same judgment task as Group 1, followed by an additional questionnaire that appeared at the end of the experiment. Participants were asked to what extent they agreed or disagreed with the following four statements:

| (1) | Doing the experiment was fun for me. | ||||

| (2) | It bothers me a lot when people use incorrect grammar. | ||||

| (3) | I usually assume that what people say makes sense. | ||||

| (4) | In my everyday life, when I read a text, I always pay close attention to every sentence. | ||||

There were five levels on the scale: “completely disagree”, “somewhat disagree”, “undecided”, “somewhat agree”, “completely agree”.

Group 3 completed a syllogistic reasoning task in addition to the judgment task and the questionnaire. The materials consisted of 64 syllogisms in four conditions resulting from crossing the factors validity (valid versus invalid) and believability (believable versus unbelievable). These factors were manipulated between items, that is, each syllogism appeared in only one condition. Examples for each condition are shown in (8). The structure of the syllogisms varied between items. Some materials were novel while others were adapted from previous studies (Goel & Vartanian, Citation2011; Hayes et al., Citation2022; Solcz, Citation2011).

| (8) (a) | Either the sky is blue or it is green. The sky is not green. Therefore, the sky must be blue. (valid, believable) | ||||

| (b) | All rabbits are fluffy. All fluffy creatures are tadpoles. Therefore, all rabbits are tadpoles. (valid, not believable) | ||||

| (c) | If an animal is a feline, then it purrs. If an animal purrs, then it is a cat. Therefore, if an animal is a cat, then it is a feline. (invalid, believable) | ||||

| (d) | Some sodas are beverages. All sodas are carbonated drinks. Therefore, some carbonated drinks are not beverages. (invalid, not believable) | ||||

Procedure

All subjects gave informed consent to participate in the study,Footnote6 which was run on the PCIbex farm (Schwarz & Zehr, Citation2021). Given the potential importance of task demands for the results, the experimental instructions are reproduced here in their entiretyFootnote7:

People often make mistakes when they speak or write. They will say things like “is sufficient enough for”, “pales next to comparison of”, or even “I'm going to get some bed”. Such utterances can be considered incorrect or nonsensical, but it is nevertheless clear what the person in question was trying to say. In this study, you are supposed to judge both the formal correctness of the sentences you will read, as well as indicate whether you know what the other person meant.

Some of the sentences will be very complex, and you may feel that you don't understand them, but still get the impression that they are formally correct. Sometimes you may be completely unsure. This is fine; you can just choose the appropriate answer option (“no idea”).

Don't “overanalyze” the sentences – try to read normally as much as possible. There will be no detailed comprehension test. We are mainly interested in whether you feel that you understood the sentence.

In each trial, the stimulus sentence was presented at the centre of the screen, with five possible judgment options shown directly below ():

The time elapsed from presentation of the sentence to choosing a response was recorded for each trial.Footnote8 There was no time limit. Responses could be chosen by either clicking on them or by pressing the appropriate number key. Sentences were rotated through the conditions in a Latin squares fashion. There were no practice trials. The median duration of an experimental session in Group 1 was 20 minutes. The median duration of an experimental session for Group 2 was 21 minutes.

In Group 3, the order of the sentence judgment task and the syllogistic reasoning task was counterbalanced across participants, so that 50 participants completed the reasoning task first and the remaining 50 participants completed the judgment task first. In the syllogistic reasoning task, participants were instructed to judge the syllogisms purely based on logic (“logically valid” versus “not logically valid”), and to assume that the premises were true, even if they didn't make sense. As for the judgment task, the entire syllogism was presented on the screen, with the response options shown underneath. At the end of the experiment, the same questionnaire as in Group 2 was administered. The median duration of an experimental session in Group 3 was 33 minutes.

Data preparation

The data were analysed in R (R Core Team, Citation2022). The reaction time and response data from all three participant groups were combined into one data set. Trials with response times below 2 seconds or above 60 seconds were dropped, which resulted in a loss of 5% of the data. Additionally, trials with “no idea” responses were dropped, which resulted in a loss of 0.5% of the remaining data.Footnote9 The final data set contained 22,884 observations from 355 participants.

Descriptive results

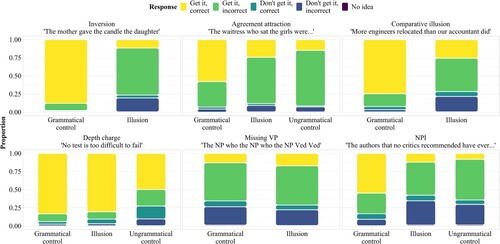

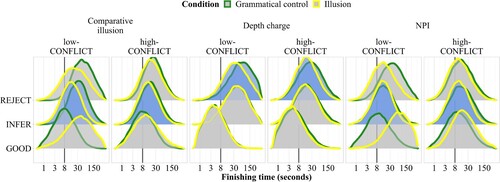

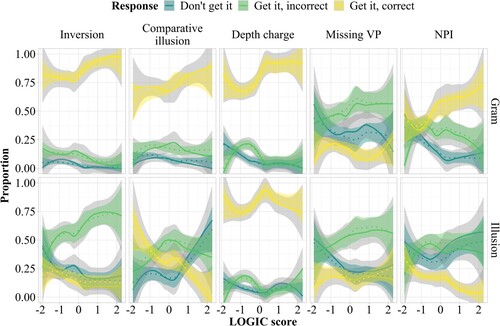

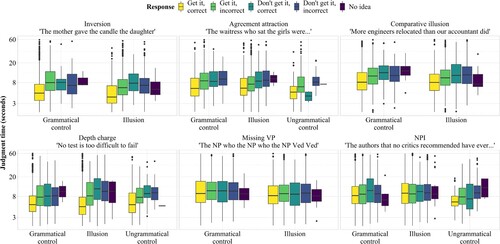

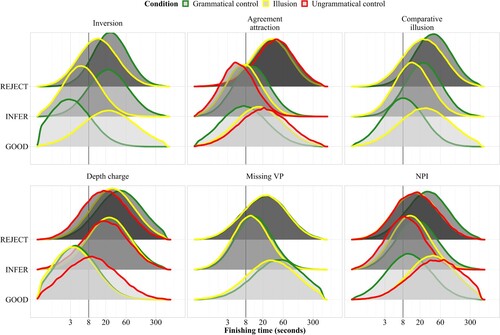

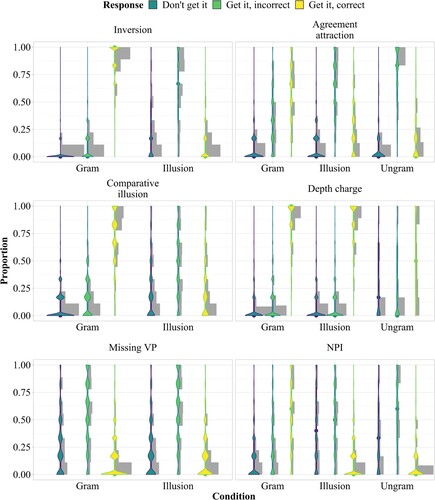

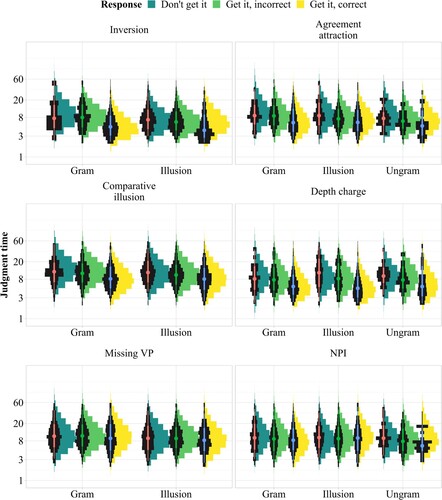

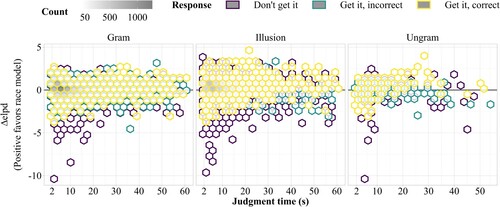

shows response proportions across constructions and conditions. shows judgment times (reading time + response selection time) across constructions, conditions, and response types.

Figure 3. Judgment times (reading time + response selection time) across constructions, conditions, and response types. Note that the y-axis is log-scaled.

A number of high-level observations can be made about the descriptive results:

Across constructions, “get it, incorrect” judgments are more common in the illusion conditions than “get it, correct” judgments, suggesting that rational inference is more common under the current instructions than good-enough processing. The only exception is the depth charge construction, which shows more than 75% “get it, correct” judgments.

Across constructions, even the fully grammatical control sentences show non-zero proportions of “incorrect” judgments. This tendency is especially pronounced for the agreement attraction sentences of Parker and An (Citation2018)Footnote10 and the NPI sentences of Parker and Phillips (Citation2016).

It is informative to compare the illusion conditions to both grammatical and ungrammatical control conditions. For agreement attraction sentences, the judgment pattern in the illusion condition is close to midway between the grammatical and ungrammatical conditions in terms of “correct” judgments, while for NPI sentences, the illusion condition is much closer to the ungrammatical condition. This is in line with the qualitative pattern observed by Xiang et al. (Citation2013) and Langsford et al. (Citation2019), who also compared the two constructions.

The missing VP illusion shows comparably high amounts of “get it, incorrect” and “don't get it, incorrect” judgments both in the grammatical and in the illusion condition. This is consistent with the assumption that multiple centre embeddings overload the parser's capacity (e.g. Gibson & Thomas, Citation1999).

For the depth charge illusion, the grammatical condition with so is almost indistinguishable from the illusion condition with too, which essentially patterns like a fully grammatical sentence. This result is unexpected if the depth charge illusion is the result of a rational inference mechanism, as recently argued by Zhang, Ryskin, et al. (Citation2023).

Across constructions, latencies tend to be shorter for “get it, correct” judgments, as would be expected if the sentence is either grammatical or an ungrammatical sentence is processed superficially. However, this pattern does not seem to hold for missing VP and NPI sentences, which casts doubt on the assumption that positive grammaticality judgments for illusion sentences are always the result of effort-saving mechanisms.

Another interesting observation is that “get it, incorrect” judgments are less common in illusion sentences compared to ungrammatical controls. This pattern would appear unexpected under the rational inference account's assumption that the non-literal readings of illusion sentences are due to their relative closeness to a fully grammatical neighbour sentence (e.g. Levy, Citation2008b), and thus higher “repairability” compared to ungrammatical sentences. However, it is not entirely clear how rational inferences relate to positive grammaticality judgments, given that previous studies used either comprehension questions, explicit measures of “editability”, and/or implicit measures such as ERPs (e.g. Gibson et al., Citation2013; Poliak et al., Citation2023; Ryskin et al., Citation2021; Zhang, Ryskin, et al., Citation2023). In terms of interpretation, it stands to reason that participants also attempt to assign meaning to ungrammatical non-illusion sentences, even though it should be more difficult to “repair” them. Thus, the “get it” judgments for ungrammatical control sentences are expected under rational inference. By contrast, an increase in positive grammaticality judgments (“correct”) for illusion sentences compared to ungrammatical controls is only expected if the “repairs” are pre-perceptual, so that there is no error awareness (Huang & Staub, Citation2021a, Citation2021b). However, if we instead assume that good-enough processing is responsible for the increased acceptability of illusion sentences, rational inference would indeed be observed less often in illusion sentences than in ungrammatical control sentences: in illusion sentences, good-enough processing will occasionally completely mask the error and prevent an error signal, so that no inference takes place.

Computational modelling

Preprocessing of individual differences predictors

Model-based preprocessing was applied to the individual differences measures from Groups 2 and 3 prior to entering them into the computational model. The questionnaire responses were subjected to a principal components analysis with polychoric correlations using the psych package (Revelle, Citation2023). The number of factors was set to 2. The resulting factor loadings are shown in . As the table shows, Factor 1 loaded positively on having fun during the experiment, being bothered by grammatical errors, and paying attention during reading, but loaded negatively on assuming that utterances typically make sense. Factor 2, by contrast, loaded positively on making this default assumption of sensibleness, but less positively on attention, and negatively on being bothered by grammatical errors.

Table 2. Factor loadings from the principal components analysis of the questionnaire data.

Given these loadings, I will assume that Factor 1 captures motivation to rigorously apply grammatical rules and pay attention to linguistic detail, which I will call pedantry, while Factor 2 captures the default assumption that sentences are sensible without much regard for correct grammar, which I will call charity. These labels are purely descriptive and should be treated with some caution. As shows, the two factors are not complementary: both load positively on enjoying the experiment, and both also load positively on attention, though the association is stronger for pedantry. If the proposed labelling is on the right track, individuals who score high on pedantry should show less good-enough processing for illusion sentences, meaning fewer “correct” responses, while individuals who score high on charity should give more “correct” responses and fewer “don't get it” responses.

For both factors, the factor scores for each participant were entered as scaled, centred predictors into the main analysis. The data from the syllogistic reasoning task completed by Group 3 were analysed using a hierarchical logistic regression model in brms (Bürkner, Citation2017). The factors validity and believability were sum-coded for this analysis. Response time was also entered as a predictor to account for speed-accuracy trade-off. The subject-level random effects for validity, believability and their interaction were extracted from this model and entered into the main analysis as centred, scaled predictors. I will call these predictors logic, belief, and conflict.

Modeling rationale and implementation

In psycholinguistics, so-called “online” measures such as reading times are usually analysed separately from “offline” measures such as acceptability ratings. In cognitive psychology, by contrast, the standard is to look at the measured latencies and observed responses in a task as reflecting the same mental process, which is often conceptualised in terms of evidence accumulation or sequential sampling (Evans & Wagenmakers, Citation2019; Ratcliff et al., Citation2016). The core assumption of evidence accumulation models is that the time spent processing a given stimulus – modulo the time required to, say, press a keyboard key – reflects the time needed to extract enough information from the stimulus to be able to respond to it. In the context of a sentence judgment study, one can assume that while the participant reads a given sentence, they are extracting information that is relevant to the task of making the judgment: identifying words, accessing their meaning, (possibly) computing the compositional structure of the sentence, consulting their mental grammar, and potentially making inferences beyond the literal input.

One way of modelling the accumulation of evidence is to assume a race between noisy accumulators that compete to produce a response. Race models have been applied with great success to many decision tasks in cognitive psychology (Heathcote & Matzke, Citation2022). A race model that is relatively straightforward to implement and interpret is the lognormal race model of Rouder et al. (Citation2015). In the lognormal race model, the different response options accrue evidence in parallel, with the fastest option winning and determining the observed response in a given trial. Under this model assumption, each trial also yields information about the unobserved responses, given that they must have been slower than the observed response. It is an open question whether conceiving of good-enough processing, rational inference and rejection as parallel processes is warranted; they could, in principle, occur sequentially, or there could be “switching” between different modes of processing within a trial (Ferreira & Huettig, Citation2023). Nevertheless, the parallelism assumption is a useful simplification, and does not preclude the possibility that the latent processes have considerable overlap in terms of the information they make use of (see below).

The notion that different mechanisms are available to derive sentence meaning, and that these mechanisms operate in parallel, is well-established in the literature, especially in the context of “heuristic” versus “algorithmic” interpretations (e.g. Dempsey et al., Citation2023; Ferreira, Citation2003; Ferreira & Huettig, Citation2023; Kuperberg, Citation2007). For instance, most variations of the good-enough processing approach do not assume that the sentence processor only applies superficial heuristics, but that heuristics and fully compositional processing are carried out in parallel. Heuristic processing is often, but not necessarily always faster than fully compositional processing (Dempsey et al., Citation2023), which may outperform heuristic processing in cases where the input cannot be easily coerced to fit into a “template” such as agent-verb-object (Ferreira, Citation2003). The novel aspect of my model is that a third parallel route to meaning, namely rational inference, is assumed.

What is crucial to the model is the assumption that the responses in the experimental task can be reliably mapped to the proposed processes. Given the recent proposal that rational inference can be pre-perceptual (Huang & Staub, Citation2021a, Citation2021b), there is a possibility that “get it, correct” responses may be produced by mental “repairs” that the reader takes no conscious notice of. However, the conscious nature of the experimental task should make this type of non-conscious rational inference unlikely. Nevertheless, I compare the race model against a serial model that incorporates non-conscious rational inference using cross-validation, and find that the race model has better predictive fit to the data.

I implement the lognormal race model in Stan (Stan Development Team, Citation2023) and fit it to the judgments and their associated reading/judgment times, that is, the time taken to read the entire sentence and produce a response. This measure is, of course, very coarse; the “atomic” events of reading such as fixations on single words, and possibly rereading of (parts of) the sentence, are not captured. However, the model is not intended as a model of these lower-level processes, but rather treats them as means to an end; the goal is to accumulate evidence to reach a decision about a judgment: does the sentence make sense, and is it grammatically well-formed?

As a further simplification, the two types of “don't get it” responses (“correct”/“incorrect”) are coded as a single response category, so that there are three accumulators in the model: reject, infer, and good. Their finishing times FT in a given trial i are each sampled from a lognormal distribution with mean μ and standard deviation σ:

(9)

(9) The reject accumulator is taken to represent complete failure to understand the sentence, the infer accumulator is taken to represent conscious rational inference processes, and the good accumulator is taken to represent good-enough processing. The means

of the finishing time distributions are the main parameters of interest, as differences in μ between conditions are assumed to reflect systematic variation. By contrast, the σ parameters index noise in the evidence accumulation process, which leads to unsystematic variation in the resulting judgments.

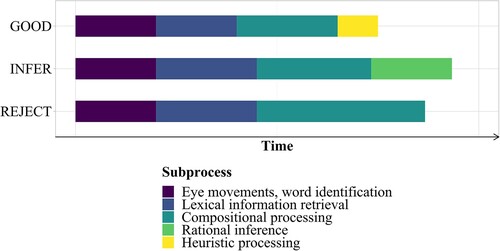

As the stimulus sentence is the same for each assumed latent process, some percentage of the finishing time across the three accumulators for each trial is going to be identical. For instance, each of the response options presumably requires the words in the sentence to be identified by fixating them and accessing the mental lexicon. However, things may already start to diverge at this point: under good-enough processing, less information may be retrieved from the lexicon compared to the other racing processes, which should make the accumulator faster on average. By contrast, rational inference by assumption involves additional reasoning steps beyond the veridical encoding of the input, meaning that the infer accumulator must have some percentage of its finishing time devoted to these steps, presumably slowing it down relative to the good accumulator. schematically shows the contributions of the component processes to the total accumulation time of each accumulator. The different μ parameters encode these base differences in speed between accumulators, which are additionally assumed to be affected by the experimental manipulations and individual differences between participants.

Figure 4. Contributions of component processes to the finishing times of the three accumulators in a hypothetical experimental trial.

The three accumulators are assumed to be active across grammatical, ungrammatical, and illusion sentences. This assumption is not novel, but is rarely spelled out in the literature. Both the rational inference framework and the good-enough processing framework aim to cover all human communication, not just non-literal interpretations of subtly ill-formed sentences. Shallow meaning templates can, in principle, be applied to any type of sentence with varying rates of success (Ferreira, Citation2003), while rational inference may also occur for well-formed sentences (Keshev & Meltzer-Asscher, Citation2021; Levy, Citation2008b, Citation2011). Differences between grammatical, ungrammatical and illusion sentences, as well as between different constructions, are quantitative rather than qualitative, in the sense that some sentences are closer to being fully well-formed than others.

Against this background, changes in average accumulator speed are assumed to reflect how quickly the corresponding process (good, infer, reject) extracts and utilises information from the stimulus. Accumulation speed depends on the individual reader's cognitive resource allocation and on the properties of the sentence. For instance, the superficial, frequency-based templates associated with good should have the highest fit to grammatical control sentences, followed by illusion sentences, followed by ungrammatical control sentences. By contrast, rational inference should not accrue much evidence, and hence not be allocated a large amount of cognitive resources, in grammatical controls compared to illusion sentences or ungrammatical controls: assuming that an error signal is needed to trigger the inference process (Levy, Citation2008b), such a signal should be less likely to be generated if the sentence is prescriptively well-formed. Furthermore, even if an error signal is generated, any attempt to “repair” the sentence would fail, which would presumably also be reflected in slower accumulation times for infer.

In this context, a somewhat puzzling observation based on the descriptive statistics is that some participants give “get it, incorrect” or even “don't get it” judgments for grammatical sentences, which begs the question why the infer and reject accumulators should ever win if the sentence is fully well-formed. One possibility is that these judgments are uninformative and should be captured by the model's noise component as the result of random fluctuations in the accumulation process. Another possibility is that participants find some of the grammatical sentences stylistically odd, and/or that the experimental design may have created some amount of hypervigilance. Especially for missing VP sentences, a third possibility is that participants are unable to parse the well-formed structure, and therefore reject it or try to infer the meaning rather than compostionally deriving it. Ultimately, the question of why rejection rates are rarely zero for sentences that are designed to be fully grammatical applies to may (psycho)linguistic studies, and should be given more attention in the literature, especially if the aim is to design naturalistic stimuli. Nevertheless, the presence of a grammatical baseline is important for gauging “how far away” illusion sentences are from it in terms of acceptability and interpretability, even more so if the baseline does not show perfect acceptability either.

The mathematical structure of for each accumulator x in trial i is that of a linear mixed-effects model with an intercept α, slopes β for the predictors

, and normally distributed by-subject and by-item adjustments u and w for both intercepts and slopes. Predictors include the factor-coded conditions within each construction type, the individual differences measures, and their interactions. During fitting, the observed response in a given trial determines which accumulator's α and β parameters are assumed to have produced the observed reaction time. As non-observed responses from the remaining accumulators must have been slower by assumption, some information is also gained regarding their corresponding α and β parameters.Footnote11

(10)

(10) The observed reaction time in a given trial i is the finishing time of the winning accumulator, plus a shift parameter estimated from the data that represents non-decision time, which can vary between subjects.

(11)

(11) IF…ELSE constructions in Stan are used to exclude the individual differences predictors where no data is available, that is, for the syllogistic reasoning predictors in Groups 1 and 2, and for the questionnaire-based predictors in Group 1. To account for differences in sentence length between constructions, sentence length in characters is added as a predictor to each μ.

Given the assumptions of the lognormal race model, if one accumulator becomes faster in a given condition, there will be more responses of the associated type, and fewer responses in the other response categories. This is true even if the finishing times of the other accumulators are unaffected by the manipulation, because, like in a real-life race, it is the relative speed of the competitors that determines the winner. Thus, if a given manipulation speeds up the infer accumulator, it does not follow that the good and reject accumulators are slowed down by the same amount, or even slowed down at all. Unlike in competition-based models, where strengthening of one response option automatically implies weakening of the other options through inhibition, race models in principle allow response options to accrue evidence completely independently from one another (Teodorescu & Usher, Citation2013). However, whether the racing processes are truly independent is a question of implementation (Heathcote & Matzke, Citation2022), and ultimately a question of whether assuming independence is plausible and empirically warranted. For instance, in the present implementation, the accumulators share a common global intercept, as well as shared hierarchical adjustments to the finishing times, which are meant to capture global differences in information extraction speed between participants.

Despite some shared structure across accumulators, the focus of the present work is on disentangling rational inference and good-enough processing, and on identifying possible trade-offs between the different mechanisms. Due to the structure of the judgment task, giving one response naturally precludes giving another response in the same trial. However, through the inclusion of reaction times in the model, it can be inferred if the other responses are being “actively” or “passively” suppressed. Active trade-offs would entail that the data are more likely under a model where the μ parameters of multiple accumulators are affected by a manipulation, whereas passive trade-offs would entail that only one accumulator's μ parameter is affected. In such cases, there will be more responses of the given type and fewer responses of the other types, but no indication in the data that a speedup in one accumulator is accompanied by a slowdown in the others. Individual-level trade-offs are modelled via random-effects correlations in the model. The empirical question is whether, for instance, a subject with a strong reject tendency, as reflected by a hierarchical adjustment that encodes faster finishing times for that accumulator, will show slower finishing times for the good and/or infer accumulators.

The fundamental ability of the model to correctly identify active trade-offs was established by means of a simulation study. The code is available in the online supplementary materials. Response and latency data were generated from a race process with three accumulators. Experimental manipulations were simulated that either affected multiple accumulators in opposite directions or just one of the accumulators, and the model was fitted to the generated data. Despite similar descriptive patterns in the summary statistics, the model was able to correctly recover the data-generating parameters in all cases.

Modeling results, cross-validation, and discussion

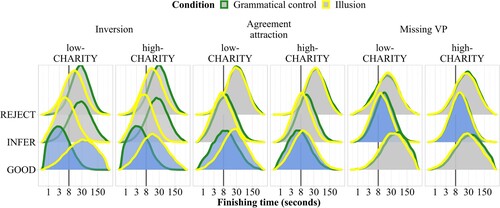

Population-level effects

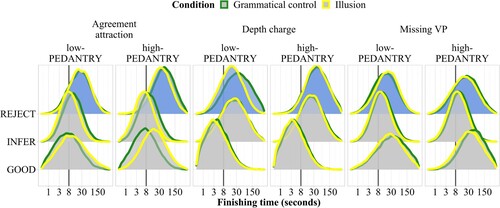

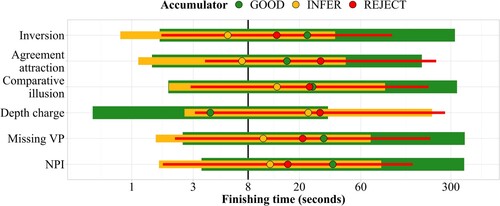

shows the predicted finishing times of the three accumulators in the lognormal race model across constructions and conditions. The figure allows for several types of visual comparisons: each panel shows one construction, while the outline colours show the different conditions (illusion versus control). For each accumulator (good, infer, reject), the conditions can be compared within a given construction, with shorter finishing times meaning more responses of that type. In addition, finishing times can be compared across constructions by visually comparing the respective panels. For instance, finishing times for the good accumulator in the grammatical control condition (green) are faster for inversion sentences than for comparative illusion sentences, so that more and faster “get it, correct” judgments are predicted for that condition, in line with the descriptive results.

Figure 5. Posterior predictive distributions of finishing times (250 samples) of the three accumulators across constructions and conditions. Faster finishing times correspond to a higher expected number of responses of the respective type. Finishing times more than ± 2.5 SD away from the log mean have been removed. Reference line added at 8 seconds. Note that the x-axis is log-scaled.

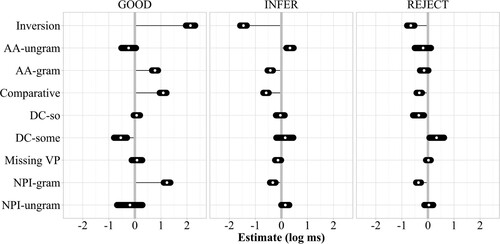

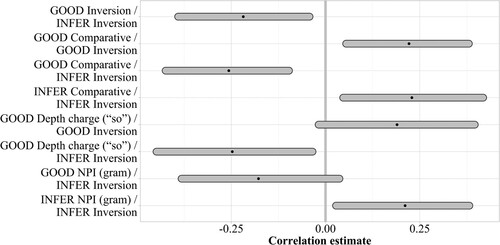

shows highest density intervals for the slope parameters (differences between conditions) across constructions.Footnote12 Across all constructions, the estimated mean finishing times of the accumulators are slower than the empirically observed judgment times, which follows from the model assumptions: in trials where a given response is not observed, it is assumed that the associated accumulator was slower than the observed response time.

Figure 6. 95% highest density intervals of slope parameters across all constructions on the log ms scale. Across all constructions, the slope is the difference between the illusion condition and the indicated control condition(s). Positive estimates correspond to a slowdown of the accumulator, that is, fewer responses of the associated category in the illusion condition. Reference line added at ms.

In general, the parameter estimates and predicted finishing times align well with the descriptive results.As seen in , the inversion construction shows the largest difference in judgments between the illusion and control conditions, followed by the comparative illusion and the NPI illusion. The agreement attraction effect is somewhat smaller, while for depth charge sentences and missing VP sentences the illusion and grammatical control conditions are almost indistinguishable, though the reject accumulator does show some graded sensitivity to the depth charge manipulation. shows the distribution of accumulator finishing times for the illusion conditions only across constructions. The pattern confirms that the depth charge illusion behaves differently from the other constructions, as it shows a considerable speed advantage for the good accumulator over the other accumulators, leading to many fast “get it, correct” judgments.

Figure 7. Mean ± 2 SD of predicted accumulator finishing times (250 samples) for the illusion condition across constructions. Faster finishing times correspond to a higher expected number of responses of the respective type. Finishing times more than ±2.5 SD away from the log mean have been removed. Reference line added at 8 seconds. Note that the x-axis is log-scaled.

Most constructions show active trade-offs between the accumulators at the population level: compared to the grammatical baseline, the inversion manipulation slows down good while simultaneously speeding up infer and reject, as do the comparative illusion manipulation, the agreement attraction manipulation, and the NPI illusion manipulation. Compared to the ungrammatical baseline, the agreement attraction, depth charge, and NPI illusion manipulations tend to speed up good while slowing down infer. The credible intervals of these comparisons are somewhat wider because there is less data for the ungrammatical conditions, but the pattern is in line with the descriptive results in that there are actually fewer “get it, incorrect” judgments in the illusion conditions compared to the ungrammatical conditions. The presence of active trade-offs is informed by the latency data: based only on the responses, it would be unclear if one process dominates the response pattern because it is strengthened by a manipulation, or because the competing processes are weakened.

reject is the slowest accumulator on average, being [11 s, 26 s] (95% highest density interval) slower than good across all constructions. Across illusion and control sentences, infer accumulates evidence more slowly than good by [0.2 s, 9 s] on average. In terms of variability, the opposite picture emerges: reject shows the lowest amount of variability in finishing times at [11 s, 12 s], followed by infer [14 s, 15 s], and finally good [20 s, 23 s] with the highest amount of variability. The good accumulator being fastest but less consistent in its speed of evidence accumulation than the other accumulators is in line with the basic assumptions of the good-enough processing framework, which assumes that readers create imperfect, “quick and dirty” sentence representations in some proportion of trials.

Increasing sentence length in characters by one standard deviation slows down reject by [0.2 s, 4.5 s], and good by [3.8 s, 10.1 s], while the effect on infer crosses zero: [−0.9 s, 2.4 s]. This pattern is somewhat unexpected, given that longer sentences should, in principle, offer more opportunities for errors and “repairs”, but recall that there is a passive trade-off between the accumulators: reject and good being slowed down in longer sentences results in more trials in which infer wins, thus predicting more “get it, incorrect” judgments compared to shorter sentences.

In Group 3, receiving the logic task prior to the sentence judgment task as opposed to the other way around also affects the speed of the accumulators: doing the logic task first speeds up reject by [−24.2 s, −3.2 s] and good by [−7.2 s, −3.9 s]. The order effect on infer crosses zero: [−6.5 s, 2.8 s]. Due to trade-off between the accumulators, this means that there were fewer “get it, incorrect” answers but more “don't get it” and “get it, correct” answers when the logic task was completed first. The observed pattern is in line with participants being mentally exhausted after completing the logic task, and preferring to either completely reject sentences or to uncritically accept them rather than engaging in effortful rational inference processes.

Graphical posterior predictive checks

shows predicted versus observed response proportions across constructions and conditions. shows predicted versus observed judgment times across constructions, conditions, and responses.

Figure 8. Predicted (colored) versus observed (gray) response proportions across constructions and conditions. Proportions were computed by participant. The visible stratification is due to between-participant variability, which is accurately captured by the model.

Figure 9. Predicted (histograms) versus observed (eye plots) judgment time distributions across constructions, conditions, and responses.

The response proportions predicted by the model are in good qualitative and quantitative alignment with the observed data, even at the level of individual participants, as shown by the stratification in . The predicted judgment times also reproduce the qualitative patterns. The quantitative fit is also adequate, though predicted judgment times for “get it, correct” judgments tend to be somewhat longer than those seen in the data for some of the ungrammatical and illusion conditions.

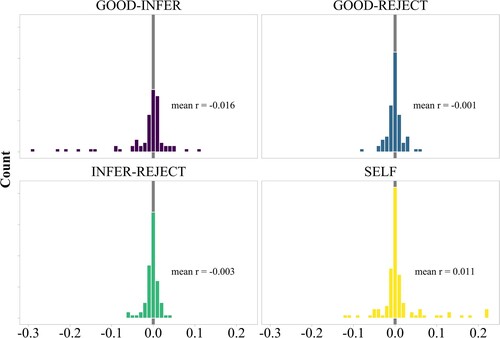

Individual-level trade-offs

shows the distribution of correlation estimates between subject-level random effects across all constructions by accumulator pair. I limit the discussion to slope parameters, that is, to differences between conditions. Active trade-offs predict negative correlations between the slopes for each pair of accumulators. By contrast, correlations between slopes within the same accumulator (self) should be positive, assuming that one and the same subject will react similarly to the different illusions. shows correlations for which the probability of direction is above 0.95, that is, for which the correlation estimate is mostly positive or negative. Note that this criterion does not correspond to a frequentist test of significance, but merely singles out effects for which the parameter estimates mostly point in a given direction. Due to the large number of parameters (9 slopes × 3 accumulators), the results should be interpreted with some caution.

Figure 10. Histogram of subject-level random-effects correlations across all constructions by accumulator pair, weighted by probability of direction.

Figure 11. Correlation estimates of subject-level random effects and associated 95% highest density intervals. Only correlations with probability of direction >0.95 for slope parameters (differences between conditions) are shown.

The correlations of the subject-level random slopes show some active trade-offs within constructions, but crucially also across constructions. As shows, well-evidenced correlations tend to go in the expected direction for good-infer and self correlations, but overall the data are mostly inconclusive. As can be seen from , participants who show larger-than-average effects of the inversion illusion on the good accumulator tend to show smaller-than-average effects on the infer accumulator for the same manipulation. This negative correlation suggests that participants who tend to do good-enough processing in the inversion construction (“slackers”) do not devote many cognitive resources to rational inference. Such a trade-off is expected under the assumption that good-enough processing and rational inference serve different, conflicting goals: to conserve mental energy or to expend additional energy to infer meaning. The pattern of trade-offs holds across all correlations shown in : larger participant-level effects on the good accumulator correlate with smaller participant-level effects on the infer accumulator. Importantly, all correlation estimates that reached the 0.95 probability-of-direction cutoff include the inversion construction, likely because the average effects in this construction are the largest. The inversion construction is thus a good candidate for a stable indicator of individual differences in illusion processing: an individual's processing preferences for inversion sentences can be used to predict the same individual's processing preferences for comparative illusion sentences, depth charge sentences, and NPI illusion sentences. However, due to the large number of correlations tested, future confirmatory studies should aim to test these correlations more rigorously.

Individual differences measures

In terms of interactions with the individual differences measures, the main question of interest is whether the difference between the illusion and control conditions for a particular constructions varies with a participant's self-reported pedantry and interpretational charity, and/or according to their logical reasoning ability. Due to the large number of possible interactions across constructions and accumulators, I will report only the results for constructions in which at least one interaction parameter reached probability of direction >0.95. Across all predictors, I plot the finishing time distributions of the three evidence accumulators for the 20 highest- and lowest-scoring participants against each other.

Pedantry

shows interactions with the pedantry predictor, which is thought to reflect a participant's motivation to rigorously apply grammatical rules. pedantry effects are seen in the agreement attraction, depth charge, and missing VP constructions. Contrary to my prediction, the interactions mainly affect the reject accumulator rather than the good accumulator. For agreement attraction and missing VP sentences, high-pedantry participants show faster finishing times for the reject accumulator compared to the control condition, that is, more “don't get it” responses. This pattern is still broadly in line with the assumption that pedantry captures the strict application of grammatical rules in ungrammatical illusion sentences: pedantic individuals tend to give “don't get it” responses if the grammar does not license an interpretation, as opposed to ignoring errors or drawing inferences beyond the literal input. For depth charge sentences, however, the pattern is reversed: low-pedantry participants tend to respond “I don't get it” more often in the illusion condition. I discuss this surprising finding below.

Charity

shows interactions with the charity predictor, which is thought to reflect a participant's default assumption that sentences are formally correct and sensible. Interactions with charity are seen for inversion, agreement attraction, and missing VP sentences. As a general pattern, high-charity participants tend to distinguish less between illusion and control sentences for these constructions, which is plausible given the interpretation of the predictor. However, there are differences between the constructions with regard to which accumulator is affected: for inversion illusion and agreement attraction sentences, high charity leads to faster good-enough processing, and thus more “get it, correct” judgments, while for missing VP sentences, low charity leads to faster rational inference, and thus more “get it, incorrect” judgments, for illusion compared to control sentences; this suggests that conscious rational inference in missing VP sentences may require less charitable assumptions about sentences usually being correct and sensible.

Figure 13. Posterior predictive distributions of finishing times (250 samples) of the three accumulators across constructions and conditions, by charity score. Interactions with probability of direction >0.95 are highlighted in blue.

Moving on to the predictors derived from the syllogistic reasoning task, recall that there are three individual-differences measures: the main effect of logical validity (logic), the main effect of believability (belief), and the validity × believability interaction, which is commonly interpreted to signal a conflict between rule-based reasoning and intuition (conflict). No effects reached probability of direction >0.95 for belief, so I will focus on the other two predictors.

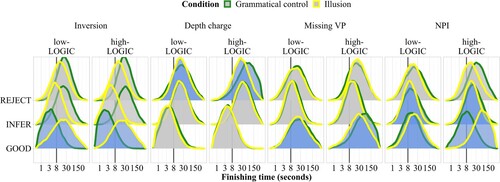

Logic

shows effects of the logic predictor for inversion sentences, depth charge sentences, missing VP sentences, and NPI sentences. Similarly to the pedantry predictor, the effect of logic is such that high-logic participants distinguish more strongly between grammatical and illusion sentences for these constructions, which mainly affects the good accumulator. Depth charge sentences are unique in that logic mainly affects “don't get it” responses: high-logic participants tend to reject illusion sentences of this type more often than control sentences. Overall, the results are in line with the assumption that individuals with strong logical abilities invest more cognitive resources into processing sentences compositionally as opposed to heuristically, and are thus more likely to spot grammatical errors, and/or, in the case of depth charge sentences, fail to understand the sentence when the compositional meaning is nonsensical.

Logic-belief conflict

The conflict predictor affects comparative illusion, depth charge, and NPI sentences, as shown in . For comparative illusion and depth charge sentences, low-conflict participants distinguish more strongly between illusion and control sentences; this difference is visible in rational inferences (“get it, incorrect”) for comparative illusion sentences, but in rejections (“don't get it”) for depth charge sentences. This pattern is broadly in line with the assumption that conflict measures the amount to which intuition interferes with rule-based processing. The pattern in NPI sentences, however, is unexpected: high-conflict participants show a slightly larger difference between conditions on the infer accumulator than low-conflict participants.

Summary

To summarise, pedantic individuals and individuals who consistently apply logical rules in the face of distracting believability information tend to experience fewer acceptability illusions for some constructions, presumably because they tend to stick to closely to prescriptive grammar when evaluating meaning. By contrast, individuals who make charitable assumptions about sentence interpretability and formal correctness appear to tend more towards good-enough processing, at least for some illusions.

Model comparisons via cross-validation

Despite the plausible parameter estimates and promising results of graphical posterior predictive checking, there is a question of whether the proposed race model generalises well to unseen data, or whether it is overfitted to the current data set. One well-known way of approaching this question is through cross-validation: the model is fitted repeatedly to only a subset of the data, and the remaining data points are used as novel data that the model's predictive fit is evaluated against (see Vehtari & Ojanen, Citation2012; Yarkoni & Westfall, Citation2017 for introductions). Since no model can realistically be expected to give a perfect fit (Box, Citation1979), it is usually the relative predictive performance of different models that is of interest. Here, I focus on three questions:

| (1) | What is the relative contribution of each accumulator to the race model's predictive fit? | ||||

| (2) | What is the contribution of the individual differences measures to the race model's predictive fit? | ||||

| (3) | How does the predictive fit of the race model compare to alternative response models? | ||||

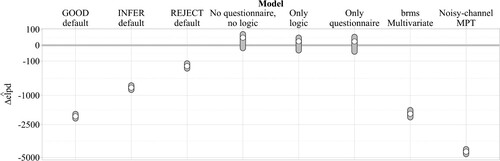

Question 1 can be answered by completely removing all slope parameters related to the experimental manipulations from one of the accumulators. This amounts to the assumption that the respective accumulator is “passive” in the sense that it is unaffected by the properties of the sentence, and that the associated response is only produced differentially across conditions as a consequence of the other accumulators being affected by the manipulations. The response associated with the unaffected accumulator can thus be seen as a default response in the simplified model (Nicenboim & Vasishth, Citation2018; Paape & Zimmermann, Citation2020). Question 2 can be approached in a similar way, namely by removing all slope parameters related to the individual differences measures from the model. Question 3 can be answered by implementing models that do not assume a race mechanism as the process generating the observed responses and latencies. Model comparisons are carried out using approximate leave-one-out cross-validation via Pareto-smoothed importance sampling, as implemented in the loo R package (Vehtari et al., Citation2019, Citation2015). The measure of interest is the expected log pointwise predictive density () of each model, which quantifies how likely the unseen data points are under the model.

In principle, the model space for the problem at hand is -open (Piironen & Vehtari, Citation2017): there are infinitely many possible models that could account for the data. Considering different race models first, in order to keep the number of models to be compared manageable, I consider only the three models with one “passive” accumulator each (good, infer, or reject), as well as three models in which either both sets of individual-differences slopes (questionnaire-based and logic-task-based) or only one of them are removed from all three accumulators. In terms of models that do not assume a race between accumulators as the data-generating process, I will limit myself to two additional models: A multinomial processing tree (MPT) model (see Batchelder & Riefer, Citation1999; Erdfelder et al., Citation2009 for reviews) that assumes “noisy channel” mechanisms as the sole source of the different response types, and a “theory-free” multivariate model that can be fitted straightforwardly via the brms package (Bürkner, Citation2017).

The MPT model assumes two types of possible mental edits to the sentence representation that occur serially and stochastically: edits that occur before a grammatical violation arrives and edits that occur in response to such violations. The concept of surprisal (Hale, Citation2001; Levy, Citation2008a) posits that a word's processing difficulty is proportional to its predictability in a given context. Levy (Citation2011) presented a model in which surprisal can be affected by mental edits to the sentence representation before the critical word arrives. For instance, in an agreement attraction sentence like The key to the cabinets were rusty, if the preamble is mentally edited into The keys to the cabinets …, the ungrammatical word were is now much less surprising than in a setting where the preamble is always represented veridically (Yadav et al., Citation2023). Similarly, in a missing VP sentence like The apartment that the maid who the cleaning service sent over was well-decorated, if the preamble is edited to contain only two as opposed to three subject nouns, not encountering a third verb would not be surprising to the reader (Futrell et al., Citation2020; Hahn et al., Citation2022). If such context edits happen pre-perceptually or at least before perceptual information is integrated at higher levels (Huang & Staub, Citation2021a, Citation2021b), this would explain why illusion sentences can be perceived as being formally correct. The surprisal-plus-mental edits model thus offers an alternative explanation for the “get it, correct” judgments seen in the present study.

The MPT model assumes that the process distortion occurs first with probability p, potentially followed by the process repair with probability p

. The process probabilities are estimated from the data. Additionally, the following assumptions are encoded in the model:

| (1) | Distortions are equally likely to occur in grammatical, ungrammatical and illusion sentences, but can differ between constructions. Distortions lead to slowdowns and rejections in grammatical sentences but to speedups and acceptance (“get it, correct”) in ungrammatical and illusion sentences. The amount of slowdown/speedup d differs between constructions and is estimated from the data. | ||||

| (2) | Repairs can also occur across all conditions and incur a cost r that is estimated from the data. Both repair probability and repair cost differ between constructions. Illusion sentences are more likely to be repaired than ungrammatical sentences. Grammatical sentences can only be “repaired” if they have first been distorted, in which case the slowdown will be d+r with probability | ||||

| (3) | Sentence length and individual differences can affect both distortion and repair rates. Distortion and repair costs differ between individuals. Judgment times are lognormally distributed, with an added shift (non-decision time) parameter. | ||||

The current implementation of the noisy-channel proposal as an MPT model is considerably less detailed than the ones provided by Hahn et al. (Citation2022) and Yadav et al. (Citation2023), as it neither takes into account the size of the required edits, nor the corpus frequencies of the different constructions. On the other hand, it is, to my knowledge, the first implementation that takes into account both distortions and repairs across grammatical, ungrammatical, and illusion sentences.

The “theory-free” model was implemented using the brms syntax for multivariate models (Bürkner, Citation2024). The brms model is not a cognitive process model but purely a statistical one that treats the experimental responses as having a categorical distribution with a θ parameter for each response (“get it, correct”, “get it, incorrect”, “don't get it”) that is affected by the experimental manipulations and individual differences. Response times are assumed to have a shifted lognormal distribution with mean μ and standard deviation σ, where μ is affected by the experimental manipulations and individual differences. Crucially, the model does not assume any connection between responses and their associated latencies, apart from possible correlations between random effects for a given subject and item.

displays the difference in expected log pointwise predictive density for each alternative model against the full race model. Differences in have an associated uncertainty, which can be quantified by computing their standard errors and the resulting confidence intervals (Vehtari et al., Citation2017). As the figure shows, turning any of the accumulators in the race model into a “passive” default response sharply reduces predictive fit. The reduction in predictive fit is strongest for the good accumulator, suggesting that the effects of the manipulations on this accumulator are the most important, while removing the slopes on the reject accumulator yields the smallest predictive loss. Nevertheless, the predictive fit of the full model is still higher than for any of the three simpler “default” models.

Figure 16. Estimated differences in log pointwise predictive density between the full model and alternative models. A positive difference means that the alternative model has a better predictive fit than the full model. Error bars show 95% confidence intervals. Note that the y-axis is square-root-scaled.

The two non-race models both have decisively lower predictive fit than the full race model. The multivariate brms model is on par with the worst-fitting race model, but the noisy-channel MPT model does not appear to predict the data well. Of course, this only applies to this specific implementation of the noisy channel approach. One should keep in mind that the MPT model has much fewer parameters than the race model, as well as more constraints: repairs have a strictly positive cost, while distortions are assumed to result in speedups or slowdowns depending on the condition, which are constrained to be of equal magnitude. Hard constraints are important for theory building and testing, especially if the empirical fit of the constrained model turns out to be suboptimal (Roberts & Pashler, Citation2000). Nevertheless, a different implementation of the noisy channel proposal may outperform the race model, but a comparison of different noisy channel models is beyond the scope of the current paper. That being said, it is potentially informative to compare the predictive performance of the race and MPT models for individual observations, as the model with overall worse predictive performance may nevertheless show an advantage for specific aspects of the data (Nicenboim & Vasishth, Citation2018). shows differences in between the two models by condition, observed response, and judgment time.