Abstract

Performance Validity Tests (PVTs) are used to measure the credibility of neuropsychological test results. However, little is known about the effects of feedback on noncredible results (i.e., underperformance) on subsequent neuropsychological test performance. The purpose of this study was to investigate the effects of feedback on underperformance in Chronic Fatigue Syndrome (CFS) patients. A subset of these patients received feedback on their failure of the Amsterdam Short-Term Memory test (ASTM), a PVT (i.e., the feedback [FB] group). After matching, the final sample consisted of two comparable groups (i.e., FB and No FB; both n = 33). At baseline and follow-up assessment, the patients completed the ASTM and two measures of information processing speed (Complex Reaction Time [CRT] and Symbol Digit Test [SDT]). Results indicated that the patients in the FB group improved significantly more on the CRT than the No FB group. A comparable trend, although not significant, was observed for the SDT. Independent of the feedback intervention, ASTM performance improved substantially at re-administration. A limited feedback intervention upon underperformance in CFS patients may result in improved performance on measures of information processing speed. This implies that such an intervention might be clinically relevant, because it helps maximize the chance of assessing the patients’ actual level of cognitive abilities.

Introduction

Chronic Fatigue Syndrome (CFS) is defined by severe, medically unexplained fatigue that persists for six months or more and leads to a substantial reduction in activities. Concentration and memory complaints are among the eight additional symptoms in the case definition (Fukuda et al., 1994; Reeves et al., 2003). Up to 89% of patients with CFS report subjective cognitive complaints (Jason et al., 1999). These complaints are significantly related to social and occupational dysfunction (Christodoulou et al., 1998) and to the level of fatigue (Capuron et al., 2006).

Although subjective cognitive complaints are highly prevalent, only a subgroup of CFS patients shows impaired performance on neuropsychological testing (Cockshell & Mathias, 2014; Knoop, Prins, Stulemeijer, van der Meer, & Bleijenberg, 2006). In their meta-analysis, Cockshell and Mathias (2010) reported inconsistent and even contradictory findings on cognitive performance in CFS patients. These authors found the most evidence for cognitive impairments in the domains of information processing speed and attention. Additionally, fatigue and depressive symptoms did not entirely account for the variance in cognitive test performance (Cockshell & Mathias, 2010). The exact extent and nature of reduced neuropsychological test performance in CFS patients remain unclear (DeLuca, Johnson, Ellis, & Natelson, 1997; Cockshell & Mathias, 2010; Cockshell & Mathias, 2014).

One factor that could partially explain low cognitive test scores in CFS is underperformance (Goedendorp, van der Werf, Bleijenberg, Tummers, & Knoop, 2013; Van der Werf, Prins, Jongen, van der Meer, & Bleijenberg, 2000). Underperformance is conceptualized as the extent to which a person’s test performance is not an accurate reflection of his or her actual level of cognitive abilities (Larrabee, 2012). Performance validity tests (PVTs) were developed to detect underperformance due to various causes, ranging from deliberate uncooperativeness for external gain (e.g., obtaining financial benefits) to psychological causes (e.g., a patient’s fear that symptoms are not being recognized) (Boone, 2007; Carone, Iverson, & Bush, 2010).

Determining the validity of cognitive test results in CFS patients is important for the diagnostic process. More specifically, underperformance introduces noise into neuropsychological data. This noise, in turn, can obscure the expected brain–behavior relationship that underlies neuropsychological test interpretation (Fox, 2011). As a result, invalid data due to underperformance can lead to incorrect conclusions about cognitive impairment and, consequently, to inadequate diagnoses and treatment (e.g., Roor, Dandachi-FitzGerald, & Ponds, 2016). For this reason, professional organizations have advised the routine use of PVTs to assess underperformance (Bush et al., 2005; Heilbronner et al., 2009).

Underperformance in clinical assessments is not negligible. For example, the prevalence of PVT failure in patients with psychiatric disorders (Dandachi-FitzGerald, Ponds, Peters, & Merckelbach, 2011), ADHD (Marshall et al., 2010), or traumatic brain injury (Krishnan & Donders, 2011) has been found to range between 21% and 31%. Most studies to date indicate that investigating underperformance is also relevant to understanding cognitive functioning in patients with CFS. Three studies found that between 16% and 30% of CFS patients obtained scores indicative of underperformance on a PVT (Goedendorp et al., 2013; Van der Werf et al., 2000; Van der Werf, de Vree, van der Meer, & Bleijenberg, 2002). Other studies found a low PVT failure rate (i.e., 6%; Cockshell & Mathias, 2012) or even none at all (Busichio, Tiersky, DeLuca, & Natelson, 2004) in this patient group. These inconsistent prevalence estimates could be explained by methodological differences between studies, that is, the different PVTs used and the heterogeneity of the CFS patient samples.

Although the literature provides guidelines for determining and classifying underperformance (Bush et al., 2005), little is known about when and how underperformance can best be communicated to the patient. To the best of our knowledge, the study by Suchy, Chelune, Franchow, and Thorgusen (2012) is the only one to investigate whether providing patients with feedback on underperformance affects subsequent neuropsychological test performance. These authors conducted a retrospective study comparing two groups of patients with multiple sclerosis (MS). One group received feedback on PVT failure; the other did not. The feedback intervention resulted in significantly improved PVT scores (i.e., on the Victoria Symptom Validity Test, VSVT) upon re-administration and better post-feedback performance on a general memory test (i.e., the Wechsler Memory Scale-III, WMS-III). Although these results are promising, it remains unclear whether they generalize to other clinical samples.

The aim of this study was to investigate whether the favorable effect of providing feedback on underperformance found by Suchy et al. (2012) could also be found in CFS patients.

Method

Participants

Patients were consecutively referred to the Expert Center for Chronic Fatigue of the Radboud University Medical Center, a tertiary treatment facility for chronic fatigue. Consultants at the outpatient clinic of the Department of Internal Medicine assessed the patients’ medical status to decide whether the patients had been sufficiently examined to exclude a medical explanation for their fatigue. If the medical evaluation was deemed insufficient, the patients were seen again for anamnesis, a full physical examination, case history evaluation, and laboratory tests, following the national CFS guidelines (Centraal Begeleidings Orgaan, 2013), which are in accordance with the U.S. Centers for Disease Control (CDC) guidelines (Fukuda et al., 1994; Reeves et al., 2003). The CDC criteria for CFS were used: fatigue had to be present for six months or more, accompanied by four or more of the following eight symptoms: sore throat, tender lymph nodes, muscle pain, joint pain, headaches, sleep disturbance, postexertional malaise lasting more than 24 h, and cognitive dysfunction (Fukuda et al., 1994; Reeves et al., 2003). Patients who met the CDC criteria for CFS were referred to the Expert Center for Chronic Fatigue. The patients were seen routinely for CFS management purposes (i.e., not as part of a separate research project). All patients in this sample sought treatment for CFS, namely cognitive behavioral therapy (CBT). Patients engaged in a disability claim could not participate in the treatment program.

Patients were included in this study if they scored 35 or higher on the fatigue severity subscale of the Checklist Individual Strength (CIS; Worm-Smeitink et al., 2017) and had a weighted total score ≥700 on the Sickness Impact Profile 8 (SIP8; Jacobs, Luttik, Touw-Otten, & de Melker, 1990). Additional inclusion criteria for this study were: (a) underperformance (i.e., a score ≤85) on the Amsterdam Short-Term Memory test (ASTM; Schmand & Lindeboom, 2005); (b) Dutch language proficiency; (c) repeated neuropsychological assessment; and (d) age 18 years or older. Patients with psychiatric comorbidity, identified during a clinical interview, that could explain the fatigue were excluded. The initial number of patients in the database was 1,382. A total of 331 CFS patients (23.9%) underperformed (i.e., ASTM ≤85). This percentage is in accordance with the prevalence found in previous studies of CFS patients (e.g., Van der Werf et al., 2002). The data were collected between July 2004 and July 2012. During the inclusion period, the policy changed: from July 2007 onward, feedback was given on PVT failure. Of the patients who fulfilled the above criteria, 103 were provided with feedback (FB group) and 33 were not (No FB group). After matching (see Procedures section), the final sample consisted of two comparable groups (i.e., FB and No FB; both n = 33). Table 1 displays the demographic and clinical characteristics of the FB and No FB groups.
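The inclusion rules above amount to a conjunction of thresholds. The sketch below encodes them as a filter, assuming a hypothetical record layout (the `Patient` fields are illustrative, not the study database schema). The exclusion for psychiatric comorbidity is left out because it rested on a clinical interview rather than a score.

```python
from dataclasses import dataclass

# Hypothetical record layout; field names are illustrative only.
@dataclass
class Patient:
    age: int
    cis_fatigue: int           # CIS Fatigue subscale score (range 8-56)
    sip8_total: float          # SIP8 weighted total score (range 0-5799)
    astm_t0: int               # ASTM total score at baseline
    dutch_proficient: bool
    repeated_assessment: bool

ASTM_FAIL_MAX = 85  # ASTM scores <= 85 are classified as underperformance


def meets_inclusion(p: Patient) -> bool:
    """True if a patient satisfies every score-based inclusion criterion in the text."""
    return (
        p.cis_fatigue >= 35          # severe fatigue
        and p.sip8_total >= 700      # substantial functional impairment
        and p.astm_t0 <= ASTM_FAIL_MAX
        and p.dutch_proficient
        and p.repeated_assessment
        and p.age >= 18
    )


patients = [
    Patient(40, 48, 1200, 84, True, True),  # underperformed: included
    Patient(40, 48, 1200, 86, True, True),  # passed the ASTM: excluded
]
included = [p for p in patients if meets_inclusion(p)]
```

Note that the ASTM criterion is an upper bound: only patients who failed the PVT at baseline were eligible.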

Table 1. Baseline means, median scores (for the ASTM), standard deviations, and ranges for demographic and clinical characteristics.

The medical ethics committee of the Radboud University Medical Center approved this study.

Procedures

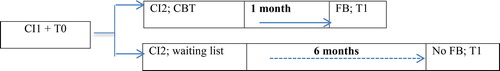

The psychological assessment, part of routine clinical care, was conducted before the start of CBT. The following steps were carried out in sequence (see Figure 1). First, one of the psychologists met with the patient for a clinical interview. Then, tests and questionnaires (see Instruments) were administered by a trained test assistant. A PVT (i.e., the ASTM) was administered at the beginning of this test session, followed by the Symbol Digit Test (SDT) and the Complex Reaction Time task (CRT). During most of the inclusion period of this study, the policy was that all patients with an indication for CBT who failed the ASTM received a feedback intervention before the second assessment, which took place approximately one week before the start of CBT. The goal of this intervention was to positively influence subsequent psychological assessments and treatment. During this feedback session, the psychologist: (a) explained that the patient’s CFS symptoms were difficult to evaluate because of lower than expected performance on a previously administered test; (b) emphasized that the patient should exert his or her best effort and that improvement was expected; and (c) explained that the tests therefore needed to be repeated. Patients who received this intervention formed the FB group. The remaining patients in this sample did not receive feedback on the initial PVT failure (No FB group). Thus, group assignment followed naturalistic changes in clinical procedure and did not depend on patient characteristics.

At the second assessment, the ASTM, SDT, and CRT were re-administered (i.e., ASTM 2, SDT 2, and CRT 2). The FB and No FB groups differed in the mean time interval between the first and second administrations: on average, one month for the FB group and six months for the No FB group. The patients in the No FB group were placed on a waiting list due to limited treatment capacity for CBT, hence the longer time before re-assessment and the start of treatment.

Figure 1. Timeline study procedure: CI1 = clinical interview 1; T0 = neuropsychological test administration baseline; CI2 = clinical interview 2 (randomization to CBT or waiting list); T1 = neuropsychological test administration follow-up.

At baseline, the two groups differed only in ASTM score (Mann-Whitney U test, p = .003). Therefore, 33 participants were selected from the FB group (n = 103) by matching them to the No FB group on baseline ASTM score, age, sex, and level of education. Matching was blind to all other baseline and re-assessment scores (i.e., neuropsychological test and questionnaire scores at T0 and T1).
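The text does not report which matching algorithm was used. As one plausible reading, the sketch below assumes greedy 1:1 matching without replacement: for each No FB patient it picks the unused FB patient with the same sex and education level whose baseline ASTM score is closest, breaking ties on age. The `Case` fields and the exact-match rule for sex and education are assumptions for illustration. Only baseline covariates are inspected, which mirrors the blinding to follow-up scores described above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    pid: str
    astm_t0: int     # baseline ASTM score
    age: int
    sex: str
    education: int   # hypothetical ordinal education level


def match_controls(no_fb: list[Case], fb_pool: list[Case]) -> dict[str, str]:
    """Greedy 1:1 matching without replacement (an assumed procedure).

    Candidates must share sex and education level; among them the closest
    baseline ASTM score wins, with age difference as the tie-breaker.
    """
    available = list(fb_pool)
    pairs: dict[str, str] = {}
    for target in no_fb:
        candidates = [c for c in available
                      if c.sex == target.sex and c.education == target.education]
        if not candidates:
            continue  # no eligible FB patient left for this No FB patient
        best = min(candidates,
                   key=lambda c: (abs(c.astm_t0 - target.astm_t0),
                                  abs(c.age - target.age)))
        pairs[target.pid] = best.pid
        available.remove(best)  # without replacement
    return pairs


fb_pool = [Case("f1", 85, 38, "F", 4), Case("f2", 83, 50, "F", 4), Case("f3", 82, 30, "M", 4)]
print(match_controls([Case("n1", 82, 38, "F", 4)], fb_pool))  # {'n1': 'f2'}
```

Greedy matching is order-dependent; an optimal (e.g., Hungarian-algorithm) assignment would minimize total distance across all pairs, at the cost of more machinery.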

Instruments

The Amsterdam Short-Term Memory test (ASTM; Schmand & Lindeboom, 2005) was used to measure underperformance. The ASTM is a forced-choice verbal recognition task that is presented to subjects as a memory test. Details on materials and test procedures are given in the ASTM manual (Schmand & Lindeboom, 2005). The ASTM has been validated in 17 normative (n = 222) and clinical (n = 1,281) groups (Schmand & Lindeboom, 2005). Based on these studies, its internal consistency is excellent (Cronbach’s α = .91). Test–retest reliability was examined in patients with (a) documented brain damage or disease (e.g., Korsakoff’s syndrome, traumatic brain injury) and (b) no external incentives, in whom test behavior, and hence performance, was expected to be stable. After an interval of one to three days, the Pearson correlation between the first and second administrations of the ASTM was .91 (Schmand & Lindeboom, 2005). In the validation studies, a cutoff score of 86 (i.e., scores ≤85 indicating underperformance) was associated with a specificity of 83% and a sensitivity of 92% (Schmand & Lindeboom, 2005).

The Complex Reaction Time task (CRT; Vercoulen et al., 1998), a reaction time test described in detail in previous studies of CFS patients (e.g., Goedendorp et al., 2013), was used to measure information processing speed. The test comprises three consecutive tasks of 30 trials each. For this study, a compound score (the mean reaction time across the three tasks) was calculated and used in further analyses.

The second performance test was the Symbol Digit Test (SDT) of the Dutch version of the Wechsler Adult Intelligence Scale (WAIS; Stinissen, Willems, Coetsier, & Hulsman, 1970). In this test, symbols must be decoded into digits, using a key that pairs nine symbols with nine digits, within a 90-s time limit. The test primarily taps information processing speed, but also attention. Previous research has shown that CFS patients had significantly slower information processing speed than healthy controls on the SDT and CRT (Vercoulen et al., 1998).

The revised version of the Symptom Checklist (SCL-90-R; Derogatis, 1994) was designed to measure psychological and somatic symptoms over the previous seven days. In this study, the total score was used as a general measure of psychological and somatic symptoms. The Dutch version of the SCL-90-R provides extensive normative data (Arrindell & Ettema, 2005).

The fatigue subscale of the Checklist Individual Strength (CIS Fatigue) was used to assess the level of fatigue over the previous two weeks. The subscale consists of eight items. Total scores range from 8, indicating no fatigue, to 56, indicating severe fatigue. Scores of ≥35 on the CIS Fatigue are indicative of severe fatigue. The CIS has been extensively validated for the assessment of fatigue (Worm-Smeitink et al., 2017), and it is sensitive to changes in fatigue severity (Knoop, van der Meer, & Bleijenberg, 2008).

Impairments in daily functioning were assessed with the Sickness Impact Profile 8 total score (SIP8 Total; Jacobs et al., 1990). The eight subscales of the SIP are summed into a weighted total score, with higher scores indicating more functional impairment (range 0–5799). The mean SIP8 total score of a healthy group of 78 women was 65.5 (SD = 137.8) (Servaes, Verhagen, & Bleijenberg, 2002). The SIP8 has good reliability (Bergner, Bobbitt, Carter, & Gilson, 1981) and has been validated for the Dutch population (Jacobs et al., 1990).

Data analysis

Data were analyzed using the Statistical Package for the Social Sciences (SPSS), version 23.0, with a two-tailed alpha level of .05.

Raw test scores were checked for outliers, score distributions, and missing data. There were extreme outliers for SDT 1 (one), SDT 2 (one), the SCL-90-R (one), the CRT 1 compound score (two), and the CRT 2 compound score (one). These scores were replaced by the sample mean plus three standard deviations, as described by Field (2013). The CRT compound scores and ASTM scores were not normally distributed and were therefore log transformed. There were no missing data.
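The outlier rule and transformation above can be sketched as follows. Assumptions, not taken from the text: the mean and sample SD are computed on the raw scores including the outliers, the rule is applied symmetrically (values below the mean minus three SDs are raised to that bound), and base-10 logarithms are used. The log base does not affect t or F statistics, since logs of different bases differ only by a constant factor. The CRT values are hypothetical.

```python
import math
import statistics


def cap_outliers(scores, k=3.0):
    """Replace values beyond mean +/- k * SD with the nearer boundary value.

    The mean and the sample SD (n - 1 denominator) are computed on the raw
    scores, outliers included.
    """
    m = statistics.mean(scores)
    sd = statistics.stdev(scores)
    lower, upper = m - k * sd, m + k * sd
    return [min(max(x, lower), upper) for x in scores]


def log_transform(scores):
    """Base-10 log transform for positively skewed, strictly positive scores."""
    return [math.log10(x) for x in scores]


# Hypothetical CRT compound scores (ms): 19 typical values and one extreme slow one.
crt = [600] * 19 + [5000]
crt_capped = cap_outliers(crt)     # 5000 is pulled down to mean + 3 SD (about 3772 ms)
crt_log = log_transform(crt_capped)
```

With small samples a single value can barely exceed three SDs of a distribution that includes it (the maximum attainable z-score is (n − 1)/√n), so this rule only bites once n is moderately large.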

First, descriptive statistics were calculated. The FB and No FB groups were compared using the Mann-Whitney U test (ASTM), independent-samples t-tests (age, SDT, SCL-90-R, SIP8 Total, CIS Fatigue, and log-transformed CRT compound score), or chi-square tests (education and sex). Additionally, the FB patients who were selected in the matching procedure were compared with the nonselected FB patients on ASTM 2, SDT 2, and log-transformed CRT 2 compound scores.

To determine the effects of feedback on subsequent performance on the ASTM, SDT, and CRT, repeated measures analyses of variance (ANOVAs) were conducted with the log-transformed ASTM total score, the SDT score, and the log-transformed CRT compound score as dependent variables, time (baseline and follow-up) as a within-subjects factor, and feedback group (FB and No FB) as a between-subjects factor. Within-group effect sizes were calculated to evaluate the effects of repeating the neuropsychological tests in the FB and No FB groups.
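The text does not state how the within-group effect sizes were computed. A common choice, assumed here and not confirmed by the source, is a Cohen's d for the change from baseline to follow-up, standardized by the pooled SD of the two occasions. The sign convention (baseline minus follow-up) is also an assumption, so signs from this sketch need not match those reported in the Results.

```python
import math
import statistics


def within_group_d(t0, t1):
    """Assumed within-group effect size: (M_T0 - M_T1) / pooled SD of both occasions.

    Negative values mean the mean score rose from baseline (t0) to follow-up (t1).
    """
    sd_pooled = math.sqrt((statistics.variance(t0) + statistics.variance(t1)) / 2)
    return (statistics.mean(t0) - statistics.mean(t1)) / sd_pooled


# Toy scores: every patient gains one point, and both occasions have SD = 1.
print(within_group_d([1, 2, 3], [2, 3, 4]))  # -1.0
```

Other variants divide by the baseline SD only or by the SD of the change scores; the latter can give much larger values when test–retest correlations are high.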

To evaluate the clinical relevance of the feedback intervention, the SDT scores at baseline and follow-up were classified against normative data and compared between the two groups (i.e., FB and No FB). Raw SDT scores were compared with the demographically adjusted SDT T-scores of the published norms in the WAIS manual (Stinissen et al., 1970). A T-score more than one standard deviation (SD) below the mean was considered below “normal.” No norms were available for the CRT, so individual CRT scores could not be compared with demographically adjusted norm scores.
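T-scores have a mean of 50 and an SD of 10, so "more than one SD below the mean" corresponds to T < 40. The sketch below assumes a simple linear conversion from a hypothetical normative mean and SD. The actual WAIS norms are demographically adjusted lookup tables, and whether a score of exactly T = 40 counts as below normal is an assumption here.

```python
def t_score(raw, norm_mean, norm_sd):
    """Linear T-score (mean 50, SD 10) from hypothetical normative values."""
    return 50 + 10 * (raw - norm_mean) / norm_sd


def below_normal(t, cutoff=40.0):
    """Flag scores more than 1 SD below the normative mean (T < 40, assumed strict)."""
    return t < cutoff


# Hypothetical norm group for one demographic cell: mean 50 raw points, SD 10.
print(below_normal(t_score(40, 50, 10)))  # False: exactly 1 SD below the mean
print(below_normal(t_score(35, 50, 10)))  # True
```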

Results

Descriptive statistics

After matching on baseline measures, the selected patients from the FB group (n = 33) were comparable to the nonselected patients from the FB group (n = 70) on ASTM 2 scores (Mann-Whitney U test, p = .754), SDT 2 scores (t = −1.68, df = 101, p = .095), and log-transformed CRT 2 compound scores (t = 0.946, df = 101, p = .346). In the final sample, each group (i.e., FB and No FB) consisted of 33 patients.

As can be seen in Table 1, the two groups (i.e., FB and No FB) had comparable demographic and clinical measures at baseline. The mean level of education in both groups was medium vocational training. The sample consisted primarily of female subjects in their late thirties. The ASTM scores showed a strong ceiling effect: 81.8% of the baseline scores fell between 82 and 85 (range 64–85), and on the second administration 81.7% of the scores fell between 82 and 90 (range 75–90).

Effect of feedback on underperformance

Table 2 depicts the group differences (i.e., FB vs. No FB) on the repeated measure of underperformance (i.e., ASTM) and on the measures of information processing speed (i.e., SDT and CRT). A repeated measures ANOVA on the log-transformed ASTM total score, with time (baseline and follow-up) as a within-subjects factor and feedback group (FB and No FB) as a between-subjects factor, found no significant interaction between time and feedback group, F(1, 64) = 1.63, p = .20. There was a main effect of time, F(1, 64) = 25.25, p < .001, ηp² = .28, showing an overall improvement on re-administration. There was no main effect of feedback group, F(1, 64) = 0.32, p = .57, ηp² = .005. Together, these results indicate that ASTM scores improved on re-administration in both the FB and No FB groups.

Table 2. Baseline and follow-up means (SDT & CRT), median scores (ASTM), percentages failing the ASTM, and percentages of subjects that score below average on the SDT.
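The partial eta-squared values reported in this section can be cross-checked from each F ratio and its degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). This is a reader's check, not the SPSS procedure the authors ran.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of time on the ASTM and the time x group interaction on the CRT, both F(1, 64).
print(f"{partial_eta_squared(25.25, 1, 64):.2f}")  # 0.28
print(f"{partial_eta_squared(13.27, 1, 64):.2f}")  # 0.17
```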

Effect of feedback on information processing speed performance

First, a repeated measures ANOVA on the SDT total score, with time (baseline and follow-up) as a within-subjects factor and feedback group (FB and No FB) as a between-subjects factor, showed that the interaction between time and feedback group failed to reach significance, F(1, 64) = 4.29, p = .058, ηp² = .05. There was a main effect of time, F(1, 64) = 9.58, p = .003, ηp² = .13, indicating that SDT scores increased between baseline and re-administration. There was a main effect of feedback group, F(1, 64) = 4.67, p = .034, ηp² = .06, indicating that the FB and No FB groups differed in SDT scores. This difference appears more pronounced at the follow-up assessment, as illustrated by a larger within-group effect size in the FB group (−.69) than in the No FB group (−.14).

At baseline, the same number of participants in each group scored below “normal” (i.e., more than 1 SD below the mean) on the SDT (4/33 in both the FB and No FB groups). At follow-up, significantly more subjects in the FB group than in the No FB group had a “normal” SDT score (χ² = 5.41, p = .020). All subjects in the FB group scored in the “normal” range at follow-up (i.e., no more than 1 SD below the mean), whereas 5 of 33 (15%) in the No FB group scored below normal.
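The follow-up comparison above is a 2 × 2 table: FB with 0 below-normal and 33 normal scores, No FB with 5 below-normal and 28 normal. The sketch below assumes Pearson's chi-square without continuity correction; under that assumption it reproduces the reported χ² = 5.41 and p = .020. For df = 1 the upper-tail p-value equals erfc(√(χ²/2)), because a χ²(1) variable is the square of a standard normal.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]], df = 1."""
    n = a + b + c + d
    observed = [[a, b], [c, d]]
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with df = 1
    return chi2, p


# Rows: FB, No FB; columns: below normal, normal (follow-up SDT).
chi2, p = pearson_chi2_2x2(0, 33, 5, 28)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")  # chi2 = 5.41, p = 0.020
```

Two cells have an expected count of 2.5, a situation in which Fisher's exact test is often recommended as a check on the chi-square approximation.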

Second, a repeated measures ANOVA on the log-transformed CRT compound score, with time (baseline and follow-up) as a within-subjects factor and feedback group (FB and No FB) as a between-subjects factor, found an interaction between time and feedback group, F(1, 64) = 13.27, p = .001, ηp² = .17, indicating that patients in the FB group improved significantly more on the CRT than patients in the No FB group (see Table 2). There was a main effect of time, F(1, 64) = 5.17, p = .02, ηp² = .07, showing that CRT scores improved between baseline and re-administration. There was a main effect of feedback group, F(1, 64) = 8.31, p = .005, ηp² = .11, indicating that patients in the FB group scored significantly higher on the CRT than the No FB group. Additionally, the within-group effect size was −.71 in the FB group and .20 in the No FB group. In summary, these results demonstrate that the FB group improved significantly more on the CRT than the No FB group.
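In a 2 (group) × 2 (time) mixed design, the time × group interaction F(1, N − 2) is equivalent to the squared pooled-variance independent-samples t-test on change scores (follow-up minus baseline). This explains the F(1, 64) degrees of freedom for N = 66. The sketch below shows that equivalence on toy data. It is an illustration of the test's logic, not the authors' SPSS analysis, and the function name is hypothetical.

```python
import math
import statistics


def interaction_f_from_change_scores(fb_t0, fb_t1, nofb_t0, nofb_t1):
    """Time x group interaction F for a 2 x 2 mixed design, via change scores.

    Returns (F, (df_effect, df_error)); F equals the squared pooled-variance
    t statistic comparing the groups' mean change.
    """
    d1 = [b - a for a, b in zip(fb_t0, fb_t1)]
    d2 = [b - a for a, b in zip(nofb_t0, nofb_t1)]
    n1, n2 = len(d1), len(d2)
    pooled_var = ((n1 - 1) * statistics.variance(d1)
                  + (n2 - 1) * statistics.variance(d2)) / (n1 + n2 - 2)
    t = (statistics.mean(d1) - statistics.mean(d2)) / math.sqrt(
        pooled_var * (1 / n1 + 1 / n2))
    return t ** 2, (1, n1 + n2 - 2)


# Toy data: FB changes of 1, 2, 3 points; No FB changes of 0, 1, 2 points.
f_val, dfs = interaction_f_from_change_scores([1, 1, 1], [2, 3, 4], [1, 1, 1], [1, 2, 3])
print(round(f_val, 6), dfs)  # 1.5 (1, 4)
```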

Discussion

We examined the effect of providing feedback on underperformance on the subsequent neuropsychological test performance of CFS patients. To our knowledge, this is the second study to examine the effect of feedback on underperformance in clinical patients.

Our main findings are that (a) underperformance occurred in a substantial number of CFS patients referred for treatment (i.e., 23.9%), (b) underperformance was not stable between assessments, and (c) the feedback intervention had no effect upon underperformance on the re-administered PVT (i.e., ASTM), and was associated with mixed findings on measurements of information processing speed during follow-up.

Before discussing the findings in detail, we address one major limitation of this study: the difference in the time interval between baseline and follow-up in the FB group (i.e., one month) and the No FB group (i.e., six months). This difference was caused by limited treatment capacity, not by a systematic flaw in the study design. Also, the two groups were matched on baseline measures, and analyses showed that the matching procedure did not bias the outcome measures (i.e., selected and nonselected FB patients did not differ at follow-up). Nonetheless, the difference in time interval might have influenced our results. First, practice effects (Heilbronner et al., 2010) may have differed between the two conditions: the shorter between-session interval in the FB group could have produced higher scores at re-assessment than in the No FB group. Unfortunately, the practice effects of the neuropsychological tests used in this study (i.e., SDT and CRT) over these two time intervals in CFS patients are unknown. Second, the longer waiting-list period in the No FB group may have led to changes in health status (e.g., worsening of symptoms) relative to the FB group, which could have affected the results.

The large proportion of patients in both groups (i.e., 38–48%) who performed within normal limits on the ASTM at the repeated assessment underscores the idea that underperformance is not a static trait. That is, given the high test–retest reliability of the ASTM, a change in score at the follow-up assessment was probably due not to error variance but to a change in underperformance. Our findings are comparable to those of Van Valen et al. (2015), who found that 42% of a sample of 323 clinical patients with chronic solvent-induced encephalopathy (CSE) reverted from an invalid to a valid PVT score at re-assessment after one year. The practical implication of these findings is that performance validity should be checked in every repeated neuropsychological assessment.
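The argument that high test–retest reliability makes error variance an unlikely explanation for score changes can be formalized with the Jacobson–Truax reliable change index (RCI). The study did not compute this; it is a swapped-in illustration. The sketch uses the manual's retest correlation (r = .91) but a hypothetical SD of 5 ASTM points, because no SD is reported in this section. Given the strong ceiling effect on the ASTM, normal-theory change metrics like this one are only approximate.

```python
import math


def reliable_change_index(x1, x2, sd, r):
    """Jacobson-Truax RCI: score change divided by the SE of the difference score."""
    sem = sd * math.sqrt(1 - r)    # standard error of measurement
    s_diff = math.sqrt(2) * sem    # standard error of the difference
    return (x2 - x1) / s_diff


# Hypothetical patient: ASTM 82 at baseline, 88 at follow-up; SD = 5 is assumed.
rci = reliable_change_index(82, 88, sd=5.0, r=0.91)
print(f"{rci:.2f}")  # 2.83, beyond 1.96, so unlikely to reflect measurement error alone
```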

The lack of a group difference on the ASTM, combined with improvements on measures of information processing speed in the FB group, may seem counterintuitive. However, it could be argued that a group difference emerged on the CRT because it is likely more sensitive to change (i.e., measured in milliseconds) than the SDT. Moreover, the SDT showed a comparable, borderline significant trend and more “clinical” improvement at re-assessment in the FB group. Therefore, despite the limitations mentioned, these findings tentatively suggest a positive feedback effect.

An important strength of the current study is that all tests (i.e., ASTM, SDT, and CRT) were re-administered at follow-up in both groups (i.e., FB and No FB). Suchy et al. (2012) only repeated the PVT (i.e., VSVT) in the FB group, and found that 68% of the patients reverted to a valid score range upon re-administration. Consequently, they concluded that a feedback intervention upon PVT failure effectively decreased underperformance. In the current study, we found that 48% of the patients in the FB group scored in the valid score range at follow-up. However, because 38% in the No FB group also showed improvement on the ASTM at re-administration, we found no significant effect of the feedback intervention on subsequent PVT performance. This result suggests that the conclusion of a positive effect of a feedback intervention on underperformance reported by Suchy et al. (2012) was likely premature.

Although our study advances the understanding of the effect of feedback on underperformance, its retrospective and naturalistic design is a limitation. An experimental design with random allocation to groups (i.e., FB and No FB) is necessary to further determine the effect of feedback on underperformance. Additionally, future studies could draw on theoretical frameworks of feedback responsiveness in cases of noncredible test results, such as deterrence theory (Horner, Turner, VanKirk, & Denning, 2017) and cognitive dissonance theory (Merckelbach, Dandachi-FitzGerald, van Mulken, Ponds, & Niesten, 2015). Such a theory-driven intervention could lead to better understanding and greater reproducibility of study findings.

How should retest data after feedback on underperformance be interpreted clinically? First, patients who continue to underperform at follow-up are likely capable of better performance, and their neuropsychological test results are invalid. Second, improvement on a PVT (i.e., performance in the valid score range at re-administration) after a feedback intervention does not automatically indicate that the patient no longer underperformed and that the neuropsychological test scores are therefore valid. Nonetheless, even if a normal score is an underestimate, it still rules out cognitive deficits. This is important because the primary purpose of a neuropsychological assessment is, as stated by Boone (2007), “to determine whether patients have objectively (i.e., credible) verified cognitive dysfunction” (p. 37). Although some researchers have argued that warning subjects that measures of underperformance will be administered reduces subsequent response bias (e.g., Schenk & Sullivan, 2010; Johnson & Lesniak-Karpiak, 1997), Youngjohn, Lees-Haley, and Binder (1999) suggest that improved performance on a re-administered PVT after such a warning might reflect a more sophisticated form of response bias, in which case the validity of the test results remains in question. This issue is a limitation not only of this study but of the entire field of neuropsychological assessment: a gold standard for measuring performance validity is lacking. Therefore, clinicians should be cautious in interpreting neuropsychological test results in the presence of PVT failure, even after improved performance on repeated administration.
In general, the clinician determines the credibility of an individual patient’s symptoms and test performance in light of all available information (i.e., the interview with the patient, his or her behavioral presentation during the assessment, and scientific knowledge of the patterns and severity of cognitive disorders associated with the clinical condition). Using validity tests improves this determination of the credibility of neuropsychological test results compared with clinical judgment alone (Dandachi-FitzGerald, Merckelbach, & Ponds, 2017).

In conclusion, our findings suggest that (a) underperformance occurs in a substantial number of CFS patients referred for treatment, (b) performance validity is not a stable characteristic but fluctuates between assessments, and (c) a limited feedback intervention may improve performance on measures of information processing speed. These findings imply that performance validity should be assessed in every repeated neuropsychological assessment. Similarly, research on cognitive deficits in CFS patients needs to take performance validity into account. Finally, the possible positive effect of a feedback intervention warrants further research. Engaging in underperformance might reinforce the patient’s experience of symptoms and illness behavior; research into strategies that might prevent or alter this behavior is therefore important.

Acknowledgments

We thank Lianne Vermeeren for the collection of the data. Part of the results of this study was presented at the mid-year meeting of the International Neuropsychological Society, July 2016, London (UK).

References

- Arrindell, W. A., & Ettema, J. H. (2005). Symptom Checklist. Handleiding bij een multidimensionale psychopathologie indicator [Symptom Checklist. Manual of a multidimensional psychopathology indicator]. Amsterdam: Harcourt Test Publishers.

- Bergner, M., Bobbitt, R. A., Carter, W. B., & Gilson, B. S. (1981). The Sickness Impact Profile: Development and final revision of a health status measure. Medical Care, 19(8), 787–805.

- Boone, K. B. (2007). Assessment of feigned cognitive impairment: A neuropsychological perspective. New York: Guilford Press.

- Bush, S., Ruff, R., Tröster, A., Barth, J., Koffler, S., Pliskin, N., … Silver, C. (2005). NAN position paper. Symptom validity assessment: Practice issues and medical necessity. NAN Policy & Planning Committee. Archives of Clinical Neuropsychology, 20(4), 419–426.

- Busichio, K., Tiersky, L. A., DeLuca, J., & Natelson, B. H. (2004). Neuropsychological deficits in patients with chronic fatigue syndrome. Journal of the International Neuropsychological Society, 10(2), 278–285.

- Capuron, L., Welberg, L., Heim, C., Wagner, D., Solomon, L., Papanicolaou, D. A., … Reeves, W. C. (2006). Cognitive dysfunction relates to subjective report of mental fatigue in patients with chronic fatigue syndrome. Neuropsychopharmacology, 31(8), 1777–1784.

- Carone, D. A., Iverson, G. L., & Bush, S. S. (2010). A model to approaching and providing feedback to patients regarding invalid test performance in clinical neuropsychological evaluations. The Clinical Neuropsychologist, 24(5), 759–778.

- Centraal Begeleidings Orgaan. (2013). Richtlijn Diagnose, behandeling, begeleiding en beoordeling van patiënten met het chronisch vermoeidheidssyndroom (CVS) [Guideline: Diagnosis, treatment, coaching and evaluation of patients suffering from chronic fatigue syndrome (CFS)]. Retrieved from https://www.diliguide.nl/document/3435/file/pdf/

- Christodoulou, C., DeLuca, J., Lange, G., Johnson, S. K., Sisto, S. A., Korn, L., & Natelson, B. H. (1998). Relation between neuropsychological impairment and functional disability in patients with chronic fatigue syndrome. Journal of Neurology, Neurosurgery & Psychiatry, 64(4), 431–434.

- Cockshell, S. J., & Mathias, J. L. (2010). Cognitive functioning in chronic fatigue syndrome: A meta-analysis. Psychological Medicine, 40(8), 1253–1267.

- Cockshell, S. J., & Mathias, J. L. (2012). Test effort in persons with chronic fatigue syndrome when assessed using the Validity Indicator Profile. Journal of Clinical and Experimental Neuropsychology, 34(7), 679–687.

- Cockshell, S. J., & Mathias, J. L. (2014). Cognitive functioning in people with chronic fatigue syndrome: A comparison between subjective and objective measures. Neuropsychology, 28(3), 394–405.

- Dandachi-FitzGerald, B., Merckelbach, H., & Ponds, R. (2017). Neuropsychologists’ ability to predict distorted symptom presentation. Journal of Clinical and Experimental Neuropsychology, 39(3), 257–264.

- Dandachi-FitzGerald, B., Ponds, R. W. H. M., Peters, M. J. V., & Merckelbach, H. (2011). Cognitive underperformance and symptom over-reporting in a mixed psychiatric sample. The Clinical Neuropsychologist, 25(5), 812–828.

- De Bie, S. (1987). Standaardvragen 1987: Voorstellen voor uniformering van vraagstellingen naar achtergrondkenmerken en interviews [Standard questions 1987: Proposal for uniformization of questions regarding background variables and interviews]. Leiden, Netherlands: Leiden University Press.

- DeLuca, J., Johnson, S., Ellis, S. P., & Natelson, B. H. (1997). Cognitive functioning is impaired in patients with chronic fatigue syndrome devoid of psychiatric disease. Journal of Neurology, Neurosurgery & Psychiatry, 62(2), 151–155.

- Derogatis, L. R. (1994). SCL-90-R: Administration, scoring and procedures manual (3rd ed.). Minneapolis, MN: National Computer Systems.

- Field, A. P. (2013). Discovering statistics using IBM SPSS Statistics: And sex and drugs and rock 'n' roll (4th ed.). London: Sage.

- Fox, D. D. (2011). Symptom validity test failure indicates invalidity of neuropsychological tests. The Clinical Neuropsychologist, 25(3), 488–498.

- Fukuda, K., Straus, S. E., Hickie, I., Sharpe, M. C., Dobbins, J. G., & Komaroff, A. (1994). The chronic fatigue syndrome: A comprehensive approach to its definition and study. International Chronic Fatigue Syndrome Study Group. Annals of Internal Medicine, 121(12), 953–959.

- Goedendorp, M., van der Werf, S., Bleijenberg, G., Tummers, M., & Knoop, H. (2013). Does neuropsychological test performance predict outcome of cognitive behavior therapy for chronic fatigue syndrome and what is the role of underperformance? Journal of Psychosomatic Research, 75(3), 242–248.

- Heilbronner, R. L., Sweet, J. J., Attix, D. K., Krull, K. R., Henry, G. K., & Hart, R. P. (2010). Official position of the American Academy of Clinical Neuropsychology on serial neuropsychological assessments: The utility and challenges of repeat test administration in clinical and forensic contexts. The Clinical Neuropsychologist, 24(8), 1267–1278.

- Heilbronner, R., Sweet, J., Morgan, J., Larrabee, G., Millis, S., & Conference Participants. (2009). American Academy of Clinical Neuropsychology consensus conference statement on the neuropsychological assessment of effort, response bias, and malingering. The Clinical Neuropsychologist, 23, 1093–1129.

- Horner, M. D., Turner, T. H., VanKirk, K. K., & Denning, J. H. (2017). An intervention to decrease the occurrence of invalid data on neuropsychological evaluation. Archives of Clinical Neuropsychology, 32, 228–237.

- Jacobs, H. M., Luttik, A., Touw-Otten, F. W., & de Melker, R. A. (1990). De 'sickness impact profile'; resultaten van een valideringsonderzoek van de Nederlandse versie [The Sickness Impact Profile: Results of an evaluation study of the Dutch version]. Nederlands Tijdschrift voor Geneeskunde, 134, 1950–1954.

- Jason, L. A., Richman, J. A., Rademaker, A. W., Jordan, K. M., Plioplys, A. V., Taylor, R. R., … Plioplys, S. (1999). A community-based study of chronic fatigue syndrome. Archives of Internal Medicine, 159(18), 2129–2137.

- Johnson, J. L., & Lesniak-Karpiak, K. (1997). The effect of warning on malingering on memory and motor tasks in college samples. Archives of Clinical Neuropsychology, 12(3), 231–238.

- Knoop, H., Prins, J., Stulemeijer, M., van der Meer, J. W., & Bleijenberg, G. (2006). The effect of cognitive behaviour therapy for chronic fatigue syndrome on self-reported cognitive impairments and neuropsychological test performance. Journal of Neurology, Neurosurgery & Psychiatry, 78(4), 434–436.

- Knoop, H., van der Meer, J. W. H., & Bleijenberg, G. (2008). Guided self-instructions for people with chronic fatigue syndrome: Randomised controlled trial. British Journal of Psychiatry, 193(4), 340–341.

- Krishnan, M., & Donders, J. (2011). Embedded assessment of validity using the Continuous Visual Memory Test in patients with traumatic brain injury. Archives of Clinical Neuropsychology, 26(3), 176–183.

- Larrabee, G. J. (2012). Performance validity and symptom validity in neuropsychological assessment. Journal of the International Neuropsychological Society, 18(4), 625–631.

- Marshall, P., Schroeder, P., O’Brien, J., Fischer, R., Ries, A., Blesi, B., & Barker, J. (2010). Effectiveness of symptom validity measures in identifying cognitive and behavioral symptom exaggeration in adult attention deficit hyperactivity disorder. The Clinical Neuropsychologist, 24(7), 1204–1237.

- Merckelbach, H., Dandachi-FitzGerald, B., van Mulken, P., Ponds, R., & Niesten, E. (2015). Exaggerating psychopathology produces residual effects that are resistant to corrective feedback: An experimental demonstration. Applied Neuropsychology: Adult, 22(1), 16–22.

- Reeves, W. C., Lloyd, A., Vernon, S. D., Klimas, N., Jason, L. A., Bleijenberg, G., … Unger, E. R. (2003). Identification of ambiguities in the 1994 chronic fatigue syndrome research case definition and recommendations for resolution. BMC Health Services Research, 3(1), 25.

- Roor, J. J., Dandachi-FitzGerald, B., & Ponds, R. W. M. (2016). A case of misdiagnosis of mild cognitive impairment: The utility of symptom validity testing in the outpatient memory clinic. Applied Neuropsychology: Adult, 23(3), 172–178.

- Schenk, K., & Sullivan, K. A. (2010). Do warnings deter rather than produce more sophisticated malingering? Journal of Clinical and Experimental Neuropsychology, 32(7), 752–762.

- Schmand, B., & Lindeboom, J. (2005). Amsterdam Short-Term Memory Test: Manual. Leiden, Netherlands: Psychologische Instrumenten, Tests en Services.

- Servaes, P., Verhagen, C., & Bleijenberg, G. (2002). Determinants of chronic fatigue in disease-free breast cancer patients, a cross-sectional study. Annals of Oncology, 13(4), 589–598.

- Stinissen, J., Willems, P. J., Coetsier, P., & Hulsman, W. L. (1970). Handleiding bij de Nederlandse bewerking van de WAIS [Manual of the Dutch edition of the WAIS]. Amsterdam: Swets and Zeitlinger.

- Suchy, Y., Chelune, G., Franchow, E. I., & Thorgusen, S. R. (2012). Confronting patients about insufficient effort: The impact on subsequent symptom validity and memory performance. The Clinical Neuropsychologist, 26(8), 1296–1311.

- Van der Werf, S., Prins, J., Jongen, P., van der Meer, J., & Bleijenberg, G. (2000). Abnormal neuropsychological findings are not necessarily a sign of cerebral impairment: A matched comparison between chronic fatigue syndrome and multiple sclerosis. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 13, 199–203.

- Van der Werf, S., de Vree, B., van der Meer, J., & Bleijenberg, G. (2002). The relations among body consciousness, somatic symptom report, and information processing speed in chronic fatigue syndrome. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 15, 2–9.

- Van Valen, E., van Hout, M., Heutink, M., Wekking, E., van der Laan, G., Hageman, G., … Schmand, B. (2015). Performance validity in chronic solvent-induced encephalopathy. Poster session presented at the annual meeting of the Developmental Neurotoxicology Society, Montréal, Canada.

- Vercoulen, J. H., Bazelmans, E., Swanink, C. M., Galama, J. M., Fennis, J. F., van der Meer, J. W., & Bleijenberg, G. (1998). Evaluating neuropsychological impairment in chronic fatigue syndrome. Journal of Clinical and Experimental Neuropsychology, 20(2), 144–156.

- Worm-Smeitink, M., Gielissen, M., Bloot, L., van Laarhoven, H. W., van Engelen, B. G., van Riel, P., … Knoop, H. (2017). The assessment of fatigue: Psychometric qualities and norms for the Checklist individual strength. Journal of Psychosomatic Research, 98, 40–46.

- Youngjohn, J. R., Lees-Haley, P. R., & Binder, L. M. (1999). Comment: Warning malingerers produces more sophisticated malingering. Archives of Clinical Neuropsychology, 14(6), 511–515.