ABSTRACT

Public engagement is crucial to strengthen responsibility frameworks in highly innovative contexts, including as part of business organisations. One particular innovation that calls for public engagement is gamification. Gamification fosters changes in working practices to improve the organisation, efficiency and productivity of a business by introducing gratification and engagement mechanisms in non-gaming contexts. Gamification modifies the workforce’s perception of constraints and stimulates the voluntary assumption of best practices to the benefit of employees and enterprises alike. Here, we broadly discuss the use of gamification at work. Indeed, gamification raises several concerns about privacy, due to the massive collection, storage and processing of data, and about the freedom of employees: as the level of data protection decreases, so too does workers’ self-determination. We argue that the implementation of privacy by design can not only strengthen autonomy via data protection but also develop more viable instances of RRI in accordance with human rights.

Introduction

Gamification innovations represent a quiet revolution in the organisation and management of work environments in which gratification and engagement mechanisms (comparable to those in videogamesFootnote1) are introduced in non-gaming workplace contextsFootnote2 (Deterding et al. Citation2011). Serious games are concerned with the use of gaming for purposes other than mere entertainment or fun and have ‘a special power to motivate and instruct,’ thus becoming an excellent tool for easy and quick learning (Meadows Citation1999, 345). Because of this ‘learning by doing’ (Dewey Citation1916), gamification is particularly useful in non-ludic contexts. Game elements – including avatars, challenges, competitions, leaderboards, notifications, user profiles and role-playing – are being implemented across a wide range of sectors such as healthcare, marketing, finance, education, logistics, e-commerce and retailing. Several different organisations deploy gamification to enhance efficiency and productivity by stimulating their workers or other stakeholders (patients, teachers, students, clients etc.) to adopt a given desired behaviour without explicitly forcing it and to respond to anticipated problems according to their interaction with the game (Mavroeidi, Kitsiou, and Kalloniatis Citation2020).

In business, game design elements are increasingly being used to create more attractive work environments, capture user motivation and engagement, increase worker competence, train employees and foster best practices while avoiding the application of traditional rules and disciplinary sanctions (Mavroeidi, Kitsiou, and Kalloniatis Citation2019; Warmelink et al. Citation2020). Game design elements have been used, for instance, to train surgeons to ‘heighten the awareness of all aspects of thoracic surgical education’ in a more stimulating format (Mokadam et al. Citation2015, 1053) and to provide ‘an active learning and training environment for military jet pilots’ in flight simulators (Noh Citation2020). Alibaba introduced game design elements in its digital wallet Alipay so ‘users get points through responsible use of Alibaba fintech offerings.’Footnote3 Digital games that incorporate sustainability issues have been used in education to update the competences of schoolteachers, students, who learn how to develop such digital games, and children, who learn the value of sustainability (Nordby et al. Citation2016). Apart from these interesting cases, gamification also raises some concerns, depending on the context.Footnote4

Amazon is also experimenting with using game design elements in low-skilled work. It employs them to reduce the negative experiences associated with repetitive tasks such as taking items from shelves and stowing products on them. Employees engage in a ‘racing’ game to fulfil customer orders while their progress is registered in a videogame format (Bensinger Citation2019). Workstations display staff progress in the game on small screens: lights indicate which item the worker/player needs to put in a given bin and scanning devices track task completion. Individuals, teams or entire floors can then be entered in these race-style competitions to pick or stow toys, cell phone cases, coffee makers, etc. As the game progresses, employees are then rewarded with points, virtual badges and other goodies at the end of their shift. This boosts employee engagement in the task at hand and encourages adherence to standard organisational practices. This profound ability to transform staff motivation and habits – and its potential to encompass other key stakeholders – makes the innovation of gamification a particular case of innovation that calls for a responsible innovationFootnote5 framework.

However, despite increases in productivity, efficiency and staff motivation, especially in contexts where there is a need to perform particularly repetitive and stressful tasks (e.g. in logistics, e-commerce, retailing and sharing economy sectors such as ride-hailing, food delivery, couriers, taxis etc.), innovations in workplace gamification can raise social and ethical issues. In more industrialised contexts, in fact, game design elements raise questions regarding health and autonomy,Footnote6 for example, since workers are steered into doing what they probably would not have done of their own accord, with potentially significant consequences for their health. Moreover, this effect is reached through a severe diminution of privacy safeguards since the introduction of gaming elements requires pervasive collection, storage and processing of employee data (Mavroeidi, Kitsiou, and Kalloniatis Citation2019).

This point puts employee subjectivity in sharp relief in contexts where game design elements push workers to reach higher performance levels by alleviating the tedious and stressful nature of their tasks. In this way, game design elements function as a form of human enhancement both physically (since the worker’s performance is enhanced) and morally (since the worker’s motivation is modified – Perryer et al. Citation2016). In an analysis of ‘human enhancement’ in work contexts, Pustovrh, Mali, and Arnaldi (Citation2018) argue that cognitive enhancement (pharmaceutical in their case) can raise work norms and create the conditions for work to respond to ever more stressful and fatiguing demands.Footnote7 Responsibility is therefore needed in managing this efficiency and effectiveness and the augmentation of bodily capacities to prolong this optimised productive behaviour over time. Gamification here becomes the main means of reaching this aim.

In this context, however, since game design elements require data to function, the process of altering workers’ preferences requires a massive collection of employee data. There therefore exists a special link between the data that are required by game design elements in work and the autonomy of the individuals whose will is altered in gamified contexts. As personal information collected during the ‘game experience’ is processed through automated profiling templates (the evaluation logic and psychological induction mechanisms of which remain opaque and outside the control of the individual), the question of transparency becomes a problem of individual self-determination versus organisational conformity.Footnote8 Since workers are led to increase their performance in more demanding tasks which, given their repetitive and stressful nature, they probably would not have taken on spontaneously, in the end they are induced to want what the employer wants and to adhere completely to the purposes of the enterprise. Viewed from this perspective, gamification almost represents a devious form of instrumentalisation of workers, making them subject to employer demands. Therefore, through the use of game design elements and their consequences in terms of ludopathy and gaming addiction (Griffiths and Alex Citation2009), the transformation of staff motivation leads to employee subjectivity overlapping with that of the employer. In this context, the aims of the enterprise and workers therefore tend to coincide.

Considering their potential impact on data protection, health and autonomy rights,Footnote9 gamification innovations may therefore stray onto a collision course with individual rights,Footnote10 and so risk being assessed as irresponsible innovation (von Schomberg Citation2013).

This study does not reject gamification in the workplace as such, but questions which design requirements should be in place to meet the requirements of RRI and PbD (D’Acquisto and Naldi Citation2017). We do not merely critique gamified experiences that raise concerns but try to indicate the necessary correctives to make these experiences ethically sound and responsible. We intend to show that the principle of PbD can encourage responsible innovations in gamification, i.e. ones providing the desired productivity increases in an organisation while at the same time safeguarding the rights of its employees. Furthermore, we hypothesise that PbD-led responsible innovation can strengthen both data protection and autonomy in work environments.

This study is structured as follows. First, a case of game design elements in the workplace is presented that raises concerns from a RRI perspective, in particular regarding worker privacy, health and autonomy, which are considered from the legal standpoint in the following sections. To analyse the case, the literature on RRI is reviewed following a rights-based approach and responsible innovation frameworks are applied to the case of gamification innovations to identify the social-ethical issues involved.Footnote11 Next, the PbD principle is proposed as a means to operationalise RRI in gamification. Finally, the introduction of game design elements in the workplace in the light of PbD is framed, suggesting design requirements and actions to be adopted at the design stage (or ‘RRI correctives’) which can potentially strengthen data protection in an organisation in order to also enhance workers’ autonomy and their health.

The rise of gamification in the workplace

Deterding et al. (Citation2011) define gamification as the implementation of game elements in services which are not games. Collecting ‘points’ to pass ‘levels’ and winning ‘rewards’ are examples of game elements (Cafazzo et al. Citation2012). Gamification offers benefits in several domains. In education, users’ interest in learning is increased (Lucassen and Jansen Citation2014), while gamification is used in logistics activities to maintain workers’ motivation (Hense et al. Citation2014). Apart from these domains, interactive elements have been integrated in the working environment to enhance user engagement and adoption of the services involved. Additionally, gamification affects users’ behaviour, as in some domains users have to complete their tasks to win badges (Mavroeidi, Kitsiou, and Kalloniatis Citation2019; AlMarshedi et al. Citation2017).

Gamification is used extensively in workplaces and results in various benefits, as it can be a solution to numerous work challenges in an organisation, including training and skill development (Ruhi Citation2015). Crucially, through game-like processes a productive and healthy work environment is maintained in which repetitive tasks become enjoyable. By enhancing employees’ willingness to complete such tasks, their workplace stress can be more effectively controlled, resulting in higher levels of staff health and wellbeing (Herzig, Ameling, and Schill Citation2012).

According to Oprescu, Jones, and Katsikitis (Citation2014), ten principles can be applied to transform work activities through gamification. These are summarised in the mnemonic ‘I play at work.’ Taking each in turn, the ‘persuasive elements’ of gamification increase employees’ satisfaction with their work, while ‘learning orientation’ refers to the development of personal and organisational capabilities and resources. The ‘achievement-based rewards’ principle, meanwhile, boosts employee retention. The ‘Y-generation adaptable’ principle focuses on work experiences that are enjoyable and rewarding for the staff involved. ‘Amusement factors’ result in personal satisfaction. Similarly, the ‘transformative’ and ‘wellbeing oriented’ principles refer to enhanced levels of productivity and personal/organisational wellbeing respectively. Employee self-efficacy is encompassed in the ‘orientation’ principle, while the ‘research-generating’ principle improves collaboration and understanding between managers and their teams, and decision-making processes. Finally, the ‘knowledge-based’ principle refers to the systematic provision of feedback, including rewards, to employees. According to Perryer et al. (Citation2016), some common issues deserve attention when gamified services are designed, and several design rules should be followed for these services to be effective. These rules include an emphasis on cooperation, to avoid negative feelings, and a requirement that the game demands no further effort from workers, to avoid demotivation. Designing services with these issues in mind ensures an appropriate balance between gaming and working (Perryer et al. Citation2016).

Gamification is variously spreading in the workplace. DevHub provides a gamified application through which employees win badges – ‘devatars’ – for completing their tasks, thus intensifying productivity, particularly among those who previously avoided such tasks. At Google, employees who travel are encouraged to use a Travel Expense app. If they spend less than their designated expenses they are given the opportunity either to reallocate the difference to a subsequent trip, to receive it in their next pay check or to donate it to charity (Datagame Citation2016). Gamification can also be used to resolve organisational gaps. Lawley Insurance, for instance, designed a staff rewards contest to find and correct database inaccuracies that were leading to unreliable sales forecasts. Within two weeks, staff had hit as many of the company’s targets as in the previous seven and a half months combined (Datagame Citation2016). Gamification also has interesting applications in the field of sustainability. The UVa Bay Game has been tested as an effective learning platform to raise awareness of sustainability issues. Students played ten two-year rounds adopting a ‘doing right by doing the right thing’ approach. The aim was to change their behaviour to make it more environmentally friendly (Learmonth et al. Citation2011).

Large-scale distribution

Despite some positive examples of applications of game design elements in the workplace (e.g. training aviators, surgeons, school teachers, students, work reorganisation in the insurance sector etc.), there are concerns regarding workplace gamification, particularly when one looks at business sectors such as logistics, e-commerce, retailing, ride-hailing, food delivery and couriers. In this case, there are specific factors related to work involving repetitive, stressful and non-provisional manual tasks, the organisation of turnover and the particular heaviness of night and day shifts that make the use of gamification problematic. In logistics, for example, packaging and order-picking tasks entail that workers have to perform the same movements for hours in day and night turns often only in contact with robots and machines. This type of work can lead to chronic diseases and various health problems concerning tendons and muscles in the workers’ arms and legs, especially when a shift lasts the whole week (Ferro Citation2021). This aspect of work processes can change the impact of game design elements in the workplace. As ILO (Citation2021, 220) highlights, ‘gamification schemes […] push workers towards excessively long hours and high-intensity work could be considered injurious to health.’ Using gamification techniques in such work processes sheds light on more general aspects of gamification that are less visible in training settings.

Special attention must be devoted to the game case since playing is essentially opposed to working. Playing has specific relevance in human life since it allows forms of constraints typical of working to be interrupted, thus enriching the individual’s imagination. While games are not the free play of imagination but quite the opposite since they follow rules, playing is fundamentally different to working. Although in an ordered form,Footnote12 play is a key element of what in Marxist terms can be called the realm of freedom, as opposed to the realm of necessity. Homo ludens cannot be at the same time homo faber. Play in the service of work aims and with the ultimate goal of profit-making must be seen as a perversion of play when looked at from the perspective of approaches ranging from Friedrich Schiller’s ‘On the Aesthetic Education of Man’ (1794) to Huizinga (Citation1998). In this sense, gamification only transforms the recreative goal of playing into a means for better performing mandatory work tasks. This is a kind of trick or illusion that has consequences for workers.

Gamifying repetitive mechanical work may therefore be seen as an abuse of the human drive to play, a form of instrumentalisation of human beings and so a violation of their autonomy. As Schiller said, man only plays when he is in the fullest sense of the word a human being, and he is only fully a human being when he plays. Moreover, practically speaking, game addiction, ludopathy and a state of permanent competition with other colleagues accompanied with a perception of no constriction in performing heavy tasks can further increase pressure on employees from both the physical and psychological standpoints (Griffiths and Alex Citation2009).

Recently, the media have highlighted the case of Amazon warehouses, where some forms of gamification have been experimented with for a while (Bensinger Citation2019). This new form of labour organisation is especially relevant in the European context, in particular in terms of autonomy, health and data protection (on this, see § 4). Therefore, it is crucial to assess its sustainability given the rights system implemented in EuropeFootnote13 and to see how it can be transformed in a way which might be compatible with European rights regulation. In other words, it should be seen whether gamification can lead to responsible and ethical outcomes (Warmelink et al. Citation2020).

Despite much attention by mass media, not much is known about the gamification practices in Amazon warehouses (no video or pictures are available as the use of mobile phones is not allowed). Nonetheless, information available from traditional media allows us to understand the real dimension of the phenomenon.

In 2019, Amazon started to gamify tasks in some of its warehouses to boost employees’ motivation when picking and stowing items, often for ten hours a day or more (Bensinger Citation2019). In line with the burgeoning automation of work processes, the Amazon workforce was forced to be isolated from other workmates, to often be stationary and to perform highly repetitive tasks in situ. The use of robots flanking humans has certainly made the work less strenuous since employees no longer have to run kilometres during their shifts. However, the trade-off is a more monotonous work pattern.

To enhance the workers’ productivity and make their tasks more enjoyable – or perhaps, in fact, endurable – Amazon has therefore introduced a form of gamified re-organisation of logistic work (Warmelink et al. Citation2020; Delfanti Citation2019). Experimental games entitled MissionRacer, PicksInSpace, Dragon Duel and CastleCrafter have been implemented in five warehouses on a voluntary basis. These games feature vintage old-fashioned graphic design reminiscent of videogame masterpieces such as Donkey Kong and Pac-Man. The games are displayed on small screens of workstations, like a form of workplace Tetris, and indicate which item must be placed in a given bin. Through the use of scanning devices and a tracking system for items (Delfanti Citation2019), individuals, teams and even entire floors can follow the progress of the work, which correlates with the completion of levels in a virtual competition. Staff are then awarded points, rewards, virtual prizes or virtual badges based on their standing on the leaderboard in the style of arcade machines popular in the 1980s. Thanks to the engaging play element associated with video games, workers perceive lower levels of fatigue and stress (Griffiths and Alex Citation2009) and are more motivated to follow standard protocol in less time. Anonymous workers interviewed by the Washington Post stated that they were able to stow up to 500 items in less than an hour. Other interviewees, also anonymously, voiced appreciation of this gamification as a means of breaking the monotony of their tasks at work.

Clearly, the redefinition of work via gamification provides significant benefits in organisational efficiency and productivity. The need for complete automation of work is avoided (Casilli Citation2020) while maintaining the better competence of humans with the same efficiency as machines but at a cheaper cost and with comparable outcomes (Warmelink et al. Citation2020). In this light, gamification leads to better integration of human labour with automatised work owing to a clear amelioration of workforce performance. Working in an artificial and permanent state of competition, employees are motivated to reach greater outcomes (Warmelink et al. Citation2020).

For the record, Amazon has emphasised that no employee was compelled to take part in the Palo Alto experiment and that the workers who chose not to engage in gamification processes have not been monitored or penalised (Bensinger Citation2019). However, a monitoring system was created following workers’ participation in the gamification experiment. Moreover, given the generally accepted system of monitoring to evaluate the speed, efficiency and other key factors in workforce performance, it can be argued that non-participating workers are also covertly entered in competition with those taking part in the gamification experiments.

It is evident that for gamification to be successful, an efficient data collection system is needed: game design elements do not work without massive data collection.

However, health and privacy are not the only relevant concerns. Since gamification enhances workers’ performance, their capacity for self-determination can also be considered at stake: deeper engagement in gaming, ludopathy, game addiction and the deliberate shaping of workers’ motivation can all alter it (Griffiths and Alex Citation2009), making their work easier to integrate with that of machines. In this light, gamification functions like a clear form of human enhancement which modifies the work conditions of employees both physically and psychologically (Perryer et al. Citation2016). The powerful human drive to play, to use Schiller’s notion, is used to turn work into play, allowing humans to better ‘function’ in algorithmically controlled work processes in which humans are attached to machines in new ways. This leads to a reinterpretation of the man/machine relationship within the enterprise.

Coming back to the ongoing process of automatisation in Palo Alto, a recent analysis of patents owned by Amazon noted that ‘workers are not about to disappear from the warehouse floor’ (Delfanti and Frey Citation2020). Seen in this light, gamification can be understood as the other side of the process of automatisation of work in the age of service digitalisation. Given the fact that introducing game design elements in the workplace pushes employees to reach a level of efficiency comparable with that of machines, workers become a competitive substitution of the process of automation. However, this outcome is only possible through persistent, selective and incisive control of data. Since gamification requires the collection, storage and processing of staff data to function properly, along with constant monitoring of their activities, systematic control of privacy appears to act as the means with which corporations can achieve total transformation of the shopfloor process. As Robinson et al. (Citation2020) highlight, these ‘opaque methods of ‘algorithmic management’ produce information asymmetries and surveillance that restrict workers’ autonomy’ and it affects their ability to develop autonomous lives (Roessler Citation2005) and even their identities.Footnote14 This is exactly the charge that can be levelled at gamification.

Workers’ rights involved in gamification

Unlike in training, the implementation of game design elements in large-scale distribution has real consequences for workers. As the ILO notes, ‘[w]here they are available, bonuses have created a strong incentive structure through gamification that encourages workers to work long hours and with high intensity’ (ILO Citation2021, 159). This affects workers’ health as well as their autonomy and privacy, even though the deterioration of their health and the extensive use of their data take place on a voluntary basis. Importantly, these rights do not only have a moral dimension. They are also legally recognised, and the norms that recognise them give rise to legal obligations. Various enforcement mechanisms involving courts at the national, supranational and international levels can therefore hinder innovations that affect the individual rights of workers.

First, working with high intensity to perform low-skilled tasks that are laborious and repetitive calls into question the right to health in the work environment (ILO Citation2021, 220). This right is recognised in the constitutions of several European countries (Germany, Greece, Italy, France, Spain, the Netherlands etc.). It also has supranational recognition in the EU Charter of Fundamental Rights (art. 35) and international recognition in art. 8 of the European Convention on Human Rights (ECHR), which is supported by the jurisprudence of the European Court of Human Rights (ECtHR), from which precise obligations on States derive (e.g. Open Door Counselling et al. v. Ireland App. 14234/88). The right to health covers both the right to access healthcare and the right not to suffer any diminution of one’s psycho-physical health (Ruggiu Citation2018, 305 ff.). This has special relevance in the case of low-skilled work like that at the centre of some instances of gamification.

This increase in the risk to workers’ health is based on a subtle modification of their motivation since the introduction of game design elements has effects comparable to human enhancement, namely to an alteration of physical performance (because their work performance is enhanced) and their psychology (because their will is altered and their attention, concentration capacity and resistance to stress are enhanced). This puts in question employees’ self-determination ability.

In legal terms, workers’ self-determination represents the limit of the directorial and organisational power of the employer, since it limits both the employer’s power to determine work tasks (which is not unlimited) and workers’ control over the execution of these tasks. According to the jurisprudence of the ECtHR, art. 8 ECHR (family and private life) protects the sphere of individual autonomy against enterprise power given the special vulnerability of workers due to the original labour asymmetry (e.g. Copland v. the U.K., App. 62617/00; Barbulescu v. Romania, App. 61496/08, § 70). Given the special link between the determination of tasks and control over how these tasks are performed, this sphere is protected by both the 1981 Convention No. 108 (Automatic Processing of Personal Data) and the Recommendation CM/Rec 2015(5) with regard to the use of digital technology in the work environment. The latter excludes employer interference in the private life of employees (art.14) and the use of personal data not pertinent to tasks set for the work position (art. 19). On this issue, the ILO (Citation2021, 177) notes that ‘[a] key facet of autonomy and control over work is related to their ability to choose working hours and break times, as well as to decline certain orders, for reasons such as exhaustion or safety concerns.’ This means that the unbalanced relationship between an enterprise and its employees puts their autonomy in a situation of initial vulnerability, over which gamification can have effects. This is the reason why the ILO requires special attention in the case of gamification (ibid. Citation2021, 220).

Since the intrinsic aim of game design elements is to modify the individual’s motivation and this usually happens with a large collection and processing of data, within the EU the GDPR is also involved. The GDPR expressly takes employer-worker asymmetry into account since it does not consider worker consent a legal basis for processing workers’ data given their position of vulnerability (arts. 6 and 9). Data processing can be based on the enterprise’s interest in execution of the contract to which the data subject, namely the worker, is party (art. 6, 1 let. b). However, this interest is limited given the different strengths of the two parties, with the will of the employee being in a vulnerable position. In this sense, Opinion 8/2001 of the Article 29 Working Party on the processing of personal data in the employment context states that ‘[r]eliance on consent should be confined to cases where the worker has a genuine free choice and is subsequently able to withdraw the consent without detriment.’ As it modifies the employee’s motivation and this aim is achieved via data processing, gamification alters the genuineness of will. This is why data protection is essentially linked to autonomy in this context. The link does not arise merely because any digital technology (artificial intelligence system, chatbot, virtual assistant, software) uses data, including personal data, to function: data processing by game design elements that involve workers is specifically aimed at modifying the subjects’ conditions of choice, namely their will. It also arises because loss of employees’ control over the sphere of self-determination starts with a parallel and pervasive loss of control over their data. In other words, the ability of gamification to foster deeper engagement by the individual (its intrinsic aim) is only achieved through collection, retention and processing of their data. Therefore, the ability to alter the individual’s motivation through gamification builds a circular connection between privacy and autonomy. In this regard, art. 88 GDPR gives States the power to adopt special measures to protect the rights and liberties of workers with regard to data processing.

If this is true, however, it also means that if we change game design elements in a way that is privacy sound, workers can not only recover control over their data but also halt the weakening of their capacity for self-determination. In this sense, we believe that the assessment of gamification can change via a design approach (see §§ 6 and 7).

Public engagement, Responsible Research and Innovation, and RRI by design

The misalignment between workers’ privacy, health and self-determination and the employer’s interest in better work organisation, efficiency and productivity leads to the question of whether gamification is ethically acceptable and what the conditions for it to be acceptable are.

The emerging field of RRI provides useful insights to assess the ethical acceptability of innovation systems, including in the field of gamification. The Amazon case shows that the enterprise’s interest in better organisation, efficiency and productivity via the use of game design elements can be accompanied with a sacrifice of working conditions (a state of permanent monitoring and/or competition), worsening of workers’ health (a situation of psychophysical stress due to the requirement for continual human enhancement), alteration of free self-determination ability (due to game engagement, game addiction and ludopathy, which are intrinsic in gamification) and loss of workers’ control over their data, which can be shared with the employer and even other colleagues. We therefore wonder if it is possible to build a responsibility framework for gamification innovations in the workplace and if so how (§ 7).

In the context of European policy, which aligns ethical concerns and societal interests with public investment in research and innovation, RRI has been developed as a governance framework in which ‘societal actors and innovators become mutually responsive to each other with a view to the (ethical) acceptability, sustainability and societal desirability of the innovation process and its marketable products’ (von Schomberg Citation2013, 63). This governance model aims to build a responsibility framework mainly by fostering stakeholderFootnote15 participation (inclusion) and implementing ethical acceptability in research and innovation.

Notwithstanding a large consensus on RRI at both the academic and institutional levels, two broad traditions in the RRI literature can be distinguished (Ruggiu Citation2015). These two traditions push RRI in two divergent directions: one towards ethical acceptability identified with norms set at the constitutional level; and the other towards inclusion as a process of public engagement. We believe that a fusion of these two traditions can be useful, not only for RRI in general but also in the case of gamification.

First, according to a normative substantial approach, the starting point of the innovation process is located in norms and values that generate products and services that serve society (von Schomberg Citation2013). The main characteristic of these values is that they can be identified at the level of constitutions or EU treaties (notably, arts. 2 and 3 of the Treaty on the European Union) and they aim to shape both science and innovation according to a top-down logic. Means of participation, research programmes and innovation must be anchored in shared values. Therefore, the ethical acceptability of innovation depends on values such as health, self-determination and privacy (according to an approach oriented to rights – Ruggiu Citation2015), which indicate what is ethically acceptable and what is not. In our case, for instance, privacy can be identified as a substantive norm that informs the gamification design process, leading to new products and services that respect the societal value of privacy. One potential drawback of this approach, however, is that values such as privacy are not monolithic. Instead, they have various levels of application with various consequences, depending not only on the law or the way in which courts apply it but also on the purpose pursued. Limiting the privacy of individuals for the sake of public security, for example, differs from limiting it for motives that only serve the interests of private corporations. Moreover, this approach raises questions concerning what constitutes a justifiable reason to interfere with individual autonomy and personal freedoms. In the case of gamification, the main problem would be that, on the one hand, even if privacy can be implemented top-down by the employer this might not satisfy the workers and, on the other hand, it might be insufficient to enhance their self-determination ability.

A second tradition, the procedural approach to RRI, instead focuses on the innovation process and the ways in which actors anticipate risks, reflect on desirable outcomes and engage stakeholders (Owen et al. Citation2013). In this approach, the process of stakeholder engagement, therefore, must be open, democratic and inclusive to create ethically acceptable solutions. It is (i) a framework of responsiveness able to attract inputs stemming from society (according to a bottom-up logic) and (ii) a framework of reflexivity that leads society to collectively reflect on the purposes of innovation (Owen et al. Citation2013). Public engagement can be aimed at either ‘restoring trust’ in matters such as innovation where people perceive that public institutions are too far away (e.g. GMO – von Schomberg Citation2013) or at ‘building robustness’ to strengthen the deliberative process (Groves Citation2011). In general, it is required because legal responsibility schemes can be insufficient in the case of innovation (Sand Citation2018). In these cases, to enlarge responsibility it is necessary for all parties involved to be actively engaged through a process of responsibilisation. Ethical acceptability, namely the values needed to anchor innovation, is therefore built bottom-up through society, with society and for society (Owen, Macnaghten, and Stilgoe Citation2012). This particular process of inclusion finally generates a shared vision of societal future driving innovation in an agreed given direction (Grinbaum and Groves Citation2013).

In this approach, predetermined normative claims are not declared but must be identified through the inclusion of all stakeholders. Here, the emphasis is on the responsible governance or management of the innovation process, which can be achieved through stakeholder participation (Lubberink et al. Citation2019). In highly uncertain contexts, public engagement is the only way to establish how risks must be allocated. More specifically, potential and unexpected risks must be anticipated (by means of public consultations, for example), the purposes of the innovation must be reflected on, societal actors must be included and engaged in the innovation process, and the innovators must be responsive to any societal concerns raised (through forms of participation that can reach the design stage). Such concerns include the following: (a) who could be negatively affected by the gamification innovation?; (b) what is the ultimate purpose of the gamification innovation?; (c) how can employees be involved in the game design process?; and (d) what ethical concerns have to be resolved before proceeding with the innovation?

In the case of privacy, for instance, it is critical for any violations – and their harmful effects – to be anticipated throughout the innovation process. This anticipation, combined with the need for reflection, has the potential to uncover any mismatches in the innovation between the interests of employers and their employees and opens up the possibility of redesigning for greater mutual benefit. Indeed, the identification of the risks involved in gamification should be assessed not only by designers and managers (data protection impact assessments) but by a broader spectrum of stakeholders including, above all, workers. They must be able to choose what data can be collected, stored, and processed, with whom they are to be shared, which technical data protection measures can be implemented and what limitations are to be imposed etc. (even through forms of ‘co-design’Footnote16). The resulting responsive/responsible behaviour should engender higher levels of trust, rapport and autonomy among the various parties involved. Given that privacy is by no means a unilateral value or norm that can be implemented top-down by the entrepreneur, anticipation, reflection, inclusion and responsiveness can therefore help innovators identify the design requirements for responsible gamification.

However, it has been noted that an important drawback of this procedural approach is the fact that stakeholder inclusion and deliberation cannot wholly resolve the normative questions about ethical considerations of privacy and autonomy (Blok Citation2019a). In the case of gamification, this means that even when letting workers actively participate in the process of choice in the enterprise, it cannot be ensured that the final decision taken will respect privacy. For example, in some instances of gamification workers might only adhere to the employer's desiderata and give up both their privacy and their autonomy.

Given the drawbacks of the two approaches, it has been argued that responsible management of the innovation process requires both predetermined substantive normative values and procedures, which together enhance the social desirability, ethical acceptability and sustainability of innovations (Blok Citation2019b). This means that, on the one hand, data protection must be ensured from the game design stage through a risk assessment of data breaches that could occur in gamification processes and through a following introduction of the necessary correctives in the design. On the other hand, workers should be allowed to actively take part in the design of all the elements that are implemented in the workplace according to a design strategy inspired by the GDPR. In other words, for a full responsibilisation of workers, it is necessary for measures implementing privacy (according to a rights-based approach) to be accompanied by measures implementing worker participation (‘RRI by design’). Whereas a focus on procedures alone can lead to distortive outcomes for the enterprise (e.g. violation of rights), combining these procedural elements with predetermined tools that embed privacy requirements from the outset (i.e. by design) ensures that responsibility can be concretised at the organisational level. For this to be successful, responsible management of innovation must be implemented not only at the level of individual innovation managers and designers but equally at the strategic organisational level and the economic system level through greater engagement of employees (Long, Inigo, and Blok Citation2020). This action aimed at shaping the game design elements according to the proposed RRI correctives (see § 7) leads to strengthening a responsibility framework in the field of gamification. 
This finally leads to an integrated and embedded approach to gamification innovations in which RRI is designed in from the outset, transforming the game design elements at the design stage (‘RRI by design’) (Owen Citation2014).

The privacy by design principle

The rise of the design-thinking approach

The concept of ‘RRI by design’ (Owen Citation2014) derives from a radical mutation in the approach to innovation that finds its apex in the PbD principle. Among the several privacy strategies,Footnote17 goal-oriented approaches, risk-oriented approaches and design strategies (Hoepman Citation2014) ensure a deep change of perspective in gamification innovations. This is the choice made, for example, by the GDPR in Europe, and it has its roots in a long debate involving the protection of privacy in the field of ICT.

The modern-day shift from industrial manufacturing to knowledge-based economies and digital service delivery has increased the value of information and the need to manage this change responsibly (Cavoukian Citation2011). The PbD principle attempts to respond to this need through a change of approach to innovation from a design-thinking perspective.

In business ethics, adopting design thinking leads to a radical change in the approach to problem solving at the organisational, strategic and product-development levels of an enterprise (Brown and Katz Citation2009). Complex problems, such as the ‘wicked problems’ (Buchanan Citation1992) in gamification, must be handled contextually at the design stage of a system, which in turn requires a degree of practical foresight (Jones Citation1992). This implies a shift in focus from mere analysis of a problem to its contextual resolution as the starting point of the construction of the system (Nelson and Stolterman Citation2012), as in the case of game design elements. The use of design-thinking methodology therefore provides a framework for understanding and pursuing innovation in methods that ultimately contribute to the systematic growth of the enterprise and enhanced benefits for its clientele.

The development of privacy-enhancing technologies (PETs) towards the end of the twentieth century shifted the attention of the scientific community to the protection of individuals’ personal information (Rodotà Citation1995; Borking et al. Citation1995). In the age of big data, the human body tends to become a digital body, a fulcrum for data that transcends the corporeal (Rodotà Citation2016). Against this backdrop of the datafication of human life (D’Acquisto et al. Citation2015, 8), protection of individuals and all their data becomes a ‘wicked problem’ that must be tackled from the outset, i.e. at the design stage. Privacy must be incorporated in IT systems, design processes, organisational procedures and planning operations that may impinge on individual lives and liberties (Cavoukian Citation2011, 1). This leads to forms of design oriented towards the protection of rights (Ruggiu Citation2015). Hence, the discussion shifts from the issue of ‘big data versus privacy’ to that of ‘big data with privacy,’ according to which privacy requirements should be identified early on in the big data analytics value chain (D’Acquisto et al. Citation2015, 8). Privacy-enhancing measures must be built in ‘by design’ (van den Berg and Leenes Citation2013), which leads us to the PbD principle. This approach has been incorporated in the GDPR, which is relevant in cases of gamification applied in Europe.

The protection of privacy under the GDPR: by design and by default

Under the GDPR, PbD (art. 25), which is mandatory,Footnote18 covers both the ‘data protection by design’ (DPbD) principle and ‘data protection by default’ (DPbd), which are strictly intertwined. These two principles are functional to one another.

DPbD is characterised by a proactive approach that aims to anticipate and prevent data breaches before they materialise (Cavoukian Citation2011, 1). This implies first a thorough risk assessment (‘data protection impact assessment’ – art. 35 GDPR) to identify at an early stage all the possible violations of rights according to a rights-based approach (Ruggiu Citation2015). Rather than reacting to privacy-invasive events after the fact, privacy standards must be set and enforced at the design stage of networked data technologies. Only by having a vision of potential breaches is it possible to imagine the counter-measures that can be adopted at the design stage (§ 7). Therefore, PbD aims to deliver the maximum degree of privacy from the outset, ensuring that personal information is automatically protected in any IT system or practice that involves the processing of data (Cavoukian Citation2011, 1).

According to DPbd, instead, privacy becomes the default setting: if individuals do nothing, the system will protect their privacy and continue to do so without a need for legal action or judicial remedy. In this sense, following the ‘DPbd’ principle, the types of data collected and/or processed must be solely those necessary to reach the predetermined purposes (‘data minimisation’ principle – art. 5 let. c GDPR) (Cavoukian Citation2011, 2). Moreover, the GDPR also provides that ‘by default personal data are not made accessible […] to an indefinite number of […] persons.’ Therefore, DPbD operates in a context that is already limited by default, strengthening the technologies that are developed. This action by default is functional to the implementation of the data protection measures at the design stage of any processing system, such as game design elements.
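To make the interplay between data minimisation and protective defaults more tangible, a minimal Python sketch follows. It is only an illustration under stated assumptions: the `PURPOSE_FIELDS` mapping, the `minimise` function and the `GameProfile` class are hypothetical names introduced here and do not come from the GDPR or from any existing gamification product.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a declared processing purpose to the only
# fields needed for it (an illustrative assumption, not taken from the GDPR).
PURPOSE_FIELDS = {
    "task_tracking": {"worker_id", "task_id", "completed"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only the fields necessary for the declared purpose.

    An unknown purpose yields an empty record, i.e. nothing is stored.
    """
    allowed = PURPOSE_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


@dataclass
class GameProfile:
    """Worker profile whose sharing options default to the most protective value."""
    worker_id: str
    visible_on_leaderboard: bool = False  # off unless the worker opts in
    share_with_colleagues: bool = False   # off unless the worker opts in
    shared_with: set = field(default_factory=set)

    def opt_in(self, recipient: str) -> None:
        """Record an explicit choice by the worker to share with one named recipient."""
        self.share_with_colleagues = True
        self.shared_with.add(recipient)


# Example: health and location data are dropped before storage.
raw = {"worker_id": "w1", "task_id": "t9", "completed": True,
       "heart_rate": 88, "location": "warehouse B"}
stored = minimise(raw, "task_tracking")
profile = GameProfile(worker_id="w1")
```

In this sketch, a worker who does nothing is neither listed on a leaderboard nor visible to colleagues, and any wider disclosure requires an explicit `opt_in` call naming the recipient.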

An example of how PbD has operated under the GDPR in Europe can be seen in the way in which cookies are handled when browsing a website in the EU, namely through the use of informative pop-ups that give all users the possibility to choose the way they want their data protected, the subjects who can access them, with whom the data can be shared, the limits of profiling and the limits of legitimate interest, etc. This would not be possible without a radical transformation by design of the technologies that are used to make the internet work.

The protection of data by design requires articulated and multi-level action. From the outset, PbD demands adherence to three guiding principles (Cavoukian Citation2011, 2). First, the purposes for which data are collected, used, retained and disclosed must be specified (‘purposes specification’) and communicated to the individual at the time of collection (art. 5 let. b GDPR). Second, the collection of personal data must be fair, lawful (‘lawfulness principle’ according to arts. 5 let. a and 6 GDPR) and limited to what is strictly necessary for the specified purposes (‘data minimisation’ – art. 5 let. c). Third, the use, retention and disclosure of personal information cannot proceed without the permission of the individual (‘consent’) except where otherwise required by law (art. 7 GDPR). These guiding principles are implemented by embedding privacy in the design and architecture of IT systems, operations and practices (Cavoukian Citation2011, 3) so that privacy implementation measures become an integral component of the system (rather than a reactive bolt-on) and without diminishing its overall functionality (PbD according to art. 25 GDPR). This means that in gamification contexts, workers must be well informed of the types of data, purposes of processing, the technical measures adopted, conditions for retention, durability (when the data are cancelled) and levels of protection (measures that are to be adopted). Furthermore, if the data are able to identify the subject, the worker must be put in the condition of making a real choice about them from the beginning of the development stage of the technology.

Data, however, even in the case when they can be collected and processed, do not go out of the control of the worker forever. Processing must have an end (durability). The implementation of privacy aims to accommodate all interests and objectives in a positive-sum or ‘win-win’ manner, as opposed to a more dated zero-sum approach (Cavoukian Citation2011, 3). This overcomes false dichotomies such as ‘privacy versus security’ by highlighting that it is far more desirable to realise both within the same framework, the composite functionality of which leads to business success. From this perspective, adopting the principles of data protection becomes ‘an essential value of big data, not only for the benefit of the individuals, but also for the very prosperity of big data analytics’ (D’Acquisto et al. Citation2015, 8). Equally, embedding privacy elements within an IT system must occur prior to data collection and extend securely throughout the entire life cycle of the data concerned (Gross and Acquisti Citation2005). The data controller, like the entrepreneur in the workplace, must also ensure that all information is securely destroyed at the end of the process. PbD therefore produces a secure lifecycle of data from cradle to grave: security is end-to-end (Cavoukian Citation2011, 3). Similarly, when the game design elements are also involved, the employer must communicate to the workers how long the data are collected and processed, making the end explicit.
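The idea that processing must have an explicit end can be sketched as follows. This is a hedged illustration only: `StoredItem` and `purge_expired` are hypothetical names, the retention periods are invented for the example, and dropping an item from an in-memory list stands in for secure destruction, which in practice would require dedicated erasure procedures.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StoredItem:
    """One piece of gamification data with a retention period fixed at collection."""
    worker_id: str
    payload: dict
    collected_at: datetime
    retention: timedelta  # assumed to be communicated to the worker at collection time

    def expires_at(self) -> datetime:
        """Moment after which the item must no longer be kept."""
        return self.collected_at + self.retention


def purge_expired(items: list, now: datetime) -> list:
    """Return only the items that are still inside their retention period."""
    return [item for item in items if item.expires_at() > now]


# Example: after 60 days, the 30-day item is gone and the 90-day item remains.
t0 = datetime(2024, 1, 1)
items = [
    StoredItem("w1", {"points": 5}, t0, timedelta(days=30)),
    StoredItem("w2", {"points": 7}, t0, timedelta(days=90)),
]
remaining = purge_expired(items, now=t0 + timedelta(days=60))
```

Fixing the retention period on the record itself, rather than in a separate policy document, is one way of making the end of processing explicit both to the system and to the worker.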

In this framework, it is also crucial to adopt and implement measures of a technical and organisational nature that prevent the direct or indirect identification of the individual whenever personal dataFootnote19 are concerned (art. 25 GDPR). This implies that these measures must be thought of, developed and integrated in the technology by design. This is crucial in gamification innovation because the possibility of identification is the beginning of loss of control by workers and of the limitation of their autonomy (de Andrade Citation2011). Examples of this implementation process include the measures of anonymisation or the pseudonymisation of information collected. However, as we will see (in § 7 below), further measures must accompany data encryption measures. Anonymisation measures mean that individuals cannot be identified within a group or associated with any specific data. Instead, pseudonymisation suspends the objective link between the information and the person concerned via the use of pseudonyms, impeding the identification of the subject only temporarily (D’Acquisto and Naldi Citation2017, 33 ff.). However, today advances in processing techniques allow identification of the subject through accurate integration of even anonymous data. This possibility cannot be ignored when workers have to actively take part in the design of their privacy in gamification contexts.
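The difference between the two measures can be illustrated with a short Python sketch. It is an assumption-laden example rather than a recommended implementation: the function names and sample data are hypothetical, a keyed hash from the standard library stands in for pseudonymisation, and simple aggregation stands in for anonymisation.

```python
import hashlib
import hmac
from collections import Counter


def pseudonymise(worker_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    Whoever holds the key can recompute the pseudonym for a known ID and so
    re-link the data, which is why the protection is only temporary.
    """
    digest = hmac.new(secret_key, worker_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def aggregate_by_team(events: list) -> dict:
    """Drop individual identifiers entirely and keep only per-team counts."""
    return dict(Counter(event["team"] for event in events))


# Example data (hypothetical).
key = b"demo-key-kept-by-the-controller"
events = [
    {"worker_id": "w1", "team": "A"},
    {"worker_id": "w2", "team": "A"},
    {"worker_id": "w3", "team": "B"},
]
pseudonyms = [pseudonymise(event["worker_id"], key) for event in events]
team_counts = aggregate_by_team(events)
```

Note that the aggregated output is not automatically safe: a team containing a single worker, as team B does here, still points to one person, which echoes the warning that even anonymous data can be re-identified once they are combined with other information.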

The need to engage stakeholders at the design stage

More broadly, PbD also seeks to ensure the inclusion of all stakeholders (pursuant to the stated promises and objectives) in a transparent and open manner. Visibility, openness and transparency concerning policies and procedures are essential for the ongoing accountability of the data controller and for stakeholders’ trust in a system optimised for business success (Cavoukian Citation2011, 4). In this light, participation by stakeholders, like workers in cases of gamification in the workplace, can be considered a further consequence of PbD. This means that workers must be put in the situation of being able to choose not only which data can be collected by game design elements and which level of protection is to be implemented but also which type of game design elements are adopted in the workplace via forms of feedback (forms of cooperation v. forms of competition). Finally, PbD requires data controllers and designers to keep the interests, needs and concerns of their users (namely workers) at the forefront by implementing strong privacy defaults by design, appropriate and prompt communications and user-friendly and user-empowering options (Acquisti, Brandimarte, and Loewenstein Citation2015) and means of feedback (user-centric architecture). This definitely leads to forms of co-design in gamification that can be considered the end of full participation in the design stage (although not the only form of participation).

RRI correctives to game design elements in the workplace

A major challenge in the use of gamified applications is to protect employees’ personal data (Mavroeidi, Kitsiou, and Kalloniatis Citation2019). Equally, fostering trust among employees is of great importance in the adoption of RRI frameworks (Ruggiu Citation2015). Businesses must therefore pay close attention to data protection while keeping their employees informed of their privacy rights and of how their data are managed, e.g. access rights and data use (Yonemura et al. Citation2017). Numerous laws, regulations and policies worldwide emphasise the importance of protecting employees’ privacy in various business systems, aiming to strike a balance between the collection of enterprise data and the protection of individual privacy (Adams Citation2017).

These laws differ in many respects. For instance, there is little correspondence between the United States and the EU as regards the fundamental right to data protection. In the USA there are no constitutional/legal requirements for data processors on how to use personal data (Schwartz and Peifer Citation2017). Data processors are nowadays expected to manage the collection, storage and usage of personal information effectively (Dinev et al. Citation2013). In this respect, the importance of privacy engineering in such systems is immense, not only in Europe but recently also across the Atlantic. Privacy engineering is a significant part of the system development process, in which privacy developers should define principles in the form of technical requirements that must be satisfied for the system to ensure a minimum level of privacy and be trustworthy for users (Martin and Kung Citation2018).

In the EU, GDPR enforcement has made the protection of personal data compulsory for all organisations during systems design and implementation (Sousa et al. Citation2018). New data rights have been established for EU citizens, supporting their autonomy and self-determination. Additionally, in the cases listed in art. 37 GDPR, organisations are obliged to designate a Data Protection Officer (DPO), an expert in data protection rules and practices who is responsible for ensuring that organisational processes comply with the legislation.

The DPO is expected to support the procedure effectively for both the business and the employees. The DPO must provide business developers with the necessary information to combine privacy by design principles with the GDPR requirements, so a strong elicitation process for the set of technical requirements that should be addressed needs to be established. He/she must propose a process for validating the elicited requirements in a data protection impact assessment (DPIA) – carried out according to the GDPR – to identify privacy risks. As far as employees are concerned, the DPO is expected to enhance their ability to trace their personal data by offering easy-to-use services raising their privacy awareness level.

This move towards transparency in data management improves trust among stakeholders (Stanculescu et al. Citation2016). All workplace services, including gamified ones, should be designed with the users’ privacy protection in mind.

However, several game elements violate the privacy requirements, leading to violations of users’ privacy (Mavroeidi, Kitsiou, and Kalloniatis Citation2019). In response, a PbD approach is suggested, considering that it has been newly incorporated in the GDPR (Romanou Citation2018). One key issue for the implementation of PbD is analysis of technical privacy requirements in systems during the design process. This is required in various privacy engineering methodologies (Pattakou, Kalloniatis, and Gritzalis Citation2017; Pattakou et al. Citation2018; Kalloniatis Citation2017; Argyropoulos et al. Citation2016). Among them, a privacy safeguard (PriS) (Kalloniatis, Kavakli, and Kontellis Citation2009; Kalloniatis, Kavakli, and Gritzalis Citation2007), an established PbD approach, identifies the following privacy requirements: anonymity (unknown identity of users); pseudonymity (protection of anonymity with a pseudonym); unlinkability (inability to relate subjects to actions); undetectability (impossibility of disclosing components); and unobservability (inability to disclose actions).

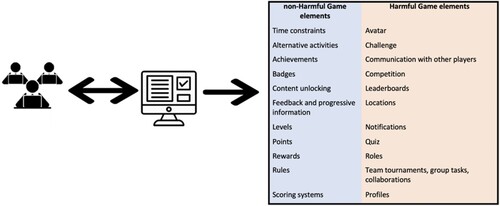

A variety of game elements have been shown to violate these requirements (Mavroeidi, Kitsiou, and Kalloniatis Citation2019), as is summarised in . This violation concerns both legal issues of GDPR compliance and users’ contextual privacy expectations, owing to the disclosure of their identities. The elements can be harmful or non-harmful for users’ identities (Mavroeidi, Kitsiou, and Kalloniatis Citation2020). Collecting points, for example, is not a harmful process, while presenting them on leaderboards assigned to user profiles results in privacy violation. As leaderboards publicly present the status of users, disclosure of their identities occurs.
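The contrast between collecting points and publishing them on a leaderboard can be sketched in code. The following Python fragment is a hypothetical illustration, not a design taken from the cited studies: `own_rank` and `public_board` are invented names, and the opt-in set is an assumption about how worker choice might be recorded.

```python
def own_rank(points: dict, worker_id: str) -> int:
    """Rank of one worker (1 = highest) without exposing anyone else's identity."""
    own = points[worker_id]
    return 1 + sum(1 for score in points.values() if score > own)


def public_board(points: dict, opted_in: set) -> list:
    """Scores in descending order; names appear only for workers who opted in."""
    ordered = sorted(points.items(), key=lambda pair: pair[1], reverse=True)
    return [
        (worker_id if worker_id in opted_in else "hidden", score)
        for worker_id, score in ordered
    ]


# Example data (hypothetical): only worker "b" has agreed to be named.
points = {"a": 10, "b": 30, "c": 20}
board = public_board(points, opted_in={"b"})
rank_of_c = own_rank(points, "c")
```

Even with names hidden, published scores may allow colleagues in a small team to infer who is who, so a sketch of this kind reduces rather than removes the risk described above.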

In order to use an avatar, a record of users’ characteristics is needed, leading to violation of users’ privacy (Mavroeidi, Kitsiou, and Kalloniatis Citation2019). Following this approach, the relations among elements and requirements have been examined and the results are presented in . PriS considers privacy requirements to be organisational goals and describes the impact of privacy goals on the organisational processes affected. These processes aim to support the selection of a system architecture that best satisfies them. Therefore, PriS provides an integrated way of working, from high-level organisational needs to the IT systems that satisfy them (Kalloniatis, Kavakli, and Gritzalis Citation2008). PriS is considered in a study by Robol, Salnitri, and Giorgini (Citation2017) to be an effective method to use in GDPR-compliant socio-technical systems. The GDPR aims (a) to promote organisations’ and companies’ data collection and processes by introducing specific privacy requirements as primary goals, thus dealing with several complex issues, such as company-level awareness (Tikkinen-Piri, Rohunen, and Markkula Citation2018) and (b) to provide EU citizens with further control of their personal data while minimising threats to their data rights and freedoms (Lambrinoudakis Citation2018). Therefore, the conceptual association with PriS requirements is more than clear, since they promote a set of expressions based on which all the processes of an organisation are considered.

Table 1. Violations of privacy requirements.

Conversely, according to the RRI framework, privacy requirements should be implemented during the design of workplace gamified services to ensure privacy protection. PriS, for instance, proposes software design patterns for the analysis of privacy requirements in systems (Kalloniatis, Kavakli, and Gritzalis Citation2007). These can be implemented when designing gamified services in order to satisfy the goals of the RRI framework regarding responsible innovation. Given the apparent privacy violations presented in , these privacy-enhancing patterns can be deployed to comply with the corresponding requirements in the initial design stage. Using the anonymity and pseudonymity pattern, at the user’s request the system determines whether identity is needed and provides and implements processes depending on the case. Similarly, for the requirements of unobservability and unlinkability, the system will check a user’s request to ascertain whether one or both of these requirements are necessary before connecting the user, thereby protecting user privacy.
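The logic of the anonymity and pseudonymity pattern, in which the system decides per request whether identity is needed, can be approximated by the sketch below. It is not the PriS pattern definition itself: the `IdentityLevel` enum, the `REQUIRED_LEVEL` policy table and the request names are hypothetical and serve only to show the decision step.

```python
from enum import Enum


class IdentityLevel(Enum):
    ANONYMOUS = "anonymous"
    PSEUDONYMOUS = "pseudonymous"
    IDENTIFIED = "identified"


# Hypothetical policy table: the identity level each request type needs.
REQUIRED_LEVEL = {
    "view_team_progress": IdentityLevel.ANONYMOUS,
    "collect_points": IdentityLevel.PSEUDONYMOUS,
    "claim_reward": IdentityLevel.IDENTIFIED,
}


def handle_request(request_type: str, worker_id: str, pseudonym: str) -> dict:
    """Attach to the request only as much identity as its type requires.

    Unknown request types fall back to the most protective level.
    """
    level = REQUIRED_LEVEL.get(request_type, IdentityLevel.ANONYMOUS)
    if level is IdentityLevel.IDENTIFIED:
        subject = worker_id
    elif level is IdentityLevel.PSEUDONYMOUS:
        subject = pseudonym
    else:
        subject = None
    return {"request": request_type, "level": level.value, "subject": subject}


# Example calls with hypothetical identifiers.
reward = handle_request("claim_reward", "w1", "p-93ab")
collected = handle_request("collect_points", "w1", "p-93ab")
unknown = handle_request("something_else", "w1", "p-93ab")
```

Falling back to the anonymous level for unlisted request types is a deliberate design choice in this sketch, since it keeps the behaviour consistent with protection by default.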

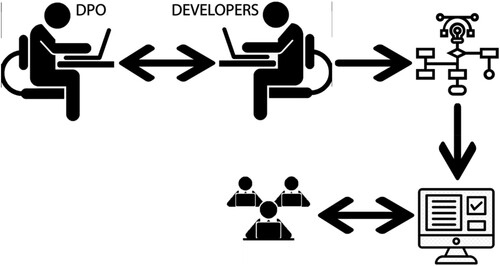

Below we present two distinct scenarios for correctives aimed at implementing an RRI framework in a gamified workplace setting. The first scenario concerns employees’ mandatory daily tasks, as presented in . A company selling herbal beauty products has decided that its marketing team should communicate through a gamified application in the hope that the tasks assigned will be completed more promptly and efficiently, thereby increasing the company’s revenue. After an effective promotion, the team member will win rewards in line with the higher profits generated. New business targets will be announced each time, which are to be accomplished in a more effective manner according to the application. Through this motivational way of working, employee productivity will be increased and workplace anxiety reduced. The employees will further embrace the company’s philosophy, as organisational objectives will interlink with their own personal goals.

However, since the purpose of the gamified workplace is to engage users in timely and efficient working, their identities should be protected. During their interactions with the application, therefore, each user’s identity will be hidden. Additionally, rewards should not be published: this is to prevent perceptions of the gamified team process as a negative challenge to workers. To achieve the objective of a departmental gamified platform that still protects employee privacy, the developers of the gamified services should consider privacy issues in parallel with game elements during the design cycle. The company DPO should support the developers by informing them which information should be protected in the game elements implemented. This will be used to determine the specific privacy requirements for the service. To this end, the DPO will provide the guidelines for a DPIA method to deploy in order to identify the likelihood of any possible privacy violation incidents deriving from the game elements (the relevant harmful game elements are presented in ). Having determined the privacy requirements, the DPIA will assist in the identification and assessment of privacy risks, leading to the selection of appropriate measures to reduce them. By analysing the privacy requirements and following the PriS method, this aim will be accomplished since the appropriate technical countermeasures to satisfy each requirement will be identified. This information will allow the developers to select and proceed with the most suitable implementation techniques to ensure the protection of the users’ privacy (e.g. satisfaction of user rights etc.).

This protection, in turn, will amplify the trust between the marketing team members and the wider organisation. The employees will have a more positive attitude to their work, the atmosphere among team members will be more constructive and their managers will be gratified by a more efficient achievement of company targets.

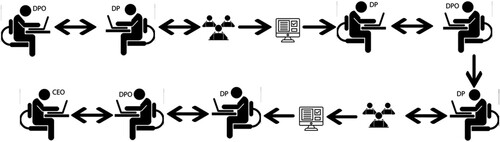

Additionally, based on the correctives in the RRI framework, the employees should play an active role in the gamified application design process. The second scenario features this incorporation of employee preferences during system design, and is presented in . Taking into consideration the harmful and non-harmful game elements mentioned previously, the organisation DPO informs the person responsible for the HR department about the elements. Next, this person records the elements that each employee prefers to be part of the design of the gamified application that they will be using at work. The DPO receives and collates the feedback and notifies HR of any harmful cases of privacy violation and how they can be resolved prior to rollout. This information is communicated to the employees so they remain informed about the possible consequences of these harmful elements. Specifically, employees are informed in detail about potential violation of their privacy and trained on the potential threats occurring in each harmful game element. Thus, they will be aware of every disclosure of their information.

The second phase of game element selection considers how non-harmful game elements can be used safely, while outlining the measures required in response to the harmful game elements. The list of the preferred game elements is then reported to the DPO, who will notify the CEO, highlighting the importance of privacy protection, especially during the use of harmful game elements. In this scenario, the users remain informed regarding the harmful side of gamification and are given an active role in the design of the gamified service. Their participation in the process is useful and important. By implementing these steps and including them in the design process, the workers have the opportunity to consciously select the game elements as they will be aware of possible privacy violations. This procedure will reduce the potential impact of the risks on the employees, and also the risk of non-compliance with GDPR rules at the operational level.
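The collation step in the second scenario can be sketched as follows, under stated assumptions. The function takes each employee's preferred game elements and a set of elements classified as harmful, and returns the vote counts together with the preferred elements that need safeguards before rollout. The function name `collate_preferences`, the data shapes and the example classification are hypothetical; the actual classification of harmful elements is the one determined by the DPO.

```python
from collections import Counter


def collate_preferences(preferences: dict[str, list[str]], harmful: set[str]) -> dict:
    """Collate employees' preferred game elements and flag the harmful ones.

    preferences: maps an employee (or pseudonym) to the elements they chose.
    harmful: elements the DPO has classified as privacy-harmful (an input
             here, not something this sketch decides).
    """
    # Count each element at most once per employee.
    votes = Counter(
        element for chosen in preferences.values() for element in set(chosen)
    )
    return {
        "votes": dict(votes),
        # Preferred elements that require countermeasures before rollout.
        "needs_safeguards": sorted(e for e in votes if e in harmful),
        # Preferred elements that can be used without extra measures.
        "safe": sorted(e for e in votes if e not in harmful),
    }


# Example with made-up data; the harmful set is illustrative only.
report = collate_preferences(
    {"employee_a": ["leaderboards", "avatars"], "employee_b": ["avatars"]},
    harmful={"leaderboards"},
)
```

A report of this kind is what the DPO could pass on to HR and management, so that the elements listed under `needs_safeguards` are explained to employees before they confirm their choices.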

Introducing gamification in workplace activities can increase employee engagement and productivity regarding various organisational targets. The protection of personal data is crucial to ensure trust among staff, management and the organisation as a whole. Implementing these scenarios protects user privacy and ensures that the GDPR is applied.

Conclusions

The introduction of specific forms of gamification in the workplace seems to have the same rationale as public engagement in RRI, which is that of engaging the workforce to reorientate internal practices and create overall alignment with company goals. Organisations can achieve performance, efficiency and productivity improvements while lightening the load of laborious, monotonous and stressful tasks for their employees.

However, concerns remain over the interconnected issues of employee privacy, health and autonomy, particularly given the extent to which personal data is used in gamification. When harmful, gamified activities will inevitably affect and limit the self-determination of staff, leading them to accept work conditions that can be quite demanding from the health standpoint (human enhancement in the case of non-provisional tasks). When privacy is integrated by design, on the other hand, the inclusion of data protection has the potential to strengthen autonomy. This study has demonstrated how, based on the principle of PbD, it is possible to develop the tools and systems needed to raise the level of privacy protection in gamification. Furthermore, it has suggested that full empowerment of workers requires employees to be given greater control not only over their personal data but also over the choice of the game design elements (moving towards forms of co-design). Under an RRI model, the infringement of privacy, health and autonomy is not an inescapable outcome of gamified activities at work. Gamification can in fact lead to fully responsible outcomes.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Daniele Ruggiu

Daniele Ruggiu is Assistant Professor in Legal Philosophy at the Department of Political Science, Law, and International Studies of the University of Padova. His main focus is on the impact of new and emerging technologies on human rights, on governance models, in particular Responsible Research and Innovation, on the role of the European Convention on Human Rights in technology innovation, and on the philosophical aspects of the application of law and legal hermeneutics from the perspectives of Gadamer and Ricoeur. Ruggiu has taken part in several projects at the national and international level (SynthEthics, Epoch project, Res-AGorA, Neurolaw Network). He has several national and international publications, in particular: Human Rights in the Era of Emerging Technologies (Il Mulino 2012, in Italian) and Human Rights and Emerging Technologies: Analysis and Perspectives in Europe (Pan Stanford Publishing, 2018, with a foreword by Roger Brownsword). Ruggiu has published several articles in highly ranked disciplinary journals such as Nanoethics, Philosophy of Management, Law Innovation and Technology, Journal of Law and Technology, Biotechnology Law Review, Rivista di Filosofia del Diritto and Ragion pratica. He collaborates with Ars interpretandi and Agenda digitale.eu.

Vincent Blok

Vincent Blok is associate professor in Philosophy of Technology and Responsible Innovation at the Philosophy Group, Wageningen University (The Netherlands). He is also director of the 4TU.Ethics Graduate School in the Netherlands. Together with seven PhD candidates and four post-docs, he reflects on the meaning of disruptive technologies (AI, Synbio, digital twins) for the human condition and its environment from a continental philosophical perspective. His books include Ernst Jünger's Philosophy of Technology. Heidegger and the Poetics of the Anthropocene (Routledge, 2017), Heidegger's Concept of philosophical Method (Routledge, 2019), The Critique of Management. Toward a Philosophy and Ethics of Business Management (Routledge, 2021), and From World to Earth. Philosophical Ecology of a threatened Planet (Boom, 2022, in Dutch). Blok has published over a hundred articles in highly ranked disciplinary philosophy journals such as Environmental Values, Business Ethics Quarterly, Synthese and Philosophy & Technology, and in multi-disciplinary journals such as Science, Journal of Cleaner Production, Public understanding of Science and Journal of Responsible Innovation. See www.vincentblok.nl for more information about his current research.

Christopher Coenen

Christopher Coenen, political scientist, works in the strongly interdisciplinary field of technology assessment at KIT's Institute of Technology Assessment and Systems Analysis (ITAS), where he heads the research group 'Life, Innovation, Health and Technology' and has carried out projects for the European Commission, the German Federal Ministry of Research and the Bundestag, among others. He is the editor-in-chief of the journal ‘NanoEthics: Studies of New and Emerging Technologies’.

Christos Kalloniatis

Dr. Christos Kalloniatis holds a PhD from the Department of Cultural Technology and Communication of the University of the Aegean, a master's degree in Computer Science from the University of Essex, UK, and a bachelor's degree in Informatics from the Technological Educational Institute of Athens. He is currently an Associate Professor and head of the Department of Cultural Technology and Communication of the University of the Aegean and director of the Privacy Engineering and Social Informatics (PrivaSI) research laboratory. He is a member of the board of the Hellenic Data Protection Authority and a former member of the board of the Hellenic Authority for Communication Security and Privacy. His main research interests are the elicitation, analysis and modelling of security and privacy requirements in traditional and cloud-based systems, the analysis and modelling of forensic-enabled systems and services, Privacy Enhancing Technologies and the design of Information System Security and Privacy in Cultural Informatics. He is the author of several refereed papers in international scientific journals and conferences and has served as a visiting professor in many European institutions. Prior to his academic career he served in various positions in the Greek public sector, including the North Aegean Region and the Ministry of Interior, Decentralisation and e-Governance. He is a lead member of the Cultural Informatics research group as well as the privacy requirements research group in the Department of Cultural Technology and Communication of the University of the Aegean and collaborates closely with the Laboratory of Information & Communication Systems Security of the University of the Aegean. He has served as a member of various development and research projects.

Angeliki Kitsiou

Dr. Angeliki Kitsiou is a post-doctoral researcher at the Department of Cultural Technology and Communication of the University of the Aegean and teaches as adjunct faculty at the same Department. She holds a PhD in Sociology from the University of the Aegean. Her thesis focused on the study of the Free Software Movement, highlighting the new challenges regarding deviance, social control and information management related to the Information Society. She has been involved in several funded research projects concerning innovative educational and quality assurance methods and social policy, with an emphasis on juveniles and immigrants. Her research interests and recent publications concern the bridging of sociological theory and privacy frameworks, associated with the most current developments in Social Sciences and Informatics.

Aikaterini-Georgia Mavroeidi

Katerina Mavroeidi holds a BSc from the Department of Cultural Technology and Communication of the University of the Aegean and a master's degree in Cultural Informatics and Communication from the same University. She has also obtained a master's degree in Information Security from the University of Brighton. Currently, she is a PhD student at the Department of Cultural Technology and Communication of the University of the Aegean. Her skills include computer graphics and user interface design, with a focus on user experience and usability evaluation. Her second master's degree broadened her knowledge of Information Security, and her skills therefore also include the analysis and modelling of security and privacy requirements, software architecture and risk management. Her dissertation for that degree was on usable security. In addition, her interests lie in the area of usable privacy.

Simone Milani

Simone Milani is Associate Professor at the Department of Information Engineering of the University of Padova, where he is currently the lead teacher for the courses Digital Forensics, Biometrics, Immersive Technologies and 3D Augmented Reality. He received the Laurea degree in telecommunication engineering and the Ph.D. degree in electronics and telecommunication engineering from the University of Padova, Padova, Italy, in 2002 and 2007, respectively. He was a Visiting Ph.D. Student with the University of California at Berkeley, Berkeley, CA, USA, in 2006, and later worked as a Post-Doctoral Researcher for the University of Udine, Udine, Italy, the University of Padova, and the Politecnico di Milano, Milan, Italy, from 2007 to 2013. He has also been a consultant for STMicroelectronics, Agrate, Italy. He is a member of the Ethical Committee of the Human Inspired Technologies center at the University of Padova and of the Information Forensics and Security Technical Committee of the IEEE Signal Processing Society. He is the author of more than 120 papers published in international conferences and journals. His research interests include artificial intelligence and deep learning, digital signal processing, image and video coding, 3D reconstruction and rendering, Augmented/Virtual/Mixed Reality, and multimedia forensics.

Andrea Sitzia

Andrea Sitzia is Associate Professor of Labour Law at the Department of Political, Juridical and International Studies of the University of Padova, where he currently teaches Labour Law and EU Labour Law; he also teaches Law, Informatics and Society in the Informatics degree course. He received the Laurea degree in Jurisprudence and the Ph.D. degree in Labour Law from the University of Padova. He has stable research and teaching exchanges with the Eötvös Loránd University of Budapest and with the University of Reims Champagne-Ardenne. He is President of the Certification Commission of Labour contracts at the University of Padova. He is the author of more than 100 papers published in national and international conferences and journals. His research interests include privacy, the employer's power of control, contracts, supply chain regulation, inmates' work and ILO conventions.

Notes

1 Gamification can be realised both with board games (see, e.g., Chappin, Bijvoet, and Oei Citation2017) and with digital technologies. In this work we only focus on the case of gamification via digital technologies because it has interesting implications from the legal, informatic and governance standpoints.

2 Although game elements can be introduced across a broad spectrum of fields, such as business, education, military, traffic flow, healthcare, training, etc., in this study we focus mainly on business.

4 Some work environments where low-skilled tasks are carried out are more demanding for the workers from the physical standpoint (fatigue, stress, impact on health, long duration etc.). In these contexts gamification mainly alters perceptions of the impact of the tasks assigned, aggravating their consequences, especially on workers’ wellness and on labour asymmetry with the employer (ILO Citation2021, 220).

5 Here we follow von Schomberg’s definition of responsible research and innovation (RRI) (von Schomberg Citation2013, 63). See § 6 below. However, although the present work can be traced back to his reflection on RRI focusing on public engagement and ethical acceptability as the framework for research and innovation, it will only focus on the case of responsible innovation (RI) in the field of gamification.

6 In this context, although they have a moral dimension, self-determination, health and privacy are mainly considered from the legal standpoint (see § 6 & 4).

7 This also puts workers’ right to health at risk (see § 4).