Abstract

The literature offers methods and tools for user experience (UX) evaluation. Among them, the irMMs-based method exploits mental models related to specific situations of interaction. The literature also proposes frameworks and models to describe product development activities. One of these, the X for Design framework (XfD), allows modeling different activities and suggests modifications in order to improve them. This research aims at verifying the capabilities of the XfD in improving the irMMs-based method. Once improved thanks to the XfD, the irMMs-based method is adopted, together with its original release and with the well-known Think Aloud usability evaluation method, to evaluate the UX of a CAD software package. The comparison of the results provides a first demonstration of the capabilities of the XfD in improving UX evaluation activities. The research outcomes can be of interest to researchers, who can exploit the XfD suggestions to deepen their knowledge about human cognitive processes, and to industrial practitioners, who can apply the suggestions proposed by the XfD to their own evaluation activities to make them more effective.

Public Interest Statement

Today, the market requires increasingly multi-functional, customizable, reliable and innovative products. Moreover, these products must arouse specific users’ perceptions and emotions; they must be user-experience (UX) oriented. In order to achieve this, companies can improve their development processes using specific methods and tools. One of them, the X for Design framework (XfD), deals with products’ functions and structures as well as with users’ perceptions and emotions aroused by products. The research described in this paper aims at verifying the XfD capabilities in improving an existing set of UX evaluation activities, the irMMs-based method. These activities exploit interaction-related mental models (irMMs) generated by users with different knowledge about the products under evaluation to produce lists of positive and negative UX aspects.

1. Introduction

In recent years, user experience (UX) has gained importance in product development processes due to the increasing number of functionalities (with the related interface complexity), the development of new interaction paradigms, the availability of innovative technologies and devices, etc. (Saariluoma & Jokinen, 2014). The UX goes beyond usability concerns since it also focuses on users’ subjective perceptions, responses, aesthetic appraisal and emotional reactions while interacting with products or services (Law, Roto, Hassenzahl, Vermeeren, & Kort, 2009; Thuring & Mahlke, 2007). Previous experiences and expectations about future interactions influence the UX; moreover, UX depends on social and cultural contexts (von Saucken, Michailidou, & Lindemann, 2013; Zarour & Alharbi, 2017). Users’ emotions and cognitive activities are basic elements for the UX (Park, Han, Kim, Cho, & Park, 2013). Emotions are the responses to events deemed relevant to the needs, goals, or concerns of an individual and encompass physiological, affective, behavioral, and cognitive components (Brave & Nass, 2012). Cognitive activities, highly influenced by emotions, generate and exploit mental models that govern human behavior. Mental models are internal representations of reality, generated according to past experiences or to prejudices on similar situations, aimed at choosing the best user behaviors against foreseen behaviors of products or events (Gentner & Stevens, 1983; Yang et al., 2016). User behaviors aim at overcoming specific, critical situations and at achieving experiences as positive and satisfactory as possible (Greca & Moreira, 2000).

The literature offers several UX evaluation possibilities. They range from simple tools like the User Experience Questionnaire, UEQ (Rauschenberger, Pérez Cota, & Thomaschewski, 2013) and the IPTV-UX Questionnaire (Bernhaupt & Pirker, 2011) to more sophisticated platforms and systems to evaluate web applications like the weSPOT inquiry-based learning platform (Bedek, Firssova, Stefanova, Prinsen, & Chaimala, 2014) and the Personalized Mobile Assessment Framework (Harchay, Cheniti-Belcadhi, & Braham, 2014). There are even more articulated and complete approaches, like the Valence method (Burmester, Mast, Kilian, & Homans, 2010), the quantitative evaluation of the meCUE questionnaire (Minge & Thuring, 2018) and the irMMs-based method (Filippi & Barattin, 2017). The irMMs-based method, the first starting point of this research, evaluates the quality of UX considering emotions and mental models together. Each irMM (interaction-related mental model) refers to a specific situation of interaction; its structure has been designed to be as effective as possible in managing UX matters. For example, irMMs involve meanings and emotions, two of the main UX components, as well as users’ and products’ behaviors, making in this way the product role explicit. The irMMs-based method generates lists of positive and negative UX aspects. The former represent unexpected, surprising and interesting characteristics of interaction; the latter refer to bad product transparency, gaps between expected user behaviors and those allowed by real products, etc.

The literature also proposes frameworks and models to describe UX-related product development activities as well as general ones. The framework of the product experience of Desmet and Hekkert (2007) describes cognitive activities in different types of affective product experiences by involving three elements: the aesthetic experience, the experience of meaning, and the emotional experience. Norman’s model of the seven stages of action cycle (Norman, 2013) focuses on human cognition and behavior by analyzing and describing how human beings perform actions. The UXIM of von Saucken and Gomez (2014) provides a detailed description of the UX considering the interaction between user and product, the temporal perspective of the experience (representing expectations before usage and remembrance after usage) and the surrounding environment. The situated Function-Behaviour-Structure framework with extension (Cascini, Fantoni, & Montagna, 2012; Gero & Kannengiesser, 2004) describes design processes from the cognitive point of view using specific variables, processes and environments. This framework allows modeling design activities that generate technical specifications starting from those user needs that the product should help to satisfy. Finally, the X for Design framework (XfD) (Filippi & Barattin, 2016) models activities dealing with different aspects of product development processes and suggests modifications in order to improve them.

The research described in this paper aims at verifying the capabilities of the XfD in improving the irMMs-based method. The XfD is used first to improve the activities of this method; after that, the original and the improved releases of the irMMs-based method are adopted to evaluate the UX of a CAD software package. The same evaluation is also performed using the well-known Think Aloud usability evaluation method (TA, hereafter). The comparison of the results takes place afterward.

The research outcomes can be of interest for both researchers and industrial practitioners. The former can exploit the XfD suggestions to understand the reasons why real evaluation activities sometimes miss something, deepening in this way their knowledge about human cognitive processes. The latter can apply the suggestions proposed by the XfD to their own evaluation activities to make them more effective.

The paper runs as follows: the background section describes the XfD and the irMMs-based method. The activities section starts with the improvement of the irMMs-based method; then, the adoption of the two releases of the method and of the TA in the field takes place; the comparison of the results appears afterward, followed by the discussion section. The conclusions close the paper, proposing some perspectives for future work.

2. Background

2.1. The XfD

The X for Design concept (Filippi & Barattin, 2016) refers to the ability to model product development activities that start from the analysis of user needs and proceed up to the generation of prototypes and to the manufacturing of final products. All of these deal with different kinds of sources/targets in any context, taking into consideration human perceptions, sensations and emotions. Sources are the starting point of the product development activities and can range from the conventional product functions and user needs to unconventional ones like shapes, materials, sounds and smells. Targets are the goals these activities aim to achieve and can consist of conventional product characteristics like structures as well as of unconventional ones like functions, behaviors and shapes. Sources and targets are of the same nature, and this allows them to be collected in a single set. Thanks to this, possible information redundancy and/or misalignment disappear. The XfD was developed by putting the framework of the product experience into a relationship with Norman’s model of the seven stages of action cycle and with the situated Function-Behaviour-Structure framework with extensions (FBS, hereafter). These frameworks and model suggested the XfD architecture as well as the statements and rules used to model the product development activities. The core of the XfD replicates the FBS architecture. Specifically, the FBS components exploited here are the variables, the environments and the classes of sub-processes. The FBS variables allow describing the different aspects of a product; they are N (Need), R (Requirement), F (Function), B (Behavior) and S (Structure). These variables can best represent the whole sources/targets set. The three FBS environments in which the variables are managed refer to the designers/engineers’ perceptive sphere; they are called external, interpreted and expected worlds. Finally, the four FBS classes of sub-processes (the building blocks for modeling product development activities) allow putting variables and worlds into a relationship, with a small add-on in the push-pull class where the interpretation of a variable can also be influenced by a different one. The exploitation of the framework of the product experience allows including aesthetics (A), meaning (M) and emotion (E) in the variable set. They do not belong to the sources/targets set; instead, they are internal variables. Finally, the model of the seven stages of action cycle suggested adding one more variable to be able to consider users correctly in the product development activities. This variable is human behavior (hB); it describes the behavior of those who will interact with the product under development. Thanks to this, how the human mind works in generating a reaction starting from a perceived stimulus is made explicit. Appendix A collects all the symbols used in the XfD.

The XfD contains some facilities to make its adoption as easy and effective as possible. A list of IF-THEN statements allows translating product development activities into sequences of sub-processes, the language used by the XfD. These statements come from the FBS and refer to generic activities. The XfD users, researchers and/or industrial practitioners, contextualize these statements case by case to highlight the proper variables and translate each existing product development activity into the corresponding sub-process (or sequence of sub-processes). In the end, the resulting ordered list of sub-processes represents the model of the product development activities as they are. Together with the statements, the XfD provides a set of rules to refine the model. This refinement consists in suggesting missing sub-processes. These absences come mainly from real product development processes underestimating or being unaware of the corresponding activities. The introduction of the suggested sub-processes enriches the model; their implementation improves the original product development processes. The rules come from all the components considered in the research: the framework of the product experience, the model of the seven stages of action cycle and the FBS. These rules, just like the statements, are generic; they do not refer to any specific context or situation. The difference between statements and rules lies in their role: the statements model the product development activities already implemented, while the rules add new activities to the existing ones for improvement. The XfD users select specific rules depending on the variables and sub-processes involved case by case and contextualize these rules to generate the missing sub-processes. Figure summarizes the adoption of the XfD.

Up to now, the XfD has been successfully adopted in the field to model different kinds of activities, from the development of fashionable home appliances (from shapes to functions) to the optimization of dishwashers (from sounds, behaviors and shapes to structures), to the development of pieces of furniture (from materials to shapes).

2.2. The irMMs-based method

The irMMs-based method evaluates the experiences of users interacting with products by exploiting interaction-related mental models (irMMs). The irMMs are mental models consisting of lists of users’ meanings and emotions, including the users’ and products’ behaviors determined by these meanings and emotions (Filippi & Barattin, 2017). The generation of an irMM takes place during the user’s attempt to satisfy a specific need in a specific situation of interaction. This generation develops through five steps, based on the model of the seven stages of the action cycle. This model has been considered just to assess the correctness of the approach to the irMM generation; indeed, the latter is more complex, including elements not present in the former like meanings and emotions. In the first step, the user perceives and interprets the need. Thanks to this interpretation, in the second step the user recovers previous irMMs and selects those that could be suitable to satisfy the current need. The presence/consideration of one or more real products could influence this selection. The selected irMMs allow the user to establish the goals in the third step. Goals represent intermediate and time-ordered results to achieve, defining the path to follow to satisfy the need. They are required to overcome the limits of human problem solving when facing complex problems. In the fourth step, the user assigns the desired meanings and emotions to each goal. These come from the elaboration of the meanings and emotions belonging to the irMMs selected before. Positive meanings and emotions tend to remain as they are; negative ones could change into their positive counterparts depending on the number of previous experiences that report those negative meanings and emotions. In the fifth step, the user defines his/her behavior and the related product behavior in order to achieve those desired meanings and emotions. These behaviors come again from elaborating the behaviors present in the irMMs selected before.
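As an illustration only, the outcome of the five steps can be pictured as a small data structure. The sketch below is not part of the irMMs-based method; the class and field names (`Goal`, `IrMM`, `desired_meanings`, etc.) are hypothetical, and the second step (recovering and selecting previous irMMs) is not modeled. The example values reuse the washing machine case discussed in the data analysis phase; attaching the product behavior to the first goal is an assumption made here for the sake of the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Goal:
    """One intermediate, time-ordered result on the path to satisfying the need."""
    description: str
    desired_meanings: List[str] = field(default_factory=list)   # fourth step
    desired_emotions: List[str] = field(default_factory=list)   # fourth step
    user_behaviors: List[str] = field(default_factory=list)     # fifth step
    product_behaviors: List[str] = field(default_factory=list)  # fifth step


@dataclass
class IrMM:
    """Hypothetical container for one irMM: one need, one situation of interaction."""
    need: str                                        # first step: interpreted need
    goals: List[Goal] = field(default_factory=list)  # third step: ordered goals


irmm = IrMM(
    need="washing delicate clothes with a washing machine",
    goals=[
        Goal(
            description="washing program for delicate clothes set",
            desired_meanings=["temperature"],
            # Assumption: this expected behavior is attached to the first goal.
            product_behaviors=[
                "all parameters of the washing program shown in the display"
            ],
        ),
        Goal(
            description="starting time for the washing machine optimized",
            desired_emotions=["happy"],
        ),
    ],
)
```

Keeping meanings, emotions and behaviors attached to each goal mirrors the description above, where the fourth and fifth steps operate goal by goal.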

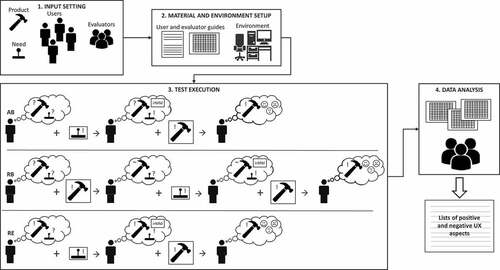

The irMMs-based method consists of four phases, as shown in Figure , and exploits tests with users to generate positive and negative UX aspects. Users compare the irMMs they generated as soon as they knew the need to satisfy with the experience allowed by the real product. Tests consider three groups of users that differ in the knowledge of the product. The first group considers users who do not know the product at all; they generate their irMMs just based on previous experiences with different products. They are the absolute beginners (AB) users. The second group considers again users who do not know the product; nevertheless, before the generation of the irMMs (and before knowing the need to satisfy), they are allowed to interact freely with the product for some time. They are the relative beginners (RB) users. Finally, the third group considers users who already know and use the product. They are the relative experts (RE) users. The tests for the three groups follow the same activities, as shown in the central part of Figure , except for some slight differences. These tests can run in parallel, provided that no interference among them occurs in the meantime.

What follows describes in detail all the activities for each phase of the method.

2.2.1. Phase 1. Input setting

This phase defines three inputs. The features of the product to evaluate are the first input; they can be functions, procedures, physical components, etc. The second input is the users who undergo the tests. Their selection obeys different knowledge requirements about the product. AB and RB users must not know the product before the evaluation; on the contrary, RE users must know it and have used it for at least a given period (its duration varies case by case, depending on the product complexity, on the required reliability of the evaluation results, etc.). One more requirement, valid for every user, is “users must have similar knowledge about the field the product belongs to”. More requirements can apply due to specific evaluation characteristics. Finally, the third input concerns the evaluators. For the AB and RB users, almost anyone can act as evaluator, from people skilled and knowledgeable about the product to people barely aware of it; conversely, for the RE users, the evaluators must know the product very well because, if users run into very specific problems, the evaluators must be able to overcome these problems quickly and easily.
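The knowledge requirements for the user selection can be sketched as a simple eligibility check. This is a minimal illustration under stated assumptions: the `Candidate` class, the `eligible_groups` function and the use of months as the unit for the period of use are hypothetical, and the minimum period is a parameter because the method leaves its duration to each evaluation. The requirement about similar knowledge of the field is not modeled.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Candidate:
    knows_product: bool
    months_of_use: float = 0.0  # assumed unit; the method only says "a given period"


def eligible_groups(candidate: Candidate, min_months_of_use: float) -> Set[str]:
    """Return the groups whose knowledge requirements the candidate meets."""
    if not candidate.knows_product:
        # AB and RB share the same requirement; they differ only in the test,
        # where RB users interact freely with the product beforehand.
        return {"AB", "RB"}
    if candidate.months_of_use >= min_months_of_use:
        return {"RE"}
    # Knows the product but has not used it long enough: fits no group.
    return set()
```

Under this reading, a candidate who knows the product but has used it for less than the required period is excluded from all three groups.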

2.2.2. Phase 2. Material and environment setup

The second phase prepares the material and the environment to perform the tests. The material consists of two documents, one for the users, different from group to group, and one for the evaluators. The first document, called user guide, helps the users in generating the irMMs and in performing the interaction with the product. Its first part reports the need to satisfy and the instructions on how to generate the irMM, together with examples. The second part shows suggestions on how to perform the interaction with the real product and to compare it to the irMM. This document contains tables and empty spaces to describe the irMM and to comment on the comparison. In the case of the AB users, the user guide is free of references to the product to evaluate in order to avoid bias. On the contrary, the user guides for the RB and RE users contain precise information on the product under evaluation to make the users’ attention focus on it rather than on products that the users could know and/or have used in the past. The second document allows the evaluators to collect data classified against their type (meanings, emotions, etc.) and tagged with the references to the users who find them; moreover, this document contains instructions on how to conduct the data analysis. The test execution also requires the setup of a suitable environment reflecting the common use of the product under evaluation. Depending on the characteristics of the specific evaluation, this phase selects a UX lab where the equipment to collect data is already present or identifies a real environment to perform the tests and moves the equipment there.

2.2.3. Phase 3. Test execution

The third phase starts differently for the RB users than for the AB and RE ones. The RB users interact freely with the product for a while before the irMM generation. The evaluators invite them to focus on the specific features of the product highlighted in the input phase. Once this free interaction comes to an end, every user generates his/her irMM. Then, he/she tries to satisfy the need by using the product. The user must behave as described in his/her irMM; in the meantime, he/she must check if the product allows performing his/her behavior and if he/she gets the product behavior as expected. Of course, problems can occur anytime; these problems are referred to as gaps. If a gap shows up, the evaluators suggest the way to overcome the problem in terms of user and product behaviors. The user reports the reasons for the gap from his/her point of view and judges if the allowed behaviors are better or worse than those described in his/her irMM. Once the interaction finishes, a debriefing takes place where the user reasons about his/her experience and expresses further comments about it. In particular, the evaluators invite the user to reconsider the meanings and emotions expressed during the generation of the irMMs in order to highlight any change.

2.2.4. Phase 4. Data analysis

Three rules lead the evaluators’ analysis of the data collected during the tests. These rules allow generating the positive and negative UX aspects starting from the desired and real meanings and emotions as well as from the gaps between the expected user and product behaviors (those described in the irMMs) and the real ones. These rules are as follows:

First rule. Every desired meaning or emotion generates one UX aspect. This aspect will be positive or negative depending on the positivity/negativity of the meaning or emotion it comes from. For example, consider the need “washing delicate clothes with a washing machine”. One of the goals can be “washing program for delicate clothes set”. One meaning associated to this goal can be temperature, with a positive judgment because of the reason “since it is difficult to know the best temperature for washing any type of clothes, the washing machine has predefined programs where all parameters are already set, temperature included”. This meaning and the related reason allow generating the positive UX aspect “the washing machine offers predefined programs where all the parameters are already set; the user must only select the right program according to the type of clothes to wash”.

Second rule. Every gap generates one UX aspect. Even in this case, the UX aspect will be positive or negative depending on the positivity/negativity of the gap it comes from. For example, consider again the need for washing delicate clothes. A gap related to the expected product behavior “all parameters of the washing program shown in the display” could arise if the real behavior of the product consists in showing the washing time and temperature only. The judgment about the gap would be negative, with the reason “when a washing program is selected, all washing parameters should be shown on the display to be sure to wash the clothes in the right way and safely. Unfortunately, the spin dryer speed is not shown”. This gap generates the negative UX aspect “the information reported on the display about the selected washing program is incomplete because it misses the spin dryer speed”.

Third rule. Every change between desired meanings and emotions and their corresponding real ones generates one or more UX aspects. If the change shows a positive trend, then the UX aspect(s) will be positive; negative otherwise. This generation considers the reasons for the changes expressed by the users. These reasons can refer to the interaction in general or they can point at specific gaps. In the first case, only one UX aspect arises; in the second case, the UX aspects generated starting from the pointed gaps are also considered as responsible for the change. For example, consider again the need for washing delicate clothes. The desired emotion happy, associated with the goal “starting time for the washing machine optimized”, can become very happy once the real interaction has taken place. There is a positive trend between the desired and real emotions. The reason for this change could be “the setting of the washing time at night without asking anything to the user is very interesting because, in this way, the risk of forgetting to switch on the washing machine highly decreases and money is saved (at nighttime, electricity is cheaper)”. This reason allows generating the positive UX aspect “the washing machine is smart in choosing the right time to launch the washing program”. Nevertheless, the positive gap “the display proposed to the user a washing time to launch the washing program, expecting just a confirmation” could also be responsible for this change. Therefore, the UX aspect “the washing machine suggests the best time to launch the washing program automatically” derived from that gap will also be associated with this change. Finally, if a desired meaning or emotion does not find its real mate or, vice versa, if a real meaning or emotion was not present in the irMM as a desired one, this rule works the same because the value of the missing mate is considered as neutral and the trend is evaluated with respect to this value.
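The three rules can be sketched in code to make their inputs and outputs explicit. This is only an illustration under several assumptions: the names `Judged`, `Change`, `first_or_second_rule` and `third_rule` are hypothetical, the wording of each UX aspect is taken as already written by the evaluators, and meanings and emotions are placed on an integer scale (here happy = 1, very happy = 2) with 0 as the neutral value of a missing mate. The method itself does not prescribe any numeric scale.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Aspect = Tuple[str, str]  # (wording, "positive" or "negative")
NEUTRAL = 0               # assumed value of a missing desired or real mate


def polarity(is_positive: bool) -> str:
    return "positive" if is_positive else "negative"


@dataclass
class Judged:
    """A desired meaning/emotion (first rule) or a gap (second rule)."""
    aspect_text: str  # wording the evaluators derive from the user's reason
    positive: bool    # the user's judgment


@dataclass
class Change:
    """Desired vs. real value of one meaning or emotion (third rule)."""
    aspect_text: str
    desired: Optional[int]              # None when the desired mate is missing
    real: Optional[int]                 # None when the real mate is missing
    pointed_gaps: Tuple[int, ...] = ()  # indexes of the gaps named in the reason


def first_or_second_rule(items: List[Judged]) -> List[Aspect]:
    # One UX aspect per desired meaning/emotion or per gap, with the same polarity.
    return [(item.aspect_text, polarity(item.positive)) for item in items]


def third_rule(changes: List[Change], gap_aspects: List[Aspect]) -> List[Aspect]:
    aspects: List[Aspect] = []
    for change in changes:
        desired = NEUTRAL if change.desired is None else change.desired
        real = NEUTRAL if change.real is None else change.real
        if real == desired:
            continue  # no change, so no UX aspect is generated
        aspects.append((change.aspect_text, polarity(real > desired)))
        # The aspects of the pointed gaps are also held responsible for the change.
        aspects.extend(gap_aspects[i] for i in change.pointed_gaps)
    return aspects


# Washing machine example: happy (1) becomes very happy (2), pointing at one gap.
gap_aspects = first_or_second_rule([
    Judged("the washing machine suggests the best time to launch "
           "the washing program automatically", True),
])
result = third_rule(
    [Change("the washing machine is smart in choosing the right time "
            "to launch the washing program", desired=1, real=2, pointed_gaps=(0,))],
    gap_aspects,
)
```

In this sketch the aspects of the pointed gaps appear again in the output of the third rule; such repetitions are expected to be removed when the aspects are collected and compared afterward.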

As soon as the data analysis comes to an end, the UX aspects generated thanks to the three rules are collected and compared to each other to delete repetitions. Then, the aspects are classified by the interaction topics they refer to. For each topic, the UX aspects are split into positive and negative and ordered by number of occurrences and impact. If a UX aspect refers to product characteristics rarely involved in the interaction, its impact will be set to low; on the contrary, if the UX aspect deals with core procedures determining the product cognitive compatibility with the users’ problem-solving processes, the impact will be higher.
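The collection step just described can be sketched as follows. The sketch makes assumptions that the method does not state: repetitions are detected only as exact matches (in practice the evaluators judge similarity), impact has two levels named `low` and `high`, and the ordering uses the number of occurrences first and the impact as a tie-breaker. The function name `summarize`, the topics and the impact values in the example are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# (topic, polarity, wording, impact); the impact levels are an assumed scale
Aspect = Tuple[str, str, str, str]
Entry = Tuple[str, int, str]  # (wording, occurrences, impact)
IMPACT_RANK = {"low": 0, "high": 1}


def summarize(aspects: List[Aspect]) -> Dict[str, Dict[str, List[Entry]]]:
    counts = Counter(aspects)  # exact repetitions collapse into one entry
    report: Dict[str, Dict[str, List[Entry]]] = {}
    for (topic, pol, text, impact), occurrences in counts.items():
        groups = report.setdefault(topic, {"positive": [], "negative": []})
        groups[pol].append((text, occurrences, impact))
    for groups in report.values():
        for entries in groups.values():
            # Assumed ordering: occurrences first, impact as tie-breaker.
            entries.sort(key=lambda e: (-e[1], -IMPACT_RANK[e[2]]))
    return report


aspects = [
    ("display", "negative", "incomplete program information", "low"),
    ("display", "negative", "spin dryer speed not shown", "high"),
    ("display", "negative", "spin dryer speed not shown", "high"),
    ("programs", "positive", "predefined programs available", "high"),
]
report = summarize(aspects)
```

A different weighting between occurrences and impact would only require changing the sort key.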

3. Activities

Achieving the research goal requires two main activities. The first activity improves the irMMs-based method thanks to the XfD. The second activity verifies the XfD capabilities in improving the irMMs-based method by comparing the results of the adoption of the improved release of the irMMs-based method in the field with those of the original one and of the TA. Three UX/XfD experts participate in these activities, acting also as evaluators in the adoptions in the field.

3.1. Improvement of the irMMs-based method

The translation of the evaluation activities of the irMMs-based method into the XfD language comes first. This translation exploits statements, variables and classes of sub-processes. Then, the UX/XfD experts use the rules to refine the model. Some examples of translation and refinement show how the UX/XfD experts work. Finally, the UX/XfD experts translate the suggestions coming from the rules back into evaluation activities.

3.1.1. Translation

The translation of the first two phases (input setting and material and environment setup) does not generate any sub-process. This is because they do not deal with transformations, interpretations, focusing or comparisons, the only activities translated into sub-processes. Nevertheless, these phases define the agents addressed in the model (the users and the evaluators) as well as the need to satisfy. The need belongs to the users’ external world because the users are the agents who interpret it; for this reason, the Ne variable represents it. The third phase (execution of the tests) considers the users as the only agents; if gaps arise, the model assumes that the users solve them by themselves, without the evaluators’ help. Therefore, the worlds and sub-processes in this part of the model refer to users only. On the contrary, in the fourth phase (analysis of the results), the worlds and sub-processes refer to the evaluators only.

The resulting model is in Appendix B. The first four columns of the table contain the phases of the irMMs-based method (with the agents involved time by time), the activities of each phase, the sub-processes generated thanks to the exploitation of the XfD statements and the reasons for these translations.

For example, consider the translation of the free interaction of the RB users. This activity starts by interpreting the product structures (physical characteristics like colors, materials or sounds), behaviors and functions. All of them belong to the external world (users did not generate them). Product structures are considered first. The XfD statement “IF the product development activity deals with the analysis of a variable and this variable does not find any correspondence to the information present in the user’s mind (it is not generated by the user), THEN the variable must be interpreted using the push-pull class (to be precise, the push happens here)” regards external variables the users get in contact with. This statement generates the interpretation (⊆) of Se as Si. After that, the XfD statement “IF the product development activity deals with the analysis of a variable and this variable is interpreted by considering only information coming from the external world, THEN the variable must be further interpreted by exploiting the users’ previous experiences (previous knowledge) using the push-pull class (to be precise, the pull happens here)” generates the further interpretation (⊆) of Si. The same two statements apply to the product behaviors and functions. In other words, Be and Fe are interpreted (⊆) into Bi and Fi and further interpreted (⊆) afterward. Therefore, the first part of the RB users’ free interaction corresponds to the six sub-processes Se⊆Si, Si⊆, Be⊆Bi, Fe⊆Fi, Bi⊆ and Fi⊆.
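The translation above can be sketched by representing each sub-process as a string in the notation used in the text. This is a minimal illustration, not the XfD itself: the function names `push`, `pull` and `free_interaction_first_part` are hypothetical, and the two statements are reduced to string templates for the variables S, B and F.

```python
from typing import List


def push(variable: str) -> str:
    """Interpretation of an external variable, e.g. Se⊆Si (first statement)."""
    return f"{variable}e⊆{variable}i"


def pull(variable: str) -> str:
    """Further interpretation of an interpreted variable, e.g. Si⊆ (second statement)."""
    return f"{variable}i⊆"


def free_interaction_first_part() -> List[str]:
    # Structures are handled first; behaviors and functions are interpreted
    # next and further interpreted afterward, as in the text.
    return [push("S"), pull("S"), push("B"), push("F"), pull("B"), pull("F")]


model = free_interaction_first_part()
```

The resulting list reproduces the six sub-processes in the order given in the text.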

3.1.2. Refinement

The XfD rules suggest 24 new sub-processes to the UX/XfD experts. These sub-processes represent the refinement of the model. The last two columns of the table in Appendix B contain them and their meanings.

For example, consider again the RB users’ free interaction; in particular, focus on the first part of it, regarding the interpretation of the product structures, behaviors and functions. The rule “interpreted behaviors can be achieved by interpreting external behaviors and by transforming interpreted structures” describes the generation of new interpreted behaviors starting from external behaviors (as it happens in the original release of the irMMs-based method) but also from interpreted structures. Therefore, this rule suggests adding the sub-process about the transformation (→) of Si into Bi before the further interpretation of the product behaviors (Bi⊆). Because of the differences in the input (behaviors vs. structures), this transformation can highlight behaviors that the simple interpretation of external behaviors could miss. Similarly, the rule “interpreted functions can be achieved by interpreting external functions and by transforming interpreted behaviors” describes the generation of product functions starting from external functions but also from interpreted behaviors. This rule suggests adding the transformation (→) of Bi into Fi before the further interpretation of the product functions (Fi⊆). In the end, the refinement of the first part of the RB users’ free interaction consists in the addition of the two new sub-processes Si→Bi and Bi→Fi.
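The refinement in this example can be sketched as an insertion into the ordered list of sub-processes. The sketch assumes that each suggested sub-process is placed immediately before the further interpretation it precedes; the text only says "before", so the exact position (as reported in Appendix B) may differ. The names `RULES` and `refine` are hypothetical, and each rule is reduced to a pair (new sub-process, sub-process it must precede).

```python
from typing import List, Sequence, Tuple

# (suggested sub-process, existing sub-process it must precede)
RULES: Sequence[Tuple[str, str]] = (
    ("Si→Bi", "Bi⊆"),  # interpreted behaviors also come from interpreted structures
    ("Bi→Fi", "Fi⊆"),  # interpreted functions also come from interpreted behaviors
)


def refine(model: List[str], rules: Sequence[Tuple[str, str]] = RULES) -> List[str]:
    """Return a copy of the model enriched with the missing sub-processes."""
    refined = list(model)
    for new, before in rules:
        if new not in refined and before in refined:
            # Assumption: insert immediately before the target sub-process.
            refined.insert(refined.index(before), new)
    return refined


original = ["Se⊆Si", "Si⊆", "Be⊆Bi", "Fe⊆Fi", "Bi⊆", "Fi⊆"]
refined = refine(original)
```

Applying `refine` a second time leaves the model unchanged, since a sub-process already present is not suggested again.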

3.1.3. Implementation

In order to put the result of the refinement into practice, the UX/XfD experts translate the suggestions obtained from the XfD rules back into evaluation activities. In this case, this corresponds mainly to modifications of the documents used during the test execution and for the data analysis. Appendix C contains the details of this implementation. The first two columns of the table recall all the suggestions and the related sub-processes; the last column describes the way the translations of the suggestions back into evaluation activities take place.

For example, consider the first suggestion that deals with the generation of new behaviors from structures and of new functions from behaviors (Si→Bi, Bi→Fi) (letter “a” in the table). This suggestion corresponds to adding the new instruction “during your free interaction, please pay attention also to the product behaviors that its components (shape, sounds, etc.) suggest to you, as well as to the functions suggested by these behaviors” to the part of the RB user guide regarding the free interaction.

3.2. Verifying the XfD capabilities

The capabilities of the XfD in improving UX evaluation activities are verified by comparing the results of the adoption in the field of the original release of the irMMs-based method with those of the improved release and of the TA. All three adoptions focus on the same product, a well-known CAD software package, and involve the same three UX/XfD experts as evaluators, since they know the product under evaluation very well, have more than twenty years of experience in the CAD field, and use this specific CAD software package almost every day.

3.2.1. Adoption of the original and of the improved releases of the irMMs-based method

The adoptions in the field of the original and of the improved releases of the irMMs-based method consider the same need and involve users with similar knowledge about the product and the field.

The input setting (first phase) highlights the 3D modeling capabilities of the CAD software package as the product feature to evaluate. After that, the evaluators select the users. All of them are students of mechanical engineering courses. They have some skill and knowledge in 3D modeling since they have already used one or more CAD software packages during their courses. AB and RB users do not know the CAD software package under evaluation; RE users have been using it for almost three months. Thirty users are involved for each release, 10 for each group.

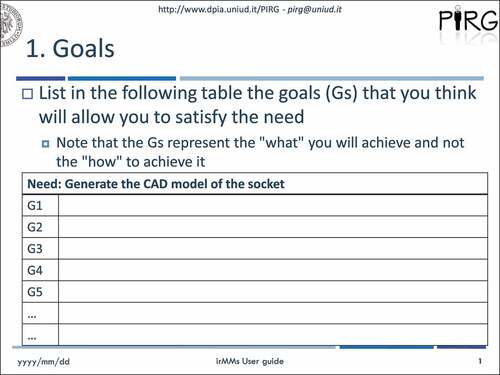

In the second phase, the evaluators customize the user guides. The need is “generate the 3D model of the socket shown in the drawing. The model is for production. Use the CAD software package available on the PC. Please respect the assigned dimensions”. Figure shows the drawing of the socket as reported in the user guides. Instructions and tables must be customized with respect to this need. Figure reports the instructions to generate the goals and the empty table to fill. The documents for the evaluators remain as they are; they do not need customization. The tests take place in a university lab consisting of two separate rooms. In the first room, the users generate the irMMs; in the second room, a PC running the CAD software package allows the execution of the interaction.

Once the material is ready, the tests can start (third phase). RB users interact freely with the CAD software package for 10 min. After that, all the users generate their irMMs following the user guides. Table reports the excerpt of an irMM generated by an RB user during the tests involving the improved release of the irMMs-based method (please refer to the first two columns). This excerpt refers to the activities aimed at achieving the goal “Boolean subtraction done”.

Table 1. Excerpt of an irMM (first two columns) and its evaluation against the real interaction (last two columns)

At the end of the irMMs generation, the users compare the real interaction with the CAD software package to that described in their irMMs, always following the indications of the user guides. For example, consider again Table . During the real interaction, the user highlights two gaps (the rows with “Failed” in the third column). The first gap, related to the user action “select the command to subtract volumes”, refers to the command extrude and is negative; the second gap, related to the product reaction/feedback “the preview of the resulting volume is shown”, refers to the richer visualization of the preview of the results and is positive. The fourth column reports the reasons for the gaps from the user’s point of view and the related positive/negative judgment. In order to avoid bias, the users must not disclose their experiences until the end of the study.
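The comparison between an irMM and the real interaction can be pictured as a small data structure. The sketch below is an assumption made for illustration only: the `IrmmRow` class and the `count_gaps` function are hypothetical names, the two failed rows paraphrase the gaps described above, and the passed row is a placeholder rather than actual content of the table.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class IrmmRow:
    item: str           # user action or product reaction/feedback in the irMM
    outcome: str        # "Passed" or "Failed" against the real interaction
    judgment: str = ""  # "positive" or "negative", filled in for failed rows only
    reason: str = ""    # reason for the gap from the user's point of view


rows = [
    # Placeholder row (not taken from the study) standing for any matched step.
    IrmmRow("(placeholder step that matched the real interaction)", "Passed"),
    IrmmRow("select the command to subtract volumes", "Failed",
            "negative", "gap referred to the command extrude"),
    IrmmRow("the preview of the resulting volume is shown", "Failed",
            "positive", "richer visualization of the preview of the results"),
]


def count_gaps(rows):
    """Count positive and negative gaps, i.e. rows that failed the comparison."""
    return Counter(row.judgment for row in rows if row.outcome == "Failed")


gaps = count_gaps(rows)
print(dict(gaps))
```

Keeping the judgment and the reason next to each failed row mirrors the third and fourth columns of the table and makes the later tallying of positive and negative gaps straightforward.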

In the fourth phase, the evaluators analyze the data and generate the lists of positive and negative UX aspects for each release by exploiting the three rules described in the background section.

The adoption of the original release of the irMMs-based method (data analysis included) takes 36 h, while that of the improved release takes 40 h. Table reports some examples of positive and negative UX aspects highlighted by the two adoptions. They refer to three interaction topics: feature Extrusion, main toolbar and 3D shapes generation. The table reports the positive and negative UX aspects for each topic, ordered by frequency and impact.

Table 2. Examples of UX aspects coming from the adoption of the original and of the improved releases of the irMMs-based method

3.2.2. TA adoption

The TA is a well-known usability evaluation method based on tests where the participants are required to talk aloud while performing specific tasks (Nielsen, 1993). The participants should report every thought/sensation regarding the product, the interaction, possible problems, etc. Moreover, the TA also aims at collecting data about the actual use of the product (Van Den Haak, De Jong, & Schellens, 2003). Here, 30 users with the same knowledge and abilities required for the previous two adoptions take part in that of the TA. Dividing these users into three groups of 10 users each keeps the comparison feasible. The users belonging to the first group do not know the CAD package, and they start the TA activities immediately. The users of the second group do not know the product, but they can interact freely with the package for 10 min before starting the TA activities. The users of the third group know the product well and start the TA activities immediately. All the users have a document containing the definition of the need to satisfy (the same need as that for the irMMs-based method adoptions, described using the same words) and the drawing of the object to model. Once they have received the document, the users start to interact with the product to satisfy the need in the best way from their point of view. The evaluators must not interfere during the tests; they just stimulate the users to describe verbally the activities that they would like to do, the ones that they must do because of the perceived limits of the product (always from their point of view), and everything better or worse than what they would expect. Exceptionally, the evaluators step in to help if users get stuck because they do not understand how to solve an interaction problem. Meanwhile, the evaluators report in their documents what they consider important for the UX evaluation. This adoption, conducted in the same university lab, takes 28 h; in this case too, the users must not disclose their experiences until the end of the study.

3.2.3. Results of the three adoptions

Table reports the quantitative results of the three adoptions. The first row of each part contains the number of positive and negative UX aspects found by each group of users (AB, RB and RE for the two irMMs-based method adoptions; I, II and III for the TA), together with their sum, duplicates excluded. UX aspects are classified according to their references to product functions (F), behaviors (B) or structures (S) to allow a finer evaluation of them and to highlight the contributions of the activities added thanks to the refinement. The last row contains the time spent to perform the tests and the data analysis for each adoption. The other rows, present only in the parts of the table referring to the irMMs-based method adoptions, are as follows. The second row contains the number of positive and negative gaps (duplicates excluded); these are classified against product functions (F), behaviors of users and product (B+), and product structures (S). The desired meanings and emotions rows contain their numbers (duplicates excluded). The changes of meanings and emotions rows refer to changes, additions or deletions of meanings or emotions due to the real interaction with respect to the desired ones; the numbers are those of the positive and negative changes found.

Table 3. Quantitative results of the adoptions of the original and of the improved releases of the irMMs-based method, as well as of the TA method

The improved release of the irMMs-based method generated more UX aspects (24 positive and 88 negative) than the original one (16 positive and 68 negative) and the TA (13 positive and 60 negative) did. Nevertheless, the time spent to perform the improved release is the highest (about 11% more than the original release and 43% more than the TA). In all the adoptions, most of the time was spent by the users who did not know the software package; they took almost twice as long as the expert users.
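The totals and the time overheads quoted above can be rechecked with a short calculation. The sketch below uses only the counts and the hours reported in the text (36 h, 40 h and 28 h); the function names are hypothetical, and the aspects-per-hour ratio is a derived figure added here for illustration, not a result reported by the study.

```python
# UX aspects found and hours spent for each adoption, as reported in the text.
adoptions = {
    "original": {"positive": 16, "negative": 68, "hours": 36},
    "improved": {"positive": 24, "negative": 88, "hours": 40},
    "TA": {"positive": 13, "negative": 60, "hours": 28},
}


def total_aspects(name):
    """Sum of positive and negative UX aspects for one adoption."""
    entry = adoptions[name]
    return entry["positive"] + entry["negative"]


def extra_time_pct(name, baseline):
    """Additional time of one adoption relative to a baseline, in percent."""
    hours = adoptions[name]["hours"]
    base_hours = adoptions[baseline]["hours"]
    return 100.0 * (hours - base_hours) / base_hours


def aspects_per_hour(name):
    """Derived ratio (illustrative only): UX aspects found per hour spent."""
    return total_aspects(name) / adoptions[name]["hours"]


for name in adoptions:
    print(f"{name:9} total={total_aspects(name):3d} "
          f"per hour={aspects_per_hour(name):.2f}")
print(f"improved vs original: +{extra_time_pct('improved', 'original'):.1f}% time")
print(f"improved vs TA:       +{extra_time_pct('improved', 'TA'):.1f}% time")
```

With these inputs the improved release totals 112 UX aspects against 84 and 73, at a time cost of roughly 11% and 43% over the original release and the TA, respectively.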

Qualitatively speaking, the three adoptions found the same 50 UX aspects. Among them are the positive ones dealing with the presence of predefined 3D shapes to start the modeling from and with the possibility of modeling parts and assemblies in the same environment, and the negative ones referring to the complexity in orienting the model view or to the uselessness of the hidden reference system. Other than the UX aspects found in every adoption, the original release of the irMMs-based method highlighted three fresh ones, the improved one twenty-seven and the TA eight. Moreover, the distribution of the UX aspects coming from the improved release of the irMMs-based method appears more homogeneous against product functions, behaviors and structures than that of the original release (mainly focused on product functions and behaviors) and that of the TA (more oriented to product structures). Still considering the improved release, homogeneity is also present among the results of each group of users; with respect to the original release, AB and RB users increased their numbers of UX aspects referring to structures (in most cases the numbers doubled), while RE users increased the number of UX aspects referring to both functions and behaviors.

4. Discussion

The results of the adoptions indicate that the improved release of the irMMs-based method can generate more UX aspects than the original release and the TA; moreover, there can be more fresh UX aspects and the distribution of the UX aspects against product functions, behaviors and structures can be more homogeneous. All of this seems to be mainly due to the activities added thanks to the refinement. The higher number of fresh UX aspects is due to the higher numbers of gaps (both positive and negative) highlighted by all groups of users and of desired emotions and changes of emotion, found mainly by the RE group. For example, consider the positive UX aspect reported by three users, two belonging to the RB group and one to the AB group: “the icon symbols in the drop-down menus of the toolbar help the users to understand what the commands are for”. It comes from the positive gap “the icons of some menu commands (e.g., coil, hole and rib) were clearer and more intuitive than expected”. This gap refers to structures (icon symbols), and the users evaluated the behavior of the CAD package commands as easier to understand thanks to these icons. All of this is due to the activity added thanks to the refinement “evaluation of the product behaviors by considering its structures” (labeled as “e” in the tables of Appendixes B and C). Still referring to the freshness of the UX aspects highlighted by the improved release, consider now the negative UX aspect reported by an AB user: “the feedback does not support at best this feature because the color of the volumes does not change according to the operator selected (addition vs. subtraction)”. This UX aspect comes from the activity added thanks to the refinement “generation of new emotions due to the influence of structures and aesthetics” (labeled as “g” in the tables of Appendixes B and C). This activity pushes the users to express their real emotions considering also the product structures. The user expressed the desired emotion secure. After the real interaction, this emotion changed into quite insecure. The reason for this negative change, referring to the structure (the color, specifically), was “I became insecure on what to do to satisfy the need because the feedback from the product was unclear; the colors of the volumes did not change according to the kind of operation I was performing (addition vs. subtraction) and I did not know if I was right or wrong”.

The original release of the irMMs-based method and the TA find fresh UX aspects as well, but the reasons for this are different. The fresh UX aspects highlighted by the original release seem to be due only to slight differences among the users. This can be the only reason, given that the improved release is the original one except for the refinement, which added activities without modifying or deleting existing ones. The fresh UX aspects highlighted by the TA seem to be mainly due to the freedom in exploring the product, looking at characteristics not necessarily related to the satisfaction of the need. Moreover, the TA users can behave naturally, without the limitations met by the users involved in the adoptions of the original and improved releases, who must behave exactly as described in their irMMs.

The homogeneous distribution against F, B and S of the UX aspects coming from the improved release seems to be due again to the refinement. For example, the activity “generation of new structures from meanings, emotions and user behaviors” (labeled as “h” in the tables of Appendixes B and C) leads the evaluators to the generation of UX aspects focused not only on product functions and behaviors, as happened in the original release, but also on structures. The original release completely misses this activity; this is the reason for the absence of homogeneity. In the case of the TA, the freedom in interacting with the product makes the users focus naturally more on structures than on behaviors or functions. Moreover, no activity is specifically devoted to the analysis of functions and behaviors; therefore, less homogeneous results are to be expected. This homogeneity can be observed also inside each group of users, and it is always due to the refinement. For example, the activity “generation of new behaviors from structures and of new functions from behaviors” (labeled as “a”) helps RE users during the generation of their irMMs to focus not only on structures but also on product functions and behaviors. The activity “evaluation of the product behaviors by considering its structures” (labeled as “e” in the appendixes) helps AB and RB users during the real interaction with the product to take structures into account when they evaluate product behaviors.

All of this starts to demonstrate the XfD capabilities in improving the irMMs-based method by adding activities that increase the quantity and quality of the final results (UX aspects). The additional time required to perform the new activities is quite limited, and it seems a good tradeoff for the advantages described above.

This research also has some drawbacks. The three adoptions are not enough to give a final answer on the verification of the XfD capabilities in improving UX evaluation activities. Only the irMMs-based method is exploited for this verification; moreover, only one product was evaluated, and the users had very specific skills and knowledge. The data collected up to now do not allow applying statistical methods such as ANOVA (analysis of variance) (Montgomery & Runger, 2002) or the t-test (Student's test for the comparison of means) (de Winter, 2013) to confirm their reliability.
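To make the kind of check mentioned above more concrete, the following sketch computes the statistic of Welch's variant of the t-test (a variant chosen here because it does not assume equal variances) together with its approximate degrees of freedom, using only the Python standard library. It is an illustration under explicit assumptions: the function name is hypothetical, and the two input lists are invented placeholder values, not data collected in this research, since per-user figures are not reported here.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard errors of the two sample means (sample variance / n).
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t_stat = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    dof = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t_stat, dof


# Placeholder values only (NOT study data), e.g. UX aspects found per user
# in two hypothetical adoptions.
placeholder_a = [1, 2, 3, 4, 5]
placeholder_b = [2, 4, 6, 8, 10]

t_stat, dof = welch_t(placeholder_a, placeholder_b)
print(f"t = {t_stat:.3f}, degrees of freedom = {dof:.2f}")
```

Turning the statistic into a p-value requires the t distribution, which a statistics package would provide; with groups of 10 users each, the small-sample caveats discussed by de Winter (2013) would apply.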

5. Conclusions

Because of the increasing importance of user experience (UX) in product development processes, several UX evaluation possibilities, from simple tools up to more articulated and complete approaches, have appeared in the literature. Among the complete ones, the irMMs-based method aims at evaluating the UX focusing on emotions and mental models. The literature also offers several frameworks and models to describe product development activities. One of these, the X for Design (XfD) framework, allows improving activities by translating them thanks to a set of IF-THEN statements and by refining the result using rules. This research aimed at verifying the XfD capabilities in improving the irMMs-based method. The verification compared the results generated by the adoption in the field of the release of the irMMs-based method improved thanks to the XfD with those coming from the original release and from the well-known Think Aloud method. The improved release of the irMMs-based method generated more UX aspects than the original one and the Think Aloud; moreover, it highlighted more fresh UX aspects, and the distribution of the UX aspects against product functions, behaviors and structures was more homogeneous.

Regarding research perspectives, further actions are needed to gain the required objectivity in assessing the XfD capabilities. One of these actions is applying the XfD to other existing UX evaluation activities such as the Valence method and the meCUE questionnaire. In addition, more evaluation activities involving the irMMs-based method are required, possibly focusing on different kinds of products and involving users with heterogeneous skills and knowledge. All of this would allow gaining a meaningful dataset and adopting statistical methods in order to verify the data reliability.

Acknowledgements

The authors would like to thank all the students of the Mechanical Engineering courses at the University of Udine who took part in the tests.

Additional information

Funding

Notes on contributors

Stefano Filippi

Stefano Filippi is presently Full Professor of Design and Methods in Industrial Engineering at University of Udine, Italy. He is the director of the Product Innovation Research Group - PIRG, and his research focuses mainly on Knowledge Based Innovation Systems, Interaction Design, User Experience, Geometric and Solid Modeling, and Rapid Prototyping. Main application domains are Industrial Engineering, Medicine (Orthopedic and Maxillofacial Surgery) and Cultural Heritage. His research generated more than 100 international publications.

Daniela Barattin

Daniela Barattin received her PhD in Industrial and Information Engineering in 2014. She works as a researcher at the University of Udine in the Product Innovation Research Group - PIRG, dealing with interaction design and user experience. Her research generated more than 25 international publications.

References

- Bedek, M. A., Firssova, O., Stefanova, E., Prinsen, F., & Chaimala, F. (2014). User-driven development of an inquiry-based learning platform: Formative evaluations in weSPOT. Interaction Design and Architecture(S) Journal, 23, 122–30.

- Bernhaupt, R., & Pirker, M. (2011, September). Methodological challenges of UX evaluation in the living room: Developing the IPTV-UX questionnaire. In P. Forbrig, R. Bernhaupt, M. Winckler, & J. Wesson (Eds.), Proceeding of the 5th workshop on software and usability engineering cross-pollination (pp. 51–61). Lisbon, Portugal: Springer.

- Brave, S., & Nass, C. (2012). Emotion in human-computer interaction. In J. A. Jacko (Ed.), The human-computer interaction handbook (pp. 81–96). Boca Raton: CRC Press.

- Burmester, M., Mast, M., Kilian, J., & Homans, H. (2010, August). Valence method for formative evaluation of user experience. In O. W. Bertelsen, P. Krogh, K. Halskov, & M. G. Petersen (Eds.), Proceedings of the 8th designing interactive systems conference 2010 (pp. 364–367). Aarhus, Denmark: ACM Press.

- Cascini, G., Fantoni, G., & Montagna, F. (2012). Situating needs and requirements in the FBS framework. Design Studies, 34(5), 636–662. doi:10.1016/j.destud.2012.12.001

- de Winter, J. C. F. (2013). Using the Student’s t-test with extremely small sample sizes. Practical Assessment, Research & Evaluation, 18(10), 1–12.

- Desmet, P., & Hekkert, P. (2007). Framework of product experience. International Journal of Design, 1(1), 13–23.

- Filippi, S., & Barattin, D. (2016, May). X for design, a descriptive framework for modelling the cognitive aspects of different design activities. In D. Marjanović, M. Štorga, N. Pavković, N. Bojčetić, & S. Škec (Eds.), Proceeding of the 14th International Design Conference (pp. 1265–1274). Dubrovnik, Croatia: The Design Society.

- Filippi, S., & Barattin, D. (2017, July). User experience (UX) evaluation based on interaction-related mental models. In T. Ahram & C. Falcão (Eds.), AHFE2017. Proceeding of the advances in usability and user experience. Advances in intelligent systems and computing (Vol. 607, pp. 634–645). Los Angeles, California, USA: Springer.

- Gentner, D., & Stevens, A. (1983). Mental models. Hillsdale: Lawrence Erlbaum Associates.

- Gero, J. S., & Kannengiesser, U. (2004). The situated function-behaviour-structure framework. Design Studies, 25(4), 373–391. doi:10.1016/j.destud.2003.10.010

- Greca, I. M., & Moreira, M. A. (2000). Mental models, conceptual models and modelling. International Journal of Science Education, 22(1), 1–11. doi:10.1080/095006900289976

- Harchay, A., Cheniti-Belcadhi, L., & Braham, R. (2014). A context-aware framework to provide personalized mobile assessment. Interaction Design and Architecture(S) Journal, 23, 82–97.

- Law, E. L. C., Roto, V., Hassenzahl, M., Vermeeren, A. P. O. S., & Kort, J. (2009, April). Understanding, scoping and defining user experience: A survey approach. In D. R. Olsen & R. B. Arthur (Eds.), Proceeding of the 27th conference on human factors in computing systems (pp. 719–728). Boston, USA: ACM Press.

- Minge, M., & Thuring, M. (2018, July). The meCUE questionnaire (2.0): Meeting five basic requirements for lean and standardized UX assessment. In A. Marcus & W. Wang (Eds.), DUXU 2018. Proceeding of the interactional conference of human-computer interaction (pp. 451–469). Las Vegas, USA: Springer.

- Montgomery, D. C., & Runger, G. C. (2002). Applied statistics and probability for engineers (3rd ed.). New York, USA: John Wiley & Sons, Inc.

- Nielsen, J. (1993). Usability Engineering. Boston, USA: Academic Press.

- Norman, D. (2013). The design of everyday things (Revised and expanded ed.). New York, USA: Basic Books.

- Park, J., Han, S. H., Kim, H. K., Cho, Y., & Park, W. (2013). Developing elements of user experience for mobile phones and services: Survey, interview, and observation approaches. Human Factors and Ergonomics in Manufacturing & Service Industries, 23(4), 279–293. doi:10.1002/hfm.20316

- Rauschenberger, M., Pérez Cota, M., & Thomaschewski, J. (2013). Efficient measurement of the user experience of interactive products. How to use the user experience questionnaire (UEQ). Example: Spanish language version. International Journal of Interactive Multimedia and Artificial Intelligence, 2(1), 39–45. doi:10.9781/ijimai.2013.215

- Saariluoma, P., & Jokinen, J. P. P. (2014). Emotional dimensions of user experience: A user psychological analysis. International Journal of Human-Computer Interaction, 30(4), 303–320. doi:10.1080/10447318.2013.858460

- Thuring, M., & Mahlke, S. (2007). Usability, aesthetics and emotions in human-computer technology interaction. International Journal of Psychology, 42(4), 253–264. doi:10.1080/00207590701396674

- Van Den Haak, M. J., De Jong, M. D. T., & Schellens, B. J. (2003). Retrospective vs. concurrent think-aloud protocols: Testing the usability of an online library catalogue. Behaviour and Information Technology, 22(5), 339–351. doi:10.1080/0044929031000

- von Saucken, C., & Gomez, R. (2014). Unified user experience model enabling a more comprehensive understanding of emotional experience design. In J. Salamanca, P. Desmet, A. Burbano, G. D. S. Ludden, & J. Maya (Eds.), Proceedings of the 9th International Conference on design and emotion: The colors of care (pp. 631–640). Bogota: Universidad de los Andes.

- von Saucken, C., Michailidou, I., & Lindemann, U. (2013). How to design experiences: Macro UX versus micro UX approach. Design, User Experience, and Usability, Web, Mobile, and Product Design, 8015, 130–139.

- Yang, X., He, H., Wu, Y., Tang, C., Chen, H., & Liang, J. (2016). User intent perception by gesture and eye tracking. Cogent Engineering, 3, 1–10. doi:10.1080/23311916.2016.1221570

- Zarour, M., & Alharbi, M. (2017). User experience framework that combines aspects, dimensions, and measurement methods. Cogent Engineering, 4, 1–25. doi:10.1080/23311916.2017.1421006

Appendix A. Symbols used in the XfD

Appendix B. XfD model of the irMMs-based method

Appendix C. Implementation of the activities suggested by the refinement