Abstract

There is a large body of work focused on the development of mobility assistive devices for visually impaired (VI) people. However, none of these devices seems to satisfy the needs of VI people, which might suggest that these needs have not been considered during the development process. Accordingly, this study aimed to develop a novel assistive system based on the opinions provided by a group of VI persons who also participated in the performance assessment stage. Two ultrasonic sensors and one infrared sensor were combined to estimate the proximity and height of an obstacle in front of the user, who received that information through audio messages and vibrating alerts. The proposed system was tested by twelve VI participants, who were asked to provide suggestions for improvement. Our prototype performed well indoors and received overall positive feedback when detecting obstacles at different heights, although it was unable to provide directional information. Future research in this field might benefit from more collaborative participation between end-users, researchers, and institutes for VI people.

PUBLIC INTEREST STATEMENT

Recent surveys exploring how VI people perceive current electronic traveling aids have found that none of these aids satisfies their requirements. A possible explanation is that VI people’s opinions have rarely been considered in the development process, and their participation, when it occurs, has usually been limited to the performance assessment stage. In an attempt to address this issue, this work aimed to develop an electronic traveling aid based on the opinions provided by a group of VI persons who also participated in the performance evaluation stage. Results show that it is feasible to estimate the proximity and height of an obstacle by properly combining ultrasonic and infrared sensors. Moreover, the proposed system received overall positive feedback from the group of VI participants. Ultimately, more collaborative participation between end-users, researchers, and institutes for VI people is crucial to develop more useful and reliable solutions.

1. Introduction

It is estimated that at least 2.2 billion people worldwide have a near or distance vision impairment (World Health Organization (WHO), 2021). In Colombia, visual impairment is the most frequent impairment among people with disabilities (Arias-Uribe et al., 2018), and the highest proportion of VI people (13.2%) is found in Valle del Cauca, whose capital is Santiago de Cali.

Blind or visually impaired (VI) people face many difficulties when performing daily activities, such as moving or traveling independently from one place to another (Lakde & Prasad, 2015; Zeng, 2015). Despite their simplicity and affordability, guide dogs and the white cane cannot provide VI people with all the information needed for safe mobility. This need for more reliable navigation and orientation aids has motivated researchers to use technology to develop VI-dedicated assistive systems. A visual assistive device may fall into one of three categories (Dakopoulos & Bourbakis, 2009; Elmannai & Elleithy, 2017): 1) devices mainly focused on obstacle avoidance, 2) devices that implement orientation strategies such as spatial models and/or surface mapping, and 3) devices based on geospatial positioning technologies such as GPS.

As pointed out by several authors (Khan et al., 2018; Manduchi & Coughlan, 2012; Tapu et al., 2020), any assistive device designed to facilitate the life and inclusion of a person with a disability should account for the needs and wishes of end-users. The current literature reports a plethora of electronic traveling aids (for reviews, see Elmannai & Elleithy, 2017; Khan et al., 2018; Tapu et al., 2020), many of which offer promising results. However, none of them seems to satisfy the needs of VI people, which might suggest that these needs have not been taken into account during the development process. Some researchers (Dakopoulos & Bourbakis, 2009; Tapu et al., 2020) have interviewed groups of VI users, but the information collected was used not as part of a development process but rather as a criterion for a comparative overview of state-of-the-art systems. Accordingly, this study aimed to develop a novel assistive system based on the opinions provided by a group of VI persons who also participated in the performance assessment stage. This work was conducted in collaboration with the Institute for Blind and Deaf Children in Santiago de Cali, Colombia.

2. Wearable assistive devices for VI people: a brief state-of-the-art overview

As previously pointed out, there is a large body of work focused on introducing novel and reliable mobility assistive devices for VI people. According to Tapu et al. (2020), electronic traveling aids can be active or passive. Active traveling aids collect environmental information and translate it into a form that the VI user can perceive through another sense (e.g., hearing or touch). Passive traveling aids, in contrast, include different types of cameras and high-complexity algorithms designed to increase the mobility of VI users. However, being easy to carry is as important as providing detailed and accurate information about the environment (Dakopoulos & Bourbakis, 2009; Tapu et al., 2020). Portable devices are usually compact and lightweight, although they may require constant hand interaction (e.g., an electronic cane). Conversely, wearable devices allow hands-free interaction, or at least demand minimal hand interaction, since they are worn on the body. Body locations considered for visual assistive devices include the head, chest, waist, hands, wrists, and feet (Velázquez, 2010).

2.1. Head-mounted assistive devices

People frequently use head motion to collect information about their surroundings, so, not surprisingly, headsets, glasses, and headbands are the most popular kind of visual assistive device. Bhatlawande et al. (2012) introduced a microprocessor-based prototype consisting of five ultrasonic sensors distributed on a pair of eyeglasses and a waist belt. Results showed that training is one of the most important factors for increasing users’ confidence when using the device. Nevertheless, the prototype was tested only indoors, and it could not detect ground-level obstacles. Based on the argument that the human brain is powerful enough to process complex auditory information, Meijer developed the vOICe system, which uses a single camera as its input source (Meijer, 1992). A portable computer transforms visual information into sounds, which are then delivered to the VI user via headphones. The vOICe system is currently a mature commercial product (see: http://www.seeingwithsound.com), but it requires extensive training because of its complicated sound patterns. In the early 2000s, several authors began to use two video cameras to overcome the limitations of assistive systems based on monocular cameras (e.g., the lack of depth information). For instance, the system proposed by Schwarze et al. (2015) can differentiate foreground objects from the background by combining visual odometry and a Stochastic Cloning Kalman filter. More recently, Islam and coworkers developed a spectacle prototype for detecting obstacles in three directions (left, front, and right) as well as path holes (Islam et al., 2020). The system proved to be more accurate, lightweight, and affordable than similar traveling aids. However, neither of these two approaches determines the category or level of danger associated with each obstacle.

2.2. Vests and belts

Several traveling aids for VI people include processing boards and batteries too large to be worn on the head. In such cases, vest-like assistive devices are a valuable alternative. For instance, a prototype of navigation assistance for visually impaired (NAVI) people, developed at the University Malaysia Sabah, uses a vest to hold a single-board processing system and rechargeable batteries (Sainarayanan et al., 2007). The prototype employs a fuzzy learning vector quantization (LVQ) neural network to classify pixels as either background or objects. During testing, users could identify stationary and even slow-moving obstacles in real time, although they did not receive information about the distance to objects. Other authors adopt a vest-like design to attach vibration actuators that provide haptic feedback to the VI user (Jones et al., 2006; Van Veen & Van Erp, 2003). On the one hand, only the user receives the information (i.e., discretion is a valuable asset). On the other hand, tactile vest displays usually require physical contact, so users may consider them invasive.

One of the first belt-like assistive devices for VI people is the NavBelt (Shoval et al., 1998). This electronic travel aid provides the VI user with acoustic feedback from an array of ultrasonic sensors attached to a belt, without the head-mounted sensing module used by Bhatlawande et al. (2012). Years later, Tsukada and Yasumura proposed the ActiveBelt (Tsukada & Yasumura, 2004), which consists of a GPS module, a geomagnetic sensor, and eight vibrators equally distributed around the torso. This system provides the user with GPS-mapped directional information by increasing or decreasing the actuators’ vibration frequency, which ranges from 33 to 77 Hz. In a more recent study, Katzschmann and colleagues introduced ALVU (Array of Lidars and Vibrotactile Units), which allows VI users to detect low-height and high-hanging obstacles, as well as ascending and descending stairs (Katzschmann et al., 2018). Still, the experiments did not account for moving objects, and they covered only a limited range of object shapes and boundaries.

2.3. Gloves and shoes

Various visual assistive devices that exploit touch as the substitute sense include vibrotactile arrays for the palm and fingertips. The stereo camera-based system developed by researchers from the University of Guelph in Canada includes a tactile unit consisting of a glove with five piezoelectric buzzers, one on each fingertip (Audette et al., 2000). The authors use a standard stereo-vision algorithm to create a depth map, which is then divided into five directions. Each direction corresponds to a piezoelectric element, so if a pixel in an area exceeds the threshold distance, the corresponding vibration element is activated to warn the VI user about an obstacle in that direction. Another example of a vibrotactile glove used for visual assistance is the navigational assistant proposed by Mancini et al. (2018), which aims to facilitate jogging or running by detecting lines or lanes on the track. However, as with tactile vest displays, users consider this and similar approaches invasive. Moreover, some authors suggest that visual assistive devices should be hands-free so that VI people can still use primary traveling aids, such as the white cane. To fulfil this requirement, several researchers who included haptic feedback in their designs started considering other body parts. A research group from Universidad Panamericana in Mexico developed a shoe-integrated vibrotactile display with a two-fold purpose: i) to study how people acquire directional information through their feet, and ii) to assess whether this process is reliable enough to be exploited for the travel assistance of VI people (Velázquez et al., 2009). Results suggest that vibrating information on the foot is appropriate for directional navigation but not for precise shape recognition. Another shoe-like prototype for visual assistive guidance is presented by Rahman et al. (2019). This system, known as BlindShoe, can detect obstacles in front of and behind the user, as well as the presence of water on the ground surface. However, the prototype cannot provide information about an obstacle’s height and, given that the water sensor needs to be in contact with the liquid to send a signal, it does not prevent VI users from walking on a wet surface.

3. Methods

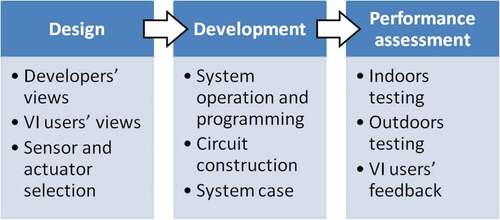

Figure 1 outlines the design and development process of the proposed system. The subsections below describe each stage in detail.

3.1. Design requirements for the proposed system

The system proposed in this study should be able to detect any obstacle in front of the user as well as potentially dangerous drop-offs (e.g., descending stairs). Whenever an obstacle is detected, information related to its proximity and height is delivered to the user via audio messages and vibrating alerts. Regarding the obstacle height, three vertical levels were considered: foot level, trunk level, and head level. Furthermore, the system should also include the following features:

Real-time operation

Hands-free

Rechargeable battery operation

Lightweight and inexpensive

To achieve more active participation of end-users in the development process, a three-item survey was used to collect their opinions (see Table 1). Fifteen VI subjects aged 20 to 60 years participated in the survey. Answers were collected by phone to minimize the risk of COVID-19 transmission.

Table 1. Survey 1—Needs assessment

3.2. Sensor and actuator selection

Obstacle detection is achieved with three sensors: two HC-SR04 ultrasonic sensors (Multicomp, USA) and one GP2Y0A02YK0F infrared sensor (Sharp, Japan). Ultrasonic sensors are frequently used because of their high precision at short distances and their robustness to electromagnetic interference. Specifically, the HC-SR04 consists of a transmitter and a receiver of high-frequency ultrasonic pulses, which propagate through the air while the sensor is active. The distance between the sensor and the obstacle can be estimated by measuring the time taken by an ultrasonic pulse to return to the sensor, multiplying it by the speed of sound in air, and dividing the result by two, since the pulse travels to the obstacle and back. This sensor provides an accuracy of 3 mm at a relatively low cost. Given its detection range and viewing angle (2–400 cm and 15°, respectively), two sensors can be combined to detect obstacles within 4 m in front of the user, at either the head or the trunk level. The infrared sensor is used to detect obstacles at the foot level and drop-offs. Like their ultrasonic counterparts, infrared sensors are robust to electromagnetic interference. However, their performance might be affected by ambient light conditions and reflective surfaces. Still, these sensors are frequently used in electronic aids for VI people because of their high directional sensitivity and low cost. The arrangement of the two ultrasonic sensors and the infrared sensor used in this study is shown in Figure 2.
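The time-of-flight calculation described above can be sketched in portable C++ as follows. This is a minimal illustration, not the authors’ firmware: it assumes a speed of sound of 343 m/s (dry air at about 20 °C, with no temperature compensation), and the helper names are hypothetical. On the Arduino Nano, the echo pulse width in microseconds would typically be obtained with `pulseIn()` on the sensor’s echo pin before being passed to such a function.

```cpp
#include <cassert>

// Assumed speed of sound in dry air at about 20 degrees C (343 m/s),
// expressed in centimetres per microsecond. No temperature compensation.
constexpr double kSoundSpeedCmPerUs = 0.0343;

// Hypothetical helper: converts an HC-SR04 echo pulse width (microseconds)
// into the one-way distance to the obstacle (centimetres). The echo time
// covers the round trip sensor -> obstacle -> sensor, hence the division by 2.
double echoTimeToDistanceCm(double echoTimeUs) {
    assert(echoTimeUs >= 0.0);
    return echoTimeUs * kSoundSpeedCmPerUs / 2.0;
}

// Hypothetical helper: true if a distance lies within the HC-SR04's
// nominal 2-400 cm detection range quoted in the text.
bool withinHcSr04Range(double distanceCm) {
    return distanceCm >= 2.0 && distanceCm <= 400.0;
}
```

For example, a 1000 µs echo corresponds to roughly 17 cm; omitting the halving step would report twice the true distance.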

Figure 2. Arrangement of the sensors used for the proposed system. Gray-shaded areas represent the sensors’ sensing fields.

An ATmega328 microcontroller (Microchip Technology Inc., USA), which is embedded in an Arduino Nano board (Arduino LLC, USA), processes the signals provided by the sensors and controls the electronics for auditory and vibrotactile alarms. An ISD1760 audio recording/playback module (Winbond Electronics Corporation, China) is used to deliver voice messages related to the proximity and dimensions of the obstacle. Vibrating alerts are generated by a 1027 flat vibration motor (Jinlong Machinery & Electronics Co., Ltd., China), which encodes the distance between the user and the obstacle through the vibration intensity.
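The paper does not specify how distance is mapped to vibration intensity. One plausible approach, sketched below under that assumption, is a linear mapping from distance to an 8-bit PWM duty cycle (the 0–255 range accepted by Arduino’s `analogWrite()`), with hypothetical `nearCm` and `farCm` limits. The motor is assumed to be driven from a PWM pin through a suitable driver stage.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mapping: closer obstacles -> stronger vibration.
// Returns an 8-bit PWM duty cycle (0-255, the range of Arduino's analogWrite()).
// At or below nearCm the motor runs at full intensity; at or beyond farCm it is off;
// in between, the intensity falls linearly with distance.
std::uint8_t vibrationDuty(double distanceCm, double nearCm, double farCm) {
    assert(nearCm < farCm);
    if (distanceCm <= nearCm) return 255;
    if (distanceCm >= farCm) return 0;
    const double fraction = (farCm - distanceCm) / (farCm - nearCm);  // 1 near, 0 far
    return static_cast<std::uint8_t>(fraction * 255.0 + 0.5);         // round to nearest
}
```

A linear ramp is the simplest monotonic choice; a stepped or nonlinear curve could make proximity changes easier to perceive, but that would need to be checked with users.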

3.3. System operation and programming

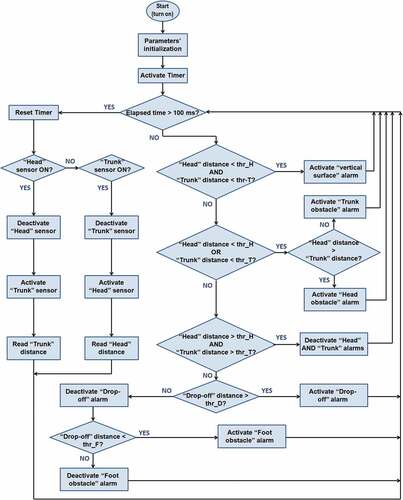

Figure 3 shows the operation of the proposed system, which is controlled by a program written in C++ using the open-source Arduino environment. After parameter initialization, the distance values provided by each sensor are recorded sequentially and continuously. The HC-SR04 sensors are triggered 100 ms apart to avoid interference between their ultrasonic pulses. The proximity of an obstacle is estimated by comparing the recorded distance value against a threshold, whereas its height is estimated from the number and position of the sensors reporting a distance value below that threshold. As shown in Figure 3, if the distance values provided by both ultrasonic sensors are below the threshold, a voice message warning the user about a “vertical obstacle ahead” (e.g., a wall or door) is delivered. Conversely, if only one ultrasonic sensor reports a distance below the threshold, the voice message depends on which sensor reported it. For instance, if it is the ultrasonic sensor placed at the top (see Figure 2), a voice message warning the user about an “obstacle at the head level” is delivered. The shorter the distance, the higher the vibration intensity of the flat motor.
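The height-estimation rule for the two ultrasonic sensors can be expressed as a small decision function. The sketch below is hypothetical: `thrHeadCm` and `thrTrunkCm` stand in for thr_H and thr_T (whose actual values are given in Table 2), the lower sensor is assumed to cover the trunk level, and the trunk-level message text is assumed, since only the other two messages are quoted above. On the device, the two sensors would be read about 100 ms apart before such a function is called.

```cpp
#include <cassert>
#include <string>

// Possible outcomes of the two ultrasonic readings (hypothetical naming).
enum class Alert { None, VerticalObstacle, HeadLevel, TrunkLevel };

// topCm / bottomCm: distances from the upper and lower HC-SR04 sensors.
// thrHeadCm / thrTrunkCm stand in for thr_H and thr_T (actual values in Table 2).
Alert classifyUltrasonic(double topCm, double bottomCm,
                         double thrHeadCm, double thrTrunkCm) {
    assert(thrHeadCm > 0.0 && thrTrunkCm > 0.0);
    const bool headHit = topCm < thrHeadCm;       // upper sensor sees something close
    const bool trunkHit = bottomCm < thrTrunkCm;  // lower sensor sees something close
    if (headHit && trunkHit) return Alert::VerticalObstacle;  // e.g., a wall or door
    if (headHit) return Alert::HeadLevel;
    if (trunkHit) return Alert::TrunkLevel;
    return Alert::None;
}

// Voice message for each alert; the trunk-level wording is an assumption.
std::string voiceMessage(Alert alert) {
    switch (alert) {
        case Alert::VerticalObstacle: return "vertical obstacle ahead";
        case Alert::HeadLevel:        return "obstacle at the head level";
        case Alert::TrunkLevel:       return "obstacle at the trunk level";
        case Alert::None:             break;
    }
    return "";
}
```

Checking the "both sensors" case first matters: otherwise a wall would be reported as a head-level obstacle.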

Figure 3. Flowchart of the proposed system operation. Thresholds for detecting obstacles at the head, trunk and foot level are denoted as “thr_H”, “thr_T”, and “thr_F”, respectively. The threshold for drop-off detection is denoted as “thr_D”.

Regarding drop-offs, both a high-intensity vibrating alert and a voice message are generated if the distance value provided by the infrared sensor is greater than the drop-off threshold. This threshold was set after measuring how the distance reported by the infrared sensor changed while walking toward descending stairs. The reported distance was around 110 cm on flat surfaces, but it increased dramatically (to more than 450 cm) when the sensor was less than 2 m from the descending stairs. Conversely, the distance reported by the infrared sensor decreased when an obstacle at the foot level was present, so a corresponding alarm was also included in the system. Table 2 shows the thresholds used in this study, not only to detect an obstacle but also to estimate its height.
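The infrared branch of the flowchart can be sketched in the same way. This is a hypothetical illustration, not the authors’ code: `thrFootCm` and `thrDropCm` stand in for thr_F and thr_D (actual values in Table 2), and the infrared sensor is assumed to point ahead and downward so that it normally sees the floor.

```cpp
#include <cassert>

// Possible outcomes of one infrared reading (hypothetical naming).
enum class GroundEvent { FlatGround, FootLevelObstacle, DropOff };

// irCm: distance reported by the infrared sensor.
// thrFootCm / thrDropCm stand in for thr_F and thr_D (actual values in Table 2).
GroundEvent classifyInfrared(double irCm, double thrFootCm, double thrDropCm) {
    assert(thrFootCm < thrDropCm);
    if (irCm > thrDropCm) return GroundEvent::DropOff;            // floor falls away, e.g., descending stairs
    if (irCm < thrFootCm) return GroundEvent::FootLevelObstacle;  // beam blocked before reaching the floor
    return GroundEvent::FlatGround;                               // reading near the flat-ground baseline
}
```

With illustrative thresholds of 90 cm and 200 cm (not the values in Table 2), a reading of about 110 cm is classified as flat ground, a reading above 450 cm (as observed near descending stairs) as a drop-off, and a reading below 90 cm as a foot-level obstacle.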

Table 2. Threshold values for obstacle detection and identification

3.4. System circuit and case

Details of the connections between the electronic components of the system are shown in Figure 4. The voltage provided by a 9 VDC NiMH rechargeable battery is converted to 5 VDC by an LM2596 step-down switching regulator (Texas Instruments, USA), so the proposed system is powered at 5 VDC. An on/off switch is integrated into the system to preserve battery life, and the whole circuit was built on a single printed circuit board (PCB).

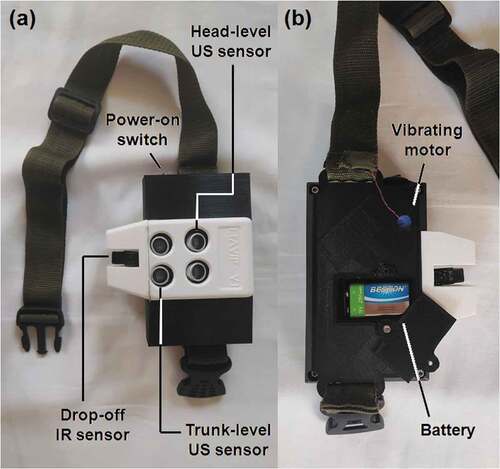

The system case was designed in SolidWorks 2020 with ergonomic considerations in mind (see Figure 5) and then 3D printed using 1.75 mm polylactic acid (PLA) filament. In line with the results of the first survey (see Table 4), the device was attached to a belt so that it could be worn on the hip.

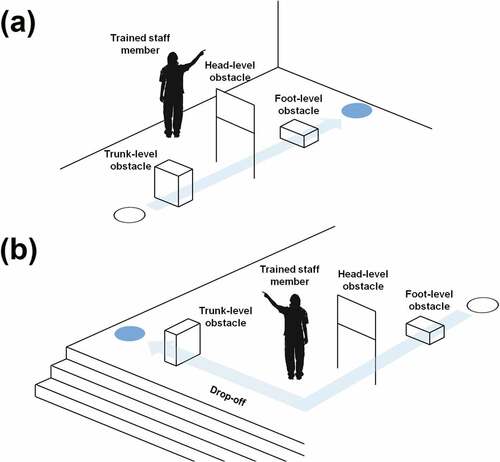

3.5. Performance assessment

We conducted a series of tests to analyze the performance of the proposed system. Written consent was obtained before participation, and all experiments were supervised by trained staff of the Institute for Blind and Deaf Children in Santiago de Cali, Colombia. Twelve VI users volunteered to participate in the tests, and two navigation scenarios were chosen to simulate real-world conditions. In the first test, conducted indoors, VI users were asked to walk in a straight line until reaching a wall. Three obstacles (one at the head level, one at the trunk level, and one at the foot level) were placed alternately between the starting and ending points (see Figure 6a). Once an obstacle was detected, the participant had to report its height based on the voice message delivered by the system. A trained staff member told the VI user where to turn when an obstacle was detected. The second test was similar to the first, except that it was conducted outdoors and participants had to avoid a drop-off before reaching the ending point (see Figure 6b). The VI volunteers were allowed to use the white cane during the performance assessment stage. In addition, the authors created a YouTube channel to provide visual evidence of the experiments conducted in this study (link: https://www.youtube.com/channel/UC7iNtgZstFS2GcK1Oh8wGXg).

Figure 6. Scenarios for the first (a) and the second navigation test (b). The empty and filled circles denote the starting and ending points, respectively. Distances between obstacles were more than 5 meters.

After completing both tests, participants were asked to complete a second survey and provide suggestions for improvement. This survey (see Table 3) contained three questions answered on a 5-point Likert-type scale (totally agree, partially agree, indifferent, partially disagree, and totally disagree). The questions were chosen based on the participants’ responses to the first survey.

Table 3. Survey 2—Performance assessment

4. Results

The final appearance of the proposed system (16.5 × 14.5 × 6 cm) is illustrated in Figure 7, and Table 4 summarizes the distribution of answers to the first survey (see Table 1). Most participants (73%) answered negatively when asked whether they knew of any electronic assistance device for VI people (question 1). For the second question (“Which features do you consider that an electronic assistance device should include?”), the features considered most relevant by VI respondents were comfort, ease of use, and obstacle detection alarms. When asked how they would like to use an electronic assistance device (question 3), most participants preferred to wear it on the waist, like a belt.

Table 4. Results of the first survey: VI users’ opinions (n = 15)

Tables 5 and 6 show the results from tests #1 and #2, respectively. While obstacles at the head, trunk, and foot levels were correctly detected and identified during the first test, the foot-level obstacle was not detected in four out of twelve trials of the second test. In contrast, the proposed system detected all drop-offs in this latter scenario. Results of the performance-assessment survey are provided in Table 7.

Table 5. Detection results for the first test. The “X” denotes successful detection

Table 6. Detection results for the second test. The “X” denotes successful detection

Table 7. Results of the second survey: VI users’ opinions (n = 12)

5. Discussion

One of the novelties of this work is the inclusion of VI persons’ opinions from the very design stage. Moreover, the features identified in this study are in line with those that best represent developers’ and end users’ views on traveling assistive devices (Dakopoulos & Bourbakis, 2009; Tapu et al., 2020). To the best of our knowledge, no previous research has reported the use of VI people’s opinions to develop a belt-like assistive device (see Table 8). Instead, the participation of end-users (when it occurs) has usually been limited to the performance assessment stage. As highlighted by previous research (Manduchi & Coughlan, 2012), it is important to involve VI users in the different phases of assistive device development. This kind of participation may contribute to more robust and reliable solutions, as the advantages and disadvantages of existing technologies can be identified. However, a recent survey (Tapu et al., 2020) pointed out that only a few assistive systems satisfy the main needs of VI people, which may suggest that VI people’s opinions have rarely been considered in the development process. This systematic exclusion may partially explain why, despite many years of research and some remarkable efforts, none of the state-of-the-art devices has been adopted by the VI community so far. Moreover, while reluctance to adopt novel technologies may hamper their progress, a lack of awareness of such advances may also limit their adoption, especially in low- and middle-income countries (Okonji & Ogwezzy, 2019; Uslan, 1992). As shown in Table 4, most of the surveyed subjects did not know of any electronic assistance device for VI people. In this regard, institutes for blind and VI people should help communicate the existence and benefits of this kind of technology. In this way, more active participation of the VI community in the development of assistive systems could be achieved and, consequently, more useful solutions might be developed.

Table 8. Our system compared with similar studies (NR: not reported)

The proposed system successfully detected the foot-level obstacle when tests were conducted indoors (Table 5), but it failed in four out of twelve trials when tests were conducted outdoors (Table 6). A possible explanation is that the performance of the infrared sensor was affected by ambient light conditions (e.g., sunlight). Several authors have pointed out that high sensitivity to ambient light is a major drawback of infrared-based detection systems (Katzschmann et al., 2018; Pyun et al., 2013; Wahab et al., 2011). One way to address this issue is to replace the GP2Y0A02YK0F infrared sensor with one that has adjustable sensitivity, so that data acquired from a luminance sensor could be used to make foot-level obstacle detection more reliable. Nevertheless, this could increase the computational burden, power consumption, and cost. Another option is to replace the infrared sensor with an additional ultrasonic sensor, which could also prevent false drop-off detections caused by reflective surfaces (Pyun et al., 2013). However, detection errors might increase because of the wide combined sensing field of several front-facing ultrasonic sensors. Conversely, infrared sensors have a narrower sensing field, so they may perform well when their orientation is carefully chosen (Nada et al., 2015).

As Table 7 shows, despite the overall positive feedback provided by the VI users, one-third of the surveyed participants only partially agreed that the alarms delivered by the system were intelligible and reliable. When asked for an explanation, VI participants responded that, while the vibration intensity was useful for estimating the proximity of the obstacle, the audio messages interfered with their ability to hear warning sounds from the environment. This major limitation has also been identified by previous research (Dakopoulos & Bourbakis, 2009; Tapu et al., 2020). On the other hand, some of the VI volunteers also underlined the importance of hearing an audio message with information about the obstacle height. White cane users cannot detect head-level obstacles, so various visual assistive devices include multiple sensors to provide the VI user with full coverage in the vertical plane (Bhatlawande et al., 2012; Katzschmann et al., 2018; Pyun et al., 2013). Previous studies report that auditory feedback, combined with vibration alarms, has been used extensively in assistive devices for VI people (Khan et al., 2018). Advantages of auditory alarms include more detailed information about the surroundings and advice on optimal navigation routes (Kammoun et al., 2012). Still, developers need to be cautious about the number and duration of the pre-recorded messages that assistive devices deliver, especially if playing messages interferes with obstacle detection. Speech instructions delivered by our system last from 1.2 to 1.5 seconds. Future work may include combining audio messages with other forms of auditory feedback, such as beeping sequences (Manduchi, 2012), musical sounds (Balakrishnan et al., 2007), and binaural audio (Schwarze et al., 2015). VI users will also need further training on how to interpret these forms of feedback.

During the performance assessment stage, the VI volunteers were allowed to use the white cane. Surprisingly, most participants did not use it. When asked for an explanation, VI users responded that they wanted to be able to travel from one place to another using an inconspicuous, visually unnoticeable device. Discretion is also a desirable feature of traveling assistive aids for VI people, and several researchers have taken it into account in their approaches (Katzschmann et al., 2018; Velázquez et al., 2009). However, unlike some other authors (Katzschmann et al., 2018), we did not intend to replace the white cane but allowed VI people to make their own choice. Hence the importance of developing hands-free traveling assistive devices for VI people.

Compared with similar state-of-the-art assistive systems, the proposed device reflects VI users’ opinions, is easy to use, and has a relatively low cost (see Table 8). In addition, its battery operation time is about sixteen hours. However, this kind of comparison should be interpreted cautiously, because traveling assistive devices for VI people are as diverse as the technology behind them and the functionalities they offer (Velázquez, 2010). For instance, some authors (Islam et al., 2020; Rahman et al., 2020) have compared the accuracy achieved by their own systems with that reported for other approaches. Nonetheless, such comparisons could be biased and inaccurate because the devices were assessed in different scenarios. We therefore highlight the need to provide researchers with a standardized set of tests and scenarios for comparing the performance of different visual assistive systems. Achieving this goal requires recognizing that not all solutions share the same functionalities (Dakopoulos & Bourbakis, 2009; Elmannai & Elleithy, 2017). Like VI people’s participation during the design and development stages, a more standardized way of assessing novel approaches may help identify their advantages and disadvantages, which in turn may help researchers develop more robust and reliable solutions.

A limitation of the proposed system is its inability to provide the VI user with directional information. Although it can detect what is directly in front of the user at three different heights, our prototype does not deliver information about the user’s wider surroundings. The ability to provide directional and navigational messages is one of the main functionalities that an assistive device for VI people should offer (Tapu et al., 2020). This kind of information may help the user reach a specific destination or identify the safest route. Nevertheless, VI users usually walk forward, and they are less interested in lateral obstacles that are farther away (Katzschmann et al., 2018). For a VI user, it is essential to detect what is directly ahead at all heights, especially if an obstacle can cause a fall or injury (e.g., drop-offs, tree branches). Therefore, we decided to tackle one problem at a time and focus on developing a prototype able to detect obstacles at three different heights. Other limitations of this study include the small number of scenarios used during the performance assessment phase and the small sample size. Still, this work may encourage other researchers to include end-users in the development process, starting from the design stage. This, in turn, might contribute to the integration of VI people into society, which has been the ultimate goal in this field.

6. Conclusion

In an attempt to reduce the difficulties faced by VI people when moving from one place to another, a novel electronic assistive system was developed. The experience gained through this work shows that the proximity and height of an obstacle can be estimated by properly combining ultrasonic and infrared sensors. Moreover, the proposed system achieved overall positive feedback from a select group of VI users. Future work aims to enhance the prototype with the ability to provide directional information, possibly by including additional sensors. Ultimately, more collaborative participation between end-users, researchers, and institutes for VI people, as well as a standardized set of tests to compare the performance of different approaches, is needed to provide more useful and reliable solutions.

Declarations and ethics statement

Approval for the study was obtained from the Ethics Committee of the Universidad Santiago de Cali (registry code 034), and participants’ consent was sought before recruitment. Interviews and audiovisual recordings were conducted with participants’ permission and with their right to anonymity preserved.

Acknowledgements

The authors would like to express their gratitude to the people who are part of the Institute for Blind and Deaf Children in Santiago de Cali, Colombia, for their involvement in this study. We also want to thank M.Sc. Danny Aurora Valera Bermúdez for her useful comments on the early and final drafts of the manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Erick Javier Argüello Prada

As biomedical engineers, we are constantly interested in developing practical solutions to real-world problems by integrating multiple academic disciplines. As researchers, we have found countless contributions, many of which are remarkable and provide promising results. On the other hand, we have also observed that only a few may have an actual (and positive) impact on the less favored social sectors, which confirms the existence of a gap between academia and society. This gap seems to be more evident in countries with developing economies. Based on that, this study aims to help close that gap by highlighting the importance of including end-users’ opinions in the development of a system conceived to facilitate their lives. We sincerely hope that this work encourages other researchers to include end-users in development processes, even from the design stage.

References

- Arias-Uribe, J., Llano-Naranjo, Y., Astudillo-Valverde, E., & Suárez-Escudero, J. C. (2018). Caracterización clínica y etiología de baja visión y ceguera en una población adulta con discapacidad visual [Clinical characteristics and etiology of low vision and blindness in an adult population with visual impairment]. Revista Mexicana de Oftalmología (English Edition), 92(4), 174–17. https://doi.org/10.24875/rmo.m18000033

- Audette, R., Balthazaar, J., Dunk, C., & Zelek, J. (2000). A stereo-vision system for the visually impaired. School of Engineering, University of Guelph N1G 2W, Tech. Rep. 2000-41x-1

- Balakrishnan, G., Sainarayanan, G., Nagarajan, R., & Yaacob, S. (2007). Wearable real-time stereo vision for the visually impaired. Engineering Letters, 14(2), EL_14_2_2. https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.148.4860&rep=rep1&type=pdf

- Bhatlawande, S. S., Mukhopadhyay, J., & Mahadevappa, M. (2012). Ultrasonic spectacles and waist-belt for visually impaired and blind person. 2012 National Conference on Communications (NCC), Indian Institute of Technology Kharagpur, India (Feb 3-5) (pp. 1–4). IEEE. https://doi.org/10.1109/NCC.2012.6176765

- Dakopoulos, D., & Bourbakis, N. G. (2009). Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 40(1), 25–35. https://doi.org/10.1109/TSMCC.2009.2021255

- Elmannai, W., & Elleithy, K. (2017). Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors, 17(3), 565. https://doi.org/10.3390/s17030565

- Islam, M. M., Sadi, M. S., & Bräunl, T. (2020). Automated walking guide to enhance the mobility of visually impaired people. IEEE Transactions on Medical Robotics and Bionics, 2(3), 485–496. https://doi.org/10.1109/TMRB.2020.3011501

- Jones, L. A., Lockyer, B., & Piateski, E. (2006). Tactile display and vibrotactile pattern recognition on the torso. Advanced Robotics, 20(12), 1359–1374. https://doi.org/10.1163/156855306778960563

- Kammoun, S., Parseihian, G., Gutierrez, O., Brilhault, A., Serpa, A., Raynal, M., Oriola, B., Macé, M. J. M., Auvray, M., Denis, M., Thorpe, S. J., Truillet, P., Katz, B. F. G., & Jouffrais, C. (2012). Navigation and space perception assistance for the visually impaired: The NAVIG project. IRBM, 33(2), 182–189. https://doi.org/10.1016/j.irbm.2012.01.009

- Katzschmann, R. K., Araki, B., & Rus, D. (2018). Safe local navigation for visually impaired users with a time-of-flight and haptic feedback device. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 26(3), 583–593. https://doi.org/10.1109/TNSRE.2018.2800665

- Khan, I., Khusro, S., & Ullah, I. (2018). Technology-assisted white cane: Evaluation and future directions. PeerJ, 6, e6058. https://doi.org/10.7717/peerj.6058

- Lakde, C. K., & Prasad, P. S. (2015). Review paper on navigation system for visually impaired people. International Journal of Advanced Research in Computer and Communication Engineering, 4(1), 166–168. https://doi.org/10.17148/IJARCCE.2015.4134

- Mancini, A., Frontoni, E., & Zingaretti, P. (2018). Mechatronic system to help visually impaired users during walking and running. IEEE Transactions on Intelligent Transportation Systems, 19(2), 649–660. https://doi.org/10.1109/TITS.2017.2780621

- Manduchi, R. (2012). Mobile vision as assistive technology for the blind: An experimental study. In K. Miesenberger, A. Karshmer, P. Penaz, & W. Zagler (Eds.), Computers helping people with special needs. ICCHP 2012. Lecture notes in computer science (Vol. 7383, pp. 9–16). Springer. https://doi.org/10.1007/978-3-642-31534-3_2

- Manduchi, R., & Coughlan, J. (2012). (Computer) vision without sight. Communications of the ACM, 55(1), 96–104. https://doi.org/10.1145/2063176.2063200

- Meijer, P. B. (1992). An experimental system for auditory image representations. IEEE Transactions on Biomedical Engineering, 39(2), 112–121. https://doi.org/10.1109/10.121642

- Nada, A. A., Fakhr, M. A., & Seddik, A. F. (2015). Assistive infrared sensor based smart stick for blind people. Proceedings of the 2015 science and information conference (SAI) (pp. 1149–1154). America Square Conference Centre: IEEE. https://doi.org/10.1109/SAI.2015.7237289

- Okonji, P. E., & Ogwezzy, D. C. (2019). Awareness and barriers to adoption of assistive technologies among visually impaired people in Nigeria. Assistive Technology, 31(4), 209–219. https://doi.org/10.1080/10400435.2017.1421594

- Pyun, R., Kim, Y., Wespe, P., Gassert, R., & Schneller, S. (2013). Advanced augmented white cane with obstacle height and distance feedback. Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR) (pp. 1–6). University of Washington - Seattle: IEEE. https://doi.org/10.1109/ICORR.2013.6650358

- Rahman, M. M., Islam, M. M., & Ahmmed, S. (2019). “BlindShoe”: An electronic guidance system for the visually impaired people. Journal of Telecommunication, Electronic and Computer Engineering (JTEC), 11(2), 49–54. https://www.kuet.ac.bd/webportal/ppmv2/uploads/15956445004758-14238-1-PB.pdf

- Rahman, M. M., Islam, M. M., Ahmmed, S., & Khan, S. A. (2020). Obstacle and fall detection to guide the visually impaired people with real time monitoring. SN Computer Science, 1(4), 1–10. https://doi.org/10.1007/s42979-020-00231-x

- Sainarayanan, G., Nagarajan, R., & Yaacob, S. (2007). Fuzzy image processing scheme for autonomous navigation of human blind. Applied Soft Computing, 7(1), 257–264. https://doi.org/10.1016/j.asoc.2005.06.005

- Schwarze, T., Lauer, M., Schwaab, M., Romanovas, M., Bohm, S., & Jurgensohn, T. (2015). An intuitive mobility aid for visually impaired people based on stereo vision. Proceedings of the IEEE International Conference on Computer Vision Workshops (pp. 17–25). Santiago: IEEE. https://doi.org/10.1109/ICCVW.2015.61

- Shoval, S., Borenstein, J., & Koren, Y. (1998). The Navbelt—A Computerized Travel Aid for the blind based on mobile robotics technology. IEEE Transactions on Biomedical Engineering, 45(11), 1376–1386. https://doi.org/10.1109/10.725334

- Tapu, R., Mocanu, B., & Zaharia, T. (2020). Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognition Letters, 137, 37–52. https://doi.org/10.1016/j.patrec.2018.10.031

- Tsukada, K., & Yasumura, M. (2004). ActiveBelt: Belt-type wearable tactile display for directional navigation. In N. Davies, E. D. Mynatt, & I. Siio (Eds.), UbiComp 2004: Ubiquitous computing. Lecture notes in computer science (Vol. 3205, pp. 384–399). Springer. https://doi.org/10.1007/978-3-540-30119-6_23

- Uslan, M. M. (1992). Barriers to acquiring assistive technology: Cost and lack of information. Journal of Visual Impairment & Blindness, 86(9), 402–407. https://doi.org/10.1177/0145482X9208600907

- Van Veen, H. A., & Van Erp, J. B. (2003). Providing directional information with tactile torso displays. Proceedings of EuroHaptics, (pp. 471–474). Dublin, Ireland.

- Velázquez, R. (2010). Wearable assistive devices for the blind. In A. Lay-Ekuakille & S. C. Mukhopadhyay (Eds.), Wearable and autonomous biomedical devices and systems for smart environment. Lecture notes in electrical engineering (Vol. 75, pp. 331–349). Springer. https://doi.org/10.1007/978-3-642-15687-8_17

- Velázquez, R., Bazán, O., & Magaña, M. (2009). A shoe-integrated tactile display for directional navigation. 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 1235–1240). St Louis, USA: IEEE. https://doi.org/10.1109/IROS.2009.5354802

- Wahab, M. H., Kadir, H. A., Johari, A., Noraziah, A., Sidek, R. M., & Mutalib, A. A. (2011). Smart cane: Assistive cane for visually-impaired people. International Journal of Computer Science Issues, 8(4), 21–27. https://arxiv.org/ftp/arxiv/papers/1110/1110.5156.pdf

- World Health Organization (WHO). (2021, February 26). Blindness and vision impairment. Retrieved May 3, 2021, from https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment

- Zeng, L. (2015). A survey: Outdoor mobility experiences by the visually impaired. In A. Weisbecker, M. Burmester, & A. Schmidt (Eds.), Mensch und Computer 2015 Workshopband, Stuttgart: Oldenbourg Wissenschaftsverlag (pp. 391–398). De Gruyter. https://doi.org/10.1515/9783110443905-056