Abstract

This study investigates the effectiveness of video-based feedback in enhancing learning outcomes for undergraduate students of architectural engineering. It addresses a gap in the existing literature by exploring the impact of video feedback on students with different learning styles. The study involved 26 participants from Al al-Bayt University who were enrolled in a four-week summer workshop. Participants’ learning styles were identified using Kolb’s Learning Style Inventory, which distinguishes diverging, assimilating, converging, and accommodating styles. The study employed a quasi-experimental design, with participants assigned to two groups: a video feedback group (experimental group) and an in-person feedback group (control group). The video feedback group received video-based feedback on their assignments during phase 2, while the in-person feedback group received traditional face-to-face feedback. Performance scores were analyzed, and perceptions of feedback quality were measured using a formative feedback perception scale. The results revealed that video-based feedback significantly influenced feedback development and understandability throughout the design process. Statistical analysis demonstrated significant differences in performance scores between the video feedback group and the in-person feedback group. The findings have practical implications for educators and instructional designers in architectural design education. Incorporating video-based feedback into teaching practices can enhance learning outcomes and improve the overall quality of feedback provided to students. The study contributes to the existing body of knowledge by shedding light on the effectiveness of video-based feedback in the context of architectural engineering education.

1. Introduction

Architecture, as a profession, has undergone substantial transformations in recent decades, driven by various factors (Obeidat & Obeidat, 2023). These changes have had a profound impact on architectural education, where the design studio plays a pivotal role in facilitating learning experiences (Ilgaz, 2009; Orbey & Sarioglu Erdogdu, 2020). Serving as a dynamic learning environment, the design studio employs specialized teaching techniques and educational approaches to cultivate students’ problem-identification and design problem-solving skills. Furthermore, the design studio acts as the foundation of the architectural curriculum, integrating knowledge and skills acquired from other courses within the program (Demirbas & Demirkan, 2003). Within the realm of architecture education, the design studio serves as a laboratory for students to develop their professional knowledge and expertise. It provides a space for students to apply theoretical concepts learned in other courses to real-world design challenges (Ilgaz, 2009; Lueth, 2008; Orbey & Erdodu, 2021). As the central component of the design curriculum, the studio fosters critical thinking, creativity, and problem-solving abilities among students (Demirkan & Osman Demirbas, 2008).

Traditionally, the design studio environment has relied on face-to-face interactions between instructors and students, facilitating dynamic communication and active teaching and learning (Mahmoodi, 2001). However, the COVID-19 pandemic necessitated a shift to online teaching methods, challenging the traditional model of direct interaction. The emergence of synchronous and asynchronous online teaching platforms, such as Zoom and Microsoft Teams, provided viable mediums for virtual engagement between instructors and students, allowing the exchange of design ideas and deliverables such as sketches, sections, simulations, plans, 3D digital models, mock-ups, and animations (Sevgul & Yavuzcan, 2022).

In light of this transformation, the use of video-based feedback has emerged as a potential alternative for instructors to provide informative feedback to design students (Sevgul & Yavuzcan, 2022). Video-based feedback allows instructors to record their feedback, which students can then access at their own pace. This delivery method can facilitate effective communication between instructors and students and enables students to pay closer attention to their instructors’ feedback.

Therefore, this study aims to investigate the impact of using video-based feedback as an alternative means of communication between instructors and students in design education, specifically in the context of architectural engineering. The research involved 26 first-year students from the Department of Architectural Engineering, who were divided into control and experimental groups. To determine the participants’ learning preferences, the Learning Style Inventory (LSI) was administered. The students were given a staircase design task to be completed in two phases, with one group receiving face-to-face feedback and the other receiving video feedback. Student performance was evaluated based on their architectural representations, and their perception of feedback quality was assessed using the Formative Feedback Perception Scale (FFPS).

By examining the effectiveness of video-based feedback in comparison to face-to-face feedback, this study aims to provide insights into the potential of video-based feedback as an alternative method for enhancing communication and improving learning outcomes in design education. However, we acknowledge that instructor characteristics may affect the effectiveness of face-to-face feedback. In other words, students’ perceptions of and responses to feedback can be influenced by factors such as the instructor’s expertise, communication style, approachability, and reputation.

The findings of this study will contribute to the ongoing discourse on adapting design education to online teaching methods and inform the development of effective feedback practices for the benefit of both instructors and students. For instructors, the study’s results will offer evidence-based guidance on incorporating video-based feedback into their teaching practices. Understanding the benefits and limitations of this alternative feedback method can enable instructors to adapt their pedagogical approaches to the demands of online teaching environments. For students, the findings can enhance their learning experiences in design education. Video-based feedback offers the advantage of anytime access, enabling students to review feedback multiple times, reinforce their understanding of concepts, and apply the feedback to their design projects more effectively. Moreover, the study’s insights can help students develop self-directed learning skills as they learn to interpret and implement feedback in their design iterations. Ultimately, the practical implications of this study extend beyond the scope of architectural engineering. The findings can serve as a foundation for similar investigations in other disciplines, paving the way for innovative feedback strategies that enhance learning outcomes in various design-oriented fields.

2. Architectural design process in the context of Kolb’s Experiential Learning Theory

The design process in architecture is often visualized as a two-dimensional framework, as outlined by the Royal Institute of British Architects (RIBA) (Inyim et al., 2015). The first dimension (1-D) encompasses the decision-making process, which involves understanding the project requirements, defining the design problem, and establishing design goals and constraints. Architects need to make informed decisions about the project’s scope, purpose, and desired outcomes within this dimension. Moving into the second dimension (2-D), the design process unfolds through a series of sequential stages that allow architects to systematically address the design problem and develop effective solutions (Abo-Wardah & Khalil, 2016). These stages involve synthesis, analysis, evaluation, and decision-making, which are integral to the iterative nature of architectural design.

During the synthesis stage, architects engage in creative thinking and idea generation. They explore various design concepts, sketch preliminary drawings, and consider different approaches to solving the design problem (Oxman, 2004). This stage encourages divergent thinking, where multiple possibilities are explored, and a range of design alternatives are generated. Next, the analysis stage involves a deeper examination of the generated design alternatives. Architects evaluate the feasibility and viability of each option, considering factors such as functionality, aesthetics, sustainability, structural integrity, and compliance with relevant regulations (Lawson, 2006). This stage requires convergent thinking, as architects assess the merits and drawbacks of different design solutions, narrowing down the options based on practical considerations and project requirements. Once a refined set of design alternatives is identified, architects move on to the evaluation stage. Here, the selected designs are critically assessed against predetermined criteria, such as user needs, cultural context, environmental impact, and project objectives (Dutton & Willenbrock, 1989). Architects engage in a comprehensive review of the design alternatives, employing their expertise and knowledge to determine their strengths and weaknesses. Finally, the decision-making stage involves making informed choices among the evaluated design alternatives. Architects carefully weigh the advantages and disadvantages of each option, taking into account the project’s specific requirements, client preferences, and stakeholder input. This stage requires a thoughtful analysis of the trade-offs and potential impacts associated with each design alternative.

It is important to recognize that students may approach these design stages in slightly different ways, influenced by their preferred learning styles and personal characteristics (Fox & Bartholomae, 1999; Newton & Wang, 2022). Kolb’s Experiential Learning Theory (ELT) provides insights into different learning styles, which can influence how students engage with the design process. Convergent learners prioritize technical issues over interpersonal or social aspects, while divergent learners are more inclined toward brainstorming and generating ideas. Assimilating learners prioritize logical coherence and theory over real-world applications, while accommodating learners rely on the knowledge and input of others rather than solely on their own technical analyses. Moreover, Kolb’s Experiential Learning Theory presents learning as a four-stage cyclical process: concrete experience, reflective observation, abstract conceptualization, and active experimentation (Kolb, 2015). This cyclical nature of learning emphasizes the importance of self-reflection as a valuable learning strategy (Demirkan & Osman Demirbas, 2008; Oh et al., 2013).

While experiential learning offers numerous benefits, it is important to consider the logistical and economic implications for architectural institutions (Djabarouti & O’Flaherty, 2019; Newton & Wang, 2022). Incorporating experiential learning methods may require additional resources and planning, making it necessary to explore alternative approaches that can provide similar benefits without significant logistical constraints. By considering the design process, learning styles, and the role of experiential learning, this study aims to contribute to the understanding of how video-based feedback can enhance communication and learning outcomes in design education, particularly in the context of architectural engineering. The exploration of these factors will shed light on effective teaching practices and support the ongoing development of innovative approaches in design education.

2.1. Rationale for choosing Kolb’s Learning Style Inventory (LSI) in this study

In selecting Kolb’s Learning Style Inventory (LSI) as the preferred tool for this study, we acknowledge the existence of various learning style models in the literature, including the Felder-Silverman model. While we recognize the merits of alternative models, we believe that Kolb’s LSI offers several advantages that make it the most appropriate choice for our research context.

Alignment with previous research: Kolb’s LSI has been widely utilized in educational research, including studies related to architecture education. By adopting a well-established model, we can build upon the existing body of knowledge and facilitate comparisons with previous findings. This enhances the scientific rigor and validity of our study.

Comprehensive framework: Kolb’s model provides a comprehensive framework for understanding learning preferences and styles. It encompasses a range of dimensions, such as concrete experience, abstract conceptualization, reflective observation, and active experimentation. This holistic approach allows us to capture the diverse learning styles within our participant group, enabling a more nuanced analysis of the relationship between learning styles and feedback perception.

Practicality and ease of administration: Kolb’s LSI is a widely recognized and easily administered tool, making it practical for our research context. Its simplicity and accessibility enable efficient data collection, particularly considering the limited resources and time constraints we faced during the study.

Applicability across disciplines: While the Felder-Silverman model may be well-suited for technical programs, such as architecture, we chose Kolb’s LSI as it provides a versatile framework that can be applied across various disciplines. By employing a model with broad applicability, we aim to contribute insights that have the potential for wider implications in the field of education.

By carefully considering these factors, we believe that Kolb’s LSI is the most suitable choice for our study. However, we acknowledge the presence of other learning style models and their potential value in future research endeavors. The selection of Kolb’s LSI was based on the specific objectives of this study, the available resources, and the desire for consistency with existing literature in the field of architectural education.

2.2. The quality of the feedback

According to Ashton (1998), vocal exchanges between instructors and students are critical to learning. Demirbas and Demirkan (2003) suggest that mutual reflection activities involving students and teachers should create a criticism-filled environment that encourages learning. Nicol and Macfarlane-Dick (2006) note that feedback is essential for students to learn about their performance. In this regard, Vasilyeva et al. (2008) argue that feedback that identifies students’ strengths and weaknesses is essential to maximize the effectiveness of the teaching process. Within the scope of this study, feedback is categorized as either directive or evaluative (Winstone et al., 2017). This categorization aims to capture two fundamental aspects of feedback in the context of our study. Directive feedback provides specific guidance, suggestions, or instructions on how to improve performance, while evaluative feedback offers an assessment or judgment of the quality or adequacy of the work. These two categories align with the primary functions of feedback and encompass a broad range of feedback practices commonly observed in educational settings. Other classifications, such as descriptive, prescriptive, motivational, or feedforward feedback, have been proposed in the literature, each emphasizing different dimensions or purposes of feedback. However, considering the scope and focus of our study, we believe that the distinction between directive and evaluative feedback provides a suitable framework to examine the impact of video-based feedback on students’ architectural design outcomes and their perception of feedback quality.

Beyond this, feedback must meet certain requirements to be successful: it must be well received and valued by both students and teachers. Additionally, feedback consistency and quality should be considered, and students must be able to understand and implement the feedback easily (Henderson et al., 2019). Technical aspects of feedback, including its timeliness, medium, and content, can increase students’ engagement and satisfaction (O’Donovan et al., 2021). It has been widely agreed that students highly value the constructive criticism and feedback they receive from their instructors (Eom & Ashill, 2016). In the context of architectural education, the instructor must have the proper skills and techniques to strengthen their role in the learning process by providing clear, informative feedback that accounts for students’ different learning styles (Yazdani et al., 2022).

The evaluation of feedback quality in educational settings relies on several factors, including the frequency of feedback use, the degree of shared responsibility between the student and instructor, and the student’s readiness to accept criticism, as highlighted by Winstone et al. (2017). O’Donovan et al. (2021) suggest that feedback quality can be enhanced from the recipients’ perspective through its function in assessment and design and the learners’ interactions with tutors and peers. To this end, verbal and video feedback can be utilized in various sports and art courses to diversify skill sets, according to Deshmukh et al. (2022). Feedback is an integral part of the architecture learning process, provided through various means such as the curriculum, written assignments, and verbal discussions. Nonetheless, as Smith (2021) suggests, visual feedback is of paramount importance. The development of the studio culture is influenced by several factors, including the materials used in a project’s development, the organization of the course, the tools utilized, and the communication and critique methods employed among students, instructors, and peers. According to Smith (2021), both undergraduate and postgraduate architectural students typically desire more visual feedback.

Researchers have highlighted the importance of video streaming in online courses by documenting instructional activities that facilitated more effective teaching (Ketchum et al., 2020; Rahmane et al., 2022; Renzella & Cain, 2020; Sevgul & Yavuzcan, 2022). Additionally, these studies demonstrated the significant role of alternative feedback modes, including video, audio, podcast, and screencast feedback, and showed that these formats can improve feedback quality (Hassanpour & Sahin, 2021; Killingback et al., 2019). These researchers concluded that using the most recent tools to teach architecture students benefited students greatly during the design process.

Research studies have shown that the use of videos as a feedback technique has a significant impact on how students perceive and understand feedback and on how feedback can develop and support the learning process (e.g., Crook et al., 2012; McCune & Hounsell, 2005). In particular, undergraduates in an Information Security and Ethics course found that video feedback had a greater impact than the traditional feedback method (Chen et al., 2018). These students used the screen recording of the instructor-student discussion session as a form of video feedback, which allowed them to revisit and reflect on the feedback they received. The screen was split into important sections, including students’ work, text feedback, the instructor’s face, and a moving, highlighted cursor, which helped focus students’ attention on specific aspects of the feedback (Xu et al., 2021).

Furthermore, the online critique method has gained popularity because academic architectural institutions began offering many of their courses online during the COVID-19 pandemic. During the early lockdown stages, some professors utilized online tools like Zoom, which let them comment or sketch on a shared screen. Such methods increased students’ sense of engagement or disinhibition, and they commented and actively participated in these drawing sessions (Sun et al., 2021). Distance learning has accelerated the proliferation of several representative techniques, including the merging of drawing and photography techniques, physical models and 3D models, and the use of programs like AutoCAD or Revit to simultaneously perform necessary modifications (Santos et al., 2021).

Yigit and Seferoglu (2021) conducted a study in the field of computer education and instructional technologies (CEIT) to examine the use of video-based feedback techniques. The study involved randomly assigning students to control and experimental groups, with the control group receiving face-to-face feedback in verbal, drawing, and text formats, while the experimental group received video feedback. The study used an experimental research methodology with two different research designs. The first design used the “formative feedback perception scale,” proposed by Sat (2017), to measure the impact of video feedback on students’ perceived feedback quality. The second design was based on the work of Demirbas and Demirkan (2003), and received ethical approval from the University Ethics Committee. Parallel strategies were used to determine the effectiveness and tutor-related opportunities offered by the video-based feedback technique.

Technology has improved communication between teachers and students, particularly during the COVID-19 pandemic, by catering to the different learning styles of students. Video feedback has shown promise in enhancing feedback quality and improving students’ learning experience. However, further research is needed to explore the effectiveness of video feedback in various disciplines and contexts and identify best practices for its implementation in higher education. This study aims to evaluate the effectiveness of using video feedback to teach architectural design students while considering their different learning styles. The importance of this topic has increased with the return to face-to-face classroom sessions, and there is a continuous need to utilize video-based feedback to enhance interactions between teachers and students in a way that accommodates the various learning styles suggested by Kolb.

2.3. Research problem and objective statement

The design studio environment in architecture education plays a crucial role in facilitating learning and developing students’ professional skills. However, the shift to online teaching methods, accelerated by the COVID-19 pandemic, has presented challenges to the traditional model of face-to-face interactions. In this context, the use of video-based feedback has emerged as a potential alternative for instructors to provide informative feedback to design students, enabling effective communication and closer attention to feedback.

Despite the growing interest in video-based feedback, there is a need for empirical research to evaluate its effectiveness and explore its impact on learning outcomes, particularly in the field of architectural engineering. Additionally, the influence of students’ learning styles on the effectiveness of video-based feedback remains understudied. Understanding the potential of video-based feedback and its alignment with students’ learning preferences is crucial for informing pedagogical practices and enhancing communication between instructors and students in the design education context.

Therefore, the research problem of this study revolves around investigating the impact of video-based feedback as an alternative means of communication between instructors and students in architectural design education. The objective of this study is twofold:

To compare the effectiveness of video-based feedback and face-to-face feedback in terms of enhancing communication and improving learning outcomes in architectural design tasks.

To examine the influence of students’ learning preferences, as measured by the Learning Style Inventory (LSI), on the perceived quality and effectiveness of video-based feedback.

By addressing these research objectives, this study aims to provide valuable insights into the potential of video-based feedback as a pedagogical tool in architectural education and contribute to the ongoing discourse on adapting design education to online teaching methods. The findings will inform the development of effective feedback practices that cater to different learning styles, benefiting both instructors and students in the architectural engineering domain.

3. Methods and materials

3.1. Study design

This research employs a mixed-methods approach, combining quantitative and qualitative data collection methods, to provide a comprehensive understanding of the effectiveness of video-based feedback in architectural design education. The study design incorporates a quasi-experimental design, including a comparative analysis between video-based feedback provided to the experimental group and face-to-face feedback provided to the control group. The primary focus of the study is to examine the impact of these two feedback methods on student outcomes and perceptions. By comparing video-based feedback to traditional in-person feedback, the study aims to assess their respective influences on student performance and the quality of feedback. Additionally, the study explores the relationship between students’ learning styles, as identified using Kolb’s Learning Style Inventory, and their perception of feedback quality.

This mixed-methods design allows for a comprehensive exploration of the benefits and limitations of video-based feedback in architectural design education. The combination of quantitative analysis of performance scores and qualitative assessment of feedback perceptions provides a nuanced understanding of the effects of video-based feedback compared to traditional in-person feedback methods. By adopting a mixed-methods approach, the study aims to capture both the quantitative outcomes and the qualitative insights, thereby offering a more holistic evaluation of the effectiveness of video-based feedback in the context of architectural design education.
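The quantitative comparison of the two groups' performance scores described above can be illustrated with a short sketch. This section does not name the statistical test that was used, so the Mann-Whitney U statistic is swapped in here purely as one common rank-based option for two small independent groups. The function name, variable names, and scores are all hypothetical placeholders and are not data from the study.

```python
from itertools import product


def mann_whitney_u(sample_a, sample_b):
    """Return U for sample_a: the count of (a, b) pairs with a > b, ties counting 0.5."""
    u = 0.0
    for a, b in product(sample_a, sample_b):
        if a > b:
            u += 1.0
        elif a == b:
            u += 0.5
    return u


# Made-up placeholder scores, NOT data from the study.
video_scores = [3, 4, 5]
in_person_scores = [1, 2, 3]

u_video = mann_whitney_u(video_scores, in_person_scores)
u_in_person = mann_whitney_u(in_person_scores, video_scores)

# The two U values always sum to len(sample_a) * len(sample_b).
print(u_video, u_in_person)
```

Only the test statistic is computed here; in practice a statistics package would also report the corresponding p-value.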

3.2. Participant selection and homogeneity

The study was conducted at Al al-Bayt University in Jordan during the 2021–2022 academic year. Participants were carefully selected from the pool of first-year students enrolled in the Department of Architectural Engineering. The selection process considered various factors, including academic standing, previous coursework, and experience in architectural design. A total of 26 participants, aged between 19 and 20 years old, were included in the study. The purpose of including participants of similar age was to ensure a relatively homogeneous sample.

To create a balanced representation, the participants were equally divided into a control group and an experimental group, with 13 participants in each group. The gender distribution in both groups consisted of ten females (76.92%) and three males (23.08%). It is important to note that the gender distribution was reflective of the composition of the larger participant pool within the Department of Architectural Engineering.
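The percentages reported above follow directly from the group size of 13. A quick arithmetic check is sketched below; the helper and variable names are chosen here for illustration only.

```python
def share(count, total):
    """Percentage of `count` within `total`, rounded to two decimals."""
    return round(100 * count / total, 2)


total_participants = 26
group_size = total_participants // 2  # two equal groups of 13

females_per_group = 10
males_per_group = 3

female_share = share(females_per_group, group_size)
male_share = share(males_per_group, group_size)

print(group_size, female_share, male_share)  # 13 76.92 23.08
```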

We recognize the importance of equalizing participants’ capabilities to minimize the potential impact of individual differences in learning speed. While our study did not specifically assess participants’ learning speed, we aimed to select participants with similar academic backgrounds and abilities to create a more level playing field. By ensuring a relatively homogeneous sample, we aimed to mitigate the potential influence of participants’ varying capabilities on the outcomes of the study.

3.3. Design task selection

The selection of the staircase activity in this study was based on several key justifications, as outlined below:

Relevance to architectural design education: The staircase design task was chosen due to its direct relevance to architectural design education. Staircases are integral components of architectural structures, requiring students to apply their knowledge of structural principles, spatial organization, aesthetics, and user needs. By selecting a staircase design task, we aimed to assess students’ ability to integrate and apply these core competencies in a practical design scenario.

Complexity and multidimensional nature: Staircase design presents a complex and multidimensional problem for students to solve. It involves considerations such as functionality, safety, aesthetics, and adherence to design guidelines. By engaging in staircase design, students are challenged to analyze and address these multiple dimensions, showcasing their ability to think critically and make informed design decisions.

Feasibility and resource availability: The choice of the staircase activity was also influenced by its feasibility within the context of a typical design studio setting. The necessary resources, including architectural representations and evaluation criteria, are readily available and commonly used in architectural education. This ensures that the activity can be implemented effectively and consistently across the control and experimental groups.

Potential for objective evaluation: The staircase design task offers the potential for objective evaluation based on predefined criteria. This allows for a comparative analysis of performance outcomes between the control and experimental groups. By objectively assessing the quality of the staircase designs, we can investigate the effectiveness of different feedback approaches in improving design outcomes.

In light of these justifications, the staircase activity was deemed suitable for our research objectives, aligning with the educational context of architectural design and providing an opportunity to assess students’ design skills and the impact of different feedback methods.

3.4. Ethical considerations

This research project adheres to ethical guidelines and ensures the protection and well-being of all participants involved. The study follows the ethical principles outlined by Al al-Bayt University’s Research Ethics Committee, ensuring the confidentiality, informed consent, and voluntary participation of the participants.

Confidentiality: All participants’ personal information and data collected throughout the study are treated with strict confidentiality. Each participant is assigned a unique identifier to maintain anonymity during data analysis and reporting. Only the research team has access to the raw data, and any published results or findings will use aggregated data without disclosing individual identities.

Informed consent: Prior to their participation, all students were provided with a detailed explanation of the study’s purpose, procedures, and potential risks and benefits. They were also informed of their right to withdraw from the study at any time without facing any negative consequences. Informed consent was obtained from each participant, either through written consent forms or online consent processes, ensuring their voluntary participation and understanding of the study.

Voluntary participation: Participation in the study was entirely voluntary, and participants were under no obligation to take part. Students were clearly informed that declining to participate or withdrawing from the study would not affect their academic standing or relationship with the university or instructors. Moreover, students were assured that their decision to participate or withdraw would remain confidential and would not result in any negative consequences.

Data storage and protection: All collected data, including questionnaires, feedback recordings, and design sketches, are securely stored on password-protected computers and servers. Access to these data is strictly limited to authorized research team members to maintain data integrity and confidentiality. Any physical documents, such as consent forms, are stored in locked cabinets to prevent unauthorized access.

Research ethics committee approval: This study has received approval from Al al-Bayt University’s Research Ethics Committee, which ensures that the research adheres to ethical standards and guidelines. The committee reviewed the research design, data collection procedures, and the informed consent process to ensure the protection of participants’ rights and well-being.

3.5. Data collection and study procedures

The study utilized several instruments to collect data. First, the Learning Style Inventory (LSI), Version 3.1, designed by Kolb (Citation2015), was administered to determine the learning preferences of the participants. The LSI test provided scores for concrete experience (CE), abstract conceptualization (AC), reflective observation (RO), and active experimentation (AE), which were used to confirm the participants’ learning styles as accommodating, assimilating, diverging, and converging. To assess the effectiveness of video-based feedback, a two-phase design project was conducted. Students participating in the study were asked to design a staircase for a two-story villa project. Two main phases during three consecutive design studios were needed to conduct the study, as described below.

3.5.1. Phase 1: first studio session

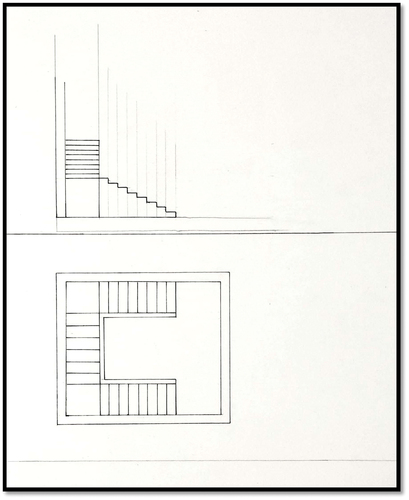

During the first phase of this study, students were introduced to the project description, followed by a 60-minute lecture on the design guidelines, requirements, and technical drawings for a staircase. After a break, students were given the remainder of the studio time to individually work on designing the staircase. To provide students with a better understanding of the design problem, they were provided with various architectural representations of the existing villa, including a 3D isometric drawing and a 2D architectural section drawing. Students were allowed to design a U-type, L-type, or wide U-type staircase within the constraints of the specified requirements. At the end of the studio, students submitted their design representations, including sketches, two architectural plans for the ground and first levels, and one architectural section at a 1/50 drawing scale, as shown in Figure . The resulting designs were evaluated to assess performance in this stage.

3.5.2. Phase 2: second studio session

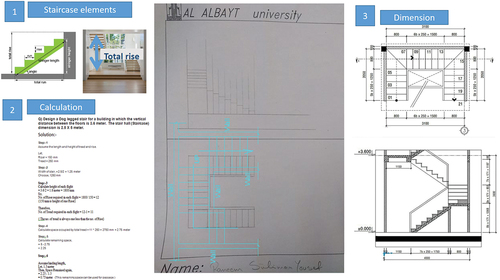

In the first part of this design session, the control group received face-to-face feedback in the form of verbal comments, drawings, and texts, while the experimental group received video feedback. The video feedback was developed and prepared using screen recorder software and had a resolution of 1920 × 1080, lasting for 4–5 minutes, as shown in Figure . During the feedback session, the instructor displayed the students’ assignments on a computer and utilized an annotation tool to provide visual feedback. In addition to the visual feedback, the instructor verbally communicated their observations, suggestions, and explanations to the students. The combination of visual and verbal feedback aimed to enhance the clarity and effectiveness of the feedback process. The instructors also presented a few visual examples of similar designs related to the students’ assignments using Microsoft PowerPoint to record on-screen activities. Each student was given a nickname to ensure anonymity during data collection, and then they completed the Formative Feedback Perception Scale (FFPS), which was analyzed as part of the study’s quantitative component. Following the feedback, students were instructed to create new versions of their designs. These revised designs were then collected and evaluated to assess performance outcomes, as shown in Figure .

3.6. Assessment instrument

The assessment of student performance was based on the architectural representation media of the staircase designs, namely the students’ 3D and 2D architectural drawings from both design sessions. The evaluation focused on the quality of the design outcomes rather than on the process or methods used to reach them. The designs were scored with the instrument developed by Demirbas and Demirkan (Citation2003) for evaluating students’ sketches in architectural design courses. Because the first and second phases had similar requirements, the same rating scale was applied to both. The instrument assesses the sketches against three main criteria: (1) the design concept and its relationship with the design problem; (2) the spatial organization and composition of the design elements; and (3) the graphic quality of the sketches. Each criterion is rated on a five-point Likert scale ranging from 1 (poor) to 5 (excellent). Each criterion is further divided into sub-criteria, and each sub-criterion is rated on the same five-point scale. The final score is calculated by adding up the scores for each criterion and sub-criterion and averaging them.
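As an illustration of the averaging step, the following minimal sketch shows one possible reading of the scoring procedure: sub-criterion ratings are averaged within each criterion, and the criterion scores are then averaged into a final score. The three criteria are taken from the text, but the sub-criterion names and all ratings are hypothetical, and the instrument itself may weight or aggregate the ratings differently.

```python
from statistics import mean

# Hypothetical ratings (1 = poor ... 5 = excellent) for one student's sketch.
# The three criteria follow the text; the sub-criterion names are invented.
ratings = {
    "design_concept": {"fit_to_problem": 4, "originality": 3},
    "spatial_organization": {"circulation": 4, "composition": 5},
    "graphic_quality": {"line_work": 3, "legibility": 4},
}


def criterion_scores(ratings):
    """Average the sub-criterion ratings within each criterion."""
    for subs in ratings.values():
        for value in subs.values():
            if not 1 <= value <= 5:
                raise ValueError("ratings must lie on the 1-5 scale")
    return {name: mean(subs.values()) for name, subs in ratings.items()}


def final_score(ratings):
    """Average the criterion-level scores into one value on the 1-5 scale."""
    return mean(criterion_scores(ratings).values())


per_criterion = criterion_scores(ratings)
overall = final_score(ratings)
print(per_criterion, round(overall, 2))
```

Averaging within each criterion first keeps a criterion with many sub-criteria from dominating the final score; a flat average over all ratings would be an equally plausible reading of the description.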

3.7. Formative feedback perception scale (FFPS)

In addition, the FFPS developed by Sat (Citation2017) was administered to determine the participants’ perception of the quality of feedback received. The FFPS consisted of 25 items divided into three sub-factors: understandability, development, and encouragement. The responses were collected to measure students’ attitudes toward feedback and provide insights into their perceptions of its effectiveness.

After the study’s second phase, students in both the experimental and control groups completed the FFPS. The questionnaire was developed by Sat (Citation2017) to investigate the impact of video feedback on student perceptions of feedback quality. The understandability sub-factor measures the clarity of the feedback provided, the development sub-factor measures the feedback’s ability to help students improve their work, and the encouragement sub-factor measures the degree to which the feedback motivates and engages students. These sub-factors correspond to feedback quality indicators that are commonly found in the literature and were identified by Yigit and Seferoglu (Citation2021): “development” aligns with feedback that provides opportunities for improvement and revision, “understandability” relates to feedback that is clear and comprehensible, and “encouragement” corresponds to feedback that promotes effective engagement with the material being studied.

Research has shown that effective feedback can significantly impact student learning and achievement. In particular, clear, specific, and actionable feedback can help students improve their work and achieve their learning goals (Hattie & Timperley, Citation2007). The FFPS is one tool that can be used to evaluate the quality of feedback provided to students and identify areas for improvement. Overall, the FFPS is a valuable tool for measuring student perceptions of feedback quality, and can help educators and researchers understand how feedback is perceived and used by students.

The reliability coefficient for the 5-point Likert scale was found to be 0.93 for the total scale, with individual sub-factor reliability coefficients of 0.92, 0.88, and 0.83 for understandability, development, and encouragement, respectively.
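The text does not name the reliability coefficient. Assuming it is Cronbach’s alpha, which is commonly reported for Likert-type scales, the following sketch shows how such a coefficient could be computed from item-level responses. The function name and the demonstration data are hypothetical and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item ratings.

    Assumption: the study's reliability coefficient is Cronbach's alpha;
    the source does not state which coefficient was used.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    items = list(zip(*responses))  # transpose rows into per-item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 5-point responses: 5 respondents x 4 items (not study data).
demo = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]
alpha = cronbach_alpha(demo)
print(round(alpha, 3))
```

The same function could be applied to the items of a single sub-factor to obtain a sub-factor coefficient.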

3.8. Data analysis

The collected data will undergo both quantitative and qualitative analyses to provide a comprehensive understanding of the effectiveness of video-based feedback in architectural design education.

Quantitative Analysis: Quantitative analysis will involve statistical techniques to compare the performance outcomes between the control group and the experimental group. The assessment data obtained from the scoring instrument developed by Demirbas and Demirkan (Citation2003) will be analyzed using appropriate statistical measures, such as mean scores, standard deviations, and inferential statistical tests (e.g., t-tests or analysis of variance) to determine any significant differences in the quality of the designs between the two groups. This analysis will help establish the comparative effectiveness of video-based feedback and face-to-face feedback in improving students’ architectural design outcomes.

Furthermore, the data obtained from the Learning Style Inventory (LSI) and the Formative Feedback Perception Scale (FFPS) will be analyzed to explore the relationship between students’ learning styles and their perception of feedback quality. Correlation analyses, such as Pearson’s correlation coefficient, will be conducted to examine the associations between learning styles (e.g., accommodating, assimilating, diverging, and converging) and the dimensions of feedback perception (e.g., understandability, development, and encouragement). These quantitative analyses will provide insights into how students’ learning styles may interact with the mode of feedback delivery and influence their perception of feedback quality.

Qualitative Analysis: Qualitative analysis will involve a thorough examination of the students’ design representations, including their architectural drawings and feedback comments. The qualitative data collected from the students’ designs will be analyzed thematically to identify recurring patterns, common themes, and rich descriptions of their design approaches, problem-solving strategies, and overall creative processes. This analysis will provide a deeper understanding of the design outcomes, students’ design thinking, and the impact of video-based feedback on their design iterations.

The feedback comments received by the students in the experimental group will also be subjected to qualitative analysis. These comments will be reviewed, coded, and categorized to identify emerging themes related to the students’ perceptions of the video-based feedback. The qualitative analysis of the feedback comments will shed light on the specific aspects of video-based feedback that students find beneficial, challenging, or motivating, and provide insights into their experiences and perceptions.

Both quantitative and qualitative analyses will complement each other, providing a comprehensive understanding of the effectiveness of video-based feedback in architectural design education. The integration of these two analytical approaches will enhance the validity and reliability of the findings and help capture the richness and complexity of the students’ experiences and performance outcomes.

4. Results and discussion

4.1. Student learning styles

Kolb’s LSI, a widely used tool for characterizing learning styles, was used to identify the most common learning styles among the first-year design students in the two groups. Based on the LSI results (Figure and Table ), all students were classified according to their learning style preferences. Neither the experimental nor the control group included any diverging students, and the majority in both groups preferred a converging learning style: around 77% in the experimental group and 61% in the control group. More students preferred an accommodating learning style in the experimental group (15.38%) than in the control group (7.69%). Conversely, fewer students preferred the assimilating learning style in the experimental group than in the control group (30.77%). Demirbas and Demirkan (Citation2003) reported similar findings, indicating that many first-year students preferred converging (33%) and assimilating (31.8%) learning styles.

Table 1. Distribution of all students among the four learning styles

4.2. Learning style inventory scores of students

The Learning Style Inventory scores of both groups were computed, and Table displays the mean, standard deviation, and range values for the six components of the Learning Style Inventory (LSI) scores: Concrete Experience (CE), Reflective Observation (RO), Abstract Conceptualization (AC), Active Experimentation (AE), AC-CE (Abstract Conceptualization-Concrete Experience), and AE-RO (Active Experimentation-Reflective Observation). These components capture different aspects of learners’ preferences and tendencies in approaching learning tasks. The experimental and control groups showed approximately similar values.
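The two combination scores and the resulting learning style can be sketched as follows. The combination scores are the differences AC − CE and AE − RO, and each style corresponds to one quadrant of the two dimensions. The class and function names are hypothetical, and the default cut-off values are placeholder assumptions that should be replaced with the normative cut points from the inventory’s technical documentation.

```python
from dataclasses import dataclass


@dataclass
class LsiScores:
    """Raw scale scores for one respondent (hypothetical container)."""
    ce: int  # Concrete Experience
    ro: int  # Reflective Observation
    ac: int  # Abstract Conceptualization
    ae: int  # Active Experimentation

    @property
    def ac_ce(self) -> int:
        """Perceiving dimension: abstract minus concrete."""
        return self.ac - self.ce

    @property
    def ae_ro(self) -> int:
        """Processing dimension: active minus reflective."""
        return self.ae - self.ro


def classify(scores: LsiScores, ac_ce_cut: int = 7, ae_ro_cut: int = 6) -> str:
    """Map the two combination scores onto one of the four styles.

    The cut-off defaults are assumptions for illustration only.
    """
    abstract = scores.ac_ce > ac_ce_cut
    active = scores.ae_ro > ae_ro_cut
    if abstract and active:
        return "converging"      # AC + AE
    if abstract:
        return "assimilating"    # AC + RO
    if active:
        return "accommodating"   # CE + AE
    return "diverging"           # CE + RO


student = LsiScores(ce=20, ro=25, ac=35, ae=40)  # made-up scores
print(student.ac_ce, student.ae_ro, classify(student))
```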

Table 2. Average raw scale and range values of the two groups

4.3. Pearson correlation of learning style dimensions

The learning cycle included two bipolar dimensions—the perceiving dimension, as assessed by the combination item of AC-CE, and the processing dimension, as assessed by the combination item of AE-RO—so they should be unrelated. As shown in Table , these items displayed a low level of correlation in the control group (r = 0.08), while they were negatively related in the experimental group (r = −0.13).

Table 3. Pearson correlation of learning style dimensions

It was predicted that the CE and AC items would not be correlated with the AE-RO item and that the AE and RO items would not be correlated with the AC-CE item (Smith & Kolb, Citation1996). This prediction rests on the assumption that individuals’ preferences for concrete experience and abstract conceptualization are independent of their preferences for active experimentation and reflective observation, and vice versa. Examining these correlations makes it possible to explore the relationships between different learning styles and how they might relate to the effectiveness of video-based feedback in architectural design education.

Table shows that in the experimental group, the CE was associated (r = 0.09) with the AE-RO item, while this correlation was weaker among the control students (r = 0.03). Additionally, AE was negatively correlated (r = −0.02) with AC-CE in the experimental group and positively correlated (r = 0.03) with AC-CE in the control group. Similarly, RO was negatively correlated (r = −0.11) with AC-CE in the control group but positively correlated (r = 0.24) with AC-CE in the experimental group.

In both groups, the dialectic poles of the two combination items (AC and CE, and AE and RO) should be negatively associated. No significant association was found between RO and AC in the experimental group; however, the other combinations in the other group showed a negative correlation. Additionally, the cross-dimensional items (such as CE/RO, AC/AE, CE/AE, and AC/RO) did not show as strong a correlation with each other as the within-dimension items in the two groups.
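For readers who wish to reproduce this kind of analysis, the following minimal sketch computes a Pearson correlation coefficient between two score lists, such as the AC-CE and AE-RO combination scores of one group. The function name and the data are hypothetical; the values are made up and do not come from the study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / sqrt(sxx * syy)


# Hypothetical combination scores for six students (not study data).
ac_ce = [12, 5, 9, 15, 3, 8]
ae_ro = [4, 10, 7, 2, 9, 11]
r = pearson_r(ac_ce, ae_ro)
print(round(r, 2))
```

A coefficient close to zero would be consistent with the expectation that the perceiving and processing dimensions are unrelated.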

4.4. Relationship between feedback methods and performance scores

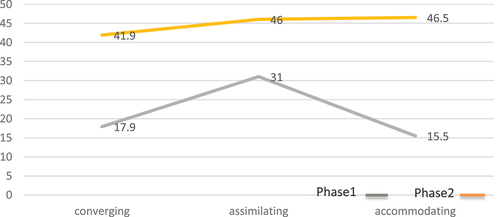

During phase 1 of the study, the product average was relatively low for both the experimental and control groups, as depicted in Figures . Of the 13 students in the experimental group, only one submitted an incomplete product, resulting in an incompletion rate of 7.69%. None of the products were deemed outstanding, while eight were considered average (61.54%), and four were below average (30.77%). Similarly, three of the products submitted by the control group students were incomplete, representing a higher rate of 23.08%. None of the products was classified as outstanding, while seven were average (53.85%) and three were below average (23.08%). These findings were not unexpected, given that the phase 1 output was a sketching exercise assigned after a lecture on the subject of the design problem.

A paired samples t-test was used to determine whether the feedback technique used in each group affected the performance scores (Tables ) in phase 2. The t-test results revealed statistically significant differences between the average performance scores of the two phases for each feedback method. However, the experimental group’s mean difference (24.39) was greater than the mean difference in the control group (16.92), indicating a larger improvement with the video feedback technique. The phase 2 performance scores therefore suggest that video feedback was the more effective of the two techniques.
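The paired comparison can be sketched as follows. The function computes the mean phase-to-phase difference, the t statistic, and the degrees of freedom for one group. The function name and the scores are hypothetical and are not the study’s data. Obtaining a p-value additionally requires the t distribution, for example from a statistical table or a routine such as `scipy.stats.ttest_rel`.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Return (mean difference, t statistic, degrees of freedom)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two paired lists with at least 2 observations")
    diffs = [a - b for b, a in zip(before, after)]
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / sqrt(len(diffs))  # standard error of the mean difference
    if std_err == 0:
        raise ValueError("t is undefined when all differences are identical")
    return mean_diff, mean_diff / std_err, len(diffs) - 1


# Hypothetical phase 1 and phase 2 scores for five students (not study data).
phase1 = [50, 55, 60, 45, 65]
phase2 = [70, 80, 85, 60, 95]
mean_diff, t_stat, df = paired_t(phase1, phase2)
print(mean_diff, round(t_stat, 2), df)
```

Running the function once per group yields the two mean differences that are compared in the text.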

Table 4. T-test: paired two samples for means for the experimental group

Table 5. T-test: paired two samples for means for the control group

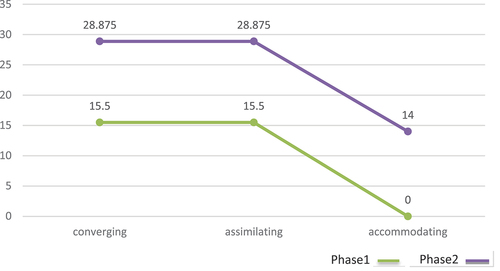

4.5. Assessment of video feedback factor among the experimental group

This section examined three feedback-related aspects: development, understandability, and encouragement, and the results of every assignment were reported individually. The phase 2 results demonstrate the importance of development (U = 159; p < 0.05) and understandability (U = 177; p < 0.05) as feedback elements. However, the difference in encouragement (U = 146; p > 0.05) was statistically insignificant (Table ).

Table 6. Distribution of Mann Whitney U test results on feedback factors for both groups after stage 3

These results could provide an opportunity to assess the impact of video feedback aspects from a broader standpoint and provide results specific to each stage. The results demonstrate that video feedback statistically impacted factors like development and understandability (p < 0.05).
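The group comparison on each feedback factor can be illustrated with a minimal Mann-Whitney U sketch. It ranks the pooled scores, averages the ranks of tied values, and derives the U statistic of each group from its rank sum. The function names and the data are hypothetical. Significance testing is omitted and would normally rely on a table or a routine such as `scipy.stats.mannwhitneyu`.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U for group_a, U for group_b) from the pooled rank sums."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups must contain at least one score")
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    return u_a, n_a * n_b - u_a


# Hypothetical sub-factor scores for two small groups (not study data).
video = [4.2, 4.5, 3.9, 4.8, 4.1]
in_person = [3.5, 3.9, 3.2, 4.0, 3.6]
u_video, u_in_person = mann_whitney_u(video, in_person)
print(u_video, u_in_person)
```

A rank-based test of this kind suits ordinal Likert-type scores from small groups because it does not assume normally distributed data.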

Understandability is one of the markers of feedback quality (Shute, Citation2007). Consequently, this aspect of the scale was applied in the current study. According to the quantitative findings of this study, video-based feedback ensures that the feedback is understood far more effectively than face-to-face feedback. Additionally, this outcome is supported by the qualitative findings of the study. A presentation by the teacher was also included in the video feedback, along with written comments expressed both verbally and on the screen. According to other studies, feedback given in video format is more understandable and comprehensible than feedback given in text format (West & Turner, Citation2016).

The extent to which feedback enables the learning task to be developed and revised is another indicator of the quality of the feedback (Sluijsmans et al., Citation2002). Thus, this study utilized the “development” element in the scale, demonstrating that feedback received in video format greatly increases task revision and development compared with face-to-face feedback. The video feedback format is more detailed, makes revision easier and more enjoyable, and motivates students to revise. These components aid in the development and revision of the learning tasks. Similar results have been documented in earlier studies (Denton, Citation2014; Grigoryan, Citation2017). The fact that video feedback is easier to interpret than face-to-face feedback could also have contributed to this outcome, because feedback must be understandable to promote development and revision. That is, it becomes challenging to apply feedback to enhance a learning activity if the feedback is not quickly and accurately comprehended. Additionally, one of the benefits of video feedback that has been highlighted in earlier research is that it provides more information than face-to-face feedback (Orlando, Citation2016). Furthermore, video feedback contains more verbal explanations of the written remarks and feedback comments shown on the screen. The instructor’s gestures and expressions are also included in video feedback, making this method more thorough, and the students are better able to understand why revisions are necessary (Denton, Citation2014). Thus, the students in this study may have felt that video feedback improved development and revision owing to its level of detail. When detailed feedback is presented, it increases the learning task’s effectiveness and usefulness. For instance, this study revealed that students valued detailed feedback and employed it more successfully than face-to-face feedback during the revision procedure (Demiraslan Cevik et al., Citation2015).

Video technology contributes numerous benefits to educational feedback practices, in addition to ensuring a higher quality feedback perception than face-to-face feedback. First, professors can provide written and verbal feedback to students using a video format, a principle that also applies to face-to-face feedback procedures. However, after receiving teachers’ comments during the face-to-face feedback sessions, students might forget these explanations. On the other hand, students do not encounter this issue when participating in video feedback exercises because they always have the option of watching the video again and reviewing the material they missed (Crook et al., Citation2012). Second, some students might be hesitant to receive face-to-face feedback comments if they feel anxious or uneasy discussing their work in person (Moore & Filling, Citation2012). Because students can easily watch feedback videos at home and still feel as though they are interacting with their teachers, video feedback may be an effective way to solve any issue of discomfort with in-person feedback.

The findings of this study indicate that video feedback offers several advantages over traditional face-to-face feedback methods, particularly in the development and understandability of the feedback components. These advantages align with the unique characteristics of the field of architecture, which heavily relies on visual representation and spatial understanding. Video feedback allows for a more detailed analysis of architectural designs, providing visual annotations and highlighting specific areas of interest. This visual feedback enhances students’ comprehension of design principles and spatial relationships, which are essential in architectural education.

Furthermore, video feedback provides students with the opportunity for repeated engagement and self-reflection. In architecture, design projects often require extensive exploration and iteration. Video feedback allows students to revisit and review the feedback multiple times, enabling them to gain a deeper understanding of the instructor’s suggestions and make more thoughtful revisions. Additionally, the accessibility of video feedback allows students to engage with the feedback at their own pace, promoting self-directed learning and the development of problem-solving skills.

Incorporating video feedback techniques into teaching methods is recommended to enhance the quality of feedback provided to students in architectural education. The use of video feedback not only supports students during the development and revision stages but also promotes interactions between students and teachers (Killingback et al., Citation2019; Yigit & Seferoglu, Citation2021). This engagement can enhance student-teacher relationships and foster a collaborative learning environment, which is crucial in the context of architecture learning (Killingback et al., Citation2019; Yigit & Seferoglu, Citation2021).

4.6. Practical implications

It is possible that certain instructor characteristics, such as expertise, communication skills, and approachability, contribute to a supportive feedback environment. However, effective feedback delivery should not rely solely on an instructor’s inherent characteristics.

Instead, we emphasize the significance of instructors’ professional development and training in delivering effective feedback. Through continuous training and support, instructors can acquire the necessary skills and strategies to provide valuable feedback to students, regardless of their inherent characteristics. This highlights the importance of investing in instructors’ feedback capabilities to ensure consistent and impactful feedback practices in architectural design education.

Furthermore, while acknowledging the potential influence of instructor characteristics, it is crucial to consider the broader context of feedback processes. Factors such as feedback content, clarity, timeliness, and alignment with learning objectives also significantly contribute to feedback effectiveness. By examining these multifaceted aspects, we can gain a more comprehensive understanding of the complex dynamics between instructors, students, and feedback mechanisms.

4.7. Rationale for video-based feedback and considerations for implementation

The adoption of video-based feedback was driven by the potential benefits it offers in enhancing the clarity and comprehensibility of feedback. By incorporating visual examples, instructors can provide more precise and detailed feedback on specific design elements, facilitating a deeper understanding of the feedback content for students. The inclusion of visual annotations and demonstrations allows for a more visual and explicit representation of feedback, which can aid students in comprehending and applying the feedback to their design work.

However, it is important to acknowledge that video-based feedback introduces certain differences compared to traditional face-to-face critiques. We recognize that face-to-face critiques provide an immediate and interactive exchange between students and instructors, allowing for spontaneous discussions and clarifications. On the other hand, video-based feedback provides a recorded format that can be revisited and reviewed at any time, enabling students to engage with the feedback at their own pace and potentially facilitating reflection and deeper learning.

In light of these differences, it is crucial to highlight the importance of instructors’ professional judgment and pedagogical expertise in adapting and implementing feedback methods. Instructors play a vital role in selecting and employing appropriate feedback approaches that best align with the learning objectives and needs of their students. They possess the knowledge and experience to evaluate the suitability of different feedback methods, considering factors such as the learning context, student preferences, and the nature of the design task.

Therefore, we emphasize the significance of instructors’ professional development and ongoing training to enhance their feedback delivery skills and enable them to make informed decisions regarding the choice and implementation of feedback methods. By providing instructors with the necessary support and resources, they can effectively integrate various feedback approaches, including both video-based and face-to-face critiques, to create a dynamic and inclusive learning environment that promotes student growth and development.

4.8. Limitations

While this research project strives to provide valuable insights into the effectiveness of video-based feedback in architectural design education, it is important to acknowledge certain limitations that may impact the study’s findings and generalizability.

4.8.1. Sample size and selection

The study was conducted at a single institution with a limited sample size of 26 participants, all of whom were first-year students in the Department of Architectural Engineering at Al al-Bayt University in Jordan. Although efforts were made to ensure equal representation between control and experimental groups, as well as gender distribution, the small sample size may limit the generalizability of the findings to other populations or educational contexts. Future research with larger and more diverse samples would help validate and extend the study’s conclusions.

Contextual factors: The study was conducted within the specific context of a design studio setting at Al al-Bayt University. The outcomes and observations may be influenced by the unique characteristics of the institution, curriculum, and instructional practices. Therefore, caution should be exercised when generalizing the results to other educational settings or disciplines. Replicating the study in different contexts would provide a more comprehensive understanding of the impact of video-based feedback on architectural design education.

Subjectivity in evaluation: The assessment of students’ designs relied on subjective evaluations using a scoring instrument developed by Demirbas and Demirkan (Citation2003). While efforts were made to ensure consistency and reliability in the evaluations, subjective judgments may introduce inherent biases and variations in scoring. The interpretation and evaluation of architectural representations can be subjective, and different evaluators might assign different scores. The use of multiple evaluators or additional objective measures could enhance the reliability of the assessment process.

Cross-cultural validation: The study was conducted in an Arabic-speaking country, while both the LSI and the FFPS were initially developed in English. It is essential to acknowledge that a cross-cultural validation of both tools would be beneficial in this case. The language in which the LSI and the FFPS were administered to the participating students was not explicitly stated in the original study. Future research should consider conducting a cross-cultural validation of these instruments to ensure their appropriateness and validity within the specific cultural and linguistic context.

Time constraints: The study was conducted over a specific timeframe, encompassing the 2021–2022 academic year and the summer studio sessions. The limited duration of the study may have restricted the depth and scope of data collection and analysis. A more extended research period could allow for a more comprehensive exploration of the long-term effects and sustainability of video-based feedback in architectural design education.

The classification of feedback: The directive or evaluative classification used in this study may oversimplify the complex nature of feedback. Alternative typologies in the literature encompass additional dimensions and purposes of feedback, which could provide a more comprehensive understanding of feedback practices in architectural design education. Future research should consider exploring these alternative classifications to gain deeper insights into the impact of different feedback types on student learning and performance outcomes.

Variations in instructor characteristics and their potential impact on feedback effectiveness: We acknowledge that students’ responses to feedback can be influenced by factors such as the instructor’s expertise, communication style, approachability, and reputation. While our research focused on comparing video-based feedback and face-to-face feedback, we recognize the need for future studies to explore the role of instructor characteristics in feedback processes. Understanding how different instructor characteristics affect students’ perception of and response to feedback could provide a more comprehensive picture of feedback dynamics in educational settings.

Despite these limitations, this research project provides valuable insights into the use of video-based feedback in architectural design education. The findings, although context-specific, contribute to the existing body of knowledge and lay the groundwork for future research endeavors aimed at addressing the identified limitations and expanding the understanding of this area.

5. Conclusion

In the design experiment, feedback stages were developed to examine the influence of various feedback techniques on student performance. The effectiveness of the video feedback technique on student performance scores was established. As a result, these findings offered a solid foundation for hypothesizing the relationship between the video feedback mode and the interactions between the teacher and students during the design process.

The following conclusions can be drawn from this study:

Video feedback was found to be more effective than in-person feedback in improving student performance scores in architectural design.

Regardless of their preferred learning styles, all students showed improved performance scores after engaging in the design process.

Development and understandability were the feedback factors that displayed the most substantial progress, while encouragement showed the least.

New methods can be developed to determine the interactions between instructors and students during the architectural design course.

The design studio approach in architectural education accommodates all four learning types, emphasizing the importance of tailoring feedback modes to suit diverse learning styles.

Teachers should recognize the variety of students’ learning styles and communicate with them in a manner that meets their individual needs.

Individualized feedback techniques and teaching approaches that align with diverse learning styles are crucial for enhancing learning experiences in architectural design education.

In summary, the results highlight the effectiveness of video feedback, improvements in student performance scores, the importance of individualized feedback, the need for tailored teaching approaches, and the significance of recognizing and accommodating diverse learning styles in architectural design education.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abo-Wardah, E., & Khalil, M. (2016). Design process and strategic thinking in architecture. Proceedings of 2nd International Conference on Architecture, Structure and Civil Engineering, London.

- Ashton, P. (1998). Learning theory through practice: Encouraging appropriate learning. Design Management Journal (Former Series), 9(2), 64–23. https://doi.org/10.1111/j.1948-7169.1998.tb00208.x

- Chen, X., Breslow, L., & DeBoer, J. (2018). Analyzing productive learning behaviors for students using immediate corrective feedback in a blended learning environment. Computers & Education, 117, 59–74. https://doi.org/10.1016/j.compedu.2017.09.013

- Crook, A., Mauchline, A., Maw, S., Lawson, C., Drinkwater, R., Lundqvist, K., Orsmond, P., Gomez, S., & Park, J. (2012). The use of video technology for providing feedback to students: Can it enhance the feedback experience for staff and students? Computers & Education, 58(1), 386–396. https://doi.org/10.1016/j.compedu.2011.08.025

- Demiraslan Cevik, Y., Haslaman, T., & Celik, S. (2015). The effect of peer assessment on problem solving skills of prospective teachers supported by online learning activities. Studies in Educational Evaluation, 44, 23–35. https://doi.org/10.1016/j.stueduc.2014.12.002

- Demirbas, O. O., & Demirkan, H. (2003). Focus on architectural design process through learning styles. Design Studies, 24(5), 437–456. https://doi.org/10.1016/S0142-694X(03)00013-9

- Demirkan, H., & Osman Demirbas, Ö. (2008). Focus on the learning styles of freshman design students. Design Studies, 29(3), 254–266. https://doi.org/10.1016/j.destud.2008.01.002

- Denton, D. W. (2014). Using screen capture feedback to improve academic performance. TechTrends, 58(6), 51–56. https://doi.org/10.1007/s11528-014-0803-0

- Deshmukh, S. S., Miltenberger, R. G., & Quinn, M. (2022). A comparison of verbal feedback and video feedback to improve dance skills. Behavior Analysis: Research and Practice, 22(1), 66–80. https://doi.org/10.1037/bar0000234

- Djabarouti, J., & O’Flaherty, C. (2019). Experiential learning with building craft in the architectural design studio: A pilot study exploring its implications for built heritage in the UK. Thinking Skills and Creativity, 32, 102–113. https://doi.org/10.1016/j.tsc.2019.05.003

- Dutton, T. A., & Willenbrock, L. L. (1989). The design studio: An exploration of its traditions and potential. Journal of Architectural Education, 43(1), 53–55. https://doi.org/10.1080/10464883.1989.10758549

- Eom, S. B., & Ashill, N. (2016). The determinants of students’ perceived learning outcomes and satisfaction in university online education: An update*. Decision Sciences Journal of Innovative Education, 14(2), 185–215. https://doi.org/10.1111/dsji.12097

- Fox, J., & Bartholomae, S. (1999). Student learning style and educational outcomes: Evidence from a family financial management course. Financial Services Review, 8(4), 235–251. https://doi.org/10.1016/S1057-0810(00)00042-1

- Grigoryan, A. S. (2017). Audiovisual commentary as a way to reduce transactional distance and increase teaching presence in online writing instruction: Student perceptions and preferences. Journal of Response to Writing, 3(1). https://scholarsarchive.byu.edu/journalrw/vol3/iss1/5

- Hassanpour, B., & Sahin, N. P. (2021). Technology adoption in architectural design studios for educational activities. Technology, Pedagogy & Education, 30(4), 491–509. https://doi.org/10.1080/1475939X.2021.1897037

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. https://doi.org/10.3102/003465430298487

- Henderson, M., Phillips, M., Ryan, T., Boud, D., Dawson, P., Molloy, E., & Mahoney, P. (2019). Conditions that enable effective feedback. Higher Education Research & Development, 38(7), 1401–1416. https://doi.org/10.1080/07294360.2019.1657807

- Ilgaz, A. (2009). Design juries as a means of assessment and criticism in industrial design education: A study on METU department of industrial design. Middle East Technical University.

- Inyim, P., Rivera, J., & Zhu, Y. (2015). Integration of building information modeling and economic and environmental impact analysis to support sustainable building design. Journal of Management in Engineering, 31(1), A4014002. https://doi.org/10.1061/(ASCE)ME.1943-5479.0000308

- Ketchum, C., LaFave, D. S., Yeats, C., Phompheng, E., & Hardy, J. H. (2020). Video-based feedback on student work: An investigation into the instructor experience, workload, and student evaluations. Online Learning, 24(3), 85–105. https://doi.org/10.24059/olj.v24i3.2194

- Killingback, C., Ahmed, O., & Williams, J. (2019). ‘It was all in your voice’-tertiary student perceptions of alternative feedback modes (audio, video, podcast, and screencast): A qualitative literature review. Nurse Education Today, 72, 32–39. https://doi.org/10.1016/j.nedt.2018.10.012

- Kolb, D. A. (2015). Experiential learning: Experience as the source of learning and development (2nd ed.). Pearson Education Limited. https://books.google.jo/books?id=o6DfBQAAQBAJ

- Lawson, B. (2006). How designers think: The design process demystified. Routledge.

- Lueth, P. L. O. (2008). The architectural design studio as a learning environment: A qualitative exploration of architecture design student learning experiences in design studios from first- through fourth-year [Ph.D. thesis]. Iowa State University (3307106). https://www.proquest.com/dissertations-theses/architectural-design-studio-as-learning/docview/193720077/se-2?accountid=31943

- Mahmoodi, A. S. (2001). The design process in architecture: A pedagogic approach using interactive thinking. University of Leeds.

- McCune, V., & Hounsell, D. (2005). The development of students’ ways of thinking and practising in three final-year biology courses. Higher Education, 49(3), 255–289. https://doi.org/10.1007/s10734-004-6666-0

- Moore, N. S., & Filling, M. L. (2012). iFeedback: Using video technology for improving student writing. Journal of College Literacy & Learning, 38, 3–14.

- Newton, S., & Wang, R. (2022). What the malleability of Kolb’s learning style preferences reveals about categorical differences in learning. Educational Studies, 1–20. https://doi.org/10.1080/03055698.2021.2025044

- Nicol, D. J., & Macfarlane‐Dick, D. (2006). Formative assessment and self‐regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218. https://doi.org/10.1080/03075070600572090

- Obeidat, B., & Obeidat, L. M. (2023). Attitudes of Jordanian architecture students toward scientific research: A single-institution survey-based study. Cogent Engineering, 10(1), 2163571. https://doi.org/10.1080/23311916.2022.2163571

- O’Donovan, B. M., den Outer, B., Price, M., & Lloyd, A. (2021). What makes good feedback good? Studies in Higher Education, 46(2), 318–329. https://doi.org/10.1080/03075079.2019.1630812

- Oh, Y., Ishizaki, S., Gross, M. D., & Yi-Luen Do, E. (2013). A theoretical framework of design critiquing in architecture studios. Design Studies, 34(3), 302–325. https://doi.org/10.1016/j.destud.2012.08.004

- Orbey, B., & Pelin Sarıoğlu Erdoğdu, G. (2021). Design process re-visited in the first year design studio: Between intuition and reasoning. International Journal of Technology & Design Education, 31(4), 771–795. https://doi.org/10.1007/s10798-020-09573-2

- Orbey, B., & Sarioglu Erdogdu, G. P. (2020). Design process re-visited in the first year design studio: Between intuition and reasoning. International Journal of Technology and Design Education, 31(4), 1–25. https://doi.org/10.1007/s10798-020-09573-2

- Orlando, J. (2016). A comparison of text, voice, and screencasting feedback to online students. American Journal of Distance Education, 30(3), 156–166. https://doi.org/10.1080/08923647.2016.1187472

- Oxman, R. (2004). Think-maps: Teaching design thinking in design education. Design Studies, 25(1), 63–91. https://doi.org/10.1016/S0142-694X(03)00033-4

- Rahmane, A., Harkat, N., & Abbaoui, M. (2022). Educational situations (ES) as useful tools for teachers to improve architectural design studio courses (ADSC). International Online Journal of Education and Teaching, 9(1), 551–570.

- Renzella, J., & Cain, A. (2020). Enriching programming student feedback with audio comments. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering: Software Engineering Education and Training. https://doi.org/10.1145/3377814.3381712

- Santos, R. S., Do Vale, C. P., Bogoni, B., & Kirkgaard, P. H. (2021). Didactics and circumstance: External representations in architectural design teaching. AMPS Proceedings Series, 21-23 April, 2021.

- Sat, M. (2017). Development and validation of formative feedback perceptions scale in project courses for undergraduate students. Journal of Education and Future, (12), 117–135.

- Sevgul, O., & Yavuzcan, H. G. (2022). Learning and evaluation in the design studio. Gazi University Journal of Science Part B: Art Humanities Design and Planning, 10(1), 43–53.

- Shute, V. J. (2007). Focus on formative feedback. ETS Research Report Series, 2007(1), i–47. https://doi.org/10.1002/j.2333-8504.2007.tb02053.x

- Sluijsmans, D. M. A., Brand-Gruwel, S., & van Merrienboer, J. J. G. (2002). Peer assessment training in teacher education: Effects on performance and perceptions. Assessment & Evaluation in Higher Education, 27(5), 443–454. https://doi.org/10.1080/0260293022000009311

- Smith, C. (2021). How does the medium affect the message? Architecture students’ perceptions of the relative utility of different feedback methods. Assessment & Evaluation in Higher Education, 46(1), 54–67. https://doi.org/10.1080/02602938.2020.1733489

- Smith, D. M., & Kolb, D. A. (1996). User’s guide for the learning-style inventory: A manual for teachers and trainers. Hay/McBer Resources Training Group.

- Sun, Y., Wang, T.-H., & Wang, L.-F. (2021). Implementation of web-based dynamic assessments as sustainable educational technique for enhancing reading strategies in English class during the COVID-19 pandemic. Sustainability, 13(11), 5842. https://doi.org/10.3390/su13115842

- Vasilyeva, E., Pechenizkiy, M., & De Bra, P. (2008). Adaptive Hypermedia and Adaptive Web-Based Systems. Proceedings of the 5th International Conference, AH 2008, Hannover, Germany, July 29 - August 1, 2008. https://doi.org/10.1007/978-3-540-70987-9_26

- West, J., & Turner, W. (2016). Enhancing the assessment experience: Improving student perceptions, engagement and understanding using online video feedback. Innovations in Education and Teaching International, 53(4), 400–410. https://doi.org/10.1080/14703297.2014.1003954

- Winstone, N. E., Nash, R. A., Rowntree, J., & Parker, M. (2017). ‘It’d be useful, but I wouldn’t use it’: Barriers to university students’ feedback seeking and recipience. Studies in Higher Education, 42(11), 2026–2041. https://doi.org/10.1080/03075079.2015.1130032

- Xu, Q., Chen, S., Wang, J., & Suhadolc, S. (2021). Characteristics and effectiveness of teacher feedback on online business English oral presentations. The Asia-Pacific Education Researcher, 30(6), 631–641. https://doi.org/10.1007/s40299-021-00595-5

- Yazdani, M., Rezvani, A., Vafamehr, M., & Khademzade, M. H. (2022). Evaluation of architectural engineering curriculum to promote vocational education based on Klein model. Creative City Design, 5(1), 45–61. https://doi.org/10.30495/ccd.2022.689602