?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Many optimization problems in engineering and industrial design applications can be formulated as optimization problems with highly nonlinear objectives, subject to multiple complex constraints. Solving such optimization problems requires sophisticated algorithms and optimization techniques. A major trend in recent years is the use of nature-inspired metaheustic algorithms (NIMA). Despite the popularity of nature-inspired metaheuristic algorithms, there are still some challenging issues and open problems to be resolved. Two main issues related to current NIMAs are: there are over 540 algorithms in the literature, and there is no unified framework to understand the search mechanisms of different algorithms. Therefore, this paper attempts to analyse some similarities and differences among different algorithms and then presents a generalized evolutionary metaheuristic (GEM) in an attempt to unify some of the existing algorithms. After a brief discussion of some insights into nature-inspired algorithms and some open problems, we propose a generalized evolutionary metaheuristic algorithm to unify more than 20 different algorithms so as to understand their main steps and search mechanisms. We then test the unified GEM using 15 test benchmarks to validate its performance. Finally, further research topics are briefly discussed.

1. Introduction

Many design problems in engineering and industry can be formulated as optimization problems subject to multiple nonlinear constraints. To solve such optimization problems, sophisticated optimization algorithms and techniques are often used. Traditional algorithms such as Newton-Raphson method are efficient, but they use derivatives and calculations of these derivatives, especially the second derivatives in a high-dimensional space, can be costly. In addition, such derivative-based algorithms are usually local search and the final solutions may depend on the starting point if optimization problems are highly nonlinear and multimodal (Boyd & Vandenberghe, Citation2004; Yang, Citation2020a). An alternative approach is to use derivative-free algorithms and many evolutionary algorithms, especially recent nature-inspired algorithms, do not use derivatives (Kennedy & Eberhart, Citation1995; Pham et al., Citation2005; Storn & Price, Citation1997; Yang, Citation2020b). These nature-inspired metaheuristic algorithms are flexible and easy to implement, and yet they are usually very effective in solving various optimization problems in practice.

Algorithms have been important through history (Beer, Citation2016; Chabert, Citation1999; Schrijver, Citation2005). There are a vast spectrum of algorithms in the literature, ranging from fundamental algorithms to combinatorial optimization techniques (Chabert, Citation1999; Schrijver, Citation2005). In some special classes of optimization problems, effective algorithms exist for linear programming (Karmarkar, Citation1984) and quadratic programming (Zdenek, Citation2009) as well as convex optimization (Bertsekas et al., Citation2003; Boyd & Vandenberghe, Citation2004). However, for nonlinear optimization problems, techniques vary and often approximations, heuristic algorithms and metaheuristic algorithms are needed. Even so, optimal solutions cannot always be obtained for nonlinear optimization.

Metaheuristic algorithms are approximation optimization techniques, and they use some form of heuristics with trial and error and some form of memory and solution selections (Glover, Citation1986; Glover & Laguna, Citation1997). Most metaheuristic algorithms are evolution-based and/or nature-inspired. Evolution-based algorithms such as genetic algorithm (Holland, Citation1975; Goldberg, Citation1989) are often called evolutionary algorithms. Algorithms such as particle swarm optimization (PSO) (Kennedy & Eberhart, Citation1995), bees algorithm (Pham et al., Citation2005; Pham & Castellani, Citation2009) and firefly algorithm (Yang, Citation2009) are often called swarm intelligence based algorithms (Kennedy et al., Citation2001).

However, terminologies in this area are not well defined and different researchers may use different terminologies to refer to the same things. In this paper, we use nature-inspired algorithms to mean all the metaheuristic algorithms that are inspired by some forms of evolutionary characteristics in nature, being biological, behaviour, social, physical and chemical characteristics (Yang, Citation2020a; Yang & He, Citation2019). In this broad sense, almost all algorithms can be called nature-inspired algorithms, including bees algorithms (Pham & Castellani, Citation2014, Citation2015), PSO (Kennedy et al., Citation2001), ant colony optimization, bat algorithm, flower pollination algorithm, cuckoo search algorithm, genetic algorithm, and many others.

Nature-inspired algorithms have become popular in recent years, and it is estimated that there are several hundred algorithms and variants in the current literature (Yang, Citation2020a), and the relevant literature is expanding with more algorithms emerging every year. An exhaustive review of metaheuristic algorithms by Rajwar et al. (Citation2023) indicated that there are over 540 metaheuristic algorithms with over 350 of such algorithms that were developed in the last 10 years. Many such new variants have been developed based on different characteristics/species from nature, social interactions and/or artificial systems, or based on the hybridization of different algorithms or algorithmic components, or based on different strategies of selecting candidate solutions and information sharing characteristics (Mohamed et al., Citation2020; Rajwar et al., Citation2023; Zelinka, Citation2015).

From the application perspective, nature-inspired algorithms have been shown that they can solve a wide range of optimization problems (Abdel-Basset & Shawky, Citation2019; Bekasş et al., Citation2018; Osaba et al., Citation2016; Pham & Castellani, Citation2015), from continuous optimization (Pham & Castellani, Citation2014) and engineering design optimization problems (Bekasş et al., Citation2018) to combinatorial optimization problems (Ouaarab et al., Citation2014; Osaba et al., Citation2016, Citation2017), multi-robots systems (Palmieri et al., Citation2018; Rango et al., Citation2018) and many other applications (Gavvala et al., Citation2019; Mohamed et al., Citation2020; Rajwar et al., Citation2023; Zelinka, Citation2015).

Despite the wide applications of nature-inspired algorithms, theoretical analysis in contrast lacks behind. Though there are some rigorous analyses concerning genetic algorithm (Greenhalgh & Marshal, Citation2000), PSO (Clerc & Kennedy, Citation2002) and the bat algorithm (Chen et al., Citation2018), however, many new algorithms have not been analyzed in detail. Ideally, a systematical analysis and review should be carried out in the similar way to convex analysis (Bertsekas et al., Citation2003) and convex optimization (Boyd & Vandenberghe, Citation2004). In addition, since there are so many different algorithms, it is difficult to figure out what search mechanisms can be effective in determining the performance of a specific algorithm. Furthermore, some of these 540 algorithms can look very similar, either in terms of their search mechanisms or updating equations, though they may look very different on the surface. This often can cause confusion and frustration to readers and researchers to see what happens in this research community. In fact, there are many open problems and unresolved issues concerning nature-inspired metaheuristic algorithms (Rajwar et al., Citation2023; Yang et al., Citation2018; Yang & He, Citation2019; Yang, Citation2020b).

Therefore, the purpose of this paper is two-fold: outlining some of the challenging issues and open problems, and then developing a generalized evolutionary metaheuristic (GEM) to unify many existing algorithms. The rest of the paper is organized as follows. Section 2 first provides some insights into nature-inspired computing and then outlines some of the open problems concerning nature-inspired algorithms. Section 3 presents a unified framework of more than 20 different algorithms so as to look all the relevant algorithms in the same set of mathematical equations. Section 4 discusses 15 benchmark functions and case studies, whereas Section 5 carries out some numerical experiments to test and validate the generalized algorithm. Finally, Section 6 concludes with a brief discussion of future research topics.

2. Insights and open problems

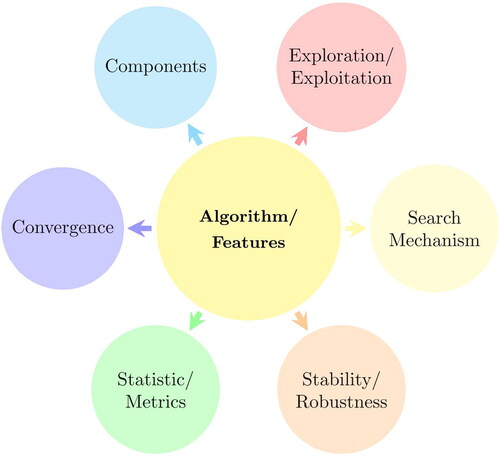

Though not much systematical analysis of nature-inspired algorithms exist, various studies from different perspectives have been carried out and different degrees of insights have been gained (Eiben & Smit, Citation2011; Ser et al., Citation2019; Yang, Citation2020b). For any given nature-inspired algorithm, we can analyze its algorithmic components, and their role in exploration and exploitation, and we also study its search mechanism so as to understand ways that local search and global search moves are carried out. Many studies in the literature have provided numerical convergence curves during iterations when solving different function optimization and sometimes real-world case studies, and such convergence curves are often presented with various statistical quantities according to a specific set of performance metrics such as accuracy of the solution and successful rate as well as number of iterations. In addition, stability and robustness are also studies for some algorithms (Clerc & Kennedy, Citation2002). Such analyses, though very important, are largely qualitative studies of algorithmic features, as summarized in .

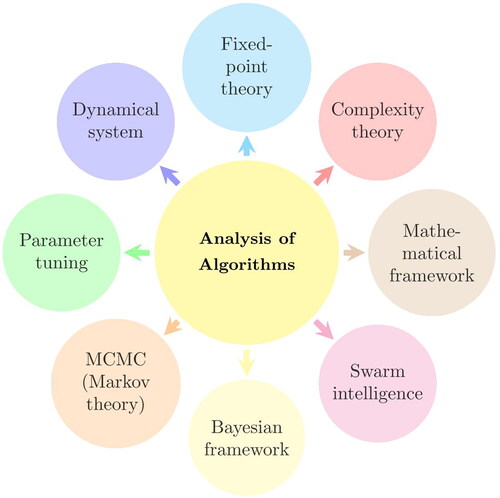

The analysis of algorithms can be carried out more rigorously from a quantitative perspective, as shown in . For a given algorithm, it is possible to analyze the iterative behaviour of the algorithm using fixed-point theory. However, the assumptions required for such theories may not be realistic or relevant to the actual algorithm under consideration. Thus, it is not always possible to carry out such analysis. One good way is to use complexity theory to analyze an algorithm to see its time complexity. Interestingly, most nature-inspired algorithms have the complexity of where n is the typically population size and t is the number of iterations. It is still a mystery that how such low complexity algorithms can solve highly complex nonlinear optimization problems that have been shown in various applications.

From the dynamical system point of view, an algorithm is a system of updating equations, which can be formulated as a discrete dynamical system. The eigenvalues of the main matrix of such a system determine the main characteristics of the algorithm. It can be expected that these eigenvalues can depend on the parameter values of the algorithm, and thus parameter settings can be important. In fact, the analyses on PSO (Clerc & Kennedy, Citation2002) and the bat algorithm (Chen et al., Citation2018) show that parameter values are important. If the parameter values are in the wrong ranges, the algorithm may become unstable and become less effective. This also indicates the important of parameter tuning in nature-inspired algorithms (Eiben & Smit, Citation2011; Joy et al., Citation2023). However, parameter tuning itself is a challenging task because its aim is to find the optimal parameter setting for an optimization algorithm for a given set of optimization problems.

From the probability point of view, an algorithm can be considered as a set of interacting Markov chains, thus it is possible to do some approximation analysis in terms of convergence using Markov chain Monte Carlo (MCMC) theory (Chen et al., Citation2018). However, the conditions required for MCMC can be stringent, and thus not all algorithms can be analyzed in this way. From the perspective of the analysis of variance, it is possible to see how the variances may change with iteration to gain some useful understanding (Zaharie, Citation2009).

An alternative approach is to use Bayesian statistical framework to gain some insights into the algorithm under analysis. Loosely speaking, the initialization of the population in an algorithm with a given probability distribution forms the prior of the algorithm. When the algorithm evolves and the solutions also evolve, leading to a posterior distribution of solutions and parameters. Thus, the evolution of algorithm can be understood from this perspective. However, since Bayesian framework often requires a lot of extensive integral evaluations, it is not straightforward to gain rigorous results in general.

A more ambition approach is to build a mathematical framework so as to analyze algorithms in a unified way, though such a framework does not exist in the current literature. Ideally, a theoretical framework should provide enough insights into the rise of swarm intelligence, which is still an open problem (Yang, Citation2020b; Yang & He, Citation2019).

As we have seen, algorithms can potentially be analyzed from different perspectives and there are many issues that need further research in the general area of swarm intelligence and nature-inspired computation. We can highlight a few important open problems.

Theoretical framework. Though there are some good theoretical analyses of a few algorithms such as genetic algorithm (Greenhalgh & Marshal, Citation2000), PSO (Clerc & Kennedy, Citation2002) and the bat algorithm (Chen et al., Citation2018), there is no unified theoretical framework that can be used to analyze all algorithms or at least a major subset of nature-inspired algorithms. There is a strong need to build a mathematical framework so that the convergence and rate of convergence of any algorithm can be analyzed with rigorous and quantitative results.

In addition, stability of an algorithm and its robustness should also be analyzed using the same mathematical framework, based on theories such as dynamical systems and perturbation as well as probability. The insights gained from such a theoretical framework should provide enough guidance for tuning and setting parameter values for a given algorithm. However, how to construct this theoretical framework is still an open problem. It may be that a multidisciplinary approach is needed to ensure to look at algorithms from different perspectives.

Parameter tuning. The setting of parameters in an algorithm can influence the performance of an algorithm, though the extent of such influence may largely depend on the algorithm itself and potentially on the problem to be solved (Joy et al., Citation2023). There are different methods for parameter tuning, but it is not clear which method(s) should be used for a given algorithm. In addition, different tuning methods may produce different results for parameter settings for different problems, which may leads to the question if a truly optimal parameter setting exists. It seems that there are different optimality conditions concerning parameter setting (Joy et al., Citation2023; Yang et al., Citation2013), and parameter settings may be both algorithm-dependent and problem-dependent, depending on the performance metric used for tuning. Many of these questions remain unresolved.

Benchmarking. All new algorithms should be tested and validated using a diverse of set of benchmarks and case studies. In the current literature, one of the main issues is that most tests use smooth functions as benchmarks, and it seems that these functions have nothing to do with real-world applications. Thus, it is not clear how such tests can actually validate the algorithm to gain any insight into the potential performance of the algorithm to solve much more complicated real-world applications. There is a strong need to systematically investigate the role of benchmarking and what types of benchmarks and case studies should be used.

Performance metrics. It can be expected that the performance of an algorithm depends on the performance metrics used to measure the performance. In the current literature, performance measures are mostly accuracy compared to the true objective values, success rate of multiple functions, number of iterations as computational efforts, computational time, and the combination of these measures. Whether these measures are fair or sufficient is still unexplained. In addition, these performance metrics tend to lead to the ranking of algorithms used and the benchmark functions used. Again, this may not be consistent with the no-free-lunch theorems (Wolpert & Macready, Citation1997). It is not clear if other performance measures should be designed and used, and what theory should be based on to design performance metrics. All these are still open questions.

Search mechanism. In many nature-inspired metaheuristic algorithms, certain forms of randomization and probability distributions are used to generate solutions with exploration abilities. One of the main tasks is to balance exploration and exploitation or diversification and intensification using different search moves or search mechanisms. However, how to balance these two components is still an open problem. In addition, exploration is usually by randomness, random walks and perturbations, whereas exploitation is usually by using derivative information and memory. It is not clear what search moves can be used to achieve both exploration and exploitation effectively.

Scalability. Most studies of metaheuristic algorithms in the literature are concerned with problems with a few parameters or a few dozen parameters. These problems, though very complicated and useful, are small-scale problems. It is not clear if the algorithms used and the implementation realized can directly be applied to large-scale problems in practice. Simple scale up by using high-performance computing or cloud computing facilities may not be enough. How to scale up to solve real-world, large-scale problems is still a challenging issue. In fact, more efficient algorithms are always desirable in this context.

Rise of Swarm Intelligence. Various discussions about swarm intelligence have attracted attention in the current literature. It is not clear what swarm intelligence exactly means and what conditions are necessary to achieve such collective intelligence. There is a strong need to understand swarm intelligence theoretically and practically so as to gain insights into the rise of swarm intelligence. With newly gained insights, we may be able to design better and more effective algorithms.

In addition, from both theoretical perspective and practical point of view, the no-free lunch theorems (Wolpert & Macready, Citation1997) had some significant impact on the understanding of algorithm behaviour. Studies also indicate that free lunches may exist for co-evolution (Wolpert & Macready, Citation2005), continuous problems (Auger & Teytaud, Citation2010) and multi-objective optimization (Corne & Knowles, Citation2003; Zitzler et al., Citation2003) under certain conditions. The main question is how to use such possibilities to build more effective algorithms.

3. A generalized evolutionary metaheuristic (GEM) for optimization

From the numerical algorithm analysis point of view (Boyd & Vandenberghe, Citation2004; Yang, Citation2020a), an algorithm in essence is a procedure to modify the current solution so as to produce a potentially better solution

The well-known Newton’s method at iteration t can be written as

(1)

(1)

where

is the gradient vector of the objective function at

and

is the Hessian matrix. Loosely speaking, all iterative algorithms can schematically be written as

(2)

(2)

where S is a step size vector, which can in general depend on the current solution

the current best solution

and a set of parameters (

). Different algorithms may differ only in the ways of generating such steps.

In addition to the open problems highlighted above, the other purpose of this paper is to provide a unified algorithm framework, based on more than 20 existing nature-inspired algorithms. This unification may enable us to understand the links and difference among different algorithms. It also allows us to build a single generic algorithm that can potentially use the advantages of all its component algorithms, leading to an effective algorithm.

As we have mentioned earlier, there are approximately 540 metaheuristic algorithms and variants in the literature (Rajwar et al., Citation2023), it is impossible to test all algorithms and try to provide a unified approach. This paper is the first attempt to unify multiple algorithms in the same generalized evolutionary perspective. Thus, we have to select over twenty algorithms to see if we can achieve our aim. Obviously, there are two challenging issues: how many algorithms we should use and which algorithms we should choose. For the former question, we think it makes sense that we should choose at least ten different algorithms or more so that we can get a reasonable picture of the unification capabilities. In fact, we have chosen 22 algorithms for this framework. As for the latter question which algorithms to use, it is almost impossible to decide what algorithms to use from 540 algorithms. In the end, the algorithms we have chosen here have different search features or characteristics in terms of their exploration and exploitation capabilities. In addition, in the case of similar exploration and exploitation capabilities, we tend to select the slightly well-established algorithms that appeared earlier in the literature because later/new algorithms may have been based on such mechanisms.

This unified algorithm is called Generalized Evolutionary Metaheuristic (GEM) with multiple parameters and components to unify more than 20 algorithms.

A solution vector x in the D-dimensional space is denoted by

(3)

(3)

For a set of n solution vectors where

these vectors evolve with the iteration t (the pseudo-time) and they are denoted by

Among these n solutions, there is one solution

that gives the best objective value (i.e. highest for maximization or lowest for minimization). For minimization,

(4)

(4)

where the argmin is to find the corresponding solution vector with the best objective value. Here,

is the average of the top m best solutions among n solutions (

). That is

(5)

(5)

In case of m = n, this becomes the centre of the gravity of the whole population. Thus, we can refer to this step as the centrality move.

The main updating equations for our proposed unified algorithm are guided randomization and position update. In the guided randomization search step, it consists of

(6)

(6)

In the position update step, it means

(7)

(7)

where

p, q, and r are parameters, and

is the individual best solution for agent or particle i. Here,

is a function of current solution, and in most case we can set

which can be a constant. In addition, ζi is a vector of random numbers, typically drawn from a standard normal distribution. That is

(8)

(8)

It is worth pointing out the effect of each term can be different, and thus we can name each term, based on their effect and meaning. The inertia term is whereas the

simulates the motion or move towards the current best solution. The term

means to move towards the individual best solution. The centrality term has a weighting coefficient

which tries to balance between the importance of the current solution

and the importance of the centre of gravity of the swarm

The main term

shows similar solutions should converge with subtle or minor changes.

is the kinetic motion of each solution vector. The perturbation term

is controlled by the strength parameter Θ where

can be used to simulate certain specialized moves for each solution agent. If there is no specialization needed,

can be used.

The selection and acceptance criterion for minimization is

(9)

(9)

These four main steps/equations are summarized in the pseudocode, shown in Algorithm 1 where the initialization of the population follows the standard Monte Carlo randomization

(10)

(10)

where Lb and Ub are, respectively, the lower bound and upper bound of the feasible decision variables. In addition, rand(1,D) is a vector of D random numbers drawn from a uniform distribution.

Special cases can correspond to more than 20 different algorithms. It is worth pointing out that in many case there are more than one way to represent the same algorithm by setting different values of parameters. The following representations are one of the possible ways for representing these algorithms:

Differential evolution (DE) (Price et al., Citation2005; Storn & Price, Citation1997): a = 1, b = F, c = 0 and Θ = 0.

Particle swarm optimization (PSO) (Kennedy & Eberhart, Citation1995; Kennedy et al., Citation2001): a = 1, b = 0, p = 1, c = 1, and Θ = 0. In addition,

and

Firefly algorithm (FA) (Yang, Citation2009, Citation2013): a = 1,

c = 0 and

Simulated annealing (SA) (Kirkpatrick et al., Citation1983): a = 1,

and Θ = 1.

Artificial bee algorithm (ABC) (Karaboga & Basturk, Citation2008): a = 1,

c = 0 and Θ = 0.

Artificial cooperative search (ACS) (Civicioglu, Citation2013): a = 1, b = R. c = 0, Θ = 0 and its two predator keys are drawn from its α and β.

Charged system search (CSS) (Kaveh & Talatahari, Citation2010): a = 1,

where R is the normalized distance with the maximum at R = 1. In addition, c = 0 and Θ = 0.

Cuckoo search (CS) (Yang & Deb, Citation2010): a = 1, c = 0, and

where α is a scaling parameter, H is a Heaviside function with a switch probability pa and a uniformly distributed random number ϵ. The step size s is drawn from a Lévy distribution. The other branch with the switch probability pa is a = 1,

Θ = 1, but ζ is drawn from the Lévy distribution

where Γ is the standard Gamma function.

Gravitational search algorithm (GSA) (Rashedi et al., Citation2009): a = 1,

rand

c = 1 and Θ = 0. In addition,

rand

where

Gradient evolution algorithm (GEA) (Kuo & Zulvia, Citation2015): a = 1, c = 0 and Θ = 0, but

In addition, the parameter is set to ra = 0 in GEA.

Harris hawk optimizer (HHO) (Heidari et al., Citation2019): a = 1, b = 0, c = 1, Θ = 0, p = 0, r = 0, and

where E varies with iteration t.

Henry gas solubility optimization (HGSO) (Hashim et al., Citation2019): a = 1, b = r, c = 1 and Θ = 0. In addition, p = 1 and r = 0, but q is related to the solubility affected by the Henry constant and parochial pressure. Here, the Henry’s constant changes with iteration t by

where

K, Cj is a given constant and

Harmony search (HS) (Geem et al., Citation2001): a = 0,

Θ = 1,

for pitch adjustment, but for harmony selection b = 1 and Θ = 0 can be used.

Ant lion optimizer (ALO) (Mirjalili, Citation2015): a = 1, b = 0, c = 1,

where di is the scaled random walk length and ci is its corresponding minimum. In addition, p = 0, q = 0, r = 1 with some variation of

is just the average of two selected elite ant-lion solutions.

Whale optimization algorithm (WOA) (Mirjalili & Lewis, Citation2016): a = 1, c = 0,

where

with r be drawn randomly from

and d be linearly decreasing from 2 to 0. In their spiral branch, Θ = 1 with an equivalent

where R is uniformly distributed in [[−1, 1].

Lion optimization algorithm (LOA) (Yazdani & Jolai, Citation2016): a = 1, b = 0, c = 1, Θ = 0, p = 0,

and r = 0. In the other moving branch in their LOA, Θ is proportional to

where D is their scaled distance and R can be drawn either from [0, 1] or [−1, 1].

Mayfly optimization algorithm (MOA) (Zervoudakis & Tsafarakis, Citation2020): a = 1, b = 0, c = 1, Θ = 0, p = g,

and

Big bang-big crunch (BBBC) (Erol & Eksin, Citation2006): the modification of solutions is mainly around the centre of gravity

of the population with a = 0.

and

Social spider algorithm (SSA) (James & Li, Citation2015): a = 1,

where w is a weight coefficient and dij is the distance between two spiders. In addition, c = 0, Θ = 1,

Moth search algorithm (MSA) (Wang, Citation2018):

b = 0, c = 1, p = 0,

r = 0, and Θ = 0 in one equation. The other equation corresponds to a = 1,

and

drawn from a Lévy distribution.

Multi-verse optimizer (MVO) (Mirjalili et al., Citation2016): a = 1,

but

where

is the maximum number of iterations. Its randomized perturbation is given by

Water cycle algorithm (WCA) (Eskandar et al., Citation2012): a = 1,

where

and rand is a uniformly distributed random number in [0, 1] for both water drops in river and stream in the WCA. For the additional search for the new stream step, a = 1,

but

as its standard deviation and ζ is drawn from a Gaussian distribution with a unity mean.

Algorithm 1:

GEM: A generalized evolutionary metaheuristic for optimization.

Data: Define optimization objective () with proper constraint handling

Result: Best or optimal solution () with archived solution history

1 Initialize parameter values (n, a, b, c, Θ, p, q, r);

2 Generate an initial population of n solutions using EquationEq. (10)(10)

(10) ;

3 Find the initial best solution and

among the initial population;

4 while (MaxGeneration) do

5 Generate random numbers ();

6 for (all solutions) do

7 Modify solutions by EquationEq. (6)(6)

(6) ;

8 Update solution vectors by EquationEq. (7)(7)

(7) ;

9 Accept a solution by checking criterion EquationEq. (9)(9)

(9) ;

10 end

11 Rank all the solutions to find the current best

12 Update the best solution so far and

by EquationEq. (4)

(4)

(4) ;

13 Save and archive the current population for next generation;

14 Update the iteration counter

15 end

16 Post-process the solutions for constraint verification and visualization;

As pointed out earlier, there are more than one ways of representing an algorithm under consideration using the unified framework, and the minor details and un-important components of some algorithms may be ignored. In essence, the unified framework intends to extract the key components of multiple algorithms so that we can figure out what main search mechanisms or moves are important in metaheuristic algorithms. Therefore, it can be expected that many other algorithms may be considered as special cases of the GEM framework if the right combinations of parameter values are used.

4. Benchmarks and parameter settings

To test the unified GEM algorithm, we have selected 15 benchmark functions and case studies. There are many different test function benchmarks, including multivariate functions (Jamil & Yang, Citation2013), CEC2005 test suite (Suganthan et al., Citation2005), unconstrained benchmark function repository (Al-Roomi, Citation2015) and engineering optimization problems (Cagnina et al., Citation2008; Coello, Citation2000). The intention is to select a subset of optimization problems with a diverse range of properties such as modality, convexity, nonlinear constraints, separability, and landscape variations. The case studies also include a mixed-integer programming pressure vessel design problem, and a parameter estimation based on data for a vibrations system governed by an ordinary differential equation (ODE).

4.1. Test functions

The ten test functions are outlined as follows.

Sphere function

(11)

(11) its global optimality

occurs at

Rosenbrock function (Rosenbrock, Citation1960)

(12)

(12) whose global minimum

is located at

Ackley function

(13)

(13) with

(14)

(14) whose global minimum

occurs at

Dixon-Price function

(15)

(15) whose global minimum

at

for

Schwefel function (Schwefel, Citation1995)

(16)

(16) its global minimum

occurs at

Booth function

(17)

(17) whose global minimum

occurs at

Holder table function

(18)

(18) whose four global minima

occur at

Beale function

(19)

(19) where

(20)

(20) The global minimum

occurs at

Trid function

(21)

(21) whose global minimum

occurs at

for

Rastrigin function

(22)

(22) whose global minimum

occurs at

4.2. Case studies

The five design case studies or benchmarks are described as follows.

11. Spring design. The three design variables are: the wire diameter w (or x1), mean coil diameter D (or x2) and the number N (or x3) of active coils.

(23)

(23) subject to

(24)

(24)

(25)

(25)

(26)

(26)

(27)

(27)

The simple lower and upper bounds are

(28)

(28)

The best solution found so far is (Cagnina et al., Citation2008; Yang, Citation2013)

(29)

(29)

12. For the three-bar truss system design with two cross-section areas x1 = A1 and x2 = A2, the objective is to minimize

(30)

(30) subject to

(31)

(31)

(32)

(32)

(33)

(33) where σ = 2 kN/cm2 is the stress limit and P = 2 kN is the load. In addition,

The best solution so far in the literature is (Bekasş et al., Citation2018)

(34)

(34)

13. Beam design. For the beam design to support a vertical load at the free end of the beam, the objective is to minimize

(35)

(35) subject to

(36)

(36) with the simple bounds

The best solution found so far in the literature is (Bekasş et al., Citation2018)

(37)

(37)

14. Pressure vessel design. The main objective is to minimize

(38)

(38) subject to

(39)

(39)

(40)

(40)

(41)

(41)

(42)

(42) The first two design variables must be the integer multiples of the basic thickness h = 0.0625 inches (Cagnina et al., Citation2008). Therefore, the lower and upper bounds are

(43)

(43) and

(44)

(44) Its true global optimal solution

occurs at

(45)

(45)

14. Parameter estimation of an ODE. For a vibration problem with a unit step input, we have its mathematical equation as an ordinary differential equation (Yang, Citation2023)

(46)

(46) where ω and ζ are the two parameters to be estimated. Here, the unit step function is given

(47)

(47) The initial conditions are

For a given system, we have observed its actual response. The relevant measurements are given .In order to estimate the two unknown parameter values ω and ζ, we can define the objective function as

(48)

(48) where

for

are the observed values and

are the values obtained by solving the differential Equationequation (46)

(46)

(46) , given a guessed set of values ζ and ω. Here, we have used

The true values are

and ω = 2. The aim of this benchmark is to solve the differential equation iteratively so as to find the best parameter values that minimize the objective or best-fit errors.

Table 1. Measured data for a vibration problem.

4.3. Parameter settings

In our simulations, the parameter settings are: population size n = 10 with parameter values of a = 1, b = 0.7, c = 1, p = 0.7, and

with

The maximum number of iterations is set to

For the benchmark functions, D = 5 is used for f1, f2, f3, f4, and f10. For f9, D = 4 is used so as to give as a nice integer. For all other problems, their dimensionality has been given earlier in this section.

5. Numerical experiments and results

After implementing the algorithm using MATLAB, we have carried out various numerical experiments. This section summarizes the results.

5.1. Results for function benchmarks

For the first ten functions, the algorithm has been run 20 times so that the best mean values and other statistics can be calculated. The results have been summarized in . As we can see, the algorithm can find all the true optimal solutions even with a small population size n = 10. This shows that the unified GEM algorithm is very efficient for solving function optimization problems.

Table 2. Numerical experiments for ten test functions.

5.2. Five case studies

To test the proposed algorithm further, we have used it to solve five different case studies, subject to various constraints. The constraints are handled by the standard penalty method with a penalty coefficient λ = 1000 to 105. For the pressure vessel problem, is used, whereas all other problems use λ = 1000.

For example, in the pressure vessel design problem, the four constraints are incorporated as so that the new objective becomes

(49)

(49)

where

(50)

(50)

The pressure vessel design problem is a mixed integer programming problem because the first two variables x1 and x2 can take only integer multiples of the basic thickness h = 0.0625 due to manufacturing constraints. The other two variables x3 and x4 can take continuous real values. For each case study, the algorithm has been run 10 times. For example, the 10 runs of the pressure vessel design are summarized in . As we can see, Run 2 finds a much better solution , highlighted in bold, than the best solution known so far in the literature 6059.7143. All the constraints are satisfied, which means that this is a valid new solution.

Table 3. Pressure vessel design benchmarks.

Following the exact same procedure, each case study has been simulated 20 times. The results for the five design case studies are summarized in . As we can see, all the best known optimal solutions have been found by the algorithm with a population size n = 10.

Table 4. Results for the five design benchmarks.

The above simulations and results have shown that the unified GEM algorithm performs well for all the 15 test benchmarks, and in some cases it can achieve even better results. This indicates that this unified algorithm is effective and can potentially be applied to solve a wide range of optimization problems. This will form part of our further research.

6. Conclusion and discussion

In this paper, we have proposed a unified algorithm, called generalized evolutionary metaheuristic (GEM), which represents more than 20 different algorithms in the current literature. We have validated the proposed GEM with 15 different test benchmarks and optimal solutions have been obtained with a small population size and fixed parameters.

From the parameter tuning perspective, it can be expected that if the parameters can be tuned systematically, it may be possible to enhance the algorithm’s performance further. In fact, a systematical parameter study and parameter tuning is needed for this new algorithm, which will be carried out in our future work.

In addition, apart from more than 20 algorithms as special cases of this unified algorithm when setting different parameter values, it can be expected that the vast majority of the 540 algorithms can also be rightly represented in this unified algorithm if certain parameter are allowed to vary in certain ways and some minor differences in some algorithms can be ignored. Obviously, for some algorithms such as the genetic algorithm, the algorithm is mainly described as an algorithmic procedure without explicit mathematical formulas. Such type of algorithm may not be easily categorized into the generalized framework. However, the procedure and algorithmic flow may in essence be quite similar to some of the main framework. In addition, a systematic comparison of this general framework can be carried out with various component algorithms. This can be a useful topic for further research.

Declaration of data

No datasets were received/used. All results are simulated and reproducible from the proposed algorithms. MATLAB codes related to this work are available upon request, by emailing the author.

Disclosure statement

The author confirms that there are no relevant financial or non-financial competing interests to report.

Additional information

Funding

Notes on contributors

Xin-She Yang

Xin-She Yang obtained his DPhil in Applied Mathematics from the University of Oxford. He then worked at Cambridge University and National Physical Laboratory (UK) as a Senior Research Scientist. Now he is Reader at Middlesex University London, and a co-Editor of the Springer Tracts in Nature-Inspired Computing. He is also an elected Fellow of the Institute of Mathematics and its Applications. He was the IEEE Computational Intelligence Society (CIS) chair for the Task Force on Business Intelligence and Knowledge Management (2015 to 2020). He has published more than 300 peer-reviewed research papers with more than 90,270 citations, and he has been on the prestigious list of highly-cited researchers (Web of Sciences) for eight consecutive years (2016-2023).

References

- Abdel-Basset, M., & Shawky, L. A. (2019). Flower pollination algorithm: A comprehensive review. Artificial Intelligence Review, 52(4), 2533–2557. https://doi.org/10.1007/s10462-018-9624-4

- Al-Roomi, A. R. (2015). Unconstrained single-objective benchmark function repository.

- Auger, A., & Teytaud, O. (2010). Continuous lunches are free plus the design of optimal optimization algorithms. Algorithmica, 57(1), 121–146. https://doi.org/10.1007/s00453-008-9244-5

- Beer, D. (2016). The social power of algorithms. Information, Communication & Society, 20(1), 1–13. https://doi.org/10.1080/1369118X.2016.1216147

- Bekasş, G., Nigdeli, M., & Yang, X.-S. (2018). A novel bat algorithm based optimum tuning of mass dampers for improving the seismic safety of structures. Engineering Structures, 159(1), 89–98.

- Bertsekas, D. P., Nedic, A., & Ozdaglar, A. (2003). Convex analysis and optimization (2nd ed.). Athena Scientific.

- Boyd, S. P., & Vandenberghe, L. (2004). Convex optimization. Cambridge University Press.

- Cagnina, L. C., Esquivel, S. C., & Coello Coello, A. C. (2008). Solving engineering optimization problems with the simple constrained particle swarm optimizer. Informatica, 32(2), 319–326.

- Chabert, J. L. (1999). A history of algorithms: From the pebble to the microchips. Springer-Verlag.

- Chen, S., Peng, G.-H., He, X.-S., & Yang, X.-S. (2018). Global convergence analysis of the bat algorithm using a Markovian framework and dynamic system theory. Expert Systems with Applications, 114(1), 173–182. https://doi.org/10.1016/j.eswa.2018.07.036

- Civicioglu, P. (2013). Artificial cooperative search algorithm for numerical optimization problems. Information Sciences, 229(1), 58–76. https://doi.org/10.1016/j.ins.2012.11.013

- Clerc, M., & Kennedy, J. (2002). The particle swarm: Explosion, stability, and convergence in a multidimensional complex space. IEEE Transactions on Evolutionary Computation, 6(1), 58–73. https://doi.org/10.1109/4235.985692

- Coello, C. A. (2000). Use of self-adaptive penalty approach for engineering optimization problems. Computers in Industry, 41(2), 113–127. https://doi.org/10.1016/S0166-3615(99)00046-9

- Corne, D., & Knowles, J. (2003). Some multiobjective optimizers are better than others. Evolutionary Computation, 4(2), 2506–2512.

- Eiben, A. E., & Smit, S. K. (2011). Parameter tuning for configuring and analyzing evolutionary algorithms. Swarm and Evolutionary Computation, 1(1), 19–31. https://doi.org/10.1016/j.swevo.2011.02.001

- Erol, O., & Eksin, I. (2006). A new optimization method: Big bang-big crunch. Advances in Engineering Software, 37(2), 106–111. https://doi.org/10.1016/j.advengsoft.2005.04.005

- Eskandar, H., Sadollah, A., Bahreininejad, A., & Hamdi, M. (2012). Water cycle algorithm – A novel metaheuristic optimization method for solving constrained engineering optimization problems. Computers & Structures, 110–111(1), 151–166. https://doi.org/10.1016/j.compstruc.2012.07.010

- Gavvala, S. K., Jatoth, C., Gangadharan, G. R., & Buyya, R. (2019). QoS-aware cloud service composition using eagle strategy. Future Generation Computer Systems, 90, 273–290. https://doi.org/10.1016/j.future.2018.07.062

- Geem, Z. W., Kim, J. H., and Loganathan, G. V. (2001). A new heuristic optimization algorithm: Harmony search. Simulation, 76(2):60–68. https://doi.org/10.1177/003754970107600201

- Glover, F. (1986). Future paths for integer programming and links to artificial intelligence. Computers & Operations Research, 13(5), 533–549. https://doi.org/10.1016/0305-0548(86)90048-1

- Glover, F., & Laguna, M. (1997). Tabu search. Kluwer Academic Publishers.

- Goldberg, D. E. (1989). Genetic algorithms in search, optimization and machine learning. Addison-Wesley.

- Greenhalgh, D., & Marshal, S. (2000). Convergence criteria for genetic algorithm. SIAM Journal Comput, 30(1), 269–282.

- Hashim, F. A., Houssein, E. H., Mabrouk, M. S., Al-Atabany, W., & Mirjalili, S. (2019). Henry gas solubility optimization: A novel physics-based algorithm. Future Generation Computer Systems, 101, 646–667. https://doi.org/10.1016/j.future.2019.07.015

- Heidari, A. A., Mirjalili, S., Faris, H., Aljarah, I., Mafarja, M., & Chen, H. (2019). Harris hawks optimization: Algorithm and applications. Future Generation Computer Systems, 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028

- Holland, J. (1975). Adaptation in nature and artificial systems. University of Michigan Press.

- James, J., & Li, V. (2015). A social spider algorithm for global optimization. Applied Soft Computing, 30, 614–627. https://doi.org/10.1016/j.asoc.2015.02.014

- Jamil, M., & Yang, X.-S. (2013). A literature survey of benchmark functions for global optimization problems. International Journal of Mathematical Modelling and Numerical Optimisation, 4(2), 150–194.

- Joy, G., Huyck, C., & Yang, X.-S. (2023). Review of Parameter tuning methods for nature-inspired algorithms (pp. 33–47). Springer Nature Singapore.

- Karaboga, D., & Basturk, B. (2008). On the performance of artificial bee colony (ABC) algorithm. Applied Soft Computing, 8(1), 687–697. https://doi.org/10.1016/j.asoc.2007.05.007

- Karmarkar, N. (1984). A new polynomial-time algorithm for linear programming. Combinatorica, 4(4), 373–395. https://doi.org/10.1007/BF02579150

- Kaveh, A., & Talatahari, S. (2010). A novel heuristic optimization method: Charged system search. Acta Mechanica, 213(3–4), 267–289. https://doi.org/10.1007/s00707-009-0270-4

- Kennedy, J., & Eberhart, R. (1995). Particle swarm optimization. Proceedings of the IEEE International Conference on Neural Networks (pp. 1942–1948). IEEE.

- Kennedy, J., Eberhart, R. C., & Shi, Y. (2001). Swarm intelligence. Academic Press.

- Kirkpatrick, S., Gelatt, C. D., & Vecchi, M. P. (1983). Optimization by simulated annealing. Science (New York, N.Y.), 220(4598), 671–680. https://doi.org/10.1126/science.220.4598.671

- Kuo, R., & Zulvia, F. (2015). The gradient evolution algorithm: A new metaheuristic. Information Sciences, 316(2), 246–265. https://doi.org/10.1016/j.ins.2015.04.031

- Mirjalili, S. (2015). The ant lion optimizer. Advances in Engineering Software, 83(1), 80–98.

- Mirjalili, S., & Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95(1), 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

- Mirjalili, S., Mirjalili, S., & Hatamlou, A. (2016). Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Computing and Applications, 27(2), 495–513. https://doi.org/10.1007/s00521-015-1870-7

- Mohamed, A., Hadi, A., & Mohamed, A. (2020). Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. International Journal of Machine Learning and Cybernatics, 11(7), 1501–1529.

- Osaba, E., Yang, X.-S., Diaz, F., Lopez-Garcia, P., & Carballedo, R. (2016). An improved discrete bat algorithm for symmetric and assymmetric travelling salesman problems. Engineering Applications of Artificial Intelligence, 48(1), 59–71. https://doi.org/10.1016/j.engappai.2015.10.006

- Osaba, E., Yang, X.-S., Diaz, F., Onieva, E., Masegosa, A., & Perallos, A. (2017). A discrete firefly algorithm to solve a rich vehicle routing problem modelling a newspaper distribution system with recycling policy. Soft Computing, 21(18), 5295–5308. https://doi.org/10.1007/s00500-016-2114-1

- Ouaarab, A., Ahiod, B., & Yang, X.-S. (2014). Discrete cuckoo search algorithm for the travelling salesman problem. Neural Computing and Applications, 24(7–8), 1659–1669. https://doi.org/10.1007/s00521-013-1402-2

- Palmieri, N., Yang, X.-S., Rango, F. D., & Santamaria, A. F. (2018). Self-adaptive decision-making mechanisms to balance the execution of multiple tasks for a multi-robots team. Neurocomputing, 306(1), 17–36. https://doi.org/10.1016/j.neucom.2018.03.038

- Pham, D. T., & Castellani, M. (2009). The bees algorithm: Modelling foraging behaviour to solve continuous optimization problems. Proceedings of the Institution of Mechanical Engineers, Part C: Journal of Mechanical Engineering Science, 223(12), 2919–2938. https://doi.org/10.1243/09544062JMES1494

- Pham, D. T., & Castellani, M. (2014). Benchmarking and comparison of nature-inspired population-based continuous optimisation algorithms. Soft Computing, 18(5), 871–903. https://doi.org/10.1007/s00500-013-1104-9

- Pham, D. T., & Castellani, M. (2015). A comparative study of the bees algorithm as a tool for function optimisation. Cogent Engineering, 2(1), 1091540. https://doi.org/10.1080/23311916.2015.1091540

- Pham, D., Ghanbarzadeh, A., Koc, E., Otri, S., Rahim, S., & Zaidi, M. (2005). The bees algorithm, technical note. Technical report. Cardiff University.

- Price, K., Storn, R., & Lampinen, J. (2005). Differential evolution: A practical approach to global optimization. Springer.

- Rajwar, K., Deep, K., & Das, S. (2023). An exhaustive review of the metaheuristic algorithms for search and optimization: Taxonomy, applications, and open challenges. Artificial Intelligence Review, 56(11), 1–71. https://doi.org/10.1007/s10462-023-10470-y

- Rango, F. D., Palmieri, N., Yang, X.-S., & Marano, S. (2018). Swarm robotics in wireless distributed protocol design for coordinating robots involved in cooperative tasks. Soft Computing, 22(13), 4251–4266. https://doi.org/10.1007/s00500-017-2819-9

- Rashedi, E., Nezamabadi-Pour, H. H., & Saryazdi, S. (2009). GSA: A gravitational search algorithm. Information Sciences, 179(13), 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004

- Rosenbrock, H. H. (1960). An automatic method for finding the greatest or least value of a function. Computer Journal, 3(3), 175–184. https://doi.org/10.1093/comjnl/3.3.175

- Schrijver, A. (2005). On the history of combinatorial optimization (till 1960). In Aardal, K., Nemhauser, G. L., & Weismantel, R. (Eds.), Handbook of discrete optimization (pp. 1–68). Elsevier.

- Schwefel, H. (1995). Evolution and optimum seeking. John Wiley Sons.

- Ser, J. D., Osaba, E., Molina, D., Yang, X.-S., Salcedo-Sanz, S., Camacho, D., Das, S., Suganthan, P. N., Coello, C. A. C., & Herrera, F. (2019). Bio-inspired computation: Where we stand and what’s next. Swarm and Evolutionary Computation, 48, 220–250.

- Storn, R., & Price, K. (1997). Differential evolution: A simple and efficient heuristic for global optimization. Journal of Global Optimization, 11(4), 341–359. https://doi.org/10.1023/A:1008202821328

- Suganthan, P., Hansen, N., Liang, J., Deb, K., Chen, Y., Auger, A., & Tiwar, S. (2005). Problem definitions and evaluation criteria for CEC 2005, special session on real-parameter optimization. Technical Report. Nanyang Technological University (NTU), Singapore.

- Wang, G. (2018). Moth search algorithm: A bio-inspired metaheuristic algorithm for global optimization problems. Memetic Computing, 10(2), 151–164. https://doi.org/10.1007/s12293-016-0212-3

- Wolpert, D. H., & Macready, W. G. (1997). No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation, 1(1), 67–82.

- Wolpert, D. H., & Macready, W. G. (2005). Coevolutionary free lunches. IEEE Transactions on Evolutionary Computation, 9(6), 721–735. https://doi.org/10.1109/TEVC.2005.856205

- Yang, X.-S. (2009). Firefly algorithms for multimodal optimization. In Watanabe, O., & Zeugmann, T. (Eds.), Proceedings of Fifth symposium on stochastic algorithms, foundations and applications (Vol. 5792, pp. 169–178). Lecture Notes in Computer Science Springer.

- Yang, X.-S. (2013). Cuckoo search and firefly algorithm: Theory and applications, volume 516 of studies in computational intelligence. Springer.

- Yang, X.-S. (2020a). Nature-inspired optimization algorithms (2nd ed.). Academic Press.

- Yang, X.-S. (2020b). Nature-inspired optimization algorithms: Challenges and open problems. Journal of Computational Science, 46, 101104. https://doi.org/10.1016/j.jocs.2020.101104

- Yang, X.-S. (2023). Ten new benchmarks for optimization. In Yang, X.-S. (Ed.), Benchmarks and hybrid algorithms in optimization and applications (pp. 19–32). Springer.

- Yang, X.-S., & Deb, S. (2010). Engineering optimisation by cuckoo search. International Journal of Mathematical Modelling and Numerical Optimisation, 1(4), 330–343. https://doi.org/10.1504/IJMMNO.2010.035430

- Yang, X.-S., Deb, S., Loomes, M., & Karamanoglu, M. (2013). A framework for self-tuning optimization algorithm. Neural Computing and Applications, 23(7–8), 2051–2057. https://doi.org/10.1007/s00521-013-1498-4

- Yang, X.-S., Deb, S., Zhao, Y.-X., Fong, S., & He, X. (2018). Swarm intelligence: Past, present and future. Soft Computing, 22(18), 5923–5933. https://doi.org/10.1007/s00500-017-2810-5

- Yang, X.-S., & He, X.-S. (2019). Mathematical foundations of nature-inspired algorithms. Springer Briefs in Optimization. Springer.

- Yazdani, M., & Jolai, F. (2016). Lion optimization algorithm (LOA): A nature-inspired metaheuristic algorithm. Journal of Computational Design and Engineering, 3(1), 24–36. https://doi.org/10.1016/j.jcde.2015.06.003

- Zaharie, D. (2009). Influence of crossover on the behavior of the differential evolution algorithm. Applied Soft Computing, 9(3), 1126–1138. https://doi.org/10.1016/j.asoc.2009.02.012

- Zdenek, D. (2009). Optimal quadratic programming algorithms: With applications to variational inequalities. Springer.

- Zelinka, I. (2015). A survey on evolutionary algorithm dynamics and its complexy-mutual relations, past, present and future. Swarm and Evolutionary Computation, 25(1), 2–14. https://doi.org/10.1016/j.swevo.2015.06.002

- Zervoudakis, K., & Tsafarakis, S. (2020). A mayfly optimization algorithm. Computers & Industrial Engineering, 145, 106559. https://doi.org/10.1016/j.cie.2020.106559

- Zitzler, E., Thiele, L., Laumanns, M., Fonseca, C. M., & Fonseca, V. G. D. (2003). Performance assessment of multiobjective optimizers: An analysis and review. IEEE Transaction on Evolutionary Computation, 7(2), 117–132.