Abstract

Decision-support methods are crucial for analyzing complex alternatives and criteria in today’s data-driven world. This Systematic Literature Review (SLR) explores and synthesizes knowledge about decision support methodologies that integrate Multicriteria Decision Making (MCDM) and Principal Component Analysis (PCA), an unsupervised Machine Learning (ML) technique. Both techniques optimize complex decisions by combining multiple criteria and dimensional data analysis. Focusing on performance evaluations, criterion weighting, and validation testing, this review identifies significant gaps in existing methodologies. These include the lack of consideration for non-beneficial criteria in PCA, insufficient validation tests in over half of the studies, and the non-use of communalities (the contribution of each criterion to the main factors) in decision support approaches. Additionally, this SLR offers a comprehensive quantitative overview, analyzing data from the Scopus, IEEE, and Web of Science databases and identifying 16 relevant studies. Furthermore, the scarcity of systematic reviews integrating MCDM and PCA techniques impedes evidence-based decision-making practices and theoretical evolution. This is particularly crucial as ML and data analysis advance rapidly, requiring models that reflect technological innovations. This article addresses this gap in the literature by providing an analysis of decision support methods and guiding further improvement in this field.

1. Introduction

According to Michalski et al. (Citation2013), Machine Learning (ML) is linked to the quest for computational models that enhance their ability to perform specific tasks as they are exposed to available data. This field resides at the intersection of computer science and statistics and is one of the technical disciplines experiencing remarkable growth, driven by the continuous development of new algorithms and learning theories. In addition, the increasing availability of online data and accessible computing resources have contributed to this progress. The application of ML techniques, especially those requiring intensive data analysis, spans multiple domains of knowledge, influencing various areas of science, technology, and business. This, in turn, promotes reasoned and evidence-based decision-making in the diverse spheres of contemporary society. These scopes encompass but are not limited to industries such as healthcare, manufacturing, education, finance, the legal field, and marketing (Jordan & Mitchell, Citation2015).

According to Costa (Citation2002), the decision-making process can be categorized into the following modalities: choice, classification, ordering, ordered classification, and prioritization. The analytical assessment of decisions is also subject to consideration of the extent of the criteria used to evaluate the alternatives, which may be single-criteria or multicriteria analyses. Furthermore, decision-making is vital for academia and society, from economics and business to the science, engineering, and government sectors. The complexity of decisions often involves the consideration of multiple criteria, each with its weight and relative importance. In this context, Multicriteria Decision Making (MCDM) methods play a crucial role in evaluating alternatives and choosing the best solution (Belton & Stewart, Citation2002). One of the challenges faced by decision-makers (DMs) is dealing with a large set of data, often multidimensional and with criteria that may be correlated. Thus, the unsupervised ML technique of Principal Component Analysis (PCA) has emerged, which reduces the dimensionality of the data while maintaining most of the original variance. This technique is widely used to simplify the representation of complex data, making it more understandable and useful in decision-making (Belton & Stewart, Citation2002; Fávero & Belfiore, Citation2017).

PCA is a statistical multivariate analysis technique intended to summarize multiple variables into a new set of data called factors. This process emphasizes the variation in dataset information, making meaningful patterns more discernible (Xiao et al., Citation2017). The new factors resulting from PCA have dimensionality equal to or lower than the original set of variables. They are derived such that a few principal components explain a significant portion of the variation in the original data (Vidal et al., Citation2016). This technique is effective for the exploration and visualization of data that initially has high dimensionality. In addition, the ability of PCA to reduce the complexity of multidimensional data and create new indexes that represent the original data enables its use for the performance evaluation of alternatives (Akande et al., Citation2019; Marsal-Llacuna et al., Citation2015; Wei et al., Citation2016), which will be analyzed in this Systematic Literature Review (SLR).
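As a minimal illustration of how a few components can carry most of the variation, the sketch below treats the simplest possible case: two standardized criteria. In that special case the correlation matrix is [[1, r], [r, 1]], whose eigenvalues are 1 + r and 1 - r, so no linear algebra library is needed. The function names and the sample values are hypothetical and are not taken from any of the reviewed studies.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def pca_two_variables(x, y):
    """PCA on the correlation matrix of two variables (closed form).

    For [[1, r], [r, 1]] the eigenvalues are 1 + r and 1 - r.
    Returns r, the eigenvalues in descending order, and the share of
    total variance explained by each component.
    """
    r = pearson(x, y)
    eigenvalues = sorted([1 + r, 1 - r], reverse=True)
    total = sum(eigenvalues)  # equals the number of variables (2)
    explained = [ev / total for ev in eigenvalues]
    return r, eigenvalues, explained

# Hypothetical data: two strongly correlated criteria for five alternatives.
cost = [10.0, 12.0, 15.0, 18.0, 20.0]
time = [5.0, 6.5, 7.0, 9.0, 10.5]

r, eigenvalues, explained = pca_two_variables(cost, time)
print(f"r = {r:.3f}, explained variance shares = {explained}")
```

When the two criteria are strongly correlated, the first component alone explains almost all of the variance, which is the property that makes PCA useful for building a single index from several correlated criteria.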

MCDM methods, on the other hand, are intended to describe a set of formal approaches that explicitly consider various criteria to help stakeholders and groups explore essential decisions (Belton & Stewart, Citation2002; Maêda et al., Citation2021). According to Greco et al. (Citation2016), despite the diversity of multicriteria approaches, methods, and techniques, the essential components of MCDM are a finite or infinite set of alternatives, at least two criteria, and at least one DM. Given these crucial elements, MCDM assists decision-making, especially in choosing, classifying, or ordering alternatives.

1.1. Motivation

Despite the growing importance of MCDM and PCA techniques in simplifying complex decisions involving large volumes of data, a significant gap is observed: the lack of reviews assessing the integration of these methodologies. In critical areas like healthcare and engineering, the underutilization of potential insights from insufficiently analyzed MCDM and PCA integrations compromises decision effectiveness. This study proposes to fill this gap through an SLR that synthesizes existing knowledge and explores how integrating these advanced techniques can significantly improve decision-making across various disciplines.

The absence of reviews integrating MCDM and PCA techniques hinders the advancement of effective evidence-based practices and the evolution of related theories, limiting the development of decision-making models incorporating state-of-the-art data analysis, specifically ML. This gap is critical as the rapid evolution of ML and data analysis requires updated models that reflect technological innovations.

Following this reasoning, the present study identifies new decision-support methodologies that apply PCA and MCDM and examines which techniques they employ to generate criteria weights and evaluate the performance of the alternatives, as well as which procedures they use to verify the adequacy of the PCA. To address these objectives, the SLR employs a comprehensive search strategy across multiple academic databases, selecting studies that meet rigorous quality standards. This approach ensures a broad yet detailed exploration of the integration of PCA and MCDM, focusing on their application in decision-making scenarios.

As recent related approaches, Sahoo and Goswami (Citation2023) explored hybrid methods in MCDM, which are crucial for increasing the precision and efficiency of decisions. This research resonates with our goal of integrating PCA and MCDM to enhance data analysis. Concurrently, Li et al. (Citation2023) investigated the use of MCDM in collaborative contexts, emphasizing how group methods can achieve consensus. This point is complemented by Zhou et al. (Citation2018), who demonstrated the application of MCDM with PCA in group decision-making, highlighting the importance of PCA in refining criteria for collaborative decision-making processes and ensuring a fair evaluation of all perspectives involved. Finally, Amor et al. (Citation2023) analyzed the use of MCDM for data classification and clustering. This study supports our approach by showing how PCA can reduce data complexity to facilitate more effective classification. These studies reflect the growing convergence between data analysis techniques and decision-making methods, a field our work aims to expand significantly. By integrating PCA and MCDM, this article not only follows but also advances current trends in operational research, proposing more sophisticated and robust solutions for complex decision-making challenges.

1.2. Relevance

The relevance of this research lies in the lack of reviews addressing the topic in question, representing a gap in the literature. In addition, this review aims to consolidate existing knowledge in this area, identify the current state of using these methodologies, and highlight future innovations from this study. Critical analysis of the existing literature can pave the way for developing new approaches integrating MCDM and PCA, potentially optimizing decision-making in various academic and professional fields and providing more informed and strategic choices.

1.3. Objective

The objective of this SLR is to explore and synthesize the existing knowledge on new decision-support methods that employ MCDM methodologies in conjunction with PCA. Specifically, it identifies how the weights of the criteria are generated, how the performance of the alternatives is evaluated, and which procedures are used to verify the adequacy of the proposed model, thus contributing to the advancement of knowledge and improvement of decision-making practices. Both areas play key roles in decision-making and data analysis, providing valuable tools for dealing with complex problems that involve multiple criteria and high dimensionality.

The integration of these two methodologies offers opportunities to improve the evaluation of alternatives and the selection of the best solutions, as the literature already indicates and as this work will show. This review examines academic studies that apply this approach to assess various issues in different domains. Notably, no previous literature review has addressed this topic.

1.4. Structure of this study

In the present study, the introduction is outlined in Section 1, which contextualizes the relevance of integrating PCA with MCDM in the academic literature. Section 2 provides an overview of the work relevant to the topic and the key concepts. Section 3 details the research methodology adopted for the SLR, specifying the databases, selection and exclusion criteria, and the search strategy employed. Section 4 presents the results and analysis of the theme, followed by Section 5, in which the results and main findings are discussed, emphasizing the identified limitations and implications of the methodologies. Section 6 presents threats to validity, and Section 7 summarizes the findings, highlighting the contribution of the work to the academic field and outlining recommendations for future investigations.

2. Background and Related Works

This section explores works related to this theme and highlights the fundamental concepts needed to understand the study. As Related Works, six papers stand out, as seen below.

Costa et al. (Citation2022) emphasize the crucial role of MCDM in the military, highlighting the Analytic Hierarchy Process (AHP) as the most used method. The advantages of this work include the ability of MCDM methods to effectively process and synthesize multiple criteria and complex information, facilitating more accurate and rational decisions in defense matters crucial for operational and strategic success. However, a limitation arises from the specific application to military problems, which may restrict the generalization of results to other sectors. Our study complements this approach by analyzing multidisciplinary problems and emphasizing not only MCDM methods but also their capacity along with PCA.

Jolliffe and Cadima (Citation2016) provide a comprehensive review of PCA, explaining its adaptability for various data types and its capacity for dimensionality reduction while retaining critical information. However, a limitation is the study’s exclusive focus on the isolated application of PCA without exploring its integration with MCDM methods. Our study aims to address this gap, demonstrating how combining PCA with MCDM can enhance analyses and decisions in complex contexts.

Greco et al. (Citation2016) provide an overview of MCDM, emphasizing its historical foundations and exploring emerging areas and applications. While this work is pivotal for understanding the theoretical underpinnings and evolution of MCDM, it primarily focuses on traditional methodologies. Despite also covering traditional MCDM methodologies, our emphasis on integrating with ML underscores the importance of decision-support methods increasingly aligned with the rapid evolution of the digital era. These approaches are essential in data-driven decision-making environments today.

Li et al. (Citation2023) delved into MCDM's application in collaborative settings, highlighting the role of group methodologies in facilitating consensus. Their work presents an approach for managing manipulative behaviors in social network group decision-making, employing Stochastic Multi-criteria Acceptability Analysis (SMAA) to ensure fair and unbiased decisions. However, the complexity of the methodology might limit its practical application, particularly where decision-makers lack advanced analytical skills. Our study contrasts this by aiming to simplify these processes through the integration of PCA, reducing the complexity of multicriteria evaluations.

Amor et al. (Citation2023) conducted studies using MCDM to classify and cluster data, demonstrating how these techniques can streamline complex data analysis. While their systematic approach offers a detailed analysis and a structured framework for using MCDM, a potential limitation could be the focus primarily on theoretical aspects, which may not fully address practical implementation challenges or the complexity of real-world data. This contrasts with our study, which conducted an extensive review of research related to practical and everyday problems, exploring how MCDM techniques, in conjunction with PCA, can be effectively applied in diverse and real contexts.

Concurrently, Sahoo and Goswami (Citation2023) examined recent developments in MCDM techniques, including multi-objective methods, fuzzy logic-based approaches, data-driven models, and hybrid methodologies. Integrating diverse techniques and perspectives helps overcome the limitations of isolated methods, promoting a more holistic and robust evaluation of decision scenarios. This capability for synergy between methods is crucial for addressing the increasing complexity of decision-making problems in modern organizations, as seen in our article, by integrating PCA and MCDM.

Our SLR highlights the distinct pathways through which PCA and MCDM have been applied across various domains yet reveals a critical gap in their integrated application. The reviewed works underscore the versatility of MCDM in handling diverse decision-making environments - from military applications to collaborative settings - and the robustness of PCA in simplifying complex datasets through dimensionality reduction. However, the lack of studies combining these strengths indicates a significant oversight in current research. This SLR addresses this oversight by demonstrating how integrating PCA with MCDM enhances the analytical capabilities of decision-support methodologies and simplifies the decision-making process in multidimensional data environments. This work fills an identified gap and sets the stage for future investigations into integrating ML techniques with traditional decision-making models. By doing so, it contributes to the theoretical and practical advancements in the field, offering new perspectives and solutions to complex decision-making challenges encountered in various professional and academic settings.

2.1. MCDM Methods

MCDM methodologies are extensively utilized in decision-making (Tomashevskii & Tomashevskii, Citation2021) and describe formal approaches that explicitly consider multiple criteria to support DMs with specific objectives (Belton & Stewart, Citation2002). These tasks almost universally involve conflicting, nebulous goals, uncertainties, costs, and accruing benefits to various individuals, companies, groups, and other organizations (Keeney et al., Citation1993).

Among the main MCDM methods, the following stand out: AHP (Saaty, Citation1980), Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) (Hwang & Yoon, Citation1981), Geometrical Analysis for Interactive Aid (GAIA) (Figueira et al., Citation2005), Analytic Network Process (ANP) (Niemira & Saaty, Citation2004), VIseKriterijumska Optimizacija I KOmpromisno Resenje (VIKOR) (Luthra et al., Citation2017), and Criteria Importance Through Intercriteria Correlation (CRITIC) (Diakoulaki et al., Citation1995). Additionally, the Preference Ranking Organization Method for Enrichment Evaluation (PROMETHEE) (Brans et al., Citation1986) and the ELimination Et Choix Traduisant la REalité (ELECTRE) (Belton & Stewart, Citation2002) are significant. These two methods are non-compensatory; they do not synthesize outcomes into a single criterion. Some authors categorize them under Multicriteria Decision Aiding (MCDA) (De Almeida et al., Citation2022; Moreira et al., Citation2022; Pereira et al., Citation2022).

In the context of MCDM methods, performance evaluation refers to the systematic process of analyzing and comparing alternatives based on various criteria relevant to decision-making. A criterion is a unique perspective from which alternatives are evaluated and ranked. Simultaneously, an attribute represents a specific feature of the alternative to which a numerical value can be linked. An objective is an explicit preferred direction articulated regarding an attribute (Stewart, Citation1996).

These criteria can cover a variety of dimensions, such as cost, quality, efficiency, or other specific factors, depending on the context of the decision. Using MCDM methods, scores that quantify the performance of each alternative are generated, allowing objective ordering. These scores facilitate identifying the alternative most aligned with DM goals and preferences, contributing to an informed choice based on multiple criteria (Belton & Stewart, Citation2002).

To evaluate the performance of alternatives, MCDM methods are among the most used for decision-making, intended for structuring and analyzing complex evaluation problems by introducing multiple criteria, some of which conflict with each other (Ploskas & Papathanasiou, Citation2019). These methods allow DMs to structure a decision-making process, considering various aspects of evaluation, such as technical, socioeconomic, and environmental factors, at operational and strategic levels for decision-making (Greco et al., Citation2016). Regarding PCA, Fávero and Belfiore (Citation2019) explained that it is also possible to evaluate the performance of alternatives based on PCA, one of the forms being the sum of the values obtained by the principal components for each observation weighted by the percentages of shared variance of the factors.
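The PCA-based evaluation described above, in which each observation's factor scores are summed after being weighted by the share of variance of each factor, can be sketched as follows. This is only an illustration under stated assumptions: the factor scores and variance shares are hypothetical inputs that would normally come from a fitted PCA, and the function names are invented for this example.

```python
def pca_weighted_scores(factor_scores, variance_shares):
    """Weighted sum of factor scores for each alternative.

    factor_scores: dict mapping an alternative to its list of factor scores.
    variance_shares: share of variance explained by each retained factor,
        in the same order as the factor scores.
    """
    return {
        alt: sum(score * share for score, share in zip(scores, variance_shares))
        for alt, scores in factor_scores.items()
    }

def rank_alternatives(scores):
    """Alternatives ordered from the highest to the lowest overall score."""
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical factor scores for three alternatives on two retained factors.
factor_scores = {
    "A1": [1.2, -0.3],
    "A2": [-0.5, 0.8],
    "A3": [0.1, 0.1],
}
# Hypothetical shares of variance explained by factor 1 and factor 2.
variance_shares = [0.60, 0.25]

scores = pca_weighted_scores(factor_scores, variance_shares)
ranking = rank_alternatives(scores)
print(scores)
print(ranking)
```

Because the first factor carries more variance, it dominates the final index, so an alternative that scores well on a minor factor only (A2 in this sketch) can still end up last.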

Regarding the evaluation of the performance of alternatives by MCDM, some methods stand out: TOPSIS ranks alternatives by their proximity to the ideal solution and their distance from the negative-ideal solution in a multidimensional criteria space; PROMETHEE evaluates alternatives based on preference indices, comparing each pair of alternatives on each criterion and aggregating the indices into a global measure of preference that is used to rank the alternatives; VIKOR evaluates alternatives using measures of group utility and individual regret, seeking the alternative that represents the best compromise between the best overall performance and the smallest discrepancy with respect to these measures; ELECTRE employs an outranking approach, in which alternatives are compared pairwise to generate concordance and discordance matrices; and GAIA plots relative positions, mapping alternatives and criteria in a two-dimensional or three-dimensional space (Belton & Stewart, Citation2002; Brans et al., Citation1986; Figueira et al., Citation2005; Hwang & Yoon, Citation1981).
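To make the TOPSIS logic concrete, the following is a minimal sketch of the classical procedure: vector normalization, weighting, identification of the ideal and negative-ideal points, and a closeness coefficient. The decision matrix, the weights, and the function name are hypothetical, and the reviewed studies may use different normalizations or distance measures.

```python
import math

def topsis(matrix, weights, benefit):
    """Closeness coefficient of each alternative (higher is better).

    matrix: rows are alternatives, columns are criteria.
    weights: one weight per criterion.
    benefit: True for criteria to maximize, False for criteria to minimize.
    """
    n_crit = len(weights)
    # Vector normalization of each criterion column, then weighting.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    weighted = [
        [weights[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix
    ]
    columns = list(zip(*weighted))
    # Ideal and negative-ideal points, respecting the direction of each criterion.
    ideal = [max(col) if benefit[j] else min(col) for j, col in enumerate(columns)]
    anti = [min(col) if benefit[j] else max(col) for j, col in enumerate(columns)]
    closeness = []
    for row in weighted:
        d_pos = math.dist(row, ideal)  # distance to the ideal solution
        d_neg = math.dist(row, anti)   # distance to the negative-ideal solution
        closeness.append(d_neg / (d_pos + d_neg))
    return closeness

# Hypothetical data: criterion 1 is quality (benefit), criterion 2 is cost.
matrix = [
    [9.0, 100.0],
    [7.0, 100.0],
    [5.0, 150.0],
]
weights = [0.5, 0.5]
benefit = [True, False]

closeness = topsis(matrix, weights, benefit)
print(closeness)
```

In this sketch the first alternative is best on both criteria, so it coincides with the ideal point, while the third is worst on both and coincides with the negative-ideal point. Note that the cost criterion is handled through the `benefit` flags, which is the kind of treatment of non-beneficial criteria that the review discusses.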

Criterion weight generation refers to assigning values that quantify each criterion’s importance or relative contribution to the analysis of alternatives. These weights are fundamental to the evaluation process because they reflect the preferences and priorities of the DM regarding the different criteria, elicited through methods such as direct evaluation or pairwise comparison. As the methodology evolves, weight generation may employ more advanced techniques, such as mathematical models, statistical analysis, or ML algorithms, for more accurate and objective allocation of criterion weights. This evolution seeks to improve the consistency and reliability of the evaluation process, contributing to more robust decision-making aligned with the needs of the DM (Belton & Stewart, Citation2002).

This paragraph presents three methods that stand out in the literature for the generation of weights. AHP and ANP calculate the weights through pairwise comparison of the criteria by the DM (Hwang & Yoon, Citation1981; Niemira & Saaty, Citation2004). In contrast, CRITIC calculates the weights automatically from the data, making DM evaluation unnecessary (Diakoulaki et al., Citation1995). These are among the best-known methods for generating criterion weights, whereas others have been proposed more recently.
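As an illustration of objective weight generation, the sketch below follows the usual description of CRITIC: each criterion is min-max normalized, and its weight is proportional to its standard deviation multiplied by its conflict with the other criteria, measured as the sum of (1 - correlation). The data and function names are hypothetical, the sample standard deviation is an assumption of this sketch, and criteria with constant values are not handled.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def critic_weights(matrix, benefit):
    """CRITIC-style weights computed from the decision matrix alone."""
    normalized = []
    for col, is_benefit in zip(zip(*matrix), benefit):
        lo, hi = min(col), max(col)
        if is_benefit:
            normalized.append([(v - lo) / (hi - lo) for v in col])
        else:  # cost criterion: smaller raw values are better
            normalized.append([(hi - v) / (hi - lo) for v in col])
    information = []
    for j, col_j in enumerate(normalized):
        n = len(col_j)
        mean = sum(col_j) / n
        sigma = math.sqrt(sum((v - mean) ** 2 for v in col_j) / (n - 1))
        conflict = sum(
            1 - pearson(col_j, col_k)
            for k, col_k in enumerate(normalized)
            if k != j
        )
        information.append(sigma * conflict)
    total = sum(information)
    return [c / total for c in information]

# Hypothetical data: price (cost), storage (benefit), camera (benefit).
matrix = [
    [250.0, 16.0, 12.0],
    [200.0, 16.0, 8.0],
    [300.0, 32.0, 16.0],
    [275.0, 32.0, 8.0],
]
benefit = [False, True, True]

weights = critic_weights(matrix, benefit)
print(weights)
```

No DM input appears anywhere in the computation, which is what distinguishes this family of methods from the pairwise comparisons used in AHP and ANP.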

3. Research methodology

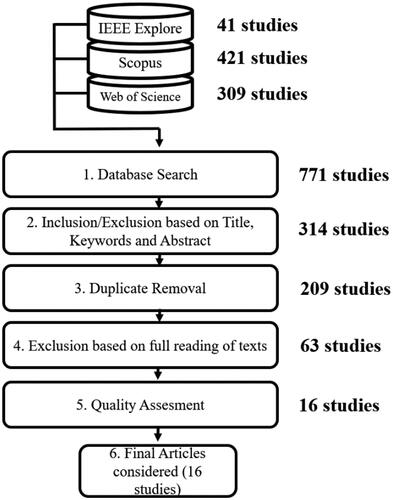

This article follows the SLR procedure proposed by Kitchenham (Citation2004), which guides the comprehensive evaluation and interpretation of all available pertinent research related to PCA and MCDM. Studies were searched, selected, and analyzed according to the protocol defined in Figure 1. Based on the methodological framework proposed by Creswell and Creswell (Citation2017), this study adopts a quantitative methodology, emphasizing the fusion of PCA and MCDM in decision-support methods. The investigation spanned articles published between 2000 and 2023 that were sourced from the IEEE Xplore, Scopus, and Web of Science databases. The selection methodology applied a comprehensive quality assessment protocol, culminating in the selection of 16 studies that exemplify the integration of PCA and MCDM, distilled from an initial aggregate of 771 research papers.

3.1. Search strategy

The preliminary phase of this SLR adhered to the PICOC framework (Population, Intervention, Comparison, Outcome, and Context), providing an essential structure for defining the study’s scope and trajectory. The search string was devised by integrating keywords closely associated with the research theme, supplemented by the strategies proposed by Carrera-Rivera et al. (Citation2022) and Keele (Citation2007). The terminologies linked to each PICOC element are detailed in Table 1, which facilitates a rigorous and systematic examination in line with the research’s predefined goals.

Table 1. PICOC.

Thus, after applying the PICOC, the search string used in this article was: (‘PCA’ OR ‘principal component analysis’) AND (‘multi-criteria’ OR ‘MCDM’ OR ‘MCDA’ OR ‘multicriteria’ OR ‘multi-criteria decision analysis’ OR ‘multi-criteria decision making’ OR ‘multicriteria decision making’ OR ‘multicriteria decision analysis’).

In crafting the search strategy for this SLR, the focus was on unearthing literature that extensively covered the utilization and practices of decision support methods involving MCDM and PCA. This was reflected in the precise search strings employed. The rationale behind forgoing additional search terms for the Outcome and Context categories is anchored in the belief that the comprehensive search string already efficaciously encompasses the spectrum of publications detailing the outcomes of these methodologies and the contexts within which they are operationalized. The Outcome category aims to unveil patterns and voids in MCDM and PCA applications for bolstering decision-making, ascertainable through the studies garnered by the extant search string, thus negating the necessity for supplementary terms. This string’s inclusivity ensures the aggregation of studies addressing the outcomes and ramifications of MCDM and PCA methodologies across diverse decision-making milieus in line with articulated research queries. Regarding Context, the inquiry targets decision-support methods active within academic and societal realms, inherently captured by the terminologies MCDM and PCA. Consequently, there is no impetus to enunciate additional search terms, as the research ambit already incorporates scrutinizing these methods’ employment in assorted practical scenarios, as mirrored in the collated publications.

This methodology ensures a focused review, promoting a detailed and in-depth discourse in the domains demarcated by the research questions. It averts the dilution of the investigative scope with search terms that are either too expansive or too specialized, which could deflect from the investigation’s core thrust.

Thus, to fulfill the objective of this study, the following RQs were formulated:

RQ1: What techniques are employed by the decision-support methods identified concerning the generation of criteria weights and performance evaluations of the alternatives?

RQ2: What procedures are used to verify the adequacy of the PCA?

3.2. Selection method

Various methodologies have been documented in the research on PCA and MCDM. However, as indicated by the findings of this study, SLRs that specifically examine the combined use of PCA and MCDM in decision-support methodologies are still lacking, a gap this article aims to address.

3.2.1. Inclusion and exclusion criteria

In pursuit of a holistic examination, literature from 2000 onwards was scrutinized, adhering to the inclusion and exclusion parameters delineated in Table 2. The SLR adhered to a structured protocol, which is crucial for upholding neutrality and mitigating bias during the research process.

Table 2. Inclusion and exclusion criteria.

The search was conducted using the IEEE Xplore, Scopus, and Web of Science databases throughout October 2023. As shown in Table 2, the year of publication, type of publication, and language were used as search filters, covering works from 2000 to 2023, articles published in journals and conferences, and articles in English.

3.2.2. Quality assessment (QA)

The quality assessment phase was integral to our SLR, adhering to a structured checklist based on the framework of Zhou et al. (Citation2015), which stratifies the quality of studies into four pivotal domains: Reporting, Rigor, Credibility, and Relevance. In this study, the checklist items were codified as QA1 through QA5, and each was evaluated systematically using a Likert-type scale ranging from 0 to 2, as detailed in Table 3.

Table 3. Quality assessment.

In executing the quality assessment for study selection, a stringent cut-off threshold was instituted; studies scoring below 8 out of a possible total of 10 were deemed not to meet the requisite standard for inclusion. This threshold was established to ensure the inclusion of only studies with comprehensive and transparent methodological descriptions, thus reflecting substantial rigor and relevance. Moreover, we mandated an exclusion criterion for the generation of weights (QA2) and the performance evaluation of alternatives (QA3). Studies failing to score in these critical areas were excluded from the review, regardless of their overall scores. This measure was used to maintain methodological consistency for MCDM and PCA applications across the selected studies, which is crucial for ensuring the validity and practical relevance of the review’s findings to the field.
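The inclusion rule described above can be expressed as a short check. The sketch assumes that each of QA1 through QA5 receives a score between 0 and 2, that "failing to score" means a score of 0, and that scores are stored in a dictionary keyed by item code; the function name and the sample studies are hypothetical.

```python
def passes_quality_assessment(scores, threshold=8, mandatory=("QA2", "QA3")):
    """Return True if a study meets the quality criteria for inclusion.

    scores: dict with keys "QA1" to "QA5", each scored from 0 to 2.
    threshold: minimum total score (8 out of a possible 10).
    mandatory: items on which a study must score above 0 regardless of total.
    """
    for item, value in scores.items():
        if not 0 <= value <= 2:
            raise ValueError(f"{item} must be between 0 and 2, got {value}")
    if sum(scores.values()) < threshold:
        return False
    return all(scores[item] > 0 for item in mandatory)

# Hypothetical studies.
study_a = {"QA1": 2, "QA2": 2, "QA3": 2, "QA4": 1, "QA5": 1}  # total 8
study_b = {"QA1": 2, "QA2": 0, "QA3": 2, "QA4": 2, "QA5": 2}  # total 8, QA2 = 0
study_c = {"QA1": 2, "QA2": 1, "QA3": 1, "QA4": 2, "QA5": 1}  # total 7

print(passes_quality_assessment(study_a))
print(passes_quality_assessment(study_b))
print(passes_quality_assessment(study_c))
```

The second study shows why the mandatory items matter: it reaches the threshold but is still excluded because it does not report how the criteria weights were generated.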

As for PCA validation tests, such as the Kaiser, Kaiser-Meyer-Olkin (KMO), and Bartlett tests, although they are considered in the RQs, we chose not to include them in QA. This decision was made with the understanding that the presence or absence of these tests does not intrinsically disqualify a study but instead offers a valuable discussion point. Therefore, they will enrich the discussion and critical analysis of the body of SLR, providing insights into current practices and potential areas for future investigation and methodological improvement in applying PCA within the context of MCDM.
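For readers unfamiliar with these tests, the sketch below computes the statistic of Bartlett's test of sphericity, which checks whether the correlation matrix differs enough from an identity matrix for PCA to be worthwhile. It uses the standard formula chi2 = -[(n - 1) - (2p + 5)/6] ln|R| with p(p - 1)/2 degrees of freedom. The correlation matrix and sample size are hypothetical, the determinant helper is written only for this example, and the p-value lookup is deliberately left out because it needs a chi-square distribution that the Python standard library does not provide.

```python
import math

def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for i in range(n):
        pivot = max(range(i, n), key=lambda r: abs(a[r][i]))
        if abs(a[pivot][i]) < 1e-12:
            return 0.0
        if pivot != i:
            a[i], a[pivot] = a[pivot], a[i]
            det = -det  # a row swap flips the sign
        det *= a[i][i]
        for r in range(i + 1, n):
            factor = a[r][i] / a[i][i]
            for c in range(i, n):
                a[r][c] -= factor * a[i][c]
    return det

def bartlett_sphericity(corr, n_obs):
    """Chi-square statistic and degrees of freedom of Bartlett's test."""
    p = len(corr)
    det = determinant(corr)
    if det <= 0:
        raise ValueError("correlation matrix must be positive definite")
    chi2 = -((n_obs - 1) - (2 * p + 5) / 6) * math.log(det)
    dof = p * (p - 1) // 2
    return chi2, dof

# Hypothetical correlation matrix of three criteria, 50 observations.
corr = [
    [1.0, 0.8, 0.6],
    [0.8, 1.0, 0.7],
    [0.6, 0.7, 1.0],
]
chi2, dof = bartlett_sphericity(corr, n_obs=50)
print(f"chi2 = {chi2:.2f}, dof = {dof}")
```

A large statistic relative to the chi-square distribution with the reported degrees of freedom indicates that the criteria are correlated enough for factor extraction; uncorrelated criteria give a statistic of zero.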

illustrates the steps used to choose the most relevant documents on the topic, as Croft et al. (Citation2023) proposed. As depicted in , the initial phase of the research involved a comprehensive analysis of the articles, starting with an evaluation of titles and abstracts. This preliminary scrutiny was facilitated using metadata and Excel for efficient organization and assessment. Initially, the search yielded 41 studies from IEEE Xplore, 421 from Scopus, and 309 from Web of Science, reflecting the wide-ranging interest and research activity in PCA and MCDM decision-support methods.

A complete reading of the articles was then carried out with subsequent quality analysis, leaving 63 articles at the end of the process. Among these 63 articles, the studies that proposed new methods to support decision-making with PCA and MCDM were then identified, totaling 16 selected studies. The methodology outlined in exemplifies this study’s rigorous and systematic approach. This highlights the process of selection and refinement that led to the identification of the most impactful and methodologically robust studies within the vast array of literature on PCA and MCDM.

4. Results and analysis

This section presents an analysis of the results obtained in this study. The methodology used in the review aligns with the protocol thoroughly outlined in the previous section, and the results are presented to address the proposed RQs.

Table 4 lists the 16 studies that proposed new methods based on PCA and MCDM. Where the original studies did not explicitly name their methodologies, the summary table uses the designations that most accurately describe their functions. This approach facilitates a concise and accurate synthesis of the findings. Each method is designed to meet specific application contexts and broaden the scope of multicriteria analyses. This diversification of approaches suggests the adaptive capacity of these methodologies, as they are applied in various contexts, such as weapon system selection and logistical performance evaluation.

Table 4. Summarized SLR results.

In the 16 studies analyzed in this SLR, applications were identified in several areas: Zhang et al. (Citation2019) established a methodology leveraging the synergies between AHP and PCA to enhance performance evaluation in logistics; Mishra and Mishra (Citation2018) introduced a model that melds PCA, AHP, and Marketing Flexibility Analysis (MFA) to assess adaptability in the marketing domain; Lee et al. (Citation2010) put forth a method that incorporates AHP, PCA, and GP for weapons system selection; Guo and Zhang (Citation2010) delineated a methodology for performance appraisal of third-party logistics firms, utilizing AHP and PCA; Diaby et al. (Citation2016) devised the ELICIT method, which employs PCA, Monte Carlo simulations, and expert assessments for weight elicitation within healthcare; Zhou et al. (Citation2018) created a model to analyze water resource sustainability, considering group decision-making and employing AHP and PCA; Liu et al. (Citation2018) proposed a methodology for airborne threat assessment by combining Kernel PCA (KPCA) with the TOPSIS technique; Lin et al. (Citation2016) developed a methodology for digital music platform selection, integrating PCA with VIKOR, ANP, and DEMATEL; Abonyi et al. (Citation2022) presented three methods that utilize PCA for the automatic evaluation of criterion weights; Roulet et al. (Citation2002) introduced ORME, a method applying PCA and ELECTRE for building evaluation; Q. Li et al. (Citation2023) introduced the WIRI method for the assessment of water flood risks; Hajiagha et al. (Citation2018) proposed the Total Area based on Orthogonal Vectors (TAOV) method, which evaluates alternatives using efficient orthogonal vectors; Xianjun et al. (Citation2020) introduced a method utilizing PCA-TOPSIS for practical multi-objective evaluation in Humanware Service; Curran et al. (Citation2020) assessed Swiss organic farming’s sustainability using Sustainability Assessment and Monitoring RouTine (SMART) tools and PCA; Curran et al. (Citation2020) proposed an MCDM-AHP-PCA model to enhance government public services; and Stanković et al. (Citation2021) analyzed the circular economy in European Union countries using PCA and PROMETHEE.

4.1. Techniques used to generate the alternatives’ criteria weights and performance evaluations (RQ1)

shows the methods based on PCA and MCDM, explaining how the weights and performance evaluations were generated and how the studies performed PCA validation tests. The presented methods cover a variety of contexts and objectives. Specific applications include logistics performance evaluation, marketing flexibility, weapon system selection, evaluation of third-party logistics companies, elicitation of weights in the healthcare area, sustainability analysis of water resources, air threat assessment, digital music platform selection, and water flood risk assessment. Each method, through its specific techniques, offers a different approach to solving problems in a different domain, evidencing how broadly these methodologies can be used in multicriteria analysis.

According to Parreiras et al. (Citation2010), one of the main sources of uncertainty in decision-making is the assignment of weights to the evaluation criteria, since assessing the importance of each criterion within the selected set is both essential and complex. To determine the criteria weights, either a parity (pairwise) evaluation can be applied (Saaty, Citation1980), which takes the DM's judgment into account, or the weights can be generated objectively from the dataset under analysis, without requiring the DM's opinion on the criteria.

lists the methods used to calculate the criteria weights based on the DMs’ evaluation, that is, methods that take the DMs’ opinion into account when addressing the problem. Approximately 69% of the methods analyzed employed the subjective evaluation of the DM to generate the criteria weights, whereas 31% adopted objective methods. Despite its widespread use, parity evaluation can demand significant cognitive effort and occasionally produce logical inconsistencies. For instance, when option A is preferred over option B and option B is preferred over option C, logic dictates that option A should be preferred over option C. However, such consistency is not always observed in subjective evaluations.
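The transitivity problem described above can be quantified. The sketch below is a minimal illustration, not a procedure taken from the reviewed studies: it assumes the standard AHP consistency ratio (CR) proposed by Saaty, with the conventional 0.10 acceptance threshold, and approximates the priority vector by power iteration. The two judgment matrices are hypothetical.

```python
# Random consistency index (RI) values tabulated by Saaty for matrix orders 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights_and_cr(pairwise, iterations=100):
    """Return (weights, consistency_ratio) for a reciprocal pairwise matrix."""
    n = len(pairwise)
    weights = [1.0 / n] * n
    # Power iteration approximates the principal eigenvector (the priority vector).
    for _ in range(iterations):
        product = [sum(pairwise[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]
    # Estimate the principal eigenvalue as the mean ratio (A w)_i / w_i.
    product = [sum(pairwise[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    random_index = RANDOM_INDEX[n]
    ratio = consistency_index / random_index if random_index > 0 else 0.0
    return weights, ratio


# Hypothetical transitive judgments: A is 2x B, B is 2x C, hence A is 4x C.
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
# Hypothetical circular judgments: A beats B, B beats C, yet C beats A.
circular = [[1, 3, 1 / 3], [1 / 3, 1, 3], [3, 1 / 3, 1]]

weights_ok, cr_ok = ahp_weights_and_cr(consistent)
weights_bad, cr_bad = ahp_weights_and_cr(circular)
print(f"consistent judgments: CR = {cr_ok:.3f}")
print(f"circular judgments:   CR = {cr_bad:.3f}")
```

A CR close to zero indicates transitive judgments, whereas the circular matrix yields a CR far above 0.10, which would normally prompt the DM to revise the comparisons.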

A review of newly developed methods reveals a spectrum of approaches in the performance evaluation of alternatives. Methods such as PCA-AHP amalgamate PCA and AHP techniques, while others such as ELICIT and AHP-PCA and Communalities do not explicitly produce a performance evaluation. By contrast, the Group AHP-PCA method employs both PCA and AHP to evaluate alternatives, and KPCA-TOPSIS utilizes the TOPSIS method following dimensionality reduction by PCA. Approaches incorporating VIKOR, such as PCA-VIKOR, ANP, and DEMATEL, aim to optimize decision-making through a consensus-oriented approach.

Further methodologies, such as P-SPCA, P-PFA, and P-SRD, utilize PROMETHEE-GAIA for a more detailed and visual analysis of alternatives. ORME adopts sophisticated MCDM methods, namely ELECTRE III and IV. WIRI employs TOPSIS for direct, distance-based evaluation, and TAOV relies purely on PCA for assessment. By contrast, SMART-PCA combines PCA with managerial decision analysis, offering a more holistic approach.

The critical analysis of the various decision support methods incorporating MCDM and PCA techniques brings to light each approach’s strengths and weaknesses. PCA-AHP and Group AHP-PCA demonstrate robustness in integrating comprehensive criteria assessment, and the latter can overcome the challenges arising from complexity, subjectivity, and lack of consensus within the group in evaluating criteria (Davies, Citation1994). However, they can fully capture the nuanced preferences of decision-makers only with significant input from them, which can lead to cognitive overload and potential inconsistencies. Conversely, methods such as ELICIT, which do not generate performance evaluations, may offer simplicity and reduce the mental burden at the expense of depth in the decision analysis. The use of TOPSIS in methods such as KPCA-TOPSIS and WIRI provides a clear, quantifiable ranking of alternatives. However, it may oversimplify complex decision contexts that require a more nuanced analysis.
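To make the distance-based ranking produced by TOPSIS concrete, the following is a minimal sketch of the generic TOPSIS procedure (vector normalization, weighting, distances to the ideal and anti-ideal points, closeness coefficient). It is not the KPCA-TOPSIS or WIRI implementation; in those methods the inputs would first pass through PCA. The function name, data, and weights are hypothetical, and every criterion column is assumed to contain at least one nonzero value.

```python
import math


def topsis(matrix, weights, benefit):
    """Return closeness coefficients (higher is better) for each alternative.

    matrix  : rows are alternatives, columns are criteria
    weights : criterion weights summing to 1
    benefit : True where higher values are better, False for cost criteria
    """
    n_crit = len(matrix[0])
    # Vector-normalize each column, then apply the criterion weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix]
    # Ideal and anti-ideal points depend on the direction of each criterion.
    columns = list(zip(*weighted))
    ideal = [max(col) if benefit[j] else min(col) for j, col in enumerate(columns)]
    anti = [min(col) if benefit[j] else max(col) for j, col in enumerate(columns)]
    scores = []
    for row in weighted:
        d_plus = math.dist(row, ideal)   # distance to the ideal point
        d_minus = math.dist(row, anti)   # distance to the anti-ideal point
        total = d_plus + d_minus
        # If all alternatives coincide, no ranking is possible; return a neutral 0.5.
        scores.append(d_minus / total if total > 0 else 0.5)
    return scores


# Hypothetical data: three alternatives, two benefit criteria and one cost criterion.
alternatives = [[7.0, 9.0, 300.0], [8.0, 7.0, 250.0], [5.0, 6.0, 400.0]]
scores = topsis(alternatives, weights=[0.4, 0.3, 0.3], benefit=[True, True, False])
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(scores, ranking)
```

The single closeness coefficient is what makes the ranking easy to read, and it is also why the method can hide trade-offs between criteria that a DM might want to inspect separately.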

Placing these findings within the broader landscape of MCDM literature, it is evident that the pursuit of methodological innovation is both a response to the complexities of modern decision-making environments and a reflection of evolution in the field. The diversity of approaches captured in this SLR points to a vibrant and dynamic domain where traditional methods are being re-evaluated and new methodologies are being explored to better meet the demands of efficiency, precision, and adaptability. This ongoing evolution underscores the necessity for continuous research, particularly for validating the effectiveness of these methods in practice and their alignment with the theoretical underpinnings of MCDM.

This diversity of methods reflects the evolution of multicriteria decision support and the pursuit of techniques that can provide robust and adaptable analyses for varying decision contexts.

4.2. Procedures for verifying the adequacy of the PCA (RQ2)

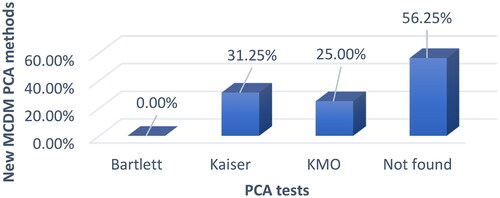

presents various articles that employed tests to validate PCA without explicitly specifying the type of test conducted. While contextual clues allow the tests performed to be inferred in some cases, the validation tests remain unspecified in others. This lack of identification is evident: approximately 56% of the methods analyzed did not report PCA validation tests.

4.2.1. PCA and tests by Kaiser, Bartlett, and KMO

Factor analysis is a technique that can be employed in two distinct ways: as an exploratory approach to reduce the dimensionality of data and create factors from the original variables, and as a hypothesis confirmation tool, validating the reduction of data to a predefined factor or dimension (Reis, Citation2001). The origins of factor analysis can be traced back to pioneering studies conducted by Pearson (Citation1896) and Spearman (Citation1904). Pearson played a crucial role in developing the mathematical model underlying the concept of correlation, whereas Spearman introduced a methodology for evaluating the relationships between variables. A few decades later, Hotelling (Citation1933) coined the term ‘Principal Component Analysis’ to describe an approach that aims to extract components by optimizing the variance of the original data.

According to Fávero and Belfiore (Citation2017), to extract consistent and reliable information from PCA, established tests are required, such as the KMO test (Kaiser, Citation1970), Kaiser’s criterion (Ruscio & Roche, Citation2012), and the Bartlett sphericity test (Bartlett, Citation1954).

4.2.1.1. Bartlett sphericity test

This test compares Pearson’s correlation matrix with an identity matrix of the same dimension. If the differences between the corresponding off-diagonal values of these two matrices are not statistically significant at a given significance level, factor extraction is not appropriate. In this scenario, as Fávero and Belfiore (Citation2017) point out, Pearson’s correlations between each pair of variables are statistically equivalent to zero, making it unfeasible to extract factors from the original variables. The null hypothesis $H_0$ and the alternative hypothesis $H_1$ of the Bartlett sphericity test are defined according to Equations (1) and (2), respectively:

$$H_0: \rho = I \tag{1}$$

$$H_1: \rho \neq I \tag{2}$$

The statistic corresponding to this test is given by Equation (3):

$$\chi^2_{\text{Bartlett}} = -\left[(n - 1) - \frac{2k + 5}{6}\right] \ln |D| \tag{3}$$

with $\frac{k(k-1)}{2}$ degrees of freedom, where $n$ is the sample size, $k$ corresponds to the number of variables, and $D$ is the determinant of the correlation matrix $\rho$ (Fávero & Belfiore, Citation2019).

Following the explanation of Fávero and Belfiore (Citation2017), the Bartlett sphericity test plays the role of verifying, at a certain level of statistical significance and based on the number of degrees of freedom, whether the value resulting from the $\chi^2_{\text{Bartlett}}$ statistic exceeds the corresponding critical value. When this condition is fulfilled, it is possible to conclude that the Pearson correlations between the pairs of variables are statistically distinct from zero, indicating that extracting factors from the original variables is feasible.

Pearson’s correlation is a metric that evaluates the degree of linear relationship between two metric variables, ranging from −1 to 1 (Egghe & Leydesdorff, Citation2009). When values approach the extremes of this range, a robust linear relationship between the two variables under scrutiny is indicated, which can be highly relevant for extracting a single factor (Fávero & Belfiore, Citation2019). However, when the correlation approaches 0, this suggests that the linear relationship between the two variables is practically non-existent (Fávero & Belfiore, Citation2017; Smith, Citation2015).
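The Bartlett statistic discussed in this subsection can be sketched in a few lines. The example below assumes the usual form of the statistic, namely minus [(n − 1) − (2k + 5)/6] times the natural logarithm of the determinant of the correlation matrix, with k(k − 1)/2 degrees of freedom. The helper names and the correlation matrix are hypothetical, and the determinant is computed with plain Gaussian elimination so that the block needs only the standard library.

```python
import math


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square statistic, degrees of freedom).

    Assumes a non-singular correlation matrix (determinant > 0).
    """
    k = len(corr)
    d = determinant(corr)
    statistic = -((n_obs - 1) - (2 * k + 5) / 6) * math.log(d)
    dof = k * (k - 1) // 2
    return statistic, dof


# Hypothetical correlation matrix for three criteria observed on 50 alternatives.
corr = [[1.0, 0.6, 0.5], [0.6, 1.0, 0.4], [0.5, 0.4, 1.0]]
statistic, dof = bartlett_sphericity(corr, n_obs=50)
print(f"chi2 = {statistic:.2f}, degrees of freedom = {dof}")
```

The statistic is then compared with the chi-square critical value for the given degrees of freedom (7.815 at the 5% level for 3 degrees of freedom). An identity correlation matrix has a determinant of 1 and therefore a statistic of zero, which is the case in which factor extraction is not appropriate.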

4.2.1.2. KMO test

The KMO test is a fundamental tool in the context of PCA: it is a measure of sampling adequacy widely used to assess whether the available data are appropriate for applying the dimensionality reduction technique. Its primary purpose is to verify that the underlying structure in the data is sufficiently robust to be efficiently extracted by PCA (2017).

The KMO test evaluates the correlations between the observed variables. This analysis aims to determine whether the variables are meaningfully interrelated, which is crucial for PCA validity. The test result is a KMO index ranging from zero to one. Values closer to 1 indicate greater data adequacy for applying PCA (Shrestha, Citation2021). Values above 0.6 are generally considered acceptable, while values above 0.8 suggest excellent adequacy.

In addition to the adequacy indication, the KMO provides information on the redundancy of variables. This highlights whether variables contribute little to the overall structure of the data, which is valuable for the decision to include or exclude variables in the analysis. This ensures that PCA is conducted efficiently, considering only the most relevant variables. Thus, KMO testing plays a critical role in the initial phase of PCA, providing a detailed understanding of the data structure and guiding decisions regarding the feasibility of applying PCA, contributing significantly to more reliable results and accurate interpretations.
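As an illustration of how the KMO index can be obtained, the sketch below assumes the common formulation in which the index is the sum of squared simple correlations divided by the sum of squared simple correlations plus squared partial correlations, with the partial correlations derived from the inverse of the correlation matrix. The function names and the example matrix are hypothetical.

```python
import math


def invert(matrix):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [value / scale for value in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    k = len(corr)
    inv = invert(corr)
    sum_r2 = 0.0  # squared simple correlations (off-diagonal)
    sum_p2 = 0.0  # squared partial correlations (off-diagonal)
    for i in range(k):
        for j in range(k):
            if i != j:
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                sum_r2 += corr[i][j] ** 2
                sum_p2 += partial ** 2
    total = sum_r2 + sum_p2
    # With no correlation at all the index is undefined; report 0.0 in this sketch.
    return sum_r2 / total if total > 0 else 0.0


# Hypothetical correlation matrix for three criteria.
corr = [[1.0, 0.6, 0.5], [0.6, 1.0, 0.4], [0.5, 0.4, 1.0]]
print(f"KMO = {kmo(corr):.3f}")
```

Small partial correlations relative to the simple correlations push the index toward 1, which is the situation in which the variables share enough common variance for PCA to be worthwhile.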

4.2.1.3. Kaiser’s test

Ruscio and Roche (Citation2012) highlight the latent root criterion, also known as Kaiser’s criterion, which retains only the factors associated with eigenvalues greater than 1; according to Fávero and Belfiore (Citation2017), these factors account for the highest percentage of variance shared by the original variables. In this context, F1, the main factor, is formed by the highest percentage of variance shared by the original variables (Fávero & Belfiore, Citation2017). Based on the latent root criterion, it is presumed that the loadings of the original variables on factors with eigenvalues less than one are relatively low because the most substantial loadings have probably already been attributed to the previously extracted factors with higher eigenvalues (Fávero & Belfiore, Citation2019). Similarly, original variables that share a limited proportion of variance with others tend to exhibit significant factor loadings for only one specific factor. If this situation applies to all the original variables, the disparity between the correlation matrix ρ and the identity matrix I will be minimal, resulting in a very low Bartlett χ² statistic, which consequently renders the factor analysis inadequate (Fávero & Belfiore, Citation2017).
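The latent root criterion can be illustrated with a short sketch. It assumes the eigenvalues of the correlation matrix have already been computed; the function name and the eigenvalues are hypothetical. For standardized variables the eigenvalues sum to the number of variables, so each eigenvalue divided by that sum gives the share of variance carried by the corresponding factor.

```python
def kaiser_criterion(eigenvalues):
    """Return the retained eigenvalues (> 1) and their share of total variance."""
    ordered = sorted(eigenvalues, reverse=True)
    retained = [value for value in ordered if value > 1.0]
    total = sum(ordered)
    shares = [value / total for value in retained]
    return retained, shares


# Hypothetical eigenvalues of a 5-variable correlation matrix (they sum to 5).
eigenvalues = [2.6, 1.3, 0.6, 0.3, 0.2]
retained, shares = kaiser_criterion(eigenvalues)
print(f"factors kept: {len(retained)}, cumulative variance: {sum(shares):.0%}")
```

In this hypothetical case two factors are kept, so five criteria are summarized by two components, which is the reduction that later affects how criterion-level information is recovered from the factors.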

4.2.2. PCA tests prevalence

Notably, although Fávero and Belfiore (Citation2017) stated that the Bartlett test is more prevalent than the KMO test for validating PCA results, none of the methods analyzed reported applying the Bartlett test. The test’s non-application in any of the methods scrutinized undermines the robustness of the PCA results and raises questions about the dimensional integrity of the datasets. Given that the Bartlett test provides a crucial check against the identity matrix assumption, its omission could lead to overestimating component relevancy, potentially skewing the decision-making process.

4.3. Bibliometric data

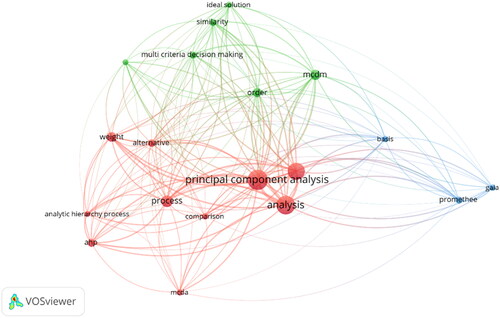

In the initial segment of the bibliometric analysis subsection, it is essential to articulate that bibliometric exploration was undertaken using a more extensive compilation of articles, explicitly employing VOSviewer and Bibliometrix (Aria & Cuccurullo, Citation2017) as analytical tools. This broader dataset comprised 63 articles that incorporated methodologies based on PCA and MCDM before implementing quality assessment criteria. This approach was adopted to provide a comprehensive overview of the prevailing trends and themes within the research landscape, encompassing PCA and MCDM, offering a panoramic view of the field.

The inclusion of these additional articles in the bibliometric analysis, despite their exclusion from the subsequent detailed methodological review post-quality assessment, served a distinct purpose. It allows for a macroscopic examination of the broader research context, encompassing a more comprehensive range of studies that might not have met the stringent criteria for detailed analysis but still contribute valuable insights into the overall patterns and developments within the PCA and MCDM research domains. This bifurcation in the analysis approach ensures the maintenance of the study’s methodological rigor while expanding the scope of the investigation to capture a more holistic view of the academic discourse surrounding PCA and MCDM methodologies. Consequently, this methodological delineation upholds the integrity and reliability of the research, ensuring a balanced and thorough exploration of the subject matter.

As will be seen in this subsection, clusters of keywords, annual production rates, and worldwide production of the studied theme were analyzed.

In , a substantial connection between MCDM and PCA methods can be observed, as evidenced by the links between the most prominent methods in the literature, such as PROMETHEE, GAIA, AHP, ANP, and TOPSIS (represented by the keyword ‘ideal solution’). This analysis highlights the close relationship between the two methodologies and the relevance of their integration in various research and decision-making contexts. In addition, there is a discernible trend in the nodes representing the articles on MCDM, demonstrating a continuous development in this field, confirming the theme’s relevance, and reinforcing the present research’s empirical and theoretical basis.

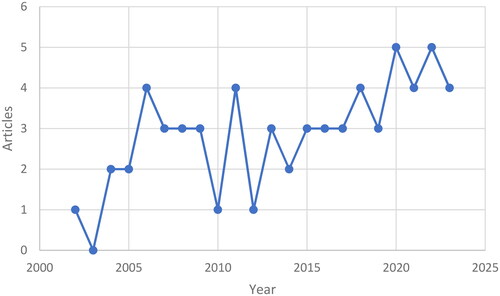

The data in , representing the number of papers published yearly from 2002 to 2023, reveal a growing trend in academic production. It is observed that, throughout this period, the initial production in 2002 registered one work, reaching peaks of four works in 2006, 2011, and again in 2018. In addition, production has recently shown a steady increase, reaching its highest level by 2022, with five papers published. This upward trend suggests a growing interest in the field of study and indicates the continued potential for further research. This increase in production, especially in recent years, reflects the growing relevance and demand for research involving the MCDM and PCA methods, offering a constantly expanding field.

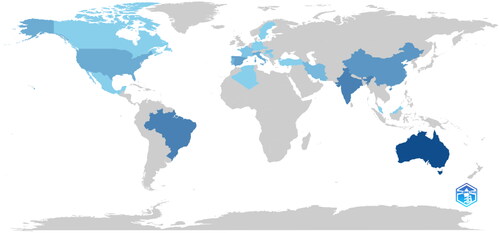

shows production by country, ranging from 1 to 13. The lowest values, such as Germany, Canada, Croatia, Kosovo, Mauritius, Mexico, Serbia, Sweden, Turkey, and other countries with fewer works, are represented in shades closer to the grey spectrum. On the other hand, countries such as Australia, Brazil, India, China, Spain, Italy, and the United States, which present a more expressive number of works, are represented in tones that vary toward the blue spectrum.

5. Discussion

This section discusses the findings of the SLR.

5.1. Objective and subjective weights of criteria

Analyzing the data from Subsection 4.1, it can be concluded that there is a tendency to use the DM's assessment to calculate the weights. The advantage of this choice lies in the fact that the DM's opinions are considered in the problem. Conversely, PCA can reduce the amount of data and generate meaningful information for decision-making (Fávero & Belfiore, Citation2019). Following this reasoning, a large amount of data often entails many criteria, which increases the number of parity evaluations required from the DM.

As seen in Lee et al. (Citation2010), the criteria weights were calculated using PCA and the MCDM-AHP method. Despite being a well-established method in the literature, AHP requires the DMs to perform a parity (pairwise) evaluation of the criteria, which demands considerable cognitive effort. Carrying out the parity assessment requires $n(n-1)/2$ comparisons (Saaty, Citation1980), where $n$ is the number of criteria. Other methods in the literature calculate weights without performing such an evaluation. This may be more advantageous, particularly when the dataset has many criteria, which is probable when PCA is used. For example, with 20 criteria, $20 \times 19 / 2 = 190$ comparisons are needed, which is a significant number. Alternative methods in the literature that calculate weights without parity evaluation therefore present a substantial advantage in situations that require agile and accurate decisions.

Looking at , another emerging pattern is noticeable: decision-support methods that combine PCA with already renowned MCDM models. There are, however, other methods in the literature, such as the Gaussian-AHP (Dos Santos et al., Citation2021) and the MEthod based on the Removal Effects of Criteria (MEREC) (Keshavarz-Ghorabaee et al., Citation2021), which, although recent, show robust results and can bring a new approach to PCA-MCDM synergy. In these methods, the weights are calculated automatically from the information in the dataset of alternatives and criteria.

Thus, a suggestion for future work arises from the proposal by Ecer and Pamucar (Citation2022), which introduced an MCDM method that adopts an automated approach to assessing criterion weights, eliminating the need for parity assessment between variables. Lee et al. (Citation2010), in turn, proposed a different approach in which the weights are calculated using PCA and AHP, which requires a parity assessment of the criteria. An optimization strategy consists of combining what has been done by Lee et al. (Citation2010) and Ecer and Pamucar (Citation2022); that is, the weight calculation can be performed based on the PCA and the effects of removing the criteria (Keshavarz-Ghorabaee et al., Citation2021), eliminating the need for evaluation by the DM. The proposed approach would unite the best of both methods, combining efficiency with reliability, resulting in a new multicriteria methodology for generating weights.

Furthermore, the automation of weight generation, focusing on efficiency and minimizing the cognitive load of the DM, enhances the practicality of the approach while simultaneously removing subjective elements from the process. This strategy ensures that the weights assigned to the criteria are determined through an objective data-driven method, thus diminishing the impact of personal judgments or biases in decision-making. Consequently, the outcomes are more reliable and replicable, providing a robust foundation for coherent and well-justified decisions.

5.2. Alternatives’ assessment

PCA’s ability to reduce the complexity of large multidimensional datasets and create representative indices highlights its remarkable application in alternative performance evaluations. It is particularly effective for dealing with substantial volumes of data (Akande et al., Citation2019; Marsal-Llacuna et al., Citation2015; Wei et al., Citation2016).

After analyzing , the relevance of MCDM methods in generating the performance of the alternatives was verified, as seen in (Abonyi et al., Citation2022; Q. Li et al., Citation2023; Lin et al., Citation2016; Liu et al., Citation2018; Roulet et al., Citation2002), which used the TOPSIS, VIKOR, PROMETHEE-GAIA, and ELECTRE III and IV methods. One factor that needs to be considered is that, as proposed by Dugger et al. (Citation2022), the multiplication of the weights by the values of the main factors, as indicated by Fávero and Belfiore (Citation2019), may result in a smaller number of principal factors than the number of criteria when applying the Kaiser test, which was observed by (Q. Li et al., Citation2023; Lin et al., Citation2016; Zhang et al., Citation2019).

To address this discrepancy, and in particular to correctly express the amount of information that each criterion contributes to the resulting main factors, the communalities could be used alongside the weights of each criterion to generate the scores of the alternatives. Communalities represent each variable’s total variance captured by the extracted factors, provided that these factors have eigenvalues greater than 1 (Ruscio & Roche, Citation2012). Using communalities to represent the variance shared between the variables and the extracted factors has yet to be explored; it can contribute to more accurate and complete assessments and is a suggestion for future studies. Integrating communalities within the performance evaluation would improve the extraction of criterion-specific information from the corresponding principal factors. This approach helps identify the critical elements within each criterion, even when they are assimilated into more comprehensive components, thereby refining the accuracy and relevance of the analysis for informed decision-making.
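A minimal sketch of how communalities could be computed is given below. It assumes the usual definition, in which the communality of a criterion is the sum of its squared loadings on the retained factors. Normalizing the communalities into weights is only one possible way of using them, introduced here for illustration and not taken from any of the reviewed studies; the loadings are hypothetical.

```python
def communalities(loadings):
    """Sum of squared loadings of each criterion on the retained factors."""
    return [sum(value ** 2 for value in row) for row in loadings]


def communality_weights(loadings):
    """Normalize communalities so they sum to 1 (an illustrative weighting choice)."""
    values = communalities(loadings)
    total = sum(values)
    return [value / total for value in values]


# Hypothetical loadings: 3 criteria (rows) on 2 retained factors (columns).
loadings = [[0.9, 0.1], [0.6, 0.6], [0.3, 0.8]]
h2 = communalities(loadings)
weights = communality_weights(loadings)
for index, (value, weight) in enumerate(zip(h2, weights), start=1):
    print(f"criterion {index}: communality = {value:.2f}, weight = {weight:.3f}")
```

A communality close to 1 means that the retained factors preserve almost all of the criterion's variance, whereas a low value signals that much of its information was discarded together with the factors that failed the Kaiser criterion.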

Regarding MCDM methods, for non-beneficial criteria, where higher values indicate less desirable results for the DM, it is crucial to apply a specific treatment that considers this behavior (Belton & Stewart, Citation2002). Although it is not directly linked to the focus of this SLR, it was observed in the selected articles that there are still no decision support methods that apply a special treatment to non-beneficial criteria in PCA. Incorporating such a treatment into PCA would improve the fidelity of the methodology in delineating the advantages and disadvantages associated with each alternative. It ensures that criteria for which larger values signify less favorable outcomes are properly acknowledged and weighted in the analysis. Consequently, PCA aligns more closely with the criteria of the decision-making process and presents a more balanced perspective. The analytical results then mirror the critical aspects involved more accurately, yielding more targeted insights for a comparative evaluation of the options and more robust and trustworthy rankings.
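One simple way to give non-beneficial criteria such a treatment is sketched below. This is an assumption made for illustration, not a technique reported in the selected articles: each criterion is standardized, as is common before PCA, and the sign of the cost criteria is flipped so that a higher value always means a better outcome. The function name and data are hypothetical, and every criterion is assumed to vary across alternatives.

```python
import statistics


def standardize_with_direction(matrix, benefit):
    """Z-score each criterion and flip the sign of non-beneficial (cost) criteria."""
    columns = list(zip(*matrix))
    adjusted = []
    for values, is_benefit in zip(columns, benefit):
        mean = statistics.mean(values)
        spread = statistics.stdev(values)  # sample standard deviation
        sign = 1.0 if is_benefit else -1.0
        adjusted.append([sign * (value - mean) / spread for value in values])
    # Transpose back so that rows are alternatives again.
    return [list(row) for row in zip(*adjusted)]


# Hypothetical data: the first criterion is a benefit, the second is a cost.
data = [[10, 200], [20, 100], [30, 300]]
prepared = standardize_with_direction(data, benefit=[True, False])
for row in prepared:
    print(row)
```

After this step the alternative with the lowest cost receives the highest standardized value on that criterion, so factor scores derived from the prepared matrix no longer reward undesirable outcomes.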

5.3. PCA results

As seen in Subsection 4.2 and based on the data in , another emerging pattern is observed in the PCA validation tests. The PCA test results were verified in 44% of the methods, which supports the suitability and reliability of the values obtained from this unsupervised ML technique in those cases (Fávero & Belfiore, Citation2017). A recommendation for subsequent investigations is not only to perform these tests, such as KMO, Kaiser, and Bartlett (Bartlett, Citation1954; Kaiser, Citation1970; Ruscio & Roche, Citation2012), but also to report the values obtained and how they were obtained, providing a more transparent and robust approach to data analysis and contributing to the consistent advancement of knowledge in this domain. Scholars should explicitly detail the PCA validation tests utilized to enhance the methodological rigor of future research. Clear articulation of these procedures will not only bolster the credibility of the research but also facilitate the comparison and replication of study results. Moreover, the absence of specified validation methods hinders the evaluation of the reliability of PCA results, potentially impacting the decision-making process and the interpretation of complex datasets.

These findings also point to the necessity for a standardized approach to PCA validation. The adoption of consistent validation practices will contribute to advancing the field by ensuring the accuracy and applicability of PCA in decision support systems.

6. Threats to validity

The credibility of the outcomes of this SLR is considerable, yet it is pertinent to acknowledge the potential limitations that could impact its validity. The investigation was deliberately restricted to novel methodologies combining MCDM and PCA. Studies that did not explicitly clarify their alignment with the research questions or those deemed hybrid were excluded. This stringent selection criterion was instituted to enrich the granularity and specificity of the analysis concerning methodological advancements in the fusion of PCA and MCDM from 2000 to 2023. Furthermore, while the quality assessment criteria were meticulously adhered to, some influential studies may have been inadvertently excluded from the review.

The decision to include Mishra and Mishra’s (Citation2018) article, despite its use of fuzzy logic () while excluding similar articles, was based on the unique approach of this study. Mishra and Mishra’s (Citation2018) work stood out for its innovative integration of fuzzy logic with PCA and MCDM, providing valuable insights and a distinct application that closely aligns with the primary objectives of the SLR. This choice reflects a balance between adhering to the defined scope of the review and recognizing the unique contributions that enhance understanding in the field. The exclusion of other articles with fuzzy logic was a measure to maintain the focus of the SLR, prioritizing studies that more directly addressed the combination of PCA and MCDM unless they presented a particularly notable synergy or methodological innovation, as was the case in Mishra and Mishra’s (Citation2018) study.

The decision to restrict the search to the Scopus, IEEE Xplore, and Web of Science databases without applying the snowballing technique may represent a limitation in the scope of the search, potentially excluding relevant studies available in other sources. While this impact has been minimized by access to comprehensive, multidisciplinary databases meticulously analyzed by the authors, it is essential to recognize this potential limitation. In addition, the restricted time frame and the focus on the English language may have excluded earlier studies and research in other languages that could contribute additional perspectives on the topic, presenting a possible limitation in generalizing the results.

7. Conclusion

This SLR set out to explore and synthesize how MCDM and PCA are integrated within decision-support methodologies, addressing a significant gap in the existing literature. By systematically analyzing peer-reviewed studies published between 2000 and 2023 from the IEEE Xplore, Scopus, and Web of Science databases, this review evaluated 16 distinct methodologies.

The findings of this SLR highlight gaps and trends in current methodologies. Notably, 56% of the evaluated methods failed to verify PCA test results, raising concerns about the reliability of their outcomes. Furthermore, the absence of the Bartlett test in all reviewed methods underscores a significant oversight in validation practices. Most (69%) of the methods depended on subjective criteria weighting by decision-makers, which may affect the objectivity of the evaluations. The study concluded that communalities, essential for improving decision-making accuracy, were consistently overlooked, and no method adequately addressed non-beneficial criteria, indicating significant potential for methodological improvement.

In response to the growing need to manage large datasets and leverage emerging technologies, innovative methods like Gaussian-AHP and MEREC show a promising direction. These approaches improve the synergy between PCA and MCDM by automating weight calculations directly from the dataset, providing a more objective weight determination method. This evolution suggests an essential path for future research: developing decision support methods that not only automate weight generation but also integrate advanced multicriteria techniques and ensure rigorous validation of results through established PCA tests. It is crucial that future research not only performs but also explicitly discusses validation tests such as KMO, Kaiser, and Bartlett, fostering a more transparent approach to data analysis and the ongoing advancement of knowledge in this domain.

Finally, this SLR contributes to academic and scientific fields by providing a comprehensive and in-depth analysis of the approaches that integrate MCDM and PCA. By elucidating the trends, gaps, and opportunities for improvement identified in the reviewed research, this study offers valuable insights to researchers, practitioners, and decision-makers. These results enrich academic understanding and have practical implications for optimizing decision-making processes in several areas, cementing the importance of this SLR as a valuable guide for future research and practical applications.

Authors’ contributions

All authors contributed to the development of this SLR. AC, DP, RC, AT, IC, MM, and CJ were responsible for material preparation, design, data collection, interpretation of the data, and analysis. MS and CG contributed significantly, assisting in defining strategies for searching and selecting studies and ensuring comprehensiveness and precision in identifying relevant literature. AC was tasked with drafting the revised version of the document. All authors provided feedback on earlier drafts. Each team member approved the final manuscript, confirming its accuracy and completeness.

Data availability statement

The data and materials supporting the findings of this study are available upon reasonable request. As per the Taylor & Francis ‘Share upon reasonable request’ policy, the authors commit to making the supporting data and materials available to interested researchers. These can be obtained by contacting the corresponding author. It should be noted that this study did not involve any proprietary or confidential data, and sharing of the data does not require additional permissions. Any request for data will be considered in line with ethical guidelines and privacy considerations.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Arthur Pinheiro de Araújo Costa

Arthur Pinheiro de Araújo Costa is pursuing a Master's in Systems and Computing at the Military Institute of Engineering (IME) and holds the rank of Captain-Lieutenant in the Brazilian Navy. A Naval Sciences graduate specializing in Weapons Systems, he earned a postgraduate degree in Electronic Defense from the Pontifical Catholic University of Rio de Janeiro. His research interests include Machine Learning, Electronic Warfare, Decision Support Systems, drones, education, healthcare, energy efficiency, and military applications.

Ricardo Choren

Ricardo Choren received the DSc degree in Informatics from the Pontifical Catholic University of Rio de Janeiro, Brazil. He is currently a full professor at the Military Institute of Engineering (IME). His main research interests include advanced software-engineering methods and analysis tools, software evolution, and managing technical debt. He is a member of the Brazilian Computer Society.

Daniel Augusto de Moura Pereira

Daniel Augusto de Moura Pereira is a Mechanical Production Engineer from the Federal University of Paraíba (UFPB, 2006). He is a Specialist in Occupational Safety Engineering from João Pessoa University Center (2008) and holds a Master's in Production Engineering from UFPB (2009), a PhD in Architecture from PPGAU/UFPB (2018), and Green Belt, Black Belt, and Master Black Belt in Lean Six Sigma (2019). He completed a Post-Doctorate in Production Engineering (2023) at Federal Fluminense University (UFF). He is an Associate Professor at the Federal University of Campina Grande (UFCG) and a Professor of the Postgraduate Program in Production Engineering at UFF. He is the Founder and Chief Executive Officer (CEO) of the Production Engineering Symposium (SIMEP). He has several Computer Program Records (RPC) deposited at the National Institute of Industrial Property (INPI) and several articles indexed in Scopus. He created the first web platform for Occupational Health and Safety Management (HSTools) and BORDAPP, the first mobile application in Brazil for Multicriteria Decision Making.

Adilson Vilarinho Terra

Adilson Vilarinho Terra is a Production Engineer from the Federal Fluminense University (UFF) and serves as a partner and Head of Sales at Riverdata, a Brazilian startup specializing in Big Data, Artificial Intelligence, and Machine Learning. During his undergraduate studies, he took on various roles in planning and consultancy for projects in production engineering and business management with Meta Consulting, the university's junior enterprise. He was also a monitor for Analytical Geometry and Vector Calculus at UFF. As a researcher at the Naval Systems Analysis Center (CASNAV), a military organization under the Brazilian Navy, he contributed to Mathematical and Computational Modeling. He is currently pursuing a Master's in Production Engineering at UFF.

Igor Pinheiro de Araújo Costa

Igor Pinheiro de Araújo Costa is a PhD student in Production Engineering at the Federal Fluminense University (UFF). He holds a Master of Science in Production Engineering, with an emphasis in Operations Research (OR), from UFF. He is an Intermediate Officer of the Brazilian Navy, currently at the rank of Captain-Lieutenant. He graduated in Naval Sciences from the Brazilian Navy College in Rio de Janeiro (2014), specializing in Machinery, and completed postgraduate studies in Naval Propulsion. He is a member of the Operational Research UK Society and a visiting professor at public and private institutions in OR, Multicriteria Decision Support, and Decision Support Systems.

Claudio de Souza Rocha Junior

Claudio de Souza Rocha Junior is a Master's student at Federal Fluminense University (UFF), a developer (Angular, NodeJS, React, PHP, MySQL, MongoDB) working in Data Science (Python/R), and a Front-End Programming and Data Science Mentor at the social business Mais1Code. A Production Engineer, he has six software programs registered with the National Institute of Industrial Property (INPI) and five patents deposited with INPI.

Marcos dos Santos

Marcos dos Santos is a Professor at the Military Institute of Engineering (IME). Recognized by Stanford University in 2023 as one of the top 1,294 Brazilian scientists globally, he has a Scopus h-index of 30 and over 900 published research works. He served on the Board of the Brazilian Society of Operational Research (SOBRAPO) from 2019 to 2021. He holds post-doctoral degrees in Space Sciences and Technologies (Aeronautics Technological Institute, ITA) and Production Engineering (Federal Fluminense University, UFF), a Ph.D. in Production Engineering (UFF), and a Master's in Production Engineering from the Alberto Luiz Coimbra Institute of Postgraduate Studies and Engineering Research (COPPE) at the Federal University of Rio de Janeiro (UFRJ). Known for creating mathematical decision support methods, he authored the book "Computational Tools for Decision Support." He was awarded the Annibal Parracho Excellence in Scientific Production in 2021 and the Marechal Trompowski Medal in 2023, is a reviewer for 13 journals, and coordinates various academic events. With 30 years of service in the Brazilian Navy, he is an Advisor to the Ministry of Defense, contributes to high-level naval decision-making at the Naval Systems Analysis Center (CASNAV), and has over two decades of psycho-pedagogical teaching experience.

Carlos Francisco Simões Gomes

Carlos Francisco Simões Gomes holds a PhD in Production Engineering. He was appointed Head of the Systems Engineering Department in 2007, later becoming Vice-Director until 2008. He possesses extensive expertise in Information Management (IT) and Information Architecture, emphasizing Risk Management, Multicriteria Decision-Making, Operational Research, and Production Engineering. He served as Vice-President of SOBRAPO from 2006 to 2012. He is an Associate Professor at the Federal Fluminense University (UFF) and was an adjunct professor at Ibmec and Veiga de Almeida University. He coordinated MBA programs, authored numerous publications, represented Brazil in international negotiations, and contributed significantly to academia, conferences, and various technical journals.

Miguel Ângelo Lellis Moreira

Miguel Ângelo Lellis Moreira is a researcher in Mathematical Modeling and Computing at the Naval Systems Analysis Center (CASNAV), specializing in developing mathematical models for data analysis and decision support. He holds a Master's in Systems and Computing from the Military Institute of Engineering (IME), with a dissertation introducing the PROMETHEE-SAPEVO-M1 mathematical method and its computational model (promethee-sapevo.com). He is currently a Ph.D. student in Production Engineering at the Federal Fluminense University (UFF), focusing on Decision Support Modeling. He is also a Mentor Professor in Data Science and Analytics at the University of São Paulo (USP), with expertise in unsupervised and supervised machine learning implementations.

References

- Abonyi, J., Czvetkó, T., Kosztyán, Z. T., & Héberger, K. (2022). Factor analysis, sparse PCA, and sum of ranking differences-based improvements of the Promethee-GAIA multicriteria decision support technique. PloS One, 17(2), e0264277. https://doi.org/10.1371/journal.pone.0264277

- Akande, A., Cabral, P., Gomes, P., & Casteleyn, S. (2019). The Lisbon ranking for smart sustainable cities in Europe. Sustainable Cities and Society, 44, 475–487. https://doi.org/10.1016/j.scs.2018.10.009

- Amor, S. B., Belaid, F., Benkraiem, R., Ramdani, B., & Guesmi, K. (2023). Multi-criteria classification, sorting, and clustering: a bibliometric review and research agenda. Annals of Operations Research, 325(2), 771–793. https://doi.org/10.1007/s10479-022-04986-9

- Aria, M., & Cuccurullo, C. (2017). bibliometrix: An R-tool for comprehensive science mapping analysis. Journal of Informetrics, 11(4), 959–975. https://doi.org/10.1016/j.joi.2017.08.007

- Bartlett, M. S. (1954). A note on the multiplying factors for various χ² approximations. Journal of the Royal Statistical Society Series B: Statistical Methodology, 16(2), 296–298. https://doi.org/10.1111/j.2517-6161.1954.tb00174.x

- Belton, V., & Stewart, T. (2002). Multiple criteria decision analysis: an integrated approach. Springer Science & Business Media.

- Brans, J. P., Vincke, P., & Mareschal, B. (1986). How to select and how to rank projects: The Promethee method. European Journal of Operational Research, 24(2), 228–238. https://doi.org/10.1016/0377-2217(86)90044-5

- Carrera-Rivera, A., Ochoa, W., Larrinaga, F., & Lasa, G. (2022). How-to conduct a systematic literature review: A quick guide for computer science research. MethodsX, 9, 101895. https://doi.org/10.1016/j.mex.2022.101895

- Costa, H. G. (2002). Introdução ao Método de Análise Hierárquica: análise multicritério no auxílio à decisão. Universidade Federal Fluminense, UFF, Niterói.

- Costa, I. P. de A., Costa, A. P. de A., Sanseverino, A. M., Gomes, C. F. S., & Santos, M. dos (2022). Bibliometric studies on multi-criteria decision analysis (MCDA) methods applied in military problems. Pesquisa Operacional, 42, 1–26. https://doi.org/10.1590/0101-7438.2022.042.00249414

- Creswell, J. W., & Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. Sage publications.

- Croft, R., Xie, Y., & Babar, M. A. (2023). Data preparation for software vulnerability prediction: A systematic literature review. IEEE Transactions on Software Engineering, 49(3), 1044–1063. https://doi.org/10.1109/TSE.2022.3171202

- Curran, M., Lazzarini, G., Baumgart, L., Gabel, V., Blockeel, J., Epple, R., Stolze, M., & Schader, C. (2020). Representative farm-based sustainability assessment of the organic sector in Switzerland using the SMART-farm tool. Frontiers in Sustainable Food Systems, 4, 1–18. https://doi.org/10.3389/fsufs.2020.554362

- Davies, M. A. P. (1994). A multicriteria decision model application for managing group decisions. Journal of the Operational Research Society, 45(1), 47–58. https://doi.org/10.1057/jors.1994.6

- De Almeida, I. D. P., Hermogenes, L. R. D. S., De Araújo Costa, I. P., Moreira, M. A. L., Gomes, C. F. S., Dos Santos, M., Costa, D. D. O., & Gomes, I. J. A. (2022). Assisting in the choice to fill a vacancy to compose the PROANTAR team: Applying VFT and the CRITIC-GRA-3N methodology. Procedia Computer Science, 214(C), 478–486. https://doi.org/10.1016/j.procs.2022.11.202

- Diaby, V., Sanogo, V., & Moussa, K. R. (2016). ELICIT: An alternative imprecise weight elicitation technique for use in multi-criteria decision analysis for healthcare. Expert Review of Pharmacoeconomics & Outcomes Research, 16(1), 141–147. https://doi.org/10.1586/14737167.2015.1083863

- Diakoulaki, D., Mavrotas, G., & Papayannakis, L. (1995). Determining objective weights in multiple criteria problems: The critic method. Computers & Operations Research, 22(7), 763–770. https://doi.org/10.1016/0305-0548(94)00059-H

- dos Santos, M., Costa, I. P. de A., & Gomes, C. F. S. (2021). Multicriteria decision-making in the selection of warships: A new approach to the AHP method. International Journal of the Analytic Hierarchy Process, 13(1), 147–169. https://doi.org/10.13033/ijahp.v13i1.833

- Dugger, Z., Halverson, G., McCrory, B., & Claudio, D. (2022). Principal component analysis in MCDM: An exercise in pilot selection. Expert Systems with Applications, 188, 115984. https://doi.org/10.1016/j.eswa.2021.115984

- Ecer, F., & Pamucar, D. (2022). A novel LOPCOW‐DOBI multi‐criteria sustainability performance assessment methodology: An application in developing country banking sector. Omega, 112, 102690. https://doi.org/10.1016/j.omega.2022.102690

- Egghe, L., & Leydesdorff, L. (2009). The relation between Pearson’s correlation coefficient r and Salton’s cosine measure. Journal of the American Society for Information Science and Technology, 60(5), 1027–1036. https://doi.org/10.1002/asi.21009

- Fávero, L. P., & Belfiore, P. (2017). Manual de análise de dados: estatística e modelagem multivariada com Excel®, SPSS® e Stata®. Elsevier Brasil.

- Fávero, L. P., & Belfiore, P. (2019). Data science for business and decision making. Academic Press.

- Figueira, J., Greco, S., & Ehrgott, M. (2005). Multiple criteria decision analysis: state of the art surveys.

- Greco, S., Figueira, J., & Ehrgott, M. (2016). Multiple criteria decision analysis (Vol. 37). Springer.

- Guo, Z., & Zhang, Y. (2010). The third-party logistics performance evaluation based on the AHP-PCA model [Paper presentation]. 2010 International Conference on E-Product E-Service and E-Entertainment, 1–4. https://doi.org/10.1109/ICEEE.2010.5661118

- Hajiagha, S. H. R., Mahdiraji, H. A., & Hashemi, S. S. (2018). Total area based on orthogonal vectors (TAOV) as a novel method of multi-criteria decision aid. Technological and Economic Development of Economy, 24(4), 1679–1694. https://doi.org/10.3846/20294913.2016.1275877

- Hotelling, H. (1933). Analysis of a complex of statistical variables into principal components. Journal of Educational Psychology, 24(6), 417–441. https://doi.org/10.1037/h0071325

- Hwang, C.-L., & Yoon, K. (1981). Methods for multiple attribute decision making. In Multiple attribute decision making (pp. 58–191). Springer.

- Jolliffe, I. T., & Cadima, J. (2016). Principal component analysis: A review and recent developments. Philosophical Transactions. Series A, Mathematical, Physical, and Engineering Sciences, 374(2065), 20150202. https://doi.org/10.1098/rsta.2015.0202

- Jordan, M. I., & Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science (New York, N.Y.), 349(6245), 255–260. https://doi.org/10.1126/science.aaa8415