?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

ESA defines as Earth Observation (EO) Level 2 information product a single-date multi-spectral (MS) image corrected for atmospheric, adjacency and topographic effects, stacked with its data-derived scene classification map (SCM), whose legend includes quality layers cloud and cloud-shadow. No ESA EO Level 2 product has ever been systematically generated at the ground segment. To fill the information gap from EO big data to ESA EO Level 2 product in compliance with the GEO-CEOS stage 4 validation (Val) guidelines, an off-the-shelf Satellite Image Automatic Mapper (SIAM) lightweight computer program was validated by independent means on an annual 30 m resolution Web-Enabled Landsat Data (WELD) image composite time-series of the conterminous U.S. (CONUS) for the years 2006–2009. The SIAM core is a prior knowledge-based decision tree for MS reflectance space hyperpolyhedralization into static color names. Typically, a vocabulary of MS color names in a MS data (hyper)cube and a dictionary of land cover (LC) class names in the scene-domain do not coincide and must be harmonized (reconciled). The present Part 1—Theory provides the multidisciplinary background of a priori color naming. The subsequent Part 2—Validation accomplishes a GEO-CEOS stage 4 Val of the test SIAM-WELD annual map time-series in comparison with a reference 30 m resolution 16-class USGS National Land Cover Data 2006 map, based on an original protocol for wall-to-wall thematic map quality assessment without sampling, where the test and reference maps feature the same spatial resolution and spatial extent, but whose legends differ and must be harmonized.

Keywords:

- Artificial intelligence

- binary relationship

- Cartesian product

- cognitive science

- color naming

- connected-component multilevel image labeling

- deductive inference

- Earth observation

- land cover taxonomy

- high-level (attentive) and low-level (pre-attentional) vision

- hybrid inference

- image classification

- image segmentation

- inductive inference

- machine learning-from-data

- outcome and process quality indicators

- radiometric calibration

- remote sensing

- surface reflectance

- thematic map comparison

- top-of-atmosphere reflectance

- two-way contingency table

- unsupervised data discretization/vector quantization

- validation

PUBLIC INTEREST STATEMENT

Synonym of scene-from-image reconstruction and understanding, vision is an inherently ill-posed cognitive task; hence, it is difficult to solve and requires a priori knowledge in addition to sensory data to become better posed for numerical solution. In the inherently ill-posed cognitive domain of computer vision, this research was undertaken to validate by independent means a lightweight computer program for prior knowledge-based multi-spectral color naming, called Satellite Image Automatic Mapper (SIAM), eligible for automated near real-time transformation of large-scale Earth observation (EO) image datasets into European Space Agency (ESA) EO Level 2 information product, never accomplished to date at the ground segment. An original protocol for wall-to-wall thematic map quality assessment without sampling, where legends of the test and reference map pair differ and must be harmonized, was adopted. Conclusions are that SIAM is suitable for systematic ESA EO Level 2 product generation, regarded as necessary not sufficient pre-condition to transform EO big data into timely, comprehensive and operational EO value-adding information products and services.

1. Introduction

Jointly proposed by the intergovernmental Group on Earth Observations (GEO) and the Committee on Earth Observation Satellites (CEOS), the implementation plan for years 2005–2015 of the Global Earth Observation System of Systems (GEOSS) aimed at systematic transformation of multi-source Earth observation (EO) big data into timely, comprehensive and operational EO value-adding products and services (GEO, Citation2005), submitted to the GEO-CEOS Quality Assurance Framework for Earth Observation (QA4EO) calibration/validation (Cal/Val) requirements and suitable “to allow the access to the Right Information, in the Right Format, at the Right Time, to the Right People, to Make the Right Decisions” (Group on Earth Observation/Committee on Earth Observation Satellites (GEO-CEOS), Citation2010). In this definition of GEOSS, term big data identifies “a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications. The big data challenges include capture, storage, search, sharing, transfer, analysis and visualization” (Wikipedia, Citation2018a), typically summarized as the five Vs of big data, specifically, volume, variety, velocity, veracity and value (IBM, Citation2016; Yang, Huang, Li, Liu, & Hu, Citation2017).

The GEOSS mission cannot be considered fulfilled by the remote sensing (RS) community to date. This is tantamount to saying the RS community is data-rich, but information-poor, a conjecture known as DRIP syndrome (Bernus & Noran, Citation2017). Before supporting this thesis with observations, the following definition is introduced. In this paper, an EO-IUS is defined in operating mode if and only if it scores “high” in every index of a minimally dependent and maximally informative (mDMI) set of EO outcome and process (OP) quantitative quality indicators (Q2Is), to be community-agreed upon to be used by members of the community, in agreement with the GEO-CEOS QA4EO Cal/Val guidelines (GEO-CEOS, Citation2010). A proposed instantiation of mDMI set of EO OP-Q2Is includes: (i) degree of automation, inversely related to human-machine interaction, (ii) effectiveness, e.g., thematic mapping accuracy, (iii) efficiency in computation time and in run-time memory occupation, e.g., inversely related to the number of system’s free-parameters to be user-defined based on heuristics, (iv) robustness (vice versa, sensitivity) to changes in input data, (v) robustness to changes in input parameters to be user-defined, (vi) scalability to changes in user requirements and in sensor specifications, (vii) timeliness from data acquisition to information product generation, (viii) costs in manpower and computer power, (ix) value, e.g., semantic value of output products, economic value of output services, etc. (Baraldi, Citation2017, Citation2009; Baraldi & Boschetti, Citation2012a, Citation2012b; Baraldi, Boschetti, & Humber, Citation2014; Baraldi et al., Citation2010a, Citation2010b; Baraldi, Gironda, & Simonetti, Citation2010c; Duke, Citation2016). According to the Pareto formal analysis of multi-objective optimization problems, optimization of an mDMI set of OP-Q2Is is an inherently-ill posed problem in the Hadamard sense (Hadamard, Citation1902), where many Pareto optimal solutions lying on the Pareto efficient frontier can be considered equally good (Boschetti, Flasse, & Brivio, Citation2004). Any EO-IUS solution lying on the Pareto efficient frontier can be considered in operating mode, therefore suitable to cope with the five Vs of spatio-temporal EO big data (Yang et al., Citation2017).

Stating that to date the RS community is affected by the DRIP syndrome is like saying that past and present EO image understanding systems (EO-IUSs) have been typically outpaced by the rate of data collection of EO imaging sensors, whose quality and quantity are ever-increasing at an apparently exponential rate related to the Moore law of productivity (National Aeronautics and SpaceAdministration (NASA), Citation2016a). In common practice, EO-IUSs are overwhelmed by sensory data they are unable to transform into EO value-adding information products and services, in compliance with the GEO-CEOS QA4EO Cal/Val guidelines (GEO-CEOS, Citation2010). If this conjecture holds true, then existing EO-IUSs cannot be considered in operating mode because unsuitable to cope with the five Vs of spatio-temporal EO big data (Yang et al., Citation2017). Several observations (true-facts) support this thesis. First, in 2012 the percentage of EO data ever downloaded from the European Space Agency (ESA) databases was estimated at about 10% or less (D’Elia Citation2012). This estimate is equal or superior (never inferior) to the percentage of ESA EO data ever used by the RS community. Since 2012, the same EO data exploitation indicator is expected to decrease, because any increase in productivity of existing EO-IUSs seems unable to match the exponential increase in the rate of collection of EO sensory data (NASA, Citation2016a). Second, EO-IUSs presented in the RS literature are typically assessed and compared based on the sole thematic mapping accuracy, which means their mDMI set of EO OP-Q2Is remains largely unknown to date (Baraldi & Boschetti, Citation2012a, Citation2012b). As a consequence, the RS literature is unable to contradict the thesis that no EO-IUS is available in operating mode. For example, when EO data-derived thematic maps were generated by EO-IUSs based on a supervised (labeled) data learning approach, at continental or global spatial extent and with estimated accuracy not inferior to a target mapping accuracy requirement, the most limiting factors turned out to be the cost, timeliness, quality and availability of adequate supervised training data samples collected from field sites, existing maps or geospatial data archives in tabular form (Gutman et al., Citation2004). Third, no ESA EO data-derived Level 2 information product has ever been systematically generated at the ground segment (DLR and VEGA Citation2011; ESA Citation2015). In the ESA definition, an EO Level 2 information product is a single-date multi-spectral (MS) image radiometrically calibrated into surface reflectance (SURF) values corrected for atmospheric, adjacency and topographic effects, stacked with its data-derived scene classification map (SCM), whose legend includes quality layers cloud and cloud-shadow, starting from an ESA EO Level 1 product geometrically corrected and radiometrically calibrated into top-of-atmosphere reflectance (TOARF) values (European Space Agency (ESA), Citation2015; Deutsches Zentrum für Luft- und Raumfahrt e.V. (DLR) and VEGA Technologies, Citation2011; CNES, Citation2015).

This last observation deserves further discussion. In the words of Marr, “vision goes symbolic almost immediately without loss of information” (Marr, Citation1982). In agreement with this Marr’s intuition, ESA defines as EO Level 2 information product an information primitive (unit of information) consisting of a stack of two coupled (inter-dependent) variables, one sub-symbolic/numeric and one symbolic, where symbolic variable means both categorical and semantics. Equivalent to two sides of the same coin, these two variables are very closely related to each other and cannot be separated, even though they seem different. The first side of the ESA EO Level 2 information primitive is a multivariate numeric variable of the highest radiometric quality, related to the concept of quantitative/unequivocal information-as-thing in the terminology of philosophical hermeneutics (Capurro & Hjørland, Citation2003), see Figure . The second side of the ESA EO Level 2 information unit is an EO data-derived SCM, equivalent to a categorical variable of semantic value, related to the concept of qualitative/equivocal information-as-data-interpretation in the terminology of philosophical hermeneutics (Capurro & Hjørland, Citation2003). In practice, ESA EO Level 2 product generation is a chicken-and-egg dilemma (Riano, Chuvieco, Salas, & Aguado, Citation2003), synonym of inherently ill-posed problem in the Hadamard sense (Hadamard, Citation1902). Therefore, it is very difficult to solve and requires a priori knowledge in addition to data to become better posed for numerical solution (Cherkassky & Mulier, Citation1998). On the one hand, no effective and efficient Cal of digital numbers (DNs) into SURF values corrected for atmospheric, topographic and adjacency effects is possible without an SCM, available a priori in addition to data to enforce a statistical stratification principle (Hunt & Tyrrell, Citation2012), synonym of layered (class-conditional) data analytics (Baraldi, Citation2017; Baraldi et al., Citation2010b; Baraldi & Humber, Citation2015; Baraldi, Humber, & Boschetti, Citation2013; Bishop & Colby, Citation2002; Bishop, Shroder, & Colby, Citation2003; DLR & VEGA, Citation2011; Dorigo, Richter, Baret, Bamler, & Wagner, Citation2009; Lück & van Niekerk, Citation2016; Riano et al., Citation2003; Richter & Schläpfer, Citation2012a, Citation2012b; Vermote & Saleous, Citation2007). On the other hand, no effective and efficient understanding (mapping) of a sub-symbolic EO image into a symbolic SCM is possible if DNs (pixels) are affected by low radiometric quality (GEO-CEOS, Citation2010). In an ESA EO Level 2 SCM product to be generated at the ground segment (midstream) as input to downstream applications, products and services (Mazzuccato & Robinson, Citation2017), the SCM legend is required to consist of a discrete, finite and hierarchical (multilevel) dictionary (Lipson, Citation2007; Mather, Citation1994; Swain & Davis, Citation1978) of general-purpose, user- and application-independent land cover (LC) classes, whose semantic value is “shallow” (not specialized) in hierarchy, but superior to zero semantics typical of numeric variables, in addition to quality layers cloud and cloud-shadow (ESA, Citation2015; DLR & VEGA, Citation2011; CNES, Citation2015). To our best knowledge, only one prototypical implementation of a sensor-specific ESA EO Level 2 product generator exists to date. Commissioned by ESA, the Sentinel 2 (atmospheric) Correction Prototype Processor (SEN2COR) is not run systematically at the ESA ground segment. Rather, it can be downloaded for free from the ESA web site to be run on user side (European Space Agency (ESA), Citation2015; Deutsches Zentrum für Luft- und Raumfahrt e.V. (DLR) and VEGA Technologies, Citation2011).

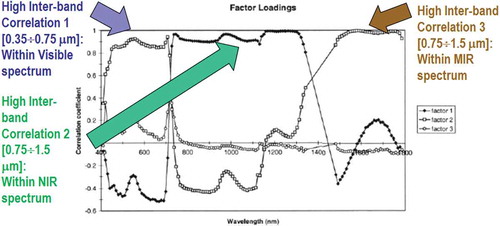

Figure 1. Courtesy of van der Meer and De John (2000). Pearson’s cross-correlation (CC) coefficients for the main factors resulting from a principal component analysis and factor rotation 1, 2 and 3 for an agricultural data set based on spectral bands of the AVIRIS hyper-spectral (HS) spectrometers. Flevoland test site, 5 July 1991. Inter-band CC values are “high” (>0.8) within the visible spectral range, the Near Infra-Red (NIR) wavelengths and the Medium IR (MIR) wavelengths. The general conclusion is that, irrespective of non-stationary local information, the global (image-wide) information content of a multi-channel image, either multi-spectral (MS) whose number N of spectral channels ∈ {2, 9}, super-spectral (SS) with N ∈ {10, 20}, or HS image with N > 20, can be preserved by selecting one visible band, one NIR band, one MIR band and one thermal IR (TIR) band, such as in the spectral resolution of the imaging sensor series National Oceanic and Atmospheric Administration (NOAA) Advanced Very High Resolution Radiometer (AVHRR), in operating mode from 1978 to date.

Noteworthy, a National Aeronautics and Space Administration (NASA) EO Level 2 product is defined as “a data-derived geophysical variable at the same resolution and location as Level 1 source data” (NASA, Citation2016b). Hence, dependence relationship “NASA EO Level 2 product → ESA EO Level 2 product” holds, where symbol “→” denotes relationship part-of pointing from the supplier to the client, in agreement with the standard Unified Modeling Language (UML) for graphical modeling of object-oriented software (Fowler, Citation2003), see Figure . This dependence means that although space agencies and EO data distributors claim systematic NASA EO Level 2 product generation at the ground segment, this does not imply systematic ESA EO Level 2 product generation. Rather, the vice versa holds: if ESA EO Level 2 product generation is accomplished, then NASA EO Level 2 product generation is also fulfilled.

Figure 2. See Footnotenote.

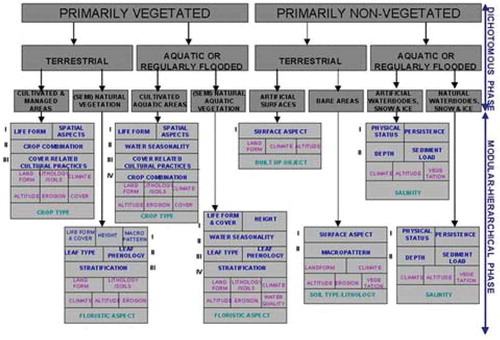

Different from the non-standard SEN2COR SCM legend instantiation (ESA, Citation2015; DLR & VEGA, Citation2011), one example of general-purpose, user- and application-independent ESA EO Level 2 SCM legend is the standard (community-agreed) 3-level 8-class dichotomous phase (DP) taxonomy of the Food and Agriculture Organization of the United Nations (FAO)—Land Cover Classification System (LCCS) (Di Gregorio & Jansen, Citation2000). The FAO LCCS-DP hierarchy is “fully nested”. It comprises three dichotomous LC class-specific information layers, equivalent to a world ontology or world model (Di Gregorio & Jansen, Citation2000; Matsuyama & Hwang, Citation1990): DP Level 1—Vegetation versus non-vegetation, DP Level 2—Terrestrial versus aquatic and DP Level 3—Managed versus natural or semi-natural. The 3-level 8-class FAO LCCS-DP taxonomy is shown in Figure . For the sake of generality, a 3-level 8-class FAO LCCS-DP legend is added with LC class “other”, synonym of “rest of the world” or “unknown”, which would include quality information layers cloud and cloud-shadow. In traditional EO image classification system design and implementation requirements (Swain & Davies, Citation1978), the presence of output class “unknown” is considered mandatory to cope with uncertainty in information-as-data-interpretation tasks. Hereafter, the standard 3-level 8-class FAO LCCS-DP legend added with the mandatory output class “other”, which includes quality layers cloud and cloud-shadow, is identified as “augmented” 9-class FAO LCCS-DP taxonomy. In the complete two-phase FAO LCCS hierarchy, a general-purpose 3-level 8-class FAO LCCS-DP legend is preliminary to a high-level application-dependent and user-specific FAO LCCS Modular Hierarchical Phase (MHP) taxonomy, consisting of a hierarchical (deep) battery of one-class classifiers (Di Gregorio & Jansen, Citation2000), see Figure . In recent years, the two-phase FAO LCCS taxonomy has become increasingly popular (Ahlqvist, Citation2008). One reason of this popularity is that the FAO LCCS hierarchy is “fully nested” while alternative LC class hierarchies, such as the Coordination of Information on the Environment (CORINE) Land Cover (CLC) taxonomy (Bossard, Feranec, & Otahel, Citation2000), the U.S. Geological Survey (USGS) Land Cover Land Use (LCLU) taxonomy by J. Anderson (Lillesand & Kiefer, Citation1979), the International Global Biosphere Programme (IGBP) DISCover Data Set Land Cover Classification System (Belward, Citation1996) and the EO Image Librarian LC class legend (Dumitru, Cui, Schwarz, & Datcu, Citation2015), start from a Level 1 taxonomy which is already multi-class. In a hierarchical EO-IUS architecture submitted to a garbage in, garbage out (GIGO) information principle, synonym of error propagation through an information processing chain, the fully-nested two-phase FAO LCCS hierarchy makes explicit the full dependence of high-level EO OP-Q2I estimates, featured by any high-level (deep) LCCS-MHP data processing module, on previous EO OP-Q2I values featured by lower-level LCCS modules, starting from the initial FAO LCCS-DP Level 1 vegetation/non-vegetation information layer whose relevance in thematic mapping accuracy (vice versa, in error propagation) becomes paramount for all subsequent LCCS layers. The GIGO commonsense principle applied to hierarchical semantic dependence is neither trivial nor obvious to underline (Marcus, Citation2018). On the one hand, it agrees with a minor portion of the RS literature where supervised data learning classification of EO image datasets at continental or global spatial extent into binary LC class vegetation/non-vegetation is considered very challenging (Gutman et al., Citation2004). On the other hand, it is at odd with the RS mainstream, where the semantic information gap from sub-symbolic EO data to multi-class LC taxonomies is typically filled in one step, implemented as a supervised data learning classifier (Bishop, Citation1995; Cherkassky & Mulier, Citation1998), e.g., a support vector machine, random forest or deep convolutional neural network (DCNN) (Cimpoi, Maji, Kokkinos, & Vedaldi, Citation2014), which is equivalent to an unstructured black box (Marcus, Citation2018), inherently semiautomatic and site specific (Liang, Citation2004) and whose opacity contradicts the well-known engineering principles of modularity, regularity and hierarchy typical of scalable systems (Lipson, Citation2007).

Figure 3. The fully nested 3-level 8-class FAO Land Cover Classification System (LCCS) Dichotomous Phase (DP) taxonomy consists of a sorted set of 3 dichotomous layers: (i) vegetation versus non-vegetation, (ii) terrestrial versus aquatic, and (iii) managed versus natural or semi-natural. They deliver as output the following 8-class LCCS-DP taxonomy. (A11) Cultivated and Managed Terrestrial (non-aquatic) Vegetated Areas. (A12) Natural and Semi-Natural Terrestrial Vegetation. (A23) Cultivated Aquatic or Regularly Flooded Vegetated Areas. (A24) Natural and Semi-Natural Aquatic or Regularly Flooded Vegetation. (B35) Artificial Surfaces and Associated Areas. (B36) Bare Areas. (B47) Artificial Waterbodies, Snow and Ice. (B48) Natural Waterbodies, Snow and Ice. The general-purpose user- and application-independent 3-level 8-class FAO LCCS-DP taxonomy is preliminary to a user- and application-specific FAO LCCS Modular Hierarchical Phase (MHP) taxonomy of one-class classifiers.

Starting from these premises our working hypothesis was that necessary not sufficient pre-condition for a yet-unfulfilled GEOSS development (GEO, Citation2005) is systematic generation at the ground segment of an ESA EO Level 2 product, never accomplished to date (ESA, Citation2015; DLR & VEGA, Citation2011), whose general-purpose SCM product is constrained as follows. First, the ESA EO Level 2 SCM legend agrees with the 3-level 9-class “augmented” FAO LCCS-DP taxonomy. Second, to comply with the GEO-CEOS QA4EO Cal/Val requirements, the SCM product must be submitted to a GEO-CEOS stage 4 Val, where an mDMI set of EO OP-Q2Is is evaluated by independent means at large spatial-extent and multiple time periods (GEO-CEOS, Citation2010). By definition, a GEO-CEOS stage 3 Val requires that “spatial and temporal consistency of the product with similar products are evaluated by independent means over multiple locations and time periods representing global conditions. In Stage 4 Val, results for Stage 3 are systematically updated when new product versions are released and as the time-series expands” (GEO-CEOS WGCV, Citation2015).

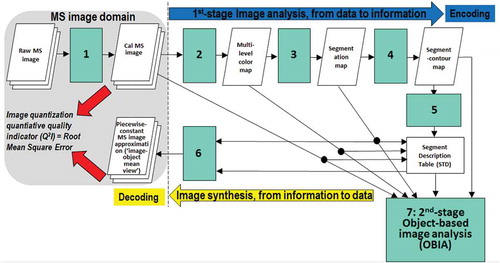

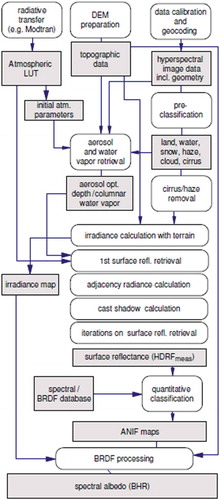

According to our working hypothesis, to contribute toward filling an analytic and pragmatic information gap from multi-source EO big data to ESA EO Level 2 information product as necessary not sufficient pre-condition to GEOSS development, the primary goal of this interdisciplinary study was to undertake an original (to the best of these authors’ knowledge, the first) outcome and process GEO-CEOS stage 4 Val of an off-the-shelf lightweight computer program, the Satellite Image Automatic Mapper™ (SIAM™), presented in recent years in the RS literature where enough information was provided for the implementation to be reproduced (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Baraldi et al., Citation2010a, Citation2010b, Citation2010c; Baraldi & Humber, Citation2015; Baraldi et al., Citation2013; Baraldi, Puzzolo, Blonda, Bruzzone, & Tarantino, Citation2006, 2015; Baraldi, Tiede, Sudmanns, Belgiu, & Lang, Citation2016). Implemented in operating mode in the C/C++ programming language, an off-the-shelf SIAM software executable runs: (i) automatically, i.e., it requires no human-machine interaction, (iii) in near real-time because it is non-iterative, more specifically it is one-pass, with a single subsystem which is two-pass (refer to the text below), and its computational complexity increases linearly with image size, and (iii) in tile streaming mode, i.e., it requires a fixed run-time memory occupation. In addition to running on laptop and desktop computers, the SIAM lightweight computer program is eligible for use as mobile software application. By definition, a mobile software application is a lightweight computer program specifically designed to run on web services and/or mobile devices, such as tablet computers and smartphones, eventually provided with a mobile graphic user interface (GUI). An off-the-shelf SIAM software executable comprises six non-iterative subsystems for automated MS image analysis (decomposition) and synthesis (reconstruction) in linear time complexity. Its core is a one-pass prior knowledge-based decision tree (expert system) for MS reflectance space hyperpolyhedralization into static (non-adaptive-to-data) color names. Sketched in Figure , the SIAM software architecture is summarized as follows.

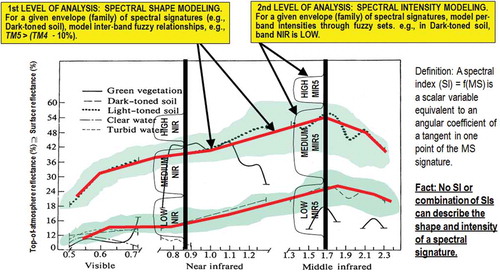

MS data radiometric calibration, in agreement with the GEO-CEOS QA4EO Cal requirements (GEO-CEOS, Citation2010). The SIAM expert system instantiates a physical data model; hence, it requires as input sensory data provided with a physical meaning. Specifically, DNs must be radiometrically Cal into a physical unit of radiometric measure to be community-agreed upon, such as TOARF values, SURF values or Kelvin degrees for thermal channels. Relationship TOARF ⊇ SURF holds because SURF is a special case of TOARF in clear sky and flat terrain conditions (Chavez, Citation1988), i.e., TOARF ≈ SURF + atmospheric noise + topographic effects + surface adjacency effects. In a spectral decision tree for MS color space hyperpolyhedralization (partitioning), this relationship means that MS hyperpolyhedra (envelopes, manifolds) in “noisy” TOARF values include “noiseless” hyperpolyhedra in SURF values as special case of the former according to relationship subset-of, while the vice versa does not hold, see Figure .

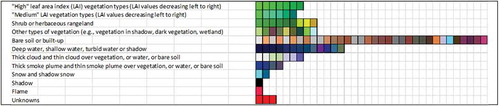

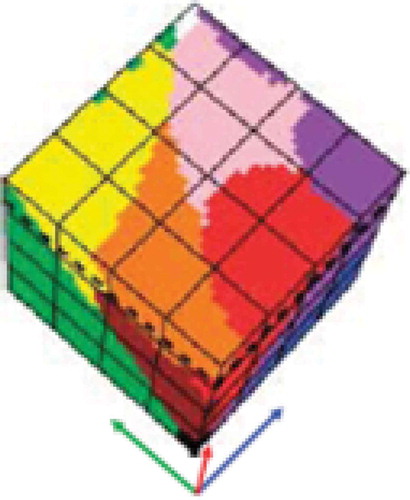

One-pass prior knowledge-based SIAM decision tree for MS reflectance space hyperpolyhedralization into three static codebooks (vocabularies) of sub-symbolic/semi-symbolic color names as codewords, see Figure . Provided with inter-level parent–child relationships, the SIAM’s three-level vocabulary of static color names features a ColorVocabularyCardinality value which decreases from fine to intermediate to coarse, refer to Table and Figure . MS reflectance space hyperpolyhedra for color naming are difficult to think of and impossible to visualize when the MS data space dimensionality is superior to three. This is not the case of basic color (BC) names adopted in human languages (Berlin & Kay, Citation1969), whose mutually exclusive and totally exhaustive perceptual polyhedra, neither necessarily convex nor connected, are intuitive to think of and easy to visualize in a 3D monitor-typical red-green-blue (RGB) data cube, see Figure (Benavente, Vanrell, & Baldrich, Citation2008; Griffin, Citation2006). When each pixel of an MS image is mapped onto a color space partitioned into a set of mutually exclusive and totally exhaustive hyperpolyhedra equivalent to a vocabulary of BC names, then a 2D multilevel color map (2D gridded dataset of a multilevel variable) is generated automatically (without human-machine interaction) in near real-time (with computational complexity increasing linearly with image size), where the number k of 2D map levels (color strata, color names) belongs to range {1, ColorVocabularyCardinality}. Popular synonyms of measurement space hyperpolyhedralization (discretization, partition) are vector quantization (VQ) in inductive machine learning-from-data (Cherkassky & Mulier, Citation1998; Elkan, Citation2003; Fritzke, Citation1997a, Citation1997b; Lee, Baek, & Sung, Citation1997; Linde, Buzo, & Gray, Citation1980; Lloyd, Citation1982; Patanè and Russo, Citation2001, Citation2002), and deductive fuzzification of a numeric variable into fuzzy sets in fuzzy logic (Zadeh, Citation1965). Typical inductive learning-from-data VQ algorithms aim at minimizing a known VQ error function, e.g., a root mean square vector quantization error (RMSE), given a number of k discretization levels selected by a user based on a priori knowledge and/or heuristic criteria. One of the most widely used VQ heuristics in RS and computer vision (CV) applications is the k-means VQ algorithm (Elkan, Citation2003; Lee et al., Citation1997; Linde et al., Citation1980; Lloyd, Citation1982), capable of convex Voronoi tessellation of a multi-variate data space (Cherkassky & Mulier, Citation1998; Fritzke, Citation1997a). For example, in a bag-of-words model applied to CV tasks, a numeric color space is typically discretized into a categorical color variable (codebook of codewords) by an inductive VQ algorithm, such as k-means; next, the categorical color variable is simplified by a 1st-order histogram representation, which disregards word grammar, semantics and even word-order, but keeps multiplicity; finally, the frequency of each color codeword is used as a feature for training a supervised data learning classifier (Cimpoi et al., Citation2014). Unlike the k-means VQ algorithm where the system’s free-parameter k is user-defined based on heuristics and the VQ error is estimated from the unlabeled dataset at hand, a user can fix the target VQ error value, so that it is the free-parameter k to be dynamically learned from the finite unlabeled dataset at hand by an inductive VQ algorithm (Patané & Russo, Citation2001, Citation2002), such as ISODATA (Memarsadeghi, Mount, Netanyahu, & Le Moigne, Citation2007). It means there is no universal number k of static hyperpolyhedra in a vector data space suitable for satisfying any VQ problem of interest if no target VQ error is specified in advance. As a viable strategy to cope with the inherent ill-posedness of inductive VQ problems (Cherkassky & Mulier, Citation1998), the SIAM expert system provides its three pre-defined VQ levels with a per-pixel RMSE estimation required for VQ quality assurance, in compliance with the GEO-CEOS QA4EO Val guidelines, refer to point (6) below.

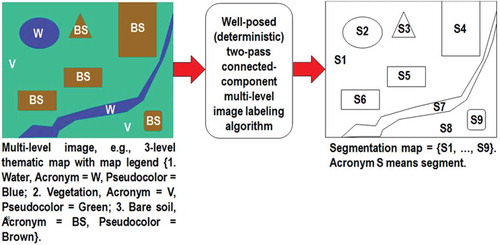

Well-posed (deterministic) two-pass detection of connected-components in the multilevel color map-domain (Dillencourt, Samet, & Tamminen, Citation1992; Sonka, Hlavac, & Boyle, Citation1994), where the number k of map levels belongs to range {1, ColorVocabularyCardinality}, see Figure . These discrete and finite connected-components consist of connected sets of pixels featuring the same color label. Each connected-component is either (0D) pixel, (1D) line or (2D) polygon in the Open Geospatial Consortium (OGC) nomenclature (OGC Citation2015). They are typically known as superpixels in the CV literature (Achanta et al., Citation2011), homogeneous segments or image-objects in the object-based image analysis (OBIA) literature (Blaschke et al., Citation2014; Matsuyama & Hwang, Citation1990; Nagao & Matsuyama, Citation1980; Shackelford & Davis, Citation2003a, Citation2003b), and texture elements, i.e., texels, in human vision (Julesz, Citation1986; Julesz, Gilbert, Shepp, & Frisch, Citation1973). Whereas the physical model-based SIAM expert system requires no human-machine interaction to detect top-down superpixels whose shape and size can be any, superpixels detected bottom-up in statistical model-based CV algorithms typically require a pair of statistical model’s free-parameters to be user-defined based on heuristics, such as a first heuristic-based geometric threshold equal to the superpixel maximum area and a second heuristic-based geometric threshold forcing a superpixel to stay compact in shape (Achanta et al., Citation2011). In a multilevel image domain where k is the number of levels (image-wide strata), individual (0D) pixels with label 1 to k, superpixels as connected sets of pixels featuring the same label 1 to k, and strata (layers), equal to discrete and finite collections of superpixels, mutually disjoint, but belonging to the same level 1 to k, co-exist as non-alternative labeled spatial units provided with a parent–child relationship, where each superpixel is a 2-tuple (superpixel ID, level 1-of-k) and each pixel is a 2-tuple (raw-column coordinate pair, superpixel ID), see Figure .

Well-posed 4- or 8-adjacency cross-aura representation in linear time of superpixel-contours, see Figure . These cross-aura contour values allow estimation of a scale-invariant planar shape index of compactness (Baraldi, Citation2017; Baraldi & Soares, Citation2017; Soares, Baraldi, & Jacobs, Citation2014), eligible for use by a high-level OBIA approach (Blaschke et al., Citation2014), see Figure .

Superpixel/segment description table (Matsuyama & Hwang, Citation1990; Nagao & Matsuyama, Citation1980), to describe superpixels in a 1D tabular form (list) in combination with their 2D raster representation in the image-domain, referred to as “literal bit map” by Marr (Citation1982), to take advantage of each data structure and overcome their shortcomings. Computationally, local spatial searches are more efficient in the 2D raster image-domain than in the 1D list representation, because “most of the spatial relationships that must be examined in early vision (encompassing the raw and full primal sketch for token detection and texture segmentation, respectively) are rather local” (Marr, Citation1982). Vice versa, if we had to examine global or “scattered, pepper-and-salt-like (spatial) configurations, then a (2D) bit map would probably be no more efficient than a (1D) list” (Marr, Citation1982).

Superpixelwise-constant input image approximation (reconstruction), also known as “image-object mean view” in commercial OBIA applications (Trimble, Citation2015), followed by a per-pixel RMSE estimation between the original MS image and the reconstructed piecewise-constant MS image. This VQ error estimation strategy enforces a product quality assurance policy considered mandatory by the GEO-CEOS QA4EO Val guidelines. For example, VQ quality assurance supported by SIAM allows a user to adopt quantitative (objective) criteria in the selection of pre-defined VQ levels, equivalent to color names, to fit user- and application-specific VQ error requirement specifications.

Table 1. The SIAM computer program is an EO system of systems scalable to any past, existing or future MS imaging sensor provided with radiometric calibration metadata parameters

Figure 4. The SIAM lightweight computer program for prior knowledge-based MS reflectance space hyperpolyhedralization into color names, superpixel detection and vector quantization (VQ) quality assessment. It consists of six subsystems, identified as 1 to 6. Phase 1-of-2 = Encoding phase/Image analysis—Stage 1: MS data calibration into top-of-atmosphere reflectance (TOARF) or surface reflectance (SURF) values. Stage 2: Prior knowledge-based SIAM decision tree for MS reflectance space partitioning (quantization, hyperpolyhedralization). Stage 3: Well-posed (deterministic) two-pass connected-component detection in the multilevel color map-domain. Connected-components in the color map-domain are connected sets of pixels featuring the same color label. These connected-components are also called image-objects, segments or superpixels. Stage 4: Well-posed superpixel-contour extraction. Stage 5: Superpixel description table allocation and initialization. Phase 2-of-2 = Decoding phase/Image synthesis—Stage 6: Superpixelwise-constant input image approximation (“image-object mean view”) and per-pixel VQ error estimation. (Stage 7: in cascade to the SIAM superpixel detection, a high-level object-based image analysis (OBIA) approach can be adopted).

Figure 5. Examples of land cover (LC) class-specific families of spectral signatures in top-of-atmosphere reflectance (TOARF) values which include surface reflectance (SURF) values as a special case in clear sky and flat terrain conditions. A within-class family of spectral signatures (e.g., dark-toned soil) in TOARF values forms a buffer zone (hyperpolyhedron, envelope, manifold). The SIAM decision tree models each target family of spectral signatures in terms of multivariate shape and multivariate intensity information components as a viable alternative to multivariate analysis of spectral indexes. A typical spectral index is a scalar band ratio equivalent to an angular coefficient of a tangent in one point of the spectral signature. Infinite functions can feature the same tangent value in one point. In practice, no spectral index or combination of spectral indexes can reconstruct the multivariate shape and multivariate intensity information components of a spectral signature.

Figure 6. Prior knowledge-based color map legend adopted by the Landsat-like SIAM (L-SIAM™, release 88 version 7) implementation. For the sake of representation compactness, pseudo-colors of the 96 spectral categories are gathered along the same raw if they share the same parent spectral category in the decision tree, e.g., “strong” vegetation, equivalent to a spectral end-member. The pseudo-color of a spectral category is chosen as to mimic natural colors of pixels belonging to that spectral category. These 96 color names at fine color granularity are aggregated into 48 and 18 color names at intermediate and coarse color granularity respectively, according to parent–child relationships defined a priori, also refer to Table .

Figure 7. Courtesy of Griffin (Citation2006). Monitor-typical RGB cube partitioned into perceptual polyhedra corresponding to a discrete and finite dictionary of basic color (BC) names, to be community-agreed upon in advance to be employed by members of the community. The mutually exclusive and totally exhaustive polyhedra are neither necessarily convex nor connected. In practice BC names belonging to a finite and discrete color vocabulary are equivalent to Vector Quantization (VQ) levels belonging to a VQ codebook (Cherkassky & Mulier, Citation1998).

Figure 8. One segmentation map is deterministically generated from one multilevel image, such as a thematic map, but the vice versa does not hold, i.e., many multilevel images can generate the same segmentation map. In this example, nine image-objects/segments S1–S9 can be detected in the 3-level thematic map shown at left. Each segment consists of a connected set of pixels sharing the same multilevel map label. Each stratum/layer/level consists of one or more segments, e.g., stratum Vegetation (V) consists of two disjoint segments, S1 and S8. In any multilevel (categorical, nominal, qualitative) image domain, three labeled spatial primitives (spatial units) coexist and are provided with parent–child relationships: pixel with a level-label and a pixel identifier (ID, e.g., the row-column coordinate pair), segment (polygon) with a level-label and a segment ID, and stratum (multi-part polygon) with a level-label equivalent to a stratum ID. This overcomes the ill-fated dichotomy between traditional unlabeled sub-symbolic pixels versus labeled sub-symbolic segments in the numeric (quantitative) image domain traditionally coped with by the object-based image analysis (OBIA) paradigm (Blaschke et al., Citation2014).

Figure 9. Example of a 4-adjacency cross-aura map, shown at right, generated in linear time from a two-level image shown at left.

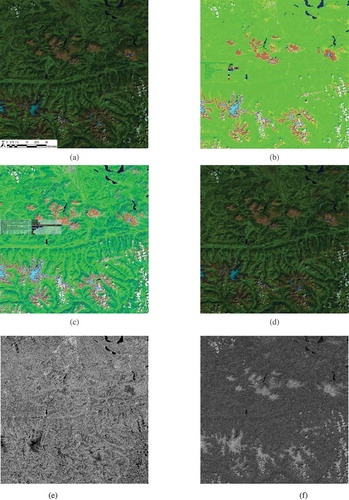

An example of the SIAM output products automatically generated in linear time from a 13-band 10 m-resolution Sentinel-2A image radiometrically calibrated into TOARF values is shown in Figure .

Figure 10. See Footnotenote.

The potential impact on the RS community of a GEO-CEOS stage 4 Val of an off-the-shelf SIAM lightweight computer program for automated near real-time prior knowledge-based MS reflectance space hyperpolyhedralization, superpixel detection and per-pixel VQ quality assessment is expected to be relevant, with special emphasis on existing or future hybrid (combined deductive and inductive) EO-IUSs. In the RS discipline, there is a long history of prior knowledge-based MS reflectance space partitioners for static color naming, alternative to SIAM’s, developed but never validated by space agencies, public organizations and private companies for use in hybrid EO-IUSs in operating mode, see Figure . Examples of hybrid EO image pre-processing applications in the quantitative/sub-symbolic domain of information-as-thing, where a numeric input variable is statistically class-conditioned (masked) by a static color naming first stage to generate as output another numeric variable considered more informative than the input one, are large-scale MS image compositing (Ackerman et al. Citation1998; Lück & van Niekerk, Citation2016; Luo, Trishchenko, & Khlopenkov, Citation2008), MS image atmospheric correction and topographic correction (Baraldi, Citation2017; Baraldi et al., Citation2010b; Baraldi & Humber, Citation2015; Baraldi et al., Citation2013; Bishop & Colby, Citation2002; Bishop et al., Citation2003; DLR & VEGA, Citation2011; Dorigo et al., Citation2009; Lück & van Niekerk, Citation2016; Riano et al., Citation2003; Richter & Schläpfer, Citation2012a, Citation2012b; Vermote & Saleous, Citation2007), see Figure , MS image adjacency effect correction (DLR & VEGA, Citation2011) and radiometric quality assessment of pan-sharpened MS imagery (Baraldi, Citation2017; Despini, Teggi, & Baraldi, Citation2014). Examples of hybrid EO image classification applications in the qualitative/equivocal/categorical domain of information-as-data-interpretation and statistically class-conditioned by a static color naming first stage are cloud and cloud-shadow quality layer detection (Baraldi, Citation2015, Citation2017; Baraldil., DLR & VEGA, Citation2011; Lück & van Niekerk, Citation2016), single-date LC classification (DLR & VEGA, Citation2011; GeoTerraImage, Citation2015; Lück & van Niekerk, Citation2016; Muirhead & Malkawi, Citation1989; Simonetti et al. Citation2015a), multi-temporal post-classification LC change (LCC)/no-change detection (Baraldi, Citation2017; Baraldi et al., Citation2016; Simonetti et al., Citation2015a; Tiede, Baraldi, Sudmanns, Belgiu, & Lang, Citation2016), multi-temporal vegetation gradient detection and quantization into fuzzy sets (Arvor, Madiela, & Corpetti, Citation2016), multi-temporal burned area detection (Boschetti, Roy, Justice, & Humber, Citation2015), and prior knowledge-based LC mask refinement (cleaning) of supervised data samples employed as input to supervised data learning EO-IUSs (Baraldi et al., Citation2010a, Citation2010b). Due to their large application domain, ranging from low- (pre-attentional) to high-level (attentional) vision tasks, existing hybrid EO-IUSs in operating mode, whose statistical data models are class-conditioned by static color naming, become natural candidates for the research and development (R&D) of an EO-IUS in operating mode, capable of systematic transformation of multi-source single-date MS imagery into ESA EO Level 2 product at the ground segment.

Figure 11. Same as in Schläpfer et al. (Citation2009), courtesy of Daniel Schläpfer, ReSe Applications Schläpfer. A complete (“augmented”) hybrid inference workflow for MS image correction from atmospheric, adjacency and topographic effects. It combines a standard Atmospheric/Topographic Correction for Satellite Imagery (ATCOR) commercial software workflow (Richter & Schläpfer, Citation2012a, Citation2012b), with a bidirectional reflectance distribution function (BRDF) effect correction. Processing blocks are represented as circles and output products as rectangles. This hybrid (combined deductive and inductive) workflow alternates deductive/prior knowledge-based with inductive/learning-from-data inference units, starting from initial conditions provided by a first-stage deductive Spectral Classification of surface reflectance signatures (SPECL) decision tree for color naming (pre-classification), implemented within the ATCOR commercial software toolbox (Richter & Schläpfer, Citation2012a, Citation2012b). Categorical variables generated by the pre-classification and classification blocks are employed to stratify (mask) unconditional numeric variable distributions, in line with the statistic stratification principle (Hunt & Tyrrell, Citation2012). Through statistic stratification (class-conditional data analytics), inherently ill-posed inductive learning-from-data algorithms are provided with prior knowledge required in addition to data to become better posed for numerical solution, in agreement with the machine learning-from-data literature (Cherkassky & Mulier, Citation1998).

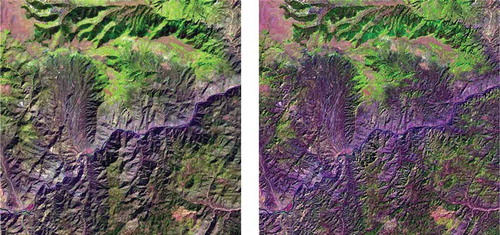

Figure 12. Left: Zoomed area of a Landsat 7 ETM+ image of Colorado, USA (path: 128, row: 021, acquisition date: 2000–08-09), depicted in false colors (R: band ETM5, G: band ETM4, B: band ETM1), 30 m resolution, radiometrically calibrated into TOARF values. Right: Output product automatically generated without human-machine interaction by the stratified topographic correction (STRATCOR) algorithm proposed in Baraldi et al. (Citation2010c), whose input datasets are one Landsat image, its data-derived L-SIAM color map at coarse color granularity, consisting of 18 spectral categories for stratification purposes (see ), and a standard 30 m resolution Shuttle Radar Topography Mission (SRTM) digital elevation model (DEM).

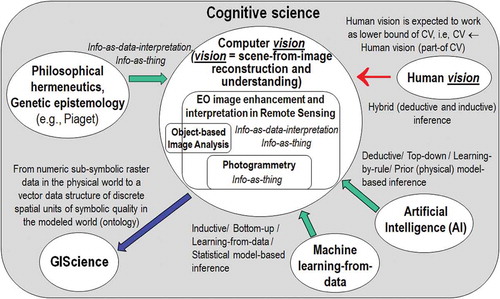

The terminology adopted in the rest of this paper is mainly driven from the multidisciplinary domain of cognitive science, see Figure . Popular synonyms of deductive inference are top-down, prior knowledge-based, learning-from-rule and physical model-based inference. Synonyms of inductive inference are bottom-up, learning-from-data, learning-from-examples and statistical model-based inference (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Liang, Citation2004). Hybrid inference systems combine statistical and physical data models to take advantage of the unique features of each and overcome their shortcomings (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Cherkassky & Mulier, Citation1998; Liang, Citation2004). For example, in biological cognitive systems “there is never an absolute beginning” (Piaget, Citation1970), where an a priori genotype provides initial conditions to an inductive learning-from-examples phenotype (Parisi, Citation1991). Hence, any biological cognitive system is a hybrid inference system where inductive/phenotypic learning-from-examples mechanisms explore the neighborhood of deductive/genotypic initial conditions in a solution space (Parisi, Citation1991). In line with biological cognitive systems, an artificial hybrid inference system can alternate deductive and inductive inference algorithms, starting from a deductive inference first stage for initialization purposes, see Figure . It means that no deductive inference subsystem, such as SIAM, should be considered stand-alone, but eligible for use in a hybrid inference system architecture to initialize (pre-condition, stratify) inductive learning-from-data algorithms, which are inherently ill-posed, difficult to solve and require a priori knowledge in addition to data to become better posed for numerical solution, as clearly acknowledged by the machine learning-from-data literature (Bishop, Citation1995; Cherkassky & Mulier, Citation1998).

Figure 13. Like engineering, remote sensing (RS) is a metascience, whose goal is to transform knowledge of the world, provided by other scientific disciplines, into useful user- and context-dependent solutions in the world. Cognitive science is the interdisciplinary scientific study of the mind and its processes. It examines what cognition (learning) is, what it does and how it works. It especially focuses on how information/knowledge is represented, acquired, processed and transferred within nervous systems (distributed processing systems in humans, such as the human brain, or other animals) and machines (e.g., computers). Neurophysiology studies nervous systems, including the brain. Human vision is expected to work as lower bound of CV, i.e., human vision → (part-of) CV, such that inherently ill-posed CV is required to comply with human visual perception phenomena to become better conditioned for numerical solution.

To comply with the GEO-CEOS stage 4 Cal/Val requirements, the selected ready-for-use SIAM software executable had to be validated by independent means on a radiometrically calibrated EO image time-series at large spatial extent. This input data set was identified in the open-access USGS 30 m resolution Web Enabled Landsat Data (WELD) annual composites of the conterminous U.S. (CONUS) for the years 2006–2009, radiometrically calibrated into TOARF values (Homer, Huang, Yang, Wylie, & Coan, Citation2004; Roy et al., Citation2010; WELD Citation2015). The 30 m resolution 16-class U.S. National Land Cover Data (NLCD) 2006 map, delivered in 2011 by the USGS Earth Resources Observation Systems (EROS) Data Center (EDC) (EPA Citation2007; Vogelmann et al., Citation2001; Vogelmann, Sohl, Campbell, & Shaw, Citation1998; Wickham, Stehman, Fry, Smith, & Homer, Citation2010; Wickham et al., Citation2013; Xian & Homer, Citation2010), was selected as reference thematic map at continental spatial extent. The USGS 16-class NLCD 2006 map legend is summarized in Table . To account for typical non-stationary geospatial statistics, the USGS NLCD 2006 thematic map was partitioned into 86 Level III ecoregions of North America collected from the Environmental Protection Agency (EPA) (EPA Citation2013; Griffith & Omernik, Citation2009).

Table 2. Definition of the USGS NLCD 2001/2006/2011 classification taxonomy, Level II. 2 Alaska only

In this experimental framework, the test SIAM-WELD annual color map time-series for the years 2006–2009 and the reference USGS NLCD 2006 map share the same spatial extent and spatial resolution, but their map legends are not the same. These working hypotheses are neither trivial nor conventional in the RS literature, where thematic map quality assessment strategies typically adopt an either random or non-random sampling strategy and assume that the test and reference thematic map dictionaries coincide (Stehman & Czaplewski, Citation1998). Starting from a stratified random sampling protocol presented in Baraldi et al. (Citation2014), the secondary contribution of the present study was to develop a novel protocol for wall-to-wall comparison without sampling of two thematic maps featuring the same spatial extent and spatial resolution, but whose legends can differ.

For the sake of readability this paper is split into two, the present Part 1—Theory and the subsequent Part 2—Validation. An expert reader familiar with static color naming in cognitive science, spanning from linguistics to human vision and CV, can skip the present Part 1, either totally or in part. To make this paper self-contained and provided with a relevant survey value, the Part 1 is organized as follows. The multidisciplinary background of color naming is discussed in Chapter 2. Chapter 3 reviews the long history of prior knowledge-based decision trees for MS color naming presented in the RS literature. To cope with thematic map legends that do not coincide and must be harmonized (reconciled, associated, translated) (Ahlqvist, Citation2005), such as dictionaries of MS color names in the image-domain and LC class names in the scene-domain, Chapter 3 proposes an original hybrid inference guideline to identify a categorical variable-pair relationship, where prior beliefs are combined with additional evidence inferred from new data. An original measure of categorical variable-pair association (harmonization) in a binary relationship is proposed in Chapter 4. In the subsequent Part 2, GEO-CEOS stage 4 Val results are collected by an original protocol for wall-to-wall thematic map quality assessment without sampling, where legends of the test SIAM-WELD annual map time-series and reference USGS NLCD 2006 map are harmonized. Conclusions are that the annual SIAM-WELD map time-series for the years 2006–2009 provides a first example of GEO-CEOS stage 4 validated ESA EO Level 2 SCM product, where the Level 2 SCM legend is the “augmented” 2-level 4-class FAO LCCS taxonomy at the DP Level 1 (vegetation/non-vegetation) and DP Level 2 (terrestrial/aquatic), added with extra class “rest of the world”.

2. Problem background of color naming in cognitive science

Within the cognitive science domain, vision is synonym of scene-from-image reconstruction and understanding, see Figure . Encompassing both biological vision and CV, vision is a cognitive (information-as-data-interpretation) problem inherently ill-posed in the Hadamard sense (Hadamard, Citation1902); hence, it is very difficult to solve. Vision is non-polynomial (NP)-hard in computational complexity (Frintrop, Citation2011; Tsotsos, Citation1990) and requires a priori knowledge in addition to sensory data to become better posed for numerical solution (Cherkassky & Mulier, Citation1998). It is inherently ill-posed because affected by, first, data dimensionality reduction from the 4D spatio-temporal scene-domain to the (2D) image-domain and, second, by a semantic information gap from ever-varying sensations in the (2D) image-domain to stable percepts in the mental model of the 4D scene-domain (Matsuyama & Hwang, Citation1990). On the one hand, ever-varying sensations collected from the 4D spatio-temporal physical world are synonym of observables, numeric/quantitative variables of sub-symbolic value or sensory data provided with a physical unit of measure, such as TOARF or SURF values, but featuring no semantics corresponding to abstract concepts, like perceptual categories or mental states. On the other hand, in a modeled world, also known as world ontology, mental world or “world model” (Matsuyama & Hwang, Citation1990), stable percepts are nominal/categorical/qualitative variables of symbolic value, i.e., they are categorical variables provided with semantics, such as LC class names belonging to a hierarchical FAO LCCS taxonomy of the world (Di Gregorio & Jansen, Citation2000), see Figure .

In statistics, the popular concept of latent/hidden variables was introduced to fill the information gap from input observables to target categorical variables. Latent/hidden variables are not directly measured, but inferred from observable numeric variables to link sensory data in the real world to categorical variables of semantic quality in the modeled world. “The terms hypothetical variable or hypothetical construct may be used when latent variables correspond to abstract concepts, like perceptual categories or mental states” (Baraldi, Citation2017; Shotton, Winn, Rother, & Criminisi, Citation2009; Wikipedia, Citation2018b). Hence, to fill the semantic gap from low-level numeric variables of sub-symbolic quality to high-level categorical variables of semantic value, hypothetical variables, such as categorical BC names (Benavente et al., Citation2008; Berlin & Kay, Citation1969; Gevers, Gijsenij, van de Weijer, & Geusebroek, Citation2012; Griffin, Citation2006), are expected to be mid-level categorical variables of “semi-symbolic” quality, i.e., hypothetical variables are nominal variables provided with a semantic value located “low” in a hierarchical ontology of the world, such as the hierarchical FAO LCCS taxonomy (Di Gregorio & Jansen, Citation2000), but always superior to zero, where zero is the semantic value of sub-symbolic numeric variables, see Figure .

Figure 14. Graphical model of color naming, adapted from Shotton et al. (Citation2009). Let us consider x as a (sub-symbolic) numeric variable, such as MS color values of a population of spatial units, with x ∈ ℜMS, while c represents a categorical variable of symbolic classes in the physical world, with c = 1, …, ObjectClassLegendCardinality. (a) According to Bayesian theory, posterior probability p(c|x) ∝ p(x|c)p(c) = p(c) , where color names, equivalent to color hyperpolyhedra in a numeric color space ℜMS, provide a partition of the domain of change, ℜMS, of numeric variable x. (b) For discriminative inference, the arrows in the graphical model are reversed using Bayes rule. Hence, a vocabulary of color names, physically equivalent to a partition of a numeric color space ℜMS into color name-specific hyperpolyhedra, is conceptually equivalent to a latent/hidden/hypothetical variable linking observables (sub-symbolic sensory data) in the real world, specifically, color values, to a categorical variable of semantic (symbolic) quality in the mental model of the physical world (world ontology, world model).

In vision, spatial topological and spatial non-topological information components typically dominate color information (Baraldi, Citation2017; Matsuyama & Hwang, Citation1990). This thesis is proved by the undisputable fact that achromatic (panchromatic) human vision, familiar to everybody when wearing sunglasses, is nearly as effective as chromatic vision in scene-from-image reconstruction and understanding. Driven from perceptual evidence in human vision typically investigated by cognitive science, see Figure , a necessary not sufficient condition for a CV system to prove it fully exploits spatial topological and spatial non-topological information components in addition to color is to perform nearly the same when input with either panchromatic or color imagery. Stemming from a priori knowledge of human vision available in addition to sensory data, this necessary not sufficient condition can be adopted to make an inherently ill-posed CV system design and implementation problem better constrained for numerical solution.

Deeply investigated in CV (Frintrop, Citation2011; Sonka et al., Citation1994), content-based image retrieval (Smeulders, Worring, Santini, Gupta, & Jain, Citation2000) and EO image applications proposed by the RS community (Baraldi, Citation2017; Baraldi & Boschetti, Citation2012a, Citation2012b; Matsuyama & Hwang, Citation1990; Nagao & Matsuyama, Citation1980; Shackelford & Davis, Citation2003a, Citation2003b), well-known visual features are: (i) color values, typically discretized by humans into a finite and discrete vocabulary of BC names (Benavente et al., Citation2008; Berlin & Kay, Citation1969; Gevers et al., Citation2012; Griffin, Citation2006); (ii) local shape (Baraldi, Citation2017; Wenwen Li et al. Citation2013); (iii) texture, defined as the perceptual spatial grouping of texture elements known as texels (Baraldi, Citation2017; Julesz, Citation1986; Julesz et al., Citation1973) or tokens (Marr, Citation1982); (iv) inter-object spatial topological relationships, e.g., adjacency, inclusion, etc., and (v) inter-object spatial non-topological relationships, e.g., spatial distance, angle measure, etc. In vision, color is the sole visual property available at the imaging sensor’s spatial resolution, i.e., at pixel level. In other words, pixel-based information is spatial context independent. i.e., per-pixel information is exclusively related to color properties. Among the aforementioned visual variables, per-pixel color values are the sole non-spatial (spatial context-insensitive) numeric variable.

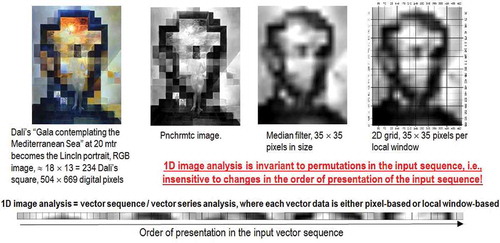

Neglecting the fact that spatial topological and spatial non-topological information components typically dominate color information in both the (2D) image-domain and the 4D spatio-temporal scene-domain involved with the cognitive task of vision (Matsuyama & Hwang, Citation1990), traditional EO-IUSs adopt a 1D image analysis approach, see Figure . In 1D image analysis, a 1D streamline of vector data, either spatial context-sensitive (e.g., window-based or image object-based like in OBIA approaches) or spatial context-insensitive (pixel-based), is processed insensitive to changes in the order of presentation of the input sequence. In practice 1D image analysis is invariant to permutations, such as in orderless pooling encoders (Cimpoi et al., Citation2014). When vector data are spatial context-sensitive then 1D image analysis ignores spatial topological information. When vector data are pixel-based then 1D image analysis ignores both spatial topological and spatial non-topological information components. Prior knowledge-based color naming of a spatial unit x in the image-domain, where x is either (0D) point, (1D) line or (2D) polygon defined according to the OGC nomenclature (OGC, Citation2015), is a special case of 1D image analysis, either pixel-based or image object-based, where spatial topological and/or spatial non-topological information are ignored, such as in SIAM’s static color naming (Baraldi et al., Citation2006).

Figure 15. Example of 1D image analysis. Synonym of 1D analysis of a 2D gridded dataset, it is affected by spatial data dimensionality reduction. The (2D) image at left is transformed into the 1D vector data stream shown at bottom, where vector data are either pixel-based or spatial context-sensitive, e.g., local window-based. This 1D vector data stream, either pixel-based or local window-based, means nothing to a human photointerpreter. When it is input to a traditional inductive data learning classifier, this 1D vector data stream is what the inductive classifier actually sees when watching the (2D) image at left. Undoubtedly, computers are more successful than humans in 1D image analysis, invariant to permutations in the input vector data sequence, such as in orderless pooling encoders (Cimpoi et al., Citation2014). Nonetheless, humans are still far more successful than computers in 2D image analysis, synonym of spatial topology-preserving (retinotopic) image analysis (Tsotsos, Citation1990), sensitive to permutations in the input vector data sequence, such as in order-sensitive pooling encoders (Cimpoi et al., Citation2014).

Alternative to 1D image analysis, 2D image analysis relies on a sparse (distributed) 2D array (2D regular grid) of local spatial filters, suitable for spatial topology-preserving (retinotopic) feature mapping (DiCarlo, Citation2017; Fritzke, Citation1997a; Martinetz, Berkovich, & Schulten, Citation1994; Tsotsos, Citation1990), sensitive to permutations in the input vector data sequence, such as in order-sensitive pooling encoders (Cimpoi et al., Citation2014), see Figure . The human brain’s organizing principle is topology-preserving feature mapping (Feldman, Citation2013). In the biological visual system, topology-preserving feature maps are primarily spatial, where activation domains of physically adjacent processing units in the 2D array of convolutional filters are spatially adjacent regions in the 2D visual field. Provided with a superior degree of biological plausibility in modeling 2D spatial topological and spatial non-topological information components, distributed processing systems capable of 2D image analysis, such as physical model-based (“hand-crafted”) 2D wavelet filter banks (Mallat, Citation2016) and end-to-end inductive learning-from-data DCNNs, typically outperform 1D image analysis approaches (Cimpoi et al., Citation2014; DiCarlo, Citation2017), although DCNNs are the subject of increasing criticisms by the artificial intelligence (AI) community (DiCarlo, Citation2017; Etzioni, Citation2017; Marcus, Citation2018). This apparently trivial consideration is at odd with a relevant portion of the RS literature, where pixel-based 1D image analysis is mainstream, followed in popularity by spatial context-sensitive 1D image analysis implemented within the OBIA paradigm (Blaschke et al., Citation2014). Undoubtedly, computers are more successful than humans in 1D image analysis, invariant to permutations in the input vector data sequence (Cimpoi et al., Citation2014). Nonetheless, humans are still far more successful than computers in 2D image analysis, synonym of spatial topology-preserving feature mapping (Tsotsos, Citation1990), which implies sensitivity to permutations in the input vector data sequence (Cimpoi et al., Citation2014).

Figure 16. 2D image analysis, synonym of spatial topology-preserving (retinotopic) feature mapping in a (2D) image-domain (Tsotsos, Citation1990). Activation domains of physically adjacent processing units in the 2D array of convolutional spatial filters are spatially adjacent regions in the 2D visual field. Provided with a superior degree of biological plausibility in modeling 2D spatial topological and spatial non-topological information, distributed processing systems capable of 2D image analysis, such as deep convolutional neural networks (DCNNs), typically outperform traditional 1D image analysis approaches. Will computers become as good as humans in 2D image analysis?

Since traditional EO-IUSs adopt a 1D image analysis approach, where dominant spatial information is omitted either totally or in part in favor of secondary color information, it is useful to turn attention to the multidisciplinary framework of cognitive science to shed light on how humans cope with color information. According to cognitive science which includes linguistics, the study of languages, see Figure , humans discretize (fuzzify) ever-varying quantitative (numeric) photometric and spatio-temporal sensations into stable qualitative/categorical/nominal percepts, eligible for use in symbolic human reasoning based on a convergence-of-evidence approach (Matsuyama & Hwang, Citation1990). In their seminal work, Berlin and Kay proved that 20 human languages, spoken across space and time in the real world, partition quantitative color sensations collected in the visible portion of the electromagnetic spectrum, see Figure , onto the same “universal” vocabulary of eleven BC names (Berlin & Kay, Citation1969): black, white, gray, red, orange, yellow, green, blue, purple, pink and brown. In a 3D monitor-typical red-green-blue (RGB) data cube, BC names are intuitive to think of and easy to visualize. They provide a mutually exclusive and totally exhaustive partition of a monitor-typical RGB data cube into RGB polyhedra neither necessarily connected nor convex, see (Benavente et al., Citation2008; Griffin, Citation2006). Since they are community-agreed upon to be used by members of the same community, RGB BC polyhedra are prior knowledge-based, i.e., stereotyped, non-adaptive-to-data (static), general-purpose, application- and user-independent. Multivariate measurement space partitioning into a discrete and finite set of mutually exclusive and totally exhaustive hyperpolyhedra copes with the transformation of a numeric variable into a categorical variable, see Figure . Numeric variable discretization is a typical problem in many scientific disciplines, such as inductive VQ in machine learning-from-data (Cherkassky & Mulier, Citation1998) and deductive numeric variable fuzzification into discrete fuzzy sets, e.g., low, medium and high, in fuzzy logic (Zadeh, Citation1965), refer to Chapter 1.

To summarize, human languages refer to human colorimetric perception in terms of a stable, prior knowledge-based vocabulary (codebook) of BC names (codewords) non-adaptive to data, physically equivalent to a discrete and finite set of mutually exclusive and totally exhaustive hyperpolyhedra, neither necessarily convex nor connected in a numeric MS color space, identified as ℜMS, where MS >2, e.g., MS = 3 like in a monitor-typical RGB data cube, see Figure . These BC names are conceptually equivalent to a latent/hypothetical categorical variable of semi-symbolic quality, see Figure , capable of linking sub-symbolic sensory data in the real world, specifically color values in color space ℜMS, to categorical variables of semantic (symbolic) quality in the world model, also known as world ontology or mental world, made of abstract concepts, like perceptual categories of real-world objects or mental states.

In an analytic model of vision based on a convergence-of-evidence approach, the first original contribution of the present Part 1 is to encode prior knowledge about color naming into a CV system by design, as described hereafter. Irrespective of their Pearson inter-feature cross-correlation, if any, it is easy to prove that individual sources of visual evidence, such as color, local shape, texture and inter-object spatial relationships, are statistically independent because, in general, Pearson’s linear cross-correlation does not imply causation (Baraldi, Citation2017; Baraldi & Soares, Citation2017; Pearl, Citation2009). According to a “naive” hypothesis of conditional independence of visual features color, local shape, texture and inter-object spatial relationships, when target classes of observed objects in the real-world scene are c = 1, …, ObjectClassLegendCardinality, for a given discrete spatial unit x in the image-domain, either 0D point, 1D line or 2D polygon (OGC, Citation2015), then the well-known “naïve” Bayes classification formulation (Bishop, Citation1995) becomes

where ColorValue(x) belongs to a MS measurement space ℜMS, i.e., ColorValue(x) ∈ ℜMS, and Neigh(x) is a generic 2D spatial neighborhood of spatial unit x in the (2D) image-domain. Equation (1) shows that any convergence-of-evidence approach is more selective than each individual source of evidence, in line with a focus-of-visual attention mechanism (Frintrop, Citation2011). For the sake of simplicity, if priors are ignored because considered equiprobable in a maximum class-conditional likelihood inference approach alternative to a maximum a posteriori optimization criterion, then Equation (1) becomes

where color space ℜMS is partitioned into hyperpolyhedra, equivalent to a discrete and finite vocabulary of static color names, with ColorName = 1, …, ColorVocabularityCardinality. To further simplify Equation (2), its canonical interpretation based on frequentist statistics can be relaxed by fuzzy logic (Zadeh, Citation1965), so that the logical-AND operator is replaced by a fuzzy-AND (min) operator, inductive class-conditional probability p(x| c) ∈ [0, 1], where , is replaced by a deductive membership (compatibility) function m(x| c) ∈ [0, 1], where

according to the principles of fuzzy logic, where compatibility/membership does not mean probability, and color space hyperpolyhedra are considered mutually exclusive and totally exhaustive. If these simplifications are adopted, then Equation (2) becomes

In Equation (3), the following considerations hold.

Each numeric ColorValue(x) in color space ℜMS belongs to a single color name (hyperpolyhedron) ColorName* in the static color name vocabulary, i.e., ∀ ColorValue(x) ∈ ℜMS,

holds, where m(ColorValue(x)| ColorName) ∈ {0, 1} is a binary (crisp, hard) membership function, with ColorName = 1, …, ColorVocabularyCardinality and ColorName* ∈ {1, ColorVocabularyCardinality}.

Set A = VocabularyOfColorNames, with cardinality |A| = a = ColorVocabularyCardinality, and set B = LegendOfObjectClassNames, with cardinality |B| = b = ObjectClassLegendCardinality, can be considered a bivariate categorical random variable where two univariate categorical variables A and B are generated from a single population. A binary relationship from set A to set B, R: A ⇒ B, is a subset of the 2-fold Cartesian product (product set) A × B, whose size is rows × columns = a × b, hence, R: A ⇒ B ⊆ A × B. The Cartesian product of two sets A × B is a set whose elements are ordered pairs. Hence, the Cartesian product is non-commutative, A × B ≠ B × A. In agreement with common sense, see Table , binary relationship R: VocabularyOfColorNames ⇒ LegendOfObjectClassNames is a set of ordered pairs where each ColorName can be assigned to none, one or several classes of observed scene-objects with class index c = 1, …, ObjectClassLegendCardinality, whereas each class of observed objects can be assigned with none, one or several color names to define the class-specific colorimetric attribute. Binary membership values m(ColorName| c) ∈ {0, 1} and m(c| ColorName) ∈ {0, 1}, with c = 1, …, ObjectClassLegendCardinality and ColorName = 1, …, ColorVocabularyCardinality, can be community-agreed upon based on various kinds of evidence, whether viewed all at once or over time, such as a combination of prior beliefs with additional evidence inferred from new data in agreement with a Bayesian updating rule, largely applied in AI, including design and implementation of expert systems. In Bayesian updating, Bayesian inference is applied iteratively (Ghahramani, Citation2011; Wikipedia, Citation2018c): after observing some evidence, the resulting posterior probability can be treated as a prior probability and a new posterior probability computed from new evidence. A binary relationship R: A ⇒ B ⊆ A × B, where sets A and B are categorical variables generated from a single population, guides the interpretation process of a two-way contingency table, also known as association matrix, cross tabulation, bivariate table or bivariate frequency table (BIVRFTAB) (Kuzera & Pontius, Citation2008; Pontius & Connors, Citation2006; Pontius & Millones, Citation2011), such that BIVRFTAB = FrequencyCount(A × B). In the conventional domain of frequentist inference with no reference to prior beliefs, a BIVRFTAB is the 2-fold Cartesian product A × B instantiated by the bivariate frequency counts of the two univariate categorical variables A and B generated from a single population. Hence, binary relationship R: A ⇒ B ⊆ A × B ≠ FrequencyCount(A × B) = BIVRFTAB, where one instantiation of the former guides the interpretation process of the latter. In greater detail, for any BIVRFTAB instance, either square or non-square, there is a binary relationship R: A ⇒ B ⊆ A × B that guides the interpretation process, where “correct” binary entry-pair cells of the 2-fold Cartesian product A × B are equal to 1 and located either off-diagonal (scattered) or on-diagonal, if a main diagonal exists when the BIVRFTAB is square. When a BIVRFTAB is estimated from a geospatial population with or without sampling, it is called overlapping area matrix (OAMTRX) (Baraldi et al., Citation2014; Baraldi, Bruzzone, & Blonda, Citation2005; Baraldi et al., Citation2006; Beauchemin & Thomson, Citation1997; Lunetta & Elvidge, Citation1999; Ortiz & Oliver, Citation2006; Pontius & Connors, Citation2006). When the binary relationship R: A ⇒ B is a bijective function (both 1–1 and onto), i.e., when the two categorical variables A and B estimated from a single population coincide, then the BIVRFTAB instantiation is square and sorted; it is typically called confusion matrix (CMTRX) or error matrix (Congalton & Green, Citation1999; Lunetta & Elvidge, Citation1999; Pontius & Millones, Citation2011; Stehman & Czaplewski, Citation1998). In a CMTRX, the main diagonal guides the interpretation process. For example, a square OAMTRX = FrequencyCount(A × B), where A = test thematic map legend, B = reference thematic map legend such that cardinality a = b, is a CMTRX if and only if A = B, i.e., if the test and reference codebooks are the same sorted set of concepts or categories. In general the class of (square and sorted) CMTRX instances is a special case of the class of OAMTRX instances, either square or non-square, i.e., OAMTRX ⊃ CMTRX. A similar consideration holds about summary Q2Is generated from an OAMTRX or a CMTRX, i.e., Q2I(OAMTRX) ⊃ Q2I(CMTRX) (Baraldi et al., Citation2014, Citation2005, Citation2006).

Table 3. Example of a binary relationship R: A ⇒ B ⊆ A × B from set A = VocabularyOfColorNames, with cardinality |A| = a = ColorVocabularyCardinality = 11, and the set B = LegendOfObjectClassNames, with cardinality |B| = b = ObjectClassLegendCardinality = 3

Table 4. Rule set (structural knowledge) and order of presentation of the rule set (procedural knowledge) adopted by the prior knowledge-based MS reflectance space quantizer called Spectral Classification of surface reflectance signatures (SPECL), implemented within the ATCOR commercial software toolbox (Dorigo et al., Citation2009; Richter & Schläpfer, Citation2012a, Citation2012b)

Table 5. 8-step guideline for best practice in the identification of a dictionary-pair binary relationship based on a hybrid combination of (top-down) prior beliefs, if any, with (bottom-up) frequentist inference

Equation (3) shows that for any spatial unit x in the image-domain, when a hierarchical CV classification approach estimates posterior m(c| ColorValue(x), ShapeValue(x), TextureValue(x), SpatialRelationships(x, Neigh(x))) starting from an a priori knowledge-based near real-time color naming first stage, where condition m(ColorValue(x)| ColorName*) = 1 holds, if condition m(ColorName*| c) = 0 is true according to a static community-agreed binary relationship R: VocabularyOfColorNames ⇒ LegendOfObjectClassNames (and vice versa) known a priori, see Table , then m(c| ColorValue(x), ShapeValue(x), TextureValue(x), SpatialRelationships(x, Neigh(x))) = 0 irrespective of any second-stage assessment of spatial terms ShapeValue(x), TextureValue(x) and SpatialRelationships(x, Neigh(x)), whose computational model is typically difficult to find and computationally expensive. Intuitively, Equation (3) shows that static color naming of any spatial unit x, either (0D) pixel, (1D) line or (2D) polygon, allows the color-based stratification of unconditional multivariate spatial variables into color class-conditional data distributions, in agreement with the statistic stratification principle (Hunt & Tyrrell, Citation2012) and the divide-and-conquer (dividi-et-impera) problem solving approach (Bishop, Citation1995; Cherkassky & Mulier, Citation1998; Lipson, Citation2007). Well known in statistics, the principle of statistic stratification guarantees that “stratification will always achieve greater precision provided that the strata have been chosen so that members of the same stratum are as similar as possible in respect of the characteristic of interest” (Hunt & Tyrrell, Citation2012).

Whereas 3D color polyhedra are easy to visualize and intuitive to think of in a true- or false-color RGB data cube, see Figure , hyperpolyhedra are difficult to think of and impossible to visualize in a MS reflectance space whose spectral dimensionality MS >3, with spectral channels ranging from visible to thermal portions of the electromagnetic spectrum, see Figure . Since it is non-adaptive-to-data, any static hyperpolyhedralization of a MS measurement space must be based on a priori physical knowledge available in addition to sensory data. Equivalent to a physical data model, static hyperpolyhedralization of a MS data space requires all spectral channels to be provided with a physical unit of radiometric measure, i.e., MS data must be radiometrically calibrated, in compliance with the GEO-CEOS QA4EO Cal requirements (GEO-CEOS, 2010, Citation2010), refer to Chapter 1.