Abstract

This paper proposes and examines a new structural risk of default model for banks in frictional and fuzzy financial markets. It is motivated by the need to address the shortcomings of probability-based credit risk metric models that rest on unrealistic assumptions such as crisp, precise and constant risk-free rates of return. Specifically, the study proposes a new Kealhofer–Merton–Vasicek (KMV)-type model for estimating the risk of default for banks, extended for both market friction, represented by transaction costs, and uncertainty, modelled by fuzziness. The novel risk of default model is then validated using cross-sectional financial data of eight commercial banks drawn from several emerging economies in Southern Africa. The results from the proposed model are fairly stable and consistent compared to those from hazard function and structural credit risk models currently used in the markets. The model is relevant in that it fairly captures the practical conditions faced by banks that influence their risks of default in their quest to improve financial performance and shareholders’ wealth. The study recommends that banks in frictional and fuzzy financial markets, such as those in emerging economies, adopt and implement the proposed risk of default model.

JEL Classification:

Public interest statement

Risk of default measures the probability that a bank will not fulfil its principal and interest loan obligations due to its past commitments, current market, economic and liquidity circumstances. Investors must accurately measure the risk of default facing their financial investments in markets characterised by high transaction costs, also called market friction. The work proposes a model that incorporates vague concepts, such as high, medium and low volatility, covered in fuzzy logic or fuzziness, and market friction which characterise most emerging economies, in estimation of risks of default of banks. The results of the study are that banks’ risks of default are inversely related to their asset values, volatilities and returns on equity and directly correlated to market frictions and liabilities. Therefore, the study ends by recommending that banks must efficiently and effectively manage their investments in frictional and fuzzy financial markets to improve corporate performance and sustainability.

Abridged paper revision under summary of reviewer suggestions and comments

After going through suggestions and comments by Reviewers of our Risk of Default Paper, the following table summarises areas of concern that were noted for revision by the Reviewers:

1. Introduction

The Merton (Citation1974) structural probability of default (PD) model revolutionised research and practice in credit risk modelling in banking firms, and over the years, academic researchers and practitioners have extended Merton’s theory in various directions. For instance, structural credit risk models are based on the assumption that the non-stationary structure of the loan obligation leads to the termination of operations on a fixed date and default can only happen on a specified date. Geske and Delianedis (Citation2003) extend the Merton asset valuation model (AVM) to the case of the valuation of tradable bonds with different terms to maturity. Their study concludes that it is incorrect to assume that the firm’s value of assets can be traded in financial markets. In reality, the value of the assets of a firm and its related parameters may not even be directly observed as articulated in most structural valuation models. This is one of the major drawbacks of structural credit risk models (CRMs) such as the Merton (Citation1974) and Black and Scholes (Citation1973) models that new models must seek to address.

Geske and Delianedis (Citation2003) conclude that interest rates, which are assumed to be fixed in the traditional PD model, are in fact random or stochastic. They go further to conclude that the yield spread curve in calibrated versions of the Merton model remains essentially zero for months, contrary to the observations made in real financial markets. Furthermore, structural models for the valuation of banks are based on the assumption that financial markets are perfect and frictionless. However, in practice, these markets are characterised by some degrees of friction and uncertainty such as fuzziness, vagueness, and ambiguity (Zadeh, Citation1965; Zimmermann, Citation1980). Therefore, the study proposes a KMV risk of default model extended for transaction costs for estimation of the risk of default in banks that operate in fuzzy financial markets.

2. Background to the study

Banks worldwide operate in financial markets characterised by some degrees of uncertainty, particularly in the forms of friction and fuzziness. Zimmermann (Citation1980) and Zadeh (Citation1965) demonstrate that most contemporary CRMs are suitable for the estimation of PDs of banks where transaction costs are negligible, minimal, or equal to zero. In reality, financial investors often subjectively express the uncertainty they face with some degree of implicit fuzziness also known as impreciseness (Zebda, Citation1989). For instance, investors may express market costs or returns as average, high or low, which terms are not explicit but implicit numbers. According to Zebda (Citation1989), Zimmermann (Citation1980), and Zadeh (Citation1965), the investors’ use of operational language or semantics in market valuations is intrinsically imprecise contrary to some of the assumptions underlying most structural CRMs alluded to above.

However, the prerequisites of structural models such as Merton (Citation1974) and Black and Scholes (Citation1973) are that probability used in decision analysis is a “precise” number determined from repeated samples and relative frequency distributions (Kolmogorov, Citation1933). Kolmogorov’s classical probability theory differs significantly from fuzzy theory, which is derived from the “degree of belief” set by financial experts and investors. It is therefore difficult to apply structural models to the valuation of risk metrics of banks under conditions of uncertainty in fuzzy decision-making processes (Bellman & Zadeh, Citation1970). In other words, we can conclude that investors use both probabilistic and fuzzy valuation tools to characterise the uncertainty that is inherent in financial market operations.

3. Problem statement

Contemporary literature has shown that traditional structural models such as Merton (Citation1974) are the cornerstones of all structural CRMs, but they are criticised for not applying to frictional and fuzzy financial environments and for understating the risk of default in good or boom economic times. Although some contemporary structural CRMs have been adjusted for fuzziness, especially in option pricing, none of them has been extended for market friction (Obeng et al., Citation2021; Tsunga et al., Citation2021). Hence, this research is motivated by the need to incorporate market friction and fuzziness in the valuation of the risk of default of banks to fairly reflect the practical conditions faced by investors. Banks in Southern African economies are used because this region is characterised by unique economic, business, and financial environments compared to other regions such as Europe and America.

4. Aim and objectives of the study

This study aims to improve the ability of structural models in the estimation of risk metrics concerning the modelling of the risk of default of banks. The objectives of the research study are to

Examine the impact of a KMV risk metrics model extended for both friction and fuzziness on the risks of default of banks in emerging financial markets.

Validate the proposed KMV risk of default model using financial data drawn from banks in emerging markets of countries in Southern Africa.

Compare the results estimated using the KMV risk of default model of banks with those generated from both hazard function and structural risk models.

5. Significance of the study

Although structural CRMs such as Merton (Citation1974) and Black and Scholes (Citation1973) are the benchmarks on which all bank and other firm valuations are based, they are usually criticised for being founded on unrealistic assumptions such as constant risk-free rates of return and frictionless markets. In practice, banks operate in frictional and fuzzy financial markets, contrary to the assumptions on which most structural CRMs are applied. Therefore, by extending contemporary structural models for market friction and fuzziness, banks can attain precision in the estimation of their risk metrics (Palma & Ochoa, Citation2013).

6. Research hypothesis

The study is carried out under the following hypothesis:

Null hypothesis (H0): Market friction and fuzziness have no effects on the DPs of banks in emerging economies.

7. Scope of the study

The study is carried out in Southern African countries mainly characterised by frictional and fuzzy financial markets. The study extends the existing structural models for market friction and fuzziness which are practical factors investors face in the valuation of banks and their risk metrics such as the risk of default (Obeng et al., Citation2021; Tsunga et al., Citation2021).

8. Organisation of the study

The paper is divided into five sections. The introduction above is Section 1, and Section 2 reviews literature on structural PD models, transaction costs, fuzzy set theory, and its applications. Section 3 proposes a new-look KMV framework, Section 4 validates the model using financial data of eight banks from countries in Southern Africa, and Section 5 presents the conclusions and recommendations of the study.

9. Literature review

This section examines the various theoretical frameworks underlying credit risk modelling with specific reference to the use of the hazard function and structural models in the estimation of the distances-to-default (DTDs) needed in the valuation of risk metrics of banks such as the risk of default. The evolution of CRMs starts by presenting the hazard function model by Cox (Citation1972) before examining structural models such as Black and Scholes (Citation1973) and Merton (Citation1974) models and other relevant contemporary models on which the study leans.

9.1. Cox (Citation1972)’s semi-parametric hazard function model and risk of default

The Cox proportional hazards model (Cox, Citation1972) is mainly a regression model that is commonly used in medical science research for modelling the association between patients’ survival times and one or more predictor or explanatory variables. This study starts by noting that there are two main classes of models that can be applied to credit risk modelling, namely structural and reduced-form models. Structural models are used to calculate the probability of default for a firm or bank based on its values of assets and liabilities. A firm defaults if the market value of its assets falls below the debt obligations it has to settle. The hazard rate function in the context of credit risk modelling is defined as the rate of default calculated at any time, assuming that the borrower has survived up to that point in time (Boland et al., Citation2016). The other name for the hazard rate is the marginal default probability (MDP), which is different from classical probability and survival analysis in credit risk modelling. Survival analysis has been introduced into credit scoring or rating in recent years. It is an area of statistics that deals with the analysis of lifetime data, where the variable of interest is the time to the event. The difference between hazard and survival functions is that a hazard function focuses on failing or an event occurring, while a survival function focuses on not failing or an event not occurring (Sawadogo, Citation2018). Therefore, in some sense, a hazard function can be perceived as the converse side of the information provided by a survival function. The survival analysis model attempts to estimate the survival probability over the entire data set given. By comparison, risk represents probability while hazard represents settings, situations, or physical objects or phenomena.

Risk can be expressed in degrees, whereas hazards cannot be expressed in degrees. Hazard rates, unlike classical probabilities, can take values greater than 1 and therefore technically cannot be probability values. The hazard function can be interpreted as the conditional probability of the failure of a device at age X, given that it did not fail before the attainment of age X (Mendes, Citation2014). In other words, the interpretation and boundedness of a discrete hazard rate are different from those under the continuous probability case, such as the normal distributions. In reality, there are four main groups of work hazards, namely chemical, ergonomic, physical, and psychosocial, which can cause harm or adverse effects to humans in the workplace. Hazards must be identified first before a company undertakes a risk assessment, which implies that these two variables are different (Boland et al., Citation2016). In principle, a hazard is anything that could cause harm to a person or environment, while risk is a combination of two things: the chance that the hazard will cause harm and the seriousness of the harm that would be caused. The hazard rate is sometimes referred to as the failure rate, which is a rate that only applies to items or objects that cannot be repaired (Sawadogo, Citation2018). It is therefore fundamental to the design of safe systems in organisational applications and is often relied on in disciplines such as engineering, insurance, economics, finance, and banking and regulatory industries. The Cox (Citation1972) model is presented in this section briefly to pave the way for comparing results from its application in the study to those obtained from the traditional structural model and the proposed KMV risk of default model.
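The link between the hazard and survival functions discussed in this subsection can be illustrated with a minimal sketch. It assumes a discrete-time setting in which each hazard is the conditional probability of default in a period, given survival up to that period. The function names and the `hazards` values are hypothetical and are not drawn from the study's data.

```python
def survival_curve(hazards):
    """Survival probabilities after each period.

    hazards[k] is the conditional probability of default in period k + 1,
    given that the borrower survived the first k periods.
    """
    survival = []
    alive = 1.0
    for h in hazards:
        alive *= (1.0 - h)          # survive this period as well
        survival.append(alive)
    return survival


def unconditional_default_probs(hazards):
    """Probability of defaulting exactly in each period (not conditional)."""
    probs = []
    alive = 1.0
    for h in hazards:
        probs.append(alive * h)     # survive so far, then default now
        alive *= (1.0 - h)
    return probs


# Hypothetical per-period hazards, for illustration only.
hazards = [0.02, 0.03, 0.05]
survival = survival_curve(hazards)
per_period = unconditional_default_probs(hazards)
cumulative_pd = 1.0 - survival[-1]
```

The per-period default probabilities sum to the cumulative PD, which is one minus the final survival probability. In continuous time the hazard is a rate per unit of time rather than a probability, which is why it may exceed 1.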

9.2. Structural models for valuation of risk metrics of banking firms

Although the history of structural models dates back to before the Black and Scholes (Citation1973) option pricing model (OPM), the models for the management of credit portfolios were pioneered by Merton (Citation1974). These models were then developed further by Leland (1994), Leland and Toft (1996), Anderson and Sundaresan (1996), and Jarrow (2011). Although the Merton (Citation1974) model is the benchmark on which all credit risk models are based, it is said to be limited in that the asset values and volatilities of firms on which it depends are not directly observable. Secondary parameters of the Merton model such as $\mu$ and $\sigma$ (drift and asset volatility, respectively) are unknown and should be estimated from observed model parameters such as equity values and volatilities. Several estimation methods for the two parameters are applied in economics, business, banking, and finance, but not enough attention has been given to their theoretical and empirical shortcomings.

New models for the valuation of risk metrics of banks have been introduced in a somewhat unique liability structure in financial practice. Hence, emphasis needs to be laid on KMV methods and maximum-likelihood approaches to the valuation of risk metrics of banks. The KMV approach makes the Credit Analytics Services (CAS) offered by Moody’s KMV rigorous as detailed in the research by Crosbie and Bohn (Citation2003). The two researchers start by describing KMV as a restriction method without referring to Ronn and Verma’s (1986) model. However, they later take it as an iterative approach made up of the following steps:

Application of the option pricing formula, with the current values of the unknown parameters, to obtain a time series of implied asset values and hence continuously compounded asset returns.

Use of the time series of continuously compounded asset returns to obtain updated estimates for the unknowns, $\mu$ and $\sigma$.

Going back to the initial stage with the updated values unless convergence has been attained.
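The iterative steps above can be sketched as follows. This is a minimal illustration and not the proprietary Moody's KMV implementation. It assumes that equity is priced as a Black–Scholes call on the firm's assets, that the starting value of the asset volatility is the annualised equity volatility, and that observations are daily (250 per year). All function names and the equity series are hypothetical.

```python
import math
from statistics import mean, stdev


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def equity_value(assets, debt, r, sigma, horizon):
    """Equity as a Black-Scholes call on assets with strike equal to debt."""
    d1 = (math.log(assets / debt) + (r + 0.5 * sigma ** 2) * horizon) / (
        sigma * math.sqrt(horizon))
    d2 = d1 - sigma * math.sqrt(horizon)
    return assets * norm_cdf(d1) - debt * math.exp(-r * horizon) * norm_cdf(d2)


def implied_assets(equity, debt, r, sigma, horizon):
    """Invert equity_value for the asset value by bisection.

    The call value lies between assets - debt and assets (for r >= 0),
    so the implied asset value lies between equity and equity + debt.
    """
    lo, hi = equity, equity + debt
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if equity_value(mid, debt, r, sigma, horizon) > equity:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def kmv_iterate(equities, debt, r, horizon=1.0, dt=1.0 / 250,
                tol=1e-6, max_iter=200):
    """Return (mu, sigma, implied asset series) from the iteration."""
    eq_rets = [math.log(b / a) for a, b in zip(equities, equities[1:])]
    sigma = stdev(eq_rets) / math.sqrt(dt)      # starting guess (assumption)
    for _ in range(max_iter):
        # Step 1: implied asset values and their log returns.
        assets = [implied_assets(e, debt, r, sigma, horizon) for e in equities]
        rets = [math.log(b / a) for a, b in zip(assets, assets[1:])]
        # Step 2: updated estimates of the unknowns.
        new_sigma = stdev(rets) / math.sqrt(dt)
        mu = mean(rets) / dt + 0.5 * new_sigma ** 2
        # Step 3: stop on convergence, otherwise repeat with the update.
        if abs(new_sigma - sigma) < tol:
            return mu, new_sigma, assets
        sigma = new_sigma
    return mu, sigma, assets


# Hypothetical daily equity values, for illustration only.
equities = [100.0 * math.exp(0.01 * math.sin(i)) for i in range(60)]
mu_hat, sigma_hat, assets_hat = kmv_iterate(equities, debt=200.0, r=0.03)
```

Bisection is used for the inversion because the call value is increasing in the asset value, which keeps the sketch free of external solvers.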

The KMV approach uses a fixed maturity of 1 year and sets the default point to the sum of the short-term debt and half of the long-term debt obligations. According to Crosbie and Bohn (Citation2003), the KMV approach suggests that a firm defaults when its asset value falls somewhere between the value of its short-term debt and the value of its total liabilities. Their paper applies the KMV approach to daily company market capitalisation together with quarterly updated debt levels for three banks to obtain the estimates needed. The study concludes that the KMV approach is an improvement in distance-to-default (DTD) estimation, although it has its shortcomings. For instance, the KMV approach depends on the implied asset values and hence cannot be used to obtain unknown parameters in the capital structure of a firm. It also does not provide clear information on statistical estimations based on the Merton (Citation1974) model. Bharath and Shumway (Citation2008) argue that since Merton’s DTD model is not an econometric model, it is not clear how its parameters can be estimated using alternative techniques. Furthermore, it is unclear how standard errors for data forecasts can be calculated for the Merton DTD model, whose application is premised on inaccurate or unrealistic assumptions.
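The default point rule described above (short-term debt plus half of long-term debt) can be written as a short sketch. The function name and the balance sheet figures are hypothetical.

```python
def kmv_default_point(short_term_debt, long_term_debt):
    """Default point: short-term debt plus half of long-term debt."""
    return short_term_debt + 0.5 * long_term_debt


# Hypothetical balance sheet figures, for illustration only.
short_term, long_term = 40.0, 60.0
default_point = kmv_default_point(short_term, long_term)   # 70.0
total_liabilities = short_term + long_term
```

By construction the default point lies between the short-term debt and the total liabilities whenever long-term debt is positive, which matches the range described by Crosbie and Bohn (Citation2003).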

Huang and Huang (Citation2003) believe that there is no consensus from existing credit risk literature on how much observed corporate yield spreads can be explained by credit risk approaches. Their research concludes that using calibrated historical data, it is possible to obtain consistent estimates across credit spreads across all economic considerations within the credit risk structural frameworks. However, it has also been realised that credit risk explains just a small fraction of observed investment bond returns of all maturities together with a smaller fraction of short-term and higher fractions for junk bonds (Duan & Huang, Citation2012). Different structural DP models which generate high credit spreads are good in predicting fairly similar spreads under empirically reasonable choices of parameters, leading to the robustness of conclusions of the results of the paper by Huang and Huang (Citation2003).

Bharath and Shumway (Citation2008) examine the accuracy and contribution of Merton’s (Citation1974) DTD bond pricing model in comparison with a naïve alternative that uses the functional form of the Merton model but does not solve for the implied probability of default (PD). Their research reveals that the naïve predictor performs better in hazard function models and in out-of-sample forecasts than both the Merton DTD model and a reduced-form model based on the same inputs or variables. Fitted values drawn from expanded hazard models outperform Merton DTD default probabilities out of sample. On the other hand, implied default probabilities from credit default swaps (CDS) and corporate bond spreads are said to be weakly correlated with Merton DTD probabilities after adjusting for agency ratings and bond characteristics (Masatoshi & Hiroshi, Citation2009). The Merton DTD model is therefore criticised for not producing a sufficient statistic for the estimation of DPs of banks, although its functional form remains useful for forecasting default events.

9.3. Estimation of DTDs for banking, financial and non-financial firms

Duan and Huang (Citation2012) investigated several empirical applications of the estimation of the DTD of banks. The DTD measures the extent to which a limited-liability corporation is away from a default event. Conceptually, a firm’s asset values evolve according to a stochastic dynamic process where a debt (D) contract is honoured when the value of assets, A, is greater than the promised payment to be settled in the future. Otherwise, the firm defaults on its debt, D, and its debt holders can only recover a partial amount from what is left of the firm. When the current value of a company is much higher than its promised future obligations, the likelihood of the default event is small (Bharath & Shumway, Citation2008; Duan & Huang, Citation2012). This is because the firm has enough buffers to absorb losses in its asset values based on its corporate financial leverage (the debt-to-asset ratio), and the converse also holds. Since asset values move randomly due to external shocks, leverage ratios may not be good enough to fairly and adequately capture the notion of the DTD of a company. Some of the methods proposed in the literature to estimate the unknown model parameters are:

The volatility restriction method by Jones, Mason and Rosenfeld (Citation1984) and Ronn and Verma (Citation1986).

The transformed-data maximum likelihood technique by Duan (Citation1994).

The KMV iterative method described in Crosbie and Bohn (Citation2003).

The market value proxy method used in Brockman and Turtle (Citation2003) and Eom et al. (Citation2004).

The model by Crosbie and Bohn (Citation2003), for instance, uses financial firms to illustrate the shortcomings of the KMV-DP estimation model. The study concludes that financial firms have large proportions of liabilities, for instance the policy obligations of insurance firms, which cannot be accounted for by the KMV approach. The maximum-likelihood models by Duan (Citation1994) are modified by Duan and Huang (Citation2012) to deal with financial firms and are very appropriate and flexible techniques for use in the estimation of DTD. According to Duan and Huang (Citation2012), the CRMs’ application of DTD makes it very unrealistic because its strict applications are at odds with empirical discount rates. Most financial academics argue that the DTD approach is highly informative about defaults but must be applied together with other variables to achieve good bank financial performance. According to Duan (Citation1994), calibration through reduced-form models such as logit and logistic regression analyses should be mandatory in all bank financial practices. However, it is ironic to argue that the DTD as a structural credit risk model (CRM) must be calibrated by a reduced-form model to give rise to good, precise, or accurate results. Based on the Merton (Citation1974) model, it is assumed that firms are financed by equity, E, with its value at time t denoted by $E_t$, and one single pure discount bond (denoted by $D_t$) with a maturity date, T, and principal, F.

The asset value of the firm, $A_t$, is assumed to follow a geometric Brownian motion (GBM) given by the equation:

$$dA_t = \mu A_t\,dt + \sigma A_t\,dW_t$$

where $W_t$ = a standard Brownian motion, $\sigma$ = the volatility of assets, $\mu$ = the drift, and $dW_t$ = the increment of the Wiener process.
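A geometric Brownian motion for the asset value, with drift $\mu$ and volatility $\sigma$, can be simulated with a short sketch. It uses the standard exact log-normal step, $A_{t+\Delta t} = A_t \exp\{(\mu - \sigma^2/2)\Delta t + \sigma\sqrt{\Delta t}\,Z\}$ with $Z$ standard normal. The function name, seed and parameter values are hypothetical.

```python
import math
import random


def simulate_gbm(a0, mu, sigma, horizon, steps, seed=0):
    """Simulate one GBM path of asset values using the exact log-normal step."""
    rng = random.Random(seed)       # seeded for reproducibility
    dt = horizon / steps
    path = [a0]
    for _ in range(steps):
        z = rng.gauss(0.0, 1.0)     # standard normal shock
        growth = (mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z
        path.append(path[-1] * math.exp(growth))
    return path


# Hypothetical parameters: one year of daily steps.
path = simulate_gbm(a0=100.0, mu=0.05, sigma=0.20, horizon=1.0, steps=250)

# With zero volatility the path grows deterministically at rate mu.
riskless = simulate_gbm(a0=100.0, mu=0.05, sigma=0.0, horizon=1.0, steps=10)
```

Because each step multiplies the previous value by an exponential, simulated asset values stay strictly positive, which is the property that makes the log-normal assumption convenient for firms and questionable for banks with capped payoffs.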

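Due to banks’ operations under limited liability, the value of equity, $E_T$, at maturity is given by

$$E_T = \max(A_T - F,\ 0)$$

Hence, at $t \le T$, $E_t$ can be valued through the Black–Scholes OPM to become

$$E_t = A_t N(d_1) - F e^{-r(T-t)} N(d_2)$$

where

$$d_1 = \frac{\ln(A_t/F) + (r + \sigma^2/2)(T-t)}{\sigma\sqrt{T-t}}, \qquad d_2 = d_1 - \sigma\sqrt{T-t}$$

and $A_t$ = the value of the firm’s assets, which takes the place of the stock price, S, in the Black–Scholes formula, r = the instantaneous risk-free rate of return, F = the strike price of a call option, T = the term to maturity, t = time today, and $N(d_1)$ and $N(d_2)$ = the cumulative probabilities of the Z-values, $d_1$ and $d_2$, respectively.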
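The valuation of equity as a call option on the firm's assets can be illustrated with a minimal sketch. It assumes the standard Merton set-up: equity is a Black–Scholes call with the asset value as the underlying and the bond principal F as the strike, debt is the residual (assets minus equity), and the risk-neutral probability of default is $N(-d_2)$. The function name and the inputs are hypothetical.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def merton_claims(assets, face, r, sigma, horizon):
    """Return (equity value, debt value, risk-neutral PD) under Merton."""
    d1 = (math.log(assets / face) + (r + 0.5 * sigma ** 2) * horizon) / (
        sigma * math.sqrt(horizon))
    d2 = d1 - sigma * math.sqrt(horizon)
    equity = assets * norm_cdf(d1) - face * math.exp(-r * horizon) * norm_cdf(d2)
    debt = assets - equity                  # debt holders own the remainder
    pd_risk_neutral = norm_cdf(-d2)         # probability that A_T < F
    return equity, debt, pd_risk_neutral


# Hypothetical inputs, for illustration only.
equity, debt, pd_rn = merton_claims(assets=120.0, face=100.0, r=0.05,
                                    sigma=0.20, horizon=1.0)
```

Here `horizon` stands for the remaining time to maturity, T − t. Equity and debt add up to the asset value by construction, reflecting the assumption that the firm is financed by equity and a single discount bond.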

Due to the nature of diffusion models, $\mu$ cannot be estimated with high precision using high-frequency data over several years. This is true in financial econometrics because $\mu$ is accompanied by a time factor, $\Delta t$, whereas $\sigma$ is accompanied by a time factor of $\sqrt{\Delta t}$, as implied in $dW_t$. The frequency of data sampled is known for being less informative about $\mu$ than $\sigma$ because $\sqrt{\Delta t} \gg \Delta t$ (Duan, Citation1994). When the value of $\Delta t$ is small, we can avoid the use of $\mu$ in DTD estimation, particularly when this measure is used as an input in a reduced-form model that needs to be calibrated. Hence, the need to reduce sampling errors through the use of the alternative DTD formula given by

$$DTD^*_t = \frac{\ln(A_t/F)}{\sigma\sqrt{T-t}}$$

In this respect, $DTD^*_t$ amounts to setting $\mu = \sigma^2/2$ in Equation 4, making $DTD^*_t$ more stable than the traditional DTD.
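The difference between the traditional and the alternative DTD can be checked numerically with a minimal sketch. It assumes the standard Merton form of the traditional measure, $[\ln(A_t/F) + (\mu - \sigma^2/2)(T-t)] / (\sigma\sqrt{T-t})$, and the drift-free alternative $\ln(A_t/F) / (\sigma\sqrt{T-t})$. The function names and inputs are hypothetical.

```python
import math


def dtd_traditional(assets, face, mu, sigma, horizon):
    """Distance-to-default including the drift term."""
    return (math.log(assets / face) + (mu - 0.5 * sigma ** 2) * horizon) / (
        sigma * math.sqrt(horizon))


def dtd_alternative(assets, face, sigma, horizon):
    """Drift-free distance-to-default (no estimate of mu needed)."""
    return math.log(assets / face) / (sigma * math.sqrt(horizon))


# Hypothetical inputs, for illustration only.
assets, face, sigma, horizon = 120.0, 100.0, 0.20, 1.0

dtd_star = dtd_alternative(assets, face, sigma, horizon)
# Setting mu = sigma^2 / 2 removes the drift term, so both measures agree.
dtd_same = dtd_traditional(assets, face, 0.5 * sigma ** 2, sigma, horizon)
# A noisy drift estimate shifts the traditional measure but not DTD*.
dtd_high_mu = dtd_traditional(assets, face, 0.15, sigma, horizon)
dtd_low_mu = dtd_traditional(assets, face, -0.05, sigma, horizon)
```

The last two values show the sensitivity that the alternative formula avoids: the traditional DTD moves with the imprecisely estimated drift, while the drift-free measure depends only on leverage and volatility.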

9.4. Classical models for valuation of banks’ probabilities of default (PDs)

Nagel and Purnanandam (Citation2020) point out that the distress faced by banks during the 2007–08 global financial crisis brought an urgent need for understanding and effective modelling of their PDs. Assessment of default risks for banks is fundamental to investors, risk managers, and regulators when it comes to the measurement of bank performances and failures. In all these applications, investors and risk analysts rely on structural models of default risk where equity (E) and debt (D) values are seen as contingent claims on the assets owned by the firms. For example, Merton’s (Citation1974) standard approach assumes that the value of the assets of a firm follows a log-normal process, so that the options embedded in the firm’s E and D values can be valued using the Black and Scholes (Citation1973) model.

Extensive financial literature employs the Merton (Citation1974) model to price deposit insurance in particular (Marcus & Shaked, Citation1984; Rennacchi, Citation1988; Ronn & Verma, Citation1986). It is argued that Merton’s log-normally distributed asset values may provide a useful approximation for the asset value process of non-financial firms. However, this assumption is very problematic for banks, whose assets include debt claims such as mortgages with limited upside payoffs, contrary to the log-normal distribution, which postulates that the upside is unlimited. The model by Nagel and Purnanandam (Citation2020) uses a capped upside for a bank’s assets. The study applies the log-normal distribution assumption to the assets of the bank’s borrowers, which serve as collateral security.

The bank’s assets are modelled as a pool of zero-coupon bonds whose repayments depend on the value of the borrowers’ collateral assets at loan maturity as postulated in Vasicek (Citation1991).

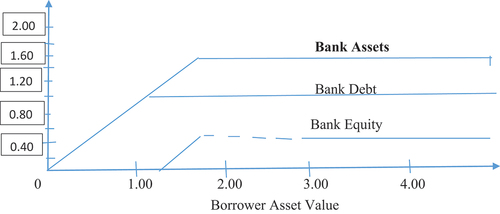

The model by Nagel and Purnanandam (Citation2020) also stipulates that loans issued have variable maturities, which means that every time a fraction of a loan portfolio matures, the bank re-issues the repayment proceeds as a set of new loans at a fixed initial loan-to-value ratio. If a loan becomes delinquent, the pledged collateral is replenished to the extent that the initial loan-to-value ratio is then satisfied. Lastly, according to Nagel and Purnanandam (Citation2020) and Vasicek (Citation1991), the bank’s assets, A, equity, E, and debt, D, are assumed to be contingent claims on the borrowers’ collateral assets. According to the study, all loans issued have face values of 1.60 and the bank’s debt has a face value of 1.20. This implies that the maximum that the bank can recover is the loan value (1.60). The bank’s payoff is capped at this value, and only when the borrower’s asset value falls below 1.60 do the assets of the bank become sensitive to the borrower’s asset values.

Figure 1 shows that the value of the bank’s assets cannot have a log-normal distribution. This is because its borrowers retain the upside of their assets’ values above the face value of the loans. The bank’s equity payoffs do not resemble a call option written on an asset with an unlimited upside, but rather a mezzanine claim comprising two kinks. These kinks have implications for the risk dynamics of the equity of a bank and the estimation of its risk of default (Nagel & Purnanandam, Citation2020). Under a capped upside, the volatility of a bank’s assets will be very low in good economic times such as booms when asset values are high. At maturity, the payoff runs flat to the right, where banks’ equity payoffs are insensitive to variations in the borrowers’ asset values. Merton’s (Citation1974) standard model, in which the equity of a firm is assumed to be a call option on an asset with significant variability, will miss all these non-linear risk dynamics. In times of high asset values, banks may appear to be many asset-volatility units away from default, a conclusion that could be very misleading. This is because it ignores the fact that bank asset volatilities may rise dramatically if the values of its assets fall. Merton’s standard model could end up making misleading predictions about the riskiness of the values of the bank’s assets, debt, and equity.
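The two kinks in the bank's equity payoff can be reproduced with a minimal sketch. It is a single-borrower simplification that ignores loan pooling and staggered maturities, and it uses the face values quoted in the text (loans of 1.60, bank debt of 1.20). The function names are hypothetical.

```python
LOAN_FACE = 1.60    # face value of the loans, as quoted in the text
DEBT_FACE = 1.20    # face value of the bank's debt, as quoted in the text


def bank_asset_payoff(borrower_assets, loan_face=LOAN_FACE):
    """The bank recovers the collateral value, capped at the loan face value."""
    return min(borrower_assets, loan_face)


def bank_equity_payoff(borrower_assets, loan_face=LOAN_FACE,
                       debt_face=DEBT_FACE):
    """Equity receives what is left after the bank's debt is repaid."""
    return max(bank_asset_payoff(borrower_assets, loan_face) - debt_face, 0.0)


# Payoffs over a grid of hypothetical borrower asset values.
grid = [1.0, 1.2, 1.4, 1.6, 2.0, 3.0]
equity_payoffs = [bank_equity_payoff(a) for a in grid]
```

Equity is worthless below 1.20, rises one-for-one between 1.20 and 1.60, and is flat above 1.60. A plain call option on a log-normal asset has only the first of these kinks, which is why it overstates the upside of bank equity.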

9.5. Option pricing models (OPMs), uncertainty and estimation of PDs of banks

Nowadays many phenomena in economics, business, banking, and finance are uncertain or fuzzy but are treated as if they were crisp or precise in all decisions of banking firms and similar financial institutions. However, in practice, the prediction of both business and consumer bankruptcy is imprecise and ambiguous (Korol, Citation2008). The financial performance and PD modelling of banks are affected by both internal and external factors that cannot be precisely and unambiguously defined. The mere allegation that a company is at risk of bankruptcy must itself be considered imprecise. In economic reality, few firms or persons can be considered 100% bankrupt. It is difficult to accurately determine the degree of bankruptcy of a bank using structural statistical methods such as multivariate discriminant analysis (MDA). Fuzziness is defined by Zimmermann (2001) and Zadeh (1980) as a market condition in which returns to financial market investments are not precisely defined as expressed in probability theory but in linguistic terms such as high, average, or low. With the use of fuzzy logic and vague and ambiguous concepts, banks’ risk metrics can be defined as “high” or “low” risk of bankruptcy (Korol, Citation2008; Zimmermann, Citation1980).
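Linguistic risk categories of this kind are usually represented by membership functions that assign each crisp value a degree of membership between 0 and 1. The following minimal sketch maps a probability of default to "low", "medium" and "high" risk using triangular and shoulder-shaped functions. The breakpoints (0.02, 0.10 and 0.25) and the function names are hypothetical and are not taken from the study.

```python
def triangular(x, a, b, c):
    """Membership in a triangular fuzzy set with support (a, c) and peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def risk_memberships(pd):
    """Degrees of membership of a PD in three linguistic risk classes."""
    # Left shoulder: fully "low" up to 0.02, fading out at 0.10.
    low = 1.0 if pd <= 0.02 else max(0.0, (0.10 - pd) / (0.10 - 0.02))
    # Triangle peaking at 0.10.
    medium = triangular(pd, 0.02, 0.10, 0.25)
    # Right shoulder: starts at 0.10, fully "high" from 0.25.
    high = 0.0 if pd <= 0.10 else min(1.0, (pd - 0.10) / (0.25 - 0.10))
    return {"low": low, "medium": medium, "high": high}


safe = risk_memberships(0.01)
borderline = risk_memberships(0.06)
distressed = risk_memberships(0.30)
```

A bank with a PD of 0.06 belongs partly to the "low" and partly to the "medium" class at the same time, which is the sense in which a fuzzy description differs from a crisp bankrupt or not-bankrupt label.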

The precisions on which structural CRMs are based are influenced by deduction and thinking processes that use a non-binary logic with fuzziness. The Merton (Citation1974) and Black and Scholes (Citation1973) models, for instance, do not put fuzziness into consideration when dealing with all the aforementioned financial problems. Banks’ CRMs such as the one by Appadoo and Thavaneswaran (Citation2013) are structural valuation models that involve uncertainty that arises from lack of knowledge, inherent vagueness, or imprecision. Of late there has been growing interest by researchers to use fuzzy numbers to deal with vagueness and imprecision facing investors in financial markets (Appadoo, Citation2006). More researchers such as Yu et al. (Citation2011) and Cherubini (Citation1997) deal with randomness in European OPMs. These models are extended to the case for pricing corporate debt contracts and provide a fuzzified version of the Black and Scholes (Citation1973) model using a variety of input variables. Results realised from the application of their models have improved estimation precision, robustness, and consistency in the pricing of options in fuzzy financial markets.

Ghaziri et al. (Citation2000) use an artificial intelligence approach to the pricing of options using neural networks and fuzzy logic and compare their results to those obtained using the Black–Scholes stock indices. The study concludes that the Black–Scholes OPM is an approximation model which leads to a considerable number of errors. Trenev (Citation2001) came up with a refined option pricing formula and discovered that, because of the fluctuating nature of financial markets over time, some of the parameters of the Black–Scholes model cannot be expected to hold in the precise or exact sense. Thavaneswaran et al. (Citation2007) apply fuzziness to the Black–Scholes OPM and conclude that it is far from being practical. Although the Black–Scholes OPM is flexible and can be applied to the valuation of banks and their risk metrics, it is criticised for being premised on precise variables and assumptions such as frictionless markets.
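One common way to fuzzify the Black–Scholes formula is to treat an input as a triangular fuzzy number and propagate its alpha-cuts through the pricing function. The sketch below does this for volatility only and is not the method of any of the authors cited above. It relies on the fact that the call price is increasing in volatility, so the endpoints of each volatility interval map to the endpoints of the price interval. All names and parameter values are hypothetical.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(spot, strike, r, sigma, horizon):
    """Crisp Black-Scholes price of a European call."""
    d1 = (math.log(spot / strike) + (r + 0.5 * sigma ** 2) * horizon) / (
        sigma * math.sqrt(horizon))
    d2 = d1 - sigma * math.sqrt(horizon)
    return spot * norm_cdf(d1) - strike * math.exp(-r * horizon) * norm_cdf(d2)


def alpha_cut(triangle, alpha):
    """Interval of a triangular fuzzy number (low, peak, high) at level alpha."""
    low, peak, high = triangle
    return low + alpha * (peak - low), high - alpha * (high - peak)


def fuzzy_call_interval(spot, strike, r, sigma_triangle, horizon, alpha):
    """Price interval at membership level alpha for a fuzzy volatility."""
    sigma_lo, sigma_hi = alpha_cut(sigma_triangle, alpha)
    # The call price increases with volatility, so endpoints map to endpoints.
    return (bs_call(spot, strike, r, sigma_lo, horizon),
            bs_call(spot, strike, r, sigma_hi, horizon))


# Hypothetical fuzzy volatility: "about 20%, between 15% and 30%".
SIGMA_TRIANGLE = (0.15, 0.20, 0.30)
support = fuzzy_call_interval(100.0, 100.0, 0.05, SIGMA_TRIANGLE, 1.0, alpha=0.0)
halfway = fuzzy_call_interval(100.0, 100.0, 0.05, SIGMA_TRIANGLE, 1.0, alpha=0.5)
core = fuzzy_call_interval(100.0, 100.0, 0.05, SIGMA_TRIANGLE, 1.0, alpha=1.0)
```

The intervals are nested: the widest range of prices corresponds to alpha = 0 and the interval collapses to the crisp Black–Scholes price at alpha = 1. The same approach extends to other inputs provided the price is monotone in each of them.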

Chang and Huang (Citation2020) apply a fuzzy credit risk assessment model in banks and conclude that financial institutions need to regularly update their assessment models to maintain correct assessment results. They note that every update involves a lot of numerical experiments using multiple systems or software packages for the evaluation of the effects of different sampling techniques and classifiers to construct suitable models for the updated data sets. Chang and Huang (Citation2020) used the latest web-based technology to develop a fuzzy decision support system (DSS) that used logistic regression as the classifier combined with various sampling methods and model threshold settings to come up with a model fitting process that is more structured and efficient. Therefore by fuzzifying the independent variables of the proposed DP model, the study intends to make the new model efficient and accurate. Zhao and Dian (Citation2017) argue that fuzzy credit risk models must be premised on stable conditions and effectiveness to reduce the conservativeness of the imperfect circumstances found in the real markets. On the other hand, Sakai and Kubo (Citation2011) argue that institutional investors attain estimation rigour by assessing credit risk by using a combination of quantitative information, for example, OPMs and qualitative assessments.

Although structural OPMs can be easily constructed, they are mainly suitable for the assessment of long-term credit risk where the probability of bankruptcy varies directly with the timing of the assessment. Sakai and Kubo (Citation2011) propose a new set of assessment models for long-run credit risk that does not use stock prices and incorporates business cycles. Values estimated from these models are effectively usable in the calculation of risk spreads such as DTDs, although rating biases may exist in the credit risk assessment of the markets. Schultz et al. (Citation2017) employ Merton’s PD as a continuous ex-ante measure of the likelihood of a firm’s failure to perform on a loan obligation. They apply a dynamic generalised method of moments (GMM) to characterise the link between corporate governance and the chance of default by a financial institution.

By using the GMM technique, the research overcomes the limitations of discrete proxies used in previous similar studies. Initial test results demonstrate that there is a significant relationship between a bank’s PD and corporate governance measured in terms of executive remuneration, board, and ownership structures. However, when endogenous factors are accounted for in the above model, this relationship ceases to exist. Malyaretz et al. (Citation2018) substantiate the need to incorporate efficiency indicators of bank activity as fuzzy quantities to fairly demonstrate the actual conditions faced by institutional investors in emerging markets. They propose a fuzzy multivariate regulatory analysis model for the assessment of the competitiveness of Ukrainian commercial banks. Their results show that it is expedient to apply the model in determining the competitiveness of the banks drawn into the study.

According to Lee et al. (Citation2005), the Black–Scholes option model developed in 1973 has always been considered the cornerstone of all OPMs. However, its practical applications have always been constrained by its nature of not being suitable for fuzzy financial market environments. Hence, since the planning and decision-making processes of investors in the area of option-pricing are always with a degree of uncertainty, it is pertinent that new structural models for estimation of risk metrics be adjusted to improve their precision and accuracy (Zadeh, Citation1965; Zimmermann, Citation1980). In reality, option pricing methods depend on a person’s deduction and thinking process which employs a non-binary logic with fuzziness which is not considered by classical probabilistic models such as the Black–Scholes model. Lee et al. (Citation2005) adopt a fuzzy decision tree and Bayes’ rule as a base for measuring fuzziness in options’ analysis and pricing. Their study also employs a fuzzy decision space comprising four dimensions, namely fuzzy state, sample information, action, and evaluation function to describe investors’ decisions to derive a fuzzy Black–Scholes OPM under a fuzzy financial environment. The results of the research by Lee et al. (Citation2005) show that the market risk-free rate of return, stock price, and call prices in the money (ITM) and at the money (ATM) are over-estimated while that of the out of the money (OTM) option is under-estimated. It is the flexibility demonstrated by the Merton (Citation1974) model that exploits the flexibility of the Black–Scholes OPM to come up with an asset valuation model (AVM) on which all contemporary structural credit risk models are premised.

9.6. Contemporary credit risk models for estimation of PDs of banks

According to Engelmann and Rauhmeier (Citation2011), the use of internal rating-based approaches (IRBA) of the new Basel Capital Accords is critical as it allows banks to use their rating models for the estimation of PDs provided their systems meet the set minimum requirements. Statistical theory provides a variety of methods for building and assessment of credit rating models. These methods include linear regression modelling, time-discrete techniques, binary response analysis, hazard models, and non-parametric models such as neural networks and decision trees such as the Bayes’ theorem. The benefits and drawbacks of the above models are interpreted in light of the minimum requirements of the IRBA. Engelmann and Rauhmeier (Citation2011) discover that although there are difficulties such as data collection processes and procedures, model building, and the time and effort taken in model validation, IRBA is efficient and maintains its predictive power in the estimation of the risk of default of a bank. In other words, contemporary IRBA must maintain their linearity assumption to be always reliable and valid in their application to the valuation of credit exposures of banks.

Kholat and Gondzarova (Citation2015) compare characteristics and mutual relationships among contemporary CRMs based on the importance of credit risk issues in the global economy and business sector operations. Their study examines Credit-Metrics and Credit-Risk+ models whose parameters are based on differences in computational procedures and model techniques used in the quantification of input parameters. They use variables such as risk definitions and sources, characteristics and data requirements, credit events, returns, and numerical model designs across various CRMs to come up with their models. They realise that effective credit risk models need to be based on default-mode and marking-to-market (MTM) models, a result consistent with that by Gisho and Khestick (Citation2013). Default mode is a technique used in the prediction of bank losses caused by default events arising from failure and non-failure model variables. This is the case for Credit-Risk+ and Moody’s KMV models, which are improvements to the structural risk metric model by Merton (Citation1974), which is itself drawn from the Black and Scholes (Citation1973) OPM.

MTM models on the other hand contain the Credit-Metrics approach and focus on the market values of loans. These models use rating systems (rating migration) to determine changes in the loan quality of a potential borrower. It is noted that default mode models are only measured by changes in the debtors’ assessments which emanate from their failure to honour their loan obligations. The models compare the possible paths of loan default rates under both Credit-Metrics and Credit-Risk+ models (Musankova & Koisisova, Citation2014b). Credit-Metrics and KMV models are based on the traditional Merton (Citation1974) model and hence banks’ assets and their volatilities are central data variables that are needed in the estimation of the risk of default of a bank. On the other hand, the major variables under Credit-Risk+ models are default risk levels and asset volatilities. The paper by Musankova and Koisisova (Citation2014b) discovers that KMV data inputs are a time series of asset values comprising risk liabilities, stock prices, and asset correlations. The characteristics of credit events can also be used to compare CRMs, for example, the creditworthiness of bondholders. In this respect, the KMV determines a credit event of a bank as a change in the distance-to-default (DTD) which leads to changes in the expected default frequency (EDF; Gavlakova & Kliestik, Citation2014). The Credit-Metrics model on the other hand characterises credit events as states in which there are migrations of default events from one grade to another. Empirical evidence suggests that EDF values respond to changes in the credit quality of borrowers faster than changes in their rating classifications.

Therefore, we can conclude that credit events are more prevalent in the KMV than in Credit-Metrics models and hence the need to adopt the former for its ability to estimate prevalent credit events. Under Credit-Risk+, credit events are based on default-state because they are unique. Default mode models and changes in default rates may imply decreases in the credit quality of a borrower. The estimation of the DP of a bank and the distribution function of the default probability of a credit event in the Credit-Metrics approach is represented by the default probability modelled based on its annual historical data (Kliestik et al., Citation2015). Under the KMV approach, the expected frequency of failure changes in response to variabilities in market values of assets and their standard deviations. On the other hand, their paper determines that under Credit-Risk+, default probability is represented by a measure of the risk of default (Gavlakova & Kliestik, Citation2014). The recovery rates under default events are exogenous parameters for each sub-portfolio of loans used in the Credit-Risk+ approach. However, under the Credit-Metrics approach, these recovery rates are captured as random variables with beta distributions and modelled using the Monte Carlo Simulation approach. The simple KMV considers recovery rates to be constant model parameters, while the KMV model assumes that these rates follow a beta data distribution. Hence, it is based on gaps in contemporary structural credit risk models presented above that this study proposes a KMV-DP estimation model extended for market friction to make it reflective of the practical conditions faced by investors and banks in fuzzy financial markets.

9.7. Market friction and variables of the proposed KMV default probability model

This subsection intends to provide the link between the literature detailed above and the proposed KMV risk of default model’s variables which are market values and volatilities of banks and return on equity (ROE) and market friction.

9.7.1. The concepts of market efficiency and friction

Calin (Citation2016) defines market efficiency as the extent to which the price of an asset or stock fully reflects all information available and hence this makes financial markets frictionless. However, economists disagree on how efficient markets are attained and frictionless. Followers of the efficient market hypothesis by Fama (1952) hold that markets efficiently deal with all information on a given security and reflect it in the stock price immediately. Therefore, technical and fundamental analysis methods of stock pricing and/or any speculative investing based on these methods are useless. A frictionless market can be defined as a theoretical trading environment where all costs and constraints associated with transactions are non-existent (Downey, Citation2019). However, the primary observation of behavioral economics, for instance, holds that investors make decisions on imprecise impressions and beliefs, rather than rational analysis. This, therefore, renders financial markets somewhat inefficient to the extent that they are affected by the decisions of investors and people in general.

argue that markets are frictionless when efficiency in capital markets requires that capital flows are sufficient to eliminate arbitrage anomalies. The authors examine the relationship between capital flows to a quantitative (quant) strategy. This is a strategy based on capital market anomalies and the subsequent performance of the financial institutions after the implementation of this strategy. When capital flows are high, holders of quantitative funds can implement arbitrage strategies more effectively, leading to lower profitability of market anomalies in the future, and the converse is also true. This means that the degree of cross-sectional equity market efficiency varies across time factors with the availability of arbitrage capital. Frictionless markets are used in theory to support investment research or financial trading concepts. In speculative investments, many financial performance returns will assume frictionless financial market costs.

Investors must view both friction and frictionless analyses for a realistic understanding of a security’s return on the market of operation. Pricing models such as Black and Scholes (Citation1973) and other methodologies also make frictionless market assumptions which are important to consider since actual transaction costs will be associated with real-world financial applications. In economic theory, a frictionless financial market is defined as a market without transaction costs to be faced by investors (Downey, Citation2019). Friction on the other hand is a type of market incompleteness. Every complete market is frictionless, but the converse does not hold. In a frictionless market, the solvency cone is the half-space normal to the unique price vector. The Black–Scholes OPM is based on the assumption of a frictionless market that is a market where investors incur no transaction costs in all their banking and investment endeavours (Durbin, 2002).

De-Young (Citation2007) defines market frictions as all transaction costs, taxes and regulations, asset indivisibility, nontraded assets, and agency and information problems that negatively impact the performance of banks and other players. These costs are not provided for in contemporary CRMs making all asset, equity, and risk metric estimates from banks’ financial data to be inaccurate and not true reflections of their actual financial performances. These costs facing banks are made very high when additional hazards such as regulatory and supervision and corporate governance and ethical challenges are factored into the estimation of risk metrics. Fuchs and Uy (Citation2010) note that a lack of technology and innovations bring huge barriers to further outreach and development, including high transaction costs and lack of access to long-term sources of finance.

Although structural models assume that markets are frictionless, in reality, financial markets are characterised by friction as evidenced by changing asset standard deviations and returns to investments. It is observed that the costs of making financial transactions are very high and not constant, contrary to what is purported by popular CRMs such as those by Merton (Citation1974) and Black and Scholes (Citation1973). De-Young (Citation2007) defines market friction as anything that interferes with investors’ trading and notes that it can exist even in efficient markets. The same study argues further that financial market frictions generate business opportunities and costs to investors and change over time.

9.7.2. Relationship among variables of the proposed KMV-DP model

Traditional and asset value volatility movements must play an indispensable role in the determination of the likelihood of default events (Mason & Rosenfeld, Citation1984). This is because the same level of the buffer may not be sufficient to withstand potential losses when the value of a firm’s assets is highly volatile. Under good economic times, a good DTD value must be a leverage ratio adjusted for the trend and a standard deviation of the value of a firm’s assets. Duan and Huang (Citation2012) introduced the Merton model to define the DTD of banks above. The DTD is an appropriate concept used in the estimation of default risk. However, it is challenged because it is computed only when we know the market values of assets and parameters governing both trend and asset volatilities. The asset values and volatilities are not observable when used under the standard Merton (Citation1974) model. In the absence of a time series of observed asset values, it is complex to estimate model parameters that define both trends and movements in standard deviations of assets.

Risk of default or DP is the likelihood that a bank will fail to perform on its interest and principal obligations when they fall due. Default risk occurs when the market values of banks’ assets lose value in the financial markets (Sakai & Kubo, Citation2011). In other words, when assets default, a bank must reduce the values of such assets on its books, following its laid down accounting standards and rules, and decrease its capital values and earnings. DP is a very important risk metric that must be efficiently and effectively estimated to improve precision and robustness in the assessment of bank financial performance (Engelmann & Rauhmeier, Citation2011). Effective DP estimation can also enable banks to come up with critical policies and strategies for reducing the quantity of non-performing loans (NPLs) and improving financial performance. In the study, the risk of default is the dependent variable, and market friction (cost of equity), bank liabilities, asset values and their volatilities, time to maturity, and return on equity are the independent variables. The discussion below details all the independent variables of the proposed model, and how they are estimated from the raw data before they are fuzzified using contemporary models presented in Sections 3 and 4.

10. Market values of banks and their volatilities

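Using Ito’s Lemma, we can demonstrate that the values of equity and assets of a bank and their volatilities can be connected through the general formula:

$$\sigma_E V_E = \frac{\partial V_E}{\partial V_A}\,\sigma_A V_A \qquad (6)$$

where $V_E$ and $\sigma_E$ are the market value and volatility of equity, and $V_A$ and $\sigma_A$ are the market value and volatility of assets. To be able to solve for the unobservable variables of the equation, namely $V_A$ and $\sigma_A$, we should be able to solve a system of non-linear equations. The famous Merton (Citation1974) PD model as applied by Garcia et al. (Citation2021) gives rise to two simultaneous equations, (7) and (8), with two unknowns which are $V_A$ and $\sigma_A$:

$$V_E = V_A N(d_1) - D e^{-r(T - t)} N(d_2) \qquad (7)$$

where $D$ is the value of debt, $r$ is the risk-free rate of return, $N(\cdot)$ is the standard normal distribution function, $d_1 = \dfrac{\ln(V_A/D) + (r + \sigma_A^2/2)(T - t)}{\sigma_A\sqrt{T - t}}$ and $d_2 = d_1 - \sigma_A\sqrt{T - t}$.

The volatilities of equity and assets of a banking firm are connected through the equation:

$$\sigma_E = \frac{V_A}{V_E}\,N(d_1)\,\sigma_A \qquad (8)$$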

Asset volatility measures how widely the value of a company’s assets swings around its mean market price; that is, it is a statistical measure of the dispersion of the returns to a bank. The values of a bank’s assets and their volatilities are unknowns to be estimated from the financial fundamentals of the bank under structural Equations (7) and (8).

11. Estimation of the liabilities of a bank

The basic accounting equation used in the estimation of a bank’s liabilities is Assets (A) = Liabilities (L) + Owner’s equity (S), such that Liabilities, L = A − S. In the model, a bank’s Total assets = Non-current assets + Current assets, Liabilities = Long-term liabilities + Current liabilities, and Owner’s equity = Number of shares issued × Market price per share. The current liabilities of a bank include all current payments on long-term loans such as mortgages and deposits by its clients.

12. Return on equity and its estimation

Return on equity (ROE) is defined as the company’s average residual or net income per share in issue. According to Burns et al. (Citation2008), the ROE is a better measure of bank profitability than the risk-free rate of return, which is just a nominal and not an effective measure of bank performance. Most structural models of default risk are pinned on the risk-free rate of return, which is far from fairly reflecting the actual performance of a bank. Hence, the study employed the banks’ ROEs as these fairly represented a true reflection of their actual position from all their trading and investment activities over a given time frame. ROEs are strong proxies for the risk-free rates of return because they are unique and directly related to the individual bank’s issued shares and market financial performances. The banks’ ROEs are calculated using the traditional formula,

$$ROE = \frac{\text{Net income}}{\text{Shareholders' equity}}$$

Banks’ ROEs, like all other variables of the risk of default model, are converted into fuzzy values because they are also influenced by experts’ perceptions.

13. Estimation of the cost of equity

One of the major contributors to the risk of default in banks is the cost of equity, which is not factored into most contemporary structural models (Besley et al., Citation2020). Cost of equity can be defined as the return that a company requires on a project or equity investment, or the return it pays out to ordinary shareholders as dividends. According to Zabai (Citation2019) in conjunction with Madan and Unal (Citation1996), banks must manage their default risks through efficient, effective and prudential management of their costs of equity. The study, therefore, incorporates market friction in the form of the cost of equity in the proposed KMV risk of default model to represent costs incurred by banks in constituting their capital bases. We used Myron Gordon’s constant growth model to estimate the bank’s cost of equity or the required rate of return (RRR). The traditional RRR or cost of equity is calculated using Gordon’s constant growth formula,

$$RRR = \frac{D_1}{P_0} + g$$

where $D_1$ = the dividend per share expected at the end of the first period, $P_0$ = the market price per share today, and $g$ = the constant growth rate in earnings and dividends.
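As a minimal sketch of the Gordon constant growth calculation described above, the snippet below computes the required rate of return as the expected dividend yield plus the constant growth rate. The function name and the input figures are hypothetical and are chosen only for illustration; they are not drawn from the study's data.

```python
def gordon_cost_of_equity(next_dividend: float, price_today: float, growth: float) -> float:
    """Required rate of return under Gordon's constant growth model.

    next_dividend: dividend per share expected at the end of the first period
    price_today:   market price per share today
    growth:        constant growth rate in earnings and dividends
    """
    if price_today <= 0:
        raise ValueError("price_today must be positive")
    return next_dividend / price_today + growth


# Hypothetical inputs: a dividend of 2.00 on a share priced at 40.00, growing at 3%.
rrr = gordon_cost_of_equity(next_dividend=2.0, price_today=40.0, growth=0.03)
print(f"Cost of equity: {rrr:.2%}")
```

A higher cost of equity from this calculation would, under the paper's framing, represent greater market friction faced by the bank.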

14. Research methodology

We proceed by first illustrating the fuzzy logic, concepts, and approach to the estimation of risk metrics of banks before presenting the proposed KMV risk of default model, assumptions, sources of data and input variables, and their justification.

14.1. Fuzzy approach to estimation of the risk of default of a bank

This section commences by presenting the basic fuzzy concepts of fuzzy sets and systems before proposing the model to be used in calculating the risk of default of banks.

14.1.1. Fuzzy concepts and their definitions

The following are the basic definitions and properties of fuzzy set theory, systems, and numbers with their relevant operations.

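Definition 3.1 A fuzzy set $A$ in $X \subseteq \mathbb{R}$ (real numbers) is a set of ordered pairs $A = \{(x, \mu_A(x)) : x \in X\}$, where $\mu_A(x)$ is the membership function, grade of membership, degree of compatibility, or truth of $x$ in $A$ (Chen & Pham, Citation2001).

Definition 3.2 A fuzzy set $A$ in $\mathbb{R}^n$ is said to be a convex fuzzy set if its $\alpha$-cuts $A_\alpha$ are (crisp) convex sets for all $\alpha \in (0, 1]$.

Definition 3.3 A fuzzy number, $u = (a^-, a, a^+)$, is specified by its core $a$ with support in $[a^-, a^+]$, and its membership function is defined as:

$$\mu_u(x) = \begin{cases} L(x), & a^- \le x \le a \\ R(x), & a \le x \le a^+ \\ 0, & \text{otherwise} \end{cases}$$

where $L(x)$ is an increasing function with $L(a^-) = 0$, $L(a) = 1$ and $R(x)$ is a decreasing function with $R(a) = 1$, $R(a^+) = 0$. Functions $L(\cdot)$ and $R(\cdot)$ are the left and right shape functions of $u$, and they are assumed to be differentiable (Chen & Pham, Citation2001).

Definition 3.4 For $\alpha \in (0, 1]$, the $\alpha$-cuts of $u$ are defined to be the compact intervals $[u]_\alpha = [u_\alpha^-, u_\alpha^+]$, which are “nested” closed intervals (Wang & Elhag, Citation2006).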
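The definitions above can be illustrated with a short sketch. It assumes the simplest case of a fuzzy number, a triangular one, whose left and right shape functions are linear; the class name, field names, and example values are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriangularFuzzyNumber:
    """Fuzzy number with linear left/right shape functions (an assumed special case)."""
    lower: float  # left end of the support
    core: float   # point with full membership
    upper: float  # right end of the support

    def __post_init__(self):
        if not (self.lower <= self.core <= self.upper):
            raise ValueError("require lower <= core <= upper")

    def membership(self, x: float) -> float:
        """Grade of membership of x, between 0 and 1."""
        if x == self.core:
            return 1.0
        if self.lower < x < self.core:   # increasing left shape function
            return (x - self.lower) / (self.core - self.lower)
        if self.core < x < self.upper:   # decreasing right shape function
            return (self.upper - x) / (self.upper - self.core)
        return 0.0

    def alpha_cut(self, alpha: float) -> tuple:
        """Closed interval of all x whose membership is at least alpha."""
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must lie in (0, 1]")
        left = self.lower + alpha * (self.core - self.lower)
        right = self.upper - alpha * (self.upper - self.core)
        return (left, right)


u = TriangularFuzzyNumber(lower=1.0, core=2.0, upper=4.0)
print(u.membership(1.5))   # grade of membership on the left branch
print(u.alpha_cut(0.5))    # interval at level 0.5
print(u.alpha_cut(0.9))    # a higher level gives a narrower, nested interval
```

Raising the level narrows the interval toward the core, which is the nesting property stated in Definition 3.4.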

14.1.2. Fuzzy approach systems and their applications

Fuzzy approach systems have been used not only in a variety of practical problems but also in regulatory risk and credit analysis, as well as for the evaluation of bankruptcy and default prediction. The fuzzy approach combines easy designs based on both experts’ opinions and data history in the estimation of the risk of default of a bank, leaving out market friction. Fuzzy logic arises as a good tool for emulating expert rules since it does not require as much effort to model risk metrics as other traditional methods do (Chen & Pham, Citation2001). A fuzzy system can emulate rules of the common type: IF (Conditions) THEN (Consequences), where conditions and consequences are fuzzy propositions built by linguistic expressions or semantics:

(1) x is Low

(2) y is Not High

(3) x is Low AND y is High

(4) x is Low OR y is High.

Propositions 1 and 2 (expressions) define immediate propositions, while propositions 3 and 4 define combined propositions (Soares et al., Citation2013). Since these terms operate over fuzzy variables, we need to define them in linguistic terms or fuzzy sets, as covered or defined in Section 3.0. Fuzzy expressions to do with semantics such as NOT, OR, and AND, which are combined to form relations, R are detailed in Section 4.
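To make the four propositions concrete, the sketch below evaluates them for two crisp inputs. It assumes the common Zadeh operators (minimum for AND, maximum for OR, complement for NOT) and two hypothetical linear membership functions for "Low" and "High" on a scale from 0 to 1; the paper's own operators are those detailed in its Section 4 and may differ.

```python
def clamp(value: float) -> float:
    """Restrict a value to the unit interval [0, 1]."""
    return max(0.0, min(1.0, value))


def low(x: float) -> float:
    """Hypothetical membership of x in 'Low' (1 at x = 0, falling to 0 at x = 1)."""
    return clamp(1.0 - x)


def high(x: float) -> float:
    """Hypothetical membership of x in 'High' (0 at x = 0, rising to 1 at x = 1)."""
    return clamp(x)


def fuzzy_not(m: float) -> float:
    return 1.0 - m          # standard complement


def fuzzy_and(m1: float, m2: float) -> float:
    return min(m1, m2)      # minimum t-norm


def fuzzy_or(m1: float, m2: float) -> float:
    return max(m1, m2)      # maximum t-conorm


x, y = 0.25, 0.75  # crisp inputs on an assumed 0-1 scale

p1 = low(x)                         # x is Low
p2 = fuzzy_not(high(y))             # y is Not High
p3 = fuzzy_and(low(x), high(y))     # x is Low AND y is High
p4 = fuzzy_or(low(x), high(y))      # x is Low OR y is High

print(p1, p2, p3, p4)
```

Each result is a degree of truth between 0 and 1 rather than a binary value, which is what allows an IF-THEN rule to fire partially.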

14.2. Proposed KMV-risk of default model for commercial banks

The study proposes a risk of default model that incorporates the variables of the distance-to-capital (DTC) model but is based on the DTD valuation model. The DTC takes precedence over the DTD because the latter model does not involve complexities that are related to the firm’s numerical fundamentals. According to Larsen and Mange (2008), a firm’s DTC, at the time, t can be computed from the general formula:

where (t) = The market value of the bank’s assets at the time, t, X(t) = The market value of debt, r = The risk-free rate of return, T = The term to maturity of assets or debt,

, where PCAR = The capital requirements of a bank according to the Capital I Accord set at 8% of the risk-weighted assets of a bank. It should however be understood that for the calculation of the DTD we take

0 and PCAR = 0. The conversion of the Merton–KMV–DTD model to a risk of default model calls for deducting transaction costs from the risk-free rate of return obtained in the economy. Therefore, the proposed KMV model for the valuation of the risk of default of banks drawn from the above equations is given by:

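The study uses the return on equity (ROE) instead of the risk-free rate of return because it is more characteristic of the fair returns to banks than the latter. Following the Merton (Citation1974) model, we can show that the default probability of a bank at time $T$, evaluated at time $t$, is given by

$$DP_t = N(-DTD_t)$$

where $N(\cdot)$ is the standard normal distribution function and the DTD at time $t$ is given by

$$DTD_t = \frac{\ln\left(V_A(t)/D\right) + \left(\mu - \sigma_A^2/2\right)(T - t)}{\sigma_A\sqrt{T - t}}$$

where $V_A(t)$ = the market value of the bank’s assets, $D$ = the value of debt, $\sigma_A$ = the asset volatility, and $\mu$ = the expected market rate of return.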

Since the standard normal distribution function, $N(0, 1)$, is universal, the sole factor that determines the DP of a bank is the DTD. The DTD is simply the logarithm of the leverage ratio shifted by the expected return, $(\mu - \sigma_A^2/2)(T - t)$, and scaled by the volatility-time term, $\sigma_A\sqrt{T - t}$. If we have two firms with identical leverage ratios and asset standard deviations but the asset value of one firm is expected to increase at a faster rate than that of the other, it means that results will depend on the sign attached to the numerator (Dua et al., Citation2012). When the numerator is positive, the assets of the firm will cover default and lower the DP, making the firm less likely to default, and vice versa. The implication is that when economic agents are risk-neutral, the expected investment return will be $r$, that is, $\mu$ should be replaced by $r$. In theory, DP is not physically experienced but DTD is, hence the need for suitable estimates for both the expected return and $\sigma_A$.
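The relationship between the DTD and the default probability can be sketched numerically. The snippet assumes the standard Merton-type form, in which the DTD is the log leverage ratio plus the drift term, divided by the volatility-time term, and the default probability is the standard normal distribution function evaluated at minus the DTD. The function names and all input figures are hypothetical.

```python
import math


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def distance_to_default(assets: float, debt: float, mu: float,
                        sigma: float, horizon: float) -> float:
    """Log leverage ratio shifted by expected return and scaled by volatility."""
    drift = (mu - 0.5 * sigma ** 2) * horizon
    return (math.log(assets / debt) + drift) / (sigma * math.sqrt(horizon))


def default_probability(dtd: float) -> float:
    """Probability that assets fall below debt at the horizon."""
    return norm_cdf(-dtd)


# Two hypothetical firms with identical leverage and volatility but different
# expected asset growth rates.
dtd_slow = distance_to_default(assets=120.0, debt=100.0, mu=0.02, sigma=0.25, horizon=1.0)
dtd_fast = distance_to_default(assets=120.0, debt=100.0, mu=0.08, sigma=0.25, horizon=1.0)

pd_slow = default_probability(dtd_slow)
pd_fast = default_probability(dtd_fast)

print(f"slow growth: DTD = {dtd_slow:.4f}, DP = {pd_slow:.4f}")
print(f"fast growth: DTD = {dtd_fast:.4f}, DP = {pd_fast:.4f}")
```

The firm whose assets are expected to grow faster has the larger DTD and therefore the smaller default probability, consistent with the comparison of two otherwise identical firms made above.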

Two models adopted for comparison with the proposed KMV model are the traditional structural PD and hazard function models whose formulae are outlined below:

The structural probability of default (PD) model for comparison with the proposed model (Equation 13) is given by the formula:

The Cox (Citation1972) proportional hazard function model discussed in Section 2.1 of the literature is based on two major assumptions: (1) survival data curves for different strata should have hazard functions that are proportional over the time frame, t, and (2) the logarithmic hazard and each covariate are linearly related, which can be verified with residual data plots. It has been shown that when the hazard ratio is >1.00, the treatment group would have a shorter survival span than the control-referenced group (Sawadogo, Citation2018). On the other hand, if this ratio is <1.00, it implies that the group of interest is less likely to have a shorter time to the event than the reference group. The ratio is however criticised for not being able to quantify the magnitude of the difference between the two groups. While the logistic regression model tests whether a risk factor affects the odds of a disease or not, the Cox (Citation1972) proportional hazards model tests whether a risk factor affects the age of onset of the disease in humans. Any hazard ratio above 1.00 would generally mean that the treatment group healed faster or had a shorter time to an event. A hazard ratio of 1.00 means that both groups (control and treatment) would be experiencing an equal number of events at any given point in time (Mendes, Citation2014).

According to Sawadogo (Citation2018), the Cox (Citation1972) semi-parametric model is a regression approach for human survival data that provides an estimate of the hazard ratio and its corresponding confidence interval, which is an area of interest to credit risk modelling under frictional and fuzzy financial markets. The study uses the Cox (Citation1972) proportional hazards regression model, which fits data with a constant covariate $x$, that is, data that do not vary over time, to a hazard function of the general form given by

$$h(t) = h_0(t)\exp(\beta_1 x)$$

where we can estimate the unknown value of $\beta_1$, and $h_0(t)$ is the baseline hazard, which is a non-parametric and unspecified value that depends on the variable $t$ and not on $x$. Therefore, for particular $x$ values, we will be able to estimate the survival function if we have an estimate of the baseline survival function, $\hat{S}_0(t)$ (Boland et al., Citation2016). The estimated survival function for an individual human with covariate value $x_k$ turns out to be given by

$$\hat{S}(t; x_k) = \left[\hat{S}_0(t)\right]^{\exp(\hat{\beta}_1 x_k)}$$

The study adopts the form of the hazard model above to determine the hazard rates of banks when it comes to their failure to meet their debt obligations. Cox’s (Citation1972) model is used in the study mainly for comparing results with those drawn from the structural and proposed KMV risk of default models. It should however be noted that there are usually magnified differences between hazard and credit risk model ratios because risk ratios do not take into account the timing of the event but are only concerned with whether the event has occurred by the end of the observation period.
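A minimal numerical sketch of the proportional hazards form follows. It assumes a single time-constant covariate, a hazard equal to the baseline hazard multiplied by the exponential of the coefficient times the covariate, and a survival function equal to the baseline survival raised to that same exponential factor. The coefficient, baseline values, and function names are hypothetical and are not estimates from the study.

```python
import math


def hazard(baseline_hazard: float, beta: float, x: float) -> float:
    """Hazard at a given time for covariate x under proportional hazards."""
    return baseline_hazard * math.exp(beta * x)


def survival(baseline_survival: float, beta: float, x: float) -> float:
    """Survival probability for covariate x from the baseline survival at the same time."""
    return baseline_survival ** math.exp(beta * x)


def hazard_ratio(beta: float, x_a: float, x_b: float) -> float:
    """Ratio of hazards between covariate values x_a and x_b (constant over time)."""
    return math.exp(beta * (x_a - x_b))


beta_1 = math.log(2.0)          # hypothetical coefficient: one unit of x doubles the hazard
ratio = hazard_ratio(beta_1, x_a=1.0, x_b=0.0)
s_reference = survival(baseline_survival=0.90, beta=beta_1, x=0.0)
s_exposed = survival(baseline_survival=0.90, beta=beta_1, x=1.0)

print(f"hazard ratio = {ratio:.2f}")
print(f"survival (x = 0) = {s_reference:.4f}, survival (x = 1) = {s_exposed:.4f}")
```

With a hazard ratio above 1.00, the group with the higher covariate value has the lower survival probability at every point in time, which matches the interpretation of the ratio given earlier.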

14.2.1. Sources of data

The study used audited and published financial data of eight banks conveniently drawn from six Southern African countries. The countries from which the banks are drawn are South Africa, Namibia, Botswana, Malawi, Tanzania, and Zambia. The audited financial statements of the banks cover the period 2008–2020 and are from the World Development Index (WDI, 2020). This is a credible source of data and researchers can easily download data directly from the website for research purposes. Although the available financial data are drawn from various economies of Southern Africa, the research concentrates on financial data of banks listed on Stock Exchanges of the same countries. Panel data are popular for their ability to reduce the co-linearity among explanatory variables, and hence improve the efficiency of econometric estimates. The study employs unbalanced panel data that have been checked and screened for apparent coding errors and missing variables. It is the interest of the research to conveniently draw various countries from the region in the quest to compare and contrast the performances of banks in large and small countries such as South Africa and Mauritius, respectively.

14.2.2. Input variables for the proposed model and their justification

The data variables for the estimation of the risk of default for a bank are the market values of its assets (A) and debt (D), asset volatility, the return on equity (ROE), market friction in the form of the cost of equity, and the time to maturity (T).

Extending existing CRMs such as the KMV DTD-PD model for market friction enables investors to improve the accuracy of their estimates of banks’ PDs. According to the KMV-DTD model, the equity of a firm is perceived as a call option on its underlying assets because, at the maturity of debt, bondholders receive their debts and equity holders realize the rest. The model operates only if we are given observable equity and unobserved asset values and their corresponding volatilities. Under the Black–Scholes OPM analogy, the value of equity is represented by the price of a call option (C), the value of assets by the value of the stock (S), and debt (D) is taken as the strike price (K).

14.2.3. Assumptions of the KMV risk of default model

The KMV model is founded on the following assumptions:

Debt is homogeneous with time to maturity, T;

The capital structure of a firm is given by the general equation

$$A(t) = D(t) + E(t),$$

where A(t) = the value of assets, D(t) = the value of debt and E(t) = the value of equity;

The markets are perfect: coupons and dividends are ignored and investors incur no penalties for short selling; and

The assets of a bank follow a geometric Brownian motion, as given earlier in Equation (1).

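Under the Black–Scholes OPM, the market price of a European call option is given by

$$C(t) = S(t)\,N(d_1) - K e^{-r(T-t)}\,N(d_2),$$

where S(t) = the stock price, r = the risk-free rate, K = the strike price of a call option, T = term to maturity of the bank’s assets and liabilities, t = time today, and $N(d_1)$ and $N(d_2)$ = the cumulative probabilities of the Z-values

$$d_1 = \frac{\ln\left(S(t)/K\right) + \left(r + \tfrac{1}{2}\sigma^2\right)(T-t)}{\sigma\sqrt{T-t}}$$

and

$$d_2 = d_1 - \sigma\sqrt{T-t},$$

respectively, with $\sigma$ the volatility of the stock.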
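The call price formula can be checked numerically. The following is a minimal Python sketch, assuming the standard Black–Scholes form with a constant volatility `sigma` and time to maturity `tau = T - t`; the function names and the input values are illustrative and are not taken from the study.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function N(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(S: float, K: float, r: float, sigma: float, tau: float) -> float:
    """European call price; tau = T - t is the time to maturity in years."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * tau) / (sigma * math.sqrt(tau))
    d2 = d1 - sigma * math.sqrt(tau)
    return S * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


# Illustrative inputs only (hypothetical, not the study's data).
price = bs_call(S=100.0, K=100.0, r=0.05, sigma=0.2, tau=1.0)
print(round(price, 4))
```

In the KMV setting the same function would be called with the asset value in place of `S` and the debt in place of `K`.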

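Using Ito’s Lemma, we have demonstrated that the values of equity and assets of a bank and their volatilities are connected through the general formula in Equation (6). To solve Equation (6) for the unobservable variables, namely $A$ and $\sigma_A$, we must solve the system of non-linear equations

$$\begin{aligned} f_1(A,\sigma_A) &= A\,N(d_1) - D e^{-r(T-t)}\,N(d_2) - E = 0,\\ f_2(A,\sigma_A) &= \frac{A}{E}\,N(d_1)\,\sigma_A - \sigma_E = 0, \end{aligned} \tag{13}$$

where $d_1$ and $d_2$ are the Black–Scholes Z-values evaluated with $A$, $D$ and $\sigma_A$ in place of the stock price, strike price and stock volatility, and $E$ and $\sigma_E$ are the observed value and volatility of equity. The solutions to system (13) are unique because

$$\frac{\partial f_1}{\partial A} = N(d_1) > 0,$$

which is analogous to the delta of a call option in the Black–Scholes model, so that $f_1$ is an increasing function of the value of assets of a bank and $f_1(A,\sigma_A) = 0$ has a unique solution. On the other hand, $f_2(A,\sigma_A) = 0$ has a unique solution as well.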
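The system linking equity and asset values can be solved numerically. The sketch below is one possible approach and not necessarily the procedure used in the study. It assumes the standard Merton-type form, in which equity equals the Black–Scholes call value on the assets and $\sigma_E E = N(d_1)\,\sigma_A A$, and it assumes a non-negative risk-free rate so that the bisection bracket is valid. It alternates a bisection on the asset value, which works because the equity value is increasing in the assets, with an update of the asset volatility. All names and numbers are hypothetical.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function N(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def d1_d2(A, sigma_A, D, r, tau):
    """Black-Scholes Z-values with assets A as underlying and debt D as strike."""
    d1 = (math.log(A / D) + (r + 0.5 * sigma_A ** 2) * tau) / (sigma_A * math.sqrt(tau))
    return d1, d1 - sigma_A * math.sqrt(tau)


def equity_value(A, sigma_A, D, r, tau):
    """Equity as a call option on the assets."""
    d1, d2 = d1_d2(A, sigma_A, D, r, tau)
    return A * norm_cdf(d1) - D * math.exp(-r * tau) * norm_cdf(d2)


def solve_assets(E, sigma_E, D, r, tau, tol=1e-10, max_iter=500):
    """Return (A, sigma_A) matching observed equity value E and volatility sigma_E."""
    sigma_A = sigma_E * E / (E + D)  # simple starting guess (an assumption)
    for _ in range(max_iter):
        # Step 1: bisection on A in [E, E + D]; valid for r >= 0 because the
        # call value is at most A and at least A - D * exp(-r * tau).
        lo, hi = E, E + D
        for _ in range(200):
            mid = 0.5 * (lo + hi)
            if equity_value(mid, sigma_A, D, r, tau) < E:
                lo = mid
            else:
                hi = mid
        A = 0.5 * (lo + hi)
        # Step 2: update sigma_A from sigma_E * E = N(d1) * sigma_A * A.
        d1, _ = d1_d2(A, sigma_A, D, r, tau)
        new_sigma = sigma_E * E / (A * norm_cdf(d1))
        if abs(new_sigma - sigma_A) < tol:
            return A, new_sigma
        sigma_A = new_sigma
    raise RuntimeError("iteration did not converge")


# Round-trip check on hypothetical numbers: start from known A and sigma_A,
# compute the implied E and sigma_E, then recover the asset-side quantities.
A0, s0, D0, r0, tau0 = 100.0, 0.2, 80.0, 0.05, 1.0
E0 = equity_value(A0, s0, D0, r0, tau0)
sE0 = A0 * norm_cdf(d1_d2(A0, s0, D0, r0, tau0)[0]) * s0 / E0
A_hat, s_hat = solve_assets(E0, sE0, D0, r0, tau0)
print(A_hat, s_hat)
```

The alternating scheme is chosen here only because it needs no external solver; a general-purpose root finder applied to both equations at once would serve equally well.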

14.3. The structure of a fuzzy system

According to Soares et al. (2013), a fuzzy system is usually characterized by the following components:

Input variables (made up of their respective fuzzy data sets)

Output variables (these are the diagnostic values)

Rule base (this determines the output for each combination of input values)

Inference machine (which applies fuzzy operations to transform crisp variables into fuzzy variables)

Fuzzy sets (these are linguistic terms for each input variable drawn into the model)

Crisp values (these are numerical values taken from the real financial world)

The four model variables, namely expected return on equity, cost of equity, asset values, and volatilities, are fuzzified through the architecture of fuzzy systems given below, developed by Ross et al. (2010).

14.4. Concepts used in the architecture of fuzzy systems

The principal components of fuzzy logic control systems are the fuzzifier, the rule base and its evaluation, the aggregation of the rule outputs, and de-fuzzification, as detailed below.

15. Fuzzification

This is the process of transforming real-world crisp quantities into fuzzy quantities or variables (Ross et al., 2010). It is achieved by recognising that quantities treated as precise and deterministic are, in practice, often uncertain. This uncertainty may arise from imprecision and vagueness, in which case the input variables are represented by membership functions. For example, if the return on equity of a bank is 25% per annum, an investor could convert this crisp input into a linguistic variable such as low, moderate, or high return (Ross et al., 2010). The study transforms the four input variables, ROE, cost of equity, asset values, and volatilities, into fuzzy parameters for use in the estimation of the risks of default of banks. The fuzzifier translates these crisp variables into fuzzy inputs, which are passed to the inference engine and converted into fuzzy outputs. All the fuzzy outputs are then passed to the de-fuzzifier, where they are finally turned into crisp outputs.
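As an illustration of fuzzification, the sketch below maps a crisp return on equity to degrees of membership in three linguistic terms using triangular membership functions. The term boundaries in `ROE_TERMS` are hypothetical values chosen for the example; the study's own settings are those reported in its tables.

```python
def triangular(x, a, b, c):
    """Membership of x in a triangular fuzzy set with feet a, c and peak b.

    Setting a == b or b == c gives a left or right shoulder, respectively.
    """
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical term sets for ROE, in percent per annum.
ROE_TERMS = {
    "low": (0.0, 0.0, 20.0),
    "moderate": (10.0, 25.0, 40.0),
    "high": (30.0, 50.0, 50.0),
}


def fuzzify(x, terms):
    """Return the membership degree of crisp value x in every linguistic term."""
    return {name: triangular(x, *abc) for name, abc in terms.items()}


print(fuzzify(25.0, ROE_TERMS))  # fully "moderate" under these assumed terms
print(fuzzify(15.0, ROE_TERMS))  # partly "low", partly "moderate"
```

A crisp value can belong to several terms at once, which is what distinguishes this step from a simple binning of the input.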

16. Fuzzy operations, rules, base, and evaluation

This is the application of the fuzzy operations Minimum and Maximum to the input variables according to the available rules, depending on whether the conditions of a rule are combined by AND (Minimum) or OR (Maximum). The fuzzy rules comprise input and output variables that assume values from their term sets, with meanings associated with each linguistic concept (Soares et al., 2013). Crisp or exact model inputs are fed into the fuzzifier to transform them into fuzzy variables under a clearly defined rule base. The rule base contains all the rules and membership functions that regulate decision-making in a fuzzy logic system, including the “If-Then” conditions used to control the whole system. Rule evaluation assesses the criteria and returns model values based on a dynamic configuration process (Ross et al., 2010). The evaluation system allows users to configure model inputs for application scoring, approval flows, credit insurance, or bureaus.
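Rule evaluation with the Minimum and Maximum operators can be sketched as follows. The rules, variable names, and membership degrees below are hypothetical and serve only to show how an “If-Then” rule base produces a firing strength for each output term.

```python
def fuzzy_and(*degrees):
    """AND of rule conditions: the minimum membership degree."""
    return min(degrees)


def fuzzy_or(*degrees):
    """OR of rule conditions: the maximum membership degree."""
    return max(degrees)


# Hypothetical fuzzified inputs (membership degree per linguistic term).
memberships = {
    "roe": {"low": 0.25, "moderate": 0.3, "high": 0.0},
    "cost_of_equity": {"low": 0.0, "medium": 0.4, "high": 0.6},
}

# Hypothetical rule base: (output term, operator, list of (variable, term)).
rules = [
    ("high", fuzzy_and, [("roe", "low"), ("cost_of_equity", "high")]),
    ("medium", fuzzy_or, [("roe", "moderate"), ("cost_of_equity", "medium")]),
    ("low", fuzzy_and, [("roe", "high"), ("cost_of_equity", "low")]),
]


def evaluate_rules(memberships, rules):
    """Return the firing strength of each output term of the risk of default."""
    strengths = {}
    for out_term, op, conditions in rules:
        strength = op(*(memberships[var][term] for var, term in conditions))
        # If several rules share an output term, keep the strongest one.
        strengths[out_term] = max(strengths.get(out_term, 0.0), strength)
    return strengths


print(evaluate_rules(memberships, rules))
```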

17. Aggregation of the rule outputs

The aggregation of the rule outputs is the process by which the fuzzy sets representing the outputs of each rule are combined into a composite fuzzy set. It occurs only once for each output variable and takes place before the final de-fuzzification step. The output of the aggregation process is a single fuzzy set for each output variable.
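A minimal sketch of aggregation, assuming the common max-min scheme: each output term is clipped at the firing strength of its rule, and the clipped sets are combined by taking the pointwise maximum. The output terms on a 0 to 1 risk scale and the firing strengths are hypothetical.

```python
def triangular(x, a, b, c):
    """Triangular membership function with feet a, c and peak b."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical output terms for the risk of default on a 0-1 scale.
RISK_TERMS = {
    "low": (0.0, 0.0, 0.5),
    "medium": (0.0, 0.5, 1.0),
    "high": (0.5, 1.0, 1.0),
}

# Hypothetical firing strengths produced by rule evaluation.
strengths = {"low": 0.0, "medium": 0.4, "high": 0.25}


def aggregated_membership(x, strengths, terms):
    """Pointwise maximum of the output sets, each clipped at its firing strength."""
    return max(
        min(strengths[name], triangular(x, *terms[name])) for name in terms
    )


# Sample the single composite fuzzy set on a grid of the output universe.
grid = [i / 10 for i in range(11)]
composite = [aggregated_membership(x, strengths, RISK_TERMS) for x in grid]
print(composite)
```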

18. De-fuzzification

This is the reverse of fuzzification: the fuzzy results are mapped back into crisp variables. The process generates a non-fuzzy control action that represents the possibility distribution of an inferred fuzzy control action or process (Ross et al., 2010). De-fuzzification can also be regarded as a rounding-off process, in which a fuzzy set with a group of membership values on the unit interval is reduced to a single scalar quantity.
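One widely used de-fuzzification rule is the centroid (centre of gravity) of the aggregated fuzzy set. The sketch below assumes that rule for illustration only, since the method is not fixed by the description above, and it works on a discretised output universe with hypothetical values.

```python
def centroid(xs, mus):
    """Discrete centroid: membership-weighted average of the sample points."""
    total = sum(mus)
    if total == 0:
        raise ValueError("the fuzzy set is empty; no crisp value is defined")
    return sum(x * mu for x, mu in zip(xs, mus)) / total


# Hypothetical aggregated output set on a 0-1 risk scale: a triangle
# peaking at 0.5 and clipped at a firing strength of 0.4.
xs = [i / 100 for i in range(101)]
mus = [min(0.4, 1.0 - abs(x - 0.5) / 0.5) for x in xs]

crisp_risk = centroid(xs, mus)
print(round(crisp_risk, 4))
```

Because the example set is symmetric around 0.5, the crisp output sits at the centre of the scale; an asymmetric composite set would pull the result towards the heavier side.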

19. Validation of the KMV-Risk of default model, findings, and discussions

The validation of the proposed risk of default model follows several steps, as detailed below.

19.1. The fuzzy extension principle

It has been observed that most structural stochastic models are solved using classical and fuzzy set theories but are not extended for market friction, which is a substantial transaction cost to investors, especially in emerging financial markets. When handling functions of real variables, the fuzzy extension framework relies on the correct application of the extension principle (Talamanca, Guerra, and Stefanini, 2011). We start by assuming that we are given an exact relationship function of the general form $y = f(x_1, x_2, \ldots, x_n)$ of n real variables $x_1, x_2, \ldots, x_n$. The fuzzy extension of this function can be obtained to evaluate the effects of both transaction costs and uncertainty on each variable $x_j$, modelled by the corresponding fuzzy number $u_j$, whose possible values $x_j$ at each level $\alpha$ lie in the interval $[u_{j,\alpha}^{-}; u_{j,\alpha}^{+}]$. Let $v = f(u_1, u_2, \ldots, u_n)$ denote the fuzzy extension of the continuous function f of n variables. For each level $\alpha$, the resulting interval $[v_{\alpha}^{-}; v_{\alpha}^{+}]$ represents the propagation of uncertainty from all variables $x_j$ to the variable $y$ (Ross et al., 2010).

It should be noted that if the uncertainty on the original variables $x_j$ is modelled by linear fuzzy numbers $u_j$, the $y$-variable will still be a fuzzy number, ranging from a single value (at $\alpha = 1.00$) to the most uncertain interval (at level $\alpha = 0.00$), but it loses its linearity in the process of such transformation (Soares et al., 2013). It follows that a parametric representation of the variable is necessary, even when the input variables are triangular fuzzy numbers, in order to apply the extension principle and represent the non-linear output fuzzy numbers (Talamanca, Guerra, and Stefanini, 2012). To obtain the fuzzy extension of f to normal semi-continuous fuzzy intervals, we have to compute the $\alpha$-cuts $[v_{\alpha}^{-}; v_{\alpha}^{+}]$ of v, defined as the images of the $\alpha$-cuts of $(u_1, u_2, \ldots, u_n)$; these are obtained by solving the following constrained optimisation problems for $\alpha \in [0;1]$:

$$v_{\alpha}^{-} = \min\left\{ f(x_1, \ldots, x_n) \;\middle|\; x_j \in [u_{j,\alpha}^{-}, u_{j,\alpha}^{+}],\ j = 1, \ldots, n \right\}$$

$$v_{\alpha}^{+} = \max\left\{ f(x_1, \ldots, x_n) \;\middle|\; x_j \in [u_{j,\alpha}^{-}, u_{j,\alpha}^{+}],\ j = 1, \ldots, n \right\}$$

Source: Talamanca, Guerra and Stefanini (2012).

Only in simple cases can the optimisation problems above be solved analytically. In general, the solution is complex and computationally expensive to determine for each $\alpha \in [0;1]$, and global solutions to the two non-linear problems are required for all model variables. Therefore, we used the architecture of fuzzy systems presented by Ross et al. (2010) to translate the input variables of the KMV risk of default model into fuzzified parameters.
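To make the propagation of uncertainty through α-cuts concrete, the sketch below approximates the lower and upper bounds of the output interval by brute-force evaluation of a function on a grid over the box formed by the input α-cuts. This is a simple stand-in for the constrained optimisation problems, not the solution method of the cited authors; the grid approach is exact only when the extrema fall on grid points, and the triangular inputs and the function are hypothetical.

```python
import itertools


def alpha_cut(tri, alpha):
    """Alpha-cut [lower, upper] of a triangular fuzzy number (a, b, c)."""
    a, b, c = tri
    return a + alpha * (b - a), c - alpha * (c - b)


def extend(f, fuzzy_inputs, alpha, grid=21):
    """Approximate the alpha-cut of f(u_1, ..., u_n) by grid search.

    The minimum and maximum of f are taken over the Cartesian product
    of the input alpha-cuts, each sampled at `grid` equally spaced points.
    """
    cuts = [alpha_cut(tri, alpha) for tri in fuzzy_inputs]
    axes = [
        [lo + (hi - lo) * k / (grid - 1) for k in range(grid)]
        for lo, hi in cuts
    ]
    values = [f(*point) for point in itertools.product(*axes)]
    return min(values), max(values)


def product(x, y):
    """Illustrative non-linear function of two inputs."""
    return x * y


u1 = (1.0, 2.0, 3.0)  # hypothetical triangular fuzzy number
u2 = (2.0, 3.0, 4.0)  # hypothetical triangular fuzzy number

for alpha in (0.0, 0.5, 1.0):
    print(alpha, extend(product, [u1, u2], alpha))
```

The output interval shrinks from its widest at α = 0 to a single value at α = 1, and its end points do not vary linearly with α even though the inputs are triangular.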

19.2. Settings and implementation of the fuzzy system

The fuzzy system elaborated above is implemented using Mamdani’s (1975) system as the central inference machine, because Mamdani’s system is simple to use in processing the rules and values of model variables. The system is set up as tabulated below for fuzzification and de-fuzzification of model variables:

19.3. Fuzzy logic and DP modelling in banks

Fuzzy logic has been defined as a computing technique based on degrees of truth. It may also be regarded as a method of reasoning that resembles human reasoning in financial investment decisions. The approach imitates human decision-making, which involves all intermediate possibilities between the two digital values “Yes” and “No” (Chen & Pham, 2001). Fuzzy logic therefore operates on the levels of possibility associated with the input variables to attain a definite model output. It is thus a basic control mechanism that depends on the degrees of the state of the input variables: the state of the inputs used in modelling determines the nature of the output realised. A study by Sabounchi et al. (2011) uses fuzzy logic that incorporates linguistic variables in the valuation of a default event. The study concludes that the max-min operator system diversifies inconsistencies among fuzzy rules and that the defuzzified model variables behaved reasonably for default risk estimation. In other words, a fuzzy logic system assigns a particular output depending on the probability of the state of the input variable used. Many reasons are given for the application of fuzzy logic in banking and finance, such as:

It is relatively simple to create and deploy since it’s fully based on human experts’ opinions and evaluations.

It is used in fields of study involving decision-making processes that require some sort of human judgment.

The human mind abstracts real-world variables in an imprecise manner to constitute semantic networks.

These semantic variables or networks define relations that can be expressed in linguistic terms just as experts do in various disciplines (Soares et al., 2013).

Hence, any business activity that requires expert opinion or judgment can be modelled through fuzzy logic rules without the need for an existing theoretical model as a basis. Taking into account the above literature on fuzzy sets, numbers, and logic, we designed a model for predicting the risk of default of banks that is intended to be accurate, robust, and practical. The methodology used in this design is the same as that developed and employed by Pereira et al. (2012). The research methodology comprises the following procedure or variables, running from stages A to C:

According to the literature, the default event is influenced by many conditions internal and external to the borrower, most of which may be unknown to the loan provider unless they are declared. However, simple models of DP can yield good results using statistical measures or techniques (Ross et al., 2010). To make the system more applicable, we fuzzified all the crisp variables of the model before validating the proposed KMV-DP model.

19.4. Results from validation of the KMV-risk of default model

The study proposes a new KMV-type model for estimating the DP of a bank in emerging financial markets. It investigates the effects of asset values, liabilities, returns on equity and cost of equity (friction) on the DPs of banks in fuzzy financial markets. The model is validated using financial data drawn from banks in emerging markets of countries in Southern Africa. The study also compares the results estimated using the KMV model with those generated from other approaches, such as hazard function and structural risk models. The research employs the STATA package to produce three sets of results for all eight banks, based on the structural, fuzzy KMV, and hazard function models, for comparison purposes. Table 1 presents the architecture of fuzzy systems by Ross et al. (2010), which was adopted for fuzzification of the model variables. Table 2 shows how the model variables were fuzzified and defuzzified, and Table 3 presents the three stages of the study procedure or variables. A further table summarises the risk of default results from the structural and KMV models (in scientific form and as decimals).

Table 1. The architecture of fuzzy systems by Ross et al. (2010)

Table 2. The process of fuzzification and de-fuzzification of model variables

Table 3. The three stages of the study procedure or variables