ABSTRACT

Neural Style Transfer (NST) is a Computer Vision topic that aims to transfer the visual appearance, or style, of images to other images. Recent developments in deep learning successfully generate stylized images from texture-based examples or transfer the style of one photograph to another. In map design, style is a complex, multi-dimensional problem related to recognizable salient visual features and topological arrangements, which support the description of geographic spaces at a specific scale. Map style transfer remains an open challenge for generating a diversity of new styles to render geographic features. Generative Adversarial Network (GAN) techniques, which support image-to-image translation tasks well, offer new perspectives for map style transfer. We propose to use accessible GAN architectures to experiment with and assess neural map style transfer to ortho-images, using different map designs of various geographic spaces, from simple-styled (Plan maps) to complex-styled (old Cassini, Etat-Major, or Scan50 B&W). We present this transfer task and our global protocol, including the sampling grid, the training and testing of Pix2Pix and CycleGAN models, and the perceptual assessment of the generated outputs. We discuss promising results, which open research issues for the exploration of neural map style transfer with GANs.

FRENCH ABSTRACT

In computer vision, neural style transfer aims to transfer the style of images to other images and to generate stylized images. In cartography, style is a complex, multi-dimensional problem, composed of salient visual characteristics and recognizable topological arrangements that result from the graphic choices made to represent a geographic space at a given scale. Style transfer is a challenge for generating a diversity of new styles to represent geographic objects. In deep learning, generative adversarial networks (GANs) are a class of unsupervised learning algorithms that support image-to-image creation and modification tasks very well, which makes them interesting for cartographic style transfer. We experiment with and evaluate GAN architectures to test style transfer to ortho-images, using different cartographic styles for different geographic spaces, from the simplest (city plan) to the most complex (Cassini, Etat-Major or Scan50 B&W maps). We present this transfer task and our global protocol: the sampling grid, the training and testing of the Pix2Pix and CycleGAN models, and the perceptual evaluation of the generated results. This experiment gives promising results, opening research directions for neural cartographic style transfer.

1. Introduction

In map design, map style transfer has so far been approached by reproducing or mimicking sources of inspiration such as existing maps or artistic paintings and movements: style parameters and related expressive rendering techniques have been specified to let users control the style of their map (colorization, watercoloring, line drawing, texturing) (Christophe et al., 2016; Christophe & Hoarau, 2012). The specific complexity of map stylization lies in the series of choices required by several abstractions (geometric, graphic, mental) to make a map that conveys encoded information, as a sophisticated elaboration of meaningful salient spatial features and arrangements. These abstraction choices reflect the intention of a mapmaker to convey geospatial information and, eventually, to transmit a typical and recognizable way of encoding that information (Ory et al., 2015). Therefore, map style transfer, as a specific map stylization issue, has to be approached carefully in order to preserve the meaningful visual features and arrangements between cartographic objects in the map.

Deep learning techniques achieve attractive results in generating stylized images from texture examples (Gatys et al., 2015) or in transferring the style of one photograph to another (Luan et al., 2017). Neural Style Transfer (NST) is an established and complex topic in Computer Vision. NST aims to transfer the visual appearance, or rendering style, of a reference image to other images, by generating output images that minimize both their content difference from the input image and their style difference from the reference image: for instance, given a van Gogh painting and a photograph of a city, NST generates the same photograph in the style of the van Gogh painting (Gatys et al., 2015, 2016). Various techniques have been investigated to achieve more or less arbitrary style transfer (Xu et al., 2019).

Capturing the essential visual characteristics of a map style is still an open issue for geovisualization, as we try to better address the concept of style in visualization and to speed up the generation of expressive cartographic representations, or of graphic representations inspired by cartography. Well-established advances in deep learning, especially Generative Adversarial Network (GAN) techniques, allow us to test their relevance for neural map style transfer.

In this paper, we present an experimental comparison of two main, well-established GAN architectures, Pix2Pix (Isola et al., 2016) and CycleGAN (Zhu et al., 2017), for transferring old and present map styles to ortho-images in order to generate new cartographic styles. Based on a review of the state of the art, we first show why GAN architectures are worth testing for our long-term goal of controlling map style transfer. We then present in detail our transfer task and protocol, our image and style sampling plan, the training of the models, and the specification of some style parameters to be controlled throughout the process. Finally, we present and discuss some promising results and assess their perceptual quality, opening research issues for neural map style transfer with GANs.

2. Related work

Map Stylization & Map Style Transfer

Map stylization results from the graphic and visual composition of the geometric primitives rendered in the map, each with an elementary style. Map style transfer, so far, requires first identifying the main characteristics of the style to be reproduced, and then compositing and arranging these main visual characteristics. For instance, a Pop Art topographic map is a composition of elementary styles applied to each type of topographic data (Christophe & Hoarau, 2012). Map stylization, as a type of image stylization, has so far been approached with non-photorealistic techniques, such as painting, drawing, technical illustration, and animated cartoons (Kyprianidis et al., 2013), by specifying style parameters and the related non-photorealistic and expressive rendering techniques. When attempting to mimic old map styles or artistic techniques, expressive rendering techniques have been specified for texture synthesis, watercolorization, engraved prints and colorization (Arbelot et al., 2017; Christophe et al., 2016; Loi et al., 2017). It is possible to generate new map styles from an existing one while preserving the main visual signature of this style and varying other visual parameters (Ory et al., 2015).

Exploring the possibilities offered by the parameterization of a style and its related rendering techniques eventually yields a variety of possible map styles: this approach by analogy is efficient. The composition of sub-styles is optimized according to cartographic constraints that preserve spatial features, topologies and semantics throughout the process. However, this approach to map style transfer is time-consuming: first, the expected styles have to be specified in detail, then rendered according to a set of underlying constraints, and finally composited together according to the map content. Designing a meaningful, or even recognizable, map style remains ambitious. We aim to find a more global approach to generating new styles, instead of specifying each elementary style for a geometry and then trying to optimize a global style composition.

Neural Style Transfer (NST)

Neural Style Transfer is an example of image stylization. NST can be described as histogram-based texture synthesis with convolutional neural network (CNN) features, applied to the image analogies problem (Gatys et al., 2015). Impressive results were obtained with a neural algorithm of artistic style that separates and recombines the content and style of natural images, across a diversity of artistic styles. Users can explore new painting styles by arbitrarily combining the styles learned from individual paintings (Dumoulin et al., 2016; Gatys et al., 2016). These works also provide clues to better understand the structure of the learned representation of an artistic style. Neural style transfer between two photographs has also been investigated for photorealistic transfer, in order to reduce distortions in the output images (Luan et al., 2017). Since neural style transfer is, both theoretically and empirically, a special domain adaptation problem (Li et al., 2017), some approaches use adversarial networks to regularize the generation of stylized images, learning the intrinsic properties of image styles from large-scale multi-domain artistic images in order to achieve more or less arbitrary style transfer (Xu et al., 2019). Image-based artistic rendering (IB-AR) based on NST has been proposed, separating image content from style, to create a generalized style transfer and to further support artistic creativity and production (Semmo et al., 2017). Semantic approaches use domain adaptation techniques to avoid semantic mismatch, and highlight the influence of semantic information on NST quality (Park & Lee, 2019).
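To make the separation of content and style more concrete, the losses of Gatys et al. (2015) can be sketched on precomputed feature maps. This is a minimal NumPy sketch under stated assumptions: a single layer, features already extracted and flattened to an (N filters × M positions) array (in practice they come from several layers of a pretrained CNN), and illustrative weights `alpha` and `beta`; the function names are hypothetical.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix G_ij = sum_k F_ik F_jk of an (N filters x M positions) feature array."""
    return features @ features.T


def content_loss(f_gen: np.ndarray, f_content: np.ndarray) -> float:
    """Squared-error content loss between generated and content features."""
    return 0.5 * float(np.sum((f_gen - f_content) ** 2))


def style_loss_layer(f_gen: np.ndarray, f_style: np.ndarray) -> float:
    """Per-layer style loss: squared Gram difference normalized by 4 N^2 M^2."""
    n, m = f_gen.shape
    diff = gram_matrix(f_gen) - gram_matrix(f_style)
    return float(np.sum(diff ** 2)) / (4.0 * n ** 2 * m ** 2)


def total_loss(f_gen, f_content, f_style, alpha=1.0, beta=1e3):
    """Weighted sum alpha * content + beta * style (weights are illustrative)."""
    return alpha * content_loss(f_gen, f_content) + beta * style_loss_layer(f_gen, f_style)


rng = np.random.default_rng(0)
features = rng.normal(size=(8, 64))          # 8 filters, 8x8 positions flattened
shuffled = features[:, rng.permutation(64)]  # same values, spatial layout scrambled
print(style_loss_layer(shuffled, features))  # ~0: the Gram matrix ignores layout
print(content_loss(shuffled, features) > 0)  # True: the content loss does not
```

Because the Gram matrix sums over spatial positions, it is invariant to any rearrangement of those positions; this offers one possible reading of why classical NST mixes colors and textures well but does not, by itself, preserve the topology of map objects.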

NST for map design issues

NST has been investigated for map design issues. Artistic style transfer on maps has been addressed, for instance, by generating hybrid output maps with a Convolutional Neural Network (CNN) approach: the purpose was to assess the emotions evoked by very artistic outputs, which contain more or less randomness, causing inconsistencies in the style and content of the map (Bogucka & Meng, 2019). Jenny et al. (2020) highlighted the limits of NST for mimicking the high quality of manual cartographic work, in particular relief shading. NST can move or distort the geometry of terrain features, and sometimes omits or invents terrain features that do not exist in reality. NST techniques therefore cannot be used for topographic mapping, which requires accurate positioning of all relevant terrain features. The authors preferred to replicate hand-drawn relief shading with U-Net, a fully convolutional neural network developed for image segmentation (Long et al., 2015). U-Net has been used, for instance, to turn rough comic sketches into clean drawings (Simo-Serra et al., 2017). These limitations are important in our context: we expect a balance between consistency in content and in style in the output, rather than an exact reproduction of a complete map.

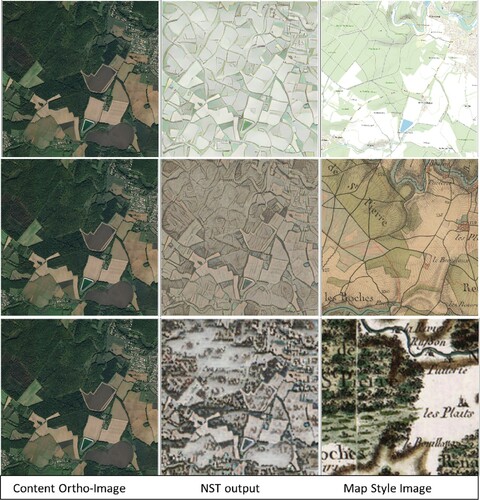

We tested the classical NST approach (Gatys et al., 2015) with an accessible implementation: the results are visually pleasing, highlighting the layout of parcels and nicely mixing colors and textures, but 'randomly', without any preservation of topology or of spatial consistency between objects (parcels, woods, roads, urban areas) (Figure 1). These effects increase with the visual complexity of the map styles.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a family of machine learning algorithms that can generate highly realistic images, based on a generative model in which two networks compete: the first one (the generator) produces an image, and the second one (the discriminator) tries to detect whether this image is real or generated by the first network. These techniques are very useful to modify or generate images. Their capacity to generate new but realistic images while retaining a particular set of visual characteristics fits our expectations well.
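The competition between the two networks is usually expressed as a pair of binary cross-entropy losses computed on the discriminator's outputs. The following NumPy sketch shows only these loss terms, assuming the discriminator returns probabilities in (0, 1) and using the commonly adopted non-saturating form for the generator; the networks themselves and the function names are hypothetical.

```python
import numpy as np

EPS = 1e-12  # avoids log(0)


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Binary cross-entropy: D should output 1 on real images and 0 on generated ones."""
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))


def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss: G is rewarded when D outputs 1 on generated images."""
    return float(-np.mean(np.log(d_fake + EPS)))


# Here D cannot tell real from generated images: it outputs 0.5 everywhere
d_real = np.full(4, 0.5)
d_fake = np.full(4, 0.5)
print(discriminator_loss(d_real, d_fake))  # 2 log 2, about 1.386
print(generator_loss(d_fake))              # log 2, about 0.693
```

Training alternates between lowering the first loss (updating the discriminator) and lowering the second (updating the generator).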

Many GAN-based methods achieve strong results in image-to-image translation tasks (such as image synthesis, inpainting, or super-resolution), improving the level of detail and the quality of the resulting images:

Pix2Pix is a conditional GAN (cGAN) trained in a supervised mode on paired data: it requires paired images from both domains, and learns to translate one into the other by comparing the generator's output with the paired target image (Isola et al., 2016). Pix2Pix is a stable, powerful and widely used image-to-image translation framework. However, it requires paired training images and works well for images from similar source domains, which is not always the case for map style transfer.
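The reliance on paired data is visible in the Pix2Pix generator objective, which adds to the adversarial term an L1 distance between the generated image and its paired target (Isola et al. report a weight of λ = 100). The NumPy sketch below computes only this loss value, assuming the discriminator outputs for the (input, generated) pair are already available; the tile sizes and discriminator output shape are illustrative.

```python
import numpy as np

EPS = 1e-12


def pix2pix_generator_loss(d_fake_pair: np.ndarray,
                           fake_b: np.ndarray,
                           real_b: np.ndarray,
                           lambda_l1: float = 100.0) -> float:
    """Adversarial term on D(x, G(x)) plus an L1 term against the paired target image."""
    adversarial = -np.mean(np.log(d_fake_pair + EPS))
    l1 = np.mean(np.abs(fake_b - real_b))  # requires the paired ground-truth map tile
    return float(adversarial + lambda_l1 * l1)


real_map = np.zeros((256, 256, 3))  # paired target tile (illustrative values)
generated = real_map + 0.01         # generator output, slightly off everywhere
d_out = np.full((30, 30), 0.5)      # patch-wise discriminator outputs (illustrative)
print(pix2pix_generator_loss(d_out, generated, real_map))  # log 2 + 100 * 0.01, about 1.693
```

Without a co-registered `real_b` for every input tile, the L1 term cannot be computed, which is why Pix2Pix needs a paired sampling of ortho-images and maps.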

CycleGAN aims to circumvent the limitations of Pix2Pix and is trained without supervision on unpaired data: it applies a two-step transformation to a source-domain image under a cycle consistency constraint, first mapping it to the target domain and then mapping it back to the source domain, in order to minimize the gap between the reconstructed image and the original image (Zhu et al., 2017).
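The cycle consistency constraint can be written as an L1 reconstruction error over both directions (Zhu et al. weight it with λ = 10). In the NumPy sketch below, the two generators are replaced by toy functions, an assumption made purely for illustration; real generators are CNNs, and the adversarial terms of each direction are omitted.

```python
import numpy as np


def cycle_consistency_loss(real_a, real_b, g_ab, g_ba, lambda_cyc=10.0):
    """L1 reconstruction error of both cycles A -> B -> A and B -> A -> B.

    real_a and real_b need not be paired: each image is only compared
    with its own reconstruction.
    """
    rec_a = g_ba(g_ab(real_a))
    rec_b = g_ab(g_ba(real_b))
    return float(lambda_cyc * (np.mean(np.abs(rec_a - real_a)) + np.mean(np.abs(rec_b - real_b))))


def to_map(x):  # toy generator A -> B (hypothetical)
    return 2.0 * x


def to_ortho(x):  # toy generator B -> A, the exact inverse of to_map
    return x / 2.0


def identity(x):  # a poor B -> A generator that changes nothing
    return x


ortho = np.ones((4, 4))          # stand-in for an ortho-image tile (domain A)
old_map = np.full((4, 4), 3.0)   # stand-in for an unrelated map tile (domain B)
print(cycle_consistency_loss(ortho, old_map, to_map, to_ortho))  # 0.0: perfect cycles
print(cycle_consistency_loss(ortho, old_map, to_map, identity))  # 10 * (1 + 3) = 40.0
```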

The Multimodal Unsupervised Image-to-Image Translation (MUNIT) framework translates an image to the target domain by recombining its content code with a random style code sampled from the target style space, providing good results (Huang et al., 2018).

GAN Architectures for map generation

Cartography and deep learning are progressively converging, as new architectures appear that promise to circumvent the existing limits of deep learning techniques in preserving the complexity of maps. Previous works compared deep learning approaches, for instance Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), for map generalization, identifying new pitfalls and limits of deep learning techniques for cartography, above all the non-preservation of topology (Courtial et al., 2020; Kang et al., 2020).

Various GAN models have been investigated in recent years to generate map styles with an image-to-image approach. Kang et al. (2019) conducted an experiment transferring different cartographic styles to map tiles, starting from simple-styled maps generated from vector data, across various map data types (including Google Maps, OpenStreetMap, and artistic paintings), and showed that GANs have great potential for multiscale map style transfer. New approaches and models are under investigation, based on combining or improving the Pix2Pix and CycleGAN architectures. MAPGAN transfers style between different types of maps (Google Maps, Baidu Maps and Map World), experimenting with one-to-one and one-to-many domain map generation and improving the generator architecture of Pix2PixHD (Li et al., 2020). GEOGAN automatically generates styled map tiles from satellite images with a cGAN-based model using style and content losses (Ganguli et al., 2019). To circumvent the main topology issues of previous works, SMAPGAN prioritizes topology preservation through a semi-supervised generation of styled map tiles directly from remote sensing images (Chen et al., 2020). The Semantic-regulated Geographic GAN (SG-GAN) is an enhanced GAN model for automatic satellite-to-map image conversion that uses external geographic data, such as GPS traces, as implicit guidance to ensure the consistency of road network topology; six GAN-based approaches are compared to validate the results (Zhang et al., 2020).

As a long-term goal, to better understand the structure of map style, we would like to consider a global approach that captures a visually complex map style and generates a diversity of such styles. We assume that this could be achieved by GAN techniques, which go beyond traditional image segmentation approaches, and we explore their capacity to support a new approach to neural style transfer for complex-styled maps. Even though limitations have been reported for some tasks, and issues remain, mainly regarding spatial topology, our objective in this experiment is to validate the main preliminary steps: describing the task and the protocol, to be refined while perceptually assessing the generated outputs, in order to propose a framework for neural map style transfer.

3. Experimental framework: neural transfer of old and current map styles to ortho-images

We present our global protocol for experimenting with our framework for neural transfer of old and present map styles to ortho-images. We describe the precise task to perform, our quality requirements for the results, and the selected GAN architectures to be compared. Then we present our image and style sampling plan designed for the task, the characteristics of the model training, and how we additionally decided to control some visual style parameters.

3.1. Task: map ST to ortho-images

Task design aims

Existing works on map style transfer mainly use Google Maps or city plans, which are rendered with sober, i.e. visually simple and light, styles. In this experiment, we tackle topographic maps, which are more visually complex in terms of content and clutter level, and which have had various styles over time, giving us the possibility to handle heterogeneous content and styles. Additionally, mixing content and style, possibly enhancing some visual characteristics and arrangements from both content sources (maps and images), is relevant for creating new, more expressive graphic representations. The main task in our experiment is map style transfer (ST) to ortho-images, including the integration of the notion of spatial resolution.

Requirements on the perceptual quality of transfer results

From previous research on map style transfer (Christophe et al., 2016; Christophe & Hoarau, 2012), we identified, through self-evaluation by map experts, different perceptual criteria that could be used to evaluate the quality of the neural map style transfer:

(1) A map style has been effectively transferred to the ortho-image, or a new map style has been generated, integrating the content and style of the reference and initial images.

(2) For each type of geographic environment, the textures, the colors, and the level of detail or clutter are well transferred.

(3) The output image effectively supports the recognition of the main spatial features and arrangements.

(4) The topological constraints of the main networks are preserved.

(5) The output image looks like a map, or is validated as a map.

Task design choices

We chose to handle various sets of contents and styles, in order to test and refine the transfer task and the transfer protocol more broadly, with the following characteristics:

Various images and graphic representations covering various types of environments: rural, urban, mountainous, coastal.

Various geometric and topological abstractions, resulting from generalization processes related to a specific map scale.

Various visual style parameters of these geometric features, i.e. textures, outlines, color contrasts and distributions.

Various levels of visual complexity and geometric and/or graphic cluttering.

3.2. Selected GAN architectures for the task

To perform this map transfer task, we selected the most accessible and well-established GAN architectures, because they come with documentation and their current limitations are well known from the state of the art. We formulate two main hypotheses about the transfer task and protocol. First, because the source domains are closer in terms of clutter level and content features, Pix2Pix could be relevant for transferring contemporary simple-styled map styles to ortho-images, but not suitable for old map styles, which differ more and are more visually complex in content and style (H1). Second, because it could increase the capacity to generate new styles from all possible combinations of contents and styles, CycleGAN could be more relevant for transferring old, visually complex and cluttered map styles to ortho-images (H2).

3.3. Data and networks training

3.3.1. Creating input samples

Data Sources

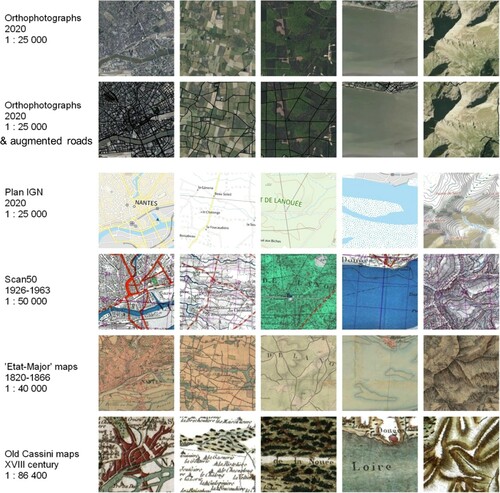

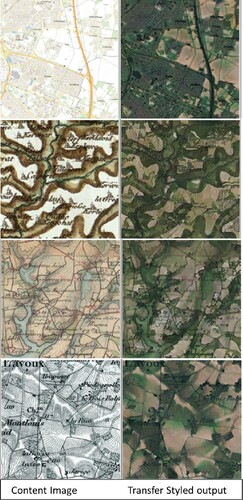

All data used in our protocol come from the same source, the French geoportal, and are illustrated by some samples for each style domain with their reference scale, as follows (Figure ):

(1) Content data: ortho-imagery at a spatial resolution of 50 cm (Figure – 1st line);

(2) Map styles: a set of contemporary and old topographic maps, i.e. Plan, Scan50 B&W, Etat-Major and Cassini maps (Figure – from 3rd to 6th line).

Sampling grid

To obtain paired images for the Pix2Pix model, and also to ease the perceptual assessment of the output results, our samples must be generated at the same location and at a similar scale. Since the sources come at various scales, and hence with various extents, we delimited the extents of all images accordingly with QGIS (Figure ). To limit computation time, we decided to work on small samples first, in order to validate our protocol. Our sampling grid finally yields a set of samples, some used to train the models (around 80%) and the others used to test the trained models (around 20%):

a first grid of 3 km-side samples of 256×256 pixels (approx. 1:44,291 scale): 514 samples, including 412 for training and 102 for testing;

a second grid of 4.7 km-side samples of 400×400 pixels (approx. 1:44,291 scale).
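The approximate scale of both grids follows from the ground size of one tile pixel. The sketch below reproduces it under an assumption the text does not state: a 96 dpi display, so that one screen pixel measures 0.0254 / 96 m. It also sketches a regular grid over a bounding box and a reproducible random train/test split; the extent, the seed and all function names are hypothetical.

```python
import math
import random

# Assumption: a 96 dpi display, i.e. one screen pixel is 0.0254 / 96 m (about 0.265 mm).
SCREEN_PIXEL_M = 0.0254 / 96


def nominal_scale(tile_side_m: float, tile_side_px: int) -> float:
    """Scale denominator when one tile pixel is displayed as one screen pixel."""
    ground_m_per_px = tile_side_m / tile_side_px
    return ground_m_per_px / SCREEN_PIXEL_M


def grid_cells(xmin, ymin, xmax, ymax, side_m):
    """Square cells (x0, y0, x1, y1) of a regular grid fully inside the bounding box."""
    nx = math.floor((xmax - xmin) / side_m)
    ny = math.floor((ymax - ymin) / side_m)
    return [(xmin + i * side_m, ymin + j * side_m,
             xmin + (i + 1) * side_m, ymin + (j + 1) * side_m)
            for j in range(ny) for i in range(nx)]


def split_train_test(cells, train_ratio=0.8, seed=0):
    """Shuffle the cells reproducibly and split them into training and test sets."""
    shuffled = list(cells)
    random.Random(seed).shuffle(shuffled)
    n_train = round(train_ratio * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


print(round(nominal_scale(3000, 256)))  # 44291 (3 km over 256 px: 11.72 m per pixel)
print(round(nominal_scale(4700, 400)))  # 44409, close to the stated approximation
cells = grid_cells(0, 0, 30_000, 30_000, 3000)  # hypothetical 30 km x 30 km extent
train, test = split_train_test(cells)
print(len(cells), len(train), len(test))  # 100 80 20
```

Note that this rounding rule applied to 514 cells gives 411/103 rather than the reported 412/102; the text only states "around 80%", so the exact split rule used is not known.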

3.3.2. Implementation

We used an open PyTorch implementation of unpaired and paired image-to-image translation, which provides source code to train and test both CycleGAN and Pix2Pix models (Isola et al., 2016; Zhu et al., 2017). Our code was run on Google Colab, including data retrieval. The pairing step was performed with a QGIS script. All sets of samples (around 80% for training and around 20% for testing), the images produced by the tests, the trained models, the loss function logs and, when possible, the intermediate images and models are retrieved and stored. An experiment takes around 200 epochs; since one epoch takes between 130 and 170 seconds on Google Colab, this amounts to between about 7 and 9.5 hours, i.e. around 8 hours in total.
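If the implementation is the authors' reference code (pytorch-CycleGAN-and-pix2pix), its Pix2Pix training reads "aligned" examples: a single image with the source tile (A) on the left and the target tile (B) on the right. The authors performed pairing with a QGIS script; as an alternative sketch under that assumption, the side-by-side concatenation of two co-registered tiles can be written in NumPy (function names are hypothetical):

```python
import numpy as np


def make_aligned_pair(ortho_tile: np.ndarray, map_tile: np.ndarray) -> np.ndarray:
    """Concatenate two co-registered tiles side by side: A (ortho) left, B (map) right."""
    if ortho_tile.shape != map_tile.shape:
        raise ValueError(f"tiles must share a shape, got {ortho_tile.shape} and {map_tile.shape}")
    return np.concatenate([ortho_tile, map_tile], axis=1)


def split_aligned_pair(ab: np.ndarray):
    """Inverse operation: recover the A and B halves of an aligned image."""
    half = ab.shape[1] // 2
    return ab[:, :half], ab[:, half:]


ortho = np.zeros((256, 256, 3), dtype=np.uint8)     # placeholder ortho-image tile
plan = np.full((256, 256, 3), 255, dtype=np.uint8)  # placeholder map tile of the same cell
ab = make_aligned_pair(ortho, plan)
print(ab.shape)  # (256, 512, 3)
```

Each pair could then be written to disk, for instance with Pillow's `Image.fromarray(ab).save(path)`. CycleGAN, being unpaired, only needs the two sets of tiles in separate folders.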

3.4. Controlling contrast, cluttering and scales

We know the main limitations of our selected architectures:

the non-preservation of spatial topology in image-to-map conversion. Ortho-imagery contains occlusions between spatial features of the territory, which make the continuity of road and hydrographic networks hard to distinguish, and thus tends to increase the non-preservation of topology;

the failure to reproduce some specific textures and colors that are very characteristic of a style;

the non-preservation of possibly complex arrangements in some samples, for instance urban spots, even though they are representative of a style.

The GAN architectures used do not themselves provide explicit control over style parameters. After first trials, we decided to enhance control over the task. Different strategies could be followed to achieve this control: removing, emphasizing or pre-processing some objects in the samples. Augmenting ortho-images by enhancing some object types is a strategy to make characteristic features and arrangements more 'legible' for the models: this could increase the probability that they are learned and reproduced by the framework, with other nearby objects benefiting from this augmentation too. We thus added an input sample preparation step that increases the contrast of road networks by drawing a thick black polyline from the related vector data (Cf. – 2nd line); these enhanced samples are used in all our experiments.
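The road-enhancement step amounts to burning the road vectors, converted to pixel coordinates, as a thick black line into each ortho-image tile. Below is a minimal NumPy sketch, assuming north-up tiles with square pixels of `res` metres, road geometries in the same projected coordinates as the tile, and a line width of 5 pixels (the width actually used is not stated); all names are hypothetical.

```python
import numpy as np


def world_to_pixel(x, y, xmin, ymax, res):
    """Map coordinates (metres) to fractional (col, row); north-up raster assumed."""
    return (x - xmin) / res, (ymax - y) / res


def burn_polyline(img, coords, xmin, ymax, res, width_px=5, color=(0, 0, 0)):
    """Return a copy of img with a thick polyline drawn along the given map coordinates."""
    out = img.copy()
    h, w = out.shape[:2]
    half = width_px // 2
    pts = [world_to_pixel(x, y, xmin, ymax, res) for x, y in coords]
    for (c0, r0), (c1, r1) in zip(pts[:-1], pts[1:]):
        steps = int(np.ceil(max(abs(c1 - c0), abs(r1 - r0)))) + 1  # at least one sample per pixel
        for t in np.linspace(0.0, 1.0, steps + 1):
            c = int(round(c0 + t * (c1 - c0)))
            r = int(round(r0 + t * (r1 - r0)))
            # clip the square brush to the image; out-of-tile samples give empty slices
            rows = slice(max(r - half, 0), max(min(r + half + 1, h), 0))
            cols = slice(max(c - half, 0), max(min(c + half + 1, w), 0))
            out[rows, cols] = color
    return out


res = 3000 / 256                                     # metres per pixel of a 3 km, 256 px tile
tile = np.full((256, 256, 3), 255, dtype=np.uint8)   # blank placeholder tile
road = [(0.0, 1500.0), (3000.0, 1500.0)]             # hypothetical east-west road
enhanced = burn_polyline(tile, road, xmin=0.0, ymax=3000.0, res=res)
```

Drawing in a fixed pixel width, rather than in metres, keeps the visual emphasis constant across tiles of the same resolution.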

4. Analysis and perceptual assessment of map style transfer (ST) results

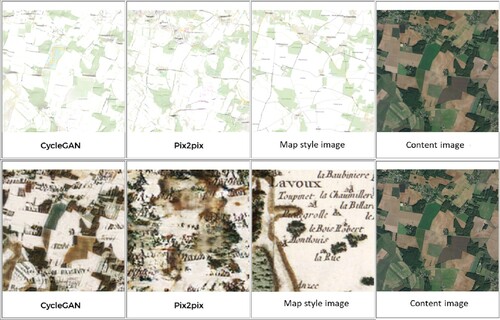

4.1. Map ST to ortho-images (Pix2Pix)

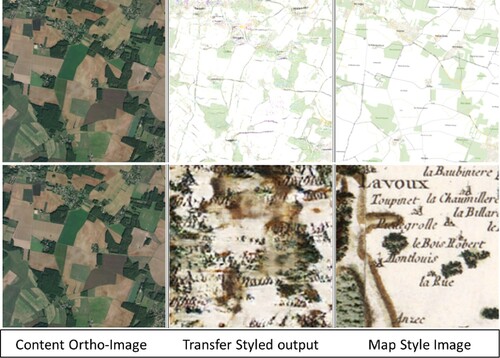

At first glance, the results seem quite good in terms of general visual arrangements and style, but they are incomplete, even with more samples: only a few roads are retrieved, some at false locations, and urban spots are not taken into account (-1). Pix2Pix rather captures the global similarity with the style image. It is clearly not efficient for old complex-styled maps such as Cassini, because they are more cluttered, with dark brown and green colors, various textures and very large toponyms, and are globally darker or show a high level of dark contrast for one sample ( – 2). Results are likely similar for the other old map styles. Confirming (H1), Pix2Pix remains more efficient for simple-styled plans, because they are sober and light (unlike the related ortho-image), less cluttered, and have a low level of detail, unlike other, more abstract cartographic representations.

4.2. Old map ST to ortho-images (CycleGAN)

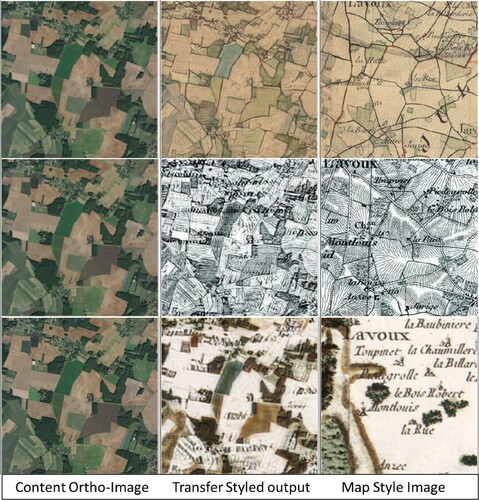

First trials were made on a very small dataset to test our second hypothesis (H2), i.e. that CycleGAN could better handle complex-styled spatial arrangements. Extensive tests were then conducted with all sets of samples, to refine both our protocol and the generated results. Figure 5 presents our best results for three old map style transfers to an ortho-image. First, an 'Etat-Major' style transfer with road enhancement effectively augments the content of the ortho-image with this particular old map style: a mixed style is created between the ortho-image and the 'Etat-Major' style, mixing colors, textures and contrasts, and nicely emphasizing parcels, outlines and urban spots (Figure 5 – 1). Second, the black-and-white Scan50 style transfer is particularly interesting, because it transfers all textures to the ortho-image very significantly, emphasizing outlines and parcels, even if parcel shapes may differ. The final layout is very nice and properly renders an old map style, as if drawn by hand, enhancing textures and hatching effects. Toponyms are largely missed by the process. When the size of the training data increases, the effect is more subtle, again emphasizing the road layout (Figure 5 – 2). Third, the Cassini style transfer is very subtle, preserving the existing color contrasts of the Cassini style, emphasizing the parcels and their arrangements and, as in the previous transfer, attempting to rebuild the urban spots (Figure 5 – 3). The input and output domains are quite far from each other: geographic objects vary across the images in presence, extent, and size, and are represented by various colors, textures, and outlines. Confirming (H2), the algorithm properly handles new stylized spatial arrangements, mixing the visually complex content and style of the input and output images.

Figure 5. CycleGAN: old map style transfers to ortho-imagery (Etat-Major (1), Scan50 (2), Cassini (3)).

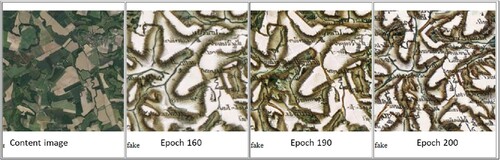

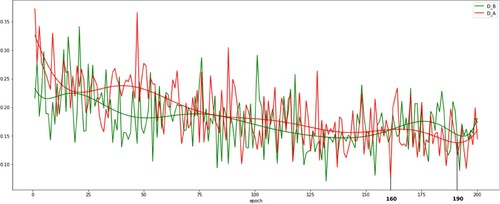

Visual analysis of the shape of the loss functions lets us observe their evolution across epochs and their relevance for supporting the evaluation of the quality of the style transfer outputs. In the example of a Cassini style transfer on an ortho-image, the loss of the discriminator of the Cassini style on ortho-imagery (D_A) shows a local minimum at epoch 160 and an averaged minimum at epoch 190, highlighted in red (Figure 6); the related intermediate outputs are also shown ().

Figure 6. Map style transfer between ortho-image and Cassini: per-epoch and smoothed loss functions of the discriminators D_A (Cassini style on ortho-image, red) and D_B (ortho-image on Cassini, green).

We observe that the intermediate output produced when the loss of the discriminator D_A is minimal (epoch 190) is more precise in its details, with distinguishable spatial locations and topology and more Cassini colors and textures, than the output of an earlier epoch (epoch 160); the output at epoch 200 also seems better, with some hydrographic networks or urban spots appearing and a better distribution of colors (). This calls for a joint analysis of the visual quality of the generated styles and of the possible optima of the loss functions.
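Locating candidate epochs such as the averaged minimum of D_A can be partly automated from the stored loss logs. The sketch below assumes the plain-text log format written by the pytorch-CycleGAN-and-pix2pix code, with lines such as `(epoch: 1, iters: 100, time: ..., data: ...) D_A: 0.250 G_A: 0.300 ...`; it averages one loss term per epoch, smooths it with a moving average whose window is an arbitrary choice, and returns the centre epoch of the lowest window. As observed above, such a minimum is only a hint and the corresponding outputs still need visual checking.

```python
import re
from collections import defaultdict

import numpy as np

# Assumed log line shape: "(epoch: 3, iters: 200, time: 0.1, data: 0.01) D_A: 0.25 G_A: 0.31 ..."
LINE_RE = re.compile(r"\(epoch: (\d+), iters: \d+[^)]*\)\s*(.*)")
LOSS_RE = re.compile(r"(\w+): (-?\d+(?:\.\d+)?)")


def per_epoch_losses(lines, key="D_A"):
    """Mean value of one loss term per epoch, returned as (epochs, values) arrays."""
    by_epoch = defaultdict(list)
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue  # skip header or malformed lines
        losses = {k: float(v) for k, v in LOSS_RE.findall(match.group(2))}
        if key in losses:
            by_epoch[int(match.group(1))].append(losses[key])
    epochs = sorted(by_epoch)
    return np.array(epochs), np.array([np.mean(by_epoch[e]) for e in epochs])


def smoothed_argmin(epochs, values, window=5):
    """Centre epoch of the moving-average window with the lowest mean loss."""
    smooth = np.convolve(values, np.ones(window) / window, mode="valid")
    return int(epochs[int(np.argmin(smooth)) + window // 2])


# Synthetic log: D_A is lowest at epoch 6, with two logged iterations per epoch
demo = [f"(epoch: {e}, iters: {i}, time: 0.100, data: 0.010) D_A: {0.1 + 0.01 * (e - 6) ** 2:.3f} "
        for e in range(1, 11) for i in (100, 200)]
epochs, d_a = per_epoch_losses(demo, key="D_A")
print(smoothed_argmin(epochs, d_a, window=3))  # 6
```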

4.3. Inverse map ST: ortho-image to maps (CycleGAN)

To better understand how the models handle style transfer, we experimented with the inverse task, transferring the ortho-image style to the set of maps. The results are interesting because they help to explore other types of combinations of content and style, even imperfect ones. Most of the time, the output images are hard to recognize, because they are heavily cluttered by the dark colors and textures of the ortho-image. That said, the behavior of the toponyms is quite interesting, as they seem to be transferred exactly as they are. Road and parcel layouts are rendered quite well in the outputs. Some watercolor and iridescence effects appear in the transfer of colors and textures, with some colors smoothly erased, producing artistic dilution effects ().

4.4. Discussion

About the task design and the control over the map ST

We propose a map ST task that can now be refined as we better understand the structure of the expected style through the learning process. Depending on our expectations for map style transfer, the task and the protocol could be adapted and refined to target specific map styles, from very simple to complex ones, with additional controls over the datasets, the task and the process.

In this paper, we only considered augmented road networks, which had particularly efficient and positive effects on the map style transfer and on the quality of the results, in terms of preserving the spatial topology of those networks and also of neighboring objects. This validates the choice of this control on the task. Visually emphasizing some objects is not easy to manage, however, because depending on the input data, their generalization level may involve a high level of detail in the road networks, which could generate uncontrolled effects and potential noise during the process at the targeted scales. To manage this further, or in addition to other controls, some objects or arrangements could be augmented by emphasizing the outlines of parcels to support the preservation of parcel graphs, or by exaggerating the texturing or increasing the color contrast of other objects. Finally, depending on the expectations for the task, controls could be added to specifically manage some objects or arrangements in the output (local transfer), up to a precise transfer of the entire output (global transfer).

Comparing a task with its inverse is very interesting, at least to better understand the behavior of the models, but also to imagine how both tasks could be processed in a complementary way to obtain a better map style transfer. At the least, it is a way to generate new 'ortho-image-to-map' styles. It could also be a way to experiment with compositions of the task and its inverse, to generate new, possibly better results, or to control the style transfer differently. For now, the inverse task is likely a separate, independent task that requires its own set of constraints.

The generation of new styles from ortho-images and maps converges with previous work on mixing their content and styles, which aimed to generate mixed styles combining abstract and photo-realistic representations of the territory and, ultimately, to provide users with more expressive graphic representations. Depending on the level of control over the transfer, some visual characteristics and arrangements from both content sources (maps and images) could be strengthened or attenuated, and made more or less salient.

About perceptual quality assessment

In this paper, we evaluated the results ourselves, based on our own expertise as map designers. This strategy has limits. Quantitative measures that automatically evaluate the perceptual quality of the resulting images would be important, or at least measures supporting a semi-automatic evaluation: visual and perceptual criteria from image analysis, such as local or global clutter measures, could help drive the process and suggest relevant controls and related criteria. How to assess map quality properly, both quantitatively and qualitatively, remains an open issue.
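As an illustration of the kind of global and local clutter measures mentioned above, the sketch below computes edge density, a simple and commonly used proxy for visual clutter: the fraction of pixels whose gradient magnitude exceeds a threshold, either over the whole image or per tile. It is not a measure used in this paper; the luma weights are the ITU-R BT.601 ones, while the threshold and tile size are arbitrary assumptions that would need calibration against map designers' judgments.

```python
import numpy as np


def to_gray(img: np.ndarray) -> np.ndarray:
    """RGB (H, W, 3) in [0, 1] -> luma (H, W), using ITU-R BT.601 weights."""
    return 0.299 * img[..., 0] + 0.587 * img[..., 1] + 0.114 * img[..., 2]


def edge_density(gray: np.ndarray, threshold: float = 0.1) -> float:
    """Global clutter proxy: share of pixels with gradient magnitude above threshold."""
    gy, gx = np.gradient(gray.astype(float))  # central differences inside, one-sided at borders
    magnitude = np.hypot(gx, gy)
    return float((magnitude > threshold).mean())


def local_edge_density(gray: np.ndarray, tile: int = 64, threshold: float = 0.1) -> np.ndarray:
    """Local clutter map: edge density computed independently on non-overlapping tiles."""
    h, w = gray.shape
    rows = []
    for y in range(0, h - tile + 1, tile):
        rows.append([edge_density(gray[y:y + tile, x:x + tile], threshold)
                     for x in range(0, w - tile + 1, tile)])
    return np.array(rows)


# Usage: a flat image has no edges; a vertical step edge marks two pixel columns.
flat = np.zeros((64, 64))
step = np.zeros((64, 64))
step[:, 32:] = 1.0
```

A global score gives one number per generated image, while the local map could point to the areas where a generated style becomes too dense to read; both would only support, not replace, the expert evaluation described above.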

About the remaining limitations

Limitations on the quality of the map style transfer remain. Above all, the handling of toponyms is still a major issue interfering with the style transfer. Work has been conducted in parallel to remove those texts from the images or to hide them. Texts cannot be properly generated from aerial images, but the GANs learn that text is an important feature of map styles, so they generate maps with random characters, or random shapes resembling characters as closely as they can. We did not expect to retrieve the toponyms exactly as they appear in the content image; our main goal was to transfer a style, fully or even partially. Although addressing the text issue is important, we chose to pursue our experiments with the toponyms included as part of the style being transferred, and the results, while far from perfect, more or less circumvent the issue. One idea would be to detect where texts are located in the maps using optical character recognition techniques, which are now also based on deep learning (Weinman et al., 2019): the pixels of map images containing text could then be removed from the convolutions of deep networks, in order to learn style transfer without text. Additionally, we need to consider solutions to refine the quality of our protocol and, above all, the map quality: it would be useful to work further on enriching the input data, in order to improve and refine the loss functions, as well as to enlarge the sample size.
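Removing text pixels from the convolutions themselves would require a masked convolution operator, such as partial convolutions. A simpler variant, sketched below as an assumption rather than as the approach proposed above, keeps the network unchanged but excludes text pixels from a pixel-wise reconstruction loss. Text locations are assumed to come from an OCR detector as axis-aligned boxes (x0, y0, x1, y1); the box format and both function names are hypothetical.

```python
import numpy as np


def boxes_to_mask(height: int, width: int, boxes) -> np.ndarray:
    """Rasterize hypothetical OCR boxes (x0, y0, x1, y1), end-exclusive, into a text mask."""
    mask = np.zeros((height, width), dtype=bool)
    for x0, y0, x1, y1 in boxes:
        mask[y0:y1, x0:x1] = True
    return mask


def masked_l1(generated: np.ndarray, target: np.ndarray, text_mask: np.ndarray) -> float:
    """Mean absolute error over non-text pixels only.

    generated, target: (H, W, C) images; text_mask: (H, W), True where text was detected.
    """
    keep = ~text_mask.astype(bool)
    if not keep.any():
        return 0.0  # nothing left to compare
    diff = np.abs(generated.astype(float) - target.astype(float))
    # diff[keep] selects the (N, C) values of the kept pixels.
    return float(diff[keep].mean())


# Usage: the only mismatch lies inside a detected text box, so the masked loss ignores it.
target = np.zeros((4, 4, 3))
generated = np.zeros((4, 4, 3))
generated[0:2, 0:2] = 1.0
text_mask = boxes_to_mask(4, 4, [(0, 0, 2, 2)])
```

With this loss, the generator is no longer penalized for failing to reproduce toponyms, although the discriminator may still reward character-like shapes unless its inputs are masked as well.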

The models behave differently because of their own designs, and these behaviors could be taken into account to balance the generation of map styles according to what we want to achieve. In the task of transferring an old map style to an ortho-image, we potentially face completely different contents, because of the possible spatio-temporal evolution of geographical features and arrangements, in addition to the substantial difference in styles. Pix2Pix preserves the (contemporary) content of the input ortho-image and applies a style effect to this current content; CycleGAN tends to transfer both the old content and the old style to the new map (). This observation calls for a better-informed use of accessible GAN architectures. More recent CNN and GAN developments could probably provide more sophisticated architectures, but at the time they were too difficult to use in this kind of experiment and for such a specific and complex map style generation task.
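One structural difference between the two architectures is visible in their generator objectives. The sketch below writes both with numpy on precomputed arrays: Pix2Pix (Isola et al.) combines an adversarial term with an L1 term towards a paired target map, with λ = 100 in the original paper, whereas CycleGAN (Zhu et al.) has no paired target and constrains content only through cycle consistency, with λ = 10 in the original paper. For simplicity both use a binary cross-entropy adversarial term, whereas the CycleGAN reference implementation uses a least-squares loss; this is an illustration of the objectives, not the configuration used in our experiments.

```python
import numpy as np

EPS = 1e-8


def adversarial_g_loss(d_fake: np.ndarray) -> float:
    """Non-saturating generator loss -mean(log D(fake)); d_fake holds probabilities."""
    return float(-np.mean(np.log(np.clip(d_fake, EPS, 1.0))))


def l1(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.mean(np.abs(a - b)))


def pix2pix_g_loss(d_fake, fake_map, real_map, lam: float = 100.0) -> float:
    # Paired setting: each ortho-image x has a co-registered map y,
    # so the output G(x) is pulled pixel-wise towards y.
    return adversarial_g_loss(d_fake) + lam * l1(fake_map, real_map)


def cyclegan_g_loss(d_map_fake, d_ortho_fake, ortho, ortho_rec, map_, map_rec,
                    lam: float = 10.0) -> float:
    # Unpaired setting: no pixel-wise target. Content is constrained only through the
    # cycles x -> G(x) -> F(G(x)) ~ x and y -> F(y) -> G(F(y)) ~ y.
    cycle = l1(ortho_rec, ortho) + l1(map_rec, map_)
    return adversarial_g_loss(d_map_fake) + adversarial_g_loss(d_ortho_fake) + lam * cycle
```

Because nothing in the CycleGAN objective ties a generated map to a specific co-registered target, the generator is freer to reproduce content typical of the target domain, which is at least consistent with the behavior observed above.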

These observations and analyses lead us to think further about how to jointly analyze the visual quality of the resulting images and the possible optima of the loss functions. We come back here to optimization issues, and to how to balance, within the sets of output images, between possible ‘good’, ‘suitable for a given use’, and ‘better’ solutions.

5. Conclusion

Our experiment on neural map style transfer with GANs is very promising, as it effectively generates new map styles by transferring old, complex map styles to ortho-images.

Generating new styles from historical maps and current aerial images raises problems of visual complexity related to the notion of style. Style also requires taking additional temporal effects into account: some geographic and cartographic objects differ over time in geometry and possibly in topology, which raises domain adaptation issues. Our work therefore differs from the state of the art in that it generates multiple styles from data whose geometric matching is limited because of the evolution of the territory.

The quality of the generated images depends strongly on the level of visual complexity of the explored map styles and ortho-images. In some cases, the high density of visual information can lead the models to confuse cartographic objects. To address this visual complexity, we experimented with controls over the process by augmenting the road networks, which led to better results that go beyond preserving the spatial topology and mix content and style from both (even different) domains.

We still face limitations on the style transfer quality, due to the visual complexity of both the ortho-images and the styles, which require several improvements to the design of our task and protocol: (i) identifying perceptual indicators (Gatys et al., 2017) would be relevant to evaluate the quality of the neural map style transfer, and could eventually provide new levers to refine the whole process; (ii) working further on salient spatial graphs and arrangements to preserve visual topology is also at stake, and could be addressed by controlling the geometries and/or the styles upstream of the process; (iii) using other existing visual representations of the territory (engravings, photographs, paintings) would allow us to explore the capacities of our map style generation.
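A natural starting point for such perceptual indicators is the Gram-matrix style representation of Gatys et al. (2015, 2016): a style is summarized by the correlations between feature channels of a CNN layer, which discards spatial layout. The sketch below computes a style distance between two images from their feature maps. It assumes the (C, H, W) activations have already been extracted from some pretrained network (the extraction step is not shown), and it uses a simpler normalization and equal layer weights rather than the exact weighting of the original papers.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """(C, H, W) activations of one CNN layer -> (C, C) channel correlation matrix."""
    c, h, w = features.shape
    f = features.reshape(c, h * w).astype(float)
    return f @ f.T / (h * w)


def style_distance(feats_a, feats_b, weights=None) -> float:
    """Weighted sum over layers of the mean squared difference between Gram matrices.

    feats_a, feats_b: lists of (C, H, W) arrays, one per layer, for two images.
    """
    if weights is None:
        weights = [1.0 / len(feats_a)] * len(feats_a)  # assumption: equal layer weights
    total = 0.0
    for fa, fb, wgt in zip(feats_a, feats_b, weights):
        total += wgt * float(np.mean((gram_matrix(fa) - gram_matrix(fb)) ** 2))
    return total


# Usage with random stand-ins for CNN activations: flipping the spatial layout
# leaves the Gram matrix, and hence the style distance, unchanged.
rng = np.random.default_rng(0)
a = rng.random((4, 5, 5))
b = a[:, ::-1, :]
c = rng.random((4, 5, 5)) * 2.0
```

The invariance to spatial rearrangement shown in the usage is also a limitation for maps: a Gram-based indicator alone would not capture the topology and arrangements that point (ii) aims to preserve.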

Finally, this experiment calls for optimizing how content and style are composited so as to preserve particular spatial arrangements, including geometric and visual topology aspects. Inverse map style transfers are also interesting in this respect, because they provide new bases for further experiments on composing style transfers with GANs. Further work on the design of the task, of its inverse, and of a more refined protocol could help identify compositional rules to investigate in a compositional approach.

This promising research contributes to the main long-term goal of better understanding, and thus learning, the structure of map styles. Improving this knowledge implies capturing the essential visual characteristics of a map style, which remains an open challenge for geovisualization.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Sidonie Christophe

Sidonie Christophe is a senior researcher in GISciences & Geovisualization, focusing on the design and use of (carto)graphic styles for geovisualization and visual analysis purposes. She is also co-director of LASTIG and leader of the GEOVIS team, at Université Gustave Eiffel and IGN-ENSG.

Samuel Mermet

Samuel Mermet and Morgan Laurent, MSc students at Polytech Nantes, carried out their research project on deep learning and map design.

Guillaume Touya

Guillaume Touya is a senior researcher in GISciences and cartographic generalization, from Université Gustave Eiffel and IGN-ENSG.

Notes

3 Careful work was done here to select suitable, complete, sufficiently homogeneous, and typical maps according to their specific assembly tables, in order to avoid pitfalls specific to some map types: problems connecting sheets, missing or incomplete data in some areas, uncolored sheets, etc.

5 It is important to note that technical choices on such a topic (sampling plan, sample sizes, tools) are closely tied to computational time constraints; these constraints were reinforced by the pandemic context and the need to work remotely on laptops.

References

- Arbelot, B., Vergne, R., Hurtut, T., & Thollot, J. (2017). Local texture-based color transfer and colorization. Computers and Graphics, 62(5), 15–27. https://doi.org/10.1016/j.cag.2016.12.005

- Bogucka, E. P., & Meng, L. (2019). Projecting emotions from artworks to maps using neural style transfer. In Proceedings of the ICA (Vol. 2, pp. 1–8). Copernicus GmbH.

- Chen, X., Chen, S., Xu, T., Yin, B., Peng, J., Mei, X., & Li, H. (2020). SMAPGAN: Generative adversarial network-based semisupervised styled map tile generation method. IEEE Transactions on Geoscience and Remote Sensing, 1–19.

- Christophe, S., Dumenieu, B., Turbet, J., Hoarau, C., Mellado, N., Ory, J., Loi, H., Masse, A., Arbelot, B., Vergne, R., Brédif, M., Hurtut, T., Thollot, J., & Vanderhaeghe, D. (2016). Map style formalization: Rendering techniques extension for cartography. In P. Bénard & H. Winnemöller (Eds.), Proceedings of Non-Photorealistic Animation and Rendering. The Eurographics Association. https://doi.org/10.2312/exp.20161064

- Christophe, S., & Hoarau, C. (2012). Expressive map design based on pop art: Revisit of semiology of graphics? Cartographic Perspectives, 73, 61–74. https://doi.org/10.14714/CP73.646

- Courtial, A., Ayedi, A. E., Touya, G., & Zhang, X. (2020). Exploring the potential of deep learning segmentation for mountain roads generalisation. ISPRS International Journal of Geo-Information, 9(5), 338. https://doi.org/10.3390/ijgi9050338

- Dumoulin, V., Shlens, J., & Kudlur, M. (2016). A learned representation for artistic style. CoRR, arXiv:1610.07629.

- Ganguli, S., Garzon, P., & Glaser, N. (2019). GeoGAN: A conditional GAN with reconstruction and style loss to generate standard layer of maps from satellite images. arXiv:1902.05611.

- Gatys, L. A., Ecker, A. S., & Bethge, M. (2015). A neural algorithm of artistic style. CoRR, arXiv:1508.06576.

- Gatys, L. A., Ecker, A. S., & Bethge, M. (2016). Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2414–2423.

- Gatys, L. A., Ecker, A. S., Bethge, M., Hertzmann, A., & Shechtman, E. (2017). Controlling perceptual factors in neural style transfer. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Huang, X., Liu, M.-Y., Belongie, S., & Kautz, J. (2018). Multimodal unsupervised image-to-image translation. arXiv:1804.04732.

- Isola, P., Zhu, J.-Y., Zhou, T., & Efros, A. A. (2016). Image-to-image translation with conditional adversarial networks. In CVPR 2017.

- Jenny, B., Heitzler, M., Singh, D., Farmakis-Serebryakova, M., Liu, J., & Hurni, L. (2020). Cartographic relief shading with neural networks. IEEE Transactions on Visualization and Computer Graphics, 27(2), 1225–1235. https://doi.org/10.1109/TVCG.2020.3030456

- Kang, Y., Gao, S., & Roth, R. E. (2019). Transferring multiscale map styles using generative adversarial networks. International Journal of Cartography, 5(2-3), 115–141. https://doi.org/10.1080/23729333.2019.1615729

- Kang, Y., Rao, J., Wang, W., Peng, B., Gao, S., & Zhang, F. (2020). Towards cartographic knowledge encoding with deep learning: A case study of building generalization. In AutoCarto 2020, the 23rd International Research Symposium on Cartography and GIScience.

- Kyprianidis, J. E., Collomosse, J., Wang, T., & Isenberg, T. (2013). State of the ‘Art’: A taxonomy of artistic stylization techniques for images and video. IEEE Transactions on Visualization and Computer Graphics, 19(5), 866–885. https://doi.org/10.1109/TVCG.2012.160

- Li, J., Chen, Z., Zhao, X., & Shao, L. (2020). MapGAN: An intelligent generation model for network tile maps. Sensors, 20(11), 3119. https://doi.org/10.3390/s20113119

- Li, Y., Wang, N., Liu, J., & Hou, X. (2017). Demystifying neural style transfer. arXiv:1701.01036.

- Loi, H., Hurtut, T., Vergne, R., & Thollot, J. (2017). Programmable 2D arrangements for element texture design. ACM Transactions on Graphics, 36(4): 105a. https://doi.org/10.1145/3072959.2983617

- Long, J., Shelhamer, E., & Darrell, T. (2015). Fully convolutional networks for semantic segmentation. arXiv:1411.4038.

- Luan, F., Paris, S., Shechtman, E., & Bala, K. (2017). Deep photo style transfer. arXiv:1703.07511.

- Ory, J., Christophe, S., Fabrikant, S. I., & Bucher, B. (2015). How do map readers recognize a topographic mapping style? The Cartographic Journal, 52(2), 193–203. https://doi.org/10.1080/00087041.2015.1119459

- Park, D. Y., & Lee, K. H. (2019). Arbitrary style transfer with style-attentional networks. arXiv:1812.02342.

- Semmo, A., Isenberg, T., & Döllner, J. (2017). Neural style transfer: A paradigm shift for image-based artistic rendering? In NPAR 2017 – Proceedings of Expressive Joint Symposium (pp. 5:1–5:13). ACM.

- Simo-Serra, E., Iizuka, S., & Ishikawa, H. (2017). Mastering sketching: Adversarial augmentation for structured prediction. arXiv:1703.08966.

- Weinman, J., Chen, Z., Gafford, B., Gifford, N., Lamsal, A., & Niehus-Staab, L. (2019). Deep neural networks for text detection and recognition in historical maps. In IEEE 2019 International Conference on Document Analysis and Recognition (ICDAR) (pp. 902–909). ISSN: 2379–2140.

- Xu, Z., Wilber, M., Fang, C., Hertzmann, A., & Jin, H. (2019). Learning from multi-domain artistic images for arbitrary style transfer. In C. S. Kaplan, A. Forbes and S. DiVerdi (Eds.), ACM/EG Expressive Symposium. The Eurographics Association.

- Zhang, Y., Yin, Y., Zimmermann, R., Wang, G., Varadarajan, J., & Ng, S.-K. (2020). An enhanced GAN model for automatic satellite-to-map image conversion. IEEE Access, 8, 176704–176716. https://doi.org/10.1109/Access.6287639

- Zhu, J.-Y., Park, T., Isola, P., & Efros, A. A. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Computer Vision (ICCV), 2017 IEEE International Conference on.