Abstract

We suggest a wavelet filtering technique as a remedy to the problem of measurement errors when testing for cointegration using Johansen’s (1988) likelihood ratio test. Measurement errors, which are almost always present in empirical economic data, mean that the variable of interest (the true signal) is contaminated with noise, which may induce biased and inconsistent estimates and erroneous inference. Our Monte Carlo experiments demonstrate that measurement errors distort the statistical size of Johansen’s cointegration test in finite samples; the test is significantly oversized. A major finding of this article is that the proposed wavelet-based technique significantly improves the statistical size of the traditional Johansen test in small and medium-sized samples. Since Johansen’s test is a standard cointegration test, and we demonstrate that the measurement errors routinely present in empirical data oversize the test, this simple alteration can be used in most situations to obtain more reliable finite-sample inference. We empirically examine the long-run relation between CO2 emissions and real GDP in the G7 countries. The traditional Johansen tests provide evidence of an equilibrium relation for Canada and weak evidence for the US. However, the suggested size-unbiased wavelet-filtering approach consistently indicates no evidence of cointegration for all six countries analyzed.

1 Introduction

The presence of measurement errors may lead to erroneous inference in finite samples. In empirical economic data, some degree of measurement error is more the rule than the exception. As a consequence, standard measures are often misleading. There are several plausible sources of measurement errors, such as inaccuracies in data recording and definitions used by statistical offices that do not correspond to the series intended for statistical or economic analysis (Haldrup, Montanes, and Sanso 2005). Moreover, variables used in routine analysis may be subject to measurement errors, for example estimated regressors in regression analysis and estimated factors in dynamic factor modeling (Pagan 1984; Hong, Ahn, and Cho 2016). Other examples mentioned in the literature include situations where official statistics are compiled from survey samples rather than the entire population, or where empirical variables that enter the statistical analysis are only imperfect proxies for the theoretical quantities the investigator has in mind (Nielsen 2016).

Notwithstanding the vast evidence that measurement errors can bias estimates and distort the inference, neither the econometric research community, nor the practitioners have paid much attention to it. Cragg (Citation1994) argues that the apparent neglect of errors in variables arises for several reasons. The first reason is due to convenience; the impression that not much can be done because there are no obvious appropriate methods to deal with extra noise in the data. The second reason has its roots in the impression obtained from econometrics texts about measurement errors. Most textbooks describe the effect of measurement errors using the bivariate linear model. Employing the bivariate linear model, it has been demonstrated that measurement errors have an attenuation effect and a contamination effect. The former refers to the bias toward zero of the slope coefficients,1 while the latter consists of the bias in the intercept of opposite sign when the average of the explanatory variable is positive.

The research development regarding non-stationary time-series has had one of the greatest impacts on econometric analysis of all time. In 1905, Karl Pearson2 coined the term random walk while Yule (Citation1926) further developed these ideas and later initialized the concept of spurious, or nonsense, regressions. At least until the 1980s, many economists applied OLS on non-stationary time series variables, which Granger and Newbold (Citation1974) illustrated could generate spurious regressions. In 1987, the Nobel laureates Engle and Granger demonstrated that two nonstationary series can have stationary linear combinations. Following the seminal article by Engle and Granger (Citation1987), cointegration has been a vital element of the standard toolbox for analyzing long-run macroeconomic relationships. To overcome some of the shortcomings3 of the Engle-Granger two-stage approach to cointegration, Johansen (Citation1988, Citation1991) developed the maximum likelihood approach for the analysis of cointegration in VAR models with Gaussian errors.

Some studies have investigated the effect of measurement errors on cointegration relationships. Granger (2009) demonstrates that when two nonstationary cointegrated series are measured with errors, they are still cointegrated if all the measurement errors are I(0). However, Fischer (1990) investigates Granger’s proposition through Monte Carlo simulation and demonstrates that measurement errors result in over-rejection of the null hypothesis of no cointegration. To reduce the Type I error, he suggests using a smaller significance level for cointegration tests when series are measured with errors. Hassler and Kuzin (2009) demonstrate through Monte Carlo simulation that the rate of convergence of static OLS is not affected by errors in variables, but the limiting distribution does change. In a stationary framework, however, the presence of measurement errors biases the OLS estimator toward zero; this effect, known as attenuation bias, does not vanish asymptotically, leading to inconsistent OLS estimates.

Hong, Ahn, and Cho (Citation2016) investigate the effect of measurement errors on the speed-of-adjustment vector and on the cointegration vector. Their findings suggest that the reduced-rank estimator of the speed of adjustment vector is inconsistent, while that of the cointegrating vector is consistent but asymptotically biased. Nielsen (Citation2016) extends the co-integrated vector autoregression of Johansen (Citation1988, Citation1991, Citation1996) to the case where the variables are observed with a measurement error. Through Monte Carlo simulation, Nielsen finds that unmodeled measurement errors cause a substantial asymptotic bias in the adjustment coefficients. As a remedy, he uses a state-space form to parameterize the model, and the Kalman filter to evaluate the likelihood, and argues that after modeling measurement errors, the finite sample properties of estimators and reduced rank test statistics improve.

Duffy and Hendry (2017) analyze and simulate the impact of integrated measurement errors on parameter estimates and tests in a bivariate cointegrated system. They find that, in the presence of large trends and shifts, measurement errors cause the conventional attenuation bias but have little effect on the cointegration analysis.

Apart from Nielsen (2016), who attempts to provide a solution, the literature discussed above contributes to the understanding of the effect of measurement errors on cointegration but does not suggest a practical way to overcome the problem. This article advances the field by introducing a method that can be applied in finite samples. Through Monte Carlo simulation, we investigate the effect of measurement errors in variables on the size and the power of Johansen’s trace and maximum eigenvalue tests.4 Unlike previous studies, we propose a wavelet-based approach to denoise series that are measured with errors in order to improve the size and the power of Johansen’s (1988) cointegration test. This approach is then applied to investigate the long-run relationship between CO2 emissions and real GDP.

However, it is important to highlight the type of errors that one is referring to. As discussed in Morgenstern (1963) and later argued by Cragg (1994), the problem of measurement errors can refer to, but is not limited to, two main types of errors: first, mistakes in measuring an appropriately conceived quantity, and second, errors in the definition of the concept being measured, so that the interpretation of the measurement does not correspond to what is actually measured. Econometricians are usually more inclined to deal with the former. The reason is that the lack of connection between the measurement and the concept being measured, which is actually a more serious problem in economic analysis, requires the redefinition of variables. The first type, which is the topic of this article, refers to measurement errors in the classical5 sense, that is, a random error added to an otherwise appropriate measure.

The rest of the article is organized as follows. Sec. 2 discusses the econometric methods. In Sec. 3, the Monte Carlo experiment is described. Simulation results are presented and discussed in the fourth section, while Sec. 5 presents the empirical application. Sec. 6 presents the summary and conclusions.

2 Econometric methods

2.1 The likelihood ratio test and the effect of measurement errors

Consider a vector of observed variables that are contaminated with measurement errors:

(1)

(1) and

(2)

(2)

where xt,

, and ωt are K-vectors of the true signal, the error term and an additive noise, respectively; ωt is an independent white noise process that satisfies the classical assumption.

Johansen (Citation1988) likelihood ratio (LR) test is based on the vector error correction model (VECM) of order one (the order may be increased without any loss of generality):(3)

(3)

Under the null hypothesis of no cointegration, Π = 0. The Johansen’s trace statistic is obtained from the eigenvalues of the matrix (see Hassler and Kuzin Citation2009):(4)

(4) where

.

The LR statistic is given by(5)

(5)

In Johansen (1988), the asymptotic distribution is derived, and it is noted that the critical values may be found and tabulated using simulation techniques. To show the effect of measurement errors on the signal-to-noise ratio, following Hamilton (1994), let us rewrite Eq. (1) in first differences:

where

(6)

(6)

Then the first difference of the observed series follows an MA(1) process

where δt is a white noise process satisfying the classical assumptions, and θ and δt satisfy the following:

(7)

(7)

The signal to noise ratio may therefore be rewritten as:(8)

(8)

Now one may see that as the signal to noise ratio goes to infinity and as

so does the signal to noise ratio too. Hassler and Kuzin (2009) show that the asymptotic distribution depends on the magnitude of this moving average parameter, which is a nuisance parameter. They suggest estimating this nuisance parameter to resolve the issue of measurement errors. In this article, however, we suggest another option that does not require estimating the nuisance parameter: we first wavelet-denoise the time series and then apply the standard Johansen (1988) test. This approach is relatively convenient for practitioners to apply, even though a tuning parameter must be estimated. In the next section we show how this parameter is estimated, and the simulation study demonstrates that it works very well.
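The link between the moving average parameter and the signal-to-noise ratio can be sketched with the standard argument in Hamilton (1994). The sketch below is written for the scalar case and rests on assumptions made only for illustration: the true signal is a random walk with innovation $\varepsilon_t$ of variance $\sigma_\varepsilon^2$, the measurement error $\omega_t$ is white noise with variance $\sigma_\omega^2$ and independent of $\varepsilon_t$, and $y_t$ denotes the observed series. The notation need not coincide with that of Eqs. (1)–(8).

$$
\begin{aligned}
\Delta y_t &= \varepsilon_t + \omega_t - \omega_{t-1}, \\
\gamma_0 &= \sigma_\varepsilon^2 + 2\sigma_\omega^2, \qquad \gamma_1 = -\sigma_\omega^2, \qquad \gamma_k = 0 \ \text{for } k \ge 2, \\
\Delta y_t &= \delta_t + \theta\,\delta_{t-1} \ \Rightarrow\ \gamma_0 = (1+\theta^2)\,\sigma_\delta^2, \qquad \gamma_1 = \theta\,\sigma_\delta^2, \\
\frac{\sigma_\varepsilon^2}{\sigma_\omega^2} &= -\frac{1+\theta^2}{\theta} - 2 = -\frac{(1+\theta)^2}{\theta}, \qquad -1 < \theta < 0 .
\end{aligned}
$$

Under these assumptions, the ratio grows without bound as $\theta \to 0$ (no measurement error) and shrinks to zero as $\theta \to -1$ (noise dominates).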

2.2 Wavelet denoising

Observed time series are often noisy. Denoising them consists of removing the noise to recover the signal to be analyzed. Several methods have been suggested. The Fourier transform (FT) has been widely applied in different fields, such as medical imaging and signal processing, and more recently in economic time series analysis. The basic premise of the FT is that any signal may be expressed as an infinite superposition of sinusoidal functions, that is, as a linear combination of sines and cosines. However, the underlying assumption is only consistent with processes whose signals are periodic and globally stationary (Gençay, Selçuk, and Whitcher 2002).

A wavelet (small wave as opposed to sinusoids or big wave used in Fourier analysis) w(t) is local in time and local in frequency, and its oscillations damp to zero very rapidly over time. Considering that most financial and economic time series are nonstationary, the wavelet transform (WT) overcomes the limitations (poor time localization, fixed time and frequency localization) of the FT.

Consider the observed time series that is composed of signal and noise, as in Eq. (1):

$$y_t = x_t + \omega_t,$$

where $x_t$ is the signal and $\omega_t$ is the noise, assumed to be independent Gaussian white noise. Following the approach of Donoho and Johnstone (1994), $x_t$ and $\omega_t$ can be disentangled. Extracting the signal follows several steps. The first step consists of performing the wavelet transform. The wavelet transform utilizes a basic function (mother wavelet), then dilates and translates it to capture features that are local in time and local in frequency (Gençay, Selçuk, and Whitcher 2002).

To calculate the scaling coefficients and the wavelet coefficients, one can apply the discrete wavelet transform (DWT) or the maximal overlap discrete wavelet transform (MODWT) to the time series. The MODWT has an advantage over the DWT: while the DWT of level J restricts the sample size to an integer multiple of $2^J$, there is no such limitation for the MODWT, which can handle any sample size (Percival and Walden 2000; Habimana 2019). Given that the DWT requirement is rarely satisfied, we employ the MODWT.

To apply the MODWT, a discrete wavelet filter is needed. We use the Haar6 wavelet filter (Haar Citation1910). The discrete Haar filter has a length L = 2 that can be defined by its wavelet coefficients and scaling coefficients

. For level one, the coefficients are given by:

(9)

(9) and for level two:

(10)

(10)

The Haar wavelet is a symmetric compactly supported orthonormal wavelet, and presents the basic properties shared by most wavelet filters (Daubechies Citation1992; Gençay, Selçuk, and Whitcher Citation2002).

The scaling coefficients at levels j = 1, 2 in Eqs. (9) and (10) are calculated using a weighted moving average, where $g_l$ are the weights. The wavelet coefficients at levels j = 1, 2 in Eqs. (9) and (10) are calculated using a weighted difference operator, where $h_l$ are the weights (Månsson 2012).
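The moving-average and difference operations described above can be sketched in a few lines. This is a minimal illustration, assuming the standard Haar MODWT filters (scaling weights 1/2, 1/2 and wavelet weights 1/2, −1/2), the pyramid algorithm, and circular boundary treatment. The function name `haar_modwt` is hypothetical, and the boundary handling is a simplification that may differ from the one used in the article.

```python
import numpy as np

def haar_modwt(x, levels=2):
    """Haar MODWT by the pyramid algorithm with circular boundaries.

    Returns (wavelet, scaling): a list with one array of wavelet
    coefficients per level, and the scaling coefficients at the
    coarsest level. Every array has the same length as x.
    """
    v = np.asarray(x, dtype=float)
    wavelet = []
    for j in range(1, levels + 1):
        # lagged[t] = v[t - 2**(j-1)], wrapping around at the start
        lagged = np.roll(v, 2 ** (j - 1))
        wavelet.append(0.5 * (v - lagged))  # weighted difference
        v = 0.5 * (v + lagged)              # weighted moving average
    return wavelet, v

x = np.array([1.0, 2.0, 3.0, 4.0])
w, v = haar_modwt(x, levels=2)
```

Because no downsampling takes place, the sample size does not need to be a multiple of $2^J$, which is the practical advantage of the MODWT noted above.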

The wavelet coefficients are then thresholded. There are several methods for thresholding, including universal thresholding, minimax estimation, Stein’s unbiased risk estimate, and Bayesian methods, among others. In this study we opt for universal thresholding, for which Donoho and Johnstone (1994) set

$$\delta = \sigma \sqrt{2 \log N}, \tag{11}$$

where $\sigma$ is the standard deviation of the noise and $N$ is the number of observations. Coefficients whose absolute values are below the threshold δ are set to zero. The advantage of universal thresholding is that it removes additive Gaussian noise with high probability. This matches the measurement error setting considered here, in which the errors are Gaussian noise.
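As a hedged illustration of universal thresholding, the sketch below computes the standard Donoho and Johnstone threshold, the noise standard deviation times the square root of 2 log N. The noise level is rarely known in practice. The helper `mad_sigma` estimates it from the median absolute deviation of the finest-level coefficients of an orthonormal transform, which is a common choice but an assumption here, since the article does not state which estimator is used. Both function names are hypothetical. For MODWT coefficients at level j, the noise standard deviation is smaller by a factor of $2^{j/2}$, so the threshold would be rescaled accordingly.

```python
import numpy as np

def universal_threshold(sigma, n):
    """Universal threshold: sigma * sqrt(2 * ln(n))."""
    return sigma * np.sqrt(2.0 * np.log(n))

def mad_sigma(finest_coeffs):
    """Robust noise scale from the median absolute deviation.

    0.6745 is the 0.75 quantile of the standard normal, so the
    estimate is consistent for Gaussian noise.
    """
    return np.median(np.abs(finest_coeffs)) / 0.6745

delta = universal_threshold(1.0, 100)
sigma_hat = mad_sigma(np.array([-1.0, 0.0, 1.0, 2.0, -2.0]))
```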

The next step consists of selecting the thresholding rule. Nonlinear thresholding rules are preferred to linear ones because of their ability to adapt to rapidly changing nonstationary features (Donoho and Johnstone Citation1994; Gençay, Selçuk, and Whitcher Citation2002). There are two main thresholding rules: hard thresholding and soft thresholding.

Hard thresholding, or ‘keep or kill’, is a straightforward and more intuitive method of denoising. The rule is stated as:

$$\tilde{w}^{(h)} = \begin{cases} w, & \text{if } |w| > \delta \\ 0, & \text{otherwise} \end{cases} \tag{12}$$

where $w$ denotes a wavelet coefficient and the threshold δ is defined in Eq. (11). Only those wavelet coefficients whose absolute values exceed the threshold are kept; all others are set to zero. Hard thresholding is appropriate when there are large spikes in the observed series.

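Unlike hard thresholding, which sets some coefficients to zero and leaves the others untouched, soft thresholding pushes all coefficients toward zero, and those wavelet coefficients that are smaller in magnitude than the threshold are set to zero:

$$\tilde{w}^{(s)} = \operatorname{sign}(w)\,(|w| - \delta)_{+} \tag{13}$$

where $\tilde{w}^{(s)}$ are the soft wavelet denoised coefficients,

$$\operatorname{sign}(w) = \begin{cases} +1, & w > 0 \\ 0, & w = 0 \\ -1, & w < 0 \end{cases} \qquad (x)_{+} = \begin{cases} x, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{14}$$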

Soft thresholding is a continuous mapping. Since all wavelet coefficients are pushed toward zero, soft thresholding produces smoother estimates. However, if the observed data contain large spikes, this type of thresholding will suppress them (Gençay, Selçuk, and Whitcher 2002).
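The two rules can be compared directly on a small vector of coefficients. The sketch below follows the verbal definitions given above; the function names and the example values are illustrative only.

```python
import numpy as np

def hard_threshold(w, delta):
    """Keep coefficients with |w| > delta unchanged; set the rest to zero."""
    w = np.asarray(w, dtype=float)
    return np.where(np.abs(w) > delta, w, 0.0)

def soft_threshold(w, delta):
    """Shrink every coefficient toward zero by delta; small ones become zero."""
    w = np.asarray(w, dtype=float)
    return np.sign(w) * np.maximum(np.abs(w) - delta, 0.0)

coeffs = np.array([-3.0, -0.5, 0.2, 1.5])
hard = hard_threshold(coeffs, 1.0)   # large coefficients survive unchanged
soft = soft_threshold(coeffs, 1.0)   # large coefficients survive but shrink
```

With a threshold of 1, hard thresholding leaves −3 and 1.5 intact, whereas soft thresholding shrinks them to −2 and 0.5. This is why the soft rule gives smoother output but dampens genuine spikes.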

In search of a compromise between hard and soft thresholding rules, other rules have been proposed, such as Garrote’s shrinkage approach, so-called firm shrinkage, and smoothly clipped absolute deviation (SCAD) shrinkage (see Gao and Bruce Citation1997; Antoniadis Citation2007). In this article, we apply both soft and hard thresholding rules.

The final step of the algorithm consists of taking the inverse transform of the scaling coefficients and the denoised wavelet coefficients to reconstruct the time series with the measurement errors removed. This inverse is based on the same filter as described in Eqs. (9) and (10). Hence, $g_l$ and $h_l$ are used to invert the transformation (see Gençay, Selçuk, and Whitcher 2002 for details).
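The complete sequence of steps (transform, threshold, inverse transform) can be put together as follows. This is a sketch under stated assumptions rather than the implementation used in the article: it uses the Haar MODWT with circular boundaries, a noise scale estimated from the median absolute deviation of the level-one coefficients, and a universal threshold rescaled by $2^{j/2}$ at level j. All function names are hypothetical.

```python
import numpy as np

def haar_modwt(x, levels):
    """Forward Haar MODWT (pyramid algorithm, circular boundaries)."""
    v = np.asarray(x, dtype=float)
    w = []
    for j in range(1, levels + 1):
        lag = np.roll(v, 2 ** (j - 1))      # lag[t] = v[t - 2**(j-1)]
        w.append(0.5 * (v - lag))
        v = 0.5 * (v + lag)
    return w, v

def haar_imodwt(w, v):
    """Inverse Haar MODWT, using the same filter weights as the forward pass."""
    for j in range(len(w), 0, -1):
        k = 2 ** (j - 1)
        wj = w[j - 1]
        # np.roll(a, -k)[t] = a[t + k]
        v = 0.5 * (wj - np.roll(wj, -k)) + 0.5 * (v + np.roll(v, -k))
    return v

def wavelet_denoise(x, levels=2, rule="soft"):
    """Threshold the wavelet coefficients and invert the transform."""
    x = np.asarray(x, dtype=float)
    n = x.size
    w, v = haar_modwt(x, levels)
    # Assumption: MAD-based noise scale; sqrt(2) undoes the MODWT scaling at level 1.
    sigma = np.sqrt(2.0) * np.median(np.abs(w[0])) / 0.6745
    shrunk = []
    for j, wj in enumerate(w, start=1):
        delta = sigma / 2 ** (j / 2) * np.sqrt(2.0 * np.log(n))
        if rule == "hard":
            shrunk.append(np.where(np.abs(wj) > delta, wj, 0.0))
        else:
            shrunk.append(np.sign(wj) * np.maximum(np.abs(wj) - delta, 0.0))
    return haar_imodwt(shrunk, v)

rng = np.random.default_rng(1)
series = np.cumsum(rng.standard_normal(64)) + 0.5 * rng.standard_normal(64)
denoised = wavelet_denoise(series, levels=2, rule="soft")
```

Without thresholding, the inverse reproduces the input exactly, which is a convenient check on the filter pair. The circular boundary is a simplification: for trending series it creates an artificial jump between the last and first observations, so a reflection boundary may be preferable in applications.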

3 The Monte Carlo experiments

Consider the following VECM with two series and

:

(15)

(15) where

and

are the adjustment parameters to the equilibrium.

To incorporate measurement errors, independent and normally distributed white noise is added as follows:(16)

(16)

Throughout the experiment, s ∈ {0, 0.25, 0.5, 0.75, 1}. This ranges from no measurement error up to the situation where the variance of the noise caused by the measurement error equals the variance of the classical error term. We limit ourselves to this range since, in empirical situations, it is not sensible for the variance of the measurement errors to exceed the variance of the error term. Hence, when the variance of the measurement error is equal or close to the variance of the error term, the measurement errors are considered to be large. This setting is close to that of Hong, Ahn, and Cho (2016); the only difference is that they use a slightly higher variance for the measurement error than for the error term.

To compute the size, and

in EquationEq. (15)

(15)

(15) are set equal to zero for the true null hypothesis of no cointegrating vector (

= 0) for both the trace and max eigenvalue tests. And for the power

to emphasize on the power of the test in the presence of near-non-cointegrated7 processes, and

is set equal to zero.8 Since

N(0,1), η is equal to zero. The size and power are calculated for

. The process is repeated 10,000 times, and the rejection frequencies are presented at a nominal level of 5%.
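The design of the size experiment can be illustrated with a small simulation. The sketch below is not the authors' code and makes several assumptions that are labeled here and in the comments: a hypothetical bivariate VECM in which only the first variable adjusts to the equilibrium error with coefficient `alpha1`, standard normal innovations, measurement noise with standard deviation `s`, a trace statistic for a VECM of order one without deterministic terms, and a critical value simulated under s = 0. The number of replications is kept small for speed.

```python
import numpy as np

def trace_stat(y):
    """Johansen trace statistic for H0: no cointegration.

    Assumes a VECM of order one with no deterministic terms.
    """
    dy, ylag = np.diff(y, axis=0), y[:-1]
    T = dy.shape[0]
    s00 = dy.T @ dy / T
    s01 = dy.T @ ylag / T
    s11 = ylag.T @ ylag / T
    # Eigenvalues of S11^{-1} S10 S00^{-1} S01 (squared canonical correlations)
    m = np.linalg.solve(s11, s01.T) @ np.linalg.solve(s00, s01)
    lam = np.clip(np.linalg.eigvals(m).real, 0.0, 1.0 - 1e-12)
    return -T * np.sum(np.log1p(-lam))

def simulate(T, alpha1, s, rng, burn=50):
    """Hypothetical DGP: dy1 = alpha1 * (y1 - y2)_{t-1} + e1, dy2 = e2.

    alpha1 = 0 gives two independent random walks (no cointegration).
    The observed series adds white noise with standard deviation s.
    """
    e = rng.standard_normal((T + burn, 2))
    y = np.zeros((T + burn, 2))
    for t in range(1, T + burn):
        ec = y[t - 1, 0] - y[t - 1, 1]
        y[t, 0] = y[t - 1, 0] + alpha1 * ec + e[t, 0]
        y[t, 1] = y[t - 1, 1] + e[t, 1]
    y = y[burn:]
    return y + s * rng.standard_normal(y.shape)

def rejection_rate(T, alpha1, s, crit, reps, rng):
    """Share of replications in which the trace statistic exceeds crit."""
    stats = [trace_stat(simulate(T, alpha1, s, rng)) for _ in range(reps)]
    return float(np.mean(np.array(stats) > crit))

rng = np.random.default_rng(0)
T, reps = 100, 500
# 5% critical value simulated under the null without measurement errors
null_stats = np.array([trace_stat(simulate(T, 0.0, 0.0, rng)) for _ in range(reps)])
crit = float(np.quantile(null_stats, 0.95))
# Empirical size when the series are observed with noise (s = 1)
size_noisy = rejection_rate(T, 0.0, 1.0, crit, reps, rng)
```

Setting `alpha1` to a small negative value instead of zero turns the same function into a power calculation against a near-non-cointegrated alternative. Passing each simulated series through a denoising step before `trace_stat` gives the wavelet-based counterpart.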

4 Simulation results

In this section, we present and discuss the results of the Monte Carlo experiment. The rejection frequencies of the true null hypothesis, as well as the rejection frequencies of the false null hypothesis, are reported and discussed. The former is the statistical size of the test whereas the latter represents the statistical power. The size and the power are computed for Johansen’s trace and maximum eigenvalue tests for two cases. In the first case we apply the tests on raw data, while in the second case we repeat the same tests on the same, but wavelet denoised, data.

4.1 Empirical size

Table 1 presents the rejection frequencies of the null hypothesis of no cointegration, that is, when the adjustment parameters are set to zero. In the Appendix we present critical values for the 5% level of significance using Eq. (15) based on 10,000 replicates. We use the critical values when s = 0 (i.e., when there are no measurement errors). The empirical size is compared to the nominal size of 5% for different magnitudes of measurement errors and sample sizes. For s = 0 (series are generated without measurement errors) the Johansen test performs well when applied to both raw and wavelet-denoised data. The size of the maximum eigenvalue test is the closest to the nominal size, and soft thresholding performs better than hard thresholding.

Table 1 Empirical size of the Johansen cointegration test.

The near-perfect performance of the Johansen tests in the absence of measurement errors is not surprising in this experiment since it is under the same conditions that the critical values used to compute the size were generated. When measurement errors are introduced in the data generating process (DGP), size distortion increases with s.

For s = 0.25 Johansen tests perform fairly well in both cases (raw and denoised data). The empirical sizes are still close to the nominal size. When s increases to 0.5, one can observe an uncommon situation where the size of the Johansen test is distorted, and the distortion increases with the sample size, for both the trace and the maximum eigenvalue tests. At this point, the asymptotic property of the Johansen test starts to vanish. The wavelet-based test, on the other hand, is a bit undersized in small samples (T < 100), but the size improves asymptotically; the wavelet-denoised test succeeds in rescuing the asymptotic property of the Johansen test. Both thresholding methods yield comparable results, and there are no noticeable differences between the trace and the maximum eigenvalue tests. The distortion becomes more severe when s reaches 0.75; the Johansen test is oversized, and the distortion increases with the sample size (as an example the empirical size equals 0.55 for T = 50 and it increases to 0.8 for T = 500). Measurement errors lead to the over rejection of the null hypothesis of no cointegration. However, when the data is wavelet-denoised the distortion disappears, which results in a size of 4%. Most importantly, the size distortion decreases as the sample size increases. Irrespective of the thresholding method that is used, the maximum eigenvalue test performs better, at least asymptotically.

As expected, for s = 1 the size distortion of the Johansen test is higher. Both the trace and the maximum eigenvalue tests are oversized; and this distortion increases with the sample size evaluated, from 6% for T = 50 to 14% when T = 500. However, we investigated using simulation techniques what happens if we increase the observations (we used 50,000 observations) and in the end the empirical size decreases. Hence, for very large sample sizes the standard convergence to the nominal size occurs. Denoising the data improves the size significantly, and this improvement increases with the sample size.

Overall, the presence of measurement errors distorts the size of the Johansen test. The distortion increases with the sample size for high values of s for moderate sample sizes. For the situations evaluated (which are fairly general with a wide range of observations and different magnitudes of the measurement errors), the critical values as shown in Appendix using s = 0 may be applied.

4.2 Power of the wavelet-based Johansen test

The analysis of the size of the Johansen test in the presence of measurement errors revealed that the test applied to raw data is biased, and the bias increases with the sample size, whereas the test based on the wavelet-denoised data remains unbiased.

Table 2 presents the size-adjusted power when the data are denoised using the Haar filter with soft thresholding and hard thresholding. As expected, a test with a good size comes at a cost; the test is less powerful in small samples.9 However, it is worth mentioning that the lower power in small samples does not come from denoising; even when series are measured without errors (s = 0), the original Johansen test has low power (see Table 3) for adjustment parameters close to zero, that is, in the vicinity of the null hypothesis of no cointegration. The wavelet-based test exhibits very desirable properties; the power improves with the sample size, and most importantly the test is more powerful when applied to noisier series.

Table 2 Power (size-adjusted) of the wavelet-based Johansen test.

Table 3 Power (size-adjusted) of the Johansen (Citation1988)’s test.

There is no significant difference between the trace and the maximum eigenvalue tests, and both thresholding methods (hard and soft) yield similar results. The small difference in power between the trace and the maximum eigenvalue tests is a common finding in simulation studies (see, e.g., Toda 1994; Lütkepohl, Saikkonen, and Trenkler 2001). The explanation is that both tests are likelihood ratio tests, so no substantial difference should be expected. Moreover, the wavelet-based Johansen test, like the original Johansen test, is more powerful when the adjustment toward the equilibrium is faster (the power increases with the magnitude of the adjustment parameter). For small values of the adjustment parameter, one needs a large sample size to achieve acceptable power. The most attractive property of the wavelet-based Johansen test is its high power in the presence of very noisy series. Thus, the wavelet-denoising process is more effective for high values of s.

5 Empirical application

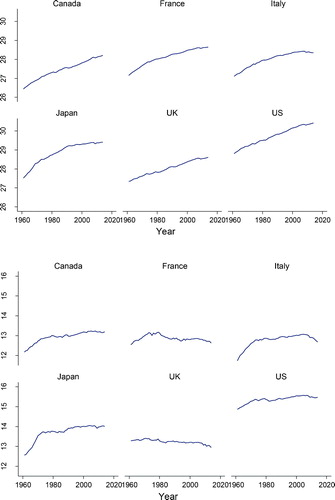

Several studies have pointed out the presence of measurement errors in CO2 emission data (Wang et al. 2017; Quick and Marland 2019; Oda et al. 2019) and in GDP data (Targett 1989; Aruoba et al. 2016; Chang and Li 2018). To illustrate the applicability of the suggested wavelet denoising approach, we consider the long-run relation between CO2 emissions (in metric tons) and real GDP in the seven major advanced economies (G7): Canada, France, Germany,10 Italy, Japan, the United Kingdom and the United States. The data are obtained from the World Development Indicators (WDI) database of the World Bank and span 1961–2014, that is, 54 observations for each country. Figure 1 plots the two variables.

Prior to testing for cointegration, we must assess the integration properties of the two variables. We first check whether or not CO2 emissions and real GDP series are integrated of order one, denoted I(1), or at least integrated of the same order. If they are, they may possibly share the same common stochastic trend component. We apply the Dickey–Fuller generalized least squares (DFGLS) t-test of Elliott, Rothenberg, and Stock (Citation1996). This test is significantly more powerful than previous versions of the ADF test. We test the null hypothesis that the series is a random walk, possibly with drift, against the alternative that the series is stationary about a linear time trend. The results, reported in Appendix, indicate that CO2 emissions and real GDP series are I(1) for all the six countries.

Table 4 reports the results of the Johansen test and the wavelet-based Johansen test. With the traditional Johansen test, the null hypothesis of no cointegration is rejected for Canada at the 5% significance level and for the US at the 10% level. The wavelet-based test, however, suggests no evidence of cointegration for any of the six countries.

Table 4 Johansen’s cointegration rank test for CO2 emissions and real GDP for G7 countries.

6 Concluding remarks

This article investigates the effect of measurement errors on the performance of the Johansen (Citation1988) cointegration likelihood ratio test. By employing Monte Carlo simulations, we find that empirical measurement errors result in over rejection of the true null hypothesis of no cointegration, and what is especially remarkable is that this bias increases as the sample size increases. As a remedy, a wavelet-based denoising transformation of the data is suggested using the Haar filter with MODWT. The statistical size and power of the Johansen test applied on raw and denoised data are computed for different DGPs by varying the parameters that determine the adjustment toward the equilibrium, the signal to noise ratio, and the sample sizes. The simulation results provide evidence that the denoising approach is effective with a statistical size of the wavelet-based Johansen test close to the nominal size. Most importantly, in contrast to Johansen’s test, the size of our wavelet test gets closer to the nominal size as the sample size increases, even for very noisy data.

Moreover, our wavelet-based Johansen test exhibits attractive properties in terms of statistical power. The power improves with the sample size, and the relative gain in power increases as the measurement error increases. The Haar filter combined with soft thresholding yields a less distorted size, and there are no significant differences between hard and soft thresholding when it comes to statistical power. Based on our simulation results, we recommend wavelet-denoising the data before testing for cointegration. We then applied the tests to examine the long-run equilibrium between CO2 emissions and real GDP in the G7 countries. The traditional Johansen (1988) test provides evidence of an equilibrium relation for Canada and weak evidence for the US. However, our wavelet-based Johansen test suggests that these conclusions may be erroneous due to measurement errors that distort the size of the Johansen test and lead to over-rejection of the null hypothesis of no cointegration.

Notes

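1 Consider the regression model $y_i = \alpha + \beta x_i + \varepsilon_i$, where $\tilde{x}_i$ is $x_i$ observed with noise $\omega_i$, so that $\tilde{x}_i = x_i + \omega_i$. Replacing $x_i = \tilde{x}_i - \omega_i$, the regression model becomes $y_i = \alpha + \beta \tilde{x}_i + v_i$ with $v_i = \varepsilon_i - \beta \omega_i$; then $\operatorname{cov}(\tilde{x}_i, v_i) = -\beta \sigma_\omega^2 \neq 0$.

Since $\tilde{x}_i$ is correlated with $v_i$, the OLS estimator is biased: $\operatorname{plim} \hat{\beta} = \lambda \beta$, where $\lambda = \sigma_x^2 / (\sigma_x^2 + \sigma_\omega^2)$ is between 0 and 1, which implies that $\hat{\beta}$ is smaller than β in absolute value. Measurement errors thus bias the OLS coefficient toward zero.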

2 See Hughes B., Random Walks and Random Environments, Vol. I, Sec. 2.1 (Oxford 1995).

3 Unlike Engle-Granger approach, it is possible to add restrictions to the cointegrating vectors; coefficients estimates are symmetrically distributed and median unbiased, even when errors are non-normally distributed (Gonzalo Citation1994).

4 The size is defined as the rejection frequency under the true null hypothesis while the power is defined as the rejection frequency of the false null hypothesis for size-adjusted cointegration tests.

5 One cannot rule out the possibility of non-classical measurement errors; there are cases where measurement errors might be correlated with the true variable (the signal), for example in the tails of the income distribution. For a thorough discussion of the effect of non-classical measurement errors, see Chen, Hong, and Tamer (Citation2005).

6 For robustness checks, in addition to Haar wavelet, the Daubechies wavelet of length four, D(4) and the Daubechies least asymmetric wavelet of length eight, LA(8) were also applied in simulations. The compact support for these wavelets is in the interval [0, L – 1], where L denotes the wavelet length. Thus, Haar wavelet has compact support in interval [0, 1], the D(4) wavelet has compact support in the interval [0, 3], and the LA(8) wavelet has compact support in the interval [0, 7] (Percival and Walden Citation2000; Habimana Citation2018). The results are qualitatively similar across these three wavelets. Due to limited space, however, only Haar-based simulations are presented.

7 Terminology used by Jansson and Haldrup (Citation2002), and Banerjee, Dolado, and Mestre (Citation1998). Near zero values are chosen, since a powerful test is expected to distinguish near-non-cointegrated processes from non-cointegrated ones.

8 This is the case of “weak exogeneity” (Johansen Citation1992; Boswijk Citation1995; Urbain Citation2009). However, Hassler and Kuzin (Citation2009) show that different values of leave the size and power of Johansen’s test essentially unaffected.

9 Compared to over-sized tests.

10 Germany was not included in the analysis because its CO2 emissions series starts only in 1991.

References

- Antoniadis, A. 2007. “Wavelet Methods in Statistics: Some Recent Developments and Their Applications.” Statistics Surveys 1:16–55. doi: 10.1214/07-SS014.

- Aruoba, S. B., F. X. Diebold, J. Nalewaik, F. Schorfheide, and D. Song. 2016. “Improving GDP Measurement: A Measurement-Error Perspective.” Journal of Econometrics 191 (2):384–97. doi: 10.1016/j.jeconom.2015.12.009.

- Banerjee, A., J. Dolado, and R. Mestre. 1998. “Error-Correction Mechanism Tests for Cointegration in a Single-Equation Framework.” Journal of Time Series Analysis 19 (3):267–83. doi: 10.1111/1467-9892.00091.

- Boswijk, P. 1995. “Efficient Inference on Cointegration Parameters in Structural Error Correction Models.” Journal of Econometrics 69 (1):133–58. doi: 10.1016/0304-4076(94)01665-M.

- Chang, A. C., and P. Li. 2018. “Measurement Error in Macroeconomic Data and Economics Research: Data Revisions, Gross Domestic Product, and Gross Domestic Income.” Economic Inquiry 56 (3):1846–69. doi: 10.1111/ecin.12567.

- Chen, X., H. Hong, and E. Tamer. 2005. “Measurement Error Models with Auxiliary Data.” Review of Economic Studies 72 (2):343–66. doi: 10.1111/j.1467-937X.2005.00335.x.

- Cragg, J. G. 1994. “Making Good Inferences from Bad Data.” Canadian Journal of Economics 27 (4):776–800. doi: 10.2307/136183.

- Daubechies, I. 1992. Ten Lectures on Wavelets. CBMS-NSF Regional Conf. Series in Applied Mathematics. Philadelphia, PA: SIAM.

- Donoho, D. L., and I. M. Johnstone. 1994. “Ideal Spatial Adaptation by Wavelet Shrinkage.” Biometrika 81 (3):425–55. doi: 10.1093/biomet/81.3.425.

- Duffy, J. A., and D. F. Hendry. 2017. “The Impact of Integrated Measurement Errors on Modeling Long-Run Macroeconomic Time Series.” Econometric Reviews 36 (6–9):568–87. doi: 10.1080/07474938.2017.1307177.

- Elliott, G., T. Rothenberg, and J. H. Stock. 1996. “Efficient Tests for an Autoregressive Unit Root.” Econometrica 64 (4):813–36. doi: 10.2307/2171846.

- Engle, R. F., and C. W. J. Granger. 1987. “Co-Integration and Error Correction: Representation, Estimation and Testing.” Econometrica 55 (2):251–76. doi: 10.2307/1913236.

- Fischer, A. M. 1990. “Cointegration and I(0) Measurement Error Bias.” Economics Letters 34 (3):255–59. doi: 10.1016/0165-1765(90)90127-M.

- Gao, H. Y., and A. Bruce. 1997. “Waveshrink with Firm Shrinkage.” Statistica Sinica 7:855–74.

- Gençay, R., F. Selçuk, and B. Whitcher. 2002. An Introduction to Wavelets and Other Filtering Methods in Finance and Economics. San Diego, CA: Academic Press.

- Gonzalo, J. 1994. “Five Alternative Methods of Estimating Long-Run Equilibrium Relationships.” Journal of Econometrics 60 (1–2):203–33. doi: 10.1016/0304-4076(94)90044-2.

- Granger, C. W. J. 2009. “Developments in the Study of Cointegrated Economic Variables.” Oxford Bulletin of Economics and Statistics 48 (3):213–28. doi: 10.1111/j.1468-0084.1986.mp48003002.x.

- Granger, C. W. J., and P. Newbold. 1974. “Spurious Regressions in Econometrics.” Journal of Econometrics 2 (2):111–20.

- Haar, A. 1910. “Zur Theorie Der Orthogonalen Funktionensysteme.” Mathematische Annalen 69 (3):331–71. doi: 10.1007/BF01456326.

- Habimana, O. 2018. “Asymmetry and Multiscale Dynamics in Macroeconomic Time Series Analysis.” JIBS Dissertation Series No. 122.

- Habimana, O. 2019. “Wavelet Multiresolution Analysis of the Liquidity Effect and Monetary Neutrality.” Computational Economics 53 (1):85–110. doi: 10.1007/s10614-017-9725-1.

- Haldrup, N., A. Montanes, and A. Sanso. 2005. “Measurement Errors and Outliers in Seasonal Unit Root Testing.” Journal of Econometrics 127 (1):103–28. doi: 10.1016/j.jeconom.2004.06.005.

- Hamilton, J. D. 1994. Time Series Analysis, vol. 2. Princeton, NJ: Princeton University Press.

- Hassler, U., and V. Kuzin. 2009. “Cointegration Analysis under Measurement Errors.” Advances in Econometrics 24:131–50.

- Hong, H., S. K. Ahn, and S. Cho. 2016. “Analysis of Cointegrated Models with Measurement Errors.” Journal of Statistical Computation and Simulation 86 (3):623–39. doi: 10.1080/00949655.2015.1032289.

- Jansson, M., and N. Haldrup. 2002. “Regression Theory for Nearly Cointegrated Time Series.” Econometric Theory 18 (6):1309–35. doi: 10.1017/S0266466602186026.

- Johansen, S. 1988. “Statistical Analysis of Cointegration Vectors.” Journal of Economic Dynamics and Control 12 (2–3):231–54. doi: 10.1016/0165-1889(88)90041-3.

- Johansen, S. 1991. “Estimation and Hypothesis Testing of Cointegration Vectors in Gaussian Vector Autoregressive Models.” Econometrica 59 (6):1551–80. doi: 10.2307/2938278.

- Johansen, S. 1992. “Cointegration in Partial Systems and the Efficiency of Single-Equation Analysis.” Journal of Econometrics 52 (3):389–402. doi: 10.1016/0304-4076(92)90019-N.

- Johansen, S. 1996. Likelihood-Based Inference in Cointegrated Vector Autoregressive Models, 2nd ed. Oxford: Oxford University Press.

- Lütkepohl, H., P. Saikkonen, and C. Trenkler. 2001. “Maximum Eigenvalue versus Trace Tests for the Cointegrating Rank of a VAR Process.” The Econometrics Journal 4 (2):287–310. doi: 10.1111/1368-423X.00068.

- Månsson, K. 2012. “A Wavelet-Based Approach of Testing for Granger Causality in the Presence of GARCH Effects.” Communications in Statistics—Theory and Methods 41 (4):717–28. doi: 10.1080/03610926.2010.529535.

- Morgenstern, O. 1963. On the Accuracy of Economic Observations. Princeton, NJ: Princeton University Press.

- Nielsen, H. B. 2016. “The Co-Integrated Vector Autoregression with Errors-in-Variables.” Econometric Reviews 35 (2):169–200. doi: 10.1080/07474938.2013.806853.

- Oda, T., R. Bun, V. Kinakh, P. Topylko, M. Halushchak, G. Marland, T. Lauvaux, et al. 2019. “Errors and Uncertainties in a Gridded Carbon Dioxide Emissions Inventory.” Mitigation and Adaptation Strategies for Global Change 24 (6):1007–50. doi: 10.1007/s11027-019-09877-2.

- Pagan, A. 1984. “Econometric Issues in the Analysis of Regressions with Generated Regressors.” International Economic Review 25 (1):221–47. doi: 10.2307/2648877.

- Percival, D. B., and A. T. Walden. 2000. Wavelet Methods for Time Series Analysis. Cambridge, UK: Cambridge University Press.

- Quick, J. C., and E. Marland. 2019. “Systematic Error and Uncertain Carbon Dioxide Emissions from US Power Plants.” Journal of the Air & Waste Management Association 69 (5):646–58. doi: 10.1080/10962247.2019.1578702.

- Schwert, G. W. 1989. “Tests for Unit Roots: A Monte Carlo Investigation.” Journal of Business and Economic Statistics 7:147–60. doi: 10.2307/1391432.

- Targett, D. 1989. “UK GDP—Measurement Errors and Adjustments.” Omega 17 (4):345–53. doi: 10.1016/0305-0483(89)90048-0.

- Toda, H. Y. 1994. “Finite Sample Properties of Likelihood Ratio Tests for Cointegrating Ranks When Linear Trends Are Present.” The Review of Economics and Statistics 76 (1):66–79. doi: 10.2307/2109827.

- Urbain, J. P. 2009. “On Weak Exogeneity in Error Correction Models.” Oxford Bulletin of Economics and Statistics 54 (2):187–207. doi: 10.1111/j.1468-0084.1992.mp54002004.x.

- Wang, Y., G. Broquet, P. Ciais, F. Chevallier, F. Vogel, N. Kadygrov, L. Wu, Y. Yin, R. Wang, and S. Tao. 2017. “Estimation of Observation Errors for Large-Scale Atmospheric Inversion of CO2 Emissions from Fossil Fuel Combustion.” Tellus B: Chemical and Physical Meteorology 69 (1):1325723. doi: 10.1080/16000889.2017.1325723.

- Yule, U. 1926. “Why Do We Sometimes Get Nonsense-Correlations between Time Series?—A Study in Sampling and the Nature of Time Series.” Journal of the Royal Statistical Society 89 (1):1–63.

Appendix

Table A1 Critical values for Johansen cointegration test (5% level of significance, s = 0).

Table A2 Unit root test.