?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Neural networks have enabled applications in artificial intelligence through machine learning, and neuromorphic computing. Software implementations of neural networks on conventional computers that have separate memory and processor (and that operate sequentially) are limited in speed and energy efficiency. Neuromorphic engineering aims to build processors in which hardware mimics neurons and synapses in the brain for distributed and parallel processing. Neuromorphic engineering enabled by photonics (optical physics) can offer sub-nanosecond latencies and high bandwidth with low energies to extend the domain of artificial intelligence and neuromorphic computing applications to machine learning acceleration, nonlinear programming, intelligent signal processing, etc. Photonic neural networks have been demonstrated on integrated platforms and free-space optics depending on the class of applications being targeted. Here, we discuss the prospects and demonstrated applications of these photonic neural networks.

Graphical Abstract

1. Primer on artificial intelligence, machine learning, neuromorphic computing, and neuromorphic photonics

Creating a machine that can process information like human brains has been a driving force of innovations throughout history. Artificial intelligence (AI) has been coined as an academic discipline since the 1950s [Citation1]. This field underwent the first surge of optimism from the 1950s to 1970s, however, followed by decades of setbacks. The biggest obstacle at that time was the lack of computing power noauthor_history_2017. In the last decade, AI has experienced explosive growth. Three sources fuel the advancement of AI: (Citation1) substantial development of AI algorithms, especially in machine learning and neural network models (alias for ‘deep learning’ [Citation2]); (Citation2) the abundant amount of available information in the ‘big data’ era; and (Citation3) the rise of computing power as predicted by Moore’s Law, together with new hardware (e.g. graphics processing unit (GPU)) and infrastructures (e.g. cloud-based servers).

State-of-the-art AI algorithms, by and large, are implemented using neural networks, a computing model inspired by the brain’s neuro-synaptic framework. Today, nearly all AI algorithms are running on digital computers based on von Neumann architecture, a computing architecture that has dominated computing design since it was invented but is nothing like the brain. This architecture consists of a centralized processing unit (CPU) that performs all operations specified by the program’s instructions and a separate memory that stores data and instructions. It processes information sequentially in a serialized manner. However, neural network models are radically different from von Neumann architecture in some key features. First, neural networks are highly parallel and distributed, whereas von Neumann architecture is inherently sequential (or, in the best case: sequential-parallel with multi-processors). Second, in neural networks, computing units (neurons) and storage units (synapses) are co-located. In contrast, computing units (CPUs) and storage units (dynamic random access memories (DRAMs)) are physically separate chips in digital computers. The sharp contrast between the two architectures slows down the computing speed and increases the power consumption, which, as a result, necessitates reinventing conventional computers for efficient information processing.

Neuromorphic (i.e. neuron isomorphic) computing promises to solve these problems by creating radical new hardware platforms that can emulate the underlying neural structure of the brain. The general idea is to build circuits composed of physical devices that mimic the neuron biophysics interconnected by massive physical interconnects with co-integrated non-volatile memories. In doing so, neuromorphic hardware could break performance limitations inherent in von Neumann architectures and gain advantages in speed and efficiency in solving intellectual tasks. Achieving this goal requires significant advances in a wide range of technologies, including materials, devices, device fabrication, system integration, platform co-integration, packaging etc [Citation3–5].

Neuromorphic hardware has been built in electronics on various platforms, including traditional digital CMOS [Citation6–8] and hybrid CMOS-memristive technologies (discussed next) [Citation9,Citation10]. Neural network models highlight the essential needs of high-degree physical interconnections, which, in electronic neuromorphic hardware, is achieved by incorporating a dense mesh of wires overlaying the semiconductor substrate as crossbar arrays. Unfortunately, electronic connections fundamentally suffer harsh trade-offs between bandwidth and interconnectivity [Citation11,Citation12]. A major limitation for neuromorphic electronics is interconnect density, thus confining the neuromorphic processing speed and associated application space within the MHz regime.

Photonics has unmatched feats for interconnects and communications in terms of bandwidth, which can negate the bandwidth and interconnectivity trade-offs [Citation5,Citation13–15]. The advantages of photonics for neural networks were recognized decades ago. The photonic neural network research was pioneered by Psaltis and others who adopted spatial multiplexing techniques enabling all-to-all interconnection [Citation16]. However, low-level photonic integration and packaging technologies hindered the practical applications of photonic neural networks at that time. Nevertheless, the landscape of photonic neural networks has changed tremendously with the emergence of large-scale photonic fabrication and integration techniques [Citation17,94,Citation5,Citation18]. For example, silicon photonics provides an unprecedented platform to produce large-scale and low-cost optical systems [Citation18–20]. In parallel, a broad domain of emerging applications (such as solving nonlinear optimization problems or real-time processing of multichannel, gigahertz analog signals) is also looking for new computing platforms to fulfill their computing demands [De Lima et al., 2019; Citation21–24]. All these changes have shed light on new opportunities and directions for photonic neural networks [Citation5,Citation25].

This paper is intended to provide an intuitive understanding of photonic neural networks and why, where, and how photonic neural networks can play a unique role in enabling new domains of applications. First, we discuss the challenges of digital versus analog approaches in implementing neural networks. Next, we provide a rationale for photonic neural networks as a compelling alternative for neuromorphic computing compared to electronic platforms. Then, we outline the primary technology required for evolving neuromorphic photonic processors, review existing approaches, and discuss challenges. In the subsequent sections, we provide a survey of new applications enabled by photonic neural networks and highlight the role of photonic neural networks in addressing the challenges in these applications.

2. Digital vs. analog neural networks

In this Section, we briefly compare the state-of-the-art electronic implementations of neural networks in digital and analog domain. We establish the advantages and limitations of analog implementations in general to then make the case for analog photonic implementations in the following Sections.

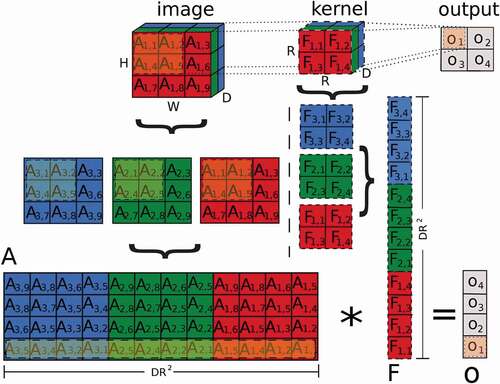

Deep neural networks (DNN) model complex nonlinear functions by composing layers of linear matrix operations with non-linear activation functions. Computationally, DNNs are mostly matrix-multiplication, with matrix-multiplications taking more than 90% of the total computations in a DNN [Citation26–28]. Due to the underlying array-based operation in the matrix multiplication, digital electronic neural network hardware is usually composed of basic units, referred to as processing elements (PEs), in a 2D array structure [Y.-H. Citation29]. Such a structure enables the matrix multiplication operation to be N faster than CPUs, where N is the input vector length. Usually, PEs are composed of digital multipliers and adders, with precision up to 32 bits, to perform a single multiply and accumulate (MAC) operation, similar to the arithmetic logic unit in the CPU core.

Since DNNs consume a huge chunk of energy in data movement, many digital neural network hardware focus on optimizing dataflow to save energy. Based on the connection of PEs and the interconnects, various dataflows can be described. An output-stationary dataflow performs all the MAC operations for a single output before moving to the next. All the inputs and weights required are fetched from the memory, multiplied, and added to the partial sum, which is stored inside PE [Y.-H. Citation30]. On the other hand, a weight-stationary dataflow holds the weights inside PE to maximize weight reuse. The partial sum accumulation occurs across multiple PEs while the input vector is fed in a staggered style allowing the PEs to perform MAC operation with the internally stored weights. Further dataflow optimizations combining different types of data reuse are also possible to reduce energy further [Y.-H. Citation30, Citation31].

Implementing the MAC operations in analog domain can help in reducing the energy consumption. Analog electronic elements, such as charge, current and time can be used to represent the data values. An inherent advantage in analog techniques is the built-in addition operation without requiring additional circuits. To perform the MAC operation with analog electronics, switched-capacitor techniques charge a capacitance sized proportionally to the weight with a current sized proportionally to the input [Citation32], current-steering techniques control the magnitude of current flowing through transistors [Citation33], and time-domain techniques modulate the pulse width of a signal using controlled oscillators (Cao, Chang, & Raychowdhury, 2020). Such analog techniques have shown to decrease energy significantly for small DNN models: 4 using switched-capacitor on BinaryNet, 67

using current steering on Matched Filter, and 1.4

using time-domain techniques on mobile reinforcement learning. The shortcomings of analog techniques include: limited size of DNN models, low bit precision (

4 b) and associated accuracy loss, analog-to-digital converter/digital-to-analog converter (ADC/DAC) overhead, susceptance to noise and process, voltage and temperature (PVT) variations.

Analog implementations have a direct consequence for noise and noise propagation [Citation34]. Since the probability of corrupting a symbol usually is identical for all bits in a sequence, the impact of a noise-induced Boolean symbol modification can be dramatic. Compared to that the corruption of an analog signal is usually more subtle as signal perturbations are mostly proportional to noise amplitude. Digital encoding therefore requires that thresholding levels significantly exceed all noise amplitudes; however, the signal propagation is then practically noiseless as the noiseless symbolic representation is continuously re-established. Furthermore, increasing a digital signal’s resolution is comparatively economic as the number of digitization levels grows exponentially.

Well-designed circuits readily approach the thermodynamic noise limit to better than one order of magnitude [Citation35]. Thermodynamics, therefore, establishes the link between such information centered arguments and the energy fundamentally required for a certain Signal-to-noise ratio (SNR). Digital encoding is penalized with a large constant energy penalty due to the required high encoding fidelity. However, the logarithmic scaling of digital encoding with precision in comparison to the quadratic scaling of analog encoding makes digital more energy efficient beyond SNR [Citation36–41].

Recently digital implementations of neural networks significantly reduced their bit-precision, with several systems today running with 8 or less bit resolution during inference. Finally, spiking NNs occupy a middle ground and potentially are superior in energy efficiency to analog for SNR and to digital for SNR

[Citation36].

Finally, the accumulation of noise can be strongly managed using the connections of a neural network. Studies based on linear, symmetric, i.e. untrained networks of noisy linear neurons show that neural network analog in and output neurons are the chief noise source, while in particular noise uncorrelated across neurons is essentially fully suppressed through the network’s connections [Citation42]. New studies in fully trained networks of noisy nonlinear units show that nonlinearity also efficiently decorrelates noise from correlated noise-populations [Citation43]. This is important as such noise can for example be induced by a common power supply. Finally, rather weak requirements allow to fully freeze the propagation of noise through a network, and an analog photonic neural network’s output can, therefore, approach the SNR of a single neuron [Citation43].

Another possible method to reduce data movement energy is moving the computing inside the memory modules itself. Such architectures are referred to as In-Memory computing (IMC), and use the memory cells as an analog circuit to perform the MAC operations, generally in a weight-stationary dataflow. The inputs are analog currents or voltages on the wordlines, while their weights are either binary, ternary or digitally stored over multiple memory cells. The accumulation happens inherently in the bitlines in the memory array, resulting in an analog output [Citation44, Jintao Zhang, Zhuo Wang, & Verma, 2016]. IMC architectures, while significantly enhancing the throughput of the system, operate in analog which call for adding more system level design considerations to meet the output signal to noise ratio (SNR) requirements and size.

IMC can also be performed in crossbar arrays of emerging memories, such as resistive RAM (ReRAM), conductive bridging, magnetic tunnel junctions, and phase change memories [Citation45]. Explicit multipliers, adders and PE interconnects are not needed in IMC. Rather, the equivalent PE array in digital is implemented in IMC in just the area required to implement the memory array, plus the ADC and DAC at the array periphery [Citation45]. Increased area efficiency allows packing far more parallel units, hence processing operations can be accelerated by more than an order of magnitude. For comparison, the area required to implement the PE in digital implementations is F2 [Y.-H. Citation29,47,49], whereas the average memory cell sizes in IMC are less than 100 F2 [Citation46], where F is the minimum feature size of the technology. Furthermore, energy consumption can also be reduced by an order of magnitude over the equivalent digital systems, since several MAC operations are performed almost at the cost of a single read operation of the memory array.

IMC suffers from constraints similar to the analog MAC implementations. Furthermore, the nonidealities of analog memory cells and their interconnects pose limitations for achieving high accuracy and scaling [Citation47]. For example, the nonlinearities of memory cells and the resistance of the interconnect in ReRAM IMC was shown to degrade the accuracy of computation with scaling (Peng Gu et al., 2015). Noise limitations have shown to saturate the computing accuracy of analog crossbars to 8 bits [Citation48]. In comparison with digital implementations, the energy, area and latency advantages from IMC have been shown to reduce with the increased precision 8,804,680. But precision reduction techniques such as variable layer precisions and nonuniform quantization [Citation26,Citation49], have shown equivalent accuracy to 32-bit digital implementations even after reducing precision down to 2-bit [J. Citation50].

3. The case for photonics for neuromorphic processors

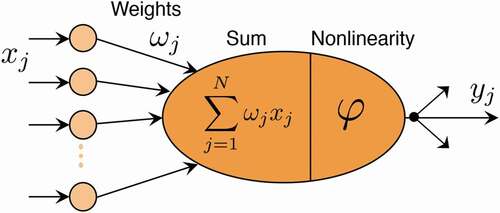

In an artificial neural network (ANN), neurons are interconnected by synaptic weights (a memory element) (). Signals from many neurons are weighted before being summed by the receiving neuron. This many-to-one (N:1) connection is called fan-in, and the weighted sum–a linear operation–is the dot product of the output from connected neurons attenuated by a weight vector. A neuron then performs a nonlinear operation on the weighted sum to implement a thresholding effect which is output to many neurons. This one-to-many (1:N) connection is called fan-out.

Electronic and photonic neuromorphic approaches to implement neural networks face different challenges. Physical laws that restrain electronics do not necessarily apply to optics. For high-speed data transfer, compared to electronic wires, optical waveguides have a lower attenuation, have no inductance (minimal frequency-dependent signal distortions), and photons hardly interact with other photons (unless intermediated by matter) which allows for wavelength multiplexing. While these fundamental properties have proven to be advantageous in optical communications, they are also important for neural networks including interconnectivity [Goodman, Leonberger, Sun- Yuan Kung, & Athale, 1984; Citation51] (i.e. neuron-to-neuron, neurons-to-neurons and neurons-to-memory communications), and parallel and linear processing, i.e. matrix multiplication [Citation51]. However, these same properties make it challenging to implement nonlinear operations [Citation52]. We refer the reader to the quantitative analyses comparing electronic and optical interconnects, and electronic and photonic computing performed by Miller [Citation11,Citation51] and Nahmias et al [Citation12,Citation53], respectively. Here, we qualitatively summarize these concepts comparing and contrasting optics and electronics.

Interconnects: Conventional electronic processors rely on point-to-point memory processor communication and can take advantage of state-of-the-art transmission line and active buffering techniques. However, a neuromorphic processor typically requires a large number of interconnects (i.e. hundreds of many-to-one fan-in per processor) [Citation54] where it is not practical to use line and active buffering techniques for each connection at high speed. This creates a communication burden which in turn, introduces fundamental performance challenges that result from RC and radiative physics in electronic links, in addition to the typical bandwidth-distance-energy limits of point-to-point connections [Citation51]. While some electronic neuromorphic processors incorporate a dense mesh of wires overlaying the semiconductor substrate as crossbar arrays, large-scale systems employ time-multiplexing or packet switching, notably, address-event representation [AER). These virtual interconnectivities allow electronic approaches to exceed wire density by a factor related to the sacrificed bandwidth. As argued by Citation5, for many applications, however, bandwidth and low latency are paramount, and these applications can be met only by direct, non-digital photonic broadcast (that is, many-to-many] interconnects.

Apart from bandwidth, power dissipation in interconnects is another major challenge in neuromorphic processors. Data movement has posed severe challenges to the power consumption of today’s digital computers as parallel computing is widely deployed. A large amount of data has to move among processors and memory units, especially in those distributed programming models like neural networks. In these models, most energy is lost in data movement [Citation55] due to capacitive charge-discharge events at high frequencies. In contrast, optics is more energy efficient in data movement at high speeds.

Linear operations and weighting: Optical physics is very well suited for linear operations. The electric field or intensity of the light can also be used as an analog metric for neuromorphic computations. The analog input in the electrical domain (voltage) can be used to modulate components such as Mach-Zehnder modulators or ring resonator modulators. The weights (i.e. linear operations) can be implemented by scaling the field with Mach-Zehnder interferometers (MZIs) [Citation56] or the intensity with RRs [Tait, Jayatilleka, et al., 2018; Citation57]. Optical signals can be added through wavelength-division multiplexing (WDM) by accumulation of carriers in semiconductors [Citation57], electronic currents [Citation58,Citation59] or changes in the crystal structure of a material induced by photons [Citation17,Citation60–62]. Such photonic neural networks, being analog in nature, share the advantages and constraints of their analog electronic counterparts. In addition, modulations can be done at 10 s of GHz, addition can be done at the speed of light in coherent MZI networks and at 10 s of GHz in ring resonator networks, and weight multiplication can be done at the speed of light, all of which provides much lower latency than analog or digital electronic MAC operations.

Dynamic power consumption is also much lower, since multiplication does not consume any energy and energy efficiency of the E/O modulation can be reduced to as low as 10 fJ per operation [Citation63]. However, static power consumption is dependent on laser wall plug efficiency, waveguide losses, energy consumed in maintaining the weights, etc., and must be minimized. Assuming an estimated energy consumption of 100 fJ per operation for digital implementation [Citation64], analog IMC brings 2.1–7.8× reduction [Citation46,Citation65]. Photonic implementations can achieve energy consumption similar to IMC, but with orders of magnitude reduction in latency. Like their IMC counterparts, photonic neural networks can operate up to 8 bits of precision [Citation58, Citation66, Citation67, Citation68].

Nonlinear operations: The same properties that allow optoelectronic components to excel at linear operations and interconnectivity are at odds with the requirements of nonlinear operations for computing [Citation5]. The implementation of photonic neurons relies on the nonlinear response of optical devices. The approaches fall into two major categories based on the physical representation of signals within the neuron: optical-electrical-optical (O/E/O) vs. all-optical.

O/E/O neurons involve the transduction of optical power into electrical current and back within the signal pathway. Their nonlinearities occur in the electronic domain and in the E/O conversion stage using lasers or saturated modulators [Citation69–72]. In the authors’ recent work [Citation72], neurons are implemented using silicon modulators that exploit the nonlinearity of the electro-optic transfer function. Modulation mechanisms can change the real and imaginary parts of the refractive index of the material and subsequently alters the index (termed E/O modulators) and loss (termed electro-absorptive modulators (EAMs)) of the optical propagating mode. Research on high speed and power-efficient modulators are very active, with a general focus on maximizing the interaction between the active material and the light. Such approaches include lithium niobate (LiNbO3) modulators based on the Pockels effect [C. Citation73], III–V semiconductor- based quantum-confined Stark effect modulators [Citation74], silicon modulators based on plasma dispersion effect [Citation75, Q. Citation76], and hybrid modulators incorporating novel materials (such as ITO and graphene) to silicon-based modulators [Citation69, Citation77, Citation78, M. Citation79].

All-optical neurons rely on the semiconductor carriers or optical susceptibility that occur in many materials. A perceived advantage of all-optical neuron implementations is that they are inherently faster than O/E/O approaches due to relatively slow carrier drift and/or current flow stages in the latter. All-optical perceptron has been demonstrated based on single-carrier optical nonlinearities, including through carrier effect in MRR [Citation80–86], changing a material state [Citation17,Citation87], such as via a structural phase transition, and saturable absorbers and quantum assemblies heterogeneously integrated in photonic integrated circuits (PICs) [Citation88].

Implementing on-chip optical neurons remains a challenge. The main challenge comes from maintaining layer-to-layer cascadability, which describes the ability of one neuron excited with a certain strength to evoke at least an equivalent response in a downstream neuron Lima et al. [2019). Maintaining the neuron cascadability must compensate for the optical device’s insertion loss, the loss due to fan-out, and less than unity device efficiency. A straightforward approach is to introduce active amplifiers providing energy gain in the optical or electrical domain. For example, Tait et al. Citation72,demonstrated an O/E/O neuron composing a silicon modulator and photodetector pair both having a capacitance of a few tens of femtofarads. Thus this neuron requires a voltage swing of a few volts, which can be provided by a transimpedance amplifier (TIA] [Citation28]. The monolithic silicon photonic integration platform (i.e. zero-change silicon photonics) [Citation89] can provide seamless integration of photonic devices and microelectronic modules. In this approach, cascadability has to trade-off with power consumption at this system level.

Cascadability highlights the importance of studying power-efficient optical devices, i.e. optical modulators with low switch voltage, sensitive photodetectors, or nonlinear optical devices with low nonlinear threshold power. For this purpose, a common idea is to optimize the device geometry to maximize the optical mode overlap with the active material region. The emerging nanoscale devices [Citation63,Citation90], based on nano waveguides, photonic crystal nanocavities, or plasmonic nanocavities, can concentrate the propagating mode’s field into a nanometer-thin region that overlaps with the actively index-modulated material. Another approach is to explore novel materials, such as graphene [M. Citation79], Indium Tin Oxide [Citation91] and Lithium niobate on insulator [C. Citation73], and III–V hybrid integration [Citation78,Citation92–96]. Similarly, all-optical neurons based on third-order nonlinearity are improved using InP-based two-dimensional photonic crystal nanocavity with quantum wells (QWs), paving the way to cascadable all-optical spiking neurons [Citation97].

The highly-efficient modulation techniques can be potentially applied to the linear operation block for high energy-efficient and high-speed weight tuning. However, linear and nonlinear operation blocks consider different trade-offs in choosing modulation devices. For example, nonlinear operations require fast modulation (10s of GHz) to improve the line rate of a photonic neural network. Silicon modulators based on plasma dispersion effect are widely used for high-speed [> 40 G) modulation for optical interconnects in data centers. Exploring this modulator technology, fast O/E/O neurons are demonstrated by Citation72,using a silicon microring modulator with a reverse-biased PN junction embedded in the microring, and Citation98,with a Mach-Zehnder type modulator. On the other hand, for linear operations, the tuning speed sometimes is not the paramount metric to be considered. For example, in deep learning inference, once the neural network is trained, the weights don’t need to be changed often. In these applications, power consumption is a more important parameter. Power consumption comes from the signal power dissipated during its propagation (i.e. device insertion loss] and the power needed to tune and hold the weight values. Therefore, modulation devices with low insertion loss and low tuning power can be better candidates for linear operation blocks. Silicon modulators used for nonlinear operations have a typical insertion loss of 4–5 dB. Apparently, the insertion loss of a silicon modulator needs to be further optimized for linear operations, because the loss would accumulate along with the large-scale Mach-Zehnder meshes. Micro-heaters based thermal tuning is easy to use and is most widely used for reconfigurable photonic circuits, including photonic neural networks. The Mach-Zehnder modulator with heaters has a much smaller insertion loss of

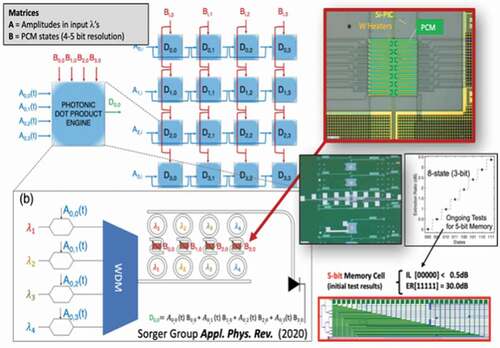

0.3 dB, however it dissipates several milliwatts of electrical power when tuning and holding the states. Non-volatile actuators using phase change materials, can set their state and then maintain their state without needing a power to hold the state, thus consuming almost zero power for matrix computations. shows a variety of modulation mechanisms and state-of-the-art devices from the literature.

Table 1. Efficiency and speed of various index modulation techniques on silicon photonics. Options for phase modulation of silicon waveguides. a) thermal tuning with TiN filament; b) thermal tuning with embedded photoconductive heater; c) PN/PIN junction across the waveguide for injection and/or depletion modulation; d) III–V/Si hybrid waveguide; e) metal-oxide-semiconductor (MOS), where the ‘metal’ is actually an active semiconductor; f) lithium niobate cladding adds a strong electrooptic effect; g) 2 single-layer-graphene (SLG) ‘capacitor’; h) non-volatile phase change material. This bandwidth was not yet shown experimentally. A big challenge is to reduce the contact resistance with Graphene, reducing RC-loading effect.

Not experimentally shown at high-speed.

Demonstrated up to 20 MHz

Memory: On-chip nonvolatile memories that can be written, erased, and accessed optically are rapidly bridging a gap toward on-chip photonic computing [Citation106]; however, they cannot usually be written to and read from at high frequencies. As described by Citation5, future scalable neuromorphic photonic processors will need to have a tight co-integration of electronics with potentially hybrid electronic and optical memory architectures, and take advantage of the memory type (volatile versus non-volatile) in either digital or analog domains depending on the application and the computation been performed.

Current approaches with photonic neural networks are driven by electronic circuits or micro-controllers to load matrices. The integration and packaging of large-scale optical and electronic circuits can be challenging in terms of cost and power. In some machine learning applications [such as deep learning inference) the weights, once trained, do not have to be updated often or at all. In these cases, the integration of novel photonic memory technologies can limit the need to read from and write to electronic memories with DACs and ADCs. All-optical memories have been demonstrated using various optical components such as nonlinear switches Citation107, MZIs (Citation108], laser diodes [Citation109], semiconductor optical amplifiers (SOAs), bandpass filters (BPFs) and isolators [J. Citation110]. The access time of an optical memory cell is small and attractive for photonic neural networks [Citation111]. Non-volatile photonic memories with phase-change materials (PCMs) set and retain the weights without further holding power after being set [Citation17], resulting in almost zero power consumption in performing matrix multiplication operations. Here a crystalline phase transition (from amorphous to crystalline and back) constitutes reversible (WRITE & RESET) memory programmability, therefore enabling dynamic synaptic plasticity and online learning in photonic neural networks. For online training of the photonic neural network, a high-speed WRITE would be ideal, and experimentally MHz speeds are possible for known PCMs such as Ge2Sb2Te5 (GST) or GST alloys, just limited by the heat-capacitance of these photonic RAM. The READ speed, interestingly, is on the order of ps, and simply given by the time-of-flight of the signal photon through such photonic RAM. However, significant improvements must be made in reducing the energy consumption (taking into account optical losses) and size, reliability and ease of interfacing for their practical deployment in photonic neural networks. The current prominent material GST has prohibitively high optical losses even for the lower-loss amorphous state with an extinction coefficient (kappa = 0.2), thus leading to high insertion loss. Future research should focus on low-optical loss solutions. Emerging P-RAMs would also eliminate the long-standing memory access bottleneck known from electronics, and a successful P-RAM integration constitutes a similar shift in optics from centralized to decentralized computing, known as in-memory computing, one of the very active research fields left in circuit design, after transistor scaling stopped.

4. Architectures of neuromorphic photonic processors

Neuromorphic processors and photonic neural networks alike require a number of fundamental building blocks; i) synaptic MAC operation, ii) nonlinear activation function (NLAF); iii) state-retention, i.e. memory, iv) data input/output (I/O) ports and, depending on the applications, data domain crossings such as v) photonic to electronic and/or vi) analog-to-digital, with the latter including DAC and the ADC counterparts. However, a generic architecture of a current state-of-art photonic neural network system is given in .

Figure 2. Generic system schematic of an analog photonic neural network or photonic tensor core accelerator. The digital-to-analog domain crossings are power costly and ideally a photonic DAC without leaving the optical domain, e.g. Ref [Citation112] would be used instead, thus reducing system complexity and hence allowing for extended scaling laws. The optical processor itself can perform different operations depending on the desired application; is the processor a tensor core accelerator, then it will perform MAC operations such as used directly in VMMs or convolutions, e.g. Ref. Citation138. Is the processor a neural network, then nonlinearity and summations are needed in addition to linear operations. For higher biological plausibility such as for temporal processing of signals at each node, then mapping of partial differential equations onto photonic hardware is needed. This includes spiking neuron approaches where event-driven scheme play a role) [Citation72,Citation85,Citation113–116]

![Figure 2. Generic system schematic of an analog photonic neural network or photonic tensor core accelerator. The digital-to-analog domain crossings are power costly and ideally a photonic DAC without leaving the optical domain, e.g. Ref [Citation112] would be used instead, thus reducing system complexity and hence allowing for extended scaling laws. The optical processor itself can perform different operations depending on the desired application; is the processor a tensor core accelerator, then it will perform MAC operations such as used directly in VMMs or convolutions, e.g. Ref. Citation138. Is the processor a neural network, then nonlinearity and summations are needed in addition to linear operations. For higher biological plausibility such as for temporal processing of signals at each node, then mapping of partial differential equations onto photonic hardware is needed. This includes spiking neuron approaches where event-driven scheme play a role) [Citation72,Citation85,Citation113–116]](/cms/asset/99cdce80-5f68-47a8-aacc-6e9eade34963/tapx_a_1981155_f0002_oc.jpg)

Photonic synaptic MAC operations and VMMs: The most common form of photonic neural networks focuses on accelerating the mathematical computation of multiplications using optics; this is not surprising, since photonic programmable circuits allow for a non-iterative mathematical multiplication by simple preparing the state of the programmable element followed by sending the optical signal through this element. Then, the multiplication is performed ‘on-the-fly’ at pico-second delays in PICs enabling a notion of ‘real-time’ (i.e. zero-delay) computing, which is incidentally not to be confused with computing schemes that ‘expect’ a system to complete a task at a pre-determined and deterministic time. Options for VMM are plenty, but the most common ones are a mesh network of cascaded MZIs, or MRR filters, or a photonic tensor core (PTC) processor [Citation56,Citation117,Citation118]. Accelerating VMMs is a worthy aim, since the mathematical computational complexity of VMMs scales with N3, where N is the matrix size (assuming a square matrix). However, the mathematical complexity is technically speaking irrelevant (at first order), since for hardware implementations considered here, the runtime complexity is actually of interest. And it is this runtime complexity that is non-iterative in analog photonic neural networks (assuming the neuron’s weights are set, i.e. programmed).

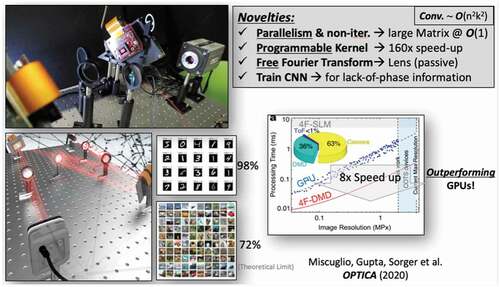

ully programmable VMM do still present a formidable challenge to physical implementations considering the size of competitive neural networks. In particular the optimization of each matrix element is technologically challenging. However, one can leverage reservoir computing (Jaeger and Haas (2004)) to mitigate this challenge to a certain degree. In reservoir computing, the connection matrix creating the neural network’s hidden state can be ad-hoc defined, and training is restricted to the readout layer. This allows to encode the VMM of hidden layers using photonic components creating large scale random projections fully in parallel. First hardware implementations used photonic delay systems (Brunner, Soriano, Mirasso, and Fischer (2013); Larger et al. (2012)) leveraging temporal multiplexing of a single nonlinear photonic element and demonstrating photonic neural networks comprising 100 s of photonic neurons. However, full parallelism can only be obtained when avoiding temporal multiplexing. Parallel reservoirs were demonstrated using spatially multiplexed photonic neural networks integrated on a silicon chip (Vandoorne et al. (2014)) and based on random scattering in an electro-optical system [Citation119,Citation120] which surpassed a high-performance GPU in terms of scalability while achieving comparable computing accuracy. Utilizing the coupled modes of a large-area vertical-cavity surface emitting laser as a reservoir, and a digital micro-mirror to realize programmable readout weights [Citation121], a fully hardware implemented photonic reservoir computing providing results without pre- or post-processing was demonstrated (Porte et al. (2021)). Generally, reservoir computing architectures offer competitive performance in various benchmark tasks, and their conceptual simplicity provides photonic systems with a viable strategy to fully leverage their parallelism.

NLAF, or threshold: Depending on the underlying algorithm model of the neural network, the NLAF can be as simple as a step-function such as used in binary classification problems, or a more elaborate function such as a sigmoidal, tangent hyperbolic, or population growth functions. However, when performing neural network training using gradient descent (GD) backpropagation (BP) methods, the problem of vanishing (or exploding) gradients can occur, where during each training cycle (called epoch) the available gradient on which a differentiation is performed is becoming consecutively smaller to the point of noise-level dominated and training would seize. To prevent this, rectifying linear units (ReLU) or Gaussian error linear units (GELU) could be used instead. In a ReLU, for example, the output is ‘zero’ up until a certain input level, upon which, the output is simply a linear function. This linearity ensures a constant and non-zero gradient during GD BG training cycles. Since differentiation at a step is mathematically not defined, a Soft-ReLU is often used instead to ensure continued loss-function minimization and neural network performance improvement with training. Note, the known problem of overfitting neural networks does also apply to photonic neural networks. However, pruning techniques and hyperparameter adjustments during training are known and interesting method (yet time consuming) to improve ANN performance. Interestingly, for the context of photonic neural networks, the network interconnectivity sparseness created in pruning steps, saves fan-out (fan-in) connections as well as waveguide connections. This is a blessing, since the bulkiness of photonic components (in contrast to electronic counterparts on a per-unit basis) increases functional density and thus performance per unit chip footprint. Indeed, future work should explore pruning techniques further for photonic neural networks. An initial study was, for instance, performed on the MZI NN mesh network that was originally developed by the MIT groups [Citation56], where it was shown that the number of required MZIs can be reduced by 3.7

-7.6

compared to the brute-force method [Citation56,Citation122]. Incidentally, in performance-optimized DNNs, the specific shape of the NLAF should be adjusted with layer depth; for instance, a ReLU that is not saturating is more useful in the upstream layers, while a saturating sigmoidal function supports a decision-making process at the fully-connected (FC) layer at the output in classification problems. In most neural networks demonstrations, the primary performance driver is power efficiency, while speed is a secondary consideration. Therefore, early photonic neural network demonstrations implement NLAF in the digital electronic domain, limiting the speed of neural network to the clock speed (hundreds of MHz to a few GHz). Nevertheless, optical NLAF, as discussed in Section 3 offer a unmatched speed over ten GHz in performing NLAF, thus becoming essential elements in order to solve many compelling applications that requires online (i.e. real-time) learning and inference or for neural networks with gigahertz bandwidths.

Memories: The memory is usually implemented electronically and various choices exist. Static RAM (SRAM) and dynamic RAM (DRAM) are used in neural network architectures to store inputs, weights, training parameters and look up table (LUT) values. At the cell level, SRAM typically uses 6 transistors (6 T) to store a single bit, whereas DRAMs use a single transistor and a single capacitor (1T1C). SRAMs are indispensable for neural network implementations given their faster access time; in addition, they can be leveraged for data reuse to reduce data fetch energy [Citation123,Citation124]. DRAM with its larger storage capacity is used to store all activations and training weights. However, owing to off-chip implementation, DRAM is slower with higher energy consumption than SRAM. Depending on the size of an SRAM and its location, the energy for read and write can scale from sub-pJ to 10 pJ per byte for SRAMs [Citation31,Citation125]. With an estimated SRAM size of 64KB used for DNN, the corresponding read and write latencies are 1 ns [Citation126], which can be projected to reduce to 0.25 ns in 7-nm CMOS. Given the size difference and off-chip implementation in comparison to SRAMs, off-chip DRAMs consume more than two orders of magnitude of energy for data fetch [Citation124]. High bandwidth memories (HBM) are used to reduce the energy consumption of DRAM’s data fetch [Citation127–130], by moving DRAM modules closer to the chip. Alternatives to DRAM include resistive memories and PCMs. Utilizing 1T1R in ReRAM crossbar topology was demonstrated with low energy consumption and write latency [Citation131,Citation132]. On the other hand, using PCMs as unit cells requires high energy to write, and has poor resolution and linearity [Citation133].

The PCMs operation is rooted in the switch between a crystalline (c) and an amorphous phase (a), typically via applied thermal stimulus (electrically or optically induced). Once switched into a particular state it remains there until a sufficiently high energy pulse (usually in the 600–1000 degree Celsius range) is delivered to the material. The switching energy is typically on the order of Nj, but, naturally, is a function of the thermal capacitor, hence scales with the device size. The memories’ WRITE and RESET speed (e.g. from ‘a’ to ‘c’ and back, or vise-versa) is determined by the heat source’s ability to deliver the energy in a short time, and the thermal capacitor’s dissipation rate. Together they set up a memory switching time, . There seem to be some confusion in the community about this; for instance, work from the Oxford group uses a ns-pulse laser to program GST memories, but the reported response times of the device is in units of seconds [J. W. Citation134, Citation135, Y. Zhang et al. [Citation139], Citation136]. Similar orders of magnitude were reported for

[Citation137]. Numerical 3D thermo-optical analysis of 1–10 micrometer small PCM pads based on GST or its alloys forecast a temporal response on the order of microseconds [Citation135]. As it stands, WRITE and RESET speeds that allow for a few dB of signal modulation (and not just 1–2

) on the order of microseconds or faster, have yet to be experimentally demonstrated. Once PCM are introduced to photonic-electronic neural networks, however, their usefulness can be rather high, since near zero-static power consumption’s can be realized by SET kernels using the PCM approach, thus enabling a compute-in-memory (CIM) paradigm enabling high system efficiency (this is for applications where the kernel is updated infrequently).

An analog memory cell made up of a capacitor has drawbacks such as need for ADC/DACs to interact with digital cells, low density compared to SRAM cell, need for refresh, and sensitivity to noise and crosstalk. However, for photonic computing, placing a capacitor memory cell next to photonic elements is attractive. This is because the computing is analog in nature, and photonic compute elements are much bigger than digital compute elements, so the large size of the capacitor memory cell in comparison to SRAM is not a major concern. Such an architecture can eliminate the data movement bottleneck, significantly reducing the access time and energy consumption. Monolithic photonic processes supporting metal capacitors are ideally suited for such implementations.

For most applications, training the neural network is time consuming, spanning hours to days or even weeks. Thus, it is realistic to assume that for most applications, the neurons’ weights or the kernel of a PTC is fixed and does not change often in time. For this reason, a non-volatile solution that retains information-of-state (i.e. memory), is of high interest. If achieved, a (near) zero static power consumption can be achieved in photonic neural networks, allowing them to be rather efficient. Note, ‘static’ refers here to the MAC operation and not to possible signal modulation, which would be considered part of the I/O of the system. Fortunately, such state-retention is recently achieved in electro-thermal programmable PCM.

Data I/Os: Photonic accelerators such as photonic neural networks and PTCs allow for high-throughputs approaching P-OPS (peta operations per second). Such a photonic ‘highway’, while promising, may not demonstrate its full potential, if the to-be-processed input data is not provided at a sufficiently high data rate to the optical accelerator. This can be assured in two ways; either the I/O data bandwidth is sufficiently high such as provided by a FPGA or the data is already prevailing in the optical domain (such as of an optical aperture from a camera system, for example). The latter is elegant, since it not only eliminates the needs to drive power-costly EO modulators for signal encoding, but more importantly eliminates the requirements for DACs/ADCs (see next paragraph). Indeed, high-speed DACs would consume about a third of the total photonic neural network system’s power. For the case of optical data as the input, some PTCs show a dramatic power drop from about 80 W down to 2 W when DACs are not needed [Citation138].

Domain crossings: digital/analog domain crossings: DACs and ADCs are required to interface a photonic neural network with digital signal processing (DSP) units (typically back-end) or when receiving data input data digitally such as from a server/computer etc. High sampling rate DACs used to drive input modulators mostly utilize current steering schemes and dissipate 5.5 pJ of energy for 6b-8b of conversion resolution [Citation140,Citation141]. The contribution of such converters to the overall energy efficiency of the neural network is reduced by 1/N as the network is scaled with N. Given the reusability of the weights over a given batch size, low speed capacitive DACs can be used. These DACs are usually adopted in textcolorredsynthetic aperture radar (SAR) architectures and typically contribute to most of the ADCs’ energy. Charge average switching, merge and split, charge recycling, and common mode voltage (Vcm) based charge recovery are some of the techniques used to reduce the DAC capacitance and consequently its switching energy [Citation142–144]. In the Vcm-based scheme, the differential DAC arrays are connected to a common mode voltage Vcm which reduces the DAC’s switching energy. The power consumption of high speed ADCs scales exponentially with the resolution and linearly with the conversion rate (Murmann, n.d.). For photonic neural network applications where the sampling frequency of the analog frontend is between 1–10 GS/s, and the resolution requirement is

8b, the energy consumption can be estimated as

1 pJ for state of the art ADCs (Murmann, n.d.). The architecture for ADC depends upon the photonic neural network. In recurrent neural network and long short-term memory (LSTM) networks, or in training back-propagation, the ADC is in a feedback network. Hence, a low-latency ADC architecture must be chosen. The lowest achievable latency is achieved in Flash ADCs, approximately

100 ps (with an estimated delay of 80 ps for dynamic comparators and 30 ps for the encoding gates). Therefore, latency-dependent photonic neural networks are limited to

10 GS/s of operation. However, high-speed Flash ADCs also consume large power. On the other hand, neural network architectures such as convolutional neural networks which do not rely on feedback relax the low latency constraints for the ADC. In such implementations, pipeline and time interleaved SAR ADCs are better suited given their low energy consumption and high conversion rate.

5. Training of photonic neural networks

Training is one of the key steps of most ANN algorithms. In common ANN, data are fed into the network and the weights are updated iteratively using error backpropagation algorithms, which requires significant computational resources. Photonic neural networks based on on-chip reconfigurable integrated photonic devices such as the MZI have been demonstrated as a promising energy efficient way to perform vector-matrix multiplications. For the deployment of photonic neural network to solve practical problems, these networks must be trained.

Current neural network optimization is largely based on back-propagating error gradients, and today’s boom in neural network applications is closely linked to the successful implementation of this concept in digital hardware. However, in hardware with unidirectional, i.e. forward flow of information, its implementation requires calculating the error-gradient for each network connection according to the chain rule of differentiation. In digital hardware this creates an enormous overhead, while in analog networks each weight and neuron parameter needs to be probed and stored, which ultimately is prohibitively complex in most settings. One hardware friendly alternative can leverage local learning rules such as STDP or Hebbian learning, yet their standard implementations usually result in sub-optimal performance. Recently a new class of optimization rules identify strategies which are more hardware friendly. The different versions of feedback alignment relax the requirement on the back-propagation of an error signal [Citation145]. Equilibrium propagation [Citation146], an energy-based model, entirely prevents error back-propagation yet achieves equivalent performance adjusts weights using local contrastive Hebbian learning. For that, the system’s input remains clamped, and an error signal is applied to the ouput. In voltage-based systems, this provides a local correction signal and the concept was successfully transferred to hardware, yet a mapping onto the governing physical laws of optics has not yet been established.

5.1. Training analogue photonic neural networks

Photonic optimization and wave propagation In systems where information can symmetrically propagate forward as well as backward, such as often the case in photonics, a regulatory error signal could be sent backwards. However, in order to physically implement weight optimization according to error back propagation, a neuron’s nonlinearity in backward direction needs to be the gradient of its nonlinearity in forward direction. Such asymmetric neurons are a challenge that remains largely out of reach until the current day, and the only viable photonic concepts rely on phase conjugation [Citation147–149]. An attractive alternative is to rely on networks comprising photonic neurons whose activation function and its derivative are proportional. This allows to use pump-probe methods, where inference is created by the forward propagation pump, while local error signals are provided by the backward propagating low-intensity probe (Guo, Barrett, Wang, & Lvovsky, 2021).

Other approaches optimize a set of weights using in-silicio simulations on a classical computer, which are then transferred to the optical system. However, such offline training significantly suffers from discrepancies between the numerical model and the physical substrates, making substantial subsequent training on the physical substrate essential. However, combining such training can result in state of the art performance, as was demonstrated using a cascaded electro-optical free-space setup that emulates deep neural networks (Zhou et al., 2021) and achieved performance superior to the seminal LeNet-5 architecture while outperforming a state of the art GPU in terms of speed and energy efficiency. This is in sofar remarkable as LeNet-5 has been a reference around 10 years ago, hence trained photonic neural networks are closing the gap. An alternative to error back-propagation is to optimize weights using competitive statistical optimization tools such as genetic algorithms [Citation139] or Bayesian optimization [Citation150].

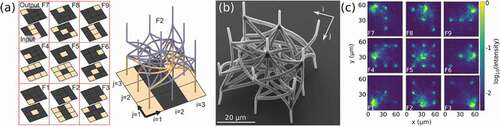

The optimization of integrated photonic circuits presents a challenge as it required probing local optical intensities at numerous sections across the entire circuit. One approach to mitigate the resulting complexity is leveraging the adjoint method, which substantially reduces the complexity due to a simplification of the associated mathematical model (Hughes, Minkov, Shi, & Fan, 2018), as shown in . In this work, a photonic analogue of the backpropagation algorithm is implemented based on the adjoint variable method (Hughes, Minkov, Shi, & Fan, 2018). By physically propagating the original field, the adjoint field and the interference field, the gradient of the loss function with respect to each phase shifter can be obtained simultaneously. The backpropagation procedure proceeds in a layer-by-layer fashion. In the -th layer of the neural network, the in situ backpropagation training steps are: (i) Forward propagation (): Encode data as the original field amplitudes

, send into the network and measure the field intensities

at each phase shifter. (ii) Error backpropagation (): Back propagate the error vector

, measure the field intensities at each shifter

and record the complex adjoint field as

. (iii) Interference measurement (): Send in the combination of the original and time-reversed adjoint fields

and measure the field intensities

at each phase shifter. (iv) Gradient calculation: Subtract the measured field intensities of (i) and (ii) from (iii) to get the gradient of loss function with respect to the phase change at each phase shifter. This procedure for gradient measurements is exact in lossless system and has been demonstrated numerically to be effective for systems with non-negligible, mode-dependent losses. The protocol provides an efficient way to compute the gradient of loss function with respect to arbitrary number of tunable parameters in constant time, which opens the possibility for in situ training of large-scale photonic circuits. This method for in situ measurements of device sensitivities can also be broadly applied to other reconfigurable systems and machine learning hardware platforms such as quantum optical circuits [Citation151] and optical phased arrays [Citation20].

Figure 3. Schematic illustration of photonic neural networks training through in situ backpropagation. Colored squares represent phase shifters. (a) Forward propagation: send in and record the intensity of the electric field

at each phase shifter. (b) Backpropagation: send in error signal

and record the electric field intensity

at each phase shifter and the complex conjugate output

. (c) Interference measurement: send in

to get gradient of the loss function

for all phase shifters simultaneously by extracting

. (Tyler et al., 2018) Hughes et al. (2018)

Boolean learning via coordinate descent Coordinate descent is a practical as well as efficient alternative to error back propagation and currently is heavily explored in the field of machine learning. Individual or sets of weights i.e. coordinates, usually drawn at random, are modified in order to probe the error landscape’s local gradient. Probing is followed by updating weights opposite to the gradient’s direction and with a weighting factor dubbed the learning rate. In photonic hardware Boolean neural network connection weights have been realized via a digital micro-mirror device (DMDs) [Citation121], and in a Boolean context the error gradient is probed by inverting a set of weights. Should the associated error gradient be negative, then the last modification is kept, otherwise it is discarded and the connections revert back to the previous configuration.

Boolean weights are currently explored in the general context of neural networks [Citation152] as well as in special-purpose electronic hardware [Citation153]. In photonic reservoir computing [Citation121] it was shown that Boolean coordinate descent converges exponentially and achieves chaotic signal prediction accuracy only slightly below a comparable photonic reservoir [Citation154] where double-precision weights were optimized offline. Furthermore, instead of a fully random, i.e. Makovian selection of descent coordinates, leveraging a greedy selection strategy allowed the system to converge twice as fast.

Boolean photonic weights implemented via a DMD enable programmable photonic neural networks comprising thousands of connections. In digitally emulated (Courbariaux et al., 2016), electronically [Citation153] and photonically implemented [Citation121] neural networks, such binarized weights resulted only in slight performance penalties. DMD-based Boolean coordinate descent in photonic neural networks therefore harbors great prospects for future, practical yet high performance neural networks, which noteworthy can be readily programmed based on classical software tools.

5.2. Ultrafast learning and spike timing dependent plasticity (STDP)

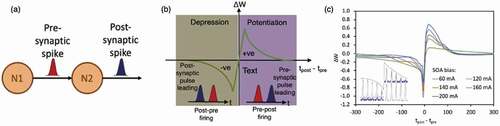

What makes neuron fascinating is its ability to learn and adapt, which is a powerful capability that governs our actions, thoughts, and memories. These important functions of humans are relying on the synaptic plasticity between neurons, which is self-adjusted based on the information being processed and the response of the neuron itself. Among different synaptic weight plasticity models, STDP is the most popular one, which is a biological process that adjusts the interconnection strength between neurons based on the temporal relationship (i.e. timing and sequence) between pre-synaptic and post-synaptic activities [Citation155]. The more you are running a certain neural circuit, the stronger the circuit becomes.

In STDP, the interconnection strength between two neurons (N1 and N2) is determined by the relative timing and sequence between the presynaptic (red) and post-synaptic spikes (blue), as illustrated in ). If N2 spikes shortly after the stimulation from the presynaptic spike, the interconnection strength will be significantly increased and results in long-term potentiation (LTP) of the connection strength, as illustrated by the shaded purple region in ). However, if N2 spikes before the stimulation from the pre-synaptic spike, the interconnection strength will be significantly decreased, resulting in long-term depression (LTD) of the synaptic connection, as illustrated by the shaded brown region in ). The exact amount of synaptic connection strength increment/decrement depending on the precise timing difference between the pre-synaptic and post-synaptic spikes of N2.

Figure 4. (a) Illustration of pre-synaptic and post-synaptic spikes from two neurons N1 and N2. (b) Illustration of a STDP response. Right purple region: long-term potentiation; Left brown region: long-term depression. tpost-tpre: time difference between the firing of the post- and pre-synaptic spikes. (c) Experimentally measured STDP curves from the photonic based STDP circuit

To enable ultrafast learning in photonic neuron network, STDP has to be implemented using photonics and integrated into the photonic neuron network [Citation156,Citation157]. One promising solution to implement STDP in photonic neuron network is using semiconductor optical amplifier (SOA) [Citation156–158]. SOA has a unique gain dynamic that is sensitive to the timing and sequence of the input stimulations, which is similar to the STDP in neurons. One example is to use both cross-gain modulation and cross-polarization modulation in SOA to mimic the LTP and LTD responses in biological neurons [Citation158], the resultant photonic STDP is shown in ) that reassemble the biological STDP. It has been shown that supervised learning can be achieved using a SOA based STDP and two SOAs based neurons [Citation156,Citation157].

6. Applications of photonic neural networks

Integrated optical neural networks will be smaller (hundreds of neurons) than electronic implementations (tens of millions of neurons). But the bandwidth and interconnect density in optics is significantly superior to that in electronics. This raises a question: what are the applications where sub-nanosecond latencies and energy efficiency trump the sheer size of processor? These may include applications 1) where the same task needs to be done over and over and needs to be done quickly; 2) where the signals to be processed are already in the analog domain (optical, wireless); and 3) where the same hardware can be used in a reconfigurable way. This section will discuss potential applications of photonic neural networks in computing, communication, and signal processing.

6.1. High-speed and low-latency signal processing for optical fiber communications and wireless communications

6.1.1. Optical fiber communications

The world is witnessing an explosion of internet traffic. The global internet traffic has reached 5.3 exabytes per day in 2020 and will continue doubling approximately every 18 months. Innovations in fiber communication technologies are required to sustain the long-term exponential growth of data traffic [Citation159]. Increasing data rate and system crosstalk has imposed significant challenges on the DSP chips in terms of ADCs performances, circuit complexity, and power consumption. A key to advancing the deployment of DSP relies on the consistent improvement in CMOS technology [Citation160]. However, the exponential hardware scaling of ASIC-based DSP chips, which is embodied in Moore’s law as other digital electronic hardware, is fundamentally unsustainable. In parallel, many efforts are focused on developing new DSP algorithms to minimize computational complexity, but usually at the expense of reducing transmission link performances [Citation161].

Instead of embracing such a complexity-performance trade-off, an alternative approach is to explore new hardware platforms that intrinsically offer high bandwidth, speed, and low power consumption [Citation162, Citation27, Citation22]. Machine learning algorithms, especially neural networks, have been found effective in performing many functions in optical networks, including dispersion and nonlinearity impairments compensation, channel equalization, optical performance monitoring, traffic prediction, etc. [Citation23].

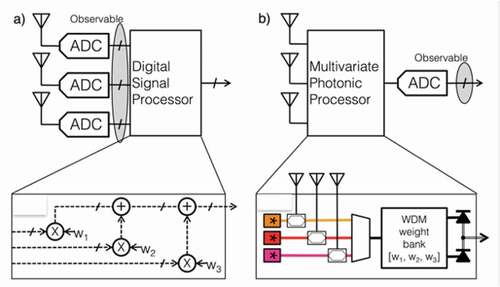

PNNs are well suited for optical communications because the optical signals are processed directly in the optical domain. This innovation avoids prohibitive energy consumption overhead and speed reduction in ADCs, especially in data center applications. In parallel, many PNN approaches are inspired by optical communication systems, making PNNs naturally suitable for processing optical communication signals. For example, we proposed synaptic weights and neuron networking architecture based on the concept of WDM to enable fan-in and weighted addition [Citation57]. This architecture can provide a seamless interface between PNNs and WDM systems, which can be applied as a front-end processor to address inter-wavelength or inter-mode crosstalks problems that DSP usually lacks the bandwidth or computing power to process (e.g. fiber nonlinearity compensation in WDM systems). Moreover, PNNs combine high-quality waveguides and photonic devices that have been initially developed for telecommunications. Therefore, PNNs, by default, can support fiber optic communication rates and enable real-time processing. For example, The a scalable silicon PNN proposed by the authors is composed of microring resonator (MRR) banks for synaptic weighting and O/E/O neurons to produce standard machine learning activation functions. The MRR weight bank is inspired by WDM filters, and the O/E/O neurons use typical silicon photodetector and modulator. Therefore, the optimization of associated devices in PNNs can utilize the fruits of the entire silicon photonic ecosystem that is paramountly driven by telecommunications and data center applications.

In order to truly demonstrate photonics can excel over DSP, careful considerations are required to identify different application scenarios (i.e. long-haul, short-reach) and system requirements (i.e. performances, energy). Continuous research is needed to improve photonic hardware and to develop hardware-compatible algorithms. In the following session, we discuss two approaches to apply PNNs for optical communications. One approach leverages standard deep learning algorithms (e.g. backpropagation) to train every parameters in PNN. The PNN can be feed-forward or recurrent. We provide an example demonstration of using this approach for fiber nonlinearity compensation in long-haul fiber communication systems. Another approach is reservoir computing which is constructed with a network of nonlinear nodes with random, but fixed, recurrent connections, followed by a read-out layer. Only the read-out layer is trainable. Photonic reservoir computing system constructed with different photonic circuits and devices have been exploited as a channel equalizer to solve linear and nonlinear impairments in short-reach optical communication systems.

Deep learning for fiber nonlinearity compensation Long-haul communication systems prioritize high performances in terms of distance reach and spectral efficiency. This requirement allows the use of coherent technology, along with dense wavelength multiplexing and polarization multiplexing schemes, to maximize the fiber capacity. In long-haul fiber optic transmission systems, fiber nonlinearity remains a challenge to the achievable capacity and transmission distance. One reason is that the nonlinear interplay between signal, noises, and optical fibers negates the accuracy of conventional nonlinear compensation algorithms based on digital backpropagation. Another reason is, the implementation of most nonlinear compensation algorithms in DSP chips demands excessive resources. In contrast, the neural network approach can learn and approximate the nonlinear perturbation from the abundant training data, rather than solely relying on the physical fiber model (known as stochastic nonlinear Schrodinger equation). Based on the perturbation methods, the derived neural network algorithm has enabled compensating the nonlinear distortion in a 10,800 km fiber transmission link with 32 Gbaud signals [S. Citation163].

Citation118,developed a photonic neural network platform based on the so-called ‘neuromorphic’ approach, aiming to map physical models of optoelectronic systems to abstract models of neural networks (which differs from the reservoir approaches discussed next). By doing so, the photonic neural network system can leverage existing machine learning algorithms (i.e. backpropagation) and map training results from simulations to heterogeneous photonic hardware. The concept is shown in . A proof-of-concept experiment demonstrates the real-time implementation of a trained feed-forward neural network model using an integrated silicon photonic neural network chip for fiber nonlinear compensation [Citation22]. In this work, the authors experimentally demonstrated that the silicon photonic neural network can produce a similar Q factor improvement compared to the simulated neural network for nonlinear compensation as shown in , but it promises to process the communication data in real-time and with high bandwidth and low latency.

Figure 5. (left) Concept of training and implementing photonic neural networks. Inset shows the NLAF of the photonic neural network measured with real-time signals. The NLAF is realized by a fast O/E/O neuron using a SiGe photodetector connected to a microring modulator with a reverse-biased PN junction embedded in the microring. (right) Constellations of X-polarization of a 32 Gbaud PM-16QAM, with the ANN-NLC gain of 0.57 dB in Q-factor and with the PNN-NLC gain of 0.51 dB in Q-factor [Citation22]

![Figure 5. (left) Concept of training and implementing photonic neural networks. Inset shows the NLAF of the photonic neural network measured with real-time signals. The NLAF is realized by a fast O/E/O neuron using a SiGe photodetector connected to a microring modulator with a reverse-biased PN junction embedded in the microring. (right) Constellations of X-polarization of a 32 Gbaud PM-16QAM, with the ANN-NLC gain of 0.57 dB in Q-factor and with the PNN-NLC gain of 0.51 dB in Q-factor [Citation22]](/cms/asset/e32586e0-4b5e-48c1-912a-4dcaca2d9ece/tapx_a_1981155_f0005_oc.jpg)

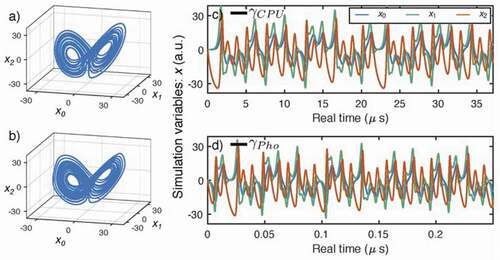

We also proposed a photonic architecture enabling all-to-all continuous-time recurrent neural networks (RNN) [Citation118]. Recurrent neural networks can resemble optical fiber transmission systems: the linear neuron-to-neuron connections with internal feedback is analog to linear multiple-input multiple-output (MIMO) fiber channel with dispersive memory. With neuron nonlinearity, RNNs can be ideally used to approximate both of linear and nonlinear effects in a fiber transmission system and compensate for different transmission impairments. RNNs, consisting of many feedback connections, are considered to be computationally expensive for digital hardware and require at least milliseconds to conduct a single inference. Contrarily, in photonic RNN, the feedback operations are simply done by busing the signals on photonic waveguides, allowing photonic hardware capable of converging to the solution within microseconds. This architecture thus allows to train PNNs externally using standard machine learning algorithms, e.g. backpropagation through time [Citation164].

Reservoir computing for channel and/or predistortion equalization Short-reach fiber-optic communication systems (FOCS) have recently seen increasing demand driven largely by the proliferation of cloud-based computing architectures and fronthauling in cloud radio access networks (C-RAN). DSP-based coherent transceivers are optimized for reach and capacity and generally considered commercially unviable for short-reach optical fiber links due to their high cost, footprint, and latency. Legacy systems employing intensity modulation and direct detection (IM/DD) with limited signal processing capabilities can provide low-cost solutions for inter-datacenter applications, however they cannot scale with the ever-increasing capacity requirements in modern communication networks. This has motivated renewed research interest in low-cost and low-complexity transceivers with bitrates optimized over short transmission distances (10–100 km) where channel impairments are dominated by dispersion with some nonlinear distortion [Citation165].

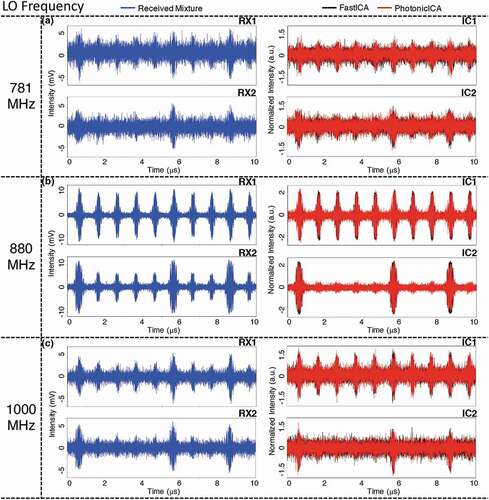

Photonic neural networks based on reservoir computing techniques have shown promising results for channel equalization in fiber-optic links. Reservoir computers (RC) are a class of recurrent neural networks that consist of a reservoir of sparsely connected neurons with randomized fixed weights. Contrary to feed-forward recurrent neural networks which are trained using backpropagation or Hessian-free optimization, reservoir computers only require the output weights to be trained, which can be achieved by linear regression. Optical RCs have attracted significant research interest as the reservoir can be realized by a single nonlinear element with a delayed feedback loop [Citation166] which has size- and cost-efficient implementations in photonic circuits. The first demonstrations of this technology for signal equalization tasks in FOCS used a semiconductor laser as a nonlinear element with a fiber delayed-feedback line and showed results competitive with DSP-based techniques [Citation162]. This approach is illustrated in . Real-time operation of this photonic RC faces challenges, however, as the input layer is time-multiplexed by electronically masking each bit before injection into the reservoir, which incurs a speed penalty as the bit time must be stretched to match the delay of the feedback loop [Citation167]. An all-optical implementation of a dual quadrature RC has also been proposed that could enable high bandwidth signal processing for coherent optical receivers [Citation167]. In this design, nonlinear transformation of the input signal is achieved through the Kerr effect in a highly nonlinear fiber with a modulated pump to select the desired signal quadrature. Optoelectronic approaches have also been considered, with optical pre-processing via spectral slicing to improve the dynamics of a digital reservoir computer. This architecture addresses the system losses incurred in all-optical RCs and achieves significant reach extension compared to legacy IM/DD systems at the cost of higher complexity as the number of photodetectors scales linearly with the number of spectral slices [Citation168]. Despite the SNR penalties, photonic RCs have merit over conventional DSP-based linear techniques when there is significant nonlinear distortion in the channel. Increasing the launch power at the transmitter may offset the system losses to achieve a higher optical SNR (OSNR) with improved performance in the presence of nonlinear impairments compared to linear equalizers. This hypothesis is supported by [Citation169] which compared the bit error rate (BER) vs OSNR trade-off for a state-of-the-art DSP-based equalizer against a photonic RC in a 100 km 56 Gbd dense WDM transmission system. As expected, the DSP equalizer is the better choice at low OSNR values, however the results show the RC-based equalization outperforms the digital receiver at high OSNR where the nonlinear perturbations are strong.

Figure 6. (a) Generic model of a reservoir computer [b) Illustration of the RC equalization scheme in Citation162,based on a single nonlinear node with time delayed feedback. The input is a vector of N samples representing a single bit or symbol. The mask vector

, which defines the input weights, is multiplied by the elements in

and injected sequentially into the reservoir. The output is a linear combination of the virtual nodes in the reservoir with weights optimized to produce an estimate

of the bit or symbol value

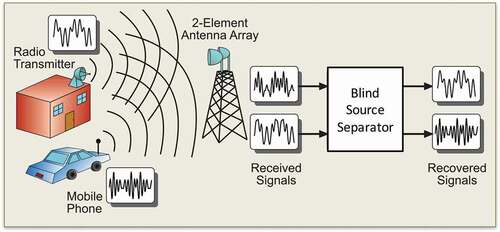

6.1.2. Jamming avoidance response

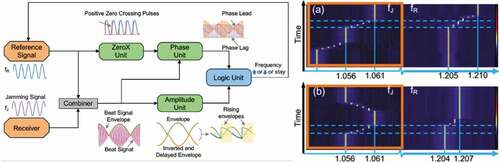

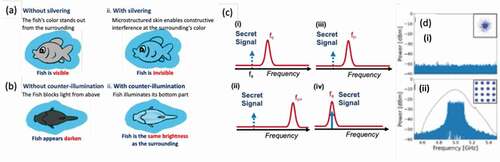

The dramatic increased demand in mobile RF systems has significantly worsened the spectral scarcity issue, the overcrowded RF spectrum increases the likelihood of inadvertent jamming (Citation170, Citation171]. Inadvertent jamming is one type of jamming that comes from a friendly source, that is usually aimless and unforeseeable. However, inadvertent jamming could easily corrupt the transmission channel if not being mitigated properly. In fact, inadvertent jamming does not only happen in our communication systems. Eigenmannia, a genus of glass knifefishes uses electric discharge to communicate with their own species and to recognize different species. Since Eigenmannia does not have FCC to regulate their frequency allocation, their frequency usage is dynamic. To avoid jamming, the Eigenmannia has an effective Jamming Avoidance Response (JAR) that helps the fish to identify potential jamming and automatically move their electric discharge frequency away from the potential jamming frequency [Citation172,Citation173]. The JAR in Eigenmannia mainly consists of four functional blocks (), they are (i) Zero-crossing point detection unit, (ii) Phase unit, (iii) Amplitude unit, and (iv) Logic unit.

Figure 7. (a) Illustration of the JAR design and the four functional units. (b) Spectral waterfall measurement of the photonic JAR in action with sinusoidal reference signal fR and jamming signals fJ = 150 MHz. (i) fJ is approaching fR from the low frequency side and triggers the JAR, (ii) fJ is approaching fR from the low frequency side and triggers the JAR, and then is moved away

First, the ZeroX unit identifies the positive zero crossing points in the reference signal. Then, phase comparison between the reference signal and the beat signal takes place at the Phase unit. Amplitude unit takes the envelope of the beat signal and marks the rising and falling amplitudes differently. Lastly, the Logic unit takes the phase and amplitude information obtained and determines if the electric discharge frequency should be increased or decreased to avoid jamming, and if there is no potential jamming threat.