ABSTRACT

While perspectives on open scholarship practices (OSPs) in Communication are noted in editorials and position papers, as a discipline we lack data-driven insights into how the larger community understands, feels about, engages in, and supports OSPs – insights that could inform current conversations about OSPs in Communication and document how the field shifts in response to ongoing discourses around OS in the current moment. A mixed-methodological survey of International Communication Association members (N = 330) suggested widespread familiarity with and support for some OSPs, but less engagement with them. In open-ended responses, respondents expressed several concerns, including reservations about unclear standards, presumed incompatibility with scholarly approaches, fears of a misuse of shared materials, and perceptions of a toxic culture surrounding open scholarship.

As a discipline, Communication is in the midst of a debate about openness. At the 2020 International Communication Association (ICA) annual conference and a follow-up at the 2021 conference, communication scholars engaged in lively discussions about the notion of ‘Open Science’ on conceptual, procedural, and ethical grounds, followed by a special issue of Journal of Communication devoted to open communication research (Shaw et al., 2021). Conceptually, communication scholars have discussed whether open science standards of reproducibility and replicability may be at odds with certain types of qualitative scholarship. Procedurally, some problematized the additional labor associated with manuscript preparation and review. From an ethical standpoint, some suggested open science practices are colonial in that they privilege academic voices over the subaltern voices of researched subjects. Indeed, debates over the appropriateness of the ‘open science’ label led ICA to adopt the more inclusive terminology of ‘open scholarship’ in recognition of the epistemological and methodological diversity in our discipline (we refer to Open Scholarship Practices, or OSPs, herein).

Broadly speaking, proponents of OSPs suggest that transparency will help ensure credibility of communication scholarship (Dienlin et al., 2021), whereas opponents argue that these practices privilege some groups of scholars while diminishing others (Bahlai et al., 2019) and could threaten marginalized populations within and outside of academia (Fox et al., 2021). Such debates are critical for establishing an agenda for discussing and interrogating OSPs, but they do not provide insights into broader patterns associated with how communication scholars understand and engage with these practices. In response to this lack of knowledge about the general attitudes and behaviors of communication scholars with regard to OSPs, we collaborated with ICA – one of the largest communication scholarship associations – to survey its members to better understand communication scholars’ (a) knowledge of, (b) engagement with, (c) attitudes regarding, and (d) perspectives towards OSPs. Such data are valuable for tracking where and how debates around OSPs gain traction, diffuse, and influence scholarly practices in Communication. This manuscript provides a summary of OSPs and the debate surrounding them, followed by a presentation of data from the ICA member survey (N = 330).

How scholars approach OSPs

OSPs refer to a broader movement to make both the process and output of scholarship more transparent and widely accessible (Dienlin et al., 2021; Lewis, 2019). Open scholarship is multifaceted and consists of a wide array of principles, values, and practices around procedural transparency and social inclusion in scholarship (Rinke & Wuttke, 2021). Some practices such as publishing study materials (e.g. survey items, experimental stimuli, codebooks) and sharing study datasets, analysis files, and software code (Bowman & Spence, 2020; van Atteveldt et al., 2019) aim at removing layers of opacity embedded in standard research practices (Bowman & Keene, 2018). Other practices seek to fundamentally shift the (social) scientific process itself rather than just making it more transparent, for example by encouraging researchers to register study predictions, methods, and analyses in advance of data collection (Ahn et al., 2021; Dienlin et al., 2021; Nosek & Lakens, 2014) – a practice known as preregistration. An extension of preregistration is the submission of multistage registered reports, in which authors submit their preregistered study protocols for publication consideration prior to data collection, and journals have the option of ‘accepting, in principle’ those study protocols (Center for Open Science, n.d.). Underlying these suggestions is a belief that credible scholarship should be independently verifiable (Verfaellie & McGwin, 2011), which should improve the credibility of published research (Klein et al., 2018) – or at least, allow for that research to be open for further review.

Along with a growing interest in OSPs, there is an emergence of scholarship investigating attitudes towards and engagement in OSPs in various places and academic disciplines. For example, Abele-Brehm et al. (2019) surveyed members of the German Psychological Society (‘Deutsche Gesellschaft für Psychologie’; DGP), recruiting via DGP-affiliated mailing lists and receiving 337 complete responses (from a possible 4121 contacts). Their data showed that psychologists held both hopes (such as increased trust in findings) and fears (such as concerns over others uncovering errors in one’s own findings), although (a) these categories were uncorrelated with each other and (b) hopes were generally rated higher than fears. Moreover, both hopes and fears were highest among early career researchers and lowest among established professors. Their data also showed that attitudes toward open data sharing, while positive overall, were contingent on cost–benefit considerations by psychological researchers – open-ended answers reflected that OSPs were seen as valuable despite the additional labor required to engage in them properly. Christensen et al. (2020) broadened the focus to consider awareness of, attitudes towards, perceived norms regarding, and adoption of open science practices by sampling psychologists as well as scholars in Economics, Political Science, and Sociology. All invited respondents were ‘elite social science researchers’ and were recruited from prestigious journals and doctoral programs in North America. Of 6058 potential respondents, 2787 replied. The survey found that by 2017, 84% of respondents had engaged in at least one OSP (including sharing data, sharing materials, or preregistering study protocols), up from about 25% a decade earlier, and attitudes were overall supportive of OSPs. Data sharing was the most prevalent practice (73%) followed by sharing materials (44%) and preregistration (20%), and these patterns held across all fields, with sociologists less likely to engage in all OSPs. Self-identified qualitative researchers were least likely to engage in these OSPs.

Broadening even further to include social and natural sciences as well as engineering scholars, Pardo Martínez and Poveda (2018) studied awareness of, attitudes towards, and engagement in OSPs among Colombian scholars (N = 1042). Similar to other studies, they found generally high awareness of OSPs, particularly open science tools (i.e. software, repositories, and networks) and open data. However, when asked to self-evaluate their knowledge, just over 53% of the sample ‘do not consider themselves to be sufficiently well informed’ about OSPs. Respondents signaled positive overall views of OSPs, but also indicated a lack of institutional support in terms of resources and evaluation criteria as barriers to their widespread adoption. Baždarić et al. (2021) conducted a similar survey of attitudes towards and engagement in open peer review, open data, and the use of preprints in a cross-disciplinary sample of scientists (N = 541) working at Croatian higher-education institutions. Breaking somewhat from other studies, they found overall neutral attitudes towards OSPs, with little variation across demographics and academic fields. This survey also identified a clear link between attitudinal support and practical engagement in OSPs, highlighting the importance of researchers’ attitudes for their research practices.

Finally, in considering career trajectories, Toribio-Flórez et al. (2021) focused on early-career scholars (ECRs) as presumed ‘drivers’ of future developments in academic research. Based on a survey of 568 PhD candidates affiliated with the Max Planck Society (broadly inclusive of social and natural sciences), they examined ECRs’ knowledge of, attitudes towards, and engagement in various OSPs. Several of their findings replicated prior work referenced above. In line with Abele-Brehm et al. (2019), ECRs held generally positive views of OSPs. Consistent with Baždarić et al. (2021), ECRs’ knowledge and attitudinal support positively predicted engagement in OSPs. Like Christensen et al. (2020), this survey confirmed substantial differences in OSP attitudes and engagement across disciplines (albeit with humanities scholars being overall supportive), again pointing to a need to collect discipline-specific data and to develop discipline-specific reforms and incentive systems geared towards establishing adequate forms of OSPs. This latter conclusion is especially relevant for internally diverse fields like Communication, which was not represented in any of the prior studies noted – thus motivating the current survey.

The state of OSPs in communication

Multiple Communication journals, including Communication Research Reports (Bowman & Keene, 2018), Communication Studies (Bowman & Spence, 2020), Journal of Communication (Dienlin et al., 2021), and Media Psychology (Ahn et al., 2021), have recently published editorials endorsing OSPs, while not requiring them as a condition for publication. These journals and others have begun to endorse and promote OSPs, such as by issuing open science badges for published papers (including Communication Studies, Communication Research Reports, Journal of Media Psychology, Media Psychology, Political Communication, Psychology of Popular Media, and others listed at https://www.cos.io/initiatives/badges), which could be taken as evidence that the profile of these practices has increased in the Communication discipline. Likewise, publishers such as Taylor & Francis (CRR, CS, and Media Psychology) have signed on to the Transparency and Openness Promotion (TOP) guidelines (Taylor & Francis, 2022), signaling their institutional support for OSPs.Footnote1

However, relying solely on editorial statements or publisher endorsements to assess field-wide perspectives might create the misperception that OSPs are more widely endorsed than they are. For example, a quick inspection of ad-hoc conversations (such as those around #opencomm) suggests disagreement among scholars in terms of knowledge about, support for, and practices of open scholarship. The recent special issue of Journal of Communication focused on ‘Open Communication Research’ likewise featured editorials cautious and critical of OSPs. Fox et al. (2021) expressed concerns that open scholarship initiatives focus on openness at the potential expense of adequately protecting research participants, communities, and even researchers themselves – issues compounded further when working with historically marginalized communities. For instance, whereas Dienlin et al. (2021) suggest that Communication scholars should default to sharing research data and be compelled to explain why data should not be shared on a case-by-case basis, Fox et al. (2021) counter that Communication scholars should default to data protection, and only share if they can adequately justify how data sharing will not harm those involved in a given study. Dutta et al. (2021) observed that the discourse around open scholarship obfuscates contributions of Global South perspectives – including already shared critiques over data generalizability (such as the application of White, Educated, Industrialized, Rich, and Democratic samples to other populations; Bates [2020], first proposed in psychology by Henrich et al. [2010]) and established data sharing practices, among others. Concerns similar to those of Dutta et al. (2021) were raised in a Latin American context by Moreira de Oliveira et al. (2021). Arthur and Hearn (2021) expanded on Dienlin et al. (2021) to highlight the challenges and opportunities for open scholarship in humanities-focused work, in which longer forms of scholarship (such as monographs and books) are preferred – such works are not commonly discussed in OSP dialogues. Humphreys et al. (2021) similarly focused more clearly on qualitative research methods, in which issues such as validity, transparency, reflexivity, collaboration, and ethics are key to trustworthy scholarship.

Of course, the presence of supportive and contra editorials on OSPs cannot be taken as a valid indicator of how Communication scholars understand, approach, or engage with these practices. Some empirical results have been presented. For example, Markowitz et al. (2021) looked at already published Communication scholarship in 26 journals from 2010 to 2020 (k = 10,517 manuscripts) and found that just over 5% of those engaged in some OSPs, although the analysis did not specifically report which practices were used. The report also found that Journal of Media Psychology, Political Communication, and Communication Research Reports had the strongest increase in OSPs over the analysis period. Bakker et al. (2021) surveyed scholars publishing in 20 Communication journals with a specific focus on ‘quantitative communication research’ between 2010 and 2020 (N = 1039 of a possible 4157). Their results suggested some prevalence of questionable research practices (QRPs), such as misrepresenting inductive findings as hypothetico-deductive (hypothesizing after results were known, or HARKing, 46%), post-hoc adding or removing covariates from analyses (46%) or changing analysis plans (45%) to seek more favorable results (i.e. results meeting thresholds of statistical significance). The study also found that respondents were critical of QRPs but believed that such practices were generally prevalent in Communication (and thus, a partial defense of their own engagement in QRPs); others defended QRPs as a by-product of perceived publication biases in which statistically significant findings are more likely to be accepted by journals. The same report suggested that support for OSPs to combat QRPs was a position endorsed by about one third of the respondents in open-ended question responses. That said, there are shortcomings to these existing analyses. For example, given that discussion of OSPs is a recent phenomenon with the journals named above having only adopted these practices in the last three years, a content analysis of OSPs in existing publications is likely to report overall low adoption. This uncertainty is compounded by the fact that journals do not all follow a similar set of standards – for example, there is no consistent set of OSP policies among ICA’s official journals, although some journals (such as Journal of Communication) have published essays encouraging OSPs. Likewise, targeting published authors introduces a bias insofar as it only speaks to those scholars actively publishing in a narrow band of journals (for example, a focus on quantitative scholars in Bakker et al., 2021), rather than probing from a broader set of Communication scholars such as the constituency of a large, diverse, and international organization such as ICA.

In recognizing that OSPs are an increasingly important discussion topic among Communication scholars, we examined how Communication scholars view and engage with OSPs by surveying the membership of ICA as one of the largest associations of Communication scholars worldwide. By doing so, this research makes four main contributions. First, our project provides a ‘field-level’ view of how Communication scholars understand and interpret OSPs and to what extent they endorse and engage with OSPs at a critical time for such debates – for example, funding agencies are moving towards requiring some OSPs such as open access (Bosman et al., 2021; Else, 2021) and some journals are instituting mandatory data sharing (Resnik et al., 2019) or at least publicly endorsing OSPs (see Taylor & Francis, 2022). Here, our project offers empirical insights, rather than relying on debates and position papers, to inform ongoing debates around reforms in Communication scholarship norms, values, and practices (e.g. Bowman & Keene, 2018; Dienlin et al., 2021; Lewis, 2019). Second, due to the diverse membership of ICA, the survey is well positioned to capture perspectives on OSPs across many subfields, epistemological and methodological orientations as well as academic career stages present in the Communication discipline. The data presented in this study provide evidence-based insights into how a broader group of Communication scholars view OSPs in their chosen field. Third, the current study forms the foundation for tracking potential shifts in these perspectives over time for later attempts to historicize these debates and their outcomes. Finally, data from this project can directly inform potential revisions to publication policies, Communication methods curricula, promotion and tenure guidelines, and standards for research quality assessment across the subdisciplines.
With these points in mind, we created a mixed-method questionnaire to provide a comprehensive assessment of Communication scholars’ (a) knowledge of, (b) attitudes towards, (c) engagement with, and (d) support for various OSPs. As such, our focal research question is:

RQ1a-d: What are the overall patterns of ICA members’ (a) knowledge of, (b) attitudes towards, (c) engagement with, and (d) support for open scholarship practices?

Although we focus on an overview of the ICA membership at large, we also offer two comparisons between various self-identified groups of ICA members that might differ in their responses (i.e. based on career stage and epistemology/preferred methodology). Houtkoop et al. (2018) found a low frequency of data sharingFootnote2 and informal discussions regarding OSPs in a sample of mostly senior psychologists, suggesting that resistance to OSP-oriented reform could be more prevalent among more established scholars, though other studies (Baždarić et al., 2021) have not reported similar gaps. Thus, our second question is:

RQ2a-d: Do ICA members’ (a) knowledge of, (b) engagement with, (c) attitudes regarding, and (d) perspective towards open scholarship practices vary as a function of career progression status?

In addition, others have argued that scholars’ methodological and epistemological focus might shape their responses to OSPs, as qualitative data might not be as easily or ethically shared outside of its original context as quantitative data (Childs et al., 2014; Jacobs et al., 2021), and interpretivist and humanities-based scholarship is more likely seen as incompatible with notions of generalizability, replication, and reproduction than social science orientations rooted in empirical data (del Rio Riande et al., 2020; Knöchelmann, 2019). Hence, we ask:

RQ3a-d: Do ICA members’ (a) knowledge of, (b) engagement with, (c) attitudes regarding, and (d) perspective towards open scholarship practices vary as a function of preferred methodology?

RQ4a-d: Do ICA members’ (a) knowledge of, (b) engagement with, (c) attitudes regarding, and (d) perspective towards open scholarship practices vary as a function of preferred epistemology?

Finally, our census approach in targeting a specific scholarly association is similar to the approach used by Abele-Brehm et al. (2019), who targeted psychologists with membership in DGP. Their study provides a unique opportunity to draw comparisons between organizations with members from related (i.e. many Communication scholars identify as social scientists and work with psychological constructs and theories) yet separate (i.e. many Communication scholars work more in humanities orientations) areas of scholarship. Here, comparisons between ICA and DGP could reveal discipline-specific variance in OSP orientations or similarities across disciplines, which are relevant to OSP debates and discussions. For example, DGP exists ‘to advance and expand scientific psychology’ (German Psychological Society, 2022, para. 1, emphasis added) which could be interpreted as representing social scientists with less variance in their epistemologies and methodologies than our focal ICA respondents. Moreover, as noted in Dienlin et al. (2021), much of the concern over QRPs and dialogue around OSPs in Communication was animated by scientific fraud committed in Social Psychology (such as the case of Diederik Stapel; Levelt et al., 2012; Markowitz & Hancock, 2014) and much of the discourse around OSPs has origins in the psychological sciences. Finally, Abele-Brehm et al. (2019) have made both their research materials and study data freely available, which facilitates our comparison. Our final research question is:

RQ5: How do ICA members’ attitudes regarding OSPs compare with DGP members’?

Method

Individuals registered as members of the International Communication Association (ICA) as of August 2020 were asked via email to reply to a brief survey of their knowledge of, engagement with, attitudes towards, and perspectives on OSPs. The survey was distributed on 26 August 2020 and made available for eight weeks, with bi-weekly reminders for those yet to complete the survey. The authors partnered with ICA in the planning and execution of this survey, and study protocols were approved by the host institution’s review board ([reference number anonymized for review]). To (1) reduce potential desirability bias that may have been introduced by knowledge of the originators of the survey, all of whom are scholars actively involved in debates around open scholarship, and (2) protect the identities and personal information of all respondents, ICA staff organized all solicitations.Footnote3 A copy of our survey instrument, anonymized data analysis file, and data output with accompanying SPSS syntax are made available online via the Open Science Framework (OSF): https://osf.io/7dyte/?view_only=cecbcc641cd24be1b3c515513a2ff0a2.

Participants

We received 387 responses to the questionnaire, which was sent to 3584 registered ICA members (active as of 26 August 2020), 1810 of whom opened the email. The average time to complete the survey was 8.28 min (SD = 65.19), with a median of 12.04. As the survey was intended to be completed in a single session, we removed completion times in the fastest 5% (≤ 1:12, n = 19) and the slowest 10% (≥ 49:21, n = 38) from further analysis.Footnote4 This resulted in the removal of 57 responses, for a final sample of N = 330 respondents, with an adjusted average completion time of M = 13.54 min (SD = 8.55). Median response time was 11.38 and the shortest modal response time was 7.19.
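The percentile-based trimming of completion times described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' SPSS procedure: the function name `trim_by_percentile`, the interpolation method, and the sample times are all hypothetical, and the cut points default to the 5th and 90th percentiles mentioned in the text.

```python
import statistics

def trim_by_percentile(times, lower_pct=5, upper_pct=90):
    """Keep completion times strictly between two percentile cut points.

    Times at or below the lower cut, or at or above the upper cut, are dropped.
    The 'inclusive' interpolation method is an assumption of this sketch.
    """
    # With n=100, quantiles() returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(times, n=100, method="inclusive")
    low_cut = cuts[lower_pct - 1]
    high_cut = cuts[upper_pct - 1]
    kept = [t for t in times if low_cut < t < high_cut]
    return kept, low_cut, high_cut

# Hypothetical completion times in minutes (NOT the study data).
times = [0.8, 1.0, 5.5, 7.2, 9.1, 10.4, 11.4, 12.0, 13.5, 14.2,
         15.0, 16.8, 18.3, 20.1, 22.7, 25.0, 30.2, 41.0, 55.0, 600.0]

kept, low_cut, high_cut = trim_by_percentile(times)
print(f"kept {len(kept)} of {len(times)} responses")
print(f"mean after trimming: {statistics.fmean(kept):.2f} min")
```

A single extreme value (such as a survey left open overnight) can inflate both the mean and the standard deviation, which is why trimmed descriptives are typically reported alongside the raw ones.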

Participants were asked to report demographic information using survey items from ICA’s standard profile information, although they were given the option to skip some or all questions (and hence, distributions reported here will not add to 100%). One hundred and six respondents (32.1% of the sample) identified as either ‘woman’ or ‘cisgender – woman’ and 102 (30.9%) identified as either ‘man’ or ‘cisgender – man,’ with 60 (18.2%) either not responding or explicitly marking ‘prefer not to say.’Footnote5

A majority of respondents lived in the United States of America (n = 169, 51.2%) at the time of taking the survey, with Germany (n = 20, 6.1%) representing the next largest group. In all, 36 nations were represented. Just under one-quarter of the sample (n = 74, 22.4%) chose not to list a country of residence.Footnote6

With respect to career progression, 74 respondents (22.4%) reported ‘senior faculty’ status, followed by 64 (19.4%) as ‘mid-career faculty,’ 51 (15.5%) as ‘junior faculty’ and 42 (12.7%) as ‘students.’ These four groups are used in between-groups comparisons.

Regarding ICA subfield affiliations, all 33 ICA divisions and interest groups were represented in the data, with many participants having multiple affiliations. Five subfields were represented by at least 10% of the sample: Communication and Technology (n = 75, 22.7%), Mass Communication (n = 58, 17.6%), Political Communication (n = 56, 17.0%), Journalism Studies (n = 54, 16.4%), and Health Communication (n = 41, 12.4%); infrequent affiliations included Instructional and Developmental Communication and Public Diplomacy (each n = 3, 1%), Intergroup Communication and Sports Communication (each n = 7, 2%).Footnote7 Due to differing response rates by subfield affiliation, comparisons were not made between affiliation groups.

Measures

We present measures below in their order-of-appearance in the survey (see OSF for complete survey metric).

Thoughts associated with ‘Open Science’ or ‘Open Scholarship’

Respondents were first asked to provide up to three answers to the question, ‘When you hear terms such as ‘open scholarship’ or ‘open science,’ what are the first three thoughts that come to mind?’ About 97% of the sample (n = 320) provided at least one answer, and 93% of the sample (n = 307) provided two or more answers. In thematic analysis, answers were pooled for descriptive reporting.

Knowledge of OSPs

Respondents were asked how much knowledge they felt they had about OSPs. We intentionally did not define the construct for participants as later questions asked for self-definitions. Response options were ‘no knowledge at all,’ ‘a little knowledge,’ ‘some knowledge,’ and ‘a great deal of knowledge’ (data reported in Results).

Familiarity with OSPs

In a single text field, participants were asked to list as many OSPs as they could recall. 82% of participants (n = 272) gave at least one answer, and the average number of practices listed was 2 (SD = 2, Mode = 1).

Engagement with OSPs

Respondents were asked eight 5-point Likert-scale items (‘0 = never’ to ‘4 = always’) about prior experience with specific OSPs. These items were not intended to be combined to indicate an underlying factor, but we conducted a principal components analysis using oblique rotation (direct oblimin) to explore the potential for these items to form components with shared patterns of variance (see Bowman & Goodboy, 2020; Park et al., 2002). Although the Bartlett’s test of sphericity was statistically significant, χ2(28) = 722.04, p < .001, the Kaiser-Meyer-Olkin measure of sampling adequacy was middling (.712), suggesting that variance explained would not be improved by using component clusters as compared to treating each listed OSP as an individual practice. Further inspection of the pattern matrix showed only three components, only one of which had an eigenvalue substantially greater than 1.00, with several items cross-loading onto multiple components (full analysis output shared to OSF). Thus, we chose to retain the individual items, each as representative of a specific OSP.
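As a quick consistency check on the reported test, the degrees of freedom for Bartlett's test of sphericity depend only on the number of items p, as p(p − 1)/2. The helper below is a hypothetical illustration (the name `bartlett_df` is not from the study materials) showing that eight items yield the 28 degrees of freedom reported above.

```python
def bartlett_df(n_items: int) -> int:
    """Degrees of freedom for Bartlett's test of sphericity: p(p - 1) / 2."""
    if n_items < 2:
        raise ValueError("Bartlett's test needs at least two items")
    return n_items * (n_items - 1) // 2

# Eight engagement items, as described in the text.
print(bartlett_df(8))  # 28
```

The same relationship can be used to confirm how many items entered any other factor or component analysis from its reported degrees of freedom.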

Perceived benefits and problems of OSPs

Participants were asked to provide up to three answers to the two open questions, ‘In your view, what are the top three [benefits/problems] of open scholarship/science practices?’ For both benefits and problems, just about 85% of respondents (n = 284 for benefits, n = 280 for problems) chose to provide at least one answer. Only nine participants listed at least one benefit without listing any problems, and only nine participants listed at least one problem without listing any benefits.

Attitudes towards OSPs

To compare answers to an existing dataset of active association researchers, we used an instrument deployed by Abele-Brehm et al. (2019) in a survey of 337 members of the German Psychological Society (www.dgps.de). Among other questions, they used a 14-item Likert-scale measure assessing attitudes towards various OSPs. We adjusted their survey in three ways. First, we read through their original German-language items (Abele-Brehm et al., 2018) and reported English-language translations (Abele-Brehm et al., 2019) using native and second-language German and English speakers to reword items for clarity.Footnote8 Second, we broke one question ‘Publishing research data is generally dispensable’ into separate questions ‘Publishing research data is generally superfluous’ and ‘Publishing research data is generally problematic’ to avoid presenting a double-barreled item to respondents (i.e. an item in which one could agree with part but not all of the statement). Third, their scale used a four-point response scale (1 = do not agree, 2 = slightly agree, 3 = fairly agree, 4 = fully agree), but such a scale did not include a neutral point and did not allow for the assessment of polarized disagreement, which could have biased respondents in their study towards agreement. To address this, we used a five-point Likert response scale from ‘strongly disagree’ to ‘strongly agree’ calibrated around a neutral point (see OSF materials).

Given our alterations to the scale, we performed an exploratory factor analysis (EFA) using principal axis factoring and oblique (direct oblimin) rotation with a maximum of 100 iterations (Institute for Digital Research & Education, n.d.). An initial analysis suggested factor analysis to be appropriate for the dataset regarding sampling adequacy (KMO = .852) and sphericity, χ2(105) = 1561.8, p < .001. Three items were removed for weak primary loadings in an initial EFA (≤ .400 on primary factor) and two were removed for problematic cross-loadings (≥ .300 on other factors) in a second EFA. Our analysis yielded three factors: support for OSPs, concerns about OSPs, and trust as a function of OSPs – the solution explained 51.6% of variance, although we note that KMO scores for the final analysis dropped below a preferred .800 (KMO = .763 for the final analysis) while still passing a sphericity test, χ2(45) = 954.4, p < .001. Factor descriptives as well as item descriptives (including dropped items) and estimates of internal consistency (McDonald’s omega ω for factors with more than two items; see Goodboy & Martin, 2020; Hayes & Coutts, 2020; Spearman-Brown’s ρ for factors with two items; see Eisinga et al., 2013) are reported in Table 1 and explained in further detail in our Results section. Eighty-one participants (just under 25%) chose to answer an open-ended follow-up question, with an average word count of 40 (SD = 32).
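For readers less familiar with the two-item reliability estimate mentioned above, the Spearman-Brown coefficient is computed from the correlation r between the two items as ρ = 2r / (1 + r). The sketch below is illustrative only: the function names and the item responses are hypothetical and are not drawn from the study data.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman_brown(r):
    """Spearman-Brown reliability for a two-item scale: 2r / (1 + r)."""
    return 2 * r / (1 + r)

# Hypothetical responses to two 5-point items (NOT the study data).
item_a = [4, 5, 3, 2, 4, 5, 1, 3]
item_b = [4, 4, 3, 1, 5, 5, 2, 3]

r = pearson_r(item_a, item_b)
rho = spearman_brown(r)
print(f"r = {r:.3f}, Spearman-Brown rho = {rho:.3f}")
```

For positively correlated items, the coefficient is always at least as large as the raw correlation, reflecting the reliability gain from combining two items into one score.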

Table 1. Attitudes towards various open scholarship practices (see Abele-Brehm et al., 2019).

Attitudes towards ICA’s Adoption of OSPs

Respondents were then asked about six hypothetical OSP policies that could conceivably be adopted by the ICA: one set applied to annual ICA conference submissions and one set applied to ICA journal publications (see OSF project repository for complete survey items). All items were on five-point scales from ‘strongly oppose (0)’ to ‘strongly support (4).’ As with the ‘engagement in OSP’ items, these were designed a priori not as items indicating an underlying factor but as discrete practices. However, to examine the possibility that these discrete practices formed larger components with shared variance (Bowman & Goodboy, 2020; Park et al., 2002), PCA was performed on each set of items – for ICA journals and for the ICA conference (see Table 2). For ICA journals, the Bartlett’s test of sphericity was statistically significant, χ2(15) = 1025.614, p < .001, and the Kaiser-Meyer-Olkin measure of sampling adequacy was middling (.750), with the six indicators collapsing onto a single component. For the annual ICA conference, the Bartlett’s test of sphericity was statistically significant, χ2(15) = 1439.998, p < .001, and the Kaiser-Meyer-Olkin measure of sampling adequacy was middling (.719). Although a scree plot suggested a second component with an eigenvalue greater than 1.00, the overall gain in variance explained by this second component (eigenvalue = 1.097) and inspection of primary component loadings did not provide adequate evidence that the items formed more than one component. Thus, for data analyses, both sets of items were collapsed into single components (one for ICA journals, one for ICA conferences), which suggests that survey respondents’ dispositions towards OSPs at the journal and conference level might not be nuanced enough to distinguish discrete practices.

Table 2. Respondents’ attitudes toward specific OSPs regarding submission to ICA journals and ICA conferences.

Scholarly focus

Participants were presented with a pair of semantic differential items asking them to mark (a) whether their scholarship was more likely to engage with quantitative or qualitative methods and (b) whether they adopted a more social science or humanities focus. For both items, responses were recorded such that ‘0’ represented full agreement with the left anchor (i.e. always engaging quantitative methods; always engaging a social science perspective), ‘50’ suggesting scholars who balance or engage both equally, and ‘100’ representing full agreement with the right anchor (i.e. always engaging qualitative methods, always engaging a humanities perspective).

The average score for the ‘quantitative methods – qualitative methods’ differential was M = 45.13, SD = 33.14 (median score of 41.00, modal score of 0) and the average score for the ‘social sciences – humanities’ differential was M = 27.95, SD = 28.88 (median score of 20.00, modal score of 0), with 59 missing cases for the former and 73 missing cases for the latter. One-sample t-tests against a score of ‘50’ (blended) suggest that sample-wide, respondents made greater use of quantitative methods, t(315) = −2.42, p = .016, and were orientated towards social science approaches, t(301) = −12.38, p < .001. Notably, the bivariate correlation between both scores was r(300) = .530, p < .001, suggesting that the two responses were related but retained some degree of independence.

Sample demographics

As reported above, participants were asked a suite of questions about their gender identity, professional position and career progress, and membership within ICA. All questions were taken directly from ICA’s standard demographic measures. Only career progress was used in analyses.

Results

Our results are presented below, grouped by the nature of the data analyzed. We first used quantitative survey results to address our four research questions, and then supplemented these analyses with an emergent thematic analysis of open-ended data. Qualitative data focus more specifically on sample-wide knowledge of (RQ1a) and attitudes regarding OSPs (RQ1c). Quantitative measures are analyzed presuming interval-level measurement for Likert-scaled and -type dependent variables (see Carifio & Perla, 2007; Robitzsch, 2020). Sample sizes vary for each analysis as a function of list-wise deletion for incomplete data (i.e. not all respondents answered every survey question).

Quantitative data analyses

Knowledge of OSPs

RQ1a concerned how much knowledge respondents felt they had about OSPs. The average sample-wide score was M = 1.57 (SD = .81). Given that scores of ‘0.00’ represent ‘no knowledge of OSPs,’ a follow-up one-sample t-test reported a statistically significant difference, t(328) = 35.4, p < .001, Cohen’s d = 1.95, suggesting that participants overall had ‘a little’ to ‘some’ knowledge of OSPs.
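
As a quick check on how the effect size above relates to the test statistic, a one-sample Cohen’s d can be recovered from t and the sample size as d = t / √n. The sketch below uses only the values reported in the preceding paragraph; the function name is an illustrative assumption, and this is not necessarily how the original analysis computed d.

```python
import math


def cohens_d_from_t(t: float, n: int) -> float:
    """One-sample Cohen's d recovered from a t statistic: d = t / sqrt(n)."""
    return t / math.sqrt(n)


# Values reported in the text: t(328) = 35.4, so n = df + 1 = 329.
d = cohens_d_from_t(35.4, 328 + 1)
print(round(d, 2))  # 1.95, matching the reported effect size
```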

In addressing RQ2a, an analysis of variance (ANOVA) was performed comparing knowledge of OSPs means across four career progression categories (i.e. student, junior, mid-career, senior scholars). The result was not statistically significant, F(3, 230) = .699, p = .553, partial η2 = .009, suggesting that career progression stages had no appreciable impact on one’s knowledge of OSPs.

With respect to methodological and epistemological approaches (RQ3a and RQ4a, respectively), a multiple regression analysis was performed using both measures as independent ratio variables predicting knowledge of OSPs. The model was not statistically significant, F(2,252) = 1.32, p = .268, adjusted R2 = .003, Durbin-Watson = 1.67. In other words, the level of self-reported knowledge about OSPs was not significantly associated with a respondent’s methodological or epistemological preferences.Footnote9

Prior engagement with OSPs

To address RQ1b, respondents were asked to report how often they tend to engage with eight different OSPs; responses were subjected to a series of one-sample t-tests comparing each frequency to a value of ‘0.00’ (‘never’ engaging in the practice). For all eight OSPs, participants reported at least some engagement, although preregistered reports were the least commonly engaged OSPs (Table 3).

Table 3. Sample-wide frequencies of engaging with OSPs.

To address RQ2b, we compared the relative frequency of these practices across four career progression categories in a series of one-way ANOVA tests (Table 4). No statistically significant differences were found, suggesting that career progression is not associated with engagement with OSPs among our respondents.

Table 4. Average engagement with OSPs across career stages.

In examining RQ3b and RQ4b, we considered the influence of methodological and epistemological preferences on engaging with OSPs in separate regression models (see Table 5). Only two significant regression models were found, indicating that scholars who engaged with social science approaches were more likely to share their research materials online, F(2,243) = 3.91, p = .021, adjusted R2 = .023, as well as their datasets, F(2,242) = 3.36, p = .037, adjusted R2 = .019. However, when Bonferroni corrections are applied to these tests to protect against false positives (for overview, see Allen, 2017), all findings failed to reach the adjusted p-value of .003. Interaction terms between methodological and epistemological preferences were also considered in all analyses but were statistically non-significant (see OSF for complete analysis results). Overall, there is no compelling evidence in this sample that engagement in OSPs is influenced by methodological or epistemological preferences.
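
The Bonferroni logic described above can be sketched as follows. The text reports only the adjusted threshold (.003), not the number of tests it was derived from, so the count of 16 used here (eight OSPs by two predictors) is an assumption chosen because it reproduces that threshold; the function and variable names are likewise illustrative.

```python
def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under a Bonferroni correction."""
    return alpha / n_tests


# ASSUMPTION: 16 tests (8 OSPs x 2 predictors); the source does not state the count.
N_TESTS = 16
threshold = bonferroni_alpha(0.05, N_TESTS)

# The two model p-values reported in the text.
reported_p = {"research materials": 0.021, "datasets": 0.037}
survives = {name: p < threshold for name, p in reported_p.items()}

print(round(threshold, 3))
print(survives)
```

Under this assumed count, neither of the two nominally significant models survives the correction, which is consistent with the conclusion stated above.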

Table 5. Influence of methodological and epistemological focus on engagement with OSPs.

Attitudes towards OSPs

Sample-wide, all three extracted factors – support, concerns, and trust – had average scores significantly different from the scale neutral point of 3.00 (RQ1c). Participants rated both support (M = 3.60, SD = 0.79), t(298) = 13.07, p < .001, and trust (M = 3.63, SD = 1.05), t(298) = 10.40, p < .001, higher than the scale midpoint (i.e. trended towards agreement). Conversely, concerns over OSPs were lower than the neutral point (i.e. trended towards disagreement; M = 2.86, SD = 0.93), t(297) = −2.68, p = .008. In addition, the five individual items that did not load on to any factor also had means that were significantly greater than the neutral point (see OSF) – benefits outweighed perceived costs, publishing data was neither superfluous nor problematic, and data sharing was not seen as detrimental to one’s career. The only item that trended towards disagreement was basing hiring decisions on prior data-sharing practices (see Table 1, in addition to the supplemental analysis file shared to OSF).

To compare responses across career progression categories (RQ2c), a MANOVA was used to account for correlations between dependent variables: support and concern, r(298) = −.175, p = .002; trust and concern, r(298) = −.132, p = .002; and support and trust, r(298) = .521, p < .001. There was no significant overall multivariate effect, Wilks’ Λ = .977, F(9, 511.2) = 0.552, p = .836. Likewise, no significant univariate effects were found, suggesting that attitudes towards OSPs do not vary as a function of career progression.

Finally, we considered the potential for methodological (RQ3c) or epistemological preferences (RQ4c) to impact attitudes towards OSPs using a canonical correlation analysis. The multivariate test did not produce a statistically significant result, Wilks’ Λ = .973, F(6,458) = 1.02, p = .414. Likewise, no resulting canonical roots were statistically significant, leading to the conclusion that neither methodological nor epistemological preferences had any appreciable impact on attitudes towards OSPs.

Attitudes toward ICA-Specific OSPs

When treated as two broad components indicating attitudes for OSP policies, there is sample-wide support for OSPs regarding ICA journal and ICA conference manuscripts (RQ1d). Means on both scales were compared to the scale midpoint (3 = neither disagree nor agree) and both were found to be significantly greater than neutral: ICA journal manuscripts, t(324) = 12.4, p < .001; ICA conference manuscripts, t(322) = 3.07, p < .001. Importantly, a paired-samples t-test revealed that average support for OSPs at the journal level was higher than that same support at the conference level, t(322) = 12.8, p < .001. Not surprisingly, the two scores were highly correlated, r(323) = .766, p < .001.

A supplemental analysis showed, however, that the two items suggesting a requirement for sharing were not broadly endorsed: agreement with these items was either no different from or significantly lower than the scale neutral point. With respect to ICA journal submissions, neither the requirement to share datasets, t(324) = −1.60, p = .111, nor the requirement to share materials, t(321) = 0.13, p = .898, had mean scores different from neutral. With respect to ICA conference submissions, neither requirement was endorsed, with both means falling significantly below neutral: datasets, t(320) = −9.99, p < .001; materials, t(319) = −7.28, p < .001.

When comparing broad component scores across career progression categories (RQ2d), a MANOVA was used to account for the correlation between both sets of potential policy endorsements (for ICA journals and ICA conference submissions). There was no significant multivariate effect, Wilks’ Λ = .985, F(6,414) = 0.52, p = .797, partial η2 = .007 (see OSF for full analysis, including univariate tests). Career progression is not associated with support for or opposition to potential ICA-specific OSP policies (see Table 6).

Table 6. Support for potential ICA-specific OSP policies by career progression.

To address RQ3d and RQ4d, we examined the potential for methodological and epistemological preferences, as well as their interaction, to influence support for potential ICA-specific OSP policies, in separate regression models for ICA journals and ICA conferences. Neither model was statistically significant at the p < .05 level (see OSF for complete analyses and results).

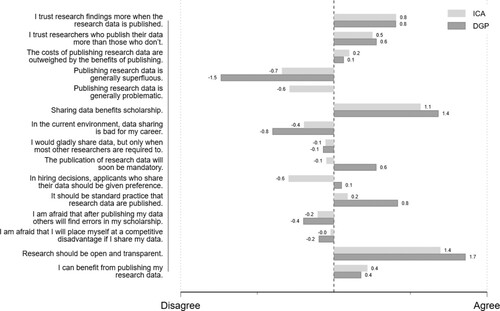

Finally, to address RQ5, we plotted item scores for the Abele-Brehm et al. (2018, 2019) survey against those from the current survey (Figure 1). Given that the DGP study used a different response option set that was imbalanced with respect to options for agreement and disagreement, plotted scores are recoded to −2 = do not agree (DGP)/strongly disagree (ICA), −1 = slightly disagree (DGP & ICA), 0 = neither disagree nor agree (ICA), 1 = slightly agree (DGP & ICA), 2 = strongly agree (DGP & ICA). Notably, item 5 (‘Publishing research data is generally problematic.’) was not included in the DGP survey, as explained in the Methods section. Statistical analysis was not performed on these data given our adjustments to the scale items, but patterns of responses are compared (i.e. to see if groups differed in valence).

Figure 1. Comparison of attitudes towards open science practices in members of the German Psychological Society (DGP, 2018) and the International Communication Association (ICA, 2020). Note. Plotted scores are means of variables recoded to -2 = do not agree (DGP)/strongly disagree (ICA), -1 = slightly disagree (DGP & ICA), 0 = neither disagree nor agree (ICA), 1 = slightly agree (DGP & ICA), 2 = strongly agree (DGP & ICA). Item 5 (“Publishing research data is generally problematic.”) not included in DGP survey.
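
The recoding described in the figure note can be expressed as a simple label-to-score mapping. The sketch below is a minimal illustration: the score values follow the note above, but the dictionary layout, the function name, and the example responses are hypothetical and do not reproduce either survey’s data files.

```python
# Response labels mapped to the common -2..2 scale described in the figure note.
RECODE = {
    "DGP": {
        "do not agree": -2,
        "slightly disagree": -1,
        "slightly agree": 1,
        "strongly agree": 2,
    },
    "ICA": {
        "strongly disagree": -2,
        "slightly disagree": -1,
        "neither disagree nor agree": 0,
        "slightly agree": 1,
        "strongly agree": 2,
    },
}


def recoded_mean(survey: str, responses: list[str]) -> float:
    """Mean of one item's responses after recoding to the common scale."""
    scores = [RECODE[survey][label] for label in responses]
    return sum(scores) / len(scores)


# Hypothetical responses for one item (NOT real survey data).
ica_item_mean = recoded_mean("ICA", ["neither disagree nor agree", "slightly agree"])
dgp_item_mean = recoded_mean("DGP", ["do not agree", "strongly agree"])
```

Because the DGP option set has no neutral category, no DGP response maps to 0; this is one reason the comparison is limited to patterns of valence rather than formal tests.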

Scholars from both associations shared very similar patterns of responses regarding OSP attitudes, with mean scores trending in the same direction (and nearly the same magnitude) for nearly every item of the survey. However, three notable differences did emerge, mostly around OSP mandates. For example, DGP members were more likely than ICA members to agree that data sharing should be ‘standard practice’ in published research. Regarding the use of OSPs as a criterion for hiring decisions, DGP members were more neutral, whereas ICA members trended towards disagreement. Likewise, ICA members were more neutral on the perspective that data sharing would soon become a requirement for publication, whereas DGP members broadly subscribed to this expectation.

Qualitative data analyses

Respondents were invited to share open replies to four broad sets of questions that aimed at gauging their OSP knowledge (RQ1a) and attitudes (RQ1c). For all four analyses below, responses were analyzed by the first author using an emergent thematic analysis scheme (Braun & Clarke, 2006). This process involved (1) an initial read of all responses to become familiar with the data, (2) a deep read of the responses to induce potential themes (i.e. common sentiments across responses), and (3) a constant comparison of any potential themes with previously read responses to merge themes that might be overlapping and re-read cases for the presence of novel themes. All cases were analyzed, and themes mentioned by at least 5% of participants within each response set were retained for interpretation. After these steps were completed, the emergent themes were codified and their frequency was recorded sample-wide to allow for distribution comparison testing with other variables, such as career progression stage and methodological and epistemological preferences. After assessing frequencies, a final round of collapsing was performed for themes (see OSF for coding data).

Top three thoughts associated with OSPs

Respondents were asked to list the first three thoughts coming to mind when thinking about the concepts of ‘open science’ or ‘open scholarship.’ From 320 respondents, we retained themes mentioned by at least 16 respondents (5%). Ten emergent themes are included in Table 7. Notably, most respondents provided single-word answers or short phrases, but definitions are given below for context.

Table 7. Common themes associated with OSPs.

One of the most prevalent themes is the association between ‘open science’ and ‘open access.’ Nearly seven in 10 mentioned both concepts together. The association is not trivial as it is a broader statement about the very nature of open science. For example, a common concept in this theme was that of ‘accessibility’ as well as ‘free access’ and ‘open access.’ Here, ‘open’ was clearly associated with ‘accessibility’ in a broad sense.

Next, ‘sharing’ practices were mentioned more specifically, such as providing open research data, materials, and analysis code (the second-most prevalent theme) and doing so for the sake of transparency and integrity of research (the third-most prevalent theme). These themes are related insofar as the former focuses on specific actions that scholars can take, and the latter represents a held belief about the reasons underlying these practices, rooted in a belief that scholarship quality will benefit as a result – represented in the theme of ‘progress’ which emerged in our analysis to a lesser degree (7%). Such a notion is suggested in Bowman and Keene (2018), who framed OSPs through the lens of evolving scientific norms.

The remaining themes broadly fit into one of two categories: factual statements of specific OSPs or value assessments towards those practices. Regarding the former, pre-registering study hypotheses (13%), and replicating published studies and/or reproducing the analysis from current datasets (11%) both emerged (with others referencing specific tools such as the Open Science Framework, but not reaching our threshold for theme retention). For the latter, a prominent theme included a hope that open scholarship would be collaborative and inclusive – some mentioned envisioning a ‘collaborative review process’ and others discussed this in terms of ‘crowdsourcing’ knowledge (see also Nielsen, 2012; Uhlmann et al., 2019).

Finally, a theme of ‘critical concerns’ associated with OSPs was collectively mentioned by about 20 percent of open-ended responses. First, these concerns were distinct from ‘predatory’ concerns associated with open access publishing – so-called ‘pay-to-play’ publication practices in which authors are guaranteed publication of their research after paying a fee to publishers, often with little or no peer review of the scholarship. Second, the ‘critical concerns’ theme encompassed several very specific notions individually mentioned by very few respondents, from ‘infighting’ (mentioned once, referencing concerns that OSPs encourage fierce debates among scholars) to institutional review boards and privacy concerns (protecting study participants’ identities), to perceived ‘Whiteness’ associated with OSPs. More frequent notions, albeit not prevalent enough to be retained as themes, included discussions of open scholarship as encouraging undue criticism of scholarship (including a fear of being critiqued by others, mentioned seven times), notions of open scholarship as representing an unnecessary intrusion into or surveillance of scholarship (mentioned eight times), concerns that OSPs were more of a trend or unnecessary fad among scholars (mentioned 10 times), and critiques that practices are limited to specific methodologies and epistemologies (mentioned 10 times). The most prominent – and nearly reaching our threshold for retention as an emergent theme – were concerns about the invisible labor associated with maintaining and reviewing additional materials (mentioned 14 times).
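
The 5% retention rule applied to these responses can be sketched as a simple threshold check. The mention counts below are the ones reported in the preceding paragraph and the respondent total of 320 comes from the description of this response set; the function name, the short theme labels, and the use of integer rounding-up are illustrative assumptions rather than the authors’ coding procedure.

```python
def retention_threshold(n_respondents: int, percent: int = 5) -> int:
    """Smallest whole number of mentions that reaches `percent` of respondents."""
    return (n_respondents * percent + 99) // 100  # integer ceiling


# Mention counts reported in the text for sub-threshold notions.
mention_counts = {
    "invisible labor": 14,
    "trend or fad": 10,
    "limited to specific methodologies": 10,
    "intrusion or surveillance": 8,
    "undue criticism": 7,
}

threshold = retention_threshold(320)
retained = [theme for theme, n in mention_counts.items() if n >= threshold]

print(threshold)  # 16
print(retained)   # [] (none of these notions reaches the threshold on its own)
```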

Benefits of and problems with OSPs

Many of the ‘benefits’ mentioned here reflected those same emergent themes in the ‘first three thoughts’ response (see prior analysis). Thus, we did not gain deeper insights from reading these responses – this was clear after reading a little more than 15% of responses, as many respondents repeated their answers verbatim. Additional coding of the ‘benefits’ data here was deemed redundant.

However, given that the ‘critical concerns’ theme that emerged from the earlier analysis was somewhat vague and was the only explicitly negative theme, we chose to more deeply read and code participants’ answers regarding perceived problems with OSPs. All but nine participants reported both benefits and problems with OSPs, so the problems noted here are being listed by individuals who also noted benefits above. For this analysis, we declined to code any responses in which the respondent referred solely to open access publishing (n = 52). From 280 remaining responses, we retained any codes emerging at least 14 times (5% of responses) and from this, 10 themes emerged (Table 8).

Table 8. Emergent themes regarding problems with OSPs.

The most prevalent theme referred to increased efforts associated with engaging OSPs. In this theme, properly engaging sharing practices is seen as more than ‘making content available’ but involves a deeper consideration for the various potential audiences who might access this content. For example, one participant wrote ‘My datasets are so ugly … Embarrassing to share them’ and others noted that their current workflows do not often account for lab notes and other documents being understood by scholars unaffiliated with a given project. Discussions of labor were often tied with translation work for scholars operating in non-English contexts (i.e. being asked to provide complete English translations of their materials), and concerns about over-burdening early career scholars were also noted. Some felt that OSPs were not valued by various stakeholders, from other scholars to various conference and journal outlets to hiring and tenure promotion committees. One participant said that there is ‘little to no professional benefit or recognition’ for engaging in OSPs, and others suggested broadly that the added labor associated with maintaining shared content was not sufficiently rewarded to be worth the effort. Several noted that the burdens of sharing fell on the researchers’ shoulders, and they felt ‘exploited’ by the process as a result.

Another prominent theme was a concern that others would misuse shared scholarship, in particular shared datasets. Several respondents noted concerns that various malevolent parties could use data intentionally out of context from its sources, and/or without proper acknowledgement of the original scholars (i.e. the scholars who produced those datasets). This also included concerns about misuse for political gain, both in terms of social capital within scholarly communities and with respect to regional, national, or international political systems and governments. Scholars also noted concerns about being ‘scooped’ with their own data – sharing datasets might result in so-called data parasites (Longo & Drazen, 2016) who stand to benefit by using others’ data. One participant noted that ‘data access and collection is hard work’ and another noted that ‘[those] accessing a data file [have] WAY more to gain than [those] sharing it.’

Three similar themes centered around concerns over what is and is not considered open science – either as a function of there being unclear standards for these practices (22%), barriers around what can be shared ethically or legally (21%), or the use of open science as a dubious quality indicator for scholarship (5%). All three share a similar underlying claim that there is a great deal of ambiguity with respect to what is and is not considered an OSP – a sentiment echoed elsewhere (Fecher et al., 2015; Fecher & Friesike, 2014). Others noted confusion over various OSPs themselves. For example, the difference between a multistage registered report (in which authors submit their research proposal to a journal prior to data collection) and a pre-registered report (in which authors post their study predictions or expectations prior to analysis) is unclear, as even these parenthetical definitions could be open to interpretation. Others confuse replication and reproduction, where the former focuses on the robustness of published findings and the latter focuses on the veracity of currently reported or in-progress findings. One respondent lamented that ‘reviewers have honestly penalized me for open science I have submitted before’ and others were concerned that for some reviewers ‘more badges mean better research’ – both comments suggesting a lack of shared understanding of the values of OSPs between the parties involved.

Another set of responses drew specific attention to socially constructed dynamics of OSPs that either create or reinforce bad behavior among scholars. These were represented by a prevailing concern that open science is either implicitly or explicitly exclusionary of many forms of scholarship (18%), that open science proponents aggressively police and even attack scholars in various fora (13%), and that open science is a means of surveillance or control over scholarship (8%). These themes were somewhat reflected in the broad ‘critical concerns’ theme from earlier analyses, but merit attention as they speak to a bona fide fear even among those who actively engage with and support OSPs. Some respondents noted a ‘fear of being perfect’ that made them apprehensive to submit open scholarship for review.

A concerning pattern was the notion of #bropenscience: that mostly White males were using the patina of OSPs to reaffirm control over scholarship broadly. One respondent noted that ‘the whole area needs to be decolonized (this feels mostly run by white folk …)’ and another noted that it felt mostly like ‘white men shouting OPEN SCIENCE at me.’ Such concerns have been noted in other areas, including the recent commentary by Whitaker and Guest (2020) recounting observed misogyny in (often online) discussions of OSPs.

A final theme of note was the presumption that prominent entities, such as senior scholars, are more resistant to open science practices (6%). Although respondents in our sample held these beliefs, the presumption is refuted in our quantitative analysis – there were no appreciable differences in knowledge of, engagement with, or support for OSPs as a function of career progression.

Open-ended thoughts regarding Abele-Brehm et al. (2019)

For various reasons, fewer participants replied to this question – less than 25% of our full sample (n = 81). Many answers here were represented in earlier themes. Thus, we provide these data in our shared OSF folder, but did not analyze them further.

Discussion

Conversations about OSPs have intensified in recent years, and a growing number of associations and journals in Communication have already begun incorporating OSPs, from the use of Open Science Badges (e.g. the ICA 2021 program and in many ICA journals) to more direct support for supplemental material (e.g. Open Science Framework content), with some journals and ICA submissions accepting pre-registered report submissions. Accompanying these efforts are numerous conference panel discussions and published journal editorials speaking to the perceived risks and benefits of various OSPs. However, lacking in these panels and editorials are data broadly sampled from Communication scholars regarding field-level patterns of knowledge of, engagement with, attitudes towards, and support for OSPs. Our project is an attempt to provide such data, sampling just over 10% of the registered membership of ICA, representative of a variety of career stages, epistemologies, and methodologies in Communication scholarship. These data come at an especially important time when we consider that funding agencies and even some universities have already taken steps towards making OSPs mandatory. For example, projects funded by Horizon Europe, which makes use of European Union funding, mandate that research be ‘made immediately available through Open Access Repositories without embargo’ (European Science Foundation, 2022, para. 3; also see European Commission, Directorate-General for Research and Innovation, 2021), and a Dutch consortium of funding agencies, universities, and research agencies has worked actively towards a ‘cultural change’ in encouraging the universal adoption of OSPs (National Programme Open Science, n.d., para. 7).
Already reflecting some of the findings of our survey, these actions have not been accepted unconditionally, with de Knecht (2020) and Neff (2021) especially critical of OSP mandates that might further empower for-profit publishing companies. For example, Plan S publishing mandates that require Dutch funded research to be published open access generate over €16 million in open access fees for the publisher Elsevier (see Neff, 2021). Although these discussions center mostly on open access concerns, they foretell debates regarding tensions around mandatory OSPs, such as those reported in our own data.

From this sample, we can offer three tentative conclusions about broader patterns of OSPs among Communication scholars: (1) they have some knowledge of OSPs and are mostly supportive of the concepts, (2) they rarely engage OSPs, with the exception of sharing study materials and posting and reading pre-prints, and do express concerns and fears with the practices, and (3) they are mostly positive towards ICA conferences and journals providing options for OSPs, but do not support making OSPs mandatory (breaking from other groups, such as DGP). The implications of these observations for Communication scholarship are unpacked below.

Growing knowledge of and support for OSPs

When asked to assess their knowledge of OSPs, more than 80% of respondents reported having ‘a little’ or ‘some.’ That fewer than 10% had ‘no’ knowledge of OSPs shows promise insofar as the concepts are increasingly familiar among Communication scholars. Most critical was that these patterns did not differ as a function of career progression stage and epistemological or methodological focus. In other words, the basic concepts seem to be diffusing broadly, which likely allows for more critical conversations across ‘generations’ of scholars – for example, between current graduate students and more senior academic advisors (as suggested by Dienlin et al., 2021). Furthermore, albeit not analyzed in this manuscript, when asked openly about attending or participating in possible OSP training opportunities offered by ICA, just over half of the sample suggested that they would be interested. Suggestions ranged from a more conceptual introduction to the practices, including roundtable discussions and debates on the ethics of and reasons for engaging open scholarship, to more practical workshops, such as learning the best practices associated with sharing study elements.Footnote10 Although some critical notes were offered, most respondents (a) have some working understanding of OSPs and (b) are willing to learn more.

When asked to list open thoughts about OSPs, many restated the most prominent practices: sharing study data, analysis code, and materials, somewhat reflecting their level of working knowledge of OSPs. More compelling was that these descriptions of specific OSPs were often accompanied by language supportive of the practices. For example, discussions of ‘Transparency & Integrity’ were seen in more than one-third of responses, in which respondents expressed having more confidence in scholarship when that work can be verified by independent readers (and far beyond one peer review cycle). One-fifth of responses noted that OSPs were ‘Collaborative & Inclusive’ as they allowed for formative feedback throughout (and even beyond) the publication process. Two more positive themes, each accounting for around 7% of the responses, also emerged, one suggesting OSPs were a sign of progress in Communication scholarship and the other hopeful that OSPs would make Communication scholarship more accessible to a broader range of publics. With respect to progress, there is a general recognition that change is natural to scholarly advancement (Kuhn, 1962; Popper, 1934/1959) – similar observations can be made with respect to the renewed focus on conducting and publishing replication studies (Keating & Totzkay, 2019; McEwan et al., 2018). The latter is a suggestion that when scholarly materials are made more accessible, more can participate in the enterprise of knowledge discovery, especially when combined with social media platforms (see Chapman & Greenhow, 2019). Notably, the unprompted positive stance towards OSPs was reflected in the overall positive attitudes towards OSPs in response to Abele-Brehm et al.’s (2019) metric.

Labor, fear, and a lack of engagement with OSPs

While most data suggested that respondents do have overall positive attitudes towards OSPs, (a) overall engagement with these practices is very low and (b) even among those sharing positive predispositions, several critical concerns were noted. For example, the only OSP engaged by more than half the sample (65%) at least ‘sometimes’ was sharing research materials online, such as survey metrics or experimental design protocols. In Bowman and Keene’s (2018) model, such sharing is a critical first step towards open scholarship, but one that sits at the outer layer of so-called intimacy with a given manuscript. Likewise, just over 50% of the sample also reported making use of other scholars’ shared materials. From the perspective of open scholarship, these are notable steps towards transparency insofar as they do allow readers to see the ‘nuts and bolts’ of a wide variety of studies. Broadly speaking, Communication scholars are sharing their research materials and likewise, engaging actively with others who share the same.

At the same time, respondents rarely engage with other OSPs, such as sharing datasets or registering study protocols in advance of data collection. Among the concerns noted by the respondents, three broad patterns emerge: (a) concerns over labor and compatibility with various scholarly perspectives, (b) mistrust in and even fear of sharing with others, and (c) a sense of ambiguity regarding OSPs. Each is discussed below.

Labor and compatibility

Regarding labor, it would be disingenuous to suggest that OSPs are ‘cost free’ insofar as they do require scholars to consider the form and content of their shared materials more carefully. For example, sharing survey materials also requires providing a companion codebook so that readers can connect survey items to the dataset, and datasets must be clearly labeled using language that can be understood by individuals not part of a given research team (for specific recommendations, see Bowman & Spence, Citation2020). More broadly, respondents noted the ‘extra paperwork’ and that ‘there’s already enough pressure on producing things quickly’ – one respondent noted that properly engaging in OSPs results in ‘every study [taking] a dissertation of time’ to present properly. Even among those expressly supportive of OSPs, there was full recognition that the practices are ‘time consuming (but worth it).’ OSPs were seen as even more onerous for scholarship conducted in a non-English context, as they would require additional translational work given English as the lingua franca for most scholarship. Others noted the additional labor required of manuscript reviewers, who are ostensibly expected to evaluate additional materials being shared with any given manuscript – some were concerned about the additional review requirements and others were concerned about having to sort through ‘over-reported’ data, as authors might use open scholarship repositories for copious amounts of supplemental material. Discussions of labor were also tied to perceived value, with many suggesting that OSPs were not incentivized or prioritized. To this end, Colavizza et al. (Citation2020) found in a sample of PLoS ONE and BMC journals that those sharing datasets saw a 25% increase in citation rates, suggesting that manuscripts engaging with OSPs might be well-positioned for scholarly impact in the long term. More research is needed here, especially with respect to Communication scholarship.
Conversely, as noted by others (see Chapman et al., Citation2019; Edwards & Roy, Citation2017; Neff, Citation2021), framing increased citation rates as a benefit of OSPs could also be seen as contributing to problematic assessment systems that favor citation metrics over scholarship quality – a debate over so-called ‘perverse incentives’ in academia.

Concerns about additional labor could be moot in the face of suggestions that OSPs are not compatible with some forms of scholarship. As Dienlin et al. (Citation2021) raised, most OSPs tend to favor quantitative social science research that values replicability and reproducibility. Although replication and reproducibility may be less relevant to many qualitative and interpretive studies, dependability – defined as when ‘the researcher lays out [their] procedure and research instruments in such a way that others can attempt to collect data in similar conditions’ (Given & Saumure, Citation2008) – is still an important criterion for such work.

In these discussions, two notes are critical. First, regardless of the form of scholarship, there is a general shared interest in transparency (Haven & Van Grootel, Citation2019). Second, not all OSPs are relevant for all methods of inquiry. The latter point is likely paramount for scholars already concerned that their work is marginalized in the publication process, especially in an inherently interdisciplinary discipline such as Communication (Carleton, Citation1979), wherein flagship journals purport to be open to the field broadly but in practice could be perceived as favoring some forms of scholarship over others. As noted by one respondent, ‘Proponents [of OSPs] do not seem to be able to fathom that open science is not appropriate for much of the work in the diverse field of communication.’

Mistrust and fear

Relatedly, our respondents – even those generally supportive of OSPs – acknowledged that the social climate might not be so benevolent. Some were concerned that their shared materials and datasets would be used by other scholars to ‘scoop’ or ‘steal’ a study idea, whereas others were more concerned that once shared, they would have no way of ‘making sure the openly available data, scripts, results, etc. aren't misused by malevolent parties.’ Data misuse was a recurring theme, harkening back to the ‘garden of forking paths’ metaphor from Gelman and Loken (Citation2013) in which any one dataset can be analyzed several different ways by anyone determined enough to find (or not find) an effect. Others were concerned that their data would be used without proper attribution, acknowledging the labor inherent to data collection.

Perhaps more concerning were the 13% of respondents who worried about being attacked by their colleagues. Several respondents made passing reference to ‘ … stories of nasty and personal attacks of people's work after re-analysis of data’ or as put by another participant:

What I want to share my information, sometimes people have a ‘I'm going to prove this person wrong’ mindset (at least in the political science field in the US), rather than a collaborative mindset. And if people are out to get someone, it means that there is a risk of getting attacked if someone catches a mistake.

Others referenced the existence of ‘science vigilantes’ and suggested that the climate around open scholarship is perceived as aggressive and punitive – one respondent noted that ‘reviewers have honestly penalized me for open science I have submitted before.’ Unfortunately, such feelings can be validated in other published accounts. Nearly 35 years ago, Woolf (Citation1988) warned of aggressive science vigilantism in medical research and Teixeira da Silva (Citation2016) discussed similar concerns for scholarship more broadly. Singal (Citation2016) reviewed a controversy involving the American Psychological Association in which open scholarship proponents were labeled as ‘methodological terrorists’ (para. 4) – a comparison that was rebuked (and eventually retracted) but used to describe ‘a dangerous minority trend that has an outsized impact and a chilling effect on scientific discourse’ (para. 4). A particularly concerning note in our data suggested a toxic masculinity inherent in the discussion of OSPs. Several respondents invoked Whitaker and Guest’s (Citation2020) #bropenscience moniker in suggesting that for them, OSPs were less about scholarly integrity and more about ‘A small, loud, and privileged set of people get to narrowly define what is ‘good’ research.’ As noted above with respect to compatibility and in reflection of known racial (Chakravartty et al., Citation2018) and gender disparities (Wang et al., Citation2021) among others, such comments cannot be ignored. Such analyses were not the focus of the current study but are encouraged in future research concerned with how OSPs might disproportionately burden or hinder scholars from already deprivileged communities and perspectives.

Ambiguity in and legality of OSPs

A final emerging pattern in our data was a concern that open scholarship and various OSPs are not well-understood or standardized. Less than 10% of respondents self-reported a ‘deep knowledge’ of OSPs. Likewise, many of the open-ended comments reflected the lack of precision with the concept. Several respondents noted ‘Different perceptions of what is open science’ and some feared ‘All or nothing mentality by some proponents of OS’ – this latter quote misrepresents the different layers of a study that can be shared, such as studies sharing research materials but not sharing data (Bowman & Keene, Citation2018). Somewhat related to ambiguity, a small portion of respondents expressed concern over so-called ‘open washing’ in which OSPs are seen as ‘ … another heuristic (like p < .05) that gets oversimplified in practice.’

Notes about legality and privacy were present in 21% of responses. Most of these responses were in reference to data sharing, in which respondents noted concerns over ‘inadvertent participant privacy violations’ and ‘introduc[ing] risks for participants’ especially with qualitative data. One participant explained that it ‘might be hard to figure out different legislations and legal frameworks in case of international research collaboration’ and others noted that ethics review policies can be inconsistent, both between and within institutions. As such, any further promotion of OSPs within Communication scholarship must be contingent on further clarification and refinement of, and education about, the practices themselves – all tasks that could be undertaken by academic associations, journals, and Communication programs among others.

OSPs and academic associations

A final notable finding is that broad support for OSPs cannot be confused with the acceptance of such practices as mandatory. Indeed, participants were expressly against any OSP requirements for ICA-specific journal and conference manuscripts – a finding that might seem restricted to ICA until one realizes that many members of ICA are likewise members of other associations and submit to journals more broadly beyond the ICA suite of offerings. Even at a general level, respondents did not agree that ‘the publication of research data will soon be mandatory’ and also pushed back on the notion that ‘in hiring decisions, applicants who share their data should be given preference’ – these patterns broke from other scholars, such as Psychologists completing the Abele-Brehm et al. (Citation2019) survey. These data should not be interpreted as evidence that Communication scholars do not support OSPs, but rather, as evidence of a preference to engage open scholarship on their own terms and absent broader mandates. Although not specifically examined, it is plausible that some of this reluctance stems from expressed concerns over the current climate around open scholarship as a potentially toxic and risky gambit – themes that were more prevalent in the ICA compared to the DGP data as reported. One explanation for the heightened salience of concern among Communication scholars could be that the interdisciplinary nature of our field engenders the same divergence of perspectives and opinions already stated in published editorials, such as those in the recent Journal of Communication special issue (in particular, Dutta et al., Citation2021; Fox et al., Citation2021; Moreira de Oliveira et al., Citation2021).

Limitations

Given claims that OSPs can be perceived as de facto exclusive of some scholarly orientations, self-selection biases might account for overall sample-wide support reported here. For example, respondents tended to skew more towards social science methodologies that have been shown to be more supportive of OSPs in other projects, such as Bakker et al. (Citation2021) and Abele-Brehm et al. (Citation2019). At the same time, respondents did represent a wide range of ICA divisions and interest groups and our analyses detected no strong associations between self-reported epistemology or methodology and any of our open scholarship responses. Also, while future research should more deeply consider ways to incentivize participation, our response rate was higher than rates in related work (8% of target population in Abele-Brehm et al., Citation2019; 5% in Houtkoop, Citation2018). Likewise, some might suspect self-selection bias as those who already support OSPs might have been more likely to complete the survey. Our results, however, showed that even among those supportive of OSPs, most of them also indicated ‘drawbacks’ and/or problems, suggesting their willingness to weigh the costs and benefits of OSPs.

With respect to the open scholarship attitudes scales (modeled on Abele-Brehm et al., Citation2019), we note that internal consistency of the ‘concern’ and ‘trust’ factors was not optimal, and items related to OSPs as ‘problematic’ and ‘superfluous’ and other items about the use of OSPs in hiring decisions, career advancement, and general costs and benefits did not load cleanly onto any OSP attitudes factor (‘support,’ ‘concern,’ or ‘trust’). Although we note that neither our study nor their paper aims at scale validation, future research should more critically consider a more robust measure of OSP attitudes – notable if academic communities hope to track and assess these attitudes in future research. This could be somewhat complicated, paradoxically, by general concerns that OSPs are not well-defined and are vaguely understood, as registered in our own data. We note that comparing our factors with Abele-Brehm et al. (Citation2019) is not recommended, as we adjusted the response options to allow for an equal opportunity to ‘strongly disagree’ or ‘strongly agree’ with individual items. Related to this, the current study relied on self-reports of one’s engagement with OSPs rather than using observed or behavioral data. Future research could more directly examine specific OSP rates among practicing Communication scholars or look at server data to understand how often others engage with shared content (OSF offers usage statistics for content uploaded to their servers).