ABSTRACT

Concordance between 18 coaches (mean performance analysis experience: 8.3 ± 4.8 years) and 23 performance analysts (mean: 6.4 ± 4.1 years) regarding their performance analysis delivery within applied Olympic and Paralympic environments was investigated using survey-based methods. There was clear agreement on the provision, importance and need for full video. The majority of analysts (73.9%) provided profiling often or all of the time, whereas only one third of coaches felt that this was the required amount. Coaches agreed that coaching philosophy was the main factor directing analysis, but also emphasised that training goals, the level or age of the athlete and discussions with athletes influenced analysis far more than the analysts realised. A potential barrier to better communication was time, which all analysts highlighted as a major factor affecting their role. The majority of analysts (87%) attempted to provide feedback to athletes within one hour of performance often or all of the time. Coaches expressed a similar philosophy but were far more likely to want to provide feedback at later times. These findings should be used by analysts and coaches to review practice, identify gaps within practice and highlight areas for development.

1. Introduction

The primary goal of performance analysis is to provide coaches and athletes with information, via quantitative and qualitative methods, to assist decision-making and facilitate positive change in performance (O’Donoghue, 2006). As a result, performance analysis practitioners have become commonplace within elite environments and play an essential role within the coaching and feedback process. Research within performance analysis has primarily focused upon “the method” of analysis and, subsequently, the information generated via such methods, e.g. profiling. For example, performance profiling, a methodology that analyses potential patterns in performance, attempts to offer some degree of prediction of future performance (Butterworth et al., 2013; James et al., 2005; O’Donoghue, 2013). The ability to understand and potentially predict future performance is considered a powerful tool for the coach and analyst and, consequently, researchers have produced a number of publications on method development and specific sporting trends (Kubayi, 2020; O’Donoghue & Cullinane, 2011). What currently remains unclear is how this information is incorporated within applied practice.
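To give a concrete sense of what a profile can look like, the sketch below summarises one performance indicator across previous matches by its quartiles and places a new performance relative to that range, in the spirit of the normative profiles cited in this paper (Hughes et al., 2001; O’Donoghue, 2005). It is a minimal illustration under stated assumptions: the indicator, the match values, the use of quartiles and the "typical" labels are all hypothetical choices, not the methods used by the analysts surveyed here.

```python
from statistics import quantiles

def normative_profile(values):
    """Summarise a performance indicator by its quartiles.
    statistics.quantiles(n=4) returns the three quartile cut points
    (using its default 'exclusive' interpolation method)."""
    p25, p50, p75 = quantiles(values, n=4)
    return {"p25": p25, "p50": p50, "p75": p75}

def classify(value, profile):
    """Place a new performance relative to the interquartile range (hypothetical labels)."""
    if value < profile["p25"]:
        return "below typical"
    if value > profile["p75"]:
        return "above typical"
    return "typical"

# Hypothetical indicator: tackles made per match over ten previous matches
tackles = [8, 11, 9, 14, 10, 12, 7, 13, 10, 9]
profile = normative_profile(tackles)
print(profile)                # {'p25': 8.75, 'p50': 10.0, 'p75': 12.25}
print(classify(15, profile))  # above typical
```

A percentile-based summary is used here because it does not assume the indicator is normally distributed; other profiling approaches (e.g. regression-based) would need different code.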

Recently, several studies have investigated how feedback and performance analysis are used within applied practice by the coach, performance analyst, athlete or a combination of these (Francis & Jones, 2014; Groom et al., 2011; Martin et al., 2018; Wright et al., 2012, 2013). These studies have taken a qualitative approach, using interviews and questionnaires in an attempt to uncover more effectively the complexities inherent within applied delivery (see Groom et al., 2011). The main factors found to influence feedback and the use of performance analysis included coaches’ philosophy, the time available to carry out analysis and provide feedback, athlete interaction and training goals. Full video has been identified as the main deliverable provided by performance analysts to coaches and athletes within applied practice (Martin et al., 2018; Wright et al., 2012). This approach has strengths and weaknesses which need to be managed for it to have an effective impact. On the one hand, no additional time or processing capacity is required: the analyst can simply record the performance of interest and deliver it easily, e.g. via USB or online (O’Donoghue, 2006). All contextual information is retained, since the analyst does not crop out video deemed insignificant. Thus, a coach can view the seconds or minutes preceding a key incident to help establish what contributed to the incident or outcome, and why (O’Donoghue, 2006). On the other hand, full video can include a large amount of “dead space”, particularly in some team sports such as rugby, where the ball is in play for approximately 44% of overall match time (World Rugby, 2019). To counter this, various analysis software packages, e.g. Dartfish (Dartfish, Fribourg, Switzerland) and SportsCode (Hudl, Nebraska, USA), provide the ability to time-stamp a video in multiple places using adaptable tagging panels, usually designed according to a team’s or coach’s analysis philosophy, so that single-event instances can be viewed easily. The caveat of this efficiency lies in the potential for event selection bias, as well as the need for the analyst to have sufficient game understanding to direct the software to capture, organise and report the information of interest accurately. In addition, current systems do not operate in isolation: they enable the user to export the desired data (e.g. event lists or matrices) and video for use within several external analysis and visualisation tools (e.g. Excel, Tableau, R and Python).
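The tagging workflow described above can be sketched on an exported event list. The example assumes a hypothetical export in which each tagged event has a label and a start and end time in seconds; it pulls out every instance of one label, pads each with a few seconds of lead-in and lead-out (retaining some of the context that full video provides) and computes the share of match time covered by "ball in play" tags. The field names, padding values and timings are illustrative only and do not reflect the actual export format of Dartfish, SportsCode or any other package.

```python
from dataclasses import dataclass

@dataclass
class Event:
    """One tagged instance from a hypothetical exported event list."""
    label: str
    start: float  # seconds from the start of the video
    end: float

def clips_for(events, label, pre=5.0, post=3.0, video_length=None):
    """Return (start, end) windows for every event with this label,
    padded with `pre` seconds before and `post` seconds after for context."""
    windows = []
    for e in events:
        if e.label != label:
            continue
        start = max(0.0, e.start - pre)  # never run before the video starts
        end = e.end + post
        if video_length is not None:
            end = min(end, video_length)  # never run past the end of the video
        windows.append((start, end))
    return windows

def proportion_in_play(events, match_length):
    """Share of match time covered by 'ball in play' tags.
    Assumes these tags do not overlap one another."""
    in_play = sum(e.end - e.start for e in events if e.label == "ball in play")
    return in_play / match_length

# Hypothetical coded events for a two-minute excerpt
events = [
    Event("ball in play", 0.0, 40.0),
    Event("line break", 2.0, 6.0),
    Event("line break", 12.0, 18.0),
    Event("ball in play", 70.0, 100.0),
]
print(clips_for(events, "line break", video_length=120.0))
print(proportion_in_play(events, 120.0))
```

Overlapping windows (as in this excerpt) could be merged before export; that step is omitted for brevity. The padding values are where an analyst's judgement, and hence the selection bias noted above, enters.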

Whilst the role of the analyst may primarily lie in delivering information to the feedback session, studies have also investigated the feedback session itself. For example, the most common feedback session durations have been reported as 0–20 minutes (53%; Wright et al., 2013) and 30–40 minutes (70%; Groom & Cushion, 2005). Coaches play a significant role within this feedback process, generally controlling the selection and delivery of information; for example, 73% of analysts within Wright et al. (2013) stated that their coach led feedback sessions. Research has also suggested that coaches adapt their feedback approach to account for a multitude of variables, such as performance outcome (positive/negative), future performance schedule (competition/training), venue/feedback environment and type of athlete/group of athletes (personality, relationship and dynamics). In light of these variables, the coach must develop an appropriate feedback delivery strategy, deciding whether feedback is delivered: 1) immediately following performance or at a later time; 2) face-to-face or online; 3) within a short or long session; and 4) using a positive, balanced or negative approach. To assist this process, Groom et al. (2011) developed a grounded theory framework consisting of three main categories (contextual factors, delivery approach and targeted outcome) as well as sub-categories. For example, if the goal was to elicit a change in an individual’s game-related technical performance (contextual factor), the coach might select the most appropriate delivery approach, which might include a number of positive and negative examples of the athlete’s performance compared with an elite performance.

Whilst a limited number of studies have highlighted the factors suggested to be associated with effective feedback from coach, athlete and analyst perspectives in a cross-section of sports (Martin et al., 2018; Middlemas et al., 2018; Wright et al., 2012, 2013, 2016), there is still a clear need to explore the degree of congruency between the coach and the analyst within elite sport, identifying whether and where adaptations could be made within current practice to ensure that the needs of the coach are fully and consistently met. It may be argued that a coach-led analysis approach should provide such a consistent and aligned performance analysis programme; however, this is often not the case. For example, how much of the performance analysis support delivered by performance analysts is aligned with the needs of the coach? The purpose of this study, therefore, was to investigate the concordance between elite analysts and coaches regarding the use of performance analysis and feedback within Olympic and Paralympic sports.

2. Methods

2.1. Participants

A stratified sample of 41 coaches and performance analysts working within British Olympic and Paralympic sport was selected to complete a survey relating to their use of, and involvement in, performance analysis (Table 1). Participants were selected due to their affiliation with the English Institute of Sport (EIS). Thirty-five per cent of participants had < 5 years’ experience, 32% had 5–10 years’ and 32% had 10+ years’ experience using or delivering performance analysis within their practice. Participants were split into two groups: coaches (n = 18, mean performance analysis experience: 8.3 ± 4.8 years) and performance analysts (n = 23, mean performance analysis experience: 6.4 ± 4.1 years). Ethical approval for the study was obtained from a university ethics committee.

Table 1. Distribution of sports represented

2.2. Survey design

Survey questions were based on current research regarding 1) coaches’ engagement with and use of performance analysis (Kraak et al., 2018; Martin et al., 2018; Painczyk et al., 2017; Wright et al., 2012) and 2) analysts’ use and implementation of performance analysis (Wright et al., 2013). The list of questions drawn from this research was condensed by removing similar questions and amended to better fit the target demographic and study aims. Two experienced practitioners, each with more than 10 years’ experience working as an analyst within Olympic/Paralympic sport, and the research team, with more than 30 years’ combined experience in performance analysis, reviewed and critically reflected upon question wording, clarity and response categories (Gratton & Jones, 2010). The final survey consisted of 16 closed questions (with an additional text box to allow more detailed responses) in three main sections: 1) feedback structure, 2) analysis provision and 3) influencing factors. Likert scales were used to facilitate cross-sport comparison.

2.3. Procedure and data analysis

Participants completed the survey at a time convenient to them, either in person (as in Francis & Jones, 2014) or online via Survey Monkey (www.surveymonkey.com; as in Painczyk et al., 2017; Wright et al., 2012, 2013). All responses were imported into Excel, collated as frequency counts and expressed as percentages for each response category and Likert scale point. Median Likert scores are presented where appropriate. Responses were compared between the coach and analyst groups using Mann-Whitney U tests in SPSS (V21). Effect sizes were calculated as r = z/√N, i.e. by dividing the z statistic by the square root of the total sample size (Rosenthal, 1991).
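The group comparison and effect size described above can be sketched with the standard library alone. The function ranks the pooled responses (averaging tied ranks, which are common with Likert data), computes U, a normal-approximation z with a tie correction and no continuity correction, and the effect size r = |z|/√N, where N is the total number of participants across both groups (Rosenthal, 1991). This is a minimal sketch under those assumptions, not a reproduction of SPSS's procedure, and the Likert responses in the usage example are hypothetical.

```python
import math

def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def mann_whitney(group_a, group_b):
    """Return (U, z, r) for two independent samples.
    U is the smaller of U1 and U2, so z is never positive; r uses |z|."""
    n1, n2 = len(group_a), len(group_b)
    n = n1 + n2
    pooled = list(group_a) + list(group_b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    # Tie correction: sum of (t^3 - t) over each set of t tied values
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    z = (u - n1 * n2 / 2) / sigma
    r = abs(z) / math.sqrt(n)
    return u, z, r

# Hypothetical 5-point Likert responses to a single survey item
coaches = [2, 3, 2, 1, 3, 2]
analysts = [4, 5, 4, 3, 4, 5, 3]
u, z, r = mann_whitney(coaches, analysts)
print(f"U = {u}, z = {z:.2f}, r = {r:.2f}")
```

Exact (rather than asymptotic) p-values may be preferable for small groups; this sketch only covers the z-based effect size used in the paper.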

3. Results and discussion

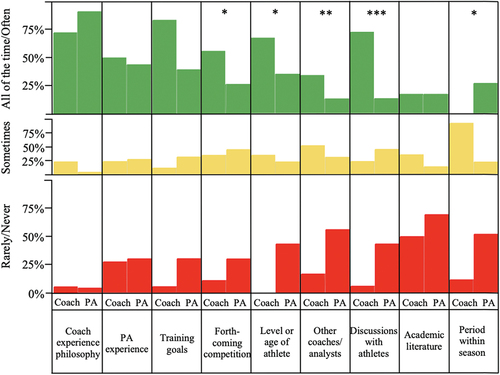

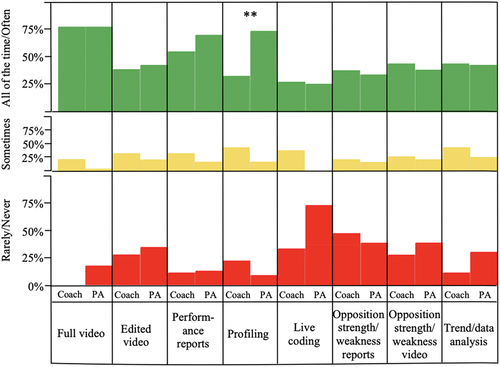

The aspects of performance analysis provided by the analysts were similar to the requirements of the coaches across the majority of variables, with clear agreement on the provision, importance and need for full video (Figure 1). The main difference was that 73.9% of analysts provided profiling all or most of the time, whereas only one third of coaches felt that this was the required amount (U = 110.0, p < 0.01, ES = 0.4). This discrepancy suggests the need to better understand 1) why coaches do or do not use profiling; 2) whether coaches’ understanding of profiling differs from that of analysts; and 3) whether the analysts always provided the profiles their coaches required. Aspiring analysts, at least those studying performance analysis courses, are usually taught the profiling techniques outlined in the introduction (Hughes et al., 2001; James et al., 2005; O’Donoghue, 2005). Whilst the specific profiling techniques used were not identified in this study, further exploration is needed to determine whether the techniques taught at universities are fit for purpose. Mackenzie and Cushion (2013) argued that much performance analysis research in football failed to address the needs of practitioners, with little evidence to demonstrate how findings were applicable to coaching practice. This limited transferability produced what they called a “theory-practice” gap, which may similarly apply to the profiling techniques currently taught in universities or used within the Olympic and Paralympic teams sampled here.

Figure 1. Comparison of the aspects of performance analysis provided or desired by the analysts and coaches. Key: * = p < 0.05, ** = p < 0.01, *** = p < 0.001.

There was a discrepancy between coaches’ desire to receive live coding (77.8% wanted this at least sometimes) and analysts’ provision of it (> 70% never or rarely provided it; Figure 1). This may be explained by an inability to provide live output during performance, because events do not permit live analysis or lack the infrastructure to support it. However, it is also possible that an analyst’s skill set or limited software functionality is the cause of the lack of provision.

Performance analysts tended to think that coaching philosophy was the main factor directing analysis provision (Figure 2), concurring with the findings of Wright et al. (2012) and Mooney et al. (2016). Whilst coaches were of a similar opinion, they also emphasised that forthcoming competition (U = 129.5, p < 0.05, ES = 0.3), level or age of athlete (U = 123.0, p < 0.05, ES = 0.4), discussions with athletes (U = 68.0, p < 0.001, ES = 0.6) and seasonal period (U = 129.0, p < 0.05, ES = 0.3) influenced the direction of analysis far more than the analysts realised. Similarly, almost all coaches (94.4%) reported that the analysis was sometimes influenced by specific demands determined by the point in the playing season, something far fewer analysts (69.6%) realised. These incongruences are most probably a consequence of a lack of communication, with coaches perhaps thinking it unnecessary to inform analysts of these decisions. However, better communication regarding these issues may give the analyst greater clarity about training goals and hence enable more targeted analysis in line with the coaches’ goals. Shared knowledge and understanding between coach and analyst has been argued to be critical for ensuring effective practice (Groom & Cushion, 2004; Kuper, 2012; Wright et al., 2013). Conversely, Wright et al. (2012) and Martin et al. (2018) found that the majority of coaches (93% and 60%, respectively) indicated that information received from performance analysis support informed their short-term planning. It would seem, therefore, that many coaches value the analysis support but do not recognise a need to include the analyst in decisions regarding planning. This may be perceived as the sole remit of the coach, but a more adaptable and responsive approach to analysis may well have a positive impact on planning decisions, particularly by providing evidence of performance changes over time. A potential barrier to this cooperation was time, highlighted by all analysts as a major factor affecting their role.

Key: * = p < 0.05, ** = p < 0.01, *** = p < 0.001.

The majority of analysts (87%) stated that they attempted to provide feedback to athletes within one hour of performance often or all of the time, which differed from coaches, who were far more likely to want to provide feedback at later times (U = 97.0, p < 0.01, ES = 0.5). The extent of this disparity adds weight to the suggestion that communication between coaches and analysts is lacking in some Olympic and Paralympic sports. Opinions still differ on when feedback should be given. For example, McArdle et al. (2010) suggested that it was not uncommon for coaches to use immediate feedback, as they often feel this is when the athlete’s recall is clearest. However, the authors also suggested that delayed feedback may usefully remove emotion from the athlete and thus facilitate a greater degree of objectivity and self-reflection. A consistent feedback regime, in this case within an hour of performance, could be an optimal strategy, although athletes may not be able to receive feedback during competition when other tasks need to be completed. Some coaches expressed a desire to move towards a more varied approach to feedback, with the aim of developing critical thinkers and independent learners who can respond more effectively to their opponents’ decisions and performance without external input.
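The group percentages reported in this section are simple proportions of 23 analysts and 18 coaches. As a hedged consistency check, the sketch below back-calculates the count that each reported percentage implies (e.g. 73.9% of 23 analysts corresponds to 17 analysts) and reproduces the figures to one decimal place. The counts are inferred from the published percentages and group sizes, not taken from the raw survey data.

```python
def percentage(count, group_size):
    """Express a frequency count as a percentage of its group, to one decimal place."""
    return round(100 * count / group_size, 1)

N_ANALYSTS, N_COACHES = 23, 18

# Counts inferred from the percentages reported in the text (not raw survey data)
implied = {
    "analysts providing profiling often or all of the time": (17, N_ANALYSTS),
    "coaches wanting profiling that often": (6, N_COACHES),
    "coaches wanting live coding at least sometimes": (14, N_COACHES),
    "coaches reporting seasonal influence on analysis": (17, N_COACHES),
    "analysts recognising seasonal influence": (16, N_ANALYSTS),
    "analysts giving feedback within one hour often or always": (20, N_ANALYSTS),
}
for item, (count, n) in implied.items():
    print(f"{item}: {count}/{n} = {percentage(count, n)}%")
```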

3.1. Conclusion

This study has demonstrated where coaches and analysts agree, and disagree, on the provision of performance analysis in Olympic and Paralympic sports. Whilst broad agreement is not unexpected given how prevalent performance analysis provision now is, the details of the agreements and disagreements provide useful guidance for improving this aspect of applied sports science support. The importance of and need for full video recordings were evident, with stronger support from the coaches than from the analysts. Some disparity existed in the provision of profiling, with uncertainty regarding whether coaches and analysts agreed on what this entails, which methods analysts were using and whether the techniques taught at universities are fit for purpose. Many coaches valued the analysis support, but some did not seem to recognise a need to include the analyst in some decisions regarding the planning of training sessions. Whilst good communication between coach and analyst would seem an obvious goal, some coaches may perceive, perhaps correctly on occasion, that some decision-making is the sole remit of the coach. However, a more adaptable and responsive approach to analysis by a coach may well have a positive impact on planning decisions, particularly by providing evidence of performance changes over time. This is more likely in an environment where time is made available for coach–analyst discussion, time having been highlighted by all analysts as a major impediment. Coaches and analysts expressed a similar philosophy with regard to when to provide feedback, although coaches were far more likely to wish to provide feedback at later times.

The surveys should be used by analysts and coaches to help review practice within their respective sports, identify gaps within practice and highlight areas for potential development, i.e. where the sport can align more effectively. Whilst the findings were derived from Olympic and Paralympic sports, they may also be applicable to other sports. Of course, some sports will already have addressed these issues and will have better developed coach–analyst relationships than those evidenced here. However, even at the elite level of sport, clear signs of dissonance within coaching staff were evident. Future work should endeavour to investigate a single sport, analyst team and coaching group with a longitudinal focus across a season or performance cycle, e.g. a 4-year Olympic period, using a combination of qualitative approaches including observation, informal conversations, reflective researcher notes and formal one-to-one or group interviews/sessions. This research avenue would aim to identify whether, by how much and why the process of performance analysis and feedback changes throughout the course of a season. How much is changed reactively based on results or the next opponent? Are there specific points throughout a season where the process is reviewed and changed? Or do coaches rigidly stick to the process outlined at the onset of the season? Moreover, this work could be extended to academy and elite-level environments to gain an understanding of the development versus performance outlook and its effect upon the performance-analysis-feedback process. Ultimately, such research should aim to understand: 1) the interactions between coach and analyst, 2) changes in performance analysis delivery and 3) the evolution of the feedback cycle based upon results, period within the season and overall performance goals.

Disclosure statement

No potential conflict of interest was reported by the author(s).


References

- Butterworth, A., O’Donoghue, P., & Cropley, B. (2013). Performance profiling in sports coaching: A review. International Journal of Performance Analysis in Sport, 13(3), 572–593. https://doi.org/10.1080/24748668.2013.11868672

- Francis, J., & Jones, G. (2014). Elite rugby union players’ perceptions of performance analysis. International Journal of Performance Analysis in Sport, 14(1), 188–207. https://doi.org/10.1080/24748668.2014.11868714

- Gratton, C., & Jones, I. (2010). Research methods for sports studies. Routledge.

- Groom, R., & Cushion, C. J. (2004). Coaches perceptions of the use of video analysis: A case study. The FA Coaches Association Journal, 7(3), 56–58. https://www.researchgate.net/profile/Ryan-Groom-2/publication/262299780_COACHES_PERCEPTIONS_OF_THE_USE_OF_VIDEO_ANALYSIS/links/0f3175373cdf7d5783000000/COACHES-PERCEPTIONS-OF-THE-USE-OF-VIDEO-ANALYSIS.pdf

- Groom, R., & Cushion, C. (2005). Using of video-based coaching with players: A case study. International Journal of Performance Analysis in Sport, 5(3), 40–46. https://doi.org/10.1080/24748668.2005.11868336

- Groom, R., Cushion, C., & Nelson, L. (2011). The delivery of video-based performance analysis by England youth soccer coaches: Towards a grounded theory. Journal of Applied Sport Psychology, 23(1), 16–32. https://doi.org/10.1080/10413200.2010.511422

- Hughes, M., Evans, S., & Wells, J. (2001). Establishing normative profiles in performance analysis. International Journal of Performance Analysis in Sport, 1(1), 1–26. https://doi.org/10.1080/24748668.2001.11868245

- International Judo Federation. Sport and organisation rules. https://www.ijf.org/documents (accessed March 2019).

- James, N., Mellalieu, S. D., & Jones, N. (2005). The development of position specific performance indicators in professional rugby union. Journal of Sports Sciences, 23(1), 63–72. https://doi.org/10.1080/02640410410001730106

- Kraak, W., Magwa, Z., & Terblanche, E. (2018). Analysis of South African semi-elite rugby head coaches’ engagement with performance analysis. International Journal of Performance Analysis in Sport, 18(2), 350–366. https://doi.org/10.1080/24748668.2018.1477026

- Kubayi, A. (2020). Analysis of goal scoring patterns in the 2018 FIFA World Cup. Journal of Human Kinetics, 71(1), 205–210. https://doi.org/10.2478/hukin-2019-0084

- Kuper, S. (2012). Soccer analytics: The money ball of football, an outsiders perspective. Sports Analytic Conference: The Sports Office, November. Manchester University Business School.

- Mackenzie, R., & Cushion, C. (2013). Performance analysis in football: A critical review and implications for future research. Journal of Sports Sciences, 31(6), 639–676. https://doi.org/10.1080/02640414.2012.746720

- Martin, D., Swanton, A., Bradley, J., & McGrath, D. (2018). The use, integration and perceived value of performance analysis to professional and amateur Irish coaches. International Journal of Sports Science & Coaching, 13(4), 520–532. https://doi.org/10.1177/1747954117753806

- McArdle, S., Martin, D., Lennon, A., & Moore, P. (2010). Exploring debriefing in sports: A qualitative perspective. Journal of Applied Sport Psychology, 22(3), 320–332. https://doi.org/10.1080/10413200.2010.481566

- Middlemas, S. G., Croft, H. G., & Watson, F. (2018). Behind closed doors: The role of debriefing and feedback in a professional rugby team. International Journal of Sports Science & Coaching, 13(2), 201–212. https://doi.org/10.1177/1747954117739548

- Mooney, R., Corley, G., Godfrey, A., Osborough, C., Newell, J., Quinlan, L. R., & O’Laighin, G. (2016). Analysis of swimming performance: Perceptions and practices of US based swimming coaches. Journal of Sports Sciences, 34(11), 997–1005. https://doi.org/10.1080/02640414.2015.1085074

- O’Donoghue, P. (2005). Normative profiles of sports performance. International Journal of Performance Analysis in Sport, 5(1), 104–119. https://doi.org/10.1080/24748668.2005.11868319

- O’Donoghue, P. (2006). The use of feedback videos in sport. International Journal of Performance Analysis in Sport, 6(2), 1–14. https://doi.org/10.1080/24748668.2006.11868368

- O’Donoghue, P. (2013). Sports performance profiling. In T. McGarry, P. O’Donoghue, & J. Sampaio (Eds.), Routledge handbook of sports performance (pp. 127–139). Routledge.

- O’Donoghue, P., & Cullinane, A. (2011). A regression-based approach to interpreting sports performance. International Journal of Performance Analysis in Sport, 11(2), 295–307. https://doi.org/10.1080/24748668.2011.11868549

- Painczyk, H., Hendricks, S., & Kraak, W. (2017). Utilisation of performance analysis among Western Province Rugby Union club coaches. International Journal of Performance Analysis in Sport, 17(6), 1057–1072. https://doi.org/10.1080/24748668.2018.1429757

- Rosenthal, R. (1991). Meta-analytic procedures for social research (2nd ed.). Sage.

- World Rugby. (2019). Game analysis. http://www.worldrugby.org/game-analysis (accessed January 2019).

- Wright, C., Atkins, S., & Jones, B. (2012). An analysis of elite coaches’ engagement with performance analysis services (match, notational analysis and technique analysis). International Journal of Performance Analysis in Sport, 12(2), 436–451. https://doi.org/10.1080/24748668.2012.11868609

- Wright, C., Atkins, S., Jones, B., & Todd, J. (2013). The role of performance analysts within the coaching process: Performance analysts survey ‘the role of performance analysts in elite football club settings’. International Journal of Performance Analysis in Sport, 13(1), 240–261. https://doi.org/10.1080/24748668.2013.11868645

- Wright, C., Carling, C., Lawlor, C., & Collins, D. (2016). Elite football player engagement with performance analysis. International Journal of Performance Analysis in Sport, 16(3), 1007–1032. https://doi.org/10.1080/24748668.2016.11868945