ABSTRACT

The University of Sydney Library piloted an initiative in 2017 that used feedback and learning analytics to address referencing errors in undergraduate student assignments. We collaborated with teaching staff to deliver standardised feedback and learning materials to students, combining several educational technologies in use at the University. We discuss our pilot through three lenses: technology, collaboration and evaluating impact. Key findings include that technology affords opportunities for student support; however, integration issues can complicate the streamlining of workflows. Moreover, it is not possible for non-embedded library staff to align technologies with pedagogy and learning outcomes. Collaborations between library and teaching staff include a shared understanding of the value of referencing as an ethical practice. Teaching staff, however, may be unable to deliver referencing feedback in a way that is consistent with an externally designed initiative. When evaluating the impact of our pilot we found that students often display a lack of understanding about the role of feedback in their learning. Recommendations include that teaching staff should drive the use of technologies within their units of study, and that instructionally designed feedback planning is key in delivering consistent feedback, developing student feedback literacy and in supporting referencing as ethical practice.

Introduction

Higher education is an evolving space, providing both challenges and opportunities for academic libraries to support and contribute to learning, teaching and research. Change occurs through aligning education with current and future labour needs, which is a government expectation for higher education in Australia. The Department of Education and Training report into student retention, completion and success makes clear: ‘institutions should be continually adjusting curriculum, pedagogy and academic policy design to meet student needs and expectations’ (2018, p. 6). Academics, however, are time-poor and often look to professional staff to implement emerging technologies in creative ways to improve student experience and their own workflows. This provides an opening for university libraries to play a key role in supporting teaching staff with new ways of delivering learning materials to their students at scale.

A specific focus for the Library’s attention at the University of Sydney came from the Vice-Chancellor’s Taskforce into academic misconduct. It found that there was a significant amount of ‘negligent’ plagiarism at the University – that is, cases where students did ‘not seem to understand the correct ways to cite and reference material that is not their own’ (University of Sydney, 2015, p. 2). A key recommendation of the report was that faculty embed in courses what students need to know about the referencing requirements of their specific disciplines (University of Sydney, 2015, p. 2). A subsequent report found that there had been an increase in incidents of plagiarism in five of our faculties in recent years, which includes cases of improper attribution, citation format issues, and incorrect paraphrasing and quoting (University of Sydney, 2018, p. 5). We believed we could support teaching staff¹ with these forms of negligent plagiarism through the delivery of feedback to students who require learning opportunities to better develop referencing skills.

Despite a concern that students should develop good scholarly practices, literature in both Library and Information Science and Higher Education highlights that academics often do not directly address students’ information literacy deficits in relation to referencing, plagiarism or the use of scholarly sources with formal or scaffolded training. This was found to be the case in two separate overseas surveys by McGuinness (2006) and Raven (2012) who looked at the disparities between faculty expectations and student research practices at their respective universities. Faculty tended to assume that students would implicitly learn how to do research (Raven, 2012, p. 14) and pick up academic writing skills through preparing coursework assignments (McGuinness, 2006, p. 577). Teaching staff also believed that students were largely responsible for mastering these skills themselves (McGuinness, 2006, p. 579; Raven, 2012, p. 9). In the Australian context, Bretag et al. (2014, p. 1153) analysed the policies of 39 universities and found that 95% of them indicated that students were responsible for academic integrity, rather than staff (80%) or the institution (39%). In effect, most institutions believed that ethical decision making was a matter of ‘individual responsibility’ which made it unnecessary for the university to play a role in developing student understanding of academic integrity or to take an holistic approach to it (Bretag et al., 2014, p. 1153). Moreover, Bretag et al.’s survey of student understandings of academic integrity found that students were largely dissatisfied with the support they received to avoid integrity breaches (2014, pp. 1160–1). While students agreed there was enough information about academic integrity policy, they did not feel that there were adequate opportunities for training throughout the course of their degrees (Bretag et al., 2014, p. 1165).

We identified some limitations in existing resources at the University of Sydney Library that address research practices and referencing. For example, new and undergraduate students may lack the skills to find our online resources, while material delivered face-to-face lacks scalability. Teaching staff also do not always invite librarians into their classes to teach about plagiarism (see Michalak, Rysavy, Hunt, Smith, & Worden, 2018). It is also difficult in a physical, real-time learning context to cater to the varied learning needs of individual students within a single cohort. We saw an opportunity to make use of existing electronic marking workflows to deliver timely and constructive commentary to students on specific academic integrity issues. The benefit of using a digital technology to provide students with feedback on referencing would be twofold:

commentary would be standardised for each type of referencing error (see Appendix 1); and

commentary would be personalised and only go to those students that required it (see Appendix 2).

Our pilot project supporting referencing feedback used a combination of technologies already in use at the University of Sydney. These included the University’s learning management system (LMS), the similarity detection software Turnitin,² and the Student Relationship Engagement System (SRES),³ which is a learning analytics tool developed at this University. We partnered with teaching staff who would apply our standardised feedback to student assignments during marking. We also obtained Human Ethics [Protocol Number 2017/188] approval to conduct a study alongside our teaching initiative so that we could disseminate any useful findings about the impact of our referencing feedback on students’ behaviour. By comparing data from students across two assignments, we hoped to be able to assess whether our feedback produced any measurable improvement in their academic writing as indicated by a reduced incidence of referencing errors, including plagiarism.

Below we discuss some of the tensions we negotiated, and lessons learnt, while measuring the effectiveness of a teaching initiative which relied on multiple technologies and multiple collaborations to succeed. The paper takes the form of a narrative essay. The body first discusses the theme of feedback in learning and our approach to it, which is followed by a brief outline of the pilot design. The following three sections then present the methodology, findings and discussion related to each of the three project areas: Technologies, Collaboration, and Evaluating Impact. The conclusion presents our recommendations for future practice.

Responses to Plagiarism and Approaches to Feedback in Learning

A survey of the literature on academic integrity highlights that educational responses rather than punitive ones are more effective in addressing unintentional plagiarism (Dalal, 2015; DeGeeter et al., 2014; Divan, Bowman, & Seabourne, 2015; Gunnarsson, Kulesza, & Pettersson, 2014; Halupa, Breitenbach, & Anast, 2016; Henslee, Goldsmith, Stone, & Krueger, 2015; Liu, Lu, Lin, & Hsu, 2018; Powell & Singh, 2016; Stagg, Kimmins, & Pavlovski, 2013; Strittmatter & Bratton, 2014). Studies have investigated educational interventions such as information literacy classes (Gunnarsson et al., 2014; Henslee et al., 2015), online tutorials (Gunnarsson et al., 2014; Henslee et al., 2015), writing development programs (Divan et al., 2015; Liu et al., 2018), student self-reflections (Dalal, 2015; Powell & Singh, 2016), student perceptions of self-plagiarism (Halupa et al., 2016), a screencasting project (Stagg et al., 2013), and plagiarism ethics (Strittmatter & Bratton, 2014) to name a few. These approaches to academic integrity have merit, as all claim some improvement in student understanding or behaviour post intervention.

We were interested in a more scalable and targeted teaching initiative than these forms of face-to-face and online support. Using feedback to help students develop good research skills was an obvious methodological choice for a teaching initiative because of the critically important role it plays in learning (Carless & Boud, 2018; Sefcik, Striepe, & Yorke, 2020, p. 40). Literature on feedback shows that it is a powerful influence on student achievement, particularly when it addresses faulty interpretations (Hattie & Timperley, 2007, pp. 81–2) and is targeted towards improvement (Henderson et al., 2018, p. 2). Providing consistency in feedback also helps to ensure that it is a positive experience for students (Ferguson, 2011, p. 59; Sopina & McNeill, 2015, p. 676), as it contributes to a perception of fairness in the assessment process (Lizzio & Wilson, 2008, p. 265). Moreover, leveraging digital technologies for feedback has been shown to improve its standardisation (Sopina & McNeill, 2015, p. 676), while also being a mode of delivery that is highly rated by Australian students (Ryan, Henderson, & Phillips, 2019, p. 1519). Some of the literature on electronic feedback, however, has used technology more as a revision strategy than as a resource for skill development (see for example, Buckley & Cowap, 2013; Halgamuge, 2017; Rolfe, 2011). Separate studies by Halgamuge (2017) and Rolfe (2011) allowed students to submit draft assignments to Turnitin and repeatedly change these drafts based on feedback from the software’s similarity report. Halgamuge (2017) was unable to show that students were able to avoid incidences of plagiarism without a similarity report, while Rolfe’s (2011, p. 707) work showed that students’ referencing skills worsened post intervention. In contrast, White, Owens, and Nguyen (2008) have shown that detection software alone does not prevent plagiarism, whereas a constructive feedback approach to it can be successful. They found that combining tests of writing skills with exemplars of good versus bad writing did transfer to subsequent assignments and decreased the frequency of plagiarism (White et al., 2008, pp. 131–2; see also Kashian, Cruz, Jang, & Silk, 2015, for a similar combination of instructional activity and electronic feedback). We favoured the approach of combining digitally delivered feedback with learning opportunities.

Pilot Design

Our pilot design was a form of just-in-time learning delivered only to students requiring it. We believed our feedback would be effective because it would provide a detailed corrective for poor referencing performance via a Turnitin QuickMark (Sefcik et al., 2020, p. 40), while also providing a guide for future action through the learning activities delivered via the SRES (Ferguson, 2011, p. 60). QuickMarks allow teaching staff to utilise an existing library of feedback comments, and to create their own, which can be applied on multiple occasions, to multiple students, and across multiple classes and assignments when marking through Turnitin. Our recruitment criteria specified that collaborating units of study would have two written assessments where referencing was an explicit aspect of the marking rubric. We intended that insights derived from feedback on the first assignment could be applied by students to their second assignment, thus making it an effective learning experience for them (Carless & Boud, 2018, p. 1318; Ferguson, 2011, p. 58; Henderson et al., 2018, pp. 3, 6).

The Library team was solely responsible for the design of both the teaching initiative and research study. Though we informed potential units of study about how the pilot and research would work during the recruitment process, we did not receive input from teaching staff on our design. We were not embedded as teaching staff in any of the units of study recruited for our pilot, so the application of QuickMarks to assignments and timing for the return of feedback was entirely in the hands of teaching staff. In aligning ourselves with a culture of evidence-based decision making, we required corroboration that our teaching initiative was effective in changing student behaviour before we attempted to employ the design more broadly across the University (see Palsson & McDade, 2014). We had to use more than one piece of technology to achieve this (discussed in the Technologies section). As a consequence, taking an evidence-based approach to the delivery of a teaching initiative posed a challenge for teaching staff, students and, therefore, us in attempting to use these data to evaluate its impact.

Technologies

Learning analytics technologies can be employed to measure and analyse student behaviour in a variety of ways, with learning analytics commonly being associated with the tracking and analysis of big data about students. In contrast, our study is situated in the category of course-level learning analytics research. We aimed to use analytics technology to deliver feedback to students, putting information into the hands of teaching staff and students to achieve desired learning outcomes. We required technologies that would allow us to flag referencing issues in students’ written work and then leverage these marking decisions to provide appropriate feedback.

Turnitin allows a library of predefined comments, known as QuickMarks, to be created and applied whenever a commonly-used piece of feedback is required during assignment marking. Based on our research (discussed in the Collaboration section), we created a set of QuickMark comments specific to the needs of University of Sydney students to provide explanatory content about common referencing errors.⁴ Once this library of comments is uploaded by teaching staff to their QuickMarks Manager, the set can be used and reused when marking assignments to provide standardised feedback to students who make one or more of these errors. By providing consistent feedback on common referencing issues we hoped to improve student understanding and reduce teaching staff workload when marking. We also wanted to track student engagement with feedback as a means of evaluating the pilot. Unfortunately, Turnitin has no mechanism to record student interaction with QuickMarks or resource links, requiring us to enlist another piece of technology.

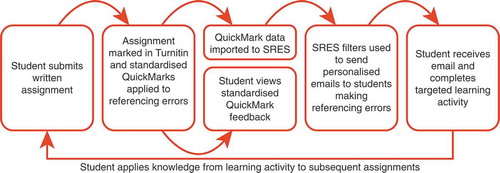

The SRES is an analytics tool that helps teaching staff to curate relevant data and compose personalised messages by building simple rules to customise information that different students can receive via email, SMS, or webpages (D. Y. T. Liu, Bartimote-Aufflick, Pardo, & Bridgeman, 2017; Vigentini et al., 2017). It enabled us to import the number of times each QuickMark was applied to each student assignment, send personalised emails, and track the number of times those emails were opened and the number of times links within them were clicked (Figure 1). This functionality was ideal for our purposes; however, we had two pedagogical concerns about our use of the SRES. Firstly, we would be using two technologies to deliver separate pieces of essentially the same feedback to students. Secondly, the SRES is designed to allow pedagogy to drive analytics, which should be in the control of teaching staff rather than a team of librarians.

Figure 1. Teaching initiative workflow illustrating how the technologies link to provide standardised and personalised feedback on referencing errors in written assignments

We addressed the first issue by using QuickMarks to deliver a short, standardised explanation of the referencing error and the SRES to deliver a personalised email that expanded on the error and included a link to an online learning activity. It would have been more efficient to deliver all feedback, including links for relevant learning activities, within each of our QuickMark comments. However, we would not have been able to gather data in the ways outlined above. Ironically, our attempt to measure the impact of our teaching initiative disturbed the streamlined delivery of our content. A further technological complication occurred with the shift to Canvas as the University’s LMS in semester 2. Integration issues between Turnitin and Canvas resulted in a delay to the release of follow-up emails from the SRES to students.
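The rule-based step described above, where per-student QuickMark counts decide which follow-up content a student receives, can be sketched in a few lines. This is a minimal illustration only, not the SRES itself or its API: the `FEEDBACK` table, the `StudentRecord` class, the `build_email` function, the message wording and the example.org links are all hypothetical names and placeholders introduced for this sketch.

```python
from dataclasses import dataclass, field

# Hypothetical feedback texts keyed by error type. The three keys mirror the
# common referencing errors named in this paper; the wording and the
# example.org links are placeholders, not the pilot's actual content.
FEEDBACK = {
    "no_citation": (
        "Some claims in your assignment were not supported by a citation.",
        "https://example.org/activities/when-to-cite",
    ),
    "non_scholarly_source": (
        "Some of your sources were not appropriately scholarly.",
        "https://example.org/activities/scholarly-sources",
    ),
    "inconsistent_style": (
        "Your citations did not consistently follow the required style.",
        "https://example.org/activities/citation-style",
    ),
}


@dataclass
class StudentRecord:
    name: str
    # Number of times each QuickMark was applied to this student's assignment.
    quickmark_counts: dict = field(default_factory=dict)


def build_email(student):
    """Return a personalised email body, or None if no QuickMark was applied."""
    flagged = [key for key in FEEDBACK if student.quickmark_counts.get(key, 0) > 0]
    if not flagged:
        return None  # only students who need the feedback receive an email
    lines = [
        f"Dear {student.name},",
        "",
        "Your marker noted the following referencing issues:",
    ]
    for key in flagged:
        explanation, link = FEEDBACK[key]
        lines.append(f"- {explanation} Learning activity: {link}")
    return "\n".join(lines)
```

A student with no applied QuickMarks yields `None` and so receives no email, which reflects the intent that commentary go only to those students who require it.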

Despite these issues, we found both QuickMarks and the SRES to be well suited for delivering electronic feedback to students. QuickMarks are applied directly to assignment errors so students receive feedback at the point of need. The rich functionality of the SRES can enable teaching staff to be innovative about the ways in which they engage and support students. Our academic collaborators, however, did not have the time or confidence with this technology to utilise it themselves during this pilot. Rather, they allowed the Library team to send communications about referencing errors on their behalf (see the Collaboration section below).

For feedback to be truly efficacious, it needs to occur sooner than it was delivered in our pilot. For all units of study, grades for first assignments were released later than planned by teaching staff due to technological and workload issues that were independent of our pilot. The ensuing gap between assignment submission and receipt of feedback may have made the feedback less effective for students and decreased their engagement with the referencing support materials. The delay certainly resulted in a major pedagogical issue as students had already submitted their second written assignment before receiving feedback on the first. The mistiming of feedback meant that students had no immediate opportunity to act on it (see Carless & Boud, 2018, p. 1318; Ferguson, 2011, p. 58; Henderson et al., 2018, pp. 3, 6). In effect, our teaching initiative was unable to ‘feedforward’ (Hattie & Timperley, 2007, p. 90) into the second assignment within units of study as we had planned in our pilot design. While we hoped that students would incorporate insights from our referencing feedback into subsequent units of study, we had no way of evaluating this.

Our experience with these technologies has demonstrated to us that analytics are better able to support students when teaching staff integrate tools such as Turnitin QuickMarks and the SRES with pedagogy to improve learning outcomes. When these technologies are in the control of teaching staff, rather than a Library team, teaching staff can determine how best to frame the inclusion of analytics as part of a subject’s activity. This will be far more effective in guiding the decision making of students as well as teachers (see McKee, 2017).

Collaboration

During 2017 we partnered with academic coordinators from four units of study to deliver our referencing pilot. Two units were partnered with in semester 1, hereafter referred to as Unit 1 and Unit 2, and two units in semester 2, hereafter referred to as Unit 3 and Unit 4.

Our two academic collaborators for semester 1 allowed us to survey student essays from a previous enrolment period so we could determine key referencing issues. The three most common errors we identified were:

the failure to cite any source;

the failure to cite appropriately scholarly sources; and

the failure to cite consistently using a specified citation style.

Our findings are consistent with those of Lunsford and Lunsford (2008), whose study on common error forms in students’ scholarly writing found a similar set of issues to do with referencing. Students struggled with ‘the use of sources on every front’, which included omitting them, failing to choose appropriate ones, and failing to document them correctly (Lunsford & Lunsford, 2008, pp. 795–6).

This research informed our creation of a set of three predefined referencing QuickMarks and related follow up emails with relevant learning activities. All content was tailored to each collaborating unit of study. Each piece of feedback targets a specific scholarly procedure used to document a research paper, so it is directed to the ‘process’ level of understanding that is needed to perform referencing tasks (see Hattie & Timperley, 2007, p. 90). Our approach is consistent with Hattie and Timperley’s (2007) model of feedback in that we aimed to enhance learning through providing information about a student’s progress with scholarly writing and about the actions they need to take to improve it (pp. 86–87). Our QuickMarks thus combined feedback with instruction related to both the ‘task’ and ‘process’ levels of learning (see Hattie & Timperley, 2007, p. 82).

It became evident during the collaboration process that the Library workload associated with this pilot was not scalable beyond this project. The complexity of coordinating multiple technologies required us to:

develop training materials for teaching staff to ensure that our QuickMarks set was uploaded and applied systematically by the entire teaching team. This was particularly important given that one unit of study we recruited had an academic coordinator and six other teaching staff marking assignments.

build and send follow up emails to flagged students, as none of our academic coordinators were familiar enough with the SRES to do this themselves.

An unanticipated issue in collaborating with teaching staff was the lack of usable data gathered to evaluate the impact of the pilot. For Unit 1, we successfully delivered emails from the SRES to students for both written assignments. However, the resulting data set was quite small. We were unable to proceed with the Unit 2 collaborator due to their concerns around giving the Library access to student information, so we also acquired no data from this collaboration.

Though we recruited a further two academic coordinators in semester 2, Unit 3 only applied QuickMarks to half the student intake for the first assignment. A second marker for the course, with whom we had never communicated, may not have been fully on board with or cognisant of the demands of our teaching initiative. We chose not to send follow-up emails to those students who had received QuickMarks because it would be unethical to continue when half the students had not been included. Unit 4 only applied QuickMarks for the first assignment. They overlooked that we also wanted them to apply the QuickMarks to the second assignment, so we had no comparative data from this collaboration.

Our partnerships with academic coordinators proceeded initially from the shared aim of wishing to improve students’ scholarly writing through feedback on referencing. This goal, however, was not clearly linked to the learning outcomes of the assignments for all the units of study we recruited. In Hattie and Timperley’s (2007) terms, the ‘success criteria’ about scholarly writing were not explicit enough for students, and some teaching staff, to see a need to attain this goal (pp. 88–89; see also the ‘Evaluating Impact’ section below). This was most evident for Unit 3, where QuickMarks were applied to only half the student cohort, but it was also evident for Unit 1 and Unit 4, as we found many missed opportunities for applying QuickMarks when we sampled their marked essays. It is common for teaching staff not to highlight all forms of error in an essay, as Lunsford and Lunsford (2008) found in their large study of changes in undergraduate writing (see also Ferguson, 2011). Markers tend to focus on a few specific error patterns in student papers (Lunsford & Lunsford, 2008, p. 794), and they do well to pick up on even half of the referencing errors that students make in research assignments (Lunsford & Lunsford, 2008, p. 796). This may not be ideal from the point of view of the missed learning opportunities and lack of consistency in feedback that it implies (e.g. Lizzio & Wilson, 2008). However, there are sound pedagogical reasons as to why too much correctional feedback can be undesirable.

Hattie and Timperley (2007) offer a cogent reason for restricting feedback, as too much of it on any one of the levels in their model (task, process, self-regulation, and self-personal), ‘may detract from performance’ if it inhibits students from making the necessary cognitive effort to develop good strategies to attain an intended learning outcome (p. 91). As well, Ferguson’s (2011) survey of student perceptions of feedback found that students tended to rate feedback on ‘pedantic detail’ such as ‘spelling, grammar, referencing, etc’ less favourably than feedback about the ideas and arguments expressed in their work (pp. 56–57). Teaching staff also prefer to engage with the intellectual content of student work. In our post-semester debrief, one collaborator told us they chose to restrict the application of QuickMarks as they did not want to discourage otherwise good students from continuing to study the subject. They also wished to provide more messages of approval to students about the strengths of their work, rather than offer too many of a correctional nature.

Ferguson’s (2011) statements speak to the need to prioritise commentary about core ideas; however, we would disagree with categorising referencing as ‘pedantic detail’ (p. 56). Rather, we see it as a foundation of good research and a moral act in that it confers or denies value to other scholars in a field (Penders, 2018, p. 1). Ferguson’s view thus highlights a major challenge facing educators who wish to reverse this tendency to relegate citing to a mechanical rather than ethical practice.

There is a hierarchy of teaching aims, both in practice and even within the literature on feedback. Correctional commentary and the goal of good scholarly communication are both subordinated to more primary aims, the most important of which is the engagement between marker and student around intellectual content. This may help to explain why teaching staff in the units we partnered with made less use of our QuickMarks when marking assignments than we had anticipated. Moreover, despite our intentions, teaching staff may not have experienced the pilot as labour saving. When we sampled marked essays, we found some instances where a QuickMark had been applied incorrectly and some instances where teaching staff had commented about a referencing error instead of using the relevant QuickMark. Possible interpretations include the speed with which marking has to be done thereby resulting in errors, forgetting about the study at the time of marking, or even being resistant to it due to our failure to get buy-in from everyone. Perhaps applying commentary from a third party (us) to an assignment was more burdensome than typing their own feedback directly on to an essay. It may also be the case that applying more of our correctional feedback increased marking time if teaching staff tried to balance every instance of its delivery with commentary about students’ substantive work so that students would not feel their assignment feedback was too detail oriented.

The issues outlined above could be overcome with the development of an explicit feedback plan for a unit of study or single subject area. This idea is supported in modern feedback literature which defines it as an educative process rather than ‘commentary’ (Henderson et al., 2018, p. 4) or ‘telling’ (Carless & Boud, 2018, p. 1317). An effective plan is one that provides multiple opportunities for students to develop their abilities to assess their own work. This transforms potential student defensiveness, as they become an active part of the process, rendering feedback meaningful (Carless & Boud, 2018, pp. 1317–8, 1323). The establishment of ‘a productive feedback ethos’ (Carless & Boud, 2018, p. 1322) would enable primary learning outcomes to be maintained without needing to restrict feedback or subordinate some teaching aims, such as referencing. This would better equip teaching teams to communicate to students the value of feedback in their learning process and its relevance to learning outcomes (Henderson et al., 2018, p. 9). A plan would also help ‘maintain consistency of assessors’ which would be a better learning experience for students (Henderson et al., 2018, p. 7). One suggestion for future collaborations with teaching staff on academic integrity issues would be to consider the co-development of, or input to, feedback planning as a direction for further work.

Evaluating Impact and the Student Experience

Due to the lack of application of our QuickMarks by teaching staff, we were unable to collect sufficient data to undertake the statistical analysis of the impact of our pilot on student achievement that we had originally envisaged. We were, however, still able to collect quantitative information on how students engaged with the email feedback, summarised in Table 1. In conjunction with the qualitative feedback discussed below, these data gave us some insights into how students perceived and engaged with the teaching initiative.

Table 1. Student interaction with feedback emails and links to learning activities
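Engagement measures of this kind (an email open rate and a link click rate) can be derived from per-email tracking counts. The sketch below is illustrative only: the `engagement_rates` function and the record layout are hypothetical, the sample values are invented and are not the pilot's data, and it assumes that both rates are calculated over the number of emails sent, which the paper does not specify.

```python
def engagement_rates(records):
    """Compute open and click rates over emails sent.

    Each record is a dict with 'opens' and 'clicks' counts for one email.
    Assumption: an email counts as opened or clicked if its count is above
    zero, and both rates use the number of emails sent as the denominator.
    """
    sent = len(records)
    if sent == 0:
        return {"open_rate": 0.0, "click_rate": 0.0}
    opened = sum(1 for r in records if r["opens"] > 0)
    clicked = sum(1 for r in records if r["clicks"] > 0)
    return {"open_rate": opened / sent, "click_rate": clicked / sent}


# Invented sample: four emails, three opened, one with a link clicked.
sample = [
    {"opens": 2, "clicks": 1},
    {"opens": 1, "clicks": 0},
    {"opens": 0, "clicks": 0},
    {"opens": 3, "clicks": 0},
]
rates = engagement_rates(sample)
print(f"open rate: {rates['open_rate']:.0%}, click rate: {rates['click_rate']:.0%}")
```

If click rate were instead measured only among opened emails, the denominator for `click_rate` would be `opened` rather than `sent`; the choice changes how figures from different units compare.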

Two mechanisms were employed to collect qualitative data from students. The first was a question at the bottom of each email sent out by the SRES, ‘Was this email helpful?’, which students could choose to answer by clicking on a ‘Yes’ or ‘No’ link. They could then choose to provide additional comments with their response. Two responses were received directly in response to these emails, one from a student in each of Units 1 and 4. The second mechanism for collecting qualitative feedback was a survey that we sent out to all students who received emails during the pilot. We received only one response to this survey, from a student in Unit 4. Three qualitative responses from students were far fewer than we had hoped to collect; nonetheless, they provided us with some interesting perspectives on the student experience of receiving technology-mediated feedback and revealed some unanticipated issues in our initiative.

One of the responses (Unit 1) to the question of whether the email was helpful was extremely positive: ‘Quite often we are not reminded about referencing issues with clear feedback. This was good as it provided me with the correct solutions.’ This supported both our perceptions and literature findings that referencing is often not addressed and that pointing out an error while at the same time providing learning materials to address an issue is helpful to some students (see also Bretag et al., 2014, p. 1165; Hattie & Timperley, 2007; Henderson et al., 2018).

These points were touched on from another perspective by a student (Unit 4) who responded to the survey. This person stated that ‘when i asked for clarification, the tutor told me there was nothing wrong. Nonetheless appreciate the direction to information, however i do not understand why the non-issue was brought up at all.’ As in the previous response, the direction to related learning materials is viewed positively. In this case, however, the fact that referencing is often not addressed seems to have led the student to conclude that appropriately attributing scholarly sources is not particularly important. In order for students to view their lack of knowledge as a gap they need to address, teaching staff need to include referencing as an explicit criterion of success in assignment learning outcomes.

Communicating with students at scale in a technology-mediated way is not without its hazards. The SRES uses several strategies to mitigate the depersonalisation of programmed communications, including sending emails so they appear to come directly from teaching staff. Replies to the email go to individual teaching staff, facilitating any ongoing conversation that the student wishes to have. However, in one situation, our email interrupted an in-progress communication between a student and the member of the teaching staff that our email appeared to come from. This Unit 4 student responded that the email was not helpful, stating: ‘The response only addressed one of my concerns – I had asked about both referencing systems and also about feedback more generally and was a little disappointed that my concerns were not addressed.’ Without any signposting to indicate how and why the email was coming to them, the personalisation confused communications between teaching staff and student, as the student felt they had been ignored and that staff were not paying attention to their issues.

Sending emails out into an unknown context was a risk that we had not fully appreciated before receiving qualitative feedback from students. The response to the student survey indicated that at least one student had ongoing communication problems with some teaching staff, and we sent our emails without any awareness of this. The comparatively low rate of opening and engagement with emails seen in Unit 4 (53% open rate, 14% link click rate) could feasibly have been influenced by these communication issues – if students found previous communications unhelpful, perhaps they gave little attention to subsequent messages. The personalised communication offered by the SRES is likely to be most effective when used by teaching staff who are aware of the context in which they are communicating, allowing them to appropriately signpost messages so that they fit seamlessly in with any ongoing communication.

We intended the feedback in QuickMarks and the SRES emails to provide scaffolding and support in developing student skills. The positive qualitative response from the Unit 1 student indicates that some students viewed the feedback in the manner that we anticipated. Other students had a different view of the meaning of feedback. The survey response indicated that some students interpret feedback as necessarily punitive, rather than a learning opportunity: ‘not sure how i could have possibly received a HD on said assessment if i had reference incorrectly?????’. In this case, the student ignored the feedback because they perceived it to be inconsistent with their grade, as also noted by Ferguson (2011, p. 57), and because their knowledge was insufficient to accommodate it (see Hattie & Timperley, 2007, p. 100). This aligns with the previously discussed reluctance of some teaching staff to apply QuickMarks to all instances of a referencing error, as they felt that the feedback would be interpreted as excessively correctional or punitive.

It is constructive to reflect on the usefulness of feedback delivery methods that we used in our initiative from the student perspective, as it provides us with a clear example of a lack of feedback literacy among some students participating in the pilot (see Carless & Boud, 2018; Henderson et al., 2018). To address these varied responses to feedback, we recommend educating students on the role of feedback early in their studies. Students would then have the tools to appreciate how feedback applies to their work and its function in self-improvement, as these play a key part in feedback effectiveness (Carless & Boud, 2018, pp. 1316–7; Henderson et al., 2018). We also recommend that the delivery of personalised communications to students be situated with teaching staff, unless a library team is embedded within a teaching unit, to avoid the possibility of confusing students about who is contacting them.

Conclusions and Implications for Practice

We could not measure impact on learning due to the lack of data gathered. However, the multifaceted scope of our project provided us with useful intelligence about the use of analytics and relationship engagement technologies, the need for explicit feedback planning in curricula development, and how referencing might be better supported at this University in the future.

We offer the following recommendations:

Teaching staff need to be in control of positioning technologies such as the SRES within their units of study, so that they can align pedagogy with actionable data and effectively signpost communications with students.

An instructionally designed feedback plan would support teaching staff to communicate to students the value of feedback in their learning process, which is crucial in the development of student feedback literacy.

Library teams could more effectively support teaching staff with referencing if this feedback was contextualised within a unit’s existing feedback plan. This would also ensure that referencing was made a key criterion of assessment success, which might assist students to appreciate it as ethical practice.

The widely held perception of referencing as an administrative detail may help to explain why reported incidents of plagiarism have increased in five of our faculties in recent years, including cases of improper attribution, citation format issues, and incorrect paraphrasing and quoting (University of Sydney, 2018, p. 5). As this paper has discussed, there is a continuing need to find practical ways to address these deficits in scholarly communication. In particular, there is a greater need to resituate referencing as ethical practice and moral action that confers value on one’s own and others’ contributions; such ethical practices are skills that students require as graduates working in real world contexts.

Acknowledgments

We would like to emphasise our gratitude to all our collaborators in this study as they so willingly provided us with a platform from which we could trial, assist and measure our initiative to demonstrate our impact and value.

We also gratefully acknowledge the assistance of the following people in this project: Julia Child, Jesse Irwin, Sarah Graham, Mithunkumar Ramalingam, and Anniee Hyde.

We would like to thank the two anonymous reviewers of this paper. Their thoughtful suggestions have greatly improved the structure, framing, and readability of this manuscript.

Disclosure Statement

Authors O’Donnell, Maloney, Masters, and Liu have no conflict of interest or financial ties to disclose. Dr Liu leads a cross-institutional team that develops and supports the Student Relationship Engagement System (SRES), a multiple award-winning learning analytics platform that empowers educators to capture, curate, analyse, and use relevant data to enhance student engagement.

Additional information

Notes on contributors

Rosemary O’Donnell

Rosemary O’Donnell works with the Academic Services division of the University of Sydney Library. She supports teaching and research through the delivery of pedagogically informed online learning experiences and information/digital literacy services. Rosemary completed a Certificate in Further Education Teaching at Thames Polytechnic London and holds a PhD in Social Anthropology from the University of Sydney. ORCID https://orcid.org/0000-0003-4096-1941

Kayla Maloney

Kayla Maloney works with the Digital Collections team at the University of Sydney Library. She supports researchers in managing, analysing and visualising their data. Kayla completed an undergraduate degree in geophysics at the University of Alberta in Canada and holds a PhD in geoscience from the University of Sydney. ORCID https://orcid.org/0000-0001-6247-3944

Kate Masters

Kate Masters works with the Academic Services division of the University of Sydney Library. She delivers integrated, pedagogically-aligned teaching and research support to academic staff and students. Kate attained a First Class Honours degree in History from the University of Wollongong and a Master of Applied Science (Library and Information Management) from Charles Sturt University. ORCID https://orcid.org/0000-0002-8833-7497

Danny Liu

Danny Liu works with the Educational Innovation Team at the University of Sydney. He is a molecular biologist by training, programmer by night, researcher and academic developer by day, and educator at heart. A multiple national teaching award winner, he works at the confluence of learning analytics, student engagement, educational technology, and professional development and leadership to enhance the student experience. ORCID http://orcid.org/0000-0002-6618-5576

Notes

1. By teaching staff, we refer to academic course coordinators who have teaching and administrative responsibilities for a unit of study as well as their teaching assistants who tutor and mark assignments for smaller groups of students within a unit. The academic coordinators that we partnered with for this pilot are sometimes referred to as academic collaborators or simply collaborators. A unit of study is an individual subject often taught in one semester at the University of Sydney.

2. Turnitin is similarity detection software that is mandatory for most written assignments at the University of Sydney.

3. The SRES is web-based software developed by a team at the University of Sydney, which allows teachers to collect and analyse student data and personalise responses to student cohorts. At the time of this study, it was used at four universities in Australia and had received multiple awards, including the 2017 Pearson ACODE Award for Innovation in Technology Enhanced Learning.

4. The links supplied in Turnitin QuickMarks to do with referencing and plagiarism provided content that was inconsistent with that claimed in the QuickMark comment, or the explanatory content was so broad as to not inform students what specific error they had made.

References

- Bretag, T., Mahmud, S., Wallace, M., Walker, R., McGowan, U., East, J., … James, C. (2014). ‘Teach us how to do it properly!’ An Australian academic integrity student survey. Studies in Higher Education, 39(7), 1150–1169.

- Buckley, E., & Cowap, L. (2013). An evaluation of the use of Turnitin for electronic submission and marking and as a formative feedback tool from an educator’s perspective. British Journal of Educational Technology, 44(4), 562–570.

- Carless, D., & Boud, D. (2018). The development of student feedback literacy: Enabling uptake of feedback. Assessment & Evaluation in Higher Education, 43(8), 1315–1325.

- Dalal, N. (2015). Responding to plagiarism using reflective means. International Journal for Educational Integrity, 11(1), 1–12.

- DeGeeter, M., Harris, K., Kehr, H., Ford, C., Lane, D. C., Nuzum, D. S., … Gibson, W. (2014). Pharmacy students’ ability to identify plagiarism after an educational intervention. American Journal of Pharmaceutical Education, 78(2), 1–33.

- Department of Education and Training. (2018). Final Report - Improving retention, completion and success in higher education. Online – June. Australian Government. Retrieved from https://docs.education.gov.au/node/50816

- Divan, A., Bowman, M., & Seabourne, A. (2015). Reducing unintentional plagiarism amongst international students in the biological sciences: An embedded academic writing development programme. Journal of Further and Higher Education, 39(3), 358–378.

- Ferguson, P. (2011). Student perceptions of quality feedback in teacher education. Assessment & Evaluation in Higher Education, 36(1), 51–62.

- Gunnarsson, J., Kulesza, W. J., & Pettersson, A. (2014). Teaching international students how to avoid plagiarism: Librarians and faculty in collaboration. The Journal of Academic Librarianship, 40(3–4), 413–417.

- Halgamuge, M. N. (2017). The use and analysis of anti‐plagiarism software: Turnitin tool for formative assessment and feedback. Computer Applications in Engineering Education, 25(6), 895–909.

- Halupa, C. M., Breitenbach, E., & Anast, A. (2016). A self-plagiarism intervention for doctoral students: A qualitative pilot study. Journal of Academic Ethics, 14(3), 175–189.

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

- Henderson, M., Boud, D., Molloy, E., Dawson, P., Phillips, M., Ryan, T., & Mahoney, P. (2018). Framework for learning: Closing the assessment loop - Framework for effective feedback. Australian Government Department of Education and Training. Retrieved from http://newmediaresearch.educ.monash.edu.au/feedback/framework-of-effective-feedback/

- Henslee, A. M., Goldsmith, J., Stone, N. J., & Krueger, M. (2015). An online tutorial Vs. Pre-recorded lecture for reducing incidents of plagiarism. American Journal of Engineering Education, 6(1), 27–32.

- Kashian, N., Cruz, S. M., Jang, J.-W., & Silk, K. J. (2015). Evaluation of an instructional activity to reduce plagiarism in the communication classroom. Journal of Academic Ethics, 13(3), 239–258.

- Liu, D. Y. T., Bartimote-Aufflick, K., Pardo, A., & Bridgeman, A. J. (2017). Data-driven personalization of student learning support in higher education. In A. Peña-Ayala (Ed.), Learning analytics: Fundaments, applications, and trends (pp. 143–169). Springer. doi:10.1007/978-3-319-52977-6_5

- Liu, G. Z., Lu, H. C., Lin, V., & Hsu, W. C. (2018). Cultivating undergraduates’ plagiarism avoidance knowledge and skills with an online tutorial system. Journal of Computer Assisted Learning, 34(2), 150–161.

- Lizzio, A., & Wilson, K. (2008). Feedback on assessment: Students’ perceptions of quality and effectiveness. Assessment & Evaluation in Higher Education, 33(3), 263–275.

- Lunsford, A. A., & Lunsford, K. J. (2008). “Mistakes are a fact of life”: A national comparative study. College Composition and Communication, 59(4), 781–806. Retrieved from http://www.jstor.org/stable/20457033

- McGuinness, C. (2006). What faculty think – Exploring the barriers to information literacy development in undergraduate education. The Journal of Academic Librarianship, 32(6), 573–582.

- McKee, H. (2017). An instructor learning analytics implementation model. Online Learning, 21(3), 87–102.

- Michalak, R., Rysavy, M., Hunt, K., Smith, B., & Worden, J. (2018). Faculty perceptions of plagiarism: Insight for librarians’ information literacy programs. College and Research Libraries, 79(6), 747.

- University of Sydney, & Office of Educational Integrity. (2018). Educational Integrity Annual Report. University of Sydney.

- Palsson, F., & McDade, C. L. (2014). Factors affecting the successful implementation of a common assignment for first-year composition information literacy. College & Undergraduate Libraries, 21(2), 193–209.

- Penders, B. (2018). Ten simple rules for responsible referencing. PLoS Computational Biology, 14(4), Article e1006036.

- Powell, L., & Singh, N. (2016). An integrated academic literacy approach to improving students’ understanding of plagiarism in an accounting course. Accounting Education, 25(1), 14–34.

- Raven, M. (2012). Bridging the gap: Understanding the differing research expectations of first-year students and professor. Evidence Based Library and Information Practice, 7(3), 4–31.

- Rolfe, V. (2011). Can Turnitin be used to provide instant formative feedback? British Journal of Educational Technology, 42(4), 701–710.

- Ryan, T., Henderson, M., & Phillips, M. (2019). Feedback modes matter: Comparing student perceptions of digital and non‐digital feedback modes in higher education. British Journal of Educational Technology, 50(3), 1507–1523.

- Sefcik, L., Striepe, M., & Yorke, J. (2020). Mapping the landscape of academic integrity education programs: What approaches are effective? Assessment & Evaluation in Higher Education, 45(1), 30–43.

- Sopina, E., & McNeill, R. (2015). Investigating the relationship between quality, format and delivery of feedback for written assignments in higher education. Assessment & Evaluation in Higher Education, 40(5), 666–680.

- Stagg, A., Kimmins, L., & Pavlovski, N. (2013). Academic style with substance: A collaborative screencasting project to support referencing skills. The Electronic Library, 31(4), 452–464.

- Strittmatter, C., & Bratton, V. K. (2014). Plagiarism awareness among students: Assessing integration of ethics theory into library instruction. College and Research Libraries, 75(5), 736–752.

- University of Sydney, & Academic Misconduct and Plagiarism Taskforce. (2015). An approach to minimising academic misconduct and plagiarism at the University of Sydney. University of Sydney.

- Vigentini, L., Kondo, E., Samnick, K., Liu, D. Y., King, D., & Bridgeman, A. J. (2017). Recipes for institutional adoption of a teacher-driven learning analytics tool: Case studies from three Australian universities. In H. Partridge, K. Davis & J. Thomas (Eds.), ASCILITE2017: 34th International Conference on Innovation, Practice and Research in the Use of Educational Technologies in Tertiary Education. University of Southern Queensland: Australasian Society for Computers in Learning in Tertiary Education (ASCILITE).

- White, F., Owens, C., & Nguyen, M. (2008). Using a constructive feedback approach to effectively reduce student plagiarism among first-year psychology students. Proceedings of the Visualisation for Concept Development Symposium (2008).

Appendix 1.

Standardised QuickMark message content

No Source

In academic writing you need to support your ideas, facts and arguments by citing scholarly sources. If you do not acknowledge your sources you are committing plagiarism. This is because you are presenting another person’s work as your own, and are not communicating in a scholarly way.

Non-Scholarly Source

In academic writing you need to support your ideas, facts and arguments by citing scholarly sources. Scholarly sources are written by people with good subject knowledge and are often peer reviewed. Peer reviewed sources have been checked by experts in the subject for accuracy and quality before being published. In this unit you must use peer-reviewed journal articles, scholarly texts and reputable non-peer reviewed materials as factual sources for your assignments.

Incorrect Citation

You must use the in-text APA 6th referencing system for all assignments in this unit of study. Your in-text citations must include: author; year; page number(s), if citing a specific idea, fact or argument. American Psychological Association. (2010). Publication manual of the American Psychological Association (6th ed.). Washington, DC: American Psychological Association. Your reference list citations must be in alphabetic order and include: author; year; title; source; other information depending on what type of resource you’re citing. American Psychological Association. (2010). Publication manual of the American Psychological Association (6th ed.). Washington, DC: American Psychological Association. When quoting you must include either: double quotation marks for short quotations, or indented block text for quotations of more than 40 words. Please see the following guide at: http://tinyurl.com/ybcczvlm

Appendix 2.

Personalised SRES email content with learning materials

If students received more than one type of QuickMark (e.g. Non-Scholarly Source and Incorrect Citation), they would receive a single, integrated message incorporating information about both issues.

Dear $PREFERREDNAME$

Introductory paragraphs:

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ you didn’t consistently support your arguments with sources.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ some of the sources you cited were not scholarly.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ you made some errors in applying a referencing system in a consistent way.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ you didn’t consistently support your arguments with sources, and some of the sources you cited were not scholarly.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ you didn’t consistently support your arguments with sources, and you made some errors in applying a referencing system in a consistent way.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ some of the sources you cited were not scholarly. You also made some errors in applying a referencing system in a consistent way.

[email body section if condition met]

In your recent assignment for $UNITOFSTUDY$ you didn’t consistently support your arguments with sources, and some of the sources you cited were not scholarly. You also made some errors in applying a referencing system in a consistent way.

Learning materials offered when conditions met:

[email body section if condition met]

Why is referencing important?

Referencing is essential to communicating in a scholarly way because: it acknowledges the author or creator for their work, thus avoiding plagiarism; it gives credibility to your work; it enables others to locate the sources you have cited in your work. Learn how to support your arguments with scholarly sources in this ‘Supporting Evidence’ workshop from the University of New England. Practise your referencing skills with the Library’s 10 minute ‘How to reference’ tutorial.

[email body section if condition met]

What is a scholarly source?

Scholarly sources are written by people with good subject knowledge and are often peer reviewed. Peer reviewed sources have been checked by experts in the subject for accuracy and quality before being published. Use the REVIEW criteria to establish whether a source is scholarly. Learn how to apply REVIEW with the Library’s 10 minute ‘Scholarly versus Non-Scholarly resources’ tutorial.

[email body section if condition met]

How do I reference?

The Library’s Referencing and citation styles subject guide provides: an overview of major referencing systems; how to apply styles that comply with either an In-text (Author-Date) or Note (Footnotes or Endnotes) system.

[Final body section]

Please direct any enquiries to your tutor; do not reply to this email.

Regards

$TUTORNAME$
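
The conditional assembly of the message above can be illustrated with a minimal sketch in Python. It is not the SRES implementation, whose condition syntax is not shown in this paper. The flag names, the `compose_email` function, the rule that no email is sent when no QuickMark was applied, and the pairing of each QuickMark type with one learning-materials heading are all assumptions made for illustration. The learning-materials text itself is omitted and only the headings are included.

```python
# Hypothetical flag names for the three standardised QuickMarks (Appendix 1).
NO_SOURCE = "no_source"
NON_SCHOLARLY = "non_scholarly"
INCORRECT_CITATION = "incorrect_citation"

A = "you didn't consistently support your arguments with sources"
B = "some of the sources you cited were not scholarly"
C = "made some errors in applying a referencing system in a consistent way"

# One introductory paragraph per combination of QuickMarks, as in Appendix 2.
INTROS = {
    frozenset({NO_SOURCE}): f"{A}.",
    frozenset({NON_SCHOLARLY}): f"{B}.",
    frozenset({INCORRECT_CITATION}): f"you {C}.",
    frozenset({NO_SOURCE, NON_SCHOLARLY}): f"{A}, and {B}.",
    frozenset({NO_SOURCE, INCORRECT_CITATION}): f"{A}, and you {C}.",
    frozenset({NON_SCHOLARLY, INCORRECT_CITATION}): f"{B}. You also {C}.",
    frozenset({NO_SOURCE, NON_SCHOLARLY, INCORRECT_CITATION}):
        f"{A}, and {B}. You also {C}.",
}

# Assumed pairing of each QuickMark type with one learning-materials section.
MATERIALS = [
    (NO_SOURCE, "Why is referencing important?"),
    (NON_SCHOLARLY, "What is a scholarly source?"),
    (INCORRECT_CITATION, "How do I reference?"),
]


def fill(text, fields):
    """Replace $FIELD$ placeholders with the values for one student."""
    for key, value in fields.items():
        text = text.replace(f"${key}$", value)
    return text


def compose_email(preferred_name, unit_of_study, tutor_name, flags):
    """Build one integrated message; return None if no QuickMark applies."""
    flags = frozenset(flags)
    if flags not in INTROS:
        return None  # assumption: students without QuickMarks get no email
    parts = [
        "Dear $PREFERREDNAME$",
        "In your recent assignment for $UNITOFSTUDY$ " + INTROS[flags],
    ]
    # Append only the learning-materials sections whose condition is met.
    parts += [heading for flag, heading in MATERIALS if flag in flags]
    parts += [
        "Please direct any enquiries to your tutor; do not reply to this email.",
        "Regards",
        "$TUTORNAME$",
    ]
    fields = {
        "PREFERREDNAME": preferred_name,
        "UNITOFSTUDY": unit_of_study,
        "TUTORNAME": tutor_name,
    }
    return fill("\n\n".join(parts), fields)


if __name__ == "__main__":
    # Illustrative values only; not data from the pilot.
    print(compose_email("Sam", "UNIT1001", "Dr Lee",
                        {NON_SCHOLARLY, INCORRECT_CITATION}))
```

Keying the introductory paragraphs on the set of QuickMark types received mirrors the seven conditional sections listed above, so a student with several QuickMark types still receives a single message.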