?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Multivariate mixtures are encountered in situations where the data are repeated or clustered measurements in the presence of heterogeneity among the observations with unknown proportions. In such situations, the main interest may be not only in estimating the component parameters, but also in obtaining reliable estimates of the mixing proportions. In this paper, we propose an empirical likelihood approach combined with a novel dimension reduction procedure for estimating parameters of a two-component multivariate mixture model. The performance of the new method is compared to fully parametric as well as almost nonparametric methods used in the literature.

1. Introduction

Mixture models provide a flexible way of modelling complex data obtained from a population with observed or unobserved heterogeneity. Mixture models have been applied in astronomy, biology, fishery, human genetics, and other scientific areas of research. See Titterington, Smith, and Makov (Citation1985), Lindsay (Citation1995), McLachlan and Peel (Citation2000), and references therein.

We consider a special multivariate mixture model where repeated measurements are available for each subject. Let be independent and identically distributed (i.i.d.) d-variate random vectors from a finite mixture model with m components. If the elements of the vector

are independent conditional on belonging to a subpopulation, then the mixture density is given by

(1)

(1) where

's are mixing proportions such that

,

for all j, and

, with or without subscripts, denotes a univariate density function.

The above data structure is quite common especially in social sciences where measurements are taken repeatedly for various reasons. For example, the goal of research on preschool children's inclusion task responses is to study different solution strategies with which young children solve a given cognitive task. The solution strategy is often called the latent variable since it is hidden and unobservable. A group of preschool children can be considered as a sample from a mixture model where the components correspond to the various solution strategies; see Thomas and Horton (Citation1997). In a simplified setting, one could assume that there are two main solution strategies which lead to a mixture model with two components.

Many researchers studied the nonparametric identifiability of the above multivariate mixture model. Hall and Zhou (Citation2003) showed that the model (Equation1(1)

(1) ) is always nonparametrically unidentifiable when d=2 and m=2. Under some mild regularity conditions, Hall and Zhou (Citation2003) proved that the two-component mixture model is nonparametrically identifiable for

. Kasahara and Shimotsu (Citation2014) discussed the identifiability of the number of components in multivariate mixture models in which each component distribution has independent marginals. Hettmansperger and Thomas (Citation2000) considered the situation where the elements of the vector

are, not only conditionally independent, but also identically distributed. Under such an assumption, the mixture density (Equation1

(1)

(1) ) can be rewritten as

(2)

(2)

They proposed an almost nonparametric approach to estimate the mixing proportions. Their key idea is to categorise data into 0 or 1 by setting an optimal cut point and then apply the EM algorithm to estimate the mixing proportion in the resulting binomial mixture models. Cruz-Medina, Hettmansperger, and Thomas (Citation2004) extended the work of Hettmansperger and Thomas (Citation2000) by transforming the observed vector into a count vector which leads to a multinomial mixture model.

To avoid possible loss of efficiency in categorising continuous data into count data, we propose a nonparametric approach to estimate the mixing proportions using empirical likelihood (EL). The EL, which was first introduced by Owen (Citation1988), is a nonparametric method of inference based on a data-driven likelihood ratio function. This nonparametric and likelihood-based approach has become one of the most effective statistical methods. See Owen (Citation2001) for a comprehensive review. As shown in Qin and Lawless (Citation1994), the EL is a prominent efficient tool in estimating parameters by incorporating estimating equations into constrained maximisation of the empirical likelihood function.

We first develop the proposed methodology for the 3-dimensional mixture models, and later on extend it to higher dimensions. For the multivariate mixture model, we propose linking the various moment estimating equations through the EL to provide a more efficient estimation. In the d-dimensional mixture model, there are moment estimating equations. When d is large, it is impracticable to search for the optimal solution. We propose a simple and intuitive bootstrap-like modification of the method. First we obtain K sets of three indices chosen randomly and without replacement from

, and then multiply the K nonparametric likelihoods pertinent to the chosen indices to obtain the profile empirical likelihood ratio function.

Our simulation results show that, when the parametric model is correctly specified, our EL estimators perform similarly to the parametric estimators. However, when the parametric model is misspecified, the EL estimators perform uniformly better than the parametric estimators and the almost nonparametric estimators.

The paper is organised as follows. The proposed empirical likelihood approach for multivariate mixture model and its theoretical properties are presented in Section 2. The extension to d-dimensional (d>3) mixtures is also presented. Simulation studies and real data analysis are provided in Section 3. Discussions are given in Section 4.

2. Methodology

We first discuss the methodology for the three-variate mixture model, and then extend to multivariate mixtures with higher dimensions.

2.1. Three-variate mixture model

Let be a 3-dimensional random vector with distribution function

and joint probability density function

(3)

(3) where

, and the component density functions

and

are different but unspecified. This model is a special case of model (Equation2

(2)

(2) ) with m=2 and d=3.

The parameters of interest are the expectations of the random variables and the mixing proportion π. Suppose and

are the expected values of the two components:

and that they satisfy

. We then have the following moment estimating equations

There are seven estimating equations in total with three unknown parameters

.

Let ,

, be i.i.d. observations from the multivariate mixture model (Equation3

(3)

(3) ), and

. According to Owen (Citation1988), the EL function based on the observed data is

(4)

(4) Let

. For the distribution

under study, feasible

's satisfy

(5)

(5) where

(6)

(6) with

,

Inference on

is usually made through their profile likelihood, which is obtained by maximising (Equation4

(4)

(4) ) with respect to

's subject to the constraints in (Equation5

(5)

(5) ). Up to a constant not depending on

, the resulting empirical log-likelihood is

where

is the Lagrange multiplier determined by

We can show that in a

neighbourhood of the true values of

,

is determined uniquely by an implicit function of

. We denote the maximum empirical likelihood estimators as

. Their asymptotic properties are given in the following theorem by Qin and Lawless (Citation1994). When

takes its true value

, we write

to be

for short.

Theorem 2.1

Under the regularity conditions specified in Qin and Lawless (Citation1994). As n goes to infinity, where

With in place of

, we can estimate the underlying distribution functions

and

. The asymptotic normality of the resulting empirical likelihood estimators can be established in a similar way to Theorem 2.1.

2.2. Multivariate mixtures with higher dimensions

We now extend the methodology discussed in the previous section to the case with d>3. Suppose the d-variate data , arise from the mixture model with the following mixture density

In principle, we can adopt the same approach as in the case d=3 in order to make inferences about

. When d is large, however, the number of estimating equations we must deal with is

which can be extremely large. Consequently, it is impractical to find the optimal solution to embrace that many estimating equations in the empirical likelihood setup.

We now propose a simple and intuitive solution to the high-dimensional problem. Let , and

(

) be all the possible samples of size 3 from

drawn by simple random sampling without replacement. We randomly select K sets from

by simple random sampling without replacement. Let

(

) be the resulting K index sets, and

denote

. We assume

for each k, and treat the data with different

as independent samples. The profile empirical likelihood ratio function of

based on the selected index sets is

where the function

is defined in (Equation6

(6)

(6) ).

Applying the method of constrained optimisation, we have

where

and

are the Lagrange multipliers. Setting the first derivative of G with respect to

to zero, we have

Multiplying both sides of the above equation by

and summing over i give

which leads to

. Therefore, the maximum of

is attained at

where the Lagrange multipliers

's are the solutions to

Putting

back and taking logarithm, we have the profile empirical log-likelihood ratio function of

,

We show that with probability tending to one, there must be a local maximum point in a very small neighbourhood of the true parameter value of

. Let

.

Lemma 2.1

Let be the true value of

. Suppose

and

and that

and

are non-degenerate distributions. Conditioning on

as

attains its maximum value at some point

with probability 1 in the interior of the ball

. Let

with

. Consequently,

and

satisfy

where

Lemma 2.1 implies that the proposed EL estimator is consistent. Based on Lemma 2.1, we further establish the asymptotic normality of

in the following theorem. This result is an extension of Theorem 1 in Qin and Lawless (Citation1994). It embraces the correlation structure of the selected elements within the random vectors.

Theorem 2.2

Assume the conditions of Lemma 2.1. Let

and

Conditioning on

as n goes to infinity,

converges in distribution to

where

If there are no common elements in and

, then

. Further, if d is quite large, and there are no common elements in any pair of

and

(

), then

, and

. At the other extreme, if

for

, then

, and

. Therefore, the second term in

stands for the efficiency loss due to the fact that some data are used more than once.

3. Simulation studies and data analysis

3.1. Simulation studies

We have carried out simulations to evaluate the finite-sample performance of the proposed empirical likelihood estimators (EL). For comparison, we have also considered two of its competitors: the maximum likelihood estimators (ML) under the multivariate normal mixture model, and the almost nonparametric estimators based on multinomial mixtures (Cruz-Medina et al. (Citation2004); MN for short). Both the ML and MN estimators can be calculated by the EM algorithm.

We generate data from the mixture model (Equation3(3)

(3) ). Different specifications of component distributions

and

are listed below:

(Normal mixtures)

and

are the density functions of

and

, respectively. Here

and

or 2.

(Non-central t mixtures)

and

are the density functions of

and

, respectively. Here

denotes a t-distribution with r degrees of freedom, non-centrality parameter

, and mean μ, where

. Here r=4,

, and

or 2.

(Chi-square mixtures)

and

are the density functions of

and

. Here

and

or 20.

Table 1. Biases (%) and standard deviations (%) (in parentheses) of different estimators based on 1,000 simulations with n=400. Data were generated from the multivariate mixture model with

and

and

being

being

and

and

, respectively. Here

, respectively. Here

,

,

or 2 and d=3 or 6.

or 2 and d=3 or 6.

Table 2. Biases (%) and standard deviations (%) (in parentheses) of different estimators based on 1,000 simulations with n=400. Data were generated from the multivariate mixture model with

and

and

being

being

and

and

, respectively. Here

, respectively. Here

,

,

or 2 and d=3 or 6.

or 2 and d=3 or 6.

Table 3. Biases (%) and standard deviations (%) (in parentheses) of different estimators based on 1,000 simulations with n=400. Data were generated from the multivariate mixture model with

and

and

being

being

and

and

, respectively. Here

, respectively. Here

,

,

or 20 and d=3 or 6.

or 20 and d=3 or 6.

Let us first examine Table , where the multivariate normal mixture model is correctly specified. As expected, the ML estimators have the smallest standard deviations in all cases and the smallest absolute biases in most cases. The proposed EL estimators perform very similarly to the ML estimators and both of them are uniformly better than the MN estimators. As goes further away from

, all estimators have decreasing standard deviations. This may be because the two component distributions in the mixture model also get further away from each other. When π increases from 0.2 to 0.8, the performances of all the three estimators for

are getting better, while those for

are getting worse. This is probably because as π increases, the multivariate normal mixture contains increasing information about

but decreasing information about

. All the three estimators for π have better performance when π lies in the middle than on the boundaries of its parameter space.

However, when data are generated from non-normal mixtures, the ML estimators lose their optimality. From Tables –, we can see that compared with the MN estimators, they have smaller absolute biases in some cases, but larger standard deviations in other cases. The proposed EL estimators perform reasonably well as they have uniformly smaller biases and standard deviations than the other two competitors.

If the mixing proportion is of primary interest, we see that when the multivariate normal mixture is correctly specified, the ML estimator again performs the best and the EL estimator has almost the same reasonable performance. Both of them perform better than the MN estimator. When the model is misspecified, the EL estimator has the best performance followed by the MN estimator. These two estimators usually win the ML estimator by a large amount. For example, in Table , when ,

, and d=3, all three estimators for π have similar standard deviations, however, the ML estimator has a much larger absolute bias (0.3044) compared with the EL estimator (0.0056), and the MN estimator (0.0042).

When the data dimension d increases from 3 to 6, the standard deviations of both the EL and MN estimators are getting smaller but they have different performances in bias. The absolute biases of the EL estimators are always getting smaller, while those of the ML and MN estimators are not the case. For example, in Table , when and

, the absolute bias of the MN estimator for

increases from 0.5742 to 0.6359 and that of the ML estimator for

increases from 0.0366 to 0.2254. By contrast, that of the EL estimators for both

decreases from

to

.

Overall, the EL method exhibits more robust performance than the MN and ML methods for different model specifications. When the normal mixture is correctly specified, the proposed EL estimators have comparable performance as the ML estimators. When the normal mixture is misspecified, the EL estimators perform uniformly better than the other two competitors.

3.2. Data analysis

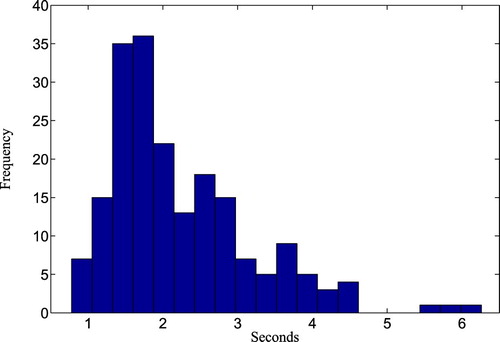

Reaction time (RT) task is one of the most common experimental methods in psychology to study individual differences. In this section, we apply our proposed empirical likelihood method to a RT data set which was analysed by Cruz-Medina et al. (Citation2004). In this experiment, 197 nine-year-old children were tested on mental rotation task in which a target figure was presented on the left and another one on the right. Children thus had to determine whether the second figure was identical to the first or simply a mirror image instead. The RT was recorded in milliseconds. There were 6 trials, and we considered these trials as d=6 repeated measurements. The time delays between trials were randomly chosen so that children would unable to anticipate the length of delays. The subsequent trials were then expected or assumed to be independent. We display only the histogram of the first measurement of the data in Figure ; those for the rest are similar. Cruz-Medina et al. (Citation2004) suggested using a two-component mixture to fit the heterogeneous RT distribution.

Since recorded in milliseconds, the RT values range from around 700 to 7000. For convenience, we re-scale them in seconds; the resulting numbers are no greater than 10. Although the mixing proportion π is of primary interest, we calculate the EL, MN and ML estimators for all the three parameters and

. The results are tabulated in Table . Based on these point estimates, we also provide 95% Wald interval estimates for all the three parameters with variances estimated by 200 bootstrap repetitions.

Table 4. Point and interval estimates of the EL, MN and ML methods for

and

and

. EL

. EL

: EL with

: EL with

; EL

; EL

, EL

, EL

, EL

, EL

: EL with K=8; MN

: EL with K=8; MN

: MN with cut points

: MN with cut points

being the deciles of the empirical distribution, which was suggested by Cruz-Medina et al. (Citation2004) for general use; MN

being the deciles of the empirical distribution, which was suggested by Cruz-Medina et al. (Citation2004) for general use; MN

: MN with cut points

: MN with cut points

, which was used by Cruz-Medina et al. (Citation2004) when they analysed this dataset.

, which was used by Cruz-Medina et al. (Citation2004) when they analysed this dataset.

As mentioned in Section 2.2, the EL estimator depends on the K randomly selected sets (

). Therefore, we shall obtain different EL estimates in general when applying the EL method more than one time if

. We apply the EL method with K=8 three times, and denote the results by EL

, EL

and EL

, respectively. In this example, d=6. When

, the results are denoted by EL

. We see that the EL estimates with K=8 are very close to those with K=20. This confirms that the proposed random selection strategy works very well. The EL proportion estimates are all around 0.7, and the EL estimates for

and

are around 1.6 and 2.9, respectively.

When applying the MN method, we need to determine the cut points 's. For general use, Cruz-Medina et al. (Citation2004) suggested using 10 cut points and choosing

to be the deciles of the empirical distribution of the data. The resulting MN method, denoted by MN

, is also the MN method compared in our simulation study. When analysing the RT data, Cruz-Medina et al. (Citation2004) used

. We denote the resulting MN method by MN

. It seems that the MN results depend to some extent on the choice of cutting points, because the MN

proportion estimate 0.52 is quite different from that of MN

0.59. In the meantime, the MN

point and interval estimates are both nearly equal to those of the ML method.

According to our simulation studies, the EL method exhibits more robust performance than the MN and ML methods. This indicates that the EL analysis results are more trustworthy than those of the other two methods.

4. Discussions

In this paper, we proposed an empirical likelihood-based estimation method for the parameters of a multivariate two-component mixture model. We discussed three-variate mixtures in detail and extended the methodology to high-dimensional mixtures by giving a permutation-like method which reduces the high-dimensional problem to a three-dimensional situation. The performance and efficiency of the method are demonstrated through a real data example as well as simulation studies. The simulation results show that the proposed method is quite efficient in comparison to both completely parametric and almost nonparametric methods in the literature. Furthermore, the proposed method can accommodate parameter estimation in high-dimensional mixtures by requiring estimation only in three dimensions.

The extension of our approach to mixtures with more than two components is valuable and interesting. Similar to the two-component mixture situation, one can use a set of moment conditions implied by the mixture model to identify and estimate mixing proportions and other component parameters. When the number of components grows, the number of unknown parameters increases. The improvement in the performance of the proposed approach in terms of better identification and higher efficiency may crucially depend on the choice of the set of moment conditions. We will consider it in future research.

Acknowledgements

The authors would like to thank the editor, the AE, and the referee for their insightful comments and suggestions. The authors would like to thank Dr Jing Qin for valuable discussions and many helpful comments.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes on contributors

Yuejiao Fu

Yuejiao Fu is an Associate Professor of Statistics in the Department of Mathematics and Statistics at York University, Canada. She received her PhD in Statistics in 2004 from the University of Waterloo. Her research interests include mixture models, empirical likelihood, and statistical genetics.

Yukun Liu

Yukun Liu is a Professor in the School of Statistics, Faculty of Economic and Management, East China Normal University, China. He received his PhD in Statistics in 2009 from Nankai University, China. His research interests include nonparametric and semiparametric statistics based on empirical likelihood and their applications in case-control data, capture-recapture data, selection biased data, and finite mixture models.

Hsiao-Hsuan Wang

Hsiao-Hsuan Wang received her PhD in Statistics in 2010 from York University, Canada. She is now a director in Model Quantification, Enterprise Risk Management, CIBC, Canada.

Xiaogang Wang

Xiaogang Wang is a Professor in Statistics in the Department of Mathematics and Statistics of York University. He is also holding an adjunct position as a senior research fellow at the Institute of Data Science of Tsinghua University in Beijing. He received his PhD in Statistics from the University of British Columbia in 2001. His current research is on statistical analysis of complex data in health and life sciences.

References

- Cruz-Medina, I. R., Hettmansperger, T. P., & Thomas, H. (2004). Semiparametric mixture models and repeated measures: The multinomial cut point model. Journal of the Royal Statistical Society: Series C (Applied Statistics), 53, 463–474. doi: 10.1111/j.1467-9876.2004.05203.x

- Hall, P., & Zhou, X. H. (2003). Nonparametric estimation of component distributions in a multivariate mixture. The Annals of Statistics, 31, 201–224. doi: 10.1214/aos/1046294462

- Hettmansperger, T. P., & Thomas, H. (2000). Almost nonparametric inference for repeated measures in mixture models. Journal of the Royal Statistical Society. Series B, 62, 811–825. doi: 10.1111/1467-9868.00266

- Kasahara, H., & Shimotsu, K. (2014). Nonparametric identification and estimation of the number of components in multivariate mixtures. Journal of the Royal Statistical Society. Series B, 76(1), 97–111. doi: 10.1111/rssb.12022

- Lindsay, B. G. (1995). Mixture models: Theory, geometry and applications. Hayward: Institute for Mathematical Statistics.

- McLachlan, G. J., & Peel, D. (2000). Finite mixture models. New York: Wiley.

- Owen, A. B. (1988). Empirical likelihood ratio confidence intervals for a single functional. Biometrika, 75, 237–249. doi: 10.1093/biomet/75.2.237

- Owen, A. B. (2001). Empirical likelihood. New York: Chapman & Hall/CRC.

- Qin, J., & Lawless, J. (1994). Empirical likelihood and general estimating equations. The Annals of Statistics, 22, 300–325. doi: 10.1214/aos/1176325370

- Thomas, H., & Horton, J. J. (1997). Competency criteria and the class inclusion task: Modeling judgments and justifications. Developmental Psychology, 33, 1060–1073. doi: 10.1037/0012-1649.33.6.1060

- Titterington, D. M., Smith, A. F. M., & Makov, U. E. (1985). Statistical analysis of finite mixture distributions. New York: Wiley.

Appendix

Since both Lemma 2.1 and Theorem 2.2 are established conditionally on the K selected sets (

), for convenience we regard the K selected sets as fixed sets throughout the proofs. Note that

's are i.i.d. random vectors for fixed k and varying i, while they are not independent for fixed i and varying k.

Proof

Proof of Lemma 2.1

We consider , which can be rewritten as

with

. From Qin and Lawless (Citation1994), we can show that

and

uniformly about

, for each

. By Taylor's expansion, we have

where c is the smallest eigenvalue of

Similarly,

Since

is a continuous function of

when

belongs to the ball

, as n is large,

must have a maximum point

in the interior of this ball such that

Proof

Proof of Theorem 2.2

Taking derivatives about and

, we have

for , and

is the Kronecker delta. Expanding

and

at

, we have

where .

It follows from the above equations that

(A1)

(A1) Here

where

and

.

Define and

, where ⊗ is the Kronecker product operator. Under the conditions of Theorem 2.2, as

, it can be verified that

and therefore

where

In addition,

converges in distribution to

, where

Therefore,

. Since the inverse of

is

where

, we further have

which converges in distribution to

with

(A2)

(A2) With some algebra, it can be seen that

and

, which implies

This finishes the proof of Theorem 2.2.