?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

In mediation analysis, the exposure often influences the mediating effect, i.e., there is an interaction between exposure and mediator on the dependent variable. When the mediator is high-dimensional, it is necessary to identify non-zero mediators () and exposure-by-mediator (

-by-

) interactions. Although several high-dimensional mediation methods can naturally handle

-by-

interactions, research is scarce in preserving the underlying hierarchical structure between the main effects and the interactions. To fill the knowledge gap, we develop the XMInt procedure to select

and

-by-

interactions in the high-dimensional mediators setting while preserving the hierarchical structure. Our proposed method employs a sequential regularization-based forward-selection approach to identify the mediators and their hierarchically preserved interaction with exposure. Our numerical experiments showed promising selection results. Furthermore, we applied our method to ADNI morphological data and examined the role of cortical thickness and subcortical volumes on the effect of amyloid-beta accumulation on cognitive performance, which could be helpful in understanding the brain compensation mechanism.

1. Introduction

A mediation model examines how an independent variable or an exposure () affects a dependent variable (

) through one or more intervening variables or mediators (

) (Baron and Kenny Citation1986; Daniel et al. Citation2015; Holland Citation1988; Imai et al. Citation2010; Imai and Yamamoto Citation2013; MacKinnon Citation2008; Pearl Citation2013; Preacher and Hayes Citation2008; Robins and Greenland Citation1992; Sobel Citation2008; VanderWeele T Citation2015; VanderWeele T and Vansteelandt Citation2014; VanderWeele TJ Citation2011). In the mediation mechanism, we often observe that

influences the mediating effect of

on

, i.e., there is an interaction between

and

on the dependent variable (

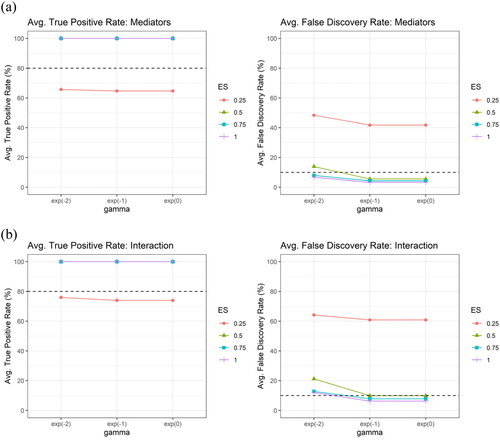

), as represented in .

Figure 1. A graphical representation of the mediation effect of the intervening variable on the relationship between the independent variable

and the dependent variable

(black arrows, upper triangular part) and the interaction effect between

and

(orange arrow).

A typical multivariate mediation model with exposure-by-mediator interactions takes the following form in (1). In a general context, let represent the independent variable or the exposure of interest, let

represent the dependent variable or the outcome of interest, let

where

represent

potential mediator variables (

), and let

represent the interaction term between the exposure variable

and the potential mediator variable

Denote

as the

vector of observed exposure measurements from

subjects,

as the

vector of observed outcome responses from

subjects, and

as the

matrix of

observed potential mediator variables from

subjects. For each subject

(1)

(1)

where

and

Subjects are assumed to be independent and identically distributed (i.i.d.). Any

with non-zero

and

coefficients is considered to be a mediator. Any

with non-zero

coefficient is considered to have an interaction effect.

In the single mediator case, T. J. VanderWeele (Citation2014) proposed the four-way decomposition method to handle the -by-

interaction, which united methods that attribute effects to interactions and methods that assess mediation and was used in literature (e.g., Wang Y et al. Citation2019) to understand the mediated interaction effect. This decomposition idea was later extended to the few mediators case, such as in Bellavia and Valeri (Citation2018).

New mediation methods have also been developed to address the issue of high dimensions in the mediators. Some used regularization-based methods (Li et al. Citation2021; Serang et al. Citation2017; Zhao Y and Luo Citation2016). Others used hybrid methods such as the combined filter method with coordinate descent algorithm (van Kesteren and Oberski Citation2019), screening and regularization (Luo et al. Citation2020; Schaid and Sinnwell Citation2020; Zhang et al. Citation2016, Citation2021), and dimension reduction and regularization (Zhao Y et al. Citation2020).

Nevertheless, for models involving interactions with high-dimensional data, it is important to preserve the underlying hierarchical structure between the main effects and the interactions, because models with interactions but without corresponding marginal effects can be difficult to interpret in practice (Chipman Citation1996; Choi et al. Citation2010; Hao and Zhang Citation2017; Nelder Citation1977; Yuan et al. Citation2009; Zhao P et al. Citation2009). This remains the case in the mediation model with high-dimensional mediators. In regression settings, maintaining the hierarchical structure between the main effects and the interactions requires that the interaction terms are only allowed to be included in the model if the corresponding main effects are also present in the model (Bien et al. Citation2013; Hao et al. Citation2018; Zhao P et al. Citation2009). Take as an example the model with interactions formulated as where

the hierarchical structure is considered preserved if for any

term that has a non-zero coefficient, its consisting variables

and

also have non-zero coefficients. Such concept is also referred as “heredity”, “marginality principle”, and being “hierarchically well-formulated” in previous literature, such as in Hamada and Wu (Citation1992), Chipman (Citation1996), Nelder (Citation1977), and Peixoto (Citation1987) (Bien et al. Citation2013). Similarly, in the mediation settings as in (1), we consider the hierarchical structure between the main effects and interactions to be preserved if whenever there is an interaction effect between the exposure

and

the corresponding

has to be a mediator; in other words, whenever the coefficient for

is non-zero, the corresponding

should also have non-zero

and

coefficients.

To address the issue of hierarchical structure, in regression settings, regularization methods with different penalty functions (Bien et al. Citation2013; Choi et al. Citation2010; Yuan et al. Citation2009; Zhao P et al. Citation2009) have been developed to aid the interaction selection, but they become infeasible as the number of independent variables increases (Hao et al. Citation2018). To address such limitation, Hao et al. (Citation2018) proposed an efficient interaction selection method for high-dimensional data, the regularization algorithm under marginality principle (RAMP), in which possible interaction terms are sequentially added based on the current main effects.

Compared to the regression settings, it is more challenging to preserve the hierarchical structure between the main effects and interactions in the mediation analysis because model selection now involves two models: on

and

on

Although the high-dimensional mediation methods mentioned earlier may naturally handle

-by-

interactions, research is scarce in preserving such hierarchy. Therefore, in this paper, we aim to identify the mediators and the exposure-by-mediator interactions in the high-dimensional mediators setting while addressing the underlying hierarchical structure between the main effects and interactions.

This identification of mediators and their hierarchically preserved interaction with exposure can be useful in explaining how the brain reacts during the process of brain-related changes and in understanding the brain compensation mechanism, defined as the phenomenon that the effect of the brain on the outcome is altered. For example, under the context of cognitive aging and brain pathology, where we have cognitive or clinical performance as the outcome, aging or pathology as the exposure, and certain brain measures such as cortical thickness as the potential mediators, we often observe that thinner cortical thickness () is associated with the worse cognitive performance (

), and further, in the presence of some pathology (

), we observe the opposite association or no association anymore. In other words, the brain acts differently on the outcome because of the exposure (i.e., there is

-by-

interaction), which illustrates the brain compensation mechanism based on our definition. Or alternatively, we may think that the brain tries to compensate for the loss in cognition due to the pathology, which is also indicative of the idea of compensation.

Our proposed method employs a sequential regularization-based forward-selection approach to identify the mediators and their interactions with exposure while preserving the hierarchical structure between them. Specifically, we propose an adaptive forward-selection regularization strategy for the preservation of hierarchical structure. The main idea of the process is that while we generate a series of models through a sequence of decreasing tuning parameters (e.g., models from

tuning parameters; we denote the generation of

-th model as the

-th step, where

), for each tuning parameter (i.e., at each step) we impose penalization on a subset of coefficients based on the identified mediators and interactions from the model generated with the previous tuning parameter (i.e., from the previous step) to enforce the hierarchical structure, and finally the optimal model is determined as the one with the smallest Haughton’s Bayesian information criterion (HBIC) among all the generated models. The rule of this adaptive penalization is that, starting from a model with no mediators and no interactions (i.e., penalize all), if a mediator is identified but its corresponding interaction term is not, we will include the identified mediator in the next step (i.e., not penalize it); if both the mediator and its corresponding interaction are identified, we will include both in the next step (i.e., not penalize both); if an interaction is identified, but its involving

is not, we will not only include the interaction but also include the involving

in the next step (i.e., not penalize both) to preserve the hierarchical structure. Recently, C. Wang et al. (Citation2020) proposed a method designed specifically for high-dimensional compositional microbiome mediators, in which the selection of the interaction between treatment and mediators was considered. In contrast to their method, our method focuses on the continuous mediators, which do not have any sum constraint on the mediators as in the microbiome data. In addition, instead of adding overall penalties to the objective function to preserve the hierarchical structure as in C. Wang et al. (Citation2020), we adopt an adaptive way to update the penalties and include the potential mediator if either its interaction with the exposure is identified or its main effect is identified in the previous step.

In this paper, we first introduced our proposed algorithm in Section 2. Then, we presented the simulation results in Section 3. Finally, we applied our method to the real-world human brain imaging data and examined the role of cortical thickness and subcortical volumes on the relationship between amyloid beta accumulation and cognitive performance in Section 4.

2. Methods

We aim to identify the mediators () and their interactions with exposure (

) while preserving the hierarchical structure between the main effects and interaction effects. The multivariate mediation model that we consider takes the form in model (1), introduced in Section 1. Under the Gaussian assumption of the i.i.d. error terms, the log-likelihood is given as

(2)

(2)

where

We denote be the index set for all of the

potential mediators,

be the index set for the mediators identified in step

where step

was introduced in the introduction and refers to the generation of the

-th model using the corresponding

-th tuning parameter defined in details below in 1. Initialization,

be the index set for the remaining

variables (out of

variables),

be the index set for the involving

variables in the interaction terms identified in step

and

be the index set for the involving

variables in remaining interaction terms (out of

variables).

Our algorithm is described as follows. provides a summary of the algorithm.

Initialization

First, we standardize the data. Specifically, we standardize and each column of

by subtracting its mean and dividing by its standard deviation, so that each variable has a mean of zero and a standard deviation of one. We will use the standardized data in our proposed algorithm.

Next, using the standardized data, we generate an exponentially decaying sequence, which will be served as regularization parameters for model tuning. Particularly, we compute and

for some small

(e.g., 0.05), where

is the matrix that column-wisely combines

and

Based on the determined

and

we generate an exponentially decaying sequence with length

(e.g., 20),

Such generation process for tuning parameters is typical in linear regression literature (e.g., Friedman et al. Citation2010; Hao et al. Citation2018) and is modified particularly for our mediation model with interaction terms by additionally including

and

in the computation of

Also, we start with

and

Note that

and

after the data standardization.

2. Find regularization path

For each we minimize the following objective function (3), in the form of twice negative log-likelihood (after removing constant terms that do not depend on parameters of interest) plus penalties, with respect to

where

is defined as

and the upper-diagonal elements of

is defined (in a vector form) as

(3)

(3)

All the terms consist of the penalty terms. Note that, as it is of no practical meaning to consider mediators and exposure-by-mediator interactions in the mediation analysis without an exposure, we will always include

in the path-b model and thus will never penalize

Also, note that each

yields a model and that from the total

models the best model will be determined based on HBIC (details in the following part: 3. Model selection). In addition, we use a decreasing sequence of tuning parameters (

) for coefficients in that we aim to start with a base model with all the

coefficients being zeros and we intend to include more variables as we relax the penalization via smaller tuning parameters.

To preserve the hierarchical structure between main effects and interactions in the mediation model (1), we propose an adaptive forward-selection regularization strategy. In brief, as shown in expression (3), penalization for coefficients at each step is imposed only on a subset of the coefficients

in model (1), which is determined based on the mediators and interactions identified from the model in step

(or,

and

).

The adaptive penalization operates as follows. (i) If a variable is identified as a mediator but its corresponding interaction term

is not identified, then we will include this

variable as the mediator in the next step but not its corresponding interaction—we will penalize all the

terms except for the

and

coefficients. (ii) If both a mediator

and its corresponding interaction

are identified, we will include both in the next step—we will not penalize the corresponding

and

coefficients. (iii) If an interaction

is identified, but its involving

is not identified as a mediator, then we will not only include the interaction

but also include the involving

in the next step to enforce the main effect to be included in the model to preserve the hierarchical structure between the main effects and interaction effects; in other words, we will not only not penalize the

coefficient of this interaction but also not penalize the

and

coefficients of its involving

to enforce the hierarchical structure.

Putting (i), (ii), and (iii) all together, if a variable is selected as a mediator in the previous step (i.e., the index

is in

), then we will not penalize the corresponding

and

at the current step; if a

variable is selected as an interaction in the previous step (i.e., the index

is in

), then we will not only not penalize the corresponding

but also not penalize the corresponding

and

to force the main effect to be included into the model so that the hierarchical structure is preserved. In other words, we will penalize

and

coefficients of a

variable if it is neither identified as a mediator nor

is identified as the interaction, and we will penalize

coefficients of a

term if it is not identified as the interaction. These altogether form the penalty terms for coefficients

in the objective function (3).

We utilize an iterative estimation approach to estimate the nuisance parameters and

and the coefficients

Denote

Given

and

we can rewrite the log-likelihood related part in the objective function (3) into the quadratic form of

as

where

(defined in 1.Initialization), and

and

can be estimated by minimizing

where

(explained previously),

and

Given

the nuisance parameter

can be estimated as the sample variance of the residuals from the path-b model, and the nuisance parameter

can be estimated as a sparse matrix controlled by a regularization parameter (

) from the variance-covariance matrix of the residuals in the path-a model. We imposed sparsity constraints on

considering the real-data application. Other penalization (e.g., L2) methods may also be an option. After we initialize

(and

and

correspondingly), we will iteratively update the estimation until convergence.

In the current implementation, the estimation of is implemented with the glmnet R package (Friedman et al. Citation2010) and the estimation of the nuisance parameter

is implemented with the QUIC R package (Hsieh et al. Citation2014), which estimates a sparse inverse covariance matrix using a combination of Newton’s method and coordinate descent and controls the sparsity via a regularization parameter. In our numerical analysis, we noticed that the choice of tuning parameter of

(

) did not affect the model selection results significantly, while conducting a grid search for both tuning parameters (

and

’s) can significantly increase computing time (see Appendix A). Thus, the value of the regularization parameter for the

estimation is empirically chosen (following Hsieh et al. Citation2014) and is fixed at

in our simulation study and real-data application. Also, by fixing the sparsity of

we will be able to get more comparable and stable HBIC scores for model selection, which will be introduced shortly in the following 3. Model selection part, in the sense that we are looking for the best model in terms of

given the same sparsity level of

Then, we update the selected mediator set and the selected interaction set correspondingly based on the estimated coefficients. The mediators identified (i.e., the variables with non-zero

and

coefficients) at current step

form the current selected mediator set (i.e., their indices

’s form

). The interactions identified (i.e., the

variables with non-zero

coefficient) at current step

form the current selected interaction set (i.e., their indices

’s form

).

3. Model selection

Next, for each step we compute HBIC (Haughton Citation1988) between the current model and the null model. Current model is the model generated at the current step

with the estimated

coefficients, whereas the null model is defined as the model with all coefficients being zero. HBIC for two model comparison can be computed as

where

is the log-likelihood function of the model

which can be computed using EquationEq. (2)

(2)

(2) ,

is the number of parameters of the model

consisting of the number of non-zero

coefficients and the number of non-zero upper-diagonal elements in

(Gao et al. Citation2012), and

is the sample size (Bollen et al. Citation2014). HBIC is chosen to evaluate the model performance instead of cross-validation because cross-validation may not perform well with limited sample size. Previous studies have shown that HBIC stands out in the selection of measurement models (Haughton et al. Citation1997; Lin et al. Citation2017); among the information criterion (IC) measures, the scaled unit information prior BIC (SPBIC) and the HBIC have the best overall performance in choosing the true full structural models (Bollen et al. Citation2014; Lin et al. Citation2017); SPBIC and HBIC performed the best in selecting path models and were recommended for model comparison in structural equation modeling (SEM) (Lin et al. Citation2017); HBIC might be preferable to SPBIC for its simplicity in computation (Lin et al. Citation2017). Among the

generated models, we select the model with the smallest HBIC as the final model.

Note that in the initialization step, should be set to ensure that we start with the base model—a model with a non-zero

coefficient and zero

coefficients. In occasional cases, the computed

can be too small to give a base model as the starting point. To account for that, our algorithm gradually enlarges

by a factor (1.5 by default, which is a 50% increase from the previous one) until we start with a base model.

Table 1. XMInt algorithm summary.

3. Simulation

In this section, we performed simulation under two settings of mediators—independent mediators and correlated mediators with correlation structure from the ADNI data to mimic real-world situations. More details about the ADNI data are provided in Section 4. The latter setting is included as it is important to evaluate the performance of shrinkage-based model selection when the dependency among variables is taken into consideration, as discussed in previous literature (Bühlmann and Van De Geer Citation2011; Wainwright Citation2009).

For each subject, the exposure was independently generated from the standard normal distribution. The potential mediators were generated based on the path-a model in (1) with We used

under the setting of independent mediators, and used the correlation structure obtained from the ADNI data (details in Section 4) as

under the setting of correlated mediators. The outcome was generated based on the path-b model in (1) with

and

We set the first three

variables (

) to be the true mediators (i.e., having non-zero

and

coefficients) and set

to be the true exposure-by-mediator interaction term (i.e., having non-zero

coefficient). We let the effect size (

) represent the value of

of the truth, which are

in our case.

In the simulation with independent mediators, we set the sample size to be the number of potential mediators to be

the effect size to be

and all other coefficient values to be 0. In the simulation with correlated mediators, we set the sample size to be

, the number of potential mediators to be

which is the same as in the data analysis in Section 4, the effect size to be

and all other coefficient values to be 0. Also, we used the default

and

to generate the

sequence. The regularization parameter for

estimation (

) is fixed at

The final model is given by the

that minimizes HBIC.

Under each simulation scenario, to evaluate the model selection performance, we calculated the average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs for the mediator and the interaction, respectively. For each simulation run, the TPR was computed as the proportion of the truth that was selected by the algorithm. For example, the TPR for the mediator is and the TPR for the interaction is

The FDR was computed as the proportion of the falsely selected non-truth variables from the selection. That is, the FDR for the mediator is

and the FDR for the interaction is

Currently, there is no direct comparison method available. Nevertheless, we used a general LASSO estimation without imposing adaptive penalties for comparison, for both settings of mediators.

In addition to the TPR and the FDR, to assess the performance of preserving the hierarchical structure, we calculated the average percentage of selected interactions without hierarchy across the 100 simulation runs under each simulation scenario. Specifically, for each simulation run, the percentage of selected interaction without hierarchy was computed as the proportion of the selected interaction that did not have corresponding mediator selected:

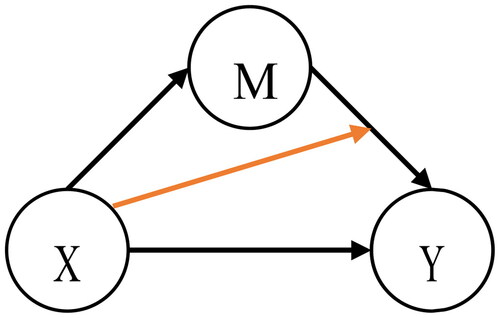

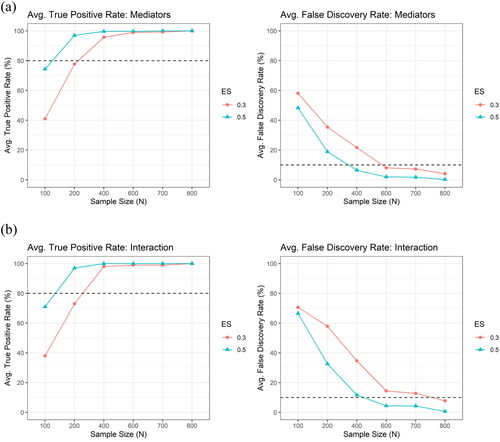

shows the average TPR and the average FDR across the 100 simulation runs by the sample size, the number of potential mediators and the effect size, for (a) the mediator and for (b) the interaction, respectively, under the setting of independent mediators, using XMInt. By the design of our algorithm, the hierarchical structure between interactions and mediators is preserved (the percentage of interaction without hierarchy were all zeros). Our results showed that the average TPR generally increased with effect size, for both the mediator and the interaction. When the effect size and the sample size were moderate to large ( at least 200,

at least 0.5), our algorithm can almost 100% of the time identify all of the three true mediators and the true interaction term (TPR close to 100%) and it is not likely to falsely select the non-truth (FDRs controlled under approximately 5% for mediators and 10% for interactions, on average). Also, we observed that by increasing the sample size, we may be able to make up for a small effect size to some degree and maintain a reasonably high TPR.

Figure 2. The average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs by the sample size (), the number of potential mediators (

) and the effect size (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of independent mediators, using XMInt.

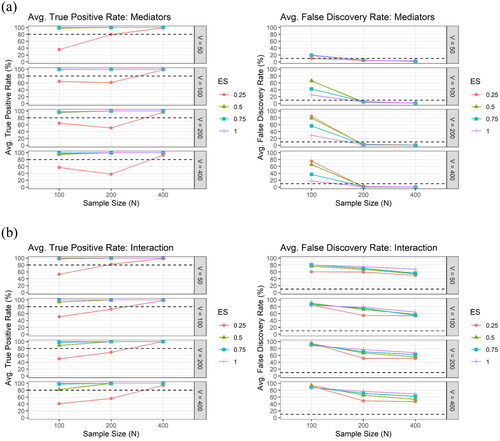

shows the average TPR and the average FDR across the 100 simulation runs by the sample size and the effect size, for (a) the mediator and for (b) the interaction, respectively, under the setting of correlated mediators with correlation structure obtained from Alzheimer’s Disease Neuroimaging Initiative (ADNI) data, using XMInt. By the design of our algorithm, the hierarchical structure between interactions and mediators is preserved (the percentage of interaction without hierarchy were all zeros). When the sample size was large ( at least 600), regardless of the effect size, our algorithm can almost 100% of the time identify all of the three true mediators and the true interaction term (TPR approximately 100%) and it is not likely to falsely select the non-truth (FDRs well controlled under approximately 5% for mediators and 10% for interactions, on average).

Figure 3. The average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs by the sample size () and the effect size (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of correlated mediators with correlation structure obtained from the ADNI data, using XMInt. The number of potential mediators (

) is 89, which is the same as in the ADNI data.

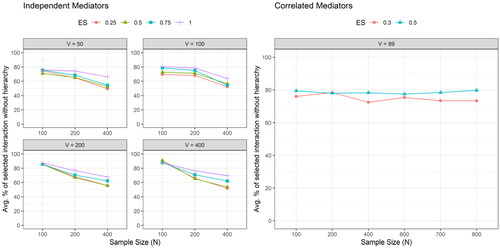

By comparison, the hierarchical structure cannot be preserved if we use merely LASSO without adaptive penalties for both settings of mediators—the percentages of selected interaction without hierarchy were high, as shown in . Furthermore, as shown in and , the FDRs for the interactions were high across all simulation scenarios under both settings of mediators, which indicates that it is likely to falsely select many non-truth interactions using LASSO only.

Figure 4. The average percentage of selected interaction without hierarchical structure across the 100 simulation runs by the sample size (), the number of potential mediators (

) and the effect size (

), under the setting of independent mediators (left) and the setting of correlated mediators with correlation structure obtained from the ADNI data (right), using LASSO only.

Figure 5. The average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs by the sample size (), the number of potential mediators (

) and the effect size (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of independent mediators, using LASSO only.

Figure 6. The average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs by the sample size () and the effect size (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of correlated mediators with correlation structure obtained from the ADNI data, using LASSO only. The number of potential mediators (

) is 89, which is the same as in the ADNI data.

4. Data Application

We applied our algorithm to the human brain imaging data from the ADNI, a longitudinal multicenter study designed for the early detection and tracking of Alzheimer’s disease. Specifically, we assessed the role of cortical thickness and subcortical volumes on the relationship between amyloid beta accumulation and cognitive abilities at baseline. We used the baseline data from the participants without dementia—diagnosed as mild cognitive impairment (MCI; ) or cognitively normal (CN;

). Participants’ characteristics are displayed in .

Table 2. Participants’ characteristics at baseline (ADNI dataset).

The data were downloaded from the ADNI database (http://adni.loni.usc.edu). The initial phase (ADNI-1) recruited 800 participants, including approximately 200 healthy controls, 400 patients with late MCI, and 200 patients clinically diagnosed with AD over 50 sites across the United States and Canada and followed up at 6- to 12-month intervals for two to three years. ADNI has been followed by ADNI-GO and ADNI-2 for existing participants and enrolled additional individuals, including early MCI. To be classified as MCI in ADNI, a subject needed an inclusive Mini-Mental State Examination score of between 24 and 30, subjective memory complaint, objective evidence of impaired memory calculated by scores of the Wechsler Memory Scale Logical Memory II adjusted for education, a score of 0.5 on the Global Clinical Dementia Rating, absence of significant confounding conditions such as current major depression, normal or near-normal daily activities, and absence of clinical dementia.

All studies were approved by their respective institutional review boards and all subjects or their surrogates provided informed consent compliant with HIPAA regulations.

The exposure of interest is amyloid beta accumulation, which is a univariate variable. Cerebrospinal fluid (CSF) amyloid beta (A-42) concentrations were measured in picograms per milliliter (pg/mL) by ADNI researchers using the highly automated Roche Elecsys immunoassays on the Cobas e601 automated system following extensive validation studies (Bittner et al. Citation2016; Shaw et al. Citation2016). The CSF data used in this study were obtained from the ADNI files UPENNBIOMK9_04_19_17.csv. Detailed descriptions of CSF acquisition including lumbar puncture procedures, measurement, and quality control procedures were presented in http://adni.loni.usc.edu/methods/.

The outcome variable of interest is the memory composite score, which is a univariate variable. We used ADNI’s pre-generated cognitive composite scores that were constructed based on bi-factor confirmatory factor analyses models (Crane et al. Citation2012). Composite memory scores were derived using the Rey Auditory Verbal Learning Test, AD Assessment Schedule-Cognition, Mini-Mental State Examination, and Logical Memory.

MPRAGE T1-weighted MR images were used in this analysis. Cross-sectional image processing was performed using FreeSurfer Version 7.0.1. Region of interest (ROI)-specific cortical thickness and volume measures were extracted from the automated FreeSurfer anatomical parcellation using the Desikan-Killiany Atlas (Desikan et al. Citation2006) for cortical regions and ASEG (Fischl et al. Citation2002) for subcortical regions. We considered 89 potential mediators that were derived from 68 cortical thickness measures from both left and right hemispheres, 5 corpus callosum subregion volume measures, and 16 subcortical volume measures from both left and right hemispheres (Thalamus, Caudate, Putamen, Pallidum, Hippocampus, Amygdala, Accumbens, VentralDC). This selection was made because these measures are among the most commonly used measures in AD research. We did not include ventricles and non-brain areas in our analysis as they are surrogate measures of brain atrophy and their volumes do not carry significant biological meaning in this context. While we could use high-resolution measures such as vertex-level cortical thickness measures or voxel-based morphometry (VBM), however, functional data analysis or appropriate dimension reduction is recommended since those measures tend to exhibit much higher correlations. Moreover, it is worth noting that ADNI did administrate other modalities such as DWI, resting fMRI, and ASL, but only to a subset of the participants as per their protocol. Thus, to maintain the largest possible sample size, we decided to use the T1-based measures. Since the volume measures are typically confounded by brain size, we divided them by the estimated total intracranial volume to adjust for the potential confounding effect. Furthermore, as all these 89 measures are often correlated with sex and age, we regressed them on sex and age and used the residuals as our potential mediators.

After the data preparation, data standardization was performed on the exposure, the outcome and the potential mediators such that each of them had a mean of zero and a standard deviation of one, and we used the standardized data in the data analysis. We used the default and

to generate the

sequence. The regularization parameter for

estimation (

) is fixed at

The final model is given by the

that minimizes HBIC.

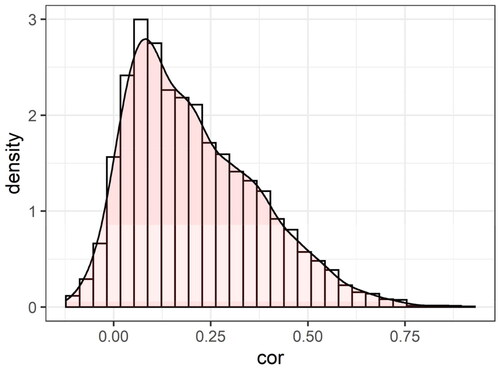

Note that in the simulation of correlated mediators in Section 3, the correlation structure was obtained from the ADNI data. Particularly, the used in the simulation setting of correlated mediators was the correlation matrix of the residuals of the potential mediators after regressing out the effect of the exposure using path-a model, after the data standardization. The average correlation was around 0.2. The distribution of the correlations is also shown in .

Figure 7. The distribution of the correlations between the residuals of the 89 potential mediators after regressing out the effect of the exposure using path-a model, after the data standardization, in the ADNI dataset.

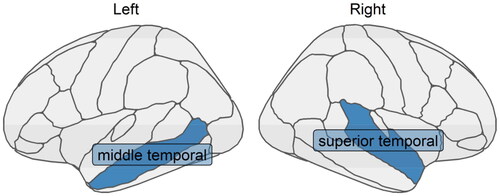

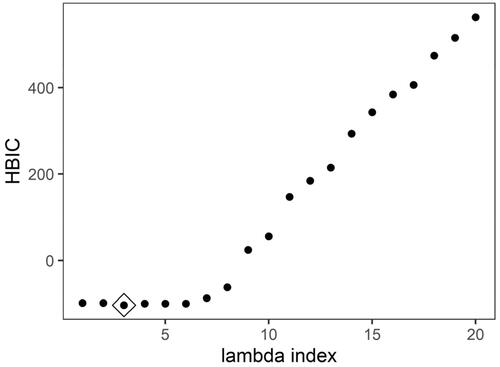

Our algorithm identified mediated interaction effects of cortical thickness from two temporal regions: the middle temporal region in the left hemisphere and the superior temporal region in the right hemisphere, as shown in . Coefficients are displayed in and the HBIC curve is in . In other words, the cortical thickness of the two identified regions mediates the relationship between amyloid beta accumulation and cognitive abilities, and further, their effect on cognition is influenced by amyloid beta accumulation. The direction of the interaction can also be explored using the four-way decomposition idea proposed in T. J. VanderWeele (Citation2014), through the regression-based counterfactual approach (Valeri and VanderWeele Citation2013; VanderWeele T and Vansteelandt Citation2014) to estimate the joint effect of multiple mediators implemented in CMAverse R package (Shi et al. Citation2021). Under the counterfactual independence assumptions, it showed that when there is no amyloid beta accumulation, the thinner cortex in the middle temporal region of the left hemisphere and the superior temporal region of the right hemisphere is associated with worse cognitive performance (); however, when there is more amyloid beta accumulation involved, such mediation effect disappears—the thinner cortex is no longer associated with worse cognition (

). These altogether illustrate the brain compensation mechanism during this process of brain-related changes.

Figure 8. Two cortical thickness measures (one in the middle temporal region in the left hemisphere and the other in the superior temporal region in the right hemisphere) that were identified to have the mediated interaction effects on the relationship between amyloid beta accumulation and memory using XMInt on ADNI dataset.

Figure 9. HBIC curve for model selection in ADNI data application for each with tuning parameter for

fixed at

Table 3. Coefficients of selected mediators and exposure-by-mediator interactions in ADNI data application.

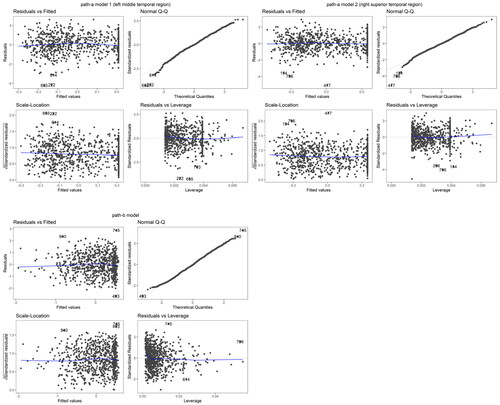

We also performed the model diagnosis to justify the normal assumptions on the model distribution. We fitted the mediation model with the selected two mediators (using the standardized data). As shown in , Q-Q plots did not indicate noticeable violation of the normal assumptions on the mediators and the outcome.

5. Discussion

In this paper, we proposed the XMInt algorithm to identify the mediators and the exposure-by-mediator interactions in the high-dimensional mediators setting while preserving the underlying hierarchical structure between the main effects and the interaction effects. A key feature of this algorithm is its ability to preserve the hierarchical relationship between the mediators and the exposure-by-mediator interactions. We evaluated the performance of our algorithm under various conditions to investigate when it demonstrates optimal model selection, and two simulation settings of mediators were considered—independent mediators and correlated mediators with correlation structure obtained from the ADNI data—to better mimic real-world situations. Our simulation results revealed that for the independent mediators, when the effect size and the sample size are moderate to large, our algorithm was able to correctly identify the true mediators and interaction almost all the time, without falsely selecting many non-truth variables; a similar conclusion holds for the correlated mediators when the sample size is large, regardless of the effect size. To illustrate our algorithm, we also applied our method to real-world human brain imaging data. Two cortical thickness measures, specifically, one in the middle temporal region in the left hemisphere and the other in the superior temporal region in the right hemisphere, were identified to have mediated interaction effects on the relationship between amyloid beta accumulation and memory abilities. This identification of temporal thickness as a mediator is consistent with the existing literature (e.g., Villeneuve et al. Citation2014). A follow-up four-way effect decomposition (VanderWeele TJ Citation2014) revealed that in the absence of amyloid beta accumulation, reduced cortical thickness in these two identified regions is associated with worse cognitive performance, but with increased amyloid beta accumulation, the mediation effect in these two regions disappears, which illustrates a potential brain compensation mechanism. One limitation is that when the number of potential mediators or the sample size becomes larger, it may take a longer time to run the algorithm, as the computation involving becomes slower. In summary, our algorithm works well and can be used as an effective tool to identify the mediated interaction with preserved hierarchical structure in the mediation analysis.

Software

The XMInt R package is available at https://github.com/ruiyangli1/XMInt.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu), under the data use agreement by ADNI. The simulation experiment data example is available at https://github.com/ruiyangli1/XMInt. As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in the analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/ uploads/how_to_apply/ADNI_Acknowledgement_List.pdf.

Additional information

Funding

References

- Baron RM, Kenny DA. 1986. The moderator–mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. J Pers Soc Psychol. 51(6):1173–1182.

- Bellavia A, Valeri L. 2018. Decomposition of the total effect in the presence of multiple mediators and interactions. Am J Epidemiol. 187(6):1311–1318.

- Bien J, Taylor J, Tibshirani R. 2013. A lasso for hierarchical interactions. Ann Stat. 41(3):1111–1141.

- Bittner T, Zetterberg H, Teunissen CE, Ostlund RE Jr, Militello M, Andreasson U, Hubeek I, Gibson D, Chu DC, Eichenlaub U, et al. 2016. Technical performance of a novel, fully automated electrochemiluminescence immunoassay for the quantitation of β-amyloid (1–42) in human cerebrospinal fluid. Alzheimers Dement. 12(5):517–526.

- Bollen KA, Harden JJ, Ray S, Zavisca J. 2014. BIC and alternative Bayesian information criteria in the selection of structural equation models. Struct Equ Model. 21(1):1–19.

- Bühlmann P, Van De Geer S. 2011. Statistics for high-dimensional data: methods, theory and applications. Berlin (Germany): Springer.

- Chipman H. 1996. Bayesian variable selection with related predictors. Can J Stat. 24(1):17–36.

- Choi NH, Li W, Zhu J. 2010. Variable selection with the strong heredity constraint and its oracle property. J Am Stat Assoc. 105(489):354–364.

- Crane PK, Carle A, Gibbons LE, Insel P, Mackin RS, Gross A, Jones RN, Mukherjee S, Curtis SM, Harvey D, et al. 2012. Development and assessment of a composite score for memory in the Alzheimer’s disease neuroimaging initiative (ADNI). Brain Imaging Behav. 6(4):502–516.

- Daniel RM, De Stavola BL, Cousens SN, Vansteelandt S. 2015. Causal mediation analysis with multiple mediators. Biometrics. 71(1):1–14.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, et al. 2006. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 31(3):968–980.

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, Van Der Kouwe A, Killiany R, Kennedy D, Klaveness S, et al. 2002. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron. 33(3):341–355.

- Friedman J, Hastie T, Tibshirani R. 2010. Regularization paths for generalized linear models via coordinate descent. J Stat Soft. 33(1):1–22.

- Gao X, Pu DQ, Wu Y, Xu H. 2012. Tuning parameter selection for penalized likelihood estimation of gaussian graphical model. Stat Sin. 22(3):1123–1146.

- Hamada M, Wu CFJ. 1992. Analysis of designed experiments with complex aliasing. J Qual Technol. 24:3.

- Hao N, Feng Y, Zhang HH. 2018. Model selection for high-dimensional quadratic regression via regularization. J Am Stat Assoc. 113(522):615–625.

- Hao N, Zhang HH. 2017. A note on high-dimensional linear regression with interactions. Am Stat. 71(4):291–297.

- Haughton DM, Oud JH, Jansen RA. 1997. Information and other criteria in structural equation model selection. Commun Stat Simul Comput. 26(4):1477–1516.

- Haughton DMA. 1988. On the choice of a model to fit data from an exponential family. Ann Stat. 16(1):342–355.

- Holland PW. 1988. Causal inference, path analysis, and recursive structural equations models. Sociol Methodol. 18:449–484.

- Hsieh CJ, Sustik M, Dhillon IS, Ravikumar P. 2014. Quic: quadratic approximation for sparse inverse covariance matrix estimation. J Mach Learn Res. 15(1):2911–2947.

- Imai K, Keele L, Yamamoto T. 2010. Identification, inference and sensitivity analysis for causal mediation effects. Stat Sci. 25(1):51–71.

- Imai K, Yamamoto T. 2013. Identification and sensitivity analysis for multiple causal mechanisms: revisiting evidence from framing experiments. Polit Anal. 21(2):141–171.

- Li B, Yu Q, Zhang L, Hsieh M. 2021. Regularized multiple mediation analysis. Stat Interface. 14(4):449–458.

- Lin LC, Huang PH, Weng LJ. 2017. Selecting path models in SEM: a comparison of model selection criteria. Struct Equ Model. 24(6):855–869.

- Luo C, Fa B, Yan Y, Wang Y, Zhou Y, Zhang Y, Yu Z. 2020. High-dimensional mediation analysis in survival models. PLoS Comput Biol. 16(4):e1007768.

- MacKinnon DP. 2008. Introduction to statistical mediation analysis. New York (NY): Routledge.

- Nelder JA. 1977. A reformulation of linear models. J R Stat Soc Ser A. 140(1):48–77.

- Pearl J. 2013. Direct and indirect effects. arXiv:13012300 [cs, stat]. [accessed 2022 Jan 05]. http://arxiv.org/abs/1301.2300.

- Peixoto JL. 1987. Hierarchical variable selection in polynomial regression models. Am Stat. 41(4):311–313.

- Preacher KJ, Hayes AF. 2008. Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav Res Methods. 40(3):879–891.

- Robins JM, Greenland S. 1992. Identifiability and exchangeability for direct and indirect effects. Epidemiology. 3(2):143–155.

- Schaid DJ, Sinnwell JP. 2020. Penalized models for analysis of multiple mediators. Genet Epidemiol. 44(5):408–424.

- Serang S, Jacobucci R, Brimhall KC, Grimm KJ. 2017. Exploratory mediation analysis via regularization. Struct Equ Model. 24(5):733–744.

- Shaw LM, Fields L, Korecka M, Waligórska T, Trojanowski JQ, Allegranza D, Bittner T, He Y, Morgan K, Rabe C. 2016. Method comparison of ab (1-42) measured in human cerebrospinal fluid samples by liquid chromatography-tandem mass spectrometry, the inno-bia alzbio3 assay, and the elecsys® b-amyloid (1-42) assay. Alzheimers Dement. 7(12):P668.

- Shi B, Choirat C, Coull BA, VanderWeele TJ, Valeri L. 2021. CMAverse: a suite of functions for reproducible causal mediation analyses. Epidemiology. 32(5):e20–e22.

- Sobel ME. 2008. Identification of causal parameters in randomized studies with mediating variables. J Educ Behav Stat. 33(2):230–251.

- Valeri L, VanderWeele TJ. 2013. Mediation analysis allowing for exposure-mediator interactions and causal interpretation: theoretical assumptions and implementation with SAS and SPSS macros. Psychol Methods. 18(2):137–150.

- VanderWeele T. 2015. Explanation in causal inference: methods for mediation and interaction. Oxford University Press.

- VanderWeele T, Vansteelandt S. 2014. Mediation analysis with multiple mediators. Epidemiol Methods. 2(1):95–115.

- VanderWeele TJ. 2011. Controlled direct and mediated effects: definition, identification and bounds. Scand Stat Theory Appl. 38(3):551–563.

- VanderWeele TJ. 2014. A unification of mediation and interaction: a four-way decomposition. Epidemiology. 25(5):749–761.

- van Kesteren EJ, Oberski DL. 2019. Exploratory mediation analysis with many potential mediators. Struct Equ Model. 26(5):710–723.

- Villeneuve S, Reed BR, Wirth M, Haase CM, Madison CM, Ayakta N, Mack W, Mungas D, Chui HC, DeCarli C, et al. 2014. Cortical thickness mediates the effect of β-amyloid on episodic memory. Neurology. 82(9):761–767.

- Wainwright MJ. 2009. Sharp thresholds for high-dimensional and noisy sparsity recovery using ℓ1 -constrained quadratic programming (lasso). IEEE Trans Inform Theory. 55(5):2183–2202.

- Wang C, Hu J, Blaser MJ, Li H. 2020. Estimating and testing the microbial causal mediation effect with high-dimensional and compositional microbiome data. Bioinformatics. 36(2):347–355.

- Wang Y, Bernanke J, Peterson BS, McGrath P, Stewart J, Chen Y, Lee S, Wall M, Bastidas V, Hong S, et al. 2019. The association between antidepressant treatment and brain connectivity in two double-blind, placebo-controlled clinical trials: a treatment mechanism study. Lancet Psychiatry. 6(8):667–674.

- Yuan M, Joseph VR, Zou H. 2009. Structured variable selection and estimation. Ann Appl Stat. 3(4):1738–1757.

- Zhang H, Zheng Y, Hou L, Zheng C, Liu L. 2021. Mediation analysis for survival data with high-dimensional mediators. Bioinformatics. 37(21):3815–3821.

- Zhang H, Zheng Y, Zhang Z, Gao T, Joyce B, Yoon G, Zhang W, Schwartz J, Just A, Colicino E, et al. 2016. Estimating and testing high-dimensional mediation effects in epigenetic studies. Bioinformatics. 32(20):3150–3154.

- Zhao P, Rocha G, Yu B. 2009. The composite absolute penalties family for grouped and hierarchical variable selection. Ann Stat. 37(6A):3468–3497. http://arxiv.org/abs/0909.0411.

- Zhao Y, Lindquist MA, Caffo BS. 2020. Sparse principal component based high-dimensional mediation analysis. Comput Stat Data Anal. 142:106835.

- Zhao Y, Luo X. 2016. Pathway lasso: estimate and select sparse mediation pathways with high dimensional mediators. arXiv:160307749 [stat]. [accessed 2021 Nov 16]. http://arxiv.org/abs/1603.07749.

Appendix A

In this section, we aim to illustrate that the choice of tuning parameter of (

) does not affect the model selection results significantly, while conducting a grid search for both tuning parameters (

and

’s) can substantially increase computing time, and that our choice of

in the proposed algorithm is reasonable.

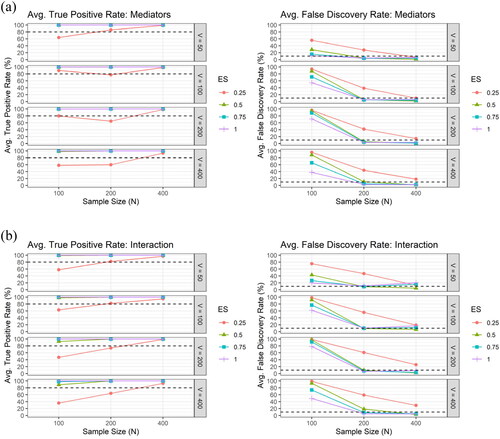

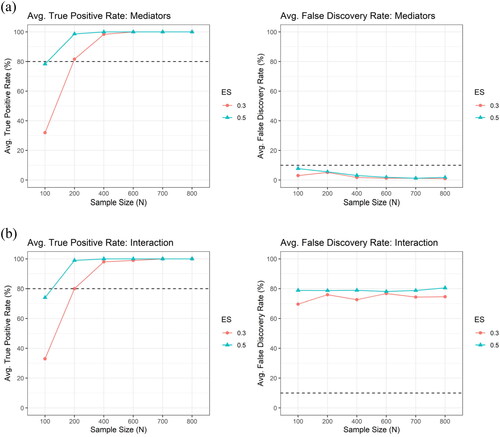

shows the average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs, by different values of tuning parameter of (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of independent mediators with sample size

number of potential mediators

and different effect sizes (

), using XMInt. The results showed that both TPRs and FDRs remained relatively stable across the range of

values. Thus, the value of the regularization parameter for the

estimation is empirically chosen, following Hsieh et al. (Citation2014), and is fixed at

in our simulation study and real-data application.

Figure A1. The average true positive rate (TPR) and the average false discovery rate (FDR) across the 100 simulation runs, by different values of tuning parameter of (

), for (a) the mediator (upper panel) and for (b) the interaction (lower panel), respectively, under the setting of independent mediators with sample size

number of potential mediators

and different effect sizes (

), using XMInt.