?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

In financial markets, systemic risk is a type of risk in which the failure of one stock in the market triggers a sequence of failures. Our study proposes a Bayesian decision scheme to dynamically monitor systemic risk under any preferences and restrictions in financial risk management. We begin by capturing the moving correlations of stock returns because such correlations represent the strengths of the relationships among stocks. Then, we construct a dynamic financial network to link the stocks with strong relationships. Using the financial space, which is related to the position of stocks in the network plot, we locate two stocks in the financial space that are a short distance apart, because the relationship between these two stocks is strong. Using the distances between stocks in the financial space, together with the salient preferences and restrictions in financial risk management, we propose a systemic risk score. We then use 20 years of data to demonstrate the effectiveness of our proposed systemic risk score to give an early signal of global financial instabilities.

1. Introduction

Systemic risk describes a chain of failures among institutions or markets that is triggered by contagion from a failure in one of them (Chan et al. Citation2005). Compared with the failure of an individual institution, we might think it unlikely that many institutions could fail simultaneously. However, institutions can never isolate themselves from external influences (Bhar and Nikolova Citation2013; Raddant and Kenett Citation2021), and the relationships among the institutions, as one source of systemic risk, can transfer a failure from one to another. Even if the initial shock is created by the failure of one institution, it can create a chain of breakdowns when the relationships among institutions are strong (Contreras et al. Citation2022). The subprime mortgage crisis in 2008 (Dwyer and Tkac Citation2009; Tomczak Citation2023) is a well-known global incident demonstrating the importance of studying systemic risk in the markets. A market crash will also induce a high level of systemic risk—such as the crash of 2020, which was brought about by the COVID-19 pandemic (So et al. Citation2021b). Regional incidents, too, including the European sovereign debt crisis (Ureche-Rangau and Burietz Citation2013) and the war in Ukraine (Lockett Citation2022), affect not only the local markets but also the global market, and research on the immediate impact and aftershocks of these crises is still ongoing. In this paper, we develop a new approach for measuring systemic risk over time that incorporates a variety of financial risk-management scenarios.

Extensive research has suggested several possible directions (De Bandt and Hartmann Citation2000; Benoit et al. Citation2017) for the study of systemic risk. One direction focuses on balance sheets and macroeconomic data (Jackson and Pernoud Citation2021), with the balance sheet approach reflecting major businesses’ relationships with other institutions (Hanson et al. Citation2011; Haldane and May Citation2011; Cai et al. Citation2018), while the macroeconomic data approach reflects the market situation (Brunnermeier Citation2009; Rodríguez-Moreno and Peña Citation2013; He and Krishnamurthy Citation2019). Another direction focuses on textual information (Maiya and Rolfe Citation2014; Kawata and Fujiwara Citation2016; So et al. Citation2022), extracting real-time financial news and building a network based on keywords to track the market situation.

Our proposal for the study of systemic risk focuses on the relationships revealed by daily stock returns. We assume that, if a business relationship exists between two institutions, any news concerning one of them will affect the stock returns of both. Therefore, by capturing the correlations of stock returns (De Nicolo and Kwast Citation2002; Blei and Ergashev Citation2014), we can measure the strength of the business relationship between those two institutions. When the relationships among institutions in the market are strong, any bad news about one member of the market can trigger a chain reaction of failures (Mantegna and Stanley Citation1999). Therefore, it is important to detect the pairs of institutions that possess a strong relationship (Battiston et al. Citation2012; Thurner and Poledna Citation2013; Huang et al. Citation2013; So et al. Citation2021a).

In the literature, several useful statistics are aimed at using stock returns to detect the relationships between institutions and market crashes, including but not limited to systemic expected shortfalls (SES) (Acharya et al. Citation2017), SRISK (the expected capital shortfall of a financial entity, conditional on a prolonged market decline) (Brownlees and Engle Citation2017), CoVaR (the value at risk (VaR) of the financial system, conditional on institutions being under distress) (Adrian and Brunnermeier Citation2016), and Absorption Ratio (AR), which measures supply and demand (Kritzman et al. Citation2011). Using statistical models to capture the instability contributed by the relationships among institutions is also possible (Girardi and Ergün Citation2013; So et al. Citation2020a, Citation2021c). Some studies have analyzed the market situation by using network statistics (Tabak et al. Citation2014; Wang et al. Citation2017; Neveu Citation2018; So et al. Citation2021a; Lai and Hu Citation2021; So et al. Citation2021b). As a further development on these approaches, Billio et al. (Citation2012) constructed a systemic risk measure using principal component analysis and a network of Granger-causality. Hautsch et al. (Citation2015) studied the realized systemic risk (volatility) beta value, using the significant relationship in the tail event, along with information from the market and balance sheets. Diebold and Yılmaz (Citation2014) considered the variance decomposition technique to construct a network to measure systemic risk. Wang et al. (Citation2021) considered a multilayered network to handle various types of stock information in detecting systemic risk. Härdle et al. (Citation2016) considered the network-analysis-based tail event on stock returns.

As with the work of some others, our proposal involves Bayesian methods in the computation of systemic risk—such use is not new. Gandy and Veraart (Citation2017) considered a weighted directed network that measured individual liabilities among institutions. Ballester et al. (Citation2023) studied the transmission of systemic credit risk by using a Bayesian network. Lupu et al. (Citation2020) estimated some common systemic risk measures with a Bayesian entropy estimation method. Deng and Matteson (Citation2022) proposed the Bayesian Spillover Graphs to study the dynamic network and identify some special features of the network.

Specifically, the proposed systemic risk measurement is based on the work of Das (Citation2016), and two components constitute our score. First, we assign a weight to each institution with the Bayesian framework. In application, investors may for example have a greater concern about systemic risk among the institutions that belong to a particular industry, and the relationships among institutions in that industry should be the focus, even though the business relationships of others also deserve attention. Another example would be an investor who faces restrictions on investments or has preferences for systemic risk management; the proposed systemic risk score has set up a weight with the Bayesian framework to adjust to those restrictions and preferences.

In addition to the weight assigned to each stock, we aggregate information from the pairwise relationships of institutions, to measure their contribution to systemic risk. We consider a network model that represents the institutions as nodes and the significance of business relationships by edges. When an institution fails, the impact first transfers to those who have a business relationship with the failed institution. Depending on the strength of their relationship to the failed institution and the loss in that impact, other institutions might then generate the next impact and transfer it to a further institution, which may not be directly linked to the source of the first impact (Martínez-Jaramillo et al. Citation2010; Aiyar Citation2012; Acemoglu et al. Citation2015). Therefore, a network model that highlights those strongly related institutions accounts for the contagion of failure resulting from connectedness in the market (Chen et al. Citation2020).

Although a network model explores and visualizes significant business relationships, our study builds further upon the financial network with a latent space, extending the concept of the latent space from Chu et al. (Citation2021). The latent space converts the binary indicator in the network—whether or not a pair of institutions is significantly related—to a continuous measure of the likelihood that a pair of institutions is related. The latent space includes all of the institutions as points and assigns each one’s position such that the distance between two points reflects the significance of their relationship to all other institutions in the latent space. An advantage of using the latent space model over simply a network model is that the distances in latent space can provide hints that indicate the indirect relationships between two institutions, formed by a chain of institutions, by assigning a short distance between the institutions, while the distance in a network—that is, the length of the shortest path between institutions—may miss it. Some studies visualize the network and make use of the arrangement of positions in the visualization to measure systemic risk (Heimo et al. Citation2007; Linardi et al. Citation2020). We have chosen a Bayesian approach to look for the best position in the latent space. Combining these two components, then, we take the weights of each institution and the network with an embedded latent space, or what we call the financial space, since the space is related to financial information, as input into the systemic risk score.

To the best of our knowledge, we are the first to combine a Bayesian framework for weight assignments and the concept of a financial network with an embedded latent space. As an application of the systemic risk score, we attempt to construct an early signal that warns of impending market crashes. We consider the stock return data in the Hong Kong market for the past 20 years and use the systemic risk score to detect any prior signal of the financial turnulence during that period. We also compare the model’s performance in raising an early signal with that of other well-known systemic risk measures. In the literature, Billio et al. (Citation2016) proposed an early signal based on entropy measures with the financial market in Europe. Allaj and Sanfelici (Citation2022) used realized variance and the feedback rate of price volatility to construct an early signal. We examine our proposed model by considering dynamic time wrapping, which matches the pattern of two time series, to determine the lead and lag relationships. When the systemic risk score leads the market returns before and during the crashes at a reasonable distance, we say that the systemic risk score has raised an early signal predicting the market crashes. We also use the systemic risk score to demonstrate various applications for establishing the weight of each institution in the Bayesian framework. We demonstrate that our approach overcomes the difficulties in determining a representative weight under complex risk-management restrictions and preferences.

In the remainder of this paper, Section 2 describes the study’s methods and materials and the details and setup of the two components of the proposed systemic risk score, and lists other systemic risk measures for comparison. Section 3 describes the approach to estimate the model parameters and the relevant settings. Section 4 describes the experimental approach, the results of the comparison, and some properties of the systemic risk score. Section 5 discusses the results, summarizes the limitations of our model, and proposes possible directions for future research.

2. Materials and Methods

2.1. Systemic Risk Score

Suppose there are n stocks in the financial market of interest. Our proposed systemic risk score takes two inputs, the first of which is the weight of stock i in measuring the systemic risk, for

Because the weights determine the amount of attention we pay to each stock, the purpose of calculating systemic risk determines how we should assign the weights. Let

be the vector of weight. In our study, we made two assumptions on the weights:

All entries in

are non-negative, and

The sum of all entries of

equals 1.

The first assumption is required to ensure that the systemic risk score is well-defined. Following this assumption, a zero weight for stock i means that the stock i receives minimum attention or is not being considered when measuring systemic risk. The second assumption is not compulsory. This assumption says that we measure the attention to each stock in a relative sense, instead of in an absolute sense. This is also helpful when we compare the systemic risk score across scenarios. For example, suppose that we treat the weights as the amount of assets allocated to each stock. The systemic risk score will be proportional to the total capital, even if the proportion of assets allocated to each stock is identical. Only when we put the weights on the same scale will the comparison across portfolios become meaningful.

The next input to the proposed systemic risk is the contribution of systemic risk given by the relationship between stock i and j. As explained in the Introduction, strong relationships between stocks build the path toward a failure contagion. We assign the value of

according to the strength of the relationship, accounting not only for the direct connection but also for the indirect connection, which is linked through a chain of intermediates.

We make use of the significance of the business relationships between stocks, together with the importance of the stocks, to construct the systemic risk scores. Let is a matrix of systemic risk contributions given by the pairwise relationships of stocks. Our systemic risk score is defined by

a function of both the weights and the systemic risk contributions. There are many possible formulations for systemic risk, but motivated by Das (Citation2016), we select those with the following properties:

S has to be non-negative. In particular, when

the systemic risk score

When

increases, S increases.

S is continuously differentiable on the weight

and is also linearly homogeneous of order 1.

The first property ensures a natural representation of risk: If we do not put weight on any asset, the systemic risk in our consideration must be 0. The second property follows directly from the meaning of the matrix C: The higher the contribution given by the relationship of a pair of stocks, the higher the systemic risk. The third property is helpful for understanding the proposed systemic risk score. This property ensures that scaling all of the weights by a positive factor will scale the by the same factor.

Hence, according to Euler’s homogeneous function theorem, we decompose the systemic risk into the sum product of the partial derivatives and the weights.

(1)

(1)

Based on EquationEquation (1)(1)

(1) , the partial derivative with respect to

represents the change in systemic risk per unit weight contributed by a small change in weight. If the partial derivative is large, stock i will have a large influence on the systemic risk. This happens when the relationships between stock i and some other stocks contribute significantly to the systemic risk, and consequently, those stocks should be the focus of our attention. Therefore, we adopt the systemic risk score S in this study, so that

(2)

(2)

The fraction in EquationEquation (2)

(2)

(2) is necessary for the third property. Putting the partial derivatives and the weights together, we have

(3)

(3)

Because there is a square root outside the quadratic form we need to guarantee that the sum inside the square root of EquationEquation (3)

(3)

(3) is positive. A sufficient condition is to require C to be positive semi-definite. In our case, because the weights are non-negative, we only require all entries of C to be non-negative.

2.2. Bayesian Decision Theory Applied to Various Financial Scenarios

The assignment of weights – that is, of the attention we pay to each stock—depends on the situation. For example, if we care about the systemic risk encountered in a particular portfolio, we can set the weights as the proportion of assets allocated to each stock. We can always find the optimal allocation by minimizing the systemic risk score. However, in a case in which there are some restrictions or limitations on the weight selection, instead of looking for an optimal allocation, we are interested in studying how these restrictions affect the systemic risk.

Therefore, instead of looking for a single weight to represent the financial scenario, we produce multiple values for systemic risk, one on each possible combination of weights. This approach coincides with the framework of Bayesian decision theory (Rachev et al. Citation2008; Ando Citation2009). We assign a distribution to the weights and then study the distribution of systemic risk score defined in EquationEquation (3)

(3)

(3) . This approach bypasses the difficulties of looking for a weight that represents the scenario. Adopting the Bayesian decision process also gives us flexibility in setting the level of importance of each of the possible weights. Although multiple weights are available under the constraints, some of them are in favor of the market participants. The level of importance distinguishes the amount of emphasis put upon each of the weights, which in the framework of Bayesian decision theory is equivalent to setting an informative prior for the weights. Correspondingly, the prior density takes on the role of the level of importance.

Throughout our paper, we assign two kinds of distribution to the prior for the weights. We emphasize that other distributions are also possible, as long as they fit the financial scenario and the preference toward each combination of weights. The level of importance, or equivalently the prior density, assigned to each combination of weights, is determined by the hyperparameter, regardless of the choice of distribution. Therefore, the specification of hyperparameters is also important in setting up the financial scenario of interest.

The first distribution is the Dirichlet distribution. This distribution matches the two assumptions on the weights in Section 2.1 when there is no further restriction on the collection of possible combinations of weights. The hyperparameter of the Dirichlet distribution is a vector of length n, is positive in all entries, and determines the mean and variance of the weights

We have three specifications of hyperparameters to be considered in our study.

Random Allocation.

In a random allocation, every possible combination of weights has the same level of importance—such as taking every entry of

as 1. This is the baseline specification for most financial scenarios in our study because this specification is equivalent to no preference for any particular combination of weights.

Allocation proportional to market capitalization.

In an allocation that is proportional to market capitalization, the mean of weights

is proportional to the market capitalization shared by each stock. Market capitalization is the total dollar value of a stock. When a company with a large weight fails, it impacts the market severely and leads to a series of failures. This is achieved in the model by setting the proportion of

shared by the sum of all entries in

to be proportional to the market capitalization shared by stock i. Therefore, we have to set the sum of

to complete the specification. Notice that when

is smaller than 1, those combinations of weights that have

deviating from the prior mean receive a higher level of importance. To avoid this counter-intuitive case, we assign the sum of

so that the smallest

is 1. We compare this specification with the random allocation as one financial scenario in our study.

Allocation including a risk-free asset.

In an allocation that includes a risk-free asset, one extra stock, representing the risk-free asset, is inserted into the consideration. Therefore, now we have

entries for the weight

and so for the hyperparameter of the Dirichlet distribution,

The contribution of systemic risk toward the risk-free asset and any other asset is set to be 0. While holding all other entries of

constant, increasing the value of

decreases the weights that are assigned to risky assets and hence reduces the systemic risk score. At one extreme, when the weight of the risk-free asset

is 1, the systemic risk score achieves 0, which is the minimum value of the score. At the other extreme, when

is 0, the systemic risk score is equivalent to ignoring the risk-free asset. As one financial scenario in our study, we compare this specification with the random allocation to study the effect that a decreasing systemic risk score has on increasing

The second distribution to the prior for the weights is a joint of multiple independent scaled Dirichlet distributions. This is the distribution of weights when each stock is pre-assigned into exactly one of the G groups, and the sum of the weights of stocks in each group is predetermined. We let

be the vector of the weight of stocks that belong to group g, and

is the sum of the weight of stocks in group g. Hence, we have

as the proportion of the predetermined sum of weights of stocks in group g being assigned to each stock in group g. Therefore, by setting each

to be Dirichlet distributed with hyperparameter

we have the weight

following a joint of multiple independently scaled Dirichlet distributions. We have three specifications of the hyperparameter to consider in our study.

Allocation with an equally shared industry sum of weights.

In an allocation with an equally shared industry sum of weights, we first classify the stocks according to their industry. Within each group, we use a random allocation—that is, we take every entry of

as 1, to specify that there is no preference within the group. We equally share the sum of weights with each group, so that the sum of weights in each group is

This specification is the baseline for the allocation with weights competition within groups.

Allocation with the industry of focus.

In the allocation with an equally shared industry sum of weights, we have set the sum of weights in each group to be

To indicate a focus on an industry-specific crisis, we set a sum of weights for the industry of concern to be 0.5 and each of the remaining industries shares the remaining sum of weights equally, at

We compare the systemic risk score of a focus on the industry-specific crisis with the random allocation.

Allocation with a weights competition within groups.

In the allocation with an equally shared industry sum of weights, we make use of the random allocation and state no preference for any combination of weights within each group. In this specification of an allocation with a weights competition within groups, stocks compete for weights within their group. We achieve this by taking every entry of

as 0.1. Because all entries of

are less than 1, those combinations where the weights deviate from the prior mean receive a higher level of importance. Under the constraint that the sum of all entries of

is 1, the combination of weights in which one of the stocks takes the majority of weights while the remaining stocks share the remaining proportion receives a higher level of importance than does the combination of weights in which each stock equally shares the weights. Therefore, the stocks in each group prefer to compete instead of share the weights. We compare the systemic risk score under this specification with the allocation with an equally shared industry sum of weights.

2.3. Setting up the Contribution of Systemic Risk with a Latent Space Model

The next input to the systemic risk score is the contribution of systemic risk given by the business relationship between two institutions. Information on the relationships between institutions is often inaccessible however, and even if we can gain access to the data, it is still not easy to determine the contribution to systemic risk given specifically by the two institutions’ relationship. Therefore, we need a proxy for the relationship between the two institutions. In our study, we choose the correlation of stock returns as our proxy. We believe that if two institutions have a significant relationship, whenever one of them suffers and its stock returns fall, the other one will also be impacted. We therefore first use the stock returns to construct a network of significant business relationships. Then, we embed a financial space into the network to further study the indirect business relationships, which are relationships created through a sequence of intermediate institutions (Ng et al. Citation2021).

2.3.1. Network Setup

We study the strength of the relationships by considering the correlations between the stock returns (Chen et al. Citation2020; Patro et al. Citation2013). Suppose our dataset of stock contains information of T trading days in total. Let is the closing price of stock

on day t. The log return

(4)

(4)

is the difference in the log price on two consecutive trading days. Let

be the 21-days historical average log return of stock i on day t – that is,

(5)

(5)

If the sample correlation of the 21-day historical log return between two distinct stocks i and j on day t

(6)

(6)

exceeds a threshold, we say the relationship between stocks i and j on trading day t is strong and we assign

otherwise, we set

Correlations of the 21-day historical average log return are significantly positive at

and

when

is at least 0.2914, 0.3687, 0.5034, respectively. We consider these three critical values as the thresholds in our study.

For each trading day t, we gather all over i and j to form an adjacency matrix

so that we put an edge between the nodes representing stocks i and j in the network of the day t if

Because the correlation is symmetric—that is,

—the dynamic network is a sequence of undirected networks. Also, we do not assign any value to

as we do not include the self-loop in the analysis.

2.3.2. Financial Space

The financial network assigns edges to indicate significant business relationships between institutions. To quantify the contribution of systemic risk, we introduce the concept of financial space, which is latent, unobserved, and D-dimensional. We first let be the coordinates of stock i in the financial space on trading day t. The financial space is a low-dimensional representation of the relationship among those stocks. In particular, the distance between two institutions in the financial space serves as a measurement of the strength of the relationship. Moreover, to have a better understanding of the distance between nodes, we follow a typical choice of

i.e. restricting our attention to a two-dimensional financial space, in the sequel (Sewell and Chen Citation2015). As a remark, in literature, some studies pick other choices of D when they are considering a more complex network (Zhang et al. Citation2022).

To give meaning to the distances, we consider a typical network plot that assigns the node positions to give a uniform length of edges and distribution of nodes, to avoid any crossing or blending of edges (Battista et al. Citation1994). This is often achieved by adopting a network configuration that assigns a shorter distance between those nodes that are more strongly related, and vice versa. To assess the systemic risk on each trading day t, we borrow the concept of “the shorter the distance, the stronger the relationship,” to give meaning to the distance between nodes i and j on the plot of the network corresponding to trading day t.

The financial space is a latent space, meaning that we measure the distance between institutions by considering their relative positions. By embedding the financial space into the network, we assign the nodes in the plot that match their position in the financial space. Therefore, instead of assigning the distances from observed information, we first locate each node in the financial space and then measure the distance between the two nodes. Unlike the nodes and edges in the networks, the financial space is latent and we do not observe the positions directly from the correlations or the network; therefore, we apply the technique of latent space modeling (Hoff et al. Citation2002; Sewell and Chen Citation2015; Chu et al. Citation2021) to find the positions that best represent the systemic risk in the market. The latent space modeling technique for a network aims to use a metric space, usually a two-dimensional Euclidean space, to explain the existence of edges between nodes in a network, and hence to explore the relationships between nodes.

Previous studies have used the nodes-distance measure to calculate connectedness among banks (Cai et al. Citation2018; Abbassi et al. Citation2017). By employing the distance measure, we can account for the relationship between nodes. Therefore, from the perspective of an individual stock, the closer it is to other nodes, the higher its chance of suffering contagion from the failure of those neighboring nodes. From the perspective of the market, the shorter the distance between stocks, the easier it is for financial incidents to initiate a contagion of failure, thereby triggering a systemic breakdown.

We then measure the distance between stocks i and j on day t by

(7)

(7)

where

is the Euclidean norm. Recall that the financial space is latent and unobserved. We need some rules to guide ourselves when looking for a position for each stock.

First, for each trading day, we aim to maintain an identical spread of positions. In other words, before considering any data, the positions of stocks on any trading day should have an identical distribution.

After the first day, we determine the positions for trading day t based on the positions from the previous trading day. To maintain an identical spread, we assume that stocks move from their previous positions with a suitable shrinkage towards the center. The center represents the average position that a stock holds throughout the entire study period. Additionally, we introduce the persistence parameter matrix to quantify the extent to which information from trading day

influences the positions on the current day. A larger value of the persistence parameter indicates a stronger influence of the positions from the last trading day on the current positions.

Let diagmat be a function that creates a diagonal matrix with its diagonal entries arranged in the same sequence as the input, represents the average position of stock i throughout the entire study period,

is a diagonal matrix that measures the transition sizes of the positions of stock i in the financial space between consecutive trading days, and

is also a diagonal matrix that measures the persistence of stock i. On day

we assume that the position for stock i follows a D-variate normal distribution:

(8)

(8)

where I is the identity matrix. After the first day, the position of stock i on trading day t is determined as follows:

(9)

(9)

For each stock i and dimension d, we consider the sequence as a univariate autoregressive time series of order 1. To ensure an identical variance over all the marginal distribution of the position in all t, we set the initial spread as

as shown in EquationEquation (8)

(8)

(8) , and restrict

for all i and d.

The setup in EquationEquations (8)(8)

(8) and Equation(9)

(9)

(9) indicates that the position of stocks across dimension are independent. Therefore, equivalent to EquationEquations (8)

(8)

(8) and Equation(9)

(9)

(9) , the position of stock i on dimension d on day 1 is

(10)

(10)

and the position of stock i on dimension d on day

is

(11)

(11)

2.3.3. Potential factors for Strong Relationships between Stocks

Multiple potential factors affect the chance for two stocks to be strongly related. In our study, we classify the stocks into groups by industry: Finance

Utilities

Properties

and Commerce & Industry

Stocks from the same industry share some common characteristics, thus inflating the correlations of their returns. However, any common characteristic is only limited to those within the same industry, so the inflation applies only to those pairs of stocks belonging to the same industry. The extra risk due to the common characteristic results in different probabilities when comparing a pair of stocks coming from the same industry to another pair coming from two different industries. In other words, if both stocks i and j come from industry m on trading day t, we assign Otherwise, if stocks i and j come from two different industries, we set

2.3.4. Forming a Network of Significant Business Relationships

We use the financial space and other potential factors to explain the significance of the relationship between two stocks. In our study, the significance of a business relationship depends on whether the correlation of returns exceeds the threshold described in Section 2.3.1, which is binary. Therefore, we assign a Bernoulli distribution for the significance of the relationship between stocks i and j on trading day t with a log odds

(12)

(12)

where

are the respective effects of the M covariates

We assume there is conditional independence on the status of relationships, given the corresponding log odds.

Under the formulation given by EquationEquation (12)(12)

(12) , when the factors increase by 1 unit, the log odds increase by

the corresponding value of the effect. The negative sign in front of

indicates that a smaller

leads to a larger

When the distance increases by 1 unit, the log odds decrease by 1 unit.

2.4. Contribution to Systemic Risk with Financial Space

Summarizing all of the above formulations about the financial network and financial space, we have constructed a relationship between the probability of observing a significant business relationship between two institutions and the latent financial space covering those potential factors, which are not included in the covariates. The distance in the financial space has taken into account the indirect relationship between institutions—a feature that comes from the triangular inequality of the Euclidean space. The distance between any two institutions is bounded above by the chain of intermediate institutions. Therefore, even though we are considering the pairwise correlation between the returns of two institutions, the financial space can also capture the indirect relationship.

With this advantage, we use the estimated log odds obtained by plugging the estimate of the latent position and the effect parameters into EquationEquation (12)

(12)

(12) , as a measure of the contribution to systemic risk. To satisfy the requirement that all entries of C are non-negative, we need to transform the log odds. With abuse of notation, we take a transformation C on the matrix

so that we can substitute C by

in EquationEquation (3)

(3)

(3) to calculate the systemic risk on day t. A natural choice is to set the

entry of the transformed output to be

(13)

(13)

because this transformation, which is the inverse function of the logit function, guarantees that the range of

is non-negative and also outputs the probability of stocks i and j having a significant positive correlation at time t. The diagonal entries

are fixed to be 1 to include the allocation of weights into the calculation of the systemic risk score. Therefore, the systemic risk score on trading day t becomes

(14)

(14)

To represent the sequence of the systemic risk score along time, we make an abuse of notation to set to be the systemic risk score on trading day t.

3. Parameter Estimation

In our study, we estimate the position in the financial space of stock

at time

the average position

of stock

the transition sizes of positions in the financial space

of stock

the parameter of persistence matrix

of stock

and the effect parameter

of the covariate

with a Bayesian approach.

3.1. Posterior Distribution

Let be the collection of all edges in the financial network,

be the collection of the positions in the financial space,

be the collection of all effect parameters for each covariate,

be the collection of all the average positions in the financial space,

be the collection of all transition sizes in the financial space in consecutive trading days, and

be the collection of all parameters of persistence.

We assign an independent normal prior with mean and variance

to the effect parameter

an independent normal prior with mean

and variance

to

an independent inverse gamma prior with shape

and scale

to

and an independent uniform prior to

In other words, we assume that

and

are independent of each other. Additionally, we assume that

and

are independent and local independence within

meaning that given all the parameters, the elements in

are independent of each other.

Based on the above assumptions, the log posterior of our model, with the constant term excluded, has the following generic form:

(15)

(15)

(16)

(16)

(17)

(17)

where we have made use of the Bayes theorem in EquationEquation (15)

(15)

(15) , the independence between

and

in EquationEquation (16)

(16)

(16) , and the independence among

and

in EquationEquation (17)

(17)

(17) .

Based on the local independent assumption, the likelihood has the following generic form:

(18)

(18)

where, following the EquationEquation (12)

(12)

(12) , the likelihood of single observation

is

(19)

(19)

We have dropped those parameters that are unrelated to the stock i and j on the trading day t in EquationEquation (18)(18)

(18) .

Let be the set of effect parameters

but

is excluded. The

of the full conditional of the effect parameters, with the constant term excluded, is

(20)

(20)

Let be the set of average positions

but

is excluded. The

of full conditional of the average position on dimension d of stock i, with the constant term excluded, is

(21)

(21)

Let be the set of transition sizes

but

is excluded. The

of full conditional of the persistence on dimension d of stock i, with the constant term excluded, is

(22)

(22)

Let be the set of persistence matrices

but

is excluded. The

of full conditional of the transition sizes on dimension d of stock i, with the constant term excluded, is

(23)

(23)

when all

3.2. Identification Issue

In our model, any two sets of the latent position in the financial space give the same likelihood when they are equivalent under rigid transformation, i.e. translation, reflection, rotation, and any sequence of the above three. This creates an identification issue because many sets of the position of stocks in the financial space give the same likelihood and thus we cannot distinguish them by the likelihood. Following the proof in Appendix F, in Bayesian analysis, the normal prior on the average position of each stock in the financial space, i.e. has eliminated the identification issue due to translation. The assumption of using a diagonal matrix for the covariance of the position in the financial space at each time point, i.e. EquationEquations (8)

(8)

(8) and Equation(9)

(9)

(9) , has eliminated the identification issue due to rotation, except for rotation about the origin by a multiple of

with

in our case.

Therefore, in our case, the identification issue on the parameters exists only due to reflection and rotation about the origin by a multiple of Rotation about the origin by a multiple of

is the same as first permuting the entries and then reflecting along the vertical axis. Therefore, in the following discussion, to further elaborate, we let

be a

permutation matrix which is obtained by swapping some of the rows in the identity matrix, and let

for

so that the reflection matrix

is a diagonal matrix with

or 1 in the diagonal. The model parameters

(24)

(24)

give the same posterior as that using the following model parameters

(25)

(25)

for any

and

Therefore, after each iteration of the MCMC, we transform the MCMC iterate according to the definition in EquationEquation (25)(25)

(25) to maintain the unique representation of the model parameters. The transformation follows these steps:

For each dimension

if the absolute value of the minimum average coordinate is not greater than the absolute value of the maximum average coordinate, i.e.

we set

Otherwise, we set

We select the permutation matrix

such that the maximum absolute value of the average coordinate after transformation forms a non-increasing sequence of length D. Specifically, for distinct dimensions

if we have

then we assign the first row of the permutation matrix

to be the

row of the identity matrix, second row of the permutation matrix

to the

row of the identity matrix, and so on.

The first constraint addresses the identification issue caused by reflection. For each dimension d, there are two scenarios: either the absolute value of the minimum average coordinate is not greater than the absolute value of the maximum average coordinate, or vice versa. These two scenarios are equivalent and lead to the same posterior with appropriate choices of In our study, we choose the former scenario.

The second constraint deals with the identification issue arising from the permutation of coordinates. Although there are D! Scenarios in total, which are equivalent with appropriate choices of only one scenario exists where the maximum absolute value of the average coordinate forms a non-increasing sequence of length D.

In our case with the above constraints reduce to the followings:

For each dimension

if

we set

Otherwise, we set

If

the permutation matrix

swaps the two rows in the identity matrix. Otherwise, the permutation matrix is the identity function.

Through this transformation, we can select the unique representation of the model parameters from a total of 4 sets of model parameters, which share the same posterior density, for each MCMC iterate.

3.3. Initial values

Because the posterior is intractable, we estimate the parameters via the Markov chain Monte Carlo (MCMC) method. As input to the MCMC, we first must assign the position for each stock in the financial space. In a full estimation, we assign a shorter distance between a pair of individuals if they are linked. In accord with Sewell and Chen (Citation2015), we estimate the distance matrix between institutions i and j at time t, denoted by the entry of

by

(26)

(26)

We then apply classical multidimensional scaling on each estimated distance matrices to obtain the first guess of the initial values of the positions in the financial space at each t. To avoid the nodes on the latent space clustering at the same position, we add a normal random noise with a standard deviation of 0.001 to each coordinate.

After that, to draw the best connection of stocks in the financial space over time, we sequentially, starting from perform a Procrustes transformation (Hurley and Cattell Citation1962) to get the second guess of the initial values of the positions in the financial space. The Procrustes transformation takes two inputs, one is the first guess of the initial values of the positions in the financial space at time

which we transform to the second guess. Another one is the second guess of the initial values of the positions in the financial space at time t, obtained from the previous transformation.

Let be the second guess of the initial value of

i.e. after the Procrustes transformation. The transformation rotates the first input about the origin to get

so that the sum of the squared Euclidean distances of all stocks traveled to

from

is minimized. The second guess of the initial values of the positions in the financial space at the first trading day directly takes the first guess, i.e. we do not transform with Procrustes the first guess of the initial values of the positions in the financial space.

We determine the initial value of effect parameters for

by fitting a logistic regression to

(27)

(27)

(28)

(28)

using the function glm in R, so that the distance between institutions in the financial space, and the known factors

for

are included as covariates. Suppose

is the estimate of

in the logistic regression for

We set the initial value of

denoted by

in the MCMC to be the same value as

for

Recall that, in our model, we have restricted to be

for identification. Therefore, after performing the logistic regression, we scale the coordinate of the stock in the financial space by a factor of

In other words, let

be the initial value of the position of stock i at trading day t in the financial space for MCMC. We have the initial value

(29)

(29)

for all i and t.

Finally, for each dimension d, we fit an autoregressive model of order 1 to the time series of using the function ar in R and then use the estimate of the mean, the standard deviation, and the autoregressive coefficient as the initial values of

and

denoted by

and

respectively.

3.4. Hyperparameters for the Priors

To utilize the result from the logistic regression fitted for the initial values of we set the mean of the normal prior of

to be the estimates of

obtained from the logistic regression, i.e.

The variance of the normal prior

i.e.

are set to be 10. The mean and variance of the normal prior of

are set to be

and

respectively. The shape and scale parameters of the inverse gamma prior of

are set to be 2.04 and 1.04, respectively, so that the prior mean and prior variance are 1 and 25. These setting aims at keeping the prior non-informative.

3.5. MCMC Algorithm

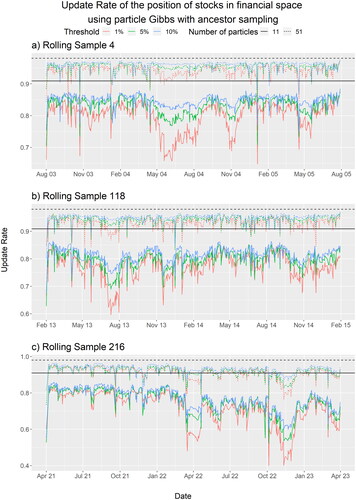

Suppose we take L iterates in total for the MCMC estimation. Given the state-space structure of our model, we adopt the particle Gibbs with the ancestor sampling method to improve the convergence (Lindsten et al. Citation2014). We use particles to balance the computational time and the convergence speed.

In the sequel, we introduce a superscript to the variables to indicate the MCMC sample at the

iteration. We also introduce the superscript

to represent the

particle in the

iterate. Furthermore, those parameters with tilde

on top represent the proposed sample. Denote

to be the collection of

from time

to

and

to be the realization of a random variable from the standard uniform distribution.

The variables a and indicate the acceptance ratio and controls the acceptance rate respectively. The subscript, attached to the acceptance ratio a and the variable controls the acceptance rate

indicates the parameters that these two variables refer to. The variables w, W, and A respectively refer to the unnormalized weight, normalized weight, and normalized ancestor weight that are used in the particle Gibbs. Let

be the index of the selected particle in the particle Gibbs that will be used as the MCMC sample.

Then, with the initial values set, for iteration

For all variables that we estimate via the MCMC, we first set the

iterate to be identical to the

iterate. We set this up for notation consistency in the following discussion. Moreover, the value of the

iterate is the one that we should set for the

iterate if the proposed sample in the

iterate is rejected.

Conduct a single step MCMC for each of

to obtain the

iterate

based on the

iterate. For each m,

Sample

from

Calculate the acceptance ratio

If

we set

to be

Otherwise, we keep

to be

Conduct a particle Gibbs with ancestor sampling for the position in the financial space

to obtain the

iterate

based on the

iterate. For each i,

For

Sample for Q particles, denoted by

from

Set

to be the sample from the last iteration.

Evaluate for each

the weights

where is a collection of stock indices excluding the index i.

For

(Resampling) Resample the Q particles, denoted by

from

with replacement, so that

is selected with probability

where are the normalized weights.

(Ancestor Sampling) Sample 1 ancestor, denoted by

from

so that

is selected with probability

Sample for Q particles, denoted by

from

and set

Set

to connect the selected ancestor with the latent position at time t obtained from the last iterate.

Evaluate for each

the weights

where is a collection of stock indices excluding the index i.

Select

from

with probability

and set the output (or equivalently

) to be

Conduct a single step MCMC for each of

to obtain the

iterate

based on the

iterate. For each i and d,

Sample

from normal

Calculate the acceptance ratio

If

we set

to be

Otherwise, we keep

to be

Conduct a single step MCMC for each of

to obtain the

iterate

based on the

iterate. For each i and d,

Sample

from truncated normal

where the non-positive regime of the domain is truncated.

Calculate the acceptance ratio

If

we set

to be

Otherwise, we keep

to be

Conduct a single step MCMC for each of

to obtain the

iterate

based on the

iterate. For each i and d,

Sample

from Beta distribution

Calculate the acceptance ratio

If

we set

to be

Otherwise, we keep

to be

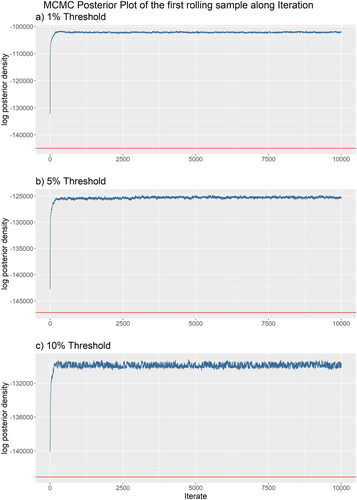

3.6. Settings of MCMC

During the estimation, we conduct iterations to obtain a sufficient number of samples for estimation. Furthermore, we treat the first

of iterates as burn-in. Therefore, we consider only the last 5000 iterates in the estimation.

Starting from the iterates, we adjust

and

which are the variables that control the acceptance rate of the proposal of

and

respectively, based on the acceptance rate for every 50 iterates until reaching the end of adaptation process. As we estimate the parameters one by one, we would like the acceptance rate to stay between

to

having a maximum difference of

from the optimal acceptance rate

(Gelman et al. Citation1997). We use the new step size for the next 50 iterates. This adapting process is repeated until we have made 3000 iterations. After that, we keep the step size until the end of the estimation. In Appendix D, we provide the diagnostic of the MCMC estimation.

4. Results

In this section, we first demonstrate four settings of the systemic risk score with the financial space model. By establishing a suitable distribution of the weights, the systemic risk score possesses intuitive properties that are useful in real-life applications. Then, we compare the performance of the systemic risk score with other approaches that are listed in Appendix A.

The aim of making the comparison is to evaluate the performance of each measure in detecting an early signal of future financial instability. By applying the dynamic time warping (DTW) algorithm (Giorgino (Citation2009); see Appendix B for details), we identify whether there are lead and lag relationships between the systemic risk measure and the market return

Here

indicates not only the systemic risk score proposed in Section 2.3 but also other formulations of the same systemic risk score and other competitive systemic risk measure. We will introduce them in the later section.

We first treat the systemic risk measure and the market return as functions of time. We can raise the early warning signal only if the systemic risk scores lead the market returns, meaning that the pattern appearing in a systemic risk score also appears in the market returns at a later date, at least during the periods of pre-turbulence and post-turbulence. In addition, given a leading relationship, the systemic risk measure should be increasing and taking a large value in the proximity of the market crashes, if it is going to indicate an early warning signal.

4.1. Experiment Setting

Our study focuses on the Hong Kong stock market during the 20 years from May 2003 to April 2023. We have chosen the constituent stocks of the Hang Seng Indexes as the stocks of interest. Therefore, in the DTW algorithm, we have chosen the returns of the Hang Seng Index (HSI) as a proxy of the market returns in Hong Kong.

We adopt a rolling window approach to monitor systemic risk using the most up-to-date information (Chan et al. Citation2023). The rolling window covers two years, the first of which comprises the trading days from May 2003 to April 2005. We make use of the financial network to estimate the parameters, including the effect of known factors and the position of the institution in the financial space. We then slice the window by one trading day and again estimate the parameters. In principle, we repeat the process of estimating the parameters and slicing the rolling window until the window reaches the last trading day in our study period.

The computational burden of the rolling window approach increases with the number of trading days in our study. To alleviate the burden, the parameters do not change significantly when we add a few days at the end of the rolling window or drop a few days at the beginning. This assumption is important because, instead of repeating the full estimation, we conduct a partial estimation by reusing the estimation result from an earlier rolling window.

Therefore, as is shown in , in the first rolling sample, we conduct a full estimation using the financial networks from May 2003 through April 2005. A full estimation involves all of the parameters, including the effect of known factors the position of stocks in the financial space

the average position of stock in the financial space

the transition sizes of positions in the financial space

and the parameter of persistence matrix

of the financial space. Then, in the period of partial estimation, in the

slice of the rolling window following the latest full estimation, we reuse the parameter estimates but with the position in the financial space from trading day T to

In the partial estimation, we conduct 50 iterates only to update the latest information, with no burn-in period. As an example, in the first slice, we estimate the position on the last trading day in April 2005 and the first trading day in May 2005 only. In the last slice, we estimate the position from the last trading day in April 2005 to the last trading day in May 2005.

Table 1. The rolling window scheme keeps the size of the data at two years in the full estimation.

After slicing to include the last trading day in the month of partial estimation, the first rolling sample is completed. In the next rolling sample, we start over with a full estimation, using the network from June 2003 through May 2005, and then we continue the slicing with partial estimation. This process is repeated until all 216 rolling samples are completed.

To calculate systemic risk, we must ensure that only the latest up-to-date information is involved and none of the future information is included. Therefore, we calculate only the value of systemic risk from May 2005 to April 2023, which are the trading days involved in the partial estimation. As an example, to assess the systemic risk on the first trading day in May 2005, we first conduct a full estimation of the financial networks from May 2003 to April 2005, and then we conduct a partial estimation to obtain the configuration of the financial space on the first trading day in May 2005. Finally, we use EquationEquation (14)(14)

(14) to calculate the value of systemic risk. The computational details related to the proposed systemic risk score are available in Appendix C.

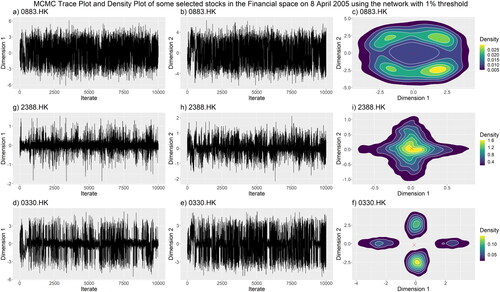

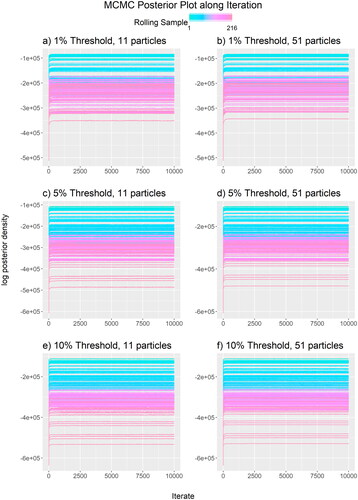

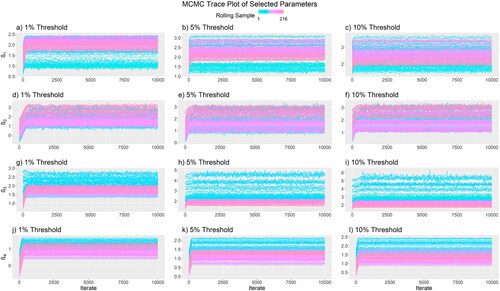

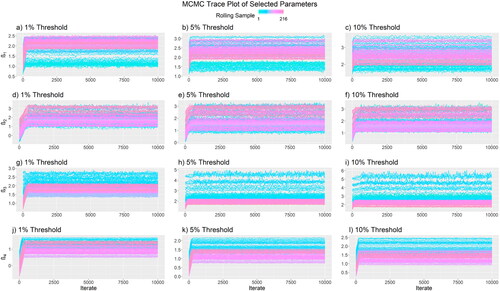

In Appendix D.1, D.2, and E, the posterior density plot and trace plot provide evidence that the Markov chain has converged before the burn-in period. However, despite using the transformation described in Section 3.2 to establish the unique representation, we observe in Appendix E that the posterior density exhibits multiple modes. Furthermore, Appendix E demonstrates that the posterior mean yields a lower value in the posterior density compared to the posterior mode. Therefore, when computing the systemic risk score and determining the values utilized in the partial estimation, we employ the posterior mode instead of the posterior mean. Although our financial space model produces a multi-modal posterior density, the systemic risk score, as defined in EquationEquation (14)(14)

(14) , remains invariant to translation, reflection, and rotation in the financial space. This invariance arises because the score solely considers the distance between pairs of stocks in the financial space, disregarding their specific positions in the space.

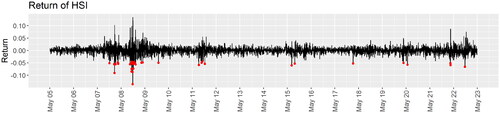

4.2. Data

We first set up the period that is regarded as turbulence. In , we mark with red dots the trading days that the return of HSI has dropped below three standard deviations from the mean over the whole study period. We find that it has covered most financial turbulence in the study period, including but not limited to the subprime mortgage crisis and the global financial crisis from mid-2007 to early 2009, the fluctuation from mid to late 2011, China’s stock market turbulence (Han and Khoojine Citation2019) from mid-2015 to early 2016, the trade war between the US and China (Liu Citation2018) in 2018, the COVID-19 pandemic in early 2020, and the rapid transmission of COVID-19 in Hong Kong and the continuation of warfare in Ukraine (Lockett Citation2022; Jiaxing Li Citation2022) in 2022. Therefore, we select the periods mentioned above as the turbulence.

Figure 1. Return of HSI. The red dots are the trading days that the return dropped below the three standard deviations from the mean over the whole study period.

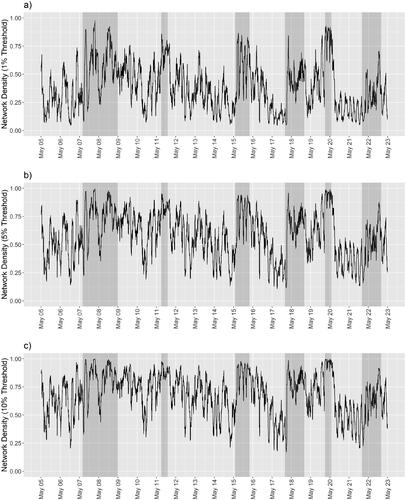

In , we present the densities of

and

the time series of networks constructed using the critical values for a 21-day historical correlation to be significantly positive at the

and

level, respectively. The regions shaded in gray are the periods when the financial turbulence occurs. We find that, except during 2022, when there is financial turbulence, the network densities often reach higher values than those during normal periods. The differences are more apparent in

than in

and

The differences in network densities between the normal and turbulent periods allow us to recognize an early signal when we observe an unusual increase in network densities. This builds the foundation for our further analysis of the network and the embedded financial space.

4.3. Properties of Our Proposed Measure in Financial Risk Management

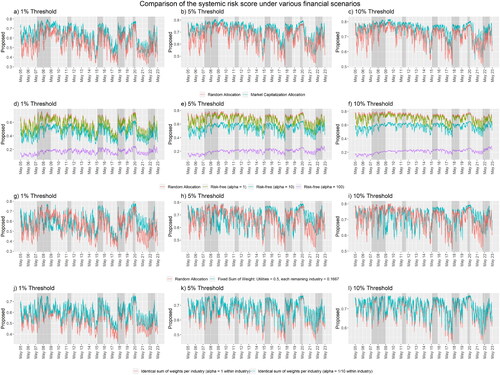

We plot the results of the comparisons in , in which each plot refers to a financial scenario comparing the specifications of the priors listed in Section 2.2. We summarize the four financial scenarios of interest in .

Figure 3. The systemic risk scores in four financial scenarios (Scenario 1: (a–c); Scenario 2: (d–f); Scenario 3: (g–i); Scenario 4: (j-l)) under three different threshold values of correlation in the construction of networks (1% Threshold: (a, d, g, j); 5% Threshold: (b, e, h, k); 10% Threshold: (c, f, i, l)). The red lines refer to the baseline systemic risk scores. The lines in other colors refer to the measurements of systemic risk under specific considerations.

Table 2. The four financial scenarios that make use of the specifications of hyperparameters and distributions of choice are listed in Section 2.2.

The first specification represents a scenario that considers market capitalization, which equates to the total dollar value of a stock. A larger company often has a higher market capitalization and hence makes a greater contribution to systemic risk. Therefore, to calculate systemic risk, we use Dirichlet priors for the weights with the hyperparameters

proportional to the market capitalization shared among all stocks in the study; in other words, the prior mean is proportional to the market capitalization. To encourage the selection of weights that are close to the prior mean, we set the sum of all

to be the reciprocal of the smallest

so that all entries of

are at least 1. This arrangement assigns a higher level of importance to those combinations of weights that follow the market capitalization closely. The sum of the hyperparameters controls the penalty on the level of importance for those weights deviating from the proportion of market capitalization. In , the systemic risk score under the market capitalization allocation often has a higher systemic risk than the baseline. This is because approximately

of the total market capitalization is shared by one-fourth of the stocks. Therefore, a change in log odds in any of the dominating stocks in the financial space produces a greater effect on the systemic risk score than on the baseline.

The second specification includes in the analysis a risk-free asset, which does not contribute to systemic risk. Therefore, to reduce the impact brought about by financial instability, we allocate a certain proportion of money to the risk-free asset. To measure the systemic risk encountered in the portfolio with different preferences for the proportions allocated to the risk-free asset, we assign hyperparameters corresponding to each preference. First, we set the hyperparameters for all risky assets

This provides a fair ground for comparison with the baseline, which always has zero weight assigned to the risk-free asset. We then consider three cases, with each taking a different hyperparameter for the risk-free asset

The higher the hyperparameter for the risk-free asset, the higher the average proportion allocated to it. In our study, because the number of stocks varies over time, the corresponding average proportion allocated to the risk-free asset is also dynamic. Using the number of stocks that constitute the HSI on May 2023 – that is,

– when

and 100, the mean weights for the risk-free asset are

and

respectively. In , the higher the hyperparameter that is assigned to the risk-free asset, the smaller the systemic risk is, and the gap between cases is wider during financial turbulence than during normal periods. Moreover, none of the four lines cross each other. Therefore, we can claim that increasing the allocation to a risk-free asset always decreases the effect of financial turbulence on the portfolio, especially during financial turbulence. Thus, practitioners could design their portfolios by changing the proportion allocated to risk-free assets, to monitor the systemic risk encountered in their investments.

The third specification assigns a fixed-weight sum of stocks in each industry. We demonstrate this with a focus on the Utilities industry to study the impact of the COVID-19 pandemic and the global energy crisis (Rankin et al. Citation2021). In our study, stocks are classified in industries. Therefore, the sum of weights for the Utilities industry is 0.5, while for all other industries the fixed sum of weights is equally shared, i.e.

for each of the remaining three industries. Using the stock classification by HSI on May 2023, there are five stocks from the energy industry, so their average weights are

This specification shows the systemic risk of the Utilities industry; maintaining half of the weights from other industries accounts for the impact outside the Utilities industry. We observe that before March 2020, the systemic risk of the energy industry is more or less close to the baseline, but after March 2020, the systemic risk is usually above the baseline. This observation matches the actual situation, in which the COVID-19 pandemic and the global energy crisis increased systemic risk in the Utilities industry.

The last specification also presets a sum of the weights of stocks in each industry. We allocate assets to the stocks equally in each industry, but we prefer only a few within each industry. In traditional portfolio theory, investing in a wider variety of stocks diversifies risk. In this specification, each industry shares the same preset sum of weights. However, amateur investors often do not allocate their money equally to all stocks and instead select only a few from each industry. Although the average weight is identical to both the specification of interest and the baseline, we find that the systemic risk score for the specification of interest generally is higher than the baseline. This coincides with conventional wisdom, as each relationship between companies becomes more important.

4.4. Comparisons of Our Proposed Measures with Alternative Formulations

We also compare the performance on raising an early signal by the systemic risk scores and other systemic risk measures in the literature. We again use the market capitalization allocation for the weights. We show the point-to-point comparison plot produced by dynamic time wrapping for each of the formulations of the same systemic risk scores and some common systemic risk measures that are listed in Appendix A.

To facilitate the discussion, we let

and

be the time series of the systemic risk score constructed using the methods in EquationEquation (14)

(14)

(14) , where the corresponding adjacency matrices of the input time series of the networks to estimate

are

and

respectively. Let

and

be the time series of systemic risk score constructed using the methods in EquationEquation (A2)

(A2)

(A2) , where the adjacency matrices to compute the systemic risk score on trading day t are

and

respectively. Let

and

be the time series of the systemic risk score constructed using the methods in EquationEquation (A5)

(A5)

(A5) , where the input time series of networks to obtain estimated log odds are

and

respectively.

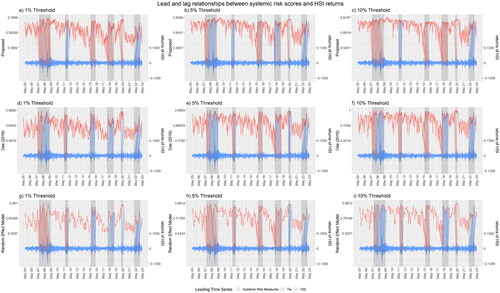

In , the systemic risk measures and the returns in the HSI are in red and blue, respectively. Each dotted line links two points so that there is one from each time series. The color of the dotted line indicates the leading time series. The gray dotted line indicates a tie. We evaluate the performance of each systemic risk measure by observing the period preceding the turbulence, where the periods of turbulence are shaded in gray. The red dotted line between the two curves indicates that the systemic risk measure has shown a pattern that later appears in the HSI returns.

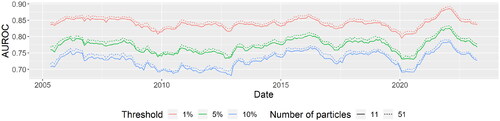

Figure 4. We compare by dynamic time wrapping the performance of the Das (Citation2016) and our proposed systemic risk scores (red): using the financial space model (a–c), using the adjacency matrix directly (d–f), and using a random effects model (g–i), with the returns of the HSI (blue) as the reference. We construct the network using the critical value for 21-day of historical correlation to be significantly positive at the (a, d, g) (b, e, h)

and (c, f, i)

levels. We only present those dotted lines that are relevant to financial turbulence, to indicate the lead and lag relationships during the turbulence.

shows that regardless of the choice of systemic risk contribution and the threshold level in the construction of the network, we observe that there is an unusual climb in the values of the systemic risk score on or before the market crashes. Then, the systemic risk score falls gradually and keeps fluctuating until the next market crash.

also shows the performance of the systemic risk score in detecting an early signal of financial instability. To better illustrate, we have selected parts of the wrapping curve (dotted lines in the figures) that are relevant to the market crashes. We first observe the results from the network with the financial space. Before the global financial crisis in mid-2007, both the and

lead the market return for 6 months during the crisis and continued to increase for 3 to 6 months throughout the crisis period. We believe with the 1% and 10% threshold, the systemic risk score successfully represents an early signal about the market. In the market crash brought on by the COVID-19 pandemic, the

and

value leads the market return for 3 months, while only the

values lead for 6 months. In

the number of days ahead of the crashes brought on by the COVID-19 pandemic is also too long to claim as an early signal. The

and

values continued leading for three months during the crisis, and the systemic risk score is also climbing during the crisis period. Therefore, we believe that an early signal is demonstrated successfully.

On the other hand, in the market turbulence from mid to late 2011, the systemic risk score failed to raise an early signal. All three systemic risk scores were lagging behind the market return. In the crashes from mid-2015 through early 2016, the systemic risk score also failed to raise an early signal. Although all of them lead the market before the crashes, the number of days ahead of the crashes in all the three

and

are also too long to claim it as an early signal. Moreover, the climbing trend comes too late to raise an early signal. In the market turbulence in 2018, not to mention the lagging

although all of the

and

lead the market return, the number of days ahead are too long. Therefore, no early signal appears. Finally, in the market crash in 2022, none of the

and

leads the market returns throughout the period. Although we observed a climb near the end of March, the systemic risk score has already missed the greatest drop in that period. Therefore, early signals also do not appear.

Different results are delivered when we use the adjacency matrix instead of the financial space to measure the contributions to systemic risk, i.e. the formulation proposed by Das (Citation2016). As is shown in the middle row of , during the global financial crisis from mid-2007 to early 2009, the systemic risk score does not lead the market return throughout the crisis for all the

and

During the crashes brought by the COVID-19 pandemic, only the

leads for three months, giving a warning signal for the crisis, and in the remaining crashes, the systemic risk score lags the market returns. The leading by the

does not hold throughout the whole period of the COVID-19 pandemic crash. The

lags the market returns. In the market turbulence in mid to late 2011, the

has raised an early signal as it has led the market for 2 to 3 months. However, the leadership does not hold in

and

During the crashes in mid-2015 through early 2016, both

and

lead the market return, but the number of days ahead of the crashes is either too short or too long to claim it as an early signal. Moreover, the climbing trend in

comes too late to raise an early signal. None of

and

lead the market throughout the whole crashes in 2018 and 2022.

Similar observations were found when we used the random effects model to measure the contribution to systemic risk. The systemic risk score led the market returns during the global financial crisis from mid-2007 to early 2009 only in and

and the leadership with the

does not persist throughout the whole period. For the market crash brought by the COVID-19 pandemic, only the

leads for three months, giving a warning signal for the crisis. In the market turbulence from mid to late 2011, all the

and

failed to lead the market throughout the whole period. In the market crash from mid-2015 through early 2016, the

and

thresholds lead for 6 months. However, it is hard to claim that as a warning signal because the value of systemic risk is moving downward. In other combinations of thresholds and crashes, the systemic risk scores lag the market returns. In the turbulence throughout 2018,

lags the market return. Even though

leads the market return in the market, the systemic risk score is declining in the pre-crisis period and not lead throughout the whole period. The

also suffers from a similar issue but lags the market return in most of the turbulence period.

We also compare the number of times that the systemic risk score leads the market returns with reference to and . The proposed systemic risk score has a similar number of time points that leading the returns of the HSI when comparing them with the scores using the adjacency matrix and the random effects models. Comparing the systemic risk score based on the the proportion of time that the

leads the market returns is larger than

followed by

Comparing the systemic risk score based on the

the proportion of time that the

leads the market returns is smaller than both

and

Finally, comparing the systemic risk score based on the

the proportion of time that

leads the market returns is the least, followed by the

and finally the

We believe that it is more important to lead the market returns, as an early signal, in the proximity of the crashes. Therefore, in terms of the early signal that we have successfully demonstrated, the systemic risk score based in the financial space performs better than the other two setups do.

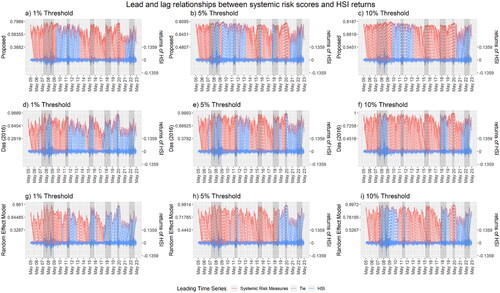

Figure 5. The complete wrapping curves between the Das (Citation2016) and our proposed systemic risk scores (red): using the financial space model (a-c), using the adjacency matrix directly (d-f), and using a random effects model (g-i), with the returns of the HSI (blue) as the reference. We construct the network using the critical value for 21-day of historical correlation to be significantly positive at the (a, d, g),

(b, e, h), and

levels (c, f, i).

Table 3. Number of time points in the wrapping curve that each systemic risk score leads, ties with, or lags the returns of the HSI, as summarized from .

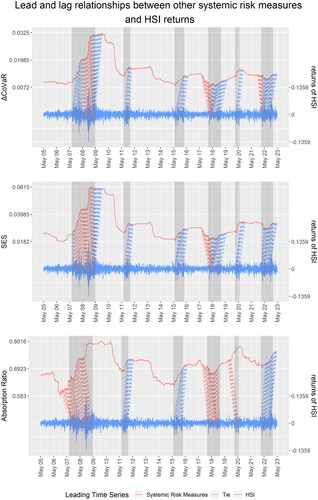

Next, we consider the performance of other systemic risk measures. shows the performance of three other systemic risk measures in providing an early signal of financial instability. Again, those regions shaded in gray are the periods of market turbulence or worldwide financial incident. We have selected the parts of the wrapping curve that are relevant to the market crashes for better illustration.

Figure 6. We compare here by dynamic time wrapping the performances of three other systemic risk measures: (a) CoVaR, (b) the systemic expected shortfall, and (c) the absorption ratio, using the returns of the HSI as the reference time series. We include only the dotted lines for the time points that are relevant to financial turbulence.

Although all three measures climb during most market crashes, the systemic risk measure seldom leads the market returns throughout the periods of the crashes. Among all of the other systemic risk measures considered in this study, only the absorption ratio (AR), defined in Appendix A.5, leads the market returns during the global financial crisis from mid-2007 to early 2009, the turbulence throughout 2018, and also during the crash of the COVID-19 pandemic, for 6 to 9 months. However, as an early signal, the AR may not be dependable given the low position of the value of the AR before the global financial crisis from mid-2007 to early 2009. It is even dropping before the turbulence throughout 2018. The only time that we can claim the AR as an early signal is before the COVID-19 pandemic, when the AR keeps climbing, especially with a jump after late 2019, until mid-2020.

In the global financial crisis from mid-2007 through early 2009, CoVaR leads only from mid-2008 to early 2009, which covers the strongest period of the crash throughout the study period. However, the measure lags during the remaining period of the crisis. The Systemic Expected Shortfall (SES), defined in Appendix A.4, leads from mid-2007 to early 2009 but still does not lead throughout the whole financial crisis, and it also lags the market returns in the most remaining market crashes.

5. Discussion