Abstract

This paper proposes an artificial neural network (ANN) architecture for solving nonlinear fractional differential equations. The proposed ANN algorithm uses a truncated power series expansion to substitute the unknown functions in the equations. The resulting set of algebraic equations is then solved with the ANN technique in an iterative minimization process. Finally, numerical examples are provided to demonstrate the usefulness of the ANN architecture. The results verify that the suggested ANN architecture achieves high accuracy and good stability.

1. Introduction

Fractional calculus studies integrals and derivatives of arbitrary order, together with their mathematical properties and applications. Fractional operators are well suited to describing systems with hereditary properties and memory. Recently, this topic has gained popularity among scientists due to its extensive use in several branches of engineering and science [Citation1–6]. The investigation of fractional differential equations (FDEs) has received significant interest and is now an evolving research topic. In [Citation7], the authors investigated the stability and system properties of a Caputo-sense fractional model of Nipah virus transmission. In [Citation8], the human liver is modeled mathematically using the Caputo-Fabrizio fractional derivative and analyzed numerically with real clinical data. In [Citation9], a regularized Ψ-Hilfer fractional derivative is analyzed, along with various applications. The study of [Citation10] examines a mathematical model for a real-world cholera outbreak employing the Caputo fractional derivative. In [Citation11], the authors examined the dynamics of an accelerating mass-spring system within the framework of fractional calculus. Fractional-order differential equations are typically difficult to solve analytically, and their approximate and numerical solutions are of growing interest to researchers. Therefore, it is critical to develop robust and effective strategies for solving FDEs.

Compared to conventional numerical techniques, ANN approximation appears to be less sensitive to the spatial dimension. The ANN approach is flexible with respect to the mesh: it does not deal with the mesh directly and only needs to solve an optimization task. Based on these characteristics and benefits, Lagaris et al. [Citation12] were early proponents of neural network techniques for differential equation problems. In [Citation13], a second-order perturbed delay Lane-Emden model arising in astrophysics is solved using a neuro-swarm technique based on ANN. In [Citation14], the classic Lane-Emden model is used to create a singular third-order perturbed delay differential model with its two kinds, and it is solved using the ANN procedure. In [Citation15], a neuro-evolutionary method based on ANN is given for a class of singular, singularly perturbed boundary value problems. The authors of [Citation16] analyze a fractional singular perturbed model in terms of its fractional-order derivatives and perturbation terms. In [Citation17], the authors present a computationally intelligent method to solve the third kind of nonlinear pantograph Lane-Emden differential problem. In [Citation18], a singular multi-pantograph delay differential equation is solved using the ANN technique optimized with a genetic algorithm. In [Citation19], a Python library named NeuroDiffEq is proposed to solve differential equations with ANN. In [Citation20], Wu et al. developed a wavelet neural network (WNN) structure to solve fractional differential equations and gave the conditions for convergence. In [Citation21], Gao et al. proposed a triangle neural network to solve Caputo-type fractional differential equations. In recent years, the use of ANNs to solve FDEs has attracted a lot of attention from various scholars and specialists [Citation22–25].

ANNs are gaining importance due to their numerous benefits. For example, they provide error tolerance and resilience, since quantitative or qualitative characteristics are stored in a distributed manner across the neurons of the network, and they can model highly nonlinear relationships. They may use parallel distributed processing techniques, which can accelerate large-scale computations, and they can recognize and adapt to new or uncertain systems. Additionally, the power series approach can be used to solve challenging mathematical problems: it offers a series polynomial as the solution function, and its coefficients can be computed. Various real-world problems have been modeled with FDEs, as mentioned above. Simple FDEs can be solved analytically. However, solving complex FDEs is challenging for both analytical and numerical methods. In this paper, we produce approximate solutions of FDEs, including a specific fractional-order Lane-Emden equation and a nonlinear higher fractional-order differential equation. For this purpose, we integrate the ANN structure with the truncated power series approach in an iterative minimization process. Here, we employ Caputo's definition of fractional derivatives.

The rest of the paper is organized as follows. Section 2 presents the framework, including the definitions and notation of fractional calculus, a brief overview of the problem, and the truncated series expansion used to express the solution. Section 3 describes the implementation of the ANN algorithm, in which the problem becomes a minimization problem by applying the mean squared error function. Section 4 provides numerical examples to demonstrate the usefulness of the suggested approach. Section 5 concludes the study and gives the future direction of the approach.

2. Preliminaries

This section first provides some basic notation and concepts of fractional calculus.

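Definition 2.1

Let $f$ be a differentiable function on $[0, b]$. The fractional integral of order $\nu > 0$ of $f$ is defined as

$$I^{\nu} f(x) = \frac{1}{\Gamma(\nu)} \int_{0}^{x} (x - t)^{\nu - 1} f(t)\, dt, \tag{1}$$

where $\Gamma(\cdot)$ represents the Gamma function.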

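Definition 2.2

Let $f$ be a differentiable function on $[0, b]$; then the Caputo fractional derivatives of $f$ are defined by

$${}^{C}D^{\nu} f(x) = \frac{1}{\Gamma(n - \nu)} \int_{0}^{x} (x - t)^{n - \nu - 1} f^{(n)}(t)\, dt, \quad n - 1 < \nu < n, \tag{2}$$

$${}^{C}D^{\nu} f(x) = f^{(n)}(x), \quad \nu = n, \tag{3}$$

where $\nu$ is a fractional number, $n$ is a natural number ($n \in \mathbb{N}$), and $\Gamma(\cdot)$ represents the gamma function.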

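In particular, the Caputo fractional derivatives of a constant and of a power function are computed as follows:

$${}^{C}D^{\nu} C = 0, \tag{4}$$

and

$${}^{C}D^{\nu} x^{\beta} = \begin{cases} 0, & \beta \in \mathbb{N}_0,\ \beta < \lceil \nu \rceil, \\ \dfrac{\Gamma(\beta + 1)}{\Gamma(\beta + 1 - \nu)}\, x^{\beta - \nu}, & \beta \in \mathbb{N}_0,\ \beta \ge \lceil \nu \rceil, \end{cases} \tag{5}$$

where $C$ denotes the constant and $\lceil \nu \rceil$ is the least integer greater than or equal to $\nu$. In the following work, we use $D^{\nu}$ instead of ${}^{C}D^{\nu}$ for convenience.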
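The Caputo derivative of a power function is the building block of the series approach used later, so a small numerical check may be useful. The following Python sketch assumes a lower terminal of 0, a non-negative integer power `beta`, and `x > 0`; the function name `caputo_power` is illustrative and is not taken from the paper's code.

```python
import math


def caputo_power(beta: int, nu: float, x: float) -> float:
    """Caputo derivative of order nu of x**beta, evaluated at x.

    Assumes lower terminal 0, beta a non-negative integer, nu >= 0.
    """
    if nu < 0 or beta < 0:
        raise ValueError("nu and beta must be non-negative")
    # Integer powers below ceil(nu) vanish, just like constants do.
    if beta < math.ceil(nu):
        return 0.0
    # Standard power rule: Gamma(beta+1) / Gamma(beta+1-nu) * x**(beta-nu)
    return math.gamma(beta + 1) / math.gamma(beta + 1 - nu) * x ** (beta - nu)


half_derivative_of_x = caputo_power(1, 0.5, 1.0)   # equals 1 / Gamma(1.5)
first_derivative_of_x2 = caputo_power(2, 1.0, 3.0)  # reduces to 2 * x
```

For integer orders the rule reduces to the classical derivative, which gives a quick sanity check on the implementation.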

2.1. Problem statement

In this paper, we consider a nonlinear fractional differential equation such as

(6)

subject to the initial conditions as follows.

where

is the fractional order,

is an analytical rational function, and

are known analytical functions of real value.

The Lane-Emden equation models a variety of problems in astrophysics and mathematical physics [Citation26, Citation27]; it gives rise to a nonlinear singular initial value problem on a semi-infinite domain. The general form of the Lane-Emden equation is as follows:

$$y''(x) + \frac{l}{x}\, y'(x) + f(x, y) = g(x), \tag{7}$$

where $l > 0$, with initial conditions $y(0) = A$, $y'(0) = B$.

On the other hand, several scholars have recently modeled the fractional-order Lane-Emden equation. Taking the derivatives in the Caputo sense allows Equation (7) to be generalized to the following arbitrary-order Lane-Emden equation:

$$D^{\alpha} y(x) + \frac{l}{x^{\alpha - \beta}}\, D^{\beta} y(x) + f(x, y) = g(x), \tag{8}$$

where $l > 0$, $1 < \alpha \le 2$, $0 < \beta \le 1$, with $y(0) = A$, $y'(0) = B$. This type of problem is solved using the ANN as an example in this study. We also apply the ANN to higher-order fractional differential equations.

Conventional power series approaches are used to solve ordinary differential equations. In this approach, a truncated power series expansion substitutes the unknown functions in the equations. Once the unknown functions have been substituted, the problem yields a set of equations at discrete points with unknown coefficients. This set of algebraic equations is then solved using the artificial neural network technique.

Here, we express the power series as follows.

(9)

where

are the unknown coefficients. Substituting Equation (9) into Equation (6), we have the following.

(10)

Here,

, where

is the size of the mesh on the x axis and

. Next, we arrive at the discretization shown below.

(11)

where m is the order of the power series polynomial.
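To illustrate the substitution step, the sketch below evaluates a truncated power series and its Caputo derivative term by term on a set of mesh points. It assumes a uniform mesh x_j = j·h on (0, x_end] and a coefficient list `coeffs`; these names, the helper functions, and the sample coefficients are hypothetical and are not taken from the paper's repository.

```python
import math


def caputo_monomial(k, nu, x):
    """Caputo derivative of order nu of x**k (lower terminal 0, integer k >= 0)."""
    if k < math.ceil(nu):
        return 0.0
    return math.gamma(k + 1) / math.gamma(k + 1 - nu) * x ** (k - nu)


def series_value(coeffs, x):
    """Value of sum_k coeffs[k] * x**k."""
    return sum(a * x ** k for k, a in enumerate(coeffs))


def series_caputo(coeffs, nu, x):
    """Caputo derivative of the truncated series, applied term by term."""
    return sum(a * caputo_monomial(k, nu, x) for k, a in enumerate(coeffs))


def uniform_mesh(n_points, x_end):
    """Mesh points x_j = j * h for j = 1..n_points, with h = x_end / n_points."""
    h = x_end / n_points
    return [j * h for j in range(1, n_points + 1)]


coeffs = [1.0, 1.0, 0.0, 0.0]          # hypothetical series 1 + x with m = 3
mesh = uniform_mesh(5, 1.0)
values = [series_value(coeffs, x) for x in mesh]
half_derivs = [series_caputo(coeffs, 0.5, x) for x in mesh]
```

Because the derivative acts on each monomial separately, the unknown coefficients enter the discretized equations linearly in the derivative terms, which is what turns the problem into a set of algebraic equations at the mesh points.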

3. ANNs approach

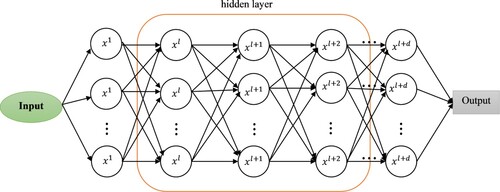

The Universal Approximation Theorem [Citation28] states that an artificial neural network can approximate any continuous function on a closed interval of real space to arbitrary accuracy. In this work, we applied a multilayer perceptron (MLP) neural network architecture with an input layer, two hidden layers of n neurons each, and an output layer.
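As a rough illustration of such an architecture, the following NumPy sketch builds a fully connected MLP with ReLU hidden layers and runs one forward pass. The layer sizes, the He-style initialization, the seed, and the function names are assumptions made for the example; the paper does not specify them at this point, and the authors' implementation may differ.

```python
import numpy as np


def relu(z):
    """Elementwise rectified linear unit."""
    return np.maximum(z, 0.0)


def init_params(layer_sizes, seed=0):
    """Create (weight, bias) pairs for consecutive layers (assumed He-style init)."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        b = np.zeros(n_out)
        params.append((W, b))
    return params


def forward(params, x):
    """Forward pass: ReLU on hidden layers, linear output layer."""
    a = x
    for W, b in params[:-1]:
        a = relu(a @ W + b)
    W, b = params[-1]
    return a @ W + b


# One input, two hidden layers of 30 neurons, one output (illustrative sizes).
params = init_params([1, 30, 30, 1])
x = np.linspace(0.0, 1.0, 11).reshape(-1, 1)
y = forward(params, x)
```

Each hidden layer applies the activation to a linear combination of its inputs, and the output layer is left linear so the network can produce values of either sign.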

Each hidden neuron in Figure 1 applies a function to the linear combination of the weight matrix and the neuron's inputs (the learning algorithm optimizes the model parameters ). The output of each hidden neuron is used as the input of the next neuron, and the output of the output-layer neuron makes up the output of the MLP neural network. The ANN used in this work to solve the FDE (6) can be mathematically expressed as Equation (11).

The interaction between the neurons in the neural architecture can be expressed mathematically as follows:

(12)

(13)

where

and

denote the weight matrices corresponding to the associated inputs

and

respectively,

denotes the bias, σ is the activation function, and

represents the output of the network. Here, we discretize x into the set of points

on the interval

where

.

The loss function is defined as follows when the neural network is used to compute the numerical solution of problem (6):

(14)

where λ is a known, manually chosen parameter, and

is a regularization term that helps to push outlier weights nearer 0 but not quite to 0. For ease of expression, the aforementioned mathematical letters

can be written as below.

(15)

The parameter

is trained through the neural network as shown in Equation (14). After that, the value of the right side of (8) can be compared with the value of the left side of (8), and the overall error is obtained by adding the error functions such as

(16)

then, the following optimization problem is obtained

(17)

Figure 1 represents the structure of the neural network, and the hidden neurons of its hidden layers are described in Equation (12). Finally,

is obtained via the forward propagation of Figure 1. Then substitute

and the discrete point

into both sides of Equation (11), and execute Equation (14). Equation (11) serves as a loss function for adjusting

and

.

We employ the ReLU function as the activation function in this neural network because ReLU provides the following benefits. First, it can speed up network training because its derivative is simpler to compute than those of the sigmoid and tanh functions. Second, it introduces nonlinearity: because it is a nonlinear function by nature, it lets the network fit nonlinear mappings. Third, it mitigates vanishing gradients. The derivatives of the sigmoid and tanh functions are near 0 when the input is very large or very small, but since ReLU does not saturate for positive inputs, this does not occur there. Finally, it can reduce overfitting by making the network sparse, because the output is zero for inputs below zero and nonzero for inputs above zero.
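The saturation argument can be checked numerically. The short Python sketch below compares the derivatives of ReLU, sigmoid, and tanh at a moderate and a large input; the function names are illustrative, and the derivative of ReLU at exactly 0 is set to 0 by convention.

```python
import math


def relu(z):
    return max(z, 0.0)


def relu_grad(z):
    # Convention: the derivative at z == 0 is taken as 0.
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2


# Derivatives at a moderate input (z = 1) and a large input (z = 10).
grads = {
    name: (fn(1.0), fn(10.0))
    for name, fn in [("relu", relu_grad), ("sigmoid", sigmoid_grad), ("tanh", tanh_grad)]
}
```

At the large input the sigmoid and tanh derivatives are close to zero while the ReLU derivative stays at 1, which is the behavior the paragraph above relies on. For negative inputs the ReLU derivative is zero, which is also the source of the sparsity mentioned there.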

We adopt the adaptive moment estimation (Adam) approach to minimize the loss function because it has proven successful in real-world applications. Compared to other adaptive learning rate approaches, it converges faster and learns more reliably. Additionally, it can address problems seen with other optimization methods, including a vanishing learning rate, slow convergence, or large fluctuations of the loss function caused by high-variance parameter updates.
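For readers unfamiliar with Adam, the following NumPy sketch implements the standard update rule with bias-corrected first and second moment estimates and applies it to a toy quadratic. The hyperparameter values, the function name `adam_minimize`, and the toy objective are assumptions for illustration; they are not the settings used in the paper's experiments.

```python
import numpy as np


def adam_minimize(grad_fn, theta0, lr=0.01, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=2000):
    """Minimize a function given its gradient, using the Adam update rule."""
    theta = np.asarray(theta0, dtype=float).copy()
    m = np.zeros_like(theta)  # first moment (running mean of gradients)
    v = np.zeros_like(theta)  # second moment (running mean of squared gradients)
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        m_hat = m / (1.0 - beta1 ** t)  # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        theta -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta


# Toy objective: ||theta - target||^2, whose gradient is 2 * (theta - target).
target = np.array([1.0, -2.0])
theta = adam_minimize(lambda th: 2.0 * (th - target), np.zeros(2))
```

The per-parameter scaling by the second moment is what makes the step size adaptive; in a real training loop `grad_fn` would be replaced by the gradient of the network loss with respect to the weights.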

4. Numerical simulations

In this part, we provide three examples to demonstrate the validity and effectiveness of the proposed ANN algorithm. All numerical results in the following examples were computed with Python 3.9.1. In the examples, the proposed MLP neural network architecture has three hidden layers: the first two have 30 neurons each, and the third has 3. These hidden layers are fully connected with one input layer and one output layer. This proposed architecture is referred to as Net.4 in the rest of the paper. Here, the results of Net.4 are compared to Net.1 [Citation22], Net.2 [Citation25], and Net.3 [Citation25]. More details about these neural networks are given in Table 1. We take m = 3 and compute the following mean square error (MSE) in numerical experiments to make comparisons easier.

(18)

where n represents the number of epochs.
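A minimal Python sketch of the two error measures used in the comparisons is given below: the mean squared error and the pointwise absolute error between exact and approximate values. It assumes the average is taken over sample points; the paper's exact normalization is not reproduced here, and the function names and sample values are hypothetical.

```python
def mean_squared_error(exact, approx):
    """Average of squared differences between two equal-length sequences."""
    if len(exact) != len(approx):
        raise ValueError("sequences must have the same length")
    return sum((e - a) ** 2 for e, a in zip(exact, approx)) / len(exact)


def absolute_errors(exact, approx):
    """Pointwise absolute error |exact - approx|."""
    return [abs(e - a) for e, a in zip(exact, approx)]


# Hypothetical values, only to show the calling convention.
exact = [1.0, 1.5, 2.0]
approx = [1.0, 1.4, 2.2]
mse = mean_squared_error(exact, approx)
errs = absolute_errors(exact, approx)
```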

Table 1. Structure of the proposed and comparative networks.

With a batch size of 64, the MSE is minimized using the Adam optimizer. Adam is a first-order gradient-based method for optimizing stochastic objective functions that has found considerable use in fitting intricate deep neural networks [Citation29]. The approach is reliable, computationally practical, and effective when applied to deep neural networks with many parameters. To obtain the best optimization result, the learning rate, learning rate warm-up, learning rate schedule, momentum, and the choice of regularizers and their parameters must be tuned.
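Since the regularizer and its weight are among the quantities to tune, the sketch below shows one common way to combine a mean squared residual with a weight penalty scaled by a parameter `lam`. It assumes an L2 (squared-weight) penalty, which matches the description of a term that pushes weights toward zero without making them exactly zero; the paper's exact form is not reproduced, and all names and values are illustrative.

```python
import numpy as np


def regularized_loss(residuals, weights, lam=1e-4):
    """Mean squared residual plus lam times the sum of squared weights."""
    residuals = np.asarray(residuals, dtype=float)
    data_term = np.mean(residuals ** 2)
    # Assumed L2 penalty over every weight matrix in the network.
    penalty = sum(np.sum(np.square(W)) for W in weights)
    return data_term + lam * penalty


# Hypothetical residuals at three mesh points and two small weight matrices.
residuals = [0.1, -0.2, 0.05]
weights = [np.ones((2, 2)), np.full((2, 1), 0.5)]
loss = regularized_loss(residuals, weights, lam=0.01)
```

A larger `lam` shrinks the weights more strongly at the cost of a larger residual, so in practice it is chosen together with the learning rate.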

Example 4.1

Consider the problem of the Bagley–Torvik equation [Citation30]

(19)

with the initial conditions

and

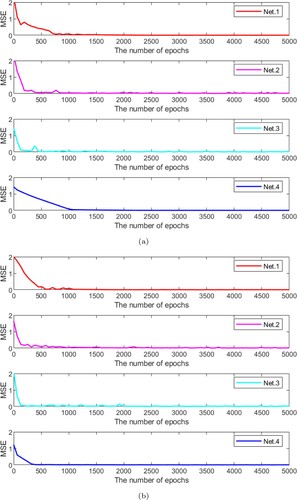

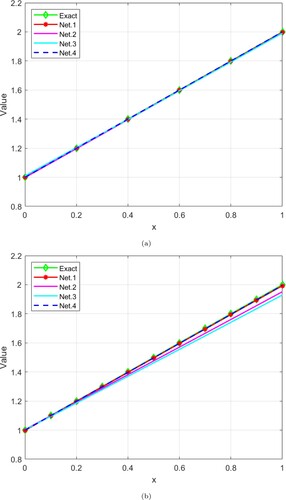

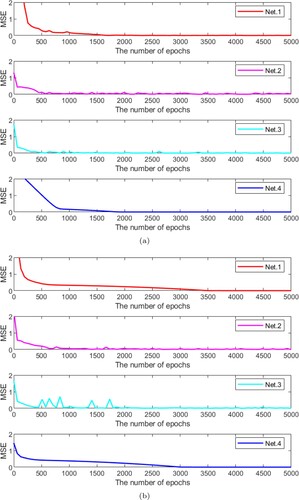

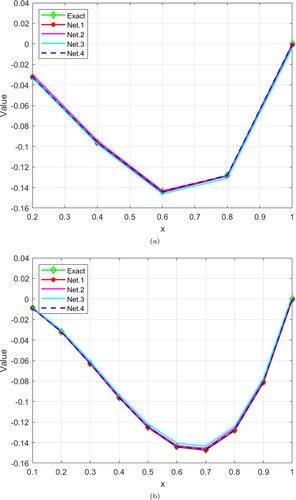

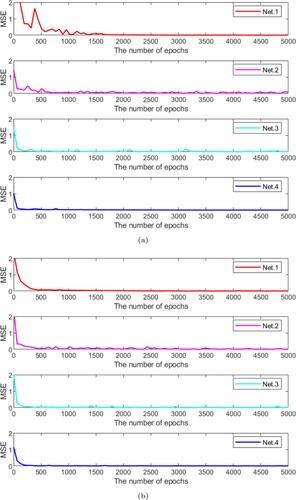

Figure shows how the MSE changes with an increasing number of epochs for the four networks, for n = 6 and n = 11. For Net.2 and Net.3, the value of the loss function first decreases sharply and then fluctuates as the number of epochs increases. In contrast, Net.1 and Net.4 show stable curves after a certain number of epochs, which means the value of the loss function decreases consistently without deteriorating the accuracy. Table 2 lists the absolute error at 5000 epochs, where

represents the exact solution at the sample x and y is the approximate solution for Net.1, Net.2, Net.3, and Net.4. Net.4 achieved higher accuracy than the other comparative networks. The exact and approximate solutions are shown in Figure . The suggested Net.4 works effectively, since the curve of the exact solution almost coincides with the curves of the approximate solutions.

Table 2. Absolute error for epoch = 5000.

Example 4.2

Consider the problem of the fractional order Lane-Emden equation [Citation31]

(20)

with

,

.

In this example, we consider ,

, and

(21)

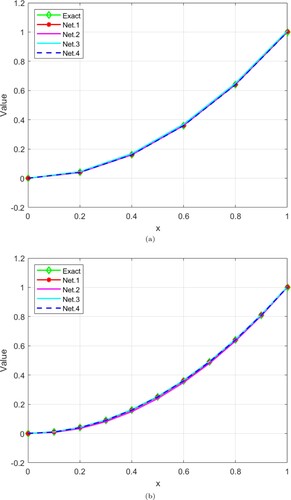

(21) The test Example 4.2 is a special type of fraction differential equation different from Example 4.1. As in the previous Example 4.1, Figure shows the MSE with increasing the number of epochs for proposed architecture (Net.4) and comparative networks (Net.1, Net.2, and Net.3). The absolute error of

is recorded in Table . Additionally, Figure plotted the exact and approximation solutions for various numbers of collocation points.

Table 3. Absolute error of for epoch = 5000.

Example 4.3

Consider the problem of the higher order nonlinear FDE [Citation32]

(22)

with

,

, and

.

Example 4.3 is a higher-order nonlinear fractional differential equation, different from Examples 4.1 and 4.2. As in the previous examples, Figure , Table 4, and Figure display the corresponding results.

Table 4. Absolute error for epoch = 5000.

5. Conclusion

This paper suggests an ANN architecture to solve fractional differential equations. The advantages of the proposed ANN algorithm are examined using three different fractional differential equations, including a higher-order nonlinear fractional differential equation. In the test examples considered, the proposed neural network architecture achieves higher accuracy than the comparative neural networks (Net.1 [Citation22], Net.2 [Citation25], Net.3 [Citation25]) and obtains a more satisfactory approximate solution without mesh discretization. The results obtained here are more accurate than the existing results in the available literature for these examples. We have uploaded the main source code on GitHub: https://github.com/amanojup/FDEsANN.git. The methodology described in this article can be applied to other types of challenging fractional-type problems.

Acknowledgments

The researchers would like to acknowledge the Deanship of Scientific Research, Taif University, for funding this work. Further, the authors are grateful to the reviewers for their fruitful comments. Manoj Kumar is thankful to UGC for providing SRF.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Baleanu D, Diethelm K, Scalas E, et al. Fractional calculus: models and numerical methods. Vol. 3. New Jersey (NJ): World Scientific; 2012.

- Baleanu D, Güvenç ZB, Machado JT, et al. New trends in nanotechnology and fractional calculus applications. Vol. 10. New York (NY): Springer; 2010.

- Bazhlekova E, Bazhlekov I. Viscoelastic flows with fractional derivative models: computational approach by convolutional calculus of dimovski. Fract Calc Appl Anal. 2014;17(4):954–976.

- Arqub OA, Al-Smadi M. An adaptive numerical approach for the solutions of fractional advection–diffusion and dispersion equations in singular case under riesz's derivative operator. Phys A Stat Mech Appl. 2020;540:Article ID 123257.

- Su X, Xu W, Chen W, et al. Fractional creep and relaxation models of viscoelastic materials via a non-newtonian time-varying viscosity: physical interpretation. Mech Mater. 2020;140:Article ID 103222.

- Stefański TP, Gulgowski J. Signal propagation in electromagnetic media described by fractional-order models. Commun Nonlinear Sci Numer Simul. 2020;82:Article ID 105029.

- Baleanu D, Shekari P, Torkzadeh L, et al. Stability analysis and system properties of nipah virus transmission: A fractional calculus case study. Chaos Solitons Fract. 2023;166:Article ID 112990.

- Baleanu D, Jajarmi A, Mohammadi H, et al. A new study on the mathematical modelling of human liver with caputo–fabrizio fractional derivative. Chaos Solitons Fract. 2020;134:Article ID 109705.

- Jajarmi A, Baleanu D, Sajjadi SS, et al. Analysis and some applications of a regularized ψ–hilfer fractional derivative. J Comput Appl Math. 2022;415.

- Baleanu D, Ghassabzade FA, Nieto JJ, et al. On a new and generalized fractional model for a real cholera outbreak. Alex Eng J. 2022;61(11):9175–9186.

- Defterli O, Baleanu D, Jajarmi A, et al. Fractional treatment: an accelerated mass-spring system. Rom Rep Phys. 2022;74:122.

- Lagaris IE, Likas AC, Papageorgiou DG. Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans Neural Netw. 2000;11(5):1041–1049.

- Sabir Z, Said SB, Al-Mdallal Q, et al. A neuro swarm procedure to solve the novel second order perturbed delay lane-emden model arising in astrophysics. Sci Rep. 2022;12(1):1–15.

- Sabir Z, Said SB. Heuristic computing for the novel singular third order perturbed delay differential model arising in thermal explosion theory. Arab J Chem. 2023;16(3):Article ID 104509.

- Sabir Z, Botmart T, Raja MAZ, et al. A stochastic numerical approach for a class of singular singularly perturbed system. PLoS One. 2022;17(11):e0277291.

- Sabir Z, Said SB, Baleanu D. Swarming optimization to analyze the fractional derivatives and perturbation factors for the novel singular model. Chaos Solitons Fract. 2022;164:Article ID 112660.

- Sabir Z, Raja MAZ, Ali MR, et al. An advance computational intelligent approach to solve the third kind of nonlinear pantograph lane–emden differential system. Evol Syst. 2022;12:1–20.

- Sabir Z, Wahab HA, Nguyen TG, et al. Intelligent computing technique for solving singular multi-pantograph delay differential equation. Soft Comput. 2022;16:1–13.

- Chen F, Sondak D, Protopapas P, et al. Neurodiffeq: A python package for solving differential equations with neural networks. J Open Source Softw. 2020;5(46):1931.

- Wu M, Zhang J, Huang Z, et al. Numerical solutions of wavelet neural networks for fractional differential equations. Math Methods Appl Sci. 2021;46:3031–3044.

- Gao F, Dong Y, Chi C. Solving fractional differential equations by using triangle neural network. J Funct Spaces. 2021;2021:1–7.

- Rostami F, Jafarian A. A new artificial neural network structure for solving high-order linear fractional differential equations. Int J Comput Math. 2018;95:528–539.

- Pakdaman M, Ahmadian A, Effati S, et al. Solving differential equations of fractional order using an optimization technique based on training artificial neural network. Appl Math Comput. 2017;293:81–95.

- Hadian-Rasanan AH, Rahmati D, Gorgin S, et al. A single layer fractional orthogonal neural network for solving various types of lane–emden equation. New Astron. 2020;75:Article ID 101307.

- Dai P, Yu X. An artificial neural network approach for solving space fractional differential equations. Symmetry. 2022;14(3):535.

- Singh R. Optimal homotopy analysis method for the non-isothermal reaction–diffusion model equations in a spherical catalyst. J Math Chem. 2018;56(9):2579–2590.

- Singh R. Analytical approach for computation of exact and analytic approximate solutions to the system of lane-emden-fowler type equations arising in astrophysics. Eur Phys J Plus. 2018;133(8):1–12.

- Hornik K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991;4(2):251–257.

- Kingma D, Ba J. Adam: A Method for Stochastic Optimization. San Diego 3rd International Conference for Learning Representations. 2015.

- Momani S, Odibat Z. Numerical comparison of methods for solving linear differential equations of fractional order. Chaos Solitons Fract. 2007;31(5):1248–1255.

- Akgül A, Inc M, Karatas E, et al. Numerical solutions of fractional differential equations of lane-emden type by an accurate technique. Adv Differ Equ. 2015;2015(1):1–12.

- Deshi A, Gudodagi G. Numerical solution of Bagley–Torvik, nonlinear and higher order fractional differential equations using haar wavelet. SeMA J. 2022;79(4):663–675.