ABSTRACT

Less than half of U.S. veterans meet physical activity guidelines. Even though changing physical activity can be challenging, prior studies have demonstrated that it is possible. Older adults are using technology to aid in such behavior change. However, research that explores the mechanisms of how technology can aid in behavior change is lacking, especially among older veterans. Thus, the purpose of this secondary, convergent mixed methods study was to explore how older veterans engaged with technologies that were used during a multicomponent telerehabilitation program. The study included veterans aged ≥ 60 years with ≥ 3 chronic medical conditions and physical function limitation. Quantitative data were collected during the primary randomized controlled trial, and qualitative data were collected via individual interviews following completion of the telerehabilitation program. Data were merged and then analyzed by high vs. low technology engagement groups. Key similarities and differences between groups were identified in five domains: satisfaction with the virtual environment, coping self-efficacy, perceptions of Annie (automated text messaging platform), experiences using the activity monitor, and self-management skills. Findings can help inform the successful integration of similar technologies into physical rehabilitation programs. Further study is warranted to understand additional factors and mechanisms that influence technology engagement in telerehabilitation.

Trial Registration: NCT04942613

Introduction

Physical inactivity is a key contributor to the development and worsening of noncommunicable diseases (Budreviciute et al., 2020), which are the leading cause of premature death and disability worldwide (Division of Global Health Protection, 2023). Within the United States, 50–75% of individuals over the age of 65 with a chronic medical condition do not meet the recommended physical activity guideline of 150 minutes of moderate-to-vigorous physical activity per week (Omura et al., 2021). Among the veteran population, less than 50% meet physical activity guidelines, and this proportion is even lower among veterans who receive care from the Veterans Health Administration (VHA) (Brown et al., 2019; Littman et al., 2009). Increasing and then sustaining routine physical activity is essential. Higher levels of physical activity can reduce the negative sequelae of noncommunicable diseases such as heart disease and diabetes (Budreviciute et al., 2020). Increasing physical activity can also help older veterans age in place through other well-established health benefits such as improved cognition, mood, physical function, and overall well-being (Rhodes et al., 2017; Warburton & Bredin, 2017). Prior research demonstrates that making meaningful changes in daily physical activity behaviors requires more than addressing impairments such as weakness, limited physical function, and pain (Bartholdy et al., 2020; Giannouli et al., 2016; Rapp et al., 2012). Programs designed to increase daily physical activity must also support behavior change by incorporating theoretically informed techniques such as self-monitoring and goal setting.

In recent years, behavior change programs to improve physical activity have included digital technologies either as the primary intervention or a supplemental tool (Alley et al., 2022; Goode et al., 2017; Stockwell et al., 2019). Prior studies have demonstrated the potential value of technology to support physical activity (Aromatario et al., 2019; Goode et al., 2017; Head et al., 2013), but there are limitations. For example, interventions that rely solely on the provision of digital technologies, such as an activity monitor, tend to show no changes in physical activity (Chokshi et al., 2018; Ferrante et al., 2020), suggesting that simply supplying a digital health technology is not enough to facilitate behavior change. Similarly, a qualitative study that explored veteran perspectives on the usefulness of a wearable device for increasing physical activity described mixed opinions about whether the device could help with behavior change (Kim & Patel, 2018). In contrast, programs that use such tools to support behavior change techniques, such as self-monitoring, feedback, and goal setting, show engagement in physical activity (St Fleur et al., 2021; Zubala et al., 2017). However, how digital health technologies aid in behavior change is relatively unexplored (Aromatario et al., 2019).

The use of digital health technologies as a component of behavior change interventions designed to improve physical activity in older adults is fraught with barriers. Some established barriers include reduced cognitive functioning, fine motor control impairments, sensory impairments, and poor internet access (Ahmad et al., 2022; Alexander et al., 2021; Wildenbos et al., 2018). Further, a recent study found that age stereotypes negatively influence how healthcare professionals view the technology capabilities of older adults, suggesting that ageism may be a barrier to adoption of digital health technologies (Mannheim et al., 2021). Other studies of ageism have explored how societal ageist beliefs influence older adults’ views of their own technology capabilities (a phenomenon known as internalized ageism) and showed that such beliefs can reduce the likelihood of older adults’ technology engagement (Choi et al., 2020; Köttl et al., 2021). Given the multitude of barriers older adults may face when using technology, it is important to develop a deeper understanding of solutions and facilitating conditions so that more older adults have the opportunity to use digital technologies to improve their health.

To improve understanding of the mechanisms by which digital health technologies support behavior change among older veterans and older adults’ experiences using technology during an exercise intervention, we conducted a convergent mixed-methods study that was embedded within a randomized controlled feasibility trial. The feasibility trial evaluated a MultiComponent TeleRehabilitation (MCTR) program designed to improve physical function, perceived health, and daily physical activity by comprehensively supporting behavior change. Two of the four components of the program included 1) a biobehavioral intervention and 2) adjunctive technologies. Our aims for this embedded convergent mixed methods study were to: (1) evaluate technology engagement level and identify characteristics associated with high versus low technology engagement (quantitative); (2) evaluate whether technology engagement level was associated with median change in physical activity from pre- to post-program (quantitative); (3) describe participants’ experiences using technologies and their perspectives of how the technologies influenced physical activity (qualitative); and (4) describe salient differences that contributed to high versus low technology engagement (mixed methods).

Materials and methods

Research design

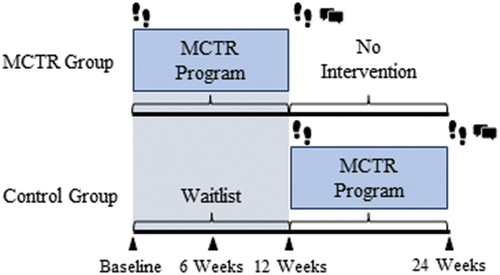

This was an embedded, convergent mixed-methods research study (Curry et al., 2013). We followed the guidelines of Fetters et al. (2013) for reporting a mixed-methods study. The main study was a randomized, outcome assessor-blinded, two-arm feasibility study. Participants were randomized to: (1) intervention or (2) waitlist control. The intervention group started the 12-week MCTR program immediately following randomization. The waitlist control group completed six one-hour education sessions every other week over 12 weeks and then had the option to complete the MCTR program. Further details of the study are described in the trial registration (NCT04942613).

Participants & setting

For the main study, participants were recruited nationally through media postings, and locally through referrals from clinician partners. The MCTR program was delivered by physical therapy clinicians located within the VA Eastern Colorado Health Care System using a site-to-home format where the site refers to the physical location of the physical therapist and home refers to the physical location of the participant. Participants were eligible for inclusion if they were a U.S. veteran ≥60 years of age, had ≥ 3 medical conditions, and had impaired physical function as measured by the 30 second sit-to-stand test. Potential participants were excluded if they had a life expectancy of <12 months, acute or progressive neurological disorder, moderate to severe dementia without caregiver assistance, or any medical condition precluding safe participation in high-intensity progressive rehabilitation. Enrolled participants were consecutively recruited for semi-structured interviews equally from both randomization groups within 3 weeks of completing the MCTR program. Thus, participants in the intervention group were recruited following 12-week outcome assessment, and participants in the waitlist control group were recruited following 24-week outcome assessment. Enrollment for interviews continued until we reached theoretical saturation.

MCTR program

The MCTR program consisted of 4 components: (1) high-intensity rehabilitation, (2) biobehavioral intervention, (3) social support, and (4) technology. The overarching goal of the MCTR program was to improve physical function, perceived health, and daily physical activity. Since the purpose of this secondary analysis was to explore how technology supported program participation and behavior change, the description of the program here will be limited to the biobehavioral intervention and the technology supports.

The biobehavioral intervention (termed ‘coaching’ for participants) was integrated into six of the ten 60-minute individual telerehabilitation sessions and occurred as a stand-alone intervention for two 30-minute booster sessions during weeks 7–12 of the program. The main goals of the coaching sessions were to facilitate program engagement, elicit motivations for change, promote increased physical activity, and teach participants self-management skills such as goal setting and problem solving. All research physical therapists were trained in motivational interviewing (MI) techniques (Miller & Rollnick, 2013) to facilitate delivery and personalization of the coaching.

Technologies included 1) VA Video Connect (VVC), which is the VA-approved telehealth platform for synchronous video visits; 2) a text messaging protocol delivered by the VA’s web-based program called Annie; and 3) activity monitoring (Fitbit or another wearable activity monitor). VVC is a secure videoconferencing platform that can be used in a web browser (e.g. Chrome) or mobile application. VVC includes safety features within the platform that allow clinicians to document the patient’s address so that they can activate emergency medical services in the event of a medical or mental health emergency. Annie is an automated VA text messaging platform that contains multiple health subscriptions; these subscriptions are designed to support a veteran’s health self-management. We developed a specific text messaging subscription to support participants during the MCTR program and enhance biobehavioral interventions. One-way text messages—meaning no participant response was requested—provided education, reminders, and encouragement. Two-way text messages collected data about step counts (once daily), falls (once weekly), goals (once weekly starting in week 5), and goal progress (once weekly starting in week 5). The full text message subscription is detailed in a previous manuscript (). Finally, participants were offered a Fitbit Versa 2 (Fitbit, San Francisco, California) for activity monitoring. Some participants already owned an activity monitoring device (e.g. Apple watch, Samsung watch, etc.) and opted to use their device for the research study. Research staff assisted participants with the Fitbit set-up. Physical therapists educated participants about how to use basic functions and integrated the data into the coaching sessions.

Data collection

Figure 1 provides an overview of the study design and timing of data collection.

Figure 1. Timing for data collection.

Quantitative data collection

Quantitative data were collected and managed using REDCap tools hosted at the University of Colorado Denver (NIH/NCRR Colorado CTSI Grant Number UL1 RR025780) (Harris et al., 2009, 2019). REDCap is an encrypted, HIPAA-compliant web-based software platform designed to support data capture. All surveys were sent to participants’ preferred email for completion, and the outcome assessor was present to address any questions. All outcomes were collected at pre-program and post-program. For the intervention group, pre-program collection occurred at baseline and post-program collection occurred at week 12. For the waitlist control group, pre-program collection occurred at week 12 and post-program collection occurred at week 24.

Demographics and participant characteristics were collected at baseline via self-report or extracted from the medical record (see Table 1). Rurality (yes vs. no) was determined based on the participant’s zip code and the Federal Office of Rural Health Policy Eligible Zip Codes file (Federal Office of Rural Health Policy [FORHP], 2022). Travel miles saved was defined as the round-trip driving distance between the veteran’s physical address and the nearest VA facility.

Table 1. Baseline demographics and participant characteristics (n = 50).

The primary exposure, technology engagement, was defined by two conditions: 1) responding to ≥ 80% of Annie text messages, and 2) setting at least 1 goal based on activity monitoring data. We set a threshold of ≥ 80% because this was the adherence level we used in our previous work (reference 34), and identifying an activity monitor goal via chart review was the only feasible objective measure we had to quantify engagement with the activity monitor. Participants were considered high engagers if both conditions were met and low engagers if only 1 or neither condition was met. The definition for technology engagement differed somewhat from our a priori definition, which only considered response rate to the Annie text messages (condition 1). We added the second condition for the activity monitor following research team discussions because we believed it better captured engagement with both adjunctive technologies.
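The two-condition rule can be expressed as a short classification function. The following is a minimal sketch, not the study's analysis code; the `Participant` fields and the function name are hypothetical, and treating a participant with no messages sent as having a 0% response rate is an assumption made only for illustration.

```python
from dataclasses import dataclass

# Threshold taken from the text: responding to >= 80% of Annie text messages.
ANNIE_RESPONSE_THRESHOLD = 0.80


@dataclass
class Participant:
    # Hypothetical field names, introduced for this illustration only.
    annie_messages_sent: int
    annie_messages_answered: int
    activity_monitor_goals: int  # goals identified via chart review


def engagement_level(p: Participant) -> str:
    """Return 'high' only if both engagement conditions are met, else 'low'."""
    if p.annie_messages_sent <= 0:
        response_rate = 0.0  # assumption: no messages sent counts as no response
    else:
        response_rate = p.annie_messages_answered / p.annie_messages_sent
    adherent_to_annie = response_rate >= ANNIE_RESPONSE_THRESHOLD  # condition 1
    set_monitor_goal = p.activity_monitor_goals >= 1               # condition 2
    return "high" if (adherent_to_annie and set_monitor_goal) else "low"
```

Under this sketch, a participant who answers every text message but never sets an activity monitor goal is still classified as a low engager, which mirrors the "both conditions" requirement described above.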

The primary outcome, physical activity, was defined as the average number of free-living steps/day. Physical activity was measured before and after the MCTR program using an accelerometer-based activity monitor (ActiGraph GT3X-BT, Pensacola, FL). Participants were instructed to wear the device with a waist belt, except when bathing, for 10 days. Data were sampled at 60 Hz. A minimum of 4 valid wear days (>600 minutes wear time) was required for analysis.
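As an illustration of the wear-time rule, the sketch below filters monitored days and computes average steps/day. It assumes that the average is taken over valid days only, which the text does not state explicitly; the function name and the input format (one `(wear_minutes, steps)` pair per day) are likewise hypothetical.

```python
from statistics import mean

MIN_WEAR_MINUTES = 600  # a valid day requires more than 600 minutes of wear time
MIN_VALID_DAYS = 4      # at least 4 valid days are required for analysis


def average_daily_steps(days):
    """Mean steps/day across valid wear days.

    days: list of (wear_minutes, steps) tuples, one per monitored day.
    Returns None when fewer than MIN_VALID_DAYS days are valid.
    """
    valid_steps = [steps for wear, steps in days if wear > MIN_WEAR_MINUTES]
    if len(valid_steps) < MIN_VALID_DAYS:
        return None  # participant excluded from the step-count analysis
    return mean(valid_steps)
```

A participant whose record returns `None` at either time point would not contribute a change score, which is consistent with only a subset of participants having valid wear time both pre- and post-program.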

Covariates of interest included cognition, technology skills, and program satisfaction. Cognitive function was a covariate of interest because prior research has demonstrated that cognition may be a factor influencing technology use (Choi et al., 2020; Wildenbos et al., 2018). Cognitive function was assessed using the Telephone Montreal Cognitive Assessment (MoCA), which is a modified version of the MoCA that removes the items requiring vision so it may be conducted via phone. Scores range from 0 to 22; scores of < 18 have high sensitivity to detect cognitive impairment (Pendlebury et al., 2013). Technology skills were assessed at baseline using the Mobile Device Proficiency Questionnaire (MDPQ)-16 (Roque & Boot, 2018). This 16-item survey measures a person’s capability to perform different tasks on a mobile device using a 1 to 5 Likert-type scale (1-never tried, 2-not at all, 3-not very easily, 4-somewhat easily, 5-very easily). The MDPQ-16 is a validated measure with excellent reliability; summative scores range from 16 to 80. Participant satisfaction was measured using the v-signals survey, which is an 11-item VA survey specific to video telehealth appointments. Items are rated on a 5-point Likert scale [(1) strongly disagree to (5) strongly agree].
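The scoring rules described above can be sketched in a few lines. This follows the description in this paragraph (a sum of 16 items rated 1 to 5, and a Telephone MoCA cutoff of < 18) rather than any official scoring manual; the function names are hypothetical.

```python
def mdpq16_total(item_scores):
    """Summative MDPQ-16 score (16 to 80) from sixteen items rated 1 to 5."""
    if len(item_scores) != 16:
        raise ValueError("MDPQ-16 requires exactly 16 item scores")
    if any(score not in (1, 2, 3, 4, 5) for score in item_scores):
        raise ValueError("each item must be an integer from 1 to 5")
    return sum(item_scores)


def tmoca_below_cutoff(score):
    """True when a Telephone MoCA score (0 to 22) falls below the cutoff of 18."""
    if not 0 <= score <= 22:
        raise ValueError("Telephone MoCA scores range from 0 to 22")
    return score < 18
```

For example, a participant who rates every MDPQ-16 item as "somewhat easily" (4) receives a summative score of 64.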

Qualitative data collection

Sixty-minute semi-structured key informant interviews were conducted via the VVC platform. This platform was used for interviews because it is a secure videoconferencing platform and it was used for the MCTR program, thus supporting familiarity and continuity. Interviews were conducted by one research team member (MRR), and she was joined by one other member of the research team (JP or MLM) for a subset of interviews. MRR had a therapeutic relationship with some of the key informants because she was also a study interventionist. As such, she encouraged candid feedback by stating that the goal of the interviews was to learn about participants’ experiences to improve the program for other veterans. She also emphasized that anything stated during the interview would not detract from the therapeutic relationship. An interview guide was used to focus the interview on participants’ experiences of the MCTR program in general, the use of technologies, and perceived changes in physical function, participation in life roles, and physical activity behavior (Appendix 1). The interviews were audio-recorded with permission, professionally transcribed, and checked for accuracy by a member of the study team.

Data analysis

Quantitative data analysis

All quantitative data were summarized using means, standard deviations (SD), medians, IQR, n and percent, as appropriate. Technology engagement was dichotomized for statistical analysis. First, we assessed for between group differences using chi-squared test for categorical variables and Wilcoxon rank sum test for continuous variables. We used linear regression to model change in daily step counts as a function of technology engagement level and cognition both in univariate models and multivariate models adjusted for baseline step counts. Least squares mean (95% CI) for change in average daily step counts was estimated from the adjusted model.
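One way to write the adjusted model for technology engagement is shown below. The notation is introduced here for illustration and is an assumption: $\Delta S_i$ is the change in average daily steps (post-program minus pre-program) for participant $i$, $E_i$ is 1 for high and 0 for low engagement, and $S_i^{\mathrm{pre}}$ is the baseline step count. The least squares mean is written in its usual form, with the covariate held at its sample mean.

```latex
\Delta S_i = \beta_0 + \beta_1 E_i + \beta_2 S_i^{\mathrm{pre}} + \varepsilon_i,
\qquad
\widehat{\mu}_e = \hat{\beta}_0 + \hat{\beta}_1 e + \hat{\beta}_2 \bar{S}^{\mathrm{pre}},
\quad e \in \{0, 1\}
```

In this form, $\hat{\beta}_1 = \widehat{\mu}_1 - \widehat{\mu}_0$ is the baseline-adjusted difference in step count change between the high and low engagement groups. The analogous model for cognition would replace $E_i$ with the cognition score.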

Qualitative data analysis

A directed content analysis approach (Hsieh & Shannon, 2005) was used to analyze the qualitative data. An initial codebook was developed deductively using codes based on the MCTR program components; for example, one initial code was ‘perceptions of Annie’ because the text messaging subscription was an integral technology in the MCTR program. The data and codebook were organized using Dedoose (Dedoose, Los Angeles, CA) software. MRR and MKT independently coded the transcripts using deductive codes and added codes as they emerged from the data. MRR and MKT met regularly to discuss the transcripts, update the codebook, and resolve any discrepancies in applying the codebook. Similar codes were grouped into higher-order conceptual domains. For example, human factor, text acceptance, and content relevance were codes within the domain ‘perceptions of Annie’. When initial coding was completed, MRR and MKT reviewed excerpts by code to identify emerging themes within each domain.

Mixed-methods analysis

Integration of qualitative and quantitative data occurred via embedding and merging (Fetters, 2020). We used the engagement level to group our qualitative data, exported each dataset from Dedoose, and analyzed each group’s excerpts separately to explore patterns. Based on emerging patterns, our team then moved between quantitative and qualitative data iteratively to enhance interpretation. For example, a qualitative group difference emerged within the domain of the virtual environment: the high engagement group consistently discussed feeling safe, whereas this experience did not emerge consistently for the low engagement group. This difference prompted us to return to the quantitative data to explore group differences, and we evaluated the difference in safety events between engagement groups. We also used a joint display (Guetterman et al., 2021) to further interpretation by displaying both qualitative and quantitative data. Finally, meta-inferences by engagement group and by domain were drawn from the joint display.

Ethical considerations

The Colorado Multiple Institutional Review Board (COMIRB 21–2773) and the VA Eastern Colorado Healthcare System Research & Development committee approved the study protocol, and electronic informed consent was obtained from all participants using Research Electronic Data Capture (REDCap) tools hosted at the University of Colorado Denver (NIH/NCRR Colorado CTSI Grant Number UL1 RR025780) (Harris et al., 2009, 2019).

Results

Of the 50 enrolled participants in the main study, 18 (9/group) completed semi-structured interviews following MCTR program completion. Of the 50 participants, 31 (62%) were high engagers, meaning they were adherent to Annie text messages and set at least one goal based on activity monitoring data. The remaining 19 (38%) were low engagers. Of the 18 interviewees, 9 (50%) were high engagers and 9 (50%) were low engagers. Characteristics of the sample (Table 1) were consistent with the older veteran population (Kuwert et al., 2014; Olenick et al., 2015; Wilson et al., 2018), with about 30% positive for depression, 40% indicating social isolation (disengaged), and 34% reporting loneliness. Here, we present the quantitative results, followed by the qualitative and mixed-methods results.

Quantitative results

Associations with technology engagement level

Table 2 displays the relationship between technology engagement level and participant characteristics, including cognition and technology skills; physical activity covariates; and program covariates. Group differences were seen for the MDPQ-16 summative score. This finding led us to explore each item of the MDPQ-16; we found 5 items were scored significantly higher by the high engagement group compared to the low engagement group (). For physical activity variables, the high engagement group demonstrated higher scores on the self-efficacy for exercise scale (p = 0.008) only. There were no significant differences between engagement groups for program factors.

Table 2. Comparison of covariates by engagement group.

Associations with change in daily step count via ActiGraph

For the 36 participants with valid wear time both pre- and post-program, the median (IQR) change in daily step count was −95 (−514 to 915) steps for all participants combined, 167 (−415 to 1073) steps for participants in the high engagement group, and −121 (−1694 to 768) steps for participants in the low engagement group.

Results of simple (unadjusted) linear regression showed no significant associations between change in daily step count and technology engagement level. When adjusting for baseline step count, the high technology engagement group demonstrated a mean improvement of 328 steps (95%CI: −170 to 827) compared to the low engagement group, which demonstrated a mean decline of 615 steps (95%CI: −1370 to 139). Although there was a significant difference for change in daily step counts between groups, neither group showed an improvement in daily step count from pre- to post-program. When adjusting for baseline step count, there was no significant association between change in step counts and cognition.

Qualitative and mixed-methods results by domain

The following subsections present qualitative and mixed-methods findings, and meta-inferences by engagement group (Table 3).

Table 3. Joint display of mixed-methods findings by technology engagement group and domain.

Satisfaction with the virtual environment

Both high and low engagement groups described the virtual sessions as easier and more convenient compared to in-person sessions. This finding was corroborated by the satisfaction scores for both groups (high engagement group median (IQR) 4.9 (4.7, 5.0) and low engagement group 4.7 (4.4, 5.0), p = 0.28). The qualitative data illuminated these satisfaction ratings. One participant, for example, explained the convenience of telerehabilitation:

I really liked it [physical rehabilitation] a lot better in my home [through telehealth], because I didn’t have to get out and go somewhere. I live in a little, small town. Every time we want to go somewhere, it’s 40, 50 miles away. So just the convenience of having that [telehealth session], where you didn’t have to get out in weather or face the traffic or whatever it was. Low engagement group, participant 032.

High engagers discussed how they felt safe in the virtual environment, which was a perception that was not described consistently among the low engagement group. Safety included both physical and psychological safety.

So, when you’re in your house, you kind of let down your guard … . It [virtual environment] allowed us to be ourselves, and it wasn’t so difficult to, you know, show your weaknesses—you know, the fact that you couldn’t do this, or you got short of breath. High engagement group, participant 009.

When examining the incidence of safety events by group, 23 out of 31 (74%) participants in the high engagement group reported ≥ 1 safety events compared to 12 out of 19 (63%) participants in the low engagement group (p = 0.41).
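The reported comparison can be checked with a short calculation. The sketch below applies a Pearson chi-squared test without continuity correction to the 2×2 table implied by the counts above; the choice of the uncorrected variant is an assumption, since the exact test settings are not stated. The function name is hypothetical, and the p-value uses the identity for one degree of freedom, p = erfc(√(χ²/2)).

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for [[a, b], [c, d]].

    Returns the test statistic and the p-value for 1 degree of freedom.
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, the upper tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: high engagement (23 of 31 with >= 1 safety event), low engagement (12 of 19).
chi2, p = chi2_2x2(23, 31 - 23, 12, 19 - 12)
print(f"chi2 = {chi2:.2f}, p = {p:.2f}")  # prints "chi2 = 0.68, p = 0.41"
```

Under this assumption, the calculation reproduces the reported p = 0.41, consistent with no significant difference in safety events between engagement groups.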

Technology coping self-efficacy

Within the context of this study, coping self-efficacy was defined as the participants’ judgement about their ability to problem solve technological challenges. The two engagement groups differed in the specific strategies used for technology problem solving. The high engagement group often described being able to manage difficulties independently through strategies such as trial and error or relying on their own knowledge base. For example:

The Video Connect, I’ve gotten much better at. I’ve had difficulties coordinating the iPad, and the use of hearing aids so that I could hear from a distance … . But I was able to work a hearing aid solution [with the iPad]. High engagement group, participant 013.

Because this participant had a hearing impairment, he needed to be able to pair his Bluetooth hearing aids with his iPad so that he could actively participate in the telehealth sessions. This technology function allowed him to be further away from his iPad so that he could exercise safely, his physical therapist could visualize the entire movement, and he maintained his ability to hear the physical therapist. The low engagement group, in contrast, often relied on external sources of support, such as research staff, family, or friends to address technology challenges. For example: ‘I’m not as good at setting up my telephone to be a hotspot as, like, my wife would be. So, I just didn’t remember how to do it, to be able to connect’. Low engagement group, participant 043.

The low engagement group also described more frequent technology challenges than the high engagement group. These findings were supported by the median MDPQ-16 scores: 75 out of 80 (IQR: 65, 79) for the high engagers and 65 out of 80 (IQR: 51, 75) for the low engagers, a statistically significant difference (p = 0.03). Further, the troubleshooting subscale (average of 2 items) also differed between groups [high engagers: 5, very easily (IQR: 4, 5) vs. low engagers: 4, somewhat easily (IQR: 2, 5); p = 0.02]. The wider IQR among the low engagers indicates greater variability in their responses to these troubleshooting items.

Perceptions of Annie

The human factor was a salient concept that emerged in relation to the Annie app—the text messaging platform used in the intervention. Participants described the human factor when they acknowledged that there was a human involved at some point in the process. Examples included recognizing that a person created the program or knowing that clinicians received the data from Annie. For example: ‘Even though I know it’s [Annie] not a real person, I know it’s a program set up by real people to help you’. High engagement group, participant 009. The human factor was also realized when participants assigned human characteristics to Annie. For example: ‘I visualized her [Annie] as a very good secretary … Very good at communicating and persistent of getting the right information to the right people on time. That’s Annie’. High engagement group, participant 012.

For participants in the high engagement group, the human factor was a facilitator for interacting with Annie. They often described using Annie in concert with the activity monitor to report their daily step count in response to Annie text messages. In contrast, the low engagement group consistently described a lack of understanding about Annie in relation to its human factor.

Annie was a little confusing at first because I didn’t know she was a computer program. She would come on saying, “Hi, this is Annie”. And, at first, I thought, “This woman is getting a little bit tiresome” because she was making the same comments over and over again. I thought, “Wow, Annie’s got to get a life”. Low engagement group, participant 040.

Descriptions of responding to Annie’s text messages were often absent from the low engagement group’s interview data. These differences in perceptions of Annie may explain why there was a large difference in the median response rate to the two-way text messages for daily step counts between the two groups [high engagement: 100% (IQR: 98%, 100%) vs. low engagement: 67% (IQR: 46%, 89%); p < 0.001].

Experiences using the activity monitor

The ways in which participants experienced and used the activity monitor varied between the high engagement and low engagement groups. Within the high engagement group, participants consistently identified monitoring their daily step counts as their primary physical activity data point. Aspects of coping self-efficacy emerged when participants discussed first recognizing that the activity monitor could be inaccurate in certain situations, and subsequently, taking concrete actions to obtain more accurate data for the step counts. One participant used her learning to make recommendations to the research team regarding specific guidance to provide to future program participants in order to support data quality. She recommended:

With the Fitbit, you need to pass onto people that they need to take it off and put it into a place like a tight pocket or, let’s say, around their ankle when they’re using a shopping cart. Because when they’re using a shopping cart, they’re holding onto the handles and their wrist isn’t moving. So, therefore, it’s not counting steps. High engagement group, participant 020.

Similarly, the low engagement group identified issues with data accuracy, especially for daily step counts. This perception was cited as a reason to distrust the data, which may have contributed to less use of daily step counts to guide goal setting.

I don’t know [that] I put a great lot of faith in it [the Fitbit]. Because I found out, on the Fitbit, if you use your walker, your cane, it doesn’t count it up. Steps are not measured unless you’re moving your arm. So, that was disappointing because when I get out and use my walker with counting steps, I’d come back, say, “I know, I know I walked more than that”. Low engagement group, participant 032.

Some participants in the low engagement group described enjoying tracking and monitoring their daily steps, but they were a minority. More often, these participants either did not use the Fitbit data or used an alternative metric such as heart rate or oxygen saturation, which was information that was more salient to their general health, or perhaps, a specific health condition. We examined whether there was a group difference in certain medical conditions such as cardiac and pulmonary diseases. However, there was not a significant difference in the prevalence of these comorbidities between groups.

Self-management skills

Self-management skills were the skills targeted through the coaching intervention coupled with technology: self-monitoring, enhanced self-awareness or reflection, and action planning. Self-awareness emerged when participants reflected on why their activity was at a certain level and recognized that a change needed to happen.

Self-awareness commonly led to action among participants in the high engagement group specific to physical activity. These participants tended to describe using self-monitoring, self-awareness, and action planning in a stepwise process. They also described using Annie and the activity monitor together, with the daily prompts facilitating regular review (self-monitoring) and use of the activity data (action planning). For example:

By putting in my steps each day [self-monitoring], it made me aware. I was able to see like, “Gosh, today I only did 1,700 steps. That’s pretty bad”. To see that and then know that, “Wow, I want to change this” [self-monitoring and self-awareness] … And you’d be surprised if you only have 3,500 steps, you can do another 1,500 steps within, I don’t know, like a half an hour easily [action]. High engagement group, participant 019.

Another participant described a similar scenario in which the daily text message from Annie helped to facilitate self-monitoring and action:

The fact that I had to stay conscious of particularly my steps every day, and I knew that I was going to have to tell someone [referring to Annie], you know, at the end of the day. And I would look at it [step count on his activity monitor] and say, “Jesus, I got to get on my bike for a while”. You got to get the steps up, you know? High engagement group, participant 022.

These quotes highlight ways that the integration of Annie and the activity monitor informed physical activity intentions and planning for specific actions that supported achieving step count goals in the high engagement group.

Within the low engagement group, most participants described only self-monitoring and typically did not advance into self-awareness or action regarding physical activity. However, participants in the low engagement group described using these self-management skills to inform future action regarding other health behaviors. For example, one participant with heart failure described how he used the Fitbit app to monitor his weight and fluid intake, which allowed him to take action based on specific changes:

Where you track your weight, now that’s pretty good to have on there [referring to Fitbit app]. Know how much you’re losing or gaining, because for the congestive heart failure and the fluid buildup, I’ll be a lot more weight some days than others. So, it’s good to know if my weight’s jumping up and down, and then [the app has] water intake, which is also good to know, because I’m limited to 64 ounces a day … Every now and again, you’ll see a jump of 5, 6, 7 pounds, 8 pounds, then you know that you’ve got way too much fluid jumped in there … Anytime it jumps up to four or five, six pounds or something like that, then let them know [referring to the heart clinic]. Low engagement group, participant 032.

Another participant in the low engagement group was prompted to follow-up with her sleep clinic based on the sleep data collected by the Fitbit:

The sleep tracking has been invaluable to me personally because I do suffer from insomnia. And it’s been able to give me a better understanding of the utilization of my CPAP [Continuous Positive Airway Pressure]. And the CPAP was supposed to reduce the number of sleep interruptions, and it has not been successful in that [based on data from the Fitbit]. So, I am working with the sleep clinic on that to try and figure it out. Low engagement group, participant 021.

Participants in the low engagement group also often described prioritizing other movements or activities over daily steps. For example, one participant’s main goal was to complete an exercise session every day and another participant wanted to improve the quality of his walking rather than the quantity: “It was the quality I felt, with the steps I took, was more important than how many. I could[n’t] care less if I could walk a half a mile. If I can’t walk a quarter of a block safely and feel steady, then so what if I can stumble a half a mile? There’s no gain there”. Low engagement group, participant 043.

The lack of engagement with respect to step counts among the low engagement group was also reflected in the quantitative data. Specifically, there was a significant difference in response rates to Annie’s goal text messages [high engagement: 88% (IQR: 75%, 96%) vs. low engagement: 25% (IQR: 2%, 58%); p < 0.0001]. Further, change in daily step counts was significantly associated with engagement level in adjusted analyses [high engagement group: mean (95%CI): 328 (−170 to 827) vs. low engagement group: −615 (−1370 to 139); p = 0.04].

Discussion

Results from our study revealed three key findings that help explain why older veterans chose to engage or not engage with the adjunctive technologies used during our behavior change program. These findings relate to technology coping self-efficacy, integration of the technologies into the MCTR program, and application of self-management skills.

Technology coping self-efficacy

The low engagement group identified more technology challenges, required more time to learn the technologies, and did not fully engage with the adjunctive technologies. These findings highlight the need for early identification of participants who may have low technology literacy and low self-efficacy so that additional support may be provided proactively to overcome barriers to technology use. The MDPQ-16 survey, and specifically the troubleshooting subscale, may help identify some of these individuals. Early identification would allow for additional education and training on how to use each technology prior to starting the intervention so that participants could take full advantage of the technologies. Another important strategy for future studies is to engage older adults’ social support networks. In our cohort, social support from research staff, family, or friends was the primary strategy the low engagement group used to overcome technological issues. Thus, future studies should include strategies to engage older adults’ social networks or, in the absence of a social network, connect them with individuals or services capable of assisting with technology use. This recommendation is consistent with prior studies that have also reported on the benefit of using social support to facilitate use of technology and telehealth interventions (Dryden et al., Citation2023; Garcia Reyes et al., Citation2023; Grady et al., Citation2023; Hale-Gallardo et al., Citation2020).

Integration of technologies into the MCTR program

Group differences emerged in how the participants experienced, perceived, and used both Annie and the activity monitor during the MCTR program. Within the high engagement group, participants frequently discussed both technologies in the same story about changing their physical activity. As a result, these experiences portrayed the adjunctive technologies as a complementary and cohesive part of the MCTR program. One of the primary facilitators for using Annie was that the high engagement participants understood the purpose of Annie and recognized that their responses were being monitored by the clinicians implementing the program. This finding is consistent with previous research (Hwang et al., Citation2017; Karlsen et al., Citation2017; Radhakrishnan et al., Citation2016) that identified patient knowledge of remote supervision as a facilitator for home telehealth programs. The high engagement participants also reported enhanced accountability for both reporting and progressing their daily steps, which was facilitated by Annie’s text messages and the activity monitor. Participants in the high engagement group placed a high personal value on daily step counts and viewed them as an important outcome. According to technology acceptance theories, individuals who see a benefit in a technology (termed perceived usefulness) are more likely to use that technology (Holden & Karsh, Citation2010). The finding that participants were able to appreciate and understand the intentional connections between the technologies may have further enhanced perceived usefulness and served as a facilitator to technology engagement.

In contrast, the low engagement group did not value daily step count for monitoring their health goals. Nor did they understand the relationships among the text messaging program, activity monitor, and desired behavior change. This finding was evident through participants’ experiences that depicted the technologies as operating in siloes. One factor contributing to this disconnect was that the low engagement group had misconceptions about Annie. These participants often did not understand the purpose of the text messages or that Annie was a text messaging platform rather than a real person. Furthermore, the two-way text messages delivered by Annie were developed to collect data about daily step counts, falls, and goals. These requests did not always align with the priorities of participants in the low engagement group. In fact, they tended to prioritize alternate health metrics for self-monitoring such as heart rate, oxygen saturation, and sleep. This finding may be related to a preoccupation with underlying health conditions, though we found no group differences in the prevalence of health conditions. Further research is warranted to elucidate the motivations for participants to place higher value on metrics more directly related to monitoring physical activity. Actual and perceived disease severity and psychological processes such as fear avoidance behaviors or fear of falling may have stronger relationships, helping determine what data is valued.

The lower perceived value of the daily step count data among the low engagement group may also have been influenced by distrust that arose when participants identified context-specific inaccuracies in these data. Accurate data may be an important facilitator for consumer activity trackers (Eisenhauer et al., Citation2017; Lavallee et al., Citation2020). Further, unlike participants in the high engagement group, participants in the low engagement group did not describe strategies to improve data accuracy. One solution could be to help participants use the activity monitor in alternate ways such as those suggested by a key informant in the high engagement group, which involved wearing the monitor on the ankle. Another solution to this barrier is to use an alternate activity monitor capable of capturing accurate step count data for individuals with slow walking speed or for those who use an assistive device; to our knowledge, however, no such commercial device is currently available. Perhaps a more holistic solution would be to use technologies that can be adapted to what matters to the patient; this solution would align with the 4Ms model of healthcare for age-friendly systems (Mate et al., Citation2018). Additionally, it is important to acknowledge that technology may not be the right or desired solution for all older adults.

Application of self-management skills

A key finding regarding self-management skills was seen qualitatively in how participants described the integration of text messages and activity monitoring. From participants in the high engagement group, we consistently heard how participants learned self-management skills that included self-monitoring, self-awareness, and action planning. In contrast, this progression was absent for participants with low technology engagement regarding daily step counts. However, some participants from the low engagement group provided examples of how they applied these same self-management skills to different health conditions and behaviors. For example, one participant monitored his heart rate during yardwork, combined this data with his subjective symptoms such as fatigue and shortness of breath, and used the information to make decisions about when he should rest. Holistically, this is a beneficial health outcome and consistent with age-friendly healthcare system recommendations. In the context of our research, however, these skills did not successfully translate to increasing physical activity. This finding may be partially explained by the coaching protocol, which allowed treating physical therapists to tailor the sessions to health outcomes or activities that were important to the individual participant. Because of this veteran-centered approach, not all participants focused on increasing daily step counts, further contributing to a lack of fit between the adjunctive technologies and the MCTR program.

The differences in how the two groups applied these self-management skills to physical activity may partially explain why we saw a significant difference between the groups for daily step count change in adjusted analyses. Despite this group difference, neither group showed a significant change in daily step count from pre- to post-program. One key reason for this latter finding was the small sample size; we were not powered to detect differences in daily step count because it was a secondary outcome in the main trial. A second reason that may explain a lack of change from baseline for daily step count was that subjective reports often overestimate objective physical activity, except among those who report never exercising, due to social desirability bias (Adams et al., Citation2005; Brenner & DeLamater, Citation2014). Another possible explanation for the inconsistency in our results between subjective reports and objective data is the ‘intention-behavior gap’, which, simply stated, occurs when a person plans to do something (behavioral intent) but does not do that thing (behavior) (Cook et al., Citation2018). These preliminary findings suggest that intentional use of technology may augment behavior change programs, especially when the technological solution is aligned with the context and population. Further study is warranted to confirm these findings in a larger sample and to determine if these mechanisms of action are similar in different populations.

Limitations

There are some limitations to this study. First, we had a small sample size for the quantitative analyses; as such, we were not powered to detect within- or between-group differences for physical activity as measured by daily step counts. Further, the pre-program actigraphy data collection typically occurred during the first two to three weeks of the MCTR program; as such, we did not have a true baseline for our participants. Second, our mixed-methods analysis was iterative, and we modified our a priori definition for technology engagement level. Initially, the definition was based solely on response rate to Annie two-way text messages and did not account for use of the activity monitor. During research team discussions, we redefined technology engagement to encompass engagement with both technology solutions. Although this was a post-hoc change, we believe the revised definition is more comprehensive and representative of technology use in the MCTR program. Third, one of the researchers who conducted interviews had a pre-existing therapeutic relationship with some of the interviewees, which may have introduced social desirability bias. We sought to mitigate this bias when introducing and framing the interviews by stating that we were interested in each person’s candid feedback, noting that we intended to use the information to improve the program for other veterans. Finally, we did not interview individuals who withdrew from the study. Consequently, it is possible that we did not capture some unique experiences of technology use.

Conclusion

Findings from this study identified salient differences between participants who demonstrated high and low engagement with the technology used during the MCTR program. Mixed findings were consistent with technology acceptance theories, especially for the connection participants described between the perceived value (or usefulness) of the activity monitor data and their health behaviors. Emergent constructs in our data were perceived ease of use, perceived usefulness, and facilitating conditions. The contrasting findings between the high and low engagement groups suggest ways that the intervention and program procedures may be modified to assist those with lower technology literacy or self-efficacy. In particular, the findings underscored the importance of communicating clear goals and purposes for each technology and ensuring that the proposed technology solution matches what matters most to the patient—a core principle of age-friendly health systems. Results from this study can inform the integration of similar technologies into physical rehabilitation programs delivered in-person or via telehealth. Further study is required to improve effective integration of technologies into rehabilitation programs and improve understanding of factors and mechanisms that influence meaningful technology engagement in telerehabilitation.

Acknowledgements

We would like to thank the Mixed Methods Program and Qualitative and Mixed Methods Learning Lab at the University of Michigan for guidance with data integration and visualization methods.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are available upon reasonable request.

References

- Adams, S. A., Matthews, C. E., Ebbeling, C. B., Moore, C. G., Cunningham, J. E., Fulton, J., & Hebert, J. R. (2005, February 15). The effect of social desirability and social approval on self-reports of physical activity. American Journal of Epidemiology, 161(4), 389–398. https://doi.org/10.1093/aje/kwi054

- Ahmad, N. A., Mat Ludin, A. F., Shahar, S., Mohd Noah, S. A., & Mohd Tohit, N. (2022, March 9). Willingness, perceived barriers and motivators in adopting mobile applications for health-related interventions among older adults: A scoping review. BMJ Open, 12(3), e054561. https://doi.org/10.1136/bmjopen-2021-054561

- Alexander, N. B., Phillips, K., Wagner-Felkey, J., Chan, C. L., Hogikyan, R., Sciaky, A., & Cigolle, C. (2021, September). Team VA Video Connect (VVC) to optimize mobility and physical activity in post-hospital discharge older veterans: Baseline assessment. BMC Geriatrics, 21(1), 502. https://doi.org/10.1186/s12877-021-02454-w

- Alley, S. J., van Uffelen, J., Schoeppe, S., Parkinson, L., Hunt, S., Power, D., Waterman, N., Waterman, C., To, Q. G., Duncan, M. J., Schneiders, A., & Vandelanotte, C. (2022, May). The effectiveness of a computer-tailored web-based physical activity intervention using Fitbit activity trackers in older adults (Active for Life): Randomized controlled trial. Journal of Medical Internet Research, 24(5), e31352. https://doi.org/10.2196/31352

- Aromatario, O., Van Hoye, A., Vuillemin, A., Foucaut, A. M., Crozet, C., Pommier, J., & Cambon, L. (2019, October). How do mobile health applications support behaviour changes? A scoping review of mobile health applications relating to physical activity and eating behaviours. Public Health, 175, 8–18. https://doi.org/10.1016/j.puhe.2019.06.011

- Bartholdy, C., Skou, S. T., Bliddal, H., & Henriksen, M. (2020, December). Changes in physical inactivity during supervised educational and exercise therapy in patients with knee osteoarthritis: A prospective cohort study. The Knee, 27(6), 1848–1856. https://doi.org/10.1016/j.knee.2020.09.007

- Brenner, P. S., & DeLamater, J. D. (2014). Social desirability bias in self-reports of physical activity: Is an exercise identity the culprit? Social Indicators Research, 117(2), 489–504. https://doi.org/10.1007/s11205-013-0359-y

- Brown, M. C., Sims, K. J., Gifford, E. J., Goldstein, K. M., Johnson, M. R., Williams, C. D., & Provenzale, D. (2019, June). Gender-based differences among 1990–1991 Gulf War Era Veterans: Demographics, lifestyle behaviors, and health conditions. Women’s Health Issues, 29(Suppl 1), S47–S55. https://doi.org/10.1016/j.whi.2019.04.004

- Budreviciute, A., Damiati, S., Sabir, D. K., Onder, K., Schuller-Goetzburg, P., Plakys, G., Katileviciute, A., Khoja, S., & Kodzius, R. (2020). Management and prevention strategies for non-communicable diseases (NCDs) and their risk factors. Frontiers in Public Health, 8, 574111. https://doi.org/10.3389/fpubh.2020.574111

- Choi, E. Y., Kim, Y., Chipalo, E., Lee, H. Y., & Meeks, S. (2020, September). Does perceived ageism widen the digital divide? And does it vary by gender? The Gerontologist, 60(7), 1213–1223. https://doi.org/10.1093/geront/gnaa066

- Chokshi, N. P., Adusumalli, S., Small, D. S., Morris, A., Feingold, J., Ha, Y. P., Lynch, M. D., Rareshide, C. A. L., Hilbert, V., & Patel, M. S. (2018, June 13). Loss-framed financial incentives and personalized goal-setting to increase physical activity among ischemic heart disease patients using wearable devices: The ACTIVE REWARD randomized trial. Journal of the American Heart Association, 7(12). https://doi.org/10.1161/jaha.118.009173

- Cook, P. F., Schmiege, S. J., Reeder, B., Horton-Deutsch, S., Lowe, N. K., & Meek, P. (2018). Temporal immediacy: A two-system theory of mind for understanding and changing health behaviors. Nursing Research, 67(2), 108–121. https://doi.org/10.1097/nnr.0000000000000265

- Curry, L. A., Krumholz, H. M., O’Cathain, A., Plano Clark, V. L., Cherlin, E., & Bradley, E. H. (2013, January 1). Mixed methods in biomedical and health services research. Circulation: Cardiovascular Quality and Outcomes, 6(1), 119–123. https://doi.org/10.1161/circoutcomes.112.967885

- Division of Global Health Protection. (2023, February 3). Global noncommunicable diseases fact sheet. Centers for Disease Control and Prevention, U.S. Department of Health & Human Services. Retrieved October 6, 2023, from https://www.cdc.gov/globalhealth/healthprotection/resources/fact-sheets/global-ncd-fact-sheet.html#:~:text=Noncommunicable%20diseases%20(NCDs)%2C%20such,of%20death%20and%20disability%20worldwide

- Dryden, E. M., Kennedy, M. A., Conti, J. (2023). Perceived benefits of geriatric specialty telemedicine among rural patients and caregivers. Health Services Research, 58(S1), 26–35. https://doi.org/10.1111/1475-6773.14055

- Eisenhauer, C. M., Hageman, P. A., Rowland, S., Becker, B. J., Barnason, S. A., & Pullen, C. H. (2017, March). Acceptability of Health technology for self-monitoring eating and activity among rural men. Public Health Nursing, 34(2), 138–146. https://doi.org/10.1111/phn.12297

- Federal Office of Rural Health Policy (FORHP). Eligible ZIP codes. Excel document. Health Resources & Services Administration. Retrieved November 30, 2022, from https://www.hrsa.gov/rural-health/about-us/what-is-rural/data-files

- Ferrante, J. M., Devine, K. A., Bator, A., Rodgers, A., Ohman-Strickland, P. A., Bandera, E. V., & Hwang, K. O. (2020, October). Feasibility and potential efficacy of commercial mHealth/eHealth tools for weight loss in African American breast cancer survivors: Pilot randomized controlled trial. Translational Behavioral Medicine, 10(4), 938–948. https://doi.org/10.1093/tbm/iby124

- Fetters, M. D. (2020). Writing a mixed methods article using the hourglass design model. In N. Ivankova & V. Plano Clark (Eds.), The mixed methods research workbook: Activities for designing, implementing, and publishing projects (pp. 253–269). SAGE Publications, Inc.

- Fetters, M. D., Curry, L. A., & Creswell, J. W. (2013, December). Achieving integration in mixed methods designs-principles and practices. Health Services Research, 48(6 Pt 2), 2134–2156. https://doi.org/10.1111/1475-6773.12117

- Garcia Reyes, E. P., Kelly, R., Buchanan, G., & Waycott, J. (2023, March 21). Understanding older adults’ experiences with technologies for health self-management: Interview study. JMIR Aging, 6, e43197. https://doi.org/10.2196/43197

- Giannouli, E., Bock, O., Mellone, S., & Zijlstra, W. (2016). Mobility in old age: Capacity is not performance. BioMed Research International, 2016, 3261567. https://doi.org/10.1155/2016/3261567

- Goode, A. P., Hall, K. S., Batch, B. C., Huffman, K. M., Hastings, S. N., Allen, K. D., Shaw, R. J., Kanach, F. A., McDuffie, J. R., Kosinski, A. S., Williams, J. W., & Gierisch, J. M. (2017, February). The impact of interventions that integrate accelerometers on physical activity and weight loss: A systematic review. Annals of Behavioral Medicine, 51(1), 79–93. https://doi.org/10.1007/s12160-016-9829-1

- Grady, A., Pearson, N., Lamont, H., Leigh, L., Wolfenden, L., Barnes, C., Wyse, R., Finch, M., Mclaughlin, M., Delaney, T., Sutherland, R., Hodder, R., & Yoong, S. L. (2023, December 19). The effectiveness of strategies to improve user engagement with digital health interventions targeting nutrition, physical activity, and overweight and obesity: Systematic review and meta-analysis. Journal of Medical Internet Research, 25, e47987. https://doi.org/10.2196/47987

- Guetterman, T. C., Fàbregues, S., & Sakakibara, R. (2021). Visuals in joint displays to represent integration in mixed methods research: A methodological review. Methods in Psychology, 5, 100080. https://doi.org/10.1016/j.metip.2021.100080

- Hale-Gallardo, J. L., Kreider, C. M., Jia, H., Castaneda, G., Freytes, I. M., Cowper Ripley, D. C., Ahonle, Z. J., Findley, K., & Romero, S. (2020). Telerehabilitation for rural veterans: A qualitative assessment of barriers and facilitators to implementation. Journal of Multidisciplinary Healthcare, 13, 559–570. https://doi.org/10.2147/jmdh.s247267

- Harris, P. A., Taylor, R., Minor, B. L., Elliott, V., Fernandez, M., O’Neal, L., McLeod, L., Delacqua, G., Delacqua, F., Kirby, J., & Duda, S. N. (2019). The REDCap consortium: Building an international community of software platform partners. Journal of Biomedical Informatics, 95, 103208. https://doi.org/10.1016/j.jbi.2019.103208

- Harris, P. A., Taylor, R., Thielke, R., Payne, J., Gonzalez, N., & Conde, J. G. (2009). Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42(2), 377–381. https://doi.org/10.1016/j.jbi.2008.08.010

- Head, K. J., Noar, S. M., Iannarino, N. T., & Grant Harrington, N. (2013, November). Efficacy of text messaging-based interventions for health promotion: A meta-analysis. Social Science & Medicine, 97, 41–48. https://doi.org/10.1016/j.socscimed.2013.08.003

- Holden, R. J., & Karsh, B. T. (2010, February). The technology acceptance model: Its past and its future in health care. Journal of Biomedical Informatics, 43(1), 159–172. https://doi.org/10.1016/j.jbi.2009.07.002

- Hsieh, H. F., & Shannon, S. E. (2005, November). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

- Hwang, R., Mandrusiak, A., Morris, N. R., Peters, R., Korczyk, D., Bruning, J., & Russell, T. (2017). Exploring patient experiences and perspectives of a heart failure telerehabilitation program: A mixed methods approach. Heart & Lung, 46(4), 320–327. https://doi.org/10.1016/j.hrtlng.2017.03.004

- Karlsen, C., Ludvigsen, M. S., Moe, C. E., Haraldstad, K., & Thygesen, E. (2017, December). Experiences of community-dwelling older adults with the use of telecare in home care services: A qualitative systematic review. JBI Database of Systematic Reviews and Implementation Reports, 15(12), 2913–2980. https://doi.org/10.11124/jbisrir-2017-003345

- Kim, R. H., & Patel, M. S. (2018, November). Barriers and opportunities for using wearable devices to increase physical activity among veterans: Pilot study. JMIR Formative Research, 2(2), e10945. https://doi.org/10.2196/10945

- Köttl, H., Gallistl, V., Rohner, R., & Ayalon, L. (2021, December). “But at the age of 85? Forget it!”: Internalized ageism, a barrier to technology use. Journal of Aging Studies, 59, 100971. https://doi.org/10.1016/j.jaging.2021.100971

- Kuwert, P., Knaevelsrud, C., & Pietrzak, R. H. (2014, June). Loneliness among older veterans in the United States: Results from the national health and resilience in veterans study. The American Journal of Geriatric Psychiatry: Official Journal of the American Association for Geriatric Psychiatry, 22(6), 564–569. https://doi.org/10.1016/j.jagp.2013.02.013

- Lavallee, D. C., Lee, J. R., Austin, E., Bloch, R., Lawrence, S. O., McCall, D., Munson, S. A., Nery-Hurwit, M. B., & Amtmann, D. (2020). mHealth and patient generated health data: Stakeholder perspectives on opportunities and barriers for transforming healthcare. mHealth, 6, 8. https://doi.org/10.21037/mhealth.2019.09.17

- Littman, A. J., Forsberg, C. W., & Koepsell, T. D. (2009, May). Physical activity in a national sample of veterans. Medicine and Science in Sports and Exercise, 41(5), 1006–1013. https://doi.org/10.1249/MSS.0b013e3181943826

- Mannheim, I., Wouters, E. J. M., van Boekel, L. C., & van Zaalen, Y. (2021, April). Attitudes of health care professionals toward older adults’ abilities to use digital technology: Questionnaire study. Journal of Medical Internet Research, 23(4), e26232. https://doi.org/10.2196/26232

- Mate, K. S., Berman, A., Laderman, M., Kabcenell, A., & Fulmer, T. (2018, March). Creating age-friendly health systems – a vision for better care of older adults. Healthcare, 6(1), 4–6. https://doi.org/10.1016/j.hjdsi.2017.05.005

- Miller, W. R., & Rollnick, S. (2013). Motivational interviewing: Helping people change (Vol. xii, 3rd ed.). Guilford Press.

- Olenick, M., Flowers, M., & Diaz, V. J. (2015). US veterans and their unique issues: Enhancing health care professional awareness. Advances in Medical Education and Practice, 6, 635–639. https://doi.org/10.2147/amep.S89479

- Omura, J. D., Hyde, E. T., Imperatore, G., Loustalot, F., Murphy, L., Puckett, M., Watson, K. B., & Carlson, S. A. (2021, August). Trends in meeting the aerobic physical activity guideline among adults with and without select chronic health conditions, United States, 1998–2018. Journal of Physical Activity & Health, 18(S1), S53–S63. https://doi.org/10.1123/jpah.2021-0178

- Pendlebury, S. T., Welch, S. J., Cuthbertson, F. C., Mariz, J., Mehta, Z., & Rothwell, P. M. (2013, January). Telephone assessment of cognition after transient ischemic attack and stroke: Modified telephone interview of cognitive status and telephone Montreal Cognitive Assessment versus face-to-face Montreal Cognitive Assessment and neuropsychological battery. Stroke, 44(1), 227–229. https://doi.org/10.1161/strokeaha.112.673384

- Radhakrishnan, K., Xie, B., Berkley, A., & Kim, M. (2016, February). Barriers and facilitators for sustainability of tele-homecare programs: A systematic review. Health Services Research, 51(1), 48–75. https://doi.org/10.1111/1475-6773.12327

- Rapp, K., Klenk, J., Benzinger, P., Franke, S., Denkinger, M. D., & Peter, R. (2012, October). Physical performance and daily walking duration: Associations in 1271 women and men aged 65–90 years. Aging Clinical and Experimental Research, 24(5), 455–460. https://doi.org/10.1007/BF03654813

- Rhodes, R. E., Janssen, I., Bredin, S. S. D., Warburton, D. E. R., & Bauman, A. (2017, August). Physical activity: Health impact, prevalence, correlates and interventions. Psychology & Health, 32(8), 942–975. https://doi.org/10.1080/08870446.2017.1325486

- Roque, N. A., & Boot, W. R. (2018, February). A new tool for assessing mobile device proficiency in older adults: The mobile device proficiency questionnaire. Journal of Applied Gerontology: The Official Journal of the Southern Gerontological Society, 37(2), 131–156. https://doi.org/10.1177/0733464816642582

- St Fleur, R. G., St George, S. M., Leite, R., Kobayashi, M., Agosto, Y., & Jake-Schoffman, D. E. (2021, May). Use of Fitbit devices in physical activity intervention studies across the life course: Narrative review. JMIR mHealth and uHealth, 9(5), e23411. https://doi.org/10.2196/23411

- Stockwell, S., Schofield, P., Fisher, A., Firth, J., Jackson, S. E., Stubbs, B., & Smith, L. (2019, June). Digital behavior change interventions to promote physical activity and/or reduce sedentary behavior in older adults: A systematic review and meta-analysis. Experimental Gerontology, 120, 68–87. https://doi.org/10.1016/j.exger.2019.02.020

- Warburton, D. E. R., & Bredin, S. S. D. (2017, September). Health benefits of physical activity: A systematic review of current systematic reviews. Current Opinion in Cardiology, 32(5), 541–556. https://doi.org/10.1097/hco.0000000000000437

- Wildenbos, G. A., Peute, L., & Jaspers, M. (2018, June). Aging barriers influencing mobile health usability for older adults: A literature based framework (MOLD-US). International Journal of Medical Informatics, 114, 66–75. https://doi.org/10.1016/j.ijmedinf.2018.03.012

- Wilson, G., Hill, M., & Kiernan, M. D. (2018, December). Loneliness and social isolation of military veterans: Systematic narrative review. Occupational Medicine, 68(9), 600–609. https://doi.org/10.1093/occmed/kqy160

- Zubala, A., MacGillivray, S., Frost, H., Kroll, T., Skelton, D. A., Gavine, A., Gray, N. M., Toma, M., & Morris, J. (2017). Promotion of physical activity interventions for community dwelling older adults: A systematic review of reviews. PLOS ONE, 12(7), e0180902. https://doi.org/10.1371/journal.pone.0180902