Abstract

Background

While the knowledge required of residents training in orthopedic surgery continues to increase, various factors, including reductions in work hours, have resulted in decreased clinical learning opportunities. Recent work suggests residents graduate from their training programs without sufficient exposure to key procedures. In response, simulation is increasingly being incorporated into training programs to supplement clinical learning. This paper reviews the literature to explore whether skills learned in simulation-based settings result in improved clinical performance among orthopedic surgery trainees.

Materials and methods

A scoping review of the literature was conducted to identify papers discussing simulation training in orthopedic surgery. We focused on exploring whether skills learned in simulation transferred effectively to a clinical setting. Experimental studies, systematic reviews, and narrative reviews were included.

Results

A total of 15 studies were included, with 11 review papers and four experimental studies. The review articles reported little evidence regarding the transfer of skills from simulation to the clinical setting, strong evidence that simulator models discriminate among different levels of experience, varied outcome measures among studies, and a need to define competent performance in both simulated and clinical settings. Furthermore, while three out of the four experimental studies demonstrated transfer between the simulated and clinical environments, methodological study design issues were identified.

Conclusion

Our review identifies weak evidence as to whether skills learned in simulation transfer effectively to clinical practice for orthopedic surgery trainees. Given the increased reliance on simulation, there is an immediate need for comprehensive studies that focus on skill transfer, which will allow simulation to be incorporated effectively into orthopedic surgery training programs.

Introduction

Recent publications suggest that surgical residents may graduate from their training programs without sufficient clinical exposure to key procedures.Citation1,Citation2 This is thought, in part, to be driven by duty hour restrictions, pressures to increase operating room (OR) efficiency, and the ongoing development of new techniques for trainees to learn.Citation2,Citation3 In their seminal paper, Reznick and MacRaeCitation3 suggested that simulation can be used as an adjunct to clinical rotations to increase exposure to different procedures and skills. Simulation is an appealing teaching tool as it provides residents with an environment in which they can learn new skills with no impact on patient care.Citation4 Residents are able to make mistakes, receive valuable feedback, and improve performance prior to working with patients, without the time pressures that are omnipresent in traditional clinical teaching situations.Citation4 Furthermore, the variety of simulation materials available, including synthetic models, animal models, cadavers, and virtual realityCitation5 allows educators to choose models best suited for teaching specific skills. Not having to rely on patients with real health issues also allows educators and learners to adjust the fidelity of models to create learning experiences which are optimized for the educational needs of the learner.Citation4 Fidelity is defined as how realistic a simulation model appears to the learner (physical fidelity) or whether the task itself causes similar behavior to what is required in the real world (functional fidelity).Citation17 While high-fidelity simulators may sometimes better mimic the setting in which trainees will actually perform the skill, having too much information can sometimes interfere with learning.Citation6 Thus, the ability to adjust the level of fidelity present can be a powerful educational tool.

In 2006, Reznick and MacRaeCitation3 described the evidence on the transfer of skills from simulation models to the OR. Much of the work they presented focused on laparoscopic procedures. Although laparoscopic procedures share some similarities with procedures performed by orthopedic surgeons, such as arthroscopy, distinct technical differences may limit how well evidence for the transfer of simulation-trained laparoscopic skills to the clinical setting generalizes to orthopedic surgery, especially arthroscopic surgery.Citation7 For instance, the arthroscopic operative field tends to be much shallower than the laparoscopic field. As a result, operative instruments tend to be shorter and require a different, often larger, range of motion, which introduces challenges such as increased travel in and out of the operative field and an increased reliance on anatomic landmarks compared with laparoscopy.Citation8 These technical differences may increase the importance of depth perception and haptic feedback in arthroscopy simulation models.Citation9

Although a few studies have examined how training on arthroscopic simulators affects surgical performance in live patients, little evidence has been published on simulation training for open orthopedic surgical procedures.Citation10 Thus, further work is required to determine whether simulation training in orthopedic surgery actually improves performance in the clinical environment, and how much weight should be given to performance assessments conducted in simulation. This is a timely issue, given the global shift toward competency-based models of education for surgical trainees, which are making increasing use of simulation for teaching and assessment.Citation11 The purpose of this scoping review was to identify and summarize the existing literature on whether orthopedic surgical skills learned through simulation transfer effectively to the clinical setting.

Materials and methods

The databases used to conduct this search were Ovid Embase (from 1974 to August 23, 2016); Ovid MEDLINE(R) In-Process & Other Non-Indexed Citations, Ovid MEDLINE(R) (from 1946 to August 23, 2016); Ovid MEDLINE(R) Epub Ahead of Print (August 23, 2016); Cochrane Library (September 23, 2016); and PubMed (September 23, 2016). The following search terms were used: simulation, transfer, orthopaedic*, orthopedic*. Boolean operators were used to combine the search terms. Two independent reviewers (JY, NW) completed a title and abstract review, followed by a full-text screening of the articles that met the inclusion criteria. Reference lists of the included articles were manually searched for relevant studies. The reviewers met following each stage of the review and discussed any discrepancies until consensus was reached. We included articles that discussed trainees in orthopedic surgery (medical students, residents, fellows) and the transfer of skills from simulation training to a clinical environment. We excluded articles in fields outside orthopedic surgery, articles that focused on staff performance, and conference abstracts or reports that lacked sufficient detail.
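The text lists the search terms but not the exact Boolean expression. One plausible way to combine them, shown here purely as an assumption, is to join the two spelling variants with OR and then join that group to the remaining terms with AND. The helper name `build_query` is hypothetical, and the output is a generic query string rather than the syntax of any particular database.

```python
def build_query(required_terms, spelling_variants):
    """Join spelling variants with OR, then AND the group with the required terms."""
    variant_group = "(" + " OR ".join(spelling_variants) + ")"
    return " AND ".join(list(required_terms) + [variant_group])

# Terms are taken from the text; the AND/OR structure is an assumption.
query = build_query(["simulation", "transfer"], ["orthopaedic*", "orthopedic*"])
print(query)
```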

Results

Article selection

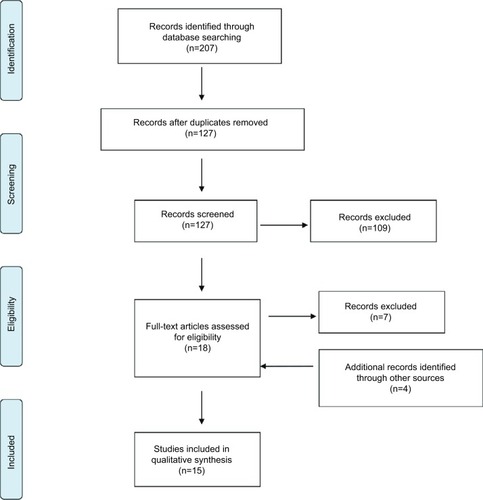

The search identified 94 studies from Ovid, 12 from Cochrane, and 101 from PubMed, giving a total of 207 studies. Of these, 80 were duplicates and were removed. The remaining 127 articles underwent title and abstract screening, after which 18 articles were selected for full-text review. Of these 18 articles, 11 were included in the final qualitative analysis. Two articles were excluded due to insufficient information (a conference abstract and a report), and the other five excluded articles did not look at transfer to a clinical setting. Four papers were added following a hand-search of the reference lists, giving a total of 15 studies. Of the 15 included papers, four were experimental studies and 11 were review articles.
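The selection counts reported above can be checked with simple arithmetic. The following is a minimal sketch in Python; the variable names are illustrative and not part of the original study, and every number is taken directly from the text.

```python
# Tally of the article-selection counts reported in the text.
# Variable names are illustrative; only the numbers come from the review.
records_by_source = {"Ovid": 94, "Cochrane": 12, "PubMed": 101}

identified = sum(records_by_source.values())   # records retrieved by the search
duplicates = 80
screened = identified - duplicates             # title and abstract screening
full_text_reviewed = 18

excluded_full_text = {
    "insufficient information": 2,
    "no transfer to a clinical setting": 5,
}
included_from_search = full_text_reviewed - sum(excluded_full_text.values())
added_from_reference_lists = 4
total_included = included_from_search + added_from_reference_lists

print(identified, screened, included_from_search, total_included)
```

The four printed values correspond to the records identified, the records screened, the articles retained after full-text review, and the final number of included studies.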

Experimental study characteristics and outcomes

Four experimental studies, published from 2008 to 2016, examined whether training orthopedic surgery residents (postgraduate years 1–5) on arthroscopy simulators improved their ability to transfer skills into the OR. Two of these studies investigated knee arthroscopy,Citation12,Citation13 while the other two investigated shoulder arthroscopy.Citation14,Citation15 Although the specific evaluation tools differed across the four studies, all of them measured performance with a checklist and a global rating scale (GRS). Three of the four studies used at least one previously validated checklist or GRS.Citation12,Citation14,Citation15 All four studies also used the time to complete the surgical task as an outcome measure. Additionally, two of the studies incorporated motion analysis to assess the efficiency of hand movements,Citation22 using path length and number of hand movements as indices of performance.Citation13,Citation15 From these performance metrics, three of the four studies concluded that skills transferred to the performance of procedures on live patients,Citation12,Citation13,Citation15 as evidenced by a significant difference between the simulation-trained and traditionally trained (control) groups on at least one outcome measure (see Table 1 for further details).

Table 1 Experimental study characteristics

In addition to varied outcomes, the four studies differed with respect to their methodologies and performance requirements. While three studiesCitation12,Citation14,Citation15 provided their control groups with the same learning materials as the simulation-trained group, including a video of the procedure and a procedural checklist, one study did not.Citation13 Furthermore, one study required trainees to receive a perfect score on visualization and probing tasks before moving on to perform the surgical procedure on a live patient,Citation12 two studies required a minimum number of arthroscopies to be practiced in simulation based on previous literature,Citation14,Citation15 and one study stated that the trainee had to perform 18 arthroscopies in the simulation environment.Citation13 Thus, only one studyCitation12 required trainees to reach a set performance standard before operating on a live patient, whereas the other three set only a minimum number of simulated procedures.

Finally, these studies also differed in whether and how they defined the fidelity of the simulation model used. Only two of the experimental studies, Cannon et alCitation12 and Waterman et al,Citation15 stated the fidelity of their simulation models. The fidelity of the models used by Dunn et alCitation14 and Howells et alCitation13 was not stated directly, but was inferred from another experimental studyCitation15 that used the same simulator model and from a systematic reviewCitation22 of simulation in arthroscopy, respectively.

Review study characteristics

Eleven review articles, published from 2010 to 2016, were included. Five reviews explored arthroscopy simulation more broadly, including whether skills transferred into the OR.Citation7,Citation18–Citation21 Three reviews evaluated the validity of simulator models in arthroscopy training.Citation16,Citation22,Citation23 One reviewed arthroscopy techniques that can be taught using simulator models.Citation24 Two reviews discussed the evidence to support the use of different simulator models in orthopedic training and mentioned the transfer of skills to the clinical environment in their discussions.Citation2,Citation5

Most of these reviewsCitation7,Citation19,Citation20,Citation21 included experimental studies that did not test whether skills transferred from a simulator model to the clinical setting and none of these reviews included any of the four experimental studies discussed in this paper.

Outcome measures

The most common outcome measure among studies was the time to complete the surgical task.Citation7,Citation16,Citation22,Citation23 Most studies (and all studies that measured transfer) included a variety of additional outcome measures such as path length,Citation7 number of collisions,Citation7,Citation21 economy of movement,Citation7 and number of hand movements.Citation22 The review by Aim et alCitation7 reported over 30 different outcome measures across 10 studies, and suggested a need to standardize the outcome measures used in simulation studies.

Transfer of skills

All of the reviews identified a need for further evidence on whether skills learned in simulation transfer to improved operative performance.Citation2,Citation5,Citation19,Citation20 Many of the reviews cited the paper of Howells et alCitation13 as the only study to date demonstrating transfer from simulation to the OR; however, the majority of the reviews were published before three of the four experimental studies included in this review. Several of the reviews also criticized the poor outcome measures and control group used in the Howells et al study.Citation16,Citation19,Citation21 Furthermore, Hetaimish et alCitation22 highlighted that many studies examine the transfer of skills between different types of simulator models rather than transfer from simulator models to clinical performance.

Construct validity

Despite the lack of evidence supporting the transfer of skills from simulation to the clinical environment, most reviews found strong support for construct validity, that is, the ability of simulator models and assessment tools to discriminate between different levels of experience.Citation2,Citation5,Citation7,Citation19,Citation20,Citation22 It is important to note that the construct in question could be interpreted not as “clinical performance”, but rather as the ability to discriminate between levels of trainees’ skills on a simulator task. One review did not find strong support for construct validity (as defined above), though the authors suggested this might be due to practice effects on the simulator, rather than trainee experience with arthroscopic procedures.Citation21 Another review suggested that although there was strong support for the ability of simulation models to discriminate between novices and experts, future studies should investigate whether models can discriminate between novices and intermediate learners.Citation23

Competence

The review studies also commented on the need to define competent performance in arthroscopic training.Citation7,Citation16,Citation19 This includes defining what a competent performance on a simulator is, particularly as it relates to transfer into the clinical setting,Citation19 how many simulation sessions it takes to facilitate the transfer of those skills,Citation7,Citation19 and lastly, how many arthroscopic procedures in the OR must be completed for a trainee to be deemed competent for independent practice.Citation16

Fidelity

Out of the 11 reviews, six commented only on the fidelity of specific simulator models (i.e., identified each model as being either high or low fidelity).Citation5,Citation16,Citation18,Citation19,Citation23,Citation24 Stirling et alCitation2 suggested high-fidelity simulators can provide haptic feedback, potentially facilitating the transfer of skills from simulation to the OR;Citation2 they also suggested high fidelity might be more appropriate for senior surgeons.Citation2 However, one paper discussed the conflicting evidence on whether high or low fidelity is more beneficial for learning surgical skills.Citation22

Discussion

This scoping review suggests evidence for the transfer of skills from simulation to the clinical environment remains sparse for surgical procedures related to the practice of orthopedic surgery. Importantly, none of the experimental studies reviewed focused on the transfer of skills from simulation to the clinical setting for open procedures.

Only four experimental studies measuring the transfer of arthroscopic skills acquired in simulation to performance in the OR were identified, with three concluding that transfer occurred. One experimental study showed a significant difference between simulation- and traditionally trained trainees’ performance in simulation and the OR on a checklist, but not on the GRS.Citation12 One interpretation of these data is that the simulator helped trainees to learn the steps of the procedure, but did not help them to perform the procedure better in the clinical context, which calls into question the authors’ assertion of skill transfer. Another experimental study showed a significant difference between the simulation- and traditionally trained trainees on only half of the outcomes it measured.Citation15 Additionally, this study measured different aspects of performance in simulation (motion analysis) and the clinical setting (safety scores and a checklist), so it is difficult to determine whether improvements in performance in simulation were linked to, or transferred to, improved performance in the clinical setting. The variety of assessment tools and outcome measures used across the studies not only makes it challenging to compare them, but also raises the question of which strategy is most appropriate for measuring transfer of learning.

While most studies in this review used time to complete the surgical task as a measure of performance, additional outcome measures, such as task-specific checklists, GRS, and motion analysis, were used in only some papers. The four experimental studies included in this review used checklists and GRS, which are traditionally used to measure surgical skill performance in the clinical setting. Checklists measure knowledge of the steps of a procedure, whereas a GRS captures how well the task was completed.Citation22 As such, a GRS is a useful supplement to checklists because it can distinguish between novices and experts, who might both know the steps but differ significantly in performance.Citation22 It has also been shown that as trainees become more familiar with procedures, some of the underlying neural processes become automatized, which results in poorer performance on task-specific checklists.Citation25 Educators may therefore consider focusing on GRS when judging patient safety and trainees’ readiness to move on to the next phase of training.

Another weakness of the studies reviewed relates to the validity of the tools that were used to assess performance.Citation22 Since validity is context specific, educators should be cautious about applying a “validated” tool to a new context without proper testing. This is of particular importance for studies investigating transfer, as the simulated and clinical settings are different contexts. Thus, studies investigating transfer should establish the validity of outcome measures in both the simulated and clinical settings being used. Using the same validated outcome measures in both settings will allow for appropriate comparisons to establish transfer of learning.

Another tool that was used to measure performance is motion analysis, which can generate metrics such as number of collisions, number of hand movements, and path length to provide information on the level of skill with which a technique is performed.Citation12 The literature suggests that motion analysis measures are able to accurately discriminate between trainees with different levels of arthroscopic experienceCitation13 and thus have strong construct validity as defined by the studies in this review. Nevertheless, there remains a lack of evidence supporting whether motion analysis can predict transfer of learning from a simulation laboratory to the clinical setting. Furthermore, motion analysis is rarely used in the OR, making the comparison of performance between simulation and the clinical setting challenging.
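As an illustration of one such metric, path length can be taken as the total distance travelled by a tracked hand or instrument tip. The sketch below assumes that motion is recorded as a sequence of (x, y, z) positions; the function name, the data format, and the sample coordinates are hypothetical and do not come from any of the reviewed studies.

```python
import math

def path_length(positions):
    """Sum of straight-line distances between consecutive 3D samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))

# Hypothetical samples in arbitrary units, for illustration only.
samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
print(path_length(samples))
```

Under this definition, a shorter path for the same completed task would indicate more economical movement, which is how the metric is typically read as an index of skill.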

Clearly, more research needs to be focused on measuring how skills transfer from the simulation laboratory to the clinical setting in orthopedic surgery. The most appropriate outcome measures for assessing competency in orthopedic procedures must be developed, validated, and assessed. Moreover, in light of the recent focus on competency-based medical education, the lack of understanding of what defines competence has broader implications for determining whether a trainee is able to practice independently. Moving forward, educators must create evaluation frameworks that clearly define competence, so that training programs can meaningfully compare performance in simulation with performance in the clinical environment, and implement simulation training in competency-based curricula.

Lastly, another critical component of successfully incorporating simulation into surgical training is the fidelity of the learning model. While the fidelity of the simulator models in the experimental articles in this review was identified, we were unable to determine whether transfer of skills from simulation to the OR differs between low- and high-fidelity models, due to the varying outcome measures across studies. The reviews included in this paper suggested that a difference does exist between low- and high-fidelity models for the transfer of learning,Citation22 but did not elaborate on their reasoning. In 2012, Norman et alCitation17 conducted a review of studies comparing low- and high-fidelity models and concluded that the benefits gained from low- and high-fidelity models are equivalent. However, this may have been due to many simulators being incorrectly labeled as “high fidelity”, when, in fact, the functional fidelity of the model is low.Citation17 For example, a virtual reality simulator may place the participant in an environment that looks real; however, if participants are not forced to use their hands the way they would in the OR (haptic feedback, space limitations, and so on), the simulator would be high in physical fidelity but low in functional fidelity. These two subsets of fidelity should be carefully considered, so that the fidelity of simulation models can be adjusted to optimize learning based on the level of the learner and hopefully enhance transfer of skills to the clinical setting.

Conclusion

More work needs to be done regarding the transfer of skills from the simulation environment to the clinical setting in orthopedic surgery. Studies need to use reliable and consistent outcomes, include clear definitions of competence, and consider both physical and functional fidelity. Tools used to measure transfer of skills from the simulation laboratory to the clinical setting should be validated in the context in which they are being used, and the same aspects of performance should be measured in simulation and the clinical setting. Improving these aspects of simulation studies in orthopedic surgery will help determine whether skills can be transferred into the clinical environment, and will help training programs better assess the competence of their trainees.

Disclosure

The authors report no conflicts of interest in this work.

References

1. Bell RH Jr, Biester TW, Tabuenca A. Operative experience of residents in US general surgery programs. Ann Surg. 2009;249(5):719–724. PMID 19387334.

2. Stirling ER, Lewis TL, Ferran NA. Surgical skills simulation in trauma and orthopaedic training. J Orthop Surg Res. 2014;9:126. PMID 25523023.

3. Reznick RK, MacRae H. Teaching surgical skills—changes in the wind. N Engl J Med. 2006;355(25):2664–2669. PMID 17182991.

4. Thomas GW, Johns BD, Marsh JL, Anderson DD. A review of the role of simulation in developing and assessing orthopaedic surgical skills. Iowa Orthop J. 2014;34:181–189. PMID 25328480.

5. Akhtar KS, Chen A, Standfield NJ, Gupte CM. The role of simulation in developing surgical skills. Curr Rev Musculoskelet Med. 2014;7(2):155–160. PMID 24740158.

6. Brydges R, Carnahan H, Rose D, Rose L, Dubrowski A. Coordinating progressive levels of simulation fidelity to maximize educational benefit. Acad Med. 2010;85(5):806–812. PMID 20520031.

7. Aim F, Lonjon G, Hannouche D, Nizard R. Effectiveness of virtual reality training in orthopaedic surgery. Arthroscopy. 2016;32(1):224–232. PMID 26412672.

8. Akhtar K, Sugand K, Wijendra A. The transferability of generic minimally invasive surgical skills: is there crossover of core skills between laparoscopy and arthroscopy? J Surg Educ. 2016;73(2):329–338. PMID 26868317.

9. van der Meijden OA, Schijven MP. The value of haptic feedback in conventional and robot-assisted minimal invasive surgery and virtual reality training: a current review. Surg Endosc. 2009;23(6):1180–1190. PMID 19118414.

10. Burns GT, King BW, Holmes JR, Irwin TA. Evaluating internal fixation skills using surgical simulation. J Bone Joint Surg Am. 2017;99(5):e21. PMID 28244920.

11. Sonnadara R, Mui C, McQueen S. Reflections on competency-based education and training for surgical residents. J Surg Educ. 2014;71(1):151–158. PMID 24411437.

12. Cannon WD, Garrett WE Jr, Hunter RE. Improving residency training in arthroscopic knee surgery with use of a virtual-reality simulator: a randomized blinded study. J Bone Joint Surg Am. 2014;96(21):1798–1806. PMID 25378507.

13. Howells NR, Gill HS, Carr AJ, Price AJ, Rees JL. Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br. 2008;90(4):494–499. PMID 18378926.

14. Dunn JC, Belmont PJ, Lanzi J. Arthroscopic shoulder surgical simulation training curriculum: transfer reliability and maintenance of skill over time. J Surg Educ. 2015;72(6):1118–1123. PMID 26298520.

15. Waterman BR, Martin KD, Cameron KL, Owens BD, Belmont PJ Jr. Simulation training improves surgical proficiency and safety during diagnostic shoulder arthroscopy performed by residents. Orthopedics. 2016;39(3):e479–e485. PMID 27135460.

16. Hodgins JL, Veillette C. Arthroscopic proficiency: methods in evaluating competency. BMC Med Educ. 2013;13:61. PMID 23631421.

17. Norman G, Dore K, Grierson L. The minimal relationship between simulation fidelity and transfer of learning. Med Educ. 2012;46(7):636–647. PMID 22616789.

18. Boutefnouchet T, Laios T. Transfer of arthroscopic skills from computer simulation training to the operating theatre: a review of evidence from two randomised controlled studies. SICOT J. 2016;2:4. PMID 27163093.

19. Frank RM, Erickson B, Frank JM. Utility of modern arthroscopic simulator training models. Arthroscopy. 2014;30(1):121–133. PMID 24290789.

20. Vaughan N, Dubey VN, Wainwright TW, Middleton RG. Does virtual-reality training on orthopaedic simulators improve performance in the operating room? Paper presented at: Science and Information Conference; July 28–30, 2015; London, UK.

21. Modi CS, Morris G, Mukherjee R. Computer-simulation training for knee and shoulder arthroscopic surgery. Arthroscopy. 2010;26(6):832–840. PMID 20511043.

22. Hetaimish B, Elbadawi H, Ayeni OR. Evaluating simulation in training for arthroscopic knee surgery: a systematic review of the literature. Arthroscopy. 2016;32(6):1207–1220.e1. PMID 27030548.

23. Slade Shantz JA, Leiter JR, Gottschalk T, MacDonald PB. The internal validity of arthroscopic simulators and their effectiveness in arthroscopic education. Knee Surg Sports Traumatol Arthrosc. 2014;22(1):33–40. PMID 23052120.

24. Tay C, Khajuria A, Gupte C. Simulation training: a systematic review of simulation in arthroscopy and proposal of a new competency-based training framework. Int J Surg. 2014;12(6):626–633. PMID 24793233.

25. Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implications. Acad Med. 1990;65(10):611–621. PMID 2261032.