Abstract

Aim

To assess the trend of changes in the evaluation scores of faculty members and the discrepancy between administrators’ and students’ perspectives in a medical school from 2006 to 2015.

Materials and methods

This repeated cross-sectional study was conducted on the 10-year evaluation scores of all faculty members of a medical school (n=579) in an urban area of Iran. Data on evaluation scores given by students and administrators and the total of these scores were evaluated. Data were analyzed using descriptive and inferential statistics including linear mixed effect models for repeated measures via the SPSS software.

Results

There were statistically significant differences between the students’ and administrators’ perspectives over time (p<0.001). The mean of the total evaluation scores also showed a statistically significant change over time (p<0.001). Furthermore, the mean of changes over time in the total evaluation score between different departments was statistically significant (p<0.001).

Conclusion

The trend of changes in the students’ evaluations was clear and positive, but the trend of the administrators’ evaluations was unclear. Since the evaluation of faculty members is affected by many other factors, further studies are needed.

Introduction

In higher education institutions, including universities of medical sciences, teaching by faculty members is considered a qualitative index of education, and it is evaluated using different methods and by different sources, including students, administrators, peers, and self-evaluation [1]. The aim of such evaluations is to help managers hire appropriate faculty members and decide on promotions or contract extensions. They can also help with faculty members’ professional development and improvement [2–4]. For this purpose, there is a need to evaluate improvements in teaching [5]. According to Murray et al [5], three methods can be used to assess the improvement of teaching: 1) studying the perspectives of faculty members, 2) performing experimental assessments, and 3) comparing the mean evaluation scores over the years. Many studies have been conducted on the perspectives of faculty members, but few are available on the remaining two methods. It is believed that faculty members try to regulate their performance based on the results of evaluations to improve their teaching [6]. Therefore, evaluations performed in academic settings have caused changes in faculty members’ performance. Dunkin [7] revealed that such changes are more obvious in faculty members with negative evaluation results than in those with positive ones.

Shakournia et al [8] assessed the trend of faculty members’ evaluation scores in a university of medical sciences in Iran and reported a constant evaluation process without any meaningful change over a 10-year period. Considering differences in students as evaluators in different years, they stated that faculty members’ performance had not changed significantly over those years [8]. In a similar study in the USA, the evaluation scores of 2,800 faculty members were assessed over a 5-year period, and faculty members’ evaluation showed a constant trend [5]. In the study by Rafiei et al in a university of medical sciences in Iran, faculty members’ evaluation for theoretical courses had a favorable and ascending trend, but no significant change was observed in the trend of evaluation of clinical courses [9,10]. Similar studies in Canada showed that the assessed trend of changes was positive [5,11].

To avoid one-dimensional evaluation, the teaching methods and educational activities of faculty members are evaluated once a year from the learners’ and administrators’ perspectives using questionnaires. Each instructor receives a written feedback report consisting of ratings on each item of the questionnaire, which indicates the strengths and weaknesses of the faculty member’s performance. Additionally, faculty members are informed of the mean ranks of the scores given by students and administrators. The results can be used for making decisions on faculty members’ employment status and annual promotion. This indicates the necessity of assessing the effect of evaluation on the improvement of faculty members’ performance. Furthermore, such an assessment can be used to improve the faculty evaluation system. Therefore, this study aimed to assess the trend of changes in the evaluation scores of faculty members and the discrepancy between administrators’ and students’ perspectives in a medical school from 2006 to 2015.

Materials and methods

Design and setting of the study

This was a repeated cross-sectional study. The sample included 579 faculty members of a medical school in 28 clinical medicine departments (anesthesiology, cardiology, community medicine, dermatology, emergency medicine, ENT, infectious diseases, internal medicine, neurology, neurosurgery, obstetrics and gynecology, ophthalmology, orthopedics, urology, pediatrics, physical medicine & rehabilitation, radiology, radiotherapy, and surgery) and basic sciences departments (anatomy, genetics, immunology, medical physics, parasitology and mycology, pathology, and physiology). Over the 10-year period, each faculty member was evaluated between one and 10 times by students (undergraduate or postgraduate) and administrators (the director and vice director of the school and the chairs of the departments).

Data collection

For data collection, evaluation records of the faculty members over the decade from 2006 to 2015 were collected and analyzed. In this system, faculty members are evaluated by students and administrators using appropriate questionnaires. The students’ assessment questionnaire in theoretical and practical courses included areas such as punctuality, academic and practical mastery, teaching and assessment methods, and communication skills with students. The administrators’ questionnaire included areas such as interaction with students, interaction with the department and colleagues, curriculum planning, professional ethics and conscientiousness, discipline and time management, and mastery in the field of specialty.

During the 10 years of study, before the end of each academic year, students and administrators evaluated the professors online or manually, and their average marks were collected and submitted to the professors in a report.

Each evaluation record included the students’ evaluation score, the administrators’ evaluation score, and the total evaluation score. The total score was the weighted mean of the two scores. The data were collected from the online system or from the analysis software for paper records. Of note, online evaluation has been conducted in the medical school since 2006, but clinical faculty members were evaluated using the paper format.
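The weighted mean mentioned above can be illustrated with a minimal sketch. The weights (0.6 for students, 0.4 for administrators), the function name `total_score`, and the sample scores are hypothetical and chosen only for illustration; the weights actually used by the school are not reported in this article.

```python
def total_score(student_score, admin_score, student_weight=0.6, admin_weight=0.4):
    """Return the weighted mean of the two evaluation scores.

    The default weights are hypothetical placeholders, not the school's values.
    """
    weight_sum = student_weight + admin_weight
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    # Dividing by the weight sum keeps the result on the original score scale
    # even if the weights do not add up to 1.
    return (student_weight * student_score + admin_weight * admin_score) / weight_sum


# Hypothetical scores on a 0-100 scale
print(round(total_score(90.0, 95.0), 2))
```

With equal weights the function reduces to the simple average of the two scores, which is a quick way to check that it behaves as expected.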

Statistical analyses

Descriptive and inferential statistics were used for data analysis. Continuous data were presented as mean and standard deviation and categorical data as number and percentage. The normal distribution of continuous data was evaluated using the Kolmogorov–Smirnov test and Q–Q plot. The linear mixed effects model was used for analyzing repeated evaluation scores. This approach enabled us to evaluate changes over time and compare changes in the scores of members in different departments.
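One way such a model could be written is sketched below. The results report tests for the effects of department, time, and their interaction; the random intercept for each faculty member and the symbols used are assumptions introduced for illustration, not a specification stated by the authors.

$$
y_{ijt} = \beta_0 + \alpha_j + \gamma_t + (\alpha\gamma)_{jt} + b_i + \varepsilon_{ijt}, \qquad b_i \sim N(0, \sigma_b^2), \quad \varepsilon_{ijt} \sim N(0, \sigma^2)
$$

Here $y_{ijt}$ is the evaluation score of faculty member $i$ in department $j$ in year $t$, $\alpha_j$ is the department effect, $\gamma_t$ is the time effect, $(\alpha\gamma)_{jt}$ is their interaction, and $b_i$ is a faculty-specific random intercept that accounts for repeated measurements on the same person.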

Ethical considerations

Necessary permissions were granted by the ethics committee affiliated with Isfahan University of Medical Sciences and Educational Development Center of the university before data collection. Anonymity of faculty members was maintained through assigning codes.

Results

The results showed that the medical school currently has more than 519 faculty members, of whom more than 60% are male and less than 40% are female. Their mean age is 50 years. The data collected in this study were the faculty members’ evaluation scores given by students and administrators and the total evaluation score (Table 1). To maintain the confidentiality of the data, the educational departments are identified by codes.

Table 1 Faculty members’ evaluation scores in different departments

As shown in Table 1, the mean scores of evaluation by the students and administrators in various departments were 89.14±8.04 and 94.04±5.18, respectively. The total mean score of evaluation was 92.11±5.05, with maximum and minimum mean scores of 94.93±2.49 and 89.19±5.42, respectively.

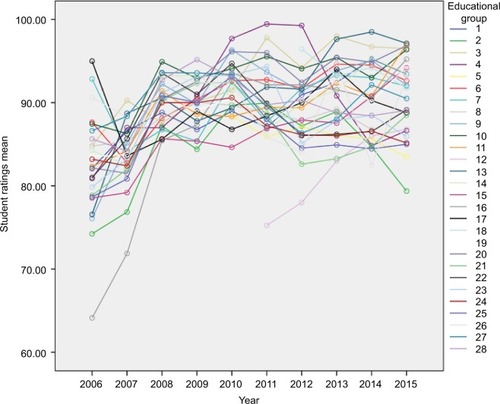

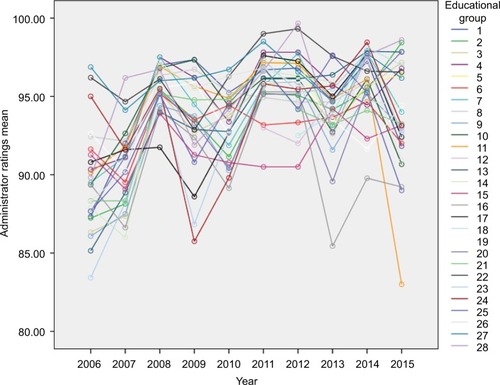

In evaluation by the students, a significant difference was observed between various departments (p<0.001), indicating the effect of the academic department. Additionally, the mean evaluation scores changed significantly over the years (p<0.001), indicating the effect of time. Regarding the interaction between time and department, the changes over the years in the various academic departments were also statistically significant (p<0.001). Figure 1 shows the trend of changes in evaluation by the students in the departments. While an occasional drop was observed in some departments, the general trend was gradually ascending. In some years, such as the third academic year, the ascent was steeper, which should be investigated in future studies.

The mean scores of evaluation by the administrators differed significantly between the various departments (p<0.001), indicating the effect of the academic department. The mean scores also changed significantly over time (p<0.001), indicating the effect of time. Furthermore, the changes over time differed significantly between the departments (p<0.001), which showed the interaction between time and the academic department. As can be seen from Figure 2, the difference was significant not only between various academic departments but also within each department over time. While these changes had a positive trend during some periods, they had a descending trend during others. However, comparing the range of scores in the early years with that in the 10th year, the maximum and minimum scores increased in most departments, so the overall change was positive. During this time period, the trends in most departments were aligned with each other.

Figure 1 The mean scores of faculty members’ evaluation by the students in different educational departments from 2006 to 2015.

Figure 2 The mean scores of faculty members’ evaluation by the administrators in different educational departments from 2006 to 2015.

In the total evaluation score, a significant difference was observed between the academic departments (p<0.001) indicating the effect of the department. Furthermore, over consecutive years (from 2006 to 2015), the mean evaluation scores showed a significant change (p<0.001) due to the effect of time. Also, over time, changes in various departments were statistically significant (p<0.001) indicating the interaction between time and the department.

Discussion

The aim of this study was to assess the trend of changes in the evaluation scores of faculty members and to find discrepancies between the administrators’ and students’ perspectives. It was found that the overall evaluation had an ascending and positive trend. The findings were in agreement with those of Murray et al [5] at the University of Ontario in Canada, Rafiei et al [10] at the Arak University, and Rezaei et al [12] at the University of Kerman. However, in the study by Rezaei et al [12], the mean evaluation score was lower than in the present study: an evaluation score of 75 would be considered low in the present study, whereas the mean evaluation score of faculty members at Kerman University was reported as 75. This difference may be due to the dominant culture of the scoring system and the institutional context and environment.

In the present study, a significant difference was observed between the mean evaluation scores in various academic departments, indicating that the features and conditions of each department affect the scores given by the students and the administrators. Hallinger [13] believes that institutional and cultural conditions are static and affect interactions within the institution. In a qualitative study in the same institution, faculty members stated that, besides administrators, students of different schools gave different evaluation scores. They believed that this difference was caused by the unique context and environment of each school [14]. Maroofi [15] also stated that the quality of the educational environment and the students could affect the quality of teaching and, consequently, the evaluation results. In most departments and over the years, the evaluation scores given by the administrators were higher than those given by the students, possibly because administrators take interpersonal communication into account. Studies by Tahmasbi et al [16] and Shakurnia and Taherzadeh [17] showed similar results.

Evaluation by the students also indicated an improving trend in the evaluation scores of almost all departments and meaningful changes in mean scores over time. This result was similar to the findings of Rafiei and Mosayebi [10] in Arak and Fattahi et al [18] in Kerman. The differences between years in the academic departments indicated that faculty members’ performance improved over time. In addition to gaining more experience [19], the reason for such changes could be the effect of courses and workshops held by the Educational Development Center, which were developed based on the results of faculty members’ evaluation and official and unofficial needs assessments. These courses may improve faculty members’ educational performance and increase their evaluation scores. The study by Shakournia et al [8] also showed that training courses could improve faculty members’ performance and increase their evaluation scores. Significant changes were observed in the mean scores of different departments in each year, which could be due to differences in the students’ perspectives over the years [8]. However, besides the effects of the conditions and features of each school on the results of evaluation, students appear to fill out evaluation forms carefully, purposefully, and accurately and to give appropriate scores to faculty members. This is in disagreement with the common notion that students fill out evaluation forms inaccurately and without enough attention [20,21]. Nevertheless, the effects of the viewpoints and attitudes of peers and students in changing the dominant culture and environment of the school should not be ignored [20]. Sometimes, student ratings are even regarded as a conspiracy against or in favor of a particular faculty member [22]. Lack of sufficient explanation to students about how to complete evaluation forms, or lack of enough motivation for doing so, can influence evaluation results.

Because of differences in questionnaires, the evaluation results are different between the students and administrators. It is believed that interpersonal relationships between faculty members and administrators influence evaluation results and make it difficult to judge the evaluation outcome.

The mean score of evaluation by the administrators had no particular order over the years in different academic departments and followed no specific pattern. In the study by Shakurnia and Taherzadeh [17], evaluation by administrators was less stable than evaluation by students. A probable reason is the managerial changes that happen in schools over the years, such as changes of the director and vice director of the school and even the chairs of departments. Managerial changes and lack of explanation about the process of faculty members’ evaluation can influence personal perceptions, attitudes, and beliefs, and thus the evaluation score. The lack of a unified protocol for the evaluation of faculty members by administrators is another reason for such a difference, as no particular criterion exists for evaluation. It has been reported that evaluation by administrators is not always done fairly and that personal opinions and intentions are involved [23]. This hinders the effectiveness of administrators’ opinions in creating changes in faculty members’ performance [24].

Taylor and Tyler [25] mentioned that faculty members are expected to take measures to improve their performance after receiving their annual evaluation reports. Therefore, a positive trend indicates the presence of the intended change. Assessing the conformity of these results with factors such as faculty members’ academic degrees, participation in educational courses, changes in students’ performance, the rate of students’ success during different years, and the characteristics of students such as age, grade-point average, place of residence, and parents’ literacy level is suggested for future studies.

Conclusion

The trend of changes in the results of faculty members’ evaluations by the students was clear and positive, but the same trend in the administrators’ evaluations was unclear. Therefore, future studies should examine this discrepancy and its related factors.

To increase the accuracy of judgments, it is necessary to make appropriate changes in the evaluation system. Improving the evaluation system in universities can have positive effects on faculty members’ performance and help improve academic education.

This study did not assess reasons for changes in the trend of faculty members’ evaluation during the intended time period. Therefore, further studies should be designed and conducted for analyzing the reasons behind these changes.

Acknowledgments

The authors would like to thank the staff working in the faculty members’ evaluation department of the Educational Development Center who helped us in the production of this article.

Disclosure

The authors report no conflicts of interest in this work.

References

- Taghavi S, Adib Y. Phenomenological experiences graduate students of Tabriz University humanities in faculty evaluation practices. Pal Jour. 2017;16(3):367–373.

- Kamali F, Yamani N, Changiz T. Investigating the faculty evaluation system in Iranian Medical Universities. J Educ Health Promot. 2014;3:12. PMID 24741652.

- Gillmore GM. Drawing inferences about instructors: the inter-class reliability of student ratings of instruction. Office of Educational Assessment, Report No 00-02. University of Washington, US; 2000. Available from: http://depts.washington.edu/assessmt/pdfs/reports/OEAReport0002.pdf. Accessed February 5, 2017.

- DeCosta M, Bergquist E, Holbeck R, Greenberger S. A desire for growth: online full-time faculty’s perceptions of evaluation processes. J Educ Online. 2016;13(2):19–52.

- Murray HG, Jelley RB, Renaud RD. Longitudinal trends in student instructional ratings: does evaluation of teaching lead to improvement of teaching? 1996. Available from: https://eric.ed.gov/?id=ED417664. Accessed January 14, 2017.

- Archibong IA, Nja ME. Towards improved teaching effectiveness in Nigerian public universities: instrument design and validation. J Higher Educ Stud. 2016;1(2):78.

- Dunkin MJ. Novice and award-winning teachers’ concepts and beliefs about teaching in higher education. In: Hativa N, Goodyear J, editors. Teacher Thinking, Beliefs and Knowledge in Higher Education. Vol 28. Science & Business Media; 2002:41–57.

- Shakournia A, Elhampour H, Mozafari A, DashtBozorgi B. Ten year trends in faculty members’ evaluation results in Jondi Shapour University of Medical Sciences. Iran J Med Educ. 2008;7(2):309–316.

- Rafiee M, Seifi M. Study of factors related to teacher evaluation by the students in Arak University of Medical Sciences. Presented at: 1st International Conference on Reform and Change Management in Medical Education, 6th Congress on Medical Education; November 30–December 2, 2003:66; Tehran, Iran.

- Rafiei M, Mosayebi G. Results of six years professors’ evaluation in Arak University of Medical Sciences. Arak Med Univ J. 2010;12(4):52–62.

- Murray HG. Student evaluation of teaching: has it made a difference? Presented at: Annual Meeting of the Society for Teaching and Learning in Higher Education; June 2005; Charlottetown, Prince Edward Island.

- Rezaei M, Haghdoost AA, Okhovati M, Zolala F, Baneshi MR. Longitudinal relationship between academic staffs’ evaluation score by students and their characteristics: does the choice of correlation structure matter? J Biostat Epidemiol. 2016;2(1):40–46.

- Hallinger P. Using faculty evaluation to improve teaching quality: a longitudinal case study of higher education in Southeast Asia. Educ Asse Eval Acc. 2010;22(4):253–274.

- Kamali F, Yamani N, Changiz T, Fatemeh Z. Factors influencing the results of faculty evaluation in Isfahan University of Medical Sciences. J Educ Health Promot. 2018;7:13. PMID 29417073.

- Maroofi Y. The determining of teaching component weight for evaluation of faculty member performance with analytical hierarchy process models. Iran Higher Educ. 2011;3(4):143–168.

- Tahmasbi S, Vallian A, Moezzyzadeh M. The correlation between the students’ & authorities’ ratings for faculty evaluation in Shahid Beheshti School of Dentistry. J Dent Sch Shahid Beheshti Univ Med Sci. 2012;29(5):358–365.

- Shakurnia A, Taherzadeh M. Correlation between teachers’ evaluation scores by students, the department head and the faculty dean. Educ Strategies Med Sci. 2014;7(3):155–160.

- Fattahi Z, Mousapour N, Haghdoost A. The trend of alterations in the quality of educational performance in faculty members of Kerman University of Medical Sciences. Strides Dev Med Educ. 2006;2(2):63–71.

- Wen SH, Xu JS, Carline JD, Zhong F, Zhong YJ, Shen SJ. Effects of a teaching evaluation system: a case study. Int J Med Educ. 2011;2:18.

- Greenwood GE, Bridges CM, Ware WB, McLean JE. Student evaluation of college teaching behaviors instrument: a factor analysis. J Higher Educ. 1973;44:596–604.

- Ludlow L. A longitudinal approach to understanding course evaluations. Prac Assess Res Eval. 2005;10(1):1–13.

- Javadi A, Arab baferani M. Asib shenasie raveshhaye arzyabi keyfiate tadris az tarighe daneshjouyan ba roykarde boomi. Presented at: The first conference in the quality assessment in University; Sharif University, Tehran; May 7, 2014; Tehran, Iran. Persian.

- Karimi-Moonaghi H, Zhianifard A, Jafarzadeh H, Behnam HR, Tavakol-Afshari J. Experiences of faculty members in relation to the academic promotion process. Strides Dev Med Educ. 2015;11(4):485–499.

- Campbell JP. Evaluating teacher performance in higher education: the value of student ratings [dissertation]. Orlando, FL: University of Central Florida; 2005.

- Taylor ES, Tyler JH. Can teacher evaluation improve teaching? Educ Next. 2012;12(4).