Abstract

Background

After almost a decade of competency-based postgraduate training, the assessment of technical skills remains more subjective than objective. National data on the assessment of technical skills during surgical training are lacking. We conducted this study to document the tools currently used to assess technical skills in different surgical specialties, their relationship with remediation, the tools program directors recommend, and program directors' attitudes toward the available objective tools for assessing technical skills.

Methods

This study was a cross-sectional survey of surgical program directors (PDs). The survey was developed with the help of a focus group and then sent to 116 PDs. It collected demographic information about each program, the objective assessment tools used, and the reasons for not using them. The last section asked which tools PDs recommend and explored their attitudes toward, and motivation for, applying these tools in their programs. The associations between responses to the assessment questions and remediation were evaluated statistically.

Results

Seventy-one (61%) participants responded. Of the respondents, 59% reported using only nonstandardized, subjective, direct observation to assess technical skills. Sixty percent used only summative evaluation, 15% used only formative evaluation, and 22% used both summative and formative evaluation of their residents' technical skills. Operative portfolios were kept by 53% of programs. Only 29% of programs (19 of 65) had a mechanism for remediation.

Conclusion

The survey showed that surgical training programs use different tools to assess surgical skills competency. Having a clear remediation mechanism was strongly associated with reporting remediation, which suggests a greater capacity to detect struggling residents. Surgical training leadership should invest more in standardizing the assessment of surgical skills.

Background

There has been a recent, significant shift in residency training toward competency-based programs, such as the Canadian Medical Education Directives for Specialists (CanMEDS) framework[1] and the Accreditation Council for Graduate Medical Education (ACGME) Outcome Project.[2] These frameworks identify specific competencies that residents should acquire during their training (Table 1). Surgical competency is covered under the medical expert role in CanMEDS and under patient care in the ACGME framework. However, since these projects were introduced, program directors (PDs) have struggled to identify an optimal tool for assessing this competency that meets three criteria.[3,4] The first criterion, reliability, refers to the reproducibility, consistency, and generalizability of the results over time. The second, feasibility, holds that a new tool will be accepted only if it is cost-effective, easy to administer, and not time-consuming. The third, validity, asks whether the tool assesses what it is supposed to assess. Validity is commonly divided into five subcategories: predictive validity, which asks whether the tool can predict future performance; face validity, which asks whether the tool reflects real life; construct validity, which asks whether the tool measures the underlying trait it is designed to measure; content validity, which asks whether the tool covers the domain being assessed; and criterion-related validity, which asks whether the results obtained with the tool correlate with those of the current gold standard.
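Criterion-related validity is usually expressed as a correlation between scores from the new tool and scores from the gold standard. The Python sketch below computes Pearson's r for paired scores. The score lists are hypothetical and only illustrate the calculation, and Pearson's r is an assumed choice here; rank-based coefficients are also used in practice.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between a new tool's scores (xs) and gold-standard scores (ys)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length score lists with at least two entries")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when one set of scores is constant")
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical scores for five residents (illustrative only, not study data).
new_tool_scores = [12, 15, 18, 22, 25]
gold_standard_scores = [2, 3, 3, 4, 5]
print(round(pearson_r(new_tool_scores, gold_standard_scores), 2))  # prints 0.97
```

A coefficient close to 1 would support criterion-related validity, although the value regarded as adequate varies between studies.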

Table 1 CanMEDS and ACGME core competencies

After almost a decade of competency-based postgraduate training, the assessment of technical skills remains more subjective than objective, relying mainly on the assessor's gut feeling and on nonstandardized direct observation of the resident performing a procedure. These subjective methods have poor reliability, as shown by their low interrater and test–retest reliability.[3,5] Dr Carlos A Pellegrini, former president of the American Surgical Association, asked in his presidential address: "Is performance measurement possible and accurate?"[6] He called for a valid, reliable, measurable, and feasible assessment tool. In recent decades, surgical educators around the world have developed and validated objective tools for assessing surgical residents' technical skills. Martin et al developed a method modeled on the Objective Structured Clinical Examination and named it the Objective Structured Assessment of Technical Skills (OSATS).[7] Reflecting the move toward greater use of technology in training, Fried et al[8] developed the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS), and Datta et al[9] developed the Imperial College Surgical Assessment Device (ICSAD), which assesses surgical residents' dexterity using electromagnetic motion tracking.

The Saudi Commission for Health Specialties (SCFHS)[10] was established by royal decree in 1983. It organizes, evaluates, and sets policies for the accreditation of training programs, and it is also the accrediting and qualifying body for trainees. Thirty-seven residency and fellowship training programs in multiple health specialties have been recognized by the SCFHS. One of its main aims is to supervise and assure the quality of graduates by offering a fair assessment to all trainees.

To bring about change in a complex organizational system, Heifetz and Laurie[11] recommend "getting on the balcony" to gain a clear view of the current situation. National data on the assessment of technical skills in surgical specialties are lacking. We conducted this study to explore the tools that different surgical programs currently use to assess technical skills, their relationship with remediation (ie, the action a program director takes when a resident does not meet the criteria for promotion to the next level of training), the tools PDs recommend, and PDs' attitudes toward the available objective tools for technical skills assessment.

Methods

Study design

A cross-sectional study was carried out between January 27, 2010 and March 24, 2010 and involved all of the surgical PDs accredited by the SCFHS.

Population, sample size, and sampling

This census study involved the PDs of all surgical programs accredited by the SCFHS (general surgery, orthopedic surgery, urology, otolaryngology, plastic surgery, maxillofacial surgery, and obstetrics and gynecology). The inclusion criterion was being a surgical PD accredited by the SCFHS. PDs not identified by the SCFHS or with invalid or incorrect email addresses on the SCFHS website were excluded, as were ophthalmology and fellowship PDs.

The survey

For the survey, we used a modified version of a previously published survey, with the authors' permission.[12] The modified survey was designed by the primary investigator and the coauthors. A focus group was convened to assess the content and face validity of the survey. It was composed of five surgeons from different surgical specialties who hold degrees in epidemiology and/or medical or surgical education. None of these surgeons were PDs, to avoid contaminating the final sample.

The survey collected demographic information about the program (specialty, number of residents evaluated each year, number of residents accepted each year, number of full-time staff, and presence of a simulation lab). Respondents were given a list of the available objective assessment tools, with a brief description of each, and were asked to identify which tools they used and, if applicable, to explain why they did not use any of them. Remediation was also covered (whether there was a clear remediation mechanism based on poor surgical performance, when remediation takes place, and the number of residents who required it). The last section addressed the perceived importance of each tool, the tools the respondent recommended, his or her attitudes toward and motivation for applying those tools in his or her training program, and any further comments.

The survey was emailed to participants through the SurveyMonkey® website. A reminder email was sent to participants who had not responded within 2 weeks.

Statistical analysis

All data were collected electronically, downloaded as an Excel (Microsoft Corporation, Redmond, WA) spreadsheet, and imported into SPSS (v 17; IBM Corporation, Armonk, NY). Descriptive statistics were tabulated, including means and medians for scored questions. Responses were compared using Chi-squared or Fisher's exact tests, as appropriate.
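The choice between the Chi-squared and Fisher's exact tests for a 2×2 table (for example, tool used or not versus remediation reported or not) can be sketched in Python as follows. This is an illustration, not the SPSS procedure used in the study: it assumes the common rule of thumb of switching to Fisher's exact test when any expected cell count is below 5, it computes the Chi-squared statistic without Yates' continuity correction, and the counts in the usage line are hypothetical.

```python
import math

def expected_counts(table):
    """Expected cell counts for a 2x2 table under independence of rows and columns."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    return [[row_totals[i] * col_totals[j] / n for j in range(2)] for i in range(2)]

def chi_square_2x2(table):
    """Uncorrected Pearson Chi-squared statistic and its p-value (1 degree of freedom)."""
    expected = expected_counts(table)
    stat = sum((table[i][j] - expected[i][j]) ** 2 / expected[i][j]
               for i in range(2) for j in range(2))
    # With 1 degree of freedom, P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))

def fisher_exact_2x2(table):
    """Two-sided Fisher's exact p-value: the total hypergeometric probability of all
    tables with the observed margins that are no more likely than the observed table."""
    (a, b), (c, d) = table
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return math.comb(row1, x) * math.comb(row2, col1 - x) / math.comb(n, col1)

    p_observed = prob(a)
    low, high = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables exactly as likely as the observed one are counted.
    return sum(p for p in map(prob, range(low, high + 1)) if p <= p_observed * (1 + 1e-7))

def compare_2x2(table):
    """Fisher's exact test if any expected count is below 5, otherwise Chi-squared."""
    if min(min(row) for row in expected_counts(table)) < 5:
        return "Fisher", fisher_exact_2x2(table)
    return "Chi-squared", chi_square_2x2(table)[1]

# Hypothetical table: rows = tool used / not used; columns = remediation reported / not.
print(compare_2x2([[3, 1], [1, 3]]))
```

Small survey samples like this one often produce expected counts below 5, which is why both tests are typically needed.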

We then assessed the correlation between the use of each assessment tool and the remediation rate using the Pearson correlation coefficient. Associations were also quantified as odds ratios for each assessment tool. P-values less than 0.05 and 95% confidence intervals that did not include 1 were considered statistically significant.
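An odds ratio and its 95% confidence interval can be computed from the same 2×2 layout. The Python sketch below uses Woolf's log-based interval and adds 0.5 to every cell when any cell is zero (Haldane–Anscombe correction); both are assumed conventions, since the study does not state which method was used, and the counts shown are hypothetical.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-based) confidence interval for a 2x2 table:
        a = tool used, remediation reported      b = tool used, no remediation
        c = tool not used, remediation reported  d = tool not used, no remediation
    z = 1.96 gives an approximate 95% interval."""
    if 0 in (a, b, c, d):
        # Haldane-Anscombe correction: add 0.5 to every cell if any cell is zero.
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

def is_significant(lower, upper):
    """Significant at the chosen level when the interval does not include 1."""
    return lower > 1 or upper < 1

# Hypothetical counts, for illustration only.
or_, lo, hi = odds_ratio_ci(10, 5, 5, 10)
print(f"OR = {or_:.2f}, 95% CI {lo:.2f}-{hi:.2f}, significant: {is_significant(lo, hi)}")
```

With these counts the odds ratio is 4.00 but the interval runs from about 0.88 to 18.26, so it includes 1 and would not count as significant under the criterion stated above; small cell counts widen the interval considerably.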

Results

Response rate

A total of 116 PDs were surveyed, and 71 responded, for a response rate of 61%. Of these 71 directors, 65 (56% of those surveyed) completed the survey; the six incomplete responses were excluded. Eighteen PDs (16% of those surveyed) responded after the first mailing, and another 53 (46%) responded after the second.
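The response figures above can be checked with a few lines of Python, using the counts reported in this section as inputs; every percentage is taken relative to the 116 PDs surveyed.

```python
# Counts reported in the Response rate section.
surveyed = 116
responded = 71
completed = 65
first_mailing = 18
second_mailing = 53

def pct(part, whole):
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)

assert first_mailing + second_mailing == responded  # the two mailings account for all responses
print(pct(responded, surveyed), pct(completed, surveyed),
      pct(first_mailing, surveyed), pct(second_mailing, surveyed))
# prints: 61 56 16 46
```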

Demographic data

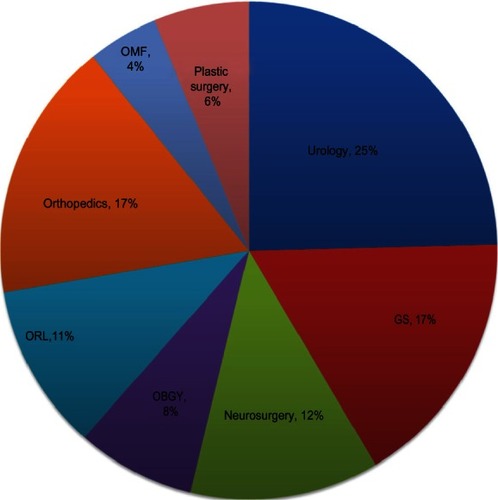

Urology PDs made up 25% (n = 16) of the respondents (Figure 1). The mean number of residents evaluated each year by a single PD was 11.4 (median = 8), and the mean number of residents accepted each year per institution was 2.74 (median = 2); both were highest in general surgery. Fourteen percent of PDs reported having a simulation lab in their institution. The complete descriptive characteristics of the programs studied are shown in Table 2.

Figure 1 Distribution of respondents by specialty.

Table 2 Demographic data of the programs surveyed

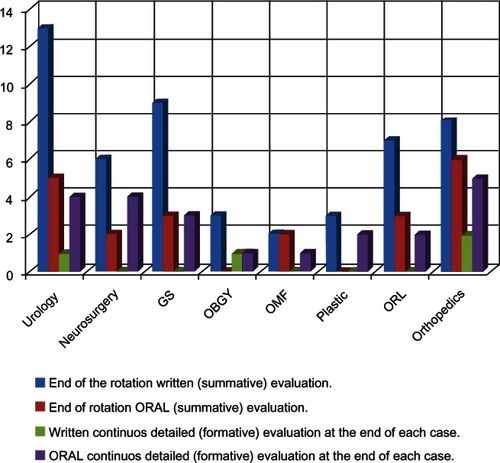

Surgical skills evaluation and feedback

Of the respondents, 39 (60%) performed only summative evaluations of their residents (ie, end-of-rotation or end-of-year evaluations), whereas 10 (15%) conducted only formative evaluations (ie, continuous evaluation throughout the rotation or year). Another 14 (22%) used both summative and formative evaluations of residents' technical skills. Evaluation and feedback were provided orally by 37 (57%) respondents and in writing by 48 (74%). The most common method was an end-of-rotation, written summative evaluation (70%). The distribution of evaluation techniques by specialty is shown in .

Technical skills assessment tools

Of the respondents, 42% thought that they were using more than subjective technical skills assessment, whereas 59% reported using only nonstandardized, subjective, direct observation. Eighteen (26%) respondents used at least one of the assessment tools listed in the survey (ie, OSATS, videotaped analysis, and resident surgical portfolios). Resident surgical portfolios are logbooks recording every surgical case in which the resident participated, as either the primary surgeon or the assistant, during residency training. Eight (12%) respondents reported using hand-motion analysis, and five (8%) reported using simulation to assess residents' technical skills. Table 3 shows the distribution of assessment tools used by specialty.

Table 3 Distribution of assessment tools used by specialty

OSATS

Seven (11%) respondents used the OSATS, most often in orthopedic programs. Those who never used it (59, 89%) attributed this to its not being validated in their specialty (23, 39%), to being unaware of the supporting literature (21, 36%), or to its being either not cost-effective (3, 5%) or not practical (6, 10%). In addition, eight respondents (14%) cited availability issues, and four (7%) cited a lack of institutional support and resources. Of the respondents, 80% judged the OSATS to be important, whereas only 1.5% thought that it was not important; 5% did not know, and 14% were neutral. Most participants (85%) recommended that the OSATS be included as part of a technical skills assessment program, whereas the others were neutral (11%) or did not know (5%).

Videotaped analysis

Ten (15%) respondents used videotaped analysis, most often in general surgery programs. Those who never used it (55, 85%) gave the following reasons: it was not validated in their specialty (21, 38%); they were not aware of the supporting literature (19, 35%); it was not cost-effective (7, 13%), not practical (14, 26%), or not available (4, 7%); or other reasons (8, 15%). Of the respondents, 60% thought that videotaped analysis was important, 32% thought it had no effect, and 6% thought that it was not important. Most participants (63%) recommended that videotaped analysis be part of a technical skills assessment program, whereas 29% were neutral and 3% did not recommend its use.

Resident surgical portfolios

A total of 35 (54%) respondents used resident surgical portfolios, most often in urology programs. Those who never used them (30, 46%) gave the following reasons: they were not validated in their specialty (11, 37%); the respondents were not aware of the supporting literature (12, 40%); they were not cost-effective (3, 10%) or not practical (5, 17%); or other reasons (5, 17%). Of the respondents, 79% thought that resident surgical portfolios were important and should be part of a technical skills assessment program, whereas 19% were neutral. No respondents thought that portfolios were unimportant or advised against their use.

Remediation

Nineteen programs reported having a clear mechanism for remediation based on poor surgical performance. In these programs, remediation took place at the end of the rotation (68%), at the end of the academic year (26%), or at the end of training (5%). Thirty-one (48%) PDs reported at least one remediation case based on poor surgical performance in the past 5 years. Among these programs, the mean number of residents remediated in the past 5 years was 1.77 (range 1–4), for a total of 55 residents. Using videotaped analysis as an objective assessment tool was significantly associated with reporting remediation (P = 0.026), whereas the use of the OSATS or resident surgical portfolios was not (Table 4). Having a clear remediation mechanism based on poor surgical performance was strongly associated with reporting remediation (P = 0.001). No association was identified between reporting remediation and the different modes of evaluation and feedback.

Table 4 The association between remediation and both different assessment tools and the presence of a remediation mechanism

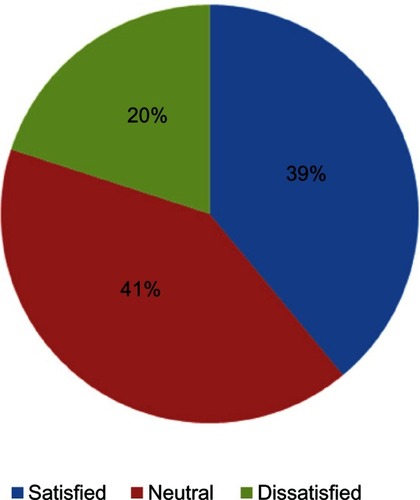

Satisfaction, attitude, motivation

Only 39% of respondents were satisfied () with the methods they used to assess technical skills, and satisfaction was significantly associated with the use of resident surgical portfolios (P = 0.035). Of the respondents, 91% agreed that the survey increased their knowledge about the objective assessment of technical skills, and 89% and 88% agreed that it motivated them to learn more about objective assessment tools and to apply them in their programs, respectively. A total of 94% of participants expressed interest in attending a workshop on objective assessment tools. Participants' comments were very favorable, and in general they found the survey extremely helpful. One participant stated, "All members of examination committees in different programs should have continuous updates of their methods of evaluation." Most comments also expressed interest in further workshops or training on the objective assessment of surgical skills, eg, "I think we need a workshop on acceptable methods for all trainers in all programs in Saudi Arabia."

Discussion

Technical errors account for the majority of surgical errors, and more than half of them are attributable to a lack of competence.[13,14] Reports have recommended objective assessment as a means of reducing medical errors.[15] The SCFHS devotes considerable attention to standardizing training and assures the quality of graduates by adopting specific criteria for assessing training centers and by conducting annual national examinations for all postgraduate medical training programs in the different specialties.[10] Despite this strong interest in standardized training, the national examinations assess only clinical skills and knowledge, using multiple-choice questions and an Objective Structured Clinical Examination, whereas the assessment of surgical residents' technical skills is left entirely to each institution. Because technical skills assessment is not standardized, the surgical competency of graduating residents may vary widely. We hope our study will serve as a cornerstone for developing a standardized objective assessment program for surgical residents' technical skills, both nationally and internationally.

Detecting struggling residents during training is one of the main aims of PDs. Subjective evaluation, which has poor reliability,[3,5] may fail to do so. Objective assessment tools such as the OSATS, MISTELS, and electromagnetic hand motion analysis could therefore act as a "safeguard," because all trainees would be tested fairly and objectively using validated tools.[5,16] Implementing these tools poses its own challenge: most of our respondents are motivated and willing to apply new and innovative assessment methods but lack knowledge about these tools. Faculty development programs focused on surgical education could address this gap.

Having clear policies and mechanisms for remediation helps in detecting struggling trainees, and this study confirmed the association between having such policies and reporting residents who require remediation. Apart from videotaped analysis, however, the use of objective assessment tools was not associated with reporting remediation, possibly because these tools are relatively new and have only recently been implemented. Videotaped analysis on its own is not a consistent tool, but using it with a validated checklist increases its reliability, validity, and objectivity.[17] Without checklists, it retains the disadvantages of subjective direct observation.

Bandiera et al[18] recommended simulations, direct observation and in-training evaluation reports, logbooks, and objective structured assessment tools such as the OSATS and MISTELS for technical skills assessment. The ACGME[2] recommends checklists and 360° evaluation as the first-line methods for assessing performance of medical procedures. In contrast, most of our participants recommended the OSATS and resident surgical portfolios as first-line tools. Moreover, although more than half of the PDs surveyed use portfolios, the quality of these portfolios is unknown, and the literature has shown poor reliability and validity for this instrument.[3] Assessment should therefore not rely primarily on portfolios, and other tools should be included;[19] portfolios indicate the quantity, but not the quality, of the procedures performed.[3] The reliability and construct validity of the OSATS are established,[20] but only a minority of programs use it, owing to a lack of knowledge, inadequate resources, or both.

Recent reports on incorporating technology and health informatics into postgraduate training and assessment are promising. Reports from Tufts University[21] on adopting the OpRate application to follow the progress of surgical residents' technical skills show that such formative web-based applications are useful for identifying residents who need additional focused training in a particular area. Another reliable application, developed at Southern Illinois University,[22] is the operative performance rating system, an internet-based, procedure-based assessment that uses ten checklist items to evaluate residents' technical and decision-making skills for six different general surgical procedures.

Dexterity-based analysis devices have been covered extensively in the literature. The ICSAD has been validated as a hand motion analyzer in both open and laparoscopic surgery.[23,24] It measures hand motion efficiency through the number and speed of hand movements, the time taken to complete the task, and the distance traveled by the surgeon's hands. The Advanced Dundee Endoscopic Psychomotor Tester[25] is a computer-controlled endoscopic performance assessment system that measures the time taken to complete the task, the contact error time, the route of the props, and the number of tasks completed within a time limit. This system has reasonable reliability[26] and contrast validity[27] as an endoscopic assessor. The third system, the Hiroshima University Endoscopic Surgical Assessment Device (HUESAD), measures the time taken to complete the task and the deviation of the instrument tip along the horizontal and vertical axes. Initial reports of its reliability and validity are encouraging.[28]
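The metrics attributed to the ICSAD above (distance traveled, task time, and number of movements) can be illustrated with a short Python sketch that derives them from time-stamped 3D positions of one hand sensor. This is not the ICSAD's published algorithm: the sample format, the units, and the speed-threshold rule used to count a movement are hypothetical assumptions chosen for illustration.

```python
import math

def motion_metrics(samples, speed_threshold=0.05):
    """Summarize one hand-sensor trace.

    samples: time-ordered (t, x, y, z) tuples, with t in seconds and positions in metres
             (hypothetical format; real devices have their own data formats).
    A "movement" is counted each time the hand's speed rises above speed_threshold
    (metres per second) after being at or below it: an illustrative rule only.
    """
    path_length = 0.0
    movements = 0
    moving = False
    for (t0, *p0), (t1, *p1) in zip(samples, samples[1:]):
        step = math.dist(p0, p1)  # straight-line distance between consecutive samples
        path_length += step
        dt = t1 - t0
        speed = step / dt if dt > 0 else 0.0
        if speed > speed_threshold and not moving:
            movements += 1  # start of a new movement
        moving = speed > speed_threshold
    task_time = samples[-1][0] - samples[0][0] if samples else 0.0
    return {"path_length": path_length, "time": task_time, "movements": movements}

# Hypothetical trace: two separate hand movements with a pause between them.
trace = [(0, 0.0, 0.0, 0.0), (1, 0.1, 0.0, 0.0), (2, 0.2, 0.0, 0.0),
         (3, 0.2, 0.0, 0.0), (4, 0.2, 0.1, 0.0)]
print(motion_metrics(trace))
```

Under this kind of scheme, a shorter path and fewer movements for the same task would be read as more economical motion; in practice the threshold would need calibrating against the sensor's noise.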

Simulators have gained great popularity in training and, more recently, in assessment. The Minimally Invasive Surgical Trainer-Virtual Reality (MIST-VR) simulator was one of the first simulators developed for training basic laparoscopic skills,[29] and it has been validated for assessing these skills.[30] Maithel et al[31] compared three commercially available virtual reality simulators: MIST-VR, Endotower, and the Computer Enhanced Laparoscopic Training System (CELTS). All three met the criteria for construct and face validity, and CELTS achieved the highest scores. Fried et al[8] also developed MISTELS, which includes five laparoscopic task stations (peg transfer, precision pattern cutting, securing a ligating loop, intracorporeal knotting, and extracorporeal knotting) performed in standardized laparoscopic training boxes. The reliability and validity of this system have been confirmed,[8,32–36] and MISTELS has been shown to be superior to subjective in-training evaluation reports in identifying residents with poor laparoscopic skills.[37] More recently, a cognitive component was added to MISTELS in the Fundamentals of Laparoscopic Surgery (FLS) module, which has been reported to have reasonable reliability.[38] However, the validity of the FLS has been questioned[39] because the cognitive element of the assessment cannot differentiate between experts and novices.

Few published reports have examined how technical skills are actually assessed in practice. Brown et al[12] surveyed otolaryngology PDs in the United States and found that most participants used subjective evaluation. Like us, they identified a significant correlation between having a clear remediation mechanism and the remediation rate, which supports the view that clear mechanisms and policies improve the identification of residents requiring remediation.

This study has several limitations. First, as in any survey study, the response rate is a major limitation, and it affects the response rate calculated for each specialty. We tried to mitigate this by resending the survey, contacting participants by phone and through on-site visits, and extending the survey period from 4 to 6 weeks. Second, we assumed that most participants would have a poor understanding of the assessment tools, so we added a brief description of each tool to the survey, which may have biased the responses. Third, the variety of specialties surveyed and the limited number of programs make it difficult to generalize the results. Fourth, although we developed the survey systematically and assessed its content and face validity with a focus group before distribution, it has not been formally validated. Administering the survey to a different population, such as North American or other regional surgical PDs, would add credence to our results and help validate both the survey and the findings of this study.

Conclusion

The survey revealed that surgical training programs use different tools to assess surgical skills competency. Having a clear remediation mechanism was strongly associated with reporting remediation, which suggests a greater capacity to detect struggling residents. Surgical training leadership should invest more in standardizing the assessment of surgical skills.

Disclosure

The authors report no conflicts of interest in this work.

References

- CanMEDS Framework [webpage on the Internet]. Ottawa: Royal College of Physicians and Surgeons of Canada; c2012 [cited 2012 Jun 3]. Available from: http://www.royalcollege.ca/portal/page/portal/rc/canmeds/framework. Accessed February 22, 2010.

- Toolbox of Assessment Methods [webpage on the Internet]. Chicago: Accreditation Council for Graduate Medical Education; c2000–2012 [cited 2012 Jun 3]. Available from: http://www.acgme.org/acgmeweb/. Accessed March 15, 2010.

- Reznick RK. Teaching and testing technical skills. Am J Surg. 1993;165(3):358–361. PMID: 8447543.

- Shumway JM, Harden RM; Association for Medical Education in Europe. AMEE Guide No. 25: The assessment of learning outcomes for the competent and reflective physician. Med Teach. 2003;25(6):569–584. PMID: 15369904.

- Moorthy K, Munz Y, Sarker SK, Darzi A. Objective assessment of technical skills in surgery. BMJ. 2003;327(7422):1032–1037. PMID: 14593041.

- Pellegrini CA. Surgical education in the United States: navigating the white waters. Ann Surg. 2006;244(3):335–342. PMID: 16926559.

- Martin JA, Regehr G, Reznick R. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84(2):273–278. PMID: 9052454.

- Fried GM, Feldman LS, Vassiliou MC. Proving the value of simulation in laparoscopic surgery. Ann Surg. 2004;240(3):518–525; discussion 525–528. PMID: 15319723.

- Datta V, Mackay S, Darzi A, Gillies D. Motion analysis in the assessment of surgical skill. Comput Methods Biomech Biomed Engin. 2001;4(6):515–523.

- The Saudi Commission for Health Specialties [webpage on the Internet]. Riyadh: The Saudi Commission for Health Specialties; c2012 [cited 2012 Jun 3]. Available from: http://english.scfhs.org.sa/index.php?option=com_content&view=article&id=100&Itemid=4378. Accessed April 2, 2010.

- Heifetz RA, Laurie DL. The work of leadership. Harvard Business Review. 2001.

- Brown DJ, Thompson RE, Bhatti NI. Assessment of operative competency in otolaryngology residency: survey of US program directors. Laryngoscope. 2008;118(10):1761–1764. PMID: 18641530.

- Gawande AA, Zinner MJ, Studdert DM, Brennan TA. Analysis of errors reported by surgeons at three teaching hospitals. Surgery. 2003;133(6):614–621. PMID: 12796727.

- Rogers SO Jr, Gawande AA, Kwaan M. Analysis of surgical errors in closed malpractice claims at 4 liability insurers. Surgery. 2006;140(1):25–33. PMID: 16857439.

- Kohn LT, Corrigan JM, Donaldson MS. To Err is Human: Building a Safer Health System. Washington: National Academy Press; 1999.

- Jaffer A, Bednarz B, Challacombe B, Sriprasad S. The assessment of surgical competency in the UK. Int J Surg. 2009;7(1):12–15. PMID: 19028147.

- Aggarwal R, Grantcharov T, Moorthy K, Milland T, Darzi A. Toward feasible, valid, and reliable video-based assessments of technical surgical skills in the operating room. Ann Surg. 2008;247(2):372–379. PMID: 18216547.

- Bandiera G, Sherbino J, Frank JR. The CanMEDS Assessment Tools Handbook. An Introductory Guide to Assessment Methods for CanMEDS Competencies. 1st ed. Ottawa: The Royal College of Physicians and Surgeons of Canada; 2006.

- Tochel C, Haig A, Hesketh A. The effectiveness of portfolios for post-graduate assessment and education: BEME Guide No 12. Med Teach. 2009;31(4):299–318. PMID: 19404890.

- Winckel CP, Reznick RK, Cohen R, Taylor B. Reliability and construct validity of a structured technical skills assessment form. Am J Surg. 1994;167(4):423–427. PMID: 8179088.

- Wohaibi EM, Earle DB, Ansanitis FE, Wait RB, Fernandez G, Seymour NE. A new web-based operative skills assessment tool effectively tracks progression in surgical resident performance. J Surg Educ. 2007;64(6):333–341. PMID: 18063265.

- Larson JL, Williams RG, Ketchum J, Boehler ML, Dunnington GL. Feasibility, reliability and validity of an operative performance rating system for evaluating surgery residents. Surgery. 2005;138(4):640–647; discussion 647–649. PMID: 16269292.

- Datta V, Mandalia M, Mackay S, Chang A, Cheshire N, Darzi A. Relationship between skill and outcome in the laboratory-based model. Surgery. 2002;131(3):318–323. PMID: 11894037.

- Mackay S, Datta V, Mandalia M, Bassett P, Darzi A. Electromagnetic motion analysis in the assessment of surgical skill: relationship between time and movement. ANZ J Surg. 2002;72(9):632–634. PMID: 12269912.

- Hanna GB, Drew T, Clinch P, Hunter B, Cuschieri A. Computer-controlled endoscopic performance assessment system. Surg Endosc. 1998;12(7):997–1000. PMID: 9632879.

- Francis NK, Hanna GB, Cuschieri A. Reliability of the Advanced Dundee Endoscopic Psychomotor Tester for bimanual tasks. Arch Surg. 2001;136(1):40–43. PMID: 11146774.

- Francis NK, Hanna GB, Cuschieri A. The performance of master surgeons on the Advanced Dundee Endoscopic Psychomotor Tester: contrast validity study. Arch Surg. 2002;137(7):841–844. PMID: 12093343.

- Egi H, Okajima M, Yoshimitsu M. Objective assessment of endoscopic surgical skills by analyzing direction-dependent dexterity using the Hiroshima University Endoscopic Surgical Assessment Device (HUESAD). Surg Today. 2008;38(8):705–710. PMID: 18668313.

- McCloy R, Stone R. Science, medicine, and the future. Virtual reality in surgery. BMJ. 2001 Oct 20;323(7318):912–915. PMID: 11668138.

- Taffinder N, Sutton C, Fishwick RJ, McManus IC, Darzi A. Validation of virtual reality to teach and assess psychomotor skills in laparoscopic surgery: results from randomised controlled studies using the MIST VR laparoscopic simulator. Stud Health Technol Inform. 1998;50:124–130. PMID: 10180527.

- Maithel S, Sierra R, Korndorffer J. Construct and face validity of MIST-VR, Endotower, and CELTS: are we ready for skills assessment using simulators? Surg Endosc. 2006;20(1):104–112. PMID: 16333535.

- Derossis AM, Fried GM, Abrahamowicz M, Sigman HH, Barkun JS, Meakins JL. Development of a model for training and evaluation of laparoscopic skills. Am J Surg. 1998;175(6):482–487. PMID: 9645777.

- Ghitulescu GA, DA, Feldman LS. A model for evaluation of laparoscopic skills: is there correlation to level of training? Surg Endosc. 2001;15:S127.

- Derossis AM, Bothwell J, Sigman HH, Fried GM. The effect of practice on performance in a laparoscopic simulator. Surg Endosc. 1998;12(9):1117–1120. PMID: 9716763.

- Peters JH, Fried GM, Swanstrom LL. Development and validation of a comprehensive program of education and assessment of the basic fundamentals of laparoscopic surgery. Surgery. 2004;135(1):21–27. PMID: 14694297.

- Fraser SA, Klassen DR, Feldman LS, Ghitulescu GA, Stanbridge D, Fried GM. Evaluating laparoscopic skills: setting the pass/fail score for the MISTELS system. Surg Endosc. 2003;17(6):964–967. PMID: 12658417.

- Feldman LS, Hagarty SE, Ghitulescu G, Stanbridge D, Fried GM. Relationship between objective assessment of technical skills and subjective in-training evaluations in surgical residents. J Am Coll Surg. 2004;198(1):105–110. PMID: 14698317.

- Swanstrom LL, Fried GM, Hoffman KI, Soper NJ. Beta test results of a new system assessing competence in laparoscopic surgery. J Am Coll Surg. 2006;202(1):62–69. PMID: 16377498.

- Zheng B, Hur HC, Johnson S, Swanström LL. Validity of using Fundamentals of Laparoscopic Surgery (FLS) program to assess laparoscopic competence for gynecologists. Surg Endosc. 2010;24(1):152–160. PMID: 19517182.