Abstract

Background

The purpose of this study was to conduct a meta-analysis on the construct and criterion validity of multi-source feedback (MSF) to assess physicians and surgeons in practice.

Methods

In this study, we followed the guidelines for the reporting of observational studies included in a meta-analysis. In addition to PubMed and MEDLINE databases, the CINAHL, EMBASE, and PsycINFO databases were searched from January 1975 to November 2012. All articles listed in the references of the MSF studies were reviewed to ensure that all relevant publications were identified. All 35 articles were independently coded by two authors (AA, TD), and any discrepancies (eg, effect size calculations) were reviewed by the other authors (KA, AD, CV).

Results

Physician/surgeon performance measures from 35 studies were identified. Using a random-effects model of weighted mean effect size differences (d), construct validity coefficients for the MSF system on physician/surgeon performance across different levels in practice ranged from d=0.14 (95% confidence interval [CI] 0.40–0.69) to d=1.78 (95% CI 1.20–2.30); construct validity coefficients for the MSF on physician/surgeon performance on two different occasions ranged from d=0.23 (95% CI 0.13–0.33) to d=0.90 (95% CI 0.74–1.10); concurrent validity coefficients for the MSF based on differences in assessor group ratings ranged from d=0.50 (95% CI 0.47–0.52) to d=0.57 (95% CI 0.55–0.60); and predictive validity coefficients for the MSF on physician/surgeon performance across different standardized measures ranged from d=1.28 (95% CI 1.16–1.41) to d=1.43 (95% CI 0.87–2.00).

Conclusion

The construct and criterion validity of the MSF system is supported by small to large effect size differences based on the MSF process and physician/surgeon performance across different clinical and nonclinical domain measures.

Introduction

One of the most widely recognized methods used to evaluate physicians and surgeons in practice is multi-source feedback (MSF), also referred to as a 360-degree assessment, where different assessor groups (eg, peers, patients, coworkers) rate doctors’ clinical and nonclinical performance.Citation1 Use of MSF has been shown to be a unique form of evaluation that provides more valuable information than any single feedback source.Citation1 MSF has gained widespread acceptance for both formative and summative assessment of professionals, and is seen as a trigger for reflecting on where changes in practice are required.Citation2,Citation3 Certain characteristics of health professionals have been assessed using MSF, including their professionalism, communication, interpersonal relationships, and clinical and procedural skills competence.Citation4 One of the main benefits of MSF is that it provides physicians and surgeons with information about their clinical practice that may help them in improving and monitoring their performance.Citation5

The number of published studies on the use of MSF to assess health professionals in clinical practice has increased substantially. In a recent systematic review studying the impact of workplace-based assessment on doctors’ education and performance, Miller and ArcherCitation6 reported evidence of support for use of MSF in that it has the potential to lead to improvement in clinical performance. Risucci et alCitation7 demonstrated concurrent validity for MSF in surgical residents by showing a medium effect size correlation coefficient between MSF scores and American Board of Surgery In-Training Examination (ABSITE) scores. When using MSF with residents at different levels in their program, Archer et alCitation8 showed modest increases in the performance of year 4 in comparison with year 2 trainees, thereby demonstrating the construct validity of this approach to assessment. Violato et alCitation9 compared changes in physician performance from time 1 to time 2 (a 5-year interval) using total scores given by medical colleagues and coworkers using the MSF questionnaire and demonstrated a significant improvement in their performance over time. Although MSF has been used in a variety of contexts, the research focus varies among measures across years in programs, differences between assessor groups, and comparisons with other assessment methods, so the validity of MSF needs to be investigated further.

The main purpose of this study was to conduct a meta-analysis by identifying all published empirical data on the use of MSF to assess physicians’ clinical and nonclinical performance. We conducted a meta-analysis on the construct and criterion (predictive or concurrent) validity of the MSF system based on the summary effect sizes, their 95% confidence intervals (CIs), and interpretation of the magnitude of these coefficients.

Materials and methods

Selection of studies

In the present study, we followed the guidelines for reporting of observational studies included in a meta-analysis.Citation10 In addition to PubMed and MEDLINE, the CINAHL, EMBASE, and PsycINFO databases were searched from January 1975 to November 2012. We also manually searched the reference lists for further relevant studies. The following terms were used in the search: “multi-source feedback”, “360-degree evaluation”, and “assessment of medical professionalism”. Studies were included if: they used at least one MSF instrument (eg, self, colleague, coworker, and/or patient) to assess physician/surgeon performance in practice; they described the MSF instrument or its design; they described factors measured by the MSF instrument; they provided evidence of construct-related and/or criterion-related validity (predictive/concurrent); and they were published in an English-language, peer-reviewed journal. The main reason for restricting the search to refereed journals was to ensure that only studies of high quality were included in the meta-analysis. We excluded studies if they used nonmedical health professionals, did not provide a description or breakdown of what the MSF instrument was measuring, did not provide empirical data on MSF results, reported data on feasibility and/or reliability only, and/or focused on performance changes after receiving MSF feedback.

Data extraction

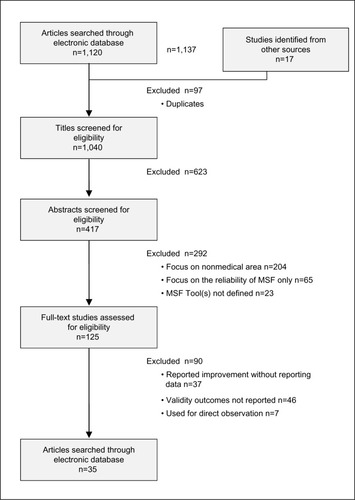

The initial search yielded 1,137 papers, as shown in Figure 1. Of these, 623 papers were excluded based on the title, 292 were excluded based on a review of the abstract, 97 were removed as they were duplicates, and a further 90 were eliminated after a review of the full-text versions. Finally, we agreed on a total of 35 papers to be included for meta-analysis. A coding protocol was developed that included each study’s title, author name(s), year of publication, source of publication, study design (ie, construct or criterion validity study), physician/surgeon specialty (eg, general practice, pediatrics), and types of raters (ie, self, medical colleague, consultants, patients, and coworkers). All 35 articles were independently coded by two authors (AA and TD) and any discrepancies (eg, effect size calculations) were reviewed by a third author (KA, AD, or CV). Based on iterative reviews and discussions between the five coders, we were able to achieve 100% agreement on all coded data.
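
The screening counts reported in this paragraph can be checked with a short tally. The following is a minimal sketch in Python; the variable names and dictionary keys are illustrative assumptions, and only the counts come from the text.

```python
# Counts taken from the paragraph above; names are illustrative only.
identified = 1137
excluded = {
    "title": 623,       # excluded on title
    "abstract": 292,    # excluded on abstract review
    "duplicate": 97,    # removed as duplicates
    "full_text": 90,    # eliminated after full-text review
}

remaining = identified - sum(excluded.values())
print(remaining)  # 35
```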

Statistical analysis

The statistical analysis of all effect size calculations was done using the Comprehensive Meta-Analysis software program (version 1.0.23, Biostat Inc, Englewood, NJ, USA). Most of the studies reported mean differences (Cohen’s d) between MSF scores as effect size measures. However, there were some studies that reported the Pearson’s product-moment correlation coefficient (r). For these studies, and in order to preserve consistency in the data that were reported, r was converted to Cohen’s d using the following formula: d = 2r/√(1 − r²).Citation11
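
The conversion from r to d stated above can be illustrated with a short Python sketch. The function names `r_to_d` and `d_to_r` are assumptions introduced here for illustration, not part of the software used in the study, and the example values of r are arbitrary. Note that this conversion is usually derived under the assumption of two groups of equal size.

```python
import math


def r_to_d(r: float) -> float:
    """Convert a Pearson correlation r to Cohen's d via d = 2r / sqrt(1 - r^2)."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2.0 * r / math.sqrt(1.0 - r * r)


def d_to_r(d: float) -> float:
    """Algebraic inverse of r_to_d: r = d / sqrt(d^2 + 4)."""
    return d / math.sqrt(d * d + 4.0)


# Arbitrary example correlations, not values from the included studies.
for r in (0.10, 0.30, 0.50):
    print(f"r = {r:.2f} -> d = {r_to_d(r):.2f}")
```

Because the denominator shrinks as r approaches 1, moderate correlations map to comparatively large values of d, which is worth keeping in mind when converted and directly reported effect sizes are pooled together.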

We selected MSF domains or subscale measures as the variables of interest and either contrasted these scores between assessor groups (eg, different personnel ratings, in-training year, or postgraduate year of practice) or with other measures of clinical performance competencies (eg, ABSITE or Objective Structured Clinical Examination [OSCE]).

Because the results came from studies that used different research designs (eg, different physician year in practice), different personnel ratings (eg, medical colleagues, coworkers, patients), and different methods of analysis between assessor groups (eg, MSF in comparison with ABSITE, as well as an objective structured practical examination [OSPE]), we used a random-effects model to combine the unweighted and weighted effect sizes. The fixed-effects model assumes that the summary effect size differences are the same from study to study (eg, use of MSF with different questionnaires). In contrast, the random-effects model allows for between-study variance in the participants’ performance measures and therefore yields a more conservative estimate of the combined effect size.Citation12
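
To make the contrast between the two models concrete, the following Python sketch pools effect sizes with the DerSimonian and Laird moment estimator, the method described in the reference cited above. It is an illustration under stated assumptions rather than the procedure implemented in the Comprehensive Meta-Analysis program: the function name `pool_random_effects`, the `Pooled` record, and the input values are hypothetical, and each study is assumed to supply an effect size d together with its sampling variance.

```python
import math
from typing import NamedTuple, Sequence


class Pooled(NamedTuple):
    d: float        # pooled random-effects estimate
    ci_low: float   # lower bound of the 95% CI
    ci_high: float  # upper bound of the 95% CI
    q: float        # Cochran's Q heterogeneity statistic
    tau2: float     # estimated between-study variance


def pool_random_effects(ds: Sequence[float], variances: Sequence[float]) -> Pooled:
    """DerSimonian-Laird random-effects pooling of study effect sizes."""
    if len(ds) != len(variances) or len(ds) < 2:
        raise ValueError("need at least two studies with matching variances")
    w = [1.0 / v for v in variances]            # fixed-effects weights
    sw = sum(w)
    d_fixed = sum(wi * di for wi, di in zip(w, ds)) / sw
    q = sum(wi * (di - d_fixed) ** 2 for wi, di in zip(w, ds))
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (len(ds) - 1)) / c)    # truncated at zero
    w_star = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    sw_star = sum(w_star)
    d_re = sum(wi * di for wi, di in zip(w_star, ds)) / sw_star
    se = math.sqrt(1.0 / sw_star)
    return Pooled(d_re, d_re - 1.96 * se, d_re + 1.96 * se, q, tau2)


# Hypothetical inputs for illustration only, not data from the included studies.
print(pool_random_effects([0.20, 0.50, 0.90], [0.04, 0.02, 0.05]))
```

When the estimated between-study variance is greater than zero, every study's weight is reduced and the weights become more nearly equal, so the confidence interval around the pooled estimate widens. This is the sense in which the random-effects estimate is the more conservative of the two.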

In this meta-analysis, residents in different years of rotation and attending physicians/surgeons were treated as a single population in that they represent treating physicians at different stages of practice. Therefore, we evaluated the performance of these ‘physicians/surgeons’, who had a more or less similar trajectory in achieving clinical competency, as measured by the multi-source feedback system.

To assess the heterogeneity of effect sizes, we used forest plots together with the Cochran Q test. A nonsignificant P-value for Q may reflect low statistical power rather than actual consistency or homogeneity across the studies included in the meta-analysis. In addition, the distribution of the studies in the forest plots was an important visual indicator of the consistency between studies. Interpretation of the magnitude of the effect size for both mean differences and correlations is based on Cohen’sCitation13 suggestions, ie, d=0.20–0.49 is “small”, d=0.50–0.79 is “medium”, and d≥0.80 is considered to be a “large” effect size difference.
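
The interpretation thresholds above can be written as a small lookup. This is a minimal sketch: the function name `cohen_label` is an assumption, the boundaries are treated as continuous cut points at 0.20, 0.50, and 0.80, and the label "below small" for values under 0.20 is introduced here because the text does not name that range.

```python
def cohen_label(d: float) -> str:
    """Label an effect size using the cut points quoted in the text."""
    size = abs(d)  # the sign only indicates the direction of the difference
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "medium"
    if size >= 0.20:
        return "small"
    return "below small"  # label chosen for this sketch, not from the text


# Effect sizes quoted elsewhere in this article.
for d in (0.14, 0.46, 0.57, 1.28):
    print(f"d = {d:.2f}: {cohen_label(d)}")
```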

Results

The characteristics of the 35 studies included in the meta-analysis were based on four groups (Table 1) that reported contrasts between different physician years in practice (group A), differences between physician performance levels on two occasions (group B), rating differences between self, medical colleague, coworker, and patients (group C), and comparisons between MSF and other measures of performance (group D). The reported MSF domain measure (ie, items 1 through 5) and the corresponding unweighted effect sizes based on either the contrast or comparison variables are presented in Table 1. Different approaches to testing the validity of MSF were demonstrated by studies included in this meta-analysis. In groups A and B, we investigated the construct validity of the domains’ measures of MSF by showing that physicians at different levels of experience or on two separate occasions tend to obtain higher clinical performance scores. In groups C and D, the criterion validity of MSF is compared with other similar assessments of clinical performance or different raters as either a concurrent or predictive validity measure.

Table 1 Characteristics of MSF studies with construct and criterion (concurrent/predictive) validity effect size measures

The sample sizes of the studies ranged from six plastic surgery residentsCitation14 to 577 pediatric residentsCitation15 who had been assessed using MSF with as few as 1.2 patients and 2.6 medical colleaguesCitation16 and as many as 47.3 patients completing forms per individual.Citation17 Questionnaire items used as part of MSF ranged from as few as four itemsCitation18 to as many as 60 itemsCitation14 per questionnaire. Information on specific demographic characteristics, such as participants’ sex or age, was not reported, but level of training and years of practice as a physician were typically identified. In each study, the unweighted mean effect size difference (Cohen’s d) was provided or calculated based on the MSF domain measures as a contrasting variable (eg, years spent as a physician in practice) or with a comparison measure (eg, OSPE).

Construct validity of MSF system

Of the 35 studies that reported data on physician/surgeon performance, 31 (89%) demonstrated results in support of the construct validity of the MSF system. As shown in Table 2, we combined five of the studies (group A) to show that for each of the five MSF domains the effect size differences in performance across a year of practice (eg, change in performance as a function of post-graduate year 1 to year 2, Senior House Officer to Specialist Registrar)Citation8,Citation15,Citation19–Citation21 ranged from d=0.14 (95% CI 0.40–0.69) for manager skills to d=1.78 (95% CI 1.20–2.30) for communication skills.

Table 2 Random effects model (Cohen’s d) of the MSF domains with different physician years (group A)/different physician performance in two occasions (group B)

When differences between physician/surgeon performance were investigated on two different occasions, we found four studies (group B) that showed differences in clinical performance across the five domain scores of MSF. In particular, Brinkman et alCitation19 compared ratings for 36 pediatric residents on two occasions with regard to the professionalism and communication skills domains, and their results showed that there were consistently large effect size differences between time 1 and time 2. The ratings on these MSF items ranged from d=1.31 for the professionalism domain to d=2.00 for the communication skills domain. Correspondingly, Lockyer et alCitation22 found a range of MSF scores that varied from d=0.01 for physicians over a 5-year period on the professionalism, communication skills, and management domains for self-rating assessment to d=0.66 with the same physicians over the professionalism, communication skills, and interpersonal relationship domains as rated by medical colleagues. Violato et alCitation9 reported a small effect size of d=0.46 when the performance of 250 family physicians was compared after a 5-year interval between MSF assessments.

Criterion (predictive/concurrent) validity of the MSF system

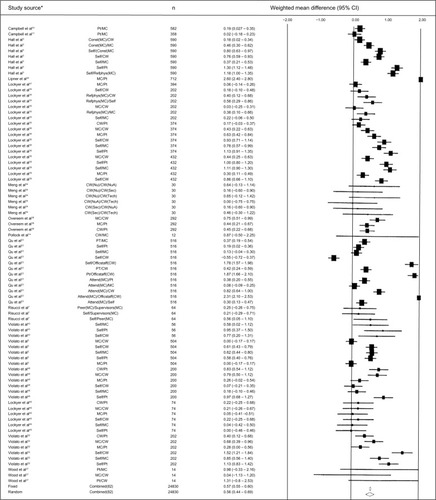

In group C, we combined the outcomes in 21 (60%) studies that investigated the differences in MSF scores provided by different raters (eg, residents, self, medical colleague, coworker, patients) across the five domains identified. Effect size differences in performance between the different raters (eg, comparison of patients with self assessment, medical colleagues to coworkers) ranged from d=0.50 (95% CI 0.47–0.52) for interpersonal relationships to d=0.57 (95% CI 0.55–0.60) for both professionalism and clinical competence. Most of the studies in group C showed that physicians consistently rated themselves lower than did other assessor groups. However, in a study of 258 residents within different specialties reported by Qu et al, residents on self-assessments rated themselves higher than did other raters.Citation23 As shown in the forest plot (Figure 2), the combined random-effects size calculation for the professionalism domain was “medium” (d=0.66, 95% CI 0.44–0.69).

Figure 2 Random and fixed effects model forest plots for the MSF “personnel rating differences” for professionalism measures.

Abbreviations: MSF, multi-source feedback; Pt, patients; MC, medical colleagues; Const, consultant; CW, co-workers; RefPhys, referring physicians; Nu, nursing; NuA, nursing aid; Sec, secretary; Tech, technicians; Officstaff, office staff; Attend, attending.

In group D (Table 3), of the 35 studies included in the meta-analysis, four reported data on physician/surgeon performance on MSF in comparison with other criterion measures (eg, OSPE, OSCE). The mean effect size differences were found to be “medium” to “large” across each of the five domains identified on MSF. Effect size differences in performance between domain scores and other examination measurement scores ranged from d=1.28 (95% CI 1.15–1.41) for clinical competence to d=1.43 (95% CI 0.87–2.00) for interpersonal relationships. Yang et alCitation24 found a range of MSF scores that varied from d=0.79 for residents on the domains of professionalism, clinical competence, and communication skills to d=2.07 with the same physicians on the same domains when their MSF scores were compared with other clinical performance measures such as the OSCE.

Table 3 Random effects model (Cohen’s d) of the MSF domains with personnel ratings/academic performance (groups C and D)

Although the Cochran Q test shows significant heterogeneity between the studies included in the four groups, a subgroup analysis to determine the potential differences as a result of moderator variables such as physician/surgeon sex or age was limited by the data reported across the primary studies included in the meta-analysis. Nevertheless, the studies were weighted by their respective sample sizes, and the random-effects model analysis (with wider 95% CIs) provides a more conservative estimate of the combined effect sizes, as illustrated by the forest plot (Figure 2).

Discussion

In this meta-analysis, the MSF demonstrates evidence of construct validity when used with physicians and surgeons across the years of a residency program or a number of years of practice. Physician/surgeon performance on the MSF domains across a single year of practice showed “small” to “large” effect size differences, with effect sizes ranging from d=0.14 (95% CI 0.40–0.69) in the manager skills domain to d=1.78 (95% CI 1.20–2.30) in the communication skills domain.

The effect size differences between physician/surgeon performance on two occasions (time 1/time 2) ranged from d=0.23 (95% CI 0.13–0.33) for the communication skills domain to d=0.90 (95% CI 0.74–1.10) for the interpersonal relationship domain measure.

The differences in rating for physician/surgeon performance on MSF between different assessor groups (self-assessments, medical colleagues, consultants, patients, and coworkers) showed “medium” effect size differences that ranged from d=0.50 (95% CI 0.47–0.52) for the interpersonal relationship domain to d=0.57 (95% CI 0.55–0.60) for the professionalism and clinical competence domains. In particular, these results were supported by the findings from other assessment methods such as the mini-clinical evaluation exercise (mini-CEX). Comparisons of different raters in the mini-CEX have shown that, in comparison with faculty evaluators, residents tend to be more lenient and score trainees higher on in-training evaluation checklists.Citation25,Citation26 In our study of the MSF, we found that physicians and surgeons consistently rated themselves lower than did other assessor groups.Citation23 In addition, patients and coworkers typically rated physicians/surgeons more leniently than did other raters, such as medical colleagues or consultants.

The MSF showed evidence of criterion-related validity when compared with other performance examination measures (eg, global examination, OSPE, OSCE). We found “large” combined effect sizes, ranging from d=1.28 (95% CI 1.15–1.41) for the communication skills domain to d=1.43 (95% CI 0.87–2.00) for the interpersonal relationship domain.

The construct-related and criterion-related validity of MSF was supported by the findings outlined within the studies included in one or more of the four group comparisons. As illustrated in the forest plots for the professionalism domain in group C, not all of the reported differences between personnel ratings were found to be statistically significant. When combined with the outcomes from 19 different studies, however, we found that there was a significant combined random-effects size of d=0.65 (95% CI, 0.44–0.69).

In general, the findings of this meta-analysis show “medium” combined effect sizes for the construct-related and criterion-related validity of the five main MSF domains identified. Although different questionnaires and different numbers of items were used in MSF across different specialties, they were found to consistently measure similar domains of physician/surgeon performance.Citation15 This feedback process, which uses multiple questionnaires completed by different types of raters, provides a more comprehensive evaluation of clinical practice than can typically be provided by one or a few sources.Citation1

Strengths and weaknesses of the study

There are limitations to this meta-analysis. Because we were interested in determining the construct-related and criterion-related validity of MSF as a method for physician/surgeon evaluation, the use of the evaluation tool was not consistent across studies from a research design perspective. In addition, there was variability in the performance domains measured and in the number of items used to measure each domain depending on the MSF instrument used (ie, ranging from four items to 60 items), the raters used (ie, self, patients, medical colleague, coworker), and whether or not the MSF was being compared with other clinical skill measures (eg, OSCE). To overcome this limitation, the more conservative random-effects analysis was performed to accommodate the heterogeneity between the studies, as indicated by the significant values obtained using the Cochran Q test. Nevertheless, we were unable to undertake subsequent subgroup analyses to determine where there may have been between-study differences because these data (eg, sex, age of participant) were rarely reported. Although some of the studies had small sample sizes such as sixCitation14 and seven participants,Citation27 this was in part compensated for by the 40 and eight raters who completed the questionnaire, respectively, on each of the participants in these studies. To achieve some control over the quality of the studies that were included in this meta-analysis, only papers that had been published in refereed journals were selected.

Implications for clinicians and policymakers

Certain characteristics of health professionals, such as clinical skills, personal communication, and client management, combined with improved performance can be assessed using MSF.Citation8 MSF is a unique form of assessment that has been shown to have both construct-related and criterion-related validity in assessing a multitude of clinical and nonclinical performance domains. In addition, MSF has been shown to enhance changes in clinical performance,Citation15 communication skills,Citation7 professionalism,Citation7 teamwork,Citation28 productivity,Citation29 and building trusting relationships with patients.Citation30

Consequently, MSF has been adopted and used extensively as a method for assessment of a variety of domains identified in medical education programs and licensing bodies in the UK, Canada, Europe, and other countries as well. Although MSF has gained widespread acceptance, the literature has raised a number of concerns about its implementation and its validity. Therefore, the availability of evidence to support the validity of the process and the instruments used to date is of crucial importance to enable policymakers to make the decision to implement MSF within their own programs or organizations.

Conclusion and future research

Although MSF appears to be adequate for assessment of a variety of nontechnical skills, this approach is limited by the ability of peers or medical colleagues to assess aspects of clinical skills competence that reflect physicians’/surgeons’ knowledge and non-cognitive behavior. In particular, as part of the process of assessing clinical performance, other methods such as procedures-based assessment or the OSCE should be used in conjunction with the peer MSF questionnaire to ensure accurate assessment of these specific skills.

We are faced with the challenge of ensuring that use of MSF for assessment of physicians and surgeons in practice is reliable and valid. As shown above, MSF has proved to be a useful method for assessing the clinical and nonclinical skills of physicians/surgeons in practice with clear evidence of construct and criterion-related validity. Although MSF is considered to be a useful assessment method, it should not be the only measure used to assess physicians and surgeons in practice. Other reliable and valid methods should be used in conjunction with MSF, in particular to assess procedural skills performance and to overcome the limitation of using a single measure.

Future research should replicate and extend some of the empirical findings, especially the evidence for criterion-related validity. Criterion-related validity studies looking at correlations between direct observations of behavior or performance and MSF scores are required to add further evidence of validity. Future research on the various MSF instruments available may well include confirmatory factor analysis, which provides stronger construct validity evidence than the principal component factor analyses conducted currently.Citation31 In addition, MSF assessments are entirely questionnaire-based and rely on the judgment of and inference by the assessors and respondents, which are subject to a variety of biases and heuristics. Therefore, generalizability theory should be used in future studies to determine potential sources of measurement error that can arise from the use of different assessors and specialties, as well as from the characteristics of the respondents themselves.

Author contributions

All authors contributed to the conception and design of the study. AA and TD acquired the data. All authors analyzed and interpreted the data. KA, AD, and CV provided administrative, technical, or material support and supervised the study. AA and TD drafted the manuscript. All of the authors critically revised the manuscript for important intellectual content and approved the final version submitted for publication.

Disclosure

The authors have no competing interests in this work.

References

- Bracken DW, Timmreck CW, Church AH. Introduction: a multisource feedback process model. In: Bracken DW, Timmreck CW, Church AH, editors. The Handbook of Multisource Feedback: The Comprehensive Resource for Designing and Implementing MSF Processes. San Francisco, CA, USA: Jossey-Bass; 2001.

- Lockyer J, Clyman S. Multisource feedback (360-degree evaluation). In: Holmboe ES, Hawkins RE, editors. Practical Guide to the Evaluation of Clinical Competence. Philadelphia, PA, USA: Mosby; 2008.

- Hall W, Violato C, Lewkonia R. Assessment of physician performance in Alberta: the Physician Achievement Review. CMAJ. 1999;161:52–57. PMID: 10420867.

- Fidler H, Lockyer J, Violato C. Changing physicians practice: the effect of individual feedback. Acad Med. 1999;74:702–714. PMID: 10386101.

- Violato C, Lockyer J, Fidler H. Multisource feedback: a method of assessing surgical practice. BMJ. 2003;326:546–548. PMID: 12623920.

- Miller A, Archer J. Impact of workplace based assessment on doctors education and performance: a systematic review. BMJ. 2010;341:1–6.

- Risucci DA, Tortolani AJ, Ward RJ. Ratings of surgical residents by self, supervisors and peers. Surg Gynecol Obstet. 1989;169:519–526. PMID: 2814768.

- Archer J, Norcini J, Southgate L, Heard S, Davies H. Mini-PAT (Peer Assessment Tool): a valid component of a national assessment programme in the UK? Adv Health Sci Educ Theory Pract. 2008;13:181–192. PMID: 17036157.

- Violato C, Lockyer J, Fidler H. Change in performance: a 5-year longitudinal study of participants in a multi-source feedback programme. Med Educ. 2008;42:1007–1013. PMID: 18823520.

- Stroup DF, Berlin JA, Morton SC. Meta-analysis of observational studies in epidemiology: a proposal for reporting. JAMA. 2000;283:2008–2012. PMID: 10789670.

- Rosenthal R, Rubin D. A simple general purpose display of magnitude of experimental effect. J Educ Psychol. 1982;74:166–169.

- DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–188. PMID: 3802833.

- Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ, USA: Lawrence Erlbaum Associates; 1988.

- Pollock RA, Donnelly MB, Plymale MA, Stewart DH, Vasconez HC. 360-degree evaluations of plastic surgery resident accreditation council for graduate medical education competencies: experience using a short form. Plast Reconstr Surg. 2008;122:639–649. PMID: 18626387.

- Archer J, McGraw M, Davies H. Assuring validity of multisource feedback in a national programme. Postgrad Med J. 2010;86:526–531. PMID: 20841329.

- Chandler N, Henderson G, Park B, Byerley J, Brown WD, Steiner MJ. Use of a 360-degree evaluation in the outpatient settings: the usefulness of nurse, faculty, patient/family, and resident self-evaluation. J Grad Med Educ. 2010;10:430–434. PMID: 21976094.

- Campbell J, Narayanan A, Burford B, Greco M. Validation of a multi-source feedback tool for use in general practice. Educ Prim Care. 2010;21:165–179. PMID: 20515545.

- Wood L, Wall D, Bullock A, Hassell A, Whitehouse A, Campbell I. ‘Team observation’: a six-year study of the development and use of multi-source feedback (360-degree assessment) in obstetrics and gynecology training in the UK. Med Teach. 2006;28:e177–e184. PMID: 17594543.

- Brinkman WB, Geraghty SR, Lanpher BP. Effect of multisource feedback on resident communication skills and professionalism. Arch Pediatr Adolesc Med. 2007;161:44–49. PMID: 17199066.

- Archer JC, Norcini J, Davies HA. Use of SPRAT for peer review of paediatricians in training. BMJ. 2005;330:1251–1253. PMID: 15883137.

- Massagli TL, Carline JD. Reliability of a 360-degree evaluation to assess resident competence. Am J Phys Med Rehabil. 2007;86:845–852. PMID: 17885319.

- Lockyer J, Violato C, Fidler H. What multisource feedback factors influence physicians’ self-assessments? A five-year longitudinal study. Acad Med. 2007;82:77–80.

- Qu B, Zhao YH, Sun BZ. Assessment of resident physicians in professionalism, interpersonal and communication skills: a multisource feedback. Int J Med Sci. 2012;9:228–236. PMID: 22577337.

- Yang YY, Lee FY, Hsu HC. Assessment of first-year post-graduate residents: usefulness of multiple tools. J Chin Med Assoc. 2011;74:531–538. PMID: 22196467.

- Hatala R, Norman GR. In-training evaluation during an internal medicine clerkship. Acad Med. 1999;74 Suppl 10:118S–120S.

- Hill F, Kendall K, Galbraith K, Crossley J. Implementing the undergraduate mini CEX: a tailored approach at Southampton University. Med Educ. 2009;43:326–334. PMID: 19335574.

- Wood J, Collins J, Burnside ES. Patient, faculty, and self-assessment of radiology resident performance: a 360-degree method of measuring professionalism and interpersonal/communication skills. Acad Radiol. 2004;11:931–939. PMID: 15288041.

- Dominick P, Reilly R, McGourty J. The effects of peer feedback on team member behaviour. Group and Organization Management. 1997;22:508–520.

- Edwards M, Ewen A. 360 Feedback: The Powerful New Model for Employee Assessment and Performance Improvement. New York, NY, USA: Amacom; 1996.

- Waldman D. Predictors of employee preferences for multi-rater and group-based performance appraisal. Group and Organization Management. 1997;22:264–287.

- Violato C, Hecker K. How to use structural equation modeling in medical education research: a brief guide. Teach Learn Med. 2008;19:362–371. PMID: 17935466.

- Violato C, Lockyer J, Fidler H. Assessment of psychiatrists in practice through multisource feedback. Can J Psychiatry. 2008;53:525–533. PMID: 18801214.

- Campbell JL, Richards SH, Dickens A, Greco M, Narayanan A, Brearley S. Assessing the professional performance of UK doctors: an evaluation of the utility of the General Medical Council patient and colleague questionnaires. Qual Saf Health Care. 2008;17:187–193. PMID: 18519625.

- Meng L, Metro DG, Patel RM. Evaluating professionalism and interpersonal and communication skills: implementing a 360-degree evaluation instrument in an anesthesiology residency program. J Grad Med Educ. 2009;10:216–220. PMID: 21975981.

- Lockyer J, Violato C, Fidler H, Alakija P. The assessment of pathologists/laboratory medicine physicians through a multisource feedback tool. Arch Pathol Lab Med. 2009;133:1301–1308. PMID: 19653730.

- Lockyer J, Violato C, Fidler H. The assessment of emergency physicians by a regulatory authority. Acad Emerg Med. 2006;13:1296–1303. PMID: 17099191.

- Archer J, McAvoy P. Factors that might undermine the validity of patient and multi-source feedback. Med Educ. 2011;45:886–893. PMID: 21848716.

- Overeem K, Wollersheim HC, Arah OA, Cruijsberg JK, Grol RP, Lombarts KM. Evaluation of physicians’ professional performance: an iterative development and validation study of multisource feedback instruments. BMC Health Serv Res. 2012;12:1–11. PMID: 22214259.

- Lockyer J, Violato C, Wright B, Fidler H, Chan R. Long-term outcomes for surgeons from 3- and 4-year medical school curricula. Can J Surg. 2012;55:1–5.

- Davies H, Archer J, Bateman A. Specialty-specific multi-source feedback: assuring validity, information training. Med Educ. 2008;42:1014–1020. PMID: 18823521.

- Wenrich MD, Carline JD, Giles LM, Ramsey PG. Ratings of the performances of practicing internists by hospital-based registered nurses. Acad Med. 1993;68:680–687. PMID: 8397633.

- Lelliott P, Williams R, Mears A. Questionnaires for 360-degree assessment of consultant psychiatrists: development and psychometric properties. Br J Psychiatry. 2008;193:156–160. PMID: 18670003.

- Violato C, Marini A, Towes J. Feasibility and psychometric properties of using peers, consulting physicians, co-workers, and patients to assess physicians. Acad Med. 1997;72:82–84.

- Thomas PA, Gebo KA, Hellmann DB. A pilot study of peer review in residency training. J Gen Intern Med. 1999;14:551–554. PMID: 10491244.

- Lipner RS, Blank LL, Leas BF, Fortna GS. The value of patient and peer ratings in recertification. Acad Med. 2002;77 Suppl 10:64–66.

- Joshi R, Ling FW, Jaeger J. Assessment of a 360-degree instrument to evaluate residents’ competency in interpersonal and communication skills. Acad Med. 2004;79:458–463. PMID: 15107286.

- Lockyer J, Violato C, Fidler H. A multi-source feedback program for anesthesiology. Can J Anesth. 2006;53:33–39. PMID: 16371607.

- Violato C, Lockyer J, Fidler H. Assessment of pediatricians by a regulatory authority. Pediatrics. 2006;117:796–802. PMID: 16510660.

- Lockyer JL, Blackmore D, Fidler H. A study of a multi-source feedback system for international medical graduates holding defined licences. Med Educ. 2006;40:340–347. PMID: 16573670.