Abstract

Background

How systematic review authors address missing data among eligible primary studies remains uncertain.

Objective

To assess whether systematic review authors are consistent in the way they handle missing data, both across trials included in the same meta-analysis, and with their reported methods.

Methods

We first identified 100 eligible systematic reviews that included a statistically significant meta-analysis of a patient-important dichotomous efficacy outcome. Then, we successfully retrieved 638 of the 653 trials included in these systematic reviews’ meta-analyses. From each trial report, we extracted statistical data used in the analysis of the outcome of interest to compare with the data used in the meta-analysis. First, we used these comparisons to classify the “analytical method actually used” for handling missing data by the systematic review authors for each included trial. Second, we assessed whether systematic reviews explicitly reported their analytical method of handling missing data. Third, we calculated the proportion of systematic reviews that were consistent in their “analytical method actually used” across trials included in the same meta-analysis. Fourth, among systematic reviews that were consistent in the “analytical method actually used” across trials and explicitly reported on a method for handling missing data, we assessed whether the “analytical method actually used” and the reported methods were consistent.

Results

We could not classify the “analytical method reviews actually used” for handling missing outcome data for 397 trials, 207 of which had no missing outcome data. Among the remaining 241, systematic review authors most commonly conducted “complete case analysis” (n=128, 53%) or assumed “none of the participants with missing data had the event of interest” (n=58, 24%). Only eight of 100 systematic reviews were consistent in their approach to handling missing data across included trials, and only one of these explicitly reported a method for handling missing data. Of the seven reviews that did explicitly report their analytical method of handling missing data, only one was consistent in its approach across included trials (using complete case analysis), and that approach was inconsistent with its reported method (assuming all participants with missing data had the event).

Conclusion

The majority of systematic review authors were inconsistent in their approach towards reporting and handling missing outcome data across eligible primary trials, and most did not explicitly report their methods to handle missing data. Systematic review authors should clearly identify missing outcome data among their eligible trials, specify an approach for handling missing data in their analyses, and apply their approach consistently across all primary trials.

Key Message

Systematic review authors were inconsistent in their methods of handling missing data across included trials.

Most systematic review authors did not explicitly report their methods to handle missing data.

Systematic review authors may simply use what trialists have reported, without consciously planning a method to handle missing data.

Systematic review authors should clearly describe an approach for handling missing outcome data and apply this approach consistently across all trials eligible for their review.

Background

Reporting of missing outcome data in randomized controlled trials (RCTs) is often suboptimal.Citation1 Randomized controlled trials typically report the overall prevalence of study participants that failed to complete the study;Citation2 however, not all outcome measures may be similarly affected. Some trial participants may have experienced one or more outcomes (and have them documented) prior to discontinuing the study prematurely. Also, it is not always clear whether RCT authors followed all participants, such as those who withdrew consent to participate (i.e., whether they have missing data or not).Citation1 Moreover, RCT authors often fail to clearly describe how they handled missing outcome data in their analyses.Citation1,Citation2

The poor reporting of missing outcome data in RCTs necessitates that systematic review authors develop plans to address this issue.Citation3–Citation16 However, a recent methodological survey found that only 25% of systematic review authors reported strategies to address whether certain categories of participants (e.g., withdrew consent, non-compliant) might have missing outcome data, and only 19% of systematic reviews reported a method for handling missing data (e.g., complete case analysis, making assumptions).Citation17

Even when systematic review authors decide to handle missing outcome data in their analysis, they may do so inconsistently across trials included in the same meta-analysis. As an illustrative scenario, for a systematic review that plans to include only participants with available outcome data in its meta-analysis (i.e., use complete case analysis),Citation18 one would expect the denominators of all trials included in that meta-analysis to be restricted to participants with available outcome data. However, for one trial,Citation19 the review authors used the total number randomized as the denominator (despite some participants having missing data), while for another trial,Citation20 they excluded participants with missing data from the denominator. This scenario illustrates two potential problems: (1) the analytical method review authors actually used for handling missing data is inconsistent across trials included in the same meta-analysis; and (2) for some trials, the analytical method review authors actually used is inconsistent with their reported methods. These issues may compromise the reproducibility of systematic reviews and bias their results. The extent of these problems, however, remains unclear.
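To illustrate why mixing denominators matters, the sketch below uses invented counts for a single two-arm trial (not data from the trials cited above) in which 20 of 100 treatment-arm participants, and none in the control arm, have missing outcome data. It computes the risk ratio once with complete case denominators and once with the number randomized as the denominator and the observed events as the numerator. The function name `risk_ratio` and all numbers are illustrative assumptions.

```python
from fractions import Fraction


def risk_ratio(events_t, denom_t, events_c, denom_c):
    """Risk ratio of treatment versus control, kept as an exact fraction."""
    return Fraction(events_t, denom_t) / Fraction(events_c, denom_c)


# Hypothetical trial (invented numbers): events among participants with
# available data, number randomized, and number with missing outcome data.
treat = {"randomized": 100, "missing": 20, "events": 16}
ctrl = {"randomized": 100, "missing": 0, "events": 30}

# Complete case analysis: participants with missing data leave the denominator.
rr_cca = risk_ratio(treat["events"], treat["randomized"] - treat["missing"],
                    ctrl["events"], ctrl["randomized"] - ctrl["missing"])

# Number randomized as denominator, observed events as numerator.
rr_randomized = risk_ratio(treat["events"], treat["randomized"],
                           ctrl["events"], ctrl["randomized"])

print(rr_cca, rr_randomized)
```

With these numbers the risk ratio is 2/3 under complete case analysis and 8/15 with the total-randomized denominator, so a meta-analysis that handles this trial one way and its other trials the other way would be combining estimates that rest on different assumptions about the missing participants.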

Objective

The overall objective of this study was to assess whether systematic review authors are consistent in the way they handle missing data, both across trials included in the same meta-analysis and with their reported methods. More specifically, we aimed to: (1) classify the methods systematic review authors actually used for handling missing data for each included trial; (2) assess whether systematic review authors explicitly reported on the method of handling missing data; (3) assess the extent to which systematic review authors were consistent in the methods they actually used across trials included in the same meta-analysis; and (4) when they were consistent, assess whether the methods actually used were consistent with their reported methods (if reported).

Methods

Study Design and Definitions

This methodological study is part of a larger project examining methodological issues related to missing outcome data in systematic reviews and RCTs.Citation21 Our published protocol includes detailed information on the definitions, eligibility criteria, search strategy, selection process, data extraction and data analysis.Citation21 A patient-important outcome is defined as an outcome for which a patient would answer with “yes” to the following question:

If the patient knew that this outcome was the only thing to change with treatment, would the patient consider receiving this treatment if associated with burden, side effects, or cost?Citation21

We defined missing data as outcome data for trial participants that are not available to systematic review authors from the published RCT reports or personal contact with RCT authors. We used our recently published guidanceCitation22 to identify categories of trial participants who might have missing outcome data.

Sample Selection

Our random sample included 50 Cochrane and 50 non-Cochrane systematic reviews published in 2012 that reported a statistically significant, group-level meta-analysis of a patient-important dichotomous efficacy outcome.Citation17 We identified all 653 RCTs included in the 100 meta-analyses of interest and retrieved full-text reports for 638 of them.Citation1 Eleven pairs of reviewers extracted data, in duplicate and independently, from the systematic reviews and RCTs and resolved disagreements with the help of a third reviewer. We conducted calibration exercises and used standardized and pilot-tested forms with detailed written instructions to improve reliability of data extraction.

Classifying the “Analytical Method Reviews Actually Used” for Handling Missing Data

Authors of reviews may fail to clearly report their approach to handling missing data. Alternatively, the approach they report in their methods may not correspond with the method they actually used. Therefore, we established the “analytical method reviews actually used” for handling missing data using the following steps:

From each RCT report, we extracted (per study arm) the number of participants randomized, the numerator (i.e. the number of events) used in the analysis of interest, and the number of participants with missing data.

From the meta-analysis (forest plot plus text) and for each arm of all contributing RCTs, we extracted the denominator and the numerator used in the meta-analysis of interest.

We compared the statistical data from the RCT report with data from the meta-analysis.

Based on this comparison, we classified the “analytical method reviews actually used” for handling missing data as:

Unclear, cannot be verified (the numbers provided could not be explained, i.e., they did not match any of the analytical methods below).

Complete case analysis.

Making assumptions (e.g., best case scenario, all participants had the event).

Different methods (from those listed above) for different categories of participants with missing data.

Not applicable, no missing data.
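The comparison and classification steps above can be sketched in code. This is a hypothetical illustration under several assumptions, not the extraction tool used in the study: each arm's participants with missing outcome data are recorded by category, the trial's reported numerator counts events among participants with available data, and each missing category is either excluded from the denominator, imputed as having the event, or imputed as not having it (mixing event and no-event imputations within an arm is counted as "making assumptions"). The function names, labels, and the rule for combining arms are invented for this sketch, which also ignores the reviewer judgment (best guess versus explicit reporting) that the study recorded.

```python
from itertools import product

# Category labels mirroring the classification list above (wording shortened).
UNCLEAR = "unclear, cannot be verified"
CCA = "complete case analysis"
ASSUMPTION = "making assumptions"
MIXED = "different methods for different categories"
NO_MISSING = "not applicable, no missing data"

CHOICES = ("exclude", "event", "no_event")  # how one missing-data category is handled


def classify_arm(randomized, trial_events, missing_by_category, ma_events, ma_denominator):
    """Classify one trial arm by searching for a handling of each missing-data
    category that reproduces the meta-analysis numerator and denominator.

    Assumes trial_events counts events among participants with available data.
    """
    sizes = [n for n in missing_by_category.values() if n > 0]
    if not sizes:
        matches = ma_events == trial_events and ma_denominator == randomized
        return NO_MISSING if matches else UNCLEAR
    for choice in product(CHOICES, repeat=len(sizes)):
        excluded = sum(s for s, c in zip(sizes, choice) if c == "exclude")
        imputed_events = sum(s for s, c in zip(sizes, choice) if c == "event")
        if (randomized - excluded == ma_denominator
                and trial_events + imputed_events == ma_events):
            # The number excluded is fixed by the denominator, so every matching
            # combination yields the same label; the first match is enough.
            if excluded == sum(sizes):
                return CCA
            return ASSUMPTION if excluded == 0 else MIXED
    return UNCLEAR


def classify_trial(arms):
    """Combine arm-level labels into one trial-level label."""
    labels = {classify_arm(**arm) for arm in arms}
    if UNCLEAR in labels:
        return UNCLEAR
    labels.discard(NO_MISSING)
    if not labels:
        return NO_MISSING
    # Assumption: arms handled differently from each other are grouped with MIXED.
    return labels.pop() if len(labels) == 1 else MIXED


# Hypothetical two-arm trial whose meta-analysis denominators exclude all missing participants.
trial = [
    dict(randomized=50, trial_events=10, ma_events=10, ma_denominator=44,
         missing_by_category={"lost to follow-up": 4, "withdrew consent": 2}),
    dict(randomized=50, trial_events=12, ma_events=12, ma_denominator=47,
         missing_by_category={"lost to follow-up": 3}),
]
print(classify_trial(trial))  # complete case analysis
```

Searching over per-category choices, rather than testing only whole-arm methods, is what lets the sketch separate "complete case analysis" and "making assumptions" from the mixed category; numbers that no combination can reproduce fall into "unclear".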

Table 1 lists commonly used methods of handling missing outcome data of trial participants. The hypothetical examples in Table 2 illustrate how different meta-analyses addressing the same study question (i.e., same patients, interventions, comparators and outcomes) may handle missing data from a single eligible RCT, and how we classified the “analytical method reviews actually used” in each case. We also assessed the confidence of data extractors in classifying the analytical method actually used, i.e., whether based on explicit reporting (higher confidence) or best guess (lower confidence).

Table 1 Commonly Used Methods of Handling Missing Outcome Data Among Trial Participants

Table 2 Examples Illustrating How Meta-Analyses Addressing the Same Study Question Might Handle Missing Outcome Data for an Unfavorable Dichotomous Outcome from an RCT Report and Thus Informed Classification of the “Analytical Method Reviews Actually Used”

Also, for systematic reviews that reported on having participants with missing outcome data, we assessed whether systematic review authors used the same denominator and/or numerator as the one(s) reported in the RCTs that contributed to their meta-analysis.

Consistency Between Analytical Methods Reported, and Used, for Handling Missing Outcome Data

After classifying the “analytical method reviews actually used” for handling missing data (aim 1), we assessed whether the authors explicitly reported on the analytical method of handling missing data, which, if present, we designated as the “reported analytical method” (aim 2). Next, for each meta-analysis, we assessed whether the “analytical method reviews actually used” for handling missing data was consistent across the trials within that meta-analysis (aim 3). If so, we explored whether the “analytical method reviews actually used” was consistent with the “reported analytical method” (aim 4). We displayed the results of the “reported” and “actual” analytical methods in a matrix (see Table 3).

Table 3 Hypothetical Scenarios Illustrating the Process for Judging Consistency Between “Reported” and “Actual” Analytical Methods for Addressing Missing Outcome Data
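The judgements for aims 3 and 4 described above could be expressed as a small function. This is a sketch under stated assumptions, not the study's procedure: trials with no missing data are ignored, any trial whose method could not be verified is taken to prevent a judgement of consistency, and method labels are compared as plain strings. The function name `judge_consistency` is hypothetical.

```python
NOT_APPLICABLE = "not applicable, no missing data"
UNCLEAR = "unclear, cannot be verified"


def judge_consistency(trial_methods, reported_method=None):
    """Judge aims 3 and 4 for one meta-analysis.

    trial_methods: the method actually used for each included trial.
    reported_method: the review's reported method, or None if not reported.
    Returns (consistent_across_trials, consistent_with_reported); the second value
    is None when the review reported no method or was not consistent across trials.
    """
    # Assumption: trials with no missing data carry no information about the method.
    relevant = [m for m in trial_methods if m != NOT_APPLICABLE]
    # Assumption: a single unverifiable trial means consistency cannot be shown,
    # and a meta-analysis with no missing data at all is not counted as consistent.
    consistent = bool(relevant) and UNCLEAR not in relevant and len(set(relevant)) == 1
    if not consistent or reported_method is None:
        return consistent, None
    return consistent, relevant[0] == reported_method


print(judge_consistency(
    ["complete case analysis", NOT_APPLICABLE, "complete case analysis"],
    reported_method="assume all had the event",
))  # (True, False)
```

The example mirrors the pattern reported in the Results for the single review that reported a method and was consistent across trials: complete case analysis throughout, but a different reported method.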

Statistical Analysis

Using SPSS statistical software, version 21.0,Citation23 we conducted a descriptive analysis (frequencies and percentages) of all collected variables. We also planned to conduct regression analyses to study the association between “consistency between actual and reported method” and characteristics of included systematic reviews.

Results

Our sample of 100 systematic reviews with significant pooled effect estimates included 653 RCTs that informed the meta-analyses of interest, of which we acquired the full-text reports for 638. We have previously reported on the details of these systematic reviewsCitation17 and the included RCTs.Citation1 Briefly, 400 RCTs (63%) reported on at least one category of participants who were either explicitly not followed up or had unclear follow-up status. Among these 400 RCTs, the median percentage of participants who were either explicitly not followed up or had unclear follow-up status was 11.7% (IQR 5.6–23.7%).Citation1 Among trials with missing outcome data, the meta-analyses they contributed to most often used the denominator (81%) and numerator (80%) reported by the RCT (Table 4).

Table 4 “Analytical Method Reviews Actually Used” to Handle Missing Data Across RCTs Included in Meta-Analysis

Classifying the “Analytical Method Reviews Actually Used” for Handling Missing Data

We were able to classify the “analytical method reviews actually used” for 241 (38%) of the included RCTs; 67% of these classifications were made with lower confidence (best guess) and 33% with higher confidence (based on explicit reporting) (Table 4). Of the remaining RCTs, 207 (32%) included no participants with missing data (complete follow-up), 161 (25%) had numbers that could not be explained (i.e., they did not match any of the candidate analytical methods), 5 (1%) had the wrong data extracted by the review authors (e.g., data from the wrong outcome), and 24 (4%) provided insufficient information for even a best guess at the method used to handle missing data.

Among the 241 included RCTs for which we were able to classify the “analytical method reviews actually used”, systematic review authors conducted “complete case analysis” in 128 (53%), assumed “none of the participants with missing data had the event of interest” in 58 (24%), and used different methods for different categories of participants with missing data in 51 (21%) trials. In four RCTs (2%), review authors used assumptions other than the five we explored (Table 1).
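As a simple reproducibility check on the descriptive statistics (frequencies and percentages), the sketch below recomputes the percentages in the two preceding paragraphs from the reported counts. It is not the SPSS analysis; the category keys are shorthand labels chosen for this sketch, and percentages are rounded to whole numbers.

```python
def percentages(counts):
    """Express each count as a whole-number percentage of the total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Counts reported in the Results (labels are shorthand for this sketch).
all_trials = {  # the 638 RCTs with full-text reports
    "classified": 241,
    "no missing data": 207,
    "unexplained numbers": 161,
    "wrong data extracted": 5,
    "insufficient information": 24,
}
classified = {  # the 241 RCTs whose method could be classified
    "complete case analysis": 128,
    "none had the event": 58,
    "different methods by category": 51,
    "other assumptions": 4,
}

for counts in (all_trials, classified):
    print(sum(counts.values()), percentages(counts))
```

The totals come to 638 and 241, and the rounded percentages match those reported in the text.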

Only seven of the 100 systematic reviews we assessed explicitly reported on methods to handle missing data in their meta-analysis. Two planned a complete case analysis, two proposed assuming all participants with missing data had the event of interest, and three reported their intention to assume that none of the participants with missing data had the event of interest.

Consistency in Analytical Methods

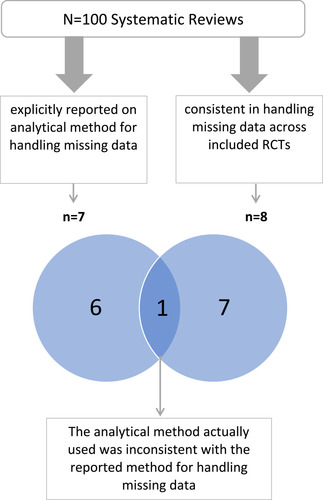

Of the seven systematic reviews that explicitly reported on the analytical method for handling missing data, only one was consistent in handling missing data across all included trials (using complete case analysis) (Figure 1). However, the analytical method it actually used was not consistent with its “reported analytical method” (“if missing data were unable to be obtained, a result was assumed to have a particular value, such as poor outcome”).Citation24 Of the 93 systematic reviews that did not explicitly report their analytical method of handling missing data, seven were consistent in their actual analytical method for handling missing data across all included trials.

Figure 1 Consistency in analytical methods within the same meta-analysis and versus the reported analytical method.

Due to the low number of reviews that were consistent within the same meta-analysis, we were not able to conduct any regression analysis to study the association between “consistency between actual and reported method” and characteristics of included systematic reviews.

Discussion

Summary of Findings

In this systematic survey of Cochrane and non-Cochrane systematic reviews, we found that almost all reviews handled missing outcome data of trial participants inconsistently. Most reviews did not specify an approach for handling missing data, and of the few that did, none applied its reported approach consistently across eligible trials.

Strengths and Limitations

The main strength of our study is its systematic and transparent methods, including screening independently and in duplicate, conducting calibration exercises, using pilot-tested forms for data extraction to increase reliability, and applying a systematic strategy for making the numerous classification judgments involved in the study. To our knowledge, this is the first methodological survey exploring how systematic review authors actually dealt with missing outcome data from trials in their meta-analyses. It is also the first study to assess whether the methods used for handling missing outcome data in the meta-analysis are consistent with the “reported analytical methods”. A limitation of our study is that we considered only dichotomous outcome data. The methods for handling missing continuous data are different, and our findings may not be generalizable to continuous outcomes.Citation25,Citation26 Another limitation was our reliance on reviewers’ judgments at different stages of the process (e.g., judgment regarding the actual analytical method used to handle missing data). Our development and application of a logically coherent strategy for making the numerous classification judgments involved in the study may mitigate this concern. Further, our sample included systematic reviews published in 2012 and may not reflect more recent reviews; however, recent surveys suggest that the reporting, handling, and assessment of risk of bias in relation to missing data have not improved since we acquired the reviews used in our study.Citation16,Citation27–Citation29

Interpretation of Findings

Both the challenge we faced in classifying the “analytical method reviews actually used” (25% of RCTs provided numbers that could not be explained) and the observed inconsistency in handling missing data within the same meta-analysis reflect the failure of reviewers to adopt standardized approaches to reporting and dealing with missing data.Citation22,Citation30,Citation31 This inconsistency may bias the results and could produce disparate findings among different meta-analyses addressing the same research question, even when considering the same trials.Citation32

We uncovered three limitations in how systematic review authors handle missing data in their meta-analyses:

Ninety-three percent did not explicitly report on their methods for handling missing data.

Ninety-two percent were inconsistent in the methods used to handle missing data across RCTs within the same meta-analysis.

In the few meta-analyses that did explicitly report a method for handling missing data, none applied that method consistently across included trials.
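The percentages in this list follow from the review-level counts reported in the Results (7 of 100 reviews reported a method; 1 of those 7 and 7 of the other 93 were consistent across trials). A minimal tally, using a 2 × 2 layout chosen for this sketch:

```python
# Review-level counts from the Results, keyed by
# (reported a method?, consistent across trials?).
reviews = {
    (True, True): 1,
    (True, False): 6,
    (False, True): 7,
    (False, False): 86,
}

total = sum(reviews.values())
not_reported = reviews[(False, True)] + reviews[(False, False)]
inconsistent = reviews[(True, False)] + reviews[(False, False)]

print(f"{total} reviews")
print(f"did not report a method: {round(100 * not_reported / total)}%")
print(f"inconsistent across trials: {round(100 * inconsistent / total)}%")
```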

We also found that for about 80% of RCTs with missing outcome data contributing to the meta-analyses of interest, the systematic review authors used the same denominator (81%) and numerator (80%) as those reported by the trialists. This suggests that systematic review authors may simply use what trialists have reported, without consciously planning a method to handle missing data. Because trialists use different approaches to handling missing outcome data, this practice might explain why systematic review authors are not consistent in handling missing data across trials included in the same meta-analysis. In other cases, systematic review authors (and some trial authors), intending to apply the “intention to treat” principle, include the total number of participants randomized in the denominator while keeping whatever numerator the trial authors used. In doing so, they implicitly assume that “none of the participants with missing data had the event”. Given that this assumption is highly implausible in a real-world context, confidence in the findings would be lower.Citation33,Citation34
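The implicit assumption described in this paragraph can be checked with a few lines of arithmetic. The sketch below uses a hypothetical arm (120 randomized, 20 with missing outcome data, 30 observed events); the function `arm_risk` and its method names are illustrative, and `observed_events` is assumed to count events among participants with available data.

```python
from fractions import Fraction


def arm_risk(randomized, missing, observed_events, method):
    """Risk in one arm under a named approach to missing outcome data.

    observed_events counts events among participants with available data.
    """
    available = randomized - missing
    if method == "complete case":
        return Fraction(observed_events, available)
    if method == "none had the event":
        # Missing participants stay in the denominator and contribute no events.
        return Fraction(observed_events + 0, available + missing)
    if method == "all had the event":
        return Fraction(observed_events + missing, available + missing)
    raise ValueError(f"unknown method: {method}")


n, m, e = 120, 20, 30  # hypothetical arm
# Number randomized over the trial's numerator...
total_randomized = Fraction(e, n)
# ...is exactly the 'none had the event' imputation.
assert total_randomized == arm_risk(n, m, e, "none had the event")

for method in ("complete case", "none had the event", "all had the event"):
    print(method, arm_risk(n, m, e, method))
```

Placing the number randomized over the trial's numerator gives 1/4, identical to explicitly imputing no events for the 20 missing participants, whereas complete case analysis gives 3/10 and assuming all missing participants had the event gives 5/12.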

Recommendations for Practice

In order to ensure consistency in handling missing data across trials included in the same meta-analysis, authors should:

Develop a transparent and detailed strategy for handling missing data (e.g., using complete case analysis, applying assumptions);Citation35–Citation38

Refer to available guidance on how to identify participants with missing data from RCT reports;Citation22

Apply their strategy for handling missing data consistently across all trials included in the meta-analysis;

Report clearly on all of the above.

Conclusion

The large majority of systematic reviews considered in our study did not report a method for handling missing data in their meta-analyses. For the few that did, none applied its reported method consistently across the trials in its meta-analysis. As such inconsistency might threaten the validity of the results of systematic reviews, methodological rigor requires improved adherence to guidance on identifying, reporting, and handling participants with missing outcome data.

Abbreviations

MA, meta-analysis; RCT, randomized controlled trial; SR, systematic review.

Author Contributions

All authors contributed to data analysis, drafting or revising the article, gave final approval of the version to be published, and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

- Kahale LA, Diab B, Khamis AM, et al. Potentially missing data was considerably more frequent than definitely missing data in randomized controlled trials: a methodological survey. J Clin Epidemiol. 2018;106:18–31. PMID: 30300676

- Akl EA, Kahale LA, Ebrahim S, et al. Three challenges described for identifying participants with missing data in trials reports, and potential solutions suggested to systematic reviewers. J Clin Epidemiol. 2016;76:147–154. doi:10.1016/j.jclinepi.2016.02.022. PMID: 26944294

- Hussain JA, White IR, Langan D, et al. Missing data in randomised controlled trials evaluating palliative interventions: a systematic review and meta-analysis. Lancet. 2016;387:S53. doi:10.1016/s0140-6736(16)00440-2

- Adewuyi TE, MacLennan G, Cook JA. Non-compliance with randomised allocation and missing outcome data in randomised controlled trials evaluating surgical interventions: a systematic review. BMC Res Notes. 2015;8:403. doi:10.1186/s13104-015-1364-9. PMID: 26336099

- Akl EA, Shawwa K, Kahale LA, et al. Reporting missing participant data in randomised trials: systematic survey of the methodological literature and a proposed guide. BMJ Open. 2015;5(12):e008431. doi:10.1136/bmjopen-2015-008431

- Bell ML, Fiero M, Horton NJ, et al. Handling missing data in RCTs; a review of the top medical journals. BMC Med Res Methodol. 2014;14(1):118. doi:10.1186/1471-2288-14-118. PMID: 25407057

- Fielding S, Maclennan G, Cook JA, et al. A review of RCTs in four medical journals to assess the use of imputation to overcome missing data in quality of life outcomes. Trials. 2008;9(1):51. doi:10.1186/1745-6215-9-51. PMID: 18694492

- Fielding S, Ogbuagu A, Sivasubramaniam S, et al. Reporting and dealing with missing quality of life data in RCTs: has the picture changed in the last decade? Qual Life Res. 2016;25(12):2977–2983. doi:10.1007/s11136-016-1411-6. PMID: 27650288

- Fiero MH, Huang S, Oren E, et al. Statistical analysis and handling of missing data in cluster randomized trials: a systematic review. Trials. 2016;17(1):72. doi:10.1186/s13063-016-1201-z. PMID: 26862034

- Gewandter JS, McDermott MP, McKeown A, et al. Reporting of missing data and methods used to accommodate them in recent analgesic clinical trials: ACTTION systematic review and recommendations. Pain. 2014;155(9):1871–1877. doi:10.1016/j.pain.2014.06.018. PMID: 24993384

- Karlson CW, Rapoff MA. Attrition in randomized controlled trials for pediatric chronic conditions. J Pediatr Psychol. 2009;34(7):782–793. doi:10.1093/jpepsy/jsn122. PMID: 19064607

- Masconi KL, Matsha TE, Echouffo-Tcheugui JB, et al. Reporting and handling of missing data in predictive research for prevalent undiagnosed type 2 diabetes mellitus: a systematic review. EPMA J. 2015;6(1):7. doi:10.1186/s13167-015-0028-0. PMID: 25829972

- Spineli LM. Missing binary data extraction challenges from Cochrane reviews in mental health and Campbell reviews with implications for empirical research. Res Synth Methods. 2017;8(4):514–525. doi:10.1002/jrsm.1268. PMID: 28961395

- Wahlbeck K, Tuunainen A, Ahokas A, et al. Dropout rates in randomised antipsychotic drug trials. Psychopharmacology. 2001;155(3):230–233. doi:10.1007/s002130100711. PMID: 11432684

- Wood AM, White IR, Thompson SG. Are missing outcome data adequately handled? A review of published randomized controlled trials in major medical journals. Clin Trials. 2004;1(4):368–376. doi:10.1191/1740774504cn032oa. PMID: 16279275

- Ibrahim F, Tom BDM, Scott DL, et al. A systematic review of randomised controlled trials in rheumatoid arthritis: the reporting and handling of missing data in composite outcomes. Trials. 2016;17(1):272. doi:10.1186/s13063-016-1402-5. PMID: 27255212

- Kahale LA, Diab B, Brignardello-Petersen R, Agarwal A, et al. Systematic reviews do not adequately report, or address missing outcome data in their analyses: a methodological survey. J Clin Epidemiol. 2018. doi:10.1016/j.jclinepi.2018.02.016

- Lafuente‐Lafuente C, Valembois L, Bergmann JF, et al. Antiarrhythmics for maintaining sinus rhythm after cardioversion of atrial fibrillation. Cochrane Database Syst Rev. 2011;3.

- Singh S, Saini RK, DiMarco J, et al. Efficacy and safety of sotalol in digitalized patients with chronic atrial fibrillation. Am J Cardiol. 1991;68(11):1227–1230. doi:10.1016/0002-9149(91)90200-5. PMID: 1951086

- Singh BN, Singh SN, Reda DJ, et al. Amiodarone versus sotalol for atrial fibrillation. N Engl J Med. 2005;352(18):1861–1872. doi:10.1056/NEJMoa041705. PMID: 15872201

- Akl EA, Kahale LA, Agarwal A, et al. Impact of missing participant data for dichotomous outcomes on pooled effect estimates in systematic reviews: a protocol for a methodological study. Syst Rev. 2014;3:137. doi:10.1186/2046-4053-3-137. PMID: 25423894

- Kahale LA, Guyatt GH, Agoritsas T, et al. A guidance was developed to identify participants with missing outcome data in randomized controlled trials. J Clin Epidemiol. 2019;115:55–63. doi:10.1016/j.jclinepi.2019.07.003. PMID: 31299357

- IBM Corp. Released 2013. IBM SPSS Statistics for Windows, Version 22.0. Armonk, NY: IBM Corp.

- Tang H, Hunter T, Hu Y, et al. Cabergoline for preventing ovarian hyperstimulation syndrome. Cochrane Database Syst Rev. 2012;2.

- Ebrahim S, Akl EA, Mustafa RA, et al. Addressing continuous data for participants excluded from trial analysis: a guide for systematic reviewers. J Clin Epidemiol. 2013;66(9):1014–1021.e1. doi:10.1016/j.jclinepi.2013.03.014. PMID: 23774111

- Ebrahim S, Johnston BC, Akl EA, et al. Addressing continuous data measured with different instruments for participants excluded from trial analysis: a guide for systematic reviewers. J Clin Epidemiol. 2014;67(5):560–570. doi:10.1016/j.jclinepi.2013.11.014. PMID: 24613497

- Sullivan TR, Yelland LN, Lee KJ, et al. Treatment of missing data in follow-up studies of randomised controlled trials: a systematic review of the literature. Clin Trials. 2017;14(4):387–395. doi:10.1177/1740774517703319. PMID: 28385071

- Babic A, Tokalic R, Cunha JAS, et al. Assessments of attrition bias in Cochrane systematic reviews are highly inconsistent and thus hindering trial comparability. BMC Med Res Methodol. 2019;19(1):76. doi:10.1186/s12874-019-0717-9. PMID: 30953448

- Shivasabesan G, Mitra B, O’Reilly GM. Missing data in trauma registries: a systematic review. Injury. 2018;49(9):1641–1647. doi:10.1016/j.injury.2018.03.035. PMID: 29678306

- Akl EA, Carrasco-Labra A, Brignardello-Petersen R, et al. Reporting, handling and assessing the risk of bias associated with missing participant data in systematic reviews: a methodological survey. BMJ Open. 2015;5(9):e009368. doi:10.1136/bmjopen-2015-009368

- Akl EA, Kahale LA, Agoritsas T, et al. Handling trial participants with missing outcome data when conducting a meta-analysis: a systematic survey of proposed approaches. Syst Rev. 2015;4:98. doi:10.1186/s13643-015-0083-6. PMID: 26202162

- Khamis AM, El Moheb M, Nicolas J, et al. Several reasons explained the variation in the results of 22 meta-analyses addressing the same question. J Clin Epidemiol. 2019;113:147–158. doi:10.1016/j.jclinepi.2019.05.023. PMID: 31150832

- Guyatt GH, Oxman AD, Vist G, et al. GRADE guidelines: 4. Rating the quality of evidence—study limitations (risk of bias). J Clin Epidemiol. 2011;64(4):407–415. doi:10.1016/j.jclinepi.2010.07.017. PMID: 21247734

- Guyatt GH, Ebrahim S, Alonso-Coello P, et al. GRADE guidelines 17: assessing the risk of bias associated with missing participant outcome data in a body of evidence. J Clin Epidemiol. 2017;87:14–22. doi:10.1016/j.jclinepi.2017.05.005. PMID: 28529188

- Akl EA, Johnston BC, Alonso-Coello P, et al. Addressing dichotomous data for participants excluded from trial analysis: a guide for systematic reviewers. PLoS One. 2013;8(2):e57132. doi:10.1371/journal.pone.0057132. PMID: 23451162

- White IR, Carpenter J, Horton NJ. Including all individuals is not enough: lessons for intention-to-treat analysis. Clin Trials. 2012;9(4):396–407. doi:10.1177/1740774512450098. PMID: 22752633

- White IR, Higgins JP. Meta-analysis with missing data. Stata J. 2009;9(1):57–69. doi:10.1177/1536867X0900900104

- White IR, Horton NJ, Carpenter J, et al. Strategy for intention to treat analysis in randomised trials with missing outcome data. BMJ. 2011;342:d40. doi:10.1136/bmj.d40. PMID: 21300711