Abstract

The authors present a new method of recognizing different human facial gestures through their neural activities and muscle movements, which can be used in machine-interfacing applications. Human–machine interface (HMI) technology utilizes human neural activities as input controllers for the machine. Recently, much work has been done on specific applications of facial electromyography (EMG)-based HMI, all of which have used limited and fixed numbers of facial gestures. In this work, a multipurpose interface is suggested that can support 2–11 control commands and can be applied to various HMI systems. The significance of this work is finding the most accurate facial gestures for any application with a maximum of eleven control commands. Eleven facial gesture EMGs are recorded from ten volunteers. Detected EMGs are passed through a band-pass filter and root mean square features are extracted. Various combinations of gestures, with a different number of gestures in each group, are made from the existing facial gestures. Finally, all combinations are trained and classified by a Fuzzy c-means classifier, and the combinations with the highest recognition accuracy in each group are chosen. The chosen combinations reached an average accuracy above 90%, which supports their use as command controllers.

Introduction

The interaction between human and computer or machine is of great importance for various fields such as biomedical science and computer, electrical, electronic, or mechanical engineering as well as neuroscience. The most significant reasons of the development of this technology are its efficiency and usability for handicapped and elderly people. The human–machine interface (HMI) is an approach for information transmittal where humans interact with the machine. Designing such interfaces is a challenge and requires a great deal of work to make the interface logical, functional, accessible, and pleasant to use. The most popular HMI modes still rely on keyboard, mouse, and joystick. In recent years, there has been remarkable interest in introducing intuitive interfaces that recognize the user’s body movements and translate them into machine commands. The two main methods of designing these interfaces are techniques based on nonbiomedical or biomedical signals. The “tonguepoint” as well as “headmouse” are examples of nonbiomedical signal devices.Citation1 These approaches are restricted to users with cerebral palsy or spinal vertebrae fusion.Citation2 Patients with severe multiple sclerosis and spinal cord injuries who have limitations in neck movement are unable to use these devices.Citation3 An eye-gaze tracking method has been proposedCitation4 to provide an accurate cursor. With these systems, a video camera is usually used to record images or videos continuously and image-processing methods are applied to analyze the data. In spite of their usability, there are some disadvantages such as limitation of camera field of view, complexity, computational cost, target size, peripheral conditions, lighting, and image quality. Recently, automatic recognition of facial expressions, which has a direct effect and a vital role in HMI, has been considered. In 1999, image-based facial gesture recognition was proposedCitation5 for controlling a powered wheelchair. 
However, the gesture recognition system required costly high-speed image-processing hardware.

As an alternative, biomedical signals have been employed as other kinds of interfaces in many fields of HMI. These signals can be utilized for neural connection with computers and they are obtained from tissues, organs, or the cell system. Electrooculography (EOG), which measures and determines the eye position electrically, was used as a powerful input device. EOG has been applied to control a cursor,Citation6 but these systems were affected by head and muscle movement, signal drift, and channel crosstalk. Electroencephalography (EEG) has also been considered as a neural linkage to communicate with the computer/machine and is typically called the brain–computer interface (BCI). These systems have been recently developed by removing the requirement for involvement of peripheral nerves and muscles in disabled usersCitation7,Citation8 by applying the modulation of mu and beta rhythms via motor imagery to make BCI control possible. Apart from its benefits, there are some issues with the use of EEGs such as long training time, poor signal-to-noise ratio, and numerous challenges in processing steps.Citation9,Citation10

More recently, electromyography (EMG) has become the new basis of biosignal information for designing HMI/human–computer interfaces (HCI). EMG signals are captured from body muscles such as arm or facial muscles and are translated and converted into machine-input control commands. Much work has been done in the field of interface designing in EMG-based HMI systems, which have been applied to upper and lower limb gestures. Two-dimensional pointers are controlled through wrist movementsCitation11 and an EMG-controlled pointing tool was proposed and developed.Citation12,Citation13 An HMI based on EMG arm gestures within three-dimensional space design was proposed and the final recognition result of this was ~96%.Citation14 An approach with three hand gestures was investigated to control a mouse cursor and the overall accuracy was 97%.Citation15 Controlling a hand prosthesis using hand gestures and pattern recognition had around 90% accuracy.Citation16,Citation17 Control of virtual devices through hand-gesture EMGsCitation18 and an online EMG mouse-controlling computer cursor with six wrist gestures were selected as control commands and the reported average recognition ratio was 97%.Citation19 Hand and finger gesture recognition has been explored with an accuracy of almost 93%.Citation20 More accurate hand-gesture recognition with an accuracy of 99% has been attained.Citation21 All these interfaces were designed based on hand, finger, wrist, and arm gestures. However, this would be unsuitable for people with both lower and upper extreme impairment, most of whom cannot even move their neck. These can be seen as the most difficult cases, as, for these patients, their only way of communication is by facial expression. For these cases, the interface design solution must therefore be based on facial gestures. Some effort has been made to utilize EMG-based facial gestures to develop proper interfaces. 
The first was an intelligent robotic wheelchair based on EMG gestures and voice control.Citation22 The designed interface had three basic facial expressions: happy, angry, and sad.Citation23 An EMG-based HMI in a mobile robot was tested by asking volunteers to perform ten eye blinks with each eye.Citation24 Moreover, useful control commands from facial gestures during speech have been provided and investigated for HCI application.Citation25 In the same year, a hands-free wheelchair controlled through forehead myosignals was suggested.Citation26 Further, recently, a virtual crane training system controlled through five facial gestures was introducedCitation27 and a facial EMG-based interface has been proposed and used in maximum medical improvement through three facial EMGs.Citation28

The most important facts to be considered throughout the design process are analysis procedure and the flexibility and user-friendliness of these systems. Since real-time control includes processing issues, simplicity, low-cost computation, high speed, and accuracy of analysis are vital factors. Besides, flexibility of these systems mostly relies on the number of control commands; that is, flexibility increases when the number of control commands increases. In addition, the user-friendly systems are more pleasant for the operators since they can choose suitable gestures in each application with a different number of control commands. Therefore, to apply these interfaces in a real HMI system, all of the above issues must be considered to achieve best performance.

Firstly, EMG-recording protocols, preprocessing, and processing of acquired data must be considered the main focus for designing these interfaces. Amongst these, feature extraction and data classification have been always under investigation to optimize the whole procedure and to obtain the best results. As reported previously, due to EMG characteristics, processing methods are restricted, especially in low-level contraction like in facial muscles, where the firing rate and frequency bandwidth of muscles are almost the same. In the field of EMG feature extraction, time-domain features such as mean absolute value (MAV), root mean square (RMS), and variance (VAR) are chosen and applied in much research since they provide a better estimation of EMGsCitation29,Citation30 compared with frequency-domain and time frequency-domain techniques, which are mostly applied in muscle fatigue investigations.Citation31,Citation32 The proper classifier must be accurate and fast enough to attain the real-time prerequisites. There are many tactics available for EMG classification such as neural network, fuzzy, and probabilistic. Suitable classifiers are chosen depending on the patterns that need to be trained, specific applications, and muscle types. The MAV of EMG was extracted and the gestures were discriminated via a threshold with 93% accuracy,Citation22 and, by combining maximum scatter difference, RMS, as well as power spectrum density features with minimum distance method, 94.44% accuracy was achieved in classification.Citation25 Facial gestures were classified into two classes via thresholding tactic and obtained 95.71% recognition ratio.Citation24 Moving RMS features and linear separation technique were used to identify three facial expressionsCitation25 and they achieved 100% recognition accuracy. 
The same 100% accuracy was achieved by employing MAV features and a support vector machine (SVM) classifier.Citation26 A control accuracy of 93.2% was reported when RMS features and a subtractive fuzzy c-means classifier were applied to distinguish five facial gestures as control commands.Citation27 In the same year, moderate results with accuracies of 61%, 60.71%, and 56.19% were achieved by employing mean, absolute deviation, standard deviation (SD), and VAR features with K-nearest neighbors, SVM, and multilayer perceptron classifiers.Citation28 In recent research, RMS features were extracted from eight facial gestures, and the accuracies of two popular classification methods in the field of facial EMG classification, SVM and fuzzy c-means (FCM), were compared. The results showed the greater strength of FCM over SVM and confirmed the usefulness of the RMS method in EMG-signal feature extraction.Citation33,Citation34

All previously mentioned work was restricted by two issues. Firstly, they used a fixed number of facial gestures for their purpose, which means that their interface was not flexible enough to be employed by other applications. Secondly, the recognition ratio results relied strictly on included facial gestures and there was a possibility of an increase in performance accuracy by applying other gestures. In this paper, the design of a multipurpose interface is proposed based on eleven voluntary facial gestures. This makes the interface much more flexible since each of the included facial gestures can be used as an accurate input control in various HMI applications where 2–11 control commands are needed. Furthermore, this interface became more reliable through creating different combinations with a different number of facial gestures to find the most accurate combinations. Finally, this interface finds the maximum recognition accuracy for each facial combination consisting of 2–11 facial gestures. The design of the proposed interface is described step by step in the following sections.

Materials and methods

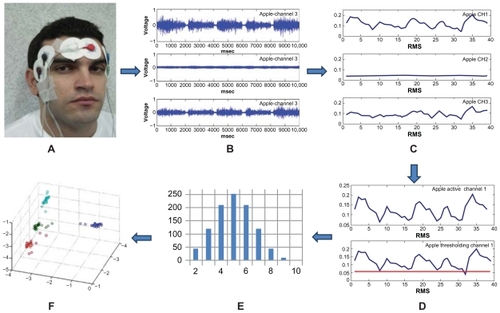

The procedure of designing the proposed interface is shown in Figure 1, which is divided into six main parts. Figure 1A shows subject preparation, site selection, and electrode placement. System setup, data acquisition, the signal-recording protocol for all participants, and the signal-filtering process are demonstrated in Figure 1B. Data segmentation, windowing, and feature extraction are carried out in Figure 1C. Threshold lines are applied to the features from the previous step (Figure 1D) to find the active features, which are more suitable for training and classification. The multipurpose interface is created by including all possible combinations of the existing facial gestures (Figure 1E). All obtained combinations are trained and classified (Figure 1F) to find the most accurate group of facial gestures and a better distribution of them.

Figure 1 Procedure of designing the proposed interface. A shows subject preparation, site selection, and electrode placement. System setup and data acquisition, signal recording protocol from all participants, and signal filtering process are demonstrated in B. Data segmentation, windowing, and feature extraction are carried out in C. The use of threshold lines to find the active features, which are more suitable for training and classification, is shown in D. The multipurpose interface is materialized by making all possible combinations from all existing facial gestures (E). All obtained combinations are trained and classified as illustrated in F to find the most accurate group of facial gestures and a better distribution of them.

Subject preparation, site selection, and electrode placement

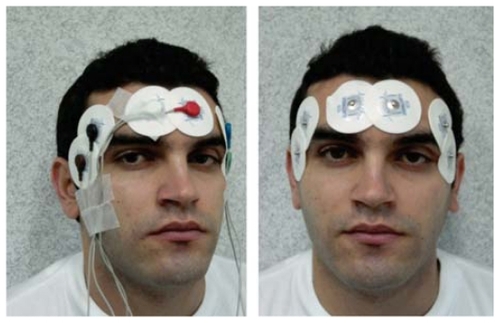

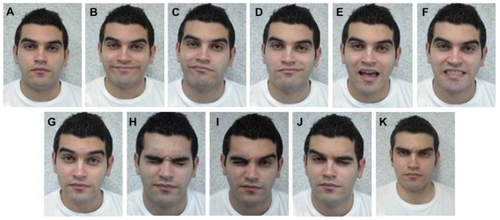

Ten healthy volunteers participated as the main data sources for this work. Figure 2 shows all facial gestures, which are performed by each participant. There are some points that must be considered prior to signal recording for all participants. Since the surface EMG method is used in this work, skin preparation is a significant task for getting a clear signal. An alcohol pad is used to clean any dust and sweat from the areas selected for signal recording to avoid unwanted artifacts and noise. Conductive and adhesive paste or cream is applied to the center of the electrodes before placing them on the skin. Since three recording channels are used, three pairs of electrodes are placed on the desired regions in a bipolar configuration. They are placed on the muscles involved in the chosen facial gestures/expressions.

Figure 2 All considered facial gestures in this work: (A) natural, (B) smiling, (C) smiling with right side, (D) smiling with left side, (E) open the mouth like saying “a” in “apple,” (F) clenching molar teeth, (G) pulling up the eyebrows, (H) closing both eyes, (I) closing right eye, (J) closing left eye, (K) frowning.

Table 1 indicates all facial gestures as well as their effective muscle(s)Citation35 and the recording channel(s) applied in the current work. According to Table 1, three pairs of electrodes must be placed so that all considered gestures are covered. Two pairs of electrodes (channel 1, channel 3) are located on the left and right temporalis muscles, the other pair is placed on the frontalis muscle, and one ground electrode is sited on the bony part of the left wrist. Each of these pairs is attached with a 2 cm interelectrode distance.Citation26,Citation27,Citation33,Citation34 After electrode placement and wire attachment, all wires are taped to the face to decrease any unwelcome wire movement and reduce the number of artifacts. Electrode placement and wire positions are indicated in Figure 3.

Table 1 Facial gestures used in this work

System setup and signal-recording protocol

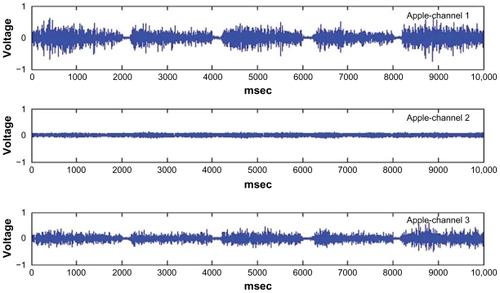

Surface EMG signals are recorded by the BioRadio 150 (CleveMed, Cleveland, OH). The sampling frequency is set to 1000 Hz. To avoid motion artifacts and power-line interference, the low cut-off frequency of the filter is set at 0.1 Hz and a notch filter is applied to remove the 50 Hz component of the signal. Before signal recording, all volunteers rest for 1 minute. Then, they are asked to perform the facial gestures (see Figure 2) for 30 seconds (five trial performances of 2 seconds each, with a 5-second rest between them to eliminate the effect of exhaustion). This procedure is repeated for all participants to record each facial gesture. Acquired signals are saved to a personal computer and are prepared for the next stage. An example of the acquired signal of gesturing “a” in “apple” is depicted in Figure 4.

Each recording channel has a different shape based on the activity of the muscles involved in the related gesture (Figure 4). Here, this gesture affects only recording channels 1 and 3. Channel 2 is inactive and its shape looks like a normal signal. Please note that the 20,000 ms of all signals that belong to resting stages have been removed and only the five 2000 ms active parts are placed next to each other to make continuous signals without rest, for a total length of 10,000 ms. All recorded signals are passed through a band-pass filter with a 30-to-450 Hz bandwidth to include the most essential spectrum of EMG signals.Citation27
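The removal of the resting stages described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that each 30-second recording starts with an active trial and alternates 2000 ms of activity with 5000 ms of rest, and the names `active_segments` and `recording` are hypothetical.

```python
FS_HZ = 1000       # sampling frequency stated in the text
ACTIVE_MS = 2000   # duration of one gesture trial
REST_MS = 5000     # rest between consecutive trials
N_TRIALS = 5       # trials per gesture

def active_segments(signal, fs_hz=FS_HZ):
    """Concatenate the active trials of one channel and drop the rests.

    Assumes the layout active, rest, active, ..., active (no trailing rest).
    """
    active = ACTIVE_MS * fs_hz // 1000
    rest = REST_MS * fs_hz // 1000
    out = []
    for trial in range(N_TRIALS):
        start = trial * (active + rest)
        out.extend(signal[start:start + active])
    return out

# Placeholder 30 s channel whose sample values equal their indices,
# which makes the selected positions easy to inspect.
recording = [float(i) for i in range(30000)]
continuous = active_segments(recording)
```

With these assumptions the result holds 10,000 samples, matching the 10,000 ms continuous signal mentioned in the text.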

Data segmentation and feature extraction

Filtered signals are segmented into nonoverlapping 256 ms time sections and the RMS value of each section is computed by Equation 1. RMS is a well-known feature for identifying the strength of muscle contraction, and it provides the maximum likelihood estimate of amplitude when the signal is modeled as a Gaussian random process.

$$\mathrm{RMS} = \sqrt{\frac{1}{N}\sum_{n=1}^{N} X_n^{2}} \tag{1}$$

Xn is the achieved raw signal and N is the length of Xn. Therefore, there are 39 features in each channel for each gesture.
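The windowed RMS feature described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function name `rms_features` is hypothetical, and a trailing partial window is assumed to be discarded, which is what yields 39 features from a 10,000 ms signal.

```python
import math

def rms_features(signal, fs_hz=1000, window_ms=256):
    """Return one RMS value per nonoverlapping window of the signal."""
    win = window_ms * fs_hz // 1000
    n_windows = len(signal) // win  # assumption: drop the incomplete last window
    features = []
    for w in range(n_windows):
        section = signal[w * win:(w + 1) * win]
        features.append(math.sqrt(sum(x * x for x in section) / len(section)))
    return features

# A constant placeholder signal of 10,000 samples (10,000 ms at 1000 Hz).
features = rms_features([1.0] * 10000)
```

At 1000 Hz a 256 ms window holds 256 samples, so 10,000 samples give 39 complete windows.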

Active feature selection

After feature extraction (RMS), these features are sieved to collect the active ones. Thus, three threshold lines (T1, T2, T3) are designed through Equation 2 from the three normal signal channels.

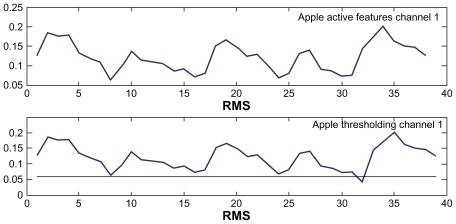

Therefore, the RMS features from each gesture in channel 1, channel 2, and channel 3 are compared with T1, T2, and T3, respectively. The features greater than the threshold are identified as active. As an example, the RMS features, threshold line, and active features from gesturing “a” in “apple” in channel 1 are shown in Figure 5.
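The comparison step can be illustrated as below. The threshold formula itself (Equation 2) is not reproduced in this text, so the thresholds are passed in as plain numbers; the values, channel keys, and the function name `select_active` are hypothetical.

```python
def select_active(rms_by_channel, thresholds):
    """Keep, per channel, only the RMS features above that channel's threshold."""
    return {
        channel: [value for value in values if value > thresholds[channel]]
        for channel, values in rms_by_channel.items()
    }

# Hypothetical RMS features for one gesture and hypothetical thresholds T1..T3.
rms_by_channel = {
    1: [0.10, 0.50, 0.05, 0.90],
    2: [0.02, 0.03, 0.01, 0.02],
    3: [0.40, 0.45, 0.08, 0.70],
}
thresholds = {1: 0.20, 2: 0.20, 3: 0.20}
active = select_active(rms_by_channel, thresholds)
```

In this made-up example channel 2 contributes no active features, which mirrors the inactive channel described for gesturing “a” in “apple.”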

Multipurpose interface design

The main goal of the present work is to design a multipurpose interface for various HMI systems such as controlling assistive devices and artificial limbs. As mentioned earlier, facial gestures are considered an interface in this work. The number of input control commands can be different for controlling various devices, machines, robots, and prostheses. For instance, five input commands can be enough (forward, backward, left, right, and stop) to control a wheelchair. In other devices, the input control commands can be more or less. In this work, eleven facial gestures were chosen and each plays an input command role. Various devices that need 2–11 input control commands are supported by this interface. In other words, the proposed interface is designed for several applications. This interface becomes practical by including all combinations of facial gestures and assigning them to different groups that include 2–11 gestures. The groups are trained and classified to find the best combination with the highest recognition accuracy in each group. For instance, if the supposed machine needs two movements, the best two facial gestures with the highest recognition accuracy are chosen. All combinations of all facial gestures with different numbers of gestures are made as shown in Equation 3:

$$C(n,k) = \binom{n}{k} = \frac{n!}{k!\,(n-k)!} \tag{3}$$

where n is the number of all facial gestures and k is the number of gestures in each group. Based on this equation, the number of all existing combinations in each group for each participant is indicated in Table 2. There are 1013 combinations available to each person.
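The group sizes can be checked with a short calculation. This sketch assumes n = 10, that is, the ten gestures other than the normal (neutral) signal, since the normal signal is excluded from the combinations; the variable names are illustrative.

```python
from math import comb

N_GESTURES = 10  # assumption: the normal signal is left out of the combinations

# Number of combinations for each group size k = 2..10.
counts = {k: comb(N_GESTURES, k) for k in range(2, N_GESTURES + 1)}
total = sum(counts.values())
```

Under this assumption the groups contain 45, 120, 210, 252, 210, 120, 45, 10, and 1 combinations, which sum to the 1013 combinations stated in the text.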

Table 2 Number of combinations in each group of facial gestures per participant

The normal signal is not counted as a facial gesture and is excluded from the combinations. In each application, the normal signal needs to be considered as a command input and there is no need to classify it apart from other facial gestures. The normal signal or rest condition does not produce any notable activity compared with other signals and it is defined as a neutral-input command, which is usually used to disable the devices. For an interface with nine control commands, there are 10 combinations of facial gestures available, and one of them has the highest classification accuracy (Table 2). Suppose that each facial gesture is represented by a number (Table 1). Table 3 shows all combinations of nine facial gestures.
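Enumerating the nine-gesture group can be sketched with the standard library. The labels 1 to 10 are assumed here to stand for the ten non-neutral gestures, and the combination code is formed by concatenating the labels in ascending order; both conventions are assumptions made for illustration.

```python
from itertools import combinations

labels = range(1, 11)  # assumed numeric labels of the ten non-neutral gestures

# Each combination is kept as a tuple; the string code is only for display,
# because concatenated labels are ambiguous once label 10 is involved.
nine_gesture_groups = list(combinations(labels, 9))
codes = ["".join(str(label) for label in group) for group in nine_gesture_groups]
```

Keeping the tuple alongside the code avoids having to parse a string such as 1235678910 back into individual gestures.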

Table 3 All combinations of nine facial gestures

Classification

In this step, all combinations must be trained and classified for each person (Figure 1F). The best combination with the highest recognition accuracy in each group is selected. Each combination contains the active RMS features of a different number of facial gestures. These features must be classified and separated from each other as much as possible. There are some concerns with EMG classification, such as sensitivity to electrode displacement and noticeable interruption in real-time control, and the classifier must cope with them all. In this work, Fuzzy c-means clustering (FCM) is employed for its capability, simplicity, and wide use in EMG processing and classification.Citation27,Citation33,Citation34 The most significant characteristic of FCM clustering is that it permits data to belong to two or more clusters, which makes it more flexible than other methods. Class labels provide a convenient direction throughout the training procedure, as they do in all supervised training methods. This idea led to the development of a new mode of FCM called “supervised Fuzzy c-means.” In this method, the number of cluster centers is also given before training. By utilizing the obtained active features as input data and adjusting other initial parameters, FCM can compute the location of each cluster center (Vi) and the membership value of each data point toward each cluster (Uik). This procedure is repeated until the optimized values of Vi and Uik are found. This technique has already proven its ability to recognize eight facial gesturesCitation33 and it is applied here to design the proposed interface.
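The alternating update of cluster centers (Vi) and memberships (Uik) can be sketched with the standard fuzzy c-means rules. This is not the authors' supervised variant, whose details are not given here: as a stand-in, the class labels are used only to initialize the centers at the class means. The fuzzifier m = 2, the stopping tolerance, the feature values, and all function names are assumptions.

```python
import math

def class_means(points, labels):
    """Initial centers: the mean feature vector of each labeled class."""
    groups = {}
    for x, y in zip(points, labels):
        groups.setdefault(y, []).append(x)
    return [[sum(col) / len(col) for col in zip(*groups[y])] for y in sorted(groups)]

def fcm(points, centers, m=2.0, max_iter=100, tol=1e-6):
    """Standard fuzzy c-means; returns the centers and the last memberships."""
    n, c, dim = len(points), len(centers), len(points[0])
    power = 2.0 / (m - 1.0)
    memberships = []
    for _ in range(max_iter):
        # Membership update: closer centers receive larger membership values.
        memberships = []
        for x in points:
            dists = [max(math.dist(x, v), 1e-12) for v in centers]
            memberships.append(
                [1.0 / sum((dists[i] / dists[j]) ** power for j in range(c))
                 for i in range(c)]
            )
        # Center update: mean of the points weighted by membership ** m.
        new_centers = []
        for i in range(c):
            weights = [memberships[k][i] ** m for k in range(n)]
            total = sum(weights)
            new_centers.append(
                [sum(weights[k] * points[k][a] for k in range(n)) / total
                 for a in range(dim)]
            )
        shift = max(math.dist(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, memberships

# Hypothetical three-channel RMS features for two well-separated gestures.
features = [[0.9, 0.1, 0.8], [1.0, 0.2, 0.9], [0.1, 0.9, 0.1], [0.2, 1.0, 0.2]]
labels = [0, 0, 1, 1]
centers, memberships = fcm(features, class_means(features, labels))
predicted = [row.index(max(row)) for row in memberships]
accuracy = sum(p == y for p, y in zip(predicted, labels)) / len(labels)
```

Each sample is assigned to the cluster with its largest membership, and the recognition accuracy is the fraction of samples whose assigned cluster matches the gesture label.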

Results

To design a multipurpose interface, flexible sets of control commands are required for various applications. The best combinations of facial gestures are assigned to different usages where 2–10 control commands are needed. All 1013 combinations obtained from each participant are trained and classified by FCM in the last stage, and the best combinations containing 2–10 facial gestures with the highest recognition accuracy are chosen. Two criteria are considered to find the best combinations: the first is the classification and recognition performance and the other is the way the gestures are distributed and discriminated in feature space. Each combination of facial gestures can be recognized by its gestures’ labels (Table 1). For instance, combination code 7652 includes the four gestures: closing eyes, gesturing “a” in “apple”; “No,” pulling up eyebrows; and smiling with right side. All classification results, averages, and SDs of all ten participants with different numbers of facial gestures were collected (Table 4).

Table 4 Final classification results of all participants with different number of facial gestures

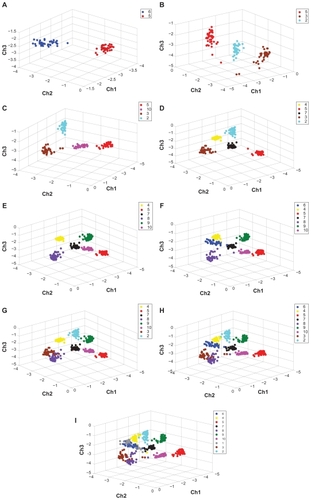

Combinations of two facial gestures

According to Table 2, 45 combinations can be made from just two facial gestures for each participant. After training, all of these combinations are distinguished perfectly with 100% recognition accuracy in classification. In other words, the results show that the robustness as well as the accuracy of all combinations is identical for all participants and the SD is 0. Therefore, based on the application, each of these 45 combinations can be considered as input commands. Table 4 provides the results of the best combinations for all participants. Most of these combinations are well distributed in feature space as well. Figure 6 illustrates the distribution of the combination code 56 of the first participant.

Combinations of three facial gestures

One hundred and twenty existing combinations for each participant were trained and classified. More than 95% of these combinations for each participant reached 100% classification accuracy. The average result and SD were 100% and 0, respectively. Nevertheless, the work was accomplished with the best combinations where the three classes were well discriminated. For the first participant, combination code 532 is chosen as the best among all and it is illustrated in Figure 6. Table 4 reports the results of the best combinations for all participants.

Combinations of four facial gestures

Two hundred and ten combinations of facial gestures were classified for all participants and once again 95% achieved 100% recognition accuracy. The average result and SD were 100% and 0, respectively. Combination code 53210 was chosen as the best one. Table 4 and Figure 6 represent the results of the best combination for all participants and the distribution of the best four facial gestures of the first volunteer, respectively.

Combinations of five facial gestures

Two hundred and fifty-two combinations made from five facial gestures were classified and combination code 45732 was picked as the best with 100% recognition accuracy. Table 4 indicates the results of the best combination for all participants, the average result, and the SD. Figure 6 shows the distribution of the best five facial gestures of the first volunteer.

Combinations of six facial gestures

Among all 210 existing combinations made from six facial gestures for each participant, the combination code 4578910 was chosen as the best with 98.03% average recognition accuracy. An SD of 0.5515 was achieved for all volunteers (Table 4). Figure 6 demonstrates the best distribution of the first participant with the combination code 4578910.

Combinations of seven facial gestures

One hundred and twenty different combinations were constructed with seven facial gestures for each volunteer. On average, the combination code 64578910 provided 97.16% recognition accuracy with an SD of 0.5517 (Table 4). Figure 6 shows this combination code for the first participant.

Combinations of eight facial gestures

Forty-five combinations were trained and classified. The best combination, code 234578910, reached an average recognition accuracy of 95.18% with an SD of 0.6217 (Table 4). Figure 6 shows the results for the first volunteer.

Combinations of nine facial gestures

Ten combinations were made from nine facial gestures for each volunteer. Table 5 shows the results of all combinations for the first participant. Combination code 1235678910 is the best combination with 93.1% recognition accuracy. Table 4 reports the final results for all participants with an average result of 93.02% recognition accuracy and an SD of 0.8084. Figure 6 shows the distribution of this combination for the first participant.

Table 5 All combination results for first participant

Combinations of ten facial gestures

There is just one combination of all facial gestures (without the normal signal) for each person. This group achieved 90.41% recognition accuracy with an SD of 3.1270 for all participants. Table 4 describes all results attained from all volunteers and Figure 6 depicts the distribution of the first participant’s data. To distribute the concentrated features better while condensing the highly scattered points, a nonlinear logarithmic transformation is applied to the features. As the number of facial gestures increases, the variation between participants’ recognition results increases, which causes an increase in SD. Table 6 shows the average of the best results from all facial gesture groups of all participants. The classification accuracy also becomes lower as the number of facial gestures increases, because the amount of data and the number of clusters both grow, so the probability of data overlapping between clusters increases.
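The effect of the logarithmic transformation mentioned above can be illustrated on a few values. The text does not state the base of the logarithm or how zero-valued features are handled, so the natural logarithm and the small offset `eps` used here are assumptions, as is the function name `log_features`.

```python
import math

def log_features(values, eps=1e-12):
    """Apply a natural-log transform; eps guards against log(0)."""
    return [math.log(value + eps) for value in values]

# Hypothetical RMS features spanning three orders of magnitude.
raw = [0.01, 0.1, 1.0, 10.0]
transformed = log_features(raw)
gaps = [b - a for a, b in zip(transformed, transformed[1:])]
```

On the raw scale the gaps between neighboring values grow from 0.09 to 9, while after the transform they are all close to ln 10, so tightly packed small values are spread out and widely scattered large values are pulled together.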

Table 6 The average of all results of all participants

Discussion

The main goal of this work is to design a multipurpose interface based on facial EMG gestures for HMI systems. It is accomplished and developed by employing eleven facial gestures and expressions. The obtained results show that this method produces an accurate interface in HMI systems. The main factors that differentiate this interface from others are: it contains more facial gestures, offers variety in choosing suitable facial gestures, is accurate, and can be used in various applications.

Table 7 compares the research on applied facial EMG gestures as the interface. The comparison of the results shows that the current work has achieved the best performance. In Ang et al, recognition of three facial gesturesCitation23 obtained just 94.44% recognition accuracy while this work achieved 100%. Rezazadeh et al recognized five facial gestures,Citation27 but attained 93.2% while 100% classification accuracy was achieved in this work. Furthermore, easy implementation, simplicity, high speed, and user-friendliness are advantages of this work compared with other methods. Apart from the above-mentioned points, the best combinations of facial gestures were found for each person in this work. Beyond their general utility, various combinations of facial gestures are available to each person and can be applied depending on the user’s condition. In this approach, the normal signal is not considered as a gesture and it is not involved in the declared combinations. However, it can still be used as a control command in this interface where the system needs to be disabled or put into a standby mode.

Table 7 Comparison of research in this field of study

While this interface can be used by everyone, it is specifically targeted at people with both lower and upper extremity impairments. For these people, it is one of the last opportunities for communication, and it can help to improve their quality of life.

Conclusion

A multipurpose interface based on facial expression and gesture recognition was designed and applied in different HMI systems. Eleven facial gestures were chosen and their EMG signals recorded in three bipolar configuration channels. This interface can be used to control any prosthesis, rehabilitation device, or robots where 2–11 control commands are needed. This work developed the earlier approaches by making all combinations of facial gestures for all participants and identifying the one with the highest recognition accuracy and the best data distribution. Facial EMG analysis methods applied in this work provide enough accuracy, speed, and simplicity for utility; therefore, sufficient conditions for device control are prepared. In real-time and online control, especially in HMI systems where there is a user on one side of this system (a majority are elderly or handicapped people), the given interface can cope with all existing limitations.

Disclosure

The authors report no conflicts of interest in this work.

Acknowledgments

The authors would like to thank the Centre of Biomedical Engineering, University of Technology Malaysia (UTM), Institute of Advanced Photonic and King Mongkut’s Institute of Technology (KMITL), Thailand for providing the research facilities. Ehsan Zeimaran has voluntarily participated and consented for using his photograph in this research work. This research work has been supported by UTM’s Tier 1/Flagship Research Grant, International Doctoral Fellowship (IDF), and the Ministry of Higher Education (MOHE) research grant.

References

- Salem C, Zhai S. An isometric tongue pointing device. Proceedings of CHI 97; March 22–27, 1997; Atlanta, GA. URL: http://www.sigchi.org/chi97/proceedings/tech-note/cs.htm Accessed August 25, 2011.

- Barreto AB, Scargle SD, Adjouadi M. A practical EMG-based human-computer interface for users with motor disabilities. J Rehabil Res Dev. 2000;37(1):53–63. PMID: 10847572.

- LoPresti EF, Brienza DM, Angelo J, Gilbertson L. Neck range of motion and use of computer head control. J Rehabil Res Dev. 2003;40(3):199–212. PMID: 14582524.

- Mason KA. Control apparatus sensitive to eye movement. US Patent 3-462-604. 1969.

- Nakanishi S, Kuno Y, Shimada N, Shirai Y. Robotic wheelchair based on observations of both user and environment. Proc IROS. 1999;99:912–917.

- Krueger TB, Stieglitz T. A naïve and fast human computer interface controllable for the inexperienced – a performance study. Conf Proc IEEE Eng Med Biol Soc. 2007:2508–2511. PMID: 18002504.

- Palaniappan R. Brain computer interface design using band powers extracted during mental tasks. 2nd International IEEE EMBS Conference on Neural Engineering; March 16–19, 2005; Arlington, VA. 2005:321–324.

- Rasmussen RG, Acharya S, Thakor NV. Accuracy of a brain computer interface in subjects with minimal training. Proceedings of the IEEE 32nd Annual Northeast Bioengineering Conference; May 15, 2006; Easton, PA. IEEE; 2006:167–168. ISBN 0-7803-9563-8.

- Wolpaw JR, Loeb GE, Allison BZ. BCI meeting 2005 – Workshop on signals and recording methods. IEEE Trans Neural Syst Rehabil Eng. 2006;14(2):138–141. PMID: 16792279.

- Guger C, Edlinger G, Harkam W, Niedermayer I, Pfurtscheller G. How many people are able to operate an EEG-based brain-computer interface (BCI)? IEEE Trans Neural Syst Rehabil Eng. 2003;11(2):145–147. PMID: 12899258.

- Rosenberg R. The biofeedback pointer: EMG control of a two dimensional pointer. Digest of Papers, Second International Symposium on Wearable Computers; October 13–20, 2003; Pittsburgh, PA. IEEE; 1998:162–163. ISBN 0-8186-9074-7.

- Fukuda O, Tsuji T, Kaneko M. An EMG controlled pointing device using a neural network. IEEE International Conference on Systems, Man, and Cybernetics; October 12–15, 1999; Tokyo, Japan. IEEE SMC ’99 Conference Proceedings. 1999;4:63–68.

- Fukuda O, Arita J, Tsuji T. An EMG-controlled omnidirectional pointing device using a HMM-based neural network. Proceedings of the International Joint Conference on Neural Networks; July 20–24, 2003. 2003;4:3195–3200.

- Alsayegh OA. EMG-based human-machine interface system. Proceedings of IEEE International Conference on Multimedia and Expo 2000; July 30–August 2, 2000; New York, NY. 2000;2:925–928. ISBN 0-7803-6536-4.

- Itou T, Terao M, Nagata J, Yoshida M. Mouse cursor control system using EMG. Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 2001; Istanbul, Turkey. 2001;2:1368–1369. ISBN 0-7803-7211-5.

- Weir R. Design of artificial arms and hands for prosthetic applications. In: Kutz M, editor. Standard Handbook of Biomedical Engineering and Design. New York, NY: McGraw-Hill; 2003:32.1–32.61.

- Chen X, Zhang X, Zhao ZY, Yang JH, Lantz V, Wang KQ. Multiple hand gesture recognition based on surface EMG signal. ICBBE 1st International Conference on Bioinformatics and Biomedical Engineering; July 6–8, 2007; Wuhan, China. 2007:506–509.

- Wheeler KR. Device control using gestures sensed from EMG. SMCia/03: Proceedings of IEEE International Workshop on Soft Computing in Industrial Applications, 2003; June 23–25, 2003. 2003:21–26.

- Kim J-S, Jeong H, Son W. A new means of HCI: EMG-MOUSE. IEEE International Conference on Systems, Man and Cybernetics; The Hague, The Netherlands. 2004;1:100–104.

- Tsenov G, Zeghbib AH, Palis F, Shoylev N, Mladenov V. Neural networks for online classification of hand and finger movements using surface EMG signals. 8th Seminar on Neural Network Applications in Electrical Engineering (NEUREL 2006); September 25–27, 2006; Belgrade, Serbia and Montenegro. 2006:167–171.

- Naik GR, Kumar DK, Palaniswami M. Multi run ICA and surface EMG based signal processing system for recognising hand gestures. CIT 2008: 8th IEEE International Conference on Computer and Information Technology; July 8–11, 2008; Sydney, Australia. 2008:700–705.

- Moon I, Lee M, Ryu J, Mun M. Intelligent robotic wheelchair with EMG-, gesture-, and voice-based interfaces. Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003); October 27–31, 2003; Las Vegas, NV. 2003;3:2453–3458.

- Ang LBP, Belen EF, Bernardo RA, Boongaling ER, Briones GH, Coronel JB. Facial expression recognition through pattern analysis of facial muscle movements utilizing electromyogram sensors. TENCON 2004: IEEE Region 10 Conference; November 21–23, 2004; Chiang Mai, Thailand. 2004;3:600–603. ISBN 0-7803-8560-8.

- Ferreira A, Celeste CW, Cheein AF, Bastos-Filho FF, Sarcinelli-Filho M, Carelli R. Human-machine interfaces based on EMG and EEG applied to robotic systems. J Neuroeng Rehabil. 2008;5:10. PMID: 18366775.

- Arjunan SP, Kumar DK. Recognition of facial movements and hand gestures using surface electromyogram (sEMG) for HCI based applications. 9th Biennial Conference of the Australian Pattern Recognition Society on Digital Image Computing Techniques and Applications; December 3–5, 2008; Glenelg, Australia. 2008:1–6.

- Firoozabadi SMP, Asghari Oskoei MR, Hu H. A human-computer interface based on forehead multi-channel bio-signals to control a virtual wheelchair. Proceedings of the 14th ICBME; February 13–14; Tehran, Iran. 2008:272–277.

- Rezazadeh IM, Wang X, Firoozabadi M, Hashemi Golpayegani MR. Using affective human machine interface to increase the operation performance in virtual construction crane training system: A novel approach. Journal of Automation in Construction. 2010;20:289–298.

- van den Broek EL, Lisý V, Janssen JH, Westerink JHDM, Schut MH, Tuinenbreijer K. Affective man-machine interface: Unveiling human emotions through biosignals. In: Biomedical Engineering Systems and Technologies. Communications in Computer and Information Science. Berlin, Germany: Springer Verlag; 2010;52(Pt 1):21–47.

- Clancy EA, Hogan N. Probability density of the surface electromyogram and its relation to amplitude detectors. IEEE Trans Biomed Eng. 1999;46(6):730–739. ISSN 0018-9294.

- Oskoei MA, Hu H. Support vector machine-based classification scheme for myoelectric control applied to upper limb. IEEE Trans Biomed Eng. 2008;55(8):1956–1965. PMID: 18632358.

- Subasi A, Kiymik MK. Muscle fatigue detection in EMG using time–frequency methods, ICA and neural networks. J Med Syst. 2010;34(4):775–785.

- Knaflitz M, Bonato P. Time-frequency methods applied to muscle fatigue assessment during dynamic contractions. J Electromyogr Kinesiol. 1999;9(5):337–350. PMID: 10527215.

- Hamedi M, Rezazadeh IM, Firoozabadi SMP. Facial gesture recognition using two-channel biosensors configuration and fuzzy classifier: A pilot study. International Conference on Electrical, Control and Computer Engineering (INECCE), 2011; June 21–22, 2011; Pahang, Malaysia. 2011:338–343.

- Hamedi M, Sheikh HS, Tan TS, Kamarul A. Surface electromyography-based facial expression recognition in bipolar configuration. J Comp Sci. 2011;7(9):1407–1415.

- Ekman P, Friesen WV. Facial Action Coding System. Palo Alto, CA: Consulting Psychologists Press; 1978.