Abstract

Background

Excellence in Research for Australia (ERA) rankings are given to academic journals in which Australian academics publish. This provides a metric on which Australian institutions and disciplines are ranked for international competitiveness. This paper explores the issues surrounding the ERA rankings of allied health journals in Australia.

Methods

We conducted a broad search to establish a representative list of general allied health and discipline-specific journals for common allied health disciplines. We identified the ERA rankings and impact factors for each journal and tested the congruence between these metrics within the disciplines.

Results

Few allied health journals have high ERA rankings (A*/A), and there is variability in the impact factors assigned to journals within the same ERA rank. There is a small group of allied health researchers worldwide, and this group is even smaller when divided by discipline. Current publication metrics may not adequately assess the impact of research, which is largely aimed at clinicians to improve clinical practice. Moreover, many journals are produced by underfunded professional associations, and readership is often constrained by small numbers of clinicians in specific allied health disciplines who are association members.

Conclusion

Allied health must have a stronger united voice in the next round of ERA rankings. The clinical impact of allied health journals also needs to be better understood and promoted as a research metric.

Introduction

There is a worldwide impetus to develop and measure metrics of research excellence.Citation1 For instance, research benchmarking activities have been established over the last 7 years in Australia,Citation2 the UK,Citation3 South Africa,Citation4 Europe,Citation5 and the USA.Citation6 Research metrics have been expressed in different forms in different countries. Despite this lack of standardization, research metrics have implications for universities in terms of their international academic standing, and their competitiveness for infrastructure and research funding. These metrics also have implications for individual researchers in terms of their academic status and credibility, their competitiveness for grants, and academic promotion.

In Australia, lobbying recently commenced for the Excellence in Research for Australia (ERA) ranking assigned to individual journals in the second ERA rankings, due to be published in 2012.Citation7 The history of ERA is described later in this paper. ERA objectivesCitation2 are to:

Establish an evaluation framework that gives government, industry, business, and the wider community assurance of the excellence of research conducted in Australia’s institutions

Provide a national stocktake of discipline areas of research strength and areas where there is an opportunity for development in Australia’s higher education institutions

Identify excellence across the full spectrum of research performance

Identify emerging research areas and opportunities for further development

Allow for comparison of Australia’s research nationally and internationally for all discipline areas.

Based on the initial 2010 round of ERA rankings for journals, we have already observed the impact within our own university, in terms of researchers’ perceived competitiveness for promotion, their success in applying for nationally competitive grants, and their academic standing. Allied health researchers in our School of Health Sciences have been advised that unless they publish in A* or A journals (the top two rankings of ERA journals) they may not be competitive for grants or academic promotion, despite the fact there may be no relevant journals in their discipline in these categories. This has raised concerns that if allied health researchers seek to publish in these high-ranked journals, they may well improve their individual academic standing and contribute to their university’s research ranking, but their work may not be read by the appropriate audience, and therefore it may have minimal impact.

Allied health is a complex and evolving collective of disciplines. It is widely accepted as an umbrella term referring to all health services which are not medicine or nursing.Citation8 Pharmacy and dentistry may or may not be included. Usually, the term “allied health” covers a range of health disciplines with different tasks, ranging from the physical therapies (such as physiotherapy, occupational therapy, and podiatry), to counseling services (such as social work and psychology), manufacturing services (such as orthotics and prosthetics), and diagnostic and support services (such as audiology, pathology, medical physicists, and imaging).Citation8 Because there is no standard definition for allied health, research can be published variably in discipline-specific, multidisciplinary, and health services journals. The discipline-specific journals are often academic vehicles sponsored by professional associations, and thus they can have varied financial, organizational, and academic supports, and readership and publication constraints.

Within the allied health research community, the main reason for publishing is to improve clinical uptake of practices that are evidence based.Citation8,Citation19 Thus, clinicians are often the primary research endpoint, and the findings from translational (applied) research would seem to be best disseminated through clinician-focused journals.Citation2,Citation9,Citation10,Citation19 However, as previously indicated, allied health academics in Australia are now being strongly encouraged to publish in journals which are valued by the academic community as having high publication metrics.Citation2 This potentially creates a tension for allied health researchers internationally, regarding whether they primarily seek academic recognition, or rather clinical impact which might change service quality and health outcomes. Consequently, the ERA ranking process for journals poses issues for Australian allied health researchers and clinicians. There is no one peak allied health academic body which could lobby effectively, so the ERA journal lobbying process raises questions about who is in a position to lobby for overall recognition of allied health research. Moreover, should lobbying be for an improved ERA rank for each discipline-specific allied health journal, or should there be a united Australian allied health voice which considers discipline-specific journals, as well as journals in which multidisciplinary health research might be published?

The importance of appropriately disseminating research information in a manner that will influence clinical practice decisions and change practice behaviors has been increasingly noted over the past decade.Citation9–Citation12 Such is the importance of improving the safety and quality of health care by understanding the most appropriate mechanisms of knowledge transfer (from research into clinical practice) that a whole new field of research has emerged (implementation science).Citation9–Citation12 As a consequence, the capacity of publication metrics to express appropriately how the research evidence was disseminated has been questioned in recent international publications.Citation13,Citation16

This paper investigates the ERA publication metrics in allied health research in six core allied health areas for the journals relevant to common fields of research.

Publication metrics

There is ongoing international debate about the best type and use of publication metrics.Citation13–Citation16 In Australia, there are currently two systems used to measure and rank the value of a journal title. The first system relates to the journal impact factor. This is an indicator used to rank, evaluate, and compare journals. Journal impact factors have been used internationally to assist authors when considering journals in which to publish and for libraries to identify potential quality journals to add to their collections. As a result of the work of Garfield and Sher in the early 1960s, journal impact factors have been updated annually and published in the Journal Citation Reports produced by Thomson Reuters since 1975.Citation17 Derived from an analysis of the data held in the Thomson Reuters citations, these have become a way of measuring the relative quality of a journal within a particular subject area or field. The journal impact factor is described as being “based on 2 elements: the numerator, which is the number of citations in the current year to items published in the previous two years, and the denominator, which is the number of substantive articles and reviews published in the same 2 years.”Citation15 Expressed as a calculation, the 2010 impact factors would be determined as follows.
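Following the definition quoted above, and taking 2010 as the current year, the calculation can be written as shown here. The symbol IF with a year subscript is shorthand introduced for this sketch and is not notation taken from Journal Citation Reports.

```latex
\[
\mathrm{IF}_{2010} =
\frac{\text{citations in 2010 to items published in 2008 and 2009}}
     {\text{substantive articles and reviews published in 2008 and 2009}}
\]
```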

The 3-year time frame for the calculation means that it takes time for new journals to be accepted and have an impact factor. Since the production of Journal Citation Reports in 2007, 5-year impact factors are starting to be provided. An issue for some disciplines is that impact factors are only available for the journals accepted to be in Journal Citation Reports, and the coverage of some fields is limited. It is recognized that there has been an effort to increase coverage in recent years, for instance the number of titles in the Journal Citation Reports for nursing science has gone from 32 titles in 2005 to 72 titles in 2009. As a result of the Thomson Reuters regional content expansion program, the coverage of Australian journals is increasing, with 52 new Australian journals across all subject categories added to the 2009 Journal Citation Reports.

The second metric is the one which is the subject of this paper. Whilst journal impact factors have been used internationally since 1975, changes have occurred in recent years, with individual countries looking to evaluate their research quality. In February 2008, the Australian government announced the new initiative for Australian research quality and evaluation, ie, ERA.Citation2 The Australian Research Council was given the responsibility by the Australian government for the ERA initiative to assess research quality within the Australian higher education institutions. The information gained from this exercise would indicate institutions and disciplines that were internationally competitive and identify emerging areas of research. By August 2010, the full ERA data collection process was completed and submitted for the evaluation of the research in the eight discipline clusters, by committees consisting of experienced and internationally recognized experts. The discipline clusters comprise physical, chemical and earth sciences, humanities and creative arts, engineering and environmental sciences, social, behavioral and economic sciences, mathematical, computing and information sciences, biological sciences and biotechnology, biomedical and clinical health sciences, and public and allied health sciences. The data collection process included the creation of a list of ERA-ranked journals. In formulating the list, the Australian Research Council worked with over 400 Australian and international reviewers. The draft list was then available for universities and other interested groups to review. The final listing categorizes journals by fields of research and divides each category into A*, A, B, or C rankings, with A* being the top 5% of the titles, A the next at 15%, B the next at 30%, and C the bottom at 50%. Journals may be assigned up to three fields of research codes.
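The band sizes described above can be illustrated with a short sketch. ERA ranks were assigned through expert review rather than computed from a score, so the function below is purely illustrative: the name `era_band` and the idea of a single quality-ordered list per field of research are assumptions made for this example.

```python
def era_band(position: int, total: int) -> str:
    """Map a 1-indexed position in a hypothetical quality-ordered list of
    `total` journals to an ERA band, using the stated band sizes:
    A* = top 5%, A = next 15%, B = next 30%, C = bottom 50%."""
    if not 1 <= position <= total:
        raise ValueError("position must be between 1 and total")
    # Integer arithmetic avoids floating-point issues at the band boundaries.
    scaled = position * 100
    if scaled <= 5 * total:
        return "A*"
    if scaled <= 20 * total:   # 5% + 15%
        return "A"
    if scaled <= 50 * total:   # 5% + 15% + 30%
        return "B"
    return "C"


if __name__ == "__main__":
    # In a hypothetical field with 100 journals, the cut-offs fall after
    # positions 5, 20, and 50.
    for pos in (1, 5, 6, 20, 21, 50, 51, 100):
        print(pos, era_band(pos, 100))
```

Under these assumptions, half of all journals in any field fall into band C, which is why the small number of A* and A places matters for small disciplines.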

Briefly, there is a third approach, ie, actual article citation counts. Listings of these can be found at the Institute for Scientific Information Web of Knowledge Database.Citation17 GarfieldCitation15 comments that: “The use of JIFs instead of actual article citation counts to evaluate individuals is a highly controversial issue.” Researchers gain recognition when their article is accepted for publication in a high impact journal, but there may be no correlation between the citations for the researcher’s article, which could even be zero, and the citations for the journal. Although actual article citation counts are not a metric of journal evaluation, they are worth mentioning as a tool for evaluating scientific integrity without use of journal impact factors or ERA ratings. Variations of this measure include the h index, which is used as a measure to evaluate an individual author’s performance. The h index is based on the number of papers published by the researcher and on how often these papers are cited in papers written by other researchers.
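As a concrete illustration of the h index, the sketch below uses its standard definition: the largest number h such that the author has h papers each cited at least h times. The function name and the citation counts are hypothetical and are not drawn from this study.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank      # the top `rank` papers all have >= rank citations
        else:
            break         # counts only decrease from here, so h cannot grow
    return h


if __name__ == "__main__":
    # Hypothetical author with five papers.
    print(h_index([10, 8, 5, 4, 3]))
```

One highly cited paper does not raise the h index on its own, so an author with citation counts of 25, 8, 5, 3, and 3 has a lower h index than the author in the example above.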

Dilemma of allied health research

For Australian allied health researchers, the ERA ranking of a journal is now becoming the most important consideration when selecting journals in which to publish. When collaborating with international authors, selecting those titles that rank high in ERA but also have a significant impact factor can be a challenge for some fields. Within the allied health journals, many of which appear in the journal citation report science category for rehabilitation, important differences between the two metrics can be observed. In Table 1, the 30 rehabilitation journals listed in a recently published analysis of rehabilitation research by Shadgan et alCitation18 (for which the Thomson Reuters Web of Knowledge database was used) are compared against their ERA rankings and 2009 impact factor rankings. The 100 top cited articles were published in the first eleven journals on the list.Citation18 These journals included: IEEE Transactions on Neural Systems and Rehabilitation Engineering, European Journal of Cancer Care, Archives of Physical Medicine and Rehabilitation, Journal of Rehabilitation Medicine, Journal of Neuroengineering and Rehabilitation, Physical Therapy, American Journal of Physical Medicine and Rehabilitation, Brain Injury, Journal of Orthopaedic and Sports Physical Therapy, Australian Journal of Physiotherapy, and Manual Therapy. Only one of these ranks in the ERA journal list as A and the others are either ranked as B or C. From the full list, there are only two A* and one other A ranked journal (Table 1).

Table 1 A list of ERA rankings, their fields of research, and impact factors for rehabilitation journals

This highlights the low ranking of premier rehabilitation journals, for example, Australian Journal of Physiotherapy (now called Journal of Physiotherapy) and Manual Therapy, which are provided to most physiotherapists as part of their professional association membership. Because these journals would have the greatest exposure to practicing clinicians, they are potentially most likely to influence clinical practice.

A specific ranked list for allied health sciences or rehabilitation is not available. This is in contrast with nursing, which has a specific ERA field of research category, and for which the Council of Deans of Nursing and Midwifery (CDNM, Australia and New Zealand) worked closely on providing information for the ERA process. Of the journal titles in the list originally prepared by the CDNM for the ERA process, all are either A* or A in ERA ratings, except for Health Expectations, which does not fall into the nursing category but is in the field of research category of public health and health services (field of research 1117). Other allied health sciences, such as occupational therapy, are mainly placed in the broad ERA field of research category of clinical sciences (field of research 1103), consisting of 1365 journals, as compared with the field of research category of nursing (field of research 1110) with 263 journals in total.

Methods

Data sources

From a range of fields of research, we collated peer-reviewed journal titles relevant to allied health research. The journal titles were then broadly divided into categories which reflected allied health discipline interests. The journal titles were collated independently by two allied health researchers (with different backgrounds, ie, general health sciences [OT], and speech pathology [KW]). The results were compared to ensure completeness. The final list was then discussed with the consultant liaison health librarian (JH). Where differences were identified, the researchers arrived at consensus through discussion. The current impact factor for each journal was then identified by searching the current journal home page, Web of Science, and/or Scopus search engines. We then sourced the current ERA ranking for each journal from the 2010 Australian Research Council ERA list.Citation2

Analysis

All data were entered into a Microsoft Excel® spreadsheet for analysis. We charted the relationship between impact factors and ERA rankings for those journals which had both metrics. We also reported on the percentage of journals which had one metric only. To overcome the issue that some distributions of discipline-specific impact factor values were not normally distributed, we reported the median impact factor (25–75th percentile) in each ERA field of research category. We looked for differences in impact factor values within each discipline, using Kruskal–Wallis one-way analysis of variance tests. Differences were significant at P < 0.05. For ease of reporting, we rounded the impact factor median and percentile values to one decimal place.
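The summary statistics and the Kruskal–Wallis test described above can be sketched in Python using only the standard library. This is not the spreadsheet analysis used in the study: the function names, the percentile interpolation method, and the impact factor values are all assumptions made for illustration. The critical value 5.991 is the chi-square 0.05 cut-off for two degrees of freedom (three groups).

```python
from statistics import median, quantiles


def summarize(values):
    """Return (median, 25th percentile, 75th percentile), each to one decimal place."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return round(median(values), 1), round(q1, 1), round(q3, 1)


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic, using average ranks and the tie correction."""
    pooled = sorted(v for g in groups for v in g)
    n = len(pooled)
    rank_of = {}
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        # Tied values occupy ranks i+1 .. j; each gets the mean of those ranks.
        rank_of[pooled[i]] = (i + 1 + j) / 2
        tie_term += (j - i) ** 3 - (j - i)
        i = j
    rank_sum_term = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_sum_term - 3 * (n + 1)
    return h / (1 - tie_term / (n ** 3 - n))


if __name__ == "__main__":
    # Hypothetical impact factors for three ERA categories (not study data).
    by_rank = {
        "A": [3.4, 2.9, 3.1, 2.6],
        "B": [2.3, 1.8, 2.7, 2.0],
        "C": [1.3, 0.8, 1.8, 1.1],
    }
    for rank, values in by_rank.items():
        print(rank, summarize(values))
    h = kruskal_wallis_h(list(by_rank.values()))
    print("H =", round(h, 2), "significant at 0.05:", h > 5.991)
```

Comparing H against a tabulated chi-square critical value keeps the sketch dependency-free; a statistics package would report an exact P-value instead.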

Results

Publications associated with six core allied health disciplines were considered in this paper, ie, evidence-based practice in allied health, allied health therapy research (reflecting mostly physiotherapy, occupational therapy, podiatry), medical radiation, nutrition, speech pathology, and social work.

Evidence-based practice in allied health

Of the 13 journals relevant to allied health, only two had impact factors: one ERA A ranked journal (Evidence-based Complementary and Alternative Medicine) and one ERA B ranked journal (Clinical Trials; see Table 2). The ERA A ranked journal had a slightly higher impact factor than the ERA B ranked journal. The remaining journals had been assigned ERA ranks without any measure of citations or readership. We undertook no statistical analysis on this group of journals because of the small number of journals with impact factors.

Table 2 A list of evidence-based practice journals publishing allied health research

Therapies

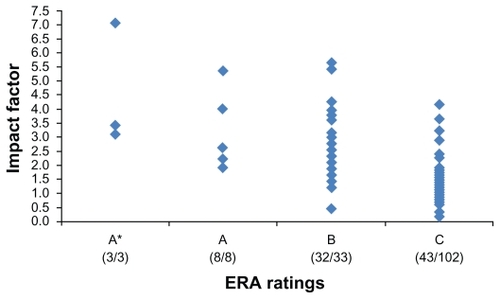

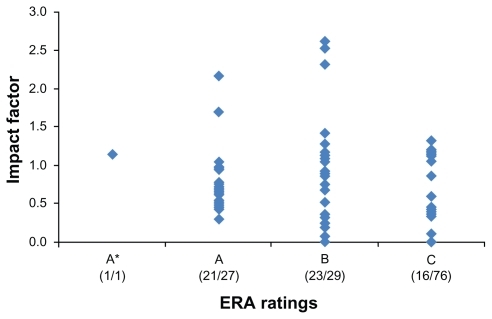

We identified 145 journals that published allied health therapy research, particularly physiotherapy, occupational therapy, and podiatry. Every journal had an ERA ranking (see Table 3). However, the percentage of ERA ranked journals with impact factors was 100% for A* and A ranked journals, 97% for B ranked journals, and 41% for C ranked journals. Figure 1 highlights the incongruence between ERA rankings and impact factors, particularly between A*, A, and B ranked journals. Figure 1 also highlights a broad overlap of lower impact factors in B and C ranked journals. There was a significant difference between the ranked impact factors across the ERA categories (P < 0.05), with the significant difference occurring between the A*, A, and B ERA categories. The median A* ERA journal impact factor was 3.4 (3.3 [25th percentile] to 5.2 [75th percentile]). For the A journals, the median impact factor was 2.6 (2.1 [25th percentile] to 3.0 [75th percentile]), for the B ranked journals it was 2.3 (1.8 [25th percentile] to 2.7 [75th percentile]), and for the C journals it was 1.3 (0.8 [25th percentile] to 1.8 [75th percentile]).

Figure 1 A comparison of the impact factors and ERA rankings for therapy journals (occupational therapy, physiotherapy, and podiatry).

Abbreviation: ERA, Excellence in Research for Australia.

Table 3 A list of journals which could publish allied health therapy research

Medical radiation

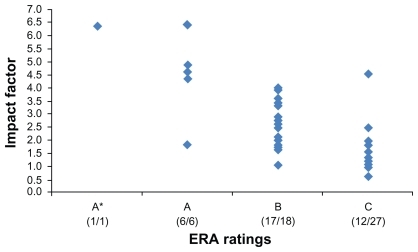

We identified 52 journals in the medical radiation field (see Table 4), all with ERA rankings. The ERA A* and A journals all had impact factors. Impact factors were documented for 94.0% of the B ranked journals and 44.4% of the C ranked journals. Figure 2 charts the relationship between impact factors and ERA rankings, highlighting the overlap between impact factors and ERA rankings in all classifications. The one A* journal (impact factor 6.3) was analyzed with the A ERA ranked journals when considering ranked impact factor differences across the ERA categories. The median A ERA journal impact factor was 4.7 (2.0 [25th percentile] to 3.3 [75th percentile]), the median B ranked journal impact factor was 2.6 (2.0 [25th percentile] to 3.3 [75th percentile]), and for the C journals it was 1.3 (1.0 [25th percentile] to 1.8 [75th percentile]). There was a significant negative trend in ranked impact factors across each of the ERA categories (P < 0.05).

Figure 2 Impact factors and ERA rankings of medical radiation journals.

Abbreviation: ERA, Excellence in Research for Australia.

Table 4 A list of medical radiation journals, their ERA ratings and impact factors

Nutrition

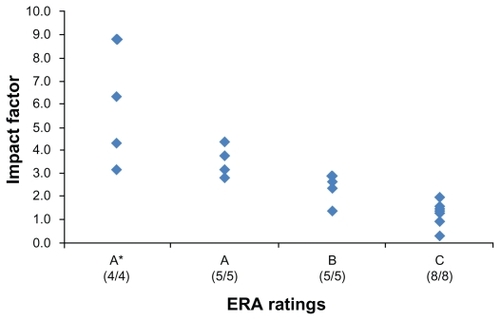

We identified 38 journals in this area, where there was clear congruence between impact factors and ERA rankings (see Table 5 and Figure 3). In this discipline, we found 16 journals with impact factors but no ERA ranking. For those journals with both an ERA ranking and an impact factor, apart from considerable overlap between the impact factors for the A* and A journals, the impact factors generally reflected the ERA rank. There was a significant negative trend in impact factors across the ERA rankings, with the median A* ERA journal impact factor being 4.3 (3.1 [25th percentile] to 6.3 [75th percentile]). For the A journals it was 3.1 (3.1 [25th percentile] to 3.7 [75th percentile]), for the B ranked journals it was 2.6 (2.4 [25th percentile] to 2.6 [75th percentile]), and for the C journals it was 1.4 (1.1 [25th percentile] to 1.7 [75th percentile]). For the journals without ERA rankings, the median impact factor was 1.5 (0.5 [25th percentile] to 2.2 [75th percentile]), suggesting that these journals should have mostly attracted C rankings.

Figure 3 Impact factors and ERA rankings of nutrition journals.

Abbreviation: ERA, Excellence in Research for Australia.

Table 5 A comparison of the ERA ratings and impact factors for nutrition journals

Speech pathology

We identified 48 speech pathology/language and communication journals, all with ERA rankings. In total, 100% of the A* journals, 83% of the A journals, 86% of the B journals, and 64% of the C journals had impact factors (see Table 6 and Figure 4). There was considerable overlap of impact factors in the different ERA categories, and there were no significant differences in ranked impact factors between the ERA categories. The median A* ERA journal impact factor was 1.3 (1.1 [25th percentile] to 2.3 [75th percentile]), for the A journals it was 1.1 (0.7 [25th percentile] to 1.6 [75th percentile]), for the B ranked journals it was 1.3 (0.7 [25th percentile] to 2.3 [75th percentile]), and for the C journals it was 0.9 (0.6 [25th percentile] to 1.3 [75th percentile]).

Figure 4 Impact factors and ERA rankings of speech pathology journals.

Abbreviation: ERA, Excellence in Research for Australia.

Table 6 A comparison of the ERA ratings and impact factors for speech pathology journals

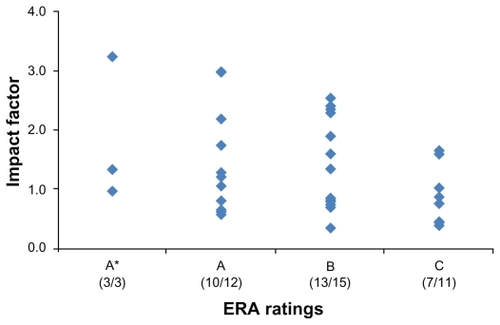

Social work

We identified 143 social work journals, all of which had ERA rankings. All the A* journals, 77.8% of the A journals, 58.9% of the B journals, and 21% of the C journals had impact factors. Table 7 and Figure 5 outline the associations between ERA rankings and impact factors. As anticipated from the data distribution, there was no association between ERA ranking and impact factor, and no significant difference in ranked impact factors between the ERA categories. The impact factor of the only A* journal was 1.1, the median impact factor of the A journals was 0.7 (0.5 [25th percentile] to 1.0 [75th percentile]), for the B journals it was 1.0 (0.4 [25th percentile] to 1.2 [75th percentile]), and for the C journals it was 0.6 (0.4 [25th percentile] to 1.1 [75th percentile]).

Figure 5 Comparison of impact factors and ERA rankings of social work journals.

Abbreviation: ERA, Excellence in Research for Australia.

Table 7 A list of the social work journals, their ERA rankings, and impact factors

Summary of results

Table 8 provides a summary of the analysis, and highlights the disparity within six selected allied health areas with respect to ERA rankings and impact factors. Table 8 reports the raw numbers of journals in each discipline, in each ERA category, the number of journals with impact factors, and the median impact factor for each category. Significant differences in impact factors are also reported as P-values. In five of the six categories, there were a large number of journals in which allied health research was published, under the appropriate field of research codes. Significant differences and trends in decreasing impact factors in the decreasing ERA categories were found only for therapies, medical radiation, and nutrition. There were insufficient numbers of journals with impact factors to make these calculations in the evidence-based practice area, and there were no differences in impact factors across ERA categories for speech pathology or social work journals. There were 16 journals (42% of total journals) in nutrition without any ERA rank, although their impact factors suggest an equivalency with ERA rank C.

Table 8 Summary of ERA categories, median impact factors, and significant differences

Discussion

This paper highlights the difficulties for allied health academics in establishing research excellence via publication in Australia. The limitations of this research are its focus on selected fields of research, only five clinical areas of allied health, and one general area (evidence-based practice). Other allied health researchers may have selected different journals for inclusion in the list or different fields of research. However, 439 journals were considered, the liaison librarian provided an independent view of the validity of journal inclusion, and the pattern of mismatch between impact factor and ERA ranking was relatively consistent. A strength of this research is that it is the first paper we know of to raise the need for a collaborative allied health voice on publication metrics, one that takes account of the small clinical and research allied health workforce and the allied health focus on knowledge translation. Our research highlights how little is known about who is publishing in allied health journals, why these journals are chosen, who is reading these publications, and what difference the research is making to clinical practice decisions.

We have previously reported the relatively small clinical workforce in allied health disciplines in Australia compared with medicine and nursing,Citation8 as well as the even smaller number of Australian allied health academics who are publishing their research.Citation19 Given these workforce constraints, the question arising from the ERA ranking process seems to be: Should Australian allied health researchers’ focus be on lobbying the Australian Research CouncilCitation2 for improved rankings of individual allied health journals, should their focus be on allied health journals as a collective, or should questions be asked about how to measure clinical impact and knowledge translation as elements of publication metrics?

Despite finding over 400 journals in which selected allied health disciplines might publish (either in discipline-specific research or multidisciplinary research), there are potentially many hundreds more journals, had we considered other allied health research areas.Citation3,Citation4 The relationship we report between ERA rankings and impact factors in allied health generally suggests that the higher the ERA ranking, the higher the academic impact of the journal is likely to be. However, not every journal had an impact factor and an ERA ranking, and the expected negative linear relationship between ERA categories and impact factors did not occur in every allied health discipline (eg, speech pathology and social work). This suggests that more negotiation is required to rank allied health journals appropriately in terms of their visibility, research quality, impact, and clinical importance for the 2012 round of the ERA process.

It is unknown what the impact of any allied health journal is on clinical practice, because there has been no research in this area. Therefore, there is no current metric to estimate impact in a clinical environment or to measure changed behaviors. With the ERA focus being on international benchmarking and competitiveness, it seems essential for allied health to estimate the extent of the clinical readership of allied health journals, and to measure the difference the current evidence-base makes to translation of knowledge into clinical practice.Citation8–Citation16,Citation19

Effective evidence (knowledge) translation is the key to improved health care processes and outcomes.Citation9–Citation12 It has been reported that translational (applied) research does not necessarily lead to multiple citations, because the clinicians who are digesting the research may not be researching or publishing themselves.Citation9–Citation12,Citation19 We contend that because of its clinical origins, allied health research aims to stimulate clinical change first and research directions second, whereas basic and bench research is about stimulating the next wave of preclinical research. Allied health clinicians may never cite a publication themselves, even though it may have had considerable impact on their practice, because so few are likely to publish. Therefore, the current publication metrics may not be the best way to promulgate information on “true” impact in allied health, ie, knowledge translation, improved health care practices, safety, and health outcomes.Citation2,Citation9–Citation12 With the present allied health ERA metrics, it does not seem possible to achieve both academic prestige and a measure of knowledge translation, because the allied health journals with the low citation rates reported in this paper are often the most widely read clinical allied health journals. This situation is in direct contrast with journals commonly read by general practitioners (ie, Medical Journal of Australia, and Australian Family Physician), which both attract ERA A rankings.Citation2

Conclusion

We provide three key messages from this research. The first is that there are inconsistent relationships between impact factors and ERA rankings in five selected allied health disciplines, and that many ERA rankings have been made in the absence of much information about journal size, readership, or likely impact. The second message is that, to assign the 2012 ERA rankings to journals appropriately, allied health disciplines need to present a united voice, which does not just lobby for individual research areas or discipline journals. This voice could comprise leading allied health researchers and clinicians who have an understanding of publication metrics, allied health tasks and roles, knowledge translation, and clinical uptake of evidence. This group could use the results presented in this paper as a starting point to identify discipline-specific allied health professional journals and key multidisciplinary journals which might publish allied health research and influence clinicians. This group could then lobby the Australian Research Council to address the incongruity between impact factors and ERA rankings, and to promote the journals which publish good quality papers and are likely to influence allied health clinical practice. The third message is that it is essential that publication and research excellence metrics go beyond academic impact, and include measures of translational research. Only by doing this will the true impact of allied health research be measured. Therefore, measures of knowledge translation are urgently required, and should be components additional to the current metric calculations.

We agree that excellence in allied health research must be a goal, and that it must be measurable. However, any metric should include the relevance of the research to health care, and include knowledge of who uses the research, why, and how. Users are not only other researchers, and therefore measures of knowledge translation and clinical impact are essential in comprehensive and high quality publication metrics, to integrate research and clinical imperatives.

Disclosure

The authors report no conflicts of interest in this work.

References

- Worldwide Universities Network. Realising the global university. 11 2007. Available at: http://www.wun.ac.uk/ Accessed April 8, 2011.

- Australian Research Council. The excellence in research for Australia (ERA) initiative. Available at: http://www.arc.gov.au/era Accessed April 8, 2011.

- Higher Education Funding Council for England. Research excellence framework. Available at: http://www.hefce.ac.uk/research/ref/ Accessed April 8, 2011.

- South African Medical Research Council. Media release on the occasion of the launch of the 2005/6 Annual Report of The South African Medical Research Council. Available at: http://www.mrc.ac.za/pressreleases/2006/9pres2006.htm Accessed April 8, 2011.

- Ruskoaho H. Research training for innovation – towards a framework for doctoral education, Finland as an example. Available at: http://www.researchexcellence.eu/documents/RES5_HeikkiRuskoaho.pdf Accessed April 8, 2011.

- National Science Foundation. Star metrics: New way to measure the impact of federally funded research. Available at: http://www.nsf.gov/news/news_summ.jsp?cntn_id=117042 Accessed April 8, 2011.

- The University of Sydney. Australian Research Council Excellence in Research Australia rankings. Available at: http://sydney.edu.au/research_support/performance/era/2012.shtml Accessed April 8, 2011.

- Turnbull C, Grimmer-Somers K, Kumar S, May E, Law D, Ashworth E. Allied, scientific and complementary health professionals: A new model for Australian allied health. Aust Health Rev. 2009;33(1):27–37. PMID: 19203331.

- Grimshaw JM, Shirran L, Thomas RE. Changing provider behaviour: An overview of systematic reviews of interventions. Med Care. 2001;39(Suppl 2):II2–45. PMID: 11583120.

- Grol R, Wensing M, Eccles M. Improving Patient Care. London, UK: Elsevier; 2005.

- Graham ID, Logan J, Harrison MB. Lost in knowledge translation: Time for a map. J Contin Educ Health Prof. 2006;26(1):13–24. PMID: 16557505.

- Lavis J, Ross S, McLeod CI, Gildiner A. Measuring the impact of health research. J Health Serv Res Policy. 2003;8(3):165–170. PMID: 12869343.

- Pontille D, Torny D. The controversial policies of journal ratings: Evaluating social sciences and humanities. Research Evaluation. 2010;19(5):347–360. Available at: http://www.ingentaconnect.com/content/beech/rev/2010/00000019/00000005/art00004 Accessed May 12, 2011.

- Sandström U, Sandström E. The field factor: Towards a metric for academic institutions. Research Evaluation. 2009;18(3):243–250. Available at: http://www.ingentaconnect.com/content/beech/rev/2009/00000018/00000003/art00009 Accessed May 12, 2011.

- Garfield E. The history and meaning of the journal impact factor. JAMA. 2006;295(1):90–93. PMID: 16391221.

- Rizkallah J, Sin DD. Integrative approach to quality assessment of medical journals using impact factor, eigenfactor, and article influence scores. PLoS One. 2010;5(4):e10204. PMID: 20419115.

- Web of Knowledge. Available at: http://wokinfo.com/ Accessed April 18, 2011.

- Shadgan B, Roig M, Hajghanbari B, Reid WD. Top-cited articles in rehabilitation. Arch Phys Med Rehabil. 2010;91(5):806–815. PMID: 20434622.

- Grimmer K, Kumar S. Allied health task-related evidence. Journal of Social Work Research and Evaluation. 2005;6(2):143–154.