Abstract

For over a decade, organizations have attempted to include the measurement and reporting of health outcome data in contractual agreements between funders and health service providers, but few have succeeded. This research explores the utility of collecting health outcomes data that could be included in funding contracts for an Australian Community Care Organisation (CCO). An action-research methodology was used to trial the implementation of outcome measurement in six diverse projects within the CCO using a taxonomy of interventions based on the International Classification of Functioning, Disability and Health. The findings from the six projects are presented as vignettes to illustrate the issues around the routine collection of health outcomes in each case. Data collection and analyses were structured around Donabedian’s structure–process–outcome triad. Health outcomes are commonly defined as a change in health status that is attributable to an intervention. This definition assumes that a change in health status can be defined and measured objectively; the intervention can be defined; the change in health status is attributable to the intervention; and that the health outcomes data are accessible. This study found flaws with all of these assumptions that seriously undermine the ability of community-based organizations to introduce routine health outcome measurement. Challenges were identified across all stages of the Donabedian triad, including poor adherence to minimum dataset requirements; difficulties standardizing processes or defining interventions; low rates of use of outcome tools; lack of value of the tools to the service provider; difficulties defining or identifying the end point of an intervention; technical and ethical barriers to accessing data; a lack of standardized processes; and time lags for the collection of data. In no case was the use of outcome measures sustained by any of the teams, although some quality-assurance measures were introduced as a result of the project.

Introduction

The use of the term “health outcomes” has increased exponentially since the early 1980s as a result of its broad range of applications in a number of fields of health and health services research. Health outcomes have evolved to encompass almost every aspect of health or by-product of the health service delivery process, from quality of life to mortality, health service utilization, and hospital readmission rates.Citation1 Health outcomes are perceived to have several important functions in the delivery of health care, including monitoring the success of the health service at a population levelCitation2,Citation3 and enabling health service providers to demonstrate their impact at a clinical level.Citation4 This paper reports on the attempt by a large Australian community care organization (CCO) to introduce the routine reporting of health outcomes that could ultimately be included in purchasing contracts between the CCO and the funder.

The Sunshine Statement of the Australian Health Ministers’ Advisory Committee defines a health outcome broadly as: “a change in the health of an individual, or a group of people or population, which is wholly or partially attributable to an intervention or series of interventions.”Citation5 Notable variations from this definition include one by Best, who defines health outcomes from the perspective of individuals to include aspects of comfort, accessibility, and appropriateness of care, highlighting the role of the non-health aspects of care in achieving an outcome as well as the importance of including all stakeholder perspectives in health outcome measurement.Citation6 McCallum proposed that health outcomes should not necessarily be dependent on an intervention and that a change in health status can arise from an intervention or “lack of intervention, on the natural history of a condition.”Citation7

In 1981, the World Health Organization released the Global Strategy for Health for All by the Year 2000,Citation8 which resulted in the establishment of a range of health goals and targets.Citation9 In Australia, the focus on improving health outcomes became a mechanism for the allocation of health resources through the Australian Health Goals and Targets program. In response, the states adopted the Health Goals and Targets approach, which led to an expectation that health service organizations should be accountable for their contribution to health outcomes.

The challenges of accurately monitoring the Australian Health Goals and Targets at a national level were acknowledged by the Australian health ministers in 1992:

Achieving change will take time, not least because of the paucity of useful measures of health outcome which could serve as a basis for funding decisions.Citation9

In 1995, the National Health Goals and Targets were renamed National Health Priority Areas due to a number of difficulties implementing the Health Goals and Targets. Specifically, there was a lack of national reporting requirements, too many indicators, and a lack of emphasis on treatment and the ongoing management of disease.Citation10

The National Health Goals and Targets program illustrates the health outcome approach to resource allocation at a national level. The program was credited with increasing the emphasis on health outcomes at state and national levels. However, the Commonwealth lacked clear direction for implementation and accountability to achieve the desired outcomes and accurately monitor their progress.

New South Wales (NSW) was the first state in Australia to move to outcome-based funding, introducing the NSW Health Outcomes Initiative in 1991. This initiative built on the National Health Goals and Targets to identify specific priority areas that were proposed as an “outcomes based accountability mechanism.”Citation11 A NSW Health discussion paper on the use of targets for health outcomes identified several barriers to their collection, including inadequate consultation; poor assessment of information needs; and a lack of clear accountability for the achievement of the specified goals and targets.Citation11

NSW funded a wide range of projects under outcome-based funding; however, it ultimately moved away from an emphasis on funding health outcomes to performance indicators due to difficulties linking government activities and health-related outcomes.

The health of the community is influenced by many factors which predominantly fall outside the sphere of influence of the health system […]. This makes the development of meaningful linkages between government activities and performance at this level difficult.Citation12

At a network level, the Divisions of General Practice attempted to introduce outcome-based funding in 1996.Citation13 The Divisions of General Practice were regionally based networks designed to support GPs working together to improve the quality and continuity of care, meet local health needs, promote preventive care, and respond to community needs.Citation14 The project had the following aim:

A move to outcomes based funding would entail a program approach based on defined and agreed outcomes in a number of key areas in which change could be measured over time.Citation13

A review of the proposed outcome indicatorsCitation15 found that a lack of information technology infrastructure in Australian general practice was a barrier to the collection of outcome-based indicators. For instance, many of the indicators required the identification of patients with certain characteristics, such as a diagnosis of diabetes or a particular age and sex. This information was necessary both to form a denominator for change in rates of the condition and to help the identification of target populations for the delivery of preventive services such as immunization or screening. The low rates of computerization of general practice at the time of this report limited the introduction of these initiatives. Other challenges identified included the lack of appropriately tested clinical practice guidelines and indicators; the difficulties attributing outcomes, such as hospitalization, to general practice interventions; and limitations of data quality and accuracy at all levels. The few indicators that were endorsed were those with clear relationships between the outcome and the indicator, such as rates of cervical screening and immunization.

In 1999, the Commonwealth Department of Health and Aged Care released an implementation guide for the 21 divisions piloting the outcome-based funding approach.Citation16 In comparison with the highly prescriptive outcomes and performance indicators of the 1997 consultation phase, the implementation guide was broad, and allowed the Divisions of General Practice a great deal of autonomy in the delivery of their care.

These examples highlight a number of technical and practical barriers to the use of health outcomes or outcome-related performance-indicator data at national, state, and organizational levels. The enthusiasm for the collection of health outcome data appears to have preceded the information systems, appropriate measures, indicators, and technical skills necessary to facilitate the routine collection of outcome- or process-related information.

To date, there is still little evidence of the widespread, effective uptake of routine health outcome measures in clinical settings by community-based health care providers. A recent systematic review of the barriers and facilitators of routine measurement of health outcomes by allied health practitionersCitation4 identified several barriers to the routine collection of health outcomes. These included knowledge, educational, and perceived barriers to the collection of outcome data; support and priority for outcome use; practical considerations; and patient considerations. Most of the studies reported within this review were discipline-specific, rather than organizationally focused.

The measurement of health outcomes by health services continues to be promoted at the highest policy levels in several jurisdictions. For instance, the recent United Kingdom Department of Health White Paper Equity and Excellence: Liberating the NHS claims that:

There will be a relentless focus on clinical outcomes. Success will be measured, not through bureaucratic process targets, but against results that really matter to patients.Citation3

The routine collection of patient-reported outcome measures forms part of the NHS’s standard for acute careCitation17 for the purpose of quality improvement. Several other health providers and organizations are adopting patient-reported outcome measures for quality, audit, benchmarking, and accountability purposes.Citation17,Citation18

To date, no published studies have systematically examined the barriers and facilitators to the routine collection of outcome data at a whole community health organization level.

Background to the CCO

The CCO is the major provider of health and disability services in the region, which had a population of approximately 330,000 people at the time of undertaking the study. The services delivered by each health service program are summarized in Table 1. Over 1000 staff deliver services from approximately 70 different sites across the region.

Table 1 The community care organization service description

Each program employs a range of health service providers who deliver a variety of services to defined target groups. For example, the Alcohol and Drug Program (ADP) employs doctors, case workers, and nurses to deliver interventions designed to minimize the potential harm arising from alcohol and drug use. The interventions include methadone dispensing, withdrawal services, medical services, counseling, and case management. As well, the ADP is responsible for the provision of information and support for other service providers in the community whose clients may be at some form of risk from alcohol or drug use. In contrast, the staff employed by the Rehabilitation Program include physiotherapists, speech pathologists, psychologists, occupational therapists, and exercise physiologists. They provide intensive rehabilitative services within the hospital, some outpatient care, including vocational rehabilitation and driver retraining, and equipment and aids. These two examples highlight the widely varied roles of the health service programs.

Legislative reforms introduced in 1996 saw the administrative separation of the CCO (a health service provider) from the purchaser, the local health department. The CCO and regional hospital became part of a statutory board that employed the chief executive officer who managed the CCO. The health department was the major purchaser of services from the CCO, and therefore its major source of revenue. Consequently, most of the activities of the CCO as a health care-providing organization were stipulated by contractual agreements with the health department through the CCO Services Board.

The accountability structure of the CCO is based on a matrix model. Patient care is delivered by individual health care providers (or teams) who report to team leaders. Team leaders are responsible for their team outcomes, and report via a program director to the chief executive officer. The chief executive officer is responsible to the local health board for delivery of the contracted outputs and outcomes across all the programs.

The move towards health outcome-based purchasing was made explicit in the organizational vision of the CCO, which aimed to replace the historical focus on throughput as a measure of health-service accountability with the inclusion of health outcomes in its purchasing contracts. This requirement was reflected in the purchase contract between the CCO and the regional health department, which emphasized accountability for the achievement of specific service outputs and agreed customer outcomes.

These statements set out to change the entire basis of health service accountability in the CCO. However, there was no guidance as to what health outcomes were important or how they were to be measured or reported. The purchaser relied on the knowledge within the CCO of their services and health service structures for the identification of health outcomes that could be used in purchasing contracts. This study reports on the approach used to implement the routine use of health outcome measures in the CCO, and the implications for this type of study in a community setting based on data collected between 1998 and 2001.

Methods

A mixed-methods approach was used to implement and evaluate the health outcome approach across the CCO. The overarching structure for the implementation was action research, which combines the processes of data gathering and interpretation with actionCitation19 to intervene in social systems to “solve problems” and “improve conditions.”Citation20 An important principle of action research is that it involves stakeholders intimately in the research process, as this ensures maximum ownership and understanding of the problems and commitment to solutions, which is vital in facilitating change. For brevity, this paper reports on the overall structure of the implementation across the CCO.

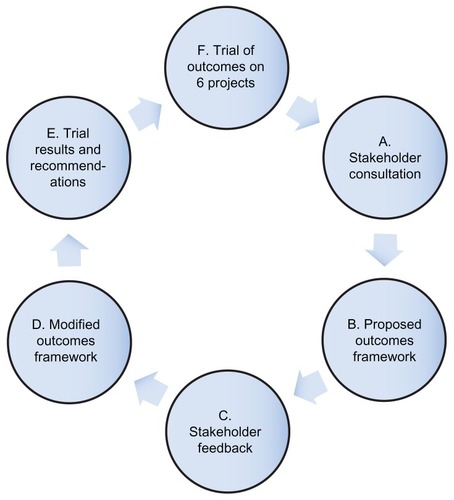

An initial consultation phase included a review of the literature, discussions with other agencies, and extensive formal meetings with health service managers and providers from the CCO. The feedback from the consultation phase was used to develop the first-draft outcome framework. This was piloted and reviewed in consultation with stakeholders, resulting in the framework presented in this study.

The outcome framework was based on being able to define reproducible components of the care process. The widespread use of standard care plans and clinical pathways within the service suggested that several services had a best-practice way of delivering health care within the CCO. The initial framework was based on the Donabedian concept of linking structures, processes, and outcomes,Citation21 which was implemented through clinical pathways or standard care plans. This framework was based on the premise that if standardized processes were adhered to, they would serve as a proxy for the achievement of the health outcome.

The framework was circulated to all programs for provider feedback. Several services could not use this framework because they lacked reproducible processes, particularly those services whose primary roles were to optimize client participation, such as the Disability Program and the ADP. In these cases, the reproducible processes were based on client assessment or the way the client moved through the health service, rather than the way the treatment was delivered. Where best-practice processes did exist, there was little published evidence to support them; it was therefore not clear whether the intervention would actually lead to the desired outcome.Citation22 Indeed, the providers reported that the care plans were based more on their own experience than on published evidence.

To overcome the problems identified, the interventions were categorized according to the International Classification of Functioning, Disability and Health (ICF),Citation23 with processes and outcomes linked to each. The framework was then re-presented to each program in the context of the flowchart described in Figure 1. Using the ICF to describe a continuum of health service types, rather than simply seeing all health services from within a homogeneous framework, led to widespread acceptance of the framework and an increased understanding of what the project was trying to achieve. These interventions were subsequently amalgamated into four main service types: restorative, rehabilitative, integrative, and preventive services.

Table 2 Continuum of health service types within the community care organization

The data-collection process involved identifying a project and consulting with providers and in some cases patients to define what the processes and outcomes of care should be, and to identify ways of measuring these. Projects were selected on the basis that each health service program and each level of the ICF framework was represented.

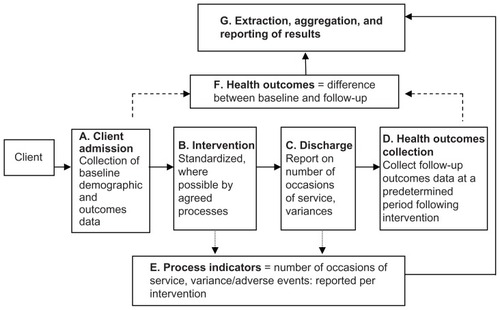

Each project had a key contact person. Staff were trained in the respective outcome measures for their service, and at the end of each project were consulted about the implications of the project for their practice. Due to the variation in the types and nature of the interventions, multiple methods of data collection were required, and these were tailored to the individual needs of the intervention. Figure 2 describes the steps undertaken to collect the outcome and process data.

In an attempt to achieve consistent reporting, several allied and community health classification systems were investigated, including International Classification of Disease, version 10; National Allied Health Casemix Committee Indicators for Intervention; the Department of Veterans’ Affairs item numbers; and the Community Health Information System. At the time, none of these was sufficiently developed or appropriate to meet the requirements of the project.Citation24

For each project, detailed process and outcome data were recorded, and key stakeholders within each program were interviewed at the end of the project to ascertain their perceptions of the utility of the tools, the processes of data collection, and issues pertaining to the routine collection of outcome data within their setting. Ethics or quality council approval was obtained for each project individually in accordance with the ethics and research governance guidelines of the CCO.

Each of the projects resulted in a detailed report that was provided to the project stakeholders and the CCO executive. The purpose of this paper is to examine the issues faced by all of the projects to explore empirically the barriers to the routine collection of health outcome data in a clinical setting. Thus, the projects are presented descriptively as vignettes illustrating the key points regarding the implementation of the outcome approach in a range of different settings. The data are further analyzed using the data-collection framework (Figure 2) as a thematic template to synthesize the issues around the routine collection of health outcome data across the projects at each stage of the data-collection process. The themes identified across all of the projects are summarized in Table 3.

Table 3 Summary of health outcome projects

Results

Six projects were chosen in consultation with program directors and their quality-improvement teams or middle managers. Table 3 presents the projects that were considered or commenced as part of the health outcome project and summarizes the learning regarding the collection of health outcome data for each of the vignettes.

Vignette one: pediatric dental outcomes

The pediatric dental outcome project did not commence. However, the narrative illustrates some of the difficulties in both identifying appropriate health outcome measures and determining a reasonable time frame in which the outcomes can be measured.

Children under the age of 5 years with dental caries generally require heavy sedation or a general anesthetic for any oral procedure. This project was selected because the senior dentist was interested in the outcomes of the treatment of dental caries for children under sedation. This appeared to be a clearly defined intervention with relatively reproducible processes: oral surgery under sedation or anesthesia, for which the outcome was the restoration of oral function through the treatment of dental caries.

The major limitation was the identification of appropriate outcome measures that could be captured within the time period of the project. The obvious outcomes were the cessation of pain and restoration of oral function in the children. However, the dentists reported that most pediatric caries are asymptomatic. The most popular oral health tool at the time was the Oral Health Impact Profile;Citation25 however, in its early format it was not appropriate for children, due to the adult concepts and constructs it includes. The dentists reported that the important outcomes of oral health status at age 5 years were the oral functioning of the child once they had their secondary dentition at around the age of 12 years; however, this time frame put it well outside the scope of this project. Short-term indicators, such as the recurrence of dental caries over a 12-month period, whilst useful for the dental service, related more to dental education than the performance of dental surgery.

The inability to determine or measure appropriate outcomes meant that the project did not commence. This project highlighted the difficulty in identifying clear health outcome measures specifically for this intervention and population group, and the difficulty in identifying outcome measures that could be collected in a suitable time period for reporting.

Vignette two: innersole study – integrated health care program

Innersoles are dispensed by podiatrists to reduce pain, improve function, and ideally reduce the amount of ongoing podiatric care required. The innersole project used a validated, disease-specific, health status questionnaire as well as innersole-specific questions to measure the adherence to and health outcomes of the provision of innersoles.Citation26,Citation27 The innersole project was the first of the outcome projects implemented, and it was used to pilot the system of health outcome data collection and to test the relationship between process and outcome measures.

Podiatrists were asked to administer the outcome questionnaire over a 5-month period to all patients requiring innersoles. Clients were given a survey when they received the innersoles and sent a follow-up questionnaire by mail 3 months later. A retrospective file audit was used to determine the number of occasions of podiatry service received by the client after the receipt of innersoles. As there was no database to identify clients who received specific interventions such as innersoles, the senior podiatrist kept a paper record of eligible clients during the intervention period.

Only 27 out of a potential 150 clients (18%) were recruited into the trial by podiatrists. Follow-up was achieved with 20 (74%) of those clients after extensive mail and telephone contact. No client refused to participate in the study; however, podiatrists reported that they forgot to ask the clients to participate, did not have enough time, or perceived that the client would not be able to answer the questions due to language or eyesight problems, so decided to exclude them.
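The recruitment and follow-up percentages above follow directly from the reported counts. The short sketch below reproduces that arithmetic; the function name `percentage` and the combined "overall" figure (clients followed up as a share of all eligible clients) are illustrative additions, not measures reported by the study.

```python
def percentage(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest whole number."""
    if whole <= 0:
        raise ValueError("whole must be a positive count")
    return round(100 * part / whole)


# Counts reported for the innersole project.
eligible = 150      # potential clients during the intervention period
recruited = 27      # clients recruited by podiatrists
followed_up = 20    # recruited clients with a completed follow-up

recruitment_rate = percentage(recruited, eligible)     # recruited / eligible
follow_up_rate = percentage(followed_up, recruited)    # followed up / recruited
# Derived here for illustration only: complete data as a share of all eligible clients.
overall_rate = percentage(followed_up, eligible)

print(f"Recruitment: {recruitment_rate}%")
print(f"Follow-up:   {follow_up_rate}%")
print(f"Overall:     {overall_rate}%")
```

Read together, the two reported rates imply that complete outcome data were available for only a small minority of the clients who were eligible for the intervention.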

The scores indicated an overall improvement in foot-health status, as did the innersole-specific questionnaire; however, the changes in scores were difficult to interpret, due to the small sample size and a lack of comparative or normative data. On examination of data from some of the individual cases, it was apparent that the changes should be interpreted with caution. For example, one client showed substantial improvements in every domain, but reported that she never actually wore her innersoles. Instead, she had undergone surgery to correct the foot problems caused by rheumatoid arthritis, the surgery being responsible for her improved foot-health status.

The podiatrists did not find the quantitative data from the questionnaire useful; however, they were able to respond to the qualitative feedback received from clients, such as dissatisfaction or discomfort with the innersoles. Podiatrists do not normally receive this feedback, given that many of the clients have already been discharged within 3 months of the receipt of their innersoles.

None of the podiatrists looked at the questionnaires that were completed by the clients. This was in part because the data need to be processed by customized software to provide summary scores, and the podiatrists did not have access to an information system that could do this during the clinical intervention.

The measurement of health service processes, such as the number of occasions of service and adherence to the “innersole care plan,” was captured through an audit of patient files. The quality of the routine data reported in patient files varied greatly. For instance, dates of the intervention were required to determine length of stay and service intensity, but were not always recorded. The innersole care plan was present in only one file of the 27 clients who received innersoles. The small sample size resulting from the poor rates of dissemination of the questionnaire created difficulties with analysis, interpretation, and generalizability of the findings.

The results of this project were not reported to the purchaser, nor were any of the process indicators included in purchasing contracts. Instead, the results were used to improve the practice of the podiatrists by including client follow-up at 3 months as part of the episode of care.

Vignette three: client care planning

The ADP introduced a new standardized client planning process involving a detailed social, physical, and psychological assessment. The new plan included the documentation of a client care plan that described individual client goals and captured achievement against these goals. This was to be the main source of outcome information.

This project aimed to determine whether there was a relationship between the achievement of client goals (outcomes), the completion of the client planning process, and the numbers of occasions of service (processes). This project involved three quarterly retrospective file audits of 100 consecutive client files. Providers received extensive training in the use of the new client planning system, were informed of the file audit in advance, and were consulted regarding the auditable items. The results of each audit were fed back to providers.

At the first audit, the client care plan was complete in only 10% of files, increasing to 30% at the second audit. The lack of completed care plans meant that client goals and outcomes were reported in an ad hoc way, if at all, making data collection for this project difficult. The forms were modified in response to staff concerns and reintroduced with training by the program managers. Staff reported that they did not like the modified forms.

The third audit demonstrated no improvement in key areas, such as the use of the care plan, and the results had worsened in some fields. Providers reported that completion of the care planning documentation was a waste of time. One provider said, “What’s the point – who ever looks at them?” Providers differed in their perception of the role of the care plan. For instance, nursing staff working in drug-withdrawal services felt that the goals of their care were obvious (ie, to support drug withdrawal and harm-minimization) and the processes of achieving those outcomes were essentially the same for all clients. They felt that the client care plan was most necessary at the point of client discharge and handover, because clients often had social needs, such as housing requirements. However, the nurses said that although they normally attempted to address the clients’ social requirements at discharge, it was not their role to do this. The nurses felt that the documentation of these issues in the client care plan would formalize their changed nursing tasks, and this would create dispute over their roles. The nurses acknowledged that inconsistent documentation at discharge meant that client needs were addressed in an ad hoc way.

Managers perceived that the client care plan would provide clients with clear goals and expectations of the care that they received from the service. This in turn would provide greater structure to the provision of client care by working towards and achieving client goals in a systematic way. This study showed that only a small proportion of the ADP counselors and case managers actually work this way. Instead, they write several pages of unstructured narrative about the client interaction, but do not document goals or the expectations of either the client or the case manager.

It was difficult to define the interventions and quantify the outcomes of care. The ADP includes a wide range of services, including detoxification and methadone support, which are medically supported interventions with outcomes such as “harm minimization.” Many clients receive interventions that provide a supportive role, such as improving self-esteem, developing coping skills, or dealing with abusive relationships, for which the outcomes are difficult to objectify. In most cases, even when outcomes could be quantified, they were not, and the achievement of subjective client goals was reported in less than 5% of client files.

Client files could not always be found using the client record database. The ADP counselors reported that they do not always accurately report client details so that they can maintain client confidentiality and because of the possibility that client files can be subpoenaed for legal reasons. They did not want to document potentially incriminating material.

Client follow-up was difficult to ascertain. Fewer than half of all clients continue their treatment with the ADP, despite documented attempts by the provider to contact them. Whether the client planning process actually improves outcomes is not necessarily relevant in this case. Subsequent to this project, adherence to client care planning became part of the purchase contract for the ADP.

Vignette four: Intake and Assessment Comprehensive Assessment Project

The Intake and Assessment Unit (IAU) Comprehensive Assessment Project was designed to follow up the outcomes of an assessment-and-referral service that forms part of a single point of entry to the program.Citation28 The IAU undertakes a comprehensive assessment on any client requiring two or more services. The goals of the assessment process are to make appropriate referrals to increase client independence and prevent institutionalization, as well as provide some restorative and preventive care. The ideal outcome of the assessment process is referral to appropriate services to achieve the client goals.

Due to the one-off nature of the assessment, the IAU assessors wanted to know the outcome of their assessment process. The IAU Comprehensive Assessment Project was designed to examine the relationship between the rates of use of the health services to which the clients were referred (processes), the rates of goal achievement self-reported, and health-related quality of life (outcomes). Outcomes were measured using the Dartmouth COOP charts, which are a series of pictorial charts that have been validated for use with a wide range of populations.Citation29 They were chosen by the staff as the most appropriate outcome tool in this setting. The COOP charts were administered to patients at the time of the initial assessment and then 3 months later.

Of 114 eligible clients, 67 were recruited into the trial (59%). Some assessors did not recruit any patients; however, those who did expressed no difficulty with the process. The major barrier to recruitment reported was the additional time to administer the Dartmouth COOP chart (7 minutes on average). Importantly, the key contact person for this project (the team leader) changed jobs in the middle of the data-collection period and the assessors stopped recruiting patients.

Three-month follow-up was possible for 38 of the 67 recruited clients (57%). The poor follow-up rate was due to the death of some clients and the high proportion of service users who moved into higher-level care as a result of the assessment. All but five of the 38 clients contacted had achieved their goals. The only unmet goals over which the CCO had any influence were those of one couple who felt that their goals were not identified appropriately in the first place.
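The attrition described in this vignette can be traced with simple arithmetic. The following is a minimal sketch in Python and was not part of the original analysis; the function name `rate` and the variable names are illustrative assumptions, and the counts are those reported above.

```python
def rate(numerator: int, denominator: int) -> float:
    """Return numerator/denominator expressed as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * numerator / denominator


# Counts reported in the vignette.
eligible = 114      # clients eligible for the trial
recruited = 67      # clients recruited by the assessors
followed_up = 38    # clients contacted at 3 months
goals_unmet = 5     # contacted clients who had not achieved their goals

recruitment_rate = rate(recruited, eligible)
follow_up_rate = rate(followed_up, recruited)
goal_achievement_rate = rate(followed_up - goals_unmet, followed_up)
# Share of all eligible clients who contributed follow-up data.
overall_coverage = rate(followed_up, eligible)

for label, value in [
    ("Recruitment", recruitment_rate),
    ("Follow-up", follow_up_rate),
    ("Goal achievement", goal_achievement_rate),
    ("Coverage of eligible clients", overall_coverage),
]:
    print(f"{label}: {value:.1f}%")
```

Viewed this way, only about one-third of the eligible clients contributed follow-up data, which bears on how representative any aggregated outcome figure could be.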

There was no relationship between the achievement of goals or the improvement in the COOP scores (outcomes) and the use of services (processes).Citation28 Health-related quality-of-life scores improved slightly for the cohort; however, it is likely that the more infirm clients were those who died or moved into residential care.

The COOP charts were well accepted by IAU staff. They liked the simple format and the relative ease of use of the questionnaire, although they stopped using the charts as soon as the project leader changed jobs. There was little relationship between the COOP chart scores and the actual outcomes as reported by the clients. The domains of the COOP were not sensitive to the actual outcomes of the receipt of services and did not cover a wide enough spectrum of quality-of-life domains to be meaningful. For example, one client reported that security was important. This was improved by installing a peephole in her front door and a security wire door. She was highly satisfied with the outcome of care, and her goals were achieved, but this was not reflected in her outcome measures because the question of security was not included.

The aim of contacting clients 3 months after the intervention was to determine whether they had achieved their goals and accessed the service/s to which they were referred. An important outcome of this project was the provision of increased or additional care to 13 clients as a result of the 3-month follow-up to determine the outcomes of care. During the 3-month period, a number of clients’ health service needs changed; for instance, one man had a myocardial infarction. Another was a carer whose partner had died. None knew how to access further care to meet their new needs. The follow-up facilitated contact with appropriate services. In other words, the measurement of outcomes became a part of the next process of care. As a result of this project, all clients now receive 3-month telephone follow-up as part of the routine assessment process, both to determine the achievement of client goals and facilitate ongoing care if necessary.

Vignette five: wound-outcome project

Wound management is undertaken by nurses within the Integrated Health Care Program. The aim of the wound-outcome project was to reduce practice variation and improve wound healing whilst reducing the cost of wound dressings to the program.

This project investigated the relationship between adherence to a wound clinical pathway (process), the time for wound healing, and the cost of dressings used for particular wound types (outcomes). Regular nursing care for wound management included reporting on processes and outcomes using an existing standardized care plan for wound management. A file audit at the start of the project found that much of the data were not easily available. Although a standardized wound care plan was already in place, data were present in only 12 of the files, and those that did exist did not all contain the same protocols. For instance, some contained pages that were updated in different years from the original record. Nurses who treated wounds in the ambulatory clinics used different forms from those used by the nurses delivering home-based wound care. This meant that the results could not be compared across different service settings.

The care plan itself provided no mechanisms for the nurses to document wound progress over time (ie, changes in wound size, exudate, etc). Additionally, wound status at discharge was recorded in only six of the files audited.

The nurses piloted a wound-specific outcome measure to obtain patient perceptions of wound outcomes. Nurses found the instrument time-consuming to administer and inappropriate for patients with long-standing leg ulcers. Therefore, the steering committee asked us to focus on routinely collected data and omit the patient perceptions questionnaire.

As a result of the initial file audit, new processes were introduced to improve the quality and rates of the routine reporting on wound processes and outcomes. The nurses adopted a more recently developed wound care pathway that solved the problem of standardized data collection between ambulatory and home-based care and provided the information necessary to determine the outcomes of care. A monthly internal audit system was introduced to ensure that the wound care plans were used and completed.

At the final audit, the new wound care pathway was used in only 21 of a total of 73 files of clients with wounds (28%). This project highlighted the fact that even when clear processes of care are used, such as the standardized wound care plan, they are not necessarily appropriate to support the documentation and achievement of goals. Secondly, without mechanisms to monitor adherence to the model, there is no way to ensure that the processes are being followed. The CCO determined that the new care pathway constituted best practice for the documentation and delivery of wound care. Regardless of the impact on client outcomes, the specified processes of care were adhered to in less than 30% of cases, which prevented accurate data collection about health service processes and outcomes.
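An audit of this kind reduces to counting the files in which the pathway was used. The sketch below is illustrative only: the record structure (`FileAudit`, `pathway_used`) is an assumption introduced for the example and does not describe the CCO's actual audit system. Only the counts (21 of 73 files) come from the final audit.

```python
from dataclasses import dataclass


@dataclass
class FileAudit:
    """One audited client file (hypothetical structure)."""
    file_id: int
    pathway_used: bool


def adherence_percent(files: list[FileAudit]) -> float:
    """Percentage of audited files in which the care pathway was used."""
    if not files:
        raise ValueError("no files audited")
    used = sum(1 for f in files if f.pathway_used)
    return 100.0 * used / len(files)


# Rebuild the final-audit counts: 21 of 73 files used the new pathway.
audit = [FileAudit(file_id=i, pathway_used=(i < 21)) for i in range(73)]
adherence = adherence_percent(audit)
print(f"Pathway adherence: {adherence:.1f}%")
```

With these counts the result falls between 28% and 29%, consistent with the reported adherence of less than 30%.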

Vignette six: nutrition screening tool

This project differed from the other projects in that it evaluated a new intervention – a screening tool and information booklet – designed to prolong the duration of exclusive breastfeeding of newborn babies. The resources were developed by dieticians in response to feedback that parents were receiving conflicting information about the nutritional needs of newborn babies. The goals of the booklet and screening tool were to encourage exclusive breastfeeding of infants for at least 4 months, as specified by the then World Health Organization guidelines, and to ensure that, when solids were introduced, the baby received an “appropriate” texture and variety of food. The application of the nutrition screening tools coincided with the 6-week and 6-month immunization schedules. Extensive piloting of the tools found that they took an additional 15 minutes to administer. The cost of reprinting the booklet, combined with the relatively high resource burden of introducing the screening tools, meant that the program wanted to ensure their effectiveness before their widespread implementation.

The 3-month data-collection period (n = 150) found that almost 90% of parents introduced solids when the infant was older than 4 months, and the few exceptions were often due to childhood allergies. This meant that the main goal of the project, to increase the age of introduction of solids, was unnecessary for all but 10% of the existing client group. The second outcome variable, the texture and variety of food, could not be accurately measured at the 6-month screen, because in some cases parents had only just started to introduce solids to their child. Additionally, the nurses had difficulty judging the appropriateness of the texture and variety of foods.

The results showed that the initially perceived need for the service did not actually exist. However, subsequent to completing the evaluation of the service, the World Health Organization changed its guidelines for the introduction of solids for infants. In other words, they changed the outcome that was being examined, highlighting the subjective and changing nature of some community health outcomes.Citation30 The screening tools were introduced into the intervention region for a 3-month period to collect baseline data on the actual and expected age of introduction of solids, as well as to pilot the instruments more comprehensively.

The outcome of the project was to eliminate the second screening tool and relabel it “guided nutritional questionnaire,” which could be used at the discretion of the nurse. The feedback from the nurses was that the tools were useful to identify parental concerns and direct them to appropriate resources.

The importance of this project for the collection of health outcome data was the need to clearly identify the requirement for a new service and evaluate this service before investing in its development. The evolving nature of health and health research means that the “best” outcomes of care can vary according to the latest research and/or policy decisions.

Service changes as a result of the outcome project

Following the completion of the health outcomes report to the CCO, the organization made the completion of a client care plan compulsory for every client. No systems of monitoring were introduced to enforce this standard, although an information system has subsequently been introduced. Other changes arose as a result of the individual outcome projects:

Wound-management protocols and documentation have been standardized across the whole of the service, and internal auditing processes were introduced to improve rates of adherence to the protocols.

Clients who undergo a comprehensive assessment within the Intake and Assessment Unit now have a routine 3-month telephone follow-up to determine their achievement of goals or changed health service needs.

The Alcohol and Drug Program modified its client planning protocol.

There was extensive education of staff across the CCO on the importance of quality documentation, identification of and adherence to set processes, and documentation of client outcomes.

The production of health outcome data requires an interaction between the patient and health service provider, documentation of the outcome, and extraction and reporting.

The approach to health outcome data collection is reproduced in the accompanying figure to highlight the issues that arose at each level of the proposed model. Each letter in the figure denotes a stage in the process of the collection of health outcome data, and the issues arising at each stage are presented in Table 4.

Table 4 Issues arising at each stage of the data-collection process

Discussion

The findings from this and previous attempts by organizations to implement a health outcome approach to health service accountability illustrate several challenges inherent in that approach.

Powell et alCitation31 describe the pitfalls of using routinely available data for reporting health care quality. Routinely collected data have the appeal that they are often readily available, low cost, available for retrospective and diverse geographic or other contextual comparisons, and do not suffer the ethical problems often encountered when data are primarily collected for research purposes. However, Powell et al also identify several problems associated with the use of routine data for comparative purposes. These include difficulty interpreting the causes of variations between findings, the potential insensitivity or inappropriateness of tools or data sources, inconsistencies in the approaches to data collection, and the potential for reporting biases. This CCO had no routinely collected data that were available as a basis for meaningful comparison across the whole service. The measures and approaches that were introduced highlighted several problems around the collection of health outcome data for reporting purposes.

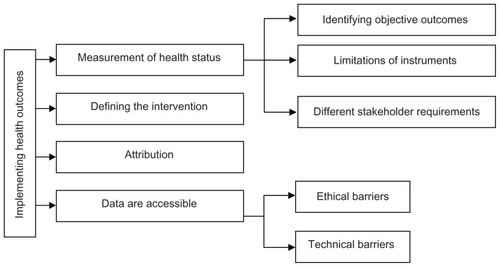

The definition of health outcomes as “a change in health status which is attributable to an intervention or a series of interventions”Citation5 is based on four assumptions about health outcome measurement:

A change in health status can be measured or objectified in some way.

The intervention can be defined.

The change in health status can be attributed to the defined intervention.

The health outcome data are accessible.

These assumptions highlight a number of practical barriers to the collection of health outcome data for the purpose of ensuring contractual accountability, which are expanded on below.

Measurement of health status

For health outcomes to be quantified, the difference in health status before and after the intervention must be measured. The barriers to the measurement of outcomes highlighted within the case studies can be summarized under three headings:

Difficulties identifying measurable outcomes of care

Limitations of instruments designed to measure health outcomes

Different stakeholder requirements for the use of outcome data.

Difficulties identifying measurable outcomes of care

Numerous health outcome measures have been developed to help solve the difficult problem of measuring health status. However, not all outcomes lend themselves to measurement, and no single measure can accommodate all the possible outcomes of care. The lack of evidence to link the outcomes to the intervention means that knowing what to measure is difficult.

This study highlighted the continuum of intervention and outcome types possible within a community health setting. At one end of the spectrum, there are reproducible interventions with objective outcomes of care, such as wound healing. This is an outcome that is easily defined, easily measured, and relatively unambiguous. At the other end of the continuum are the interventions that are totally dependent on the physical, social, and environmental context of the patient. In this case, a combination of patient goals, available resources, and the ability of the health service provider to access those resources will determine both the intervention and the outcome of that intervention. The effectiveness of the intervention is judged by the achievement of the agreed goals of client and provider. The quantification of health outcome data raises two important issues in the exploration of the routine use of health outcomes in a clinical setting: the privileging of quantitative data over qualitative, and the questionable clinical value of the quantitative results once they have been aggregated. As the podiatry case study demonstrated, qualitative data can be valuable to the health service provider; however, they need to be provided in a usable format and in a timely way to have direct relevance to the patient–provider interaction from which they were derived.

Limitations of instruments designed to measure health outcomes

The limitations to the use of health outcome instruments identified in this study were: the problems identifying instruments that accurately reflect the actual outcomes of care; encouraging the providers to use these instruments; and finally the issues around data collection and manipulation.

Where measurement of health outcomes relied on introducing new instruments to the clinical interaction, the rates of administration of the instruments were poor. Providers forgot to give out the questionnaires, did not have time, or felt that they were not appropriate for particular clients. If health outcome data are to be collected and aggregated for reporting purposes outside the clinical intervention, it is important that they are from a representative sample of the population receiving the intervention. Providers require increased time to administer the additional instruments if they are used.

Many outcome tools require additional data entry and manipulation for the results to become accessible and meaningful. If the results cannot be accessed immediately because they need to be taken away from the clinical setting for manipulation, they lose value as a clinical tool for health service providers and are unlikely to be used. Additionally, not all interventions involve a scheduled follow-up phase, so additional administration is required to capture the actual outcome of the intervention.

Different stakeholder requirements for the use of outcome data

The use of health outcome measures that utilize predefined domains of health status assumes that people value the same qualities of health or outcomes of care. This has implications both for the application of specific instruments for each patient and for the audience for the results. Differences in stakeholder requirements mean that no single measure of health outcomes will be appropriate for all settings or purposes. Patients will interpret their well-being and their outcomes through a value filter that is determined by their health condition, sex, cultural background, and individual expectations. Health service providers have different requirements of health outcomes from those of managers and purchasers. This means that the content and the way the information is presented will need to be tailored to the specific audience.

Attribution

One of the major criticisms of the use of health outcomes in justifying health service expenditure and allocation is the issue of attribution. The prerequisites for attributing an outcome to an intervention are that there is a known relationship between health outcomes and specific treatments, and there is an assurance of the quality of care so that the expected outcomes are achieved.Citation22

Whilst there has been significant growth in evidence-based medicine and research into the effectiveness of interventions, most community-based health service interventions still lack high-quality evidence.Citation32 The paucity of evidence for the majority of allied health interventions increases this problem in a community health setting. Russell argues that basing judgments about performance on health outcomes that are not clearly attributable to an intervention is unethical and may actually result in reduced equity of resource allocation.Citation33

Even where health outcomes have been linked through research to a health service intervention, it is sometimes difficult to determine whether the outcome would have occurred anyway, or may have occurred for other reasons. Several studies have demonstrated that factors other than the intervention contribute to the measured outcomes.Citation31,Citation34

Difficulties attributing the outcomes to the intervention are the reason that most performance indicators are based on process indicators. However, these have been criticized on the basis that they do not necessarily reflect what the intervention is aiming to achieve.Citation26

Accessibility of data

Several technical barriers prevent the access to and extraction of patient-outcome data. These can be grouped under the headings of physical and ethical barriers.

Physical barriers include poor provider adherence to data collection, geographic dispersion of providers, insufficient information technology systems, and poor file management. Ethical barriers include patients and providers refusing to consent to provide the information, and legal considerations around what was actually reported in the file.

Ideally, health outcome data would be accessible from a single database or through data linkage. Health outcome measurement and reporting is dependent on the sharing of information derived from the interaction between a patient and their health care provider. Apart from the previously described difficulties of actually measuring the outcomes, a culture in which health service providers are prepared to share information is necessary.Citation35

The reality is that most allied health data are not accessible, and in the majority of cases health outcome data are not routinely collected. This project has identified that data accessibility is limited by file-management systems, the quality of recording of data, lack of sophisticated information systems, and lack of consensus on exactly what should be reported.

Conclusion

This project has highlighted a number of barriers to the use of health outcomes in health service management, and confirms the themes highlighted in earlier studies around the challenges of routine collection of health outcome data for external reporting purposes. The use of health outcomes in purchasing contracts implies that the collection of health outcomes can be and is undertaken at a clinical level. This is true in some cases; however, the extraction of health outcome data from the professional–patient interaction is fraught with difficulties. The assumption that health service providers measure or at least document health outcomes routinely has proven to be incorrect within most of these studies. In the majority of cases, the collection of health outcome data, and indeed the documentation of standardized process information such as the date of the intervention, required substantial training of staff. Presumably, before health service organizations can even attempt to include health outcomes in their purchasing and contracts, the more basic requirements of accurate and reliable data collection must be met.

Disclosure

The author reports no conflicts of interest in this work.

References

- Milne R, Clarke A. Can readmission rates be used as an outcome indicator? BMJ. 1990;301(6761):1139–1140. PMID 2252926.

- Harris R, Bridgman C, Ahmad M. Introducing care pathway commissioning to primary dental care: measuring performance. Br Dent J. 2011;211(11):E22. PMID 22158196.

- Department of Health. Equity and Excellence: Liberating the NHS. London: Her Majesty’s Stationery Office; 2010.

- Duncan E, Murray J. The barriers and facilitators to routine outcome measurement by allied health professionals in practice: a systematic review. BMC Health Serv Res. 2012;12:96. PMID 22506982.

- AHMAC (Australian Health Ministers’ Advisory Council). AHMAC Sunshine Statement. AHMAC Health Outcomes Seminar; 1993 Feb 3–4.

- Best J. The matter of outcome. Med J Aust. 1988;148(12):650–652. PMID 3380045.

- McCallum A. What is an outcome and why look at them? Crit Public Health. 1993;4(4):4.

- World Health Organization. Global Strategy for Health for All by the Year 2000. Geneva: WHO; 1981.

- Nutbeam C, Wise M, Bauman A, Harris E, Leeder SR. Goals and Targets for Australia’s Health in the Year 2000 and Beyond. Sydney: Commonwealth Department of Health, Housing and Community Service, Department of Public Health; 1993.

- Australian Institute of Health and Welfare and Commonwealth Department of Health and Aged Care. First Report on National Health Priority Areas 1996. Canberra: AIHW; 1996.

- NSW Department of Health. A Discussion Paper on Health Outcomes. Sydney: NSW Department of Health; 1991.

- New South Wales Council on the Cost of Government. NSW Government Indicators of Service Efforts and Accomplishments: Health. Sydney: New South Wales Council on the Cost of Government; 2000.

- Commonwealth Department of Health and Family Services. Outcomes Based Funding for Divisions of General Practice – Background/Briefing Paper for the First Outcomes Based Funding Development Workshop. Canberra: Commonwealth Department of Health and Family Services; 1997.

- Young D, Liaw T. The organisation of general practice. In: General Practice in Australia. Canberra: Commonwealth Department of Health and Family Services; 1996.

- Weller D, Holt P, Crotty M, Beilby J, Ward J, Silagy C. Outcomes-Based Funding for Divisions of General Practice. Adelaide: Australian Cochrane Centre, Flinders University of South Australia; 1997.

- Commonwealth Department of Health and Aged Care. Divisions of General Practice Program: Implementation Guide For Outcomes Based Funding. Canberra: Commonwealth Department of Health and Aged Care; 1999.

- Department of Health. Guidance on the Routine Collection of Patient Reported Outcome Measures (PROMs). London: Department of Health; 2009.

- Dawson J, Doll H, Fitzpatrick R, Jenkinson C, Carr AJ. The routine use of patient reported outcome measures in healthcare settings. BMJ. 2010;340:c186. PMID 20083546.

- Gummesson D. Qualitative Methods in Management Research. Thousand Oaks (CA): Sage; 2000.

- French W, Bell C. Organisation Development: Behavioural Science Interventions for Organisational Improvement. Upper Saddle River (NJ): Prentice Hall; 1999.

- Donabedian A. Concepts of Health Care Quality: A Perspective. Washington: Institute of Medicine, National Academy of Sciences; 1974.

- Harvey R. Health outcomes, health information and health funding. Integrating Health Outcomes Measurement in Routine Health Care; August 13–14, 1996; Canberra, Australia.

- World Health Organization. International Classification of Functioning, Disability and Health (ICF). Geneva: WHO; 2001.

- Nancarrow SA, Moran A, Boyce RA. How can third party funders monitor the quality and outcomes of allied health service provision? Proceedings of the Global Healthcare Conference; August 27, 2012; Singapore.

- Slade GD, Spencer JA. Development and evaluation of the Oral Health Impact Profile. Community Dent Health. 1994;11(1):3–11. PMID 8193981.

- Nancarrow SA. Practical barriers to the collection of health outcomes data in a clinical setting using non-casted innersoles as a case study. Australas J Podiatr Med. 2001;35(2):43–49.

- Bennett PJ, Patterson C, Wearing S, Balgioni T. Development and validation of a questionnaire designed to measure foot-health status. J Am Podiatr Med Assoc. 1998;88(8):419–428. PMID 9770933.

- Nancarrow SA. Measuring the outcomes of care in older people following a single assessment process. Int J Ther Rehabil. 2005;12(11):479–484.

- Lennon OC, Carey A, Creed A, Durcan S, Blake C. Reliability and validity of COOP/WONCA functional health status charts for stroke patients in primary care. J Stroke Cerebrovasc Dis. 2011;20(5):465–473. PMID 20813545.

- Nancarrow SA. The evaluation of an intervention to prevent the early introduction of solids to babies. Pract Dev Health Care. 2003;2(3):148–155.

- Powell A, Davies H, Thomson R. Using routine comparative data to assess the quality of health care: understanding and avoiding common pitfalls. Qual Saf Health Care. 2003;12(2):122–128. PMID 12679509.

- US Congress. The Quality Of Medical Care: Information for Consumers. Washington, DC: Office of Technology Assessment; 1988.

- Russell E. The ethics of attribution: the case of health care outcome indicators. Soc Sci Med. 1998;47(9):1161–1169. PMID 9783859.

- Jankowski R. What do hospital admission rates say about primary care? BMJ. 1999;319(7202):67–68. PMID 10398606.

- Wood B. The information revolution, health reform and doctor-manager relations. Public Policy Adm. 1999;14(1):1–13.