
Abstract

Purpose:

To determine whether sham pads used as controls in randomized clinical trials of vibratory stimulation to treat patients with sleep loss associated with restless legs syndrome perform differently than placebo pills used in comparable restless legs syndrome drug trials.

Patients and methods:

Sham pad effect sizes from 66 control patients in two randomized clinical trials of vibratory stimulation were compared with placebo responses from 1,024 control patients in 12 randomized clinical drug trials reporting subjective sleep measurement scales. Control patient responses were measured as the standardized difference in means corrected for correlation between beginning and ending scores and for small sample sizes.

Results:

For parallel randomized clinical trials, sham effects in vibratory stimulation trials were not significantly different from placebo effects in drug trials (0.37 and 0.31, respectively; $Q_{\text{between}}$=0.25, $P_Q$≥0.62). Placebo effect sizes in crossover drug trials were significantly smaller than sham effect sizes in parallel vibratory stimulation trials (0.07 versus 0.37, respectively; $Q_{\text{between}}$=4.59, $P_Q$≤0.03) and than placebo effect sizes in parallel drug trials (0.07 versus 0.31, respectively; $Q_{\text{between}}$=5.50, $P_Q$≤0.02).

Conclusion:

For subjective sleep loss assessments in parallel trials, sham pads in vibratory stimulation trials performed similarly to placebo pills in drug trials. Trial design (parallel versus crossover) had a large influence on control effect sizes. Placebo pills in crossover drug trials had significantly smaller effect sizes than sham pads in parallel vibratory stimulation trials or placebo pills in parallel drug trials.

Introduction

Background

Randomized clinical trials (RCTs) are nearly universally focused on the difference between an active treatment and an inactive, or control, treatment. If the active treatment effect size is large and the inactive treatment effect size is small, the trial is a success. However, if the active and inactive treatment effects are both large, the trial is a failure. Clearly, the magnitude of the inactive or control treatment effect is of great importance in any RCT. In drug trials, control patients are given an inactive, or “placebo,” pill that looks like the active pill but is pharmacologically inert. In physical medicine studies, control patients are exposed to a device that looks like the physical treatment device (a “sham”) but does not deliver active treatment.

Deyo et al have suggested that blinding of investigators and patients is generally successful in drug trials.Citation1 On the other hand, the same authors state, “Finding credible ‘placebo’ alternatives to physical therapy … may be difficult or impossible.” Kaptchuk et al examined the medical literature to determine whether physical medicine treatment shams have a greater therapeutic effect than drug therapy placebos, but the results were inconclusive.Citation2 In a separate randomized trial directly comparing a physical medicine treatment sham with a drug therapy placebo, Kaptchuk et al concluded that the sham device had a greater effect than the placebo pills on self-reported symptoms.Citation3

Trial designs themselves may also exert a significant influence on control effects. Deyo et al have speculated that crossover (CO) trials that expose all patients to both active and control treatments will be biased since the patients can compare their experiences with the two treatments, which may enable them to distinguish active from control treatments.Citation1

Rationale

We previously described the therapeutic effectiveness of vibratory stimulation (VS) therapy (the difference between treatment and control groups) for sleep problems associated with restless legs syndrome (RLS) and have compared that effectiveness to RLS drug therapy.Citation4,Citation5 We found that the magnitude of sleep improvement with a vibrating pad (treatment) was greater than with a nonvibrating (sham) pad and was comparable to sleep improvement with US Food and Drug Administration-approved drugs in patients with moderately severe primary RLS. Pad assignment (treatment pad versus sham pad) and pad assignment belief (patient belief that a treatment or sham pad was assigned) both influenced improvement in Medical Outcomes Study Sleep Problems Index II (MOS-II)Citation6–Citation8 sleep scores; however, pad assignment belief was more influential.Citation9 Others have similarly reported the influence of patient belief on RCT outcomes.Citation10

Thus, we now examine inactive (control) effect sizes, comparing sham effect sizes in VS trials to placebo effect sizes in RLS drug trials. In addition, we will examine the influence of trial design (parallel versus CO designs) on control effect sizes and discuss the ramifications of these findings for RLS sleep studies.

Objective

This meta-analysis asks the question: Do sham pads used as controls in VS trials of patients with RLS sleep problems perform differently than placebos used in comparable parallel and CO RLS drug trials?

Methods

RCT screening

To compare VS sham pad effects to the published drug placebo effects reported by Fulda and Wetter, we reexamined data from control patients in two previously reported VS trials and compared them to the individual trials identified by Fulda and Wetter.Citation4,Citation5,Citation11

Measurement of placebo and sham effect sizes

To measure placebo effect sizes of inert pills used in RLS drug trials, Fulda and Wetter calculated the magnitude of standardized mean change in sleep quality scores between baseline and endpoint separately for 1,024 control subjects across five subjective sleep instruments in 12 RLS drug trials.Citation11 Corrections to effect sizes were made for correlation between baseline and endpoint scores and for small sample size bias.Citation12,Citation13 From their calculations, they arrived at placebo effect sizes on sleep scales with 95% confidence intervals (CIs). To compare subjective sleep scores for sham pad effect sizes with placebo effect sizes for inert pills, we followed the computational methods described by Fulda and WetterCitation11 and applied those methods to the sleep scores of 66 control patients in the VS trials.

Statistical analysis

Heterogeneity testing

Heterogeneity in treatment effect was evaluated with the $I^2$ statistic (Comprehensive Meta-Analysis V2 Software, Biostat, Inc., Englewood, NJ, USA).Citation14 $I^2$ values range from 0% to 100%, with values of ≤25%, 50%, and ≥75% corresponding to low, moderate, and high heterogeneity, respectively.Citation15 Because heterogeneity tests are insensitive when few studies are pooled, the null hypothesis of homogeneity was rejected, and studies were considered heterogeneous, when $P_Q$<0.10, where $P_Q$ is the P value of Cochran's Q test. For all other statistical tests, the significance cutoff was P≤0.05.
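The heterogeneity statistics described above can be sketched in a few lines of Python. This is a minimal sketch, assuming the standard fixed-effect (inverse-variance) form of Cochran's Q and the Higgins-Thompson definition $I^2 = (Q - df)/Q$, truncated at zero; it is not the Comprehensive Meta-Analysis implementation, and the function names and example values are hypothetical. The chi-square tail probability is computed with a stdlib-only recurrence so that the sketch needs no third-party packages.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square variable with integer df >= 1.

    Uses the recurrence Q(x; k + 2) = Q(x; k) + (x/2)**(k/2) * exp(-x/2) / Gamma(k/2 + 1),
    starting from Q(x; 1) = erfc(sqrt(x/2)) or Q(x; 2) = exp(-x/2).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if df % 2 == 1:
        p, k = math.erfc(math.sqrt(x / 2.0)), 1
    else:
        p, k = math.exp(-x / 2.0), 2
    while k < df:
        p += (x / 2.0) ** (k / 2.0) * math.exp(-x / 2.0) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return p


def heterogeneity(effects, variances, alpha=0.10):
    """Cochran's Q, its P value (P_Q), and I^2 (%) for k >= 2 study effect sizes.

    Studies are flagged as heterogeneous when P_Q < alpha (0.10 here, as in the text).
    """
    weights = [1.0 / v for v in variances]  # fixed-effect inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    p_q = chi2_sf(q, df)
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0  # truncated at 0%
    return {"Q": q, "df": df, "P_Q": p_q, "I2": i2, "heterogeneous": p_q < alpha}


if __name__ == "__main__":
    # Hypothetical effect sizes and sampling variances for four trials.
    print(heterogeneity([0.10, 0.45, 0.30, 0.60], [0.02, 0.01, 0.03, 0.015]))
```

The lenient 0.10 cutoff is passed as a parameter rather than hard-coded, so the same function can be rerun at the conventional 0.05 level for comparison.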

Meta-analysis models

Outcome measures were compared directly using random-effects models. Subgroups were compared indirectly using the between-subgroups $Q_{\text{between}}$ statistic.Citation14
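As an illustration of random-effects pooling, the sketch below uses the DerSimonian-Laird method-of-moments estimator of the between-trial variance $\tau^2$. The choice of estimator is an assumption on our part: it is a common default, but the text does not state which estimator the software applied. The function name and example inputs are hypothetical.

```python
import math


def random_effects_dl(effects, variances, z=1.959963984540054):
    """DerSimonian-Laird random-effects pooled estimate with SE, 95% CI, and tau^2.

    effects   -- per-trial standardized effect sizes
    variances -- their within-trial sampling variances
    """
    k = len(effects)
    w = [1.0 / v for v in variances]  # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    # Method-of-moments between-trial variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {
        "estimate": pooled,
        "se": se,
        "ci95": (pooled - z * se, pooled + z * se),
        "tau2": tau2,
    }


if __name__ == "__main__":
    # Hypothetical control-arm effect sizes and variances for three trials.
    print(random_effects_dl([0.20, 0.35, 0.50], [0.02, 0.03, 0.025]))
```

When the trials are homogeneous, $\tau^2$ is truncated to zero and the result coincides with fixed-effect pooling; otherwise each trial's weight is shrunk toward equality, which widens the confidence interval.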

Measurement of sleep improvement

For the VS trials, sleep problems were measured with the MOS-II sleep problems index.Citation7,Citation8 Differences in MOS-II scores from baseline to endpoint (change scores) were calculated for sham groups and converted to standardized mean differences using the baseline standard deviations. Change scores were also corrected for baseline and endpoint correlation and for small sample size bias.Citation12,Citation16 The MOS sleep inventory is a patient-reported, 12-question, paper-and-pencil testCitation8 that has been shown to be reliable and valid for measuring sleep problems in patients with RLS.Citation6 The MOS-II scale contains 9 of the 12 inventory questions, represents all of the qualitative sleep concepts in the inventory, and reflects the inventory’s most exhaustive measure of sleep problems. In the current analysis, which follows the Fulda and Wetter convention,Citation11 improvement in a sleep score was calculated as a positive number, indicating a reduction in sleep difficulty; the greater the positive number, the greater the subjective sleep improvement.
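The change-score computation described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the author's code: the standardizer is the baseline SD; the baseline-endpoint correlation r enters through the large-sample variance $2(1-r)/n + d^2/(2n)$ for a pre/post design; and the small-sample correction is Hedges' $J = 1 - 3/(4(n-1)-1)$. Whether the original analysis used exactly these formulas is an assumption, and the function name, the `higher_is_worse` flag, and all numbers are hypothetical.

```python
def standardized_mean_change(mean_base, mean_end, sd_base, n, r, higher_is_worse=True):
    """Bias-corrected standardized mean change (g) and its variance for one arm.

    mean_base, mean_end -- arm means at baseline and endpoint
    sd_base             -- baseline standard deviation (the standardizer)
    n                   -- number of patients in the arm (n > 2)
    r                   -- assumed baseline-endpoint correlation
    higher_is_worse     -- True if higher scores mean more sleep problems, so that
                           improvement is reported as a positive number
    """
    change = (mean_base - mean_end) if higher_is_worse else (mean_end - mean_base)
    d = change / sd_base
    # Large-sample variance of d for a pre/post design with correlation r.
    var_d = 2.0 * (1.0 - r) / n + d * d / (2.0 * n)
    # Hedges small-sample correction with df = n - 1.
    j = 1.0 - 3.0 / (4.0 * (n - 1) - 1.0)
    return j * d, j * j * var_d


if __name__ == "__main__":
    # Hypothetical sham-arm summary: scores fall from 40 to 32 (SD 16), n = 30, r = 0.5.
    g, var_g = standardized_mean_change(40.0, 32.0, 16.0, 30, 0.5)
    print(f"g = {g:.3f}, SE = {var_g ** 0.5:.3f}")
```

Because the baseline SD is the standardizer here, the correlation changes only the precision of the estimate, not its magnitude; a higher r yields a smaller variance and therefore more weight in pooling.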

For the 12 drug trials, five different subjective measures of sleep quality were included: 1) the two-question “sleep adequacy” items in the MOS sleep inventory, 2) the Schlaffragebogen A “sleep quality” scale, 3) the “satisfaction with sleep” item of the RLS-6 scale, 4) a visual analog “satisfaction with sleep” scale, and 5) a diary-derived sleep quality scale.Citation11

Null hypotheses tested

Hypothesis for control types (inactive sham pads versus inert pills):

Efficacy of VS sham pads compared with drug placebos (indirect subgroup comparisons):

$$H_{01}: S\Delta MC_{\text{sham}} = S\Delta MC_{\text{placebo}}$$

Hypothesis for trial designs (parallel versus CO):

Efficacy of parallel RCTs compared with CO trials (indirect subgroup comparison):

$$H_{02}: S\Delta MC_{\text{parallel}} = S\Delta MC_{\text{CO}}$$

where SΔMC is the standardized difference in mean change between initial and final sleep quality scores, corrected for initial and final score correlation and for small sample sizes.

Results

Trials selected

Details of the placebo analysis of 12 RLS drug trialsCitation17–Citation28 and the two VS trialsCitation4,Citation29 have been published previously. Controls for the drug trials were pharmacologically inert pills identical in appearance to the study drugs. Controls for the VS trials were pads identical in appearance to the vibrating pads but which did not produce vibration. In SMI-001, the sham pads produced patient-controlled sound; in SMI-002, patient-controlled light.Citation4,Citation29 The VS trials showed low heterogeneity ($I^2$=0.0%), and the 12 drug trials moderate heterogeneity ($I^2$=70.7%, $P_Q$<0.0001). Drug trial heterogeneity was not a function of trial date, parallel versus CO design, trial size, drug studied, or subjective sleep scale used, and it could not be explained by our analysis of the published data.

Sensitivity analyses

The analysis included trials that used many different subjective sleep indices, only one of which has been validated in RLS populations. To determine whether non-validated sleep scales exerted an influence, control effect sizes for the eight trials that used validated MOS subscales were compared with those of the remaining trials, which did not use a validated sleep scale. No significant difference in control effect size was seen between the trials that used MOS subscales and those that did not (0.270 versus 0.304, respectively, $P_Q$≥0.69). Trials also varied considerably in the size of patient enrollment. However, meta-regression of control effect size on control patient enrollment showed no significant relationship (slope=0.0004, P≥0.24).
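The enrollment meta-regression can be illustrated with an inverse-variance weighted least-squares regression of control effect size on control enrollment. This sketch is a simplification: it uses fixed-effect weights (no between-trial variance term), whereas the reported analysis may have used a random-effects meta-regression, so it will not necessarily reproduce the reported slope or P value. The function name and the enrollment, effect-size, and variance values in the usage lines are hypothetical.

```python
import math


def meta_regression_fixed(x, y, variances):
    """Inverse-variance weighted least-squares meta-regression of y on one covariate x.

    Returns slope, intercept, the slope's standard error, and a two-sided P value
    from a normal (z) test. Fixed-effect weights only: no between-trial variance.
    """
    w = [1.0 / v for v in variances]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    se_slope = math.sqrt(1.0 / sxx)  # known within-trial variances, so no residual scaling
    p = math.erfc(abs(slope / se_slope) / math.sqrt(2.0))
    return {"slope": slope, "intercept": intercept, "se": se_slope, "p": p}


if __name__ == "__main__":
    # Hypothetical control enrollment (x), control effect sizes (y), and variances.
    enrollment = [20, 45, 60, 110, 150]
    effects = [0.40, 0.15, 0.35, 0.28, 0.30]
    variances = [0.05, 0.025, 0.018, 0.010, 0.007]
    print(meta_regression_fixed(enrollment, effects, variances))
```

A slope near zero with a large P value, as reported, would indicate that larger trials did not systematically produce larger or smaller control effects.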

Outcomes

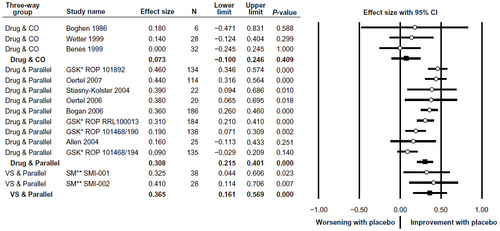

Figure 1 is a forest plot of effect sizes, with CIs, for individual trials and for three trial subgroups: CO drug trials, parallel drug trials, and parallel VS trials. Improvement in subjective sleep quality scores is shown as positive values. Control effect sizes were significantly greater than zero for the parallel drug trials (P≤0.0001, Table 1) and for the parallel VS trials (P≤0.0001, Table 1). Although control effect sizes were slightly larger for shams than for inert pills (0.365 and 0.308, respectively), the difference between these two parallel trial subgroups was not significant ($P_Q$≥0.62, Table 2). Therefore, hypothesis $H_{01}$ could not be rejected for parallel VS trials compared with parallel drug trials.

Figure 1 Forest plot of effect sizes by trial and trial subgroup.

Abbreviations: CI, confidence interval; CO, crossover; VS, vibratory stimulation.

Table 1 Subgroup effect sizes for CO drug trials, parallel drug trials, and parallel VS trials

Table 2 Pairwise subgroup effect size comparisons between trial subgroups

In contrast to the parallel studies, control effect sizes were not significantly different from zero for the CO drug trials (0.073, P≥0.41, Table 1); in these trials, placebos had no significant therapeutic effect. When sham effect sizes in the parallel VS trials were compared with placebo effect sizes in the CO drug trials, placebo effects in the CO drug trials were significantly smaller (0.073 versus 0.365, $P_Q$≤0.03, Table 2). Therefore, hypothesis $H_{01}$ was rejected for parallel VS trials compared with CO drug trials. Placebos in CO drug trials also had significantly smaller effect sizes than placebos in parallel drug trials (0.073 versus 0.308, respectively, $P_Q$≤0.02, Table 2). Therefore, hypothesis $H_{02}$ was rejected for parallel drug trials compared with CO drug trials.
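The pairwise subgroup comparisons can be roughly reproduced from the subgroup summaries (estimates with 95% CIs) reported in the Discussion. This sketch assumes that each subgroup estimate is approximately normal with a symmetric 95% CI, so that SE = (upper − lower)/(2 × 1.96), and that the subgroups are independent; under those assumptions the between-subgroups Q for two subgroups reduces to $(\hat\theta_1 - \hat\theta_2)^2/(SE_1^2 + SE_2^2)$ with 1 degree of freedom. The function names are hypothetical.

```python
import math

Z95 = 1.959963984540054  # 97.5th percentile of the standard normal distribution


def se_from_ci(lower, upper, z=Z95):
    """Standard error implied by a symmetric normal-theory 95% CI."""
    return (upper - lower) / (2.0 * z)


def q_between_two(est1, se1, est2, se2):
    """Between-subgroups Q (1 df) and its P value for two independent estimates."""
    q = (est1 - est2) ** 2 / (se1 ** 2 + se2 ** 2)
    p = math.erfc(math.sqrt(q / 2.0))  # upper tail of chi-square with 1 df
    return q, p


# Subgroup summaries (estimate, lower 95% limit, upper 95% limit) as reported in the text.
subgroups = {
    "parallel drug": (0.308, 0.215, 0.401),
    "parallel VS": (0.365, 0.161, 0.569),
    "CO drug": (0.073, -0.100, 0.246),
}

if __name__ == "__main__":
    pairs = [("parallel VS", "parallel drug"), ("parallel VS", "CO drug"), ("parallel drug", "CO drug")]
    for a, b in pairs:
        est_a, lo_a, hi_a = subgroups[a]
        est_b, lo_b, hi_b = subgroups[b]
        q, p = q_between_two(est_a, se_from_ci(lo_a, hi_a), est_b, se_from_ci(lo_b, hi_b))
        print(f"{a} vs {b}: Q = {q:.2f}, P = {p:.3f}")
```

With the rounded CIs as input, the sketch gives Q values of about 0.25, 4.58, and 5.50, with P values of about 0.62, 0.03, and 0.02, close to the reported values; small differences are expected because the published CIs are rounded.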

Discussion

For parallel trial designs, control treatment effect sizes were quite similar for placebo pills and sham pads (0.308 and 0.365, respectively, $P_Q$≥0.62). Indirect comparisons of RLS drug and VS trials are, therefore, justified, so long as the compared trials are of parallel design. For example, the indirect comparison of VS and drug studies made by Burbank et alCitation5 would be valid because the compared trials were all of parallel design.

By contrast, placebo groups in CO drug trials had little or no therapeutic effect on subjective sleep measures (effect size 0.073; 95% CI −0.100 to 0.246). The lack of a significant therapeutic effect in the control arms of these studies raises the suspicion that blinding was unsuccessful. If so, indirect comparisons of these trials with other studies would not be valid. Moreover, the primary, direct comparison between their treatment arms and control arms is called into question.

It appears that patients in the CO drug trials identified which treatment was the control and which was the active one. At the beginning of these trials, half of the patients were randomized to a drug and half to a placebo. At the halfway point, a week-long “washout” period, during which no treatment was given, was inserted. Following the washout period, patients who were initially randomized to a drug were given the placebo, and patients initially randomized to the placebo were given the active drug. Because all of the drugs studied have soporific effects that occur within an hour or so after ingestion,Citation30,Citation31 patients may have been able to distinguish the active drug from the placebo after CO. For those who received the active drug in the first half of the trial, it is likely that loss of the soporific effect signaled that they were receiving the placebo in the second half, which biased them toward reporting no improvement after CO. Similarly, for those who received the placebo in the first half of the trial, receiving a pill that caused sleepiness may have suggested that they were receiving the active drug in the second half, which biased them toward reporting improvement after CO.

Accurate patient beliefs about the drugs received following CO could have influenced trial results. As previously demonstrated,Citation9 once RLS patients develop a belief about the type of treatment they received (active versus control), their sleep inventory scores are strongly influenced. Patients who believed they had been given a placebo reported little sleep improvement. Patients who believed they were given the active treatment reported substantial sleep improvement.

It is possible that CO designs in RLS drug trials can maintain adequate patient blinding only if the active treatment has no discernible effect (in these studies, sleepiness) or if the placebo has a soporific effect comparable to that of the active treatment, rather than being a completely inert pill. In CO studies, bias toward a treatment effect might also be minimized by using a different outcome measure, such as sleep efficiency recorded objectively in a sleep laboratory, rather than relying on patient-reported, subjective sleep measurement scales.

With their presentation of independent standardized effect sizes for control groups, Fulda and Wetter created a statistic that may help evaluate blinding in RLS trials.Citation11 The average drug trial placebo effect size of 0.308 (95% CI 0.215–0.401) and the average VS trial sham effect size of 0.365 (95% CI 0.161–0.569) set a relatively narrow range of values against which any new study of sleep disturbance in RLS patients could be judged. If, for example, a new study had a control effect size of zero or nearly zero, as reported in three of the Fulda and Wetter CO trials and in two of their parallel trials, one might suspect that at some point during the trial, patients discerned whether they had received the active or the control treatment. It would seem unreasonable to assume that blinding was successful in a trial with a control effect size of zero or nearly zero. Of course, additional measures should be used to evaluate blinding in any trial, such as measuring compliance with treatment and follow-up schedules or using questionnaires to determine which study arm each patient believed they had been assigned to. However, such additional measures aside, simply examining the standardized effect size for control patients may provide useful clues about the adequacy of blinding.
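The heuristic in the preceding paragraph can be made concrete with a simple two-sided z-test of whether a control arm's standardized effect size differs from zero. This is a hypothetical screening aid, not a method proposed in the source: a control arm whose effect size is not significantly different from zero is flagged for closer scrutiny of blinding, and the function name and α level are assumptions. The usage lines back-calculate SEs from the subgroup CIs reported above.

```python
import math


def flag_possible_unblinding(effect, se, alpha=0.05):
    """Hypothetical screen: flag a control arm whose effect size is not
    significantly different from zero (two-sided z-test at level alpha)."""
    z = effect / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal P value
    return {"z": z, "p": p, "flag": p >= alpha}


if __name__ == "__main__":
    z95 = 1.959963984540054
    # SEs back-calculated from the 95% CIs reported in the text.
    print(flag_possible_unblinding(0.073, (0.246 - (-0.100)) / (2 * z95)))  # CO drug trials
    print(flag_possible_unblinding(0.308, (0.401 - 0.215) / (2 * z95)))     # parallel drug trials
```

A flag of this kind is only a clue; it says nothing about why a control effect is absent and should be read alongside the compliance and treatment-guess checks mentioned above.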

In addition to the well-known general limitations of meta-analyses, limitations specific to the current meta-analysis exist.Citation14 The primary limitation is that we included only the drug trials chosen by Fulda and Wetter.Citation11 Those trials were moderately heterogeneous, and this heterogeneity could be explained neither by Fulda and Wetter nor by us. Heterogeneity argues against integrating trials through meta-analysis. However, our decision to use the same set of previously selected trials allowed comparison with a known, published standard. Another limitation is that only one outcome variable, sleep problems, was examined. Other measures of discomfort in RLS patients may not follow the same patterns observed in sleep inventories. In addition to showing that placebo effect sizes for sleep measures were smaller in CO trials than in parallel trials, Fulda and Wetter also demonstrated that placebo effect sizes for RLS severity measures were smaller in CO trials than in parallel trials.Citation11 This observation suggests that our results may not be limited to sleep problem measures alone.

Conclusion

Sham pads in parallel VS trials performed similarly to placebo pills in parallel drug trials for subjective sleep loss assessments in patients with RLS. Therefore, so long as RLS trials are of parallel design, subjective sleep loss assessments in VS trials can be compared indirectly, through meta-analysis, with those in drug trials. Trial design (parallel versus CO) influenced control effect sizes. Placebo pills in CO drug trials had significantly smaller effect sizes than sham pads in parallel randomized VS trials and significantly smaller effect sizes than placebo pills in parallel drug trials. CO trial designs in the study of subjective measures of sleep problems in RLS patients may be biased toward showing a treatment effect because patients may be able to discern treatment from placebo, causing control arms in CO trials to have little or no therapeutic effect.

Disclosure

Financial support for the study was provided by Sensory Medical, Inc, San Clemente, CA, USA. The author is the Chief Executive Officer of Sensory Medical, Inc, and a minority shareholder. The author reports no other conflicts of interest.

References

- Deyo RA, Walsh NE, Schoenfeld LS, Ramamurthy S. Can trials of physical treatments be blinded? The example of transcutaneous electrical nerve stimulation for chronic pain. Am J Phys Med Rehabil. 1990;69:6–10.

- Kaptchuk TJ, Goldman P, Stone DA, Stason WB. Do medical devices have enhanced placebo effects? J Clin Epidemiol. 2000;53:786–792.

- Kaptchuk TJ, Stason WB, Davis RB, et al. Sham device v inert pill: randomised controlled trial of two placebo treatments. BMJ. 2006;332:391–397.

- Burbank F, Buchfuhrer MJ, Kopjar B. Sleep improvement for restless legs syndrome patients. Part I: Pooled analysis of two prospective, double-blind, sham-controlled, multi-center, randomized clinical studies of the effects of vibrating pads on RLS symptoms. J Parkinsonism Restless Legs Syndrome. 2013;3:1–10.

- Burbank F, Buchfuhrer MJ, Kopjar B. Improving sleep for patients with restless legs syndrome. Part II. Meta-analysis of vibration therapy and drugs approved by the FDA for treatment of restless legs syndrome. J Parkinsonism Restless Legs Syndrome. 2013;3:11–22.

- Allen RP, Kosinski M, Hill-Zabala CE, Calloway MO. Psychometric evaluation and tests of validity of the Medical Outcomes Study 12-item Sleep Scale (MOS sleep). Sleep Med. 2009;10:531–539.

- Hays RD, Stewart AL. Sleep measures. In Stewart AL, Ware JE, Jr, editors. Measuring Functioning and Well-Being: The Medical Outcomes Study approach. Durham: Duke University Press; 1998:235–259.

- Hays RD, Martin SA, Sesti AM, Spritzer KL. Psychometric properties of the Medical Outcomes Study Sleep measure. Sleep Med. 2005;6:41–44.

- Burbank F. Sleep improvement for restless legs syndrome patients. Part III: Effects of treatment assignment belief on sleep improvement in restless legs syndrome patients. A mediation analysis. J Parkinsonism Restless Legs Syndrome. 2013;3:23–29.

- Ernst E. Belief can move mountains. Eval Health Prof. 2005;28:7–8.

- Fulda S, Wetter TC. Where dopamine meets opioids: a meta-analysis of the placebo effect in restless legs syndrome treatment studies. Brain. 2008;131:902–917.

- Morris SB, DeShon RP. Combining effect sizes in meta-analysis with repeated measures and independent groups designs. Psychol Methods. 2002;7:105–125.

- Hedges LV. Estimation of effect size from a series of independent experiments. Psychol Bull. 1982;92:490–499.

- Borenstein M, Hedges LV, Higgins J, Rothstein H. Introduction to Meta-Analysis. West Sussex, UK: John Wiley & Sons Ltd; 2009.

- Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–560.

- Hedges LV. Distribution theory for Glass’s estimator of effect size and related estimators. J Educ Stat. 1981;6:107–128.

- Oertel WH, Stiasny-Kolster K, Bergtholdt B, et al. Efficacy of pramipexole in restless legs syndrome: a six-week, multicenter, randomized, double-blind study (effect-RLS study). Mov Disord. 2007;22:213–219.

- Allen R, Becker PM, Bogan R, et al. Ropinirole decreases periodic leg movements and improves sleep parameters in patients with restless legs syndrome. Sleep. 2004;27:907–914.

- GlaxoSmithKline. Results summary for RRL100013. Available from: http://www.gsk-clinicalstudyregister.com/quick-search-list.jsp?item=100013&type=GSK+Study+ID&studyId=100013. Accessed November 8, 2013.

- GlaxoSmithKline. Results summary for ROP101892. Available from: http://www.gsk-clinicalstudyregister.com/quick-search-list.jsp?item=101892&type=GSK+Study+ID&studyId=101892. Accessed November 8, 2013.

- Bogan RK, Fry JM, Schmidt MH, Carson SW, Ritchie SY. Ropinirole in the treatment of patients with restless legs syndrome: a US-based randomized, double-blind, placebo-controlled clinical trial. Mayo Clin Proc. 2006;81:17–27.

- Stiasny-Kolster K, Benes H, Peglau I, et al. Effective cabergoline treatment in idiopathic restless legs syndrome. Neurology. 2004;63:2272–2279.

- Oertel WH, Benes H, Bodenschatz R, et al. Efficacy of cabergoline in restless legs syndrome: a placebo-controlled study with polysomnography (CATOR). Neurology. 2006;67:1040–1046.

- Boghen D, Lamothe L, Elie R, Godbout R, Montplaisir J. The treatment of the restless legs syndrome with clonazepam: a prospective controlled study. Can J Neurol Sci. 1986;13:245–247.

- Wetter TC, Stiasny K, Winkelmann J, et al. A randomized controlled study of pergolide in patients with restless legs syndrome. Neurology. 1999;52:944–950.

- Benes H, Kurella B, Kummer J, Kazenwadel J, Selzer R, Kohnen R. Rapid onset of action of levodopa in restless legs syndrome: a double-blind, randomized, multicenter, crossover trial. Sleep. 1999;22:1073–1081.

- GlaxoSmithKline. Results summary for 101468/190. Available from: http://www.gsk-clinicalstudyregister.com/quick-search-list.jsp?item=101468%2f190&type=GSK+Study+ID&studyId=101468%2f190. Accessed November 8, 2013.

- GlaxoSmithKline. Results summary for 101468/194. Available from: http://www.gsk-clinicalstudyregister.com/quick-search-list.jsp?item=101468%2f194&type=GSK+Study+ID&studyId=101468%2f194. Accessed November 8, 2013.

- Sensory Medical. NCT00877916 and NCT01145651 trial summaries. 2012. Available from: http://www.sensorymedical.com. Accessed March 29, 2012.

- Kales A, Manfredi RL, Vgontzas AN, Baldassano CF, Kostakos K, Kales JD. Clonazepam: sleep laboratory study of efficacy and withdrawal. J Clin Psychopharmacol. 1991;11:189–193.

- Ondo WG, Dat Vuong K, Khan H, Atassi F, Kwak C, Jankovic J. Daytime sleepiness and other sleep disorders in Parkinson’s disease. Neurology. 2001;57:1392–1396.