Abstract

Background

Patient satisfaction is crucial for the acceptance and use of teleconsultation and triage services for health care requests and for adherence to the resulting recommendations.

Objectives

Our objectives are to systematically review the literature for multidimensional instruments that measure patient satisfaction after teleconsultation and triage and to compare these for content, reliability, validity, and factor analysis.

Methods

We searched Medline, the Cumulative Index to Nursing and Allied Health Literature, and PsycINFO for literature on these instruments. Two reviewers independently screened all obtained references for eligibility and extracted data from the eligible articles. The results were presented using summary tables.

Results

We included 31 publications, describing 16 instruments, in our review. The reporting on test development and psychometric characteristics was incomplete. The development process, described for ten of the 16 instruments, included a review of the literature (n=7), patient or stakeholder interviews (n=5), and expert consultations (n=3). Four instruments evaluated factor structure, reliability, and validity; two of those four demonstrated low levels of reliability for some of their subscales.

Conclusion

A majority of instruments on patient satisfaction after teleconsultation showed methodological limitations and lacked rigorous evaluation. Users should carefully reflect on the content of the questionnaires and their relevance to the application. Future research should apply established scientific standards for instrument development and psychometric evaluation more rigorously.

Introduction

In recent years, telephone consultation and triage have gained popularity as a means for health care delivery.Citation1,Citation2 Teleconsultations and triage refer to “the process where calls from people with a health care problem are received, assessed, and managed by giving advice or via a referral to a more appropriate service.”Citation3 The main motive for introducing such services was to help callers to self-manage their health problems and to reduce unnecessary demands on other health care services. Teleconsultation and triage are frequently used in the context of out-of-hours primary care services.Citation4 They result in the counseling of patients about the appropriate level of care (general practitioner, specialized physician, other health care providers, [such as therapists], or hospital care), the appropriate time-to-treat (ranging from emergency care to seeking an appointment within a few weeks), or the potential for self-care. Several randomized controlled trials showed that teletriage is safe and effective,Citation5–Citation7 and a systematic review suggested that at least one-half of the calls can be handled by telephone advice alone.Citation8

The patients’ opinions on the quality of such services are crucial for their acceptance, use, and adherence to the recommendations resulting from the teleconsultation.Citation9,Citation10 Instruments to measure patient satisfaction have been developed for a broad range of settings. However, these instruments cannot easily be transferred into the teleconsultation setting, which systematically differs in two respects: 1) decisions in teleconsultation and triage rely heavily on medical history-taking as the main – and sometimes only – diagnostic tool, so excellent communication and history-taking skills are crucial in this setting; 2) teleconsultation and triage services generally relate to the appearance of new health problems and less frequently address long-term management for which patients usually attend face-to-face care.Citation1

Patient satisfaction is a multidimensional construct.Citation11,Citation12 Global indices (single-item instruments) have been shown to be unreliable for the measurement of patient satisfaction in health care and to disguise the fact that judgments on different aspects of care may vary.Citation10,Citation13 Instruments assessing patient satisfaction after teleconsultation and triage need to cover the perceived quality of the communication skills, of the telephone advice (eg, helpfulness and feasibility of the recommendation), and of the organizational issues of the service, such as access or waiting time.Citation10 In a previous review, methodological issues related to the measurement of patient satisfaction with health care were systematically collected.Citation10 Several problems were addressed, such as how different ways of conducting surveys affect response rates and consumers’ evaluations. However, the review did not include detailed information on patient satisfaction questionnaires, nor did it give specific recommendations related to questionnaire use. A more recent systematic review in 2007 on patient satisfaction with primary care out-of-hours services presented four questionnaires, all with important limitations in their development and evaluation process.Citation4

However, out-of-hours care is only a small part of teleconsultation and triage services. Furthermore, none of the previous reviews explicitly followed up on research that modified and reevaluated existing instruments. Therefore, the aim of our study was to systematically review the scientific literature for multidimensional instruments that measure patient satisfaction after teleconsultation and triage for a health problem and to compare their development process, content, and psychometric properties.

Methods

Literature search

We searched Medline, the Cumulative Index to Nursing and Allied Health Literature, and PsycINFO (query date of January 31, 2013) for relevant literature. The search terms were related to “patient satisfaction”, “questionnaire”, and “triage”. We reviewed the reference lists of all publications included in the final review for relevant articles. Furthermore, we searched the Internet for additional material, in particular for follow-up research on and refinements of the included instruments, using the authors’ names and the names of the instruments.

Study selection and data collection process

The pool of potentially relevant articles identified via databases, reference lists, and Internet searches was evaluated in detail regarding whether or not the articles were original research articles, whether or not they described instruments for assessing patient satisfaction after an encounter between a health professional and a patient or the patient’s proxy over the phone, and whether or not they were intended for self-administered or interviewer-administered use.Citation14 As we were interested in multidimensional instruments, we excluded global indices (single-item measures). We included telephone and video consultations, as well as out-of-hours services that performed triage by phone. Out-of-hours services were defined as any request for medical care on public holidays, Sundays, and at a defined time on weekdays and Saturdays (for example, weekdays from 7 pm to 7 am and Saturdays from 1 pm onward). We included studies that reported the development of the instrument (called “development studies”) and studies that applied the instrument for outcome assessment (called “outcome studies”). We did not apply any language restriction. Two reviewers (MAI, EB) independently screened the references for eligibility, extracted the data, and allocated the instrument items to the predefined domains. Discrepancies were resolved by consensus.

Table 1 Characteristics of the identified publications

Data extraction and analysis

We extracted the following information:

Descriptive information: author; year of publication; country of origin; setting; staff providing the service; type of administration of the questionnaire; participants; and timing of administering the instrument after the encounter.

Instrument content: number of items per domain; number of domains covered per study; total number of items; mean items per domain; number of studies that covered a certain domain with at least one question.

Table 2 Instrument content (related to teleconsultation)

Details of the development process: such as literature review, consultation with experts, consensus, focus group meetings, or individual interviews; piloting; and rating scale.

Table 3 Descriptive information of the instruments

Recruitment strategy and handling of nonresponders: inclusion and exclusion criteria; consecutive recruitment of patients; response rate; and nonresponse analysis.

Table 4 Recruitment strategy and handling of nonresponders

Psychometric properties: item nonresponse; factor structure; reliability (ie, interrater, test/retest, intermethod, and internal consistency reliability); and validity (ie, construct, content, criterion validity).

Table 5 Psychometric properties

The data were tabulated and summarized in a descriptive way.

First, we listed all primary studies and extracted basic information. Outcome studies that evaluated the same instrument in various settings and populations were grouped under the corresponding development study. When several studies referred to the same instrument, we used the development study to extract data for the following steps.

Second, we analyzed the content domains of the instruments. Based on a systematic review, published by Garratt et al, we created a list of nine domains (access to the service, attitude of health professional, attitude of patient, perceived quality of the communication, individual information [such as sociodemographic or clinical patient data], management [such as waiting time], overall satisfaction, perceived quality of professional skills of the staff, perceived quality of the telephone advice [such as helpfulness and feasibility of the recommendation]), and other.Citation4 Two reviewers independently attributed each item of the instruments to one domain. The aim of this procedure was to describe, to characterize, and to compare the content of patient satisfaction instruments for which no factor-analysis results were reported. We did not use these dimensions as a prerequisite for instruments to be included in our review.
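The content tabulation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used in the review: the function names, the shortened domain labels, and the two example instruments are hypothetical, and items attributed to "other" are counted in an instrument's total but not toward the nine domains.

```python
from collections import Counter

# The nine predefined content domains (labels shortened for readability).
DOMAINS = [
    "access to the service",
    "attitude of health professional",
    "attitude of patient",
    "communication",
    "individual information",
    "management",
    "overall satisfaction",
    "professional skills",
    "telephone advice",
]


def summarize_instrument(item_domains):
    """Summarize one instrument, given the domain assigned to each item."""
    counts = Counter(d for d in item_domains if d in DOMAINS)
    n_items_in_domains = sum(counts.values())
    n_domains = len(counts)
    return {
        "total_items": len(item_domains),
        "domains_covered": n_domains,
        "mean_items_per_covered_domain": (
            n_items_in_domains / n_domains if n_domains else 0.0
        ),
    }


def domain_coverage(instruments):
    """Count how many instruments cover each domain with at least one item."""
    coverage = Counter()
    for item_domains in instruments.values():
        coverage.update(set(item_domains) & set(DOMAINS))
    return coverage


# Hypothetical item-to-domain attributions for two made-up instruments.
instruments = {
    "Instrument A": [
        "communication",
        "communication",
        "overall satisfaction",
        "telephone advice",
    ],
    "Instrument B": ["communication", "access to the service", "other"],
}

for name, item_domains in instruments.items():
    print(name, summarize_instrument(item_domains))
print(domain_coverage(instruments))
```

Keeping the per-item attribution as the raw data means that every summary figure (items per domain, domains per instrument, instruments per domain) is derived from the same source and can be recomputed once the two reviewers have resolved a discrepancy.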

Third, we explored the development process of the instrument, the scoring scheme of the instrument, and whether the instrument had been piloted. When we identified only one study for an instrument, we extracted the data from this publication, regardless of whether it was a development study or an outcome study.

Fourth, we assessed the recruitment strategy and handling of nonresponders in those publications that reported statistical results for psychometric properties. Because this type of information is mainly useful for interpreting the statistical results, we did not detail the recruitment strategy and handling of nonresponders for studies that did not report on factor structure, reliability, or validity.

Fifth, we tabulated any type of psychometric property that we identified in any type of publication. For the interpretation of Cronbach’s alpha values, an estimate of the reliability of an instrument, we used the categories: excellent (>0.9); good (0.8–0.9); acceptable (0.7–0.8); questionable (0.6–0.7); poor (0.5–0.6); and unacceptable (<0.5).Citation15 An item-total correlation of <0.3 was considered poor, indicating that the corresponding item does not correlate well with the overall scale.Citation16
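The interpretation rules above can be illustrated with a short Python sketch. It is a hedged example rather than the analysis code of any reviewed study: it computes Cronbach's alpha from the standard formula, maps a value to the categories listed above, and flags items whose item-total correlation is below 0.3. The function names and the response data are hypothetical. Two further assumptions are that boundary values fall into the higher category (except that exactly 0.9 is rated "good", because "excellent" requires >0.9), and that the item-total correlation is the corrected form, in which each item is correlated with the sum of the remaining items.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha; scores is a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def classify_alpha(alpha):
    """Map an alpha value to the categories used in this review (boundaries assumed)."""
    if alpha > 0.9:
        return "excellent"
    if alpha >= 0.8:
        return "good"
    if alpha >= 0.7:
        return "acceptable"
    if alpha >= 0.6:
        return "questionable"
    if alpha >= 0.5:
        return "poor"
    return "unacceptable"


def pearson(x, y):
    """Pearson correlation coefficient (undefined if either variable is constant)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def item_total_correlations(scores):
    """Corrected item-total correlation: each item against the sum of the other items."""
    k = len(scores[0])
    result = []
    for i in range(k):
        item = [row[i] for row in scores]
        rest = [sum(row) - row[i] for row in scores]
        result.append(pearson(item, rest))
    return result


# Hypothetical responses: five respondents, three items on a 1-5 scale.
responses = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [1, 2, 1], [3, 3, 3]]

alpha = cronbach_alpha(responses)
print(round(alpha, 2), classify_alpha(alpha))

poor_items = [i for i, r in enumerate(item_total_correlations(responses)) if r < 0.3]
print("items with poor item-total correlation:", poor_items)
```

The sample variance (denominator n-1) is used for both the item variances and the total-score variance; the choice cancels out in the ratio as long as it is applied consistently.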

Results

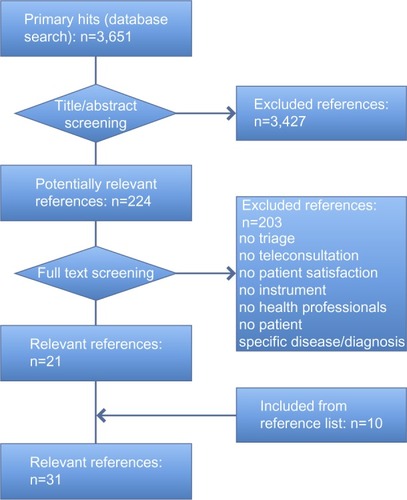

Our search identified 3,651 references. We screened 224 full-text publications for eligibility and, ultimately, included 31 studies – with a total of 17,797 patients – that reported on 16 different multidimensional instruments on patient satisfaction after teleconsultation and triage. All but one of the articles were published in English; the remaining article was published in Swedish.Citation17

Basic information

The instruments were developed in seven different countries: five instruments derived from the United Kingdom;Citation6,Citation18–Citation21 four instruments from the United States;Citation22–Citation25 two from Sweden;Citation17,Citation26 two from the Netherlands;Citation27,Citation28 and one instrument from each of Australia,Citation29 France,Citation30 and Norway.Citation31 Seven of the 16 instruments (44%) were used by subsequent studies.Citation17,Citation18,Citation20,Citation21,Citation23,Citation25,Citation27 The most frequently used instrument, the McKinley 1997 questionnaire,Citation20 was applied in six subsequent studiesCitation32–Citation37 and served as a basis for a shortened scale.Citation21

In most studies (14 of 16 instruments, 88%), the questionnaires were distributed by email or in paper form for self-administration.Citation6,Citation17–Citation19,Citation21–Citation28,Citation30,Citation31 In three studies, both a self-administered and an interviewer-administered version were used.Citation20,Citation23,Citation25 The number of respondents per study varied between 20 and 3,294 persons. Eighteen of the 31 publications (58%) applied instruments in the context of out-of-hours services, where centers triage patients from several general practices or a specific region.Citation18–Citation21,Citation27–Citation29,Citation31–Citation41

Eight publications described patient satisfaction after the consultation provided by the teleconsultation centers.Citation17,Citation19,Citation25,Citation26,Citation39,Citation42–Citation44 Other settings included: the management of same-day appointments;Citation6 the provision of teleconsultation services by physicians outside of specialized telemedicine institutions;Citation19,Citation23,Citation45,Citation46 maritime telemedicine;Citation30 prison medicine;Citation24 and teledermatology services.Citation23 The timing of instrument administration varied considerably across the studies. Sixteen publications (52%) reported the distribution of the questionnaires within 7 days of the consultation,Citation6,Citation19–Citation24,Citation27,Citation32–Citation34,Citation36,Citation37,Citation40–Citation42 seven studies (23%) between 14–28 days,Citation17,Citation18,Citation26,Citation28,Citation38,Citation39,Citation44 and one publication (3%) reported a latency of 4–16 months.Citation30 Seven studies (23%) did not report on the timing of the instrument’s administration.Citation25,Citation29,Citation35,Citation43,Citation45,Citation46

Content of the instrument

We assessed the content of the instrument on nine prespecified domains. On average, an instrument covered five domains (range, three to nine) with 14 items per instrument (range, three to 37), and 2.3 items per domain (range, one to 15).

The most frequent domains, covered with at least one item, were the “perceived quality of the communication” (14 of 16 instruments, 88%),Citation6,Citation17–Citation20,Citation22,Citation24–Citation31 followed by the “overall satisfaction” (12 of 16 instruments, 75%),Citation17,Citation18,Citation20–Citation26,Citation28–Citation31 and the “perceived quality of the telephone advice” (12 of 16 instruments, 75%).Citation6,Citation17–Citation21,Citation25–Citation31 The following additional domains were covered by more than one-half of the instruments: the “attitude of the health professional;” the “attitude of the patient;” “management;” and “professional skills.” This indicated a focus of interest across the different instrument development teams. Only one instrument covered all nine domains.Citation18

The instruments varied widely in the number of items they included per domain. Seven instruments included mostly one or two items per domain,Citation6,Citation17,Citation19,Citation21–Citation23,Citation26 whereas the instrument at the top end included a mean of 4.1 items per domain.Citation18

Development process

Only ten of the 16 instruments (63%) provided details about the development process, such as a review of the literature (n=7), interviews of patients or stakeholders (n=5), or consultations with experts (n=3).Citation18,Citation20,Citation21,Citation24–Citation28,Citation30,Citation31 Seven studies reported the use of more than one method.Citation18,Citation20,Citation21,Citation26–Citation28,Citation31 Eleven of 16 studies (69%) piloted the instrument.Citation18–Citation22,Citation25–Citation27,Citation29–Citation31 Likert scales were predominantly used for the scoring (seven of 16 instruments, 44%). Other rating modes included yes/no options (n=2), categorical answers (n=2), numerical rating scales (n=3), visual analog scale (n=1), or smiley faces (n=1). One instrument included open-ended questions.Citation30

Recruitment strategy and handling of nonresponders

Nine studiesCitation18,Citation20,Citation21,Citation24,Citation26–Citation28,Citation31,Citation42 gave information about their psychometric properties; therefore, their recruitment strategy and handling of nonresponders are further evaluated here. Inclusion criteria were comparable, as all studies addressed unselected patients who had received teleconsultation and triage services.

All but three publications explicitly reported the consecutive recruitment of patients.Citation24,Citation25,Citation31 The exclusion criteria (five of nine studies, 56%) were related to the feasibility of the study (for example, wrong address, serious illness of the patient).Citation18,Citation21,Citation26–Citation28 The mean response rate was 60% and ranged from 38%Citation46 to 100%.Citation42

The nonresponse analysis in four of nine studies (44%) detected sociodemographic but no clinical differences between the studies’ responders and nonresponders. However, these analyses were conflicting. One study reported respondents to be older and more affluent without any differences in sex.Citation18 In two studies, the response rates were lower in men invited to participate.Citation28,Citation46 In a fourth study, women and young adults were less likely to participate.Citation27 Forgetfulness was identified as the most frequent reason for nonresponse.Citation27,Citation28

Psychometric properties

For nine instruments, at least some information about the main psychometric properties was reported: item nonresponse; factor structure; reliability/internal consistency; and validity.

Item nonresponse: six of the nine studies (67%) reported on nonresponses.Citation18,Citation20,Citation21,Citation24,Citation27,Citation31 In some studies, item nonresponse was more problematic than in others. For example, one study reported complete data from only 43% of the respondents,Citation21 while nonresponse rates for individual items ranged from a few percent up to about one-fifth of the respondents.Citation18,Citation27,Citation31

Factor structure: seven of the nine studies (78%) reported factor structures from a formal factor or principal component analysis,Citation18,Citation20,Citation24,Citation26–Citation28,Citation31 with a multifactorial structure and a median of 3.3 factors (range one to six) related to teleconsultation per instrument. The factors related to: communication (“interaction,” “satisfaction with communication and management,” “information exchange,” n=5); overall satisfaction (n=3); management (“delay until visit,” “initial contact person,” “service,” n=3); access to service (n=2); attitude of health professional (n=2); telephone advice (“product,” n=1); and individual information (“urgency of complaint;” n=1). The correlation between the number of items and the resulting number of factors was low (r=0.16). For instance, one instrument with 37 itemsCitation18 identified only two factors that explained 72% of the variance, whereas another instrument with 20 itemsCitation20 reported a structure with six factors, which explained 61% of the variance.
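How such an item-factor correlation is computed can be shown with a brief Python sketch. It is an illustration only: the function name is hypothetical, and the input uses just the four item-count and factor-count pairs that are stated explicitly in this article (37 items with two factors, 20 with six, ten with four, and 22 with six). Because the reported coefficient was based on all seven instruments with a factor analysis, the value obtained from these four pairs is not expected to match r=0.16.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Only the four instruments whose item and factor counts are both given in the text;
# this is a subset, so the result differs from the coefficient reported in the review.
n_items = [37, 20, 10, 22]
n_factors = [2, 6, 4, 6]

r = pearson(n_items, n_factors)
print(f"r = {r:.2f} (n = {len(n_items)} instruments)")
```

With so few instruments, a coefficient of this kind is very sensitive to single data points, which is one reason to read it as descriptive rather than inferential.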

Reliability measures: all nine instruments provided reliability measures – one study for both the total scale and the subscales; two studies for the total scale; and the remaining studies for the subscales only. The Cronbach’s alpha values for the total scales were acceptable,Citation42 good,Citation26 or excellent.Citation21 Cronbach’s alpha values for most of the factor subscales were above 0.7. However, three of the seven studies that evaluated the reliability of the subscales revealed questionableCitation20,Citation28 and unacceptableCitation26 Cronbach’s alpha values for individual subscales. One study provided results for inter-item correlation with correlation coefficients ranging from 0.45–0.89, indicating a good internal consistency of the scale.Citation18 Three studies additionally reported item-total correlations, which ranged from 0.53–0.92, supporting the internal consistency of these instruments.Citation18,Citation27,Citation31 Three publications investigated the test/retest reliability and reported correlation coefficients for subscales of >0.7, which are considered satisfactory or better.Citation18,Citation20,Citation27 For single subscales, however, correlation coefficients were <0.7, indicating limitations in test/retest reliability.Citation18,Citation20

Validity measures: in five of the eight instruments (63%) the scales correlated well with related constructs indicating construct validity. For example, higher scores correlated with simple measures of overall satisfaction.Citation18,Citation20,Citation21,Citation28,Citation31 Other scales correlated well with the patients’ ages, the duration of the consultation, difficulties in contact by phone, waiting times, the amount of information received during the teleconsultation, the fulfillment of expectations or the transfer to a face-to-face visit. One study examined the convergent validity and found that sub-scores of the instrument were moderately correlated.Citation18 Only one of eight studies (13%) investigated the concurrent validity by comparing a shortened scale with the original instrument and reported modest intraclass correlation coefficients of 0.38–0.54.Citation21

Discussion

This systematic review reports on 16 instruments used for the multidimensional assessment of 17,797 patients, regarding patient satisfaction after teleconsultation and triage for a health problem. The review identified four instruments with comprehensive information on their development and psychometric properties.Citation18,Citation20,Citation28,Citation31

The selection of the most appropriate instrument will probably depend on the purpose of the instrument – whether it is intended for routine assessments after a consultation, for periodic application as a quality control measure, or as a research instrument. For example, a 37-item instrument demonstrated good internal consistency and an indication of validity. However, the proportion of missing responses was very large for some items; the test/retest reliability may have been limited, and the instrument had only two factors.Citation18 This instrument may be selected for research purposes or for routine assessments, if multidimensionality is not the main focus of the evaluation. A ten-item instrument, in contrast, showed four factors, good internal consistency, and construct validity (without evaluating the test/retest reliability).Citation31 Due to its brevity and test evaluation results, this instrument may be suitable for most purposes. The most frequently used instrument (20 items) demonstrated high item-completion rates, a six-factorial structure, and construct validity. However, several subscales only had a very limited internal consistency.Citation20 An alternative 22-item instrument with a six-factor structure also showed construct validity, with a questionable internal consistency of one subscale and without information on the item completion rates.Citation28 However, both instruments may be selected if the multidimensionality of patient satisfaction assessment is of the utmost importance.

As only seven instruments used a formal factor analysis to identify the relevant underlying constructs, we applied a pragmatic approach for attributing the content of the remaining nine instruments to a list of domains from a systematic review.Citation31 This methodology confirmed the most frequently detected domains from the factor analysis (“communication,” “overall satisfaction,” and “management”) and identified additional domains as relevant for users. These are: “perceived quality of the telephone advice;” “attitude of health professional;” “attitude of patient;” and “professional skills.” Depending on their specific interests, the coverage of these domains may be an additional criterion for users to select any of those instruments.

Although most of the instruments had been developed over the last decade – a decade with an increased awareness for the need of methodological rigor in psychometric instrument development and testingCitation47 – many studies lacked details on the development process, had minimal information on the instruments’ reliability, and only one-half of the instruments presented the validity of the existing scales. Factor structure, reliability, and validity were only reported for one-quarter of the instruments. No study evaluated the extent to which a score on the instrument predicts the associated outcome measures (predictive validity), which would allow conclusions about the patients’ adherence to the recommendations or the health service use.Citation14 The recruitment strategy and handling of nonresponders were comparable across the studies.

In his systematic review of patient satisfaction questionnaires for out-of-hours care in 2007, Garratt identified four instruments that reported some data on reliability and validity;Citation20,Citation21,Citation27,Citation28 all were included in this review.Citation4 Garratt concluded that all of those studies had limitations regarding their development process and their evaluation of psychometric properties. Even though several years have passed, our review has to confirm these limitations. Despite extensive searching, we did not find any attempts to further modify, reevaluate, and improve the instruments with limited reliability or redundant items – except in one study. That study reduced a 38-item questionnaireCitation20 to a shorter version with only eight items.Citation21 Six of the 16 instruments identified in this review were published in subsequent years.Citation17,Citation18,Citation22,Citation26,Citation30,Citation31 Of these, three instruments reported both methodological and psychometric data, two of which provide evidence of acceptable reliability and validity.Citation18,Citation31

Measuring patient satisfaction after teleconsultation and triage is a challenging endeavor. The assessment needs to focus on the quality of the service without being contaminated by the actions of subsequent health care providers or the severity and the natural course of the health problem. For instance, timing the administration of the questionnaire can be crucial. In the review, the delivery of the questionnaire ranged from immediate inquiries to a latency of up to 16 months postconsultation. There is conflicting evidence regarding the degree to which the timing of administration may confound the measurement of patient satisfaction. Previous work suggests that a potential timing effect depends on the health status of patients and the initial problem they sought help for.Citation10 Applied to our review, this would suggest that the optimal timing would be relatively shortly after the teleconsultation (ie, <1 week), as longer time intervals may increase memory problems for details of the teleconsultation, and the course of the medical problem may confound the perceived quality of the encounter.

Our review is based on a comprehensive literature search that included expert contacts and no language restrictions. Study selection, quality assessment, and data extraction with pretested forms – performed independently by two researchers – limited bias and transcription errors. Our ad hoc analysis of the instruments without formal factor analysis confirmed the domains identified in the studies with a formal factor analysis, and it also identified other relevant domains with face validity. Our review was limited to instruments published in scientific journals. However, more instruments are likely to be in use. A recent survey among medical academic centers in the USA revealed a frequent use of internal instruments.Citation48 However, if these internal instruments had been thoroughly developed and formally evaluated, we assume they would have been published in a scientific journal.

If the measurement results are to be used for a comparison of different teleconsultation centers or of physicians within these centers or to demonstrate improvements in patient satisfaction over time, the instruments must undergo rigorous development and evaluation processes. Presently, this is the case for only a minority of these instruments. For example, the Patient-Reported Outcome Measurement Information System (PROMIS) instruments’ development and psychometric scientific standards provide a set of criteria for the development and evaluation of psychometric tests.Citation49 Specifically, this includes reporting on the details of the development process, including the definition of the target concept and the conceptual model, the testing of reliability and validity parameters, and the reevaluations after potential refinements of the initial instrument. High-quality multidimensional assessment instruments should be used consistently in future trials to generate valid and comparable evidence of patient satisfaction with teleconsultation. This also includes a follow-up on patient satisfaction over time.

Conclusion

The status of appraisal of the instruments for measuring patient satisfaction after teleconsultation and triage – identified in the present systematic review – varies from comprehensive test evaluations to fragmentary and even missing data on factor structure, reliability, and validity. This review may serve as a starting point for selecting the instrument that best suits the intended purpose in terms of content and context. It offers pooled information and methodological advice to instrument developers with an interest in developing the long-needed assessment instrument.

Acknowledgments

We would like to thank Stefan Posth, Odense, Denmark, and Philipp Kubens, Freiburg, Germany, for their translation of Danish and Swedish article information.

Supplementary material

Table S1 Medline search algorithm

Disclosure

MAI and EB are employees of a private institution providing teleconsultation and triage. RK reports no conflict of interest in this work.

References

- ahrq.gov [homepage on the Internet]. Telemedicine for the Medicare population: update. Agency for Healthcare Research and Quality (AHRQ) [updated February 2006]. Available from: http://www.ahrq.gov/downloads/pub/evidence/pdf/telemedup/telemedup.pdf. Accessed October 16, 2013.

- Deshpande A, Khoja S, McKibbon A, Jadad AR. Real-Time (Synchronous) Telehealth in Primary Care: Systematic Review of Systematic Reviews. Ottawa, Canada: Canadian Agency for Drugs and Technologies in Health; 2008. Available from: http://www.cadth.ca/media/pdf/427A_Real-Time-Synchronous-Telehealth-Primary-Care_tr_e.pdf

- Nurse telephone triage in out of hours primary care: a pilot study. South Wiltshire Out of Hours Project (SWOOP) Group. BMJ. 1997;314(7075):198–199. PMID: 9022437.

- Garratt AM, Danielsen K, Hunskaar S. Patient satisfaction questionnaires for primary care out-of-hours services: a systematic review. Br J Gen Pract. 2007;57(542):741–747. PMID: 17761062.

- Lattimer V, George S, Thompson F. Safety and effectiveness of nurse telephone consultation in out of hours primary care: randomised controlled trial. The South Wiltshire Out of Hours Project (SWOOP) Group. BMJ. 1998;317(7165):1054–1059. PMID: 9774295.

- McKinstry B, Walker J, Campbell C, Heaney D, Wyke S. Telephone consultations to manage requests for same-day appointments: a randomised controlled trial in two practices. Br J Gen Pract. 2002;52(477):306–310. PMID: 11942448.

- Thompson F, George S, Lattimer V. Overnight calls in primary care: randomised controlled trial of management using nurse telephone consultation. BMJ. 1999;319(7222):1408. PMID: 10574857.

- Bunn F, Byrne G, Kendall S. Telephone consultation and triage: effects on health care use and patient satisfaction [review]. Cochrane Database Syst Rev. 2004;(4):CD004180. PMID: 15495083.

- Kraai IH, Luttik ML, de Jong RM, Jaarsma T, Hillege HL. Heart failure patients monitored with telemedicine: patient satisfaction, a review of the literature. J Card Fail. 2011;17(8):684–690. PMID: 21807331.

- Crow R, Gage H, Hampson S. The measurement of satisfaction with healthcare: implications for practice from a systematic review of the literature. Health Technol Assess. 2002;6(32):1–244. PMID: 12925269.

- Ware JE Jr, Snyder MK, Wright WR, Davies AR. Defining and measuring patient satisfaction with medical care. Eval Program Plann. 1983;6(3–4):247–263. PMID: 10267253.

- Linder-Pelz S, Struening E. The multidimensionality of patient satisfaction with a clinic visit. J Community Health. 1985;10(1):42–54. PMID: 4019824.

- Locker D, Dunt D. Theoretical and methodological issues in sociological studies of consumer satisfaction with medical care. Soc Sci Med. 1978;12(4A):283–292. PMID: 675282.

- Nunnally JC, Bernstein IH. Psychometric Theory. 3rd ed. New York, NY: McGraw Hill; 1994.

- Kline P. The Handbook of Psychological Testing. 2nd ed. London: Routledge; 1999.

- Everitt BS. The Cambridge Dictionary of Statistics. 2nd ed. Cambridge, MA: University Press; 2002.

- Rahmqvist M, Husberg M. Effekter av sjukvårdsrådgivning per telefon: En analys av rådgivningsverksamheten 1177 i Östergötland och Jämtland. CMT Rapport. 2009;3.

- Campbell JL, Dickens A, Richards SH, Pound P, Greco M, Bower P. Capturing users’ experience of UK out-of-hours primary medical care: piloting and psychometric properties of the Out-of-hours Patient Questionnaire. Qual Saf Health Care. 2007;16(6):462–468. PMID: 18055892.

- Dixon RA, Williams BT. Patient satisfaction with general practitioner deputising services. BMJ. 1988;297(6662):1519–1522. PMID: 3147058.

- McKinley RK, Manku-Scott T, Hastings AM, French DP, Baker R. Reliability and validity of a new measure of patient satisfaction with out of hours primary medical care in the United Kingdom: development of a patient questionnaire. BMJ. 1997;314(7075):193–198. PMID: 9022436.

- Salisbury C, Burgess A, Lattimer V. Developing a standard short questionnaire for the assessment of patient satisfaction with out-of-hours primary care. Fam Pract. 2005;22(5):560–569. PMID: 15964865.

- Dixon RF, Stahl JE. A randomized trial of virtual visits in a general medicine practice. J Telemed Telecare. 2009;15(3):115–117. PMID: 19364890.

- Hicks LL, Boles KE, Hudson S. Patient satisfaction with teledermatology services. J Telemed Telecare. 2003;9(1):42–45. PMID: 12641892.

- Mekhjian H, Turner JW, Gailiun M, McCain TA. Patient satisfaction with telemedicine in a prison environment. J Telemed Telecare. 1999;5(1):55–61. PMID: 10505370.

- Moscato SR, David M, Valanis B. Tool development for measuring caller satisfaction and outcome with telephone advice nursing. Clin Nurs Res. 2003;12(3):266–281. PMID: 12918650.

- Ström M, Baigi A, Hildingh C, Mattsson B, Marklund B. Patient care encounters with the MCHL: a questionnaire study. Scand J Caring Sci. 2011;25(3):517–524. PMID: 21338380.

- Moll van Charante E, Giesen P, Mokkink H. Patient satisfaction with large-scale out-of-hours primary health care in The Netherlands: development of a postal questionnaire. Fam Pract. 2006;23(4):437–443. PMID: 16641129.

- van Uden CJ, Ament AJ, Hobma SO, Zwietering PJ, Crebolder HF. Patient satisfaction with out-of-hours primary care in the Netherlands. BMC Health Serv Res. 2005;5(1):6. PMID: 15651997.

- Keatinge D, Rawlings K. Outcomes of a nurse-led telephone triage service in Australia. Int J Nurs Pract. 2005;11(1):5–12. PMID: 15610339.

- Dehours E, Vallé B, Bounes V. User satisfaction with maritime telemedicine. J Telemed Telecare. 2012;18(4):189–192. PMID: 22604271.

- Garratt AM, Danielsen K, Forland O, Hunskaar S. The Patient Experiences Questionnaire for Out-of-Hours Care (PEQ-OHC): data quality, reliability, and validity. Scand J Prim Health Care. 2010;28(2):95–101. PMID: 20433404.

- Salisbury C. Postal survey of patients’ satisfaction with a general practice out of hours cooperative. BMJ. 1997;314(7094):1594–1598. PMID: 9186172.

- Shipman C, Payne F, Hooper R, Dale J. Patient satisfaction with out-of-hours services; how do GP co-operatives compare with deputizing and practice-based arrangements? J Public Health Med. 2000;22(2):149–154. PMID: 10912552.

- McKinley RK, Roberts C. Patient satisfaction with out of hours primary medical care. Qual Health Care. 2001;10(1):23–28. PMID: 11239140.

- McKinley RK, Stevenson K, Adams S, Manku-Scott TK. Meeting patient expectations of care: the major determinant of satisfaction with out-of-hours primary medical care? Fam Pract. 2002;19(4):333–338. PMID: 12110550.

- Glynn LG, Byrne M, Newell J, Murphy AW. The effect of health status on patients’ satisfaction with out-of-hours care provided by a family doctor co-operative. Fam Pract. 2004;21(6):677–683. PMID: 15528288.

- Thompson K, Parahoo K, Farrell B. An evaluation of a GP out-of-hours service: meeting patient expectations of care. J Eval Clin Pract. 2004;10(3):467–474. PMID: 15304147.

- Campbell J, Roland M, Richards S, Dickens A, Greco M, Bower P. Users’ reports and evaluations of out-of-hours health care and the UK national quality requirements: a cross sectional study. Br J Gen Pract. 2009;59(558):e8–e15. PMID: 19105911.

- Kelly M, Egbunike JN, Kinnersley P. Delays in response and triage times reduce patient satisfaction and enablement after using out-of-hours services. Fam Pract. 2010;27(6):652–663. PMID: 20671002.

- Giesen P, Moll van Charante E, Mokkink H, Bindels P, van den Bosch W, Grol R. Patients evaluate accessibility and nurse telephone consultations in out-of-hours GP care: determinants of a negative evaluation. Patient Educ Couns. 2007;65(1):131–136. PMID: 16939708.

- Smits M, Huibers L, Oude Bos A, Giesen P. Patient satisfaction with out-of-hours GP cooperatives: a longitudinal study. Scand J Prim Health Care. 2012;30(4):206–213. PMID: 23113756.

- Beaulieu R, Humphreys J. Evaluation of a telephone advice nurse in a nursing faculty managed pediatric community clinic. J Pediatr Health Care. 2008;22(3):175–181. PMID: 18455066.

- Reinhardt AC. The impact of work environment on telephone advice nursing. Clin Nurs Res. 2010;19(3):289–310. PMID: 20601639.

- Rahmqvist M, Ernesäter A, Holmström I. Triage and patient satisfaction among callers in Swedish computer-supported telephone advice nursing. J Telemed Telecare. 2011;17(7):397–402. PMID: 21983224.

- López C, Valenzuela JI, Calderón JE, Velasco AF, Fajardo R. A telephone survey of patient satisfaction with realtime telemedicine in a rural community in Colombia. J Telemed Telecare. 2011;17(2):83–87. PMID: 21139016.

- McKinstry B, Hammersley V, Burton C. The quality, safety and content of telephone and face-to-face consultations: a comparative study. Qual Saf Health Care. 2010;19(4):298–303. PMID: 20430933.

- Cella D, Yount S, Rothrock N; PROMIS Cooperative Group. The Patient-Reported Outcomes Measurement Information System (PROMIS): progress of an NIH Roadmap cooperative group during its first two years. Med Care. 2007;45(5 Suppl 1):S3–S11. PMID: 17443116.

- Dawn AG, Lee PP. Patient satisfaction instruments used at academic medical centers: results of a survey. Am J Med Qual. 2003;18(6):265–269. PMID: 14717384.

- National Institutes of Health. PROMIS Instrument Development and Validation Scientific Standards. Silver Spring, MD: National Institutes of Health; 2013. Available from: http://www.nihpromis.org/Documents/PROMISStandards_Vers2.0_Final.pdf. Accessed October 16, 2013.