Abstract

Background

The emergence of new technologies, such as artificial intelligence (AI), may provoke technology panic in some people, including adolescents, who may be particularly vulnerable to new technologies; a common fear is that AI use can lead to AI dependence, which may threaten mental health. Although the relationship between AI dependence and mental health is a growing topic, the few existing studies are mainly cross-sectional or qualitative and therefore cannot establish the longitudinal relationship between them. Based on the framework of technology dependence, this study aimed to determine the prevalence of AI dependence, to examine the cross-lagged effects between mental health problems (anxiety/depression) and AI dependence, and to explore the mediating role of AI use motivations.

Methods

Data from a two-wave cohort study of 3843 adolescents (male = 1848, mean age = 13.21 ± 2.55 years) were analyzed using a cross-lagged panel model and a half-longitudinal mediation model.

Results

At T1, 17.14% of the adolescents experienced AI dependence, rising to 24.19% at T2. Mental health problems positively predicted subsequent AI dependence, but AI dependence did not predict subsequent mental health problems. Among AI use motivations, escape motivation and social motivation mediated the relationship between mental health problems and AI dependence, whereas entertainment motivation and instrumental motivation did not.

Discussion

Excessive panic about AI dependence is currently unnecessary, and AI has promising applications in alleviating emotional problems in adolescents. Because AI innovation is rapid, more research is needed to confirm and evaluate the impact of AI use on adolescents’ mental health. Implications and future directions are discussed.

Introduction

AI is a set of computer programs designed to think and mimic human behavior, such as learning, reasoning, and self-correction.Citation1 AI appears in people’s daily lives in various forms, such as voice assistants (eg, Apple Siri, Xiaomi’s Xiaoai, Baidu’s Duer, Microsoft Xiaoice),Citation2 social robots (eg, Pepper),Citation3,Citation4 chatbots or companion chatbots (eg, Replika, Mitsuku),Citation5,Citation6 AI-powered video,Citation7 and health-focused AI (eg, Help4Mood, Woebot).Citation8 AI is increasingly popular among adolescents: 49.3% of 12- to 17-year-olds use voice assistants embedded in digital media (eg, Amazon Echo),Citation9 and 55% of adolescents use voice assistants more than once a day to conduct searches.Citation10 Unlike older technologies (TV, social media, smartphones, computers), AI not only facilitates human interaction but can also become a social actor or agent that communicates directly with adolescents,Citation11 which is likely to have a profound impact on adolescent development.Citation12–14 Adolescents are in a unique period of change in brain structure and function,Citation15 and they are still recovering from the impact of COVID-19 (eg, lockdown anxiety, lack of mental health services),Citation16 which makes them vulnerable to digital technologies and emotional problems.Citation17–19 Meanwhile, adolescents are immersed in an everyday AI environment in which generative AI is rapidly being adopted for learning, entertainment, and personalized recommendations,Citation20,Citation21 a pattern of use that differs from that of traditional technologies. 
For example, adolescents could use AI to complete homework, but students are concerned that generative AI could undermine their motivation, creativity, and critical thinking and even lead to widespread cheating (eg, AI-generated fake content or videos).Citation22,Citation23 It is therefore important to explore young people’s perspectives on AI that adults define as “destructive.” Although technology dependence involving traditional information and communication technologies (ICT; eg, the internet, smartphones, computers, social media) is an extremely important public health issue among adolescents, it is unclear whether adolescents develop AI dependence and how it relates to mental health problems. Furthermore, AI has the potential to alleviate emotional or mental health problems in adolescents,Citation24,Citation25 yet it is unclear how mental health problems influence the development of AI dependence (eg, via the mediating role of AI use motivation).

The literature suggests that users tend to develop dependent or addictive AI use (eg, emotional dependence on chatbots, attachment to social chatbots, dependence on conversational AI).Citation12,Citation25–31 Based on the framework of technology dependence (eg, problematic smartphone use, problematic internet use), AI dependence is defined as excessive use of AI technologies that leads to dependence and addictive tendencies, which may have negative consequences (eg, interpersonal problems and mental health distress).Citation25,Citation27–29 Technology dependence is associated with a wide range of negative outcomes, including mental health problems, sleep problems, poor task performance, physical pain, and disruption of real-life relationships.Citation32 Recent literature has also raised concerns about the negative aspects of AI dependence, such as threats to real-life relationships and emotional health.Citation28,Citation29 One of the most important questions is whether AI dependence contributes to mental health distress, as technology dependence does.Citation28 When a new technology emerges, the public may experience technology panic, as suggested by technological determinism theory, and society then invests enormous resources in exploring the issue.Citation33 Technology panic is the fear that emerging technologies, such as AI, will cause adolescents to become addicted to them, just as radio was feared to do in an earlier era.Citation33 A typical example in the era of AI is the fear that adolescents’ AI use will develop into AI dependence or addiction and thereby threaten their mental health. 
However, there is a lack of empirical research on the relationship between AI dependence and mental health distress, and the prevalence of AI dependence in the population is unknown.Citation28 Investigating the prevalence of AI dependence and clarifying the relationship between the two would be beneficial for addressing AI technology panic and providing suggestions for future AI dependence research.

Several studies have preliminarily explored the relationship between AI dependence and mental health problems, but there is no consensus about whether AI dependence leads to mental health problems or vice versa. Regarding the impact of AI dependence on mental health, AI users have been found to have higher social capital than non-users, which benefits health.Citation30 In contrast, others argue that AI dependence may threaten people’s interpersonal connections and negatively affect their mental health.Citation26,Citation29,Citation34 Regarding the impact of mental health problems on AI dependence, Hu et al found that social anxiety can lead to AI dependence via the mediating roles of loneliness and rumination.Citation27 Others have found that AI dependence develops when people use AI to alleviate emotional problems in pursuit of better health.Citation29,Citation35 This literature has several limitations. First, most of these studies have considered specific AI technologies (eg, Replika as a chatbot)Citation5,Citation26,Citation29 and lack an overall assessment of AI dependence, even though people use multiple types of AI (eg, chatbots, social robots, or voice assistants). Second, most studies on the relationship between AI dependence and mental health problems are cross-sectional or qualitative (eg, interviews, text analysis),Citation5,Citation26,Citation29 which prevents the direction of the relationship between them from being determined. Third, the samples in these studies mainly comprised adults, and few included adolescents. Finally, the human-like competence and warmth of AI distinguish it from traditional technologies (eg, smartphones, social media),Citation11,Citation36 suggesting that the relationship between AI dependence and mental health may differ from that observed for older technologies. 
The nature of technology panic around AI use cannot be adequately addressed by researchers who consider only the framework of traditional technologies and neglect the uniqueness of this new technology.Citation33 Therefore, this study considers the similarities and differences between AI technologies and older technologies to identify the unique relationship between AI dependence and mental health problems. We argue that AI dependence is unlikely to lead to subsequent mental health problems because of AI’s human-like abilities, whereas mental health problems may lead to subsequent AI dependence because AI can serve as a tool for coping with emotional problems (see Mental Health and AI Dependence for further illustration).

As suggested by the I-PACE model,Citation37 motivation is one of the most important factors contributing to technology dependenceCitation38 and may mediate the relationship between mental health problems and technology dependence.Citation37 Empirical studies have also shown that the motivation to use technology mediates the relationship between mental health conditions and technology dependence.Citation38–40 However, it is unclear whether the motivation to use AI mediates the impact of mental health problems on AI dependence. Following established theories and previous research on technology dependence, this study uses a half-longitudinal mediation approach to examine the mediating roles of four motivations for AI use (social, escape, entertainment, and instrumental motivations).

Mental Health and AI Dependence

To explore the relationship between AI dependence and mental health problems, it is helpful to borrow from the literature on technology dependence.Citation27,Citation28 However, when focusing on AI dependence, it is advisable to clarify the differences and similarities between AI technology and older technologies such as smartphones and computers.Citation33 While traditional technologies enable computer-mediated interactions (humans interacting with other humans through the medium of technology), AI technologies go beyond this: they are characterized by human-AI interactions in which the AI itself acts as a human-like communicator (AI technologies are considered direct social communicators).Citation11 Thus, AI technology shares some features with older technologies (eg, both can be used to cope with emotional problems)Citation41,Citation42 but also has unique features absent from traditional technologies (eg, AI is more competent and warm during interactions and gives human-like feedback).Citation11,Citation42,Citation43 We propose that these similarities suggest that mental health problems may affect AI dependence, whereas the uniqueness of AI suggests that AI dependence has a “particular” impact on mental health problems.

Mental Health Problems Can Predict AI Dependence

Self-medication theoryCitation44 suggests that when faced with depression or anxiety, individuals use drugs to self-medicate and eventually become addicted to them. Similarly, children and adolescents with technology dependence use smartphones to “medicate” their stress and emotional problems.Citation45,Citation46 Compensatory internet use theory suggests that people go online to escape from real-life problems or alleviate dysphoric moods, eventually developing addictive use of the internet or technology.Citation47 Because AI technologies are similar to traditional technologies in this respect, AI can also be used to address emotional problems, which increases the risk of developing AI dependence. For example, existing research has shown that depression and anxiety can drive AI use in adolescents and adults, which has the potential to develop into AI dependence.Citation25,Citation26,Citation29,Citation30,Citation34 Therefore, we hypothesize the following:

H1: Mental health problems (anxiety and depression) at T1 positively predict adolescents’ AI dependence at T2.

AI Dependence Fails to Predict Mental Health Problems

In the context of technology dependence, research has shown that excessive use of online technology or digital media can displace daily offline interactions with others and time spent in other settings, which has a negative impact on mental health.Citation48 However, recent research suggests that the use of conversational AI does not replace individuals’ real-life relationships but instead increases their social capital.Citation30 The difference between traditional ICT and AI technology may shed light on the effects of technology dependence on mental health problems. ICT is computer-mediated; under social interaction conditions, it usually leads to negative outcomes such as a lack of informative feedback, a lack of affective cues, and disinhibited behaviors (eg, swearing, insults),Citation49 which may make it difficult to alleviate users’ emotional problems. Even under nonsocial interaction conditions, excessive use of technology can lead to perceived stress (eg, digital stress), making users depressed and anxious.Citation50,Citation51

AI technology can provide higher-quality interaction because it is designed to play a more human-like role.Citation11 This technology is highly competent in communication and problem solving and highly empathetic in providing emotional and social support.Citation2,Citation5,Citation6,Citation26 Moreover, interaction with AI is less demanding and less complicated than interaction with real people because AI is designed to serve users.Citation27 For example, a recent study showed that human-AI interactions produce fewer negative emotions than face-to-face human interactions, whereas computer-mediated interactions do not.Citation52 Thus, relying on AI technologies may reduce the risk of new emotional problems, as AI feedback is usually positive and stable.Citation3–5,Citation52 Overall, AI is beneficial in alleviating emotional problems and preventing the development of new mental health problems. However, recent studies have shown that dependence on AI can lead to emotional problems.Citation26,Citation29 Notably, these studies are qualitative and cross-sectional, and it is unclear whether these effects persist in the long term. Thus, based on the uniqueness of AI technology, we hypothesized the following:

H2: Adolescents’ AI dependence at T1 does not predict mental health problems at T2.

AI Use Motivations as Mediators from Mental Health to AI Dependence

In the I-PACE model of behavioral addiction, psychopathological conditions can trigger addictive behaviors, and an individual’s specific motivations can contribute to the development of addictive behaviors.Citation37 Psychopathological conditions can promote specific motivations, which in turn contribute to the development of addictive behaviors, suggesting a mediating role for specific motivations.Citation37

In the AI literature on motivation, Cho et al found that motivations for using AI speakers included escaping daily problems, maintaining social relationships, accessing information and learning, entertainment and relaxation, and seeking practicality.Citation53 Choi and Drumwright found that motivations for AI use include social interaction, life efficiency, and access to information.Citation54 Ng and Lin found that hedonic gratification, social gratification, and functional gratification are the three main motivations that drive users to access conversational AI.Citation55 In the development of technology dependence, the most cited motivations were social motivation, emotion regulation motivation, pass-time motivation, and information and enhancement motivation.Citation38 Based on this work and the literature on technology dependence, motivations for AI use can be categorized into four types: 1) escape motivation (eg, to escape from daily problems, as suggested by self-escape theory); 2) social motivation (eg, to maintain social relationships, social gratification, as suggested by need-to-belong theory); 3) entertainment motivation (eg, hedonic gratification, entertainment and relaxation, as suggested by uses and gratifications theory); and 4) instrumental motivation (eg, functional gratification, the pursuit of utility, and information acquisition, as suggested by uses and gratifications theory). As the technology dependence literature suggests, different types of motivation may play different mediating roles in the relationship between mental health problems and AI dependence.Citation56,Citation57 We detail the mediating roles of AI use motivations below.

AI Social Motivation

Attachment theory was first developed to explain the relationship between parents and children. It suggests that because children are immature and ill-equipped to deal with threats, they develop behaviors that keep them close to their caregivers (parents) so that they receive the protection, warmth, and care they need to survive.Citation58 When individuals face emotional problems, attachment mechanisms are activated,Citation26 and adolescents may find attachment and belonging in other social agents (eg, AI as a social actor).Citation43 Research suggests that human-AI relationships are similar to human-human relationships.Citation2 By using AI as a social actor, adolescents may strengthen their social connection to technology and come to rely on AI technology.Citation29,Citation59 Drawing on attachment theory, research on AI chatbots has shown that users form emotional reliance on chatbots (eg, the Replika chatbot) when faced with emotional problems.Citation26

Need-to-belong theory also proposes that individuals have an innate need to belong, which drives them to interact with others (eg, parents, peers, teachers, etc.) to satisfy their need to connect and belong.Citation60 Previous research has shown that belonging reflects users’ feelings of attachment to technology (eg, SNS), which is an important indicator of the development of technology dependence.Citation61 Thus, we hypothesized the following:

H3: Mental health problems (T1) positively predict AI social motivation (T2), and AI social motivation (T1) positively predicts AI dependence (T2). The mediating effect of AI social motivation is significant.

AI Escape Motivation

Self-escape theoryCitation62,Citation63 is commonly used to explain self-destructive behaviors in adolescents (eg, substance use and problematic internet use). The theory suggests that when adolescents experience an unpleasant state, such as anxiety or depression, they react by attempting to escape from negative thoughts into a relatively numb state of cognitive deconstruction, which leads to a range of problematic behaviors (eg, drinking, sexual offending, delinquency).Citation63 This theory has recently been extended to explain technology dependence.Citation56,Citation64,Citation65 Compensatory internet use theory also suggests that people go online or use digital media to escape from real-life issues or to alleviate emotional problems, which ultimately leads to technology dependence.Citation40,Citation47,Citation66 Thus, we hypothesized the following:

H4: Adolescent mental health problems (T1) positively predict AI escape motivation (T2), and AI escape motivation (T1) positively predicts AI dependence (T2). The mediating effect of AI escape motivation is significant.

AI Entertainment Motivation and AI Instrumental Motivation

Uses and gratifications theoryCitation67 has been widely used to explain why people tend to use technology and eventually develop technology dependence.Citation68,Citation69 This theory suggests that people have many basic needs, such as entertainment and information seeking. Technologies such as smartphones and AI provide various platforms for people to satisfy their entertainment and information-seeking motivations, such as searching for information, listening to music, and playing games.Citation14,Citation55,Citation70,Citation71 When faced with emotional problems, adolescents tend to seek entertainment or relaxation, offsetting negative emotions by using smartphones or social media.Citation72 However, using technology for entertainment (eg, playing games) carries the risk of becoming addicted to technology use.Citation56,Citation73 Accordingly, we hypothesize the following:

H5: Adolescents’ mental health problems (T1) positively predict AI entertainment motivation (T2), and AI entertainment motivation (T1) positively predicts AI dependence (T2). The mediating effect of AI entertainment motivation is significant.

Previous research has shown that adolescents use digital media and AI to satisfy their basic needs for information seeking and convenience,Citation12,Citation56,Citation68 which can be summarized as instrumental motivation. In the technology dependence literature, instrumental motivation is less likely than other motivations (eg, escape, entertainment, socialization) to be identified as an antecedent of technology dependence.Citation56,Citation74 In addition, depressed individuals are more likely to prefer other motivations (eg, escape, entertainment) to cope with emotional problems, and instrumental motivation produces less relief because it focuses on specific functions of technologies.Citation75 Moreover, depressed adolescents are less likely to perform instrumental activities on social media (eg, doing homework or studying).Citation76 Based on this, we hypothesize the following:

H6: Mental health problems (T1) do not predict AI instrumental motivation (T2), and AI instrumental motivation (T1) does not predict AI dependence (T2). The mediating effect of AI instrumental motivation is not significant.

The Present Study

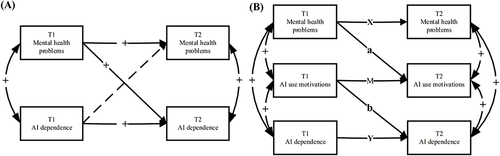

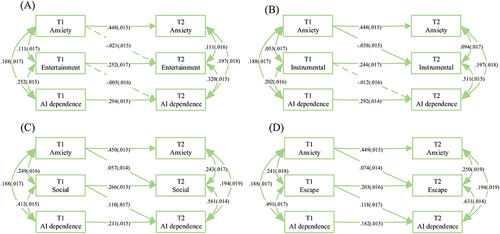

This study used a two-wave longitudinal design in a large sample of adolescents to test the hypothesized cross-lagged effects between AI dependence and mental health problems (depression and anxiety) (see ) and then used a half-longitudinal mediation model to test the longitudinal mediating role of motivations for AI use in the relationship between mental health problems and AI dependence (see ). The mediating roles of four types of motivation for AI use (AI escape motivation, AI social motivation, AI entertainment motivation, and AI instrumental motivation) were examined. This study contributes to the literature by addressing research gaps in the relationship between AI dependence and mental health problems. In addition, it provides further insight into how mental health problems affect AI dependence.

Methods

Participants

Participants were drawn from the “Cohort Study on Developmental Characteristics, Influences, and Outcomes of Children and Adolescents’ Use of Modern Information Communication Technology (ICT)” project in the State Key Laboratory of Cognitive Neuroscience and Learning at Beijing Normal University. They came from the northern (2.1%), central (52.3%), and southwestern (45.6%) regions of China. This cohort study included 5057 adolescents (male = 2514) in wave 1 (spring semester 2022) and 3843 adolescents (male = 1848) in wave 2 (autumn semester 2022). A total of 1209 adolescents dropped out at T2, and the final analytic sample comprised 3843 adolescents (male = 1848, mean age = 13.21 ± 2.55 years). Independent-samples t tests comparing adolescents who responded at T2 with those who dropped out showed that the two groups differed at T1 in anxiety (t = 2.91, p = 0.004, Cohen’s d = 0.095), depression (t = 3.84, p < 0.001, Cohen’s d = 0.124), AI dependence (t = 2.14, p = 0.033, Cohen’s d = 0.071), AI escape motivation (t = 2.46, p = 0.014, Cohen’s d = 0.081), age (t = −4.19, p < 0.001, Cohen’s d = 0.111), and gender (t = −4.64, p < 0.001, Cohen’s d = −0.160) but did not differ in AI instrumental motivation (t = 0.20, p = 0.844, Cohen’s d = 0.007) or AI entertainment motivation (t = −0.52, p = 0.605, Cohen’s d = −0.017). However, the effect sizes for all t tests were extremely small (all |d| ≤ 0.160).Citation77 In terms of demographics, 67.5% of the participants had an annual household income of less than CNY 100,000, 26.4% between CNY 100,000 and CNY 300,000, and 6.1% over CNY 300,000. In terms of parental education, 80.5% of their fathers and 83.3% of their mothers had less than a university degree.
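The attrition comparison above reports Cohen’s d for each t test. As a minimal sketch, assuming d is standardized by the pooled standard deviation (the text does not say which standardizer was used), the function below computes d for two independent groups and checks the reported values against Cohen’s conventional 0.2 benchmark for a small effect. The helper names `cohens_d` and `below_small` are hypothetical.

```python
import math
import statistics


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d for two independent groups, standardized by the pooled SD.

    s_pooled = sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    d = (mean_a - mean_b) / s_pooled
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    var_a = statistics.variance(group_a)  # sample variance, n - 1 denominator
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


def below_small(d: float, benchmark: float = 0.2) -> bool:
    """True when |d| is under Cohen's conventional 0.2 'small effect' benchmark."""
    return abs(d) < benchmark


# Cohen's d values reported above for retained vs. dropped-out adolescents.
REPORTED_D = [0.095, 0.124, 0.071, 0.081, 0.111, -0.160, 0.007, -0.017]

print(cohens_d([2, 4, 6], [1, 3, 5]))  # toy groups: means differ by 1, pooled SD is 2
print(all(below_small(d) for d in REPORTED_D))
```

Every reported |d| falls below 0.2, which is consistent with the statement that the attrition differences were extremely small.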

Procedure

Volunteer teachers from the schools assisted the researchers with data collection. Teachers and researchers distributed paper questionnaires to students in their classes; the teachers introduced the questionnaire, and the students completed it independently. All responses were provided in paper-and-pencil format. Participation was voluntary, and students who felt uncomfortable could stop answering at any time. Informed consent was obtained from all participating students and their parents. The study was approved by the IRB of the State Key Laboratory of Cognitive Neuroscience and Learning at Beijing Normal University, and the procedure was in line with the Declaration of Helsinki. In the AI questionnaire, the adolescents were presented with the following definition of AI (adapted from the literatureCitation1): “Artificial intelligence is the use of machines (especially computer systems) to simulate the process of human intelligence, including learning (acquiring information and rules for using the information), reasoning (using rules to reach approximate or definitive conclusions), and self-correction. Specific examples include personal assistants (such as Siri, Xiaoai, Xiaodu, Tmall Genie, etc.), intelligent auxiliary equipment, chat robots (such as ChatGPT, ERNIE Bot, etc.), social robots (such as service robots), etc.”

Measurement

Depression

Depression in adolescents was assessed using the Patient Health Questionnaire-9 (PHQ-9).Citation78 The scale contains 9 items (eg, “Feeling down, depressed, irritable, or hopeless”; “Poor appetite, weight loss, or overeating”), each representing a different depressive symptom. Items are rated on a 4-point Likert scale (0 = “not at all”, 1 = “several days”, 2 = “more than half of the days”, and 3 = “nearly every day”), with higher total scores indicating higher levels of depression. The scale showed good reliability (αT1 = 0.896, αT2 = 0.930) and validity (CFIT1 = 0.967, TLIT1 = 0.950, RMSEAT1 = 0.075; CFIT2 = 0.975, TLIT2 = 0.962, RMSEAT2 = 0.079).
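The PHQ-9 total described above is the sum of nine items scored 0 to 3, so totals range from 0 to 27. A minimal scoring sketch, assuming complete responses (the text does not describe how missing items were handled); `phq9_total` is a hypothetical helper name.

```python
PHQ9_N_ITEMS = 9
MIN_SCORE, MAX_SCORE = 0, 3  # 0 = "not at all" ... 3 = "nearly every day"


def phq9_total(responses: list[int]) -> int:
    """Sum the nine PHQ-9 item scores; totals range from 0 to 27."""
    if len(responses) != PHQ9_N_ITEMS:
        raise ValueError(f"expected {PHQ9_N_ITEMS} responses, got {len(responses)}")
    for score in responses:
        if not MIN_SCORE <= score <= MAX_SCORE:
            raise ValueError(f"item score {score} is outside {MIN_SCORE}-{MAX_SCORE}")
    return sum(responses)


print(phq9_total([1, 0, 2, 1, 0, 0, 3, 1, 0]))  # 8
```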

Anxiety

The Generalized Anxiety Disorder-7 scale (GAD-7)Citation79 was used to measure anxiety in adolescents. The GAD-7 consists of 7 items (eg, “Feeling nervous, anxious or on edge”, “Worrying too much about different things”), each rated on a 4-point Likert scale (1 = “Not at all”, 4 = “Nearly every day”). The scale showed good reliability (αT1 = 0.909, αT2 = 0.942), and CFA indicated good construct validity (CFIT1 = 0.981, TLIT1 = 0.969, RMSEAT1 = 0.078; CFIT2 = 0.985, TLIT2 = 0.976, RMSEAT2 = 0.081).
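Each scale’s reliability is reported as Cronbach’s α. The sketch below implements the standard formula, α = k / (k − 1) × (1 − sum of item variances / variance of total scores), for a respondents × items matrix using sample variances. It is an illustration of the statistic only, not the authors’ SPSS procedure, and `cronbach_alpha` is a hypothetical helper name.

```python
import statistics


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (one row per respondent).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    if not item_scores:
        raise ValueError("no respondents")
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != k for row in item_scores):
        raise ValueError("every respondent must have a score for every item")
    item_columns = zip(*item_scores)  # transpose rows into per-item columns
    item_var_sum = sum(statistics.variance(col) for col in item_columns)
    total_var = statistics.variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Four hypothetical respondents answering three 1-4 items.
toy = [[1, 2, 3], [2, 2, 4], [3, 4, 4], [4, 3, 4]]
print(round(cronbach_alpha(toy), 4))  # 0.7917
```

Perfectly parallel items (every respondent gives the same score on each item) yield α = 1, which is a quick sanity check on the formula.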

AI Dependence

The AI dependence scale was adapted from the Smartphone Addiction Scales.Citation80,Citation81 Based on the concept of technology dependence,Citation82 five items expressing the core symptoms of smartphone addiction or problematic smartphone use were selectedCitation80,Citation83,Citation84 and adapted to AI (eg, “I have tried to reduce the amount of time I spend on AI but failed” and “I feel anxious and upset when I cannot use artificial intelligence”). Items were rated on a 4-point Likert scale (1 = “strongly disagree”, 4 = “strongly agree”). In this study, the AI Dependence Scale showed good reliability (αT1 = 0.881, αT2 = 0.953) and construct validity (CFIT1 = 0.955, TLIT1 = 0.987, RMSEAT1 = 0.059; CFIT2 = 0.992, TLIT2 = 0.980, RMSEAT2 = 0.066). Detailed items and CFA loadings are provided in the Appendix (see Tables S1 and S2).

AI Use Motivation

The AI Use Motivation Scale was adapted from the Artificial Intelligence Speaker Use Motivation Scale.Citation85 The revised AI Use Motivation Scale consists of four dimensions: 1) escape motivation, which is the motivation to use AI to escape from daily problems (eg, “I use AI as a way to escape from family, friends, or other problems”); 2) social motivation, which is the motivation to use AI to find a sense of social connection and belonging (eg, “I use AI as a way to avoid being alone”); 3) entertainment motivation, which is the motivation to use AI for entertainment activities and enjoyment (eg, “I use AI to entertain myself and relax”); and 4) instrumental motivation, which is the motivation to use AI to search for information and gain knowledge (eg, “I use AI to search for and obtain the information I need”). Each dimension consists of three items rated on a 4-point Likert scale (1 = “strongly disagree”, 4 = “strongly agree”). In this study, the AI use motivation scale showed good reliability (αT1 = 0.862 ~ 0.895, αT2 = 0.882 ~ 0.935) and construct validity (CFIT1 = 0.992, TLIT1 = 0.980, RMSEAT1 = 0.092; CFIT2 = 0.970, TLIT2 = 0.957, RMSEAT2 = 0.084). Detailed items and CFA loadings are provided in the Appendix (see Tables S1 and S2).
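The four-dimension structure above can be scored per respondent as follows. This sketch assumes each dimension score is the mean of its three items (the text does not state whether means or sums were used) and uses hypothetical item keys such as `esc1`; the actual item wording and order are given in Table S1.

```python
from statistics import mean

# Hypothetical item keys: four dimensions with three items each, as described above.
# The real item wording and order are given in Table S1 of the Appendix.
DIMENSIONS = {
    "escape": ["esc1", "esc2", "esc3"],
    "social": ["soc1", "soc2", "soc3"],
    "entertainment": ["ent1", "ent2", "ent3"],
    "instrumental": ["ins1", "ins2", "ins3"],
}


def motivation_scores(responses: dict[str, int]) -> dict[str, float]:
    """Return the mean of the three 1-4 Likert items for each motivation dimension."""
    scores = {}
    for dimension, items in DIMENSIONS.items():
        values = [responses[item] for item in items]
        if any(not 1 <= v <= 4 for v in values):
            raise ValueError(f"{dimension}: item responses must be on the 1-4 scale")
        scores[dimension] = mean(values)
    return scores


example = {
    "esc1": 1, "esc2": 2, "esc3": 3,
    "soc1": 4, "soc2": 4, "soc3": 4,
    "ent1": 2, "ent2": 2, "ent3": 3,
    "ins1": 3, "ins2": 3, "ins3": 3,
}
print(motivation_scores(example))
```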

Analytic Procedure

First, descriptive statistics and correlations between variables were computed using SPSS 24.0. Second, a cross-lagged panel model (CLPM) and a half-longitudinal mediation model were constructed using Mplus 8.4. The CLPM was used to examine the longitudinal relationship between mental health problems and AI dependenceCitation86 (see ). The CLPM includes both autoregressive effects and cross-lagged effects: the former is the effect of a variable at T1 on the same variable at T2, while the latter is the effect of a variable at T1 on another variable at T2, controlling for autoregressive effects. A half-longitudinal mediation modelCitation86–88 was used to examine the longitudinal mediation effects of AI use motivation (see ). The model estimates path a (the effect of X at T1 on M at T2) and path b (the effect of M at T1 on Y at T2) and tests their product, a × b, as the longitudinal indirect effect while controlling for the autoregressive effects of X, M, and Y. Because it requires only two waves of data, the half-longitudinal design offers a cost-effective approach to our research question. According to the World Health Organization,Citation89 gender, age, and subjective SES (“How would you rate the socioeconomic status of your family in this town?” (1 = “very poor”, 5 = “very good”)) are three key factors affecting adolescent mental health; they were therefore controlled for as covariates in the CLPM and half-longitudinal mediation models. Finally, effect sizes were evaluated according to established criteria.Citation90 For the longitudinal cross-lagged model, the effect size is small if |β| is between 0.03 and 0.07, medium if |β| is between 0.07 and 0.12, and large if |β| is greater than 0.12.Citation90
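The regression logic of both models can be sketched with observed variables and ordinary least squares. This is a simplified illustration, not the authors’ Mplus specification: it uses simulated data with invented path values (a = 0.2, b = 0.25, and a true Y1 → X2 cross-lag of 0), omits the covariates (gender, age, subjective SES) and residual covariances, reports unstandardized coefficients, and computes no fit indices. X stands for a mental health problem, M for an AI use motivation, and Y for AI dependence; `ols` and `simulate` are hypothetical helpers.

```python
import random


def ols(y, predictors):
    """OLS with an intercept; returns [intercept, b_1, ..., b_p] in predictor order.

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination
    with partial pivoting, so only the standard library is needed.
    """
    rows = [[1.0] + [p[i] for p in predictors] for i in range(len(y))]
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for other in range(k):
            if other != col:
                factor = aug[other][col] / aug[col][col]
                aug[other] = [v - factor * w for v, w in zip(aug[other], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def simulate(n=4000, seed=1):
    """Hypothetical two-wave data: X = mental health, M = a motivation, Y = AI dependence."""
    rng = random.Random(seed)
    d = {name: [] for name in ("X1", "M1", "Y1", "X2", "M2", "Y2")}
    for _ in range(n):
        x1 = rng.gauss(0, 1)
        m1 = 0.3 * x1 + rng.gauss(0, 1)
        y1 = 0.3 * x1 + 0.3 * m1 + rng.gauss(0, 1)
        x2 = 0.5 * x1 + rng.gauss(0, 1)                          # true Y1 -> X2 path is 0
        m2 = 0.5 * m1 + 0.2 * x1 + rng.gauss(0, 1)               # true a path = 0.2
        y2 = 0.5 * y1 + 0.25 * m1 + 0.1 * x1 + rng.gauss(0, 1)   # true b path = 0.25
        for name, value in zip(d, (x1, m1, y1, x2, m2, y2)):
            d[name].append(value)
    return d


data = simulate()

# Cross-lagged panel model: each T2 variable is regressed on both T1 variables,
# so each cross-lagged path is estimated net of the autoregressive path.
_, auto_x, y1_to_x2 = ols(data["X2"], [data["X1"], data["Y1"]])
_, auto_y, x1_to_y2 = ols(data["Y2"], [data["Y1"], data["X1"]])

# Half-longitudinal mediation: a = X1 -> M2 controlling for M1;
# b = M1 -> Y2 controlling for Y1 and X1; the indirect effect is a * b.
_, _, a = ols(data["M2"], [data["M1"], data["X1"]])
_, _, b, _ = ols(data["Y2"], [data["Y1"], data["M1"], data["X1"]])
indirect = a * b

print(f"autoregressive: X = {auto_x:.3f}, Y = {auto_y:.3f}")
print(f"cross-lagged: X1 -> Y2 = {x1_to_y2:.3f}, Y1 -> X2 = {y1_to_x2:.3f}")
print(f"mediation: a = {a:.3f}, b = {b:.3f}, a * b = {indirect:.3f}")
```

Because every T2 outcome is regressed on its own T1 score, the cross-lagged, a, and b paths are estimated net of the autoregressive effects, which is the adjustment the paragraph above describes. A full analysis would also add the covariates and a confidence interval for a × b.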

Results

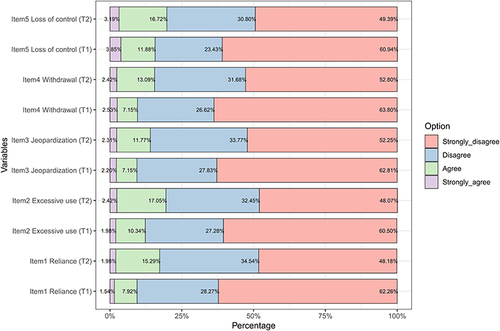

Prevalence of AI Dependence and Descriptions of Other Variables

Figure 2 shows the prevalence of AI dependence among adolescents by item. Across the five items, 9.56% of the adolescents reported subjective dependence on AI at T1, increasing to 17.27% at T2; 12.32% reported excessive AI use at T1, increasing to 19.74% at T2; 9.35% reported that AI use jeopardized their studies and interpersonal relationships at T1, increasing to 14.08% at T2; 9.68% reported withdrawal symptoms after stopping AI use at T1, increasing to 15.51% at T2; and 15.73% reported a loss of control over AI use at T1, increasing to 19.91% at T2. Table 1 shows that the means and SDs of AI dependence at T1 and T2 were 1.52 ± 0.62 and 1.70 ± 0.72, respectively. Using a mean score greater than 2 as the cutoff for subjective AI dependence, 17.14% of the adolescents experienced AI dependence at T1, increasing to 24.19% at T2. In general, AI dependence among adolescents was not a universal phenomenon but showed an increasing trend.
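The prevalence figure uses a simple rule: a respondent counts as AI-dependent when the mean of the five 1-4 items is strictly greater than 2. A minimal sketch of that rule, assuming complete responses; `dependence_prevalence` and the toy respondents are hypothetical.

```python
from statistics import mean

CUTOFF = 2.0  # a mean item score strictly above 2 counts as AI dependence


def dependence_prevalence(item_matrix: list[list[int]]) -> float:
    """Percentage of respondents whose mean score on the AI dependence items exceeds CUTOFF."""
    if not item_matrix:
        raise ValueError("no respondents")
    flagged = sum(1 for items in item_matrix if mean(items) > CUTOFF)
    return 100 * flagged / len(item_matrix)


# Four hypothetical respondents answering the five 1-4 items.
toy = [
    [1, 1, 1, 1, 1],  # mean 1.0 -> not flagged
    [2, 2, 2, 2, 2],  # mean 2.0 -> not flagged (the cutoff is strict)
    [3, 2, 2, 2, 2],  # mean 2.2 -> flagged
    [4, 4, 3, 3, 2],  # mean 3.2 -> flagged
]
print(dependence_prevalence(toy))  # 50.0
```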

Table 1 The Descriptive Statistics and Correlation Results of the Variables

Figure 2 Prevalence of AI dependence among adolescents by item.

Table 1 shows that the adolescents’ anxiety and depression decreased slightly at T2, whereas their escape, social, and entertainment motivations for AI use increased slightly and their instrumental motivation remained stable. All correlations between the variables were significant except those between T1 AI dependence and T2 instrumental motivation (r = 0.02, p = 0.150) and between T1 AI dependence and T2 entertainment motivation (r = 0.03, p = 0.067).

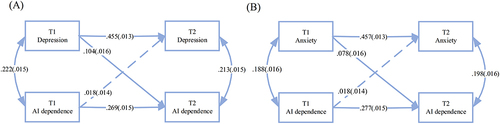

Results from the Cross-Lagged Model

Figure 3 shows the cross-lagged models between depression and AI dependence and between anxiety and AI dependence. Both the depression model (χ2 = 27.211, df = 6, p < 0.001; CFI = 0.991, TLI = 0.978; RMSEA = 0.030, 90% CI [0.019, 0.042]) and the anxiety model (χ2 = 31.122, df = 6, p < 0.001; CFI = 0.989, TLI = 0.972; RMSEA = 0.033, 90% CI [0.022, 0.045]) showed good fit. In the depression model, after controlling for autoregressive effects, depression at T1 predicted AI dependence at T2 (β = 0.104, p < 0.001), whereas AI dependence at T1 did not predict depression at T2 (β = 0.018, p = 0.188). In the anxiety model, after controlling for autoregressive effects, anxiety at T1 predicted AI dependence at T2 (β = 0.078, p < 0.001), whereas AI dependence at T1 did not predict anxiety at T2 (β = 0.018, p = 0.191).
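As a quick check, the benchmarks described in the data-analysis section (0.03 small, 0.07 medium, 0.12 large) can be applied to the four standardized cross-lagged coefficients reported above. In this Python sketch, treating each benchmark as the inclusive lower bound of its band and labelling |β| < 0.03 as "below small" are assumptions; `orth_effect_size` is a hypothetical name.

```python
def orth_effect_size(beta):
    """Label |beta| with the cross-lagged benchmarks quoted in the text.

    Benchmarks: 0.03 small, 0.07 medium, 0.12 large. Inclusive lower bounds
    and the "below small" label for |beta| < 0.03 are assumptions of this sketch.
    """
    b = abs(beta)
    if b >= 0.12:
        return "large"
    if b >= 0.07:
        return "medium"
    if b >= 0.03:
        return "small"
    return "below small"

# Standardized cross-lagged coefficients reported in the Results.
reported = {
    "depression T1 -> AI dependence T2": 0.104,
    "AI dependence T1 -> depression T2": 0.018,
    "anxiety T1 -> AI dependence T2": 0.078,
    "AI dependence T1 -> anxiety T2": 0.018,
}
for path, beta in reported.items():
    print(f"{path}: beta = {beta:.3f} ({orth_effect_size(beta)})")
```

Both significant paths (from depression and from anxiety to later AI dependence) fall in the medium band, and both reverse paths fall below the small benchmark.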

Figure 3 The cross-lagged effects between mental health problems and AI dependence.

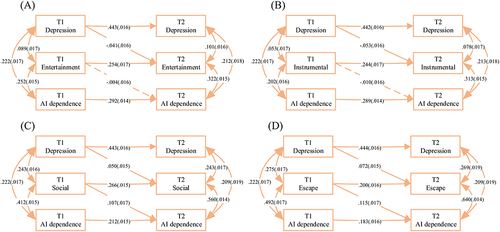

Results from Half-Longitudinal Mediation

For the depression → AI use motivation → AI dependence model (Figure 4), the mediation models of AI entertainment motivation (χ2 = 37.761, df = 4, p < 0.001; CFI = 0.991, TLI = 0.924; RMSEA = 0.047, 90% CI [0.034, 0.061]), AI instrumental motivation (χ2 = 35.827, df = 4, p < 0.001; CFI = 0.991, TLI = 0.927; RMSEA = 0.046, 90% CI [0.033, 0.060]), AI social motivation (χ2 = 53.686, df = 4, p < 0.001; CFI = 0.991, TLI = 0.922; RMSEA = 0.057, 90% CI [0.044, 0.071]), and AI escape motivation (χ2 = 70.845, df = 4, p < 0.001; CFI = 0.989, TLI = 0.910; RMSEA = 0.066, 90% CI [0.053, 0.080]) all showed acceptable fit.
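The RMSEA values reported for these models follow from each model's χ2, df, and sample size through the standard point-estimate formula, sqrt(max(χ2 − df, 0) / (df · (N − 1))). The Python sketch below recomputes several of them. It assumes N = 3843 (the full T1 sample); the N actually used in each model may differ, and programs differ in whether they divide by N or N − 1, so small discrepancies in the third decimal are possible.

```python
import math

def rmsea(chi2, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

N = 3843  # assumption: full T1 sample; the N used in each model may differ

# (label, chi-square, df, reported RMSEA) taken from the Results text
models = [
    ("depression CLPM", 27.211, 6, 0.030),
    ("anxiety CLPM", 31.122, 6, 0.033),
    ("depression -> social motivation", 53.686, 4, 0.057),
    ("depression -> escape motivation", 70.845, 4, 0.066),
]
for label, chi2, df, reported in models:
    print(f"{label}: computed {rmsea(chi2, df, N):.3f}, reported {reported:.3f}")
```

Under this assumption the recomputed values match the reported RMSEAs to three decimals. The formula also shows why a significant χ2 is expected with a sample this large: χ2 grows with N, whereas RMSEA divides the misfit by N.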

Figure 4 The results of the depression → AI use motivation → AI dependence model.

After controlling for autoregressive effects, T1 depression negatively predicted T2 AI entertainment motivation (β = −0.041, p = 0.011), whereas T1 AI entertainment motivation did not predict T2 AI dependence (β = −0.004, p = 0.804). T1 depression negatively predicted T2 AI instrumental motivation (β = −0.053, p = 0.001), whereas T1 AI instrumental motivation did not predict T2 AI dependence (β = −0.010, p = 0.535). T1 depression positively predicted T2 AI social motivation (β = 0.050, p = 0.001) and T2 AI escape motivation (β = 0.072, p < 0.001), and both T1 AI social motivation (β = 0.107, p < 0.001) and T1 AI escape motivation (β = 0.115, p < 0.001) predicted T2 AI dependence. The bootstrap mediation test (Table 2) showed that the mediation effects of AI social motivation (indirect effect = 0.005, p = 0.001, 95% CI [0.002, 0.006]) and AI escape motivation (indirect effect = 0.008, p < 0.001, 95% CI [0.006, 0.015]) were significant, whereas AI entertainment motivation (indirect effect = 0.000, p = 0.826, 95% CI [−0.001, 0.002]) and AI instrumental motivation (indirect effect = 0.000, p = 0.529, 95% CI [−0.001, 0.001]) did not play a mediating role.
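The half-longitudinal logic (a from X1 → M2 controlling for M1, b from M1 → Y2 controlling for Y1, indirect effect a × b) combined with a percentile bootstrap can be sketched in Python as below. This is not the authors' Mplus specification: the data are simulated, the covariates are omitted, X1 is included as an extra control in the Y2 equation (an assumption), and the helper names are hypothetical.

```python
import numpy as np

def _standardize(v):
    return (v - v.mean()) / v.std()

def _coef(y, target, *controls):
    """OLS coefficient of `target` when y is regressed on target plus controls."""
    X = np.column_stack([np.ones_like(y), target, *controls])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1]

def indirect_effect(x1, m1, y1, m2, y2):
    """a * b from a half-longitudinal design, on standardized scores."""
    x1, m1, y1, m2, y2 = map(_standardize, (x1, m1, y1, m2, y2))
    a = _coef(m2, x1, m1)        # X1 -> M2, controlling for M1 (autoregression)
    b = _coef(y2, m1, y1, x1)    # M1 -> Y2, controlling for Y1 and X1 (X1 is assumed)
    return a * b

def bootstrap_indirect(data, n_boot=500, alpha=0.05, seed=0):
    """Point estimate and percentile bootstrap CI for a * b.

    data: (n, 5) array with columns X1, M1, Y1, M2, Y2.
    """
    rng = np.random.default_rng(seed)
    n = data.shape[0]
    draws = np.empty(n_boot)
    for i in range(n_boot):
        resample = data[rng.integers(0, n, size=n)]  # rows drawn with replacement
        draws[i] = indirect_effect(*resample.T)
    lo, hi = np.quantile(draws, [alpha / 2, 1 - alpha / 2])
    return indirect_effect(*data.T), lo, hi

# Simulated two-wave data with a true X -> M -> Y chain (hypothetical values).
rng = np.random.default_rng(42)
n = 2000
x1 = rng.normal(size=n)
m1 = 0.3 * x1 + rng.normal(size=n)
y1 = 0.3 * m1 + rng.normal(size=n)
m2 = 0.5 * m1 + 0.2 * x1 + rng.normal(size=n)
y2 = 0.5 * y1 + 0.2 * m1 + rng.normal(size=n)
data = np.column_stack([x1, m1, y1, m2, y2])

est, lo, hi = bootstrap_indirect(data)
print(f"indirect effect = {est:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

An interval that excludes zero, as for social and escape motivation above, is read as evidence of mediation. The percentile interval need not be symmetric around the point estimate.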

Table 2 The Indirect Effect of Half-Longitudinal Mediation Analysis

For the anxiety → AI use motivation → AI dependence model (Figure 5), the mediation models of AI entertainment motivation (χ2 = 22.707, df = 4, p < 0.001; CFI = 0.994, TLI = 0.953; RMSEA = 0.036, 90% CI [0.023, 0.050]), AI instrumental motivation (χ2 = 22.106, df = 4, p < 0.001; CFI = 0.995, TLI = 0.956; RMSEA = 0.034, 90% CI [0.021, 0.049]), AI social motivation (χ2 = 37.363, df = 4, p < 0.001; CFI = 0.993, TLI = 0.946; RMSEA = 0.047, 90% CI [0.034, 0.061]), and AI escape motivation (χ2 = 56.826, df = 4, p < 0.001; CFI = 0.991, TLI = 0.926; RMSEA = 0.059, 90% CI [0.046, 0.073]) all showed acceptable fit.

Figure 5 The results of the anxiety → AI use motivation → AI dependence model.

After controlling for the autoregressive effects, T1 anxiety did not predict T2 AI entertainment motivation (β = −0.021, p = 0.170), and T1 AI entertainment motivation did not predict T2 AI dependence (β = −0.005, p = 0.777). T1 anxiety negatively predicted T2 AI instrumental motivation (β = −0.038, p = 0.011), whereas T1 AI instrumental motivation did not predict T2 AI dependence (β = −0.012, p = 0.467). T1 anxiety positively predicted T2 AI social motivation (β = 0.057, p < 0.001) and T2 AI escape motivation (β = 0.074, p < 0.001), and both T1 AI social motivation (β = 0.110, p < 0.001) and T1 AI escape motivation (β = 0.118, p < 0.001) predicted T2 AI dependence. The bootstrap test (Table 2) showed that AI entertainment motivation (indirect effect = 0.000, p = 0.822, 95% CI [−0.001, 0.002]) and AI instrumental motivation (indirect effect = 0.000, p = 0.560, 95% CI [−0.001, 0.001]) were not mediators, whereas the mediation effects of AI social motivation (indirect effect = 0.006, p = 0.002, 95% CI [0.003, 0.009]) and AI escape motivation (indirect effect = 0.009, p < 0.001, 95% CI [0.006, 0.013]) were significant.
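Because the indirect effect in a half-longitudinal model is the product a × b, the point estimates for the anxiety model can be checked by hand from the standardized paths reported above. This Python check uses the rounded coefficients in the text, so it can only approximate the values in Table 2, and it does not replace the bootstrap test, which is what establishes significance.

```python
# Standardized paths reported for the anxiety model:
# a = T1 anxiety -> T2 motivation, b = T1 motivation -> T2 AI dependence.
anxiety_paths = {
    "entertainment": (-0.021, -0.005),
    "instrumental": (-0.038, -0.012),
    "social": (0.057, 0.110),
    "escape": (0.074, 0.118),
}
anxiety_indirect = {name: a * b for name, (a, b) in anxiety_paths.items()}
for name, value in anxiety_indirect.items():
    print(f"{name}: a*b = {value:.3f}")
```

Rounded to three decimals, the products are 0.000, 0.000, 0.006, and 0.009, in line with the indirect effects reported for entertainment, instrumental, social, and escape motivation.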

Discussion

This study used two waves of longitudinal data to explore the relationship between mental health problems and AI dependence and the mechanisms involved. We found that adolescent AI dependence increased over time, and 17.14% of the adolescents experienced AI dependence at T1 and 24.19% at T2. Only mental health problems (anxiety and depression) positively predicted later AI dependence, and not vice versa. AI escape motivation and AI social motivation mediated the relationship between mental health problems and AI dependence, whereas AI entertainment motivation and AI instrumental motivation did not. These findings provide a preliminary exploration of AI dependence and its relationship to psychological distress, with theoretical and practical implications.

Relationship Between Mental Health Problems and AI Dependence

The results showed that mental health problems had a longitudinal effect on AI dependence. However, unlike most previous studies in the technology dependence framework (eg, those reporting a bidirectional relationship between depression and problematic smartphone use),Citation91 we found no bidirectional relationship between them. Both H1 and H2 were supported. This finding is consistent with previous arguments that adolescents with emotional problems tend to use technology to cope and are at higher risk of developing technology dependence.Citation91 Similarly, when in emotional distress, adolescents may perceive AI (eg, chatbots or social robots) as a friend or partner.Citation43 They turn to AI to share their emotions and disclose more about themselves in search of support, and the AI provides companionship, advice, a safe space, and empathy.Citation5,Citation24 This ultimately promotes attachment to and dependence on AI.Citation29

AI dependence did not longitudinally predict mental health problems, in contrast to the findings of some qualitative research. Replika users reported distress and negative emotions when they became overly dependent on the chatbot.Citation29 Reliance on AI can also disrupt or replace real-life relationships, which can threaten users’ mental health,Citation26 whereas other studies suggest that using AI can increase individuals’ social capital and benefit their mental health.Citation30 Why do our results differ from those of previous studies? There are several possible reasons. First, previous studies were cross-sectional and used a qualitative approachCitation26,Citation29 to show the concurrent effects of AI dependence on mental health problems. Such effects may be short-term (eg, a few days) and diminish in the long term. For example, in a longitudinal study of interactions with the social chatbot Mitsuku, Croes and AntheunisCitation6 found that relationships formed at first but weakened over time, because participants found the chatbot less attractive and empathetic and disclosed less about themselves. Such a decline in interaction with AI may weaken any long-term effect of AI dependence on mental health problems. Second, whereas previous findings were derived from studies of a single AI technology (eg, a voice assistant, social robot, or chatbot), the present study conducted an overall evaluation of AI technology. Specific and general evaluations of AI technologies may have different influences. Third, AI development is still at an early stage, and AI dependence scores remained low in this study (means of 1.52 at T1 and 1.70 at T2), so AI dependence may not yet be a substantial threat to mental health. Finally, most previous research on AI dependence and mental health problems has been conducted with adult samples, unlike the adolescent sample in this study.

Mediating Role of Motivation to Use AI

Escape motivation mediated the relationship between mental health problems and AI dependence. This result supports H3 and is consistent with previous research on technology dependence,Citation56,Citation63–65 suggesting that adolescents experiencing depression or anxiety turn to AI technology to escape their emotional problems. Several studies have examined the effects of entertainment, instrumental, and social interaction motivations on actual AI use and on intentions to continue using AI (a potential risk for AI dependence),Citation92,Citation93 while ignoring the effects of escape motivation on AI dependence. The present study fills this gap by identifying the mediating role of escape motivation in the relationship between adolescent mental health problems and AI dependence.

Social motivation also mediated the relationship between mental health problems and AI dependence, supporting H4. This is consistent with the core principles of attachment theory and need-to-belong theory, which suggest that when faced with emotional problems or stress, adolescents treat AI as a social actor, become attached to it to feel secure, and find connections with others to alleviate their negative emotions and psychological distress (eg, loneliness).Citation26,Citation29 It also accords with evidence that social motivation increases users’ intentions to continue using AI.Citation93

Neither entertainment nor instrumental motivation mediated the relationship between mental health problems and AI dependence, so H5 was rejected and H6 was supported. The finding for entertainment motivation is inconsistent with research on technology dependence suggesting that mental health distress may develop into technology dependence through entertainment motivation.Citation68,Citation69 Recent research may shed light on this: both longitudinal and cross-sectional studies show that entertainment motivation can increase intentions to continue using AICitation92,Citation93 but not subsequent actual AI use.Citation92 If entertainment motivation does not increase actual AI use, it is unlikely to contribute much to the formation of AI dependence. Another explanation is that AI has had a shorter development time than previous technologies. Traditional technologies (eg, smartphones) have a long history of creation, innovation, and application in society, which has produced mature systems and well-designed entertainment, whereas AI products are still at an early stage of development, with less mature features and less well-designed entertainment, especially for adolescents (eg, most AI products are designed for adultsCitation12).

For instrumental motivation, the nonsignificant mediation supports H6 and is consistent with previous technology research showing that mental health problems are less likely to motivate individuals to pursue instrumental needs (eg, seeking information) than social connection or escape needs.Citation75 Instrumental use of technology also has less impact on adolescents’ technology dependence than entertainment and social motivation.Citation56

Implications, Future Directions, and Limitations

This study fills a gap in the literature on AI dependence by examining, for the first time, the prevalence of AI dependence among adolescents, the longitudinal relationship between AI dependence and mental health problems, and the mechanisms linking the two. The increasing trend of AI dependence among adolescents calls for more attention to this issue in the future, but undue panic is not warranted, as the impact of AI dependence on psychological distress is limited at this stage. This is also the first study to examine AI dependence as a whole rather than assessing a specific AI product.Citation5,Citation27,Citation29,Citation35 As AI technology increasingly penetrates young people’s homes and daily lives, the results of this study could provide guidance for research and practice on AI dependence and youth mental health. The findings have theoretical implications, showing that AI technology differs from older technologies, such as smartphones or computers, in how it relates to mental health problems and technology use. This may support the uniqueness of human-AI interaction, highlighting AI not only as a mediator but also as a direct communicator or actor in social interactions.Citation11,Citation36 In addition, theories and literature from the technology dependence framework were examined in the context of AI technologies, suggesting that frameworks developed for traditional technologies can guide the exploration of current issues related to AI. Moreover, from a more ecological (rather than laboratory) perspective, this study may also indicate that AI use can help alleviate negative emotions and address mental health problems in adolescents,Citation4,Citation5,Citation12,Citation24 showing the promising applications of AI for adolescents’ emotional problems.

In terms of future research, first, general and specific assessments of AI technology should be given equal weight, because AI technologies and related products are diverse and serve different functions, and young people do not use just one type of AI technology. Focusing on both will facilitate a better understanding of the impact of AI on youth development. Second, the development of AI technology is still in its early stages, and it may be too early to speak of AI panic. Instead, because AI technology is innovating rapidly, future research should focus on how its further development affects young people. Third, research on mental health should address not only psychopathological aspects but also positive indicators (eg, well-being or life satisfaction). The current indicators (anxiety and depression) capture negative emotions, so future research should also explore the relationship between positive mental health indicators and AI dependence.

This study has several limitations. First, the data were self-reported, so subjective bias may have affected the results. Second, this study was based on Chinese adolescents, whereas technology use among adolescents (eg, adoption and type of technology) varies by country and culture.Citation94 Third, participants who dropped out and those who remained at follow-up differed on some T1 variables, but the effect sizes of these differences were too small to be of practical concern.

Conclusion

The prevalence of subjectively perceived AI dependence among adolescents was 17.14% at T1 and 24.19% at T2, indicating an upward trend. AI dependence did not predict subsequent anxiety or depression, suggesting that panic over AI technology is unnecessary at present. In contrast, mental health problems had a positive prospective effect on AI dependence, which was mediated by motivations for AI use. Specifically, AI escape motivation and AI social motivation mediated the longitudinal effects of both anxiety and depression on AI dependence, whereas AI entertainment motivation and AI instrumental motivation did not mediate the effect of either anxiety or depression. Overall, the effect sizes for the cross-lagged and mediation effects were medium.

Data Accessibility Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Ethics

The questionnaire and methodology for this study were approved by the Institutional Review Board (IRB) of the State Key Laboratory of Cognitive Neuroscience and Learning of Beijing Normal University (ethics approval number: CNL_A_0003_008). Informed consent was appropriately obtained.

Disclosure

The authors report no conflicts of interest in this work.

Additional information

Funding

References

- Gillath O, Ai T, Branicky M, Keshmiri S, Davison R, Spaulding R. Attachment and trust in artificial intelligence. Comput Human Behav. 2021;115:106607. doi:10.1016/j.chb.2020.106607

- Song X, Xu B, Zhao Z. Can people experience romantic love for artificial intelligence? An empirical study of intelligent assistants. Inf Manag. 2022;59:103595. doi:10.1016/j.im.2022.103595

- Björling EA, Thomas K, Rose EJ, Cakmak M. Exploring Teens as Robot Operators, Users and Witnesses in the Wild. Front Robot AI. 2020;7:1–15. doi:10.3389/frobt.2020.00005

- Laban G, Kappas A, Morrison V, Cross ES. Human-Robot Relationship: long-term effects on disclosure, perception and well-being. PsyArXiv. 2022. doi:10.31234/osf.io/6z5ry

- Ta V, Griffith C, Boatfield C, et al. User experiences of social support from companion chatbots in everyday contexts: thematic analysis. J Med Internet Res. 2020;22(3):1–10. doi:10.2196/16235

- Croes EAJ, Antheunis ML. Can we be friends with Mitsuku? A longitudinal study on the process of relationship formation between humans and a social chatbot. J Soc Pers Relat. 2021;38(1):279–300. doi:10.1177/0265407520959463

- Dao XQ, Le NB, Nguyen TMT. AI-Powered MOOCs: video Lecture Generation. ACM Int Conf Proceeding Ser. 2021;95–102. doi:10.1145/3459212.3459227

- Ahmed A, Hassan A, Aziz S, et al. Chatbot features for anxiety and depression: a scoping review. Health Informatics J. 2023;29(1):1–17. doi:10.1177/14604582221146719

- Kats R. Are Kids and Teens Using Smart Speakers?; 2018. Available from: https://www.insiderintelligence.com/content/the-smart-speaker-series-kids-teens-infographic. Accessed March 1, 2024.

- Bleu N. 44 Latest Voice Search Statistics For 2023. bloggingwizard; 2023. Available from: https://bloggingwizard.com/voice-search-statistics/. Accessed March 1, 2024.

- Guzman AL, Lewis SC. Artificial intelligence and communication: a Human-Machine Communication research agenda. New Media Soc. 2020;22(1):70–86. doi:10.1177/1461444819858691

- UNICEF. Adolescent Perspectives on Artificial Intelligence; 2021. Available from: https://www.unicef.org/globalinsight/stories/adolescent-perspectives-artificial-intelligence. Accessed March 1, 2024.

- JAGW. AI and Tomorrow’s Jobs; 2023. Available from: https://www.myja.org/news/latest/survey-teens-and-ai. Accessed March 1, 2024.

- Hasse A, Cortesi S, Lombana-Bermudez A, Gasser U. Youth and artificial intelligence: where we stand; 2019. Available from: https://cyber.harvard.edu/publication/2019/youth-and-artificial-intelligence/where-we-stand. Accessed March 1, 2024.

- Dai J, Scherf KS. Puberty and functional brain development in humans: convergence in findings? Dev Cogn Neurosci. 2019;39:100690. doi:10.1016/j.dcn.2019.100690

- Shidhaye R. Global priorities for improving access to mental health services for adolescents in the post-pandemic world. Curr Opin Psychol. 2023;53:101661. doi:10.1016/j.copsyc.2023.101661

- Sisk LM, Gee DG. Stress and adolescence: vulnerability and opportunity during a sensitive window of development. Curr Opin Psychol. 2022;44:286–292. doi:10.1016/j.copsyc.2021.10.005

- Crone EA, Konijn EA. Media use and brain development during adolescence. Nat Commun. 2018;9(1):1–10. doi:10.1038/s41467-018-03126-x

- Orben A, Przybylski AK, Blakemore SJ, Kievit RA. Windows of developmental sensitivity to social media. Nat Commun. 2022;13(1):1–10. doi:10.1038/s41467-022-29296-3

- Hamilton I. Artificial Intelligence In Education: teachers’ Opinions On AI In The Classroom. Forbes Advis. 2023.

- Schiel J, Bobek BL, Schnieders JZ. High School Students’ Use and Impressions of AI Tools. 2023;1–38. Available from: https://www.act.org/content/dam/act/secured/documents/High-School-Students-Use-and-Impressions-of-AI-Tools-Accessible.pdf. Accessed March 1, 2024.

- The Learning Network. What Students are Saying About ChatGPT? The New York Times; 2023.

- Lo CK. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ Sci. 2023;13(4). doi:10.3390/educsci13040410

- Björling EA, Ling H, Bhatia S, Matarrese J. Sharing stressors with a social robot prototype: what embodiment do adolescents prefer? Int J Child-Computer Interact. 2021;28:100252. doi:10.1016/j.ijcci.2021.100252

- Wiederhold BK. “Alexa, Are You My Mom?” the Role of Artificial Intelligence in Child Development. Cyberpsychol Behav Soc Netw. 2018;21(8):471–472. doi:10.1089/cyber.2018.29120.bkw

- Xie T, Pentina I. Attachment Theory as a Framework to Understand Relationships with Social Chatbots: a Case Study of Replika. Proc 55th Hawaii Int Conf Syst Sci. 2022. doi:10.24251/hicss.2022.258

- Hu B, Mao Y, Kim KJ. How social anxiety leads to problematic use of conversational AI: the roles of loneliness, rumination, and mind perception. Comput Human Behav. 2023;143747. doi:10.1016/j.chb.2023.107760

- Sandoval EB. Addiction to Social Robots: a Research Proposal. ACM/IEEE Int Conf Human-Robot Interact. 2019;526–527. doi:10.1109/HRI.2019.8673143

- Laestadius L, Bishop A, Gonzalez M, Illenčík D, Campos-Castillo C. Too human and not human enough: a grounded theory analysis of mental health harms from emotional dependence on the social chatbot Replika. New Media Soc. 2022;1(3):1–19. doi:10.1177/14614448221142007

- Ng YL. Exploring the association between use of conversational artificial intelligence and social capital: survey evidence from Hong Kong. New Media Soc. 2022;1(2):1–16. doi:10.1177/14614448221074047

- Xie T, Pentina I, Hancock T. Friend, mentor, lover: does chatbot engagement lead to psychological dependence? J Serv Manag. 2023;34(4):806–828. doi:10.1108/JOSM-02-2022-0072

- Busch PA, Mccarthy S. Antecedents and consequences of problematic smartphone use: a systematic literature review of an emerging research area. Comput Human Behav. 2021;114:106414. doi:10.1016/j.chb.2020.106414

- Orben A. The Sisyphean Cycle of Technology Panics. Perspect Psychol Sci. 2020;15(5):1143–1157. doi:10.1177/1745691620919372

- Pemberton A. Artificial intelligence helpful to teens? Newsday; 2023. Available from: https://newsday.co.tt/2023/03/31/artificial-intelligence-helpful-to-teens/. Accessed March 1, 2024.

- Skjuve M, Følstad A, Fostervold KI, Brandtzaeg PB. My Chatbot Companion - a Study of Human-Chatbot Relationships. Int J Hum Comput Stud. 2021;149. doi:10.1016/j.ijhcs.2021.102601

- Sundar SS, Lee EJ. Rethinking Communication in the Era of Artificial Intelligence. Hum Commun Res. 2022;48(3):379–385. doi:10.1093/hcr/hqac014

- Brand M, Wegmann E, Stark R, et al. The Interaction of Person-Affect-Cognition-Execution (I-PACE) model for addictive behaviors: update, generalization to addictive behaviors beyond internet-use disorders, and specification of the process character of addictive behaviors. Neurosci Biobehav Rev. 2019;104:1–10. doi:10.1016/j.neubiorev.2019.06.032

- Sullivan BM, George AM. The Association of Motives with Problematic Smartphone Use: a Systematic Review. Cyberpsychology J Psychosoc Res Cybersp. 2023;17(1):2. doi:10.5817/CP2023-1-2

- Wang Y, Liu B, Zhang L, Zhang P. Anxiety, Depression, and Stress Are Associated With Internet Gaming Disorder During COVID-19: fear of Missing Out as a Mediator. Front Psychiatry. 2022;13:827519. doi:10.3389/fpsyt.2022.827519

- Elhai JD, Levine JC, Hall BJ. The relationship between anxiety symptom severity and problematic smartphone use: a review of the literature and conceptual frameworks. J Anxiety Disord. 2019;62:45–52. doi:10.1016/j.janxdis.2018.11.005

- Wadley G, Smith W, Koval P, Gross JJ. Digital Emotion Regulation. Curr Dir Psychol Sci. 2020;29(4):412–418. doi:10.1177/0963721420920592

- Zhao T, Cui J, Hu J, Dai Y, Zhou Y. Is Artificial Intelligence Customer Service Satisfactory? Insights Based on Microblog Data and User Interviews. Cyberpsychol Behav Soc Netw. 2022;25(2):110–117. doi:10.1089/cyber.2021.0155

- Kim A, Cho M, Ahn J, Sung Y. Effects of Gender and Relationship Type on the Response to Artificial Intelligence. Cyberpsychol Behav Soc Netw. 2019;22(4):249–253. doi:10.1089/cyber.2018.0581

- Goeders NE. The impact of stress on addiction. Eur Neuropsychopharmacol. 2003;13(6):435–441. doi:10.1016/j.euroneuro.2003.08.004

- Sun J, Liu Q, Yu S. Child neglect, psychological abuse and smartphone addiction among Chinese adolescents: the roles of emotional intelligence and coping style. Comput Human Behav. 2019;90:74–83. doi:10.1016/j.chb.2018.08.032

- Zhou N, Cao H, Wu L. A Four-Wave, Cross-Lagged Model of Problematic Internet Use and Mental Health Among Chinese College Students: disaggregation of Within-Person and Between-Person Effects. Dev Psychol. 2020;56(5):1009–1021. doi:10.1037/dev0000907

- Kardefelt-Winther D. A conceptual and methodological critique of Internet addiction research: towards a model of compensatory internet use. Comput Human Behav. 2014;31(1):351–354. doi:10.1016/j.chb.2013.10.059

- Kraut R, Patterson M, Lundmark V, Kiesler S, Mukopadhyay T, Scherlis W. Internet Paradox: A Social Technology That Reduces Social Involvement and Psychological Well-Being? Am Psychol. 1998;53(9):1017–1031. doi:10.1037//0003-066X.53.9.1017

- Kiesler S, Siegel J, McGuire TW. Social psychological aspects of computer-mediated communication. Am Psychol. 1984;39(10):1123–1134. doi:10.1037/0003-066X.39.10.1123

- Huang S, Lai X, Ke L, et al. Smartphone stress: concept, structure, and development of measurement among adolescents. Cyberpsychology J Psychosoc Res Cybersp. 2022;16(5):1.

- Nick EA, Kilic Z, Nesi J, Telzer EH, Lindquist KA, Prinstein MJ. Adolescent Digital Stress: frequencies, Correlates, and Longitudinal Association With Depressive Symptoms. J Adolesc Health. 2022;70(2):336–339. doi:10.1016/j.jadohealth.2021.08.025

- Drouin M, Sprecher S, Nicola R, Perkins T. Is chatting with a sophisticated chatbot as good as chatting online or FTF with a stranger? Comput Human Behav. 2022;128:107100. doi:10.1016/j.chb.2021.107100

- Cho Y. Trajectory of Smartphone Dependency and Associated Factors in School-Aged Children. Korean J Child Stud. 2019;40(6):49–62. doi:10.5723/kjcs.2019.40.6.49

- Choi TR, Drumwright ME. “OK, Google, why do I use you?” Motivations, post-consumption evaluations, and perceptions of voice AI assistants. Telemat Informatics. 2021;62:101628. doi:10.1016/j.tele.2021.101628

- Ng YL, Lin Z. Exploring conversation topics in conversational artificial intelligence–based social mediated communities of practice. Comput Human Behav. 2022;134:107326. doi:10.1016/j.chb.2022.107326

- Meng H, Cao H, Hao R, et al. Smartphone use motivation and problematic smartphone use in a national representative sample of Chinese adolescents: the mediating roles of smartphone use time for various activities. J Behav Addict. 2020;9(1):163–174. doi:10.1556/2006.2020.00004

- Zhang MX, Wu AMS. Effects of childhood adversity on smartphone addiction: the multiple mediation of life history strategies and smartphone use motivations. Comput Human Behav. 2022;134:107298. doi:10.1016/j.chb.2022.107298

- Hazan C, Shaver PR. Attachment as an organizational framework for research on close relationships. Psychol Inq. 1994;5(1):1–22. doi:10.1207/s15327965pli0501

- Kim E, Koh E. Avoidant attachment and smartphone addiction in college students: the mediating effects of anxiety and self-esteem. Comput Human Behav. 2018;84:264–271. doi:10.1016/j.chb.2018.02.037

- Baumeister RF, Leary MR. The Need to Belong: desire for Interpersonal Attachments as a Fundamental Human Motivation. Psychol Bull. 1995;117(3):497–529. doi:10.1037/0033-2909.117.3.497

- Gao W, Liu Z, Li J. How does social presence influence SNS addiction? A belongingness theory perspective. Comput Human Behav. 2017;77:347–355. doi:10.1016/j.chb.2017.09.002

- Baumeister RF. Suicide as escape from self. Psychol Rev. 1990;97(1):90–113. doi:10.1037/0033-295X.97.1.90

- Baumeister RF. Escaping the Self: Alcoholism, Spirituality, Masochism, and Other Flights from the Burden of Selfhood. Harper Collins; 1991.

- Kwon JH, Chung CS, Lee J. The effects of escape from self and interpersonal relationship on the pathological use of internet games. Community Ment Health J. 2011;47(1):113–121. doi:10.1007/s10597-009-9236-1

- Walburg V, Mialhes A, Moncla D. Does school-related burnout influence problematic Facebook use? Child Youth Serv Rev. 2016;61:327–331. doi:10.1016/j.childyouth.2016.01.009

- Teng Z, Pontes HM, Nie Q, Griffiths MD, Guo C. Depression and anxiety symptoms associated with internet gaming disorder before and during the COVID-19 pandemic: a longitudinal study. J Behav Addict. 2021;10(1):169–180. doi:10.1556/2006.2021.00016

- Blumler JG. The role of theory in uses and gratifications studies. Communic Res. 1979;6(1):9–36. doi:10.1177/009365027900600102

- Whiting A, Williams D. Why people use social media: a uses and gratifications approach. Qual Mark Res an Int J. 2013;16(4):362–369. doi:10.1108/QMR-06-2013-0041

- Karmakar I. A review of IT addiction in IS research. AMCIS 2020 Proceedings. 2020;19:1–10.

- Huang S, Lai X, Zhao X, et al. Beyond Screen Time: exploring the Associations between Types of Smartphone Use Content and Adolescents’ Social Relationships. Int J Environ Res Public Health. 2022;19(15):8940. doi:10.3390/ijerph19158940

- Lai X, Nie C, Huang S, Yao Y, Li Y, Dai X. Classes of problematic smartphone use and information and communication technology (ICT) self-efficacy. J Appl Dev Psychol. 2022;83(19):101481. doi:10.1016/j.appdev.2022.101481

- Leung L. Stressful Life Events, Motives for Internet Use, and Social Support Among Digital Kids. CyberPsychol Behav. 2007;10(2):204–215. doi:10.1089/cpb.2006.9967

- Fischer-Grote L, Kothgassner OD, Felnhofer A. Risk factors for problematic smartphone use in children and adolescents: a review of existing literature. Neuropsychiatrie. 2019;33(4):179–190. doi:10.1007/s40211-019-00319-8

- Soh PCH, Charlton JP, Chew KW. The influence of parental and peer attachment on internet usage motives and addiction. First Monday. 2014;19(7). doi:10.5210/fm.v19i7.5099

- Kim JH, Seo M, David P. Alleviating depression only to become problematic mobile phone users: can face-to-face communication be the antidote? Comput Human Behav. 2015;51(PA):440–447. doi:10.1016/j.chb.2015.05.030

- Gözde H, Gürbüz A, Demir T, Özcan BG. Use of social network sites among depressed adolescents. Behav Inf Technol. 2017;36(5):517–523. doi:10.1080/0144929X.2016.1262898

- Cohen J. A power primer. Psychol Bull. 1992;112:155–159. doi:10.1037/0033-2909.112.1.155

- Andreas JB, Brunborg GS. Depressive symptomatology among Norwegian adolescent boys and girls: the patient health Questionnaire-9 (PHQ-9) psychometric properties and correlates. Front Psychol. 2017;8(887):1–11. doi:10.3389/fpsyg.2017.00887

- Spitzer RL, Kroenke K, Williams JBW, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006;166(10):1092–1097. doi:10.1001/archinte.166.10.1092

- Kwon M, Kim DJ, Cho H, Yang S. The smartphone addiction scale: development and validation of a short version for adolescents. PLoS One. 2013;8(12):1–7. doi:10.1371/journal.pone.0083558

- Kim D, Lee Y, Lee J, Nam JEK, Chung Y. Development of Korean smartphone addiction proneness scale for youth. PLoS One. 2014;9(5):1–8. doi:10.1371/journal.pone.0097920

- Lin YH, Lin SH, Yang CHC, Kuo TBJ. Psychopathology of Everyday Life in the 21st Century: Smartphone Addiction. In: Montag C, Reuter M, editors. Internet Addiction: Neuroscientific Approaches and Therapeutical Implications Including Smartphone Addiction. Springer Nature; 2017.

- Huang S, Lai X, Xue Y, Zhang C, Wang Y. A network analysis of problematic smartphone use symptoms in a student sample. J Behav Addict. 2021;9(4):1032–1043. doi:10.1556/2006.2020.00098

- Huang S, Lai X, Li Y, et al. Do the core symptoms play key roles in the development of problematic smartphone use symptoms. Front Psychiatry. 2022;13:959103. doi:10.3389/fpsyt.2022.959103

- Cho CH, Lee SY, Keel YH. A study on consumers’ perception of and use motivation of Artificial Intelligence (AI) speaker. J Korea Contents Assoc. 2019;19(3):138–154.

- Preacher KJ. Advances in mediation analysis: a survey and synthesis of new developments. Annu Rev Psychol. 2015;66:825–852. doi:10.1146/annurev-psych-010814-015258

- Cole DA, Maxwell SE. Testing Mediational Models with Longitudinal Data: Questions and Tips in the Use of Structural Equation Modeling. J Abnorm Psychol. 2003;112(4):558–577. doi:10.1037/0021-843X.112.4.558

- Roeder KM, Cole DA. Prospective Relation Between Peer Victimization and Suicidal Ideation: Potential Cognitive Mediators. Cognit Ther Res. 2018;42(6):769–781. doi:10.1007/s10608-018-9939-0

- Currie C, Zanotti C, Currie D, et al. Social Determinants of Health and Well-Being among Young People. Health Behaviour in School-Aged Children (HBSC) Study: International Report from the 2009/2010 Survey. Int J Med. 2012. doi:10.1358/dof.2005.030.10.942816

- Orth U, Meier LL, Bühler JL, et al. Effect Size Guidelines for Cross-Lagged Effects. Psychol Methods. 2022. doi:10.1037/met0000499

- Shin M, Juventin M, Ting J, Chu W, Manor Y, Kemps E. Online media consumption and depression in young people: a systematic review and meta-analysis. Comput Human Behav. 2022;128:107129. doi:10.1016/j.chb.2021.107129

- Ng YL. A longitudinal model of continued acceptance of conversational artificial intelligence. SSRN. 2023;1–35. doi:10.2139/ssrn.4348453

- Xie Y, Zhao S, Zhou P, Liang C. Understanding Continued Use Intention of AI Assistants. J Comput Inf Syst. 2023;1(2):1–14. doi:10.1080/08874417.2023.2167134

- Jackson LA, Liang WJ. Cultural differences in social networking site use: a comparative study of China and the United States. Comput Human Behav. 2013;29(3):910–921. doi:10.1016/j.chb.2012.11.024