Abstract

A significant body of evidence from cross-linguistic and developmental studies converges to suggest that co-speech iconic gesture mirrors language. This paper aims to identify whether gesture reflects impaired spoken language in a similar way. Twenty-nine people with aphasia (PWA) and 29 neurologically healthy control participants (NHPs) produced a narrative discourse, retelling the story of a cartoon video. Gesture and language were analysed in terms of semantic content and structure for two key motion events. The aphasic data showed an influence on gesture from lexical choices but no corresponding clausal influence. Both groups produced gesture that matched the semantics of the spoken language and gesture that did not, although there was one particular gesture–language mismatch (semantically “light” verbs paired with semantically richer gesture) that typified the PWA narratives. These results indicate that gesture is both closely related to spoken language impairment and compensatory.

Introduction

The relationship between gesture and spoken language is an inveterate topic in linguistic and psycholinguistic research, and investigation into the gestural abilities of people with aphasia (PWA) stretches back decades (e.g. Cicone, Wapner, Foldi, Zurif, & Gardner, Citation1979). Co-speech iconic gestures are of particular interest because these are the gestures which occur spontaneously alongside language, depicting attributes of the events or objects under discussion without being conventionalised symbols (McNeill, Citation1992). For example, in English a spoken sentence such as, “he swung over to the other side”, might be accompanied by a gesture where the hands move from right-to-left in an arc-shape. Gesture and language co-ordinate in terms of both meaning and timing (Streeck, Citation1993) and this synchrony raises questions about how the two modalities encode different aspects of meaning (linguistic and imagistic) and yet operate in collaboration.

Gesture and language are manifestly not the same thing but, as the above example shows, they are intrinsically linked. Two main groups of theories exist in the literature about the nature of this link. (1) Some researchers (de Ruiter, Citation2000; Kita & Özyürek, Citation2003; McNeill, Citation2000) have suggested that gesture arises pre-linguistically during conceptual preparations for speaking but is influenced by language parameters (such as clause structure and lexical semantics) via feedback from linguistic processing. In this framework, the link occurs at the interface between thinking and speaking and so can be summarised as the “Interface Hypothesis”. (2) Others (Butterworth & Hadar, Citation1989; Hadar & Butterworth, Citation1997; Krauss & Hadar, Citation1999; Krauss, Chen, & Gottesman, Citation2000) suggest that gesture arises closer to language during lexical retrieval, a framework that can be summarised as the “Lexical Hypothesis” (see de Ruiter and de Beer (Citation2013) for a recent detailed argument for reducing these sets of theories to two meaningfully different hypotheses). In the current paper, these two main hypotheses are differentiated by making a direct comparison between the key dataset in the gesture literature (Kita & Özyürek, Citation2003) and new corresponding data from the current study on a large group of speakers with aphasia.

It may be argued that there is no meaningful distinction between a gesture which originates from lexical retrieval processes and one which originates from the interface between thinking and speaking, if indeed that interface is influenced by language parameters. Although this would be the case if all the models licensed feedback from language to thought, the Lexical Hypothesis models posit one-directional processing so, in the case of fluent speech, there is no online interaction between language and conceptualisation. In the case of lexical retrieval difficulties, the Lexical Hypothesis suggests either that gesture provides a semantic boost (Butterworth & Hadar, Citation1989; Hadar & Butterworth, Citation1997; Krauss & Hadar, Citation1999; Krauss et al., Citation2000), or a “re-run” through conceptual and/or lexical selection processes (Hadar & Butterworth, Citation1997). This distinction is important because the Interface Hypothesis thereby provides a mechanism (through feedback) for gesture to either reflect linguistic choices made in the face of language difficulty or to compensate for them. In the Lexical Hypothesis, because of the lack of feedback, gestures cannot themselves adapt to difficulties experienced in the language system, but instead their role is to maintain key semantic information whilst a new conceptualisation (Hadar & Butterworth, Citation1997) or new lexical search process (Butterworth & Hadar, Citation1989; Hadar & Butterworth, Citation1997; Krauss & Hadar, Citation1999; Krauss et al., Citation2000) is carried out. This is a significant distinction between the two main hypotheses and the most relevant one if these models are to be used to explore gesture and language impaired data.

The key dataset in this debate comes from Kita & Özyürek (2003), who videoed healthy adult speakers of English, Turkish and Japanese, talking about two events depicted in a cartoon in which a character changes location (“swinging” and “rolling down”). They found that speakers used gesture which matched the semantic and syntactic packaging of information in their spoken language. The language used to describe motion events across languages can (optionally) include information about the change of location, the path (the start point, route or end point of the movement) and the manner in which the movement is carried out (Talmy, 1988, 2000). Manner meaning is multidimensional (Slobin, 2004; Talmy, 2000), including information about trajectory (e.g. “swing”), motor pattern (e.g. “fly”), rate (e.g. “run”) and attitude (e.g. “sneak”). Further, the semantics of a manner verb can include any number and combination of these components, or it can contain none of them (e.g. “go”), and the combination will vary across languages.

The manner verbs “swing” and “roll” were of interest to Kita & Özyürek (2003) because these verbs package manner and path information in two distinct ways. “Swing” is of interest because in English (but not all other languages) the arc-shaped trajectory of the motion (i.e. the manner) is encoded in the lemma (the encoding of path information was not under scrutiny in their study). “Roll” is of interest because in English, manner and path information are commonly encoded in the same clause, and when both are expressed, manner information is most likely to be encoded in the verb and path information in the prepositional phrase. Although this is not the only means English speakers have of describing a “rolling down” event, it is the linguistically unmarked option.

In Kita & Özyürek’s study (Citation2003), the English speakers all used the word “swing” whilst the Japanese and Turkish speakers, who lacked an equivalent word in those languages, used words similar to “go” and “fly” which include information about the change of location or motor pattern but do not include the arc meaning. The authors found that all but one of the 14 English speakers who gestured for this event exclusively used an arc-shaped movement, whereas less than a quarter of the Japanese speakers and less than half of the Turkish speakers produced arc gestures exclusively. Instead, the majority of participants in the Japanese/Turkish groups preferred to use either arc gestures in combination with straight gestures, or exclusively straight gestures. Kita and Özyürek (Citation2003) argued that the Japanese and Turkish participants’ more frequent selection of straight gestures reflected the semantics of the motion event in their languages influencing gesture choices.

As to the “rolling down” event, the 14 English speakers who gestured used the verb “roll” with a prepositional phrase (e.g. “down the hill”) in a single syntactic clause, whereas all but one of the 14 Japanese speakers and 15 of the 16 Turkish speakers used two clauses. The accompanying gesture showed some similarities and some key differences across speakers of the different languages. The majority of speakers (around 70%) from all three groups gestured both manner and path information in a single gesture (e.g. hand circling in the air while tracing a downward trajectory). However, whereas this gesture type was used on its own by the majority of English speakers (80%), it was used in combination with either a manner-only or path-only gesture by the majority of Japanese (80%) and Turkish (65%) speakers. Kita and Özyürek (2003) claim that the gestural patterns of speakers of Japanese and Turkish mirror the way key information was packaged in separate clauses in their verbal descriptions.

Looking more broadly at the cross-linguistic research, it appears that although there is substantial cross-linguistic variability in the rate (and level of detail) at which manner and path are expressed verbally (Slobin, Citation2006), when speakers gesture, they tend to prefer to gesture only one of these components at a time. Moreover, speakers across languages tend to favour the gestural expression of path information over manner (Chui, Citation2012), a pattern that has also been shown in the narratives of English speakers for other motion events (Cocks, Dipper, Middleton, & Morgan, Citation2011; Cocks, Dipper, Pritchard, & Morgan, Citation2013; Dipper, Cocks, Rowe, & Morgan, Citation2011). The tendency of all the three groups of speakers in Kita and Özyürek (Citation2003) to use gestures where manner and path are simultaneously depicted is therefore uncommon, and the tendency of the English speakers to do so exclusively is also particularly marked. This markedness may reflect the unusual nature of the cartoon event being described as “rolling” by these speakers, in which the main character swallows a bowling ball which then propels him down a hill. The character himself does not roll but the ball inside him does, creating a particular narrative or pragmatic impetus to depict the manner of movement, an impetus which could account for the high frequency of speakers using gestures depicting both manner and path information.

How can the data from speakers with aphasia add to this theoretical description of language and gesture relationships? Aphasia is a language impairment acquired through damage to the brain, most commonly from stroke, which can affect spoken language production. Specific features of language are usually damaged whilst others remain intact, meaning language can be impaired at individual levels of processing. For example, some speakers will have a clear idea about the meaning they want to express whilst being unable to access the words which represent that meaning, causing them to make lexical errors. A common lexical error across aphasia types is to substitute a difficult to retrieve verb with a semantically “light” verb containing little semantic information, such as come, go, make, take, get, give, do, have, be, put (Berndt, Haendiges, Mitchum, & Sandson, Citation1997). Other speakers with aphasia may know the words and their meaning, but have lost the links between those words and the clause structures in which they would be used, causing them to make clausal errors. Although these error types are characteristic of different types of aphasia, they are not confined to a single type and can be found across aphasia types, or can be produced by the same speaker. An example of both error types being produced by a single speaker comes from Dipper et al. (Citation2011), where the participant exhibits lexical difficulty (“the /ka/ the /ke/ the /pu/ um pissy er pussy cat no the um bird”) as well as clausal difficulty (“he rolls and keeps going falling falling falling falling”).

In order to evaluate the theories of gesture production outlined above, it is necessary to make a distinction between gestures which reflect the semantics of an individual word and those which reflect a larger unit of meaning. The reason for this is that, if a gesture coordinates with a unit larger than a single word, then it is likely to originate in conceptual processing at a stage before meaning is matched with individual lexical items (Hadar, Wenkert-Olenik, Krauss, & Soroker, Citation1998; Kita & Özyürek, Citation2003). On the other hand, when gestural meaning reflects lexical meaning, it is possible that the gesture originates more directly from the lexical selection process (Butterworth & Hadar, Citation1989). Since lexical errors can be differentiated from clausal errors in the spoken language of people with aphasia, it is possible to differentiate gestures accompanying lexical errors from those accompanying clausal errors and thus to distinguish between the Lexical Hypothesis and the Interface Hypothesis.

The Interface Hypothesis has only been directly tested with speakers with aphasia in two single case studies (discussed below) and not by larger-scale studies. The evidence base from large-scale gesture studies in aphasia is at present equivocal as to which model is more accurate. There is evidence of aphasia affecting the frequency of co-speech gesturing, with a body of evidence indicating that, as a group, people with aphasia use more iconic gestures per word than do healthy controls (Feyereisen, 1983; Hadar, Burstein, Krauss, & Soroker, 1998; Kemmerer, Chandrasekaran, & Tranel, 2007; Lanyon & Rose, 2009; Pedelty, 1987; Sekine, Rose, Foster, Attard, & Lanyon, 2013), but this analysis does not help us to distinguish between the two hypotheses outlined above. The studies which look at the language profile of speakers with aphasia and attempt to relate specific aspects of it to gesture do provide some evidence pointing to a role for lexical knowledge, although they are agnostic about the mechanism for this effect. There is conflicting evidence about whether specific gesture types are associated with clinical aphasia sub-types, but interestingly there is increasing consensus that (a) word-finding difficulty in aphasia produces an increase in iconic gestures; and (b) people with aphasia who have retained lexical semantic knowledge produce more meaning-laden gestures, including iconic co-speech gesture, than those with impaired semantics (Cocks et al., 2013; Hadar et al., 1998; Hogrefe, Ziegler, Weidinger, & Goldenberg, 2012; Sekine et al., 2013).

Two single-case studies (Dipper et al., Citation2011; Kemmerer et al., Citation2007), both of speakers with aphasia who primarily have word-finding difficulties, have considered the “swing” and “roll” events from the Tweety & Sylvester cartoon story used in the present study. For the “rolling down” event, neither participant was able to express both manner and path information in a single grammatical clause, with the participant in Kemmerer et al. (Citation2007) using an incomplete sentence lacking a verb (“He … down, whoa!”) and the participant in Dipper et al. (Citation2011) using two clauses (“…and he rolls and keeps going falling, falling, falling”). In the accompanying gesture both participants depicted only manner information (with the participant in the Kemmerer et al. (Citation2007) study also adding a separate path-only gesture, but not conflating the two). In both cases, gesture reflected the spoken language because manner and path information was neither verbally expressed together (in a clause) nor expressed together gesturally. This pattern is consistent with the idea, formalised in the Interface Hypothesis, that co-verbal gesture reflects lexical and syntactic choices made at the moment of speaking. However, one set of data is also consistent with Lexical Hypothesis – the match between the use of the word “roll” and the manner gesture in the Dipper et al. (Citation2011) case study. For the “swinging” event, the participants from both studies verbally produced “go” whilst gesturing an arc-shaped trajectory. In contrast to the pattern found for “roll”, for “swing” the co-speech gesture does not reflect spoken language because it depicts information not available in the semantics of the path verb spoken alongside it. 
These latter findings provide support for de Ruiter’s (Citation2000, Citation2006) version of the Interface Hypothesis, in which lexical difficulties lead to a compensatory effect in the accompanying gesture in order to achieve the original communicative intention. In the Lexical Hypothesis, the gesturing of an arc-shaped trajectory would be explained in terms of an attempt to boost the semantics of “swing” or to maintain its key features whilst a new conceptualisation or lexical search was undertaken.

From these two single-case studies of speakers with aphasia, there is partial evidence that co-speech gesture can both augment impaired language by providing missing semantic detail (“swing”); and can mirror impaired language (“roll”). Although it may seem that these two accounts are in competition, it is possible that both are processes used by speakers according to changing pragmatic and narrative demands and, in speakers with aphasia, according to the changing demands of linguistic processing. These possibilities need further investigation using larger groups of speakers.

The current study

The Kita and Özyürek (2003) methodology was used to determine whether co-speech iconic gestures produced by native English speakers with aphasia reflected their lexical errors and/or their clausal errors, in order to distinguish between two competing hypotheses about the gesture–language link. A further analysis, focussing on the semantic match between gesture and spoken language, was also included. In line with previous cross-linguistic research, the gesture of speakers with aphasia was expected to differ from that of neurologically healthy speakers only where their language also differed. The following predictions were made:

In line with the Interface Hypothesis (but not the Lexical Hypothesis), when speakers with aphasia made clausal errors in talking about the “roll” event (resulting in manner and path information not being combined into a single clause) their gesture would also separately convey this information.

In terms of lexical errors, speakers with aphasia would be likely to replace the words “swing” and “roll” with alternatives which are less semantically specified (Berndt et al., 1997). The Interface Hypothesis predicts that when speakers with aphasia substitute the target verbs with semantically light verbs, their gestures would sometimes reflect this error by also containing only basic information about the event, but at other times would compensate by providing extra information. The Lexical Hypothesis would predict that only the latter pattern (compensation) would be evident.

Two distinct types of mismatch between the semantic content of gesture and language are possible: semantically rich language paired with light gesture and light language paired with rich gesture. The Lexical Hypothesis predicts that the first pairing will be rare in both the neurologically healthy speakers and those with aphasia, because the hypothesis is based on the assumption that iconic gesture occurs in response to lexical retrieval difficulty, and in such contexts the gesture will provide the key information. In contrast, the Interface Hypothesis would suggest that both pairings are likely to occur in the narratives of neurologically healthy speakers as alternative ways to achieve a communicative intention; but for speakers with aphasia it will be more likely (due to linguistic difficulties) that the communicative intention would be accomplished with the second pairing.

Methodology

Participants

Twenty-nine people with aphasia (PWA) and 29 neurologically healthy controls (NHP) living in London and the South East of the UK participated in this study. These participants were recruited for a broader investigation of gesture production in aphasia, some of the results of which are reported in Cocks et al. (2013). Participants were required to have normal hearing and vision (with correction), normal motor movements and no history of cognitive or other impairment that would affect gesturing. The groups were matched in terms of age, gender and education. The average age of participants was 60 years (PWA: mean = 60.9, s.d. = 14.85; NHP: mean = 59.69, s.d. = 13.63). Each group was similarly balanced in terms of gender (PWA: 12 female and 17 male; NHP: 18 female and 11 male) and all but one (from the PWA group) had completed secondary level education or higher. No participant presented with limb apraxia, and all participants used English as their first language and were able to give informed consent to participate.

The participants with aphasia were all more than a year post-stroke (16 months–32 years), were recruited via community stroke groups, and had mild–moderate aphasia of various types. Although not formally tested, no participant self-reported any difficulties with speech, such as verbal apraxia, and none were observed during the study. Please refer to Table 1 for a summary of PWA scores and aphasia classifications on the Western Aphasia Battery – Revised (Kertesz, 2006), as well as information about their performance on the background tasks.

Table 1. Summary of participants’ background test scores.

Materials and procedure

Participants were shown the Sylvester and Tweety cartoon, “Canary Row”. The video was shown to participants in eight clips to reduce working-memory load, and they were then asked to retell each clip to someone who had not seen it. A description of each of the eight scenes is included in the Appendix. Gesture was not mentioned in the instruction. The descriptions of the key “swing” and “roll” events in each participant’s retelling were identified by one researcher, and gestures and language depicting these events were separately analysed. All gestures that did not occur alongside language or that were not iconic were discounted. The resulting set of gestures was then categorised as follows: for the “swing” event the gesture was categorised as either “Arc-shaped” or “Straight”; and for the “roll” event the categories were “Path only”, “Manner only” or “Manner and path conflated”. The language was coded for the verb used and for the structure of the clause. All coding was checked blind by a second rater, with 87% agreement, and disagreements were resolved via discussion.
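The reliability check described above can be illustrated with a minimal sketch. It assumes that the reported figure is simple percent agreement (the proportion of items given the same code by both raters), which the text does not state explicitly. The function name `percent_agreement` and the eight example codes are hypothetical and are not the study's data.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of items that two raters coded identically."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must code the same items")
    if not rater_a:
        raise ValueError("no items to compare")
    agreed = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * agreed / len(rater_a)


# Hypothetical codes for eight "roll" gestures (illustrative only).
rater_1 = ["path", "path", "manner", "path", "conflated", "path", "manner", "path"]
rater_2 = ["path", "path", "manner", "path", "path", "path", "manner", "path"]

agreement = percent_agreement(rater_1, rater_2)

# Indices of items the raters would need to resolve by discussion.
disagreements = [i for i, (a, b) in enumerate(zip(rater_1, rater_2)) if a != b]
```

Percent agreement does not correct for agreement expected by chance; a chance-corrected statistic would need a different calculation.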

Results

The “swing” scene

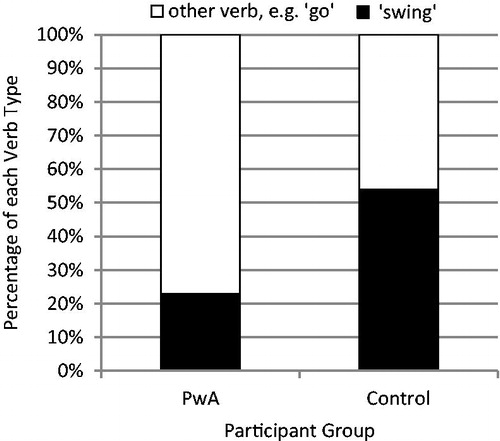

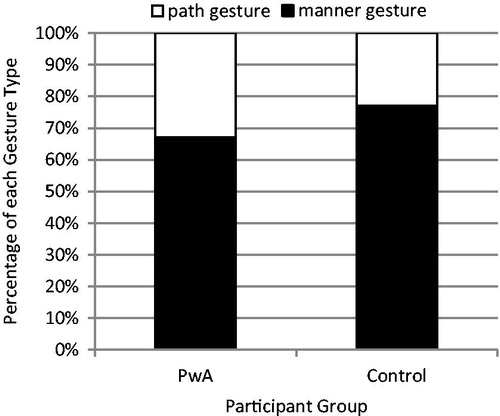

A one-tailed Fisher’s exact test indicated that controls used the word “swing” significantly more often when describing the “swing” event than the PWA (controls = 54%, PWA = 23%), p < 0.001. Twice as many PWA as controls gestured during their description of the “swing” scene (PWA = 76%, Controls = 38%). A similar percentage of both groups used arc-shaped gestures when describing this scene (PWA = 68%, Controls = 77%). The words used by each group are presented in Figure 1, and the semantic content of their gestures in Figure 2. In contrast to the current study, all 14 of the comparator participants (i.e. the English speakers in Kita and Özyürek (2003)) said “swing” alongside a gesture with an arc-shaped trajectory.
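For readers unfamiliar with the test, a one-tailed Fisher’s exact test on a 2 × 2 table can be sketched as follows. This is a minimal illustration using only the Python standard library; the function name `fisher_exact_one_tailed` is hypothetical, and the counts in the example are invented for illustration because the raw counts behind the percentages above are not given in the text.

```python
from math import comb


def fisher_exact_one_tailed(a, b, c, d):
    """One-tailed Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].

    Returns the probability, with all margins fixed, of observing a count
    in the top-left cell at least as large as `a`.
    """
    row1 = a + b          # size of group 1
    col1 = a + c          # total number of "target" outcomes
    n = a + b + c + d     # total number of observations
    upper = min(row1, col1)
    favourable = sum(
        comb(row1, k) * comb(n - row1, col1 - k)
        for k in range(a, upper + 1)
    )
    return favourable / comb(n, col1)


# Hypothetical counts (NOT the study's data): rows are groups,
# columns are "used the target verb" versus "used another verb".
controls = (7, 3)
pwa = (2, 8)
p_value = fisher_exact_one_tailed(*controls, *pwa)
```

The same probability should be obtainable from `scipy.stats.fisher_exact` with `alternative="greater"` where SciPy is available.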

The “roll” scene

Lexical level

There were no significant differences between groups in either language or gesture for this scene. To verbally describe the “roll” scene, most participants used a word other than “roll”, usually “go” (PWA = 70%, Controls = 52%). The majority of participants in both groups gestured when describing the “roll” event (PWA = 62%, Controls = 72%), and the majority gestured only path information (PWA = 80%; Controls = 88%).

The lexical choices made by the participants in this study differed from those of the American English speaking participants in Kita and Özyürek (2003), where all but one of these comparator participants used the word “roll”. Two separate Fisher’s exact tests indicated that path gestures were significantly more prevalent for the two groups in the present study than for the comparator participants (PWA = 80%, Comparators = 33%, p < 0.001; Controls = 88%, Comparators = 33%, p < 0.001). Manner–Path conflating gestures were extremely rare (one token from the PWA and two from the controls), which was in marked contrast to all three of the groups in the study by Kita and Özyürek (2003).

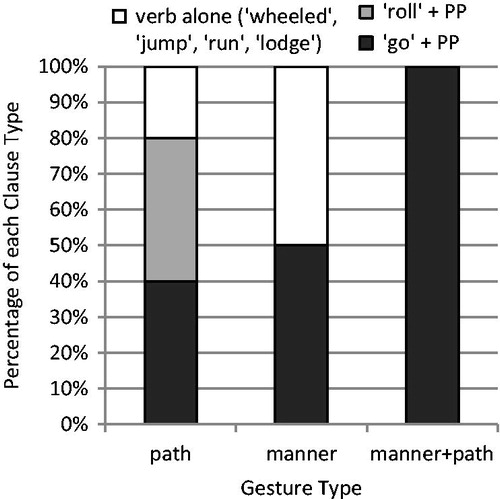

Clausal level

Although the American participants in Kita and Özyürek (2003) and the controls in the present study all produced verbal descriptions comprising a verb and a prepositional phrase in a single clause, this was not the case for all the PWA. Eleven of the 18 verbal descriptions of “roll” that were produced with gesture by PWA did include a verb (either “go” or “roll”) with a prepositional phrase (for example, “down the hill”), but there were also seven instances where a verb was produced on its own without a prepositional phrase. The accompanying gesture was mostly path only across all types of clause. Figure 3 shows how the different gesture types were distributed across these clause types in the narratives produced by PWA.

Semantic match

To further investigate the effect of aphasia on gesture, the data were subjected to a second analysis not included in Kita and Özyürek (2003). In this analysis, the gesture was considered in terms of its semantic match with the accompanying verbal language. The main verbs were coded based on semantic weight, with the verbs come, go, take and get coded as semantically light and all others coded as semantically heavy (Berndt et al., 1997; Hostetter, Alibali, & Kita, 2007). Because there is no corresponding heavy/light distinction made in gesture categorisation, a coding decision was made based on similarity with semantically light language: in spoken language “light” verbs are highly frequent and convey basic information about events or states, such as the fact that something moved, and so the gestures most similar to this in terms of both frequency and paucity of semantics conveyed are path gestures. See Table 2 for examples of light and heavy language and gesture, and Table 3 for the procedure for categorising matches and mismatches.
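The coding rule described in this section can be expressed as a short sketch. The light-verb list and the treatment of path gestures as light come from the text above; the treatment of “straight” gestures as light, the short gesture labels and the function names are assumptions introduced for illustration, and the sketch is not the authors’ coding procedure.

```python
# Verbs coded as semantically light in the text; all others are heavy.
LIGHT_VERBS = {"come", "go", "take", "get"}

# Path-only gestures are treated as light, as described in the text.
# Treating "straight" gestures as light is an ASSUMPTION made here.
LIGHT_GESTURES = {"path", "straight"}
HEAVY_GESTURES = {"arc", "manner", "conflated"}


def verb_weight(verb_lemma):
    """Code a main verb (given as a lemma, e.g. "go") as light or heavy."""
    return "light" if verb_lemma.lower() in LIGHT_VERBS else "heavy"


def gesture_weight(gesture_type):
    """Code a gesture category as light or heavy."""
    if gesture_type in LIGHT_GESTURES:
        return "light"
    if gesture_type in HEAVY_GESTURES:
        return "heavy"
    raise ValueError(f"unknown gesture category: {gesture_type!r}")


def classify_pair(verb_lemma, gesture_type):
    """Label a verb-gesture pair as a match or as one of two mismatch types."""
    v, g = verb_weight(verb_lemma), gesture_weight(gesture_type)
    if v == g:
        return "match"
    return f"{v} verb + {g} gesture"
```

Under these assumptions, `classify_pair("go", "arc")` yields the light-verb-with-heavy-gesture mismatch, and `classify_pair("go", "path")` yields a match.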

Table 2. Examples of light/heavy language and gesture.

Table 3. Gesture–language matches and mismatches.

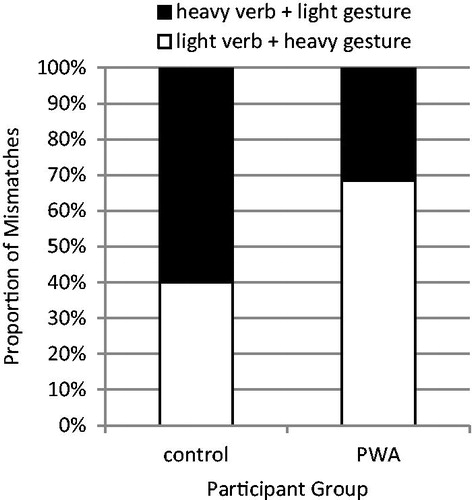

Because this was not an analysis carried out by Kita and Özyürek (2003), only data from the present study were considered. Both groups produced more matches than mismatches overall (PWA = 57%, Controls = 59%), with no significant difference in these proportions. The mismatched gesture and language data were further considered to look at the relative semantic weight carried by the gesture and the language in these cases (Figure 4). A one-tailed Fisher’s exact test indicated that PWA paired semantically light verbs with semantically heavy gestures significantly more often than controls (PWA = 68%, Controls = 40%, p < 0.001).

Summary

For the “swing” event, participants with aphasia used the word “swing” less often than did the controls who, like the comparator participants in Kita and Özyürek (2003), used it on the majority of occasions. Arc-shaped trajectory gestures were the most common for all English speakers (those with aphasia and healthy speakers in both this study and the study by Kita and Özyürek (2003)) when describing the swing scene. For the “roll” event, both participant groups in the present study used the word “roll” less often than the comparator participants in Kita and Özyürek (2003). Both groups in the present study also differed from those in Kita and Özyürek’s study, using mostly path gestures when describing the “roll” scene. However, this is consistent with the fact that the word “roll” was also used less. There were no differences between the participant groups in the overall proportion of gesture–language matches. For the mismatches, however, there was a significant group difference: PWA used semantically “light” verbs with semantically richer gestures more often than controls.

Discussion

The literature suggests two potential relationships between gesture and language: (1) gesture arises during conceptual preparations for speaking (the Interface Hypothesis), or (2) gestures are generated from lexical semantics (the Lexical Hypothesis). The data presented here comprise the first equivalent dataset from speakers with aphasia to test these competing theories. The results indicate that gesture is closely related to the content of spoken language even in the case of aphasia, and that gesture sometimes reflects the semantic properties of the impaired language but at other times is compensatory. As such, these results tend to favour the Interface Hypothesis over the Lexical Hypothesis.

To reach this conclusion, two key events (“swing” and “roll”) were considered using both an established analysis and a new investigation considering the semantic match between gesture and the spoken language that accompanies it. The current study found that a minority of speakers with aphasia used the word “roll”, and an even smaller group gestured manner information. Conversely, although a minority of speakers with aphasia used the word “swing”, the majority gestured the arc-like trajectory. These findings indicate that there is a relationship between lexico-semantics and gesture but that this is not the only influence, because at times the gesture augmented the semantic content of the spoken language (see below for discussion of how the two hypotheses account for these findings). The different link between gesture and spoken language for each motion event will also be unpacked in the sections that follow.

In describing the “roll” event, both speakers with aphasia and controls overwhelmingly opted to gesture path but not manner information. This could be accounted for by the corresponding infrequent use of the target manner verb by both groups of participants. In addition, it should be recalled that the “roll” event depicted in the cartoon is not a typical “rolling” event because it involves the main character swallowing a bowling ball which then propels him down a hill. It was argued in an earlier section of the current paper that the unusual nature of the event may create a narrative impetus to describe the manner of motion, but this impetus was not apparent in the present study in the sense that neither group overwhelmingly used the word “roll”. The unusual nature of the event would have also made the lexical choice difficult for all speakers and particularly so for the speakers with aphasia, and it may be the case that the lack of a strong lexico-semantic influence from one specific word-form (is the character rolling or is he tumbling, bowling or falling down the hill?) had an effect on the gesture. Note that it would have been possible for the gesture to have compensated for the lack of a suitable verb by depicting the features of the event that were most salient or most relevant to the narrative (i.e. both the manner of movement and the downward path) but this was not the preferred choice of the speakers in the present study. Instead, the choice of a verb whose meaning does not contain manner information (e.g. “go”) seems to have led to a corresponding absence of manner meaning in the gesture. This pattern is consistent with Kita and Özyürek’s version of the Interface Hypothesis, in which the choice of the word “go” would have influenced the gesture to depict path information, and would be explained by de Ruiter as a decision related to the communicative intention (i.e. that the path of movement was the more important part of the message).

The Lexical Hypothesis cannot explain this gesture–speech pairing so easily: if, as is likely, the difficulty here for both speakers with aphasia and controls is the lack of a readily available lexical label, then the Lexical Hypothesis would predict that the gesture would contain the relevant core semantic features of the event. The post-semantic lexical route of Hadar and Butterworth (Citation1997) involves those key features (e.g. path and manner) being held in imagistic memory whilst an attempt is made to match a lexeme to the lemma; and the conceptual route involves a refocusing on the core features (e.g. manner and path) when selection fails. In both cases the most likely outcome would be a gesture containing both manner and path information.

The lexico-semantic knowledge about the word “swing” appears to have been much stronger for all our English-speaking participants. In the case of the Japanese and Turkish speakers in Kita and Özyürek (Citation2003), the authors argue that the lack of a lexical label “swing” to describe the arc-shaped motion discouraged those speakers from depicting the arc in their gestures. For the speakers with aphasia in the present study, the word “swing” might have become unavailable or difficult to access due to impairment, but would once have been present in the lexicon. Our data indicate that this inaccessible word nevertheless exerted a semantic influence on the accompanying gesture. This reflects the strength of the lemma (the lexical meaning) regardless of whether the speaker can access the spoken form of the word (lexeme). The lemma exists (or once existed) in their lexicon even though it cannot now be retrieved. In support of this proposal, there were also a notable number of occasions in which the healthy English speakers in the present study did not use the verb “swing” but gestured an arc-shaped motion. Again, this is most likely due to the fact that the lexical label, although not chosen, would be available in their lexicon. If there was an influence from the lemma “swing” even when the lexeme “go” was produced then the gestural content (an arc) is consistent with both the Interface Hypothesis and the Lexical Hypothesis.

It was also predicted that the narratives from people with aphasia would contain instances of intact language processing at the clausal level, and that when this was the case, they would show the same patterns of semantic match between gesture and clause structure as healthy speakers. For the “roll” event, if the language combined a manner verb with a prepositional phrase then gesture would also combine manner and path information. The data did not support this prediction because, when the speakers with aphasia used such structures, they accompanied their verbal description with gesture depicting only path information. This pattern is in conflict with the Interface Hypothesis, in which the verbal combination of manner and path information in a single clause predicts the same combination in gesture. It should be noted, however, that there were fewer instances of manner verbs being used in combination with prepositional phrases than expected, because of the tendency of speakers with aphasia to either use verbs without prepositions, or to use verbs with no manner semantics (e.g. “go”) alongside prepositions. This resulted in limited data with which to test the Interface Hypothesis. In future research, it would be interesting to explore whether a memory store for manner information was impaired in the participants with aphasia, which would suggest not only that manner was absent from their descriptions, but that it may not have been encoded. However, the latter pattern (“go” and a prepositional phrase) was also more prevalent in the data of the control group than the literature had led us to expect for English speakers.

The differences between the data from the control group in the present study (the majority of whom were British English speakers) and the American English speakers reported in Kita and Özyürek (Citation2003) were unexpected and, in the case of “roll” in particular, were striking. These differences could stem from various sources. Firstly, they could have been the result of the difference between the mode of presentation of the cartoon used in Kita and Özyürek’s study (presented as a whole) and our presentation of it (in eight sections), which could have disrupted the narrative flow. It is also possible that the difference in nationality between participant groups was a confounding factor, but if this were the case it would challenge the Interface Hypothesis, in which gesture reflects purely linguistic factors, such as clause structure. The differences between the control data presented here and the comparative dataset reported by Kita and Özyürek (Citation2003) may instead have been an artefact of the participant selection process. Kita and Özyürek (Citation2003) indicate that they only included participants who gestured for both key scenes (page 20, paragraph 4 and page 23, paragraph 3), rather than including all participants who met the selection criteria and then analysing every occasion in their narratives on which gesture was produced alongside relevant language (as is the case in the present study). A final possibility is an effect of age on gesture. It is likely that the mean age of the participant group in the present study was considerably higher than that of the comparator group in Kita and Özyürek (2003), because of the relatively high mean age of the group of speakers with aphasia to which participants were matched. There is evidence that aging impacts on gesture production in terms of frequency (Cohen & Borsoi, Citation1996; Feyereisen & Havard, Citation1999) but no corresponding evidence relating to the match of gesture with verbal language, which is the possibility under discussion in the current study.

Our analysis of the mismatched gesture and language pairings, in which either a semantically rich word (“roll” or “swing”) was paired with a semantically light gesture (path information only) or vice versa, similarly showed a different pattern for each word. For “roll” it did not matter what information the language conveyed; path predominated in the gesture. For “swing”, on the other hand, although the language tended to be semantically light (“go”), information about the arc-shaped trajectory was consistently gestured. The latter mismatch could be taken as evidence that gesture can compensate for lexical difficulty, and indeed there is further evidence for gestural compensation in our data. Firstly, although both the PWA and the control participants produced descriptions where the gesture provided more meaning than language, controls produced as many pairings where the opposite relationship held but the PWA did not. This suggests that, in the language of neurologically healthy people, it is common for verbal language and gesture to share the semantic burden and, moreover, to take turns at carrying relatively more semantic weight. These findings also suggest that this is not the case for speakers with aphasia, for whom it is often difficult for verbal language to carry the semantic burden alone.

There was a second feature of the data which appeared to indicate gestural compensation for semantic deficiency in verbal language. When compared to controls, the participants with aphasia used the word “swing” less often but gestured twice as much. This increase in the frequency of gesture tended to occur in the context of other signs of word-finding difficulty, such as hesitation, repetition and circumlocution (see Murray and Clark (Citation2006), for a list of indicators of word retrieval difficulty), and this increase in situations of word-finding difficulty reflects the pattern found beyond this single verb, in the wider narratives produced by the participants with aphasia (as reported in Cocks et al. (Citation2013)). It is also a pattern seen in the gesture of other people with aphasia reported in Lanyon and Rose (Citation2009), who found that word-retrieval difficulties were associated with a higher frequency of all types of gesture.

It might be argued that the participants with aphasia in the present study, although similar in terms of aphasia severity, formed a disparate group in terms of aphasia type and that this may have clouded the results. Previous studies have examined the idea that aphasia type and/or aphasia severity might impact on gesture production. For example, people with non-fluent aphasia have been shown to have a high proportion of representative gestures, including iconic gestures (e.g. Behrmann & Penn, Citation1984) as compared to people with fluent aphasia (e.g. Cicone et al., Citation1979). However, many of these studies include small numbers of participants, and the type of gesture under investigation varies across studies, limiting the comparability of their results to the present study, which focussed on co-speech iconic gesture. A more recent, larger study by Sekine and colleagues (Sekine & Rose, Citation2013; Sekine et al., Citation2013) examining a comprehensive range of gestures and aphasia types indicated that neither overall aphasia severity nor the degree of word retrieval impairment significantly predicts gesture production patterns. Where aphasia type appeared to have an impact on the gesture the PWA produced, the differences were across gesture type (for example deictic versus pantomime gestures) rather than within the category of co-speech iconic gestures. In fact, the category of gesture coded by Sekine and colleagues which is most relevant to the iconic co-speech gestures under investigation here was the iconic observer viewpoint gesture, and this gesture type was produced by the vast majority of people across the whole range of aphasia types (except their single participant with Global aphasia). Sekine and colleagues’ findings therefore support our decision to consider the PWA group as a whole.

This paper presents a new dataset from speakers with aphasia describing the two key manner-of-motion events which have been extensively studied in the gesture field, and it indicates that co-speech gesture can sometimes reflect the semantic properties of impaired language (“roll”) and at other times compensate for it (“swing”). The findings for both a reflective and a compensatory role are important both clinically and theoretically, and they support the Interface Hypothesis over the Lexical Hypothesis, allowing as it does for an online interaction between language and conceptualisation to achieve a communicative intention. Whilst there is plenty of evidence here that both lexical semantics and impaired lexical semantics are involved in this interaction, and so have an influence on co-speech iconic gesture, the evidence about the role of larger units of meaning (such as the clause) is weaker. These results refine explanations of how language and gesture work together by showing how language impairment influences gesture, and by highlighting the key role of the lemma. The data presented here suggest that lexical semantic knowledge exerts a strong influence over both language and co-speech iconic gesture; that gesture can either reflect or augment information expressed verbally; and that in aphasia the compensatory role of co-speech gesture is especially important.

Declaration of interest

The authors report no declarations of interest.

This study was supported by the Dunhill Medical Trust (Grant Reference: R171/0710).

Notes

1. We discarded only those gestures produced in absolute isolation from speech (i.e. with no attempts at speech either during the gesture or within 3 s preceding or following). Gestures produced during gaps in the speech of less than 3 s, such as those gaps common during word retrieval events, were retained.

2. Two of the 11 control participants described the target event twice, each time with gesture, and so there are 13 gesture–language tokens in the analysis for the control group.

References

- Behrmann, M., & Penn, C. (1984). Non-verbal communication in aphasia. British Journal of Disorders of Communication, 19, 155–176

- Berndt, R., Haendiges, A., Mitchum, C., & Sandson, J. (1997). Verb retrieval in aphasia 2: Relationship to sentence processing. Brain & Language, 56, 107–137

- Bickerton, W. L., Riddoch, M. J., Samson, D., Balani, A. B., Mistry, B., & Humphreys, G. W. (2012). Systematic assessment of apraxia and functional predictions from the Birmingham Cognitive Screen. Journal of Neurology, Neurosurgery & Psychiatry, 83, 513–521

- Butterworth, B., & Hadar, U. (1989). Gesture, speech, and computational stages: A reply to McNeill. Psychological Review, 96, 168–174

- Chui, K. (2012). Cross-linguistic comparison of representations of motion in language and gesture. Gesture, 12, 40–61

- Cicone, M., Wapner, W., Foldi, N., Zurif, E., & Gardner, H. (1979). The relation between gesture and language in aphasic communication. Brain and Language, 8, 324–349

- Cocks, N., Dipper, L., Middleton, R., & Morgan, G. (2011). The impact of aphasia on gesture production: A case of conduction aphasia. International Journal of Language and Communication Disorders, 46, 423–436

- Cocks, N., Dipper, L., Pritchard, M., & Morgan, G. (2013). The impact of impaired semantic knowledge on spontaneous iconic gesture production. Aphasiology, 27, 1050–1069

- Cohen, R., & Borsoi, D. (1996). The role of gestures in description-communication: A cross-sectional study of aging. Journal of Nonverbal Behavior, 20, 45–63

- de Ruiter, J. P. (2000). The production of gesture and speech. In D. McNeill (Ed.), Language and gesture (pp. 248–311). Cambridge: Cambridge University Press

- de Ruiter, J. P. (2006). Can gesticulation help aphasic people speak, or rather, communicate? Advances in Speech–Language Pathology, 8, 124–127

- de Ruiter, J. P., & de Beer, C. (2013). A critical evaluation of models of gesture and speech production for understanding gesture in aphasia. Aphasiology, 27, 1015–1030

- Dipper, L., Cocks, N., Rowe, M., & Morgan, G. (2011). What can co-speech gestures in Aphasia tell us about the relationship between language and gesture? A single case study of a participant with Conduction Aphasia. Gesture, 11, 123–147

- Druks, J., & Masterson, J. (2000). Object and action naming battery. Hove: Psychology Press

- Feyereisen, P. (1983). Manual activity during speaking in aphasic subjects. International Journal of Psychology, 18, 545–556

- Feyereisen, P., & Havard, I. (1999). Mental imagery and production of hand gestures while speaking in younger and older adults. Journal of Nonverbal Behavior, 23, 153–171

- Hadar, U., & Butterworth, B. (1997). Iconic gestures, imagery, and word retrieval in speech. Semiotica, 115, 147–172

- Hadar, U., Burstein, A., Krauss, R., & Soroker, N. (1998). Ideational gestures and speech in brain-damaged subjects. Language and Cognitive Processes, 13, 59–76

- Hadar, U., Wenkert-Olenik, D., Krauss, R., & Soroker, N. (1998). Gesture and the processing of speech: Neuropsychological evidence. Brain and Language, 62, 107–126

- Hogrefe, K., Ziegler, W., Weidinger, N., & Goldenberg, G. (2012). Non-verbal communication in severe aphasia: Influence of aphasia, apraxia, or semantic processing?. Cortex, 48, 952–962

- Hostetter, A., Alibali, M., & Kita, S. (2007). Does sitting on your hands make you bite your tongue? The effects of gesture inhibition on speech during motor descriptions. Paper presented at the Proc. 29th meeting of the Cognitive Science Society (pp. 1097–1102). Mahwah, NJ: Erlbaum

- Kemmerer, D., Chandrasekaran, B., & Tranel, D. (2007). A case of impaired verbalization but preserved gesticulation of motion events. Cognitive Neuropsychology, 24, 70–114

- Kertesz, A. (2006). Western aphasia battery-revised. Austin, TX: Pro-Ed

- Kita, S., & Özyürek, A. (2003). What does cross-linguistic variation in semantic co-ordination of speech and gesture reveal?: Evidence of an interface representation of spatial thinking and speaking. Journal of Memory and Language, 48, 16–32

- Krauss, R. M., & Hadar, U. (1999). The role of speech-related arm/hand gestures in word retrieval. In R. Campbell & L. Messing (Eds.), Gesture, speech, and sign (pp. 93–116). Oxford: Oxford University Press

- Krauss, R. M., Chen, Y., & Gottesman, R. F. (2000). Lexical gestures and lexical access: A process model. In D. McNeill (Ed.), Language and gesture (pp. 261–283). Cambridge: Cambridge University Press

- Lanyon, L., & Rose, M. L. (2009). Do the hands have it? The facilitation effects of arm and hand gesture on word retrieval in aphasia. Aphasiology, 23, 809–822

- Lyle, R. C. (1981). A performance test for assessment of upper limb function in physical rehabilitation treatment and research. International Journal of Rehabilitation Research, 4, 483–492

- McNeill, D. (1992). Hand and mind: What gestures reveal about thought. Chicago: Chicago University Press

- McNeill, D. (Ed.). (2000). Language and gesture (Vol. 2). Cambridge: Cambridge University Press

- Murray, L., & Clark, H. (2006). Neurogenic disorders of language: Theory driven clinical practise. New York, NY: Thomson Delmar Learning

- Pedelty, L. L. (1987). Gesture in aphasia. Doctoral dissertation, University of Chicago, Department of Behavioral Sciences, Committee on Cognition and Communication

- Poeck, K. (1986). The clinical examination for motor apraxia. Neuropsychologia, 24, 129–134

- Sekine, K., & Rose, M. L. (2013). The relationship of aphasia type and gesture production in people with aphasia. American Journal of Speech-Language Pathology, 22, 662–672

- Sekine, K., Rose, M., Foster, A., Attard, M., & Lanyon, L. (2013). Gesture production patterns in aphasic discourse: In-depth description and preliminary predictions. Aphasiology, 27, 1031–1049

- Slobin, D. I. (2004). The many ways to search for a frog: Linguistic typology and the expression of motion events. In S. Strömqvist & L. Verhoeven (Eds.), Relating events in narrative: Typological and contextual perspectives. Mahwah, NJ: Lawrence Erlbaum Associates

- Slobin, D. (2006). What makes manner of motion salient? Explorations in linguistic typology, discourse, and cognition. In M. Hickmann & S. Robert (Eds.), Space in languages: Linguistic systems and cognitive categories (pp. 59–81). Amsterdam, The Netherlands: John Benjamins

- Streeck, J. (1993). Gesture as communication I: Its coordination with gaze and speech. Communications Monographs, 60, 275–299

- Talmy, L. (1988). Force dynamics in language and cognition. Cognitive Science, 12, 49–100

- Talmy, L. (2000). Toward a cognitive semantics: Vol. II: Typology and process in concept structuring. Cambridge, MA: MIT Press

Appendix: Description of the Sylvester and Tweety Cartoon Stimulus

Scene 1: Binoculars

The cat looks through binoculars at the bird in a building on the other side of the road. The bird is also looking through binoculars at the cat. The cat runs to the bird’s building but is thrown out because the sign says “no cats and dogs”.

Scene 2: Drainpipe

The bird swings in his cage, singing. The cat climbs up the drainpipe and stands on the window sill conducting with his finger. The bird sees the cat and flies out of his cage. Granny hits the cat on the head with an umbrella, and throws him out of the window.

Scene 3: Bowling Ball

The cat paces back & forth on the pavement, trying to work out a plan to catch the bird. He climbs inside the drainpipe, up to the bird’s windowsill. The bird sees the cat, and he gets a bowling ball. The bird puts the bowling ball down the drainpipe. The cat swallows the bowling ball, and falls out of the drainpipe with the ball inside him. The cat rolls down the road into the bowling alley where he knocks over all the pins (skittles).

Scene 4: Monkey

The cat paces back & forth outside the bird's apartment. He sees a man with an accordion and a monkey on the other side of the road. The cat entices the monkey with a banana, and steals the monkey's clothes. The cat puts on the monkey's clothes and climbs the drainpipe to the bird's apartment again. The cat acts like a monkey and convinces Granny, and she gives him a coin. When she realises it is the cat she hits him on the head with her umbrella.

Scene 5: Bellboy

Granny phones the reception to ask for a bell boy to collect her luggage. The cat hears that the call came from room 158, dresses as a bell boy and knocks on the door. The cat takes the suitcase and covered birdcage. He then throws the suitcase away and carries the birdcage downstairs to the alley. The cat uncovers the birdcage and finds Granny in there. She hits the cat with her umbrella and chases him down the street.

Scene 6: Catapult

The cat makes a catapult or fulcrum with a plank and a box. He stands on one side of the plank (catapult) and throws a weight onto the other side of the plank. This makes him shoot up into the air to the bird's window. The cat grabs the bird as he falls back down. He runs away with the bird but the weight lands on him flattening his head. The bird escapes.

Scene 7: Swing

The cat looks through a telescope at the bird. He draws a diagram. He then gets a rope and swings across to the other building. He is not high enough to reach the window so he hits the wall and slides back down onto the floor.

Scene 8: Trolleycar

The cat paces again. He climbs up the pole to the tram wires to try to walk across them to the bird's apartment. The tram/trolley bus comes and the cat runs away getting electric shocks. Granny and the bird are driving the tram, chasing the cat.