Introduction

When one of us (A.A.) served as chairman of our society (Upsala läkareförening) for a 3-year period some 15 years ago, one of the most challenging tasks was to find a reasonable and fruitful strategy for handling our journal, which at that time was 135 years old. The question, as expressed by a task force (Gunnar Ronquist, editor; Bengt Westermark, vice rector; Arne Andersson, chairman) in an editorial on the subject (Citation1), was: should the journal be kept alive, or should we let it die? We asked our colleagues to go on ‘investigating, writing, and publishing’, preferably in the Upsala Journal of Medical Sciences, because ‘we don’t intend to perish’. The first tool in that survival strategy was to start electronic publishing. We also tried to stimulate different award winners to write reviews on their research for our journal. One more important move was to introduce special issues on comprehensive research with extramural classes at our faculty. The first of these was ‘Diabetes research in Uppsala’, with Claes Hellerström serving as guest editor.

After some years we realized that electronic publishing also had to include the initial manuscript handling. That led us to sign up with a highly experienced publisher, Informa Healthcare, to run a ScholarOne-based manuscript centre with standardized digital procedures for manuscript submission, peer review, editorial decisions, and manuscript production, together with a very handy and informative web page. All this came at a cost that was in fact lower than we had paid previously. With this strategy shift, we faced a fascinating change 5 years later. The submission rate of new manuscripts increased by a factor of 10, and we immediately decided to go from three issues per year to one issue per quarter. Very soon we also noticed that numbers of all sorts of citations increased. In order to enhance the attraction of the journal (by which we meant attracting manuscripts from teams with highly visible research) we had to enter the field of ‘Impact factor mania’ (Citation2), or ‘Impactitis’ (Citation3) as some people prefer to call it. When we discussed these issues with potential submitters of manuscripts to our journal, the most common comment we heard was that ‘your impact factor (IF) value is too low’. And basically they were right. At that time our IF values were well below 0.50.

This article will present figures for various aspects of the daily running of a journal of this kind and size. Comparisons have also been made with other, mainly Uppsala-based, journals to see how different handling strategies affect the performance figures of those particular journals. By using such information to make improvements to our journal management, we hope to increase further the impact of our esteemed publication, which is 150 years old this year.

Journal report

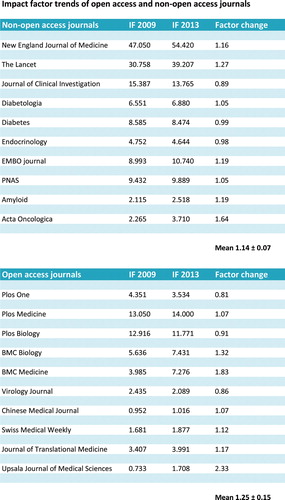

One very useful piece of information in scholarly publishing is the publisher’s annual report with statistics on more or less every aspect of journal management. We have extracted some basic data (Figure 1) from the latest report of November 2014 and added some internal data. In times when almost every experienced scientist receives a couple of invitations each week to publish their latest articles in different new, obscure journals, we are glad to report a fairly constant and high inflow of new submissions, some 250–300 on an annual basis. Authors from a total of 41 different countries submitted their papers last year, documenting that our journal is indeed a truly international publication. Besides contributions from Sweden, there are very many from Turkey and China as well. This makes it easy to understand previous predictions that by the year 2020 every fifth biomedical publication will be written by Chinese authors. The high figure for manuscripts from Turkey most certainly reflects the fact that there are some 60 medical faculties in that country.

Figure 1. Data from journal report presented by the publisher concerning submitting countries, rejection rate, handling strategies, and lead times for Upsala Journal of Medical Sciences.

The backbone of scholarly publishing is the use of classical peer review for selection of papers for publication. The system, though, has limitations, one of which is the difficulty of convincing suitable people to contribute. The editor’s responsibility is then to avoid the use of reviewers for decisions that appear obvious at a quick glance. In our hands, that would mean that about half of the submitted papers would be rejected without a traditional review using two referees (Figure 1). To that should be added a handful of papers that never manage to pass the ‘ScholarOne’ filter, which checks that authors have followed the instructions for authors reasonably well. About 20% of the submitted papers have been sent out for classical review. Some papers (last year about 10%) were accepted as submitted, but it has to be kept in mind that a great majority of them were invited papers. All in all, it means that the official rejection rate of our journal is fairly high, about 80%, but the figure is, for different reasons, a bit shaky. One reason for the high number of rejected papers, of course, is that many of them have faults of different kinds. Perhaps less experienced and trained scientists have chosen our journal since we do not charge authors any submission fees even though we publish under open access. In order to keep authors happy we strive for quick decisions and information. Therefore, it is with considerable satisfaction that we can report fairly short lead times: 2 weeks to the first decision and 3 weeks to the final decision (Figure 1).

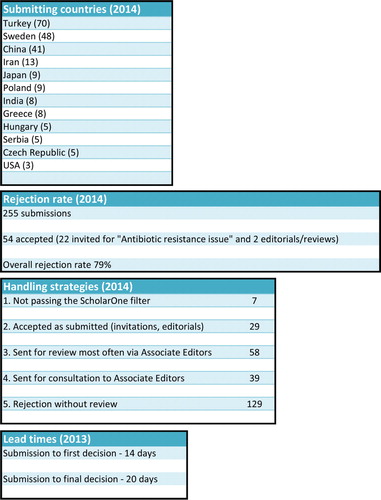

One more advantage of a well-designed web page for a scholarly journal, and especially an open access journal, is that the number of downloads of individual articles can be followed in great detail. It is not surprising to see that the most downloaded papers are quite newly released. Thus, when viewing download figures for our journal during 2014 we found that 5 of the 10 most downloaded papers had been published the same year (Figure 2A). One of them (Citation4) was published as late as in the third issue of the 2014 volume. One more token of the immediate interest in this article among the general public was that it was the subject of one of the ‘Sunday Scientific Reports’ of our local newspaper, authored by Åke Spross.

Figure 2. A: Most downloaded articles 2014 from the web page of Upsala Journal of Medical Sciences. B: Downloading countries the same year.

There is, however, one remarkable exception from the assumption that much-downloaded papers also become the most cited ones, and that is a more than 30-year-old Italian study (Citation5). For several years this almost non-cited publication (according to Thomson Reuters, there are a total of two citations of this paper) has been number one in our top download tables. It is tempting to speculate that reasons other than pure interest in the scientific content of the report have brought about the downloads. Such events have been designated ‘crawlers’, and it has been claimed that it should be possible to correct for this type of contamination of these attention-based figures, making them more useful for qualitative assessments of scientific publications. It is also interesting and stimulating to see that those who most frequently download our articles are US citizens (Figure 2B).

Citations

When Eugene Garfield some 60 years ago first launched his idea of a citation index for scientific publications he probably could not have dreamt what an enormous impact such instruments would have on scholarly publishing. Garfield himself has summarized experiences and feelings on the use of the journal impact factor (Citation6), and that article was concluded with a citation from a paper by Hoeffel (Citation7). The last sentence of that citation states that ‘The use of impact factor as a measure of quality is widespread because it fits well with the opinion we have in each field of the best journals in our speciality’. Memories from the early days of research education for one of us (A.A.) reveal that there was an informal journal ranking system ‘built in the walls’ of the laboratory. Some journals were regarded as esteemed, and others were extremely exclusive and in practice impossible even to think of as possible candidates for submission. Problems come when using the journal impact factor for evaluations of individual scientists and in particular their specific publications. So, regardless of all conflicting opinions, we have to take notice of our own impact figures and perhaps also adapt the journal operation to be compatible with the maintenance of honourable measures.

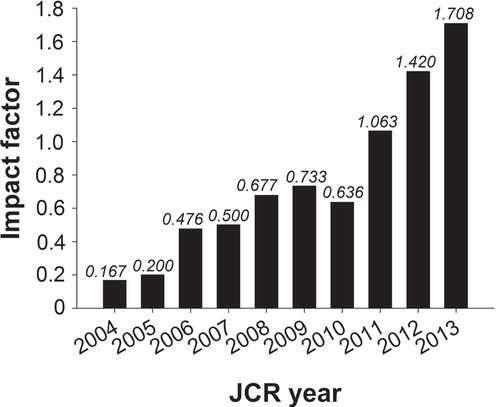

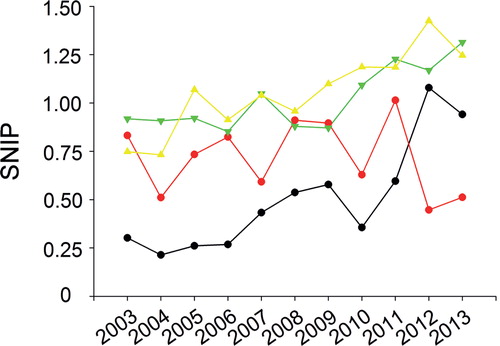

We have had the pleasure of reporting increasing IF figures more or less every year since 2003 (Figure 3). The first critical level to pass was that of 1.0, a value of great importance in our own internal faculty budget formula. In 2011 that event could be celebrated, and as a token of appreciation we served champagne at our annual editorial board meeting that year. Looking back we can see a 10-fold increase of the impact factor over the last 10 years. We are at present approaching the 2.0 level, which was previously regarded as the lowest figure for ‘esteemed journals’ as defined by the Swedish funding agencies.

One objection that should be kept in mind when using the impact factor instrument is that the whole business is run by a commercial, private company. The influence of different scientific organizations on how to run it is probably very small. Our own experience is, however, that Thomson Reuters are keen and willing to help when you suggest a correction due to inaccuracies in their databases (missing issues, invalid citations, etc.). It is anyhow of interest to direct some attention to other databases as well. Among the few that exist, the Scopus database run by Elsevier has been available for some years. As we pointed out in a recent editorial on newly released impact figures (Citation8), a major problem with them is that knowledge on how these figures have been calculated is meagre. Nevertheless, we found that there was a parallelism in the increase of our impact factor and the Scimago Journal Rank figure.

Another parameter reported in Scopus is the so-called SNIP factor. SNIP stands for source normalized impact per paper. This indicator measures the average citation impact of the articles of a journal. It has been calculated by Leiden University’s Centre for Science and Technology Studies (CWTS) based on the Scopus bibliographic database produced by Elsevier. Unlike the well-known journal impact factor, SNIP corrects for differences in citation practices between scientific fields, thereby allowing for more accurate between-field comparisons of citation impact. As with the SCI figure there is a brisk increase of this factor for our journal as well, a 2–3-fold increase over the last 5 years (Figure 4). Three other Uppsala-based journals (Acta Oncologica, Acta Dermato-Venereologica, and Amyloid) display more constant figures.

Figure 4. Source normalized impact per paper (SNIP) for four different Uppsala-based biomedical journals: red circles = Amyloid; green triangles = Acta Oncologica; yellow triangles = Acta Dermato-Venereologica; black circles = Upsala Journal of Medical Sciences.

One more piece of information that can be extracted from the Scopus database is the percentage of not-cited papers for different journals. We have recently pointed out (Citation8) that a substantial share (about one-third) of the publications in one of the most esteemed journals (New England Journal of Medicine) were not cited at all. According to Eugene Garfield, out of 38 million items cited from 1900 to 2005 half were not cited at all and only 0.5% were cited more than 200 times (Citation6). We are very pleased to find that our publications are cited to a very high extent: only between 10% and 20% have not been cited 3–10 years after publication (Citation8).

One more issue of interest in this context is to what extent self-citations have been included in figures of this type. In the traditional journal impact factor figures released in late July by Thomson Reuters, self-citations are included, but for each journal there is information on its self-citation rate. Of our total cites 3% were self-cites, a figure that is fairly common amongst journals in our subject category (medicine, general and internal). Whether editors’ possible use of so-called coercive citations (forced citations of articles in their own journals) influences these figures to any major extent is difficult to judge. There is, however, evidence to suggest that journals have been excluded from these ranking lists due to proven use of this behaviour. We are happy to say that, to the best of our knowledge, we do not apply this practice.
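The arithmetic behind a self-citation rate, and behind an impact figure with self-cites removed, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and all counts in the usage example are hypothetical and are not taken from the Thomson Reuters report. Only the 3% share corresponds to the figure quoted above.

```python
def self_citation_rate(total_cites: int, self_cites: int) -> float:
    """Share of a journal's citations that come from the journal itself."""
    if total_cites <= 0:
        raise ValueError("total_cites must be positive")
    if not 0 <= self_cites <= total_cites:
        raise ValueError("self_cites must lie between 0 and total_cites")
    return self_cites / total_cites


def impact_without_self_cites(total_cites: int, self_cites: int,
                              citable_items: int) -> float:
    """Citations per citable item after removing journal self-citations."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    if not 0 <= self_cites <= total_cites:
        raise ValueError("self_cites must lie between 0 and total_cites")
    return (total_cites - self_cites) / citable_items


# Hypothetical counts chosen so that the self-citation share is 3%.
rate = self_citation_rate(total_cites=200, self_cites=6)
print(f"self-citation rate: {rate:.0%}")
print(impact_without_self_cites(total_cites=200, self_cites=6, citable_items=100))
```

With a self-citation share this low, removing the self-cites changes the citations-per-item figure only marginally, which is why a rate of a few per cent is usually regarded as unremarkable.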

Impact of publication characteristics on citation figures

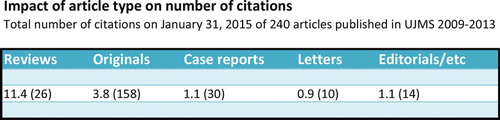

When editors talk to scientists about problems with low impact figures the answer has always been: go for more reviews. When the Diabetologia editorial office moved to Claes Hellerström and his group in Uppsala in the mid-1980s, we were told by the publisher that the number of original articles should be reduced as much as possible and be replaced by reviews. The idea was to increase the impact factor to the level of its American counterpart. However, it is not that simple, so we decided to look at citation figures for different types of articles in Upsala Journal of Medical Sciences. We categorized all articles published in our journal over a 5-year period with regard to type of article, research subject, and number of authors. Out of 240 published items, 26 were denominated reviews, and the average citation frequency of these articles was more than 11 over this 5-year period (Figure 5). This is well above the corresponding figure for original articles, i.e. 3.8 citations, and it supports previous assumptions on this matter. To become highly cited, however, a review quite evidently has to be written by a very distinguished person in the field on a topic of great relevance for the moment. Also in conformity with usual statements, case reports are very seldom cited. Since letters are cited as much without burdening the denominator of the impact factor, it is not surprising to see this category increasing at the expense of classical case reports. In our journal we, however, intend to keep the case report window open for cases from our own hospital.
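The denominator effect mentioned above can be illustrated with a minimal sketch. It assumes the usual two-year impact factor, in which all citations received in a given year by items from the two preceding years are divided by the number of ‘citable items’ from those years. Which article types count as citable (`CITABLE_TYPES`) is an assumption made here for illustration, as are the `Item` class and all numbers; none of this reproduces our actual data.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumption: these article types count as "citable items" in the denominator.
# Letters do not, although citations to them still count in the numerator.
CITABLE_TYPES = {"original", "review", "case_report"}


@dataclass
class Item:
    kind: str       # article type, e.g. "original", "review", "letter"
    citations: int  # citations received during the census year


def impact_factor(items):
    """All citations divided by the number of citable items."""
    numerator = sum(item.citations for item in items)
    denominator = sum(1 for item in items if item.kind in CITABLE_TYPES)
    if denominator == 0:
        raise ValueError("no citable items")
    return numerator / denominator


def mean_citations_by_kind(items):
    """Average citation count for each article type."""
    grouped = defaultdict(list)
    for item in items:
        grouped[item.kind].append(item.citations)
    return {kind: sum(counts) / len(counts) for kind, counts in grouped.items()}


# Hypothetical example: the same short communication published either as a
# case report or as a letter, with an identical number of citations.
base = [Item("original", 4), Item("original", 2), Item("review", 10)]
as_case_report = base + [Item("case_report", 2)]
as_letter = base + [Item("letter", 2)]

print(impact_factor(as_case_report))  # 18 citations / 4 citable items
print(impact_factor(as_letter))       # 18 citations / 3 citable items
print(mean_citations_by_kind(as_letter))
```

In this toy example the citations are identical in both cases; only the classification of one item differs, and that alone moves the computed figure.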

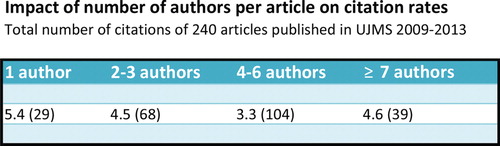

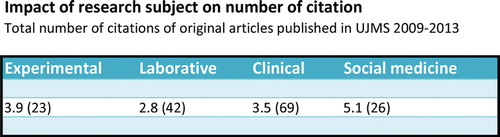

We also tried to find out whether the number of authors on the papers influenced the citation figures to any extent. There were no obvious differences with regard to this article characteristic (Figure 6), but it is worthy of note that the highest figure was found for single-author papers. Most probably that reflects the fact that many of these articles were reviews. We also looked for differences with regard to the subject of the article. Four categories of research were delineated. Some articles covered more than one subject area, and therefore not all articles published during this timespan have been included. No great differences were found between the different subject areas (Figure 7). Somewhat surprisingly, articles dealing with social medicine aspects had the highest citation figures. Perhaps one explanation for this is the fact that our most cited papers had to be excluded because they were reviews with a broad scope of topics.

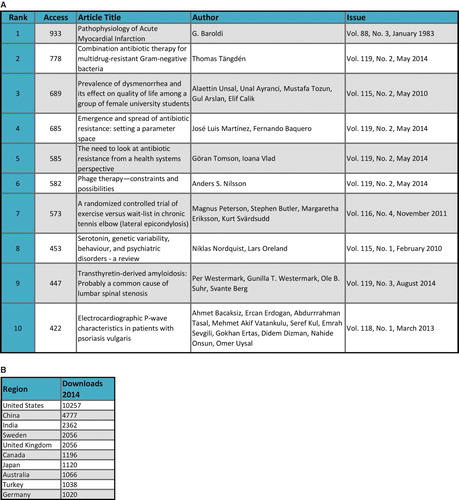

Impact of open access

When we decided to go for a professional publisher and to use an electronic manuscript centre we were quite clear about publishing under open access. In combination with the scanning of our volume archive in order to give everyone the opportunity to read all our recent articles for free on the web page of the journal, the open access feature of our journal most certainly worked as very efficient advertising for the existence of our journal. When we also refrained from charging authors any kind of publication fee it was quite logical that papers started to flood our inbox. But basically we were an open access journal even before being published by Informa. It was therefore of interest to see whether citation figures for other journals had been influenced over the years by the use of open access. In a very ‘non-scientific’ way we looked at the development of the impact figure over a 5-year period (2009–2013) of 10 journals of interest that have been open access all the time and 10 other journals that were non-open access over the same time period (Figure 8). There was a small difference between the two types of journals. Open access journals tended to increase their impact factors more than non-open access ones. Besides the selection bias in our study groups, it should be noted that open access journals are most often ‘younger’ and thus still in a phase of natural growth. We are quite convinced that we have benefited from the openness of our journal. However, it has to be kept in mind that we are privileged in the sense that we are owned and run by a non-profit society.

How to proceed from these observations?

As can be seen from this presentation, editors live in busy times at present. New journals pop up like mushrooms, and the existence of predatory journals makes it necessary to explain to everyone that our task is not to create wealth or make shareholders happy. On top of that, there seems to be a kind of a war out there where an endless number of editors/journals are offering you space for your scientific articles. Remember the times when you spent hours and days trying to figure out where to submit your paper that had been rejected on the grounds of being too conventional but otherwise sound.

Our journal operates from a very solid platform. One hundred and fifty years of scholarly publishing means that we are not ephemeral and planning should be long-term. We will go on printing the quarterly issues of the journal for the sake of the members of the society. In order to keep up the good reputation of the journal and perhaps also the curiosity of potential submitters, we have to be aware of the importance of the impact factor. There are no self-playing pianos! Inclusion of contributions as before from prize winners in the faculty and also an annual special issue will meet the need for reviews. It would also be nice to publish more editorials and commentaries. However, the major bulk of publications still has to be original articles. Finding out what is new knowledge which can be of great benefit to the scientific community is the task of the whole editorial staff. Seeing the number of citations for such papers escalating is probably the best reward for the editor.

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

References

- Andersson A, Ronquist G, Westermark B. On the revival of an old and venerable journal. Ups J Med Sci. 2000;105:3–4.

- Casadevall A, Fang FC. Causes for the persistence of impact factor mania. mBio. 2014;5:e00064-14.

- van Diest PJ, Holzel H, Burnett D, Crocker J. Impactitis: new cures for an old disease. J Clin Pathol. 2001;54:817–19.

- Westermark P, Westermark G, Suhr OB, Berg S. Transthyretin-derived amyloidosis: probably a common cause of lumbar spinal stenosis. Ups J Med Sci. 2014;119:223–8.

- Baroldi G. Pathophysiology of acute myocardial infarction. Ups J Med Sci. 1983;88:159–68.

- Garfield E. The history and meaning of the journal impact factor. JAMA. 2006;295:90–3.

- Hoeffel C. Journal impact factors. Allergy. 1998;53:1225.

- Andersson A. Further improvements of our journal performance figures. Ups J Med Sci. 2014;119:295–7.