Abstract

Over the last dozen years, many national and international expert groups have considered specific improvements to risk assessment. Many of their stated recommendations are mutually supportive, but others appear conflicting, at least in an initial assessment. This review identifies areas of consensus and difference and recommends a practical, biology-centric course forward, which includes: (1) incorporating a clear problem formulation at the outset of the assessment with a level of complexity that is appropriate for informing the relevant risk management decision; (2) using toxicokinetic and toxicodynamic information to develop Chemical Specific Adjustment Factors (CSAFs); (3) using mode of action (MOA) information and an understanding of the relevant biology as the key, central organizing principle for the risk assessment; (4) integrating MOA information into dose–response assessments using existing guidelines for non-cancer and cancer assessments; (5) using a tiered, iterative approach developed by the World Health Organization/International Programme on Chemical Safety (WHO/IPCS) as a scientifically robust, fit-for-purpose approach for risk assessment of combined exposures (chemical mixtures); and (6) applying all of this knowledge to enable interpretation of human biomonitoring data in a risk context. While scientifically based defaults will remain important and useful when data on CSAFs or MOA to refine an assessment are absent or insufficient, assessments should always strive to use these data. The use of available 21st century knowledge of biological processes, clinical findings, chemical interactions, and dose–response at the molecular, cellular, organ and organism levels will minimize the need for extrapolation and reliance on default approaches.

Introduction

Since the seminal publication on human health risk assessment by the U.S. National Academy of Sciences/National Research Council (NRC, Citation1983), the risk assessment landscape, once bereft of methods, insights, and research, has filled to the point where expert committees are needed to sort through the resultant cornucopia. Many of these committee deliberations are carefully and thoughtfully drafted, being based on a well-planned series of research efforts and workshops. Other deliberations are more limited, with some useful insights gained at the expense of other ideas in need of better articulation or reconsideration. Specifically with regard to dose–response assessment, one of the component parts of the NRC (Citation1983) paradigm, some committee recommendations have been mutually supportive, while others are clearly contradictory. The contradictory recommendations sometimes lead to differences in the development of risk assessment methods, often lead to differing interpretations of risk information, and occasionally result in remarkably different risk management decisions for the same chemical substance.

Understanding the biological basis of disease and its profile in humans is foremost in any medical discipline, with toxicology and epidemiology being no exception. In cases where the medical science lies more with prevention, such as with these latter two disciplines, understanding the etiology of any disease is often more difficult due in part to multiple potential origins and the interplay of risk factors. For example, in evaluating the significance of body weight loss in a 2-year study, where the chemical is in the food and the experimental animal can eat as much as it wants, a risk assessor should consider this loss as adverse only in relationship to the health of control animals, since often, the controls will overeat and not be as healthy as the experimental animals. Similarly, low-dose extrapolation of epidemiology data should consider the underlying biology and information on the presence or absence of precursor endpoints in the dose range of interest and other available Mode of Action (MOA) information, and not rely on linear regressions without prejudice. The guidance documents and committee reports discussed in this article provide perspectives on how to incorporate biological information on normal physiology and disease mechanisms to interpret toxicological and epidemiologic information.

Evolving technologies, such as those suggested by the NRC report for Toxicity Testing in the 21st Century (NRC, Citation2007a), can also help elucidate the biological basis of disease and inform the assessment of response in sensitive humans at low doses. The current defaults that toxicologists and epidemiologists often use for their dose–response assessments should not constrain the use of the full extent of this new technology. Risk assessment theory has likewise evolved. Specifically, risk assessment scientists now routinely promote the following: (1) development of a problem formulation (PF) step prior to the assessment to focus effort and resources, (2) use of chemical-specific adjustment factors (CSAFs) from empirical data rather than default uncertainty factors, (3) consideration of MOA information early in the assessment process, and (4) evaluation of dose–response assessment with human relevance (HR) frameworks. These evolved concepts have been developed by a number of national, international, and multinational scientific bodies, and encouraged by the NRC (Citation2007a, Citation2009) and many others, such as the Alliance for Risk Assessment (ARA, Citation2013). They now form the basis of risk assessment work worldwide, and are the standards against which new assessments should be judged.

These four concepts will also serve as an integrating structure for this discourse, which will address areas of consistency and areas of conflict among the various committee and agency recommendations.

As in any scientific review, it is important to specify what topics will not be covered. In this review, we will not discuss in any depth screening-level dose–response assessment (other than the Hazard Index (HI)), exposure assessment, risk characterization, or risk communication, despite the importance of these topics. Nor will we focus on radiation standards or the National Ambient Air Quality Standards (NAAQS) of the US EPA. In the case of the radiation standards, the latest guidance document from the Committee on Biological Effects of Ionizing Radiation VII (BEIR, Citation2006) is available. In the case of the NAAQS, Bachmann (Citation2007) summarizes the history of setting NAAQS, and McClellan (Citation2011) emphasizes the role of scientific information in informing the EPA Administrator’s policy judgments on the level and statistical form of the NAAQS for a particular indicator and averaging time for a specific criteria pollutant.

Rather, we will focus on hazard identification and dose–response assessment, including the dichotomy of the practice in some organizations of separate default cancer and non-cancer extrapolations, and differing approaches to protecting sensitive individuals. Concordant recommendations among various committees will be highlighted; conflicting recommendations will be resolved, if possible, on the biological basis of adverse effect and through an understanding of the underlying PF/CSAF/MOA/HR frameworks.

Selected committee deliberations

Problem formulation linked to risk management solution

The concept of including problem formulation and a planning and scoping exercise prior to beginning the analysis phase of a risk assessment is generally embraced positively by all parties engaged in or affected by risk assessment or risk management decisions. Many parties, both outside and inside of the government (particularly at the U.S. Environmental Protection Agency; US EPA) have presented visions of how these pre-assessment elements would be incorporated, in principle, into the process. These visions are remarkably consistent with one another (see US EPA, Citation1992, Citation1998, 2000, Citation2006a, Citation2007; NRC, Citation1993, Citation1994, Citation1996, Citation2008a, Citation2009). The authors, however, have seen a significant level of concern expressed by parties outside of the agency that US EPA is only paying lip service to its purported commitment to implementing problem formulation and planning and scoping into its risk assessment/risk management process. In contrast to this perception by some, we assert that the US EPA routinely includes problem formulation, planning and scoping in its risk assessment and management work, as described in the remainder of this section.

In the first of an ever-growing series of publications from the NRC, the authors of the 1983 NRC report observed that risk assessments and related regulatory decisions issued by federal agencies have been “bitterly controversial.” Among the Committee’s key recommendations was “that regulatory agencies take steps to establish and maintain a clear conceptual [emphasis added] distinction between assessment of risks and consideration of risk management alternatives; that is, the scientific findings and policy judgments embodied in risk assessments should be explicitly distinguished from the political, economic, and technical considerations that influence the design and choice of regulatory strategies.”

Since then, risk assessments and related regulatory decisions issued by federal agencies have continued to be the subject of heated criticism. Among the aspects criticized is an ongoing and apparent dissonance between the construct and content of the hazard/risk assessment and the construct of the regulatory decision. In US EPA’s experience, this criticism has been leveled both from within the agency and from many outside sources, including the affected stakeholders. As a 1994 NRC report noted, “Several commenters have concluded that the conceptual separation of risk assessment and risk [management] has resulted in procedural separation to the detriment of the process.”

Based in part on this series of NRC reports, the US EPA began using the concept of problem formulation about twenty years ago, with the goal of helping to provide risk assessments that better fit the decision-makers’ needs (US EPA, Citation1992; NRC, Citation1993). The US EPA’s framework for ecological risk assessment, later incorporated into the agency’s 1998 ecological risk assessment guidelines, described an initial phase, to occur before any effort is expended on the risk assessment itself, as problem formulation.

Problem formulation includes a preliminary characterization of exposure and effects, as well as examination of scientific data and data needs, policy and regulatory issues, and site-specific factors to define the feasibility, scope, and objectives for the ecological risk assessment. The level of detail and the information that will be needed to complete the assessment also are determined (US EPA, Citation1992).

This phase was meant to include a planning discussion between the risk assessor(s) and the risk manager(s), not for the risk manager to provide the expected “answer” but, rather, to clarify expectations by laying out for all participants information such as what is already known, what data need to be developed and the context in which this information would be used. Importantly, these guidelines acknowledge that “interested parties,” in addition to the agency’s risk assessors and risk managers, may “take an active role in planning, particularly in goal development.” The guidelines describe interested parties, also called “stakeholders,” as:

Federal, State, tribal, and municipal governments, industrial leaders, environmental groups, small-business owners, landowners, and other segments of society concerned about an environmental issue at hand or attempting to influence risk management decisions. Their involvement, particularly during management goal development, may be key to successful implementation of management plans since implementation is more likely to occur when backed by consensus. Local knowledge, particularly in rural communities, and traditional knowledge of native peoples can provide valuable insights about ecological characteristics of a place, past conditions, and current changes. This knowledge should be considered when assessing available information during problem formulation (USEPA, Citation1998).

Within US EPA, only the Office of Pesticide Programs retains, with rare exception, both the risk assessment and risk management functions related to its legislative mandates (as per PF-C and MD). The other offices whose regulatory responsibilities depend, in part, on risk assessment, have yielded some, if not all, of their assessment tasks to a separate office. It could be said that this “solution” actually has impeded the agency from implementing its own problem formulation/planning and scoping framework(s) in many specific instances, because of the absence of adequate collaboration and coordination between the risk assessors and the risk managers.

As noted above, although the US EPA had embraced problem formulation as the first step in developing a risk assessment, a series of NRC reports over the last two decades appear to express the opinion that problem formulation is only infrequently practiced by the US EPA and others conducting risk assessments. While this criticism might have been warranted at the time the 1994 and 1996 NRC reports were developed, it was misguided by the time the 2009 NRC report was underway. The existence of several generic guidance documents and many existing examples of their application (detailed below) seems to have been missed or ignored.

Improved planning and attention to the uses of the risk assessment were recommended by the NRC committee studying the US EPA’s implementation of the 1990 Clean Air Act amendments (NRC, Citation1994); it stated that such planning will aid in efficient resource allocation. That committee recommended that “the ‘Red Book’ paradigm should be supplemented by applying a cross-cutting approach that addresses the following six themes in the planning and analysis phases: default options, validation, data needs, uncertainty, variability, and aggregation.” Finally, the Committee expressed support for implementation of a tiered, iterative risk assessment approach.

The importance of problem formulation in the early stages of a risk assessment, and incorporation of an iterative process with feedback was further emphasized in the 1996 NRC report. In addition, the Presidential/Congressional Commission on Risk Assessment and Risk Management (Citation1997) emphasized the importance of this initial step in designing a risk assessment, stating, “The problem/context stage is the most important step in the [Commission’s] Risk Management Framework.” Both the NRC and Presidential/Congressional Commission committees noted the importance of including all affected parties in the discussion, early and often, rather than restricting the discussion solely to agency risk assessors and risk managers. This does not necessarily mean that these affected parties will have a seat at the table when the final assessment or regulatory decision is made, but, rather, that they have had an opportunity to provide information that may help to make the assessment and associated decision(s) more complete and robust. Particularly good examples of substantive stakeholder involvement in planning and executing risk assessment and regulatory decisions can be seen in the processes employed by US EPA’s Office of Solid Waste and Emergency Response as its regional offices develop site-specific assessments (US EPA, Citation1997, Citation1999, Citation2001) and by the Office of Pesticide Programs as it implements the 1996 Food Quality Protection Act (US EPA, Citation2011a, Citation2011b, Citation2011c).

The 2009 NRC report focuses a great deal of attention on the design of risk assessments, devoting an entire chapter to this topic. It includes a schematic described as a “framework for risk-based decision-making that maximizes the utility of risk assessment.” Although implied to be a novel approach to this issue, the NRC framework looks remarkably like the framework schematics included in many of US EPA’s already-published guidance documents (e.g. US EPA, Citation1992, Citation1998, 2000, Citation2001, Citation2003a, Citation2006a, Citation2007). Each of these frameworks usually includes three general phases: the first presenting concepts of problem formulation, planning and scoping; the second reflecting the risk assessment phase; and the third focused on the integration of other relevant factors (e.g. economics, technology, political considerations) to reach and communicate the management decision(s). The NRC (Citation2009) Committee noted that the conceptual framework is missing from other agency guidance, although it is unclear to what “other guidance” they were referring. The NRC framework, however, does incorporate a level of detail not seen in most of US EPA’s framework documents, including specific questions in each of the three phases (Phase I: Problem formulation and scoping; Phase II: Planning and conduct of the risk assessment; Phase III: Risk Management).

Furthermore, the NRC Committee was very clear that it saw value in crafting a risk assessment that “ensures that its level and complexity are consistent with the needs to inform decision-making.” The 2009 NRC framework also reinforces the importance of having “formal provisions for internal and external stakeholder involvement at all stages.” The Committee also recommended that US EPA pay increased attention to the design of risk assessment in its formative stages and that US EPA adopt a framework for risk-based decision-making that embeds the Red Book risk assessment paradigm into a process with (1) initial problem formulation and scoping, (2) upfront identification of risk-management options, and (3) use of risk assessment to discriminate among these options.

Unfortunately, these recommendations do not necessarily mean that the NRC framework is better than existing ones, including those of US EPA. In fact, the agency is often asking the same questions when it implements its frameworks for specific cases, but one needs to read and study the specific case to understand its application.

Furthermore, although problem formulation was initially addressed at US EPA in the context of ecological risk assessment, a number of agency-wide and/or Office of Research and Development guidance documents that include an analysis phase for both ecological and human health risk assessment now incorporate the concept of problem formulation as the critical first step in the risk assessment process. Some examples of generic guidance include the Risk Characterization Handbook (US EPA, 2000), the Framework for Cumulative Risk Assessment (US EPA, Citation2003a), the Framework for Assessing Health Risks of Environmental Exposures to Children (US EPA, Citation2006a) and the Framework for Metals Risk Assessment (US EPA, Citation2007). The Risk Characterization Handbook contains several case studies of both human health and ecological concerns, each of which includes a discussion of how problem formulation was implemented. The Framework for Assessing Health Risks of Environmental Exposures to Children was developed as the result of a collaborative effort with the International Life Sciences Institute (ILSI), which sponsored a multi-stakeholder, multi-disciplinary workshop to craft the framework (Daston et al., Citation2004; Olin & Sonawane, Citation2003).

Moreover, most US EPA program offices and regions also have crafted a set of principles tailored to their specific circumstances (e.g. US EPA Citation1999, Citation2001, Citation2011d). Examples include:

The Office of Pesticide Programs’ (OPP’s) Pesticides Registration Review Process, implemented after completion of the Food Quality Protection Act-mandated tolerance reassessment (US EPA, Citation2006b); currently there are dockets open for 240 registered active ingredients undergoing reevaluation of their regulatory status (US EPA, Citation2012b);

The process of the Office of Air Quality Planning and Standards (OAQPS) for reviewing the National Ambient Air Quality Standards (NAAQS; US EPA, Citation2009); this process is currently being used in the reassessment of lead (US EPA Citation2011e) and the oxides of nitrogen (US EPA, Citation2012c);

The Office of Water’s (OW’s) draft framework for integrated municipal and wastewater plans of its National Pollutant Discharge Elimination System (NPDES) program (US EPA, Citation2012d); and

The Multi-criteria Integrated Resource Assessment (MIRA) approach employed by Region III (US EPA, Citation2003b); specific examples of its application are listed on the Region’s MIRA website (http://www.epa.gov/reg3esd1/data/mira.htm).

The concept of problem formulation also has been embraced internationally through the leadership of the World Health Organization (WHO), especially its International Programme on Chemical Safety (IPCS), with significant involvement from US EPA. Recent publications that acknowledge problem formulation as a critical component of the risk assessment/risk management paradigm include:

Integrated Risk Assessment (Birnbaum et al., Citation2001; Suter et al., Citation2003);

Environmental Health Criteria 237 - Principles for Evaluating Health Risks in Children Associated with Exposure to Chemicals (WHO IPCS, Citation2006);

Uncertainty and Data Quality in Exposure Assessment. Part 1. Guidance Document on Characterizing and Communicating Uncertainty in Exposure Assessment, Harmonization Project Document No. 6 (WHO IPCS, Citation2008);

Environmental Health Criteria 239 - Principles for Modeling Dose–Response for the Risk Assessment of Chemicals (WHO IPCS, Citation2009a);

Environmental Health Criteria 240 - Principles and Methods for the Risk Assessment of Chemicals in Food (WHO IPCS, Citation2009b; Renwick et al., Citation2003);

Characterization and Application of Physiologically Based Pharmacokinetic (PBPK) Models in Risk Assessment (WHO IPCS, Citation2010);

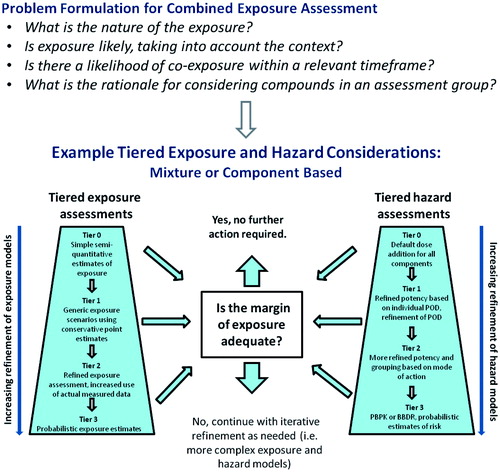

Risk Assessment of Combined Exposure to Multiple Chemicals: A WHO/IPCS Framework (Meek et al., Citation2011);

Guidelines for Drinking-water Quality - Fourth Edition (WHO, Citation2011); and

Microbial Risk Assessment Guideline: Pathogenic Microorganisms With Focus on Food and Water (USDA, Citation2012).

Expert groups and world health organizations have nearly always used a problem formulation construct in the deliberations of their assessment work, but this construct has not always been apparent or consistent.

Recommendations that have emerged from this analysis and related efforts are:

The concept of problem formulation as a prelude to risk assessment work is generally, and should be uniformly, embraced globally by all health organizations.

Differences in risk management decisions, and in the products of the individual components of hazard characterization, dose–response assessment, exposure assessment, and risk characterizations, should be expected based on different problem formulations.

Risk management input on problem formulation, with its associated planning and scoping, is essential in order for risk assessment scientists to develop useful information. This upfront identification of risk management options should not be seen as changing or subverting the scientific process of risk assessment.

Evolution of the “safe” dose and its related safety factor(s)

The concept of a safe dose is based upon the identification of a threshold (footnote 1) for an adverse effect (footnote 2). This threshold is based on an experimentally determined Lowest Observed Adverse Effect Level (LOAEL), and its matching experimentally determined subthreshold dose, the No Observed Adverse Effect Level (NOAEL), the latter of which is adjusted to the safe dose through the use of a composite safety factor that is determined based on the available data. This concept has been in use since the late 1950s to establish safe doses in order to protect public health from potential chemical exposures. Exceedances of these safe doses have been used to describe situations of potential risk associated with such exposures to the public. This concept was built on two major assumptions: that protecting against the critical effect (footnote 3) protects against subsequent adverse effects, and that the use of a safety factor (now commonly referred to as uncertainty factor) lowers the acceptable exposure level to a resultant “safe” dose, that is, one below the range of the possible thresholds of the critical effect in humans, including sensitive subgroups.

This safe dose was called the Acceptable Daily Intake (ADI) and was used for oral exposure to chemical contaminants and approved food additives. Several historical accounts describe early deliberations on this concept (e.g. Clegg, Citation1978; Dourson & DeRosa, Citation1991; Kroes et al., Citation1993; Lu, Citation1988; Truhaut, Citation1991; Zielhuis & van der Kreek, Citation1979). Although quite useful, a general problem with this concept has been that its key features, that is, the element of judgment required to define a NOAEL, and determination of an appropriate safety factor based upon the content and quality of the underlying database, did not allow a ready incorporation of dose–response data to refine the estimate. Starting after the 1970s, several initially separate series of research efforts or deliberations occurred that prompted the evolution of the safe dose and related safety factor concept.

The first effort started with Zielhuis & van der Kreek (Citation1979) who investigated the use of safety factors in the occupational setting. Similar to these investigators, the US EPA separately reviewed oral toxicity data for human sensitivity, experimental animals to human extrapolation, insufficient study length (e.g. 90-day study only), and absence of dose levels without adverse effects (Dourson & Stara, Citation1983). Typically, the use of all of these factors would occur during the derivation of a “safe dose” for data-poor chemicals. Afterwards, in light of the then-recent NRC (Citation1983) publication, US EPA changed its parlance to better reflect a separation of risk assessment and risk management. “Safety factor” became “uncertainty factor” and “ADI” became “Reference Dose (RfD; footnote 4)” (Barnes & Dourson, Citation1988). Other organizations (e.g. U.S. Food and Drug Administration, WHO/Food and Agriculture Organization Joint Expert Committee on Food Additives, and Joint Meeting on Pesticide Residues) have retained the original terminology, however.
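
As a minimal illustration of the arithmetic behind these factors, the sketch below divides an experimental NOAEL by the product of the uncertainty factors applied. The function name, the example chemical, the NOAEL of 50 mg/kg-day, and the use of a value of 10 for each factor are assumptions made for illustration only; they are not drawn from any specific assessment.

```python
from math import prod


def reference_dose(noael_mg_per_kg_day, uncertainty_factors):
    """Return (safe dose, composite factor) for a NOAEL and a dict of factors.

    Illustrative only: each factor is a plain multiplier, and the safe dose
    is the NOAEL divided by the product of all factors applied.
    """
    for name, value in uncertainty_factors.items():
        if value <= 0:
            raise ValueError(f"factor {name!r} must be positive, got {value}")
    composite = prod(uncertainty_factors.values())
    return noael_mg_per_kg_day / composite, composite


# Hypothetical data-poor chemical with only a 90-day animal study available.
factors = {
    "experimental_animal_to_human": 10,  # assumed default
    "human_sensitivity": 10,             # assumed default
    "insufficient_study_length": 10,     # assumed default
}
rfd, composite = reference_dose(50.0, factors)
print(f"composite factor = {composite}, safe dose = {rfd:g} mg/kg-day")
```

Removing or shrinking a factor when better data exist raises the resulting safe dose proportionally, which is why the quality of the underlying database matters so much to the final value.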

US EPA expanded the approach to include the Reference Concentration (RfC), a “safe” concentration in air analogous to the RfD, using dosimetric adjustments to the inhaled experimental animal concentration to improve the extrapolation to humans (Jarabek, Citation1994, Citation1995a, Citation1995b; Jarabek et al., Citation1989). This yielded, for the first time, a consistent and scientifically credible replacement of part of the uncertainty factor for extrapolation from experimental animal to human, reflecting data-informed differences in biology. This transition was codified by US EPA with its publication of methods for development of inhalation RfCs (US EPA, Citation1994, with an update 2012g); a text on both RfDs and RfCs followed (US EPA, Citation2002a).

A Margin of Exposure (MOE) analysis is also often developed in chemical risk assessment for non-cancer toxicity, and, occasionally, for non-genotoxic carcinogens. A MOE is developed by dividing the NOAEL or benchmark dose (BMD) of the critical effect by the expected or measured exposures in humans. Conventionally, the default target MOE is drawn from uncertainty factors of 10 each for inter- and intra-species extrapolation, or other factors as appropriate for the critical effect of concern, to assess whether a sufficient MOE is attained to ensure safety. More recently, the MOE has also been used for genotoxic carcinogens (EFSA, Citation2012), applying a similar approach.
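
The MOE comparison described above can be sketched in a few lines. The function names, the NOAEL of 5 mg/kg-day, and the exposure estimate of 0.01 mg/kg-day are hypothetical; the target of 100 follows from the default factors of 10 each for inter- and intra-species extrapolation mentioned in the text.

```python
def margin_of_exposure(point_of_departure, exposure):
    """Divide the NOAEL or BMD of the critical effect by the human exposure.

    Both arguments must be in the same units (e.g. mg/kg-day).
    """
    if exposure <= 0:
        raise ValueError("exposure must be positive")
    return point_of_departure / exposure


def target_moe(interspecies=10.0, intraspecies=10.0, other=1.0):
    """Default target MOE: product of the factors judged appropriate."""
    return interspecies * intraspecies * other


# Hypothetical values for illustration only.
noael = 5.0      # mg/kg-day, critical effect in an animal study
exposure = 0.01  # mg/kg-day, estimated human exposure

moe = margin_of_exposure(noael, exposure)
sufficient = moe >= target_moe()
print(f"MOE = {moe:.0f}, target = {target_moe():.0f}, sufficient = {sufficient}")
```

The `other` argument is a placeholder for any additional factor considered appropriate for the critical effect of concern; leaving it at 1 reproduces the conventional default target.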

Another related effort started in the early 1990s with the seminal publications of Renwick (Citation1991, Citation1993). Renwick proposed replacement of the traditional 10-fold uncertainty factors addressing variability (experimental animal to human extrapolation or within human variability) with default subfactors for either toxicokinetics or toxicodynamics. In turn, these default subfactors could be replaced with chemical-specific data, when available.

As part of its harmonization (footnote 5) project, the WHO IPCS implemented a slightly modified Renwick approach (IPCS, Citation1994), followed by a decade-long series of workshops, case studies, and reviews that culminated in the development of methods for developing Chemical-Specific Adjustment Factors (CSAFs; IPCS, Citation2005). This work was built on numerous, often related, publications (e.g. Dourson et al., Citation1998; Ginsberg et al., Citation2002; Hattis et al., Citation1999; Kalberlah & Schneider, Citation1998; Naumann et al., Citation2005; Renwick, Citation1998a; Renwick & Lazarus, Citation1998b; Renwick et al., Citation2000, Citation2001; Silverman et al., Citation1999; Zhao et al., Citation1999). The IPCS effort propelled several countries to improve their process of non-cancer dose–response assessment (Health Canada by Meek et al., Citation1994; US EPA, Citation2002a, Citation2011e). Other groups have also followed the IPCS paradigm, such as NSF International (Ball, Citation2011).

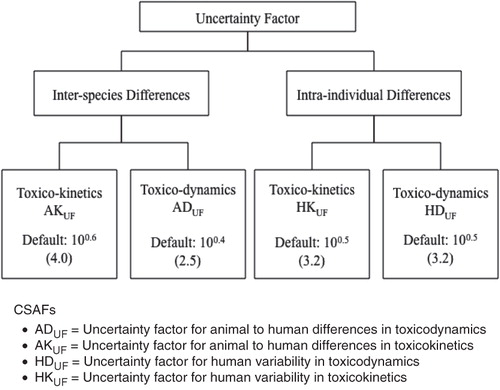

The IPCS (Citation2005) CSAF guidance resulting from this effort specifies the approach for evaluating the adequacy of the data for replacing one or more of the four subfactors addressing variability by chemical-specific or chemical-related data. Each subfactor is independently evaluated to determine if the data are sufficient to generate a CSAF, or whether a default factor needs to be used, as shown in Figure 1.

Figure 1. The Chemical Specific Adjustment Factor (CSAF) scheme of the International Programme on Chemical Safety (Citation2005). The individual toxicokinetic and toxicodynamic factors are defaults to be replaced with chemical specific data, which can lead to data-derived values that are less than, equal to, or greater than the default value.

The numerical value for a CSAF is dictated by the data and could range from less than 1 for interspecies differences to considerably more than the default subfactor for any or all of them. As a consequence, the composite uncertainty factor may be either less than or more than the usual default value, which is typically 100. If the composite factor is less than the usual default value (i.e. <100) for a particular critical effect, IPCS (Citation2005) recommends an evaluation of other endpoints to which the usual default value might be applied, since one of these other endpoints might then become the critical effect that determines the RfD, RfC, or Tolerable Daily Intake (TDI). Although suitable data may be available only on occasion, analysis of available data on a chemical using the framework presented in the IPCS (Citation2005) guidance provides a useful method of assessing the overall adequacy of the data for risk assessment purposes. In addition, the IPCS guidance can help direct research to identify and fill data gaps that would improve development of the safe dose. A CSAF-type approach can also be used to refine interspecies dosimetry for cancer assessments regardless of the low-dose extrapolation approach.
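
The way a single data-derived subfactor changes the composite factor can be sketched as follows. The default subfactor values used here (4.0 and 2.5 for interspecies toxicokinetics and toxicodynamics, and the square root of 10, about 3.16, for each human variability subfactor) are those commonly cited from the IPCS guidance and are not stated in the text above; the class name, field names, and the data-derived value of 1.5 are hypothetical.

```python
from dataclasses import dataclass, replace
from math import prod


@dataclass(frozen=True)
class Subfactors:
    """Four subfactors whose product is the composite factor (about 100)."""
    interspecies_tk: float = 4.0       # animal-to-human toxicokinetics
    interspecies_td: float = 2.5       # animal-to-human toxicodynamics
    human_tk: float = 10 ** 0.5        # human variability, toxicokinetics
    human_td: float = 10 ** 0.5        # human variability, toxicodynamics

    def composite(self) -> float:
        return prod((self.interspecies_tk, self.interspecies_td,
                     self.human_tk, self.human_td))


defaults = Subfactors()

# Hypothetical case: kinetic data support an interspecies toxicokinetic
# CSAF of 1.5; the other three subfactors stay at their default values.
refined = replace(defaults, interspecies_tk=1.5)

# A composite below the default prompts a look at other endpoints, since
# one of them might then become the critical effect.
needs_endpoint_review = refined.composite() < defaults.composite()

print(f"default composite = {defaults.composite():.1f}")
print(f"refined composite = {refined.composite():.1f}")
print(f"re-evaluate other endpoints: {needs_endpoint_review}")
```

Because each subfactor is evaluated independently, the same structure accommodates a data-derived value that is larger than its default, in which case the composite factor rises above 100.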

During this time, several other publications investigated and further developed uncertainty factors. For example, the development of a fifth area of uncertainty, that of toxicity database deficiency, was described (Baird et al., Citation1996; Dourson et al., Citation1992, Citation1996). US EPA (Citation2002b) and Fenner-Crisp (Citation2001) also published on the Food Quality Protection Act (FQPA) safety factor, showing that the hazard portion of this safety factor is addressed by proper application of this database deficiency uncertainty factor, in conjunction with the uncertainty factor intended to address human inter-individual variability in susceptibility (footnote 6). This conclusion was also reached by Dourson et al. (Citation2002). Also, during this time Swartout et al. (Citation1998) published an approach for developing a probabilistic description for individual and combined factors; Lewis et al. (Citation1990) and Lewis (Citation1993) discussed the development of adjustment factors based on data; and Pieters et al. (Citation1998) conducted a statistical analysis of toxicity data in an evaluation of the uncertainty factor for subchronic-to-chronic extrapolation.

Recommendations that have emerged from this analysis and related efforts are:

CSAF guidelines exist for using chemical-specific or chemical-related data to characterize interspecies differences and human variability and to replace default uncertainty factors. Application of these guidelines should be a standard part of developing toxicity values, as indeed it already is for many.

Scientifically based defaults are important and useful when data are insufficient to develop an adequate CSAF.

Additional factors may be utilized to account for database deficiencies such as insufficient study length (e.g. 90-day study only), absence of dose levels without adverse effects, clinically severe effects at the doses tested, or lack of data on key endpoints (e.g. developmental toxicity). Typically, these factors are applied during the derivation of a “safe dose” for data-poor chemicals.

From critical effects to mode of action (MOA)

Risk assessment is in a state of scientific rebirth, and in order to understand the drivers behind this trend, it helps to look back over the evolution of the regulatory risk assessment process. Beginning with the 1950s, FDA and others relied on the concept of a critical target organ, or a “critical effect” (refer to footnote 3) as described later by USEPA (e.g. Barnes & Dourson, Citation1988). Due in part to limitations in standard toxicity testing methods at the time, the critical effect was typically an overt toxic effect, resulting in an endpoint now referred to as an “apical effect”, and often had direct clinical relevance.

As additional toxicological information was published, scientific judgment became important in distinguishing adaptive and compensatory effects from adverse effects and in identifying the critical effect and its relevant precursor effects. Table 1 shows how these effects relate to each other. Although this severity continuum has been generally accepted, a key limitation to its use is that the definition of adverse versus adaptive effects often generates controversy for individual chemicals, and often remains a challenge to toxicologists and risk assessors alike, even when an appreciation of the underlying clinical disease is considered.

Table 1. Continuum of effects associated with any exposure to xenobiotics reflecting a sequence of effects of differing severity (ARA, 2012).

A recent publication from an ILSI/HESI Committee, chartered to specifically address definitions of adverse versus adaptive, recommended the following language (Keller et al., Citation2012):

Adverse Effect: A change in morphology, physiology, growth, development, reproduction, or life span of a cell or organism, system, or (sub)population that results in an impairment of functional capacity, an impairment of the capacity to compensate for additional stress, or an increase in susceptibility to other influences;

Adaptive Response: In the context of toxicology, the process whereby a cell or organism responds to a xenobiotic so that the cell or organism will survive in the new environment that contains the xenobiotic without impairment of function.

This suggested language needs to be further interpreted, however, since on the face of the definition, it appears to suggest that the death of a single cell is potentially adverse, whereas redundancy within an organ would argue that it is not (Rhomberg et al., Citation2011). A useful interpretation perhaps is to consider that an adverse effect results in the impairment of the functional capacity of the organism or higher levels of organization. This interpretation would be more consistent with the definition of adverse found in Table 1. The Key Events Dose–Response Framework (KEDRF; Julien et al., Citation2009) provides another means to delineate and analyze the component elements of dose–response leading up to an adverse effect and factors influencing those events (e.g. dose level and frequency, thresholds, the degree and fidelity of DNA repair, homeostatic mechanisms).

Another approach to enhance this interpretation was published by Boekelheide & Andersen (Citation2010) because of the increasing volume of new, high-throughput data. They considered the key challenge to be the ability to distinguish between acceptable (i.e. homeostatic, adaptive and perhaps compensatory) perturbations of a pathway and excessive (i.e. critical effect) or adverse or clinically relevant effects or perturbations. Furthermore, they discussed new approaches to evaluate dose–response relationships as functions of the probabilities of biological system failure, determined in a stepwise manner through assays that measure progressive perturbation along toxicity pathways. From a biological systems perspective, it may be useful to construct such pathway analyses based on homeostasis as the organizing circuitry or network. In this manner, the dose–response of biological system failure is dictated by processes overwhelming homeostasis. From such a perspective, the “cascade of failures” of Boekelheide & Andersen (Citation2010) ensues only when homeostasis is overwhelmed. These changes in the definition of “adverse” with the use of different types of data illustrate how one aspect of problem formulation might change as the underlying biology is better understood.

That adverse effects are the product of a cascade of failures in protective processes has also been discussed by others. Examples include error-prone or lack of DNA repair of a pro-mutagenic DNA adduct (Pottenger & Gollapudi, Citation2010), or failure of homeostasis and subsequent induction of fatty liver (Rhomberg, Citation2011). In addition, various methods have been proposed or are being developed to utilize more relevant biological data to construct models for predicting apical adverse responses, including many in silico approaches, molecular or mechanistic data from cells or tissues, or early biomarkers (Aldridge et al., Citation2006; Alon, Citation2007; Andersen & Krewski, 2009; Kirman et al., Citation2010; Yang et al., Citation2006). Most recently, US EPA’s ToxCast™ program has published a number of preliminary prediction models (Martin et al., Citation2009, Citation2011; Shah et al., Citation2011; Sipes et al., Citation2011).

The migration away from the conventional use of critical effects, or perhaps the integration of genomics data into the current severity scheme of Table 1, will likely require sophisticated methodologies, given the complexity of processes underlying biological pathways or networks. Prior to this, however, these newer test methods must be shown to be scientifically valid and the prediction models must be shown to have the requisite degree of scientific confidence necessary to support regulatory decisions. As discussed by Bus & Becker (Citation2009), approaches that should be considered for method validation and predictivity include those discussed by the NRC (Citation2007b) for toxicogenomics and the Organization for Economic Cooperation and Development (OECD) principles and guidance for the validation of quantitative structure activity relationships (OECD, Citation2007). These methods and prediction models hold great promise, and significant progress continues to be made to develop and build scientific confidence in them. However, the challenges are significant. The analysis by Thomas et al. (Citation2012a) concluded “… the current ToxCast phase I assays and chemicals have limited applicability for predicting in vivo chemical hazards using standard statistical classification methods. However, if viewed as a survey of potential molecular initiating events and interpreted as risk factors for toxicity, the assays may still be useful for chemical prioritization.”

A second key limitation of this severity continuum is that it focuses on apical, high-dose effects. In particular, it does not always address the problems arising from making inferences from high-dose animal toxicity studies to environmentally relevant exposures. While it is now well recognized that dose transitions and non-linearities in dose–response (Slikker et al., Citation2004a, Citation2004b) should be integrated into extrapolation of effects from high-dose animal toxicity studies to very much lower human exposures, this was not always the case. In fact, early approaches to quantitative risk assessment, such as those described in the US EPA (Citation1986a) cancer risk assessment guidelines, did not focus on the biology per se, because, at that point in time, the scarcity of mechanistic data and the limited theoretical understanding of the biological complexity of carcinogenesis made it too challenging to address these issues adequately. Although these older guidelines allowed for the use of chemical-specific data, assessments typically applied a default linear modeling approach for carcinogens when critical information about mode of action, genotoxicity or other relevant biological knowledge was unavailable, limited, or of insufficient quality. With a dearth of information, as was typical in those days of risk assessment, a general mind-set to apply defaults was pervasive. However, as described further later in this section, the growing availability of mechanistic information and increased understanding of the biology of disease processes place greater responsibility on risk assessors to utilize all the available effects data (from homeostatic, adaptive, compensatory, critical, adverse and clinical outcomes) within the focus and limitations identified in the problem formulation.
Unfortunately, in some US government programs the default approaches have been so ingrained that it has proven very difficult to incorporate this newer, biologically based information and methods.

Although the US EPA (Citation1986a) cancer risk assessment guidelines and related early US EPA publications for non-cancer toxicity (Barnes & Dourson, Citation1988) emphasized defaults, they provided a framework for considering integration of data obtained from different study types. Thus, these guidelines were intended to be sufficiently flexible to accommodate new knowledge and assessment methodologies as such methods were developed. One advantage of these first steps was to reduce the required effort in hazard identification by concentrating on a single, manageable piece of information: the critical effect. By focusing the risk assessment on a single critical effect and setting risk values to be protective for that critical effect, it was presumed that exposed populations would be protected against all other apical effects of concern, as such effects would require higher doses to manifest. The US EPA (Citation1986a) guidelines also allowed for the incorporation of mechanistic data in place of default extrapolation procedures despite the fact that such data were rarely available at the time.

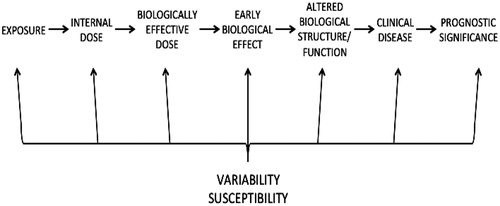

Schulte (Citation1989) and NRC (Citation1989) opened a new chapter in risk assessment by providing a structure for considering the series of steps that occurs between exposure and the toxic effect (Figure 2) [adapted from Schulte, Citation1989]. These steps delineate areas for acquisition of data illuminating how a chemical might cause the observed effects. Specific and quantifiable biomarkers related to each specific step can be used to replace the “black box” between exposure and effect. The NRC (Citation1989) report classified biomarkers as markers of exposure, markers of effect, and markers of susceptibility.

Figure 2. Series of steps that occurs between exposure and the effect of clinical disease and prognostic significance. Adapted from Schulte (Citation1989).

Schulte’s pathologic progression diagram laid the foundation in part for work by US EPA, IPCS, and others attempting to determine the type and level of information needed to use non-default approaches. A key concept in this evolution was a focus on MOA rather than mechanism of action. While a mechanism of action reflects the detailed, molecular understanding of a biological pathway, the MOA characterizes a more general understanding of how the chemical acts. The MOA is defined as a sequential series of key events, with a key event being defined as an empirically observable and quantifiable precursor step that is a necessary (but not necessarily sufficient) element of the MOA or is a biologically based marker for such an element. Determination of dose–response for key events is an important aspect of establishing an MOA. The US EPA cancer guidelines (USEPA, Citation1996, Citation2005) are key documents describing the potential applications of MOA data. Specifically, these guidance documents recommend using data as the starting point where possible (data before defaults), and focusing upon assessment of weight of evidence, with the goal of applying the MOA approach to all appropriate data.

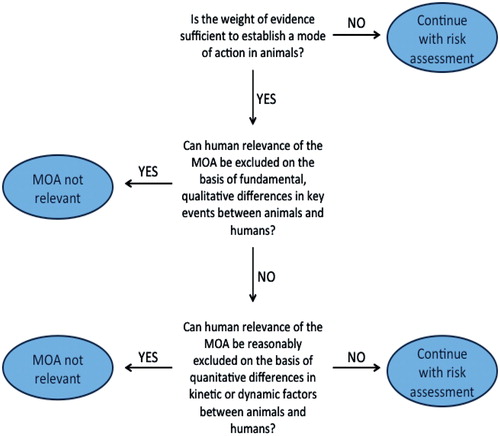

During the same time period, a number of projects at ILSI and IPCS further developed the MOA approach, initially for carcinogens (Sonich-Mullin et al., Citation2001), and then for non-carcinogens (Seed et al., Citation2005), with particular emphasis on using MOA information to evaluate human relevance (HR), culminating in the development of the mode of action/human relevance framework (MOA/HRF) (Meek et al., Citation2003; IPCS, Citation2006; Sonich-Mullin et al., Citation2001). In this framework (Figure 3) [from WHO IPCS, Citation2007], one first uses the modified Hill criteria to determine whether the data are sufficient to determine the acting MOA in experimental animals. If the MOA is established in an experimental animal model, the HR framework goes on to evaluate whether the HR of the MOA can be excluded, first based on fundamental, qualitative differences in key events between animals and humans, and then based on quantitative differences.

Figure 3. The mode of action/human relevance framework (MOA/HRF). Adapted from WHO (Citation2007).

Both qualitative and quantitative differences in MOA and resulting responses should be considered. If the HR cannot be excluded, then the MOA is assumed to be applicable to humans, and then quantitative toxicokinetic or toxicodynamic data can be used to replace defaults with CSAFs. Qualitatively, if a MOA is determined to not be relevant to humans, then that MOA can be excluded from the human health risk assessment (e.g. male rat kidney tumors caused by alpha 2u-globulin nephropathy—Hard et al., Citation1993). Other MOAs or endpoints caused by that chemical of concern can then be evaluated to determine whether they are relevant to humans. One clear strength of this approach is that both chemical-specific information and a general understanding of biology and physiology are used to address fundamental questions regarding the MOA, dose–response, and toxicity of a specific chemical. In the future, advanced mechanistic-based molecular screening approaches may increasingly reveal quantitative differences between human-based assays and animal-based assays that may improve the accuracy of risk assessments.
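The sequence of questions in the MOA/HRF can be read as a short decision procedure. The sketch below is a deliberate simplification that reduces each question to a yes/no answer, whereas in practice the answers are matters of weight of evidence and degree of confidence. The class, field, and outcome names are hypothetical, and the "not established" outcome reflects the assumption that default approaches apply when MOA data are insufficient.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    MOA_NOT_ESTABLISHED = "MOA not established in animals; defaults remain in use"
    EXCLUDED = "MOA not relevant to humans; evaluate other MOAs or endpoints"
    RELEVANT = "MOA assumed applicable to humans; consider CSAFs from TK/TD data"


@dataclass
class MoaEvidence:
    # Simplifying assumption: each judgement is collapsed to a boolean.
    established_in_animals: bool    # modified Hill criteria judged to be met
    qualitatively_excluded: bool    # key events fundamentally differ in humans
    quantitatively_excluded: bool   # TK/TD differences rule out human relevance


def human_relevance(evidence: MoaEvidence) -> Outcome:
    """Walk the framework questions in the order the text describes them."""
    if not evidence.established_in_animals:
        return Outcome.MOA_NOT_ESTABLISHED
    if evidence.qualitatively_excluded:       # asked first
        return Outcome.EXCLUDED
    if evidence.quantitatively_excluded:      # asked second
        return Outcome.EXCLUDED
    return Outcome.RELEVANT


# The male rat kidney tumor example from the text: the MOA is established in
# the animal model but is qualitatively not relevant to humans.
alpha_2u = MoaEvidence(established_in_animals=True,
                       qualitatively_excluded=True,
                       quantitatively_excluded=False)
result = human_relevance(alpha_2u)
```

Here `result` is `Outcome.EXCLUDED`, after which other MOAs or endpoints for the same chemical would still need to be evaluated.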

The MOA/HRF continues to be refined as experience is gained in its application. For example, it is now recognized that absolute responses to the framework questions are not needed. Instead, the MOA/HRF questions provide a structure for describing the degree of confidence and uncertainties associated with application of available data in risk assessments (Meek & Klaunig, Citation2010). Another new element of this approach is recognition of the importance of “modulating factors,” such as polymorphisms, pre-existing disease states, and concurrent chemical exposures, which can affect susceptibility to risk (Meek, Citation2008). Detailed examples of modulating factors provided by Meek (Citation2008) included differences in the presence and activity of enzymes in biotransformation pathways, competing pathways of biotransformation, and cell proliferation induced by coexisting pathology. The MOA/HRF can also be used to aid in identifying populations or life stages that may have increased susceptibility.

Recently, the KEDRF was developed as an extension of the MOA/HRF (Boobis et al., Citation2009; Julien et al., Citation2009). This framework considers the dose–response and variability associated with each key event to better understand and potentially quantitate the impact of each of these factors on the risk assessment as a whole. For example, in considering mutation as a potential key event, one considers whether mutation is likely an early rate- or dose-limiting step, or whether it is secondary to other effects, such as cytotoxicity and compensatory cell proliferation (Meek & Klaunig, Citation2010). Furthermore, the KEDRF can be used to compare the dose necessary to elicit the key event(s) in relation to doses actually experienced in real-world exposures.
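One way to picture the last point is to express each key-event dose as a multiple of an estimated human exposure. The sketch below is illustrative only: the key events, doses, and exposure value are hypothetical numbers, and the function name is not drawn from the KEDRF publications.

```python
def exposure_margins(key_event_doses, human_exposure):
    """Return (key event, dose / exposure) pairs, ordered by ascending dose.

    key_event_doses: mapping of key-event name to the dose that elicits it
    human_exposure: estimated real-world exposure in the same dose units
    """
    if human_exposure <= 0:
        raise ValueError("human_exposure must be positive")
    ordered = sorted(key_event_doses.items(), key=lambda item: item[1])
    return [(name, dose / human_exposure) for name, dose in ordered]


# Hypothetical doses (mg/kg-day) for successive key events of one MOA.
key_events = {
    "tumor formation": 100.0,
    "cytotoxicity": 5.0,
    "compensatory cell proliferation": 20.0,
}

# Hypothetical human exposure estimate (mg/kg-day).
margins = exposure_margins(key_events, human_exposure=0.01)

# The earliest key event sets the smallest margin between effect and exposure.
earliest_event, smallest_margin = margins[0]
```

With these made-up inputs the earliest key event (cytotoxicity) occurs at about 500 times the estimated exposure, and later key events at progressively larger multiples.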

A number of advantages exist to the use of MOA data and the MOA/HRF/KEDRF or a similar framework. First, in-depth assessments can be conducted with it. Second, consideration of MOA issues can aid in developing and refining research strategies (Meek, Citation2008). For example, illustrating the interplay between problem formulation and biological considerations, discussions between risk assessors and research scientists can improve the efficiency of risk assessments by focusing resources on tiered and/or targeted approaches that are more efficient and reduce animal use (Meek, Citation2008; Meek & Klaunig, Citation2010), as envisioned by NRC (Citation2007a). Focusing on earlier, potentially more sensitive biological endpoints that represent key events will facilitate the use of data directly from environmentally relevant human exposures, and/or the use of in vitro model systems using human-derived tissues or cells. Such approaches would not only have increased relevance to human physiology, they also would have the potential to be used in high- or medium-throughput formats. Carmichael et al. (Citation2011) noted that even today, standard test protocols do not always provide the information needed to support a MOA analysis. Better incorporation of MOA information is facilitated by the increased understanding of the multiple ways in which such data can be incorporated into risk assessment, as well as in the early focus on hazard characterization.

Another advantage to the use of MOA data is that extensive research over the last 30 years can be reviewed to test the default linear and non-linear low dose extrapolation procedures. This has been done and non-linear MOAs for chemical carcinogens appear to be more scientifically justified, when compared with the default linear procedure, in a number of instances (Boobis et al., Citation2009; Cohen & Arnold, Citation2011). Cohen & Arnold (Citation2011) conclude that for non-DNA reactive carcinogens, “[i]n each of these instances studied in detail, the carcinogenic effect is because of an increase in cell proliferation. This can either be by a direct mitogenic effect (involving hormones and/or growth factors) or can be because of toxicity and regeneration.” They further state that knowledge garnered from research on mode of action that distinguishes DNA-reactive from non-DNA-reactive carcinogens “… forms the basis for the distinction of potential risks to humans in regulatory decision making.”

The KEDRF provides one structure for describing the degree of confidence and uncertainties associated with reliance on such knowledge and data in lieu of the linear default. For many substances that produce cancer in laboratory animal studies, even for those that may cause point mutations in genotoxicity assays, assessors are failing to objectively describe the evidence for alternatives to linear low-dose extrapolation (Boobis et al., Citation2009; Cohen & Arnold, Citation2011; Swenberg et al., Citation2011). Determining the most appropriate model(s) and approach(es) for regulatory risk assessment for a specific substance will be guided by statutes, policies and scientific knowledge. For either DNA-reactive or non-DNA-reactive substances, the statistical characterization of the low-dose dose–response relationship for tumorigenesis in vivo would require prohibitively large numbers of lab animals. Therefore, our expanding knowledge of the pathogenesis of cancer (Cohen & Arnold, Citation2011; Hanahan & Weinberg, Citation2000) and experimental data sets which evaluate MOAs in the carcinogenic process (e.g. biomarkers of DNA damage, cell-proliferation, pathway addiction, clonal expansion, DNA-methylation, tumor suppressor gene expression) are key to profiling substances according to patterns of biological responses. These profiles can be compared to the profiles of prototypical chemical carcinogens, and in this manner, empirical dose–response data of a substance can be integrated with knowledge of MOA, both broadly and for the specific chemical, to enhance the scientific basis of risk assessment. Scientific knowledge of MOA today is simply too advanced to support undue reliance upon a default-driven system for evaluating carcinogenic or non-carcinogenic risks to humans.

Although the use of MOA has been growing substantially, scientific hurdles to increased regulatory acceptance of MOA-based approaches remain. Such hurdles include lack of empirical data to define the shape of the dose–response curve at low, environmentally relevant exposures, and incomplete knowledge of what constitutes “scientific sufficiency” for the purpose of defining a MOA and its presumed low-dose dose–response relationship for regulatory risk assessment purposes. A 2009 workshop to address this general issue of integration of MOA into risk assessment made the following recommendations (Carmichael et al., Citation2011):

Establish a group of experts from a variety of backgrounds to generate a database of accepted MOAs and to identify minimum data requirements needed to characterize a chemical’s MOA;

Generate guidance documents describing the appropriate means by which MOA data can be incorporated into chemical risk assessments;

Promote a shift in current risk assessment practices to focus on hazard characterization using MOA data; also, identify what information could be provided by standard toxicity tests to inform the MOA evaluation;

Utilize a tiered and flexible framework to collect and apply MOA data to assessments;

Develop predictive methods for MOA based on evaluation of early key events;

Optimize use of data collected in human trials or clinical studies; and

Globally harmonize MOA terminology.

Furthermore, the NRC report entitled Toxicity Testing in the 21st Century: A Vision and a Strategy (NRC, Citation2007a) aims to harness MOA information ultimately to generate a battery of in vitro tests to evaluate chemical-specific toxicity, concomitantly reducing the need for whole animal studies and focusing research on biological pathways. A number of other consensus reports and guidelines also support measures to increase the focus on MOA as the central organizing principle, and use of in vitro data to reduce animal use, although the general consensus of these reports is that animal testing would not be eliminated, at least not in the near term (Carmichael et al., Citation2011; NRC, Citation2009; US EPA, Citation2005; WHO IPCS, Citation2007).

For the most part, with the exception of genotoxicity assays, the application of in vitro data directly into risk assessment is in its infancy. For such data to be effectively incorporated into hazard characterization and dose–response assessment, they will have to be vetted against traditional approaches and harmonized with clinical practice. As such approaches are proven valid over time, they are expected to streamline the risk assessment process itself, allowing for more efficient assessments and read-across interpretations among chemical groups that share MOAs. In addition, the use of cell culture models to address risk assessment will ultimately reduce the need for studies conducted in animals, minimizing animal usage to more focused, MOA studies. Moreover, such approaches facilitate prioritization of chemicals based on anticipated risk to human health.

Recommendations that have emerged from this analysis and related efforts are:

Focus must shift away from identification of only a toxicant-induced apical effect (critical effect) towards identification of a sequence of key events/MOA as the organizing principle for risk assessment.

Development and acceptance of standardized definitions are essential for adverse effect, adaptive response, and MOA, and for how such data may be integrated with clinical knowledge in order to improve risk assessment.

Identification of early, driving key events in toxicity/biological pathways will be necessary to apply MOA as the organizing principle. To effectively analyze such key events, a refined context of the dose necessary to elicit them is needed in relation to doses actually experienced from real-world exposures.

Low-dose extrapolation: transition from defaults to mode of action (MOA) understanding

Underlying assumptions

As noted above, the default approach for non-cancer dose–response assessment assumes a threshold for an adverse effect and uses uncertainty factors to estimate a safe dose, while current default dose–response approaches for cancer assessment often assume that no threshold exists, resulting in a linear extrapolation from the observed animal data to low doses, especially if genotoxicity has been demonstrated or not adequately ruled out. Although recent publications have demonstrated many examples of in vitro and in vivo non-linear or even threshold dose–response for gene mutations and micronucleus formation induced by DNA reactive chemicals (Bryce et al., Citation2010; Doak et al., Citation2007; Gocke & Müller, Citation2009; Gollapudi et al., Citation2013; Pottenger et al., Citation2009), the linear dose–response approach has traditionally been selected based on this assumption of no threshold, and the resulting linear extrapolation is considered the most conservative. These two divergent approaches for dose–response assessment reflect not only these different assumptions, but also the fundamental nature of the cellular damage and the body’s ability to handle such damage. Different regulatory policies follow.

Linear extrapolation for cancer, for example, is based on a stochastic assumption: that the potential for critical damage to DNA is a matter of chance, and that this probability depends only on dose in a linear relationship, so that a doubling of dose results in a directly proportional increase in the chance of critical DNA damage (Dourson & Haber, Citation2010; US EPA, Citation1976; US EPA, Citation1986a; US EPA, Citation2005). It further assumes that a single heritable change to DNA can induce malignant transformation, leading to cancer. Other factors, such as an individual’s repair capacity or a chemical’s toxicokinetics, are assumed to be independent of dose, so that the risk per unit dose is constant in the low-dose range. As further discussed by Dourson & Haber (Citation2010), low-dose linear extrapolation is a convenient health-protective approach. However, factors such as the efficiency of DNA repair, rate of cell proliferation, and chemical-specific toxicokinetics indicate that even if the dose–response for cancer is linear at low (environmentally relevant or lower) doses, the slope of that line is likely to be lower than the slope of the line extrapolating from the animal tumor data to zero (Swenberg et al., Citation1987). Cohen & Arnold (Citation2011) note that DNA-reactive carcinogens produce “strikingly non-linear dose–response” curves, due in part to an acceleration of damage, or lack of repair at higher doses when compared to lower doses. Fortunately, the new biological tools available now and in the near future will be capable of experimentally testing the assumption that DNA-reactive substances demonstrate linearity at low doses. For example, recent work on directly DNA-reactive radiation effects demonstrates non-linear dose–response for a variety of molecular events such as base lesions, micronuclei, homologous recombination, and gene expression changes following low-dose exposures (Olipitz et al., Citation2012).
Outcomes of these and other experiments challenge the need for maintaining the dichotomy between cancer and non-cancer toxicities, and between genotoxic and non-genotoxic chemicals with respect to potential carcinogenic risk to humans at environmentally relevant exposures.
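The two default calculations contrasted in this section can be put side by side in a minimal sketch. It assumes a point of departure (POD) taken from animal data, a straight line from that point to the origin for the linear default, and division by a composite uncertainty factor for the threshold default. All numbers and function names are hypothetical.

```python
def linear_low_dose_risk(dose, pod_dose, pod_risk):
    """Extra risk under the linear default: a line from the POD to the origin."""
    slope = pod_risk / pod_dose   # risk per unit dose, constant at low dose
    return slope * dose


def threshold_safe_dose(pod_dose, composite_uncertainty_factor):
    """Safe dose under the threshold default: POD divided by the composite factor."""
    return pod_dose / composite_uncertainty_factor


# Hypothetical POD: 10 mg/kg-day associated with 10% extra risk in animals.
POD_DOSE = 10.0
POD_RISK = 0.10

risk_low = linear_low_dose_risk(0.001, POD_DOSE, POD_RISK)
risk_doubled = linear_low_dose_risk(0.002, POD_DOSE, POD_RISK)

# Under the linear assumption, doubling the dose doubles the estimated risk.
ratio = risk_doubled / risk_low

# Hypothetical threshold-based value using the usual default factor of 100.
safe_dose = threshold_safe_dose(POD_DOSE, 100.0)
```

The linear default returns a nonzero risk estimate at every dose, however small, while the threshold default returns a single dose below which adverse effects are not expected. That difference is the dichotomy the surrounding text questions.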

In contrast to mutagenic effects initiated by chemicals directly interacting with DNA, the safe dose assessment for non-cancer endpoints (refer to footnote 7) assumes that cells have many molecules of each protein and other targets, and thus damage to a single molecule is not expected to lead to a damaged cell. In fact, if damage to one molecule of a single cell were sufficient to cause it to die, redundancy in the target organ would mean that the cell’s death is not adverse, as more fully explicated by Rhomberg et al. (Citation2011). Based on the redundancy of target molecules and target cells, together with the capacity for repair, regeneration or replacement, these adverse effects are assumed to have a threshold. In addition, the sigmoidal dose–response curve often produced by quantal data (apical adverse effects) in linear space occurs as a result of the variability in individual responses and underlying genomic plasticity, reflecting differences in sensitivity to a given chemical. In the highly unlikely occurrence of no differences in sensitivity among individuals, the population dose–response would be expected to be a step function, with no response below a certain dose, and up to 100% response above that dose. Such responses are seldom, if ever, seen, thus supporting the assumption that the sigmoidal response curve for quantal data is influenced by individual variability in response.
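The link between individual thresholds and the population curve can be illustrated numerically. The sketch below assumes, purely for illustration, that individual thresholds follow a lognormal distribution described by a median and a geometric standard deviation (GSD). The source does not specify a distribution, and the function and parameter names are hypothetical.

```python
import math


def fraction_responding(dose, median_threshold, gsd):
    """Fraction of a population whose individual threshold is at or below `dose`.

    Assumes lognormally distributed thresholds. A GSD of exactly 1 means no
    inter-individual variability, which collapses the curve to a step function.
    """
    if gsd < 1.0:
        raise ValueError("gsd must be at least 1")
    if dose <= 0:
        return 0.0
    if gsd == 1.0:
        return 1.0 if dose >= median_threshold else 0.0
    z = math.log(dose / median_threshold) / math.log(gsd)
    return 0.5 * math.erfc(-z / math.sqrt(2.0))  # standard normal CDF at z


doses = [1.0, 3.0, 10.0, 30.0, 100.0]

# No variability: nobody responds below the common threshold, everyone above.
step_curve = [fraction_responding(d, 10.0, 1.0) for d in doses]

# With variability (hypothetical GSD of 3) the same median gives a graded curve.
graded_curve = [fraction_responding(d, 10.0, 3.0) for d in doses]
```

With identical thresholds the response jumps from 0 to 1 at the common threshold dose. With variability the response rises gradually through 50% at the median threshold, which is the sigmoidal shape described above.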

Using mode of action (MOA) information

At the molecular level, log dose–response curves are typically sigmoidal because the response is the result of the ligand binding (reversibly) to a single receptor site and thus directly proportional to receptor binding (law of mass action; Balakrishnan, Citation1991). Furthermore, when response is mediated by a cascade of messengers following the initial binding of the ligand to the receptor, as long as the subsequent responses are the result of the messenger molecule binding to a single binding site, according to the law of mass action, the dose–response curve will be the same sigmoid shape as the initial receptor binding dose–response.
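Both statements in this paragraph can be checked with a small calculation. The sketch below uses the standard single-site mass-action expression, occupancy = [L] / ([L] + Kd), and assumes that the messenger is produced in proportion to receptor occupancy. Under that assumption a two-step cascade reduces algebraically to a single curve of the same form with a shifted midpoint and a lower maximum. The parameter values and function names are hypothetical.

```python
def occupancy(conc, kd):
    """Single-site reversible binding under the law of mass action."""
    return conc / (conc + kd)


def two_step_response(ligand, kd1, messenger_max, kd2):
    """Ligand binds a receptor; the messenger it releases binds a second site."""
    messenger = messenger_max * occupancy(ligand, kd1)  # assumed proportional
    return occupancy(messenger, kd2)


def equivalent_single_step(ligand, kd1, messenger_max, kd2):
    """Same cascade rewritten as one mass-action curve with effective constants."""
    top = messenger_max / (messenger_max + kd2)          # maximal response
    ec50 = kd1 * kd2 / (messenger_max + kd2)             # effective midpoint
    return top * occupancy(ligand, ec50)


KD1, M_MAX, KD2 = 2.0, 5.0, 1.0   # hypothetical constants, arbitrary units

# On a log-dose axis the binding curve is symmetric about Kd: occupancy at
# Kd / 10 and at 10 * Kd sum to 1, with 50% occupancy at Kd itself.
low, mid, high = (occupancy(KD1 * f, KD1) for f in (0.1, 1.0, 10.0))

# The cascade and its single-step rewrite agree across a wide dose range.
test_doses = [0.001, 0.01, 0.1, 1.0, 10.0, 100.0]
cascade = [two_step_response(d, KD1, M_MAX, KD2) for d in test_doses]
single = [equivalent_single_step(d, KD1, M_MAX, KD2) for d in test_doses]
```

Because the cascade output matches a single mass-action curve at every dose tested, the shape on a log-dose axis is unchanged. Only the midpoint and the maximal response differ from the initial receptor-binding curve.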

However, depending on the mechanism, the shape of the dose–response curve for the ultimate toxic effect (the apical effect) will vary, and as Conolly and Lutz (Citation2004) note, “Actions of a toxic agent in an organism are multifaceted, the reaction of the organism accordingly is pleiotropic, the dose–response is the result of a superimposition of all interactions that pertain.” Thus, it is important to articulate the MOA and analyze the corresponding key events. This may be particularly true in carcinogenesis, where, “six essential alterations in cell physiology collectively dictate malignant growth: self-sufficiency in growth signals, insensitivity to growth-inhibitory (antigrowth) signals, evasion of programmed cell death (apoptosis), limitless replicative potential, sustained angiogenesis and tissue invasion and metastasis” (Hanahan & Weinberg, Citation2000).

While these different default approaches reflect different underlying assumptions, there is general agreement on the preference for use of MOA to inform the dose–response assessment. Both of the recent NRC reports (NRC, Citation2007a, Citation2009) acknowledge the importance of using MOA to inform risk assessment, including improving animal to human extrapolations (or removing the need for such extrapolation) and characterizing the impact of human variability on these extrapolations. In fact, many recent guidance documents and committee recommendations point to the importance of incorporating MOA data into risk assessment approaches (e.g. Seed et al., Citation2005; US EPA, Citation2005; WHO IPCS, Citation2007).

To the degree that differences exist among these recommendations, they occur mostly in the application of MOA data in risk assessment. According to NRC (Citation2007a), US EPA (Citation2005) and others, MOA is the central driver, upon which decisions about dose–response assessment should be based. In contrast, the NRC (Citation2009), while stating that MOA evaluations are central, recommends the use of low-dose linear extrapolation as a default for non-cancer toxicity, and as the preferred default approach for harmonizing cancer and non-cancer dose–response assessment. Both of these NRC (Citation2009) recommendations appear to run counter to toxicological and biological principles (Rhomberg et al., Citation2011). Furthermore, these recommendations fail to address differences in the assumptions underlying the two default extrapolation procedures as discussed above.

Perhaps not surprisingly, these recommendations of NRC (Citation2009) also run counter to other recommendations to establish harmonized default risk assessment paradigms (Crump et al., Citation1997, Citation1998; IPCS, Citation2006; Meek, Citation2008; NRC, Citation2007a; US EPA, Citation2005). In fact, based on these publications, and given what is now known about toxicity mechanisms, DNA damage and repair, and homeostasis, a biological case can be made that the preferred default approach would be to harmonize non-cancer and cancer assessments using the KEDRF approach, or if insufficient information exists for the KEDRF, then on the basis of expected thresholds or non-linearities for adverse effect.

For example, Rhomberg et al. (Citation2011) published a critique of the NRC (Citation2009) report emphasizing that low-dose linearity for non-cancer effects was the exception, not the rule, and therefore not an adequate basis for a universal default position. These authors counter the NRC (Citation2009) position that low-dose linearity is the scientifically justified default, a position that rests on (1) considerations of distributions of inter-individual variability, (2) interaction with background disease processes, and (3) undefined chemical background additivity. Rhomberg et al. (Citation2011) show: (1) that the “additivity-to-background” rationale for linearity only holds if it is related to a specific MOA, which has certain properties that would not be expected for most non-cancer effects (e.g. there is a background incidence of the disease in the unexposed population that occurs via the same pathological process as the effects induced by exposure); (2) that variations in sensitivity in a population tend to only broaden, not linearize, the dose–response relationship; and (3) that epidemiological evidence of purported linear or no-threshold effects at low exposures in humans, despite non-linear exposure-response in the experimental dose range in animal testing for similar endpoints, is most likely attributable to exposure measurement error rather than a true linear association. In fact, only implausible distributions of inter-individual variation in parameters governing individual sigmoidal response could ever result in a low-dose linear dose–response. The last NRC (Citation2009) justification (i.e. undefined chemical background additivity) is also discounted by Dourson & Haber (Citation2010), since such background is better addressed by standard risk assessment methods for chemical mixtures. Indeed, the dual NRC (Citation2009) recommendations to use low-dose linear extrapolation as a default for non-cancer toxicity, and as the preferred default approach for harmonization, work against US EPA’s mixtures guidelines that recommend adding individual chemical dose–response assessments together in the form of a HI.
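For readers less familiar with the HI, the sketch below shows the usual arithmetic: each chemical's exposure is divided by its own reference value to give a hazard quotient, and the quotients are summed. The chemical names, exposures and reference doses are invented placeholders, not values from any assessment.

```python
def hazard_quotient(exposure, reference_dose):
    """Ratio of exposure to the reference value (both in the same units)."""
    if reference_dose <= 0:
        raise ValueError("reference dose must be positive")
    return exposure / reference_dose

def hazard_index(exposures, reference_doses):
    """Sum of hazard quotients over all chemicals in the mixture."""
    return sum(
        hazard_quotient(exposures[name], reference_doses[name])
        for name in exposures
    )

# Hypothetical mixture, mg/kg-day (illustrative numbers only).
exposures = {"chemical_A": 0.002, "chemical_B": 0.01}
reference_doses = {"chemical_A": 0.01, "chemical_B": 0.05}

hi = hazard_index(exposures, reference_doses)
print(round(hi, 3))
```

Because each term is scaled by a threshold-type reference value, the sum is only interpretable if the individual assessments share that basis.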

Of the two different NRC (Citation2007a, Citation2009) approaches to harmonization of cancer and non-cancer risk assessment, the approach recommended by the NRC (Citation2007a) and others, to harmonize using MOA as the organizing principle, appears scientifically stronger. By relying on MOA as the harmonizing principle, the focus is more on the relevant biology than on mathematical or statistical tools. A useful example of this preferred approach to cancer and non-cancer risk assessments based on US EPA (Citation2005) guidance is found in the published propylene oxide (PO) cancer MOA risk assessment (Sweeney et al., Citation2009). PO is a nasal respiratory irritant, and the PO cancer MOA is a complex series of biological responses driven by PO induction of severe, sustained GSH depletion in target rat nasal mucosa, which leads to nasal respiratory epithelial cell proliferation concomitant with significant irritation, and eventually to nasal tumors. The induction of cell proliferation and nasal irritation is identified as the critical key event and has been characterized as having a “practical threshold”; thus the harmonized cancer/non-cancer risk assessment relies on determination of exposure limits low enough to protect against induction of nasal irritation, which will then protect from both non-cancer and cancer effects (Sweeney et al., Citation2009). In this case, the MOA based on sustained cell proliferation was used to inform the risk assessment despite the fact that PO is capable of causing genetic damage. The authors concluded that the MOA data were sufficient in this case to justify a threshold model for dose–response assessment, instead of the default linear no-threshold model. Several authoritative bodies have cited this article and have accepted the threshold MOA for PO-induced cancer, including the European Union Scientific Committee on Occupational Exposure Levels (SCOEL, Citation2010) and the German MAK Commission (MAK, Citation2012).

Dose–response modeling

Linear extrapolation is a default policy choice intended to be health-protective in the face of uncertainties. However, a number of demonstrated non-linearities or thresholds exist in the biology of cancer, even for chemicals acting via a mutagenic MOA. Such non-linearities or thresholds can occur as a result of numerous biological processes, including uptake, transport, metabolism, excretion, receptor binding and DNA repair and other cellular defense mechanisms. Thus, when considering the entire dose–response curve, linear extrapolation from the apical endpoint of cancer needs to be carefully considered in relationship to the available evidence regarding the MOA and the resulting shape of the dose–response curve (Dourson & Haber, Citation2010; Hattis, Citation1990; Slikker et al., Citation2004a). The emphasis on MOA, then, is less on determining whether non-linearities or thresholds exist than on how best to capture modern knowledge and understanding of the underlying biology related to the chemical’s dose–response curve and its ultimate relevance to adverse health outcomes.
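The practical difference between the two defaults can be sketched as follows. The sketch assumes the common implementations: a straight line from the point of departure (POD) to the origin for the linear default, and division of the POD by uncertainty factors for the non-linear default. The POD, benchmark response and factor values are hypothetical.

```python
def linear_slope(pod, bmr=0.10):
    """Slope of a straight line drawn from the POD down to the origin."""
    if pod <= 0:
        raise ValueError("point of departure must be positive")
    return bmr / pod

def linear_extra_risk(dose, pod, bmr=0.10):
    """Extra risk predicted by the linear default at a dose below the POD."""
    return linear_slope(pod, bmr) * dose

def reference_value(pod, uncertainty_factors):
    """Non-linear default: POD divided by the product of uncertainty factors."""
    composite = 1.0
    for uf in uncertainty_factors:
        composite *= uf
    return pod / composite

POD = 10.0  # hypothetical POD at 10% extra risk, mg/kg-day

print(linear_extra_risk(0.1, POD))     # risk estimate at 0.1 mg/kg-day
print(reference_value(POD, [10, 10]))  # reference value, two 10-fold factors
```

The first function returns a risk estimate at any dose, however small; the second returns a dose judged to be without appreciable risk. That difference in output is why the choice of default matters.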

Slikker et al. (Citation2004a, Citation2004b) described this issue with the idea of dose-dependent transitions. Not unlike the NAS (2009), they noted that quantal dose–response curves can often be thought of as “serial linear relationships,” due to the transitions between mechanistically linked, saturable, rate-limiting steps leading from exposure to the apical toxic effect. To capture this biology, Slikker et al. (Citation2004a) recommended using MOA information to identify a “transition dose” that would serve as a point of departure for risk assessments instead of a NOAEL/LOAEL/BMDL. This transition dose, if suitably adjusted to reflect species differences and within-human variability, might serve as a basis for subsequent risk management actions.
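A "serial linear" relationship with a single transition can be sketched as two joined line segments. This is only a schematic of the idea, not the procedure of Slikker et al.; the transition dose and the two slopes are hypothetical.

```python
def serial_linear_response(dose, transition_dose, slope_low, slope_high):
    """Two linear segments joined continuously at the transition dose."""
    if dose < 0:
        raise ValueError("dose must be non-negative")
    if dose <= transition_dose:
        return slope_low * dose
    # Above the transition, a different rate-limiting step governs the slope.
    return slope_low * transition_dose + slope_high * (dose - transition_dose)

# Hypothetical parameters: shallow response until a protective process saturates.
TRANSITION = 5.0
SLOPE_LOW = 0.001
SLOPE_HIGH = 0.05

for d in (1.0, 5.0, 10.0):
    print(d, serial_linear_response(d, TRANSITION, SLOPE_LOW, SLOPE_HIGH))
```

Extrapolating the high-dose slope back to the origin would overstate the response below the transition, which is the motivation for treating the transition dose as a candidate point of departure.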

The key events dose–response framework (KEDRF; Boobis et al., Citation2009; Julien et al., Citation2009) further incorporates a biological understanding by using MOA data and information on shape of the dose–response for key events to inform an understanding of the shape of the dose–response for the apical effect. This applies both to fitting the dose–response curve to the experimental data in the range of observation as well as for extrapolation. Advantages of the KEDRF approach include the focus on biology and MOA, consideration of outcomes at individual and population levels, and reduction of reliance on default assumptions. The KEDRF focuses on improving the basis for choosing between linear and non-linear extrapolation, if needed. Perhaps more importantly, it draws on available dose–response data on biological transitions for early key events in the pathway to the apical effect, which offers another way to extend the relevant dose–response curve to lower doses.

Biologically based modeling can further improve the description of a chemical’s dose–response. PBPK modeling predicts internal measures of dose (a dose metric), which can then be used in a dose–response assessment of a chemical’s toxicity, and so can directly capture the impact of kinetic non-linearities on tissue dose. This information can be used for such applications as improving interspecies extrapolations, characterization of human variability, and extrapolations across exposure scenarios (Bois et al., Citation2010; Lipscomb et al., Citation2012). PBPK models can also be used to test the plausibility of different dose metrics, and thus the credibility of hypothesized MOAs. Recent guidance documents and reviews (IPCS, Citation2010; McLanahan et al., Citation2012; US EPA, Citation2006c) provide guidance on best practices for characterizing, evaluating, and applying PBPK models. Additional extrapolation to environmentally relevant doses can be addressed with PBPK modeling.
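How a kinetic non-linearity changes tissue dose can be seen in a deliberately reduced sketch. It is not a PBPK model: it assumes a single well-mixed compartment at steady state with Michaelis-Menten (saturable) clearance, and the parameter values are hypothetical.

```python
def steady_state_concentration(intake_rate, vmax, km):
    """Steady-state internal concentration with saturable clearance.

    At steady state, intake_rate = vmax * C / (km + C), which rearranges to
    C = km * intake_rate / (vmax - intake_rate) for intake_rate < vmax.
    """
    if intake_rate < 0:
        raise ValueError("intake rate must be non-negative")
    if intake_rate >= vmax:
        raise ValueError("intake meets or exceeds clearance capacity; no steady state")
    return km * intake_rate / (vmax - intake_rate)

VMAX = 10.0  # hypothetical maximum clearance rate (mass per time)
KM = 1.0     # hypothetical concentration at half-maximal clearance

for rate in (1.0, 2.0, 8.0):
    print(rate, round(steady_state_concentration(rate, VMAX, KM), 4))
```

With these placeholder values an 8-fold increase in intake gives a 36-fold increase in internal concentration. A dose metric based on external dose alone would miss that disproportion.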

Biologically based dose–response (BBDR) modeling adds a mathematical description of the toxicodynamic effects of the chemical to a PBPK model, thus linking predicted internal/tissue dose to toxicity response. Perhaps the best-known BBDR model is that for nasal tumors from inhalation exposure to formaldehyde (Conolly et al., Citation2003), which builds from the Moolgavkar-Venzon-Knudson (MVK) model of multistage carcinogenesis (Moolgavkar & Knudson, Citation1981). The formaldehyde BBDR predicts a threshold, or at most a very shallow dose–response curve, for the tumor response despite evidence of formaldehyde-induced genetic damage. MVK modeling of naphthalene, focusing on tumor type and joint operation of both genotoxic and cytotoxic MOAs, is illustrative of an MOA approach that can be taken to quantitatively evaluate risk (Bogen, Citation2008). Further, Bogen (Citation2008) demonstrates how to quantify the potential upper-bound, low dose, non-threshold (genotoxic) contribution to increase in tumor risk. Such approaches may be useful for quantitative risk evaluations for a number of substances where two or more MOAs may be involved. Such approaches are encouraged by guidelines for cancer risk assessment (EPA, 2005).

Other approaches that are less data-intense can use chemical-specific or chemical-related information to extend the dose–response curve into the range (or near the range) of the exposures of interest. These approaches allow one to use mechanistic data more directly to evaluate dose–response, without having to invoke default approaches of linear or non-linear extrapolation. Such biologically informed empirical dose–response modeling approaches have the goal of improving the quantitative description of the biological processes determining the shape of the dose–response curve for chemicals for which it is not feasible to invest the resources to develop and verify a BBDR. An advantage of these approaches is using quantitative data on early events (biomarkers) to extend the overall dose–response curve to lower doses using biology, rather than being limited to the default choices of linear extrapolation or uncertainty factors.

In one demonstration of this sort of approach, Allen et al. (submitted) outlined a hypothesized series of key events describing the MOA for lung tumors resulting from exposure to titanium dioxide (TiO2), building on the MOA evaluation of Dankovic et al. (Citation2007). Allen et al. used a series of linked “cause-effect” functions, fit using a likelihood approach, to describe the relationships between successive key events and the ultimate tumor response. This approach was used to evaluate a hypothesized pathway for biomarker progression from a biomarker of exposure (lung burden), through several intermediate potential biomarkers of effect, to the clinical effect of interest (lung tumor production). Similar work has been published by Shuey et al. (Citation1995) and Lau et al. (Citation2000) on the developmental toxicity of 5-fluorouracil.
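The idea of linked cause-effect functions can be sketched as a chain in which the output of each key event is the input to the next. The sketch below is not the model of Allen et al.: the intermediate events, the choice of Hill functions and every parameter value are hypothetical, and no likelihood fitting is performed.

```python
def hill(x, top, half, n):
    """Saturating cause-effect function: rises from 0 towards `top`."""
    return top * x ** n / (half ** n + x ** n)

# Hypothetical chain: lung burden -> inflammation -> proliferation -> tumor.
LINKS = [
    ("inflammation", lambda burden: hill(burden, top=1.0, half=5.0, n=2)),
    ("proliferation", lambda infl: hill(infl, top=1.0, half=0.5, n=3)),
    ("tumor_probability", lambda prolif: hill(prolif, top=0.3, half=0.6, n=4)),
]

def propagate(lung_burden, links=LINKS):
    """Pass the exposure biomarker through each link and record every step."""
    value = lung_burden
    trace = []
    for name, cause_effect in links:
        value = cause_effect(value)
        trace.append((name, value))
    return trace

for burden in (0.5, 5.0, 50.0):
    print(burden, [(name, round(v, 4)) for name, v in propagate(burden)])
```

Even with smooth individual links, the composed curve for the final event can be far steeper at low input than any single link. This is one reason data on early key events can inform the shape of the apical dose–response.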

Another approach to biologically informed empirical dose–response modeling was demonstrated by Hack et al. (Citation2010), who used a Bayesian network model to integrate diverse types of data and conduct a biomarker-based exposure-dose–response assessment for benzene-induced acute myeloid leukemia (AML). The network approach was used to evaluate and compare individual biomarkers and quantitatively link the biomarkers along the exposure-disease continuum. This work provides a quantitative approach for linking changes in biomarkers of effect both to exposure information and to changes in disease response. Such linkage can provide a scientifically valid point of departure that incorporates precursor dose–response information without being dependent on the difficult issue of a definition of adversity for precursors.
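The linking step in such a network can be shown with a minimal discrete chain, exposure to biomarker to disease, in which the disease probability for a given exposure is obtained by summing over biomarker states. This is a toy illustration of the principle, not the network of Hack et al.; the states and all conditional probabilities are invented.

```python
# Hypothetical conditional probability tables (illustrative values only).
P_BIOMARKER_GIVEN_EXPOSURE = {
    "low": {"normal": 0.9, "elevated": 0.1},
    "high": {"normal": 0.4, "elevated": 0.6},
}
P_DISEASE_GIVEN_BIOMARKER = {"normal": 0.01, "elevated": 0.05}

def disease_probability(exposure_level):
    """P(disease | exposure) = sum over b of P(disease | b) * P(b | exposure)."""
    biomarker_dist = P_BIOMARKER_GIVEN_EXPOSURE[exposure_level]
    return sum(
        P_DISEASE_GIVEN_BIOMARKER[state] * prob
        for state, prob in biomarker_dist.items()
    )

for level in ("low", "high"):
    print(level, round(disease_probability(level), 4))
```

The chain assumes exposure influences disease only through the biomarker. A real network would relax that assumption and would estimate the tables from data rather than fixing them by hand.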

Even less computationally intensive mechanistic approaches are possible. For example, Strawson et al. (Citation2003) evaluated the implications of exceeding the RfD for nitrate, for which the critical effect is methemoglobinemia in infants. They based their analysis on information on the amount of hemoglobin in an infant’s body and the amount of nitrate required to oxidize hemoglobin to an adverse level; extrapolation was not needed, since data are available for the target population (human infants) in the adverse effect range.